Abstract

We consider the dissipative spin–orbit problem in Celestial Mechanics, which describes the rotational motion of a triaxial satellite moving on a Keplerian orbit subject to tidal forcing and drift. Our goal is to construct quasi-periodic solutions with fixed frequency, satisfying appropriate conditions. With the goal of applying rigorous KAM theory, we compute such quasi-periodic solutions with very high precision. To this end, we have developed a very efficient algorithm. The first step is to compute very accurately the return map to a surface of section (using a high-order Taylor’s method with extended precision). Then, we find an invariant curve for the return map using recent algorithms that take advantage of the geometric features of the problem. This method is based on a rapidly convergent Newton’s method which is guaranteed to converge if the initial error is small enough. So, it is very suitable for a continuation algorithm. The resulting algorithm is quite efficient. We only need to deal with a one-dimensional function. If this function is discretized in N points, the algorithm requires \(O(N \log N) \) operations and O(N) storage. The most costly step (the numerical integration of the equation along a turn) is trivial to parallelize. The main goal of the paper is to present the algorithms, implementation details and several sample results of runs. We also present both a rigorous and a numerical comparison of the results of averaged and not averaged models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The construction of invariant structures in Celestial Mechanics and Astrodynamics has become of great importance in recent times, both for theoretical reasons and for the practical design of space missions. At present, many space missions are based on the determination of periodic and quasi-periodic orbits. Some notable examples of periodic/quasi-periodic orbits used in mission design are Lyapunov, Lissajous and halo orbits (see, e.g., Celletti et al. 2015; Gómez and Mondelo 2001; Jorba and Masdemont 1999).

The existence and persistence of quasi-periodic orbits were developed by KAM theory (Kolmogorov 1979; Arnol’d 1963; Moser 1962). Making KAM theory into a practical tool is an ongoing and rapidly progressing area, which applies to models of increasing complexity; it also leads to applications and has uncovered new mathematical phenomena.

With these motivations, this work develops a method for the construction of invariant tori in a concrete model of interest in Celestial Mechanics, namely the dissipative spin–orbit problem (see Sect. 2), which describes the rotation about its center of an oblate moon orbiting a planet and subject to tidal forces. Our goal is to develop a method to compute quasi-periodic solutions in the spin–orbit problem. As it is well known, quasi-periodic orbits can be described geometrically as invariant tori on which the motion is conjugate to a rigid rotation. Hence, we will use indistinctly the names quasi-periodic solution and invariant (rotational) torus.

1.1 Overview of the method

The method we develop starts by constructing a surface of section and a return map to it. The invariant tori for the flow correspond to invariant tori for the return map. We show that these return maps for the spin–orbit problem have the remarkable property that they transform the symplectic form into a multiple of itself. These maps are called conformally symplectic systems and enjoy several remarkable properties that lie at the root of a KAM theory and efficient algorithms (see Sect. 4.1).

To find the invariant torus of the map, we follow the approach in Calleja et al. (2013a) and formulate a functional equation for the drift parameter and for the embedding of the torus, whose solutions are obtained formulating a quasi-Newton method that, given an approximate solution of the functional equation, produces another one with a quadratically small reminder. The quasi-Newton method in Calleja et al. (2013a) takes advantage of the conformally symplectic geometric property.

The results of Calleja et al. (2013a) guarantee that the method converges (as a double exponential, as Newton’s methods) if the initial error is small enough (compared to some readily computable condition numbers).

The theorem in Calleja et al. (2013a) also shows that the difference between the true solution and the initial approximation is controlled by the error of the invariance equation, see Eq. (26). Results of this form are called a posteriori theorems in numerical analysis. We also note that one of the consequences of the work in Calleja et al. (2013a) is a local uniqueness for the solutions of (26), except for composition for a rotation. This lack of uniqueness comes from the freedom on the choice of coordinates in the parameterization, but the geometric object and the drift parameter are locally unique.

Since the iterative method converges for small enough error, it will be used as the basis of a continuation method in parameters, which is guaranteed to converge until the assumptions in the theorem fail. Indeed, arguments in Calleja and de la Llave (2010) show that the method can be used as a practical way to compute the breakdown of the torus (see Calleja and Celletti 2010 for an implementation to conformally symplectic maps). Note that the method is backed up by theorems and guaranteed to reach to the boundary of validity of the theorem, if given enough computational resources.

In this paper, we will implement the method with extended arithmetic precision, motivated by the fact that the size of the error needed to apply the a posteriori theorem is typically smaller than what can be obtained in double precision. With modern programming techniques, writing extended precision programs is not much more time consuming than using standard arithmetic. Of course, there is a penalty in speed, but since the algorithm is so efficient, one can still run comfortably even with extended precisions in today’s desktop machines (see Sect. 5.5 for details on timings and resources). Of course, in continuations, it is also possible to run the first iterations in double precision till the error is dominated by the double precision round-off and then run the final iterations in extended precision.

We have also used jet transport in order to get automatically the (first order) variational flow with respect to initial conditions and parameters. In Calleja et al. (2020b), we use the jet transport to get high-order variational flows following the results in Gimeno et al. (2021).

Finally, we have taken advantage of some modern advances such as multicore machines and multithreading given, for instance, continuation iterations in around 1 min when the initial guess is small enough. We did not explore other advanced architectures such as GPU, whose application in Celestial Mechanics is an interesting challenge. Some work on a simpler problem is in Kumar et al. (2021).

1.2 Efficiency and accuracy of the method

The use of return maps is very economical and natural. In the study of invariant tori for differential equations, it is standard to separate the directions along the flow and the transversal directions.

On the one hand, the torus remains very smooth along the directions of the flow for all values of the perturbation parameter. The flow in these directions can just be reduced to the well-studied problem of computing solutions of ODEs, and in fact, there are many different algorithms suitable in different conditions.

On the other hand, the computation of the torus in the directions of the section is a much more complicated problem, since it requires KAM theory and the tori along these directions are much less regular; indeed, for large enough values of the perturbation, the tori may disappear. Nevertheless, even for values of the perturbation parameter where the tori do not exist, the solutions of the differential equations can be comfortably computed.

By dividing the problem into the KAM part for maps and the propagation to the return section, we reduce significantly the difficulty of the KAM part, since the tori have lower dimension. The computation of the return map is more complicated, but it is easily parallelizable and there are many studies on optimizing it. Hence, the breakup ends being rather advantageous. As indicated above, the methodologies of the two parts are very different and each of them can be fine-tuned separately.

The KAM part for maps is well documented in Calleja et al. (2013a); the algorithm therein applies quasi-Newton corrections and takes advantage of several identities related to the fact that the map is conformally symplectic.

The number of explicit steps of the KAM iterative procedure is about a dozen, see Algorithm 5.4. All the elementary steps are well-structured vector operations that are primitives in modern languages or libraries, so that they are not too cumbersome to program.

Quite remarkably, all the steps are diagonal either in a grid representation of the function or in a Fourier representation. Of course, we can switch from one representation to the other using FFT. Hence, for a function discretized in N modes, the quadratically convergent method requires only O(N) storage and \(O(N \log N)\) operations. Note that, in modern computers, the vector operations and the FFT are highly optimized, with specialized hardware.

These KAM algorithms have been implemented for maps given by explicit simple formulas (Calleja and Celletti 2010; Calleja and Figueras 2012; Calleja et al. 2020). In theory, the only thing that one would need to do is to use the return map of the ODE (and its variational equations) in place of the explicit formulas. However, as we report in Calleja et al. (2020a), in contrast with the explicit formulas that have few important harmonics, the return maps have many more relevant harmonics; this requires some adaptations and the phenomena observed are different.

1.2.1 Relation with other methods

The methods of computing invariant tori based on normal form theory require working with functions with as many variables as the phase space, see Stefanelli and Locatelli (2012, 2015). In contrast, our methods require to manipulate only functions with as many variables as the dimension of the tori of the map. Reducing the number of variables in the unknown function is very important, since the number of operations needed to manipulate a function grows exponentially (with a large exponent) with the number of variables. Some recent papers that are also using return maps in Celestial Mechanics are, for instance, Haro and Mondelo (2021) (full-dimensional tori in Hamiltonian systems) and Kumar et al. (2021) (whiskered tori, their stable and unstable manifolds and their intersections in Hamiltonian systems).

It is interesting to compare the methods developed here to Olikara (2016), which uses a discretization of the tori without separating the tori and the flow directions. If the torus is discretized in N points, this method requires \(O(N^2)\) storage and \(O(N^3)\) operations. Notice that N points in 2-D tori give more or less the same precision as \(N^{\frac{1}{2}}\) in 1-D tori.

A curious remark is that the linearization of the invariance Eq. (26) has a spectrum lying in circles as shown by Mather (1968) (see Adomaitis et al. 2007; Haro 2002 for numerical experimentations), so that the Arnold–Krylov methods, successful in other models (Sánchez et al. 2010), do not work very well. The method we use can be understood as saying that, using geometric identities, we get the linearized equations to become constants. (This phenomenon is called automatic reducibility.)

One improvement in continuation methods, after an expensive effort in one step, is that one can compute inverses (Moser 1973; Hald 1975) or diagonalizations (Haro and de la Llave 2006; Jorba and Olmedo 2009), perturbatively. These methods require to store O(N) storage, and the perturbative calculations still require \(O(N \log N)\) operations even if the constants improve.

1.3 The model

We have implemented our results to the so-called dissipative spin–orbit model (Celletti 2010). In this section, we will review the physical bases of the model as well as formulate several variants [so-called time-dependent friction (8) and averaged friction (9)]. These models will be analyzed (numerically and rigorously) in subsequent sections.

The spin–orbit model describes the rotational motion of a triaxial non-rigid satellite whose center of mass moves along an elliptic Keplerian orbit around a central planet. The spin-axis of the satellite is assumed to be perpendicular to the orbital plane and coinciding with the shortest physical axis. The rotation angle of the satellite is the angle between the longest axis of the satellite and a fixed direction, e.g., the periapsis line.

The motion of the rotation angle satisfies a second-order differential equation depending periodically on time, through the orbital elements describing the osculating position of the center of mass of the satellite; such equation depends on two parameters, namely the orbital eccentricity and the equatorial flattening of the satellite. The model equations include a dissipative term due to the non-rigidity of the satellite, since the rotation gives rise to tides that dissipate energy and generate a torque. We adopt the model of Peale (2005) in which the tidal torque is proportional to the angular velocity with a time-periodic coefficient. The dissipative term depends on two parameters: the orbital eccentricity and the dissipative factor, which is determined by the physical features of the satellite.

For typical bodies of the solar system, e.g., the Moon and many others among the biggest satellites, the force induced by the dissipation is much smaller than the conservative part, so that dissipation can be ignored in the description over short times. Nevertheless, since the dissipative forces have consequences that accumulate over time, they are very important in the description of long-term effects.

In the applied literature, it is very common to use a simplified version of the tidal torque that can be obtained by averaging the dissipation over time (Celletti and Chierchia 2009; Celletti and Lhotka 2014; Correia et al. 2004), so that the tidal torque becomes proportional to the derivative of the rotation angle.

One of the advantages of the method in this paper is that it provides a rather general rigorous justification of the averaging method for quasi-periodic solutions. The basic idea is very simple: Using standard averaging methods, we control the \(2\pi \)-time map and, then, the effect of changing the map is controlled by the a posteriori theorem (see Appendix A). For the spin–orbit problem, we also provide another justification that applies to all solutions.

Our formalism provides also rigorous estimates on the validity of the averaging approximation. Standard averaging theory can give estimates on the difference between the return maps in the averaged model and the true model. (Notice that the time of flights in return maps is about 1, so that controlling the averaging method to order 1 is very standard.) Then, we can use the a posteriori format of the KAM theory in Calleja et al. (2013a) to obtain estimates on the difference between the KAM tori and the drift parameters in the averaged and non-averaged cases. This result may look surprising, since one obtains control on solutions (and drift) for very long times. Besides this very general perturbative argument, in Appendix A we present some elementary arguments that, taking advantage of the structure of the system, obtain non-perturbative results.

We conclude by mentioning that the current work has several consequences: In Calleja et al. (2020b), we study the quantitative verification of a posteriori theorems and the quantitative condition numbers, while in Calleja et al. (2020a) we explore numerically the boundary of validity of KAM theory and uncover several phenomena that deserve further mathematical investigation. We hope that this paper (and the companions Calleja et al. 2020b and Calleja et al. 2020a) can stimulate further research, for example, turning the estimates in Calleja et al. (2020b) into rigorous computer-assisted proofs, studying higher-dimensional models, incorporating more advanced computer architectures and explaining the phenomena at breakdown.

1.4 Organization of this paper

This work is organized as follows. In Sect. 2, we present the spin–orbit model with tidal torque. The numerical formulation of the spin–orbit problem is given in Sect. 3, while the spin–orbit map is derived in Sect. 4. The algorithm for the construction of invariant attractors and its applications is presented in Sect. 5. Finally, some conclusions are given in Sect. 6.

2 The spin–orbit problem with tidal torque

Consider the motion of a rigid body, say a satellite \({\mathcal {S}}\), with a triaxial structure, rotating around an internal spin-axis and, at the same time, orbiting under the gravitational influence of a point-mass perturber, say a planet \({\mathcal {P}}\). A simple model that describes the coupling between the rotation and the revolution of the satellite goes under the name of spin–orbit problem, which has been extensively studied in the literature in different contexts (see, e.g., Beletsky 2001; Celletti 1990b, a; Correia et al. 2004; Wisdom et al. May 1984). This model is based on some assumptions that we are going to formulate as follows. Let \({\mathcal {A}}< {\mathcal {B}} < {\mathcal {C}}\) denote the principal moments of inertia of the satellite \({\mathcal {S}}\); then, we assume that:

- H1.:

-

The satellite \({\mathcal {S}}\) moves on an elliptic Keplerian orbit with semimajor axis a and eccentricity \(e\), and with the planet \({\mathcal {P}}\) in one focus;

- H2.:

-

The spin-axis of the satellite coincides with the smallest physical axis of the ellipsoid, namely the axis with associated moment of inertia \({\mathcal {C}}\);

- H3.:

-

The spin-axis is assumed to be perpendicular to the orbital plane;

- H4.:

-

The satellite \({\mathcal {S}}\) is affected by a tidal torque, since it is assumed to be non-rigid.

We adopt the units of measure of time such that the orbital period, say \(T_{orb}\), is equal to \(2\pi \), which implies that the mean motion \(n=2\pi /T_{orb}\) is equal to one.

We define the equatorial ellipticity as the parameter \(\varepsilon >0\) given by

which is a measure of the oblateness of the satellite. When \(\varepsilon = 0\), then \({\mathcal {A}}={\mathcal {B}}\) which means that the satellite is symmetric in the equatorial plane and, because of (H3), it coincides with the orbital plane.

We consider the perturber \({\mathcal {P}}\) at the origin of an inertial reference frame with the horizontal axis coinciding with the direction of the semimajor axis. It is convenient to identify the orbital plane with the complex plane \({\mathbb {C}}\). The location of the center of mass of the rigid body \({\mathcal {S}}\) with respect to the perturber \({\mathcal {P}}\) is given, in exponential form, by \(r \exp (\mathtt {i}f) \in {\mathbb {C}}\), where \(r > 0\) and f are real functions depending on the time t and they represent, respectively, the instantaneous orbital radius and the true anomaly of the Keplerian orbits. Indeed, over time, r and f describe an ellipse of eccentricity \(e\in [0,1)\), semimajor axis a and focus at the origin, see Fig. 1.

Given that the mean motion n has been normalized to one, then the mean anomaly coincides with the time t. By Kepler’s equation (Celletti 2010), we have the following relation between the eccentric anomaly u and the time:

The expressions which relate r and f with the eccentric anomaly (and hence with time through (2)) are given by

Notice that we are assuming in (4) that for \(t=0\), \(f(0) = u(0) = 0\), and consequently, \(f(\pi ) = u(\pi ) = \pi \) when \(t = \pi \). We also recall the following relations between the Keplerian elements that will be useful in the following:

As for the rotational motion, let x be the angle formed by the direction of the largest physical axis, which belongs to the orbital plane, due to the assumptions (H2) and (H3), with the horizontal (or semimajor) axis a.

If we neglect dissipative forces, the equation of motion which gives the dependence of x on time is given by the following expression (Celletti 2010) to which we refer as the conservative spin–orbit equation:

where \(\varepsilon > 0\) is given in (1), \(r(t)=r(u(t);e)\) in (3), \(f(t)=f(u(t);e)\) in (5), and where u is related to t through (2).

The spin–orbit problem: A triaxial satellite \({\mathcal {S}}\) moves around a planet \({\mathcal {P}}\) on an elliptic orbit with semimajor axis a and eccentricity e. The position of the barycenter of \({\mathcal {S}}\) is given by the orbital radius r and the true anomaly f. The rotational angle is denoted by x

If we now assume that the satellite is not rigid, then we must consider a tidal torque, say \({\mathcal {T}}_d\), that acts on the satellite. According to Macdonald (1964), Peale (2005), we can write the tidal torque as a linear function of the velocity:

where \(\eta > 0\) is named the dissipative constant. Since we are interested in astronomical applications, we specify that \(\eta \) depends on the physical and orbital features of the body and takes the form

where \(k_2\) is the second degree potential Love number (depending on the structure of the body), Q is the so-called quality factor (which compares the frequency of oscillation of the system to the rate of dissipation of energy), \(\xi \) is a structure constant such that \(C=\xi mR_e^2\), \(R_e\) is the equatorial radius, M is the mass of the central body \({\mathcal {P}}\), and m is the mass of the satellite \({\mathcal {S}}\). Astronomical observations suggest that for bodies like the Moon or Mercury the dissipative constant \(\eta \) is of the order of \(10^{-8}\).

The dynamics including the tidal torque is then described by the following equation to which we refer as the dissipative spin–orbit equation:

The expression for the tidal torque can be simplified by assuming (as in Peale (2005), Correia et al. (2004)) that the dynamics is essentially ruled by the average \(\overline{{\mathcal {T}}}_d\) of the tidal torque over one orbital period, which can be written as

where (compare with Peale 2005)

When considering the averaged tidal torque, one is led to study the following equation of motion to which we refer as the averaged dissipative spin–orbit equation:

Note that in this model, we consider the average of the tidal forces, but do not average the conservative forces (see Appendix A). This is justified because, as indicated before, in practical problems, the dissipative forces are much smaller than the conservative ones.

Remarks 2.1

-

(i)

The parameter \(\varepsilon \) in (1) is zero only in the case of an equatorial symmetry with \({\mathcal {A}} = {\mathcal {B}}\). In that case, the equation of motion (6) is trivially integrable.

-

(ii)

When \(e=0\), the orbit is circular and therefore \(r = a\) and \(f = t + t _0\). Also, in this case the equation of motion (6) is integrable.

-

(iii)

Equation (6) is associated with the following one-dimensional, time-dependent Hamiltonian function:

$$\begin{aligned} {\mathcal {H}}(y,x,t) = \frac{y^2}{2} - \frac{\varepsilon }{2} \Big (\frac{a}{r(t)}\Big )^3 \cos (2x - 2f(t))\ . \end{aligned}$$(11) -

(iv)

Equations (6), (8) and (10) are defined in a phase space which is a subset of \([0,2\pi )\times {\mathbb {R}}\). Such a phase space can be endowed with the standard scalar product and a symplectic form \(\Omega \), which in our case is just the two-dimensional area in phase space. Even if in two-dimensional phase spaces the area (volume) is the same as the symplectic manifold, in systems with N degrees of freedom (\(N > 1\)) the preservation of the two-form \(\Omega \) is much more stringent than the preservation of the 2N-dimensional volume.

3 Numerical version of the spin–orbit problem

To get a numerical representation of the ordinary differential Eq. (8) (equivalently (6) or (10)), it is convenient to express the equation in terms either of the eccentric anomaly u or the mean anomaly which coincides with t. Although there is a clear bijection between t and u through (2), it seems reasonable to redefine everything in terms of the eccentric anomaly u due to the expressions of r in (3) and f in (4). The procedure to get the equation of motion with u as independent variable is the following.

The expression of f in (5) is given easily in terms of u (and \(e\)). Let \(s(x)=s(x;u,e)\) be the function defined as \(s(x;u,e):=\sin (2x(t)-2f(t))\), where the dependence on u, \(e\) enters through f. Using trigonometric identities, we have an explicit expression in terms of u and \(e\) for the sinus in (8):

Note here the useful relation for the derivatives of s, c:

where

The time change given by (2) leads to

As a consequence, Eq. (8) can be expressed in terms of the independent variable u as

with r defined in (3) and \(s(\beta ) :=s(\beta ; u, e)\) given in (12).Footnote 1

Note that the introduction of u as independent variable implies a non-constant deformation in the angular component. More precisely, if \(y(t) = \frac{\mathrm{d}x(t)}{\mathrm{d}t}\), defining

we obtain

The ODE system (15) can now be integrated by any classical numerical integrator (Hairer et al. 1993) without having to solve the Kepler’s Eq. (2) at each integration step. In the following sections, we will use a Taylor’s integrator (Jorba and Zou 2005a) that we briefly recall for self-consistency in Appendix B. We have used Taylor’s method because it can produce solutions with high accuracy (say \(10^{-30}\)), since it can easily increase the orders of the Taylor’s expansions in order to provide good enough trajectory values. These integrators are also used to produce rigorous enclosures (Berz and Makino 1998). Both features seem to be important toward the goal of producing computer-assisted proofs (progress toward this goal will be reported in Calleja et al. (2020b)) or in the study of phenomena at breakdown that require delicate calculations not easy to make convincing. (Progress toward this goal will be reported in Calleja et al. (2020a).)

Even if not directly used in the present paper, we report in Appendix C the variational equations associated with (15). The variational equations with respect to coordinates and parameters can be used for different purposes (see Calleja et al. 2020b, a), e.g., to compute the parameterization of invariant structures, to get estimates based on the derivative of the flow, to compute chaos indicators like the Fast Lyapunov Indicator (Froeschlé et al. 1997).

4 The conformally symplectic spin–orbit map

In this section, we introduce the notion of conformally symplectic systems (Sect. 4.1), we reduce the study of the spin–orbit problem to a discrete map (Sect. 4.2) and we provide an explicit expression of the conformally symplectic factor (Sect. 4.3).

4.1 Conformally symplectic systems

Conformally symplectic systems are dissipative systems that enjoy the remarkable property that they transform the symplectic form into a multiple of itself.

The formal definition for 2n-dimensional discrete and continuous systems is the following.

Let \({\mathcal {M}} = U\times {\mathbb {T}}^n\) be the phase space with \(U\subseteq {\mathbb {R}}^n\) an open and simply connected domain with smooth boundary. We endow the phase space \({\mathcal {M}}\) with the standard scalar product and a symplectic form \(\Omega \), represented by a matrix J at the point \({{\underline{z}}}\) acting on vectors \({{\underline{u}}},{{\underline{v}}}\in {\mathbb {R}}^{2n}\) as \(\Omega _{{\underline{z}}}({{\underline{u}}}, {{\underline{v}}}) = ({{\underline{u}}}, J({{\underline{z}}}){{\underline{v}}})\).

For the spin–orbit model, the symplectic form \(\Omega \) is represented by the constant matrix J which takes the form

Definition 4.1

A diffeomorphism f on \({\mathcal {M}}\) is conformally symplectic if, and only if, there exists a function \(\lambda :{\mathcal {M}}\rightarrow {\mathbb {R}}\) such that

where \(f^*\) denotes the pull-back of f (i.e., \(f^*\Omega = \Omega \circ f\)) and \(\lambda \) is called the conformal factor.

In the following, we will consider a family \(f _\mu :{\mathcal M}\rightarrow {{\mathcal {M}}}\) of mappings and we will call \(\mu \in {{\mathbb {R}}}\) the drift parameter. Correspondingly, we will replace (18) by

We notice that for \(\lambda =1\), we recover the symplectic case. Moreover, as remarked in Calleja et al. (2013a), for \(n=1\) any diffeomorphism is conformally symplectic with a conformal factor that might depend on the coordinates; in particular, when \(\Omega \) is the standard area, one has either \(\lambda (x)=|\det (Df _\mu (x))|\) or \(\lambda (x)=-|\det (D f _\mu (x))|\). When \(n\ge 2\), it follows that \(\lambda \) is a constant (see, e.g., Banyaga 2002; Calleja et al. 2013a).

Definition 4.1 extends to continuous systems by the use of the Lie derivative.

Definition 4.2

We say that a vector field X is a conformally symplectic flow if, and only if, denoting by \(L _X\) the Lie derivative, there exists a function \(\mu :{\mathbb {R}}^{2n} \rightarrow {\mathbb {R}}\) such that

Denoting by \(\Phi _{t _0}^t\) the flow at time t of the vector field X from the initial time \(t _0\), we observe that (19) implies that

In our applications, we will consider a family of vector fields \(X _\sigma \) depending on a drift parameter \(\sigma \in {\mathbb {R}}\) and we will replace (19) by

In our applications, we will also have to consider time-dependent vector fields. A time-dependent vector field X(t) is conformally symplectic when \(L_{X(t) }\Omega = \mu (t) \Omega \). It is not difficult to show that \(\Phi _a^b\), the diffeomorphism which takes initial conditions at time a to the position at time b (hence \(\Phi _a^c = \Phi _b^c \circ \Phi _a^b\)), satisfies

Note that (20) implies that if X(t) is periodic of period T, the conformal factor of \(\Phi _a^{a+T}\) is independent of a and it is equal to the conformal factor of a vector field with a constant \({\bar{\mu }} = \frac{1}{T} \int _a^{a+T} \mu (s) \, \mathrm{d}s\). This will be useful in the justification of averaging (see Appendix A).

The spin–orbit models described by (8) and (10) are both conformally symplectic. Let us start to show that the averaged system (10) is conformally symplectic. We write (10) as the first-order system

where \(\mu = \eta {\bar{L}}(e)\) and \( \upsilon = \frac{\bar{N}(e)}{{\bar{L}}(e)}\). Hence, \(\mu \) is the conformal factor and \( \upsilon \) is the drift parameter. Denoting by \(i_X\) the interior product and recalling that \(\Omega =\mathrm{d}y\wedge \mathrm{d}x\), we have

Then, we have

Since

we conclude that

A similar computation shows that the model described by (8) is conformally symplectic.

4.2 The spin–orbit map

We introduce the Poincaré map associated with (8) or (10), which allows one to reduce the continuous spin–orbit problem to a discrete system. We denote by \(G _e\) the flow at time \(2\pi \) in the independent variable u associated with the equation of motion (15) in the coordinates \((\beta ,\gamma )\):

with \(\beta (2\pi ;\beta _0,\gamma _0,e) \) and \(\gamma (2\pi ; \beta _0,\gamma _0,e)\) denoting the solution at \(u=2\pi \) with initial conditions \((\beta _0,\gamma _0)\) at \(u = 0\). If we set \(G _e= (G^{(1)} _e, G^{(2)} _e)\), then the Poincaré map associated with (15) is

The Poincaré map \(P_e\) at time \(t = 2\pi \) associated with (8) is then given by the conjugacy

with the (time) change of coordinates from \((x,y)/(2\pi )\) to \((\beta , \gamma )\) given by

Using the map \(G _e\) is very advantageous in numerical implementation because this allows us to avoid to dealing with the Kepler’s Eq. (2). On the other hand, the map \(P _e\) is appropriate for physical interpretations, and the close and explicit relation among them (22) allows to choose the most advantageous one for the task at hand.

4.3 The conformally symplectic factor

Our next task is to find an explicit form for the conformally symplectic factor \(\lambda \) of the Poincaré map associated with (8) or equivalently (15).

By the Jacobi–Liouville theorem, the determinant of the differential of the Poincaré map is obtained by integrating the trace of the Jacobian matrix associated with (8). Since the determinant and the trace of a matrix are invariant under a change of basis, their values are the same if we compute the system (15) via the Jacobian with elements \(a_{ij}\) given by

This leads to the following expression for the conformal factor:

It is remarkable that the integral in (24) can be computed analytically in terms of \(\eta \) and \(e\). To this end, we need the following preliminary result.

Lemma 4.3

If \(e\in [0, 1)\) and \(r = a (1 - e\cos u)\), then

Proof

Since \(e\in [0,1)\), then \((\frac{a}{r})^5\) has no poles in the unit circle. By the change of variables \(z = \exp (\mathtt {i}u)\) and a straightforward application of the residue theorem, we obtain:

where \({{\,\mathrm{Res}\,}}\) denotes the residue of a holomorphic function. To compute the residue, we need to evaluate the poles \(\alpha _\pm \) which are given by \(\alpha _\pm = e^{-1}(1 \pm \sqrt{1 - e^2})\). Since \(|\alpha _- | < 1\), then by an explicit computation, we get:

\(\square \)

The above result leads to the following corollary, which gives an explicit form of the conformal factor of the spin–orbit model described by (8).

Corollary 4.4

The conformally symplectic factor of the \(2\pi \)-time map of the spin–orbit problem with tidal torque given by the system (8) has the following expression:

Remarks 4.5

-

(i)

Note that the result in Corollary 4.4 is also valid under the change of time given in (2). This means that the \(2\pi \)-time map associated with the system (15) has the same symplectic factor, since the determinant is invariant under a change of basis.

-

(ii)

The conformally symplectic factor \(\lambda \) can be either contractive, expansive or neutral. The value \(\lambda \) has a clear dynamical interpretation: At each \(2\pi \)-interval of time, the values move \(\lambda \) far away from unity. We remark that in this work we are interested to the contractive case, namely \(\eta > 0\).

5 The computation of invariant attractors

We consider the model described by Eq. (8), and we provide an algorithm (see Sect. 5.1) for the construction of KAM invariant attractors. The algorithm relies on the fact that, starting from an initial approximate solution, one can construct a better approximate solution. A possible choice for the initial approximate solution is presented in Sect. 5.2. A validation of the goodness of the solution is considered in Sect. 5.3, which provides some accuracy tests. The construction of the invariant attractors through the implementation of the algorithm described in Sect. 5.1 requires some technical procedures, precisely multiple precision arithmetic (see Appendix D) and parallel computing (see Sect. 5.5). Examples of the application of Algorithm 5.4 are given in Sect. 5.6.

5.1 An algorithm for constructing invariant attractors

Invariant attractors for the map \(P_e\) defined in (22) associated with the dissipative spin–orbit Eq. (8) can be obtained by implementing an efficient algorithm based on Newton’s method; this algorithm is also used to give a rigorous proof of invariant tori through KAM theorem, see Calleja et al. (2020b), as well as to give accurate bounds on the breakdown threshold, see Calleja et al. (2020a).

To introduce the algorithm, we need to fix the frequency \(\omega \), that we are going to choose sufficiently irrational, see Definition 5.1, and we need to introduce invariant KAM attractors, see Definition 5.2.

Definition 5.1

The number \(\omega \in {\mathbb {R}}\) is said Diophantine of class \(\tau \) and constant \(\nu \) for \(\tau \ge 1\), \(\nu >0\), and briefly denoted as \(\omega \in {\mathcal {D}}(\nu , \tau )\), if the following inequality holds:

for \(q\in {\mathbb {Z}}\), \(k\in {\mathbb {Z}}\backslash \{0\}\).

We remark that the sets of Diophantine numbers as in Definition 5.1 are such that their union over \(\nu >0\) has full Lebesgue measure in \({\mathbb {R}}\).

Next, we define as follows an invariant attractor with frequency \(\omega \) satisfying (25).

Definition 5.2

Let \(P _e:{\mathcal {M}}\rightarrow {\mathcal {M}}\) be a family of conformally symplectic maps defined on a symplectic manifold \({\mathcal {M}}\subset {\mathbb {T}}\times {\mathbb {R}}\) and depending on the drift parameter \(e\). A KAM attractor with frequency \(\omega \) is an invariant torus described by an embedding \(K _p :{\mathbb {T}}\rightarrow {\mathcal {M}}\) and a drift parameter \(e_p\), satisfying the following invariant equation for \(\theta \in {\mathbb {T}}\):

We will often write (26) in the form

where \(T_\omega \) denotes the shift function by \(\omega \), i.e., \(T _\omega (\theta ) = \theta + \omega \).

The solutions of the invariance Eq. (26) are unique up to a shift. For all real \(\alpha \), if \({\hat{K}} _p(\theta ) :=K _p(\theta + \alpha )\), then \(({\hat{K}} _p, e_p)\) is also a solution of (26). Note that all these solutions parameterize the same geometric object. In Calleja et al. (2013a), there is a simple argument showing that this is the only source of lack of local uniqueness.

We remark that the link between the parameterization of the return map and that of the continuous system is detailed in Appendix E.

Remark 5.3

It is easy to see—even in the integrable case—that to obtain an attractor with a specific frequency, we need to adjust the drift parameter.

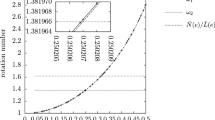

Hence, repeating the calculation several times, we obtain the drift as a function of the frequency. One can invert this—one-dimensional—function and obtain the frequency as a function of the drift, so that the two are mathematically equivalent, see Fig. 2.

For astronomers, the frequency is directly observed and it is natural to think of using the measurements of the frequency to obtain values of the drift.

Theoretical physicists may prefer that the values of the drift are known and that one predicts the frequency.

Both points of view are mathematically equivalent modulo inverting a 1-D function. In astronomy, since there is little a priori information on the values of the elastic properties of the satellites, it seems more natural to study the drift as function of the frequency. On other physical applications, where the values of the model are known from the start, the other point of view may be preferable.

One small technical problem (that can be solved) is that the function is not defined for all values of the frequency. The theory only establishes for a set of large measure. Repeatedly, not all the values of the drift parameter lead to a system that has a rotational attractor.

The starting point of the iterative process in the spin–orbit problem is then \((K,e)\), an approximate solution of the invariance equation (26),

where the “error” E is thought of as small. (Making precise the notion of small will require the introduction of norms.)

Algorithm 5.4 takes the pair \((K , e)\) and produces another approximate solution \(({{\tilde{K}}},{\tilde{e}})\), which satisfies (26) up to an error whose norm is quadratically smaller with respect to E. (Again, making all this precise requires introducing norms.)

We note that all the steps are rather explicit operations taking derivatives, shifting and performing algebraic operations. The most delicate steps are 8, 12, which involve solving cohomology equations and step 9 which involves solving a \(2 \times 2\) linear equation. The assumption of invertibility of this explicit \(2\times 2\) matrix is a non-degeneracy assumption that takes the place of the classical twist condition.

Algorithm 5.4 is based on that described in Calleja et al. (2013a) and adapted for the spin–orbit problem. Even if, for the sake of simplicity, we only present the algebraic operations in the recipe, in Calleja et al. (2013a) there are geometric interpretations that motivate the steps.

Algorithm 5.4

(Newton’s method for finding a torus in the spin–orbit problem)

- \(\star \):

-

Inputs: J as in (17), \(\omega \) a fixed frequency, an initial embedding \(K:{\mathbb {T}} \rightarrow {\mathbb {T}} \times {\mathbb {R}}\), access to the \(2\pi \)-time flow map \(G _e\) of (15) for fixed values \(\varepsilon \) and \(\eta \), change of coordinates depending on \(e\), \(\Psi _e\equiv 2\pi \left( {\begin{matrix} 1 &{} 0 \\ 0 &{} 1-e\end{matrix}} \right) \), and conformally symplectic map \(P _e\equiv \Psi ^{-1} _e\circ G _e\circ \Psi _e\).

- \(\star \):

-

Output: New K and \(e\) satisfying the invariance Eq. (26) up to a given tolerance.

- \(\star \):

-

Notation: If A is defined in \({\mathbb {T}}\), \({\overline{A}} :=\int _{{\mathbb {T}}} A\) and \(A^0 :=A - {\overline{A}}\).

- 1.:

-

\(E \leftarrow P _ e\circ K - K \circ T _\omega \),

\(E _1 \leftarrow E _1 - \mathtt {round}(E _1)\).

- 2.:

-

\(\alpha \leftarrow D K\).

- 3.:

-

\(N \leftarrow (\alpha ^t \alpha ) ^{-1}\).

- 4.:

-

\(M \leftarrow \begin{bmatrix} \alpha&J ^{-1} \alpha N \end{bmatrix}\).

- 5.:

-

\({\widetilde{E}} \leftarrow (M ^{-1}\circ T _\omega ) E\).

- 6.:

-

\(\lambda \) from Corollary 4.4.

- 7.:

-

\(P \leftarrow \alpha N\),

\(S \leftarrow (P \circ T _\omega )^t D P _ e\circ K J ^{-1} P\),

\({\widetilde{A}} \leftarrow M ^{-1} \circ T _\omega D _eP _e\circ K\).

- 8.:

-

\((B _a)^0\) solving \(\lambda (B _a) ^0 - (B _a)^0 \circ T _ \omega = - ({\widetilde{E}} _2)^0\),

\((B _b)^0\) solving \(\lambda (B _b) ^0 - (B _b)^0 \circ T _ \omega = - ({\widetilde{A}} _2)^0\).

- 9.:

-

Find \({\overline{W}} _2\), \(\sigma \) solving the linear system

$$\begin{aligned} \begin{pmatrix} \overline{S} &{} \overline{S(B _b)^0} + \overline{{\widetilde{A}} _1} \\ \lambda - 1 &{} \overline{{\widetilde{A}} _2} \end{pmatrix} \begin{pmatrix} {\overline{W}} _2 \\ \sigma \end{pmatrix} = \begin{pmatrix} - \overline{{\widetilde{E}} _1} - \overline{S(B _a)^0} \\ - \overline{{\widetilde{E}} _2} \end{pmatrix}\ . \end{aligned}$$ - 10.:

-

\((W _2)^0 \leftarrow (B _a)^0 + \sigma (B _b)^0\).

- 11.:

-

\(W _2 \leftarrow (W _2)^0 + \overline{W}_2\).

- 12.:

-

\((W _1)^0\) solving \((W _1) ^0 - (W _1)^0 \circ T _ \omega = - (S W _2)^0 - ({\widetilde{E}} _1)^0 - ({\widetilde{A}} _1)^0 \sigma \).

- 13.:

-

\(K \leftarrow K + M W\),

\(e\leftarrow e+ \sigma \).

Algorithm 5.4 needs some practical remarks:

-

Because of the periodicity condition \(K (\theta + 1) = K (\theta ) + \left( {\begin{matrix} 1 \\ 0 \end{matrix}} \right) \), one can always define the periodic map \({\widetilde{K}}(\theta ) :=K(\theta ) - \left( {\begin{matrix} \theta \\ 0 \end{matrix}} \right) \) which, generically, admits a Fourier series. Then, one obtains that

$$\begin{aligned} K\circ T _\omega = {\widetilde{K}} \circ T _\omega + \left( {\begin{matrix} \omega \\ 0 \end{matrix}} \right) . \end{aligned}$$ -

The function E in step 1 must perform the subtraction in the first component in \({\mathbb {T}}\). For a numerical implementation that can be fulfilled by the assignment \(E _ 1 \leftarrow E _1 - \mathtt {round}(E _1)\), where \(\mathtt {round}\) returns the nearest integer value of its argument. Such a function is commonly provided in almost all programming languages.

-

The matrix M in step 4 is unimodular, which allows to get an easy inverse matrix expression.

-

The quantities \(D P _e\) and \(D _eP _e\) in step 7 are needed to compute the directional variational flow of \(\Phi \), which can be done automatically using the explanations in Section C.1.

-

The stopping criterion is either that \(\Vert E\Vert \) or \(\max \{ \Vert MW \Vert , | \sigma |\}\) is smaller than a prefixed tolerance.

A common numerical representation for periodic mappings is a Fourier series in the inputs \(K_1\) and \(K _2\), which gives us a representation of \(K\equiv (K _1, K _2)\). In such a representation, we can use the Fourier transform, or its numerical version via fast Fourier transform (FFT) algorithm.

Therefore, any periodic mapping, say f, admits two representations, namely in points (or table of values) and in Fourier coefficients. The first one is just the values \((\check{f}_k) _{k = 0}^{n-1}\) of the mapping in an equispaced mesh in [0, 1) of size n. The second one is obtained by the inverse of the fast Fourier transform (IFFT), denoted by \(({\widehat{f}} _k) _{k = 0}^{n-1}\). Notice that because the function is assumed to be real-valued, the two representations can have the same size, i.e., n real values.

Depending on the step in Algorithm 5.4, it may be better to use one representation or the other. For instance, \(P _e\circ K\) in 1 and \(D P _e\circ K\), \(D _eP _e\circ K\) in 7 are better when K is in a table of values, although an ODE version of (8) in terms of Fourier coefficients can be considered.

On the other hand, the composition with \( T _\omega \) and the solution of the cohomological equations in 8 and 12 are easier if K is in Fourier series. Indeed, these equations can be solved in Fourier coefficients, using the following result whose proof is straightforward.

Lemma 5.5

Let \(\eta (\theta ) = \sum _k \eta _k \exp (2\pi \mathtt {i}k \cdot \theta )\), and let \(\omega \) be irrational. Then:

-

(i)

If \(\eta _0 = 0\), then \(\phi \circ T _\omega - \phi = \eta \) has solution \(\phi (\theta ) = \sum _k \phi _k \exp (2\pi \mathtt {i}k \cdot \theta )\) with

$$\begin{aligned} \phi _k = {\left\{ \begin{array}{ll} \frac{\eta _k}{\exp (2\pi \mathtt {i}k \cdot \omega ) - 1} &{} \text {if } k \ne 0, \\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$ -

(ii)

If \(\lambda \) is not a root of unit, then \(\phi \circ T _\omega - \lambda \phi = \eta \) has solution with coefficients

$$\begin{aligned} \phi _k = \frac{\eta _k}{\exp (2\pi \mathtt {i}k \cdot \omega ) - \lambda } \ . \end{aligned}$$

5.2 Initial approximation of the invariant curve

Repeated application of the Newton’s method from Algorithm 5.4 produces a very accurate solution provided that one can get a good enough initial approximation. In this section, we address the problem of producing such an initial approximation. Although the methods are rather general, we are going to give the results for the cases of study in this paper. We select the following two frequencies with good Diophantine conditions belonging to the class \({\mathcal {D}}( \frac{2}{3-\sqrt{5}}, 1)\), see Definition 5.1:

and

where we define \(\gamma _g^\pm = \frac{\sqrt{5}\pm 1}{2}\).

One method to provide an initial approximation is, of course, to continue from an integrable case that can be solved explicitly. Another method is to do an easy calculation of an approximate torus. Since the system is dissipative, the torus, if it exists, will be an attractor.

5.2.1 A continuation method from the integrable case

Let us start to analyze the averaged problem for which we look for the drift starting from the integrable case. Fix the frequency \(\omega \) and set \(\varepsilon =0\) in (10); then, the drift \(e\) can be chosen so that

The above equation provides the eccentricity as a function of the frequency. In fact, as noticed in Celletti and Chierchia (2009), for \(\eta \ne 0\) the solution of (10) can be written as

which shows that \(\dot{x}=\frac{{\bar{N}}(e)}{{\bar{L}}(e)}\) is a global attractor for the unperturbed, purely dissipative case \(\varepsilon =0\). Setting \(y=\dot{x}\), we can select as initial starting condition

We remark that, despite the use of the averaged version of the spin–orbit problem given in (10), to approximate an initial guess of the eccentricity in terms of the frequency, we can also use the non-averaged spin–orbit problem (8) to provide an approximated eccentricity, see Sect. 5.2.2.

5.2.2 Direct iteration

We propose a method different from Sect. 5.2.1, which takes advantage of the fact that the torus, if it exists, is an attractor. We do not need to consider the average equation and we can start from the model (8). Hence, we start by selecting a set of points at random; after a transient number of iterations (e.g., choose the transient as the inverse of the dissipation multiplied by a convenient safety factor), we expect that the orbit is close to the attractor. Then, we assess whether indeed this orbit has a rotation number.

Since not all the attractors of dissipative maps are rotational orbits, not all of them should have a rotation number. Of course, there may be situations where the orbit is a chaotic attractor that happens to have a rotation number.

Given the importance of the rotation number, there are quite a number of algorithms to compute it, e.g., Laskar et al. (1992), Athanassopoulos (1998), Laskar (1999), Alsedà et al. (2000), Laskar (1999), Seara and Villanueva (2006), Gómez et al. (2010a), Gómez et al. (2010b), Sánchez et al. (2010). We have used the method in Das et al. (2017) which speeds the convergence to the rotation number. We will present more details of the calculation in Calleja et al. (2020b). We note that the method in Das et al. (2017) gives a very good indication of the existence of an invariant circle. In Das et al. (2017), it is shown that if there is a smooth invariant circle, the convergence of the method to a rotation number is very fast. Hence, the fast convergence of the algorithm is a reasonably good evidence of the existence of a rotational torus. Of course, the convergence of the Newton’s method started in this guess is a much stronger validation of the correctness of the guess.

Figure 2 compares the two approaches explained here and in Sect. 5.2.1. The first one is straightforward, since it consists in plotting the function \({\bar{N}}(e)/{\bar{L}}(e)\) in terms of the eccentricity \(e\). The second one requires a little bit more effort, since it needs to numerically integrate (8) to compute the rotation number.

Eccentricity versus the rotation number denoted by + of the system (8) with \(\varepsilon = 10^{-4}\) and \(\eta =10^{-5}\). Frequencies as in (28) and (29). The small window is just a zoom-in near to \(\omega _2\) which shows that the averaged quantity \({\bar{N}}(e) /{\bar{L}}(e)\) approaches the non-averaged rotation number

5.2.3 Initial approximation for the embedding

Once we have fixed an initial guess for the eccentricity and an initial starting point, we can proceed to get an initial guess of the embedding K which is needed as input in Algorithm 5.4. To this end, we first perform a preliminary transient of iterations of \(G _e\), defined in (21), at the initial point \(\Psi _e(x(0), y(0)/(2\pi ))\), with (x(0), y(0)) given in (30). After that, we can follow the steps in Algorithm 5.6 to get the initial guess for K for Algorithm 5.4.

Algorithm 5.6

(Invariant curve approximation)

- \(\star \):

-

Inputs: Points \((\beta _k, \gamma _k)_{k=0}^{n-1}\subset [0,2\pi ) \times {\mathbb {R}}\) from iteration of the \(2\pi \)-time Poincaré map of (15) with parameter values \(\varepsilon , \eta \), and \(e\) and number of Lagrange interpolation points \(2j\in {\mathbb {N}}\).

- \(\star \):

-

Output: Initial embedding K for Algorithm 5.4 with a given mesh size \(n _\theta \).

- 1.:

-

Sort \((\beta _{i _k}, \gamma _{i _k}) _{k =0}^{n-1}\) such that \(\beta _{i _ 1} \le \cdots \le \beta _{i _n}\).

- 2.:

-

Mesh \({{\overline{\beta }}} _k = 2\pi k / n _\theta \) with \(k = 0, \dotsc , n _\theta -1\).

- 3.:

-

For each \(k = 0, \dotsc , n _\theta - 1\), let \({{\overline{\gamma }}} _k\) be the Lagrange interpolation centered at \((\beta _{i _k},\gamma _{i _k})\) with 2j points \(\gamma _{i _{k}-j \pmod n},\dotsc , \gamma _{i _ {k}+j \pmod n}\) and their respective abscissae.

- 4.:

-

Return the table of values \(K \equiv (\Psi _e^{-1} ({{\overline{\beta }}} _k, {{\overline{\gamma }}} _k)) _{k=0}^{n_\theta -1}\) with \(\Psi _e\) from (23).

5.3 Accuracy tests

We are now looking for \(K :{\mathbb {T}} \rightarrow {\mathbb {T}} \times {\mathbb {R}}\) and the parameter \(e\) so that the invariance Eq. (26) is verified, for \(P _e\) given in (22) and a fixed frequency \(\omega \) like in (28) or (29).

In this process, we have three main sources of error that affect the result.

- \((\mathbf{E1})\):

-

The error of the invariance condition on the table of values. This error is controlled by the Newton’s procedure.

- \((\mathbf{E2})\):

-

The error on the integration. This error is controlled by the numerical integrator when we request absolute and relative tolerances.

- \((\mathbf{E3})\):

-

The error in the discretization. To control this source of errors, we need first to estimate it, and then to be able to change the mesh when the error is too large.

Let us address the error coming from (E3). Let \({\mathcal {A}} \subset {\mathbb {T}}\) be the set of points corresponding to the table of values used in the algorithm. In the case of a Fourier representation of size \(n _\theta \), then \({\mathcal {A}} = \{k / n_\theta \}_{k = 0}^{n_\theta -1}\) is an equispaced mesh of [0, 1). After some iterations of the Newton’s Algorithm 5.4, we obtain a set of values \(\{K (\theta _i)\} _{\theta _i \in {\mathcal {A}}}\) and an eccentricity \(e\) satisfying the invariance equation in a mesh

where \(\delta \) is a fixed tolerance, e.g., \(\approx 10^{-11}\) in double precision. Note that we are not fixing the norm in (31) which typically can be the sup-norm, the analytic norm, etc.

Let us now define \(\delta ^*\) as

The computation of \(\delta ^*\) is in general difficult, and we suggest two standard heuristic alternatives.

The first option is very fast: It consists in looking at the norm of some of the “last” Fourier coefficients and using it as an estimate for the truncation error of the series. Once the Newton’s iteration has converged on a given mesh, we check the size of these coefficients. If one of them is larger than a prescribed threshold, we assume that the interpolation error is too big, and we increase the number of Fourier modes in the direction of these large coefficients.

The second option is to evaluate the error in (31) on a set of values \(\widetilde{{\mathcal {A}}} \subset {\mathbb {T}}\) different from \({\mathcal {A}}\). One can use a thinner set \(\widetilde{{\mathcal {A}}} \) to produce a better estimate of the invariance \(\delta ^*\). This procedure can be computationally expensive. An easier alternative is to consider \(\widetilde{{\mathcal {A}}} \) with the same number of points as \({\mathcal {A}}\). For instance,

with \(\upsilon \) equal to one half of the distance between points of \({\mathcal {A}}\) in the direction \(\theta _i \in {\mathcal {A}}\). In the Fourier case, \(\widetilde{{\mathcal {A}}} = \{(k + 0.5) / n _\theta \} _{k = 0}^{n _\theta - 1}\) should be enough.

Hence, we have a new mesh \(\widetilde{{\mathcal {A}}} \) which is interlaced with the initial mesh \({\mathcal {A}}\). Then, we check that

If this test is not satisfied, we add more Fourier coefficients and we go back to the Newton’s iteration given by Algorithm 5.4. If the test is satisfied, we can either stop and accept the solution or check it again with a thinner mesh.

The key is then to avoid checking with thinner meshes during the computation as much as possible, because it is too costly, and to do just a single check at the end to ensure the accuracy.

5.4 Implementation of the algorithm

Once Algorithm 5.4 is coded so that it becomes a sequence of arithmetic operations (and transcendental functions), it is almost easy to make it run in extended precision arithmetic, see Appendix D.

There are, however, some caveats:

-

One loses the hardware support.

-

The hardware optimized libraries have to be replaced by hand coded libraries. Notably, one cannot use BLAS, LAPACK, or FFTW and they have to be substituted by explicit algorithms. We have used our own implementations.

-

In iterative processes, one has to choose the stopping criteria appropriately. As standard, one writes the stopping criteria as a power of Machine epsilon.

-

For us, the most important point is that, in order to achieve high accuracy of the ODE integration with a reasonable step in a reasonable amount of time, one needs a high-order method.

We have used the Taylor’s method, which is based on computing the Taylor’s expansion of the solution of the equation to a very high order, see Appendix B.

The paper Jorba and Zou (2005a) presents a very general purpose generator of Taylor’s integrators based on automatic differentiation. If one specifies (in a very simple format) a differential equation, the program taylor (supplied and documented in Jorba and Zou (2005a)) generates automatically an efficient Taylor solver written in C. The user can select whether this Taylor solver uses standard arithmetic or extended precision arithmetic (either GMP or MPFR). It is important that Taylor’s methods can work well with different versions of the arithmetic.

One important product of the Taylor’s integrator (Gimeno et al. 2021) is that we obtain very efficient solvers of the variational equations. We will report on them in Appendix C, since they are a natural extension of the Taylor’s integrator. We note that we will not use them in the numerical experiments of this paper, but we will use them in Calleja et al. (2020b). We remark that in mission design, they appear in the method of differential corrections.

5.4.1 Using profilers to detect bottlenecks

The computation of invariant tori with the Newton method that we propose is remarkably fast when the initial guess is good enough. However, the continuation to the breakdown requires to increase the Fourier modes and then the computational cost will increase in proportion.

The key step in a continuation process is the correction of the solution for the new parameter values which are being continued. In our case, it is the Newton step. We have used a C profiler in a single continuation step with multiprecision of 55 digits to realize which are the most CPU-time-consuming parts. We did it for different values of \(\varepsilon \in \{10^{-4}, 2\cdot 10^{-4}\}\), \(\eta \in \{10^{-3}, 10^{-6}\}\), \(\omega \in \{\omega _1, \omega _2\}\), and \(N \in \{128,256\}\) number of Fourier modes getting, in all of them, similar results.

In average, around 98.1% was dedicated to the Newton step correction; inside this step around 97.6% was for the evaluation of the ODE (and its variationals) in the ODE integrator and the correction of the integration stepsize. Inside of it, around 31% was for the addition of jet transport elements, 25% for multiplication, 18% for assignments, and 6.5% for scalar multiplications.

As a consequence, we conclude that the FFT, the solvers of cohomological equations and the shiftings in Algorithm 5.4 are irrelevant in terms of CPU-time as well as the memory allocation. The second conclusion is that the ODE integration is the crucial part. This fact will be exploited and detailed in Sect. 5.5.

5.5 Parallelization

There are several operations in Algorithm 5.4 that are fully independent to each other, such as those steps which are done in a table of values of \(\theta \) and the solution of the cohomological equations using Lemma 5.5.

The use of a profiler shows that the main bottleneck, in terms of CPU-time usage, is the ODE integration involved in \(P _e\), given in (22), and its first-order directional derivatives.

A simple concurrent parallelization for each of the different numerical integrations (previously ensuring that there is non-shared memory between the threads) shows an evident speedup with respect to non-concurrent versions. In our case, we run the code with multiprecision arithmetic, in particular with MPFR, and we must be sure that each of the parallelized parts work correctly with the multiprecision. In the case of MPFR, we must initialize the precision and the rounding mode for each of the different CPUs.

Figure 3 shows the non-parallel execution times and the speedups of Algorithm 5.4 using the initial guess from Algorithm 5.6 with \(\eta = 10^{-6}\), \(\varepsilon =10^{-4}\), 135 digits of precision and different number of modes in the Fourier representation. The figure was done in an Intel Xeon Gold 5220 CPU at 2.20GHz with 18 CPUs with hyperthreading which simulates 36 CPUs.

Note that the non-parallel data in Fig. 3 are extremely well fit by

where T denotes the CPU time in seconds and N the Fourier representation size. This means that the single core time scales behave (in practice) linearly with the size of the problem. The logarithmic correction appearing in the theory of the FFT does not seem to be observable which is due to the fact that the FFT is not the main problem in the performance of our method. Of course, this is significantly better than the \(N^3\) of Newton’s methods based on inverting matrices.

Algorithm 5.4 also accepts other concurrent computations such as the FFT algorithm or the solution of the cohomology equations in steps 8 and 12. The latter did not show an important speedup, presumably because the time spent in these calculations is not so important overall. (The number of points needed is not so large, due to our reduction to 1-D.)

On the left: timings not using parallelization and fit of the times changing the number of Fourier modes. On the right: Speedup by a parallel ODE integration in step 7 of Algorithm 5.4 for different number of threads and different number of Fourier modes

5.6 A practical implementation of Algorithm 5.4

As an example of the implementation of Algorithm 5.4, we provide in Fig. 4 the construction of the invariant attractors for (8) with a small dissipation, say \(\eta =10^{-6}\), and frequencies given by (28) and (29). Figure 5 gives the results for a higher dissipation, namely \(\eta =10^{-3}\).

Both figures show different invariant attractors when the perturbative parameter \(\varepsilon \) changes by using a standard continuation procedure with interpolation. At each of these continuation steps with respect to \(\varepsilon \), we apply Algorithm 5.4 with a tolerance \(10^{-35}\), which refines the embedding \(K_\varepsilon \) and the eccentricity \(e_\varepsilon \), and we also check the accuracy, see Sect. 5.3, to ensure that the numerical solution is accurate enough.

Additionally, we perform an extra refinement at each continuation step to ensure that the plots in Figs. 4 and 5 make the value of x in the range \([0,2\pi )\). More precisely, if \(({{\tilde{K}}} _\varepsilon , e_\varepsilon )\) is the output of Algorithm 5.4, then we assign \(K _\varepsilon \leftarrow {{\tilde{K}}} _\varepsilon \) with \(K _\varepsilon ^1(0) = 0\). That is, we apply a shift \(\alpha \) on \({{\tilde{K}}} _\varepsilon \) with \(\alpha \) so that the first component of \({{\tilde{K}}} _\varepsilon \) at 0 is zero.

The results shown in Fig. 4 give evidence of the effectiveness of the method of constructing invariant attractors, using the reduction to a map and the implementation of Algorithm 5.4.

5.7 An empirical comparison between the model with time-dependent friction (8) and the average friction (10)

In this section, we present a comparison between the numerical results in the model with time-dependent dissipation (8) and the model with the averaged dissipation (10). In Appendix A, we present two rigorous justifications of the averaging procedure.

In Fig. 6, we plot the difference between the drift parameters (namely the eccentricities) of the full and averaged models for the tori with frequencies \(\omega _1\) and \(\omega _2\); such difference is small, say of the order of \(10^{-7}\), even for parameter values close to breakdown. We also note that the y-axis in Fig. 6 is just the difference (without absolute value) which means that \(e > e _{avg}\) at each of the \(\varepsilon \) values.

5.8 Comment for double precision accuracy

The computation with multiprecision is already fast enough thanks to the quadratic convergence of our method. The use of multiprecision allows to get tori of arbitrary accuracy, and in particular, it helps to reach values of the parameter close to the breakdown. These calculations can be used to identify mathematical patterns close to breakdown and to use KAM theory. However, we can also perform double or even float precision computations for values that are not close to the breakdown. As one expects, the required time to converge using the Newton process presented in this paper, see Algorithm 5.4, is smaller than in multiprecision, paying the price of accuracy.

The first reason for this speed is because we request less accuracy—around \(10^{-14}\) for double precision—which makes the algorithm converge in fewer iterations. The second reason is that we avoid the possible overhead of using a software package, such as MPFR, and we can then exploit the hardware optimizations provided by compilers.

After adapting our code to run with double precision, we detect that the values of \(\varepsilon \) for which our method still converges are around \(7.8\times 10^{-3}\) which is far from the \(\varepsilon \sim 10^{-2}\) using more accuracy, the speedup of the parallelization strategy, see Sect. 5.5, behaves similarly, and the time of a continuation step (with one CPU) decreases. It still depends on the number of Fourier modes, and some example of values is reported in Table 1.

In certain problems, it may be worth optimizing the speed (at the price of accuracy and programming time). In such cases where speed is the most important consideration, it may be worth taking advantage of modern computer architectures such as GPUs. (The very structured nature of our algorithm makes it a tantalizing possibility.) We encourage these (or any other) developments on the methods presented here.

6 Conclusions

There are several important features of the method developed in this paper for the construction of invariant tori using the return map; we highlight below some of these features.

-

The method only requires dealing with functions of a low number of variables.

-

The iterative step to construct the tori is quadratically convergent.

-

The operation count and the operation requirements are low.

-

The most costly step (integration of the equations) is very easy to parallelize efficiently. Many other steps of the algorithm involve vector operations, Fourier transforms, which also can take advantage of modern computers but here with less impact in the performance.

-

The method is well adapted to the rather anisotropic regularity of KAM tori and, in the smooth direction, takes advantage of the developments in integration of ODEs.

-

The method is reliable, since it is backed by rigorous a posteriori theorems.

-

The condition numbers required are evaluated in the approximate solution and do not involve global assumptions on the flow such as twist.

-

From the theoretical point of view, the a posteriori theorems give a justification of other heuristic methods that produce approximate solutions including asymptotic expansions and averaging methods.

Notes

We abuse the notation referring \(r(t) = a(1 - e\cos u(t))\) and \(r(u) = a (1 - e\cos u)\) as equal (similarly f(t) and f(u)). Although formally we should label them differently, they are equivalent via the Kepler’s equation.

We omit the discussion of “denormalized numbers”, perhaps the aspect of IEEE 754 that generated the most controversy.

References

Adomaitis, R.A., Kevrekidis, I.G., de la Llave, R.: A computer-assisted study of global dynamic transitions for a noninvertible system. Internat. J. Bifur. Chaos Appl. Sci. Engrg. 17(4), 1305–1321 (2007)

Alsedà, L., Llibre, J., Michał, M.: Combinatorial Dynamics and Entropy in Dimension One, Volume 5 of Advanced Series in Nonlinear Dynamics, 2nd edn. World Scientific Publishing Co., Inc., River Edge, NJ (2000)

Arnol’d, V.I.: Proof of a theorem of A. N. Kolmogorov on the invariance of quasi-periodic motions under small perturbations. Russian Math. Surveys, 18(5), 9–36 (1963)

Athanassopoulos, K.: Rotation numbers and isometries. Geom. Dedicata 72(1), 1–13 (1998)

Banyaga, A.: Some properties of locally conformal symplectic structures. Comment. Math. Helv. 77(2), 383–398 (2002)

Beletsky, V.V.: Essays on the motion of celestial bodies. Birkhäuser Verlag, Basel (2001). Translated from the Russian by Andrei Iacob

Berz, M., Makino, K.: Verified integration of ODEs and flows using differential algebraic methods on high-order Taylor models. Reliab. Comput. 4(4), 361–369 (1998)

Calleja, R.C., Alessandra C., Joan G., Rafael de la L.: Breakdown threshold of invariant attractors in the dissipative spin-orbit problem, Preprint (2020)

Calleja, R.C., Alessandra C., Joan G., Rafael de la L.: KAM estimates in the dissipative spin-orbit problem, To appear in CNSNS (2020)

Calleja, R.C., Celletti, A., de la Llave, R.: KAM estimates for the dissipative standard map. to appear in CNSNS (2020)

Calleja, R., Celletti, A.: Breakdown of invariant attractors for the dissipative standard map. CHAOS 20(1), 013121 (2010)

Calleja, R., Figueras, J.-L.: Collision of invariant bundles of quasi-periodic attractors in the dissipative standard map. Chaos 22(3), 033114 (2012)

Calleja, R., de la Llave, R.: A numerically accessible criterion for the breakdown of quasi-periodic solutions and its rigorous justification. Nonlinearity 23(9), 2029–2058 (2010)

Calleja, R., Celletti, A., de la Llave, R.: A KAM theory for conformally symplectic systems: efficient algorithms and their validation. J. Differ. Equ. 255(5), 978–1049 (2013)

Celletti, A.: Stability and Chaos in Celestial Mechanics. Berlin; published in association with Praxis Publishing, Chichester, Springer-Verlag (2010)

Celletti, A.: Analysis of resonances in the spin-orbit problem in celestial mechanics: higher order resonances and some numerical experiments. II. Z. Angew. Math. Phys. 41(4), 453–479 (1990)

Celletti, A.: Analysis of resonances in the spin-orbit problem in celestial mechanics: the synchronous resonance. I. Z. Angew. Math. Phys. 41(2), 174–204 (1990)

Celletti, A., Chierchia, L.: Quasi-periodic attractors in celestial mechanics. Arch. Ration. Mech. Anal. 191(2), 311–345 (2009)

Celletti, A., Lhotka, C.: Transient times, resonances and drifts of attractors in dissipative rotational dynamics. Commun. Nonlinear Sci. Numer. Simul. 19(9), 3399–3411 (2014)

Celletti, A., Pucacco, G., Stella, D.: Lissajous and halo orbits in the restricted three-body problem. J. Nonlinear Sci. 25(2), 343–370 (2015)

Correia, A.C.M., Laskar, J.: Mercury’s capture into the 3/2 spin-orbit resonance as a result of its chaotic dynamics. Nature, 429(6994), 848–850 (2004)

Das, S., Saiki, Y., Sander, E., Yorke, J.A.: Quantitative quasiperiodicity. Nonlinearity 30(11), 4111–4140 (2017)

Fousse, L., Hanrot, G., Lefèvre. V., Pélissier, P., Zimmermann, P.: MPFR: a multiple-precision binary floating-point library with correct rounding. ACM Trans. Math. Software, 33(2), Art. 13, 15, (2007). https://www.mpfr.org

Froeschlé, C., Lega, E., Gonczi, R.: Fast Lyapunov indicators. Appl. Asteroidal Motion Celestial Mech. Dynam. Astronom. 67(1), 41–62 (1997)

Gimeno, J., Jorba-Cuscó À. Jorba, M., Miguel, N., Zou, M.: Numerical integration of high order variational equations of odes. (2021)

Gómez, G., Mondelo, J.M.: The dynamics around the collinear equilibrium points of the RTBP. Phys. D 157(4), 283–321 (2001)

Gómez, G., Mondelo, J.-M., Simó, C.: A collocation method for the numerical Fourier analysis of quasi-periodic functions. I. Numerical tests and examples. Discrete Contin. Dyn. Syst. Ser. B 14(1), 41–74 (2010)

Gómez, G., Mondelo, J.-M., Simó, C.: A collocation method for the numerical Fourier analysis of quasi-periodic functions. II. Analytical error estimates. Discrete Contin. Dyn. Syst. Ser. B 14(1), 75–109 (2010)

Griewank, A., Walther, A.: Evaluating derivatives. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA, second edition, 2008. Principles and techniques of algorithmic differentiation

Hairer, E., Nø rsett, S.P., Wanner, G.: Solving ordinary differential equations. I, volume 8 of Springer Series in Computational Mathematics. Springer-Verlag, Berlin, second edition, 1993. Nonstiff problems

Hald, O.H.: On a Newton-Moser type method. Numer. Math. 23, 411–426 (1975)

Hale, K.J.: Ordinary differential equations, 2nd edn. Robert E. Krieger Publishing Co. Inc, Huntington, N.Y. (1980)

Haro, À., Canadell, M., Figueras, J.-L., Luque, A., Mondelo, J.-M.: The parameterization method for invariant manifolds, volume 195 of Applied Mathematical Sciences. Springer, [Cham], 2016. From rigorous results to effective computations

Haro, A., de la Llave, R.: Spectral theory and dynamical systems. preprint (2002)

Haro, A., Mondelo, J.M.: Flow map parameterization methods for invariant tori in hamiltonian systems. (2021)

Haro, À., de la Llave, R.: A parameterization method for the computation of invariant tori and their whiskers in quasi-periodic maps: numerical algorithms. Discrete Contin. Dyn. Syst. Ser. B 6(6), 1261–1300 (2006). (electronic)

Jorba, À., Masdemont, J.: Nonlinear dynamics in an extended neighbourhood of the translunar equilibrium point. In: Hamiltonian systems with three or more degrees of freedom (S’Agaró, 1995), volume 533 of NATO Adv. Sci. Inst. Ser. C Math. Phys. Sci., pp. 430–434. Kluwer Acad. Publ., Dordrecht (1999)

Jorba, À., Olmedo, E.: On the computation of reducible invariant tori on a parallel computer. SIAM J. Appl. Dyn. Syst. 8(4), 1382–1404 (2009)

Jorba, À., Zou, M.: A software package for the numerical integration of ODEs by means of high-order Taylor methods. Experiment. Math. 14(1), 99–117 (2005)

Knuth, D.E.: The Art of Computer Programming. Vol. 2: Seminumerical Algorithms, 3rd edn. Addison-Wesley Publishing Co., Reading (1997)

Kolmogorov, A.N.: On conservation of conditionally periodic motions for a small change in Hamilton’s function. Dokl. Akad. Nauk SSSR (N.S.), 98, 527–530 (1954). English translation in Stochastic Behavior in Classical and Quantum Hamiltonian Systems (Volta Memorial Conf., Como, 1977), Lecture Notes in Phys., 93, pages 51–56. Springer, Berlin, (1979)

Kumar, B., Anderson, R.L., de la Llave, R.: Rapid and accurate computation of whiskered tori and their manifolds near resonances in periodically perturbed planar circular restricted 3-body problems. (2021)

Kumar, B., Anderson, R.L., de la Llave, R.: Using GPU’s and the parameterization method for rapid search and refinement of connections between tori in periodically perturbed planar circular restricted 3-body problem. (AAS-349) (2021)

Laskar, J.: Introduction to frequency map analysis. In: Hamiltonian systems with three or more degrees of freedom (S’Agaró, 1995), volume 533 of NATO Adv. Sci. Inst. Ser. C Math. Phys. Sci., pages 134–150. Kluwer Acad. Publ., Dordrecht, (1999)

Laskar, J., Froeschlé, C., Celletti, A.: The measure of chaos by the numerical analysis of the fundamental frequencies. Appl. Stand. Mapping Phys. D 56(2–3), 253–269 (1992)

Macdonald, G.J.F.: Tidal friction. Rev. Geophys. Space Phys. 2, 467–541 (1964)

Mather, J.N.: Characterization of Anosov diffeomorphisms. Nederl. Akad. Wetensch. Proc. Ser. A 71 = Indag. Math. 30, 479–483 (1968)

Moser, J.: On invariant curves of area-preserving mappings of an annulus. Nachr. Akad. Wiss. Göttingen Math.-Phys. Kl. II 1962, 1–20 (1962)

Moser, J.: Stable and Random Motions in Dynamical Systems. Princeton University Press, Princeton, N. J. (1973)

Olikara, Z.P.: Computation of quasi-periodic tori and heteroclinic connections in astrodynamics using collocation techniques. ProQuest LLC, Ann Arbor, MI (2016). Thesis (Ph.D.)–University of Colorado at Boulder

Peale, S.J.: The free precession and libration of Mercury. Icarus 178(1), 4–18 (2005)

Rall, L.B., Corliss, G.F.: An introduction to automatic differentiation. In: Computational Differentiation (Santa Fe, NM, 1996), pp. 1–18. SIAM, Philadelphia, PA (1996)

Sánchez, J., Net, M., Simó, C.: Computation of invariant tori by Newton-Krylov methods in large-scale dissipative systems. Phys. D 239(3–4), 123–133 (2010)

Sanders, J.A., Verhulst, F., Murdock, J.: Averaging methods in nonlinear dynamical systems, volume 59 of Applied Mathematical Sciences. Springer, New York, second edition (2007)

Seara, T.M., Villanueva, J.: On the numerical computation of Diophantine rotation numbers of analytic circle maps. Phys. D 217(2), 107–120 (2006)

Stefanelli, Letizia, Locatelli, Ugo: Kolmogorov’s normal form for equations of motion with dissipative effects. Discrete Contin. Dynam. Systems 17(7), 2561–2593 (2012)

Stefanelli, L., Locatelli, U.: Quasi-periodic motions in a special class of dynamical equations with dissipative effects: a pair of detection methods. Discrete Contin. Dyn. Syst. Ser. B 20(4), 1155–1187 (2015)

Wisdom, J., Peale, S.J., Mignard, F.: The chaotic rotation of Hyperion. Icarus 58(2), 137–152 (1984)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Amadeu Delshams.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.