Abstract

Purpose

PET measures of amyloid and tau pathologies are powerful biomarkers for the diagnosis and monitoring of Alzheimer’s disease (AD). Because cortical regions are close to bone, quantitation accuracy of amyloid and tau PET imaging can be significantly influenced by errors of attenuation correction (AC). This work presents an MR-based AC method that combines deep learning with a novel ultrashort time-to-echo (UTE)/multi-echo Dixon (mUTE) sequence for amyloid and tau imaging.

Methods

Thirty-five subjects that underwent both 11C-PiB and 18F-MK6240 scans were included in this study. The proposed method was compared with Dixon-based atlas method as well as magnetization-prepared rapid acquisition with gradient echo (MPRAGE)- or Dixon-based deep learning methods. The Dice coefficient and validation loss of the generated pseudo-CT images were used for comparison. PET error images regarding standardized uptake value ratio (SUVR) were quantified through regional and surface analysis to evaluate the final AC accuracy.

Results

The Dice coefficients of the deep learning methods based on MPRAGE, Dixon, and mUTE images were 0.84 (0.91), 0.84 (0.92), and 0.87 (0.94) for the whole-brain (above-eye) bone regions, respectively, higher than the atlas method of 0.52 (0.64). The regional SUVR error for the atlas method was around 6%, higher than the regional SUV error. The regional SUV and SUVR errors for all deep learning methods were below 2%, with mUTE-based deep learning method performing the best. As for the surface analysis, the atlas method showed the largest error (> 10%) near vertices inside superior frontal, lateral occipital, superior parietal, and inferior temporal cortices. The mUTE-based deep learning method resulted in the least number of regions with error higher than 1%, with the largest error (> 5%) showing up near the inferior temporal and medial orbitofrontal cortices.

Conclusion

Deep learning with mUTE can generate accurate AC for amyloid and tau imaging in PET/MR.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Amyloid deposits and tau neurofibrillary tangles (NFTs), the two neuropathological hallmarks of Alzheimer’s disease (AD), accumulate decades before neurodegeneration and symptomatic onset and are essential signs for early AD diagnosis. Positron emission tomography (PET) is a powerful imaging tool that has been used to identify and visualize amyloid deposits and progression for over a decade [1, 2]. Recently, high-affinity radiolabels have also been successfully developed for PET imaging of tau NFTs [3, 4]. For both amyloid and tau PET imaging, accurate quantification of the regional cortical uptake is critical for the diagnosis and progression monitoring of AD.

Simultaneous PET/magnetic resonance (MR) has been readily used for neurological applications due to its functional and metabolic imaging capability from PET and MR, excellent soft tissue contrast from MR, as well as benefits in partial-volume and motion corrections. As MR signals arising from protons are not directly related to photon attenuation coefficients, no simple transforms can perform PET attenuation correction (AC) based on MR images. Because the cortical regions are close to the bone, quantitation accuracy of amyloid and tau imaging can be significantly compromised by AC errors in PET/MR. In addition, standardized uptake value ratio (SUVR), a metric widely used for assessment in amyloid and tau PET imaging, can be easily affected by AC errors since the cerebellum cortex (i.e., reference region widely used to calculate SUVR) is close to complicated bone structures (e.g., mastoid process) of the skull [5]. To leverage the quantitative merits of PET/MR for amyloid and tau imaging, accurate AC is indispensable.

Various methods have been proposed for AC in PET/MR [6, 7]. One category of methods segments MR images (e.g., T1-weighted, Dixon, or ultrashort time-to-echo (UTE)/zero time-to-echo (ZTE) images) into different tissue types (i.e., water, fat and bone) and assigns attenuation coefficient to each tissue type to produce the attenuation map [8,9,10,11,12,13]. Another category of methods produces attenuation maps by non-rigidly registering MR images to atlas generated from populational computed tomography (CT) and MR image pairs [14,15,16,17]. Machine learning and joint estimation methods have also been explored [18,19,20,21]. Recently, a UTE/multi-echo Dixon (mUTE) sequence-based method has been proposed to take full advantage of MR physics for AC [22]. The mUTE sequence integrates UTE for imaging short-T2 components (i.e., bone) and multi-echo Dixon for robust water/fat separation into a single acquisition, which enables a physical compartmental model to estimate continuous distributions of attenuation coefficients. However, deficiency exists in the abovementioned MR AC methods. For the segmentation-based method, segmentation errors were observed at boundaries between soft tissue, bone, and air, especially near nasal and mastoid-process regions [23, 24]. The atlas-based method cannot account for pathological regions which are not part of the atlas, and is vulnerable to registration errors and time consuming [10, 25]. Accuracy of the joint-estimation method depends on time-of-flight (TOF) resolution and its computational complexity is another concern. For the mUTE sequence, parameter estimation based on the physical compartmental model is complicated and nontrivial.

Recently, deep learning has shown promising results for synthetic data generation. Various studies based on convolutional neural networks (CNNs) have been proposed for pseudo-CT generation, and showed better results compared with conventional methods for the brain [26,27,28,29,30,31,32,33], pelvis [34, 35], and whole-body [36] regions. Specifically, our group has developed a novel Group-Unet structure [28] which can efficiently handle scenarios with multiple network-input channels [28] and utilize network parameters more efficiently, compared with the popular U-net structure [37]. However, the deep learning methods were mostly assessed using 18F-FDG datasets. It is unclear how these methods could work for amyloid and tau PET imaging, which has higher accuracy demand for PET AC along with the need of comprehensive regional and pixelwise analysis. In addition, existing deep learning methods used Dixon/T1-weighted MR images as the network input. It is unclear whether using more AC-specific MR images as the network input can generate improved results.

In this work, we propose a new deep learning-based AC method, CNN-mUTE, for amyloid and tau imaging by leveraging physics information from the mUTE sequence and the efficiency of Group-Unet in handling multiple input channels of mUTE MR images. The performance of the proposed method was evaluated using in vivo imaging data acquired from thirty-five subjects that underwent both 11C-PiB and 18F-MK6240 scans. We compared the performance of the proposed method with Dixon-based atlas method available in clinical PET/MR scanners, as well as deep learning-based AC methods that use T1-weighted image or Dixon as network input. Freesurfer [38] was used to derive accurate personalized brain masks to evaluate regional errors related to amyloid and tau imaging. Surface analysis was additionally performed to understand errors for the whole cortical regions.

Materials and methods

Participates

Thirty-five subjects (three patients with mild-cognitive impairment and thirty-two cognitively healthy volunteers, 19 males and 16 females, 68.6 ± 11.6 years old [range 47–86]) recruited for Harvard Aging Brain Study (HABS) were scanned under a study protocol that was approved by Massachusetts General Hospital (MGH) Institutional Review Board (IRB). Written informed consent was obtained from all subjects before participation in the study.

MR acquisition

MR acquisitions were performed on a 3-T MR scanner (MAGNETOM Trio, Siemens Healthcare, Erlangen, Germany) using body coil for transmission and 12-channel head coil for reception. T1-weighted anatomical images were acquired using magnetization-prepared rapid acquisition with gradient echo (MPRAGE) sequence. 3D mUTE sequence (Supplementary Fig. 1) [22] was used to acquire Dixon and mUTE images, with the following imaging parameters: image size = 128 × 128 × 128, voxel size = 1.875 × 1.875 × 1.875 mm3, hard radiofrequency (RF) pulse with flip angle = 15° and pulse duration = 100 μs, repetition time (TR) = 8.0 ms, maximum readout gradient amplitude = 19.57 mT/m, maximum readout gradient slew rate = 48.9 mT/m/ms, and acquisition time = 52 s. The final reconstruction from the mUTE sequence resulted in seven images: one UTE image and six multi-echo Dixon images with corresponding echo times (TEs) of 0.07, 2.1, 2.3, 3.6, 3.7, 5.0, and 5.2 ms, respectively.

PET acquisition

Two separate PET/CT examinations were performed for each subject on a whole-body PET/CT scanner (Discovery MI, GE Healthcare, Milwaukee, WI, USA): one is amyloid PET imaging by the administration of 11C-PiB and the other is tau PET imaging based on 18F-MK-6240. For amyloid imaging, the imaging protocol consisted of 555-MBq bolus injection of 11C-PiB followed by a dynamic scan over 70 min. PET Data from 55 to 60 min post-injection were used for evaluation. For tau imaging, the imaging protocol consisted of 185-MBq bolus injection of 18F-MK-6240 followed by a dynamic scan over 120 min. Only data from 85 to 90 min post-injection were evaluated. For both amyloid and tau imaging, PET images were reconstructed using the ordered subset expectation maximization (OSEM) algorithm with point spread function (PSF) modeling with two iterations and sixteen subsets. The voxel size was 1.17 × 1.17 × 2.80 mm3 and the image size was 256 × 256 × 89. The same CT imaging protocol was used for both amyloid and tau imaging, with tube peak voltage = 120 kVp, tube current time product 30 = mAs, in-plane resolution = 0.56 × 0.56 mm2, and slice-thickness = 1 mm.

Neural network details

For the Unet structure [37], the feature size after the first convolutional module needs to be enlarged to fully utilize the input information from multi-contrast MR images or neighboring slices, which increases the total number of parameters dramatically. In this work, Group-Unet structure was employed to efficiently handle scenarios with multiple network-input channels [28] and to utilize network parameters more efficiently, based on the assumption that when the network goes deeper, the spatial information becomes discrete and the traditional convolution module can be replaced by the group convolution module [39]. Details of the Group-Unet structure are shown in Supplementary Fig. 2.

To construct the training pairs, MR images were registered to CT images through rigid transformation using the ANTs software [40]. Random rotation and permutation were performed on the training pairs to avoid over-fitting. When random rotation was applied, interpretation was performed with the voxel size and image size fixed. Though image resolution will be compromised a little due to the interpretation operation, this will not be a problem for attenuation map generation as the attenuation map does not have complicated structures as nature images. The Group-Unet employed in this work was based on 2D convolutions, instead of 3D convolutions, to reduce the GPU memory usage and training parameters, and hence was a 2D network. As PET AC is based on 3D CT images, the network outputs were further combined to construct 3D pseudo-CT images. To reduce the axial aliasing artifacts, four neighboring slices were supplied as the additional network input [41]. With Dixon and MPRAGE images as the network input, the input channel was 5 (neighboring slices); with mUTE images as the network input, the input channel was 5 (neighboring slices) × 7 (number of images). As a result, two network structures were needed due to the varying of network input channels. The only difference between the two networks was the number of input channels (5 vs. 35). Other settings of the network, e.g., number of features after each convolution, were the same. The training parameters were 6.02 million and 6.03 million for the network with 5 input channels and the network with 35 input channels, respectively.

The training objective function was based on the L1-norm loss calculated between the pseudo and the ground-truth CT images. The network was implemented in TensorFlow 1.14 with the Adam optimizer. The learning rate and the decay rates of the default settings in TensorFlow were used. Fivefold cross-validation was utilized to make full use of all the data. The batch size was set to 30, and 600 epochs were used as the training cost function becomes steady after 600 epochs. The training time running 600 epochs was 6.7 h based on the Nvidia GTX 1080 Ti GPU.

Pseudo-CT image analysis

To evaluate the quality of generated pseudo-CT images, we first calculated the relative validation loss of the CT images as:

where CTtruth is the ground-truth CT image and CTgenerated is the generated pseudo-CT image. As bone regions are close to the cortex, they were additionally quantified using the Dice coefficient. Regions with attenuation coefficient higher than 0.1083 cm−1 (200 HU unit) were classified as the bone area.

PET image analysis

To enable better registration between PET and MR images, the PET image of interest was first registered to the first 8-min frame of the dynamic scan, through which it was registered to the MPRAGE image based on rigid registration. Freesurfer was used for cortical parcellation based on the MPRAGE image to get the region of interests (ROIs) for amyloid and tau quantification. Based on the Braak staging [42], the cortical regions crucial to amyloid burden calculation [43, 44]—superior frontal, rostral anterior cingulate, posterior cingulate, precuneus, inferior parietal, supramarginal, medial orbitofrontal, middle temporal, and superior temporal—were used as ROIs for amyloid quantification. As for the quantification of tau imaging, the early Braak stage-related cortices—hippocampus, entorhinal, parahippocampal, inferior temporal, fusiform, posterior cingulate, lingual, and insula—were employed as the evaluation ROIs. The ROIs for the amyloid imaging quantification were mostly near the upper regions of the brain, and the ROIs chosen for the tau imaging quantification were concentrated near the middle/inferior temporal regions. By evaluating the AC quality of both amyloid and tau imaging, the performance of different AC methods was examined for most of the cortex regions.

Compared with amyloid deposits which are more heterogeneously and randomly distributed, the pathway of tau NFTs is more precise. Resolving the topographic patterns of tau retention in PET can enable better disease monitoring, which makes surface analysis a necessary addition to regional analysis for tau imaging. In this work, we have performed additional surface analysis for tau imaging to comprehensively evaluate and understand different AC methods. The surface map of the PET error image was generated for each tau-imaging dataset and was registered to the FSAverage template in Freesurfer to construct the averaged surface map.

For both regional and surface analysis, the relative PET error was used and calculated as:

where PETpseudoCT and PETtrueCT refer to the reconstructed PET images with AC using the pseudo-CT images generated from different MR AC methods and the ground-truth CT image, respectively. For regional analysis, PETpseudoCT and PETtrueCT indicate the mean values inside the specified ROI. For surface analysis, PETpseudoCT and PETtrueCT indicate the values at the vertices. With respect to the global PET analysis, Bland-Altman plots were drawn for both amyloid and tau imaging regarding SUVR to understand PET-error distributions of different methods.

Comparison methods

One popular method available in clinical PET/MR scanners is the Dixon image-based atlas method [45]. This method is denoted as Atlas-Dixon and is adopted for comparison in this work. For the deep learning–based approach, apart from the proposed CNN-mUTE method, we have tested two other cases with different MR images: MPRAGE and Dixon images were used as network input for additional comparison, which are denoted as CNN-MPRAGE and CNN-Dixon, respectively.

Results

Pseudo-CT analysis

Figure 1 shows one example of the generated pseudo-CT images using different methods along with the ground-truth CT image. All deep learning methods generated more accurate bone distributions compared with the Atlas-Dixon method, with CNN-mUTE revealing the most accurate bone details. For all thirty-five datasets, the relative validation loss and the Dice coefficients of the bone regions are shown in Table 1. As observed from the table, deep learning methods showed higher quantification accuracy compared with the Atlas-Dixon method. CNN-MPRAGE and CNN-Dixon showed similar performance and CNN-mUTE showed higher quantification accuracy compared with both CNN-MPRAGE and CNN-Dixon, regarding both relative validation loss and Dice coefficients.

Regional and global PET analysis

We have embedded the generated pseudo-CT images into PET image reconstruction to evaluate PET AC accuracy. Figure 2 shows one amyloid-imaging example of the PET errors (PETpseudoCT − PETtrueCT, unit: SUV) using different methods of the same subject as shown in Fig. 1. The CT/pseudo-CT images and the reconstructed PET images were also presented in the figure to better observe the source of errors. PET error images from the datasets with the largest and the smallest PET regional relative errors using the proposed CNN-mUTE method are shown in Supplementary Fig. 3 and Supplementary Fig. 4, respectively. These correspond to the best and worst cases using the proposed CNN-mUTE method. From these three examples, we can observe that the PET errors from the deep learning methods were smaller than those from the Atlas-Dixon method, especially alongside the bone regions.

Three views of the CT/pseudo-CT images, PET images (11C-PiB, unit: SUV), and the corresponding PET error images (PETpseudoCT − PETtrueCT, unit: SUV) for one subject. For each view, the first row shows the ground-truth CT image (first column) and the pseudo-CT images generated by Atlas-Dixon (second column), CNN-MPRAGE (third column), CNN-Dixon (fourth column), and CNN-mUTE (fifth column), respectively; the second row shows the PET images reconstructed using the ground-truth CT image and different pseudo-CT images; the third row shows the corresponding PET error images for different methods

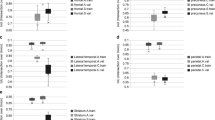

Figure 3 shows the regional PET analysis based on all thirty-five subjects from the amyloid-imaging dataset regarding SUV and SUVR. Figure 4 shows the same analysis based on the tau-imaging dataset. The regional errors from all deep learning methods were smaller than 2% for both amyloid and tau imaging. Although the SUV error in some cortex regions, e.g., hippocampus and posterior cingulate, was relatively small (e.g., lower than 2%) for the Atlas-Dixon method, those small-SUV-error cortex regions displayed much larger error for SUVR since the error in the cerebellum was significant (e.g., around 6%). As SUVR is the metric widely used for amyloid and tau imaging, the Atlas-Dixon method may cause significant errors for amyloid and tau imaging quantification.

Figure 5 shows the Bland-Altman plots for tau imaging regarding SUVR. Based on the distributions, we can see that the deep learning–based methods showed narrower error ranges compared with the Atlas-Dixon method. The error ranges from CNN-MPRAGE and CNN-Dixon were similar, and CNN-mUTE method showed relatively smaller error range compared with both CNN-MPRAGE and CNN-Dixon. The same trend was also observed from the Bland-Altman plot for amyloid imaging regarding SUVR presented in Supplementary Fig. 5.

Bland-Altman plots regarding tau imaging for (a) Atlas-Dixon, (b) CNN-MPRAGE, (c) CNN-Dixon, and (d) CNN-mUTE methods. The x-axis stands for the mean value between the PET images reconstructed using the ground-truth CT and generated pseudo-CT (0.5 × (PETpseudoCT + PETtrueCT), unit: SUVR). The y-axis stands for the difference between the PET images reconstructed using generated pseudo-CT and the ground-truth CT (PETpseudoCT − PETtrueCT, unit: SUVR)

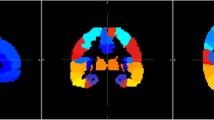

PET surface analysis

Figure 6 shows the averaged surface map for PET SUVR error of different AC methods for tau imaging. The Atlas-Dixon method showed the largest error (e.g., > 10%) at vertices inside superior frontal, lateral occipital, superior parietal, and inferior temporal cortices. The deep learning methods showed smaller error compared with the Atlas-Dixon method for almost all regions. For the deep learning methods, relatively high error (e.g., > 5%) was shown in some vertices near medial orbitofrontal and inferior temporal cortices. CNN-mUTE showed the least number of vertices with errors larger than 1%, followed by CNN-Dixon and CNN-MPRAGE.

Discussion

Compared with other PET applications, amyloid and tau imaging rely more on SUVR-based cortical quantification, which is significantly impacted by the accuracy of pseudo-CT bone map and the PET quantification in the cerebellar cortex. For tau imaging, in addition to regional analysis, evaluation of pixel-wise tracer distribution as well as the topographic patterns is necessary for better disease monitoring and early diagnosis. In this regard, specific evaluations of deep learning–based AC methods for amyloid and tau imaging are necessary. In this work, we have performed AC for amyloid and tau imaging using the proposed CNN-mUTE method, and compared it with deep learning methods based on Dixon and MPRAGE as well as the vendor-available Atlas-Dixon method through detailed regional and surface analysis. Results showed that for SUVR quantification, the Atlas-Dixon method showed relatively large errors. The deep learning methods resulted in lower quantification error compared with the Atlas-Dixon method in both regional and surface analyses. Specifically, the proposed CNN-mUTE method provided better PET AC, in comparison with CNN-MPRAGE and CNN-Dixon methods. This is likely due to the abundance in physical information contained in the multi-contrast images from mUTE, which consist of perfectly registered UTE images for bone imaging and multi-echo Dixon images for robust water/fat separation. Our previous work has shown that a physical model can be derived to map the mUTE images to continuous attenuation coefficient distributions of bone, fat, and water [22]. However, obtaining the model parameters requires solving a nonlinear estimation problem at each voxel, which becomes challenging in the presence of severe B0 and B1 inhomogeneities. Combining the mUTE-based AC method with deep learning further improves the accuracy and robustness of AC in PET/MR. All these results indicate that deep learning–based AC is a powerful technique to predict the attenuation map from MR images for amyloid and tau imaging, which can be further improved when network input is fed with more information of direct physical meaning.

The Group-Unet utilized in this work was developed to efficiently utilize multiple network inputs based on supervised learning [28]. We also developed a 3D Cycle-GAN network which did not need paired CT-MR images and can thus relax the training-data collection requirements [46]. Results presented in [46] showed that 3D Cycle-GAN was better than Dixon-based segmentation and atlas methods, and its performance was a little inferior to Group-Unet (Dice coefficient of the bone region was 0.76 for Group-Unet and 0.74 for Cycle-GAN). Because Cycle-GAN does not utilize the strong pixel-to-pixel loss from paired data, the results of Cycle-GAN are still very promising, especially for applications where registration or obtaining paired data is difficult. For this work, as paired CT-MR images were available, Group-Unet was preferred because of its better performance. In addition, the mUTE sequence generated multiple MR images. If Cycle-GAN was used, one of the generator networks needs to synthesize multiple pseudo-MR images from one CT image, which might not be easy to train as it is a one-to-many mapping. Based on the consideration of network performance and training difficulty, Group-Unet was employed in this work.

As shown by Fig. 3 and Fig. 4, the cerebellar cortex can be largely influenced by the inaccuracy of bone in the pseudo-CT map. This can be a problem especially for amyloid and tau imaging as SUVR, instead of SUV, is widely employed in amyloid and tau imaging to approximate the distribution volume ratio (DVR). The SUVR thresholds are often adopted to separate subjects into different clinical groups in amyloid and tau imaging. We noticed that the PET error maps for SUV and SUVR are quite different when Atlas-Dixon method was used for AC. Initially, we hypothesized that SUVR error may be smaller than SUV error as the errors in the cortex regions and the cerebellar cortex may cancel out. Results in this work demonstrated that this is not the case, as the error distribution for the cerebellar cortex and other cortices can be quite different. Deep learning–based AC methods can generate better bone maps near the cerebellar cortex and can greatly improve the overall SUVR quantification.

For the surface maps of tau imaging shown in Fig. 6, we noticed that for the medial orbitofrontal cortex and the entorhinal cortex, the deep learning–based methods still showed relatively large errors (e.g., > 5%), though smaller than those from the Atlas-Dixon method. We presume this is because the bone near the sinuses and the mastoid part of the temporal bone are much more complicated than the cranial bone, which could be more difficult to be accurately synthesized through MR images. As medial orbitofrontal cortex is one of the composite cortex regions for amyloid burden calculation [44], and inferior and middle temporal cortices are crucial for early-stage tau quantification [42], further improvements of MR AC are still needed for amyloid and tau imaging. In addition, due to limited datasets, validation datasets were not used during network training. The epoch number was fixed at 600 for all network training. This epoch-number choice is not optimal, which is one limitation of current study.

Conclusion

In this work, we have proposed an mUTE-based deep learning method for PET AC of amyloid and tau imaging. The performance of the proposed method was evaluated through regional and surface analyses on 11C-PiB and 18F-MK6240 datasets acquired on thirty-five subjects. Our results show that deep learning–based methods can generate accurate AC for SUVR quantification, with the proposed mUTE-based deep learning method achieving the best AC performance when compared with MPRAGE- and Dixon-based deep learning methods as well as the Dixon-based atlas method.

References

Klunk WE, Engler H, Nordberg A, Wang Y, Blomqvist G, Holt DP, et al. Imaging brain amyloid in Alzheimer’s disease with Pittsburgh compound-B. Ann Neurol. 2004;55:306–19.

Choi SR, Golding G, Zhuang Z, Zhang W, Lim N, Hefti F, et al. Preclinical properties of 18F-AV-45: a PET agent for Aβ plaques in the brain. J Nucl Med. 2009;50:1887–94.

Chien DT, Bahri S, Szardenings AK, Walsh JC, Mu F, Su M-Y, et al. Early clinical PET imaging results with the novel PHF-tau radioligand [F-18]-T807. J Alzheimers Dis. 2013;34:457–68.

Hostetler ED, Walji AM, Zeng Z, Miller P, Bennacef I, Salinas C, et al. Preclinical characterization of 18F-MK-6240, a promising PET tracer for in vivo quantification of human neurofibrillary tangles. J Nucl Med. 2016;57:1599–606.

Dickson JC, O’Meara C, Barnes A. A comparison of CT-and MR-based attenuation correction in neurological PET. Eur J Nucl Med Mol Imaging. 2014;41:1176–89.

Cabello J, Lukas M, Kops ER, Ribeiro A, Shah NJ, Yakushev I, et al. Comparison between MRI-based attenuation correction methods for brain PET in dementia patients. Eur J Nucl Med Mol Imaging. 2016;43:2190–200.

Ladefoged CN, Law I, Anazodo U, Lawrence KS, Izquierdo-Garcia D, Catana C, et al. A multi-centre evaluation of eleven clinically feasible brain PET/MRI attenuation correction techniques using a large cohort of patients. NeuroImage. 2017;147:346–59.

Martinez-Möller A, Souvatzoglou M, Delso G, Bundschuh RA, Chefd’hotel C, Ziegler SI, et al. Tissue classification as a potential approach for attenuation correction in whole-body PET/MRI: evaluation with PET/CT data. J Nucl Med. 2009;50:520–6.

Keereman V, Fierens Y, Broux T, De Deene Y, Lonneux M, Vandenberghe S. MRI-based attenuation correction for PET/MRI using ultrashort echo time sequences. J Nucl Med. 2010;51:812–8.

Berker Y, Franke J, Salomon A, Palmowski M, Donker HCW, Temur Y, et al. MRI-based attenuation correction for hybrid PET/MRI systems: a 4-class tissue segmentation technique using a combined ultrashort-echo-time/Dixon MRI sequence. J Nucl Med. 2012;53:796–804.

Ladefoged CN, Benoit D, Law I, Holm S, Kjær A, Højgaard L, et al. Region specific optimization of continuous linear attenuation coefficients based on UTE (RESOLUTE): application to PET/MR brain imaging. Phys Med Biol. 2015;60:8047.

Sekine T, ter Voert EEGW, Warnock G, Buck A, Huellner M, Veit-Haibach P, et al. Clinical evaluation of zero-echo-time attenuation correction for brain 18F-FDG PET/MRI: comparison with atlas attenuation correction. J Nucl Med. 2016;57:1927–32.

Catana C, van der Kouwe A, Benner T, Michel CJ, Hamm M, Fenchel M, et al. Toward implementing an MRI-based PET attenuation-correction method for neurologic studies on the MR-PET brain prototype. J Nucl Med. 2010;51:1431–8.

Izquierdo-Garcia D, Hansen AE, Förster S, Benoit D, Schachoff S, Fürst S, et al. An SPM8-based approach for attenuation correction combining segmentation and nonrigid template formation: application to simultaneous PET/MR brain imaging. J Nucl Med. 2014;55:1825–30.

Burgos N, Cardoso MJ, Thielemans K, Modat M, Pedemonte S, Dickson J, et al. Attenuation correction synthesis for hybrid PET-MR scanners: application to brain studies. IEEE Trans Med Imaging. 2014;33:2332–41.

Torrado-Carvajal A, Herraiz JL, Alcain E, Montemayor AS, Garcia-Cañamaque L, Hernandez-Tamames JA, et al. Fast patch-based pseudo-CT synthesis from T1-weighted MR images for PET/MR attenuation correction in brain studies. J Nucl Med. 2016;57:136–43.

Sekine T, Buck A, Delso G, Ter Voert EE, Huellner M, Veit-Haibach P, et al. Evaluation of atlas-based attenuation correction for integrated PET/MR in human brain: application of a head atlas and comparison to true CT-based attenuation correction. J Nucl Med. 2016;57:215–20.

Mehranian A, Zaidi H. Joint estimation of activity and attenuation in whole-body TOF PET/MRI using constrained Gaussian mixture models. IEEE Trans Med Imaging. 2015;34:1808–21.

Huynh T, Gao Y, Kang J, Wang L, Zhang P, Lian J, et al. Estimating CT image from MRI data using structured random forest and auto-context model. IEEE Trans Med Imaging. 2016;35:174–83.

Johansson A, Karlsson M, Nyholm T. CT substitute derived from MRI sequences with ultrashort echo time. Med Phys. 2011;38:2708–14.

Zaidi H, Diaz-Gomez M, Boudraa A, Slosman D. Fuzzy clustering-based segmented attenuation correction in whole-body PET imaging. Phys Med Biol. 2002;47:1143.

Han P, Horng D, Gong K, Petibon Y, Johnson K, Ouyang J, et al. MR-based PET attenuation correction using 3D UTE/multi-echo Dixon: in vivo results. J Nucl Med. 2019;60:172.

Aasheim LB, Karlberg A, Goa PE, Håberg A, Sørhaug S, Fagerli U-M, et al. PET/MR brain imaging: evaluation of clinical UTE-based attenuation correction. Eur J Nucl Med Mol Imaging. 2015;42:1439–46.

Lee JS. A review of deep Learning-based approaches for attenuation correction in positron emission tomography. IEEE Trans Radiat Plasma Med Sci. 2020. https://doi.org/10.1109/TRPMS.2020.3009269.

Wagenknecht G, Kaiser H-J, Mottaghy FM, Herzog H. MRI for attenuation correction in PET: methods and challenges. MAGMA. 2013;26:99–113.

Han X. MR-based synthetic CT generation using a deep convolutional neural network method. Med Phys. 2017;44:1408–19.

Liu F, Jang H, Kijowski R, Bradshaw T, McMillan AB. Deep Learning MR Imaging--based attenuation correction for PET/MR imaging. Radiology. 2017:170700.

Gong K, Yang J, Kim K, El Fakhri G, Seo Y, Li Q. Attenuation correction for brain PET imaging using deep neural network based on Dixon and ZTE MR images. Phys Med Biol. 2018.

Ladefoged CN, Marner L, Hindsholm A, Law I, Højgaard L, Andersen FL. Deep learning based attenuation correction of PET/MRI in pediatric brain tumor patients: evaluation in a clinical setting. Front Neurosci. 2018;12.

Spuhler KD, Gardus J, Gao Y, DeLorenzo C, Parsey R, Huang C. Synthesis of patient-specific transmission image for PET attenuation correction for PET/MR imaging of the brain using a convolutional neural network’. J Nucl Med. 2018:jnumed--118.

Hwang D, Kim KY, Kang SK, Seo S, Paeng JC, Lee DS, et al. Improving the accuracy of simultaneously reconstructed activity and attenuation maps using deep learning. J Nucl Med. 2018;59:1624–9.

Arabi H, Zeng G, Zheng G, Zaidi H. Novel adversarial semantic structure deep learning for MRI-guided attenuation correction in brain PET/MRI. Eur J Nucl Med Mol Imaging. 2019:1–14.

Shiri I, Ghafarian P, Geramifar P, Leung KH-Y, Ghelichoghli M, Oveisi M, et al. Direct attenuation correction of brain PET images using only emission data via a deep convolutional encoder-decoder (Deep-DAC). Eur Radiol. 2019:1–13.

Leynes AP, Yang J, Wiesinger F, Kaushik SS, Shanbhag DD, Seo Y, et al. Direct PseudoCT generation for pelvis PET/MRI attenuation correction using deep convolutional neural networks with multi-parametric MRI: zero echo-time and Dixon deep pseudoCT (ZeDD-CT). J Nucl Med. 2017:jnumed-117.

Torrado-Carvajal A, Vera-Olmos J, Izquierdo-Garcia D, Catalano OA, Morales MA, Margolin J, et al. Dixon-VIBE deep learning (DIVIDE) pseudo-CT synthesis for pelvis PET/MR attenuation correction. J Nucl Med. 2018:jnumed--118.

Hwang D, Kang SK, Kim KY, Seo S, Paeng JC, Lee DS, et al. Generation of PET attenuation map for whole-body time-of-flight 18F-FDG PET/MRI using a deep neural network trained with simultaneously reconstructed activity and attenuation maps. J Nucl Med. 2019:jnumed. 118.219493.

Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. Int Conf Med Image Comput Comput Assist Intervention. 2015:234–41.

Fischl B. FreeSurfer Neuroimage. 2012;62:774–81.

Xie S, Girshick R, Dollar P, Tu Z, He K. Aggregated residual transformations for deep neural networks. IEEE Conf Comput Vis Pattern Recognit (CVPR).

Avants BB, Tustison N, Song G. Advanced normalization tools (ANTS). Insight J. 2009;2:1–35.

Kim K, Wu D, Gong K, Dutta J, Kim JH, Son YD, et al. Penalized PET reconstruction using deep learning prior and local linear fitting. IEEE Trans Med Imaging. 2018;37:1478–87.

Braak H, Braak E. Neuropathological stageing of Alzheimer-related changes. Acta Neuropathol. 1991;82:239–59.

Johnson KA, Schultz A, Betensky RA, Becker JA, Sepulcre J, Rentz D, et al. Tau positron emission tomographic imaging in aging and early Alzheimer disease. Ann Neurol. 2016;79:110–9.

Hedden T, Van Dijk KR, Becker JA, Mehta A, Sperling RA, Johnson KA, et al. Disruption of functional connectivity in clinically normal older adults harboring amyloid burden. J Neurosci. 2009;29:12686–94.

Wollenweber SD, Ambwani S, Delso G, Lonn AHR, Mullick R, Wiesinger F, et al. Evaluation of an atlas-based PET head attenuation correction using PET/CT & MR patient data. IEEE Trans Nucl Sci. 2013;60:3383–90.

Gong K, Yang J, Larson PEZ, Behr SC, Hope T, Seo Y, et al. MR-based attenuation correction for brain PET using 3D cycle-consistent adversarial network. IEEE Trans Radiat Plasma Med Sci. 2020. https://doi.org/10.1109/TRPMS.2020.3006844.

Funding

This work was financially supported by the National Institutes of Health under grants RF1AG052653, R21AG067422, R03EB030280, R01AG046396, and P41EB022544.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Author Quanzheng Li has received research support from Siemens Medical Solutions. Other authors declare that they have no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Advanced Image Analyses (Radiomics and Artificial Intelligence)

Electronic supplementary material

ESM 1

(DOCX 3715 kb)

Rights and permissions

About this article

Cite this article

Gong, K., Han, P.K., Johnson, K.A. et al. Attenuation correction using deep Learning and integrated UTE/multi-echo Dixon sequence: evaluation in amyloid and tau PET imaging. Eur J Nucl Med Mol Imaging 48, 1351–1361 (2021). https://doi.org/10.1007/s00259-020-05061-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00259-020-05061-w