Abstract

We discuss \(L_p\)-estimates for finite difference schemes approximating parabolic, possibly degenerate, SPDEs, with initial conditions from \(W^m_p\) and free terms taking values in \(W^m_p.\) Consequences of these estimates include an asymptotic expansion of the error, allowing the acceleration of the approximation by Richardson’s method.

1 Introduction

In this paper, spatial finite difference schemes for parabolic stochastic partial differential equations (SPDEs) are considered. Finite difference approximations for deterministic partial differential equations are well studied in the literature; we refer the reader to [1], to recent results in [3], and to the references therein. There is also a growing number of publications on finite difference schemes for SPDEs, see e.g. [2, 10, 22] and their references. In recent papers, see e.g. [6, 7, 9, 11], \(L_2\)-theory is used to estimate, in \(W^m_2\)-norms, the error of finite difference approximations for the solutions of parabolic SPDEs. Error estimates in supremum norms are then obtained via Sobolev's embedding, which requires \(2m\) to be larger than the dimension \(d\) of the state space \(\mathbb {R}^d\). Consequently, to obtain estimates in supremum norm, these papers require more spatial smoothness of the coefficients of the equation than is actually necessary, and their smoothness conditions depend on the dimension of the state space. Our aim is to overcome this problem and to generalize the results of [7] by giving \(W^m_p\)-norm estimates, assuming that the initial condition is in \(W^m_p\) and the free terms are \(W^m_p\)-valued processes. This forces us to give up some generality, but important examples, like the Zakai equation in the case of uncorrelated noises, are included. Since bounded functions, or more generally, functions with polynomial growth, can be seen as elements of suitable weighted Sobolev spaces with arbitrarily large integrability exponent \(p\), for equations with such data we get dimension-invariant conditions on the smoothness of the coefficients.

It should be noted that the \(L_p\)- and \(L_q(L_p)\)-theories of SPDEs are well developed, see e.g. [12, 15, 16]. Their results, however, will not be used, as these theories deal with uniformly parabolic SPDEs, while the equations in this paper may degenerate and become first order SPDEs.

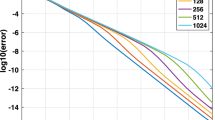

Following an idea from [14], to estimate the solutions of finite difference schemes we consider them on the whole space rather than only on a grid. Estimates of their Sobolev norms on the whole space then yield estimates of their supremum norm on the grid. For the finite difference approximations, not only is their convergence proved, but a power series expansion in the mesh size is also obtained. As in [9], this allows us to accelerate the rate of convergence using the well-known Richardson extrapolation, introduced in [20].

Finally, let us introduce some notation used throughout the paper. We consider a complete probability space \((\Omega ,\mathcal {F},P)\), equipped with a filtration \(\mathbb {F}=(\mathcal {F}_t)_{t\ge 0}\) and carrying a sequence of independent \(\mathcal {F}_t\)-Wiener martingales \((w^r)_{r=1}^{\infty }\). We use the notation \(\mathcal {P}\) for the \(\sigma \)-algebra of the predictable subsets of \(\Omega \times [0,T]\). It is assumed that \(\mathcal {F}_0\) contains every \(P\)-zero set. For \(p\ge 2\) and \(m\ge 0\), \(W^m_p\) denotes the Sobolev space with exponent \(p\) and order \(m\). For integer \(m\), this is the space of functions whose generalized partial derivatives up to order \(m\) are in \(L_p\); for non-integer real \(m\), \(W^m_p\) is a fractional Sobolev space, also known in the literature as a Bessel potential space, and for its definition we refer to [21]. The Sobolev spaces of \(l_2\)-valued functions will be denoted by \(W^m_p(l_2)\). We use the notation

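for \(v=(v^1,\ldots ,v^d)\in \mathbb {R}^d\), and

$$\begin{aligned} D^{\alpha }=D_1^{\alpha _1}\cdots D_d^{\alpha _d} \end{aligned}$$

for multi-indices \(\alpha =(\alpha _1,\ldots ,\alpha _d)\in \{0,1,\ldots \}^d\) of length

$$\begin{aligned} |\alpha |=\alpha _1+\cdots +\alpha _d. \end{aligned}$$
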
Derivatives are understood in the generalized sense unless otherwise noted. The summation convention with respect to repeated indices is used throughout the paper, unless indicated otherwise.

The paper is organized as follows. Formulation of the problem and the statements of the main results are collected in Sect. 2. The appropriate estimate for the finite difference scheme is derived in Sect. 3, and it is used in the proof of the main results in Sect. 4.

2 Formulation of the Results

We consider the SPDE

for \((t,x)\in [0,T]\times \mathbb {R}^d=:H_T\), with the initial condition

where, here and in the rest of the paper, the summation convention is used with respect to the repeated indices \(i\), \(j\) and \(r\).

The initial value \(\psi \) is an \(\mathcal {F}_0\)-measurable random variable with values in \(W^1_p\) for a fixed \(p\ge 2\). For all \(i,j=1,2,\ldots ,d\) the coefficients \(a^{ij}=a^{ji}\), \(b^{i}\) and \(c\) are real-valued \(\mathcal {P}\times \mathcal {B}(\mathbb {R}^d)\)-measurable bounded functions, and \(\mu ^{i}=(\mu ^{i r})_{r=1}^{\infty }\) and \(\nu =(\nu ^{r})_{r=1}^{\infty }\) are \(l_2\)-valued \(\mathcal {P}\times \mathcal {B}(\mathbb {R}^d)\)-measurable bounded functions on \(\Omega \times H_T\). The free terms \(f=(f_t)_{t\ge 0}\) and \(g=(g_t)_{t\ge 0}\) are \(W^1_p\)-valued and \(W^1_{p}(l_2)\)-valued adapted processes, respectively.

Let \(m\in [1,\infty )\). Set

and let \(K>0\) be a constant. We make the following assumptions.

Assumption 2.1

The derivatives of the coefficients \(b^{i}\) and \(c\) in \(x\in \mathbb {R}^d\) up to order \(\lceil m\rceil \), and the derivatives of \(a^{ij}\) in \(x\) up to order \(\lceil m\rceil +1\) are functions, bounded by \(K\). The \(l_2\)-valued functions \(\mu ^{i}\) and \(\nu \) satisfy either of the following:

(i) their derivatives in \(x\) up to order \(\lceil m\rceil +1\) are functions, in magnitude bounded by \(K\).

(ii) \(\mu =(\mu ^i)_{i=1}^{d}=0\) and the derivatives of \(\nu \) in \(x\) up to order \(\lceil m\rceil \) are functions, in magnitude bounded by \(K\).

Assumption 2.2

Almost surely \(\psi \in W^{m}_p\), and either

(i) \(F_{{m},p}(T)+G_{{m+1},p}(T)<\infty \) (a.s.), or

(ii) \(\mu =0\) and \(F_{{m},p}(T)+G_{{m},p}(T)<\infty \) (a.s.).

Assumption 2.3

Almost surely the matrix-valued function

is positive semidefinite for each \((t,x)\in H_T\).

The notion of (generalised) solution is defined as follows.

Definition 2.1

A \(W^1_p\)-valued adapted weakly continuous process \((u_t)_{t\in [0,T]}\) is a solution of (2.1)–(2.2) on the interval \([0,\tau ]\) for a stopping time \(\tau \le T\), if almost surely

for all \(t\in [0,\tau ]\), \(\varphi \in C^{\infty }_0(\mathbb {R}^d)\), where \((v,\varphi )\) denotes the integral

$$\begin{aligned} (v,\varphi )=\int _{\mathbb {R}^d}v(x)\varphi (x)\,dx \end{aligned}$$

for functions \(\varphi \) and \(v\) on \(\mathbb {R}^d\), when \(v\varphi \in L_1(\mathbb {R}^d)\).

Existence and uniqueness theorems for degenerate SPDEs are established in [19] and [4]. We will need a slight generalization of these results, which will be proven at the end of Sect. 3.

Theorem 2.1

Let Assumptions 2.1, 2.2 and 2.3 hold. Then (2.1)–(2.2) has a unique solution \(u=(u_t)_{t\in [0,T]}\) on \([0,T]\). Moreover, \(u\) is a \(W^m_p\)-valued weakly continuous process, it is strongly continuous with values in \(W^{m-1}_p\), and for all \(l\in [0,m]\) and \(q>0\)

where \(\kappa =0\) if \((\mu ^i)=0\) and \(\kappa =1\) otherwise, and \(N\) is a constant depending only on \(T\), \(d\), \(K\), \(p\), and \(m\).

While Theorem 2.1 is stated for a general equation of the form (2.1)–(2.2), all of the subsequent results will only be proven under the restriction \(\mu =0\).

To introduce the finite difference schemes approximating (2.1), first let \(\Lambda _0,\Lambda _1\subset \mathbb {R}^d\) be two finite sets, the latter being symmetric with respect to the origin, with \(0\in \Lambda _1\setminus \Lambda _0\). Denote

and \(|\Lambda |=\sum _{\lambda \in \Lambda }|\lambda |\). On \(\Lambda \) we make the following assumption.

Assumption 2.4

If any subset \(\Lambda '\subset \Lambda \) is linearly dependent, then \(\Lambda '\) is linearly dependent over the rationals.

Let \(\mathbb {G}_h\) denote the grid

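for \(h>0\), and define the finite difference operators

$$\begin{aligned} \delta _{h,\lambda }\varphi (x)=\frac{1}{h}\big (\varphi (x+h\lambda )-\varphi (x)\big ) \end{aligned}$$

and the shift operators

$$\begin{aligned} T_{h,\lambda }\varphi (x)=\varphi (x+h\lambda ) \end{aligned}$$
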
for \(\lambda \in \Lambda \) and \(h\ne 0\). Notice that \(\delta _{h,0}\varphi =0\) and \(T_{h,0}\varphi =\varphi \). For a fixed \(h>0\) consider the finite difference equation

for \((t,x)\in [0,T]\times \mathbb {G}_h\), with the initial condition

for \(x\in \mathbb {G}_h\), where

for functions \(\varphi \) on \(\mathbb {G}_h\). The coefficients \(\mathfrak {a}_h^{\lambda }\), \(\mathfrak {p}_h^{\gamma }\), and \(\mathfrak {c}_h^{\gamma }\) are \(\mathcal {P}\times \mathcal {B}(\mathbb {R}^d)\)-measurable bounded functions on \(\Omega \times [0,T]\times \mathbb {R}^d\), with values in \(\mathbb {R}\), and \(\mathfrak {p}^0_h=0\) is assumed. All of them are supposed to be defined for \(h=0\) as well, and to depend continuously on \(h\).
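The operators \(\delta _{h,\lambda }\) and \(T_{h,\lambda }\) can be tried out numerically. The sketch below assumes the forward-difference form \(\delta _{h,\lambda }\varphi (x)=(\varphi (x+h\lambda )-\varphi (x))/h\) and \(T_{h,\lambda }\varphi (x)=\varphi (x+h\lambda )\), which is consistent with \(\delta _{h,0}\varphi =0\) and \(T_{h,0}\varphi =\varphi \); the test function `phi` and the helper names are hypothetical. It checks that \(\delta _{h,\lambda }\varphi \) approximates the directional derivative \(\lambda ^iD_i\varphi \) with error of order \(h\), and that \(\delta _{h,\lambda }\delta _{-h,\lambda }\varphi \) approximates \((\lambda ^iD_i)^2\varphi \) with error of order \(h^2\).

```python
import numpy as np

# Assumed definitions (forward differences and shifts along lam):
#   delta_{h,lam} f(x) = (f(x + h*lam) - f(x)) / h
#   T_{h,lam} f(x)     = f(x + h*lam)

def shift(f, h, lam):
    """Return the function x -> f(x + h*lam)."""
    lam = np.asarray(lam, dtype=float)
    return lambda x: f(np.asarray(x, dtype=float) + h * lam)

def delta(f, h, lam):
    """Return the function x -> (f(x + h*lam) - f(x)) / h; h may be negative."""
    lam = np.asarray(lam, dtype=float)
    return lambda x: (f(np.asarray(x, dtype=float) + h * lam) - f(np.asarray(x, dtype=float))) / h

def phi(x):
    """Hypothetical smooth test function on R^2: sin(x1) cos(2 x2)."""
    return np.sin(x[0]) * np.cos(2.0 * x[1])

def d_lam(x, n):
    """Exact n-th directional derivative of phi along lam = (1, 1).

    Uses phi = (sin(x1 + 2 x2) + sin(x1 - 2 x2)) / 2.
    """
    t1, t2 = x[0] + 2.0 * x[1], x[0] - 2.0 * x[1]
    return 0.5 * (3.0**n * np.sin(t1 + n * np.pi / 2) + (-1.0)**n * np.sin(t2 + n * np.pi / 2))

x0 = np.array([0.3, -0.7])
lam = np.array([1.0, 1.0])
hs = [0.01, 0.005]

# First order: delta_{h,lam} phi -> d_lam phi, error O(h).
err1 = [abs(delta(phi, h, lam)(x0) - d_lam(x0, 1)) for h in hs]
# Second order: delta_{h,lam} delta_{-h,lam} phi -> d_lam^2 phi, error O(h^2).
err2 = [abs(delta(delta(phi, -h, lam), h, lam)(x0) - d_lam(x0, 2)) for h in hs]

print("first-order error ratio :", err1[0] / err1[1])   # close to 2
print("second-order error ratio:", err2[0] / err2[1])   # close to 4
```

Halving \(h\) roughly halves the first error and quarters the second, as expected for a one-sided and a centred second difference, respectively.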

Note that Assumption 2.4 ensures that \(\mathbb {G}_h\cap B\) is finite for any bounded set \(B\subset \mathbb {R}^d\). This condition is necessary for (2.5) to be useful from a practical point of view.

One can look for solutions of the above scheme in the space of adapted stochastic processes with values in \(l_{p,h}\), the space of real functions \(\phi \) on \(\mathbb {G}_h\) such that

$$\begin{aligned} |\phi |^p_{l_{p,h}}:=\sum _{x\in \mathbb {G}_h}|\phi (x)|^p h^d<\infty . \end{aligned}$$

An analogous space is defined for \(l_2\)-valued functions and will be denoted by \(l_{p,h}(l_2)\). For fixed \(h\), Eq. (2.5) is an SDE in \(l_{p,h}\) with Lipschitz coefficients, by the boundedness of \(\mathfrak {a}_h^{\lambda },\mathfrak {p}_h^{\gamma }\), \(\mathfrak {c}_h^{\gamma }\), and \(\nu ^r\). Hence if almost surely

then (2.5)–(2.6) admits a unique \(l_{p,h}\)-valued solution \((u^h_{t})_{t\in [0,T]}\).

Remark 2.1

By well-known results on Sobolev embeddings, if \(m>k+d/p\), there exists a bounded operator \(J\) from \(W^m_p\) to the space of functions with bounded and continuous derivatives up to order \(k\) such that \(Jv=v\) almost everywhere. In the rest of the paper we will always identify functions with their continuous modifications if they have one, without introducing new notation for them. It is also known, and can be easily seen, that if Assumption 2.4 holds and \(m>d/p\), then for \(v\in W^m_p\) the restriction of \(Jv\) onto the grid \(\mathbb {G}_h\) is in \(l_{p,h}\); moreover,

$$\begin{aligned} |Jv|_{l_{p,h}}\le C|v|_{W^m_p}, \end{aligned}$$

where \(C\) is independent of \(v\) and \(h\).
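The role of the mesh size in the norm of \(l_{p,h}\) can be seen in a small experiment. This is a minimal sketch assuming the weighted norm \(|\phi |^p_{l_{p,h}}=h^d\sum _{x\in \mathbb {G}_h}|\phi (x)|^p\) and, for illustration only, the one-dimensional grid \(\mathbb {G}_h=h\mathbb {Z}\); the function names are hypothetical. With the factor \(h^d\) the discrete norm is a Riemann sum for the \(L_p\) norm, which is why a bound with \(C\) independent of \(h\) is plausible.

```python
import numpy as np

def lph_norm(v, h, p, radius=12.0):
    """Discrete l_{p,h} norm on the 1-D grid hZ (d = 1), truncated to |x| <= radius.

    Assumed definition: |v|_{l_{p,h}}^p = h^d * sum_{x in G_h} |v(x)|^p.
    """
    n = int(np.ceil(radius / h))
    x = h * np.arange(-n, n + 1)
    return (h * np.sum(np.abs(v(x)) ** p)) ** (1.0 / p)

def gauss(x):
    return np.exp(-x**2 / 2.0)

p = 4.0
# |gauss|_{L_p}^p = int exp(-p x^2 / 2) dx = sqrt(2 pi / p)
lp_exact = np.sqrt(2.0 * np.pi / p) ** (1.0 / p)

for h in (0.5, 0.25, 0.1):
    print(f"h = {h:<5} l_p,h norm = {lph_norm(gauss, h, p):.12f}   L_p norm = {lp_exact:.12f}")
```

For a Gaussian the Riemann sums are extremely accurate, so the two columns agree to many digits for every \(h\) in the loop.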

Remark 2.2

The \(h\)-dependency of the coefficients may seem artificial and in fact does not cause any additional difficulty in the proofs of Theorems 2.2–2.4 below. However, we will make use of this generality to extend our results to the case when the data in the problem (2.1)–(2.2) belong to certain weighted Sobolev spaces.

Clearly

as \(h\rightarrow 0\) for smooth functions \(\varphi \). Hence, to ensure that our finite difference operators approximate the corresponding differential operators, we make the following assumption.

Assumption 2.5

We have, for every \(i,j=1,\ldots ,d\)

and for \(P\times dt\times dx\)-almost all \((\omega ,t,x)\) we have

Remark 2.3

The restriction (2.8), together with \(\mathfrak {a}^{\lambda }_0\ge 0\), is not too severe; we refer the reader to [17] for a detailed discussion of matrix-valued functions which possess this property.

Example 2.1

Suppose that the matrix \((a^{ij})\) is diagonal. Then taking \(\Lambda _0=\{e_i:i=1\ldots d\}\) and \(\Lambda _1=\{0\}\cup \{\pm e_i: i=1\ldots d\}\), where \((e_i)\) is the standard basis in \(\mathbb {R}^d\), one can set

with any \(\theta ^i\ge \max (0,-b^i)\), \(i=1\ldots d\).

Example 2.2

Suppose that \((a^{ij})\) is a \(\mathcal {P}\otimes \mathcal {B}(\mathbb {R}^d)\)-measurable function of \((\omega ,t, x)\), with values in a closed bounded polyhedron in the set of symmetric non-negative \(d\times d\) matrices, such that its first and second order derivatives in \(x\in \mathbb {R}^d\) are continuous in \(x\) and are bounded by a constant \(K\). Then it is shown in [17] that one can obtain a finite set \(\Lambda _0\subset \mathbb {R}^d\) and \(\mathcal {P}\otimes \mathcal {B}(\mathbb {R}^d)\)-measurable, bounded, nonnegative functions \(\mathfrak {a}^{\lambda }_0\), \(\lambda \in \Lambda _0\), such that (2.8) holds, and the first order derivatives of \((\mathfrak {a}^{\lambda }_0)^{1/2}\) in \(x\) are bounded by a constant \(N\) depending only on \(K\), \(d\) and the polyhedron. Such a situation arises in applications when, for example, \((a^{ij}_t(x))\) is a diagonally dominant symmetric non-negative definite matrix for each \((\omega ,t,x)\), which by definition means that

$$\begin{aligned} a^{ii}_t(x)\ge \sum _{j\ne i}|a^{ij}_t(x)|\quad \text {for all } i=1,\ldots ,d, \end{aligned}$$

and hence \((a^{ij})\) takes values in a closed polyhedron in the set of symmetric non-negative \(d\times d\) matrices. Clearly, this polyhedron can be chosen to be bounded if \((a^{ij})\) is a bounded function. Moreover, in the case \(d=2\) explicit formulas are given in [18] to represent diagonally dominant symmetric non-negative definite matrices \((a^{ij})\) in the form (2.8).

The coefficients of the first and zero order terms, i.e., \(\mathfrak {p}^{\gamma }_h\) and \(\mathfrak {c}^{\gamma }_h\), can be chosen as in Example 2.1.

If \((a^{ij})\) does not depend on \(x\), and it is a bounded \(\mathcal {P}\)-measurable function of \((\omega ,t)\) with values in the set of diagonally dominant symmetric non-negative definite matrices, then we can take

where \((e_i)_{i=1}^d\) is the standard basis in \(\mathbb {R}^d\), and set

with any constants \(\theta ^{ij}\ge \kappa \) and \(\theta ^i \ge \kappa -\tfrac{1}{2}|b^i|\), for \(i,j=1,\ldots ,d\), where \(\kappa \) is any nonnegative constant, and \(a^{\pm }:=(|a|\pm a)/2\) for \(a\in \mathbb {R}\). Then clearly, \(\Lambda _0\), \(\Lambda _1\), \(\mathfrak {a}_h^{\lambda }\), \(\mathfrak {p}_h^{\gamma }\) and \(\mathfrak c_h\) satisfy Assumptions 2.4, 2.5 above, and Assumption 2.6 below.
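The decomposition of a diagonally dominant matrix into rank-one pieces along lattice directions, which Example 2.2 relies on, can be checked directly. This is a minimal sketch assuming that (2.8) reads \(a^{ij}=\sum _{\lambda \in \Lambda _0}\mathfrak a^{\lambda }_0\lambda ^i\lambda ^j\) (the normalization used in the paper may differ by a constant factor) and using the directions \(\Lambda _0=\{e_i\}\cup \{e_i\pm e_j: i<j\}\) with \(\mathfrak a^{e_i}=a^{ii}-\sum _{j\ne i}|a^{ij}|\), \(\mathfrak a^{e_i+e_j}=(a^{ij})^+\), \(\mathfrak a^{e_i-e_j}=(a^{ij})^-\). This is one standard construction, not necessarily the one used in the paper, and the function names are hypothetical. Diagonal dominance is exactly what makes every coefficient nonnegative.

```python
from itertools import combinations

import numpy as np

def dd_decomposition(a):
    """Split a symmetric, diagonally dominant matrix a with nonnegative diagonal
    into a list of (direction, coefficient) pairs with coefficient >= 0, such that
    a == sum(coeff * outer(direction, direction)).

    Directions used: e_i, and e_i + e_j, e_i - e_j for i < j.
    """
    a = np.asarray(a, dtype=float)
    d = a.shape[0]
    e = np.eye(d)
    terms = []
    for i in range(d):
        off_diag = sum(abs(a[i, j]) for j in range(d) if j != i)
        terms.append((e[i], a[i, i] - off_diag))        # >= 0 by diagonal dominance
    for i, j in combinations(range(d), 2):
        plus = (abs(a[i, j]) + a[i, j]) / 2.0           # (a^{ij})^+
        minus = (abs(a[i, j]) - a[i, j]) / 2.0          # (a^{ij})^-
        terms.append((e[i] + e[j], plus))
        terms.append((e[i] - e[j], minus))
    return terms

def reassemble(terms):
    """Compute sum_k coeff_k * outer(lam_k, lam_k)."""
    return sum(c * np.outer(lam, lam) for lam, c in terms)

a = np.array([[3.0, -1.0, 1.0],
              [-1.0, 2.0, 0.5],
              [1.0, 0.5, 2.0]])
terms = dd_decomposition(a)
print(min(c for _, c in terms) >= 0)        # True: all coefficients nonnegative
print(np.allclose(reassemble(terms), a))    # True: the matrix is recovered
```

Each pair \(e_i\pm e_j\) contributes \(|a^{ij}|\) to both diagonal entries \(i\) and \(j\) and \(\pm (a^{ij})^{\pm }\) off the diagonal, which is why the diagonal coefficients must be reduced by \(\sum _{j\ne i}|a^{ij}|\).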

Since the compatibility condition (2.8)–(2.9) will always be assumed, any subsequent conditions will be formulated for the coefficients in (2.5), which then automatically imply the corresponding properties for the coefficients in (2.1).

Assumption 2.6

The coefficients \(\mathfrak {a}_h^{\lambda }\) (resp., \(\mathfrak {p}_h^{\gamma }\), \(\mathfrak {c}_h^{\gamma }\), \(\nu \)), and their partial derivatives in the variable \((h,x)\) up to order \(\lceil m\rceil +1\) (resp., \(\lceil m\rceil \)) are functions bounded by \(K\).

Assumption 2.7

The initial value \(\psi \) is in \(W^{{m}}_p\), and the free terms \(f\) and \(g\) are \(W^{{m}}_p\)-valued and \(W^{{m}}_p(l_2)\)-valued processes, respectively, such that almost surely \(F_{m,p}(T)+G_{m,p}(T)<\infty \).

We now present the main results. The first three theorems correspond to similar results in the \(L_2\) setting from [7]. The key ingredient in their proofs is Theorem 3.1 below, which gives an upper bound for the \(W^m_p\) norms of the solutions to (2.5)–(2.6). Once this estimate is established, Theorems 2.2 through 2.4 can be proved in the same fashion as their counterparts in the \(L_2\) setting. Therefore, Sect. 4 only sketches the proofs, highlighting the main differences; for the complete argument we refer to [7].

Theorem 2.2

Let \(k\ge 0\) be an integer and let Assumptions 2.4 through 2.7 hold with \(m>2k+3+d/p\). Then there are continuous random fields \(u^{(1)},\ldots ,u^{(k)}\) on \([0,T]\times \mathbb {R}^d\), independent of \(h\), such that almost surely

for \(t\in [0,T]\) and \(x\in \mathbb {G}_h\), where \(u^{(0)}=u\), \(r^h\) is a continuous random field on \([0,T]\times \mathbb {R}^d\), and for any \(q>0\)

with \(N=N(K,T,m,p,q,d,|\Lambda |).\)

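Once we have the expansion above, we can use Richardson extrapolation to improve the rate of convergence. For a given \(k\), set

$$\begin{aligned} (c_0,c_1,\ldots ,c_k):=(1,0,\ldots ,0)V^{-1}, \end{aligned}$$

where \(V\) denotes the \((k+1)\times (k+1)\) Vandermonde matrix \(V=(V^{ij})=(2^{-(i-1)(j-1)})\), and define

$$\begin{aligned} v^h:=\sum _{i=0}^k c_iu^{h_i}, \end{aligned}$$

where \(h_i=h/2^i\).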
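The extrapolation can be illustrated on a toy problem whose semi-discrete solution is known in closed form. The sketch below is an illustration under stated assumptions, not the scheme (2.5) itself: it takes the weights to solve \((c_0,\ldots ,c_k)V=(1,0,\ldots ,0)\), uses the deterministic one-dimensional equation \(\partial _tu=D^2u+Du\) (no noise) with initial value \(e^{ix}\), and replaces \(D^2\) and \(D\) by \(\delta _{h,1}\delta _{-h,1}\) and \(\delta _{h,1}\), assuming the forward-difference form \(\delta _{h,1}\varphi (x)=(\varphi (x+h)-\varphi (x))/h\). Then \(u^h_t(0)=\exp (t\sigma _h)\), where \(\sigma _h\) is the symbol of the difference operator, so the errors can be evaluated exactly. The function names are hypothetical. Since this toy scheme is only first-order accurate, its error is of order \(h\) for \(k=0\) and of order \(h^{k+1}\) after extrapolation, in line with Theorem 2.3; the printed ratios err(h)/err(h/2) should be roughly \(2^{k+1}\).

```python
import cmath
import math

import numpy as np

def richardson_weights(n):
    """Weights c_i with sum_i c_i * n_i**(-j) = (1 if j == 0 else 0), for j = 0..k.

    n = [1, 2, ..., 2**k] reproduces V^{ij} = 2^{-(i-1)(j-1)}; other distinct
    positive integers n_i correspond to the matrix tilde V of Remark 2.4.
    """
    n = np.asarray(n, dtype=float)
    powers = np.arange(len(n))
    V = n[:, None] ** (-powers[None, :])   # V[i, j] = n_i^{-j}
    e0 = np.zeros(len(n))
    e0[0] = 1.0
    return np.linalg.solve(V.T, e0)        # row vector c with c V = (1, 0, ..., 0)

def symbol(h, a=1.0, b=1.0):
    """Symbol of a*delta_h delta_{-h} + b*delta_h on e^{ix}; h = 0 gives that of a*D^2 + b*D."""
    if h == 0.0:
        return complex(-a, b)
    second = -4.0 * math.sin(h / 2.0) ** 2 / h**2    # (delta_h delta_{-h} e^{ix}) / e^{ix}
    first = (cmath.exp(1j * h) - 1.0) / h             # (delta_h e^{ix}) / e^{ix}
    return a * second + b * first

def u_h(h, t=1.0):
    """Semi-discrete solution at x = 0 for initial value e^{ix}; h = 0 is the exact solution."""
    return cmath.exp(t * symbol(h))

def extrapolate(h, k, t=1.0):
    """Richardson combination sum_i c_i u^{h/2^i}, i = 0..k."""
    n = [2**i for i in range(k + 1)]
    c = richardson_weights(n)
    return sum(ci * u_h(h / ni, t) for ci, ni in zip(c, n))

exact = u_h(0.0)
for k in range(3):
    e_coarse = abs(extrapolate(0.1, k) - exact)
    e_fine = abs(extrapolate(0.05, k) - exact)
    print(f"k = {k}: error {e_coarse:.2e}, ratio err(h)/err(h/2) = {e_coarse / e_fine:.2f}")
```

Passing `n = [1, 2, 3]` to `richardson_weights`, as permitted by Remark 2.4, only changes the weights, which then become approximately (0.5, -4, 4.5).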

Theorem 2.3

Let \(k\ge 0\) be an integer and let Assumptions 2.4 through 2.7 hold with \(m>2k+3+d/p\). Then for every \(q>0\) we have

with \(N=N(K,T,m,k,p,q,d,|\Lambda |).\)

Theorem 2.4

Let \((h_n)_{n=1}^{\infty }\in l_q\) be a nonnegative sequence for some \(q\ge 1\). Let \(k\ge 0\) be an integer and let Assumptions 2.4 through 2.7 hold with \(m>2k+3+d/p\). Then for every \(\varepsilon >0\) there exists a random variable \(\xi _{\varepsilon }\) such that almost surely

for \(h=h_n\).

Remark 2.4

We can use \(h_i=h/n_i\), \(i=1,\ldots ,k+1\), with any set of distinct positive integers \(n_i\), with \(n_1=1\). Then, changing the matrix \(V\) to \(\tilde{V}=(\tilde{V}^{ij})=(n_i^{-j+1})\) in (2.12), Theorems 2.3–2.4 remain valid. The choice \(n_i=i\), for example, yields coarser grids and can thus reduce computation time.

Choosing \(p\) large enough, in some cases one can get rid of the term \(d/p\) in the conditions of the theorems above, thus obtaining dimension-invariant conditions. To this end, first define the function \(\rho _s(x)=1/(1+|x|^2)^{s/2}\) on \(\mathbb {R}^d\) for all \(s\ge 0\). We say that a function \(F\) on \(\mathbb {R}^d\) has polynomial growth of order \(s\) if the \(L_{\infty }\) norm of \(F\rho _s\) is finite. For any integer \(m\ge 0\), the set of functions on \(\mathbb {R}^d\) which have polynomial growth of order \(s\) and whose derivatives up to order \(m\) are functions with polynomial growth of order \(s\) is denoted by \(P^m_s\), and it is equipped with the norm

An analogous space is defined for \(l_2\)-valued functions and is denoted by \(P^m_s(l_2)\). Note that for any integers \(m>k\ge 0\), if \(F\in P^m_s\), then the partial derivatives of \(F\) up to order \(k\) exist in the classical sense and, together with \(F\), are continuous functions. Polynomial growth of order \(s\) is defined analogously for functions on \(\mathbb {G}_h\); the set of such functions is denoted by \(P_{h,s}\).
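The following elementary computation makes explicit why data of polynomial growth fit into the \(L_p\) framework for large \(p\). If \(F\) has polynomial growth of order \(s\) and \(\overline{s}>s\), then, since \(\rho _{\overline{s}}=\rho _s\rho _{\overline{s}-s}\),

$$\begin{aligned} \int _{\mathbb {R}^d}|F(x)\rho _{\overline{s}}(x)|^p\,dx&\le \Vert F\rho _s\Vert ^p_{L_{\infty }}\int _{\mathbb {R}^d}\frac{dx}{(1+|x|^2)^{(\overline{s}-s)p/2}}\\&=\Vert F\rho _s\Vert ^p_{L_{\infty }}\,|S^{d-1}|\int _0^{\infty }\frac{r^{d-1}\,dr}{(1+r^2)^{(\overline{s}-s)p/2}}, \end{aligned}$$

where \(|S^{d-1}|\) is the surface measure of the unit sphere, and the last integral is finite if and only if \((\overline{s}-s)p>d\). Hence \(F\rho _{\overline{s}}\in L_p\) for every \(p>d/(\overline{s}-s)\). Applying the same bound to the derivatives of \(F\), and using that \(|D^{\alpha }\rho _{\overline{s}}|\le N\rho _{\overline{s}}\), one sees that \(F\rho _{\overline{s}}\in W^m_p\) for \(F\in P^m_s\) and such \(p\); since \(p\) can be taken arbitrarily large, a condition of the form \(m>2k+3+d/p\) reduces to \(m>2k+3\).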

Let \(s\ge 0\) and let \(m\) be a nonnegative integer. Consider again the equation

for \((t,x)\in [0,T]\times \mathbb {R}^d\), with the initial condition

where we keep all our measurability conditions from (2.1)–(2.2). However, instead of the integrability conditions on \(\psi , f_t, g_t\), we now assume the following.

Assumption 2.8

The initial data \(\psi \) is an \(\mathcal {F}_0\times \mathcal {B}(\mathbb {R}^d)\)-measurable mapping from \(\Omega \times \mathbb {R}^d\) to \(\mathbb {R}\), such that \(\psi \in P^m_s\) (a.s.). The free data \(f\) and \(g\) are \(\mathcal {P}\times \mathcal {B}(\mathbb {R}^d)\)-measurable mappings from \(\Omega \times [0,T]\times \mathbb {R}^d\) to \(\mathbb {R}\) and \(l_2\), respectively. Moreover, almost surely \((f_t)\) is a \(P^m_s\)-valued process and \((g_t)\) is a \(P^m_s(l_2)\)-valued process, such that

Definition 2.2

A \(\mathcal {P}\times \mathcal {B}(\mathbb {R}^d)\)-measurable mapping \(u\) from \(\Omega \times [0,T]\times \mathbb {R}^d\) to \(\mathbb {R}\) such that \((u_t)_{t\in [0,T]}\) is almost surely a \(P^1_s\)-valued bounded process, is called a classical solution of (2.13)–(2.14) on \([0,T]\), if almost surely \(u\) and its first and second order partial derivatives in \(x\) are continuous functions of \((t,x)\in [0,T]\times \mathbb {R}^d\), and almost surely

for all \((t,x)\in [0,T]\times \mathbb {R}^d\) for a suitable modification of the stochastic integral in the right-hand side of the equation.

If \(m\ge 1\), then as noted above the initial condition and free terms are continuous in space. This makes it reasonable to consider the finite difference scheme (2.5)–(2.6) as an approximation for the problem (2.13)–(2.14).

Theorem 2.5

Let \(k\ge 0\) be an integer, and let \(\overline{s}>s\ge 0\) be real numbers. Suppose that Assumptions 2.4, 2.5, 2.6, and 2.8 hold with \(m>2k+3\).

(i) Equation (2.13)–(2.14) admits a unique \(P^{m-1}_{\overline{s}}\)-valued classical solution \((u_t)_{t\in [0,T]}\).

(ii) For fixed \(h\), the corresponding finite difference scheme (2.5)–(2.6) admits a unique \(P_{h,\overline{s}}\)-valued solution \((u^h_t)_{t\in [0,T]}\).

(iii) Suppose furthermore that \(\mathfrak {p}_h^{\gamma }\ge \kappa \) for \(\gamma \in \Lambda _1\setminus \{0\}\), for some constant \(\kappa >0\), and

$$\begin{aligned} \Lambda _0\cup -\Lambda _0\subset \Lambda _1. \end{aligned}$$

Then there are continuous random fields \(u^{(1)},\ldots ,u^{(k)}\) on \([0,T]\times \mathbb {R}^d\), independent of \(h\), such that almost surely

$$\begin{aligned} u^h_t(x)=\sum _{j=0}^k\frac{h^j}{j!}u^{(j)}_t(x)+h^{k+1}r_t^h(x) \end{aligned}$$

for \(t\in [0,T]\) and \(x\in \mathbb {G}_h\), where \(u^{(0)}=u\), \(r^h\) is a continuous random field on \([0,T]\times \mathbb {R}^d\), and for any \(q>0\)

$$\begin{aligned}&\mathbb {E}\sup _{t\in [0,T]}\sup _{x\in \mathbb {G}_h}|r_t^h(x) \rho _{\overline{s}}(x)|^q +\mathbb {E}\sup _{t\in [0,T]}|r_t^h\rho _{\overline{s}}|^q_{l_{p,h}}\\&\le N\left( \mathbb {E}\Vert \psi \Vert _{P^m_s}^q +\mathbb {E}\big |\Vert f_t\Vert _{{P^m_s}}+\Vert g_t\Vert _{P^m_s(l_2)}\big |_{L_{\infty }[0,T]}^q\right) \end{aligned}$$with some \(N=N(K,T,m,s,\overline{s},q,d,|\Lambda |,\kappa ).\)

(iv) Let \((h_n)_{n=1}^{\infty }\in l_q\) be a nonnegative sequence for some \(q\ge 1\). Then for every \(\varepsilon , M>0\) there exists a random variable \(\xi _{\varepsilon ,M}\) such that almost surely

$$\begin{aligned} \sup _{t\in [0,T]}\sup _{x\in \mathbb {G}_h,|x|\le M}|u_t(x)-v_t^h(x)| \le \xi _{\varepsilon ,M}h^{k+1-\varepsilon } \end{aligned}$$

for \(h=h_n\).

This theorem will be proved in Sect. 4.

Remark 2.5

Condition \(\mathfrak {p}_h^{\gamma }\ge \kappa \) in assertion (iii) of the above theorem is harmless, similarly to the second part of (2.10). As seen in Examples 2.1 and 2.2, we can always satisfy this additional requirement by adding a sufficiently large constant to \(\mathfrak {p}_h^{\gamma }\).

3 Estimate on the Finite Difference Scheme

First let us collect some properties of the finite difference operators. Throughout this section we consider a fixed \(h>0\) and use the notation \(u_{\alpha }=D^{\alpha }u\). It is easy to see that, analogously to integration by parts,

when \(v\in L_{q/(q-1)}\) and \(u\in L_q\) for some \(1\le q\le \infty \), with the convention \(1/0=\infty \) and \(\infty /(\infty -1)=1\). The discrete analogue of the Leibniz rule can be written as

Finally, we will also make use of the simple identities

and the estimate

valid for \(p\in [1,\infty ]\) and \(v\in W^1_p\), \(h\ne 0\) and \(\lambda \in \mathbb {R}^d\).
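The summation-by-parts and Leibniz-type identities above are easy to confirm numerically. The sketch below works on a periodic one-dimensional grid (periodicity replaces decay at infinity, an assumption made only to keep the sums finite), takes \(h\lambda \) equal to one grid step, and assumes \(\delta _{h,\lambda }\varphi (x)=(\varphi (x+h\lambda )-\varphi (x))/h\) and \(T_{h,\lambda }\varphi (x)=\varphi (x+h\lambda )\). The identities checked are \(\int u\,\delta _{h,\lambda }v\,dx=-\int (\delta _{-h,\lambda }u)\,v\,dx\) and \(\delta _{h,\lambda }(uv)=(\delta _{h,\lambda }u)v+(T_{h,\lambda }u)\,\delta _{h,\lambda }v\), which is how we read the formulas referred to in this paragraph; the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
h, n = 0.1, 64                       # grid step and number of periodic grid points
u, v = rng.standard_normal((2, n))   # arbitrary grid functions

def shift(f):
    """T_{h,lam} f: value at the next grid point x + h*lam (periodically)."""
    return np.roll(f, -1)

def delta_plus(f):
    """delta_{h,lam} f = (f(x + h*lam) - f(x)) / h."""
    return (np.roll(f, -1) - f) / h

def delta_minus(f):
    """delta_{-h,lam} f = (f(x - h*lam) - f(x)) / (-h)."""
    return (np.roll(f, 1) - f) / (-h)

# Discrete integration by parts: h*sum(u * delta_h v) == -h*sum(delta_{-h} u * v).
lhs = h * np.sum(u * delta_plus(v))
rhs = -h * np.sum(delta_minus(u) * v)
print(np.isclose(lhs, rhs))   # True

# Discrete Leibniz rule: delta_h(uv) == (delta_h u) v + (T_h u)(delta_h v).
print(np.allclose(delta_plus(u * v), delta_plus(u) * v + shift(u) * delta_plus(v)))   # True
```

Both identities are exact algebraic consequences of reindexing the sum, so they hold up to rounding for any grid functions, not only smooth ones.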

Lemma 3.1

For any \(p\ge 2\), \(\lambda \in \mathbb {R}^d\), \(h\ne 0\) and any real function \(v\) on \(\mathbb {R}^d\) we can write

where \(F^{h,\lambda }_p(v)\ge 0\), and for \(p>2\), \(q=p/(p-2)\) and for all \(v\in L_p(\mathbb {R}^d)\)

Proof

The derivative of the function \(G(r)=|r|^{p-2}r\) is

$$\begin{aligned} G'(r)=(p-1)|r|^{p-2}, \end{aligned}$$
so we have

By Jensen’s inequality and the convexity of the function \(|r|^p\),

Hence (3.6) follows by Fubini’s theorem and the shift invariance of the Lebesgue measure. \(\square \)

Lemma 3.2

Let \(m\) be a nonnegative integer, and let \(\alpha \) be a multi-index of length \(m\). Then the following statements hold.

(i) Let \(\mathfrak a\) be a nonnegative function on \(\mathbb {R}^d\) such that its generalised derivatives up to order \(m+1\) are functions, in magnitude bounded by a constant \(K\). If \(m\ge 1\), assume also that the first order generalised derivatives of \(\sigma :=\sqrt{\mathfrak a}\) are functions bounded by \(K\). Then for \(u\in W^m_p\), \(p\in [2,\infty )\), \(\lambda \in \mathbb {R}^d\) and \(h\ne 0\)

$$\begin{aligned} \int _{\mathbb {R}^d}|D^{\alpha }u|^{p-2}D^{\alpha }u D^{\alpha }\delta _{-h,\lambda }({\mathfrak a}\delta _{h,\lambda }u)\,dx \le N|u|^p_{W^m_p}. \end{aligned}\tag{3.7}$$

(ii) Let \(\mathfrak p\) be a nonnegative function on \(\mathbb {R}^d\) such that its generalised derivatives up to order \(m\vee 1\) are functions bounded by \(K\). Let \(p=2^k\) for an integer \(k\ge 1\). Then for \(u\in W^m_p\), \(\lambda \in \mathbb {R}^d\) and \(h>0\)

$$\begin{aligned} \int _{\mathbb {R}^d}|D^{\alpha }u|^{p-2}D^{\alpha }u D^{\alpha }({\mathfrak p}\delta _{h,\lambda }u)\,dx \le N|u|^p_{W^m_p}. \end{aligned}\tag{3.8}$$

The constant \(N\) in the above estimates depends only on \(m\), \(p\), \(d\), \(K\) and \(|\lambda |\).

Proof

Recall the notation \(u_{\alpha }=D^{\alpha }u\). For real functions \(v\) and \(w\) defined on \(\mathbb {R}^d\) we write \(v\sim w\) if their integrals over \(\mathbb {R}^d\) are the same. We use the notation \(v\preceq w\) if \(v=w+F\) with a function \(F\) whose integral over \(\mathbb {R}^d\) can be estimated by \(N|u|^p_{W^m_p}\), where \(N\) is a constant depending only on \(m\), \(K\), \(p\), \(d\) and \(|\lambda |\). To prove (3.7) we consider first the case \(m=0\). By (3.1) and Lemma 3.1

where \(F\) is the functional obtained from Lemma 3.1. Consequently, (3.7) holds for \(m=0\). Assume now \(m\ge 1\). Then it is easy to see that

with

where \(A\) is the set of ordered pairs of multi-indices \((\alpha ',\alpha '')\) such that \(|\alpha '|=1\) and \(\alpha '+\alpha ''=\alpha \). By (3.1) and Lemma 3.1

for every \(\varepsilon >0\), where the simple inequality \(2yz\le \varepsilon y^2+\varepsilon ^{-1}z^2\) is used with \( y=\sigma \delta _{h,\lambda }u_{\alpha } \) and \( z=\sum _{(\alpha ',\alpha '')\in A}D^{\alpha '}\sigma \delta _{h,\lambda }u_{\alpha ''} \) . Using (3.9) with \(u_{\alpha }\) in place of \(u\) we get

Combining this with (3.11) with sufficiently small \(\varepsilon \), from (3.10) we obtain

with \(q=p/(p-2)\), which gives (3.7), due to the estimates (3.6) and (3.5). To prove (3.8), notice that for \(p=2^k\)

Hence we can repeatedly use (3.4) and the nonnegativity of \(\mathfrak p\) to get

By (3.1), \(\mathfrak {p}\delta _{h,\lambda }u_{\alpha }^{p}\) has the same integral over \(\mathbb {R}^d\) as \(\delta _{h,-\lambda }\mathfrak {p}u_{\alpha }^p\), and hence (3.8) follows, since \(|\delta _{h,-\lambda }\mathfrak {p}|\le K|\lambda |\) by (3.5). \(\square \)

Corollary 3.1

Let \(m\ge 1\) be an integer and \(p=2^k\) for some integer \(k\ge 1\), and let Assumptions 2.6 and 2.7, along with the condition (2.10) be satisfied. Then for \(u\in W_p^m\), \(f\in W_p^m\), \(g\in W_p^{m}(l_2)\) and for all multi-indices \(\alpha \) of length \(|\alpha |\le m\) we have

for \(P\times dt\)-almost all \((\omega ,t)\in \Omega \times [0,T]\), where \(N\) is a constant depending only on \(d,p, m, |\Lambda |,\) and \(K\).

Proof

Using the notation of the preceding proof, by Hölder’s inequality

The remaining two terms are estimated in Lemma 3.2. \(\square \)

The following is a stochastic version of Gronwall’s lemma; for its proof we refer to [5].

Lemma 3.3

Let \((y_t)_{t\in [0,T]}\), \((F_t)_{t\in [0,T]}\), and \((G_t)_{t\in [0,T]}\) be nonnegative adapted processes, and let \((m_t)_{t\in [0,T]}\) be a continuous local martingale such that for a constant \(N\) almost surely

for all \(t\in [0,T]\). Assume furthermore that for some \(p\ge 2\) almost surely

Then for every \(q\ge 0\) there exists a constant \(C\), depending only on \(N\), \(q\), \(p\), and \(T\), such that

Consider (2.5) without restricting it to the grid \(\mathbb {G}_h\), that is,

with the initial condition

The solution of (3.13)–(3.14) is understood in the spirit of Definition 2.1.

Definition 3.1

An \(L_p(\mathbb {R}^d)\)-valued continuous adapted process \((u^h_t)_{t\in [0,T]}\) is a solution to (3.13)–(3.14) on \([0,\tau ]\) for a stopping time \(\tau \le T\) if almost surely

for all \(t\le \tau \) and \(\varphi \in C_0^{\infty }(\mathbb {R}^d)\).

Assumption 2.6 implies that the operators \(u\rightarrow L_t^h u\) and \(u\rightarrow (\nu ^r_t u)_{r=1}^{\infty }\) are bounded linear operators from \(W^m_p\) to \(W^m_p\) and to \(W^m_p(l_2)\), respectively, with operator norms uniformly bounded in \((t,\omega )\). Therefore, if Assumption 2.7 is also satisfied, (3.13) is an SDE in the space \(W^m_p\) with Lipschitz continuous coefficients. As such, it admits a unique continuous solution.

Theorem 3.1

Let Assumptions 2.6 and 2.7 hold with \(m\ge 1\), and let condition (2.10) be satisfied. Then (3.13)–(3.14) has a unique continuous \(W^m_p\)-valued solution \((u_t^h)_{t\in [0,T]}\), and for each \(q>0\) there exists a constant \(N=N(d,q,p,m,K,T,|\Lambda |)\) such that

for all \(h>0\).

Proof

By the preceding argument, we need only prove estimate (3.16). First let \(m\) and \(p\) be as in the conditions of Corollary 3.1, and fix \(q>1\). Let \(\alpha \) be a multi-index such that \(|\alpha |\le m\). Applying Itô’s formula (Lemma 5.1 in [13]) to \(|D^{\alpha }u^h|^p_{L_p}\), one sees that the term appearing in the drift is the left-hand side of (3.12), with \(u^h\) in place of \(u\). Using Corollary 3.1 and summing over \(|\alpha |\le m\), we get

with some \(N\) depending only on \(p, m,\) \(d\), \(|\Lambda |\), and \(K\), where

with \(\alpha \) used as a repeated index. It is clear that

so Lemma 3.3 can be applied to the function \(|u_t^h|^{p}_{W^m_p}\) and the power \(q/p\), which proves (3.16) for integer \(m\), \(p=2^k\).

Note that (3.16) is equivalent to

which implies

for any \(r>1\), with another constant \(N\), independent of \(r\). In other words, for the special case of \(m\) and \(p\) considered so far, the solution operator

continuously maps \(\Psi ^m_p\times \mathcal {F}^m_p\times \mathcal {G}^m_p\) to \(\mathcal {U}^m_p\), where

Let us denote the complex interpolation space between any Banach spaces \(A_0\) and \(A_1\) with parameter \(\theta \) by \([A_0,A_1]_{\theta }\). Recall the following interpolation properties (see 1.9.3, 1.18.4, and 2.4.2 from [21])

(i) If a linear operator \(T\) is continuous from \(A_0\) to \(B_0\) and from \(A_1\) to \(B_1\), then it is also continuous from \([A_0,A_1]_{\theta }\) to \([B_0,B_1]_{\theta }\), moreover, its norm between the interpolated spaces depends only on \(\theta \) and its norm between the original spaces.

(ii) For a measure space \(M\) and \(1< p_0,p_1<\infty \),

$$\begin{aligned}{}[L_{p_0}(M,A_0),L_{p_1}(M,A_1)]_{\theta }=L_{p_{\theta }}(M,[A_0,A_1]_{\theta }), \end{aligned}$$

where \(1/p_{\theta }=(1-\theta )/p_0+\theta /p_1\).

(iii) For \(m_0,m_1\in \mathbb {R}\), \(1<p_0,p_1<\infty \),

$$\begin{aligned}{}[W^{m_0}_{p_0},W^{m_1}_{p_1}]_{\theta }=W^{m_{\theta }}_{p_{\theta }}, \end{aligned}$$

where \(m_{\theta }=(1-\theta )m_0+\theta m_1\), and \(1/p_{\theta }=(1-\theta )/p_0+\theta /p_1\).

Now take any \(p\ge 2\), and take \(p_0\le p\le p_1\) such that \(p_0=2^k\) and \(p_1=2^{k+1}\) for some \(k\in \mathbb {N}\), and let \(\theta \in [0,1]\) be such that \(1/p=(1-\theta )/p_0+\theta /p_1.\) By property (ii) we have

and therefore by (i) the solution operator is continuous for any \(p\ge 2\) and integer \(m\ge 1\).
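The choice of \(p_0\), \(p_1\) and \(\theta \) in this step is elementary arithmetic. The helper below (a hypothetical name, for illustration only) computes them for a given \(p\ge 2\), taking \(p_0=2^k\le p<2^{k+1}=p_1\), and solves \(1/p=(1-\theta )/p_0+\theta /p_1\) for \(\theta \).

```python
def dyadic_interpolation(p):
    """Return (p0, p1, theta) with p0 = 2**k <= p < p1 = 2**(k + 1), k >= 1,
    and 1/p = (1 - theta)/p0 + theta/p1."""
    if p < 2:
        raise ValueError("p must be at least 2")
    p0 = 2
    while 2 * p0 <= p:      # largest power of two not exceeding p
        p0 *= 2
    p1 = 2 * p0
    theta = (1.0 / p0 - 1.0 / p) / (1.0 / p0 - 1.0 / p1)
    return p0, p1, theta

for p in (2, 3, 6, 10.5):
    p0, p1, theta = dyadic_interpolation(p)
    print(p, p0, p1, round(theta, 4))
```

When \(p\) is itself a power of two the helper returns \(\theta =0\), i.e. no interpolation is needed and the estimate for \(p=2^k\) applies directly.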

For arbitrary \(m\ge 1\), \(p\ge 2\), set \(\theta =m-\lfloor m\rfloor \), so that \(m=(1-\theta )\lfloor m\rfloor +\theta \lceil m\rceil \). Then properties (ii) and (iii) imply that

If Assumptions 2.6 and 2.7 hold, then the solution operator is continuous from \(\Psi ^{\lceil m\rceil }_{p} \times \mathcal {F}^{\lceil m\rceil }_{p} \times \mathcal {G}^{\lceil m\rceil }_p\) to \(\mathcal {U}^{\lceil m\rceil }_{p}\), and from \(\Psi ^{\lfloor m\rfloor }_{p} \times \mathcal {F}^{\lfloor m\rfloor }_{p} \times \mathcal {G}^{\lfloor m\rfloor }_{p}\) to \(\mathcal {U}^{\lfloor m\rfloor }_{p}\). Applying property (i) again therefore yields (3.17) for \(m\), \(p\). Letting \(r\rightarrow \infty \) and keeping in mind that \(u^h\) is a continuous \(W^m_p\)-valued process, using Fatou’s lemma we get (3.16) when \(q>1\). Hence for \(q>1\) we obtain

for every stopping time \(\tau \le T\), integer \(n\ge 1\), and \(A\in \mathcal {F}_0\), where

and \(N\) is a constant depending only on \(K\), \(T\), \(m\), \(p\), \(q\), \(d\) and \(|\Lambda |\). By virtue of Lemma 3.2 from [8] this implies

for any \(q>0\) with a constant \(N=N(K,T,p,q,d,m,|\Lambda |)\). We finish the proof by letting \(n\rightarrow \infty \). \(\square \)
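For non-integer \(m\), the interpolation parameter in the proof above is determined by property (iii) with \(p_0=p_1=p\), \(m_0=\lfloor m\rfloor \) and \(m_1=\lceil m\rceil =\lfloor m\rfloor +1\). A one-line computation shows which \(\theta \) recovers the exponent \(m\):

$$\begin{aligned} m_{\theta }=(1-\theta )\lfloor m\rfloor +\theta \lceil m\rceil =\lfloor m\rfloor +\theta \big (\lceil m\rceil -\lfloor m\rfloor \big )=\lfloor m\rfloor +\theta , \end{aligned}$$so \(m_{\theta }=m\) precisely when \(\theta =m-\lfloor m\rfloor \in (0,1)\); for example, \(m=5/2\) requires \(\theta =1/2\).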

Proof of Theorem 2.1

By rewriting the equation in non-divergence form, the theorem for integer \(m\) follows from Theorem 2.1 in [4]. Thus we need only prove it when \(m\) is not an integer. From [4] we know that under the conditions of the theorem, (2.1)–(2.2) admits a unique solution \(u\). Moreover, it is \(W^{\lfloor m\rfloor }_p\)-valued, and

holds for \(l=0,1,\ldots ,\lceil m\rceil \) and \(q>0\), where \(\kappa =0\) when \((\mu ^i)=0\) and \(\kappa =1\) when \((\mu ^i)\) is not identically zero. Assume first that \(q>1\). Then following the same interpolation arguments as above, we find that (3.18) holds for all \(l\in [0,\lceil m\rceil ]\), with ess sup in place of \(\sup \) on the left-hand side. Then by substituting \((1-\Delta )^{(m-1)/2}\phi \) in place of \(\phi \) in (2.3), we obtain that for any \(\phi \in C_0^{\infty }\), almost surely for all \(t\in [0,T]\),

where, due to estimates such as

\(\mathbf {F}^i\) and \(\mathbf {G}=(\mathbf {G}^k)_{k=1}^{\infty }\) are predictable processes with values in \(L_p\), such that

Using Itô’s formula for the \(L_p\) norm from [13], we find that \((1-\Delta )^{(m-1)/2}u\) is a strongly continuous \(L_p\)-valued process, and thus \(u\) is a strongly continuous \(W^{m-1}_p\)-valued process. Hence almost surely

is continuous in \(t\in [0,T]\) for all \(\varphi \in C_0^{\infty }\). Let \(\Phi \) denote the set of those \(C^{\infty }_0\) functions which belong to the unit ball of \(L_{p^{*}}\), where \(p^{*}=p/(p-1)\). Then

This, the continuity in \(t\in [0,T]\) of the expression in (3.19) and the density of \(C_0^{\infty }\) in \(W^{-m}_{p^{*}}\) imply that almost surely \(u\) is a weakly continuous \(W^m_p\)-valued process. Consequently, (3.18) holds for all \(l\in [0,m]\) and \(q>1\). Hence, using Lemma 3.2 from [8] in the same way as at the end of the proof of Theorem 3.1, we obtain (3.18) for all \(l\in [0,m]\) and \(q>0\). \(\square \)

4 Proof of the Main Results

Proof of Theorems 2.2, 2.3 and 2.4

To prove Theorem 2.2, first consider the functions

for fixed \(\phi \in W^{n+l+2}_p,\psi \in W^{n+l+3}_p\), \(n,l\ge 0\). Applying Taylor’s formula at \(h=0\) up to \(n+1\) terms we get that

with constants \(A_i=1/(i+1)!\) and

where \(N=N(|\Lambda |,d,l,n)\) is a constant. Similarly, or in fact equivalently to the first inequality, we have

for \(\varphi \in W^{n+l+1}_p\), where \(\partial ^0_{\lambda }\) denotes the identity operator. Without going into details, we note that, due to Assumption 2.6, these expansions yield operators \(\mathfrak {L}_t^{(i)}\) for integers \(i\in [0,\lceil m\rceil ]\) such that \(\mathfrak {L}^{(0)}_t\phi =\partial _i a^{ij}\partial _j\phi +b^i\partial _i\phi +c\phi \),

and

with \(N=N(|\Lambda |,K,d,p,m)\). The random fields \(u^{(j)}\) in expansion (2.11) can then be obtained from the system of SPDEs

where \(v^{(0)}=u\), the solution of (2.1)–(2.2) when \(\mu =0\). The following theorem is the exact analogue of Theorem 5.1 from [7]; it can be proven by induction on \(j\), by a straightforward application of Theorem 2.1 and (4.1)–(4.2).
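The constants \(A_i=1/(i+1)!\) in the expansions above come from expanding a first-order difference quotient in powers of \(h\). As an illustration only, the sketch below assumes the forward difference \(\delta _{h,\lambda }\varphi (x)=(\varphi (x+h\lambda )-\varphi (x))/h\) in one dimension with \(\lambda =1\) and \(\varphi =\sin \) (none of these choices is taken from the text above). It checks numerically that after subtracting \(\sum _{i=0}^{n}h^iA_i\varphi ^{(i+1)}(x)\) the remainder is of order \(h^{n+1}\): halving \(h\) should divide it by roughly \(2^{n+1}\).

```python
import math


def forward_difference(phi, x, h):
    """Assumed one-dimensional difference quotient (phi(x + h) - phi(x)) / h."""
    return (phi(x + h) - phi(x)) / h


def sin_derivative(order, x):
    """order-th derivative of sin, via sin^(k)(x) = sin(x + k*pi/2)."""
    return math.sin(x + order * math.pi / 2)


def taylor_remainder(x, h, n):
    """Difference quotient of sin minus sum_{i=0}^{n} h**i * A_i * sin^(i+1)(x),
    where A_i = 1/(i+1)!; Taylor's formula predicts a remainder of order h**(n+1)."""
    expansion = sum(
        h ** i / math.factorial(i + 1) * sin_derivative(i + 1, x) for i in range(n + 1)
    )
    return forward_difference(math.sin, x, h) - expansion


# Ratio of remainders for h and h/2; close to 2**(n+1) = 8 when n = 2.
ratio = taylor_remainder(0.3, 0.1, 2) / taylor_remainder(0.3, 0.05, 2)
print(ratio)
```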

Theorem 4.1

Let \(k\ge 1\) be an integer, and let Assumptions 2.5, 2.6 and 2.7 hold with \(m\ge 2k+1\). Then there is a unique solution \(u^{(1)},\ldots , u^{(k)}\) of (4.4)–(4.5). Moreover, \(u^{(j)}\) is a \(W^{m-2j}_p\)-valued weakly continuous process, it is strongly continuous as a \(W^{m-2j-1}_p\)-valued process, and

for \(j=1,\ldots ,k\), for any \(q>0\), with a constant \(N=N(K,m,p,q,T,|\Lambda |)\).

Set

for \(t\in [0,T]\) and \(x\in \mathbb {R}^d\), where \(u^h\) is the solution of (3.13)–(3.14). Then it is not difficult to verify that \(\overline{r}^h\) is the solution, in the sense of Definition 3.1, of the finite difference equation

with initial condition \(\overline{r}^h_0(x)=0\) for \(x\in \mathbb {R}^d\), where

Hence by applying Theorem 3.1 we get

Now using \(m-2k-3>d/p\), for the left-hand side we can write

while (4.3) and Theorem 4.1 yield

where \(N\) denotes constants depending only on \(K\), \(m\), \(d\), \(q\), \(p\), \(T\) and \(|\Lambda |\). Putting these inequalities together we obtain the estimate

for all \(h>0\) with a constant \(N=N(K, m, d, q, p, T,|\Lambda |)\). Thus we have the following theorem.

Theorem 4.2

Let \(k\ge 0\) be an integer and let Assumptions 2.5, 2.6 and 2.7 hold with \(m>2k+3+d/p\). Then there are continuous random fields \(u^{(1)},\ldots ,u^{(k)}\) on \([0,T]\times \mathbb {R}^d\), independent of \(h\), such that almost surely

for all \(t\in [0,T]\) and \(x\in \mathbb {R}^d\), where \(u^{(0)}=u\), \(u^h\) is the solution of (3.13)–(3.14), and \({\overline{r}}^h\) is a continuous random field on \([0,T]\times \mathbb {R}^d\), which for any \(q>0\) satisfies the estimate (4.6).

Proof

By Theorems 2.1, 3.1 and 4.1, \(u^h\), \(u^{(0)}\), \(u^{(1)},\ldots ,u^{(k)}\) are strongly continuous \(W^{m-2k-1}_p\)-valued processes. Since \(m-2k-1>d/p\) by assumption, Sobolev’s theorem on embedding \(W^{m-2k-1}_p(\mathbb {R}^d)\) into \(C_b(\mathbb {R}^d)\) implies that these random fields are continuous in \((t,x)\in [0,T]\times \mathbb {R}^d\). (Recall that we always identify a function with its continuous version whenever it has one.) Hence (4.7) holds by the definition of \(\overline{r}^h\), and estimate (4.6) is proved above. \(\square \)

To finish the proof of Theorem 2.2 we need only show that if Assumption 2.4 holds, then under the conditions of Theorem 4.2 the restriction of the solution \(u^h\) of (3.13)–(3.14) onto \([0,T]\times \mathbb {G}_h\) is a continuous \(l_p\)-valued process which solves (2.5)–(2.6). To this end note that under the conditions of Theorem 4.2, \(u^h\) is a continuous \(W^{m-1}_p\)-valued process, and if Assumption 2.4 also holds, then by (2.7) its restriction to \([0,T]\times \mathbb {G}_h\) is a continuous \(l_p\)-valued process. To see that this process satisfies (2.5)–(2.6), we fix a point \(x\in \mathbb {G}_h\) and take a nonnegative smooth function \(\varphi \) with compact support in \(\mathbb {R}^d\) whose integral over \(\mathbb {R}^d\) is one. Define for each integer \(n\ge 1\) the function \(\varphi ^{(n)}(z)=n^{d}\varphi (n(z-x))\), \(z\in \mathbb {R}^d\). Then by Definition 3.1 we have for \(u^h\), the solution of (3.13)–(3.14), that almost surely

for all \(t\in [0,T]\) and for all \(n\ge 1\). Letting \(n\rightarrow \infty \), for each \(t\in [0,T]\) we get

almost surely, since \(u^h\), \(\psi \), \(f\), \(\nu \), \(g\) and the coefficients of \(L^h\) are continuous in \(x\), by Sobolev’s theorem on embedding \(W^m_p(\mathbb {R}^d)\) into \(C_b(\mathbb {R}^d)\) for \(m>d/p\). Note that both \(u^h_t(x)\) and the random field on the right-hand side of the last equation are continuous in \(t\in [0,T]\). Therefore this equality holds almost surely for all \(t\in [0,T]\) and \(x\in \mathbb {G}_h\). The proof of Theorem 2.2 is complete.
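The limit \(n\rightarrow \infty \) above rests on \(\varphi ^{(n)}\) being an approximate identity: for a function \(v\) continuous at \(x\), \(\int v(z)\varphi ^{(n)}(z)\,dz\rightarrow v(x)\). The following one-dimensional numerical sketch illustrates this under our own assumptions: the standard bump \(\varphi (z)=c\,e^{-1/(1-z^2)}\) on \((-1,1)\), a hypothetical test function \(v\), and a midpoint quadrature rule.

```python
import math


def bump(z):
    """Smooth nonnegative function supported in (-1, 1), not yet normalised."""
    return math.exp(-1.0 / (1.0 - z * z)) if abs(z) < 1.0 else 0.0


def integrate(f, a, b, steps=20000):
    """Composite midpoint rule for the integral of f over [a, b]."""
    width = (b - a) / steps
    return width * sum(f(a + (j + 0.5) * width) for j in range(steps))


MASS = integrate(bump, -1.0, 1.0)  # normalising constant, so phi = bump / MASS


def smoothed_value(v, x, n):
    """Integral of v(z) * phi_n(z) dz with phi_n(z) = n * phi(n * (z - x)) in d = 1."""
    def integrand(z):
        return v(z) * n * bump(n * (z - x)) / MASS

    # phi_n vanishes outside (x - 1/n, x + 1/n), so integrate over that interval only.
    return integrate(integrand, x - 1.0 / n, x + 1.0 / n)


def v(z):
    """Hypothetical continuous test function (not differentiable at 0)."""
    return abs(z) + math.cos(3.0 * z)


errors = [abs(smoothed_value(v, 0.4, n) - v(0.4)) for n in (1, 10, 100)]
print(errors)
```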

The extrapolation result, Theorem 2.3, follows from Theorem 2.2 by standard calculations, and hence Theorem 2.4 on the rate of almost sure convergence follows by a standard application of the Borel–Cantelli Lemma.
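The standard calculations behind the extrapolation result are those of Richardson’s method: approximations computed with mesh sizes \(h, h/2,\ldots ,h/2^k\) are combined with weights that cancel the powers \(h,\ldots ,h^k\) in an expansion in powers of \(h\) such as the one in Theorem 4.2. The Python sketch below is generic and is not the specific combination stated in Theorem 2.3, which is not reproduced here. It solves for the weights in exact arithmetic and applies them to a toy approximation whose error is a power series in \(h\). The halving of the mesh and all function names are our assumptions.

```python
import math
from fractions import Fraction


def richardson_weights(k):
    """Weights c_0, ..., c_k with sum_i c_i * 2**(-i*j) = 1 for j = 0 and 0 for j = 1, ..., k.

    Combining approximations on meshes h, h/2, ..., h/2**k with these weights
    cancels the terms of order h, h**2, ..., h**k of an expansion in powers of h.
    """
    size = k + 1
    # Augmented matrix of the Vandermonde-type system M c = e_0, M[j][i] = 2**(-i*j).
    rows = [
        [Fraction(1, 2 ** (i * j)) for i in range(size)] + [Fraction(int(j == 0))]
        for j in range(size)
    ]
    for col in range(size):  # Gauss-Jordan elimination in exact arithmetic
        pivot = next(r for r in range(col, size) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        lead = rows[col][col]
        rows[col] = [entry / lead for entry in rows[col]]
        for r in range(size):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


def extrapolate(approx, h, k):
    """Richardson combination of approx(h), approx(h/2), ..., approx(h/2**k)."""
    weights = richardson_weights(k)
    return sum(float(c) * approx(h / 2 ** i) for i, c in enumerate(weights))


def forward_difference_of_exp(h):
    """Toy approximation of exp'(0) = 1 with error h/2 + h**2/6 + h**3/24 + ..."""
    return (math.exp(h) - 1.0) / h


print(richardson_weights(2))  # weights for h, h/2, h/4
print(abs(forward_difference_of_exp(0.1) - 1.0))
print(abs(extrapolate(forward_difference_of_exp, 0.1, 2) - 1.0))
```

For \(k=1\) the weights reduce to the familiar combination \(2u^{h/2}-u^{h}\).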

Proof of Theorem 2.5

Let \(\rho (x)=\rho _{\overline{s}}(\epsilon x) =1/(1+|\epsilon x|^2)^{\overline{s}/2}\), where \(\epsilon >0\) is to be determined later, and choose \(p\) large enough that \(1>d/p\) (and therefore \(m>2k+3+d/p\)) and that Assumption 2.7 holds for \(\psi \rho \), \(f\rho \) and \(g\rho \) in place of \(\psi \), \(f\) and \(g\), respectively. A direct calculation shows that \(u\) is the solution of (2.13)–(2.14) if and only if \(u\rho \) is the solution of the equation

for \((t,x)\in [0,T]\times \mathbb {R}^d\), with the initial condition

for \(x\in \mathbb {R}^d\), where the coefficients are given by

Due to our choice of \(\rho \), these coefficients still satisfy the conditions of Theorem 2.1 from [4]. Applying this theorem, we obtain a unique \(W^m_p\)-valued solution \(v\). Using Sobolev embedding, we get that \(v/\rho \), which is a solution of (2.13)–(2.14), is a \(P^{m-1}_p\)-valued process.

One can similarly transform the finite difference equations, using (3.2)–(3.3). It turns out that \(u^h\) is a solution of (2.5)–(2.6) if and only if \(u^h\rho \) is a solution of the equation

for \((t,x)\in [0,T]\times \mathbb {G}_h\) with initial condition

for \(x\in \mathbb {G}_h\), where

with

where \(\mathfrak {a}^{\lambda }\) is understood to be 0 when not defined.

As mentioned earlier, the restriction to \(\mathbb {G}_h\) of the continuous modification of \(\psi \rho \) is in \(l_{p,h}\), and those of \(f\rho \) and \(g\rho \) are \(l_{p,h}\)-valued and \(l_{p,h}(l_2)\)-valued processes, respectively. The coefficients above are bounded, so, as we have already seen, there exists a unique \(l_{p,h}\)-valued solution \(v^h\); in particular, it is bounded. Therefore \(v^h/\rho \) is a solution of (2.5)–(2.6) and has polynomial growth.

By choosing \(\epsilon \) small enough, \(|\delta _{h,\lambda }\rho /\rho |\) can be made arbitrarily small, uniformly in \(x\in \mathbb {R}^d\), \(\lambda \in \Lambda \), \(|h|<1\). In particular, we can choose \(\epsilon \) so small that \(\hat{\mathfrak {p}}_h^{\gamma }\ge 0\). Moreover, all of the smoothness and boundedness properties of the coefficients are preserved. Therefore (4.11)–(4.12) is a finite difference scheme for Eqs. (4.9)–(4.10) which satisfies Assumptions 2.4 through 2.7. Claims (iii) and (iv) then follow from Theorems 2.2 and 2.4. \(\square \)
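The smallness of \(|\delta _{h,\lambda }\rho /\rho |\) for small \(\epsilon \) can be seen from the logarithmic derivative. Since \(|\nabla \log (1+\epsilon ^2|y|^2)|\le \epsilon \), one gets \(\sup _x|\delta _{h,\lambda }\rho /\rho |\le e^{\overline{s}\epsilon |\lambda |/2}-1\) for \(|h|\le 1\), provided \(\delta _{h,\lambda }\) is the forward difference quotient \((\varphi (x+h\lambda )-\varphi (x))/h\). That form of \(\delta _{h,\lambda }\), the restriction to \(d=1\) and the finite sample grid are assumptions of the following Python sketch, which evaluates the supremum numerically and compares it with this bound.

```python
import math


def rho(x, eps, s):
    """Weight rho(x) = (1 + (eps * x)**2) ** (-s / 2) in one space dimension."""
    return (1.0 + (eps * x) ** 2) ** (-s / 2.0)


def sup_relative_difference(eps, s, lam=1.0):
    """Largest |delta_{h,lam} rho(x) / rho(x)| over sample points x in [-200, 200]
    and 0 < |h| < 1, with the assumed forward difference
    delta_{h,lam} f(x) = (f(x + h * lam) - f(x)) / h."""
    xs = [i * 0.5 for i in range(-400, 401)]
    hs = [sign * j / 20 for j in range(1, 20) for sign in (1, -1)]
    return max(
        abs((rho(x + h * lam, eps, s) - rho(x, eps, s)) / (h * rho(x, eps, s)))
        for x in xs
        for h in hs
    )


def analytic_bound(eps, s, lam=1.0):
    """Bound exp(s * eps * |lam| / 2) - 1 from the logarithmic-derivative estimate."""
    return math.expm1(s * eps * abs(lam) / 2.0)


for eps in (1.0, 0.1, 0.01):
    print(eps, sup_relative_difference(eps, s=2.0), analytic_bound(eps, s=2.0))
```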

References

Ciarlet, P.G., Lions, J.L. (eds.): Handbook of Numerical Analysis. Elsevier, Amsterdam (2013)

Davie, A.M., Gaines, J.G.: Convergence of numerical schemes for solutions of parabolic stochastic partial differential equations. Math. Comput. 70, 121–134 (2001)

Dong, H., Krylov, N.V.: On the rate of convergence of finite-difference approximations for degenerate linear parabolic equations with \(C^1\) and \(C^2\) coefficients. Electron. J. Differ. Equ. 102, 1–25 (2005). http://ejde.math.txstate.edu MR2162263 (2006i:35008)

Gerencsér, M., Gyöngy, I., Krylov, N.V.: On the solvability of degenerate stochastic partial differential equations in Sobolev spaces. http://arxiv.org/abs/1404.4401

Gyöngy, I.: An introduction to the theory of stochastic partial differential equations (in preparation)

Gyöngy, I.: On finite difference schemes for degenerate stochastic parabolic partial differential equations. J. Math. Sci. 179(1), 100–126 (2011)

Gyöngy, I.: On stochastic finite difference schemes. http://arxiv.org/abs/1309.7610

Gyöngy, I., Krylov, N.V.: On the rate of convergence of splitting-up approximations for SPDEs. Prog. Probab. 56, 301–321 (2003). Birkhäuser Verlag

Gyöngy, I., Krylov, N.V.: Accelerated finite difference schemes for linear stochastic partial differential equations in the whole space. SIAM J. Math. Anal. 42(5), 2275–2296 (2010)

Gyöngy, I., Millet, A.: Rate of Convergence of space time approximations for stochastic evolution equations. Potential Anal. 30, 29–64 (2009)

Hall, E.J.: Accelerated spatial approximations for time discretized stochastic partial differential equations. SIAM J. Math. Anal. 44, 3162–3185 (2012)

Kim, K.: \(L_q(L_p)\)-theory of parabolic PDEs with variable coefficients. Bull. Korean Math. Soc. 45(1), 169–190 (2008)

Krylov, N.V.: Itô’s formula for the \(L_{p}\)-norm of stochastic \(W^{1}_{p}\)-valued processes. Probab. Theory Relat. Fields 147(3–4), 583–605 (2010)

Krylov, N.V.: The rate of convergence of finite-difference approximations for Bellman equations with Lipschitz coefficients. Appl. Math. Optim. 52(3), 365–399 (2005)

Krylov, N.V.: An analytic approach to SPDEs. In: Stochastic Partial Differential Equations: Six Perspectives, Math. Surveys Monogr., vol. 64, pp. 185–242. Amer. Math. Soc., Providence (1999)

Krylov, N.V.: SPDEs in \(L_q((0,\tau ], L_p)\) spaces. Electron. J. Probab. 5(13), 1–29 (2000)

Krylov, N.V.: On factorizations of smooth nonnegative matrix-values functions and on smooth functions with values in polyhedra. Appl. Math. Optim. 58(3), 373–392 (2008)

Krylov, N.V.: A priori estimates of smoothness of solutions to difference Bellman equation with linear and quasilinear operators. Math. Comput. 76, 669–698 (2007)

Krylov, N.V., Rozovskii, B.L.: Characteristics of second-order degenerate parabolic Itô equations. J. Sov. Math. 32(4), 336–348 (1986)

Richardson, L.F.: The approximate arithmetical solution by finite differences of physical problems involving differential equations. Philos. Trans. R. Soc. Lond. Ser. A 210, 307–357 (1910)

Triebel, H.: Interpolation Theory—Function Spaces—Differential Operators. North Holland Publishing Company, Amsterdam (1978)

Yoo, H.: Semi-discretization of stochastic partial differential equations on \({\mathbb{R}}^1\) by finite difference method. Math. Comput. 69, 653–666 (2000)

Acknowledgments

The authors would like to thank the anonymous referee for her/his remarks, which helped to improve the presentation of the paper.


Gerencsér, M., Gyöngy, I. Finite Difference Schemes for Stochastic Partial Differential Equations in Sobolev Spaces. Appl Math Optim 72, 77–100 (2015). https://doi.org/10.1007/s00245-014-9272-2
