Abstract

Recently developed quantum algorithms address computational challenges in numerical analysis by performing linear algebra in Hilbert space. Such algorithms can produce a quantum state proportional to the solution of a d-dimensional system of linear equations or linear differential equations with complexity \({{\,\mathrm{poly}\,}}(\log d)\). While several of these algorithms approximate the solution to within \(\epsilon \) with complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\), no such algorithm was previously known for differential equations with time-dependent coefficients. Here we develop a quantum algorithm for linear ordinary differential equations based on so-called spectral methods, an alternative to finite difference methods that approximates the solution globally. Using this approach, we give a quantum algorithm for time-dependent initial and boundary value problems with complexity \({{\,\mathrm{poly}\,}}(\log d, \log (1/\epsilon ))\).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Differential equations have extensive applications throughout mathematics, science, and engineering. Numerical methods for differential equations have been widely studied (see for example [25]), giving fast algorithms for solving them using classical computers.

Recent work has developed quantum algorithms with the potential to extract information about solutions of systems of differential equations even faster than is possible classically. This body of work grew from the quantum linear systems algorithm (QLSA) [20], which produces a quantum state proportional to the solution of a sparse system of d linear equations in time \({{\,\mathrm{poly}\,}}(\log d)\). Subsequent work improved the performance of that algorithm [1, 15] and applied it to develop similar quantum algorithms for differential equations.

To achieve this improvement, quantum algorithms must operate under different assumptions than those made for algorithms in classical numerical analysis. To represent the output using \({{\,\mathrm{poly}\,}}(\log d)\) qubits, the output is produced as a quantum state, not as an explicit description of a vector. Furthermore, to facilitate applying Hamiltonian simulation, these quantum algorithms use implicit access to the system of equations (say, through a matrix specified by a sparse Hamiltonian oracle and the ability to prepare quantum states encoding certain vectors). While these assumptions restrict the types of equations that can be addressed and the types of information that can be extracted from the final state, they nevertheless appear capable of producing useful information that cannot be efficiently computed classically.

In this paper, we focus on systems of first-order linear ordinary differential equations (ODEs). Such equations can be written in the form

where \(t \in [0,T]\) for some \(T>0\), the solution \(x(t)\in {\mathbb {C}}^d\) is a d-dimensional vector, and the system is determined by a time-dependent matrix \(A(t)\in {\mathbb {C}}^{d\times d}\) and a time-dependent inhomogeneity \(f(t)\in {\mathbb {C}}^d\). Provided A(t) and f(t) are continuous functions of t, the initial value problem (i.e., the problem of determining x(t) for a given initial condition x(0)) has a unique solution [2].

The Hamiltonian simulation problem is simply the special case of the quantum ODE problem where A is antihermitian and f is zero. A substantial body of work has developed fast quantum algorithms for that special case [6,7,8,9, 11, 13, 27,28,29,30]. Hamiltonian simulation underlies the QLSA [15, 20] which in turn gives algorithms for more general differential equations.

Berry presented the first efficient quantum algorithm for general linear ODEs [5]. His algorithm represents the system of differential equations as a system of linear equations using a linear multistep method and solves that system using the QLSA. This approach achieves complexity logarithmic in the dimension d and, by using a high-order integrator, close to quadratic in the evolution time T. While this method could in principle be applied to handle time-dependent equations, the analysis of [5] only explicitly considers the time-independent case for simplicity.

Since it uses a finite difference approximation, the complexity of Berry’s algorithm as a function of the solution error \(\epsilon \) is \({{\,\mathrm{poly}\,}}(1/\epsilon )\) [5]. Reference [10] improved this to \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) by using a high-precision QLSA based on linear combinations of unitaries [15] to solve a linear system that encodes a truncated Taylor series. However, this approach assumes that A(t) and f(t) are time-independent so that the solution of the ODE can be written as an explicit series, and it is unclear how to generalize the algorithm to time-dependent ODEs.

While we focus here on extending the above line of work, several other approaches have been proposed for addressing differential equations with quantum computers. Reference [26] used a quantum version of the Euler method to handle nonlinear ODEs with polynomial nonlinearities. This algorithm has complexity logarithmic in the dimension but exponential in the evolution time (as is inevitable for general nonlinear ODEs). Other work has developed quantum algorithms for partial differential equations (PDEs). Reference [16] described a quantum algorithm that applies the QLSA to implement a finite element method for Maxwell’s equations. Reference [17] applied Hamiltonian simulation to a finite difference approximation of the wave equation. Most recently, reference [3] presented a continuous-variable quantum algorithm for initial value problems with non-homogeneous linear PDEs.

Most of the aforementioned algorithms use a local approximation: they discretize the differential equations into small time intervals to obtain a system of linear equations or linear differential equations that can be solved by the QLSA or Hamiltonian simulation. For example, the central difference scheme approximates the time derivative at the point x(t) as

High-order finite difference or finite element methods can reduce the error to \(O(h^k)\), where \(k-1\) is the order of the approximation. However, when solving an equation over the interval [0, T], the number of iterations is \(T/h = \Theta (\epsilon ^{-1/k})\) for fixed k, giving a total complexity that is \({{\,\mathrm{poly}\,}}(1/\epsilon )\) even using high-precision methods for the QLSA or Hamiltonian simulation.

For ODEs with special structure, some prior results already show how to avoid a local approximation and thereby achieve complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\). When A(t) is anti-Hermitian and \(f(t)=0\), we can directly apply Hamiltonian simulation [9]; if A and f are time-independent, then [10] uses a Taylor series to achieve complexity \({{\,\mathrm{poly}\,}}(\log ({1}/{\epsilon }))\). However, the case of general time-dependent linear ODEs had remained elusive.

In this paper, we use a nonlocal representation of the solution of a system of differential equations to give a new quantum algorithm with complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) even for time-dependent equations. While this is an exponential improvement in the dependence on \(\epsilon \) over previous work, it does not necessarily give an exponential runtime improvement in the context of an algorithm with classical output. In general, statistical error will introduce an overhead of \({{\,\mathrm{poly}\,}}(1/\epsilon )\) when attempting to measure an observable with precision \(\epsilon \). However, achieving complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) can result in a polynomial improvement in the overall running time. In particular, if an algorithm is used as a subroutine k times, we should ensure error O(1/k) for each subroutine to give an overall algorithm with bounded error. A subroutine with complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) can potentially give significant polynomial savings in such a case.

Time-dependent linear differential equations describe a wide variety of systems in science and engineering. Examples include the wave equation and the Stokes equation (i.e., creeping flow) in fluid dynamics [24], the heat equation and the Boltzmann equation in thermodynamics [19, 33], the Poisson equation and Maxwell’s equations in electromagnetism [22, 34], and of course Schrödinger’s equation in quantum mechanics. Moreover, some nonlinear differential equations can be studied by linearizing them to produce time-dependent linear equations (e.g., the linearized advection equation in fluid dynamics [12]).

We focus our discussion on first-order linear ODEs. Higher-order ODEs can be transformed into first-order ODEs by standard methods. Also, by discretizing space, PDEs with both time and space dependence can be regarded as sparse linear systems of time-dependent ODEs. Thus we focus on an equation of the form (1.1) with initial condition

for some specified \(\gamma \in {\mathbb {C}}^d\). We assume that A(t) is s-sparse (i.e., has at most s nonzero entries in any row or column) for any \(t\in [0,T]\). Furthermore, we assume that A(t), f(t), and \(\gamma \) are provided by black-box subroutines (which serve as abstractions of efficient computations). In particular, following essentially the same model as in [10] (see also Section 1.1 of [15]), suppose we have an oracle \(O_A(t)\) that, for any \(t \in [0,T]\) and any given row or column specified as input, computes the locations and values of the nonzero entries of A(t) in that row or column. We also assume oracles \(O_x\) and \(O_f(t)\) that, for any \(t \in [0,T]\), prepare normalized states \(|\gamma \rangle \) and \(|f(t)\rangle \) proportional to \(\gamma \) and f(t), and that also compute \(\Vert \gamma \Vert \) and \(\Vert f(t)\Vert \), respectively. Given such a description of the instance, the goal is to produce a quantum state \(\epsilon \)-close to \(|x(T)\rangle \) (a normalized quantum state proportional to x(T)).

As mentioned above, our main contribution is to implement a method that uses a global approximation of the solution. We do this by developing a quantum version of so-called spectral methods, a technique from classical numerical analysis that (approximately) represents the components of the solution \(x(t)_i \approx \sum _j c_{ij} \phi _j(t)\) as linear combinations of basis functions \(\phi _j(t)\) expressing the time dependence. Specifically, we implement a Chebyshev pseudospectral method [4, 21] using the QLSA. This approach approximates the solution by a truncated Chebyshev series with undetermined coefficients and solves for those coefficients using a linear system that interpolates the differential equations. According to the convergence theory of spectral methods, the solution error decreases exponentially provided the solution is sufficiently smooth [18, 31]. We use the LCU-based QLSA to solve this linear system with high precision [15]. To analyze the algorithm, we upper bound the solution error and condition number of the linear system and lower bound the success probability of the final measurement. Overall, we show that the total complexity of this approach is \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) for general time-dependent ODEs. Informally, we show the following:

Theorem 1

(Informal). Consider a linear ODE (1.1) with given initial conditions. Assume A(t) is s-sparse and diagonalizable, and \(\mathop {\mathrm {Re}}(\lambda _i(t))\le 0\) for all eigenvalues of A(t). Then there exists a quantum algorithm that produces a state \(\epsilon \)-close in \(l_2\) norm to the exact solution, succeeding with probability \(\Omega (1)\), with query and gate complexity \(O\bigl (s\Vert A\Vert T\, {{\,\mathrm{poly}\,}}(\log (s\Vert A\Vert T/\epsilon )))\).

In addition to initial value problems (IVPs), our approach can also address boundary value problems (BVPs). Given an oracle for preparing a state \(\alpha |x(0)\rangle +\beta |x(T)\rangle \) expressing a general boundary condition, the goal of the quantum BVP is to produce a quantum state \(\epsilon \)-close to \(|x(t)\rangle \) (a normalized state proportional to x(t)) for any desired \(t \in [0,T]\). We also give a quantum algorithm for this problem with complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\), as follows:

Theorem 2

(Informal). Consider a linear ODE (1.1) with given boundary conditions. Assume A(t) is s-sparse and diagonalizable, and \(\mathop {\mathrm {Re}}(\lambda _i(t))\le 0\) for all eigenvalues of A(t). Then there exists a quantum algorithm that produces a state \(\epsilon \)-close in \(l_2\) norm to the exact solution, succeeding with probability \(\Omega (1)\), with query and gate complexity \(O\bigl (s\Vert A\Vert ^4T^4\, {{\,\mathrm{poly}\,}}(\log (s\Vert A\Vert T/\epsilon )))\).

We give formal statements of Theorems 1 and 2 in Sects. 8 and 9, respectively. Note that the dependence of the complexity on \(\Vert A\Vert \) and T is worse for BVPs than for IVPs. This is because a rescaling approach that we apply for IVPs (introduced in Sect. 3) cannot be extended to BVPs.

The remainder of this paper is organized as follows. Section 2 introduces the spectral method and Sect. 3 shows how to encode it into a quantum linear system. Then Sect. 4 analyzes the exponential decrease of the solution error, Sect. 5 bounds the condition number of the linear system, Sect. 6 lower bounds the success probability of the final measurement, and Sect. 7 describes how to prepare the initial quantum state. We combine these bounds in Sect. 8 to establish the main result. We then extend the analysis for initial value problems to boundary value problems in Sect. 9. Finally, we conclude in Sect. 10 with a discussion of the results and some open problems.

2 Spectral Method

Spectral methods provide a way of solving differential equations using global approximations [18, 31]. The main idea of the approach is as follows. First, express an approximate solution as a linear combination of certain basis functions with undetermined coefficients. Second, construct a system of linear equations that such an approximate solution should satisfy. Finally, solve the linear system to determine the coefficients of the linear combination.

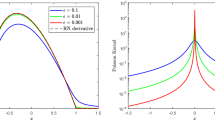

Spectral methods offer a flexible approach that can be adapted to different settings by careful choice of the basis functions and the linear system. A Fourier series provides an appropriate basis for periodic problems, whereas Chebyshev polynomials can be applied more generally. The linear system can be specified using Gaussian quadrature (giving a spectral element method or Tau method), or one can simply interpolate the differential equations using quadrature nodes (giving a pseudo-spectral method) [31]. Since general linear ODEs are non-periodic, and interpolation facilitates constructing a straightforward linear system, we develop a quantum algorithm based on the Chebyshev pseudo-spectral method [4, 21].

In this approach, we consider a truncated Chebyshev approximation x(t) of the exact solution \({{\hat{x}}}(t)\), namely

for any \(n \in {\mathbb {Z}}^+\). (See “Appendix A” for the definition of \(T_k(t)\) and a discussion of its properties.) The coefficients \(c_{i,k} \in {\mathbb {C}}\) for all \(i\in [{d}]_0\) and \(k\in [{n+1}]_0\) are determined by demanding that x(t) satisfies the ODE and initial conditions at a set of interpolation nodes\(\{t_l\}_{l=0}^n\) (with \(1=t_0>t_1>\cdots >t_n=-1\)), where \(x(t_0)\) and \(x(t_n)\) are the initial and final states, respectively. In other words, we require

and

We choose the domain \([-1,1]\) in (2.2) because this is the natural domain for Chebyshev polynomials. Correspondingly, in the following section, we rescale the domain of initial value problems to be \([-1,1]\). We would like to be able to increase the accuracy of the approximation by increasing n, so that

There are many possible choices for the interpolation nodes. Here we use the Chebyshev-Gauss-Lobatto quadrature nodes, \(t_l=\cos \frac{l\pi }{n}\) for \(l \in [{n+1}]_0\), since these nodes achieve the highest convergence rate among all schemes with the same number of nodes [23, 25]. These nodes also have the convenient property that \(T_k(t_l)=\cos \frac{kl\pi }{n}\), making it easy to compute the values \(x_i(t_l)\).

To evaluate the condition (2.2), it is convenient to define coefficients \(c'_{i,k}\) for \(i\in [{d}]_0\) and \(k\in [{n+1}]_0\) such that

We can use the differential property of Chebyshev polynomials,

to determine the transformation between \(c_{i,k}\) and \(c'_{i,k}\). As detailed in “Appendix A”, we have

where \(D_n\) is the \((n+1) \times (n+1)\) upper triangular matrix with nonzero entries

where

Using this expression in (2.2), (2.7), and (2.8), we obtain the following linear equations:

We also demand that the Chebyshev series satisfies the initial condition \(x_i(1)=\gamma _i\) for all \(i\in [{d}]_0\). This system of linear equations gives a global approximation of the underlying system of differential equations. Instead of locally approximating the ODE at discretized times, these linear equations use the behavior of the differential equations at the \(n+1\) times \(\{t_l\}_{l=0}^n\) to capture their behavior over the entire interval \([-1,1]\).

Our algorithm solves this linear system using the high-precision QLSA [15]. Given an encoding of the Chebyshev coefficients \(c_{ik}\), we can obtain the approximate solution x(t) as a suitable linear combination of the \(c_{ik}\), a computation that can also be captured within a linear system. The resulting approximate solution x(t) is close to the exact solution \({\hat{x}}(t)\):

Lemma 1

(Lemma 19 of [18]). Let \({\hat{x}}(t)\in C^{r+1}(-1,1)\) be the solution of the differential equations (1.1) and let x(t) satisfy (2.2) and (2.3) for \(\{t_l=\cos \frac{l\pi }{n}\}_{l=0}^n\). Then there is a constant C, independent of n, such that

This shows that the convergence behavior of the spectral method is related to the smoothness of the solution. For a solution in \(C^{r+1}\), the spectral method approximates the solution with \(n={{\,\mathrm{poly}\,}}({1}/{\epsilon })\). Furthermore, if the solution is smoother, we have an even tighter bound:

Lemma 2

(Eq. (1.8.28) of [31]). Let \({\hat{x}}(t)\in C^{\infty }(-1,1)\) be the solution of the differential equations (1.1) and let x(t) satisfy (2.2) and (2.3) for \(\{t_l=\cos \frac{l\pi }{n}\}_{l=0}^n\). Then

For simplicity, we replace the value \(\sqrt{{2}/{\pi }}\) by the upper bound of 1 in the following analysis.

This result implies that if the solution is in \(C^{\infty }\), the spectral method approximates the solution to within \(\epsilon \) using only \(n={{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) terms in the Chebyshev series. Consequently, this approach gives a quantum algorithm with complexity \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\).

3 Linear System

In this section we construct a linear system that encodes the solution of a system of differential equations via the Chebyshev pseudospectral method introduced in Sect. 2. We consider a system of linear, first-order, time-dependent ordinary differential equations, and focus on the following initial value problem:

Problem 1

In the quantum ODE problem, we are given a system of equations

where \(x(t) \in {\mathbb {C}}^d\), \(A(t) \in {\mathbb {C}}^{d\times d}\) is s-sparse, and \(f(t) \in {\mathbb {C}}^d\) for all \(t\in [0,T]\). We assume that \(A_{ij},f_i \in C^\infty (0,T)\) for all \(i,j \in [{d}]\). We are also given an initial condition \(x(0) = \gamma \in {\mathbb {C}}^d\). Given oracles that compute the locations and values of nonzero entries of A(t) for any t, and that prepare normalized states \(|\gamma \rangle \) proportional to \(\gamma \) and \(|f(t)\rangle \) proportional to f(t) for any \(t \in [0,T]\), the goal is to output a quantum state \(|x(T)\rangle \) that is proportional to x(T).

Without loss of generality, we rescale the interval [0, T] onto \([-1,1]\) by the linear map \(t \mapsto 1-2t/T\). Under this rescaling, we have \(\frac{\mathrm {d}}{\mathrm {d}{t}} \mapsto -\frac{T}{2}\frac{\mathrm {d}}{\mathrm {d}{t}}\), so \(A \mapsto -\frac{T}{2}A\), which can increase the spectral norm. To reduce the dependence on T—specifically, to give an algorithm with complexity close to linear in T—we divide the interval [0, T] into subintervals \([0,\Gamma _1],[\Gamma _1,\Gamma _2],\ldots ,[\Gamma _{m-1},T]\) with \(\Gamma _0:=0, \Gamma _m:=T\). Each subinterval \([\Gamma _h,\Gamma _{h+1}]\) for \(h\in [{m}]_0\) is then rescaled onto \([-1,1]\) with the linear map \(K_h :[\Gamma _h,\Gamma _{h+1}] \rightarrow [-1,1]\) defined by

which satisfies \(K_h(\Gamma _h)=1\) and \(K_h(\Gamma _{h+1})=-1\). To solve the overall initial value problem, we simply solve the differential equations for each successive interval (as encoded into a single system of linear equations).

Now let \(\tau _h:=|\Gamma _{h+1}-\Gamma _h|\) and define

Then, for each \(h\in [{m}]_0\), we have the rescaled differential equations

for \(t\in [-1,1]\) with the initial conditions

By taking

where \(\Vert {\cdot }\Vert \) denotes the spectral norm, we can ensure that \(\Vert {A_h(t)}\Vert \le 1\) for all \(t \in [-1,1]\). In particular, it suffices to take

Having rescaled the equations to use the domain \([-1,1]\), we now apply the Chebyshev pseudospectral method. Following Sect. 2, we substitute the truncated Chebyshev series of x(t) into the differential equations with interpolating nodes \(\{t_l=\cos \frac{l\pi }{n} : l \in [{n}]\}\), giving the linear system

with initial condition

Note that in the following, terms with \(l=0\) refer to this initial condition.

We now describe a linear system

that encodes the Chebyshev pseudospectral approximation and uses it to produce an approximation of the solution at time T.

The vector \(|X\rangle \in {\mathbb {C}}^{m+p} \otimes {\mathbb {C}}^d \otimes {\mathbb {C}}^{n+1}\) represents the solution in the form

where \(c_{i,l}(\Gamma _{h+1})\) are the Chebyshev series coefficients of \(x(\Gamma _{h+1})\) and \(x_i := x(\Gamma _m)_i\) is the ith component of the final state \(x(\Gamma _m)\).

The right-hand-side vector \(|B\rangle \) represents the input terms in the form

where

Here \(\gamma \) is the initial condition and \(f_{h}(\cos \frac{l\pi }{n})_i\) is ith component of \(f_h\) at the interpolation point \(t_l=\cos \frac{l\pi }{n}\).

We decompose the matrix L in the form

We now describe each of the matrices \(L_i\) for \(i \in [{5}]\) in turn.

The matrix \(L_1\) is a discrete representation of \(\frac{\mathrm {d}{x}}{\mathrm {d}{t}}\), satisfying

(recall from (2.5) and (2.7) that \(D_n\) encodes the action of the time derivative on a Chebyshev expansion). Thus \(L_1\) has the form

where the interpolation matrix is a discrete cosine transform matrix:

The matrix \(L_2(A_h)\) discretizes \(A_h(t)\), i.e.,

Thus

Note that if \(A_h\) is time-independent, then

The matrix \(L_3\) combines the Chebyshev series coefficients \(c_{i,l}\) to produce \(x_i\) for each \(i\in [{d}]_0\). To express the final state \(x(-1)\), \(L_3\) represents the linear combination \(x_i(-1)=\sum _{k=0}^nc_{i,k}T_k(-1)=\sum _{k=0}^n(-1)^kc_{i,k}\). Thus we take

Notice that \(L_3\) has zero rows for \(l\in [{n}]\).

When \(h=m\), \(L_4\) is used to construct \(x_i\) from the output of \(L_3\) for \(l=0\), and to repeat \(x_i\)n times for \(l\in [{n}]\). When \(m+1\le h\le m+p\), both \(L_4\) and \(L_5\) are used to repeat \(x_i\)\((n+1)p\) times for \(l\in [{n}]\). This repetition serves to increase the success probability of the final measurement. In particular, we take

and

In summary, the linear system is as follows. For each \(h \in [{m}]_0\), \((L_1+L_2(A_h))|X\rangle =|B_h\rangle \) solves the differential equations over \([\Gamma _h,\Gamma _{h+1}]\), and the coefficients \(c_{i,l}(\Gamma _{h+1})\) are combined by \(L_3\) into the \((h+1)\)st block as initial conditions. When \(h=m\), the final coefficients \(c_{i,l}(\Gamma _m)\) are combined by \(L_3\) and \(L_4\) into the final state with coefficients \(x_i\), and this solution is repeated \((p+1)(n+1)\) times by \(L_4\) and \(L_5\).

To explicitly illustrate the structure of this system, we present a simple example in “Appendix B”.

4 Solution Error

In this section, we bound how well the solution of the linear system defined above approximates the actual solution of the system of differential equations.

Lemma 3

For the linear system \(L|X\rangle =|B\rangle \) defined in (3.12), let x be the approximate ODE solution specified by the linear system and let \({\hat{x}}\) be the exact ODE solution. Then for n sufficiently large, the error in the solution at time T satisfies

Proof

First we carefully choose n satisfying

where

to ensure that

According to the quantum spectral method defined in Sect. 3, we solve

We denote the exact solution by \({\hat{x}}(\Gamma _{h+1})\), and we let \(x(\Gamma _{h+1})=\sum _{i=0}^d\sum _{l=0}^n(-1)^nc_{i,l}(\Gamma _{h+1})\), where \(c_{i,l}(\Gamma _{h+1})\) is defined in (3.13). Define

For \(h=0\), Lemma 2 implies

For \(h \in [{m}]\), the error in the approximate solution of \(\frac{\mathrm {d}{x}}{\mathrm {d}{t}}=A_h(t)x(t)+f_h(t)\) has two contributions: the error from the linear system and the error in the initial condition. We let \({\widetilde{x}}(\Gamma _{h+1})\) denote the solution of the linear system \(\bigl (L_1 + L_2(A_h)\bigr ) |{\widetilde{x}}(\Gamma _{h+1})\rangle = |B(f_h)\rangle \) under the initial condition \({\hat{x}}(\Gamma _h)\). Then

The first term can be bounded using Lemma 2, giving

The second term comes from the initial error \(\Delta _h\), which is transported through the linear system. Let

where \(E_{h+1}\) is the solution of the linear system with input \(\Delta _h\) and \({\hat{E}}_{h+1}\) is the exact solution of \(\frac{\mathrm {d}{x}}{\mathrm {d}{t}}=A_{h+1}(t)x(t)+f_{h+1}(t)\) with initial condition \(x(\Gamma _h)=\Delta _h\). Then by Lemma 2,

so

Thus, we have an inequality recurrence for bounding \(\Delta _h\):

Now we iterate h from 1 to m. Equation (4.4) implies

so

Therefore

which shows that the solution error decreases exponentially with n. In other words, the linear system approximates the solution with error \(\epsilon \) using \(n={{\,\mathrm{poly}\,}}(\log ({1}/{\epsilon }))\). \( \square \)

Note that for time-independent differential equations, we can directly estimate \(\Vert {\hat{x}}^{(n+1)}(t)\Vert \) using

Writing \(A_h=V_h\Lambda _hV_h^{-1}\) where \(\Lambda _h={{\,\mathrm{diag}\,}}(\lambda _0,\ldots ,\lambda _{d-1})\), we have \(e^{A_h}=V_he^{\Lambda _h}V_h^{-1}\). Thus the exact solution of time-independent equation with initial condition \({\hat{x}}(1)=\gamma \) is

Since \(\mathop {\mathrm {Re}}(\lambda _i) \le 0\) for all eigenvalues \(\lambda _i\) of \(A_h\) for \(i \in [{d}]_0\), we have \(\Vert e^{\Lambda _h}\Vert \le 1\). Therefore

Furthermore, since \(\max _{h,t}\Vert A_h(t)\Vert \le 1\), we have

Thus the solution error satisfies

Note that, although we represent the solution differently, this bound is similar to the corresponding bound in [10, Theorem 6].

5 Condition Number

We now analyze the condition number of the linear system.

Lemma 4

Consider an instance of the quantum ODE problem as defined in Problem 1. For all \(t\in [0,T]\), assume A(t) can be diagonalized as \(A(t)=V(t)\Lambda (t)V^{-1}(t)\) for some \(\Lambda (t)={{\,\mathrm{diag}\,}}(\lambda _0(t),\ldots ,\lambda _d(t))\), with \(\mathop {\mathrm {Re}}(\lambda _i(t))\le 0\) for all \(i\in [{d}]_0\). Let \(\kappa _V:=\max _{t\in [0,T]}\kappa _V(t)\) be an upper bound on the condition number of V(t). Then for \(m,p\in {\mathbb {Z}}^+\) and n sufficiently large, the condition number of L in the linear system (3.12) satisfies

Proof

We begin by bounding the norms of some operators that appear in the definition of L. First we consider the \(l_{\infty }\) norm of \(D_n\) since this is straightforward to calculate:

Thus we have the upper bound

Next we upper bound the spectral norm of the discrete cosine transform matrix \(P_n\):

Therefore

Thus we can upper bound the norm of \(L_1\) as

Next we consider the spectral norm of \(L_2(A_h)\) for any \(h \in [{m}]_0\). We have

Since the eigenvalues of each \(A_h(t_l)\) for \(l \in [{n+1}]_0\) are all eigenvalues of

we have

by (3.8). Therefore

By direct calculation, we have

Thus, for \(n\ge 5\), we find

Next we upper bound \(\Vert L^{-1}\Vert \). By definition,

We express \(|B\rangle \) as

where \(|b_{hl}\rangle := \sum _{i=0}^{d-1}\beta _{hil}|hil\rangle \) satisfies \(\Vert |b_{hl}\rangle \Vert ^2=\sum _{i=0}^{d-1}|\beta _{hil}|^2\le 1\). For any fixed \(h \in [{m+p+1}]_0\) and \(l \in [{n+1}]_0\), we first upper bound \(\Vert L^{-1}|b_{hl}\rangle \Vert \) and use this to upper bound the norm of \(L^{-1}\) applied to linear combinations of such vectors.

Recall that the linear system comes from (2.10), which is equivalent to

For fixed \(h \in [{m+p+1}]_0\) and \(r \in [{n+1}]_0\), define vectors \(x_{hr},x_{hr}' \in {\mathbb {C}}^d\) with

for \(i \in [{d}]_0\). We claim that \(x_{hr} = x_{hr}' = 0\) for any \(r \ne l\). Combining only the equations from (5.17) with \(r \ne l\) gives the system

Consider a corresponding system of differential equations

where \({{\hat{x}}}_{hr}(t) \in {\mathbb {C}}^d\) for all \(t \in [-1,1]\). The solution of this system with \(b=0\) and initial condition \({{\hat{x}}}_{hr}(1) = 0\) is clearly \({{\hat{x}}}_{hr}(t)=0\) for all \(t \in [-1,1]\). Then the nth-order truncated Chebyshev approximation of (5.20), which should satisfy the linear system (5.19) by (2.1) and (2.2), is exactly \(x_{hr}\). Using Lemma 3 and observing that \({\hat{x}}^{(n+1)}(t)=0\), we have

When \(t=t_l\), we let \(|B\rangle =|b_{hl}\rangle \) denote the first nonzero vector. Combining only the equations from (5.17) with \(r = l\) gives the system

Consider a corresponding system of differential equations

with \(\gamma =b_{h0},b=0\) for \(l=0\); or \(\gamma =0,b=b_{hl}\) for \(l\in [{n}]\).

Using the diagonalization \(A_h(t_l)=V(t_l)\Lambda _h(t_l)V^{-1}(t_l)\), we have \(e^A=V(t_l)e^{\Lambda _h(t_l)}V^{-1}(t_l)\). Thus the exact solution of the differential equations (5.20) with \(r=l\) and initial condition \({{\hat{x}}}_{hr}(1)=\gamma \) is

According to Eq. (4.4) in the proof of Lemma 3, we have

where

Now for \(h \in [{m+1}]_0\), we take \(x_{hl}\) to be the initial condition \(\gamma \) for the next subinterval to obtain \(x_{(h+1)l}\). Using (5.24) and (5.25), starting from \(\gamma =b_{h0},b=0\) for \(l=0\), we find

Since \(\Vert \Lambda _h(t_l)\Vert \le \Vert \Lambda \Vert \le 1\) and \(\Lambda _h(t_l)={{\,\mathrm{diag}\,}}(\lambda _0,\ldots ,\lambda _{d-1})\) with \(\mathop {\mathrm {Re}}(\lambda _i)\le 0\) for \(i \in [{d}]_0\), we have \(\Vert e^{2\Lambda _h(t_l)}\Vert \le 1\). Therefore

On the other hand, with \(\gamma =0, b=b_{hl}\) for \(l\in [{n}]\), we have

so

For \(h \in \{m,m+1,\ldots ,m+p\}\), according to the definition of \(L_4\) and \(L_5\), we similarly have

According to (5.24), \({\hat{x}}_{hl}(t)\) is a monotonic function of \(t \in [-1,1]\), which implies

Using the identity

we have

Consider the Chebyshev expansion of \({\hat{x}}_{hl}(t)\) as in (2.1):

By the orthogonality of Chebyshev polynomials (as specified in (A.7)), we have

Using (5.34), this gives

Now we compute \(\Vert |X\rangle \Vert \), summing the contributions from all \(c_{i,r}(\Gamma _h)\) and \(x_{mr}\), and notice that \(c_{i,r}=0\) and \(x_{mr}=0\) for all \(r\ne l\), giving

Finally, considering all \(h\in [{m+p+1}]_0\) and \(l\in [{n+1}]_0\), from (5.16) we have

so

and therefore

Finally, combining (5.14) and (5.41) gives

as claimed. \(\square \)

6 Success Probability

We now evaluate the success probability of our approach to the quantum ODE problem.

Lemma 5

Consider an instance of the quantum ODE problem as defined in Problem 1 with the exact solution \({\hat{x}}(t)\) for \(t\in [0,T]\), and its corresponding linear system (3.12) with \(m,p\in {\mathbb {Z}}^+\) and n sufficiently large. When applying the QLSA to this system, the probability of measuring a state proportional to \(|x(T)\rangle =\sum _{i=0}^{d-1}x_i|i\rangle \) is

where \(x_i\) is defined in (3.13), \(\tau \) is defined in (3.9), and

Proof

After solving the linear system (3.12) using the QLSA, we measure the first and third registers of \(|X\rangle \) (as defined in (3.13)). We decompose this state as

where

When the first register is observed in some \(h \in \{m,m+1,\ldots ,m+p\}\) (no matter what outcome is seen for the third register), we output the second register, which is then in a normalized state proportional to the final state:

with

Notice that

and

Considering the definition of q, the contribution from time interval h under the rescaling (3.2), and the identity (5.33), we have

where \({\hat{x}}_h({\overline{t}})\) is the solution of (4.5) with the rescaling in (3.4). By the orthogonality of Chebyshev polynomials (as specified in (A.7)),

For all \(h\in [{m}]_0\), we have

and therefore

Thus we see that the success probability of the measurement satisfies

as claimed. \(\square \)

7 State Preparation

We now describe a procedure for preparing the vector \(|B\rangle \) in the linear system (3.12) (defined in (3.14) and (3.15)) using the given ability to prepare the initial state of the system of differential equations. We also evaluate the complexity of this procedure.

Lemma 6

Consider state preparation oracles acting on a state space with basis vectors \(|h\rangle |i\rangle |l\rangle \) for \(h\in [{m}]_0,i\in [{d}]_0,l\in [{n}]_0\), where \(m,d,n \in {\mathbb {N}}\), encoding an initial condition \(\gamma \in {\mathbb {C}}^d\) and function \(f_{h}(\cos \frac{l\pi }{n}) \in {\mathbb {C}}^d\) as in (3.15). Specifically, for any \(h\in [{m}]_0\) and \(l\in [{n}]\), let \(O_x\) be a unitary oracle that maps \(|0\rangle |0\rangle |0\rangle \) to a state proportional to \(|0\rangle |\gamma \rangle |0\rangle \) and \(|h\rangle |\phi \rangle |l\rangle \) to \(|h\rangle |\phi \rangle |l\rangle \) for any \(|\phi \rangle \) orthogonal to \(|0\rangle \); let \(O_f(h,l)\) be a unitary that maps \(|h\rangle |0\rangle |l\rangle \) to a state proportional to \(|h\rangle |f_{h}(\cos \frac{l\pi }{n})\rangle |l\rangle \) and maps \(|0\rangle |\phi \rangle |0\rangle \) to \(|0\rangle |\phi \rangle |0\rangle \) for any \(|\phi \rangle \) orthogonal to \(|0\rangle \). Suppose \(\Vert \gamma \Vert \) and \(\Vert f_{h}(\cos \frac{l\pi }{n})\Vert \) are known. Then the normalized quantum state

can be prepared with gate and query complexity O(mn).

Proof

We normalize the components of the state using the coefficients

so that

First we perform a unitary transformation mapping

This can be done in time complexity O(mn) by standard techniques [32]. Then we perform \(O_x\) and \(O_f(h,l)\) for all \(h\in [{m}]_0, l\in [{n}]\), giving

using O(mn) queries. \(\square \)

8 Main Result

Having analyzed the solution error, condition number, success probability, and state preparation procedure for our approach, we are now ready to establish the main result.

Theorem 1

Consider an instance of the quantum ODE problem as defined in Problem 1. Assume A(t) can be diagonalized as \(A(t)=V(t)\Lambda (t)V^{-1}(t)\) where \(\Lambda (t)={{\,\mathrm{diag}\,}}(\lambda _1(t),\ldots ,\lambda _d(t))\) with \(\mathop {\mathrm {Re}}(\lambda _i(t))\le 0\) for each \(i \in [{d}]_0\) and \(t\in [0,T]\). Then there exists a quantum algorithm that produces a state \(x(T)/\Vert x(T)\Vert \)\(\epsilon \)-close to \({\hat{x}}(T)/\Vert {\hat{x}}(T)\Vert \) in \(l^2\) norm, succeeding with probability \(\Omega (1)\), with a flag indicating success, using

queries to oracles \(O_A(h,l)\) (a sparse matrix oracle for \(A_h(t_l)\) as defined in (3.3)) and \(O_x\) and \(O_f(h,l)\) (as defined in Lemma 6). Here \(\Vert A\Vert := \max _{t \in [0,T]}\Vert A(t)\Vert \); \(\kappa _V:=\max _t\kappa _V(t)\), where \(\kappa _V(t)\) is the condition number of V(t); and

The gate complexity is larger than the query complexity by a factor of \({{\,\mathrm{poly}\,}}(\log (\kappa _Vds\Vert A\Vert g'T/\epsilon ))\).

Proof

We first present the algorithm and then analyze its complexity.

Statement of the algorithm. First, we choose m to guarantee

Then, as in Sect. 3, we divide the interval [0, T] into small subintervals \([0,\Gamma _1],[\Gamma _1,\Gamma _2],\ldots ,[\Gamma _{m-1},T]\) with \(\Gamma _0=0, \Gamma _m=T\), and define

Each subinterval \([\Gamma _h,\Gamma _{h+1}]\) for \(h\in [{m-1}]\) is mapped onto \([-1,1]\) with a linear mapping \(K_h\) satisfying \(K_h(\Gamma _h)=1,K_h(\Gamma _{h+1})=-1\):

We choose

where

and

Since \(\max _{t\in [0,T]}\Vert {\hat{x}}^{(n+1)}(t)\Vert \le g'\), by Lemma 3, this choice guarantees

and

Now \(\Vert {\hat{x}}(T)-x(T)\Vert \le \delta \) implies

so we can choose such n to ensure that the normalized output state is \(\epsilon \)-close to \({\hat{x}}(T)/\Vert {\hat{x}}(T)\Vert \).

Following Sect. 3, we build the linear system \(L|X\rangle =|B\rangle \) (see (3.12)) that encodes the quantum spectral method. By Lemma 4, the condition number of this linear system is at most \((\pi m+p+1)(n+1)^{3.5}(2\kappa _V+e\Vert \gamma \Vert _{\infty })\). Then we use the QLSA from reference [15] to obtain a normalized state \(|X\rangle \) and measure the first and third register of \(|X\rangle \) in the standard basis. If the measurement outcome for the first register belongs to

we output the state of the second register, which is a normalized state \(| x(T)\rangle /\Vert | x(T)\rangle \Vert \) satisfying (8.11). By Lemma 5, the probability of this event happening is at least \(\frac{(p+1)(n+1)}{\pi mq^2+(p+1)(n+1)}\). To ensure \(m+p=O(\Vert A\Vert T)\), we can choose

so we can achieve success probability \(\Omega (1)\) with \(O(q/\sqrt{n})\) repetitions of the above procedure.

Analysis of the complexity The matrix L is an \((m+p+1)d(n+1)\times (m+p+1)d(n+1)\) matrix with O(ns) nonzero entries in any row or column. By Lemma 4 and our choice of parameters, the condition number of L is \(O\bigl (\kappa _V(m+p)n^{3.5}\bigr )\). Consequently, by Theorem 5 of [15], the QLSA produces the state \(|x(T)\rangle \) with

queries to the oracles \(O_A(h,l)\), \(O_x\), and \(O_f(h,l)\), and its gate complexity is larger by a factor of \({{\,\mathrm{poly}\,}}(\log (\kappa _Vmnds/\delta ))\). Using \(O(q/\sqrt{n})\) steps of amplitude amplification to achieve success probability \(\Omega (1)\), the overall query complexity of our algorithm is

and the gate complexity is larger by a factor of

as claimed. \(\square \)

In general, \(g'\) could be unbounded above as \(n\rightarrow \infty \). However, we could obtain a useful bound in such a case by solving the implicit Eqs. (8.9) and (8.10).

Note that for time-independent differential equations, we can replace \(g'\) by \(\Vert \gamma \Vert +2\tau \Vert f\Vert \) as shown in (4.20). In place of (8.7) and (8.8), we choose

and

By Lemma 3, this choice guarantees

and

Thus we have the following:

Corollary 1

For time-independent differential equations, under the same assumptions of Theorem 1, there exists a quantum algorithm using

queries to \(O_A(h,l)\), \(O_x\), and \(O_f(h,l)\). The gate complexity of this algorithm is larger than its query complexity by a factor of \({{\,\mathrm{poly}\,}}(\log (\kappa _Vds\gamma \Vert A\Vert \Vert f\Vert T/\epsilon ))\).

The complexity of our algorithm depends on the parameter q in defined in (8.2), which characterizes the decay of the final state relative to the initial state. As discussed in Section 8 of [10], it is unlikely that the dependence on q can be significantly improved, since renormalization of the state effectively implements postselection and an efficient procedure for performing this would have the unlikely consequence \(\mathrm {BQP} = \mathrm {PP}\).

We also require the real parts of the eigenvalues of A(t) to be non-positive for all \(t\in [0,T]\) so that the solution cannot grow exponentially. This requirement is essentially the same as in the time-independent case considered in [10] and improves upon the analogous condition in [5] (which requires an additional stability condition). Also as in [10], our algorithm can produce approximate solutions for non-diagonalizable A(t), although the dependence on \(\epsilon \) degrades to \({{\,\mathrm{poly}\,}}(1/\epsilon )\). For further discussion of these considerations, see Sections 1 and 8 of [10].

9 Boundary Value Problems

So far we have focused on initial value problems (IVPs). Boundary value problems (BVPs) are another widely studied class of differential equations that appear in many applications, but that can be harder to solve than IVPs.

Consider a sparse, linear, time-dependent system of differential equations as in Problem 1 but with a constraint on some linear combination of the initial and final states:

Problem 2

In the quantum BVP, we are given a system of equations

where \(x(t) \in {\mathbb {C}}^d\), \(A(t) \in {\mathbb {C}}^{d\times d}\) is s-sparse, and \(f(t) \in {\mathbb {C}}^d\) for all \(t\in [0,T]\), and a boundary condition \(\alpha x(0)+\beta x(T)=\gamma \) with \(\alpha , \beta , \gamma \in {\mathbb {C}}^d\). Suppose there exists a unique solution \({\hat{x}}\in C^{\infty }(0,T)\) of this boundary value problem. Given oracles that compute the locations and values of nonzero entries of A(t) for any t, and that prepare quantum states \(\alpha |x(0)\rangle +\beta |x(T)\rangle =|\gamma \rangle \) and \(|f(t)\rangle \) for any t, the goal is to output a quantum state \(|x(t^*)\rangle \) that is proportional to \(x(t^*)\) for some specified \(t^*\in [0,T]\).

As before, we can rescale [0, T] onto \([-1,1]\) by a linear mapping. However, since we have boundary conditions at \(t=0\) and \(t=T\), we cannot divide [0, T] into small subintervals. Instead, we directly map [0, T] onto \([-1,1]\) with a linear map K satisfying \(K(0)=1\) and \(K(T)=-1\):

Now the new differential equations are

If we define \(A_K({\overline{t}}):=-\frac{T}{2}A(t)\) and \(f_K({\overline{t}})=-\frac{T}{2}f(t)\), we have

for \({\overline{t}}\in [-1,1]\). Now the boundary condition takes the form

Since we only have one solution interval, we need to choose a larger order n of the Chebyshev series to reduce the solution error. In particular, we take

where \(\Omega \) and \(\omega \) are the same as Theorem 1.

As in Sect. 3, we approximate x(t) by a finite Chebyshev series with interpolating nodes \(\{t_l=\cos \frac{l\pi }{n} : l \in [{n}]\}\) and thereby obtain a linear system

with the boundary condition

Observe that the linear equations have the same form as in (3.10). Instead of (3.11), the term with \(l=0\) encodes the condition (9.8) expanded in a Chebyshev series, namely

for each \(i\in [{d}]_0\). Since \(T_k(t_0)=1\) and \(T_k(t_n)=(-1)^k\), this can be simplified as

If \(\alpha _i+(-1)^k\beta _i=0\), the element of \(|il\rangle \langle ik|\) of \(L_2(A_K)\) is zero; if \(\alpha _i+(-1)^k\beta _i\ne 0\), without loss of generality, the two sides of this equality can be divided by \(\alpha _i+(-1)^k\beta _i\) to guarantee that the terms with \(l=0\) can be encoded as in (3.11).

Now this system can be written in the form of equation (3.12) with \(m=1\). Here L, \(|X\rangle \), and \(|B\rangle \) are the same as in (3.16), (3.13), and (3.14), respectively, with \(m=1\), except for adjustments to \(L_3\) that we now describe.

The matrix \(L_3\) represents the linear combination \(x_i(t^*)=\sum _{k=0}^nc_{i,k}T_k(t^*)\). Thus we take

Since \(|T_k(t^*)|\le 1\), we have

and it follows that Lemma 3 also holds for boundary value problems. Similarly, Lemma 4 still holds with \(m=1\).

We are now ready to analyze the complexity of the quantum BVP algorithm. The matrix L defined above is a \((p+2)d(n+1)\times (p+2)d(n+1)\) matrix with O(ns) nonzero entries in any row or column, with condition number \(O(\kappa _Vpn^{3.5})\). By Lemma 5 with \(p=O(1)\), \(O(q/\sqrt{n})\) repetitions suffice to ensure success probability \(\Omega (1)\). By (9.6), n is linear in \(\Vert A\Vert T\) and poly-logarithmic in \(\Omega \) and \(\omega \). Therefore, we have the following:

Theorem 2

Consider an instance of the quantum BVP as defined in Problem 2. Assume A(t) can be diagonalized as \(A(t)=V(t)\Lambda (t)V^{-1}(t)\) where \(\Lambda (t)={{\,\mathrm{diag}\,}}(\lambda _1(t),\ldots ,\lambda _d(t))\) with \(\mathop {\mathrm {Re}}(\lambda _i(t))\le 0\) for each \(i \in [{d}]_0\) and \(t\in [0,T]\). Then there exists a quantum algorithm that produces a state \(x(t^*)/\Vert x(t^*)\Vert \)\(\epsilon \)-close to \({\hat{x}}(t^*)/\Vert {\hat{x}}(t^*)\Vert \) in \(l^2\) norm, succeeding with probability \(\Omega (1)\), with a flag indicating success, using

queries to \(O_A(h,l)\), \(O_x\), and \(O_f(h,l)\). Here \(\Vert A\Vert \), \(\kappa _V\), g, \(g'\) and q are defined as in Theorem 1. The gate complexity is larger than the query complexity by a factor of \({{\,\mathrm{poly}\,}}(\log (\kappa _Vds\Vert A\Vert g'T/\epsilon ))\).

As for initial value problems, we can simplify this result in the time-independent case.

Corollary 2

For a time-independent boundary value problem, under the same assumptions of Theorem 2, there exists a quantum algorithm using

queries to \(O_A(h,l)\), \(O_x\), and \(O_f(h,l)\). The gate complexity of this algorithm is larger than its query complexity by a factor of \({{\,\mathrm{poly}\,}}(\log (\kappa _Vds\gamma \Vert A\Vert \Vert f\Vert T/\epsilon ))\).

10 Discussion

In this paper, we presented a quantum algorithm to solve linear, time-dependent ordinary differential equations. Specifically, we showed how to employ a global approximation based on the spectral method as an alternative to the more straightforward finite difference method. Our algorithm handles time-independent differential equations with almost the same complexity as [10], but unlike that approach, can also handle time-dependent differential equations. Compared to [5], our algorithm improves the complexity of solving time-dependent linear differential equations from \({{\,\mathrm{poly}\,}}(1/\epsilon )\) to \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\).

This work raises several natural open problems. First, our algorithm must assume that the solution is smooth. If the solution is in \(C^r\), the solution error is \(O(\frac{1}{n^{r-2}})\) by Lemma 1. Can we improve the complexity to \({{\,\mathrm{poly}\,}}(\log (1/\epsilon ))\) under such weaker smoothness assumptions?

Second, the complexity of our algorithm is logarithmic in the parameter \(g'\) defined in (8.2), which characterizes the amount of fluctuation in the solution. However, the query complexity of Hamiltonian simulation is independent of that parameter [7, 30]. Can we develop quantum algorithms for general differential equations with query complexity independent of \(g'\)?

Third, our algorithm has nearly optimal dependence on T, scaling as \(O(T {{\,\mathrm{poly}\,}}(\log T))\). According to the no-fast-forwarding theorem [6], the complexity must be at least linear in T, and indeed linear complexity is achievable for the case of Hamiltonian simulation [14]. Can we handle general differential equations with complexity linear in T? Furthermore, can we achieve an optimal tradeoff between T and \(\epsilon \) as shown for Hamiltonian simulation in [28]?

Finally, can the techniques developed here be applied to give improved quantum algorithms for linear partial differential equations, or even for nonlinear ODEs or PDEs?

References

Ambainis, A.: Variable time amplitude amplification and quantum algorithms for linear algebra problems. In: Proceedings of the 29th Symposium on Theoretical Aspects of Computer Science, pp. 636–647 (2012). arXiv:1010.4458

Apostol, T.M.: Calculus, vol. II. Wiley, Hoboken (1969)

Arrazola, J.M., Kalajdzievski, T., Weedbrook, C., Lloyd, S.: Quantum algorithm for non-homogeneous linear partial differential equations. arXiv:1809.02622

Babolian, E., Hosseini, M.M.: A modified spectral method for numerical solution of ordinary differential equations with non-analytic solution. Appl. Math. Comput. 132(2), 341–351 (2002)

Berry, D.W.: High-order quantum algorithm for solving linear differential equations. J. Phys. A 47(10), 105301 (2014). arXiv:1010.2745

Berry, D.W., Ahokas, G., Cleve, R., Sanders, B.C.: Efficient quantum algorithms for simulating sparse Hamiltonians. Commun. Math. Phys. 270(2), 359–371 (2007). arXiv:quant-ph/0508139

Berry, D.W., Childs, A.M., Cleve, R., Kothari, R., Somma, R.D.: Exponential improvement in precision for simulating sparse Hamiltonians. In: Proceedings of the 46th Annual ACM Symposium on Theory of Computing, pp. 283–292 (2014). arXiv:1312.1414

Berry, D.W., Childs, A.M., Cleve, R., Kothari, R., Somma, R.D.: Simulating Hamiltonian dynamics with a truncated Taylor series. Phys. Rev. Lett. 114(9), 090502 (2015). arXiv:1412.4687

Berry, D.W., Childs, A.M., Kothari, R.: Hamiltonian simulation with nearly optimal dependence on all parameters. In: Proceedings of the 56th Annual IEEE Symposium on Foundations of Computer Science, pp. 792–809 (2015). arXiv:1501.01715

Berry, D.W., Childs, A.M., Ostrander, A., Wang, G.: Quantum algorithm for linear differential equations with exponentially improved dependence on precision. Commun. Math. Phys. 356(3), 1057–1081 (2017). arXiv:1701.03684

Berry, D.W., Novo, L.: Corrected quantum walk for optimal Hamiltonian simulation. Quantum Inf. Comput. 16(15–16), 1295 (2016). arXiv:1606.03443

Bird, R.B.: Transport phenomena. Appl. Mech. Rev. 55(1), R1–R4 (2002)

Brassard, G., Hoyer, P., Mosca, M., Tapp, A.: Quantum amplitude amplification and estimation. Contemp. Math. 305, 53–74 (2002). arXiv:quant-ph/0005055

Childs, A.M.: On the relationship between continuous- and discrete-time quantum walk. Commun. Math. Phys. 294(2), 581–603 (2010). arXiv:0810.0312

Childs, A.M., Kothari, R., Somma, R.D.: Quantum linear systems algorithm with exponentially improved dependence on precision. SIAM J. Comput. 46, 1920–1950 (2017). arXiv:1511.02306

Clader, D.B., Jacobs, B.C., Sprouse, C.R.: Preconditioned quantum linear system algorithm. Phys. Rev. Lett. 110.25, 250504 (2013). arXiv:1301.2340

Costa, P., Jordan, S., Ostrander, A.: Quantum algorithm for simulating the wave equation. arXiv:1711.05394

Gheorghiu, C.-I.: Spectral Methods for Differential Problems. Casa Cartii de Stiinta Publishing House, Cluj-Napoca (2007)

Harris, S.: An Introduction to the Theory of the Boltzmann Equation. Courier Corporation, Dover (2004)

Harrow, A.W., Hassidim, A., Lloyd, S.: Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 103(15), 150502 (2009). arXiv:0811.3171

Hosseini, M.M.: A modified pseudospectral method for numerical solution of ordinary differential equations systems. Appl. Math. Comput. 176(2), 470–475 (2006)

Jackson, J.D.: Classical Electrodynamics. Wiley, Hoboken (2012)

Kahaner, D., Moler, C., Nash, S.: Numerical Methods and Software. Prentice Hall, Upper Saddle River (1989)

Kim, S., Karrila, S.J.: Microhydrodynamics: Principles and Selected Applications. Courier Corporation, Chelmsford (2013)

Kress, R.: Numerical Analysis. Springer, Berlin (1998)

Leyton, S.K., Osborne, T.J.: A quantum algorithm to solve nonlinear differential equations. arXiv:0812.4423

Low, G.H., Chuang, I.L.: Hamiltonian simulation by qubitization. arXiv:1610.06546

Low, G.H., Chuang, I.L.: Optimal Hamiltonian simulation by quantum signal processing. Phys. Rev. Lett. 118(9), 010501 (2017). arXiv:1606.02685

Novo, L., Berry, D.W.: Improved Hamiltonian simulation via a truncated Taylor series and corrections. Quantum Inf. Comput. 17, 0623 (2017). arXiv:1611.10033

Poulin, D., Qarry, A., Somma, R.D., Verstraete, F.: Quantum simulation of time-dependent Hamiltonians and the convenient illusion of Hilbert space. Phys. Rev. Lett. 106, 170501 (2011). arXiv:1102.1360

Shen, J., Tang, T., Wang, L.-L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

Shende, V.V., Bullock, S.S., Markov, I.L.: Synthesis of quantum-logic circuits. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 25(6), 1000–1010 (2006). arXiv:quant-ph/0406176

Thambynayagam, R.K.M.: The Diffusion Handbook: Applied Solutions for Engineers. McGraw-Hill Professional, Pennsylvania (2011)

Thidé, B.: Electromagnetic Field Theory. Upsilon Books, Uppsala (2004)

Acknowledgements

We thank Stephen Jordan and Aaron Ostrander for valuable discussions of quantum algorithms for time-dependent linear differential equations. This work was supported in part by the Army Research Office (MURI award W911NF-16-1-0349), the Canadian Institute for Advanced Research, the National Science Foundation (Grants 1526380 and 1813814), and the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, Quantum Algorithms Teams and Quantum Testbed Pathfinder programs (Grant No. DE-SC0019040).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by M. M. Wolf

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Chebyshev Polynomials

This appendix defines the Chebyshev polynomials and presents some of their properties that are useful for our analysis.

For any \(k \in {\mathbb {N}}\), the Chebyshev polynomial of the first kind can be defined as the function

It can be shown that this is a polynomial of degree k in x. For example, we have

Using the trigonometric addition formula \(\cos (k+1)\theta +\cos (k-1)\theta =2\cos \theta \cos k\theta \), we have the recurrence

(which also provides an alternative definition of the Chebyshev polynomials, starting from the initial conditions \(T_0(x)=1\) and \(T_1(x)=x\)). We also have the bounds

Chebyshev polynomials are orthogonal polynomials on \([-1,1]\) with the weight function \(w(x):=(1-x^2)^{-1/2}\). More concretely, defining an inner product on \(L_w^2(-1,1)\) by

we have

where

It is well known from the approximation theorem of Weierstrass that \(\{T_k(x) : k \in {\mathbb {N}}\}\) is complete on the space \(L_w^2(-1,1)\). In other words, we have the following:

Lemma 7

Any function \(u\in L_w^2(-1,1)\) can be expanded by a unique Chebyshev series as

where the coefficients are

For any \(N \in {\mathbb {N}}\), we introduce the orthogonal projection \(P_N :L_w^2(-1,1)\rightarrow \mathbb {P}_N\) (where \(\mathbb {P}_N\) denotes the set of polynomials of degree at most N) by

By the completeness of the Chebyshev polynomials, we have

and

Finally, we compute the Chebyshev series of \(u'(x)\) in terms of the Chebyshev series of u(x). Since \(T_k(x)=\cos k\theta \) where \(\theta =\arccos x\), we have

Since

we obtain

and

Since \(P_N u(x) \in \mathbb {P}_N\), the derivative of this projection should be in \(\mathbb {P}_{N-1}\). Indeed, we have

Comparing the coefficients of both sides, we find

where \(\sigma _k\) is defined in (A.8).

Since \({\hat{c}}'_k=0\) for \(k\ge N\), we can calculate \({\hat{c}}'_{N-1}\) from \({\hat{c}}_N\) and then successively calculate \({\hat{c}}'_{N-2},\ldots ,{\hat{c}}'_1,{\hat{c}}'_0\). This recurrence gives

Since \({\hat{c}}'_k\) only depends on \({\hat{c}}_j\) for \(j>k\), the transformation matrix \(D_N\) between the values \({\hat{c}}'_k\) and \({\hat{c}}_k\) for \(k \in [{N+1}]_0\) is an upper triangular matrix with all zero diagonal elements, namely

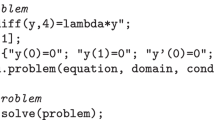

B An Example of the Quantum Spectral Method

Section 3 defines a linear system that implements the quantum spectral method for solving a system of d time-dependent differential equations. Here we present a simple example of this system for the case \(d=1\), namely

where \(x(t),A(t),f(t)\in {\mathbb {C}}\), \(t\in [0,T]\), and we have the initial condition

In particular, we choose \(m=3\), \(n=2\), and \(p=1\) in the specification of the linear system. We divide [0, T] into \(m=3\) intervals \([0,\Gamma _1],[\Gamma _1,\Gamma _2],[\Gamma _2,T]\) with \(\Gamma _0=0, \Gamma _m=T\), and map each one onto \([-1,1]\) with the linear mapping \(K_h\) satisfying \(K_h(\Gamma _h)=1\) and \(K_h(\Gamma _{h+1})=-1\). Then we take the finite Chebyshev series of x(t) with \(n=2\) into the differential equation with interpolating nodes \(\{t_l=\cos \frac{l\pi }{n} : l \in [{2}]\} = \{0,-1\}\) to obtain a linear system. Finally, we repeat the final state \(p=1\) time to increase the success probability.

With these choices, the linear system has the form

with

The vector \(|X\rangle \) has the form

where \(c_l(\Gamma _{h+1})\) are the Chebyshev series coefficients of \(x(\Gamma _{h+1})\) and x is the final state \(x(\Gamma _m)=x(-1)\).

Finally, the vector \(|B\rangle \) has the form

where \(\gamma \) comes from the initial condition and \(f_{h}(\cos \frac{l\pi }{n})\) is the value of \(f_h\) at the interpolation point \(t_l=\cos \frac{l\pi }{n} \in \{0,-1\}\).

Rights and permissions

About this article

Cite this article

Childs, A.M., Liu, JP. Quantum Spectral Methods for Differential Equations. Commun. Math. Phys. 375, 1427–1457 (2020). https://doi.org/10.1007/s00220-020-03699-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00220-020-03699-z