Abstract

The partition function of the directed polymer model on \({\mathbb {Z}}^{2+1}\) undergoes a phase transition in a suitable continuum and weak disorder limit. In this paper, we focus on a window around the critical point. Exploiting local renewal theorems, we compute the limiting third moment of the space-averaged partition function, showing that it is uniformly bounded. This implies that the rescaled partition functions, viewed as a generalized random field on \({\mathbb {R}}^{2}\), have non-trivial subsequential limits, and each such limit has the same explicit covariance structure. We obtain analogous results for the stochastic heat equation on \({\mathbb {R}}^2\), extending previous work by Bertini and Cancrini (J Phys A Math Gen 31:615, 1998).



1 Introduction and Results

We set \({\mathbb {N}}:= \{1,2,3,\ldots \}\) and \({\mathbb {N}}_0 := {\mathbb {N}}\cup \{0\}\). We write \(a_n \sim b_n\) to mean \(\lim _{n\rightarrow \infty } a_n / b_n = 1\). We denote by \(C_b({\mathbb {R}}^d)\) (resp. \(C_c({\mathbb {R}}^d)\)) the space of continuous and bounded (resp. compactly supported) real functions defined on \({\mathbb {R}}^d\), with norm \(|\phi |_\infty := \sup _{x\in {\mathbb {R}}^d} |\phi (x)|\).

1.1 Directed polymer in random environment

One of the simplest, yet most interesting, models of disordered systems is the directed polymer model in random environment on \({\mathbb {Z}}^{d+1}\), which has been the subject of the recent monograph by Comets [Com17].

Let \(S=(S_n)_{n\in {\mathbb {N}}_0}\) be the simple symmetric random walk on \({\mathbb {Z}}^d\). The random environment (or disorder) is a collection \(\omega =(\omega _{n,x})_{(n,x)\in {\mathbb {N}}\times {\mathbb {Z}}^d}\) of i.i.d. random variables. We use \(\mathrm P\) and \({{\,\mathrm{\mathrm E}\,}}\), resp. \({{\mathbb {P}}}\) and \({{\mathbb {E}}}\), to denote probability and expectation for S, resp. for \(\omega \). We assume that

Given \(\omega \), polymer length \(N\in {\mathbb {N}}\), and inverse temperature (or disorder strength) \(\beta >0\), the polymer measure \(\mathrm P^\beta _{N}\) is then defined via a Gibbs change of measure for S:

where \(Z_N^{\beta }\) is the normalization constant, called partition function:

(We stop the sum at \(N-1\) instead of N, which is immaterial, for later notational convenience.) Note that \(Z_N^{\beta }\) is a random variable, as a function of \(\omega \).

We use \(\mathrm P_{z}\) and \({{\,\mathrm{\mathrm E}\,}}_{z}\) to denote probability and expectation for the random walk starting at \(S_0=z\in {\mathbb {Z}}^d\). We denote by \(Z_N^{\beta }(z)\) the corresponding partition function:

We investigate the behavior as \(N\rightarrow \infty \) of the diffusively rescaled random field

for suitable \(\beta = \beta _N\), where we agree that \(Z_N^{\beta }(z) := Z_{\lfloor N \rfloor }^{\beta } (\lfloor z \rfloor )\) for non-integer N, z.

In dimension \(d=1\), Alberts, Khanin and Quastel [AKQ14] showed that for \(\beta _N={\hat{\beta }}N^{-1/4}\), the random field (1.5) converges in distribution to the Wiener chaos solution u(t, x) of the one-dimensional stochastic heat equation (SHE)

where \(\dot{W}\) is space-time white noise on \({\mathbb {R}}\times {\mathbb {R}}\). The existence of such an intermediate disorder regime is a general phenomenon among models that are so-called disorder relevant, see [CSZ17a], and the directed polymer in dimension \(d=1\) is one such example.

A natural question is whether an intermediate disorder regime also exists for the directed polymer in dimension \(d=2\). We gave an affirmative answer in [CSZ17b], although the problem turns out to be much more subtle than in \(d=1\). The standard Wiener chaos approach fails, because the model in \(d=2\) is so-called marginally relevant, or critical. We will further elaborate on this later. Let us recall the results from [CSZ17b], which provide the starting point of this paper.

Henceforth we focus on \(d=2\), so \(S = (S_n)_{n\in {\mathbb {N}}_0}\) is the simple random walk on \({\mathbb {Z}}^2\). Let

Due to periodicity, if we take \(S_0\in {\mathbb {Z}}^2_{\mathrm{even}}\), then \((n, S_n)\in {\mathbb {Z}}^{3}_{\mathrm{even}}\) for all \(n\in {\mathbb {N}}\). The transition probability kernel of S will be denoted by

by the local central limit theorem, where \(g_u(\cdot )\) is the standard Gaussian density on \({\mathbb {R}}^2\):

For notational convenience, we will drop the conditioning in (1.8) when the random walk starts from zero. The multiplicative factor 2 in (1.8) is due to periodicity, while the Gaussian density \(g_{n/2}(x)\) is due to the fact that at time n, the walk has covariance matrix \(\frac{n}{2} I\).
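The local limit theorem (1.8), including the factor 2 coming from periodicity and the covariance \(\frac{n}{2} I\), can be checked exactly for moderate n. The Python sketch below is only an illustration: it evaluates \(q_n(x)\) exactly through the classical rotation trick (the coordinates \(X+Y\) and \(X-Y\) of the planar simple random walk are independent one-dimensional simple random walks) and compares it with \(2\, g_{n/2}(x)\) on the even sublattice. The helper names `srw1d`, `q` and `lclt` are illustrative choices, not notation from the paper.

```python
import math

def srw1d(n, k):
    """P(X_n = k) for simple symmetric random walk on Z started at 0."""
    if (n + k) % 2 or abs(k) > n:
        return 0.0
    return math.comb(n, (n + k) // 2) / 2 ** n

def q(n, x):
    """Exact q_n(x) = P(S_n = x | S_0 = 0) for simple random walk on Z^2.

    Rotation trick: X + Y and X - Y are independent 1d simple random walks.
    """
    x1, x2 = x
    return srw1d(n, x1 + x2) * srw1d(n, x1 - x2)

def lclt(n, x):
    """Local CLT approximation 2 * g_{n/2}(x), meaningful on the even sublattice."""
    u = n / 2  # each coordinate of S_n has variance n/2
    return 2 * math.exp(-(x[0] ** 2 + x[1] ** 2) / (2 * u)) / (2 * math.pi * u)

n = 1000
for x in [(0, 0), (10, 6), (20, -14)]:
    print(x, q(n, x), lclt(n, x), q(n, x) / lclt(n, x))
print("odd parity:", q(n, (1, 0)))  # (n, x) is not in Z^3_even, so q_n(x) = 0
```

The ratio is close to one for \(|x| = O(\sqrt{n})\) on the even sublattice, while \(q_n\) vanishes identically off it, which is exactly why the factor 2 appears in (1.8).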

The overlap (expected number of encounters) of two independent simple symmetric random walks S and \(S'\) on \({\mathbb {Z}}^2\) is defined by

where the asymptotic behavior follows from (1.8). It was shown in [CSZ17b] that the correct choice of the disorder strength is \(\beta =\beta _N= {\hat{\beta }}/\sqrt{R_N}\). More precisely, denoting by \(W_1\) a standard normal, we have the following convergence in distribution:

This establishes a weak to strong disorder phase transition in \({\hat{\beta }}\) (with critical point \({\hat{\beta }}_c=1\)), similar to what was known for the directed polymer model in \({\mathbb {Z}}^{d+1}\) with \(d\ge 3\) [Com17]. It was also proved in [CSZ17b, Theorem 2.13] that for \({\hat{\beta }}< 1\), after centering and rescaling, the random field of partition functions (1.5) converges to the solution of the SHE with additive space-time white noise, known as Edwards–Wilkinson fluctuations. Similar results have been recently obtained in [GRZ17] for the SHE with multiplicative noise.

The behavior at the critical point \({\hat{\beta }}= {\hat{\beta }}_c\), i.e. \(\beta _N = 1 / \sqrt{R_N}\), is quite subtle. For each \(x\in {\mathbb {R}}^2\) and \(t > 0\), the partition function \(Z^{\beta _N}_{Nt}(x\sqrt{N})\) converges to zero in distribution as \(N\rightarrow \infty \), by (1.11), while its expectation is identically one, see (1.4), and its second moment diverges. This suggests that the random field \(x \mapsto Z^{\beta _N}_{Nt}(x\sqrt{N})\) becomes rough as \(N\rightarrow \infty \), so we should look at it as a random distribution on \({\mathbb {R}}^2\) (actually a random measure, see below). We thus average the field in space and define

The first moment of \(Z^{\beta _N}_{Nt} (\phi )\) is easily computed by Riemann sum approximation:

Our main result is the sharp asymptotic evaluation of the second and third moments. These will yield important information on the convergence of the generalized random field (1.12).

Let us first specify our choice of \(\beta = \beta _N\). Recalling that \(\lambda (\cdot )\) is the log-moment generating function of the disorder \(\omega \), see (1.1), we fix \(\beta _N\) such that

Since \(\lambda (t) \sim \frac{1}{2} t^2\) as \(t \rightarrow 0\), we have \(\beta _N \sim 1 / \sqrt{R_N}\), so we are indeed exploring a window around the critical point \({\hat{\beta }}_c=1\). Let us recall the Euler–Mascheroni constant:

Remark 1.1

The asymptotic behavior in (1.10) can be refined as follows:

see [CSZ18, Proposition 3.2]. This leads to an equivalent reformulation of (1.14):

It is possible to express this condition in terms of \(\beta _N\) (see [CSZ18, Appendix A.4]):

where \(\kappa _3,\kappa _4\) are the disorder cumulants, i.e. \(\lambda (t) = \frac{1}{2} t^2 + \frac{\kappa _3}{3!} t^3 + \frac{\kappa _4}{4!} t^4 + O(t^5)\) as \(t \rightarrow 0\).
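As a numerical illustration of (1.10) and of its refinement in Remark 1.1, the following Python sketch computes the overlap exactly. It assumes that the sum in (1.10) runs over \(n = 1, \ldots, N\), and it uses \(\sum_x q_n(x)^2 = q_{2n}(0) = \big(\binom{2n}{n} 4^{-n}\big)^2\) for the planar simple random walk. The quantity \(\pi R_N - \log N\) is compared with the constant \(\gamma + \log 16 - \pi \approx 0.208\); this constant is obtained here from the generating function \(\sum_{n \ge 0} \big(\binom{2n}{n}4^{-n}\big)^2 x^n = \frac{2}{\pi} K(\sqrt{x})\), with K the complete elliptic integral of the first kind, and is an assumption of this sketch rather than a quotation of the display in [CSZ18, Proposition 3.2].

```python
import math

EULER_GAMMA = 0.5772156649015329
ALPHA = EULER_GAMMA + math.log(16) - math.pi  # assumed limiting constant, about 0.2082

def overlap_R(N):
    """R_N = sum_{n=1}^N q_{2n}(0) for simple random walk on Z^2.

    Uses q_{2n}(0) = (binom(2n, n) / 4^n)^2, with binom(2n, n) / 4^n
    updated as a running product of (2k - 1) / (2k) to avoid huge integers.
    """
    p, total = 1.0, 0.0
    for n in range(1, N + 1):
        p *= (2 * n - 1) / (2 * n)
        total += p * p
    return total

for N in (10**3, 10**4, 10**5):
    R = overlap_R(N)
    print(N, round(R, 6), round(math.pi * R - math.log(N), 6))
print("alpha =", round(ALPHA, 6))
```

The printed differences \(\pi R_N - \log N\) stabilize quickly, which is consistent with \(R_N = \frac{1}{\pi}\log N + O(1)\) and makes visible why the \(O(1)\) correction matters inside the critical window (1.14).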

We define the following special function:

We now state our first result, where we compute the second moment of \(Z^{\beta _N}_{Nt} (\phi )\).

Theorem 1.2

(Second moment). Let \(\phi \in C_c({\mathbb {R}}^2)\), \(t > 0\), \(\vartheta \in {\mathbb {R}}\). Let \(\beta _N\) satisfy (1.14). Then

where the covariance kernel \(K_{t, \vartheta }(\cdot )\) is given by

The same covariance kernel \(K_{t,\vartheta }\) was derived by different methods by Bertini and Cancrini [BC98] for the 2d Stochastic Heat Equation, see Sect. 1.2. It is not difficult to see that

with \(C_t \in (0,\infty )\), and hence the integral in (1.19) is finite.

Remark 1.3

(Scaling covariance). It is easily checked from (1.20) that for any \(t>0\),

This is also clear because we can write \(Z^{\beta _N}_{Nt} (\phi ) = Z^{\beta _N}_{M} (\phi _t)\) with \(M := Nt\) and \(\phi _t(x) := t \, \phi (\sqrt{t} x)\), see (1.12), and note that \(\beta _N\) can be expressed as \(\beta _M\), provided \(\vartheta \) is replaced by \(\vartheta _t = \vartheta + \log t\) (just set N equal to Nt in (1.14), and recall (1.16)).
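The Riemann sum limit (1.13) and the exact rescaling identity of Remark 1.3 can be illustrated with a small Python sketch. It assumes that the average in (1.12) is the lattice sum \(\frac{1}{N}\sum_{x\in{\mathbb {Z}}^2} \phi(x/\sqrt{N})\, Z^{\beta_N}_{Nt}(x)\), the normalization consistent with \(\phi_t\) above; since each \(Z^{\beta_N}_{Nt}(x)\) has mean one, the expectation reduces to \(\frac{1}{N}\sum_x \phi(x/\sqrt{N})\). The cone test function and the helper names are illustrative choices.

```python
import math

def cone(x):
    """Test function in C_c(R^2): phi(x) = max(0, 1 - |x|), whose integral is pi/3."""
    return max(0.0, 1.0 - math.hypot(x[0], x[1]))

def lattice_average(phi, N, radius=1.0):
    """(1/N) * sum_{x in Z^2} phi(x / sqrt(N)), for phi supported in the disc of given radius."""
    s = math.sqrt(N)
    r = math.ceil(radius * s) + 1  # covers all lattice points with |x / sqrt(N)| <= radius
    total = sum(phi((a / s, b / s)) for a in range(-r, r + 1) for b in range(-r, r + 1))
    return total / N

def rescaled(phi, t):
    """phi_t(x) = t * phi(sqrt(t) * x), as in Remark 1.3."""
    rt = math.sqrt(t)
    return lambda x: t * phi((rt * x[0], rt * x[1]))

N, t = 10**4, 4
print(lattice_average(cone, N), math.pi / 3)  # Riemann sum vs integral
# Averaging phi on scale N equals averaging phi_t on scale M = N * t.
print(lattice_average(cone, N), lattice_average(rescaled(cone, t), N * t))
```

The second line prints two equal numbers: the identity \(\frac{1}{N}\sum_x \phi(x/\sqrt N) = \frac{1}{M}\sum_x \phi_t(x/\sqrt M)\) with \(M = Nt\) is pure algebra, so the only nontrivial part of Remark 1.3 is the shift \(\vartheta \mapsto \vartheta + \log t\).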

The starting point of the proof of Theorem 1.2 is a polynomial chaos expansion of the partition function. The variance computation can then be cast in a renewal theory framework, which is the cornerstone of our approach (see Sect. 1.3 for an outline). This allows us to capture the much more challenging third moment of the field. Let us extend the function \(G_\vartheta (w)\) in (1.18) with a spatial component, recalling (1.9):

We can now state the main result of this paper.

Theorem 1.4

(Third moment). Let \(\phi \in C_c({\mathbb {R}}^2)\), \(t > 0\), \(\vartheta \in {\mathbb {R}}\). Let \(\beta _N\) satisfy (1.14). Then

where the kernel \(M_{t, \vartheta }(\cdot )\) is given by

with \({{\mathcal {I}}}^{(m)}_{t, \vartheta }(\cdot )\) defined as follows:

The expression (1.26) reflects a key combinatorial structure which emerges from our renewal framework. Establishing the convergence of the series in (1.25) is highly non-trivial, which shows how delicate things become in the critical window.

We remark that relation (1.24) holds also for the mixed centered third moment with different test functions \(\phi ^{(1)}, \phi ^{(2)}, \phi ^{(3)} \in C_c({\mathbb {R}}^2)\), with the same kernel \(M_{t,\vartheta }(z, z', z'')\). Note that this kernel is invariant under any permutation of its variables, because \({{\mathcal {I}}}_{t,\vartheta }^{(m)}(z, z', z'')\) is symmetric in z and \(z'\) (but not in \(z''\), hence the need for symmetrization in (1.25)).

Let us finally come back to the convergence of the random field \(Z_{Nt}^{\beta _N}(x \sqrt{N})\) of diffusively rescaled partition functions. By averaging with respect to a test function, as in (1.12), we regard this field as a random measure on \({\mathbb {R}}^2\). More explicitly, if we define

we can write \(Z_{Nt}^{\beta _N}(\phi ) = \int _{{\mathbb {R}}^2} \phi (x) \, {\mathscr {Z}}^{\beta _N}_{Nt}(\mathrm {d}x)\), see (1.12). Note that \(({\mathscr {Z}}^{\beta _N}_{Nt})_{N\in {\mathbb {N}}}\) is a sequence of random variables taking values in \({{\mathcal {M}}}({\mathbb {R}}^2)\), the Polish space of locally finite measures on \({\mathbb {R}}^2\) with the vague topology (i.e. \(\nu _n \rightarrow \nu \) in \({{\mathcal {M}}}({\mathbb {R}}^2)\) if and only if \(\int \phi \, \mathrm {d}\nu _n \rightarrow \int \phi \, \mathrm {d}\nu \) for any \(\phi \in C_c({\mathbb {R}}^2)\)). We can make the following remarks.

The convergence of the first moment (1.13) implies tightness of \(({\mathscr {Z}}^{\beta _N}_{Nt})_{N\in {\mathbb {N}}}\), see [K97, Lemma 14.15]. This yields the existence of weak subsequential limits:

$$\begin{aligned} {\mathscr {Z}}^{\beta _N}_{Nt}(\mathrm {d}x) \xrightarrow []{\ d \ } \varvec{{\mathcal {Z}}}(\mathrm {d}x) \qquad \text {as }N\rightarrow \infty \text { along a subsequence}, \end{aligned}$$

where the limit \(\varvec{{\mathcal {Z}}}(\mathrm {d}x) = \varvec{{\mathcal {Z}}}_{t,\vartheta }(\mathrm {d}x)\) can in principle depend on the subsequence.

The convergence of the second moment (1.19) implies uniform integrability of \(Z_{Nt}^{\beta _N}(\phi )\). It follows that any subsequential limit \(\varvec{{\mathcal {Z}}}(\mathrm {d}x)\) has mean measure given by Lebesgue measure: \({{\mathbb {E}}}\big [ \int _{{\mathbb {R}}^2} \phi (x) \, \varvec{{\mathcal {Z}}}(\mathrm {d}x) \big ] = \int \phi (x) \, \mathrm {d}x\). Moreover, by (1.19) and Fatou’s Lemma,

$$ {{\,\mathrm{{\mathbb {V}}ar}\,}}\bigg [ \int _{{\mathbb {R}}^2} \phi (x) \, \varvec{{\mathcal {Z}}}(\mathrm {d}x) \bigg ] \,\le \, \int _{{\mathbb {R}}^2 \times {\mathbb {R}}^2} \phi (z) \, \phi (z') \, K_{t, \vartheta }(z-z') \, \mathrm {d}z \, \mathrm {d}z' \, < \, \infty . \tag{1.28} $$

However, this does not rule out that the variance in (1.28) might actually vanish, in which case the limit \(\varvec{{\mathcal {Z}}}(\mathrm {d}x)\) would just be the trivial Lebesgue measure.

The convergence of the third moment (1.24) rules out this triviality. Indeed, (1.24) implies that \({{\mathbb {E}}}[|Z_{Nt}^{\beta _N}(\phi )|^3] \leqslant {{\mathbb {E}}}[Z_{Nt}^{\beta _N}(|\phi |)^3]\) is bounded, so the squares \(Z^{\beta _N}_{Nt}(\phi )^2\) are uniformly integrable and the inequality in (1.28) is actually an equality.

We can combine the previous considerations in the following result.

Theorem 1.5

Let \(t > 0\), \(\vartheta \in {\mathbb {R}}\). Let \(\beta _N\) satisfy (1.14). The random measures \(({\mathscr {Z}}^{\beta _N}_{Nt}(\mathrm {d}x) )_{N\in {\mathbb {N}}}\) in (1.27) admit weak subsequential limits \(\varvec{{\mathcal {Z}}}_{t,\vartheta }(\mathrm {d}x)\), and any such limit satisfies

In particular, every weak subsequential limit \(\varvec{{\mathcal {Z}}}_{t,\vartheta }(\mathrm {d}x)\) is a random measure with the same covariance structure. It is natural to conjecture that the whole sequence \(({\mathscr {Z}}^{\beta _N}_{Nt}(\mathrm {d}x) )_{N\in {\mathbb {N}}}\) has a weak limit, but this remains to be proved.

We conclude with a remark on intermittency. As the asymptotic behavior (1.21) suggests, when we fix the starting point of the partition function instead of averaging over it, i.e. we consider \(Z_N^{\beta _N}\) defined in (1.3), the second moment blows up like \(\log N\). More precisely, in [CSZ18, Proposition A.1] we have shown that as \(N\rightarrow \infty \)

This is a signature of intermittency, because it shows that \({{\mathbb {E}}}\big [ (Z_{N}^{\beta _N})^2 \big ] \gg {{\mathbb {E}}}[Z_N^{\beta _N}]^2 = 1\). It also implies that for any \(q \geqslant 2\) we have the bound

Indeed, since \({{\mathbb {E}}}[Z_N^{\beta _N}] = 1\), we can introduce the size-biased probability \({{\mathbb {P}}}^*(A) := {{\mathbb {E}}}[ \mathbb {1}_A \, Z_N^{\beta _N}]\) and note that \({{\mathbb {E}}}\big [ (Z_{N}^{\beta _N})^q \big ] = {{\mathbb {E}}}^*\big [ (Z_{N}^{\beta _N})^{q-1} \big ] \geqslant {{\mathbb {E}}}^*\big [ Z_{N}^{\beta _N} \big ]^{q-1} = {{\mathbb {E}}}\big [ (Z_{N}^{\beta _N})^2 \big ]^{q-1}\) by Jensen.
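The size-biasing argument above uses only \(Z \geqslant 0\), \({{\mathbb {E}}}[Z] = 1\) and Jensen's inequality for the convex map \(z \mapsto z^{q-1}\) with \(q \geqslant 2\). A minimal Python sketch on an arbitrary three-point law with mean one (a toy stand-in, not the actual partition function) checks both the identity \({{\mathbb {E}}}[Z^q] = {{\mathbb {E}}}^*[Z^{q-1}]\) and the resulting lower bound.

```python
def size_bias_check(values, probs, q):
    """For X >= 0 with E[X] = 1, return (E[X^q], E*[X^(q-1)], E[X^2]^(q-1)).

    P*(X = v) := v * P(X = v) is the size-biased law, a probability because E[X] = 1.
    """
    mean = sum(v * p for v, p in zip(values, probs))
    if abs(mean - 1.0) > 1e-12:
        raise ValueError("E[X] must equal 1")
    moment_q = sum(v ** q * p for v, p in zip(values, probs))
    size_biased = sum(v ** (q - 1) * (v * p) for v, p in zip(values, probs))
    second = sum(v ** 2 * p for v, p in zip(values, probs))
    return moment_q, size_biased, second ** (q - 1)

# Toy law rescaled to have mean one (not the partition function itself).
raw, probs = [0.0, 0.5, 4.0], [0.5, 0.3, 0.2]
scale = sum(v * p for v, p in zip(raw, probs))
values = [v / scale for v in raw]
for q in (2, 2.5, 3, 4):
    print(q, size_bias_check(values, probs, q))
```

In each printed triple the first two entries coincide (the size-biasing identity) and dominate the third (Jensen), with equality at \(q = 2\).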

Remark 1.6

We formulated our results only for the directed polymer on \({\mathbb {Z}}^{2+1}\), but our techniques carry through for other marginally relevant directed polymer type models, such as the disordered pinning model with tail exponent 1/2, and the directed polymer on \({\mathbb {Z}}^{1+1}\) with Cauchy tails (see [CSZ17b]).

1.2 The 2d stochastic heat equation

An analogue of Theorem 1.2 for the stochastic heat equation (SHE) in \({\mathbb {R}}^2\) was proved by Bertini and Cancrini in [BC98], although they did not obtain the analogue of Theorem 1.4. We formulate these results next.

The SHE as written in (1.6) is ill-posed due to the product \(\dot{W}\cdot u\). To make sense of it, we mollify the space-time white noise \(\dot{W}\) in the space variable. Let \(j\in C^\infty _c({\mathbb {R}}^2)\) be a probability density on \({\mathbb {R}}^2\) with \(j(x)=j(-x)\), and let

For \(\varepsilon >0\), let \(j_\varepsilon (x) := \varepsilon ^{-2} j(x/\varepsilon )\). Then the space-mollified noise \(\dot{W}^\varepsilon \) is defined by \(\dot{W}^\varepsilon (t, x) := \int _{{\mathbb {R}}^2} j_\varepsilon (x-y) \dot{W}(t, y)\mathrm {d}y\). We consider the mollified equation

which admits a unique mild solution (with Itô integration).

It was shown in [CSZ17b] that if we rescale \(\beta _\varepsilon := {\hat{\beta }}\sqrt{\frac{2\pi }{\log \varepsilon ^{-1}}}\), then for any fixed \((t,x)\in {\mathbb {R}}^+\times {\mathbb {R}}^2\) the mollified solution \(u^\varepsilon (t,x)\) converges in distribution as \(\varepsilon \rightarrow 0\) to the same limit as in (1.11) for the directed polymer partition function, with \({\hat{\beta }}_c=1\) being the critical point.

In [BC98], Bertini and Cancrini considered the critical window around \({\hat{\beta }}_c =1\) given by

This is comparable to our choice of \(\beta _N\), see (1.14) and (1.17), if we make the identification \(\varepsilon ^2=1/N\) (note that the third cumulant \(\kappa _3=0\) for Gaussian random variables). In this critical window, \(u^\varepsilon (t,x)\) converges to 0 in distribution, while its expectation is constant:

Bertini and Cancrini showed that when interpreted as a random distribution on \({\mathbb {R}}^2\), \(u^\varepsilon (t,\cdot )\) admits subsequential weak limits, and they computed the limiting covariance. This is the analogue of our Theorem 1.2, which we now state explicitly. Let us set

Theorem 1.7

[BC98]. Let \(\beta _\varepsilon \) be chosen as in (1.35). Then, for any \(\phi \in C_c({\mathbb {R}}^2)\),

where \(K_{t, \vartheta }\) is defined as in Theorem 1.2, with

In Sect. 8 we provide an independent proof of Theorem 1.7, which employs the renewal framework of this paper. Note that, by the Feynman–Kac formula, the mollified solution \(u^\varepsilon (t,\phi )\) can be interpreted as the partition function of a continuum directed polymer model.

Remark 1.8

The covariance kernel in (1.37) coincides with the one in [BC98, eq. (3.14)], provided we identify the parameter \(\beta \) in [BC98] with \(e^{\vartheta - \gamma }\). If we plug \(\beta = e^{\vartheta - \gamma }\) into [BC98, eq. (2.6)], with \(\vartheta \) given by (1.38), we obtain precisely (1.35).

Our renewal framework leads to analogues of Theorems 1.4 and 1.5 for the SHE. For simplicity, we content ourselves with showing that the third moment is bounded, but the same techniques would allow one to compute its sharp asymptotic behavior, as in (1.24)–(1.26).

Theorem 1.9

Assume the same setting and notation as in Theorem 1.7. Then

If \(\varvec{u}_\vartheta (t,\cdot )\) is any subsequential weak limit in \({{\mathcal {M}}}({\mathbb {R}}^2)\) of \(u^\varepsilon (t, \cdot )\) as \(\varepsilon \rightarrow 0^+\), then \(\varvec{u}_\vartheta (t,\cdot )\) satisfies the analogues of (1.29)–(1.31), with \(K_{t, \vartheta }(z-z')\) in (1.30) replaced by \(2K_{t, \vartheta }\big (\frac{z-z'}{\sqrt{2}}\big )\).

1.3 Outline of the proof strategy

We present the key ideas of our approach. First we compute the second moment of the partition function, sketching the proof of (1.32). Then we describe the combinatorial structure of the third moment, which leads to Theorem 1.4. This illustrates how renewal theory emerges in our problem.

Second moment. We start from a polynomial chaos expansion of the partition function \(Z_{N}^{\beta }\), which arises from a binomial expansion of the exponential in (1.3) (see Sect. 2.1):

where we set \(\xi _{n,x} = e^{\beta _N\omega _{n,x} -\lambda (\beta _N)}-1\) for \(n\in {\mathbb {N}},x\in {\mathbb {Z}}^2\). Note that \(\xi _{n,x}\) are i.i.d. with mean zero and variance \(\sigma ^2 = e^{\lambda (2\beta _N)-2\lambda (\beta _N)}-1\), see (1.14). Then

where we define

Incidentally, (1.40) coincides with the variance of the partition function of the one-dimensional disordered pinning model based on the simple random walk on \({\mathbb {Z}}\) [CSZ18].
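The expansion (1.39) is an exact algebraic identity for every realization of the disorder: writing \(e^{\beta\omega_{n,x}-\lambda(\beta)} = 1 + \xi_{n,x}\) and expanding the product over \(n = 1, \ldots, N-1\) turns the partition function into a sum over time-ordered space-time points, weighted by products of transition kernels q and of the \(\xi\)'s. The Python sketch below checks this on a tiny system, assuming standard Gaussian disorder (so that \(\lambda(\beta) = \beta^2/2\)); it compares brute-force enumeration of all \(4^{N-1}\) paths with a dynamic program over chains of points. Function names and parameters are hypothetical, for illustration only.

```python
import itertools
import math
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def transition_kernel(n_max):
    """q[m][z] = P(S_m = z | S_0 = 0) for simple random walk on Z^2, m = 0..n_max."""
    q = [{(0, 0): 1.0}]
    for _ in range(n_max):
        nxt = {}
        for (a, b), p in q[-1].items():
            for da, db in STEPS:
                key = (a + da, b + db)
                nxt[key] = nxt.get(key, 0.0) + p / 4
        q.append(nxt)
    return q

def gaussian_xi(N, beta, seed=1):
    """xi[n, x] = exp(beta * omega - beta^2 / 2) - 1 with omega ~ N(0, 1), for 1 <= n <= N - 1."""
    rng = random.Random(seed)
    q = transition_kernel(N)
    return {(n, x): math.exp(beta * rng.gauss(0.0, 1.0) - beta ** 2 / 2) - 1.0
            for n in range(1, N) for x in q[n]}

def brute_force_Z(N, xi):
    """Average over all 4^(N-1) paths of prod_{n=1}^{N-1} (1 + xi[n, S_n])."""
    total = 0.0
    for path in itertools.product(STEPS, repeat=N - 1):
        x, weight = (0, 0), 1.0
        for n, (da, db) in enumerate(path, start=1):
            x = (x[0] + da, x[1] + db)
            weight *= 1.0 + xi[n, x]
        total += weight
    return total / 4 ** (N - 1)

def chaos_Z(N, xi):
    """1 + sum over chains 0 < n_1 < ... < n_k < N of prod q_{n_i - n_{i-1}}(x_i - x_{i-1}) xi[n_i, x_i]."""
    q = transition_kernel(N)
    chains = {}  # chains[(n, x)]: total weight of chains whose last point is (n, x)
    for n in range(1, N):
        layer = {}
        for x, p in q[n].items():
            acc = p  # the chain consisting of (n, x) alone, started from the origin
            for (m, y), w in chains.items():
                acc += w * q[n - m].get((x[0] - y[0], x[1] - y[1]), 0.0)
            layer[n, x] = acc * xi[n, x]
        chains.update(layer)
    return 1.0 + sum(chains.values())

xi = gaussian_xi(7, beta=0.6)
print(brute_force_Z(7, xi), chaos_Z(7, xi))  # equal up to floating-point rounding
```

Because the identity holds pathwise in \(\omega\), no averaging over the disorder is needed: the two numbers agree for every seed.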

The key idea is to view the series of convolutions (1.40) through the lens of renewal theory. The sequence \(u_n^2\) is not summable, but we can normalize it to a probability on \(\{1,\ldots , N\}\). We thus define a triangular array of independent random variables \((T_i^{(N)})_{i\in {\mathbb {N}}}\) by

We stress that \(R_N = \frac{1}{\pi } \log N + O(1)\) is the same as in (1.10). If we fix \(\beta _N\) satisfying (1.14), and define the renewal process

we can rewrite (1.40) for \(\beta = \beta _N\) as follows:

This shows that \({{\,\mathrm{{\mathbb {V}}ar}\,}}\big [ Z_{N}^{\beta _N} \big ]\) can be interpreted as a (weighted) renewal function for \(\tau _k^{(N)}\).
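For small N, the renewal representation can be checked exactly. Averaging over the disorder gives \({{\mathbb {E}}}[(Z_N^{\beta})^2] = {{\,\mathrm{\mathrm E}\,}}[(1+\sigma^2)^{L_N}]\), where \(L_N\) counts the times \(1 \leqslant n \leqslant N-1\) at which two independent walks S and \(S'\) meet; expanding the product yields a sum over times \(0 < n_1 < \cdots < n_k < N\) weighted by \(\sigma^{2k} \prod_i u(n_i - n_{i-1})\), with \(u(m) := \sum_x q_m(x)^2\), a series of convolutions of the type (1.40). The Python sketch below, which takes Gaussian disorder so that \(\sigma^2 = e^{\beta^2} - 1\), computes the variance both ways: by a dynamic program on the difference walk \(S - S'\), and by a renewal-type recursion over the last meeting time. The symbol \(u(m)\) is notation chosen for this sketch.

```python
import itertools
import math

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def overlap_u(n_max):
    """u[m] = sum_x q_m(x)^2 = P(S_m = S'_m) for independent SRWs on Z^2, m = 0..n_max."""
    q, u = {(0, 0): 1.0}, [1.0]
    for _ in range(n_max):
        nxt = {}
        for (a, b), p in q.items():
            for da, db in STEPS:
                key = (a + da, b + db)
                nxt[key] = nxt.get(key, 0.0) + p / 4
        q = nxt
        u.append(sum(p * p for p in q.values()))
    return u

def variance_renewal(N, sigma2):
    """Sum over 0 < n_1 < ... < n_k < N of sigma2^k * prod_i u[n_i - n_{i-1}], with n_0 = 0."""
    u = overlap_u(N)
    V = [0.0] * N  # V[n]: total weight of chains whose last meeting time is n
    for n in range(1, N):
        V[n] = sigma2 * (u[n] + sum(V[m] * u[n - m] for m in range(1, n)))
    return sum(V)

def variance_exact(N, sigma2):
    """E[(1 + sigma2)^L_N] - 1, L_N = #{1 <= n <= N-1 : S_n = S'_n}, by DP on D = S - S'."""
    diff_steps = [(a - c, b - d) for (a, b), (c, d) in itertools.product(STEPS, STEPS)]
    f = {(0, 0): 1.0}  # f[d] = E[(1 + sigma2)^{L_n}; D_n = d]
    for _ in range(1, N):
        nxt = {}
        for (a, b), w in f.items():
            for da, db in diff_steps:
                key = (a + da, b + db)
                nxt[key] = nxt.get(key, 0.0) + w / 16
        if (0, 0) in nxt:
            nxt[0, 0] *= 1.0 + sigma2  # extra factor at each meeting time
        f = nxt
    return sum(f.values()) - 1.0

beta = 0.5
sigma2 = math.exp(beta ** 2) - 1  # Gaussian disorder: lambda(2b) - 2 lambda(b) = b^2
for N in (5, 10, 15):
    print(N, variance_exact(N, sigma2), variance_renewal(N, sigma2))
```

Dividing each factor \(u(n_i - n_{i-1})\) by \(R_N\) turns the weights into probabilities of a renewal process, which is the normalization used to define \(T_i^{(N)}\) above.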

The renewal process \(\tau _k^{(N)}\) is investigated in [CSZ18], where we proved that \((\tau _{\lfloor s \log N \rfloor }^{(N)}/N)_{s \geqslant 0}\) converges in law as \(N\rightarrow \infty \) to a special Lévy process \(Y = (Y_s)_{s \geqslant 0}\), called the Dickman subordinator, which admits an explicit density:

Then \(\mathrm P(\tau _{\lfloor s \log N \rfloor }^{(N)} \leqslant N) \rightarrow \mathrm P(Y_s \leqslant 1) = \int _0^1 f_s(t) \, \mathrm {d}t\), and by Riemann sum approximation

where \(G_\vartheta (\cdot )\) is the same as in (1.18), which can now be interpreted as a renewal function for the Lévy process Y. This completes the derivation of (1.32).
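The convergence to the Dickman subordinator can be probed by simulation. The Python sketch below samples i.i.d. \(T_i\) on \(\{1,\ldots,N\}\) with \(\mathrm P(T_i = n) = q_{2n}(0)/R_N\); this is an assumption about the displayed definition of \(T_i^{(N)}\), namely that it normalizes \(\sum_x q_n(x)^2 = q_{2n}(0)\) into a probability on \(\{1,\ldots,N\}\). It estimates \(\mathrm P(\tau_k^{(N)} \leqslant N)\) and compares it with \(\mathrm P(Y_s \leqslant 1) = e^{-\gamma s}/\Gamma(s+1)\), obtained by integrating the standard Dickman subordinator density \(f_s(t) = e^{-\gamma s}\, s\, t^{s-1}/\Gamma(s+1)\) over \(t\in(0,1)\), which is assumed here to agree with the explicit density referred to above. Since \(\pi R_N = \log N + O(1)\), the time parameter is calibrated as \(s = k/(\pi R_N)\) rather than \(k/\log N\), a finite-N choice made for this sketch; agreement holds only up to Monte Carlo error and finite-N corrections.

```python
import bisect
import math
import random

EULER_GAMMA = 0.5772156649015329

def renewal_hit_prob(N, k, samples, seed=0):
    """Monte Carlo estimate of P(tau_k <= N), where tau_k = T_1 + ... + T_k and the T_i
    are i.i.d. with P(T = n) = q_{2n}(0) / R_N on {1, ..., N} (simple random walk on Z^2)."""
    cum, p, acc = [], 1.0, 0.0
    for n in range(1, N + 1):
        p *= (2 * n - 1) / (2 * n)  # binom(2n, n) / 4^n
        acc += p * p                # q_{2n}(0) = (binom(2n, n) / 4^n)^2
        cum.append(acc)
    R_N = acc
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        tau = 0
        for _ in range(k):
            # inverse-CDF sampling: the smallest n with cum[n - 1] >= U * R_N
            tau += bisect.bisect_left(cum, rng.random() * R_N) + 1
            if tau > N:
                break
        hits += tau <= N
    return hits / samples, R_N

def dickman_cdf_at_one(s):
    """P(Y_s <= 1) = exp(-gamma * s) / Gamma(s + 1), from the Dickman density on (0, 1)."""
    return math.exp(-EULER_GAMMA * s) / math.gamma(s + 1)

N, k = 10**5, 12
estimate, R_N = renewal_hit_prob(N, k, samples=20000)
s = k / (math.pi * R_N)  # pi * R_N = log N + O(1) plays the role of log N
print(round(estimate, 3), round(dickman_cdf_at_one(s), 3))
```

A handful of renewal steps already brings \(\tau_k^{(N)}\) to the scale N, because each \(T_i\) has a probability of order \(1/\log N\) of being comparable to N; this is the heavy-tailed mechanism behind the Dickman limit.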

Similar arguments can be applied to the partition function \(Z_N^{\beta _N}(\phi )\) averaged over the starting point, to prove Theorem 1.2 using renewal theory.

Third moment. The proof of Theorem 1.4 is more challenging. In the second moment computation, the spatial variables \(x_1, \ldots , x_k\) have been summed over to get (1.40), reducing the analysis to a one-dimensional renewal process. Such a reduction is not possible for Theorem 1.4. In addition to the “point-to-plane” partition functions (1.3)–(1.4), it will be important to consider point-to-point partition functions, where we also fix the endpoint \(S_N\):

We need to extend our renewal theory framework, enriching the process \(\tau ^{(N)}_k\) with a spatial component \(S^{(N)}_k\) (see (2.13)–(2.14) below). This will yield the following analogue of (1.44):

which is now a local (weighted) renewal function for the random walk \((\tau ^{(N)}_k, S^{(N)}_k)_{k\geqslant 0}\). Its asymptotic behavior as \(N\rightarrow \infty \) was determined in [CSZ18]:

where \(G_\vartheta (t,z)\), defined in (1.23), is a continuum local renewal function.

We now explain how the second moment of the point-to-point partition function (1.46) enters in the third moment computation. We consider the partition function \(Z_N^{\beta }\) started at the origin, see (1.3), but everything extends to the averaged partition function \(Z_N^{\beta }(\phi )\).

We compute \({{\mathbb {E}}}[(Z_N^{\beta } - 1)^3]\) using the expansion (1.39). This leads to a sum over three sets of coordinates \((n^a_i,x^a_i)\), \((n^b_j,x^b_j)\), \((n^c_l,x^c_l)\), with associated random variables \(\xi _{n,x}\), say

for suitable (explicit) coefficients \(c_{N,\{\ldots \}}\). The basic observation is that if a coordinate, say \((n^a_i, x^a_i)\), is distinct from all other coordinates, then the corresponding term gives no contribution to (1.48), because the random variable \(\xi _{n^a_i,x^a_i}\) is independent of the other \(\xi _{n,x}\)’s and has mean zero. This means that the coordinates in (1.48) have to match, necessarily in pairs or in triples (see Footnote 1). We will show that triple matchings can be neglected, so we restrict to pairwise matchings.

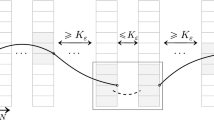

Let \(\varvec{D} \subseteq \{1,\ldots ,N\}\times {\mathbb {Z}}^2\) be the subset of space-time points given by the union of all coordinates \((n^a_i,x^a_i)\), \((n^b_j,x^b_j)\), \((n^c_l,x^c_l)\) in (1.48). By the pairwise matching constraint, any index \((n,x) \in \varvec{D}\) must appear exactly twice among the three sets of coordinates with labels a, b, c. So we can label each index in \(\varvec{D}\) as either ab, bc or ac, and we say that consecutive indexes with the same label form a stretch. This decomposition into stretches will lead to the integral representation (1.26) for the third moment, as we now explain.

Let us write \(\varvec{D} = \{(n_i, x_i): \ i=1,\ldots , r\}\) and consider the case when the first stretch has, say, label ab and length \(k \leqslant r\) (this means that \((n_i, x_i) = (n^a_i,x^a_i) = (n^b_i, x^b_i)\) for \(i=1, \ldots , k\)). The key observation is that, if we fix the last index \((n_k, x_k) = (M, y)\) and sum over the number k and the locations \((n_i,x_i)\) of previous indexes inside the stretch, then we obtain an expression similar to (1.40), except that the last index is not summed but rather fixed to \((n_k, x_k) = (M, y)\) (see Sect. 5 for the details). But this turns out to be precisely the second moment (1.46) of the point-to-point partition function \(Z_M^{\beta }(0,y)\).

In summary, when computing the third moment from (1.48), the contribution of each stretch of pairwise matchings is given asymptotically by (1.47). This is also the case when we consider the partition function \(Z_N^{\beta _N}(\phi )\) averaged over the starting point.

We can finally explain qualitatively the structure of the kernel (1.25)–(1.26) in Theorem 1.4:

- the index m of the sum in (1.25) corresponds to the number of stretches;
- each stretch gives rise to a kernel \(G_{\vartheta }(b_i - a_i, y_i - x_i)\) in (1.26), by (1.47);
- the switch from a stretch to the following consecutive stretch gives rise to the remaining kernels \(g_{\frac{a_i - b_{i-2}}{2}}(x_i - y_{i-2}) \, g_{\frac{a_i - b_{i-1}}{2}}(x_i - y_{i-1})\) in (1.26).
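The stretch decomposition is purely combinatorial and easy to make concrete. In the Python sketch below (all names illustrative), a pairwise matching is encoded by the time-ordered sequence of labels ab, bc, ac of the points of \(\varvec{D}\), and stretches are the maximal runs of equal labels. Two facts are checked by brute force for small r: a label sequence involves all three of a, b, c exactly when it has at least two stretches (each factor \(Z_N^\beta - 1\) in (1.48) must contribute at least one point), and the number of label sequences of length r with m stretches is \(3 \cdot 2^{m-1} \binom{r-1}{m-1}\). These are counting facts about the labels only, not a restatement of the weights in (1.25)–(1.26).

```python
from itertools import groupby, product
from math import comb

LABELS = ("ab", "bc", "ac")

def stretches(labels):
    """Split a time-ordered sequence of pair labels into maximal runs (stretches)."""
    return [(label, len(list(run))) for label, run in groupby(labels)]

def involves_abc(labels):
    """True if each of a, b, c appears in some label."""
    return set("".join(labels)) == {"a", "b", "c"}

def count_by_stretches(r):
    """Number of label sequences of length r, grouped by number of stretches m."""
    counts = {}
    for seq in product(LABELS, repeat=r):
        m = len(stretches(seq))
        counts[m] = counts.get(m, 0) + 1
    return counts

print(stretches(["ab", "ab", "bc", "ac", "ac", "ac"]))
# [('ab', 2), ('bc', 1), ('ac', 3)]: three stretches, so m = 3 for this pattern

for r in range(1, 6):
    print(r, count_by_stretches(r), [3 * 2 ** (m - 1) * comb(r - 1, m - 1) for m in range(1, r + 1)])
```

The count reflects that the first stretch may carry any of the three labels, while each later stretch must switch to one of the two other labels.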

We stress that the knowledge of precise asymptotic estimates such as (1.47) is crucial to compute the limiting expression (1.25)–(1.26) for the third moment.

We refer to Sect. 5 for a more detailed exposition of the combinatorial structure in the third moment calculation, which lies at the heart of the present paper.

1.4 Discussion

To put our results in perspective, we explain here some background. The key background notion is disorder relevance/irrelevance. The directed polymer is an example of a disordered system that arises as a disorder perturbation of an underlying pure model, the random walk S in this case. A fundamental question is whether the disorder perturbation, however small \(\beta >0\) is, changes the qualitative behavior of the pure model as \(N\rightarrow \infty \). If the answer is affirmative, then disorder is said to be relevant; otherwise disorder is said to be irrelevant. For further background, see e.g. the monograph [G10].

For the directed polymer on \({\mathbb {Z}}^{d+1}\), the underlying random walk S is diffusive, with \(|S_N|\approx N^{1/2}\). Under the polymer measure \(\mathrm P^\beta _{N}\), it has been shown that for \(d\ge 3\) there exists a critical value \(\beta _c(d)>0\) such that \(|S_N|\approx N^{1/2}\) for \(\beta <\beta _c(d)\) (see e.g. [CY06]), while it is believed that \(|S_N|\gg N^{1/2}\) for any \(\beta >0\) in \(d=1, 2\), and for \(\beta >\beta _c(d)\) in \(d\ge 3\). Thus the directed polymer model should be disorder irrelevant in \(d\ge 3\) and disorder relevant in \(d=1\), while \(d=2\) turns out to be the critical dimension separating disorder relevance from irrelevance, where disorder should be marginally relevant.

In [AKQ14], Alberts, Khanin and Quastel showed that on the intermediate disorder scale \(\beta _N= {\hat{\beta }}/N^{1/4}\), the rescaled partition functions of the directed polymer on \({\mathbb {Z}}^{1+1}\) converge to the solution of the 1-dimensional SHE (1.6). We note that the idea of considering polymers with scaled temperature had already appeared in the physics literature [BD00, CDR10].

Inspired in particular by [AKQ14], we developed in [CSZ17a] a new perspective on disorder relevance vs irrelevance (see also [CSZ16]). The heuristic is that, if a model is disorder relevant, then under coarse graining and renormalization of space-time, the effective disorder strength of the coarse-grained model diverges. Therefore, to compensate, it should be possible to choose the disorder strength \(\beta _N\downarrow 0\) (known as weak disorder limit) as the lattice spacing \(\delta :=1/N\downarrow 0\) (known as continuum limit) in such a way that we obtain a continuum disordered model. In particular, the partition function \(Z^\omega _{N, \beta _N}\) should admit a non-trivial random limit for suitable choices of \(\beta _N\downarrow 0\). In [CSZ17a], we formulated general criteria for the partition functions of a disordered system to have non-trivial continuum and weak disorder limits. These criteria were then verified for the disordered pinning model, a family of (possibly long-range) directed polymers on \({\mathbb {Z}}^{1+1}\), and the random field perturbation of the critical Ising model on \({\mathbb {Z}}^2\). However, the general framework developed in [CSZ17a] does not include models where disorder is only marginally relevant, such as the directed polymer on \({\mathbb {Z}}^{2+1}\), which led to our previous work [CSZ17b] and to our current work.

Disorder relevance/irrelevance is also closely linked to the classification of singular stochastic partial differential equations (SPDE), such as the SHE or the KPZ equation, into sub-critical, critical, or super-critical ones, which correspond respectively to disorder relevance, marginality and disorder irrelevance. For sub-critical singular SPDEs, a general solution theory called regularity structures has been developed in seminal work by Hairer in [H13, H14], and alternative approaches have been developed by Gubinelli, Imkeller, and Perkowski [GIP15], and also by Kupiainen [K14]. However, for critical singular SPDEs such as the SHE in \(d=2\), the only known results so far are: our previous work [CSZ17b], which established a phase transition in the intermediate disorder scale \(\beta _\varepsilon = {\hat{\beta }}(2\pi /\log \frac{1}{\varepsilon })^{1/2}\) and identified the limit in distribution of the solution \(u^\varepsilon (t,x)\) in the subcritical regime \({\hat{\beta }}<1\); the work of Bertini and Cancrini [BC98], which computed the limiting covariance of the random field \(u^\varepsilon (t, \cdot )\) at the critical point \({\hat{\beta }}=1\); and our current work, which establishes the non-triviality of subsequential weak limits of the random field at the critical point \({\hat{\beta }}=1\).

Let us mention some related work on the directed polymer model on the hierarchical lattice. In particular, for the marginally relevant case, Alberts, Clark and Kocić in [ACK17] established the existence of a phase transition, similar to [CSZ17b]. More recently, Clark [Cla17] computed the moments of the partition function around a critical window for the case of bond disorder. The computations in the hierarchical lattice case employ the independence structure inherent in hierarchical models, which is not available on \({\mathbb {Z}}^d\).

Note added in publication. More recently, Gu, Quastel and Tsai [GQT19] proved the existence of all moments for the 2-dimensional SHE in the critical window. They use different, functional analytic methods inspired by Dimock and Rajeev [DR04].

1.5 Organization of the paper

In Sect. 2, we recall the polynomial chaos expansion for the partition functions and introduce the renewal framework, which are then used in Sect. 3 to prove Theorem 1.2 on the limiting second moment of the partition function. In Sect. 4, we derive a series expansion for the third moment of the averaged point-to-point partition functions, whose terms are separated into two groups: those with so-called triple intersections, and those with no triple intersection. Terms with no triple intersection are shown in Sect. 5 to converge to the desired limit, while terms with triple intersections are shown to be negligible in Sect. 7, using bounds developed in Sect. 6. Lastly, in Sect. 8, we prove Theorems 1.7 and 1.9 for the stochastic heat equation.

2 Polynomial Chaos and Renewal Framework

In this section, we describe two key elements that form the basis of our analysis:

- (1) polynomial chaos expansions, which represent the partition function as a multilinear polynomial of modified disorder random variables, see Sect. 2.1;
- (2) a renewal theory framework, which allows us to relate the second moment of the partition function to suitable renewal functions, see Sect. 2.2.

We will use \(\mathrm P_{a,x}\) and \({{\,\mathrm{\mathrm E}\,}}_{a,x}\) to denote probability and expectation for the random walk S starting at time a from position \(S_a=x\in {\mathbb {Z}}^2\), with the subscript omitted when \((a,x)=(0,0)\). Recalling (1.7), we define the family of point-to-point partition functions by

The original point-to-plane partition function \(Z_N^\beta (x)\), see (1.4), can be recovered as follows:

We note that the point-to-plane partition function has \({{\mathbb {E}}}[Z_{a,b}^\beta (x)] \equiv 1\), while for the point-to-point partition function we have

where \(q_{a,b}(x,y) := q_{b-a}(y-x)\) and \(q_n(\cdot )\) is the transition probability kernel defined in (1.8). We will need to average the partition functions \(Z_{a,b}^\beta (x,y)\) over either x or y, or both, on the diffusive scale. More precisely, we define for \(N\in {\mathbb {N}}\)

The terminal function \(\psi \) is only required to be bounded and continuous, while the initial function \(\phi \) is required to be compactly supported, because we would like to include the case \(\psi \equiv 1\), which corresponds to the point-to-plane polymer partition function. On the other hand, the initial function \(\phi \) plays the role of a test function used to average the partition function. (In general, the fact that at least one of \(\phi \) and \(\psi \) is compactly supported ensures finiteness of the average (2.6).) Note that \(Z_{Nt}^\beta (\phi )\) in (1.12) coincides with \(Z_{0,Nt}^{N,\beta }(\phi ,\psi )\) with \(\psi \equiv 1\). From (2.3) we compute

Note that these expectations are of order 1 for \(a = 0\) and \(b = N\), because \(q_N(y-x) \approx 1/N\) for \(x,y = O(\sqrt{N})\), see (1.8)–(1.9). This explains the normalizations in (2.4)–(2.6).

2.1 Polynomial chaos expansion

Let us start by rewriting the point-to-point partition function from (2.1) as

Using the fact that \(e^{x \mathbb {1}_{\{n\in \tau \}}} = 1 + (e^x-1) \mathbb {1}_{\{n\in \tau \}}\) for \(x\in {\mathbb {R}}\), we can write

The random variables \(\xi _{n,z}\) are i.i.d. with mean zero (thanks to the normalization by \(\lambda (\beta )\)) and with variance \({{\,\mathrm{{\mathbb {V}}ar}\,}}[\xi _{n,z}] = e^{\lambda (2\beta )-2\lambda (\beta )}-1\). Recalling (2.3) and expanding the product, we obtain the following polynomial chaos expansion:

with the convention that the product equals 1 when \(k=1\). We have written \(Z_{a,b}^{\beta }(x,y)\) as a multilinear polynomial of the random variables \(\xi _{n,x}\).
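The two facts used above, the indicator identity and the mean and variance of \(\xi _{n,z}\), can be checked directly. The Python sketch below assumes, for illustration only, Rademacher disorder (\(\omega = \pm 1\) with probability 1/2 each), for which \(\lambda (\beta ) = \log \cosh \beta \); the argument in the text does not depend on this choice, and all function names are ours. Since \(\omega \) takes two values, the expectations are computed exactly rather than by sampling.

```python
import math

def lam(beta):
    """lambda(beta) = log E[exp(beta * omega)] for Rademacher omega (assumed disorder law)."""
    return math.log(math.cosh(beta))

def xi_mean_var(beta):
    """Exact mean and variance of xi = exp(beta * omega - lambda(beta)) - 1."""
    values = [math.exp(beta * w - lam(beta)) - 1.0 for w in (1.0, -1.0)]
    mean = sum(values) / 2.0
    second_moment = sum(v * v for v in values) / 2.0
    return mean, second_moment - mean ** 2

def indicator_identity_holds(x, indicator):
    """Check exp(x * 1_A) == 1 + (exp(x) - 1) * 1_A for an indicator value 0 or 1."""
    lhs = math.exp(x * indicator)
    rhs = 1.0 + (math.exp(x) - 1.0) * indicator
    return math.isclose(lhs, rhs)

for beta in (0.1, 0.5, 1.0):
    mean, var = xi_mean_var(beta)
    formula = math.exp(lam(2 * beta) - 2 * lam(beta)) - 1.0
    print(f"beta={beta}: mean={mean:+.1e}  var={var:.6f}  formula={formula:.6f}")
```

For this disorder law both sides of the variance formula reduce to \(\cosh (2\beta )/\cosh ^2\beta - 1\).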

Analogous expansions hold for the averaged point-to-point partition functions: by (2.6)

Similar expansions hold for \(Z^{N, \beta }_{a,b}(x, \psi )\) and \(Z^{N, \beta }_{a,b}(\phi , y)\), without the factor \(\frac{1}{N}\).

2.2 Renewal theory framework

Given \(N\in {\mathbb {N}}\), we define a sequence of i.i.d. random variables \(\big ( (T^{(N)}_i, X^{(N)}_i) \big )_{i\in {\mathbb {N}}}\) taking values in \({\mathbb {N}}\times {\mathbb {Z}}^2\), with marginal law

where we recall that \(q_n(x)\) is defined in (1.8) and \(R_N=\sum _{n=1}^N\sum _{x\in {{\mathbb {Z}}}^2} q_n(x)^2\) is the replica overlap, see (1.10). We then define the corresponding random walk on \({\mathbb {N}}\times {\mathbb {Z}}^2\)

Note that the first component \(\tau ^{(N)}_k\) is the renewal process that we introduced in Sect. 1.3, see (1.42)–(1.43).
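The replica overlap \(R_N\) sets the scale \(\sigma _N^2 \sim \frac{\pi }{\log N}\) used throughout, see (1.10) and (1.14). Taking S to be the simple random walk on \({\mathbb {Z}}^2\), one has the standard identity \(\sum _x q_n(x)^2 = \mathrm P(S_{2n}=0) = \big (\binom{2n}{n} 4^{-n}\big )^2\). The Python sketch below (function name ours) uses it to evaluate \(R_N\) exactly and to check that \(R_N - \frac{\log N}{\pi }\) stabilizes as N grows.

```python
import math

def replica_overlap(N):
    """Return [R_1, ..., R_N], where R_n = sum_{m=1}^{n} sum_x q_m(x)^2.

    For simple random walk on Z^2, sum_x q_m(x)^2 = P(S_{2m} = 0)
    = (binom(2m, m) / 4**m) ** 2, so no spatial sum is needed."""
    a = 1.0            # a_m = binom(2m, m) / 4**m, starting from a_0 = 1
    total = 0.0
    partial_sums = []
    for m in range(1, N + 1):
        a *= (2 * m - 1) / (2 * m)   # a_m = a_{m-1} * (2m - 1) / (2m)
        total += a * a
        partial_sums.append(total)
    return partial_sums

R = replica_overlap(10**6)
for N in (10**2, 10**4, 10**6):
    print(N, R[N - 1], R[N - 1] - math.log(N) / math.pi)
```

The recursion for \(a_m\) avoids computing huge binomial coefficients, so a single pass gives all partial sums up to \(N = 10^6\).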

We now describe the link with our model. We note that \(\sigma _N^2\), see (1.14), is the variance of the random variables \(\xi _{n,x} = e^{\beta _N \omega _{n,x} - \lambda (\beta _N)}-1\) which appear in (2.11). Recalling (2.1) and (2.3), we introduce a crucial quantity \(U_N(n, x)\), which will appear repeatedly in our analysis and is a suitably rescaled second moment of the point-to-point partition function:

By (2.11), we then have

Looking at (2.13)–(2.14), we have the following key probabilistic representation:

It is also convenient to define

Thus \(U_N(n,x)\) and \(U_N(n)\) can be viewed as (exponentially weighted) local renewal functions.
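In computational terms, an exponentially weighted renewal function \(U(n) = \sum _{k\ge 0} \lambda ^k\, \mathrm P(\tau _k = n)\) solves the renewal equation \(U(0)=1\), \(U(n) = \lambda \sum _{m=1}^{n} \mathrm P(T=m)\, U(n-m)\). The Python sketch below illustrates this for the time component only. It assumes that \(\mathrm P(T^{(N)}_1 = n) = \frac{1}{R_N}\sum _x q_n(x)^2\) for \(1 \leqslant n \leqslant N\), which is our reading of the elided marginal law (consistent with the normalization by \(R_N\)), and it treats the weight \(\lambda \) as a free parameter; it is not the exact definition of \(U_N(n)\). All names are ours, and a brute-force sum over k serves as a cross-check.

```python
import numpy as np

def renewal_step_law(N):
    """p[n] = P(T = n), 1 <= n <= N, assumed proportional to sum_x q_n(x)^2
    = (binom(2n, n) / 4**n) ** 2 for simple random walk on Z^2; p[0] = 0."""
    p = np.zeros(N + 1)
    a = 1.0
    for n in range(1, N + 1):
        a *= (2 * n - 1) / (2 * n)
        p[n] = a * a
    return p / p.sum()          # p.sum() equals R_N

def weighted_renewal(p, lam, n_max):
    """U(n) = sum_k lam**k P(tau_k = n), 0 <= n <= n_max, via the renewal equation."""
    steps = np.zeros(n_max + 1)
    m = min(len(p), n_max + 1)
    steps[:m] = p[:m]
    U = np.zeros(n_max + 1)
    U[0] = 1.0                  # tau_0 = 0 contributes lam**0 = 1
    for n in range(1, n_max + 1):
        # pair P(T = j) with U(n - j) for j = 1, ..., n
        U[n] = lam * np.dot(steps[1:n + 1], U[n - 1::-1])
    return U

def weighted_renewal_bruteforce(p, lam, n_max):
    """Same quantity, summing lam**k times the k-fold convolution of p directly."""
    steps = np.zeros(n_max + 1)
    m = min(len(p), n_max + 1)
    steps[:m] = p[:m]
    law = np.zeros(n_max + 1)
    law[0] = 1.0                # law of tau_0
    U = law.copy()
    for k in range(1, n_max + 1):   # tau_k >= k, so larger k cannot reach n_max
        law = np.convolve(law, steps)[: n_max + 1]
        U += lam ** k * law
    return U

N = 1000
lam_N = 1 + 1.0 / np.log(N)     # illustrative weight 1 + theta / log N with theta = 1
U = weighted_renewal(renewal_step_law(N), lam_N, N)
print(U[[1, 10, 100, 1000]])
```

The recursive version costs \(O(n_{\max }^2)\) operations, while the brute-force version is only meant as a check for small \(n_{\max }\).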

We investigated the asymptotic properties of the random walk \((\tau ^{(N)}_k , S^{(N)}_k)\) in [CSZ18]. In particular, introducing the rescaled process

we proved in [CSZ18] that \({\varvec{Y}}^{(N)}\) converges in distribution as \(N\rightarrow \infty \) to the Lévy process \({\varvec{Y}}\) on \([0,\infty ) \times {\mathbb {R}}^2\) with Lévy measure

where \(g_u(x)\) is the standard Gaussian density on \({\mathbb {R}}^2\), see (1.9). Remarkably, the process \({\varvec{Y}}\) admits an explicit density:

which leads to a corresponding explicit expression for the (weighted) local renewal function

where the functions \(G_\vartheta (t)\) and \(G_\vartheta (t,x)\) coincide with those defined in (1.18) and (1.23).

We showed in [CSZ18] that the sharp asymptotic behavior of \(U_N(n,x)\) and \(U_N(n)\) is captured by the functions \(G_{ \vartheta }(t,x)\) and \(G_{ \vartheta }(t)\), respectively. Note that for the weight \(\lambda _N\) in (2.17)–(2.18) we can write \(\lambda _N = 1 + \frac{\vartheta }{\log N}(1+o(1))\) as \(N\rightarrow \infty \), by our assumption (1.14). Then we can rephrase [CSZ18, Theorem 1.4 and Theorems 2.3–2.4] as follows.

Proposition 2.1

Fix \(\beta _N\) such that (1.14) holds, for some \(\vartheta \in {\mathbb {R}}\). Let \(U_N(n)\) be defined as in (2.18). For any fixed \(\delta > 0\), as \(N\rightarrow \infty \) we have

where \(G_\vartheta \) is defined in (1.18). Moreover, there exists \(C \in (0,\infty )\) such that for all \(N\in {\mathbb {N}}\)

Proposition 2.2

Fix \(\beta _N\) such that (1.14) holds, for some \(\vartheta \in {\mathbb {R}}\). Let \(U_N(n,x)\) be defined as in (2.16)–(2.17). For any fixed \(\delta > 0\), as \(N\rightarrow \infty \) we have

where \(G_\vartheta (t,x)\) is defined in (1.23). Moreover, there exists \(C \in (0,\infty )\) such that for all \(N\in {\mathbb {N}}\)

We will also need the following asymptotic behavior of \(G_\vartheta (t)\) from [CSZ18].

Proposition 2.3

For every fixed \(\vartheta \in {\mathbb {R}}\), we have that

It follows that there exists \(c_\vartheta \in (0,\infty )\) such that

By direct computation \(\frac{\mathrm {d}}{\mathrm {d}t} {\hat{G}}_\vartheta (t) < 0\) for all \(t \in (0,1)\), hence \({\hat{G}}_\vartheta (\cdot )\) is strictly decreasing.

3 Proof of Theorem 1.2

Recall the definition (1.9) of \(g_t(x)\). Given a bounded function \(\phi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), we define

The averaged partition function \(Z_{Nt}^{\beta _N}(\phi )\) in Theorem 1.2, see (1.12), coincides with \(Z_{0,Nt}^{N,\beta _N}(\phi ,\psi )\) with \(\psi \equiv 1\), see (2.6). By the expansion (2.12) with \(\psi \equiv 1\), we obtain

We isolate the term \(k=1\), because given \((n_1, x_1)=(m, x)\) and \((n_k, x_k)=(n, y)\), the sum over \(k\geqslant 2\) gives \({{\mathbb {E}}}[Z^{\beta _N}_{m,n}(x, y)^2]=U_N(n-m, y-x)/\sigma _N^2\), by (2.15)–(2.16). Therefore

where in the second equality we summed over \(y\in {\mathbb {Z}}^2\) – this is the reason that only \(U_N(n-m)\) appears instead of \(U_N(n-m,y-x)\); recall (2.17) and (2.18).

We now let \(N\rightarrow \infty \). We first show that the first term in the RHS of (3.3) vanishes as \(O(\sigma _N^2) = O(\frac{1}{\log N})\), see (1.10) and (1.14). Note that for \(v \in (0,1)\) and \(x \in {\mathbb {R}}^2\) we have

see (2.8), (1.8) and (3.1). Then, by Riemann sum approximation, we have

Indeed, the approximation is uniform for \(N\varepsilon< n < Nt\), with fixed \(\varepsilon > 0\), while the contribution of \(n \leqslant N \varepsilon \) is small, for \(\varepsilon > 0\) small, by the uniform bound in (3.4).

It remains to focus on the second term in the RHS of (3.3). By (2.20)–(2.21) and (3.4), together with \(\sigma _N^2 \sim \frac{\pi }{\log N}\), see (1.14) and (1.10), another Riemann sum approximation gives

Integrating out x, we obtain

4 Expansion for the Third Moment

In this section, we give an expansion for the third moment of the partition function, which forms the basis of our proof of Theorem 1.4. We actually prove a more general version for the averaged point-to-point partition functions, which is of independent interest.

Theorem 4.1

(Third moment, averaged point-to-point). Let \(t > 0\), \(\vartheta \in {\mathbb {R}}\) and \(\beta _N\) satisfy (1.14). Fix a compactly supported \(\phi \in C_c({\mathbb {R}}^2)\) and a bounded \(\psi \in C_b({\mathbb {R}}^2)\). Then

where we set \(\Phi _s := \phi * g_{s/2}\) and \(\Psi _s := \psi * g_{s/2}\), see (3.1), and define

We observe that Theorem 1.4 is a special case of Theorem 4.1: it suffices to take \(\psi \equiv 1\) so that \(Z_{0,Nt}^{N,\beta _N}(\phi , \psi ) = Z_{Nt}^{\beta _N}(\phi )\), see (2.6) and (1.12), and it is easy to check that (4.1)–(4.2) match with (1.24)–(1.26), since \(\Psi _s \equiv 1\).

It remains to prove Theorem 4.1. This will be reduced to Propositions 4.2 and 4.3 below. We exploit the multilinear expansion in (2.12) for the partition function, which leads to the following representation for the centered third moment (recall (2.7)–(2.9)):

where we agree that \({\varvec{A}}=(A_1, \ldots , A_{|{\varvec{A}}|})\) with \(A_i=(a_i, x_i)\in {\mathbb {Z}}^3_{\mathrm{even}}\), and \({\varvec{B}}\), \({\varvec{C}}\) are defined similarly, with \(B_j=(b_j, y_j)\), \(C_k=(c_k, z_k)\), and we set for short

(When \(|{\varvec{A}}| = 1\), the product \(\prod _{i=2}^{|{\varvec{A}}|} \ldots \) equals 1, by definition, and similarly for \({\varvec{B}}\) and \({\varvec{C}}\).)

We now split the sum in (4.3) into two parts:

defined as follows:

\(M_{s, t}^{N, \mathrm{NT}}(\phi , \psi )\) is the sum in (4.3) restricted to \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\) such that \({\varvec{A}}\cap {\varvec{B}}\cap {\varvec{C}}=\varnothing \), which we call the case with no triple intersections;

\(M_{s, t}^{N, \mathrm{T}}(\phi , \psi )\) is the sum in (4.3) restricted to \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\) such that \({\varvec{A}}\cap {\varvec{B}}\cap {\varvec{C}}\ne \varnothing \), which we call the case with triple intersections.

These parts are analyzed in the following propositions, which together imply Theorem 4.1.

Proposition 4.2

(Convergence with no triple intersections). Let the assumptions of Theorem 4.1 hold. Then

Proposition 4.3

(Triple intersections are negligible). Let the assumptions of Theorem 4.1 hold. Then

Proposition 4.2 is proved in the next section. The proof of Proposition 4.3 will be given later, see Sect. 7.

5 Convergence Without Triple Intersections

In this section, we prove Proposition 4.2 and several related results.

5.1 Proof of Proposition 4.2

We first derive a representation for \(M^{N, \mathrm{NT}}_{s, t}(\phi , \psi )\), which collects the terms in the expansion (4.3) with \({\varvec{A}}\cap {\varvec{B}}\cap {\varvec{C}}=\varnothing \).

Denote \({\varvec{D}}:={\varvec{A}}\cup {\varvec{B}}\cup {\varvec{C}}\subset \{s+1, \ldots , t-1\}\times {\mathbb {Z}}^2\), with \({\varvec{D}}=(D_1, \ldots , D_{|{\varvec{D}}|})\) and \(D_i=(d_i, w_i)\). Since \({{\mathbb {E}}}[\xi _z]=0\), the contributions to \(M^{N, \mathrm{NT}}_{s, t}(\phi , \psi )\) come only from \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\) where the points in \({\varvec{A}}\cup {\varvec{B}}\cup {\varvec{C}}\) pair up. In particular,

and each point \(D_j\) belongs to exactly two of the three sets \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\), and hence we can associate a vector \(\varvec{\ell }= (\ell _1, \ldots , \ell _k)\) of labels \(\ell _j \in \{AB,\, BC,\, AC\}\). Note that there is a one-to-one correspondence between \(({\varvec{A}}, {\varvec{B}}, {\varvec{C}})\) and \(({\varvec{D}}, \varvec{\ell })\). We also recall that \(\xi _{n,z} = e^{\beta _N \omega _{n,z} - \lambda (\beta _N)}-1\), hence \(\sigma _N^2={{\mathbb {E}}}[\xi _z^2]\), see (1.14). From (4.3) we can then write

where we agree that \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\) are implicitly determined by \(({\varvec{D}}, \varvec{\ell })\).

We now make a combinatorial observation. The sequence \(\varvec{\ell }=(\ell _1, \ldots , \ell _{k})\) consists of consecutive stretches \((\ell _1, \ldots , \ell _i)\), \((\ell _{i+1}, \ldots , \ell _j)\), etc., such that the labels are constant in each stretch and change from one stretch to the next. Any stretch, say \((\ell _p, \ldots , \ell _q)\), has a first point \(D_p = (a,x)\) and a last point \(D_q = (b,y)\). Let m denote the number of stretches and let \((a_i, x_i)\) and \((b_i, y_i)\), with \(a_i \leqslant b_i\), be the first and last points of the i-th stretch.

We now rewrite (5.1) by summing over \(m\in {\mathbb {N}}\), \((a_1, b_1, \ldots , a_m, b_m)\), and \((x_1, y_1, \ldots , x_m, y_m)\). The sum over the labels of \(\varvec{\ell }\) leads to a combinatorial factor \(3 \cdot 2^{m-1}\), because there are 3 choices for the label of the first stretch and 2 choices for the label of each subsequent stretch (which must differ from the label of the preceding one). Once we fix \((a_1, x_1)\) and \((b_1, y_1)\), summing over all possible configurations inside the first stretch then gives the factor

where we recall that \(U_N\) is defined in (2.15)–(2.16). A similar factor arises from each stretch, which leads to the following crucial identity (see Fig. 1):

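with the convention that \(\prod _{i=3}^m \{\ldots \} = 1\) for \(m=2\). Note that the sum starts with \(m=2\) because in (5.1) we have \(|{\varvec{A}}|, |{\varvec{B}}|, |{\varvec{C}}|\ge 1\): with a single stretch all points would carry the same label, say AB, and \({\varvec{C}}\) would be empty.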
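The count \(3 \cdot 2^{m-1}\) and the restriction \(m \geqslant 2\) can be checked by brute force. In the Python sketch below (all names ours), a configuration is reduced to its label sequence \(\varvec{\ell }\). Since any two distinct labels among AB, BC, AC together involve all three copies, a labeling leaves none of \({\varvec{A}}, {\varvec{B}}, {\varvec{C}}\) empty exactly when it has at least two stretches.

```python
from itertools import product

LABELS = ("AB", "BC", "AC")

def stretches(labels):
    """Split a label sequence into maximal runs (stretches) of equal labels."""
    runs = []
    for lab in labels:
        if runs and runs[-1][0] == lab:
            runs[-1].append(lab)
        else:
            runs.append([lab])
    return runs

def all_copies_used(labels):
    """True if each of the copies A, B, C receives at least one point."""
    return {c for lab in labels for c in lab} == {"A", "B", "C"}

def count_stretch_labelings(m):
    """Number of label choices for m stretches, adjacent stretches differing."""
    return sum(
        1
        for labs in product(LABELS, repeat=m)
        if all(labs[i] != labs[i + 1] for i in range(m - 1))
    )

for m in range(1, 6):
    print(m, count_stretch_labelings(m), 3 * 2 ** (m - 1))
```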

Fig. 1 Diagrammatic representation of the expansion (5.2) of the third moment. Curly lines between nodes \((a_i,x_i)\) and \((b_i,y_i)\) have weight \(U_N(b_i-a_i,y_i-x_i)\), coming from pairwise matchings between a single pair of copies AB, BC or AC, while solid, curved lines between nodes \((a_i,x_i)\) and \((b_{i-1},y_{i-1})\) or between \((a_i,x_i)\) and \((b_{i-2},y_{i-2})\) indicate a weight \(q_{b_{i-1},a_i}(y_{i-1},x_i)\) and \(q_{b_{i-2},a_i}(y_{i-2},x_i)\), respectively.

If we compare (5.2) with (4.5) and (4.2), we see that Proposition 4.2 follows from the following result and dominated convergence. \(\quad \square \)

Lemma 5.1

For \(m\ge 2\), let \(I^{(N, m)}_{Nt}(\phi , \psi ):=I^{(N, m)}_{0, Nt}(\phi , \psi )\) be defined as in (5.2), and let \({{\mathcal {I}}}^{(m)}_t(\phi , \psi )\) be defined as in (4.2). Then

Furthermore, for any \(C>0\) we have

The proof of Lemma 5.1 is given later, see Sect. 5.3. We first prove the next result on \({{\mathcal {I}}}^{(m)}_{t}(\phi , \psi )\), which will reveal a structure that will be used in the proof of Lemma 5.1.

Lemma 5.2

For \(\phi \in C_c({\mathbb {R}}^2)\), \(\psi \in C_b({\mathbb {R}}^2)\), and \({{\mathcal {I}}}^{(m)}_t(\phi , \psi )\) defined as in (4.2), we have:

5.2 Proof of Lemma 5.2

In light of Remark 1.3, we may assume \(t=1\). Recall that

where \(G_\vartheta (t, x):=G_\vartheta (t) g_{t/4}(x)\), with \(g_{t/4}(x)\) being the heat kernel, see (1.9), and \(G_\vartheta \) defined in (1.18). We also recall that \(\Phi _a(x):=(\phi * g_{a/2})(x)\) and \(\Psi _{1-b}(y):=(\psi * g_{(1-b)/2} )(y)\).

Note that we obtain an upper bound if we replace \(\phi \) by \(|\phi |\), so we may assume that \(\phi \geqslant 0\). Similarly, we may replace \(\psi \) by the constant \(|\psi |_\infty \), and we take \(|\psi |_\infty \leqslant 1\) for simplicity. We thus bound \({{\mathcal {I}}}^{(m)}(\phi , \psi ) \le {{\mathcal {I}}}^{(m)}(\phi , 1)\), with \(\phi \geqslant 0\), and we focus on \({{\mathcal {I}}}^{(m)}(\phi , 1)\).

We first show that, by integrating out the space variables, we can bound

Note that in (5.6) we have \(\Psi \equiv 1\) (by \(\psi \equiv 1\)) and \(y_m\) appears only in \(G_{\vartheta }(b_m-a_m, y_m-x_m)\). Then we can integrate out \(y_m\in {\mathbb {R}}^2\) to obtain

We are then left with two factors containing \(x_m\), and the corresponding integral is

having used \(\alpha \beta \leqslant \frac{1}{2}(\alpha ^2+ \beta ^2)\) in the last inequality.
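The spatial integrations rest on the Gaussian convolution identity \(\int _{{\mathbb {R}}^2} g_s(x-a)\, g_t(x-b)\, \mathrm {d}x = g_{s+t}(a-b) \leqslant \frac{1}{2\pi (s+t)}\), combined with \(s+t \geqslant 2\sqrt{st}\) (the inequality \(\alpha \beta \leqslant \frac{1}{2}(\alpha ^2+\beta ^2)\) quoted above). The Python sketch below checks this numerically for one choice of parameters. It assumes \(g_t(x) = \frac{1}{2\pi t} e^{-|x|^2/(2t)}\), the centered Gaussian density on \({\mathbb {R}}^2\) with covariance \(tI\); the numerical values are arbitrary stand-ins for \(a_i - b_{i-2}\), \(a_i - b_{i-1}\), \(y_{i-2}\) and \(y_{i-1}\), and the function names are ours.

```python
import numpy as np

def g(t, x):
    """Centered Gaussian density on R^2 with covariance t * I, evaluated at x."""
    x = np.asarray(x, dtype=float)
    return np.exp(-np.dot(x, x) / (2 * t)) / (2 * np.pi * t)

def convolution_integral(s, t, a, b, half_width=30.0, n=6001):
    """Numerically integrate g_s(x - a) * g_t(x - b) over x in R^2.

    Both densities factorize over the two coordinates, so the 2D integral is a
    product of two 1D integrals, each approximated by a Riemann sum on a grid."""
    grid = np.linspace(-half_width, half_width, n)
    dx = grid[1] - grid[0]
    result = 1.0
    for j in range(2):
        h_s = np.exp(-(grid - a[j]) ** 2 / (2 * s)) / np.sqrt(2 * np.pi * s)
        h_t = np.exp(-(grid - b[j]) ** 2 / (2 * t)) / np.sqrt(2 * np.pi * t)
        result *= np.sum(h_s * h_t) * dx
    return result

alpha, beta = 0.7, 0.2               # stand-ins for a_i - b_{i-2} and a_i - b_{i-1}
y_far = np.array([0.3, -0.1])        # stand-in for y_{i-2}
y_near = np.array([-0.2, 0.4])       # stand-in for y_{i-1}

numeric = convolution_integral(alpha / 2, beta / 2, y_far, y_near)
exact = g((alpha + beta) / 2, y_far - y_near)
bound = 1 / (2 * np.pi * np.sqrt(alpha * beta))
print(numeric, exact, bound)
```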

We now iterate. Integrating out each \(y_i\), for \(i \geqslant 2\), replaces \(G_{\vartheta }(b_i-a_i, y_i-x_i)\) by \(G_{\vartheta }(b_i-a_i)\), while integrating out each \(x_i\), for \(i\ge 3\), bounds the integral of \(g_{\frac{a_i - b_{i-2}}{2}}(x_i - y_{i-2}) \, g_{\frac{a_i - b_{i-1}}{2}}(x_i - y_{i-1})\) by \((2\pi \sqrt{(a_i-b_{i-1})(a_i-b_{i-2})})^{-1}\), exactly as for \(x_m\) above. This leads to

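We then bound \(\Phi _{a_2}(x_2) \leqslant |\phi |_\infty \), see (3.1), and perform the integrals over \(x_2\) and \(y_1\): the heat kernels in these variables integrate to 1, so that only the factor \(G_{\vartheta }(b_1-a_1)\) remains from the first stretch. Lastly, since \(0 \leqslant \Phi _{a_1} \leqslant |\phi |_\infty \) and \(\int _{{\mathbb {R}}^2} \Phi _{a_1}(x_1) \, \mathrm {d}x_1 = \int _{{\mathbb {R}}^2} \phi (z) \, \mathrm {d}z\), we have \(\int _{{\mathbb {R}}^2} \Phi _{a_1}(x_1)^2 \, \mathrm {d}x_1 \leqslant |\phi |_\infty \int _{{\mathbb {R}}^2} \phi (z) \, \mathrm {d}z\), which yields (5.7).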

We can now bound the quantity in Lemma 5.2 using (5.7), to get

It remains to show that \(J^{(m)}\) decays super-exponentially fast in m. For any \(\lambda >0\), we have

Denote \(u_i:=a_i-b_{i-1}\) and \(v_i:=b_i-a_i\) for \(1\le i\le m\), where \(b_0:=0\). Then observe that \(a_i-b_{i-2}=u_{i-1}+v_{i-1}+u_i\ge u_{i-1}+u_i\). Since \(b_i - b_{i-1} \geqslant v_i\), we can bound \(J^{(m)}\) by

where in the last inequality we have bounded \(G_{\vartheta }(\cdot ) \leqslant {\hat{G}}_{\vartheta }(\cdot )\), see (2.25), and we define

We will show the following results.

Lemma 5.3

There is a constant \({\mathsf {c}}_\vartheta < \infty \) such that for every \(\lambda \geqslant 1\)

Lemma 5.4

For all \(k\in {\mathbb {N}}\), the function \(\phi ^{(k)}(\cdot )\) is decreasing on (0, 1) and satisfies

With Lemmas 5.3 and 5.4, it follows from (5.10) that

If we choose \(\lambda =m\), then by (5.9) and the definition (5.12) of \(C_\lambda \) we get

which concludes the proof of Lemma 5.2. \(\quad \square \)

It remains to prove Lemmas 5.3 and 5.4.

Proof of Lemma 5.3

Recall that \({\hat{G}}_{\vartheta }(\cdot )\) is defined in (2.25) and it is decreasing. Then

hence

We have proved that (5.12) holds, provided we choose \({\mathsf {c}}_\vartheta := 2 \, c_{\vartheta }\). \(\quad \square \)
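A concrete instance may help. The splitting used in this proof is consistent with a majorant of the form \({\hat{G}}_\vartheta (t) = \frac{c_\vartheta }{t \log ^2(e^2/t)}\); we take this form as an assumption for illustration, since display (2.25) is not reproduced here. With it, \(\int _0^{1/\lambda } {\hat{G}}_\vartheta (t) \, \mathrm {d}t = \frac{c_\vartheta }{2+\log \lambda }\), and since \({\hat{G}}_\vartheta \) is decreasing, \(\int _{1/\lambda }^1 e^{-\lambda t}{\hat{G}}_\vartheta (t)\,\mathrm {d}t \leqslant \frac{1}{\lambda }{\hat{G}}_\vartheta (\frac{1}{\lambda }) = \frac{c_\vartheta }{(2+\log \lambda )^2}\), so the total is at most \(\frac{2c_\vartheta }{2+\log \lambda }\) for \(\lambda \geqslant 1\). The Python sketch below (names ours, \(c_\vartheta = 1\)) checks this numerically after the substitution \(t = e^{2-y}\), which removes the singularity at \(t=0\).

```python
import math

def laplace_G_hat(lam, c=1.0, y_max=2000.0, n=100_000):
    """Numerical estimate of int_0^1 exp(-lam * t) * G_hat(t) dt, where
    G_hat(t) = c / (t * log(e**2 / t) ** 2) is an assumed stand-in for (2.25).

    With t = exp(2 - y) the integral becomes
    c * int_2^inf exp(-lam * exp(2 - y)) / y**2 dy: trapezoidal rule on
    [2, y_max], plus the bound c / y_max for the remaining tail."""
    h = (y_max - 2.0) / n

    def f(y):
        return math.exp(-lam * math.exp(2.0 - y)) / y ** 2

    interior = sum(f(2.0 + i * h) for i in range(1, n))
    trapezoid = h * (0.5 * f(2.0) + interior + 0.5 * f(y_max))
    return c * (trapezoid + 1.0 / y_max)

for lam in (1.0, 10.0, 1e3, 1e6):
    print(lam, laplace_G_hat(lam), 2.0 / (2.0 + math.log(lam)))
```

The estimate decays only like \(1/\log \lambda \), which is why \(C_\lambda \to 0\) slowly but surely as \(\lambda \to \infty \).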

Proof of Lemma 5.4

The second inequality in (5.13) follows from \(\sum _{i=0}^k \frac{x^i}{i!}\le e^x\).

Let us prove the first inequality in (5.13). Recall the definition (5.11) of \(\phi ^{(k)}\). Then

To iterate this argument and bound \(\phi ^{(k)}\), we claim that

for suitable choices of the coefficients \(c_{k,i}\). For \(k=1\), we see from (5.17) that

Inductively, we assume that (5.18) holds for \(k-1\) and we will deduce it for k. Note that plugging (5.18) for \(k-1\) into (5.11) gives

To identify \(c_{k, i}\) for \(0\le i\le k\), we need the following lemma, proved later.

Lemma 5.5

For all \(k \in {\mathbb {N}}_0\), we have

If we plug (5.21) with \(k=j\) into (5.20) we get that, for all \(v \in (0,1)\),

This shows that (5.18) indeed holds, with

We have the following combinatorial bound on the coefficients \(c_{k, i}\), which we prove later by comparing with the number of paths for a suitable random walk.

Lemma 5.6

For every \(k\in {\mathbb {N}}\) and \(i \in \{0,\ldots ,k\}\) we have \(c_{k, i} \leqslant 32^k\).

Plugging this bound into (5.18) we obtain, for all \(k\in {\mathbb {N}}\) and \(v \in (0,1)\),

which is the first inequality in (5.13). This concludes the proof of Lemma 5.4. \(\quad \square \)

It remains to prove Lemmas 5.5 and 5.6.

Proof of Lemma 5.5

By a change of variable \(s=vz\),

Let us look at B: the change of variable \(z = v^{\alpha -1}\), with \(\alpha \in (0,1)\), gives

We now look at A: the change of variable \(z = x \, e^2 / v\), with \(x \in (0, \frac{v}{e^2})\), followed by \(x = e^{-2y}\), with \(y \in (\frac{1}{2} \log \frac{e^2}{v}, \infty )\), yields

Let \(({\varvec{N}}_t)_{t\ge 0}\) be a Poisson process with intensity one, and let \((X_i)_{i\ge 1}\) denote its inter-arrival times (the waiting times between consecutive jumps), which are i.i.d. exponential variables with parameter one. For all \(t \geqslant 0\) we can write

Choosing \(t = \frac{1}{2}\log \frac{e^2}{ v}\), it follows that

We have thus shown that

which coincides with the RHS of (5.21). \(\quad \square \)
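The Poisson-process step rests on a standard identity: the time of the \((k+1)\)-st jump is \(X_1+\cdots +X_{k+1}\), a Gamma\((k+1,1)\) variable, and \(\{{\varvec{N}}_t \leqslant k\} = \{X_1+\cdots +X_{k+1} > t\}\), whence \(e^{-t}\sum _{i=0}^k \frac{t^i}{i!} = \int _t^\infty \frac{y^k e^{-y}}{k!}\,\mathrm {d}y\). We state this as background, since the display following "we can write" is not reproduced here. The Python sketch below (names ours) checks the identity numerically with Simpson's rule.

```python
import math

def poisson_cdf(k, t):
    """P(N_t <= k) for a Poisson process N with intensity one."""
    return math.exp(-t) * sum(t ** i / math.factorial(i) for i in range(k + 1))

def gamma_tail(k, t, n=20_000):
    """P(X_1 + ... + X_{k+1} > t) = int_t^inf y**k e**(-y) / k! dy for i.i.d.
    Exp(1) variables, by Simpson's rule on [t, t + 60 + 10k]; the neglected
    tail beyond that point is far below floating-point precision."""
    upper = t + 60.0 + 10.0 * k
    h = (upper - t) / n                     # n must be even for Simpson's rule

    def f(y):
        return y ** k * math.exp(-y) / math.factorial(k)

    odd = sum(f(t + (2 * i - 1) * h) for i in range(1, n // 2 + 1))
    even = sum(f(t + 2 * i * h) for i in range(1, n // 2))
    return h / 3 * (f(t) + 4 * odd + 2 * even + f(upper))

for k in (0, 2, 5):
    print(k, poisson_cdf(k, 1.5), gamma_tail(k, 1.5))
```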

Proof of Lemma 5.6

We iterate the recursion relation (5.22), to get

Since \(c_{1, 1} = 2\) and \(c_{1, 0} = 0\), see (5.19), we can restrict to \(j_1 = 1\). Also observe that

hence we can reverse the order of the sums in (5.27) and write

where \(|{{\mathcal {S}}}_{k}(i)|\) denotes the cardinality of the set

In words, \({{\mathcal {S}}}_{k}(i)\) is the set of non-negative integer-valued paths \((j_1, \ldots , j_k)\) that start from \(j_1 = 1\), arrive at \(j_k = i\), and can make upward jumps of size at most 1, while the downward jumps can be of arbitrary size (with the constraint that the path is non-negative).

To complete the proof, it remains to show that

We define a correspondence which associates to any path \(\varvec{j} = (j_1, \ldots , j_k) \in {{\mathcal {S}}}_{k}(i)\) a nearest-neighbor path \(\varvec{\ell } = (\ell _1, \ldots , \ell _n)\), with length \(n = n(\varvec{j}) \in \{k, \ldots , 2k\}\), with increments in \(\{-1,0, 0^*,+1\}\), where by \(0^*\) we mean an increment of size 0 with an extra label “\(*\)” (which makes the map injective). The correspondence is simple: whenever the path \(\varvec{j}\) has a downward jump (which can be of arbitrary size), we transform it into a sequence of downward jumps of size 1, followed by an increment \(0^*\).

Note that if \(m = m(\varvec{j})\) denotes the number of downward jumps in the path \(\varvec{j}\), then the new path \(\varvec{\ell } = (\ell _1, \ldots , \ell _n)\) has length

where \(\sigma _i\) is the size of the i-th downward jump of \(\varvec{j}\). The total size of downward jumps is

Defining \(\Delta ^+(\varvec{j}) := \sum _{i=1}^{k-1} (j_{i+1}-j_i)^+\), we have

However \(\Delta ^+(\varvec{j}) \leqslant k-1\), because the upward jumps are of size at most 1, hence

which shows that \(n = n(\varvec{j}) \leqslant 2k\), as we claimed.

Note that the correspondence \(\varvec{j} \mapsto \varvec{\ell }\) is injective: the original path \(\varvec{j}\) can be reconstructed from \(\varvec{\ell }\), thanks to the labeled increments \(0^*\), which distinguish consecutive downward jumps from a single downward jump with the same total length. Since the path \(\varvec{\ell } = (\ell _1, \ldots , \ell _n)\) has \(n-1\) increments, each of which takes one of four possible values, we get the desired estimate:

\(\square \)
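For small k, the injectivity of \(\varvec{j} \mapsto \varvec{\ell }\) and the bound on its length can be checked exhaustively. The Python sketch below (names ours) enumerates \({{\mathcal {S}}}_k(i)\) as described above, encodes each path as its sequence of increments in \(\{-1, 0, 0^*, +1\}\), and checks that distinct paths receive distinct codes, that each code has at most \(2k-1\) increments (so \(n \leqslant 2k\)), and hence that \(|{{\mathcal {S}}}_k(i)| \leqslant 4^{2k-1}\).

```python
def paths(k, i):
    """All paths (j_1, ..., j_k) in S_k(i): non-negative integers, j_1 = 1, j_k = i,
    upward jumps of size at most 1, downward jumps of arbitrary size."""
    result = []

    def extend(path):
        if len(path) == k:
            if path[-1] == i:
                result.append(tuple(path))
            return
        for nxt in range(path[-1] + 2):     # any value in {0, ..., current + 1}
            extend(path + [nxt])

    extend([1])
    return result

def encode(path):
    """Increments of the nearest-neighbor path: a downward jump of size s becomes
    s increments "-1" followed by one "0*"; other increments are copied."""
    steps = []
    for a, b in zip(path, path[1:]):
        if b == a + 1:
            steps.append("+1")
        elif b == a:
            steps.append("0")
        else:
            steps.extend(["-1"] * (a - b) + ["0*"])
    return tuple(steps)

for k in range(1, 7):
    all_paths = [p for i in range(k + 1) for p in paths(k, i)]
    longest = max(len(encode(p)) for p in all_paths)
    print(k, len(all_paths), longest, 2 * k - 1)
```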

5.3 Proof of Lemma 5.1

We follow the same strategy as in the proof of Lemma 5.2.

We first prove the exponential bound (5.4). We recall that \(I^{(N, m)}_{Nt}(\phi , \psi ):=I^{(N, m)}_{0, Nt}(\phi , \psi )\), see (5.2). We may take \(t=1\), \(\phi \ge 0\), and \(\psi \equiv 1\), so that the last terms in (5.2) are \(q^N_{b_{m-1}, t}(y_{m-1}, \psi ) \equiv 1\), \(q^N_{b_{m}, t}(y_{m}, \psi ) \equiv 1\). We can thus rewrite (5.2) as follows:

Similar to (5.7), we first prove the following bound:

for suitable constants \(C_\phi , c < \infty \). We first note that \(y_m\) appears in (5.30) only in the term \(U_N(b_m-a_m, y_m-x_m)\) and hence we can sum it out as

We next sum over \(x_m\): since \(q_{s,t}(x,y) \leqslant \sup _{z} q_{t-s}(z) \leqslant \frac{c}{t-s}\), see (2.3) and (1.8), we have

We can now iterate, integrating out \(y_i\) for \(i \geqslant 2\) and \(x_i\) for \(i \geqslant 3\), to obtain

After bounding \(q^N_{0,a_2}(\phi , x_2) \leqslant |\phi |_\infty \), see (2.8), the sum over \(x_2\) gives 1, because \(q_{b_1, a_2}(y_1,\cdot )\) is a probability kernel. Then the sum over \(y_1\) gives \(U_N(b_1- a_1)\). Finally, the sum over \(x_1\) gives

for a suitable \(c_\phi < \infty \), because \(\phi \) has compact support. This completes the proof of (5.31).
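The local bound \(\sup _z q_n(z) \leqslant \frac{c}{n}\) used above is easy to observe numerically. The Python sketch below (names ours) computes the law of the simple random walk on \({\mathbb {Z}}^2\) by repeated convolution with the step distribution and records \(n \sup _z q_n(z)\). In this range the ratio stays below \(2/\pi \), the constant suggested by the local central limit theorem together with the parity factor 2.

```python
import numpy as np

def sup_times_n(n_max):
    """Return [n * max_z q_n(z) for n = 1, ..., n_max] for simple random walk on Z^2."""
    size = 2 * n_max + 1            # the walk never leaves the box [-n_max, n_max]^2
    q = np.zeros((size, size))
    q[n_max, n_max] = 1.0           # q_0 = point mass at the origin
    ratios = []
    for n in range(1, n_max + 1):
        new = np.zeros_like(q)
        new[1:, :] += q[:-1, :]     # step +e_1
        new[:-1, :] += q[1:, :]     # step -e_1
        new[:, 1:] += q[:, :-1]     # step +e_2
        new[:, :-1] += q[:, 1:]     # step -e_2
        q = new / 4.0
        ratios.append(n * q.max())
    return ratios

ratios = sup_times_n(200)
print(ratios[:4], ratios[-1], 2 / np.pi)
```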

Next we bound \(J^{(N,m)}\) in (5.31), similarly to the continuum analogue (5.10). Namely, we denote \(u_i=a_i-b_{i-1}\) and \(v_i:=b_i-a_i\) for \(1\le i\le m\), with \(b_0:=0\), we insert the factor \(e^{\lambda } \prod _{i=1}^m e^{-\lambda (\frac{v_i}{N})}>1\), and then we use \(a_i-b_{i-2} \geqslant u_{i-1}+u_i\) to obtain the bound

Note that \(\sigma _N^2 \leqslant \frac{c_1}{\log N}\), see (1.14), and \(U_N(u) \leqslant c_2 \, (\mathbb {1}_{\{u=0\}} + \frac{\log N}{N} \, {\hat{G}}_{\vartheta }(\frac{u}{N}) )\), see (2.21) and (2.25). Since \({\hat{G}}_\vartheta (\cdot )\) is decreasing, we can bound the Riemann sum by the integral and get

where in the last inequality we have applied (5.12).
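Bounding the Riemann sum by the integral only uses monotonicity: for a decreasing f on \((0,1]\), \(\frac{1}{N} f(\frac{u}{N}) \leqslant \int _{(u-1)/N}^{u/N} f(t)\,\mathrm {d}t\), so \(\frac{1}{N}\sum _{u=1}^N f(\frac{u}{N}) \leqslant \int _0^1 f(t)\,\mathrm {d}t\). The Python sketch below illustrates this with the hypothetical majorant \({\hat{G}}(t) = \frac{1}{t\log ^2(e^2/t)}\), an assumed stand-in for (2.25) whose integral over \((0,1)\) equals \(\frac{1}{2}\) (substitute \(y = \log (e^2/t)\)), both without and with the weight \(e^{-\lambda t}\). The function names are ours.

```python
import math

def G_hat(t):
    """Assumed decreasing stand-in for the majorant in (2.25), with constant 1."""
    return 1.0 / (t * math.log(math.e ** 2 / t) ** 2)

def weighted(t, lam=50.0):
    """exp(-lam * t) * G_hat(t): a product of decreasing positive factors."""
    return math.exp(-lam * t) * G_hat(t)

def right_riemann_sum(f, N):
    """(1/N) * sum_{u=1}^{N} f(u/N) over the grid points u/N in (0, 1]."""
    return sum(f(u / N) for u in range(1, N + 1)) / N

for N in (10, 1_000, 100_000):
    print(N, right_riemann_sum(G_hat, N), "<= 0.5")
print(right_riemann_sum(weighted, 10_000), "<=", 2.0 / (2.0 + math.log(50.0)))
```

The second comparison uses the bound \(\int _0^1 e^{-\lambda t}{\hat{G}}(t)\,\mathrm {d}t \leqslant \frac{2}{2+\log \lambda }\), valid for this particular \({\hat{G}}\).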

The multiple sum over the \(u_i\)’s in (5.36) is bounded by the iterated integral in (5.10), by monotonicity (note that if we replace \(u_i\) by \(N u_i\), with \(u_i \in \frac{1}{N}{\mathbb {Z}}\cap (0,1)\), then we get the correct prefactor \(1/N^{m}\), thanks to the term \(1/N^2\) in (5.36)). Then

because the integral is at most \(32^m\), by (5.13) (see also (5.14)). Since \(C_\lambda =\frac{{\mathsf {c}}_\vartheta }{2+\log \lambda }\), see (5.12), if we choose \(\lambda \) and N large enough, then it is clear by (5.38) that \(J^{(N,m)}\) decays faster than any exponential in m. This proves (5.4).

We next prove (5.3), for simplicity with \(t=1\). This is easily guessed because \(I^{(N, m)}_{1}(\phi , \psi )\) (see (5.2)) is close to a Riemann sum for \(\pi ^m{{\mathcal {I}}}^{(m)}_{1}(\phi , \psi )\) (see (4.2)), by the asymptotic relations

see (1.10), (1.14), (3.4) and (1.8), (2.22). We stress that, plugging (5.39)–(5.40) into (5.2), we obtain the correct prefactor \(1/N^{2m}\), thanks to the extra term \(1/N^3\) in (5.2).

To justify the replacements (5.39)–(5.40), we proceed by approximations. Henceforth \(m\ge 2\) is fixed. We define \({{\mathcal {I}}}^{(m), (\varepsilon )}_1(\phi , \psi )\) by restricting the integral in (4.2) to the set

where \(b_0 := 0\) and \(a_{m+1} := 1\). Note that \({{\mathcal {I}}}^{(m)}_1(\phi , \psi )-{{\mathcal {I}}}^{(m),(\varepsilon )}_1(\phi , \psi )\) is small for \(\varepsilon > 0\) small, simply because the integral defining \({{\mathcal {I}}}^{(m)}_1(\phi , \psi )\) is finite.

We similarly define \(I^{(N,m),(\varepsilon )}_N(\phi , \psi )\) by restricting the sum in (5.2) to the set

where \(b_0 := 0\) and \(a_{m+1} := N\). The difference \(I^{(N,m)}_N(\phi , \psi )-I^{(N,m),(\varepsilon )}_N(\phi , \psi )\) is bounded by the sum in (5.31) restricted to the complementary set of (5.42). By the uniform bound (2.21), this sum is bounded by the integral in (5.7) restricted to the complementary set of (5.41). Then \(I^{(N,m)}_N(\phi , \psi )-I^{(N,m),(\varepsilon )}_N(\phi , \psi )\) is small, uniformly in large N, for \(\varepsilon >0\) small.

As a consequence, to prove (5.3) it suffices to show that

We next make a second approximation. For large \(M > 0\), we define \({{\mathcal {I}}}^{(m),(\varepsilon , M)}_1(\phi , \psi )\) by further restricting the integral in (4.2) to the bounded set

We similarly define \(I^{(N,m),(\varepsilon ,M)}_N(\phi , \psi )\), by further restricting the sum in (5.2) to the set

Clearly, \(\lim _{M\rightarrow \infty } {{\mathcal {I}}}^{(m),(\varepsilon , M)}_1(\phi , \psi ) = {{\mathcal {I}}}^{(m),(\varepsilon )}_1(\phi , \psi )\). We claim that, analogously,

Then we can complete the proof of Lemma 5.1: the asymptotic relations (5.39) and (5.40) hold uniformly on the restricted sets (5.42) and (5.44), so by dominated convergence

It remains to prove (5.45). We can upper bound the difference in (5.45) as in (5.31)–(5.34): we sum out the spatial variables recursively, starting from \(y_m\), then \(x_m\), then \(y_{m-1}\), etc.

When we sum out \(y_m\), if \(|y_m-x_m|>M \sqrt{b_m-a_m}\), then by (5.32) and (2.23) we pick up at most a fraction \(\delta (M) \leqslant C / M^2\) of the upper bound in (5.34). The same applies when we sum out \(y_i\) for \(2 \leqslant i \leqslant m-1\), if \(|y_i-x_i|>M\sqrt{b_i-a_i}\).

When we sum out \(x_m\), if \(|x_m-y_{m-1}|>M\sqrt{a_m-b_{m-1}}\), then we restrict the sum in (5.33) accordingly, and we pick up again at most a fraction \(\delta (M) \leqslant 1 /M^2\) of the upper bound in (5.34), simply because \(\sum _{|x|> M \sqrt{n}} q_n(x) = \mathrm P(|S_n| > M \sqrt{n}) \leqslant 1 / M^2\). The same applies when we sum out \(x_i\) for \(3 \leqslant i \leqslant m-1\).
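The tail estimate is Chebyshev's inequality applied to \(|S_n|^2\), using \({{\,\mathrm{\mathrm E}\,}}[|S_n|^2] = n\) for the simple random walk on \({\mathbb {Z}}^2\) (each step has length 1, and the steps are centered and independent). The Python sketch below (names ours) computes the exact law of \(S_n\) for one value of n and compares the tail probability with \(1/M^2\).

```python
import numpy as np

def srw_law(n):
    """Exact law of simple random walk on Z^2 after n steps, on the box [-n, n]^2."""
    q = np.zeros((2 * n + 1, 2 * n + 1))
    q[n, n] = 1.0
    for _ in range(n):
        new = np.zeros_like(q)
        new[1:, :] += q[:-1, :]
        new[:-1, :] += q[1:, :]
        new[:, 1:] += q[:, :-1]
        new[:, :-1] += q[:, 1:]
        q = new / 4.0
    return q

def tail_and_second_moment(n, M):
    """Return P(|S_n| > M sqrt(n)) and E|S_n|^2, computed from the exact law."""
    q = srw_law(n)
    coords = np.arange(-n, n + 1)
    r2 = coords[:, None] ** 2 + coords[None, :] ** 2    # |x|^2 on the grid
    tail = q[r2 > M ** 2 * n].sum()
    return tail, (q * r2).sum()

n = 100
for M in (1.0, 1.5, 2.0, 3.0):
    tail, second = tail_and_second_moment(n, M)
    print(M, tail, 1 / M ** 2, second)
```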

The same argument applies to the sums over \(x_2\) and \(y_1\), see the lines following (5.34).

For the last sum over \(x_1\), if \(|x_1| > M \sqrt{N}\), by (2.8) and the fact that \(\phi \) has compact support, we pick up at most a fraction \(\delta (M) = O(1/M^2)\) of the sum (5.35).

Since for fixed m, there are only finitely many cases that violate (5.44), while \(\delta (M) \rightarrow 0\) as \(M \rightarrow \infty \), then (5.45) follows readily. \(\quad \square \)

6 Further Bounds Without Triple Intersections

We recall that the centered third moment \({{\mathbb {E}}}\big [ \big ( Z^{N, \beta _N}_{s,t}(\phi , \psi ) - {{\mathbb {E}}}[Z^{N, \beta _N}_{s,t}(\phi , \psi )] \big )^3 \big ]\) of the partition function averaged over both endpoints admits the expansion (4.3). We then denoted by \(M^{N, \mathrm{NT}}_{s, t}(\phi , \psi )\) the contribution to (4.3) coming from configurations with no triple intersections, see (4.4).

We now consider the partition functions \(Z^{N, \beta _N}_{s, t}(w, \psi )\), \(Z^{N, \beta _N}_{s, t}(\phi , z)\) averaged over one endpoint, see (2.4), (2.5), and also the point-to-point partition function \(Z^{\beta _N}_{s, t}(w, z)\), see (2.1) (we sometimes write \(Z^{N, \beta _N}_{s, t}(w, z)\), even though it carries no explicit dependence on N).

The centered third moment \({{\mathbb {E}}}\big [ \big ( Z^{N, \beta _N}_{s,t}(*, \dagger ) - {{\mathbb {E}}}[Z^{N, \beta _N}_{s,t}(*, \dagger )] \big )^3 \big ]\) for \(* \in \{\phi , w\}\), \(\dagger \in \{\psi , z\}\) can be written as in (4.3), starting from the polynomial chaos expansions (2.11)–(2.12). In analogy with (4.4), we decompose

where \(M^{N, \mathrm{T}}_{s, t}(*, \dagger )\) and \(M^{N, \mathrm{NT}}_{s, t}(*, \dagger )\) are the contributions with and without triple intersections, respectively. In this section we prove the following bounds, which will be used to prove Proposition 4.3.

Lemma 6.1

(Bounds without triple intersections). Let \(\phi \in C_c({\mathbb {R}}^2)\), \(\psi \in C_b({\mathbb {R}}^2)\) and \(w,z \in {\mathbb {Z}}^2\). For any \(\varepsilon >0\), as \(N\rightarrow \infty \), we have

We prove relations (6.2)–(6.4) separately below. For the quantity \(M^{N, \mathrm{NT}}_{s, t}(*, \dagger )\), when both arguments \(*, \dagger \) are functions, we derived the representation (5.2). Analogous representations hold when one of the arguments \(*, \dagger \) is a point. For instance, in the point-to-point case:

Note that in contrast to (5.2) there is no factor \(N^{-3}\), because the definition of \(Z^{\beta _N}_{s,t}(w, z)\), unlike \(Z^{N,\beta _N}_{s,t}(\phi , \psi )\), contains no such factor, cf. (2.1) and (2.6).

The identity (6.5) also holds for \(M^{N, \mathrm{NT}}_{s, t}(\phi , z)\) (replace \(q_{s,a_i}(w,x_i)\) by \(q^N_{s,a_i}(\phi ,x_i)\), \(i=1,2\)) and for \(M^{N, \mathrm{NT}}_{s, t}(w, \psi )\) (replace \(q_{b_{i},t}(y_{i}, z)\) by \(q^N_{b_{i},t}(y_{i}, \psi )\), \(i=m-1,m\)).

Proof of (6.2)

To estimate \(I^{(N, m)}_{0, N}(w, \psi )\), we replace \(\psi \) by the constant \(|\psi |_\infty \), and we take \(|\psi |_\infty \leqslant 1\). We then focus on \(I^{(N, m)}_{0, N}(w, 1)\), and we can set \(w=0\), by translation invariance. By the analogue of (6.5) (note that \(q^N_{b_i,t}(y_i,\psi ) \equiv 1\) for \(\psi \equiv 1\)), we get

where we stress that the product starts from \(i=2\) and we set \(b_0:=0\) and \(y_0:= 0\). By the definition of \(U_N\) in (2.15)–(2.16), we have the following identity, for fixed \(b_1 \in {\mathbb {N}}\), \(y_1 \in {\mathbb {Z}}^2\):

Therefore we can rewrite (6.6) as

We now sum out the spatial variables \(y_m\), \(x_m\), ..., \(y_2\), \(x_2\), \(y_1\), arguing as in (5.32)–(5.33), to get the following upper bound, analogous to (5.31), for a suitable \(c < \infty \):

Then we set \(u_i := a_i-b_{i-1}\), \(v_i:=b_i-a_i\) for \(2\le i\le m\), and we rename \(u_1 := b_1\). This allows us to bound \(a_i - b_{i-2} \geqslant u_i + u_{i-1}\) for all \(i \geqslant 2\) (including \(i=2\), since \(a_i - b_{i-2} = a_2 \geqslant u_2 + b_1\)). Then, for \(\lambda > 0\), we insert the factor \(e^{\lambda } \prod _{i=2}^m e^{-\lambda (\frac{v_i}{N})}>1\) and we estimate, as in (5.36),

The first parenthesis is \(\leqslant c \big ( \tfrac{1}{\log N} + C_\lambda \big )\), see (5.37). Then we replace \(u_i\) by \(N u_i\), with \(u_i \in \frac{1}{N}{\mathbb {Z}}\), and bound Riemann sums by integrals, by monotonicity. This yields (for a possibly larger c)

The integral equals \(\phi ^{(m-1)}(u_1)\), see (5.11). We bound \(U_N(N u_1) \leqslant c_2 \, \frac{\log N}{N} \, {\hat{G}}_{\vartheta }(u_1) \) by (2.21) and (2.25), since \(u_1 > 0\). Recalling that \(\phi ^{(m-1)}(\cdot )\) is decreasing, we get

where for the last inequality, recalling that \({\hat{G}}_{\vartheta }(\cdot )\) is decreasing, we bounded the Riemann sum in brackets by the integral \(\int _0^1 {\hat{G}}_{\vartheta }(u_1) \, \mathrm {d}u_1 \leqslant C_\lambda \), see (5.12).
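The step "bound Riemann sums by integrals, by monotonicity" can be sanity-checked on a stand-in. The sketch below uses the hypothetical decreasing function \(f(u) = 1/(u(2-\log u)^2)\) in place of \({\hat{G}}_{\vartheta }\) (whose actual definition, (2.25), is not reproduced here); it is integrable on (0, 1] with \(\int _0^1 f = \frac{1}{2}\) and \(\int _{1/n}^1 f = \frac{1}{2} - \frac{1}{2+\log n}\). For a decreasing f each term \(\frac{1}{n} f(\frac{k}{n})\) is at most \(\int _{(k-1)/n}^{k/n} f\), so the right-endpoint Riemann sum never exceeds the integral, and dropping the \(k=1\) term leaves a sum bounded by the integral over \([\frac{1}{n}, 1]\), which is the mechanism behind restricting the integration to \(u_i \geqslant \frac{1}{N}\).

```python
import math

def f_stand_in(u):
    # Hypothetical decreasing stand-in for G_theta-hat on (0, 1]; NOT the paper's function.
    return 1.0 / (u * (2.0 - math.log(u)) ** 2)

def riemann_sum(f, n, k_start=1):
    # Right-endpoint Riemann sum (1/n) * sum_{k=k_start}^{n} f(k/n) on the grid (1/n)Z in (0, 1].
    return sum(f(k / n) for k in range(k_start, n + 1)) / n

def integral_from(a):
    # Exact int_a^1 f_stand_in(u) du, because d/du [1 / (2 - log u)] = f_stand_in(u).
    return 0.5 - (1.0 / (2.0 - math.log(a)) if a > 0 else 0.0)

for n in (10, 100, 10_000):
    full = riemann_sum(f_stand_in, n)              # all grid points k = 1..n
    tail = riemann_sum(f_stand_in, n, k_start=2)   # grid points u >= 2/n only
    print(n, full, integral_from(0.0), tail, integral_from(1.0 / n))
```

Both sums stay below the corresponding integrals for every n, as monotonicity predicts.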

Putting together (6.5) and (6.12), we can finally estimate

and using the first inequality in (5.13) we obtain

Since \(\lim _{\lambda \rightarrow \infty } C_\lambda = 0\), see (5.12), given \(\varepsilon > 0\) we can fix \(\lambda \) large so that \(32 c \, C_\lambda < \frac{\varepsilon }{2}\). Then for large N the exponent of \((e^2 N)\) in the last term is \(< \varepsilon \), which proves (6.2). \(\quad \square \)

Proof of (6.3)

From the first line of (6.5) we can write

To estimate \(\sum _{1 \leqslant a \leqslant N} \sum _{z \in {\mathbb {Z}}^2} I^{(N, m)}_{0, a}(w, z)\), we use the representation (6.5) with \(s=0\) and \(t=a\). We may also set \(w = 0\) (by translation invariance). We first perform the sum over \(a_1\) and \(b_m\), using (6.7) and the symmetric relation

We then obtain

If we rename \(y_m:=z\) and \(b_m:=a\), then we see that (6.15) differs from (6.8) only in the factor \(\sigma _N^{2(m-2)}\) (instead of \(\sigma _N^{2(m-1)}\)) and in the presence of the last kernel \(q_{b_{m-1},a}(y_{m-1},z) = q_{b_{m-1},b_m}(y_{m-1},y_m)\). The latter can be estimated using (1.8):

for some suitable constant c. As in (6.9), we first sum out the spatial variables, getting

Then we set \(u_1 := b_1\) and \(u_i := a_i-b_{i-1}\), \(v_i:=b_i-a_i\) for \(2\le i\le m\), which allows us to bound \(a_i - b_{i-2} \geqslant u_i + u_{i-1}\) for \(i \geqslant 2\), as well as \(b_m - b_{m-1} \geqslant u_m\). Then, for \(\lambda > 0\), we insert the factor \(e^{\lambda } \prod _{i=2}^m e^{-\lambda (\frac{v_i}{N})}>1\) and, by (5.37), we obtain the following analogue of (6.10):

where the extra \(\log N\) comes from having \(\sigma _N^{2(m-2)}\) instead of \(\sigma _N^{2(m-1)}\) (by (1.14) and (1.10)).
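The elementary inequalities behind the substitution \(u_1 := b_1\), \(u_i := a_i - b_{i-1}\), \(v_i := b_i - a_i\) can be checked by brute force. The sketch below draws random time configurations \(1 \leqslant b_1 < a_2 \leqslant b_2 < \cdots < a_m \leqslant b_m \leqslant N\) (the ordering of the time variables is an assumption made here for illustration), sets \(b_0 := 0\), and asserts \(a_i - b_{i-2} \geqslant u_i + u_{i-1}\) for \(i \geqslant 2\), \(b_m - b_{m-1} \geqslant u_m\), and that the inserted factor \(e^{\lambda }\prod _{i=2}^m e^{-\lambda v_i/N}\) exceeds 1 (because \(\sum _{i=2}^m v_i \leqslant b_m - a_2 < N\)). The helper names `random_times`, `check_substitution` and `run_checks` are hypothetical.

```python
import math
import random

def random_times(m, N, rng):
    """Hypothetical sampler for 1 <= b_1 < a_2 <= b_2 < ... < a_m <= b_m <= N (lists indexed from 1)."""
    pts = sorted(rng.sample(range(1, N + 1), 2 * m - 1))  # strictly increasing points
    a, b = [None, None], [0, pts[0]]                       # a_1 is not used; b[0] plays the role of b_0 := 0
    for i in range(2, m + 1):
        a.append(pts[2 * i - 3])
        b.append(pts[2 * i - 2])
        if rng.random() < 0.5:
            b[i] = a[i]                                    # also allow b_i = a_i (v_i = 0)
    return a, b

def check_substitution(a, b, N, lam):
    m = len(b) - 1
    u = [None, b[1]] + [a[i] - b[i - 1] for i in range(2, m + 1)]  # u_1 := b_1, u_i := a_i - b_{i-1}
    v = [None, None] + [b[i] - a[i] for i in range(2, m + 1)]      # v_i := b_i - a_i
    for i in range(2, m + 1):
        assert a[i] - b[i - 2] >= u[i] + u[i - 1]
    assert b[m] - b[m - 1] >= u[m]
    inserted = math.exp(lam) * math.prod(math.exp(-lam * v[i] / N) for i in range(2, m + 1))
    assert inserted > 1.0

def run_checks(trials, N=1000, lam=5.0, seed=0):
    rng = random.Random(seed)
    for _ in range(trials):
        m = rng.randint(2, 6)
        a, b = random_times(m, N, rng)
        check_substitution(a, b, N, lam)
    return trials

print(run_checks(5000))
```

For \(i = 2\) the first inequality is an equality, since \(a_2 - b_0 = (a_2 - b_1) + b_1 = u_2 + u_1\); for \(i \geqslant 3\) the slack is exactly \(v_{i-1} \geqslant 0\).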

We now switch to macroscopic variables, replacing \(u_i\) by \(N u_i\), with \(u_i \in \frac{1}{N}{\mathbb {Z}}\cap (0,1)\), and bound \(U_N(N u_1) \leqslant c_1 \, \frac{\log N}{N} \, {\hat{G}}_{\vartheta }(u_1)\) since \(u_1 > 0\), by (2.21) and (2.25). We then replace the Riemann sum in brackets by the corresponding integrals, similar to (6.11), with an important difference (for later purposes): since \(u_i \in \frac{1}{N} {\mathbb {Z}}\) and \(u_i > 0\), we can restrict the integration to \(u_i \geqslant \frac{1}{N}\) (possibly enlarging the value of c). This leads to

where the factor \(\frac{\log N}{\sqrt{N}}\) comes from the estimate on \(U_N(N u_1)\) and from the last kernel \(1/\sqrt{u_m}\).

If we define \({\widehat{\phi }}^{(k)}(\cdot )\) as the following modification of (5.11):

then, recalling (5.12), we can rewrite (6.18) as follows:

Similar to Lemma 5.4, we have the following bound on \({\widehat{\phi }}^{(k)}\), which we prove later.

Lemma 6.2

For all \(k\in {\mathbb {N}}\), the function \({\widehat{\phi }}^{(k)}(v)\) is decreasing on (0, 1), and satisfies

We need to estimate the integral in (6.20), when we plug in the bound (6.21). We first consider the contribution from \(u < \frac{1}{\sqrt{N}}\). In this case \({\hat{G}}_{\vartheta }(u) \leqslant \frac{4c_{\vartheta }}{(\log N)^2} \frac{1}{u}\), see (2.25), hence

where we first made the change of variables \(e^2Nu=w\), and then \(w=e^{2s}\), and we denoted \(C = 8e c_{\vartheta }\) for short. Then it follows by (6.21) that

We then consider the contribution from \(u\ge \frac{1}{\sqrt{N}}\). Since \({\hat{G}}_{\vartheta }(u) \leqslant \frac{c_{\vartheta }}{u}\), we have

hence by (6.21)

By (6.13) and (6.20), we finally see that

with \(C' := 3 \cdot 32 c \). If we fix \(\lambda \) large enough, then for large N we have \(64 \, c \, C_{\lambda ,N} < 1\) (recall (6.20)), so the first sum in the RHS is finite, in agreement with our goal (6.3). We can estimate the second sum by

If we fix \(\lambda \) large enough, then for large N the exponent \(32 \, c \, C_{\lambda ,N}\) is smaller than \(\frac{1}{4}\), hence the last term is o(1) as \(N\rightarrow \infty \). This completes the proof of (6.3). \(\quad \square \)
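For the reader's convenience, the two substitutions used for the contribution from \(u < \frac{1}{\sqrt{N}}\) can be written out for a generic integrable function g (a placeholder for the integrand obtained from (6.21); the lower limit reflects the restriction \(u \geqslant \frac{1}{N}\)):

\[
\int _{1/N}^{1/\sqrt{N}} g(u)\,\frac{\mathrm {d}u}{u}
= \int _{e^2}^{e^2\sqrt{N}} g\Big (\frac{w}{e^2 N}\Big )\,\frac{\mathrm {d}w}{w}
= 2\int _{1}^{1+\frac{1}{4}\log N} g\Big (\frac{e^{2s}}{e^2 N}\Big )\,\mathrm {d}s ,
\]

since \(\frac{\mathrm {d}w}{w} = 2\,\mathrm {d}s\) and the endpoints \(w = e^2\), \(w = e^2\sqrt{N}\) correspond to \(s = 1\), \(s = 1 + \frac{1}{4}\log N\). In particular \(\log (e^2 N u) = 2s\), so powers of \(\log (e^2Nu)\) in the integrand become powers of s.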

In order to prove Lemma 6.2, we need the following analogue of Lemma 5.5.

Lemma 6.3

For all \(i\in {\mathbb {N}}_0\) and \(v\in \big (\frac{1}{N}, 1\big )\),

Proof

We can bound

For B, we make the change of variable \(u= \log (e^2Ns)\) to obtain

For A, we make the change of variable \(y=\frac{1}{2}\log (e^2Ns)\) and apply (5.25) to obtain

Combined with the bound for B, this gives precisely (6.23). \(\quad \square \)

Proof of Lemma 6.2

We follow the proof of Lemma 5.4. We first show that for all \(k\in {\mathbb {N}}\)

for suitable coefficients \({\hat{c}}_{k,i}\). For \(k=1\), note that by (6.19)

Therefore (6.26) holds for \(k=1\) with \({\hat{c}}_{1, 0}=0\) and \({\hat{c}}_{1, 1}=1\).

Assume that (6.26) has been established up to \(k-1\). Then

Applying Lemma 6.3, we obtain

This shows that (6.26) holds, provided the coefficients \({\hat{c}}_{k,i}\) satisfy the recursion

which differs from the recursion (5.22) for \(c_{k,i}\) by a missing factor of 2. Note that \({\hat{c}}_{1,1}\) here is also only half of \(c_{1,1}\) in (5.19). Therefore we have the identity \({\hat{c}}_{k,i} = 2^{-k} c_{k,i}\), and Lemma 5.6 gives the bound \({\hat{c}}_{k, i}\le 16^k\). Substituting this bound into (6.26) then proves Lemma 6.2. \(\quad \square \)
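The identity \({\hat{c}}_{k,i} = 2^{-k} c_{k,i}\) uses only linearity: if both families obey the same linear recursion except for an extra factor 2 in the one for \(c_{k,i}\), and the initial values differ by a factor 2, induction on k gives the claim (so a bound \(c_{k,i} \leqslant 32^k\) translates into \({\hat{c}}_{k,i} \leqslant 16^k\)). The sketch below checks this with exact rational arithmetic; the weight matrix is a hypothetical stand-in for the coefficients of (5.22), which are not reproduced here.

```python
from fractions import Fraction
import random

def iterate(weights, initial, k_max, factor):
    """x_{1,i} = initial[i];  x_{k,i} = factor * sum_j weights[i][j] * x_{k-1,j}  for k = 2..k_max."""
    x = {1: [Fraction(v) for v in initial]}
    for k in range(2, k_max + 1):
        x[k] = [factor * sum(w * xj for w, xj in zip(row, x[k - 1])) for row in weights]
    return x

rng = random.Random(1)
n, k_max = 6, 12
# Hypothetical non-negative rational weights, standing in for the coefficients of (5.22).
weights = [[Fraction(rng.randint(0, 9), rng.randint(1, 9)) for _ in range(n)] for _ in range(n)]

initial_hat = [0, 1] + [0] * (n - 2)             # c_hat_{1,0} = 0, c_hat_{1,1} = 1
initial = [2 * v for v in initial_hat]            # c_{1,1} = 2 * c_hat_{1,1}
c_hat = iterate(weights, initial_hat, k_max, factor=1)
c = iterate(weights, initial, k_max, factor=2)    # same recursion, with the extra factor 2

# Induction on k: c_hat_{k,i} = 2^{-k} c_{k,i} for every k and i.
print(all(c_hat[k][i] * 2**k == c[k][i] for k in c for i in range(n)))
```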

Proof of (6.4)

We start from the analogue of (6.5), with \(q_{s,a_1}(w,x_1), q_{s,a_2}(w,x_2)\) replaced by \(q^N_{s,a_1}(\phi ,x_1), q^N_{s,a_2}(\phi ,x_2)\). Applying relation (6.14), we can write

We rename \(y_m:=z\), \(b_m:=a\) and bound \(q_{b_{m-1},a}(y_{m-1},z) \leqslant (c\sqrt{b_m - b_{m-1}})^{-1}\), as in (6.16). Next we sum over the space variables \(y_m, x_m, \ldots \) until \(y_3, x_3, y_2\), as in (5.32)–(5.33), which has the effect of replacing \(U_N(b_i-a_i, y_i - x_i)\) by \(U_N(b_i-a_i)\) and \(q_{b_{i-2}, a_i}(y_{i-2},x_i) \, q_{b_{i-1}, a_i}(y_{i-1},x_i)\) by \(c \, (\sqrt{(a_i-b_{i-1})(a_i - b_{i-2})})^{-1}\). Then we bound \(q^N_{0,a_2}(\phi , x_2) \leqslant |\phi |_\infty \), see (2.8), after which the sum over \(x_2\) gives 1, the sum over \(y_1\) gives \(U_N(b_1- a_1)\), and the sum over \(x_1\) is bounded by \(c \, N\), as in (5.35). This leads us to bound the RHS of (6.29) by

We now set \(u_i := a_i-b_{i-1}\) and \(v_i:=b_i-a_i\) for \(1\le i\le m\), with \(b_0 := 0\), and bound \(a_i - b_{i-2} \geqslant u_i + u_{i-1}\), while \(b_m - b_{m-1} \geqslant u_m\). Then we insert the factor \(e^{\lambda } \prod _{i=1}^m e^{-\lambda (\frac{v_i}{N})}>1\), for \(\lambda > 0\), and by (5.37) we bound the last display by

which is an analogue of (6.17). The exponent of \((\tfrac{1}{\log N} + C_\lambda )\) equals m, because we have m factors \(U_N(b_i - a_i)\), and the extra \(\log N\) comes from having \(m-1\) powers of \(\sigma _N^2\).

We now switch to macroscopic variables, replacing \(u_i\) by \(N u_i\), with \(u_i \in \frac{1}{N}{\mathbb {Z}}\cap (0,1)\), and replace the Riemann sum in brackets by the corresponding integrals, where as in (6.18) we restrict the integration to \(u_i \geqslant \frac{1}{N}\) (possibly enlarging the value of c). This leads to

where the factor \(N^{\frac{3}{2}}\) arises by matching the normalization factor \(N^{-m}\) of the Riemann sum and the term \(N^{-(m-2)-\frac{1}{2}}\) generated by the square roots, when we set \(u_i \rightsquigarrow N u_i\).
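The power \(N^{\frac{3}{2}}\) can be traced explicitly. Assuming, as described above, that after the spatial sums the remaining time kernels are the \(m-2\) square-root factors for \(i = 3, \ldots , m\) together with the last kernel, and using (in macroscopic variables) \(a_i - b_{i-1} = N u_i\), \(a_i - b_{i-2} \geqslant N(u_i + u_{i-1})\) and \(b_m - b_{m-1} \geqslant N u_m\), we get, up to constants,

\[
\prod _{i=3}^{m} \frac{1}{\sqrt{(a_i-b_{i-1})(a_i-b_{i-2})}} \cdot \frac{1}{\sqrt{b_m-b_{m-1}}}
\;\leqslant \; N^{-(m-2)-\frac{1}{2}} \prod _{i=3}^{m} \frac{1}{\sqrt{u_i\,(u_i+u_{i-1})}} \cdot \frac{1}{\sqrt{u_m}} ,
\]

while rewriting the sum over the m variables \(u_1, \ldots , u_m\) as a Riemann sum with mesh \(\frac{1}{N}\) produces a factor \(N^{m}\). Altogether \(N^{m}\cdot N^{-(m-2)-\frac{1}{2}} = N^{\frac{3}{2}}\).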

Note that the variable \(u_1\) does not appear in the function to be integrated in (6.31), so the integral over \(u_1\) is at most 1. Recalling the definition (6.19) of \({\widehat{\phi }}^{(k)}\), we have

By Lemma 6.2, we have

Therefore, if we set \(C_{\lambda ,N} := \frac{1}{\log N} + C_\lambda \) as in (6.20), recalling (6.5) we get

Given \(\varepsilon > 0\) we can fix \(\lambda \) large so that \(32 c \, C_\lambda < \frac{\varepsilon }{2}\). Then we have \(C_{\lambda ,N} = \frac{1}{\log N} + C_\lambda < \frac{2}{3}\varepsilon \) for large N. This concludes the proof of (6.4). \(\quad \square \)

7 Bounds on Triple Intersections

In this section, we prove Proposition 4.3. First we derive a representation for \(M^{N, \mathrm{T}}_{s, t}(\phi , \psi )\), which denotes the sum in (4.3) restricted to \({\varvec{A}}\cap {\varvec{B}}\cap {\varvec{C}}\ne \varnothing \) (recall (4.4)).

We denote by \({\varvec{D}}=(D_1, \ldots , D_{|{\varvec{D}}|}):={\varvec{A}}\cap {\varvec{B}}\cap {\varvec{C}}\), with \(D_i=(d_i, w_i)\), the locations of the triple intersections. If we fix two consecutive triple intersections, say \(D_{i-1} = (a,w)\) and \(D_i = (b,z)\), the contribution to (4.3) is given by

where \(M^{N, \mathrm{NT}}_{a, b}(w, z)\) is defined in (6.1), together with \(M^{N, \mathrm{NT}}_{a, b}(\phi , z)\) and \(M^{N, \mathrm{NT}}_{a, b}(w, \psi )\). Then we obtain from (4.3) the following representation for \(M^{N, \mathrm{T}}_{s, t}(\phi , \psi )\) (where \({{\mathbb {E}}}[\xi ^3] := {{\mathbb {E}}}[\xi _{n,z}^3]\)):

To prove Proposition 4.3 we may assume \(t=1\), by Remark 1.3, and also \(\phi \ge 0\), \(\psi \ge 0\) (otherwise just replace \(\phi \) by \(|\phi |\) and \(\psi \) by \(|\psi |\) to obtain upper bounds). If we rename \((d_1, w_1) = (a,x)\) and \((d_{|\varvec{D}|}, w_{|\varvec{D}|}) = (b,y)\) in (7.1), we get the upper bound

where we set

Note that \({{\mathbb {E}}}[\xi ^3]\) actually depends on N, and vanishes as \(N\rightarrow \infty \). Indeed, recalling that \(\xi _{n,z} = e^{\beta _N \omega _{n,z} - \lambda (\beta _N)}-1\) and \(\lambda (\beta ) = \frac{1}{2}\beta ^2 +O(\beta ^3)\) as \(\beta \rightarrow 0\), see (2.10) and (1.1), we have

where the last equality holds by (1.14) and (1.10).
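The claim that \({{\mathbb {E}}}[\xi ^3]\) vanishes can be made concrete. Expanding the cube and using \({{\mathbb {E}}}[e^{k\beta \omega - k\lambda (\beta )}] = e^{\lambda (k\beta )-k\lambda (\beta )}\) gives \({{\mathbb {E}}}[\xi ^3] = e^{\lambda (3\beta )-3\lambda (\beta )} - 3e^{\lambda (2\beta )-2\lambda (\beta )} + 2 = \kappa _3\beta ^3 + O(\beta ^4)\), where \(\kappa _3 = {{\mathbb {E}}}[\omega ^3]\) (notation introduced here) is the third cumulant of the centered, unit-variance disorder; hence \({{\mathbb {E}}}[\xi ^3] = O(\beta _N^3)\), which is \(O((\log N)^{-3/2})\) when \(\beta _N^2\) is of order \(1/\log N\). The sketch evaluates the exact formula for two illustrative disorder laws (standard Gaussian, and a centered exponential), which are choices made here, not taken from the paper.

```python
import math

def third_moment_xi(lam, beta):
    """E[xi^3] for xi = exp(beta * omega - lam(beta)) - 1, with lam the log-moment generating function of omega.

    Expanding (X - 1)^3 and using E[X^k] = exp(lam(k * beta) - k * lam(beta)) gives
    E[xi^3] = expm1(lam(3b) - 3 lam(b)) - 3 * expm1(lam(2b) - 2 lam(b)); expm1 limits cancellation error.
    """
    A = lam(3 * beta) - 3 * lam(beta)
    B = lam(2 * beta) - 2 * lam(beta)
    return math.expm1(A) - 3 * math.expm1(B)

def lam_gaussian(beta):
    # omega ~ N(0, 1): lam(beta) = beta^2 / 2, third cumulant 0
    return 0.5 * beta**2

def lam_centered_exponential(beta):
    # omega = E - 1 with E ~ Exp(1): mean 0, variance 1, third cumulant 2; finite for beta < 1
    return -beta - math.log1p(-beta)

for beta in (0.1, 0.01, 0.001):
    print(beta,
          third_moment_xi(lam_centered_exponential, beta) / beta**3,  # tends to 2 as beta -> 0
          third_moment_xi(lam_gaussian, beta) / beta**4)              # tends to 3 as beta -> 0
```

For Gaussian disorder the third cumulant vanishes, so \({{\mathbb {E}}}[\xi ^3] = e^{3\beta ^2} - 3e^{\beta ^2} + 2\) is even of order \(\beta ^4\); in general the leading term is \(\kappa _3 \beta ^3\).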

Then, to prove Proposition 4.3, by the bound (7.2) it would suffice to show that

so that the series \(\sum _{n=0}^\infty \varrho _N^n = (1-\varrho _N)^{-1}\) is bounded. We are going to prove the following stronger result, which implies the bound \(|M^{N, \mathrm{T}}_{0, N}(\phi , \psi )| = o(N^{-1/2 + \eta })\), for any fixed \(\eta > 0\).

Lemma 7.1

The following relations hold as \(N\rightarrow \infty \), for any fixed \(\varepsilon >0\):

- (a) \(A_N = o(N^{\varepsilon -1/2})\);
- (b) \(B_N = o(N^\varepsilon )\);
- (c) \(\varrho _N = O((\log N)^{-1/2})\).

Before the proof, we recall that \({{\mathbb {E}}}\big [\big (Z^{N, \beta _N}_{a, b}(*, \dagger ) - q^N_{a, b}(*, \dagger )\big )^3\big ] = M^{N, \mathrm{T}}_{a, b}(*, \dagger ) + M^{N, \mathrm NT}_{a, b}(*, \dagger )\), for any \(* \in \{w, \phi \}\), \(\dagger \in \{z, \psi \}\), hence

Also note that \(M^{N, \mathrm NT}_{a, b}(*, \dagger )\ge 0\), see (4.3) and (6.5).

Proof of Lemma 7.1

We first prove point (b). By definition, see (2.7),

If we replace \(\psi \) by the constant 1 in the averaged partition function \(Z^{N,\beta _N}_{b, N}(y, \psi )\) we obtain the point-to-plane partition function \(Z^{\beta _N}_{N-b}(y)\), see (2.4) and (1.4). Then, by (1.32),

Lastly, by (6.2), we have

It suffices to plug these estimates into (7.5) with \(* = y\), \(\dagger = \psi \) and point (b) follows.

Next we prove point (a). First note that

where the last sum converges to \(\int \phi (x) \mathrm {d}x\) by Riemann sum approximation. Next note that we can bound \({{\,\mathrm{{\mathbb {V}}ar}\,}}\big (Z^{N, \beta _N}_{0,a}(\phi , x)\big ) \leqslant |\phi |_\infty ^2 \, {{\mathbb {E}}}[Z^{\beta _N}_a(x)^2] = O(\log N)\), arguing as in (7.6), hence

Lastly, by (6.4), we have

Plugging these estimates into (7.5) with \(* = \phi \) and \(\dagger = x\), point (a) follows.
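The Riemann-sum step in the proof of point (a) rests on the fact that, after rescaling space by \(\sqrt{N}\), each site of \({\mathbb {Z}}^2\) carries area \(\frac{1}{N}\). A minimal numerical sketch, assuming the sum has the plain form \(\frac{1}{N}\sum _{x\in {\mathbb {Z}}^2} \phi (x/\sqrt{N})\) (the exact display, including any parity restriction coming from the periodicity of the simple random walk, is not reproduced here), with the hypothetical test function \(\phi (x) = (1-|x|)^+ \in C_c({\mathbb {R}}^2)\), whose integral is \(\pi /3\):

```python
import math

def phi_cone(x1, x2):
    """Hypothetical test function in C_c(R^2): the cone (1 - |x|)^+, whose integral over R^2 is pi/3."""
    return max(0.0, 1.0 - math.hypot(x1, x2))

def rescaled_lattice_average(phi, N):
    """(1/N) * sum_{x in Z^2} phi(x / sqrt(N)): each rescaled lattice site carries area 1/N."""
    s = math.sqrt(N)
    R = math.ceil(s)  # phi_cone vanishes outside the unit disc, so |x_i| <= R covers its support
    total = math.fsum(phi(x1 / s, x2 / s)
                      for x1 in range(-R, R + 1)
                      for x2 in range(-R, R + 1))
    return total / N

for N in (100, 1_000, 10_000):
    print(N, rescaled_lattice_average(phi_cone, N), math.pi / 3)
```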

We finally prove point (c). By the local limit theorem (1.8) we have \(q_a(x)\le \frac{c}{a}\) for some \(c < \infty \), uniformly in \(a\in {\mathbb {N}}\) and \(x\in {\mathbb {Z}}^2\). Therefore, recalling (7.4), we have

Next we bound \({{\,\mathrm{{\mathbb {V}}ar}\,}}\big (Z^{\beta _N}_{0,a}(0, z)\big ) \leqslant \sigma _N^{-2} \, U_N(a,z)\), see (2.15), and note that

by (2.18), (2.20) and (2.25). Bounding \(q_a(x)\le \frac{c}{a}\) and \(\sigma _N^{-2} = O(\log N)\), see (1.14) and (1.10), we obtain

For \(a \leqslant \sqrt{N}\) we can bound \(\log (e^2 N/a) \geqslant \log (e^2 \sqrt{N}) \geqslant \frac{1}{2} \log N\), while for \(\sqrt{N} < a \leqslant N\) we can simply bound \(\log (e^2 N/a) \geqslant \log e^2 = 2\). This shows that the last sum is \(O(\log N)\), since \(\sum _{a \geqslant 1} \frac{2 \, \log N}{a^2} + \sum _{a > \sqrt{N}} \frac{(\log N)^{2}}{2 \, a^2} = O(\log N) + O(\frac{(\log N)^{2}}{\sqrt{N}})\). We thus obtain

Lastly, by (6.3), we also have

If we plug the previous bounds into (7.5) with \(* = 0\) and \(\dagger = z\), point (c) is proved. \(\quad \square \)
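Two elementary inputs of the proof of point (c) can be checked numerically. First, the uniform bound \(q_a(x) \leqslant \frac{c}{a}\): for the simple symmetric random walk on \({\mathbb {Z}}^2\), a 45-degree rotation turns the walk into two independent simple random walks on \({\mathbb {Z}}\), which gives \(\max _x q_a(x) = \big (\binom{a}{\lfloor a/2\rfloor }2^{-a}\big )^2\), and \(a \max _x q_a(x)\) stays below 1 (it tends to \(2/\pi \) along even a). Second, the tail estimate \(\sum _{a>\sqrt{N}} a^{-2} \leqslant \frac{2}{\sqrt{N}}\), which turns the second sum above into \(O((\log N)^2/\sqrt{N})\). The sketch cross-checks the closed form against a brute-force convolution for small a; the function names are illustrative.

```python
import math
from collections import defaultdict

def max_q_closed_form(a):
    """max_x q_a(x) for the simple symmetric random walk on Z^2, via the 45-degree rotation."""
    return (math.comb(a, a // 2) / 2**a) ** 2

def max_q_brute_force(a):
    """Same quantity by iterating the one-step transition kernel (only practical for small a)."""
    q = {(0, 0): 1.0}
    for _ in range(a):
        nxt = defaultdict(float)
        for (x1, x2), p in q.items():
            for d1, d2 in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nxt[(x1 + d1, x2 + d2)] += p / 4
        q = nxt
    return max(q.values())

def tail_inverse_squares(N):
    """sum_{a > sqrt(N)} 1/a^2, computed as pi^2/6 minus the partial sum up to isqrt(N)."""
    M = math.isqrt(N)
    return math.pi**2 / 6 - math.fsum(1.0 / a**2 for a in range(1, M + 1))

for a in range(1, 13):
    assert abs(max_q_closed_form(a) - max_q_brute_force(a)) < 1e-12

ratios = [a * max_q_closed_form(a) for a in range(1, 2001)]
print(max(ratios), ratios[-1], 2 / math.pi)

for N in (10, 100, 10**4, 10**6):
    print(N, tail_inverse_squares(N) * math.sqrt(N))
```

The tail bound follows from \(\sum _{a>M} a^{-2} < \sum _{a>M} \frac{1}{a(a-1)} = \frac{1}{M}\) with \(M = \lfloor \sqrt{N}\rfloor \geqslant \frac{\sqrt{N}}{2}\).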

8 Proof for the Stochastic Heat Equation

In this section we prove Theorems 1.7 and 1.9 on the variance and third moment of the solution to the stochastic heat equation.

We first give a useful representation of \(u^\varepsilon (t,\phi ):=\int _{{\mathbb {R}}^2} \phi (x) u^\varepsilon (t,x)\,\mathrm {d}x\). By a Feynman–Kac representation and the definition of the Wick exponential (see [CSZ17b] for details), it follows that \(u^\varepsilon (t,\phi )\) is equal in distribution to the Wiener chaos expansion