Abstract

We study dispersive effects of wave propagation in periodic media, which can be modelled by adding a fourth-order term in the homogenized equation. The corresponding fourth-order dispersive tensor is called Burnett tensor and we numerically optimize its values in order to minimize or maximize dispersion. More precisely, we consider the case of a two-phase composite medium with an eightfold symmetry assumption of the periodicity cell in two space dimensions. We obtain upper and lower bound for the dispersive properties, along with optimal microgeometries.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Wave propagation in periodic heterogeneous media is ubiquitous in engineering and science. Denoting by \(\varepsilon \) the small ratio between the period size and a characteristic lengthscale, it can be modeled by the following scalar wave equation

with periodic coefficients \(a_\varepsilon (x):=a\left( {x\over \varepsilon }\right) \), a right hand side f(t, x) and initial date \(u^{\mathrm{init}}(x), v^{\mathrm{init}}(x)\). For simplicity, we assume that the domain of propagation is the full space \(\mathbb R^d\). It does not change much our results to consider another domain but the case of the full space avoids to discuss the boundary conditions, as well as the issue of boundary layers in the homogenization process. Here, a(y) a Y-periodic symmetric tensor and Y is the unit cube \([0,1]^d\). We assume that, for \(0<a_{\min }\le a_{\max }\),

For very small values of \(\varepsilon \), problem (1.1) can be studied by means of the homogenization theory [10, 12, 30, 38, 47, 53]. The result of homogenization theory is that the solution \(u_\varepsilon \) of (1.1) can be well approximated by the solution u of the following homogenized wave equation

where, in the periodic homogenization setting, \(a^*\) is a constant effective tensor given by an explicit formula involving cell problems (see Sect. 2 for details).

Although it is less classical, it is known that the homogenized equation can be improved by adding a small fourth-order operator and modifying the source term. This is the concept of “high order homogenized equation” that goes back to [10, 48] and has been studied by many authors [1, 2, 4, 23, 24, 32, 50]. In the present setting it reads

where \(\mathbb D^*\) is a fourth-order tensor, called Burnett tensor and studied in [19,20,21,22], and \(d^*\) is some second-order tensor (see Sect. 2 for details). Equations (1.3) and (1.4) can be established by two different methods: two-scale asymptotic expansions (Sect. 2), and Bloch wave expansions (Sect. 3). The interpretation of \(\mathbb D^*\) is that it plays the role of a dispersion tensor. This is explained and numerically illustrated in Sect. 4. In particular, for long times of order up to \(\varepsilon ^{-2}\), (1.4) is a better approximation of the wave equation (1.1) than (1.3) (this key observation was first made by [48] and further discussed in the references, just quoted above).

Dispersion is classically defined as the phenomenon by which waves with different wavelengths propagate with different velocities. In practice, it induces severe deformations of the profile of the propagating waves in the long time limit. Here we focus exclusively on dispersion induced by homogenization and not by the more classical dispersion effects arising in the high frequency limit (or geometric optics, see e.g. chapter 3 in [44]). In such a homogenization setting, dispersion can occur only in heterogeneous media. Since composite materials (which are of course heterogeneous) are ubiquitous in engineering, it is therefore very important to study its associated dispersion properties. Dispersion can be a good thing or a bad thing, depending on the type of applications which we have in mind. Clearly, dispersion is a nasty effect if one is interested in preserving the profile of a wave or signal during its propagation. On the contrary, dispersion could be beneficial if one wants to spread and thus diminish the intensity of, say, a sound wave. In any case, it makes sense to optimize the periodic structure, namely the coefficient a(y) in (1.1), in order to achieve minimal or maximal dispersion. There are a few rigorous bounds on the dispersive properties of periodic structures [21, 22] but no systematic numerical study. The goal of the present paper is to make a first numerical investigation in the optimization of these dispersive properties. We restrict our attention to two-phase composite materials with isotropic constituents. In this setting there is an extensive literature on the precise characterization of the set of all possible values of the homogenized tensor \(a^*\) (see [3, 53] and references therein). However, to our knowledge, nothing is known about the Burnett tensor \(\mathbb D^*\) (except the few bounds in [21, 22]). Therefore, we use shape optimization techniques in order to optimize the values of \(\mathbb D^*\) for a two-phase composite with prescribed volume fractions. Since \(\mathbb D^*\) is a fourth-order tensor, to simplify the analysis, we restrict ourselves to a plane 2-d setting and to a geometric 8-fold symmetry in the unit cell Y, which yields a kind of isotropy for \(\mathbb D^*\) (see the beginning of Sect. 9 for a discussion about the relevance of such a symmetry assumption).

A key feature of the dispersion tensor \(\mathbb D^*\) is that, contrary to the homogenized tensor \(a^*\), it is not scale invariant. More precisely, we prove in Lemmas 6.1 and 6.3 that if the periodicity cell is scaled by a factor \(\kappa \) then the dispersion tensor \(\mathbb D^*\) is scaled by \(\kappa ^2\). Actually, even if the periodicity cell is fixed to be the unit cube Y, then the microgeometry can be periodically repeated k times in Y (with \(k\ge 1\) any integer) and the dispersion tensor \(\mathbb D^*\) is thus divided by \(k^2\). In other words, by considering finer details in the unit periodicity cell, the dispersion tensor \(\mathbb D^*\) can be made as small as we want (in norm). As a consequence, the minimization of (a norm of) \(\mathbb D^*\) is an ill-posed problem (except if geometric constraint are added) while one can expect that the maximization problem is meaningful.

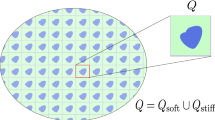

More specifically, we consider coefficients defined by

where \(a^A, a^B>0\) are two constant real numbers, \(Y_A\) and \(Y_B\) are a disjoint partition of Y, \({\mathbf{1}}_{Y_A}(y) , {\mathbf{1}}_{Y_B}(y)\) are the corresponding characteristic functions. Denote by \(\Gamma = \partial Y_A \cap \partial Y_B\) the interface between the two phases A and B. We rely on the level set method [41] for the shape optimization of the interface \(\Gamma \), as is now quite common in structural mechanics [8, 40, 54]. Under an 8-fold symmetry assumption for the unit cell Y, the dispersion tensor \(\mathbb D^*\) is characterized by two scalar parameters \(\alpha \) and \(\beta \). More precisely, for any vector \((\eta _i)_{1\le i\le d}\), Proposition 6.7 states that

We numerically compute bounds on \(\alpha \) and \(\beta \) under two equality constraints for the phase proportions and for the homogenized (scalar) tensor \(a^*\), as well as an inequality constraint for the perimeter of the interface \(\Gamma \). As a first numerical test (in Sect. 9.1) we minimize and maximize the simple objective function \(2\alpha +\beta \), corresponding to \(-\,\mathbb D^*\left( \eta \otimes \eta \right) :\left( \eta \otimes \eta \right) \) for the direction \(\eta =(1,1)\). When maximizing \(2\alpha +\beta \), the perimeter inequality constraint is not necessary and indeed is not active at the optima. However, the perimeter constraint is required and active when minimizing \(2\alpha +\beta \), since smaller details of the phase mixture yield smaller dispersion (this refinement process is stopped by the perimeter constraint). Since this non-attainment process is very general for dispersion minimization (according to our Lemma 6.3), our second numerical test (in Sect. 9.2) focuses only on maximizing dispersion, in the following sense. We compute the upper Pareto front in the plane \((\alpha ,\beta )\), which is classically defined as the set of values \((\alpha ^+,\beta ^+)\) for which there exists no other \((\alpha ,\beta )\) such that \(\alpha \ge \alpha ^+\) and \(\beta \ge \beta ^+\). In crude terms, computing this upper Pareto front can be interpreted as maximizing \(-\,\mathbb D^*\) (although \(\mathbb D^*\) is a tensor not a real number). It turns out that computing this Pareto front is a delicate task since the optimization process is plagued by the existence of many local optima (in contrast, our algorithm easily finds global optima when optimizing the homogenized tensor \(a^*\)). Therefore, we rely on a complicated strategy of continuation, re-initialization and non-convex approximation in order to obtain robust (hopefully global) optimal distributions of the two phases which minimize or maximize dispersion. Our main finding is that the upper Pareto front (which of course depends on the phase properties and proportions) seems to be a line segment. The corresponding optimal configurations are smooth and simple geometric arrangements of the two phases. Note that the checkerboard pattern seems to be optimal for maximal \(\alpha \) and \(\beta \). We conclude this brief description of our results by recognizing that other type of dispersive properties have already been optimized in a different context [34, 46, 52].

Let us now describe the contents of our paper. In Sect. 2 we recall the two-scale asymptotic expansion method for periodic homogenization, as introduced in [10, 12, 47]. We closely follow the presentation of [4]. The main result is Proposition 2.2 which gives (1.4) as a “high order homogenized equation”. In Proposition 2.5 we recall a result of [19] which states that the Burnett tensor \(\mathbb D^*\) is non-positive, making (1.4) an ill-posed equation. This inconvenience will be corrected later in Sect. 4.

In Sect. 3 we recall the classical theory of Bloch waves [12, 17, 19, 45, 56] which is an alternative method for deriving the homogenized problem (1.3), as well as the high order homogenized equation (1.4). The main result is Lemma 3.1, due to [18, 19], which proves that the Burnett tensor \(\mathbb D^*\) is the fourth-order derivative of the so-called first Bloch eigenvalue.

Section 4 explains how to correct Eq. (1.4) to make it well-posed (see Lemma 4.1). The main idea is a Boussinesq trick (i.e., replacing some space derivatives by time derivatives) which is possible because (1.4) is merely an approximation at order \(\varepsilon ^4\).

Section 5 presents some one-dimensional numerical simulations of wave propagation in a periodic medium. It compares the solutions of the original wave equation (1.1) with those of the homogenized equation (1.3) and the Boussinesq version of the high order homogenized equation (1.4). It demonstrates that, for long times of order \(\varepsilon ^{-2}\), approximation (1.4) is much better than (1.3). Section 5 features also some two-dimensional computations where one can see the clear differences, for long times, between the solutions of the homogenized equation (1.3) and the Boussinesq version of the high order homogenized equation (1.4) for either a large or a small value of the dispersion tensor.

Section 6 discusses some properties of the Burnett tensor \(\mathbb D^*\). First, we explain that, contrary to the homogenized tensor \(a^*\), the fourth-order tensor \(\mathbb D^*\) depends on the scaling of the periodicity cell. More precisely, if the cell Y is scaled to be of size \(\kappa >0\), then \(\mathbb D^*\) is scaled as \(\kappa ^2\mathbb D^*\). It implies that small heterogeneities yield small dispersion while large heterogeneities lead to large dispersion (see Lemma 6.3). Second, we prove that a standard 8-fold symmetry assumption of the coefficients a(y) in the unit cell Y (or of the two-phase geometry \({\mathbf{1}}_{Y_A}(y), {\mathbf{1}}_{Y_B}(y)\)) implies that the Burnett tensor \(\mathbb D^*\) is characterized by simply two scalar parameters.

Section 7 computes the shape derivative, i.e. the shape sensitivity, of the tensor \(\mathbb D^*\) with respect to the position of the interface \(\Gamma \). Our main result is Theorem 7.3 which gives a rigorous shape derivative. From a numerical point of view, Theorem 7.3 is difficult to exploit because it involves jumps of discontinuous solution gradients through the interface \(\Gamma \). Therefore, following [5], in Proposition 7.6 we compute a simpler shape derivative for a discretized version of \(\mathbb D^*\).

Section 8 explains our numerical setting based on the level set algorithm of Osher and Sethian [41] and on a steepest descent optimization algorithm. Constraints on the volume, the perimeter and the the homogenized tensor \(a^*\) are enforced by means of Lagrange multipliers. We iteratively update the Lagrange multipliers so that the constraints are exactly satisfied at each iteration of the optimization algorithm.

Section 9 contains our numerical results on the optimization of the Burnett tensor \(\mathbb D^*\) with respect to the interface \(\Gamma \). Since a first numerical test in Sect. 9.1 shows that dispersion can be minimized by a fine fragmentation of the two phase mixture (which is just stopped at a length-scale determined by the perimeter constraint), we later focus on determining the Pareto upper front for dispersion. It is not known if the set of dispersion tensor \(\mathbb D^*\) is convex or if its upper bound is a concave curve in the \((\alpha ,\beta )\) plane. Thus, we explain in Sect. 9.2 that a quadratic function of \(\alpha \) and \(\beta \) is optimized in order to be able to cope with a non-concave upper bound. In the same subsection we explain our intricate optimization strategy in order to avoid the many local optima that can be found. It is a combination of continuation, varying initializations and refinement process of the Pareto front. We are quite confident in our numerical approximation of the Pareto front since we checked it is insensitive to the choice of interface initializations, and of parameters for the minimized quadratic function. In Fig. 17 we compare the upper Pareto front for various aspect ratios of the two phases, while in Fig. 19 the comparison is made for various volume fractions. Eventually, Sect. 9.3 is devoted to the optimization of the other dispersion tensor \(d^*\) which is responsible for the dispersion of the source term in the righ hand side of the high order homogenized equation (1.4).

Finally Sect. 10 is devoted to the (technical) proof of Theorem 7.3 which was stated in Sect. 7.

1.1 Notations

In the sequel we shall use the following notations.

-

1.

\((e_1,\ldots ,e_d)\) denotes the canonical basis of \(\mathbb R^d\).

-

2.

\(Y=[0,1]^d\) denotes the unit cube of \(\mathbb R^d\), identified with the unit torus \(\mathbb R^d/\mathbb Z^d\).

-

3.

\(H^1_{\sharp }(Y)\) denotes the space of Y-periodic functions in \(H^{1}_\mathrm{loc}(\mathbb R^d)\).

-

4.

\(H^1_{\sharp ,0}(Y)\) denotes the subspace of \(H^1_{\sharp }(Y)\) composed of functions with zero Y-average.

-

5.

The Einstein summation convention with respect to repeated indices is used.

-

6.

All tensors are assumed to be symmetric, even if we do not write it explicitly. More precisely, if C is a n-order tensor \(C=\left( C_{i_1\cdots i_n}\right) _{1\le i_1,\ldots ,i_n\le d}\), it is systematically identified with its symmetrized counterpart \(C^S\), defined by

$$\begin{aligned} C^S = \left( {1\over n!}\sum _{\sigma \in \mathfrak {S}_n}C_{\sigma (i_1)\cdots \sigma (i_n)} \right) _{1\le i_1,\ldots ,i_n\le d}, \end{aligned}$$where \(\mathfrak {S}_n\) is the permutation group of order n.

-

7.

If C is a n-order tensor, the notation \(C\nabla ^n u\) means the full contraction

$$\begin{aligned} C\nabla ^n u = \sum _{i_1,i_2,\ldots ,i_n=1}^d C_{i_1,i_2,\ldots ,i_n} \frac{\partial ^n u}{\partial x_{i_1} \cdots \partial x_{i_n}} \,, \end{aligned}$$where C is indistinguishable from its symetric counterpart \(C^S\).

2 Two-scale asymptotic expansions

In this section we briefly recall the method of two-scale asymptotic expansion [10, 12, 47] and, in particular, explain how dispersion can be introduced in a so-called higher-order homogenized equation, as first proposed by [48], and studied by many others [1, 2, 4, 9, 23, 24, 32, 50].

The starting point of the method of two-scale asymptotic expansion is to assume that the solution of (1.1) is given by the following ansatz

where \(y\,\rightarrow \, u_n(t,x,y)\) are Y-periodic. This ansatz is formal since, not only the series does not converge, but it lacks additional boundary layer terms in case of a bounded domain. Plugging this ansatz in (1.1) and using the chain rule lemma for each term

we deduce a cascade of equations which allow us to successively compute each term \(u_n(t,x,y)\). To make this cascade of equations explicit, we introduce the following operators

which satistify, for any function v(x, y),

Then, we deduce the cascade of equations

These equations are solved successively by means of the following lemma, called Fredholm alternative (see [10, 12, 47] for a proof).

Lemma 2.1

For \(g(y)\in L^2(Y)\), consider the following problem

It admits a solution \(w(y) \in H^1_{\sharp }(Y)\), unique up to an additive constant, if and only if the right hand side satisfies the following compatibility condition

Thanks to Lemma 2.1 we now deduce from (2.3) the formulas for successive terms \(u_n\) in the ansatz. These formulas will imply a separation of variables, namely each function \(u_n(t,x,y)\) is a sum of products of cell solutions depending only on y and on space derivatives of the homogenized solution u(t, x). Before we start the study of the cascade of equations, we emphasize two important notations for the sequel. First, according to the Fredholm alternative of Lemma 2.1, all cell solutions, introduced below, have zero-average in the unit cell Y. Second, all tensors below are symmetric (i.e. invariant by a permutation of the indices) since they are contracted with the symmetric derivative tensors \(\nabla ^k_x u(t,x)\). Nevertheless, for the sake of simplicity in the notations, we do not explicitly symmetrize all tensors but the reader should keep in mind that they are indeed symmetric.

Computation of \(u_0\): since the source term is zero, the solution is constant with respect to y,

Computation of \(u_1\): the source term satisfies the compatibility condition and by linearity we obtain

where \(\chi _i\) and \(\chi ^{(1)}_\eta =\sum _{i=1}^d \eta _i \chi _i\) are solutions in \(H^1_{\sharp ,0}(Y)\) of the equations

Computation of \(u_2\): the third equation of (2.3) has a solution if and only if its source term has a zero Y-average, which leads to the homogenized equation

where the homogenized symmetric matrix \(a^*\) is given by

Inserting (2.5), the third equation of (2.3) becomes

Hence, defining for \(i,j\in \{1,\ldots ,d\}\)

\(u_2\) can be written as

where the functions \(\chi _{ij}\) and \(\chi ^{(2)}_\eta :=\chi _{ij}\eta _i\eta _j\) are the solutions in \(H^1_{\sharp ,0}(Y)\) of the equations

Note that only the symmetric part of \(b_{ij}\) plays a role in (2.9) and the same is true for \(\chi _{ij}\) in (2.11).

Computation of \(u_3\): starting from here, namely for \(n\ge 3\), the solvability condition of the Fredholm alternative for the existence of \(u_n\) is

Thus, there exists a solution \(u_3\) in (2.3) if and only if (2.14) is satisfied for \(n=3\) which, since the Y-averages of the cell solutions \(\chi _i\) are zero, leads to an equation for \(\tilde{u}_1\)

with a tensor \(C^*\) defined by

It turns out, by symmetry in i, j, k, that this tensor vanishes, \(C^*=0\) (see [4, 37]). Therefore, since its initial data vanishes, the function \(\tilde{u}_1\) vanishes too,

Let us now compute \(u_3\) which, by (2.3), is a solution of

Replacing \(u_2\) and \(u_1\) by their expressions (2.11) and (2.5), introducing the solutions \(w_k\) in \(H^1_{\sharp ,0}(Y)\) of

the solutions \(\chi _{ijk}\) in \(H^1_{\sharp ,0}(Y)\) of

where

and using (2.6), (2.10), \(u_3\) can be written as

Equation of \(u_4\): there exists a solution \(u_4\) in (2.3) if and only if condition (2.14) for \(n=4\) is satisfied. Replacing \(u_2\) and \(u_3\) by their formulas (2.11) and (2.22) leads to an equation for \(\tilde{u}_2\)

where \(\mathbb B^*\) is defined by

and

We simplify (2.23) by recalling that \(C^*=0\) and using the homogenized equation (2.7) to replace \(\frac{\partial ^2 u}{\partial t^2}\) by \(f+\mathrm{div}\left( a^*\nabla u\right) \). Then, introducing the tensor

we deduce that (2.23) is equivalent to

We do not compute explicitly \(u_4\) (although it is possible) since our only interest in studying the equation for \(u_4\) is to find the homogenized equation (2.27) for \(\tilde{u}_2\). We are now in a position to collect the above results and to give an approximate formula for the exact solution \(u_\varepsilon \) of (1.1)

where u is a solution of the homogenized equation (2.7), \(u_1\) is defined by (2.5) and \(u_2\) by (2.11). Each term, \(u_1\) and \(u_2\) is the sum of a zero Y-average contribution and of \(\tilde{u}_1\) and \(\tilde{u}_2\) defined by

which are defined as the solutions of (2.15) and (2.27), respectively. Furthermore, we know from (2.17) that \(\tilde{u}_1(t,x) = 0\) is identically zero. Therefore, on average, (2.28) implies that

It is possible to find a single approximate equation for \(v_\varepsilon \) by adding equation (2.7) with (2.27) multiplied by \(\varepsilon ^2\): it yields

Neglecting the term of order \(\varepsilon ^4\) in (2.30) gives the “higher order” homogenized equation (1.4), as announced in the introduction.

We summarize our results in the following proposition.

Proposition 2.2

The “high order” homogenized equation of the wave equation (1.1) is

Remark 2.3

Writing an effective equation for a truncated version of the non oscillating ansatz has been studied in various settings (see [10, 23, 32, 48, 50]) under the name of “higher order homogenization”. Proposition 2.2 gives the “second order” homogenized equation which is a proposed explanation of dispersive effects for wave propagation in periodic media [1, 2, 23, 32, 48] or of second gradient theory in mechanics [50].

Remark 2.4

Note that the initial data did not enter the entire asymptotic process which is purely formal at this stage.

A fundamental property of the Burnett tensor \(\mathbb D^*\), discovered by [19], is that it is non-positive.

Proposition 2.5

([19]) The fourth-order tensor \(\mathbb D^*\), defined by (2.26), satisfies for any \(\eta \in \mathbb R^d\)

where \(\chi ^{(1)}_\eta \) is defined by (2.6) and \(\chi ^{(2)}_\eta \) by (2.12).

Remark 2.6

If the tensor \(\mathbb D^*\) were non-negative, Eq. (2.31) would be well-posed. Unfortunately, \(\mathbb D^*\) has the wrong sign, i.e. it is non-positive and (2.31) is thus not well-posed. We shall see in Sect. 4 how to modify it to make it well-posed by using a Boussinesq trick.

Remark 2.7

The tensor \(\mathbb D^*\) arises in the two-scale asymptotic expansion process as the coefficient fourth-order tensor of the fourth-order derivative \(\nabla ^4u\). Recall from our notations that \(\mathbb D^*\nabla ^4u\) means the full contraction of both fourth-order tensors. Therefore, only the symmetric part of \(\mathbb D^*\) is accessible by this method. In other words, \(\mathbb D^*\) belongs to the class of fully symmetric fourth-order tensors which satisfy, for any permutation \(\sigma \) of \(\{i,j,k,l\}\),

This class of fully symmetric fourth-order tensors is completely characterized by the knowledge of their quartic form

Indeed, differentiating the quartic form four times with respect to \(\eta _i,\eta _j,\eta _k, \eta _l\) allows us to recover the (symmetrized) coefficient \(\mathbb D^*_{ijkl}\).

In one space dimension, the formula for \(\mathbb D^*\) is simpler, as stated in the next lemma.

Lemma 2.8

([19]) In one space dimension, we have \(\mathbb D^*=-a^*d^*\) where \(a^*\) is defined by (2.8) and \(d^*\) is defined by (2.25).

3 Bloch wave method

Another method of homogenization is the so-called Bloch wave decomposition method [45, 56]. Its application to periodic homogenization is discussed in [12, 17]. It relies on a family of spectral problems for the operator \(A_{yy}\) in the unit cell Y. More precisely, for a given parameter \(\eta \in Y\), we look for eigenvalues \(\lambda =\lambda (\eta )\) in \(\mathbb R\) and eigenvectors \(\phi =\phi (\eta )\) in \(H^1_{\#}(Y)\), normalized by \(\Vert \phi \Vert _{L^2(Y)}=1\), satisfying

where \(A(\eta )\) is the translated (or shifted) operator defined by

with, of course, \(A(0)=A_{yy}\). The above spectral problem for \(A(\eta )\) in the unit torus Y, the so-called Bloch problem, admits an infinite countable number of non-negative eigenvalues and corresponding normalized eigenfunctions [45, 56]. We are interested in the first eigenvalue \(\lambda _1 (\eta )\) which is the relevant one in the homogenization process. When \(\eta =0\), one can check that \(\lambda _1(0)=0\) is a simple eigenvalue of \(A (0) = A_{yy}\) with constants as eigenfunctions. Regular perturbation theory proves then that \(\lambda _1 (\eta )\) is simple and analytic in a neighborhood of \(\eta =0\). We recall some results from [18, 19] about the fourth-order Taylor expansion of \(\lambda _1 (\eta )\) at \(\eta =0\).

Lemma 3.1

The first eigenvalue \(\lambda _1(\eta )\) admits the following fourth-order expansion:

where \(\frac{1}{8\pi ^2} \nabla _\eta ^2\lambda _1(0) = a^*\) is the homogenized matrix defined by (2.8) and \(\frac{1}{4!(2\pi )^4}\nabla _\eta ^4\lambda _1(0) = \mathbb {D}^*\) is a symmetric fourth-order tensor (called Burnett tensor) which is equivalently defined by

where the functions \(\chi _{ij}\) are defined by (2.12) and \(\hat{\chi }_{ijk}\) are the solutions in \(H^1_{\sharp ,0}(Y)\) of

where \(\chi _k\) are given by (2.6) and \(c_{ijk}\) are given by (2.21).

As usual, the tensor \(\mathbb D^*\) and the functions \(\hat{\chi }_{ijk}\) are understood as symmetrized (this is obvious for \(\mathbb D^*\) which arises as the fourth-order derivative of the eigenvalue \(\lambda _1\)). Note that the functions \(\hat{\chi }_{ijk}\) are different from the previous ones \({\chi }_{ijk}\) defined by (2.20) since \(\hat{\chi }_{ijk}=\chi _{ijk}+a^*_{ij}\,w_k\).

Remark 3.2

As a by-product of Lemma 3.1 it was shown in [18] that the \(\eta \)-derivatives of the first eigenfunction \(\phi _1(y,\eta )\) coincide with the solutions of some cell problems.

A fundamental property of the Bloch waves is that they diagonalize the operator \(A_{yy}\) in \(L^2(\mathbb R^d)\). More precisely, we have the following Bloch wave decomposition written in rescaled variables \(x=\varepsilon y\) and \(\xi = \eta /\varepsilon \).

Lemma 3.3

Any function \(f\in L^2(\mathbb R^d)\) can be decomposed as

where

and \(\phi _n(y,\eta )\) is the n-th normalized eigenfunction of (3.1). Furthermore, it satisfies Parseval equality

We now explain how the Bloch wave method is used for the homogenization of the wave equation (1.1). First, we recall the definition of the Fourier transform \(\hat{f}(\xi )\) of a function \(f(x) \in L^2(\mathbb R^d)\)

For simplicity, let us replace the fixed (with respect to \(\varepsilon \)) initial data and source term in (1.1) by well-prepared initial data and source in terms of Bloch waves. Denoting by \(\hat{u}^{\mathrm{init}}(\xi )\), \(\hat{v}^{\mathrm{init}}(\xi )\) and \(\hat{f}(t,\xi )\) the Fourier transforms of \(u^{\mathrm{init}}(x)\), \(v^{\mathrm{init}}(x)\) and f(t, x) [in the sense of (3.8)], we introduce these new forcing term and initial data

and change (1.1) into

Similarly, using Lemma 3.3, we decompose the solution of (3.10) as

Since the eigenbasis \(\{\phi _n\}\) diagonalizes the elliptic operator, equation (3.10) is reduced to a family of ordinary differential equations: for any \(\xi \in \varepsilon ^{-1}Y\), \(\hat{u}^\varepsilon _1(t,\xi )\) is a solution of the following ordinary differential equation

Using the Taylor expansion (3.2) of \(\lambda _1\), we deduce that

At least formally, dropping the \(\mathcal {O}(\varepsilon ^4)\) in the above equation, \(\hat{u}_1^\varepsilon (t,\xi )\) is well approximated by \(\hat{v}_\varepsilon (t,\xi )\) which is the Fourier transform of the solution \(v_\varepsilon (t,x)\) of the following high order homogenized equation

This equations is identical to the “higher order” homogenized equation (1.4), or (2.30), except for the right hand side which does not feature the additional term \(\varepsilon ^2\mathrm{div}\left( d^*\nabla f\right) \). This is due to our replacement of the original right hand side f by its well-prepared variant \(f_\varepsilon \), defined by (3.9) (see [4] for a more complete explanation).

In any case, the differential operator of the “higher order” homogenized equation is the same whatever the method of derivation, be it two-scale asymptotic expansions or Bloch wave decomposition. Once again, the fourth-order tensor \(\mathbb D^*\) is a manifestation of dispersive effects in the wave propagation.

4 Boussinesq approximation

The high order homogenized equations (1.4), (2.31) and (3.14) are not well posed since, by virtue of Proposition 2.5, the tensor \(\mathbb D^*\) has the “wrong” sign (the bilinear form associated to the operator \(\mathbb D^*\,\nabla _x^4\) is non-positive). The goal of this section is to explain how to modify these equations in order to make them well-posed by using a classical Boussinesq trick (see e.g. [16] for historical references). This trick has been applied in recent works [1, 2, 23, 24, 32]. It is also well known in the study of continuum limits of discrete spring-mass lattices [33].

The key point is that both Eqs. (3.13) and (2.30) are actually defined, up to the addition of a small remainder term of order \(\varepsilon ^4\). Therefore one can modify them adding any term of the same order \(\varepsilon ^4\), without altering their approximate validity. Let us explain the Boussinesq trick for (3.14) (the case of (2.31) is completely similar). We define the minimum value

which is a non-positive number \(m\le 0\) because of Proposition 2.5 (if \(m>0\) were positive, (3.14) would be well posed and there would be nothing to do). Introducing the non-negative second order tensor \(C = -m \mathrm{Id}\ge 0\), we define a fourth order tensor \(\mathfrak {D}^*\) by

which is non-negative in view of (4.1). Then, the Fourier transform of (3.14)

can be replaced by

since truncating (4.3) implies

By the inverse Fourier transform, applied to (4.4), we deduce the following equation

which is well posed because C and \(\mathfrak {D}^*\) are non-negative.

We summarize our result in the following lemma.

Lemma 4.1

Up to an error term of order \(\mathcal {O}(\varepsilon ^4)\), the high order homogenized equation (3.14) is equivalent to

while the high order homogenized equation (1.4) is equivalent to

which are both well posed because \(C\ge 0\) and \(\mathfrak {D}^*\ge 0\).

Remark 4.2

In 1-d, by virtue of Lemma 2.8, we have \(\mathbb D^*=-a^* d^*\) with \(d^*>0\). Therefore, in 1-d it is possible to choose \(\mathfrak {D}^*=0\) and \(C=d^*\). Then, the right hand side of (4.5) is simply f, as in the usual homogenized equation (1.3).

From a numerical point of view, (4.5) should be solved rather than the ill-posed original Eq. (1.4). Of course, any choice of matrix C, which makes (4.2) non-negative, is acceptable. Therefore, there is a whole family of higher order homogenized equation (4.5), all of them being equivalent up to order \(\varepsilon ^4\). In this context, the dispersive effect is measured by the fourth-order tensor \(\mathbb {D}^*\) and not by \(\mathfrak {D}^*\) alone.

It was proved in [23, 32] (see also [2]) that the solution \(v_\varepsilon \) of (4.5) provides an approximation of the exact solution \(u_\varepsilon \) of (1.1), up to an error term of order \(\varepsilon \) in the \(L^\infty _t (L^2_x)\)-norm for long times up to \(T\varepsilon ^{-2}\).

Remark 4.3

The dispersive character of the high order homogenized equation (4.5) can easily be checked for plane-wave solutions, in the absence of any source term. Indeed, plugging in (4.5) a plane wave solution

where \(\mathbf{u}\in \mathbb R\) is the amplitude, \(\omega \in \mathbb R^+\) the frequency and \(\xi \in \mathbb R^d\) the wave number, we obtain the dispersion relation

For \(\varepsilon |\xi |\ll 1\), a Taylor expansion of (4.6) yields

Recall from (4.2) that \(\mathfrak {D}^*= \mathbb {D}^*+ C\otimes a^* + R\), with \(R\cdot \xi \otimes \xi \otimes \xi \otimes \xi \ge 0\) for any \(\xi \). Denoting by \(\xi ^0\) a minimizer in (4.1), we have \(R\cdot \xi ^0 \otimes \xi ^0 \otimes \xi ^0 \otimes \xi ^0=0\) and \(R \xi ^0 \otimes \xi ^0 \otimes \xi ^0 =0\) by minimality. Thus, in this optimal direction we deduce

From (4.6) we deduce the group velocity

where the corrector term can be computed in the optimal direction

Simplifying further to the isotropic case, \(a^* \xi ^0\cdot \xi ^0= a^*|\xi ^0|^2\), we obtain

Since \(m\le 0\) by virtue of (4.1), we deduce that, up to fourth order, the group velocity is smaller when taking into account dispersive effect. Furthermore, to reduce dispersion (namely to have \(V(\xi ^0)\) as close as possible to \(\sqrt{a^*} \xi ^0/|\xi ^0|\)) is equivalent to minimize |m| or, in other words, to minimize the (absolute) value of \(\mathbb {D}^*\).

5 Numerical simulation of the dispersive effect

To illustrate the dispersive effect and explain the role of the high order homogenized equation (4.5), we perform some numerical experiments in 1-d and 2-d. Similar computations previously appeared in [2, 48], therefore our goal is purely pedagogical and illustrative. To simplify, the source term f is set to zero.

5.1 Numerical results in 1-d

We first start with the one-dimensional case. By virtue of Lemma 2.8 the one-dimensional high order homogenized equation (1.4) reads as follows:

By virtue of Lemma 4.1, this equation is equivalent, approximately up to an error of \(\mathcal {O}(\varepsilon ^4)\), to

where \(C\ge 0\) plays the role of a parameter (a scalar in 1-d). Following the test case of [2], in the numerical simulations, the periodic coefficient is

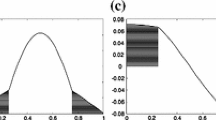

with \(\epsilon =0.05\). Then, the homogenized tensor \(a^*\) and dispersive tensor \(d^*\) are \(a^*=1\) and \(d^*=0.00909633\), respectively. The computational domain is \(\Omega =(-\,0.5,0.5)\), complemented with periodic boundary conditions, and we discretize it with a space step \(\Delta x = 1/2000\) and a time step \(\Delta t =0.02\times \Delta x\). We use a leapfrog scheme in time and, in space, a fourth-order centered finite difference scheme for the diffusion term and a second order centered scheme for the dispersive term. We consider two different sets of initial condition which are non-oscillating. The first type of initial data features a zero initial velocity and triggers two waves (see Fig. 1) propagating symmetrically in opposite directions:

The second set of initial data yields a single wave (see Fig. 2) for the standard homogenized equations, propagating with group velocity \(\sqrt{a^*}\):

In Figs. 1 and 2, we compare the numerical solutions of the original wave equation (1.1), of the homogenized wave equation (1.3) and of the high order homogenized equation (5.1), for three different values of the C parameter, and at different times T. The five different curves are: case 1, the solution of (1.1); case 2, the solution of (1.3); case 3, the solution of (5.1) with \(C=d^*\); case 4, the solution of (5.1) with \(C=2d^*\) and case 5, the solution of (5.1) with \(C=4d^*\).

We notice that all solutions are very close (in the supremum norm) for short times (say \(T=1\)) while for larger times (say \(T=100\)) only the solutions of the high order homogenized equation stay close to the “exact” solution [while the homogenized solution propagates at the wrong speed, a clear manifestation of dispersive effects not taken into account in (1.3)]. At very long times (say \(T=400\)), the agreement between the exact and high order homogenized solutions is very good for the first example but less convincing for the second example: this may be due to the more complex profile of the solutions which may be more prone to numerical diffusion/dispersion that pollute their accuracy for such long times. In any case, the high order homogenized equation (5.1) is clearly a better approximation than the standard homogenized equation (1.3).

5.2 Numerical results in 2-d

We now turn to the two-dimensional case. The goal is here to compare the standard homogenized wave equation and the high order homogenized wave equation for various values of the dispersion tensor. It is almost impossible to make a comparison with the solution of the original wave equation (1.1), because it would require a too fine mesh. Therefore, our goal is to compare an optimal maximal value of the dispersion tensor and a non-optimal one.

The computational domain \(\Omega \) is the unit square with periodic boundary conditions. The initial conditions are set to \(u^{\mathrm{init}}=\{1-8(x-0.5)^2+16(x-0.5)^4) \}^2 \{1-8(y-0.5)^2+16(y-0.5)^4) \}^2\) and \(v^{\mathrm{init}}=0\). We consider a two-phase periodic material with \(a^A=10\) and \(a^B=30\), which corresponds to the material properties of case 3 in Fig. 17. The resulting homogenized coefficient is set to \(a^*=17.325\). The period \(\epsilon \) is set to \(\epsilon =0.05\).

We perform numerical simulations in three cases. The first case is the standard homogenized wave equation. The second case is the high oder homogenized wave equation with an approximate maximal value of \(\alpha =0.05\) and \(\beta =0\) (see Fig. 17). The third case is also the high oder homogenized wave equation with a non-optimal dispersion tensor with small \(\alpha =0.02\) and \(\beta =0\). We rely on a spectral or Fourier-based numerical algorithm for high accuracy and almost no diffusive or dispersive effects of the numerical scheme (as in [48]). More precisely, the initial data is decomposed on Fourier modes and the ordinary differential equations (with respect to time) for each modes are solved exactly. The numerical solution is then recovered by the inverse Fourier transform (of course, a FFT algorithm is used for the sake of CPU time). The mesh sizes for both the space variable x and the Fourier variable \(\xi \) are \(2^{16} \times 2^{16}\). In Fig. 3 are plotted the solution at various times \(t= 20, 50, 100\) in \(\Omega \), as well as a comparison of the three solution along the line \(y=0.5\). One can check that the three solutions become different at large times \(t= 50, 100\). In particular, the solution with larger dispersion (case 2) effect is more different from the homogenized solution (case 1) than the solution with smaller dispersion (case 3).

2-d wave computation. a Case 1 at \(t = 20\), b case 2 at \(t = 20\), c case 3 at \(t = 20\), d plot on the \(y = 0.5\) at \(t = 20\), e case 1 at \(t = 50\), f case 2 at \(t = 50\), g case 3 at \(t = 50\), h plot on the \(y = 0.5\) at \(t = 20\), i case 1 at \(t = 100\), j case 2 at \(t = 100\), k case 3 at \(t = 100\), i plot on the \(y = 0.5\) at \(t = 20\),

We checked that our solutions in Fig. 3 are converged under mesh refinement, namely the results are almost identical for the two meshes \(2^{16} \times 2^{16}\) and \(2^{15} \times 2^{15}\). In other words, the Fourier numerical method is dispersion-free for both meshes up to time \(t=100\).

6 Some properties of the Burnett tensor \(\mathbb D^*\)

We first investigate the dependence of \(\mathbb D^*\) to the choice of the periodicity cell. For any integer \(k\ge 1\), define the coefficients

which are just the periodic repetition of smaller cells of size 1 / k in the unit cell Y. The same microstructure or geometry can be modeled by a or \(a_k\) but with a different value of the small parameter. Define

Then we have

In other words, if more periodic patterns are present in the unit cell, there are less unit cells in the macroscopic domain and the ratio \(\epsilon _k\) is larger. One can reproduce the homogenization process of Sect. 2 with these new coefficients \(a_k\) and small parameters \(\epsilon _k\). According to Proposition 2.2, the new high order homogenized equation of the wave equation (1.1) is

with new homogenized properties \(a^*_k, \mathbb D^*_k, d^*_k\) corresponding to the new coefficient \(a_k\).

Lemma 6.1

For any integer \(k\ge 1\), one has

In other words, \(\varepsilon _k^2 \mathbb D^*_k =\varepsilon ^2 \mathbb D^*\) and \(\varepsilon _k^2 d^*_k = \varepsilon ^2 d^*\).

Proof

Let us denote by \(\chi ^k_{i}\) and \(\chi ^k_{ij}\) the cell solutions for the coefficients \(a_k\). It is easily seen that

from which we deduce the desired properties. \(\square \)

Remark 6.2

As a consequence of Lemma 6.1, the dispersion tensor can be made as small as desired (in norm) by considering smaller and smaller periodic patterns in the unit cell. However, there is no contradiction because the product \(\varepsilon _k^2 \mathbb D^*_k\) is constant. In any case, there is no point in minimizing the norm or a negative linear combination of entries of \(\mathbb D^*\), except if one adds a geometrical constraint (like an upper bound on the perimeter) which would prevent the unlimited fragmentation of the periodic microstructure.

A similar result holds true if one considers a scaled version of the unit cell.

Lemma 6.3

For any real number \(\kappa >0\), consider a scaled periodicity cell \(Z=(0,\kappa )^d\). Introduce the scaled variable \(z:=\kappa y\), with \(y\in Y\), and define the Z-periodic coefficients \(a_\kappa (z):=a(\frac{z}{\kappa })\). Then, its homogenized coefficients satisfy

Proof

Note first that, when computing homogenized formula on Z, one has to average on the cell Z which has volume \(\kappa ^d\). Let us denote by \({\chi }^\kappa _{i}\) and \({\chi }^\kappa _{ij}\) the cell solutions for the coefficients \(\tilde{a}\). It is easily seen that

from which we deduce the desired properties. \(\square \)

Remark 6.4

As a consequence of Lemma 6.3, if one can change the periodicity cell, then the dispersion tensor can be made as large (or small) as desired by considering larger (or smaller) periodicity cells. However, for a given physical configuration, there is no contradiction because the product \(\varepsilon _\kappa ^2 \mathbb D^*_\kappa \) is constant. In any case, if one fix the periodicity cell to be \(Y=(0,1)^d\), then one cannot use this scaling argument and the norm of \(\mathbb D^*\) can be bounded from above. Indeed, in 1-d, for a two-phase mixture of \(a_A, a_B\) in proportions \(\gamma , (1-\gamma )\), the following upper bound on \(-\mathbb D^*\) was proved in [21]

and this upper bound is uniquely attained by a simple laminate of \(a_A, a_B\) with just one point-interface in the unit cell \(Y=(0,1)\).

We now consider the effect of rotations on the periodicity cell. The analytic formula of Lemma 6.5 will be useful to check some of our numerical results which feature equivalent shapes, up to rotations (see Remark 9.7).

Lemma 6.5

Let \(\mathscr {R}\) be a rotation matrix and consider the rotated variable \(z:=\mathscr {R}y\), as well as the rotated material properties \(\tilde{a}(z):=\mathscr {R}a(\mathscr {R}^Tz)\mathscr {R}^T\). Then, the homogenized properties of \(\tilde{a}(z)\) satisfy

Proof

Let us denote by \(\tilde{\chi }_{i}(z)\) and \(\tilde{\chi }_{ij}(z)\) the cell solutions for the coefficients \(\tilde{a}(z)\). One can check that, for any vector \(\eta \in \mathbb R^d\), we have \(\tilde{\chi }^{(1)}_\eta (z) = \chi ^{(1)}_{\mathscr {R}\eta } (\mathscr {R}^Tz)\) and \(\tilde{\chi }^{(2)}_\eta (z) = \chi ^{(2)}_{\mathscr {R}\eta } (\mathscr {R}^Tz)\), from which we deduce the desired properties. \(\square \)

In order to simplify the analysis of the fourth-order tensor \(\mathbb D^*\), we choose to restrict the geometry of the periodic coefficients. From now on we make the following 8-fold symmetry assumption on the periodic coefficient a(y):

-

1.

\(y \rightarrow a(y)\) is a scalar-valued function,

-

2.

a is even in the sense that \(a = a \circ S_i\) for \(1\le i\le d\), where \(S_i\) is the symmetry operator defined by

$$\begin{aligned} S_i(y) = (y_1,\ldots ,y_{i-1}, -y_i, y_{i+1},\ldots , y_d) , \end{aligned}$$ -

3.

a is \(90^\circ \)-rotationally invariant in the sense that \(a = a \circ P_{ij}\) for \(1\le i,j\le d\), where \(P_{ij}\) is the permutation operator defined by

$$\begin{aligned} P_{ij}(y) = P_{ij}(y_1,\ldots ,y_i,\ldots , y_j,\ldots , y_d) = (y_1,\ldots ,y_j,\ldots , y_i,\ldots , y_d) . \end{aligned}$$

Note that, by periodicity of the coefficients, one can consider that the unit cell is \(Y=(-\,1/2,+\,1/2)^d\) and the above assumption it equivalent to symmetries with respect to the principal hyperplanes (orthogonal to the main axis) and to the diagonal hyperplanes passing through the origin.

The following result is then easily proved (see e.g. section 3 in chapter 6 of [10], or [14, 15]).

Lemma 6.6

Under the 8-fold symmetry assumption, if w is a Y-periodic solution of

then \(w\circ S_i\) is a Y-periodic solution of

and \(w\circ P_{ij}\) is a Y-periodic solution of

Proposition 6.7

Under the 8-fold symmetry assumption, the dispersion tensor \(\mathbb D^*\) is characterized by two parameters \(\alpha , \beta \in \mathbb R\)

with constant \(\alpha \) and \(\beta \), independent of the indices i, j such that, for any \(i\ne j\),

Remark 6.8

From Proposition 6.7 and the negative character of the tensor \(\mathbb D^*\) (see Proposition 2.5) we deduce the following bounds on \(\alpha \) and \(\beta \)

The first bound is obtained by considering all components \(\eta _i\) equal to zero, but one. The second bound is obtained by maximizing \(\mathbb D^*\left( \eta \otimes \eta \right) :\left( \eta \otimes \eta \right) \): writing the optimality condition and using the symmetry of the problem, the maximizer is attained when all \(\eta _i^2\) are equal, yielding this second bound.

A similar computation shows that under the assumption of Proposition 6.7, the optimal value of the Boussinesq parameter m, defined by (4.1), is

Proof

The fact that, under the 8-fold symmetry assumption, the homogenized tensor \(a^*\) is scalar is classical, see e.g. Section 1.5 in [30] (the isotropy of \(a^*\) can easily be proved by the same arguments as those below). Using Lemma 6.6 one can check the following symmetry properties on the cell solutions

In particular, \(\chi _{ij}\) and \((\chi _i\chi _j)\) have the same symmetry properties. On the other hand, we also have the following permutation properties

From formula (2.32) in Proposition 2.5 we find that

where the index S means that \(\mathbb D^{*}_{ijkl}\) has to be symmetrized. Now, using the permutation properties of the cell functions, it is easily seen that

Similarly,

is independent of the couple of indices \(i\ne j\). Let us show that all other entries of the tensor \(\mathbb D^*\) vanish. Consider, for example, the entry

We decompose it as

For \(k\ne i,j\), the function \(d^k_{ii}= d^k_{ii} \circ S_j\) is even with respect to \(y_j\), while \(d^k_{ij}= - d^k_{ij} \circ S_j\) is odd with respect to \(y_j\). Therefore, the integrand \(a d^k_{ii} d^k_{ij}\) has zero average on Y. For \(k=i\), \(d^i_{ii}= d^i_{ii} \circ S_i\) is odd with respect to \(y_i\), while \(d^i_{ij}= d^i_{ij} \circ S_i\) is even with respect to \(y_i\) (as the derivative of an odd function). Again, the integrand \(a d^i_{ii} d^i_{ij}\) has zero average on Y. Eventually, for \(k=j\), \(d^j_{ii}= d^j_{ii} \circ S_j\) is odd with respect to \(y_j\) (as the derivative of an even function), while \(d^j_{ij}= d^j_{ij} \circ S_j\) is even with respect to \(y_i\) (as the derivative of an odd function), and the integrand \(a d^j_{ii} d^j_{ij}\) has zero average on Y. This implies that \(\mathbb D^{*}_{iiij} = 0\).

A similar argument work for all other entries \(\mathbb D^{*}_{iijk}\), with different i, j, k, and \(\mathbb D^{*}_{ijkl}\), with different i, j, k, l. A key ingredient is always that \(\chi _{ij}\) and \((\chi _i\chi _j)\) have the same symmetry properties. Therefore, we obtain the desired result. \(\square \)

Remark 6.9

A proof, similar to that of Proposition 6.7, shows that, under the 8-fold symmetry assumption, the second order tensor \(d^*\), defined by (2.25), is isotropic too, i.e. is proportional to the identity matrix.

7 Shape optimization

7.1 Two phase periodic mixture

From now on we shall study dispersive effects for wave propagation in a two-phase periodic medium. More precisely, in the context of periodic homogenization we assume that the unit cell \(Y=(0,1)^d\) is decomposed in two subdomains \(Y^A\) and \(Y^B\), separated by a smooth interface \(\Gamma \) (see Fig. 4). The subdomains \(Y^A\) and \(Y^B\) are filled with an isotropic linear material, which coefficients \(a^A\) and \(a^B\), respectively. We consider only those mixtures which satisfy the 8-fold symmetry assumption of Sect. 6. Our ultimate goal is to find the set of all possible dispersion tensors \(\mathbb D^{*}\) with, possibly, a volume constraint for the two phases, a perimeter constraint (on the measure of \(\Gamma \)) and a prescribed homogenized property \(a^*\). In particular, we would like to know which microstructures in the unit cell yield minimal or maximal values of \(\mathbb D^{*}\). To do so, we study the shape optimization problem which determines the optimal geometry in the unit cell Y in order to minimize some objective function depending on \(\mathbb D^{*}\) or more generally on the first and second-order cell problems (which give the value of \(\mathbb D^{*}\) by virtue of Proposition 2.5). More specifically, we consider coefficients defined by

where \(a^A, a^B>0\) are two constant positive real numbers, \({\mathbf{1}}_{Y_A}(y) , {\mathbf{1}}_{Y_B}(y)\) are the characteristic functions of \(Y^A\) and \(Y^B\).

To optimize the dispersive properties of a periodic two-phase geometry, we consider the following objective function:

where \(\mathscr {J}\) is a smooth function satisfying adequate growth conditions, \(\chi _i\) is the first order cell solution of (2.6), \(\chi _{ij}\) is the second order cell solution of (2.12). By virtue of Proposition 2.5, the entries of the dispersion tensor \(\mathbb D^{*}\) are of the type of (7.1).

We shall minimize the objective function \(J(Y^A)\) with constraints (all of them or just some of them) like volume fraction of \(Y^A\) and \(Y^B\), perimeter or measure of \(\Gamma \), homogenized tensor \(a^*\). As is well known, optimal designs do not always exist in such problems [3, 39, 43, 53], unless some smoothness, geometrical or topological constraint is added. We shall not discuss this issue since our goal is rather numerical than theoretical.

7.2 Shape derivative in the multi-material problem

In order to minimize the objective function (7.1), a gradient based shape optimization algorithm [36, 43, 51] is applied. Most of the works on the Hadamard method for computing shape sensitivity are concerned with problems where the domain boundary is the optimization variable. However, here we rather optimize an interface between two materials and there are fewer works in this setting. Let us mention the cases of Darcy flows [13], conductivity problems [28, 42] and elasticity systems [5, 7, 31]. There are also some works concerned with the optimization of the homogenized tensor \(a^*\) in terms of the cell properties a(y) [11, 26, 49]. In this work, we follow the same approach but applied to the higher order cell problems in homogenization and to the dispersion tensor \(\mathbb D^*\).

To begin with, we recall the approach of Murat and Simon [39] for shape differentiation. For a smooth reference open set \(\Omega \), we consider domains of the type

with a vector field \(\theta \in W^{1,\infty }(\mathbb R^d,\mathbb R^d)\).

Definition 7.1

The shape derivative of \(J(\Omega )\) at \(\Omega \) is defined as the Fréchet derivative in \(W^{1,\infty }(\mathbb R^d,\mathbb R^d)\) at 0 of the application \(\theta \rightarrow J((\mathrm{Id}+\theta )(\Omega ))\) The following asymptotic expansion holds in the neighborhood of \(0 \in W^{1,\infty }(\mathbb R^d,\mathbb R^d)\):

where the shape derivative \(J'(\Omega )\) is a continuous linear form on \(W^{1,\infty }(\mathbb R^d,\mathbb R^d)\).

Lemma 7.2

Let \(\Omega \) be a smooth bounded open set and \(\phi _1(x) \in W^{1,1}(\mathbb R^d)\) and \(\phi _2(x)\in W^{2,1}(\mathbb R^d)\) be two given functions. The shape derivatives of

are

and

for any \(\theta \in W^{1,\infty }(\mathbb R^d;\mathbb R^d)\), respectively, where H is the mean curvature of \(\partial \Omega \), defined by \(H=\mathrm{div}\, n\), n is the unit normal vector on \(\partial \Omega \) and ds is the \((d-1)\)-dimensional measure along \(\partial \Omega \).

In order to differentiate the material properties of a periodic composite material, one has to restrict the directions of differentiation to periodic vector fields. In other words, the boundary of the periodicity cell \(\partial Y\) is not modified and only the interface \(\Gamma \) between the two phases is moved. Thus, from now on the vector field \(\theta \) belongs to \(W^{1,\infty }(\mathbb R^d,\mathbb R^d)\) and is Y-periodic.

Theorem 7.3

The shape derivative of the objective function J, defined in (7.1) reads

with

where \([*]=*^A-*^B\) denotes the jump through the interface \(\Gamma \) and \(n=n^A=-n^B\) is the unit normal vector of \(\Gamma \). The suffix \(*_t\) denotes the tangential component of a vector. The adjoint states \(p_{i}, p_{ij} \in H_{\#,0}^{1}(Y)\) are defined as the unique periodic solutions of the following adjoint problems:

Remark 7.4

In the statement of Theorem 7.3 the Einstein summation convention with respect to repeated indices is used. The solutions of the adjoint equations (7.4) and (7.5) are defined up to an additive constant. They are unique in \(H_{\#,0}^{1}(Y)\), namely when their average on Y is zero. Therefore, when this normalization condition is used, the integral term \((\int _Y {p}_{ij})\) in (7.4) can safely be dropped.

Remark 7.5

The governing equation (2.12) of the second order corrector functions \(\chi _{ij}\) depends on the first order corrector functions \(\chi _i\). Therefore, in numerical practice, the functions \(\chi _{ij}\) are computed after the functions \(\chi _i\). On the other hand, the adjoint equation (7.4) for \(p_i\) depends on \(p_{ij}\), while the other adjoint equation (7.5) depends merely on the corrector functions \(\chi _i\) and \(\chi _{ij}\). Therefore, the second order adjoint functions \(p_{ij}\) are computed first, followed by the computation of the first order adjoint functions \(p_i\). This peculiarity is similar to the backward character of the adjoint equation for a time dependent problem.

The proof of Theorem 7.3 is obtained by a standard, albeit tedious, application of the Lagrangian method for shape derivation (see [7, 28, 42] for similar proofs). For the sake of completeness, it is given in Sect. 10.

7.3 Shape derivative of a discrete approximation

Although the formulation discussed in the previous subsection is satisfying from a mathematical point of view, its numerical implementation is quite tricky since it requires one of the two following delicate algorithms. A first possibility is to re-mesh at each iteration so that the mesh is fitted to the interface \(\Gamma \): then, jumps, as well as continuous quantities, can be accurately computed (see section 6.4 in [6]). A second possibility is to fix the mesh and capture \(\Gamma \) by e.g. a level set function. In this latter case, only approximate jumps and continuous quantities at the interface can be computed (see [7]). Both approaches are not obvious to implement in practice. To avoid these difficulties, we use the approximated shape sensitivity in the multi-material setting proposed in [5, 35, 55]. In this formulation, the optimization problem is first discretized and second its shape sensitivity is derived. Let us introduce a finite-dimensional space of conforming finite elements \(V_h\subset H^1_{0,\#}(Y)\) in which are computed the approximate solutions \(\chi _{i}^h\) of (2.6), \(\chi _{ij}^h\) of (2.12), \({p}_{i}^h\) of (7.4) and \({p}_{ij}^h\) of (7.5). More precisely, \(\chi _{i}^h \in V_h\) and \(\chi _{ij}^h \in V_h\) are the unique solutions of, respectively,

The precise definitions of \({p}_{i}^h\) and \({p}_{ij}^h\) will be given in the proof below. Typically, these approximate solutions are of the type

where \(N_h\) is the dimension of \(V_h\), \(\varphi _k(x)\) are the shape functions and \(\chi _{i,k}(\Gamma )\), resp. \(p_{i,k}(\Gamma )\), are the nodal values of \(\chi _i^{h}\), resp. \(p_i^{h}\), which depend on the interface \(\Gamma \). However, the basis functions \(\varphi _k\) are independent of \(\Gamma \) and, in particular, do not satisfy any special transmission conditions at the interface \(\Gamma \). It implies that the state functions \(\chi _i^h\) are shape differentiable [5].

We introduce the discrete objective function

Proposition 7.6

The discrete objective function \(J_h\) is shape differentiable and its derivative reads

where

Proof

The proof follows that of Proposition 1.5 in [5]. We use the Lagrangian method, which introduces a Lagrangian \(\mathscr {L}_h\) as the sum of the objective function and of the constraints multiplied by Lagrange multipliers, namely the discrete variational formulations (7.6) and (7.7),

where the functions \(\hat{\chi }_{i}^{h},\hat{\chi }_{ij}^{h},\hat{p}_{i}^{h},\hat{p}_{ij}^{h}\) are any functions in \(V_h\) and with

The space \(V_h\) is independent of the interface \(\Gamma \). Therefore, the Lagrangian \(\mathscr {L}_h\) can be differentiated in the usual manner and its stationarity is going to give the optimality conditions of the optimization problem.

By definition the partial derivative of \(\mathscr {L}_h\) with respect to \({p}_{i}^{h}\) and \({p}_{ij}^{h}\) leads to the variational formulation (7.6) and (7.7). Next, the discrete adjoint equations are obtained by taking the partial derivative of \(\mathscr {L}_h\) with respect to the variables \(\chi _{i}^{h}\) and \(\chi _{ij}^{h}\). It yields the following discrete variational formulations

which are approximations of the exact variational formulations of (7.4) and (7.5).

Eventually, by a classical result (see e.g. Lemma 3.5 in [7]), the partial derivative of \(\mathscr {L}_h\) with respect to \(\Gamma \) is precisely the shape derivative of \(J_h\). Applying Lemma 7.2 to the Lagrangian (7.11) leads to (7.10). \(\square \)

8 Level set and optimization algorithms

In order to make it possible to change topology by merging boundaries during the shape optimization procedure, the level set method, introduced by Osher and Sethian [41], is used. As shown in Fig. 5, the basic idea is that the boundary is represented as the zero iso-surface of a level set function \(\phi (y)\) and the subdomains are distinguished by the sign of the level set function \(\phi (x)\). More precisely, the level set function \(\phi (y)\) is defined by

Based on the level set representation, the approximate material property for the finite element analyses is defined as follows:

where \(h_w:\mathbb R\rightarrow \mathbb R\) is a smooth monotone approximate Heaviside function:

where the parameter \(w>0\) is the width of the smoothed approximate interface. There is nothing critical in this interface smoothing process (for example, other functions \(h_w\) could be used), but it makes easier the finite element implementation. For instance, the boundary element method [29] is not required here.

In the level set method for shape optimization, the shape changes during the optimization is represented as an evolution of the level set function. That is, introducing fictitious time \(t\in [0,T]\) (that could be interpreted as a descent step), the shape evolution is obtained by solving the following Hamilton-Jacobi equation:

with periodic boundary conditions and where the normal velocity V is defined as a descent direction for the shape sensitivity

A typical simple choice is to define V as an extension of v [which is defined merely on the interface \(\Gamma \) by (8.4)] to the entire cell Y. However, it is well known that the shape sensitivity does not have sufficient smoothness [36] and following a classical regularization process we replace V by a regularized variant \(V_{reg}\) which is defined as the unique solution in \(H_{\#}^{1}(Y)\) of

where \(\epsilon _r >0\) is a regularization parameter, having the interpretation of a smoothing length (typically of the order of a few mesh cell size). In numerical practice, since the interface \(\Gamma \) is not exactly meshed, we replace the interface integral in the above variational formulation by a volume integral with a smoothed Dirac function \(\delta _w(d_\Gamma (y))\) where \(d_\Gamma \) is the signed distance function to the interface \(\Gamma \) and \(\delta _\eta \) is defined as follows:

where \(\eta >0\) is a small parameter.

In order to minimize numerical dissipation in solving the Hamilton–Jacobi equation (8.3), the level set function is reinitialized as the signed distance function \(d_\Gamma \) at each optimization iteration by solving

starting from the initial condition \(\phi _0(y)\), the prior level set function. This equation, as well as the Hamilton–Jacobi equation (8.3), are solved by a standard second-order upwind explicit finite difference scheme.

In truth, we are performing constrained optimization so that the velocity V is not computed only in terms of the derivative of the objective function (8.4) but also in terms of the constraints derivatives. More precisely, we rely on a standard Lagrangian approach, i.e. we replace the objective function by the Lagrangian which is the sum of the objective function and of the constraints multiplied by Lagrange multipliers. These Lagrange multipliers are updated at each iteration in such a way that the constraints are exactly satisfied.

The optimization process goes on as follows. First, the level set function is initialized to represent an appropriate initial configuration. Second, iterations of a steepest descent method start. Each iteration is made of the following steps. In a first step, the governing equations are solved using the finite element method and the objective function is computed. If the objective function is converged, the optimization is stopped. If not, the adjoint equations are solved in a second step. In a third step, the Lagrange multipliers are estimated to satisfy the constraints and the resulting shape derivative of the Lagrangian is used to deduce the velocity V in (8.3) (this velocity is regularized as explained above). In a fourth step, the level set function is updated based on the Hamilton–Jacobi equation (8.3). Note that the Lagrange multiplier for the volume constraint is computed using Newton’s method so that the volume constraint is exactly satisfied. Finally, if the objective function is improved and all constraints are satisfied, the time increment of the Hamilton–Jacobi equation is increased and we go back to the second step for the next optimization iteration. Otherwise, the time increment is decreased and we go back to the fourth step.

9 Numerical simulations

In our numerical simulations, we impose the 8-fold symmetry condition for the two-dimensional unit cell. We shall call this situation “isotropic” since the homogenized tensor \(a^*\) reduces to a scalar tensor and, by Proposition 6.7, the dispersion tensor \(\mathbb D^*\) is characterized by only two scalar coefficients \(\alpha \) and \(\beta \) in any space dimension, while a general fully symmetric fourth-order tensor depends on 5 independent coefficients in 2-d and 15 in 3-d. This is clearly a major simplification of the computational task of describing all possible values of \(\mathbb D^*\). It is comparable to the assumption of isotropy in linearized elasticity, where such an assumption allowed Hashin and Shtrikman to find their famous bounds on the effective Lamé moduli of two-phase composites [25]. Of course, there may be unit cells without the 8-fold symmetry such that \(a^*\) and \(\mathbb D^*\) are isotropic in the above sense. Of course again, there may be non-isotropic tensors \(\mathbb D^*\) which may achieve more optimal values, at least in some space directions, than any isotropic tensors \(\mathbb D^*\). However, since this is a first numerical study, our assumption of 8-fold symmetry is reasonable, all the more since it is common practice in engineering to favor symmetric designs.

As shown in Fig. 6, the analysis domain is a quarter of the unit cell (for simplicity), while the design domain is one eighth of the unit cell, namely half of the analysis domain. The design is recovered on the other half of the analysis domain by symmetry with respect to the diagonal. The finite element analysis is performed with the FreeFEM++ software [27]. The domain is meshed with triangular elements and we use \(\mathbb P1\) finite elements. The two phases are isotropic with material properties \(a_A=10\) and \(a_B=20\), respectively. In all our numerical examples, we rely on a structured triangular mesh for the finite element analysis. This mesh is obtained from a regular squared mesh by dividing each square in four triangles along its diagonals. The squared mesh is used for the finite differences discretization of the Hamilton–Jacobi equation. The regularization parameter is set to \(\epsilon _r^2=0.05\), the width of the approximated Heaviside function is set to \(w=0.02\) and the width of the approximated Dirac function is \(\eta =0.055\).

9.1 Optimizing an energy associated to \(\mathbb D^*\)

In this subsection, as a first numerical test, we choose the specific direction \(\eta =(1,1)\) and we minimize or maximize the energy \(\mathbb D^*\left( \eta \otimes \eta \right) :\left( \eta \otimes \eta \right) =2\alpha +\beta \) for the Burnett tensor \(\mathbb D^*\) with volume constraint, perimeter constraint and prescribing the homogenized tensor \(a^{*}\) as follows:

where \(G_v \), \(G_p\) and \(G_{a^*} \) are constraint functions for the volume, the perimeter and the homogenized tensor \(a^{*}\), respectively. The constants \(\overline{G_{v}}\), \(\overline{G_{p}}\) and \(\overline{a^{*}}\) are prescribed values for these constraints, respectively. Note that the objective function \(J(\Gamma )=2\alpha (\Gamma ) +\beta (\Gamma )\) is always non-negative, being the second bound established in Remark 6.8. We shall use the optimization algorithm of Sect. 8. However, it is not obvious to find an admissible initial configuration, satisfying all constraints. Therefore, we adopt the following four-step optimization procedure, starting from any initialization:

- Step 1: :

-

optimize \(G_{v}\) alone to satisfy \(G_{v}=0\).

- Step 2: :

-

minimize \(G_{p}\), while keeping \(G_{v}=0\), to satisfy \(G_{p}\le 0\).

- Step 3: :

-

minimize \(G_{a^*}\), with the constraints \(G_{v}=0\) and \(G_{p}\le 0\), to satisfy \(G_{a^*}=0\).

- Step 4: :

-

optimize \(J(\Gamma )\), with the constraints \(G_{v}=0\), \(G_{p}\le 0\) and \(G_{a^*}=0\).

In this subsection, we use a \(50\times 50\) structured mesh for the analysis domain. The isotropic materials A and B have material properties \(a_A=10\) and \(a_B=20\). The upper limit of the perimeter constraint is set to \(\overline{G_p}=1.5\). We consider two cases for the volume constraint: either \(\overline{G_v}=0.9\) or \(\overline{G_v}=0.1\), which can be interpreted as material A being the inclusion in the first case, and material B being the inclusion in the second case. By symmetry, the homogenized tensor \(\overline{a^*}\) is isotropic and its prescribed scalar value is set to 10.705 in the first case and 18.72 in the second case. The relative error for judging whether the constraint function \(G_{a^*}\) is satisfied is set to \(5\times 10^{-3}\).

Figures 7 and 8 show initial and optimal configurations when material A (in black) is the inclusion and when material B (in white) is the inclusion, respectively. We test three different initial configurations, for which the optimal configurations may be quite different. Therefore, we guess there are many local optimal solutions for this type of optimization problems. In the minimization cases, the inclusions are changed to more complex and detailed shape. This is consistent with our Remark 6.2 which states that smaller inclusions yield smaller dispersion (in absolute value). On the other hand, in the maximization cases, the smaller inclusions may merge and give rise to simpler optimal shapes.

Volume fraction \(\overline{G_v}=0.9\) (material A, in black, being the inclusion). a Initial configuration of case 1. b configuration after step 3; \(\alpha =2.094\times 10^{-3}\), \(\beta =2.903\times 10^{-3}\), \(J=7.092\times 10^{-3}\). c minimized solution of case 1; \(\alpha =1.590\times 10^{-3}\), \(\beta =-1.995\times 10^{-3}\), \(J=1.185\times 10^{-3}\), \(J/J_{0}=0.1670\), \(G_p\): active. d maximized solution of case 1; \(\alpha =9.979\times 10^{-4}\), \(\beta =5.932\times 10^{-3}\), \(J=7.928\times 10^{-3}\), \(J/J_{0}=1.179\), \(G_p\): non-active. e Initial configuration of case 2. f configuration after step 3; \(\alpha =1.566\times 10^{-3}\), \(\beta =2.880\times 10^{-3}\), \(J=6.012\times 10^{-3}\). g minimized solution of case 2; \(\alpha =1.979\times 10^{-3}\), \(\beta =-2.425\times 10^{-3}\), \(J=1.533\times 10^{-3}\), \(J/J_{0}=0.2549\), \(G_p\): active. h maximized solution of case 2; \(\alpha =2.296\times 10^{-3}\), \(\beta =1.521\times 10^{-3}\), \(J=6.113\times 10^{-3}\), \(J/J_{0}=1.017\), \(G_p\): non-active. i Initial configuration of case 3. j configuration after step 3; \(\alpha =5.339\times 10^{-4}\), \(\beta =4.862\times 10^{-3}\), \(J=5.929\times 10^{-3}\). k minimized solution of case 3; \(\alpha =9.226\times 10^{-4}\), \(\beta =-4.680\times 10^{-4}\), \(J=1.377\times 10^{-3}\), \(J/J_{0}=0.2323\), \(G_p\): active. l maximized solution of case 3; \(\alpha =7.778\times 10^{-4}\), \(\beta =6.550\times 10^{-3}\), \(J=8.106\times 10^{-3}\), \(J/J_{0}=1.367\), \(G_p\): non-active

Volume fraction \(\overline{G_v}=0.1\) (material B, in white, being the inclusion). a Initial configuration of case 4. b configuration after step 3; \(\alpha =3.808\times 10^{-3}\), \(\beta =5.195\times 10^{-3}\), \(J=1.281\times 10^{-2}\). c Minimized solution of case 4; \(\alpha =3.903\times 10^{-3}\), \(\beta =-6.129\times 10^{-3}\), \(J=1.677\times 10^{-3}\), \(J/J_{0}=0.1308\), \(G_p\): active. d Maximized solution of case 4; \(\alpha =1.332\times 10^{-3}\), \(\beta =1.113\times 10^{-2}\), \(J=1.379\times 10^{-2}\), \(J/J_{0}=1.077\), \(G_p\): non-active. e Initial configuration of case 5. f Configuration after step 3; \(\alpha =2.966\times 10^{-3}\), \(\beta =4.893\times 10^{-3}\), \(J=1.083\times 10^{-2}\). g Minimized solution of case 5; \(\alpha =3.897\times 10^{-3}\), \(\beta =-6.151\times 10^{-3}\), \(J=1.643\times 10^{-3}\), \(J/J_{0}=0.1518\), \(G_p\): active. h Maximized solution of case 5; \(\alpha =1.331\times 10^{-3}\), \(\beta =1.112\times 10^{-2}\), \(J=1.378\times 10^{-2}\), \(J/J_{0}=1.273\), \(G_p\): non-active. i Initial configuration of case 6. j configuration after step 3; \(\alpha =9.965\times 10^{-4}\), \(\beta =8.009\times 10^{-3}\), \(J=1.000\times 10^{-2}\). k Minimized solution of case 6; \(\alpha =2.241\times 10^{-3}\), \(\beta =-2.234\times 10^{-3}\), \(J=2.247\times 10^{-3}\), \(J/J_{0}=0.2247\), \(G_p\): active. l Maximized solution of case 6; \(\alpha =1.334\times 10^{-3}\), \(\beta =1.117\times 10^{-2}\), \(J=1.384\times 10^{-2}\), \(J/J_{0}=1.384\), \(G_p\): non-active.

Remark 9.1

The perimeter constraint is active in all cases of minimizing \(J(\Gamma )\) and non-active in all cases of maximizing \(J(\Gamma )\). This is consistent with our Remark 6.2.

9.2 Upper bounds on the dispersive effect

The goal of this subsection is to numerically find upper bounds on the coefficient \(\alpha \) and \(\beta \) of the isotropic dispersive tensor \(\mathbb D^*\) defined in Proposition 6.7, constituting an upper Pareto front. Recall that this isotropy condition is the result of our \(8-\)fold symmetry assumption in the unit cell which drastically simplifies the task of finding upper bounds. We restrict ourselves to upper bounds since, by virtue of Remark 6.2, an optimal lower bound on \(-\,\mathbb D^*\) is zero (which is achieved by taking smaller and smaller repetition of the same microstructure in the unit cell). Of course, non trivial lower bounds could be found if one adds a perimeter constraint but we did not explore this issue and instead focus only on upper bounds.

We use numerical (gradient-based) optimization to find such upper bounds and, more precisely, the upper Pareto front in the \((\alpha ,\beta )\) plane for given phase properties and proportions. Our goal is thus to obtain the curve of upper bounds for all possible \((\alpha ,\beta )\), which is alike the celebrated Hashin-Shtrikman bounds [25] but for dispersive effects. If the set of all possible \((\alpha ,\beta )\) were convex, then the upper Pareto front could be obtained by maximizing all possible linear convex combination of \(\alpha \) and \(\beta \):

where \(\theta \in [0,1]\) is a parameter, the phase proportion and the homogenized tensor \(a^*\) are constrained, and a perimeter constraint is added on the interface \(\Gamma \) to exclude too fragmented configurations. Unfortunately, it is not known whether the set of all possible \((\alpha ,\beta )\) is convex or not and solving (9.1) for different values of \(\theta \in [0,1]\) would yield an upper bound merely on the convex envelope of this unknown set.

In order to capture a possibly non-convex upper bound, we modify (9.1) by replacing the linear objective function by a rotated quadratic one. The main idea (see Fig. 9) is to locally approximate the Pareto front by parabolas the main axis of which is oriented by an angle \(\theta \) with respect to the horizontal axis. Discretizing uniformly the angle as \(0\le \theta _i \le \frac{\pi }{2}\), for \(i=1,2,\ldots ,n\), we introduce rotated coordinates

where \(\alpha _{\max }\), \(\alpha _{\min }\), \(\beta _{\max }\), \(\beta _{\min }\) are maximum and minimum values for \(\alpha \) and \(\beta \) (see Remark 9.2 for their evaluation). Therefore, \(\alpha _N\) and \(\beta _N\) represent normalized \(\alpha \) and \(\beta \). Then, we replace the linear formulation (9.1) by the following parabolic formulation

where \(c_p>0\) is a parameter for the parabola (we shall discuss its choice in a next subsection). For sufficiently large values of \(c_p\) we expect that such parabolas can better fit the possibly non-convex shape of the \((\alpha ,\beta )\) set, although one can easily imagine non-convex (but highly unlikely) shapes that cannot be approached from the outside by parabolas. All the numerical results in this section have been obtained by using the parabolic formulation (9.2) with a complicated discretization and initialization strategy for the angle \(\theta \) that we now describe (see Fig. 10).

Optimization strategy for obtaining a Pareto front of optimal solutions. a Step 5; optimal solutions for \(\theta _1,\theta _2, \theta _3\) are computed with the initial shape obtained at step 4, b step 6; next point \(\theta _{i+1}\) for maximizing \(J_{i+1}\) is defined as the mid-point of the nearest neighbor pair having the longest distance, c step 7; two optimizations for the new parameter \(\theta _{i+1}\) are run for different initializations, being the optimal solutions for \(\theta _j\) and \(\theta _k\), d step 7; two candidates for the optimal solution of \(J_{i+1}\) are obtained, e step 8; the optimal solution of \(J_{i+1}\) is selected as the best of the two candidates, f step 9; previous solutions are deleted if the new \(i+1\)-th optimal solution is better, g step 9; Pareto front is updated, h step 11; if the solution for \(\theta =0\) is deleted, the new point is set to \(\theta =0\) and the two initializations are taken as the optimal solutions for \(\theta =\pi /2\) and \(\theta =\theta _i\).

Since the results of the previous subsection have shown evidence of possible local maxima for (9.2), we devise a strategy to avoid as much as possible the effect of local optima and blind initializations in the optimization process. The details of our optimization strategy are as follows:

- step 1: :

-

The level set function is initialized and the parabola parameter \(c_p\) is defined.

- step 2: :

-

The function \(G_{v}(\Gamma )\) is optimized until satisfying \(G_{v}(\Gamma )=0\).

- step 3: :

-

The function \(G_{p}(\Gamma )\) is minimized with \(G_{v}(\Gamma )=0\) until satisfying \(G_{p}\le 0\).

- step 4: :

-

The function \(G_{a^*}(\Gamma )\) is minimized with \(G_{v}=0\) and \(G_{p}\le 0\) until satisfying \(G_{a^*}=0\).

- step 5: :

-

As shown in Fig. 10a, starting from the optimal shape of step 4, three optimal solutions are computed by maximizing \(J_i|_{i=1,2,3}\) with constraints, for the angular parameters \(\theta _1=0\), \(\theta _2=\pi /2\) and \(\theta _3=\pi /4\). This is the end of the initialization and we now start iterating by adding more and more angles \(\theta _i\) and removing those which are not optimal (step 9).

- step 6: :

-