Abstract

In this article, the construction of nested bases approximations to discretizations of integral operators with oscillatory kernels is presented. The new method has log-linear complexity and generalizes the adaptive cross approximation method to high-frequency problems. It allows for a continuous and numerically stable transition from low to high frequencies.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this article, the efficient numerical solution of Helmholtz problems

used to model acoustics and electromagnetic scattering will be considered. Herein, \(\kappa \) denotes the wave number and \(\varOmega ^c:={\mathbb {R}}^3{\setminus } \overline{\varOmega }\) the exterior domain of the obstacle \(\varOmega \subset {\mathbb {R}}^3\). The parameter \(\alpha \) and the right-hand side \(u_0\) appearing in the impedance condition (1b) are given. A convenient way to solve exterior problems is the reformulation as an integral equation [11, 13, 14] over the boundary \(\varGamma \) of the scatterer \(\varOmega \). The Galerkin discretization leads to large-scale fully populated matrices \(A\in {\mathbb {C}}^{M\times N}\),

with \(i\in I:=\{1,\dots ,M\},\,j\in J:=\{1,\dots ,N\}\), test and ansatz functions \({\varphi }_i,\,\psi _j\), having supports \(X_i:={\text {supp}\,}{\varphi }_i\) and \(Y_j:={\text {supp}\,}\psi _j\), respectively. We consider kernel functions \(K\) of the form

with an arbitrary asymptotically smooth (with respect to \(x\) and \(y\)) function \(f\), i.e., there are constants \(c_{\text {as},1},c_{\text {as},2}>0\) such that for all \(\alpha ,\beta \in {\mathbb {N}}^3\)

An example is \(K(x,y)=S(x-y)\) used in the single layer ansatz, where \(S(x)=\exp ({\text {i}}\kappa |x|)/(4\pi |x|)\) denotes the fundamental solution. Notice that the double layer potential \(K(x,y)=\partial _{\nu _y} S(x-y)\) is of the form (3) only if \(\varGamma \), i.e. the unit outer normal \(\nu \), is sufficiently smooth.

Depending on the application, low or high-frequency problems are to be solved. For low-frequency problems, i.e. for \(\kappa \,{\text {diam}}{\varOmega }\le 1\), the treecode algorithm [5] and fast multipole methods (FMM) [24–26, 35] were introduced to treat \(A\) with log-linear complexity. The panel clustering method [32] is directed towards more general kernel functions. All previous methods rely on degenerate approximations

using a short sum of products of functions \(u_i\) and \(v_i\) depending on only one of the two variables \(x\) and \(y\) chosen from a pair of domains \(X\times Y\) which satisfies the far-field condition

with a given parameter \(\eta _{\text {low}}>0\). Since replacing the kernel function \(K\) in the integrals (2) with degenerate approximations (5) leads to matrices of low rank, a more direct approach to the efficient treatment of matrices (2) are algebraic methods such as mosaic-skeletons [39] and hierarchical matrices [27, 29]. These methods also allow to define approximate replacements of usual matrix operations such as addition, multiplication, inversion, and LU factorization; cf. [23]. An efficient and comfortable way to construct low-rank approximations is the adaptive cross approximation (ACA) method [6]. The advantage of this approach compared with explicit kernel approximation is that significantly better approximations can be expected due the quasi-optimal approximation properties; cf. [7]. Furthermore, ACA has the practical advantage that only few of the original entries of \(A\) are used for its approximation. A second class are wavelet compression techniques [1], which lead to sparse and asymptotically well-conditioned approximations of the coefficient matrix.

It is known that the fundamental solution \(S\) (and its derivatives) of any elliptic operator allows for a degenerate approximation (5) on a pair of domains \((X,Y)\) satisfying (6); see [7]. This applies to the Yukawa operator \(-\Delta +\kappa ^2\) for any \(\kappa \), because the decay of \(S\) benefits from the positive shift \(\kappa ^2\). However, the negative shift \(-\kappa ^2\) in the Helmholtz operator introduces oscillations in \(S\). Hence, for high-frequency Helmholtz problems, i.e. for \(\kappa \,{\text {diam}}{\varOmega }>1\), the wave number \(\kappa \) enters the degree of degeneracy \(k\) in (5) in a way that \(k\) grows linearly with \(\kappa \). In addition to this difficulty, the mesh width \(h\) of the discretization has to be chosen such that \(\kappa h\sim 1\) for a sufficient accuracy of the solution. We assume that

which implies that \(\kappa \sim \sqrt{N}\sim \sqrt{M}\). Notice that the recent formulation [16] allows to avoid the previous condition and hence leads to significantly smaller \(N\). For high-frequency Helmholtz problems, one- and two-level versions [36, 37] were presented with complexity \(O(N^{3/2})\) and \(O(N^{4/3})\), respectively. Multi-level algorithms [2, 18] are able to achieve logarithmic-linear complexity. The previous methods are based on an extensive analytic apparatus that is tailored to the kernel function \(K\). To overcome the instability of some of the employed expansions at low frequencies, a wideband version of FMM was presented in [17]. The \({\mathcal {H}}^2\)-matrix approach presented in [4] is based on the explicit kernel expansions used in [2, 36] for two-dimensional problems.

A well-known idea from physical optics (cf. [21]) is to approximate \(K(\cdot ,y)\) in a given direction \(e\in {{\mathbb {S}}}^2\) by a plane wave. The desired boundedness of \(k\) with respect to \(\kappa \) when approximating

can be achieved if (6) is replaced by a condition which depends on \(\kappa \) and which is directionally dependent. This is exploited by the fast multipole methods presented in [12, 19, 20, 33] and the so-called butterfly algorithm [15, 34]. The aim of this article is to combine this approach with the ease of use of ACA, i.e., our aim is to construct approximations to \(A\) with complexity \(k^2\,N\log {N}\) using only few of the original entries of \(A\). In this sense, this article generalizes ACA (which achieves log-linear complexity only for low-frequencies) to high-frequency Helmholtz problems. An interesting and important property of the new method is that it will allow for a continuous and numerically stable transition from low to high wave numbers \(\kappa \) by a generalized far-field condition that fades to the usual condition (6) if the wave number becomes small. Since we approximate the operator rather than just its application to a vector, this article is expected to lay ground to future work related to the definition of approximate arithmetic operations and hence to efficient preconditioners for high-frequency problems.

The remaining part of this article is organized as follows. In Sect. 2, we prove estimates like (4) for \(\hat{K}\) in a cone around \(e\). The desired asymptotic smoothness of \(\hat{K}\) leads to a far-field condition on the pair of domains \((X,Y)\) on which such estimates are valid. In Sect. 3, these conditions will be accounted for by subdividing the matrix indices hierarchically. It will be seen that the number of blocks resulting from this partitioning is too large to allow for hierarchical matrix approximations with log-linear complexity. Therefore, nested bases approximations are required, and in Sect. 4 directional \({\mathcal {H}}^2\)-matrices will be introduced as a generalization of usual \({\mathcal {H}}^2\)-matrices [28, 31] that incorporate a directional hierarchy. To define approximate arithmetic operations for such matrices, the \({\mathcal {H}}^2\)-matrix operations introduced in [9] have to be generalized to take into account the directional hierarchy.

The results obtained in the first part of this article are amenable to any way of construction of the low-rank approximation, e.g. polynomial interpolation. Sect. 5 is devoted to the construction of directional \({\mathcal {H}}^2\)-matrices using only few of the entries of \(A\). Error estimates for the constructed nested bases are presented and complexity estimates prove the log-linear overall storage and the log-linear number of operations required by the new technique. Finally, Sect. 6 presents numerical experiments that validate our analysis.

2 Directional asymptotic smoothness

In [7] it is proved that the singularity function of any elliptic second-order partial differential operator is asymptotically smooth. The latter property can be used to prove convergence of ACA and hence the existence of degenerate kernel approximations (5). The wave number \(\kappa \) enters the estimates on \(k\) in (5) only through the expression \(c_\kappa :=\max \{1,\kappa \,\max _{x\in X,\,y\in Y}|x-y|\}\), which in general becomes unbounded in the limit \(\kappa \rightarrow \infty \). For parts \(X\) of the domain of small size, i.e. \(\kappa \,{\text {diam}}{X}\le 1\), satisfying (6), \(c_\kappa \) and hence \(k\) in (5) are bounded independently of \(\kappa \). This follows from the fact that the recursive construction of domains satisfying (6) ensures that (6) is sharp in the sense that there is a constant \(q>1\) such that

If the diameters of \(X\) and \(Y\) are comparable, i.e. \({\text {diam}}Y\le c\,{\text {diam}}X\) (which is a valid assumption for \({\mathcal {H}}\)-matrix partitions), then

and thus

is bounded independently of \(\kappa \). In the other case \(\kappa \,{\text {diam}}X>1\), we will not be able to prove asymptotic smoothness with bounded constants. However, a similar property can be proved if the far-field condition (6) is replaced with a frequency dependent condition and if the corresponding far field is subdivided into directions.

For the ease of presentation, \(K\) defined in (3) will be investigated as a function of \(x\) with fixed \(y\). Hence, after shifting \(x\) to \(x+y\) we consider

which can be regarded as \(K\) divided by the plane wave \(\exp ({\text {i}}\kappa (x,e))\) with some given vector \(e\in {\mathbb {S}}^2\); cf. Fig. 1. It will be shown that \(\hat{K}\) is asymptotically smooth with respect to \(x\) in a cone around \(e\). To this end, \(\hat{K}\) will be investigated in the coordinates \(r:=|x|\) and

As a first step, we investigate the derivative of \(\xi \) in direction \(e\), i.e., we assume that \(\cos {\varphi }>0\), where \({\varphi }:=\measuredangle (x,e)\). Notice that the desired smoothness cannot be expected for \(\cos {\varphi }\le 0\); see Fig. 1. Furthermore, it will be seen later that it is required that \(\sin {\varphi }\rightarrow 0\) as \(\kappa \rightarrow \infty \). Hence, the following upper bound on \(|\sin {\varphi }|\) is a valid technical assumption.

Lemma 1

Let \(x\in {\mathbb {R}}^3\) such that \(\cos {\varphi }>0\) and \(|\sin {\varphi }|<1/4\). Then there is a constant \(c_{\text {as},3}>0\) such that

Proof

We may assume that \(e=e_1:=(1,0,0)^T\). Hence, \(x_1=|x|\cos \measuredangle (x,e_1)>0\) and \(\xi (x)=|x|-x_1\). In order to estimate the \(p\)-th order derivative of \(\xi \) with respect to \(x_1\), we define

and extend \(\xi \) regarded as a function in \(x_1\) to

which is holomorphic in \(B_\rho (x_1),\,\rho :=|x|/2\). Consider \(z=\alpha +{\text {i}}\beta \in B_\rho (x_1)\). It is easy to see that any ball \(B_r(x_1)\) of radius \(0<r<x_1/\sqrt{2}\) is contained in the set \(\{(x,y)\in {\mathbb {C}}:x>|y|\}\). The assumption \(|\sin {\varphi }|<1/4\) implies that \(\cos {\varphi }>1/\sqrt{2}\) and hence that \(\rho =|x|/2=x_1/(2\cos {\varphi })<x_1/\sqrt{2}\). In particular, we obtain that \(\alpha >b>0\) and \(\alpha ^2>\beta ^2\). With \(A:=|z^2+b^2|=\sqrt{B^2+4\alpha ^2\beta ^2},\,B:=\alpha ^2-\beta ^2+b^2\), we have

Due to \(|\sqrt{x^2\pm y^2}-|x||\le y^2/\sqrt{x^2\pm y^2}\) for all \(x,y\in {\mathbb {R}}\), it follows that

and

Hence,

which follows from

and in particular \(A\ge |z|^2\). From Cauchy’s differentiation formula we obtain

\(\square \)

Using the previous estimate on the derivatives of \(\xi \), we are now able to estimate the derivatives of \(\hat{K}\) in directions \(e\) and \(e_\perp \in {\mathbb {S}}^2\) perpendicular to \(e\). To this end, we exploit (4) and make use of

which holds true for any direction \(v\). Thus, we require an estimate for \(|\partial ^i_{v} \exp ({\text {i}}\kappa \xi )|\). This will be done in the following two lemmas for the cases \(v=e\) and \(v=e_{\perp }\), respectively.

Lemma 2

Let \(x\) and \({\varphi }\) be as in Lemma 1. Let \(d>1/\kappa \) and define \(\eta :=\kappa \, d^2/r,\,\gamma :=\kappa \, d |\sin {\varphi }|\). Then

where \(c := 2 c_{\text {as},1}\exp (4c_{\text {as},3}\gamma )\) and \(\rho := \max \{c_{\text {as},2},4\} \max \{\gamma ,\eta \}\).

Proof

In order to estimate \(|\partial ^i_{e} \exp ({\text {i}}\kappa \xi )|\), we apply Faà di Bruno’s formula expressed in terms of Bell polynomials \(B_{n,k}\)

Using Lemma 1 and \(\kappa \,d>1\), we obtain

where \(\tilde{\rho }:=\max \{\eta ,\gamma \}\) and \(j_\nu = 0\) for all \(\nu > i-k+1\). From the multinomial theorem for \(\varvec{j}\in {\mathbb {N}}^d\) and \(L:=i-k+1\)

it follows that

and hence \(|\partial ^i_e\exp ({\text {i}}\kappa \xi )| \le \tilde{c}\, i!(2\tilde{\rho }/d)^i\) with \(\tilde{c} := \exp (4 c_{\text {as},3}\gamma )\). Together with (9) this yields

due to \(d/r=\eta /(\kappa \,d)<\eta \le \tilde{\rho }\) and \(\sum _{j=0}^p t^j \le 2\,t^p\) for \(t\ge 2\). \(\square \)

Lemma 3

Let \(e_\perp \in {\mathbb {S}}^2\) be any direction perpendicular to \(e\in {\mathbb {S}}^2\) and let \(d,\eta ,\gamma \) as in Lemma 2 such that \(\eta ,\gamma <1\). Then

where \(\rho := \max \{6/\sqrt{\tilde{\rho }}, 2 c_{\text {as},2}\}\, \tilde{\rho }\) and \(\tilde{\rho }:= \max \{\eta ,\gamma \}\).

Proof

First, we claim that \(\partial _{e_\perp }^i \exp ({\text {i}}\kappa \xi )\) consists of at most \(3^i\) summands of the form

where \(n,m\in {\mathbb {N}}\) with \(2(m+n) \ge i \ge m+n\) and \(|c_g| \le 2^i i!\). This can be seen by induction using

We estimate

Here, we used that \(|(x,e_\perp )| \le r|\sin {\varphi }| = r\gamma /(\kappa \,d)\). As in the proof of Lemma 2 we have that \(d/r <\eta \) and hence

The last estimate follows from \(2m+n\ge i/2\). This implies that \(| \partial ^{i}_{e_\perp } \exp ({\text {i}}\kappa \xi ) | \le i! (6/d)^i \,\tilde{\rho }^{i/2}\) and together with (9) we get

where \(\hat{c} := \max \{6/\sqrt{\tilde{\rho }}, 2 c_{\text {as,2}}\}\). \(\square \)

We return to the general case of estimating the derivatives of \(\hat{K}(x,y)\) for \(x\in X\) and \(y\in Y\). The last two lemmata show that the derivatives of \(\hat{K}\) can be controlled by the parameters \(\eta ,\,\gamma \), and \(d\). Let \(\chi (X)\) denote the Chebyshev center of \(X\). Using the angle condition

and the high-frequency far-field condition

with \(0<\gamma _{{\text {high}}},\eta _{{\text {high}}}<1\), we obtain for the choice \(d={\text {diam}}{X}\) and \(x\mapsto x-y\) that \(d>1/\kappa \) and

The following lemma shows that \(\gamma =\kappa \,{\text {diam}}{X}\sin \measuredangle (x-y,e)\) is bounded by \(\tfrac{\gamma _{{\text {high}}}+\eta _{{\text {high}}}}{1-\eta _{{\text {high}}}}\).

Lemma 4

Let \(X\) and \(Y\) satisfy (10) and (11). Then for \(x\in X\) and \(y\in Y\)

Proof

Let \(u\times v\) denote the cross product of \(u,v\in {\mathbb {R}}^3\). Then

It follows that

Due to \(\eta _{\text {high}}|\chi (X)-y|\ge \kappa ({\text {diam}}{X})^2\ge {\text {diam}}{X}\), we obtain that the denominator of the last expression is bounded from below by \((1-\eta _{\text {high}})|\chi (X)-y|\). Hence,

\(\square \)

As a consequence of the angle condition (10) and the far-field condition (11) we obtain from Lemmas 2 and 3 for \(x\in X\) and \(y\in Y\)

where \(c\) is independent of \(\kappa \), the directions \(e,e_\perp \in {\mathbb {S}}^2\) satisfy \((e,e_\perp )=0\) and \(0<\rho <1\) for small enough \(\eta _{{\text {high}}}\) and \(\gamma _{{\text {high}}}\). Figure 2 shows that the angle condition is not a result of overestimation. The angle of the region in which \(\hat{K}\) is smooth decreases with \(\kappa \).

3 Matrix partitioning

The aim of this section is to partition the set of indices \(I\times J,\,I=\{1,\dots ,N\}\) and \(J=\{1,\dots ,M\}\), of the matrix defined in (2) into sub-blocks \(t\times s,\,t\subset I\) and \(s\subset J\), such that the associated supports

satisfy (11) in the high-frequency case \(\kappa \,{\text {diam}}X_t>1\) and (6) if \(\kappa \,{\text {diam}}{X_t}\le 1\).

Before we discuss the matrix partition, let us make some assumptions that are in line with the usual finite element discretization. The first assumption is that the overlap of the sets \(X_i,\,i\in I\), is bounded in the sense that there is a constant \(\nu >0\) such that

Furthermore, the surface measure \(\mu \) has the property that there is \(c_\varGamma >0\) such that

for all \(X\subset \varGamma \). The usual way of constructing hierarchical matrix partitions is based on cluster trees; see [7, 30]. A cluster tree \(T_I\) for the index set \(I\) is a binary tree with root \(I\), where each \(t \in T_I\) and its successors \(t',t'' \in T_I\) (if they exist) have the properties \(t = t' \cup t'',\,t'\ne \emptyset \ne t''\), and \(t' \cap t'' = \emptyset \). We refer to \(\mathcal {L}(T_I) = \{t \in T_I:t\text { has no successors}\}\) as the leaves of \(T_I\) and define

where \({\text {dist}}(t, s)\) is the minimum distance between \(t\) and \(s\) in \(T_I\). Furthermore,

denotes the depth of \(T_I\). We assume that a cluster tree \(T_I\) is constructed such that there are constants \(c_g,c_G>0\) with

for all \(t\) from the \(\ell \)-th level \(T_I^{(\ell )}\) of \(T_I\). We will make use of the notation \(S_I(t)\) for the set of sons of a cluster \(t\in T_I\). The same properties are also assumed for the sets \(Y_j,\,j\in J\). Under these assumptions it follows that the depth \(L\) of the cluster trees is of the order \(L\sim \log {N}\sim \log {M}\).

A block cluster tree \(T_{I\times J}\) is a quad-tree with root \(I\times J\) satisfying conditions analogous to a cluster tree. It can be constructed from the cluster trees \(T_I\) and \(T_J\) in the following way. Starting from the root \(I\times J\in T_{I\times J}\), let the sons of a block \(t\times s\in T_{I\times J}\) be \(S_{I\times J}(t,s):=\emptyset \) if \(t\times s\) satisfies the low-frequency far-field condition

or the high-frequency far-field condition

or \(\min \{|t|,|s|\}\le {n_{\min }}\) with some given constants \(\eta _{\text {low}},\eta _{\text {high}},{n_{\min }}>0\). In all other cases, we set \(S_{I\times J}(t,s):=S_I(t)\times S_J(s)\). Notice that for \(\kappa =0\) we obtain the usual far-field condition (6). Furthermore, the transition from the low to the high-frequency regime is continuous in the sense that for \(\kappa \max \{{\text {diam}}{X_t},{\text {diam}}{Y_s}\}=1\) the conditions (15b) and (16b) are equivalent with \(\eta _{\text {high}}=\eta _{\text {low}}\). We will, however, keep both constants in order to be able to optimize them independently in the implementation of the algorithm.

The set of leaves of \(T_{I\times J}\) defines a partition \(P\) of \(I\times J\). As usual, we partition \(P\) into admissible and non-admissible blocks

where each \(t\times s\in P_{{\text {adm}}}\) satisfies (15) or (16) and each \(t\times s\in P_{{\text {nonadm}}}\) is small, i.e. satisfies \(\min \{|t|,|s|\}\le {n_{\min }}\). In order to distinguish low and high-frequency blocks, we further subdivide

where \(P_{{\text {low}}} :=\{t\times s\in P:t\times s\text { satisfies (15) }\}\) and \(P_{{\text {high}}}:=P_{{\text {adm}}}{\setminus } P_{{\text {low}}}\).

The following lemma will be the basis for the complexity analysis of the algorithms presented in this article. Notice that this lemma analyzes the so-called sparsity constant of hierarchical matrix partitions introduced in [23] for the far-field condition (6). Since the lemma states that this constant is unbounded with respect to \(\kappa \), usual \({\mathcal {H}}\)-matrices are not able to guarantee logarithmic-linear complexity for the high-frequency far-field condition (16b). Therefore, in the next section a variant of \({\mathcal {H}}^2\)-matrices will be introduced.

Lemma 5

Let \(t\in T_I^{(\ell )}\). The set \(\{s\in T_J:t\times s\in P_{\text {high}}\}\) has cardinality \({\mathcal {O}(1+2^{-\ell }\kappa ^2)}\).

Proof

The assumptions (13) and (14) guarantee that each set

contains at most \(\nu c_G c_\varGamma 2^\ell (2\rho )^2\) clusters \(s\) from the same level \(\ell \) in \(T_J\). This follows from

Let \(s\in T_J\) such that \(t\times s\in P_{\text {high}}\). Furthermore, let \(t^*\) and \(s^*\) be the father clusters of \(t\) and \(s\), respectively. Suppose that \(\max _{y\in Y_s}|\chi (X_t)-y| \ge \rho _0\), where

Then

implies that \(t^*\times s^*\in P_{\text {high}}\). Hence, \(P_{\text {high}}\) cannot contain \(t\times s\), which is a contradiction. It follows that

From (17) we obtain that

and hence the assertion. \(\square \)

3.1 Directional subdivision of high-frequency blocks

In the high-frequency regime, i.e. \(\kappa \min \{{\text {diam}}{X_t},{\text {diam}}{Y_s}\}>1\), the matrix block corresponding to \(t\times s\in P_{\text {high}}\) cannot be approximated independently of \(\kappa \) unless it is directionally subdivided; see the discussion in Sect. 2. In view of the angle condition (10), we partition the space \({\mathbb {R}}^3\) recursively into a hierarchy of unbounded pyramids. The first subdivision partitions \({\mathbb {R}}^3\) into the 6 pyramids defined by the origin and the faces of the unit cube as the pyramids’ bases. In each of the next steps, a pyramid is subdivided by dividing its base perpendicular to a largest side of the base into two equally sized halves. A pyramid \(Z\) resulting from \(\nu \) subdivisions satisfies

where \(e\in {\mathbb {S}}^2\) denotes the vector pointing from the origin to the center of the base of \(Z\). For a given cluster \(t\in T_I^{(\ell )}\) from the \(\ell \)-th level of \(T_I\) let \(\nu _t\) be the smallest non-negative integer such that

where \(\nu _0\in {\mathbb {N}}\) is a fixed value which will be specified later on. Denote by \({\mathcal {E}}(t)\) the set of directions \(e\in {\mathbb {S}}^2\) associated with all pyramids \(Z_e\) after \(\nu _t\) subdivisions. Notice that depending on \(t\) some of the directions will not be used when there are no targets in that particular direction. Such directions can be removed from \(\mathcal {E}(t)\). Then

Given \(t\in T_I\) and \(e\in {\mathcal {E}}(t)\), for \(t'\in S_I(t)\) we define \(e'\in {\mathcal {E}}(t')\) as the axis of the pyramid \(Z_{e'}\) from which \(Z_e\) results after subdivision. Notice that despite \(t'\subset t\) we have \(Z_e\subset Z_{e'}\). Furthermore, let

be the directional far field of \(X_t\). Here, \({\mathcal {F}}(X):=\{y\in {\mathbb {R}}^3:\eta _{\text {high}}\,{\text {dist}}(X,y)\ge \kappa ({\text {diam}}{X})^2\}\) denotes the far field of \(X\subset {\mathbb {R}}^3\).

The following lemma will be important for the construction of nested bases used for the approximation. Notice that the directional far fields are nested up to constants.

Lemma 6

Let \(t'\in S_I(t)\) and \(e'\) be defined as above. Then the directional far field satisfies \({\mathcal {F}}_e(X_t)\subset \tilde{{\mathcal {F}}}_{e'}(X_{t'})\) with

and the constant \(\tilde{\gamma }:= 2^{1-\nu _0}\sqrt{c_g} + (\gamma _{\text {high}}+ \eta _{\text {high}})/(1-\eta _{\text {high}})\).

Proof

For \(y\in {\mathcal {F}}_e(X_t)\) let \(\zeta =\chi (X_{t'})-y-(\chi (X_{t'})-y,e)e,\,\zeta ':=\chi (X_{t'})-y-(\chi (X_{t'})-y,e')e'\), and \(\delta :=\zeta '-\zeta \). Then

and

Using Lemma 4, we obtain from \(\chi (X_{t'})\in X_{t'}\subset X_t\)

From \(e'\in \partial Z_e\) it follows

due to (18) and (14). We obtain

The inclusion \({\mathcal {F}}(X_t)\subset {\mathcal {F}}(X_{t'})\) is obvious due to \(X_{t'}\subset X_t\). \(\square \)

From the previous proof it can be seen that \(|\sin \measuredangle (x,e)|\le \frac{2^{-\nu _0} \sqrt{c_g}}{\kappa \,{\text {diam}}X_t}\) for all \(x\in Z_e\). With Lemma 6 it follows that

provided that \(\nu _0\) from (19) is chosen sufficiently large and \(\eta _{\text {high}}\) is chosen sufficiently small.

Since high-frequency blocks require special attention, we gather column clusters \(s\) which are admissible with \(t\) in direction \(e\in {\mathcal {E}}(t)\) in the (cluster) far field with direction \(e\)

4 Directional \(\varvec{{\mathcal {H}}^2}\)-matrices

From Lemma 5 we see that \({\mathcal {H}}\)-matrices are not able to achieve logarithmic linear complexity. Therefore we employ a nested representation similar to \({\mathcal {H}}^2\)-matrices introduced in [31]. To account for the required directional subdivision, we generalize the concept of nested cluster bases.

Definition 1

A directional cluster basis \(\mathcal {U}\) for the rank distribution \((k_t^e)_{t\in T_I, e\in {\mathcal {E}}(t)}\) is a family \(\mathcal {U}=(U_e(t))_{t\in T_I, e\in {\mathcal {E}}(t)}\) of matrices \(U_e(t) \in {\mathbb {C}}^{t\times k_t^e}\). It is called nested if for each \(t\in T_I{\setminus }\mathcal {L}(T_I)\) there are transfer matrices \(T_e^{t't}\in {\mathbb {C}}^{k^{e'}_{t'}\times k^e_t}\) such that for the restriction of the matrix \(U_e(t)\) to the rows \(t'\) it holds that

For estimating the complexity of storing a nested cluster basis \(\mathcal {U}\), we assume that \(k_t^e \le k\) for all \(t\in T_I,\,e\in {\mathcal {E}}(t)\) with some \(k\in {\mathbb {N}}\). It follows from (14) that the depth \(L\in {\mathbb {N}}\) of \(T_I\) is of the order \(L\sim \log _2(\kappa ^2)\). Since the set of leaf clusters \(\mathcal {L}(T_I)\) constitutes a partition of \(I\) and according to (20) for each cluster \(t\in \mathcal {L}(T_I)\) at most \(|{\mathcal {E}}(t)|\sim \kappa ^2 2^{-\ell }\) matrices \(U_e(t)\) each with at most \(k|t|\) entries have to be stored, \(k|I|\kappa ^2 2^{-L}\sim k\,N\) units of storage are required for the leaf matrices \(U_e(t),\,t\in \mathcal {L}(T_I)\).

Using (7), we can estimate the storage required for the transfer matrices

Definition 2

A matrix \(M\in {\mathbb {C}}^{I\times J}\) is a directional \({\mathcal {H}}^2\)-matrix if \(M_b\) is of low rank for all \(b\in P_{\text {low}}\) and there are nested directional cluster bases \({\mathcal {U}}\) and \({\mathcal {V}}\) such that for \(t\times s \in P_{\text {high}}\)

with coupling matrices \(S(t,s)\in {\mathbb {C}}^{k_t^e\times k_s^{-e}}\) and \(e\in {\mathcal {E}}(t)\) such that \(s\subset F_e(t)\).

The storage cost of the blocks in \(P_{\text {nonadm}}\) and \(P_{\text {low}}\) is bounded by the storage cost of a hierarchical matrix, which is known to be \({\mathcal {O}(\max \{k,{n_{\min }}\}N\log N)}\). We estimate the storage required for the coupling matrices. According to Lemma 5, the number of blocks \(t\times s\in P_{\text {high}}\) is \({\mathcal {O}(2^{-\ell }\kappa ^2)}\) for \(t\in T_I^{(\ell )}\). Thus, the coupling matrices require at most

units of storage. Hence, the overall cost for a directional \({\mathcal {H}}^2\)-matrix is of the order \({\mathcal {O}(k^2N\log N)}\).

4.1 Matrix-vector multiplication

Let \(M\in {\mathbb {C}}^{I\times J}\) be a directional \({\mathcal {H}}^2\)-matrix. Since its structure is similar to an \({\mathcal {H}}^2\)-matrix, the matrix-vector multiplication \(y:=y+Mx\) of \(M\) by a vector \(x\in {\mathbb {C}}^J\) can be done via the usual three-phase algorithm (cf. [31]) which we modified to account for the directions \(e\). The following algorithm is a consequence of the decomposition

with \(e \in {\mathcal {E}}(t)\) such that \(s\subset F_e(t)\).

-

1.

Forward transform

The auxiliary vectors \(\hat{x}_e(s):=V_e(s)^H x_s,\,e\in {\mathcal {E}}(s)\), are computed for all \(s\in T_J\). Exploiting the nestedness of the cluster bases \(\mathcal {V}\) (with transfer matrices \(\bar{T}_e^{s's}\)), one has the following recursive relation

$$\begin{aligned} \hat{x}_e(s)=V_e(s)^H x_s=\sum _{s'\in S_J(s)}(\bar{T}^{s's}_e)^H V_{e'}(s')^H x_{s'} =\sum _{s'\in S_J(s)}(\bar{T}^{s's}_e)^H \hat{x}_{e'}(s'), \end{aligned}$$which has to be applied starting from the leaf vectors \(\hat{x}_e(s),\,e\in {\mathcal {E}}(s),\,s\in \mathcal {L}(T_J)\).

-

2.

Far field interaction

The products \(S(t,s)\hat{x}_{-e}(s)\) are computed and summed up over all blocks \(t\times s\in P_{\text {high}}\):

$$\begin{aligned} \hat{y}_e(t):=\sum _{s:t\times s\in P_{\text {high}}} S(t,s)\hat{x}_{-e}(s),\quad e\in {\mathcal {E}}(t),\, t\in T_I. \end{aligned}$$ -

3.

Backward transform

The vectors \(\hat{y}_e(t)\) are transformed to the target vector \(y\). The nestedness (22) of \(\mathcal {U}\) yields a recursion which descends \(T_I\) for the computation of \(y:=\sum _{t\in T_I} \sum _{e\in {\mathcal {E}}(t)} U_e(t)\hat{y}_e(t)\):

-

(a)

Compute \(\hat{y}_{e'}(t'):=\hat{y}_{e'}(t') + T^{t't}_e\hat{y}_e(t)\) for all \(e\in {\mathcal {E}}(t)\) and all \(t'\in S_I(t)\);

-

(b)

Compute \(y_t:=y_t+\sum _{e\in {\mathcal {E}}(t)}U_e(t)\hat{y}_e(t)\) for all clusters \(t\in \mathcal {L}(T_I)\).

-

(a)

-

4.

Low-frequency interaction For all \(t\times s \in P_{{\text {low}}}\) compute \(y_t:=y_t+W_{ts} Z_{ts}^H x_s\).

-

5.

Near field interaction

For all \(t\times s\in P_{{\text {nonadm}}}\) compute \(y_t:=y_t+M_{ts} x_s\).

The total number of operations of the latter algorithm is bounded by \({\mathcal {O}(k^2 N\log N)}\), which can be proven by observing that at most two floating-point operations are performed for each matrix coefficient stored in the directional \({\mathcal {H}}^2\)-matrix representation.

5 Construction of approximations

Our aim is to approximate \(A\in {\mathbb {C}}^{I\times J}\) defined in (2) with a directional \({\mathcal {H}}^2\)-matrix. Blocks in \(P_{{\text {nonadm}}}\) are stored entrywise without approximation. From (8) it follows that \(K\) is asymptotically smooth with constants independent of \(\kappa \) on domains \(X_t\times Y_s\) corresponding to blocks \(t\times s\in P_{{\text {low}}}\). It follows from the convergence analysis in [7] that the adaptive cross approximation

with appropriately chosen \(\tau \subset t\) and \(\sigma \subset s,\,k_{\varepsilon }:=|\tau |=|\sigma |\sim |\log {\varepsilon }|^2\) independent of \(\kappa \), can be used to generate low-rank approximations \(W_{ts}Z_{ts}^H\approx A_{ts}\), where \(W_{ts}\in {\mathbb {C}}^{t\times k_{\varepsilon }}\) and \(Z_{ts}\in {\mathbb {C}}^{s\times k_{\varepsilon }}\).

In the rest of this section, we will consider the remaining case \(t\times s\in P_{{\text {high}}}\), i.e. we assume that (16) is valid. To be able to apply the results from Sect. 2 and prove existence of low-rank matrix approximations it is required to additionally partition the far field \({\mathcal {F}}(X_t)\) into subsets \({\mathcal {F}}_e(X_t)\) corresponding to directions \(e\in {\mathcal {E}}(t)\). Although ACA generates approximations of high quality, the number of blocks is too large (see Lemma 5) to construct and store the approximations as in the low-frequency case. To overcome this difficulty, we consider the approximation (see [8] for the application of this kind of approximation to Laplace-type problems)

instead of (24), which is of type (23) with coupling matrices \(S(t,s) = A_{\tau _t\sigma _s}\). The aim of this section is to prove error estimates for the special type of low-rank approximation

with nested bases \(\mathcal {U}\) and \(\mathcal {V}\) approximating \(A_{t\sigma ^e_t}(A_{\tau _t\sigma ^e_t})^{-1}\) and \((A_{\tau ^{-e}_s\sigma _s})^{-1}A_{\tau _s^{-e}s}\), respectively.

A crucial part of the approximation (25) is the construction of what we call proper pivots \(\tau _t\subset t\) and \(\sigma _t^e\subset F_e(t),\,|\tau _t|=|\sigma ^e_t|\). They have to guarantee that \(A_{\tau _t\sigma ^e_t}\) is invertible, and for proving error estimates they have to be chosen so that

with some \(c_R>0\); cf. Lemma 9. Hence \(\tau _t\) and \(\sigma _t^e\) represent \(t\) and its far field \(F_e(t)\), respectively. We refer to [8] for a method for choosing \(\tau _t\) and \(\sigma ^e_t\). Note that it is sufficient to choose \(\sigma ^e_t\) so that

Here and in what follows, \({\varepsilon }>0\) denotes a given accuracy that (up to constants) is to be achieved by the approximations. Let \(\{\zeta _1,\dots , \zeta _{k_{\varepsilon }}\}\) be a basis of the space

where \(\varPi _p^3\) denotes the space of polynomials of degree at most \(p\) with respect to each of the three variables, \(k_{\varepsilon }:=\dim \varPi _{p_{\varepsilon }-1}^3=p_{\varepsilon }^3\), and \(p_{\varepsilon }\in {\mathbb {N}}\) is the smallest number such that \(p_{\varepsilon }\ge |\log _\rho {\varepsilon }|\) with \(\rho \) from (12).

Assumption 1

Let \(t\in T_I\). If \(|t|\ge k_{\varepsilon }\), we assume that there is \(\tau _t=\{i_1,\dots ,i_{k_{\varepsilon }}\}\subset t\) such that the following two conditions are satisfied.

-

(i)

Let \(\Lambda \in {\mathbb {R}}^{|t|\times k_{\varepsilon }}\) have the entries \(\Lambda _{ij}=({\varphi }_i/{\Vert {\varphi }_i\Vert }_{L^2(\varGamma )},\zeta _j)_{L^2(\varGamma )},\,i\in t,\,j=1,\dots ,k_{\varepsilon }\). There are coefficients \(\xi _{i\ell }\) such that

$$\begin{aligned} \Lambda _{ij} = \sum _{\ell =1}^{k_{\varepsilon }} \xi _{i\ell } \Lambda _{i_\ell j},\quad i\in t,\;j=1,\dots ,k_{\varepsilon }, \end{aligned}$$(28)and the norm \({\Vert \Xi \Vert }_\infty \) of the matrix \(\Xi =(\xi _{i\ell })_{i\ell }\in {\mathbb {C}}^{t\times k_{\varepsilon }}\) is bounded by a multiple of \(2^{p_{\varepsilon }}\),

-

(ii)

each matrix \(A_{\tau _t F_e(t)},\,e\in {\mathcal {E}}(t)\), has full rank.

In the remaining case \(|t|<k_{\varepsilon }\), we set \(\tau _t=t\) and assume only that \(A_{tF_e(t)}\) has full rank.

To see that these assumptions are reasonable, notice that \(\text {rank}\,\Lambda \le k_{\varepsilon }\), which implies (28). The coefficients \(\xi _{i\ell }\) depend only on the underlying grid. Hence, the boundedness of the norm of \(\Xi \) in \((i)\) is an assumption on the regularity of the discretization and on the choice of the pivots \(\tau _t\). Notice that the assumed bound grows exponentially with \(p_{\varepsilon }\). In practice, it seems that a polynomial growth \({\Vert \Xi \Vert }_\infty \le c p_{\varepsilon }^2\) with respect to \(p_{\varepsilon }\) can be achieved. Hence, this should be regarded as a decent assumption.

The set \(\tau _t\) is not unique. Usually, any sub-set of \(t\) having \(k_{\varepsilon }\) elements will do. To see this, assume for the time being that \({\varphi }_i=\delta _{x_i}\). In this case, the sub-matrix \((\Lambda _{i_\ell j})_{\ell j}\in {\mathbb {R}}^{k_{\varepsilon }\times k_{\varepsilon }}\) of \(\Lambda \) is non-singular if and only if \(\hat{\varPi }_{p_{\varepsilon }-1}^3\) is unisolvent with respect to the points \(x_{i_\ell },\,\ell =1,\dots ,k_{\varepsilon }\), i.e.

for some \(q\in \varPi _{p_{\varepsilon }-1}^3\) implies that \(q=0\). The set of tupels \((x_{i_1},\dots ,x_{i_{k_{\varepsilon }}})\) for which \(\varPi _{p_{\varepsilon }-1}^3\) is not unisolvent is known to be of measure zero (in the parameter domain of \(\varGamma \)); see [38]. Hence, we may assume that \(\tau _t\subset t\) is chosen such that also \((ii)\) is valid. Otherwise, the rank of \(A_{t F_e(t)}\) is already bounded by \(k_{\varepsilon }\).

Notice that with the previous assumptions it is possible to guarantee that the rows \(\tau _t\) used for the approximation of \(A_{ts}\) can be chosen independently of \(s\subset F_e(t)\). This will be crucial for the construction of nested bases.

In the following lemma, we prove error estimates for the multivariate tensor product Chebyshev interpolant \({{\mathfrak {I}}}_p \hat{K}(\cdot ,y)\in \varPi ^3_{p-1}\) with fixed \(y\in Y\) of \(\hat{K}(\cdot ,y):=K(\cdot ,y)\exp (-{\text {i}}\kappa (\cdot -y,e))\).

Lemma 7

Let \(X, Y \subset {\mathbb {R}}^3\) such that \(Y\subset {\mathcal {F}}_e(X)\). Then

where \(c_{{\mathfrak {I}}}(p) := 8\text {e}cp(1+\rho )(1+\frac{2}{\pi }\log p)^3\) with \(c\) and \(\rho \) from (12).

Proof

Without loss of generality we may assume that \(X\) is contained in a cube \(Q=\prod _{i=1}^3 Q_i\) which is aligned with \(e\) and that \(Y\subset {\mathcal {F}}_e(Q)\). Notice that this can be achieved by slightly modifying the constants \(\gamma _{\text {high}},\eta _{\text {high}}\). Let \(\hat{K}_i\) be the function in the \(i\)-th argument of \(\hat{K}(\cdot ,y),\,i=1,2,3\). From (12) we obtain

Using [10, Lemma 3.13], this implies

where \(\tilde{c} := 4\text {e}c(1 + \rho )\). With this estimate the proof can be done analogously to Theorem 3.18 in [7]. \(\square \)

Although the following estimates will hold in any absolute norm, throughout this article the max norm

of \(A\in {\mathbb {C}}^{m\times n}\) will be used if not otherwise indicated. Notice that the max norm is not a matrix norm but satisfies \({\Vert AB\Vert }\le {\Vert A\Vert }{\Vert B\Vert }_1\) and \({\Vert AB\Vert }\le {\Vert A\Vert }_\infty {\Vert B\Vert }\), where \({\Vert \cdot \Vert }_\infty ,\,{\Vert \cdot \Vert }_1\) denote the absolute row and the absolute column sum, respectively.

Lemma 8

Let assumption (i) be valid and let \({\varphi }_i,\,\psi _j\), and \(f\) in (2) be non-negative. Assume that \(c_{\text {as},2}\,\eta _{\text {high}}<1\). For \(t\in T_I\) satisfying \(|t|\ge k_{\varepsilon }\) and \(e\in {\mathcal {E}}(t)\) there is \(\Xi \in {\mathbb {R}}^{t\times k_{\varepsilon }}\) and an \({\varepsilon }\)-independent constant \(c_1>0\) such that

for all \(s\subset J\) satisfying \(Y_s\subset {\mathcal {F}}_e(X_t)\).

Proof

Due to \(K(x,y)=\exp ({\text {i}}\kappa (x-y,e))\hat{K}(x,y)\), we can apply Lemma 7 and obtain for \(x\in X_t,\,y\in Y_s\) that with \(T_{p_{\varepsilon }}(x,y) := \exp ({\text {i}}\kappa (x,e)){{\mathfrak {I}}}_{x,p_{\varepsilon }} \hat{K}(x,y)\in \hat{\varPi }_{p_{\varepsilon }-1}^3\)

According to a remark following (12), we may assume that \(\rho <1\). In addition, without loss of generality we may assume that \({\Vert {\varphi }_i\Vert }_{L^2(\varGamma )}=1\). Then assumption (28) is equivalent with

Hence,

and we see using \(\mu (X_i)\le \pi h^2\) that

with \(\tilde{c}:= c_{\mathfrak {I}}(p_{\varepsilon })(1+{\Vert \Xi \Vert }_\infty ) \sqrt{(k_{\varepsilon }+1)\pi }\). From the Taylor expansion and the asymptotic smoothness of \(f\) it can be seen that for \(c_{\text {as},2}\,\eta _{\text {high}}<1\)

with a constant \(\hat{c}>0\). Estimate (29) and \(1={\Vert {\varphi }_i\Vert }_{L^2(\varGamma )}\le c'h^{-1}{\Vert {\varphi }_i\Vert }_{L^1(\varGamma )}\) imply for \(y\in Y_s\)

From

with \(c''>0\) independent of \(\kappa \) and \(h\) due to (cf. (7))

we obtain exploiting the non-negativity of \({\varphi }_i,\,\psi _j\), and \(f\)

Hence, the matrix \(\Xi \) satisfies

because \(\frac{c_{\mathfrak {I}}(p_{\varepsilon })\hat{c} c'}{c''(\rho +2)^{p_{\varepsilon }}}(1+{\Vert \Xi \Vert }_\infty ) \sqrt{(p_{\varepsilon }^3+1)\pi }\) is bounded by an \({\varepsilon }\)-independent constant \(c_1\) from above due to the assumption that \({\Vert \Xi \Vert }_\infty \) is bounded by a multiple of \(2^{p_{\varepsilon }}\) and \(c_{\mathfrak {I}}(p_{\varepsilon })\) grows at most algebraically with \(p_{\varepsilon }\). \(\square \)

The expression \(c_S:=\max \{c_S^{(r)},c_S^{(c)}, {\Vert (A_{\tau _s^e \sigma _s})^{-1}A_{\tau _s^e s}\Vert }_1: s\in T_J\}\), where

will play a central role in the following error analysis. Notice that \(c_S\) does not depend on the cardinality of the clusters. However, it depends on the choice of the pivots \(\{\tau _t,\sigma _t^e,\tau _s^e,\sigma _s:t\in T_I,\,s\in T_J\}\) and hence on \({\varepsilon }\). Numerical experiments show that \(c_S\le cp_{\varepsilon }^2\).

Lemma 9

Let Assumption 1 be valid. Then for \(t\in T_I\) and \(e\in {\mathcal {E}}(t)\) there are proper pivots \((\tau _t,\sigma ^e_t),\,|\tau _t|=|\sigma _t^e|=\min \{k_{\varepsilon },|t|\}\), i.e., for all \(s\subset J\) satisfying \(Y_s\subset {\mathcal {F}}_e(X_t)\)

where \(c_2({\varepsilon }):=c_1(1+c_S({\varepsilon }))\).

Proof

Since \(A_{\tau _t F_e(t)}\) is assumed to have full rank, there is \(\sigma ^e_t\subset F_e(t),\,|\sigma _t^e|=|\tau _t|=\min \{k_{\varepsilon },|t|\}\), such that \(A_{\tau _t\sigma _t^e}\) is invertible. If \(|t|<k_{\varepsilon }\), we have \(\tau _t=t\) and \(A_{t\sigma _t^e}(A_{\tau _t\sigma ^e_t})^{-1}=I\). Hence, (30) is trivially satisfied. We may hence assume that \(|t|\ge k_{\varepsilon }\). Let \(\Xi \in {\mathbb {R}}^{t\times k_{\varepsilon }}\) be as in Lemma 8. We have

and thus

The second last estimate follows from Lemma 8, because \(Y_{\sigma ^e_t},Y_s\subset {\mathcal {F}}_e(X_t)\). \(\square \)

5.1 Construction of directional cluster bases

Based on the previous estimates, we are going to construct and analyze nested bases approximations. The construction of nested bases is usually done by explicit approximation of the kernel function \(K\); see, for instance, the fast multipole method [37] and the method in [33], which uses interpolation. In this section, we construct the nested bases via a purely algebraic technique which is based on the original matrix entries and thus avoids explicit kernel expansions. In this sense, the presented construction is in the class of kernel independent fast multipole methods; see [3, 19, 40].

We will define a nested basis \(\mathcal {U}\) consisting of matrices \(U_e(t)\in {\mathbb {C}}^{t\times k_{\varepsilon }}\) for each \(t\in T_I\) and \(e\in {\mathcal {E}}(t)\) in a recursive manner starting from the leaves. Due to (16a), it is actually sufficient to consider the sub-tree

of \(T_I\). For its leaf clusters \(t\in \mathcal {L}(\hat{T}_I)\) and \(e\in {\mathcal {E}}(t)\) we set \(U_e(t)=B^{tt}_e\), where for \(t'\subset t\)

Assume that matrices \(U_e(t')\) have already been constructed for the sons \(t'\in S_I(t)\) of \(t\in \hat{T}_I\setminus \mathcal {L}(\hat{T}_I)\) and \(e\in {\mathcal {E}}(t')\). Then in view of (22) we define for \(e\in {\mathcal {E}}(t)\)

where \(e'\in {\mathcal {E}}(t')\) is defined before Lemma 6.

The following lemma estimates the accuracy when expressing \(B_e^{t't}\) by the product \(B_{e'}^{t't'}B_e^{\tau _{t'}t}\). As stated in [8], \(B_e^{t't}\) may be regarded as the algebraic form of an interpolation operator.

Lemma 10

Let \(t'\in \hat{T}_I\) satisfy \(t'\subset t\in \hat{T}_I{\setminus }\mathcal {L}(\hat{T}_I)\) and \(e\in {\mathcal {E}}(t)\). Then for all \(s\subset J\) satisfying \(Y_s\subset {\mathcal {F}}_e(X_t)\) it holds that

with \(c_S = c_S({\varepsilon })\) and \(c_2 = c_2({\varepsilon })\) from Lemma 9.

Proof

It is clear that

Due to (27) and (21), it holds that \(Y_{\sigma _t^e} \subset {\mathcal {F}}_{e'}(X_{t'})\). Hence, Lemma 9 yields

\(\square \)

Theorem 1

Let Assumption 1 be valid. Let \(t\in \hat{T}_I\) and let \(\ell =L(\hat{T}_t)\) denote the depth of the sub-tree \(\hat{T}_t\) of \(\hat{T}_I\) rooted at \(t\in T_I\). Then for all \(e\in {\mathcal {E}}(t)\)

where

Proof

From Lemma 10 we have for \(t\in \hat{T}_I{\setminus }\mathcal {L}(\hat{{T}}_I)\)

We set \(\alpha _{t'}:={\Vert [U_{e'}(t')-B_{e'}^{t't'}]A_{\tau _{t'}\sigma ^e_t}\Vert }\) for \(t'\in S_I(t)\). From (27) and (21) we obtain that \(Y_{\sigma ^e_t}\subset {\mathcal {F}}_{e'}(X_{t'})\). Hence, we can apply the latter inequality recursively replacing \(s\) by \(\sigma _t^e\) and obtain the recurrence relation

We show that

where \(\ell =\ell (t)\) denotes the depth of the sub-tree \(\hat{T}_t\). This estimate is obviously true for leaf clusters \(t\in \mathcal {L}(T_I)\) as \(\alpha _t=0\). Assume that (32) is valid for the sons \(S_I(t)\) of \(t\in \hat{T}_I{\setminus } \mathcal {L}(\hat{T}_I)\). Then (31) proves

Hence,

together with \(c_2=c_1(1+c_S)\) proves the assertion. \(\square \)

Similar results as for the row clusters \(t\) can be obtained for column clusters \(s\in T_J\) and \(e\in {\mathcal {E}}(s)\) provided assumptions analogous to Assumption 1 are made. In particular, this defines clusters \(\sigma _s\subset s\) and \(\tau ^e_s\subset F'_e(s),\,|\tau ^e_s|=|\sigma _s|=\min \{k_{\varepsilon },|s|\}\), where

For \(s'\subset s\) we define

For leaf clusters \(s\in \mathcal {L}(\hat{T}_J)\) and \(e\in {\mathcal {E}}(s)\) we set \(V_e(s)=(C^{ss}_e)^H\). Assuming that matrices \(V_e(s')\) have already been constructed for the sons \(s'\in S_J(s)\) of \(s\in \hat{T}_J\setminus \mathcal {L}(\hat{T}_J)\) and \(e\in {\mathcal {E}}(s')\), we define for \(e\in {\mathcal {E}}(s)\)

where \(e'\in {\mathcal {E}}(s')\) is defined before Lemma 6. Due to the analogy with Lemma 9 and Theorem 1, we omit the proofs of the following error estimates.

Lemma 11

There is \(c_4=c_4({\varepsilon })>0\) such that for all \(s\in T_J\) and \(e\in {\mathcal {E}}(s)\) it holds that

for all \(t\subset I\) satisfying \(X_t\subset {\mathcal {F}}_e(Y_s)\).

Theorem 2

Let \(s\in \hat{T}_J\) and let \(\ell =L(\hat{T}_s)\) denote the depth of the cluster tree \(\hat{T}_s\). Then there is \(c_5=c_5({\varepsilon })>0\) such that for all \(e\in {\mathcal {E}}(s)\)

Using the previously constructed bases \(\mathcal {U}\) and \(\mathcal {V}\), we employ \(S(t,s):=A_{\tau _t\sigma _s}\) in (26) for each block \(A_{ts},\,t\times s\in P_{\text {high}}\). In the following theorem, the accuracy of the nested approximation based on the matrix entries of \(A\) is estimated.

Theorem 3

Let all previous assumptions be valid and \(t\times s\in P_{\text {high}}\). Then there is \(e\in {\mathcal {E}}(t)\) such that \(s\subset F_e(t)\) and the approximation error is bounded by

with \(c_S,c_2,c_3,c_4,c_5\) depending on \({\varepsilon }\) defined above.

Proof

From \(s\subset F_e(t)\) it follows that \(t\subset F'_{-e}(s)\). We have that

From Lemma 9 it follows that \({\Vert A_{t\sigma _s}-B_e^{tt}A_{\tau _t \sigma _s}\Vert }\le c_2 {\varepsilon }{\Vert A_{tJ}\Vert }\), and from Lemma 11 we have that \({\Vert A_{t\sigma _s} -A_{t\sigma _s} C_{-e}^{ss}\Vert } \le c_4 {\varepsilon }{\Vert A_{Is}\Vert }\). Therefore,

Furthermore, Theorems 1 and 2 yield

which proves the assertion. \(\square \)

Remark 1

Furthermore, the \({\Vert \cdot \Vert }_\infty \)-norm of \(U_e(t)\) can be expected to be close to \({\Vert B_e^{tt}\Vert }\) since \(U_e(t)\) approximates \(B_e^{tt}\); cf. Theorem 1. The actual values of \({\Vert U_e(t)\Vert }_\infty \) can easily be evaluated during computation. Hence, it easy to check the quality of the nested approximation via (33). The numerical results in the next section show that these terms are bounded in practice and do not destroy the convergence of the method.

6 Numerical results

We consider the sound soft/hard scattering problem (1) imposing either the Dirichlet datum \(u = u_D\) (sound soft) or the Neumann trace \(\partial _\nu u = v_N\) (sound hard) on the boundary \(\varGamma \). We denote \({\mathfrak {V}}\) the single and \({\mathfrak {K}}\) the double-layer-operator with the kernels \(S(x-y)\) and \(\partial _{\nu _y} S(x-y)\), respectively. Using Green’s representation formula \(u = {\mathfrak {K}}u - {\mathfrak {V}} (\partial _\nu u)\) in \(\varOmega ^c\) and the jump relations, we end up with the integral equation

which can be solved either for the unknown \(\partial _\nu u|_{\varGamma }\) in the case of a Dirichlet problem or for the unknown \(u|_{\varGamma }\) in the Neumann case. The Brakhage–Werner ansatz

with an arbitrary coupling parameter \(\beta >0\) uses an unknown density function \(\phi \) that satisfies the integral equation

In either case, Galerkin discretization of the integral equations leads to a linear system with matrices of the form (2) that can be approximated by directional \({\mathcal {H}}^2\)-matrices. The solution can then be obtained via GMRES using the matrix-vector multiplication from Sect. 4.1, which we proved to have logarithmic-linear complexity.

6.1 Approximation of \(\varvec{{\mathfrak {V}}}\)

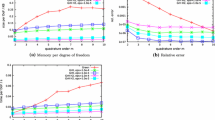

As a first step, we validate the logarithmic-linear complexity of the directional \({\mathcal {H}}^2\)-matrices (labeled dir\({\mathcal {H}}^2\)-ACA). Moreover, we compare our new approach with the standard \({\mathcal {H}}\)-matrix approximation via ACA (labeled \({\mathcal {H}}\)-ACA) and the \({\mathcal {H}}^2\)-matrix approach from [8] (labeled \({\mathcal {H}}^2\)-ACA). In this section, we focus on the approximation of the single-layer operator \({\mathfrak {V}}\). Since we assumed \(\kappa h\) to be constant, we increase \(\kappa \) with growing number of degrees of freedom \(N\). By “acc.” we label the relative error to the full matrix in the Frobenius norm.

Table 1 shows the memory consumption of the approximation of the discretization of \({\mathfrak {V}}\) with piecewise constant ansatz and test functions on the prolate spheroid, i.e. an ellipsoid with ten times the extension in \(x\)-direction. The accuracy for \(N=1{,}905{,}242\) was not computed, because the calculation of the difference to a full matrix in Frobenius norm takes \({\mathcal {O}(N^2)}\) time.

For small \(N\), the three methods have about the same performance. This is due to fact that directional \({\mathcal {H}}^2\)-matrices adapt to the wave number and fade to usual \({\mathcal {H}}\)-matrices for low frequencies. Nevertheless, \({\mathcal {H}}^2\)-ACA seems to be superior with respect to memory consumption. The latter approach, however, is not convenient for Helmholtz-type kernel functions as the running time increases for higher wave numbers. The reason for this behaviour is that the blockwise rank cannot be guaranteed to be constant and thus, the number of apriori pivots must be increased accordingly in order to achieve a prescribed accuracy. The gain of dir\({\mathcal {H}}^2\)-ACA in terms of both time and memory becomes visible for larger \(N\) (see Figs. 3, 4).

6.2 Neumann problem

We consider the sound hard scattering problem and use piecewise linear ansatz and test functions for the Galerkin discretization of (34). The approximate Dirichlet datum \(\tilde{u}_D \approx u|_{\varGamma }\) is obtained from solving (34) with approximations (\({\varepsilon }= 10^{-4}\)) of the discrete operators \(V\) and \(K\) of \({\mathfrak {V}}\) and \({\mathfrak {K}}\). We use the Neumann datum \(v_N:=\partial _\nu S(\cdot -x_0)\) with an interior point \(x_0\in \varOmega \). In this case, we are able to compute the \(L^2\)-error \({\Vert \tilde{u}_D - u\Vert }_{L^2(\varGamma )} /{\Vert u\Vert }_{L^2(\varGamma )}\) to the exact Dirichlet trace given by \(u|_{\varGamma } = S(\cdot -x_0)\). Tables 2 and 4 show the behaviour of the error on the sphere with radius 1 for fixed \(\kappa h\) and fixed \(\kappa \), respectively. Furthermore, the CPU time and the memory consumption required by the approximations to \(V\) and \(K\) is shown and can be seen to behave logarithmic-linear for both fixed and growing wave numbers. As a reference, we made the same computations also using \({\mathcal {H}}\)-matrices. The corresponding results are shown in Tables 3 and 5. As before, the advantage of dir\({\mathcal {H}}^2\)-ACA becomes visible for a growing number of waves. It is remarkable, however, that even in the fixed frequency case the directional approach outperforms \({\mathcal {H}}\)-ACA in terms of memory and computation time for larger degrees of freedom. For smaller degrees of freedom the performance of \({\mathcal {H}}\)-ACA is only slightly better.

6.3 Visualization of Dirichlet problem with Brakhage–Werner

We consider the sound soft scattering problem, i.e. we seek a solution \(u = u_i + u_s\) of the Helmholtz equation (1), where \(u_i(x) := \exp ({\text {i}}\kappa (x,e))\) with \(e = (1,0,1)^T/\sqrt{2}\) denotes the incident acoustic wave and \(u_s\) is the unknown scattered field. The incident wave is reflected on a sound soft obstacle \(\varOmega \), which is described by the Dirichlet condition \( u_s|_{\varGamma } = -u_i|_{\varGamma }\).

The obstacle is composed of 4 cylindrical spheres with 507,904 panels and 253,960 vertices. We solve (36) for the unknown density \(\phi \) with piecewise linear ansatz and test functions. Following [22], we use the coupling parameter \(\beta = \kappa /2\). In a second step, we evaluate the potential (35) on a uniform discretization of a screen behind the obstacle in order to compute the scattered wave \(u_s\). Figure 5 shows the resulting pressure field of the total wave \(|u_i+u_s|\) for \(\kappa = 10\) and \(\kappa = 40\).

References

Alpert, B.K., Beylkin, G., Coifman, R., Rokhlin, V.: Wavelet-like bases for the fast solution of second-kind integral equations. SIAM J. Sci. Comput. 14, 159–184 (1993)

Amini, S., Profit, A.: Multi-level fast multipole solution of the scattering problem. Eng. Anal. Bound. Elements 27(5), 547–654 (2003)

Anderson, C.R.: An implementation of the fast multipole method without multipoles. SIAM J. Sci. Stat. Comput. 13(4), 923–947 (1992)

Banjai, L., Hackbusch, W.: Hierarchical matrix techniques for low- and high-frequency Helmholtz problems. IMA J. Numer. Anal. 28(1), 46–79 (2008). doi:10.1093/imanum/drm001

Barnes, J., Hut, P.: A hierarchical \(\cal O({N}\log {N})\) force calculation algorithm. Nature 324, 446–449 (1986)

Bebendorf, M.: Approximation of boundary element matrices. Numer. Math. 86(4), 565–589 (2000)

Bebendorf, M.: Hierarchical matrices: a means to efficiently solve elliptic boundary value problems. In: Lecture Notes in Computational Science and Engineering (LNCSE), vol. 63. Springer, Berlin (2008). ISBN 978-3-540-77146-3

Bebendorf, M., Venn, R.: Constructing nested bases approximations from the entries of non-local operators. Numer. Math. 121(4), 609–635 (2012)

Börm, S.: \({\cal H}^{2}\)-matrix arithmetics in linear complexity. Computing 77(1), 1–28 (2006)

Börm, S., Löhndorf, M., Melenk, J.M.: Approximation of integral operators by variable-order interpolation. Numer. Math. 99(4), 605–643 (2005)

Brakhage, H., Werner, P.: Über das Dirichletsche Außenraumproblem für die Helmholtzsche Schwingungsgleichung. Arch. Math. 16, 325–329 (1965)

Brandt, A.: Multilevel computations of integral transforms and particle interactions with oscillatory kernels. Comput. Phys. Commun. 65, 24–38 (1991)

Buffa, A., Hiptmair, R.: A coercive combined field integral equation for electromagnetic scattering. SIAM J. Numer. Anal. 42(2), 621–640 (2003)

Burton, A.J., Miller, G.F.: The application of integral equation methods to the numerical solution of boundary value problems. Proc. R. Soc. Lond. A232, 201–210 (1971)

Candès, E., Demanet, L., Ying, L.: A fast butterfly algorithm for the computation of Fourier integral operators. Multiscale Model. Simul. 7(4), 1727–1750 (2009). doi:10.1137/080734339

Chandler-Wilde, S.N., Graham, I.G., Langdon, S., Spence, E.A.: Numerical-asymptotic boundary integral methods in high-frequency acoustic scattering. Acta Numerica 21, 89–305 (2012)

Cheng, H., Crutchfield, W.Y., Gimbutas, Z., Greengard, L.F., Ethridge, J.F., Huang, J., Rokhlin, V., Yarvin, N., Zhao, J.: A wideband fast multipole method for the Helmholtz equation in three dimensions. J. Comput. Phys. 216(1), 300–325 (2006). doi:10.1016/j.jcp.2005.12.001

Darve, E.: The fast multipole method: numerical implementation. J. Comput. Phys. 160(1), 195–240 (2000)

Engquist, B., Ying, L.: Fast directional multilevel algorithms for oscillatory kernels. SIAM J. Sci. Comput. 29(4), 1710–1737 (2007, electronic)

Engquist, B., Ying, L.: A fast directional algorithm for high frequency acoustic scattering in two dimensions. Commun. Math. Sci. 7(2), 327–345 (2009)

Francia, G.T.D.: Degrees of freedom of an image. J. Opt. Soc. Am. 59(7), 799–803 (1969)

Giebermann, K.: Schnelle Summationsverfahren zur numerischen Lösung von Integralgleichungen für Streuprobleme im \(\mathbb{R}^3\). Ph.D. thesis, Universität Karlsruhe (1997)

Grasedyck, L., Hackbusch, W.: Construction and arithmetics of \({\cal H}\)-matrices. Computing 70, 295–334 (2003)

Greengard, L.: The Rapid Evaluation of Potential Fields in Particle Systems. MIT Press, Cambridge (1988)

Greengard, L.F., Rokhlin, V.: A fast algorithm for particle simulations. J. Comput. Phys. 73(2), 325–348 (1987)

Greengard, L.F., Rokhlin, V.: A new version of the fast multipole method for the Laplace equation in three dimensions. In: Acta Numerica, vol. 6, pp. 229–269. Cambridge University Press, Cambridge (1997)

Hackbusch, W.: A sparse matrix arithmetic based on \(\cal H\)-matrices. Part I: introduction to \(\cal H\)-matrices. Computing 62(2), 89–108 (1999)

Hackbusch, W., Börm, S.: Data-sparse approximation by adaptive \({\cal H}^{2}\)-matrices. Computing 69(1), 1–35 (2002)

Hackbusch, W., Khoromskij, B.N.: A sparse \(\cal H\)-matrix arithmetic: general complexity estimates. J. Comput. Appl. Math. 125(1–2), 479–501 (2000). Numerical analysis 2000, vol. VI, Ordinary differential equations and integral equations

Hackbusch, W., Khoromskij, B.N.: A sparse \(\cal H\)-matrix arithmetic. Part II: application to multi-dimensional problems. Computing 64(1), 21–47 (2000)

Hackbusch, W., Khoromskij, B.N., Sauter, S.A.: On \({\cal H}^{2}\)-matrices. In: Bungartz, H.J., Hoppe, R.H.W., Zenger, C. (eds.) Lectures on Applied Mathematics, pp. 9–29. Springer, Berlin (2000)

Hackbusch, W., Nowak, Z.P.: On the fast matrix multiplication in the boundary element method by panel clustering. Numer. Math. 54(4), 463–491 (1989)

Messner, M., Schanz, M., Darve, E.: Fast directional multilevel summation for oscillatory kernels based on Chebyshev interpolation. J. Comput. Phys. 231, 1175–1196 (2012)

Michielssen, E., Boag, A.: A multilevel matrix decomposition for analyzing scattering from large structures. IEEE Trans. Antennas Propag. 44, 1086–1093 (1996)

Rokhlin, V.: Rapid solution of integral equations of classical potential theory. J. Comput. Phys. 60(2), 187–207 (1985)

Rokhlin, V.: Rapid solution of integral equations of scattering theory in two dimensions. J. Comput. Phys. 86(2), 414–439 (1990)

Rokhlin, V.: Diagonal forms of translation operators for the Helmholtz equation in three dimensions. Appl. Comput. Harmon. Anal. 1(1), 82–93 (1993)

Sauer, T., Xu, Y.: On multivariate Lagrange interpolation. Math. Comput. 64(211), 1147–1170 (1995)

Tyrtyshnikov, E.E.: Mosaic-skeleton approximations. Calcolo 33(1–2), 47–57 (1998). Toeplitz matrices: structures, algorithms and applications (Cortona, 1996)

Ying, L., Biros, G., Zorin, D.: A kernel-independent adaptive fast multipole algorithm in two and three dimensions. J. Comput. Phys. 196(2), 591–626 (2004)

Author information

Authors and Affiliations

Corresponding author

Additional information

This work was supported by the DFG project BE2626/3-1.

Rights and permissions

About this article

Cite this article

Bebendorf, M., Kuske, C. & Venn, R. Wideband nested cross approximation for Helmholtz problems. Numer. Math. 130, 1–34 (2015). https://doi.org/10.1007/s00211-014-0656-7

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-014-0656-7