Abstract

We discuss the fattening phenomenon for the evolution of sets according to their nonlocal curvature. More precisely, we consider a class of generalized curvatures which correspond to the first variation of suitable nonlocal perimeter functionals, defined in terms of an interaction kernel K, which is symmetric, nonnegative, possibly singular at the origin, and satisfies appropriate integrability conditions. We prove a general result about uniqueness of the geometric evolutions starting from regular sets with positive K-curvature in \({\mathbb {R}}^n\) and we discuss the fattening phenomenon in \({\mathbb {R}}^2\) for the evolution starting from the cross, showing that this phenomenon is very sensitive to the strength of the interactions. As a matter of fact, we show that the fattening of the cross occurs for kernels with sufficiently large mass near the origin, while for kernels that are sufficiently weak near the origin such a fattening phenomenon does not occur. We also provide some further results in the case of the fractional mean curvature flow, showing that strictly starshaped sets in \({\mathbb {R}}^n\) have a unique geometric evolution. Moreover, we exhibit two illustrative examples in \({\mathbb {R}}^2\) of closed nonregular curves, the first with a Lipschitz-type singularity and the second with a cusp-type singularity, given by two tangent circles of equal radius, whose evolution develops fattening in the first case, and is uniquely defined in the second, thus highlighting the high sensitivity of the fattening phenomenon to the regularity of the initial datum. The latter example is in striking contrast to the classical case of the (local) curvature flow, where two tangent circles always develop fattening. As a byproduct of our analysis, we also provide a simple proof of the fact that the cross in \({\mathbb {R}}^2\) is not a K-minimal set for the nonlocal perimeter functional associated to K.

1 Introduction

In this paper we are interested in the analysis of the fattening phenomenon for evolutions of sets according to nonlocal curvature flows. Fattening is a particular kind of singularity which arises in the evolution of boundaries by their (local or nonlocal) curvatures, and more generally in geometric evolutions of manifolds, and is related to nonuniqueness of geometric solutions to the flow. The fattening phenomenon has been studied for the mean curvature flow for a long time, and a complete characterization of the initial data which develop fattening is still missing. In the case of the plane, it is known that smooth compact level curves never develop an interior, due to a result by Grayson on the evolution of regular compact curves. This result is no longer valid for the fractional mean curvature flow in the plane, as proved recently in [12]. We recall that examples of fattening of nonregular or noncompact curves in the plane for the mean curvature flow have been given in [3, 13, 15], where, in particular, the fattening of the evolution starting from the cross is proved. Finally, nonfattening for strictly starshaped initial data is proved in [20], whereas nonfattening of convex and mean convex initial data is proved in [1], see also [2, 4].

In this paper we start the analysis of the fattening phenomenon (mostly in the plane) for general nonlocal curvature flows. This problem has not yet been considered in the literature apart from the result in [9] about nonfattening for convex initial data under fractional mean curvature evolution in any space dimension.

Here we will show that some results which are true for the mean curvature flow are still valid, such as nonfattening for regular initial data with positive curvature or strictly starshaped initial data.

Nevertheless, in general, some different behaviors with respect to the mean curvature flow arise, due to the fact that the fattening phenomenon is very sensitive to the strength of the nonlocal interactions. We discuss in particular the evolution starting from the cross in the plane, which develops fattening only if the interactions are sufficiently strong. Moreover, we show an example of a closed curve with positive curvature which fattens, and an example of a closed curve whose evolution by fractional mean curvature flow does not present fattening, differently from the case of the evolution by mean curvature flow.

We now introduce the mathematical setting in which we work. Given an initial set \(E_0\subset {\mathbb {R}}^n\), we define its evolution \(E_t\) for \(t>0\) according to a nonlocal curvature flow as follows: the velocity at a point \(x\in \partial E_t\) is given by

where \(\nu \) is the outer normal at \(\partial E_t\) in x. The quantity \(H_E^K(x)\) is the K-curvature of E at x, which is defined in the forthcoming formula (1.4). More precisely, we take a function \(K:{\mathbb {R}}^n{\setminus }\{0\}\rightarrow [0,+\infty )\) which is a rotationally invariant kernel, namely

for some \(K_0:(0,+\infty ) \rightarrow [0,+\infty )\). We assume that

Given \(E\subset {\mathbb {R}}^n\) and \(x\in \partial E\), we define the K-curvature of E at x by

where, as usual,

We point out that (1.3) is a very mild integrability assumption, compatible with the structure of nonlocal minimal surfaces (see e.g. condition (1.5) in [11]) and which fits the requirements in [8, 16] in order to have existence and uniqueness for the level set flow associated to (1.1) (see Appendix A for the details about this matter).
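The displayed formulas (1.1) to (1.4) are referred to throughout but are not reproduced in this copy. As a reading aid, the following sketch records the forms they take under the conventions that are standard for nonlocal curvature flows. The normalization and the precise statement of (1.3) are assumptions, chosen to be consistent with the remarks above (rotational invariance of K, integrability of the fractional kernels, and shrinking of sets with positive curvature).

```latex
% Assumed forms of (1.1)-(1.4); signs chosen so that convex sets have H >= 0 and shrink.
\begin{align*}
\partial_t x\cdot \nu &= -H^K_{E_t}(x), \tag{1.1}\\
K(x) &= K_0(|x|), \tag{1.2}\\
\min\{1,|x|\}\,K(x) &\in L^1({\mathbb{R}}^n), \tag{1.3}\\
H^K_E(x) &:= \lim_{\varepsilon\searrow 0}\int_{{\mathbb{R}}^n\setminus B_\varepsilon(x)}
\big(\chi_{{\mathbb{R}}^n\setminus E}(y)-\chi_E(y)\big)\,K(x-y)\,dy. \tag{1.4}
\end{align*}
% chi_E is the characteristic function of E: chi_E(y) = 1 if y is in E, and 0 otherwise.
```

With this sign convention, enlarging a set can only decrease its curvature at a common boundary point: if \(E\subseteq F\) and \(x\in \partial E\cap \partial F\), then \(H^K_F(x)\leqslant H^K_E(x)\), since the integrand in (1.4) is pointwise smaller for F.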

Furthermore, when \(K(x)= \frac{1}{|x|^{n+s}}\) for some \(s\in (0,1)\), we will denote the K-curvature of a set E at a point x as \(H^s_E(x)\), and we indicate it as the fractional mean curvature of the set E at x.
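As a quick check, the fractional kernel fits the integrability framework. The computation below assumes that (1.3) requires \(\min \{1,|x|\}K(x)\) to be integrable in \({\mathbb {R}}^n\) (the displayed condition is not reproduced in this copy, so this reading is an assumption). It uses polar coordinates, with \(|{\mathbb {S}}^{n-1}|\) denoting the surface measure of the unit sphere.

```latex
\[
\int_{{\mathbb{R}}^n}\frac{\min\{1,|x|\}}{|x|^{n+s}}\,dx
=|{\mathbb{S}}^{n-1}|\left(\int_0^1\rho^{-s}\,d\rho+\int_1^{+\infty}\rho^{-1-s}\,d\rho\right)
=|{\mathbb{S}}^{n-1}|\left(\frac{1}{1-s}+\frac{1}{s}\right)<+\infty .
\]
```

Both integrals converge precisely because \(s\in (0,1)\): the first one diverges at \(s=1\) and the second one at \(s=0\).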

While the setting in (1.4) makes clear sense for sets with \(C^{1,1}\)-boundaries, as customary we also use the notion of K-curvatures for sets which are locally the graphs of continuous functions: in this case, the K-curvature may be also infinite and the definition is in the sense of viscosity (see [8, 16] and Section 5 in [6]).

We observe that the curvature defined in (1.4) is the first variation of the following nonlocal perimeter functional, see [7, 17],

and so the geometric evolution law in (1.1) can be interpreted as the \(L^2\) gradient flow of this perimeter functional, as proved in [8].
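The perimeter functional (1.5) is likewise not displayed in this copy. A sketch of the form commonly used for kernels of this type, which is an assumption as far as the normalization is concerned, is the following. It measures the interaction between a set and its complement.

```latex
\[
\mathrm{Per}_K(E):=\int_E\int_{{\mathbb{R}}^n\setminus E}K(x-y)\,dy\,dx .
\]
```

For \(K(x)=\frac{1}{|x|^{n+s}}\) this expression is the fractional perimeter, up to multiplicative constants.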

The existence and uniqueness of solutions for the K-curvature flow in (1.1) in the viscosity sense have been investigated in [16] by introducing the level set formulation of the geometric evolution problem (1.1) and a proper notion of viscosity solution. We refer to [8] for a general framework for the analysis via the level set formulation of a wide class of local and nonlocal translation-invariant geometric flows.

The level set flow associated to (1.1) can be defined as follows. Given an initial set \(E\subset {\mathbb {R}}^n\) and \(C:=\partial E\), we choose a bounded Lipschitz continuous function \(u_E:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) such that

Let also \(u_E(x,t)\) be the viscosity solution of the following nonlocal parabolic problem

Then the level set flow of C is given by

We associate to this level set the outer and inner flows defined as follows:

We observe that the equation in (1.6) is geometric, so if we replace the initial condition with any function \(u_0\) with the same level sets \(\{u_0 \geqslant 0\}\) and \(\{ u_0 > 0 \}\), the evolutions \(E^+(t)\) and \(E^-(t)\) remain the same. For more details, we refer to Appendix A.
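The displayed formulas (1.6) and (1.7), together with the definitions of the inner and outer flows, are missing in this copy. The sketch below records one formulation that is consistent with the surrounding text and with the level set approach of [8, 16]. The precise form of the equation, in particular the choice of the superlevel set inside the curvature, is an assumption.

```latex
\begin{align*}
&C=\{x : u_E(x)=0\}=\partial\{x : u_E(x)>0\},\qquad E=\{x : u_E(x)\geqslant 0\},\\
&\partial_t u+|Du|\,H^K_{\{y \,:\, u(y,t)\geqslant u(x,t)\}}(x)=0,\qquad u(x,0)=u_E(x), \tag{1.6}\\
&\Sigma_E(t):=\{x : u_E(x,t)=0\}, \tag{1.7}\\
&E^+(t):=\{x : u_E(x,t)\geqslant 0\},\qquad E^-(t):=\{x : u_E(x,t)>0\}.
\end{align*}
```

In this notation \(\Sigma _E(t)=E^+(t){\setminus } E^-(t)\), so the zero level set is exactly the region on which the outer and inner flows disagree.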

The K-curvature flow has been recently studied from different perspectives, in particular in the case of the fractional mean curvature flow, taking into account geometric features such as conservation of the positivity of the fractional mean curvature, conservation of convexity and formation of neckpinch singularities, see [9, 12, 18].

In this paper, we analyze the possible lack of uniqueness for the geometric evolution, i.e. the situation in which \(\partial E^+(t)\ne \partial E^-(t)\), in terms of the fattening properties of the zero level set of the viscosity solutions. To this end, we give the following definition:

Definition 1.1

We say that fattening occurs at time \(t>0\) if the set \(\Sigma _E(t)\), defined in (1.7), has nonempty interior, i.e.

We point out that in [9, Section 6], in the case of fractional (anisotropic) mean curvature flow in any dimension, it has been proved that if the initial set \(E\subseteq {\mathbb {R}}^n\) is convex, then the evolution remains convex for all \(t>0\) and \(E^+(t)=\overline{E^{-}(t)}\), so fattening never occurs.

We start with a result about nonfattening of bounded regular sets with positive K-curvature (for the classical case of the mean curvature flow, see [1, 2, 4]).

Theorem 1.2

Let (1.2) and (1.3) hold. Let \(E\subset {\mathbb {R}}^n\) be a compact set of class \(C^{1,1}\) and assume that there exists \(\delta >0\) such that

Then \(\Sigma _{ E}(t)\) has empty interior for every t.

We point out that, to get the result in Theorem 1.2, the assumption on the regularity of the sets cannot be completely dropped: indeed in the forthcoming Theorem 1.10 we will provide an example of a bounded set in the plane, with a “Lipschitz-type” singularity and with positive K-curvature, which develops fattening.

1.1 Evolution of the cross

We consider now the cross in \({\mathbb {R}}^2\), i.e.

It is well known, see [13], that the evolution of the cross according to the curvature flow immediately develops fattening for \(t>0\). So, an interesting question is whether the same phenomenon appears also for general nonlocal curvature flows such as (1.1), for kernels which satisfy (1.2) and (1.3). We show that actually the fattening feature in nonlocal curvature flows is very sensitive to the specific properties of the kernel since it depends on the strength of the interactions: we identify in particular two classes of kernels, giving fattening of the cross in the first class, i.e. for kernels which satisfy (1.13), (1.14) below, and nonfattening of the cross in the second class, i.e. for kernels which satisfy (1.19) below.

Remark 1.3

Recalling the notation in (1.7), we observe that

Indeed, up to a rotation of coordinate system, we write \({\mathcal {C}}=\{(y_1,y_2)\in {\mathbb {R}}^2\text { s.t. }y_1y_2\geqslant 0\}\). Define a bounded Lipschitz function \(u_0\) such that \(u_0(y_1,y_2)=u_0(-y_1,-y_2)=-u_0(-y_1,y_2)=-u_0(y_1,-y_2)\), and such that \({\mathcal {C}}= \{(y_1,y_2)\in {\mathbb {R}}^2\text { s.t. }u_0(y_1,y_2)\geqslant 0\}\). Then the solution to (1.6) with initial condition \(u_0\) satisfies

see Appendix A. In particular this implies that \(\big \{ (y_1,y_2)\in {\mathbb {R}}^2 { \text{ s.t. } } y_1y_2=0\big \}\subseteq \big \{ (y_1,y_2)\in {\mathbb {R}}^2 { \text{ s.t. } }u(y_1,y_2, t)=0\big \}= \Sigma _{{\mathcal {C}}}(t)\), that is (1.11) once we rotate back.
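For concreteness, one admissible choice of the function \(u_0\) in Remark 1.3 is sketched below. It is an illustration of ours and is not taken from the original text.

```latex
\[
u_0(y_1,y_2):=\frac{y_1y_2}{1+y_1^2+y_2^2}.
\]
% Bounded: |u_0| <= 1/2, since 2|y_1 y_2| <= y_1^2 + y_2^2.
% Lipschitz: |Du_0(y)| <= |y|/(1+|y|^2) <= 1/2.
% Symmetries: u_0(-y_1,-y_2) = u_0(y_1,y_2) and u_0(-y_1,y_2) = u_0(y_1,-y_2) = -u_0(y_1,y_2).
% Zero superlevel set: {u_0 >= 0} = {y_1 y_2 >= 0}, the rotated cross.
```

The odd symmetry with respect to each coordinate axis is the property that forces the function to vanish on both axes.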

We introduce the function

In our framework, the function \(\Psi (r)\) plays a crucial role in quantitative K-curvature estimates, also in view of a suitable barrier that will be discussed in Proposition 3.1 later on. Notice that when \(K(x)=\frac{1}{|x|^{2+s}}\) with \(s\in (0,1)\), the function \(\Psi (r)\) reduces, up to multiplicative constants, to \(\frac{1}{r^s}\).
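The scaling claim can be checked directly. The definition (1.12) is not displayed in this copy, so the sketch assumes only that \(\Psi (r)\) is the integral of K over a dilated domain \(rA\), where \(A\subset {\mathbb {R}}^2\) is a fixed bounded measurable set at positive distance from the origin (a hypothetical placeholder for the actual domain of integration).

```latex
\[
\Psi(r)=\int_{rA}\frac{dx}{|x|^{2+s}}
=\int_{A}\frac{r^{2}\,dz}{r^{2+s}\,|z|^{2+s}}
=\frac{1}{r^{s}}\int_{A}\frac{dz}{|z|^{2+s}}
=\frac{\Psi(1)}{r^{s}},
\qquad\text{with the change of variable } x=rz .
\]
```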

We suppose that the kernel K satisfies

We will need also the following technical assumption: there exists \(r_0>0\) such that for all \(r\in (0,r_0)\),

This assumption is trivially satisfied if \(K>0\) in \(B_{(3\sqrt{2}+1)r_0}\).

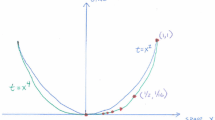

Under these conditions, we have that, for short times, the set \(\Sigma _{ {{\mathcal {C}}} }(t)\) contains a ball centered at the origin (see Fig. 1), according to the following result:

Fig. 1 The fattening phenomenon described in Theorem 1.4

Theorem 1.4

Assume that (1.2), (1.3), (1.13) and (1.14) hold true. For \(r\in (0,1)\), we define

Then, there exists \(T>0\) such that

for any \(t\in (0,T)\), where r(t) is defined implicitly by

We notice that the setting in (1.15) is well defined in view of the structural assumption in (1.13) and \(\Lambda (r)\), as defined in (1.15), is strictly increasing, which makes the implicit definition in (1.17) well posed.

Remark 1.5

We point out that the structural assumptions in (1.3) and (1.13) are satisfied by kernels of the form \(K(x)= \frac{1}{|x|^{2+s}}\) for some \(s\in (0,1)\), or more generally by kernels such that

Indeed, the upper bound for K in (1.18) plainly implies (1.3). Moreover, the lower bound for K in (1.18) implies that

where \(C_0>0\) is independent of r, and this yields (1.13). Finally, as for (1.14), we observe that it is trivially satisfied.

Note that r(t) defined in (1.17) satisfies \(r(t)\geqslant C_0 t^{\frac{1}{\alpha -1}}\), in particular, in the case \(K(x)=\frac{1}{|x|^{2+s}}\), r(t) is proportional to \(t^{\frac{1}{1+s}}\).
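To see where the exponent comes from, consider the model case \(\Psi (r)=c\,r^{-s}\) with \(c>0\). The sketch assumes that (1.15) and (1.17) have the form \(\Lambda (r)=\int _0^r \frac{d\rho }{\Psi (\rho )}\) and \(\Lambda (r(t))=t\), possibly up to multiplicative constants. These displays are not reproduced in this copy, so the normalization is an assumption.

```latex
\[
\Lambda(r)=\int_0^r\frac{\rho^{s}}{c}\,d\rho=\frac{r^{1+s}}{c\,(1+s)},
\qquad
\Lambda(r(t))=t\ \Longrightarrow\ r(t)=\big(c\,(1+s)\,t\big)^{\frac{1}{1+s}} .
\]
```

Differentiating \(\Lambda (r(t))=t\) gives \(\dot{r}(t)=\Psi (r(t))\), so under this reading the radius grows at a speed dictated by \(\Psi \).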

As a counterpart of Theorem 1.4, we show that the fattening phenomenon does not occur in straight crosses when the interaction kernel has sufficiently strong integrability properties. Namely, we have that:

Theorem 1.6

Assume (1.2) and (1.3). Suppose also that

for any \(r>0\), and that

Then

Remark 1.7

We notice that conditions (1.3), (1.19) and (1.20) are satisfied by kernels K such that \(K_0\) is nonincreasing, and which satisfy

Indeed, we observe first that in this case (1.3) is automatically satisfied. Moreover, from (1.22), we can take \(K_1:=K_0\) in (1.19) and have that

up to renaming \(C>0\), and so (1.20) is satisfied.

We also observe that condition (1.22) is somewhat complementary to (1.18).

1.2 A remark on K-minimal cones

As a byproduct of the results that we discussed in Sect. 1.1, we observe that actually the cross is not a K-minimal set for the K-perimeter in \({\mathbb {R}}^2\), obtaining an alternative (and more general) proof of a result discussed in Proposition 5.2.3 of [5] for the fractional perimeter (see [19] for a full regularity theory of fractional minimal cones in the plane).

For this, we define

Then, we say that E is a minimizer for \(\mathrm {Per}_K\) in the ball \(B_R\) if

for every measurable set F such that \(E{\setminus } B_R=F{\setminus } B_R\).

Also, a measurable set \(E\subset {\mathbb {R}}^2\) is said to be K-minimal for the K-perimeter if it is a minimizer for \(\mathrm {Per}_K\) in every ball \(B_R\). Then, we have:

Proposition 1.8

Let (1.2) and (1.3) hold, and assume that K is not identically zero. Then \({\mathcal {C}}\subseteq {\mathbb {R}}^2\), as defined in (1.10), is not K-minimal for the K-perimeter.
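The localized perimeter (1.23) is not displayed in this copy. The form usually adopted for nonlocal perimeters in a bounded domain, recorded here as an assumption, keeps only the interactions between E and its complement in which at least one of the two points lies in \(B_R\).

```latex
\[
\mathrm{Per}_K(E,B_R):=\int_{E\cap B_R}\int_{{\mathbb{R}}^2\setminus E}K(x-y)\,dy\,dx
+\int_{E\setminus B_R}\int_{B_R\setminus E}K(x-y)\,dy\,dx .
\]
```

A localization of this kind allows one to compare E with competitors F that agree with E outside \(B_R\) even when the global perimeter is infinite, as can happen for unbounded sets such as the cross.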

1.3 Fractional curvature evolution of starshaped sets

Now we restrict ourselves to the case of homogeneous kernels K, i.e. we consider the case (up to multiplicative constants) in which

We start by observing that strictly starshaped sets never fatten, similarly to the case of the (local) curvature flow (see [20]). A similar result has also been observed in [9, Remark 6.4].

Proposition 1.9

Assume (1.24). Let \({\mathbb {S}}^{n-1}=\{\omega \in {\mathbb {R}}^n{ \text{ s.t. } } |\omega |=1\}\), \( f:{\mathbb {S}}^{n-1}\rightarrow (0, +\infty )\) be a continuous positive function and \(E\subset {\mathbb {R}}^n\) be such that

Then, the set \(\Sigma _{E}(t)\) has empty interior for all \(t>0\).

Now we restrict ourselves to the case of the plane, so \(n=2\). We show that in general, for starshaped sets E which do not satisfy (1.25), we can expect either fattening or nonfattening. We provide two different examples of such sets in \({\mathbb {R}}^2\), which are particularly interesting in our opinion, since they model two different types of singularities that can arise in the geometric evolution of closed curves in \({\mathbb {R}}^2\), that is the “Lipschitz-type” singularity, and the “cusp singularity”. The first example is the “double droplet” in Fig. 2, namely

where \({{\mathcal {G}}}_+\) is the convex hull of \(B_1(-1,1)\) with the origin, and \({{\mathcal {G}}}_-\) the convex hull of \(B_1(1,-1)\) with the origin. The second example is given by two tangent balls

We prove that the fattening phenomenon occurs in the first case, whereas it does not occur in the second. It is also interesting to observe that the evolution of \({\mathcal {O}}\) by curvature flow immediately develops fattening, see [3].

Fig. 3 The fattening phenomenon described in Theorem 1.10

We start by considering the evolution of the set \({{\mathcal {G}}}\) defined in (1.26). Note that this provides an example of a bounded set with positive K-curvature (being contained in a cross with zero K-curvature), whose evolution develops fattening near the origin, as sketched in Fig. 3 and detailed in the following statement.

Theorem 1.10

Assume (1.24) with \(n=2\). Then there exist \({{\hat{c}}} \), \(T>0\) such that

for any \(t\in (0,T)\), where

Remark 1.11

The same result as in Theorem 1.10 holds more generally for kernels \(K_0\) which satisfy (1.2), (1.3), (1.13) and

for some suitable \({{\overline{a}}}\geqslant {{\underline{a}}}>0\).

We now consider the case of two tangent balls as in (1.27), and we show that \({{\mathcal {O}}}(t)\) presents no fattening phenomenon, according to the statement below.

Theorem 1.12

Assume (1.24) with \(n=2\). Then the set \(\Sigma _{{\mathcal {O}}}(t)\) has empty interior for all \(t>0\).

The evolution of the double ball is sketched in Fig. 4: roughly speaking, the set shrinks at its surroundings, emanating some mass from the origin, but it does not possess “gray regions” at its boundary.

Fig. 4 The evolution of two tangent balls described in Theorem 1.12

The rest of the paper is organized as follows. Section 2 deals with the fact that the evolution starting from regular sets with positive K-curvature does not fatten and it contains the proof of Theorem 1.2. In Sect. 3 we prove the fattening of the evolution starting from the cross in \({\mathbb {R}}^2\), under assumption (1.13), as stated in Theorem 1.4.

In Sect. 4, we show that under assumption (1.19) the evolution starting from the cross in \({\mathbb {R}}^2\) does not fatten, but coincides with the cross itself, that is we prove Theorem 1.6.

Section 5 contains the proof of the fact that the cross in \({\mathbb {R}}^2\) is never a K-minimal set for \(\mathrm {Per}_K\), thus establishing Proposition 1.8.

The last three sections present the evolution under the fractional curvature flow, i.e., we assume that \(K(x)=\frac{1}{|x|^{n+s}}\). In particular, Sect. 6 is devoted to the proof of the fact that the fractional curvature evolution of strictly starshaped sets does not present fattening, which gives Proposition 1.9.

In Sect. 7, we show an example in \({\mathbb {R}}^2\) of a compact set with positive K-curvature, that is the double droplet, whose fractional curvature evolution presents fattening, thus proving Theorem 1.10.

Then, in Sect. 8 we show that the fractional curvature evolution starting from two tangent balls in \({\mathbb {R}}^2\) does not fatten, which establishes Theorem 1.12.

In Appendix A we review some basic facts about the level set flow; moreover, we provide some auxiliary results about comparison with geometric barriers and other basic properties of the evolution which are exploited in the proofs of the main results.

1.3.1 Notation

We denote by \(B_r\subset {\mathbb {R}}^n\) the ball centered at the origin of radius r and by \(B_r(x_1,x_2,\dots , x_n)\) the ball of radius r and center \(x=(x_1,x_2,\dots , x_n)\in {\mathbb {R}}^n\).

Moreover \(e_1=(1,0,\dots , 0)\), \(e_2=(0,1, 0,\dots , 0)\) etc., and \({\mathbb {S}}^{n-1}= \{\omega \in {\mathbb {R}}^n\text { s.t. }|\omega |=1\}\).

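For a given closed set E, and for any \(x\in {\mathbb {R}}^n{\setminus } E\) we denote by \(\text {dist}(x, E)\) the distance from x to E, that is

\[ \text {dist}(x, E):=\inf _{y\in E}|x-y|. \]

Moreover, we will denote by \(d_{E}(x)\) the signed distance function to \(C=\partial E\), with the sign convention of being positive inside E and negative outside, that is

\[ d_E(x):={\left\{ \begin{array}{ll} \text {dist}(x, C) &{} \text {if } x\in E,\\ -\text {dist}(x, C) &{} \text {if } x\in {\mathbb {R}}^n{\setminus } E. \end{array}\right. } \]

Finally, given two sets \(E, F\subset {\mathbb {R}}^n\), we denote by d(E, F) the distance between the boundary of E and the boundary of F, that is

\[ d(E,F):=\inf _{x\in \partial E,\; y\in \partial F}|x-y|. \]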

2 Regular sets of positive K-curvature and proof of Theorem 1.2

Proof of Theorem 1.2

We recall the continuity in \(C^{1,1}\) of the K-curvature proved in [8]. Namely, if \(E^\varepsilon \) is a family of compact sets with boundaries in \(C^{1,1}\) such that \(E^\varepsilon \rightarrow E\) in \(C^{1,1}\) (in the sense that the boundaries converge in \(C^{1}\) and are of class \(C^{1,1}\) uniformly in \(\varepsilon \)) and \(x^\varepsilon \in \partial E^\varepsilon \rightarrow x\in \partial E\), then \(H^K_{E^\varepsilon }(x^\varepsilon )\rightarrow H^K_E(x)\), as \( \varepsilon \searrow 0\).

Now, let E be as in the statement of Theorem 1.2, and define, for \(r>0\),

Then, using also (1.9), we find that there exists \(\varepsilon _0>0\) such that, for all \(\varepsilon \in (0, \varepsilon _0) \), there exists \(0<\delta (\varepsilon )\leqslant \delta \) such that

Fix \(\varepsilon <\varepsilon _0\) and let \({\bar{\delta }}:=\inf _{\eta \in [0, \varepsilon ]} \delta (\eta )>0\). Fix \(0<h<{{\bar{\delta }}}\). For all \(t\in \left[ 0, \frac{\varepsilon }{{{\bar{\delta }}}}\right] \) we define

We observe that C(t) is a supersolution to (1.1), in the sense that it satisfies (A.6). Indeed,

Since \(E\subseteq E^\varepsilon =C(0)\), by Proposition A.10, we get that

This implies that \(E^{+}(s)\subseteq E\) for all \(s\in \left[ 0, \frac{\varepsilon }{{{\bar{\delta }}}}\right] \) and for all \(h<{{\bar{\delta }}}\) and moreover that

Then, by the Comparison Principle in Corollary A.8, we get that

Therefore, recalling Proposition A.12, we get

This gives the desired statement in Theorem 1.2. \(\square \)

3 K-curvature of the perturbed cross and proof of Theorem 1.4

In this section, our state space is \({\mathbb {R}}^2\) and we exploit the notation in (1.12) and consider the cross \({{\mathcal {C}}}\subseteq {\mathbb {R}}^2\) introduced in (1.10). Furthermore, we define, for any \(r>0\), the “perturbed cross”

Then, the following result holds true:

Proposition 3.1

Assume that (1.2) and (1.3) hold true in \({\mathbb {R}}^2\). Then, we have that

for any \(p\in \partial { {{\mathcal {C}}}_r }\). Also, for any \(t\in [-r,r]\),

Proposition 3.1 provides the cornerstone to detect the fattening phenomenon of the K-curvature flow emanating from the cross and leads to the proof of Theorem 1.4. To prove Proposition 3.1, we give the following auxiliary result:

Lemma 3.2

Assume that (1.2) and (1.3) hold true in \({\mathbb {R}}^2\). Then, for any \(t\in [-r,r]\),

Proof

Let

and

see Fig. 5. Notice that \({{\mathcal {C}}}_r\) is the disjoint union of \({{\mathcal {D}}}_r\) and \({{\mathcal {T}}}_r\), hence

while \({\mathbb {R}}^2{\setminus }{{\mathcal {D}}}_r\) is the disjoint union of \({\mathbb {R}}^2{\setminus }{{\mathcal {C}}}_r\) and \({{\mathcal {T}}}_r\), which gives that

Hence, we find that

Now, we claim that, for any \(t\in [-r,r]\),

To this end, we partition \({\mathbb {R}}^2\) into different regions, as depicted in Fig. 6, and we use the notation, for each set \(Y\subseteq {\mathbb {R}}^2\),

In this way, we can write (1.4) as

On the other hand, we can use symmetric reflections across the horizontal straight line passing through the pole (t, r) to conclude that \({{\mathcal {H}}}(U)={{\mathcal {H}}}(U')\). Similarly, we see that \({{\mathcal {H}}}(V)={{\mathcal {H}}}(V')\) and \({{\mathcal {H}}}(W)={{\mathcal {H}}}(W')\). As a consequence, the identity in (3.7) becomes

Now we consider the straight line \(\ell :=\{x_2=x_1-t+r\}\). Notice that \(\ell \) passes through the point (t, r) and it is parallel to two edges of the cross \({{{\mathcal {C}}}_r}\). Considering the framework in Fig. 6, reflecting the set D across \(\ell \) we obtain a set \(D'\subseteq B\), and we write \(B=D'\cup E\), for a suitable slab E. Similarly, we reflect the set A across \(\ell \) to obtain a set \( A'\) which is contained in C, and we write \(C= A'\cup F\), for a suitable slab F, see Fig. 7.

In further detail, if \(T:{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\) is the reflection across \(\ell \), we have that \(T(t,r)=(t,r)\) and \(|T(x)-(t,r)|=|T(x)-T(t,r)|=|x-(t,r)|\) for every \(x\in {\mathbb {R}}^2\), and thus, by (1.2),

Accordingly, since \(D=T(D')\),

and similarly

Now we consider the straight line \(\ell ':=\{x_2=-x_1+t+r\}\). Notice that \(\ell '\) passes through the point (t, r) and it is perpendicular to \(\ell \). We let \(E'\) be the reflection across \(\ell '\) of the set E and we notice that \(E'\supseteq F\). Therefore

From this, (3.9) and (3.10), we obtain

This and (3.8) imply the desired result in (3.5).

and this gives the desired result. \(\square \)
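For the reader's convenience, the two reflections used in the proof of Lemma 3.2 can be written explicitly. The notation \(T'\) for the reflection across \(\ell '\) is ours and does not appear in the original text.

```latex
\begin{align*}
T(x_1,x_2)&=(x_2+t-r,\;x_1-t+r) &&\text{(reflection across } \ell=\{x_2=x_1-t+r\}),\\
T'(x_1,x_2)&=(t+r-x_2,\;t+r-x_1) &&\text{(reflection across } \ell'=\{x_2=-x_1+t+r\}).
\end{align*}
% Both maps fix the pole: T(t,r) = T'(t,r) = (t,r).
% Both are isometries: T(x)-(t,r) = (x_2-r, x_1-t) and T'(x)-(t,r) = (r-x_2, t-x_1),
% hence |T(x)-(t,r)| = |T'(x)-(t,r)| = |x-(t,r)|.
```

Since by (1.2) the kernel depends only on the distance from the pole (t, r), integrating K centered at the pole over a set or over its image under either reflection gives the same value.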

With this, we are now in the position of completing the proof of Proposition 3.1 via the following argument:

Proof of Proposition 3.1

The claim in (3.3) follows from Lemma 3.2. In addition, we have that \({{\mathcal {C}}}\subset {{\mathcal {C}}}_r\), due to (3.1). We also observe that if \(p\in (\partial {{\mathcal {C}}}_r){\setminus } [-r,r]^2\), then \(p\in \partial {{\mathcal {C}}}\). Consequently, by (1.4), for any \(p\in (\partial {{\mathcal {C}}}_r){\setminus } [-r,r]^2\), we have that

Also, by symmetry, we see that \(H^K_{{\mathcal {C}}}(p)=0\) at any point \(p\in \partial {{\mathcal {C}}}\), hence (3.11) gives that \(H^K_{{{\mathcal {C}}}_r}(p)\leqslant 0\) for any \(p\in (\partial {{\mathcal {C}}}_r){\setminus } [-r,r]^2\). Since this inequality is also valid when \( p\in (\partial {{\mathcal {C}}}_r)\cap [-r,r]^2\), due to (3.3), the proof of (3.2) is complete. \(\square \)

With Proposition 3.1, we can now construct inner and outer barriers as in Corollary A.11 to complete the proof of Theorem 1.4. This auxiliary construction goes as follows.

Lemma 3.3

Let \({{\mathcal {C}}}_r\) be as in (3.1). Let \(R:=3\sqrt{2}\,r\) and define, for \(\lambda \in \left[ 0,\frac{r}{2}\right) \),

Then, for any \(p\in (\partial {{\mathcal {C}}}_r^\lambda ){\setminus } B_R\), we have that \(H^K_{{{\mathcal {C}}}_r^\lambda }(p)\leqslant 0\).

Proof

We observe that if \(p\in (\partial {{\mathcal {C}}}_r^\lambda ) {\setminus } B_R\), then \(\partial {{\mathcal {C}}}_r^\lambda \) in the vicinity of p is a segment, and there exists a vertical translation of \({{\mathcal {C}}}\) by a vector \(v_0:=\pm \sqrt{\lambda }\,e_2\) such that \(p\in {{\mathcal {C}}}+v_0\) and \({{\mathcal {C}}}+v_0\subset {{\mathcal {C}}}_r^\lambda \), see Fig. 8. From this, we find that

as desired. \(\square \)

With this, we are ready to complete the proof of Theorem 1.4, by arguing as follows.

Proof of Theorem 1.4

The proof is based on the construction of suitable families of geometric sub and supersolutions starting from the perturbed cross \({\mathcal {C}}_r\), as defined in (3.1), to which we apply Corollary A.11.

We observe that

Moreover, we see that

These observations, together with the Comparison Principle in Theorem A.5 and Remark A.6, imply that

Analogously, one can define

Let \(\Psi \) be as defined in (1.12). For fixed \(r\in (0,r_0)\), where \(r_0\) is as in (1.14), we define \(r_*(t)\) to be the solution to the ODE

with initial datum \(r_*(0)=r\). We fix \(T>0\) such that \(r_*(t)<r_0\) for all \(t\in [0,T]\). Recalling the definition of \(\Lambda \) in (1.15), it is easy to check that

Now, by (1.17) and (3.16), we see that

Now, recalling the setting in (3.12), we take into account the sets \({{\mathcal {C}}}_{r_*(t)}\) and \({{\mathcal {C}}}_{r_*(t)}^\lambda \), with \(\lambda \in \left[ 0,\frac{r}{2}\right) \) and \(t\in [0,T]\), and we claim that these sets satisfy the assumptions in Corollary A.11, item (ii). To this end, we observe that, in the vicinity of the angular points of \({{\mathcal {C}}}_r\), the complement of \({{\mathcal {C}}}_r\) is a convex set, and therefore condition (A.9) is satisfied by \({{\mathcal {C}}}_{r_*(t)}\). Also, we take

Notice that \(\delta >0\) thanks to (1.13) and (1.14). Then, by Proposition 3.1 and (3.15), we get that at any point \(x=(x_1,x_2)\) of \(\partial {{\mathcal {C}}}_{r_*(t)}\) with \(x_2=\pm r_*(t)\), we have that

In addition, if \(x=(x_1,x_2)\in (\partial {{\mathcal {C}}}_{r_*(t)})\cap B_{4R}\) and \(|x_2|> r_*(t)\), we have that

This and (3.18) give that condition (A.8) is fulfilled by \({{\mathcal {C}}}_{r_*(t)}\).

Furthermore, in light of Lemma 3.3, we know that, for any \(x\in (\partial {{\mathcal {C}}}_{r_*(t)}^\lambda ) {\setminus } B_R\),

which says that condition (A.15) is fulfilled by \({{{\mathcal {C}}}_{r_*(t)}^\lambda }\).

Therefore, we are in the position of using Corollary A.11, item (ii). In this way, we find that

Hence, recalling (3.17),

Taking intersections, in view of (3.13), we obtain that

Analogously, one can use the setting in (3.14), combined with Corollary A.11, item (i), and deduce that

which implies (1.16), as desired. \(\square \)

4 Moving boxes, weak interaction kernels and proof of Theorem 1.6

To simplify some computations, in this section we perform a rotation of coordinates so that

To prove Theorem 1.6, it is convenient to consider “expanding boxes” built by the following sets. For any \(r\in (0,1)\), we define

see Fig. 9.

Then, recalling the notation in (1.19), we have:

Lemma 4.1

Assume that K satisfies (1.2), (1.3) and (1.19) in \({\mathbb {R}}^2\). Then, for any \(p\in \partial {{\mathcal {N}}}_r\),

Simplifications in the computations of Lemma 4.1

Proof

We denote by A and B the two connected components of \({ {{\mathcal {N}}}_r }\) and consider the straight line \(\ell \) passing through p and tangent to \({ {{\mathcal {N}}}_r }\) at p: see Fig. 10. By reflection across \(\ell \), we can consider the regions \(A'\) and \(B'\) which are symmetric to A and B, respectively. In particular, if \(p=(p_1,p_2)\) and \(M(x_1,x_2):=(2p_1-x_1,x_2)\), we have that \(M(A\cup B)=A'\cup B'\) and \(M(B_\varepsilon (p))=B_\varepsilon (p)\), and therefore

thanks to (1.2). Then, denoting by

which is the “white region” in Fig. 10, we see that

Up to rotations, we may assume that

Recalling (1.19), and that \(p_1=r\), we get

where \(\Phi \) is defined in (1.19). Moreover, since \(p_1=r\) and \(p_2\geqslant r\), and \(K_1\) is nonincreasing, we get that \(K_1(|x-p|)\leqslant K_1(|x-(r,r)|)\), for every \(x\in {\mathbb {R}}\times [-r,r]\). As a consequence,

From this and (4.5), and recalling (4.4), we obtain that

This and (4.3) give the desired result. \(\square \)

For \(\lambda \in (0,r)\) we define the sets

We observe that for any \(x\in \partial {{\mathcal {N}}}_{r}^\lambda \) there exists a unique point \(x'\in \partial {{\mathcal {N}}}_{r}\) such that \(|x-x'|=d({\mathcal {N}}_{r}^\lambda ,{\mathcal {N}}_{r})=\lambda \). Letting \(v_x:=x-x'\), it follows that \({{\mathcal {N}}}_{r}+v_x\subset {{\mathcal {N}}}_{r}^\lambda \), see Fig. 11. This and Lemma 4.1 give that

With this preliminary work, we can prove Theorem 1.6.

Proof of Theorem 1.6

We note that \({{\mathcal {M}}}_{r}:={{\mathcal {N}}}_{r}^{r/2}\subseteq {\mathcal {C}}\), where \({\mathcal {C}}\) is defined in (4.1) and \({{\mathcal {N}}}_{r}^{r/2}\) is defined in (4.6), with \(\lambda =r/2\). Moreover, we have that \(d({\mathcal {C}},{{\mathcal {M}}}_r)=r/2>0\). Hence, by Corollary A.8 we get that \( {{\mathcal {M}}}_{r}^+(t)\subseteq {\mathcal {C}}^-(t)\) for all \(t>0\). In particular, since

we see that

Our aim is to construct, starting from \({ {{\mathcal {M}}}_{r} }\), a continuous family of geometric subsolutions and then apply Proposition A.10. For fixed \(\varrho \in (0,1)\), we define

Notice that \(F_\varrho \) is strictly increasing, so we can consider its inverse \(G_\varrho \) in such a way that \(F_\varrho (G_\varrho (t))=t\). Then, for \(t\in [0, T]\), we set \( r_\varrho (t):= G_\varrho (t)\) and we consider the evolving sets \( { {{\mathcal {M}}}_{r_\varrho (t)} }\). We remark that

and so \(r_\varrho (0)=\varrho \). In addition, the outer normal velocity of \({ {{\mathcal {M}}}_{r_\varrho (t)} }\) is

So, if

we have that

for all \(x\in \partial {\mathcal {M}}_{r_\varrho (t)}\), thanks to (4.7).

We observe that (4.10) says that (A.8) is satisfied by \({\mathcal {M}}_{r_\varrho (t)}\). So, to exploit Corollary A.11, we now want to check that condition (A.15) is satisfied by the set

We exploit again the estimate (4.7) which gives that

Thus, in view of (4.9),

This gives that \({{\mathcal {M}}}_{r_\varrho (t)}^\lambda \) satisfies condition (A.15) and therefore we can apply Corollary A.11, item (ii).

Then, it follows that, for all \(\varrho \in (0,1)\),

Also, for any \(t>0\), we claim that

To prove this, we argue by contradiction and suppose that \(r_{\varrho _k}(t)\geqslant a_0\), for some \(a_0>0\) and some infinitesimal sequence \(\varrho _k\). Then,

This is in contradiction with (1.20) and so it proves (4.12).

In view of (4.12), we find that

So, recalling (4.8) and (4.11), we conclude that

Analogously, one can define

and see that

Putting together (4.13) and (4.14), we conclude that

and so \(\Sigma _{{\mathcal {C}}}(t)=\partial {\mathcal {C}}\), thus establishing (1.21). \(\square \)

5 K-minimal cones and proof of Proposition 1.8

In this section we show that \({\mathcal {C}}\subseteq {\mathbb {R}}^2\), as defined in (1.10), is never a K-minimal set, under the assumptions (1.2) and (1.3), namely we prove Proposition 1.8. This will be proved using the family of perturbed crosses \({\mathcal {C}}_r\) introduced in (3.1) and the fact that \(H^K_E\) is the first variation of the nonlocal perimeter \(\mathrm {Per}_K\) defined in (1.5), as shown in [8].

Proof of Proposition 1.8

With the notation in (1.10) and (3.1), we claim that there exists \(r>0\) such that, for all \(R>\sqrt{2}\,r\),

Let \(r>0\) and \(R>\sqrt{2}r\), so that \( {\mathcal {C}}_{r}{\setminus } B_R= {\mathcal {C}}{\setminus } B_R\). Let

Let \(\delta \in (0, r)\) and \(K_\delta (y):=K(y)(1-\chi _{B_\delta }(y))\). We define \(\mathrm {Per}_\delta (E)\) as in (1.5), \(\mathrm {Per}_\delta (E,B_R)\) as in (1.23), and \(H^\delta _E\) as in (1.4), with \(K_\delta \) in place of K. In this setting, we get that

We also observe that

Substituting this identity into (5.2), we find that

Now, given \(x=(x_1,x_2)\in W_r\), we have that \(x\in \partial {\mathcal {C}}_{r(x)}\), with \(r(x):=|x_2|\in (0,r]\), where the notation of (3.1) has been used. Then, by Lemma 3.2, we have that

where \(\Psi _\delta \) is as in (1.12) with \(K_\delta \) in place of K, that is

We write (5.4) as

Therefore, integrating over \(x\in W_r\),

We now observe that

and thus

Hence, plugging this information into (5.5), we conclude that

This and (5.3) give that

Now, as \(\delta \searrow 0\), we have that \(\mathrm {Per}_\delta ({\mathcal {C}}_{r}, B_R)\rightarrow \mathrm {Per}_K({\mathcal {C}}_{r}, B_R)\) and \(\mathrm {Per}_\delta ({\mathcal {C}}, B_R)\rightarrow \mathrm {Per}_K({\mathcal {C}}, B_R)\), by the Dominated Convergence Theorem, see [8]. Moreover, \(\Psi _\delta (s)\rightarrow \Psi (s)=\int _{B_{s/4}(7s/4,0)} K(x)\,dx\) a.e. and in \(L^1(0,1)\), again by the Dominated Convergence Theorem (observe that \(\Psi \in L^1(0,1)\) by assumption (1.3)).

So, letting \(\delta \searrow 0\) in (5.6), we end up with

Recalling that K is not identically zero, we take a Lebesgue point \(\tau _0\in (0,+\infty )\) such that \(K_0(\tau _0)>0\). Then,

Consequently, we take \(\varepsilon _0>0\) such that for all \(\varepsilon \in (0,\varepsilon _0]\) we have that

Then, if \({{\bar{\varepsilon }}}:=\min \left\{ \varepsilon _0,\frac{\tau _0}{100}\right\} \) and \( r\in \left[ \frac{4\tau _0}{7}-\frac{{{\bar{\varepsilon }}}}{14}, \frac{4\tau _0}{7}+\frac{{{\bar{\varepsilon }}}}{14}\right] \), we have that

Now we cover the ring \(A_r:=B_{({7r}/{4})+({r}/{8})}{\setminus } B_{({7r}/{4})-({r}/{8})}\) by \(N_0\) balls of radius \(r/4\) centered at \(\partial B_{7r/4}\), with \(N_0\) independent of r. Then

thanks to (1.12).
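The fact that \(N_0\) can be taken independent of r is a scaling statement, and it can be illustrated numerically. The following minimal sketch considers one particular covering, with centers equally spaced on \(\partial B_{7r/4}\) (an assumption made here for illustration, the text does not prescribe the position of the centers), and looks for the smallest number of such balls that covers \(A_r\). The function name `min_equally_spaced_balls` is hypothetical, and the value it returns is only an admissible choice of \(N_0\), not necessarily the one the authors have in mind.

```python
import math


def min_equally_spaced_balls(r):
    """Smallest n such that n open balls of radius r/4, with centers equally
    spaced on the circle of radius 7r/4, cover the ring
    7r/4 - r/8 <= |x| <= 7r/4 + r/8.

    Illustrative assumption: equally spaced centers.
    """
    center_radius = 7.0 * r / 4.0
    ball_radius = r / 4.0
    # The squared distance to a center is convex in the radial variable,
    # so it is enough to test the inner and outer radii of the ring.
    rho_values = (center_radius - r / 8.0, center_radius + r / 8.0)
    n = 1
    while True:
        # Largest angular offset between a point and its nearest center.
        theta = math.pi / n
        # Law of cosines: squared distance from (rho, theta) to (7r/4, 0).
        worst_sq = max(
            rho ** 2 + center_radius ** 2
            - 2.0 * rho * center_radius * math.cos(theta)
            for rho in rho_values
        )
        if worst_sq < ball_radius ** 2:
            return n
        n += 1


if __name__ == "__main__":
    for radius in (0.01, 1.0, 10.0):
        print(radius, min_equally_spaced_balls(radius))
```

Since every length in the construction is a multiple of r, the returned count is the same for every \(r>0\), which is the only property of \(N_0\) used in the argument.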

Using this, (5.8) and (5.9), we obtain that, for any \(r\in \left[ \frac{4\tau _0}{7}-\frac{{{\bar{\varepsilon }}}}{14}, \frac{4\tau _0}{7}+\frac{{{\bar{\varepsilon }}}}{14}\right] \),

Then, if \(r_0:=\frac{4\tau _0}{7}+\frac{{{\bar{\varepsilon }}}}{14}\), we have that

and therefore

where (5.10) has been used in the last inequality. In particular,

which, combined with (5.7), implies the claim in (5.1) with \(r:=r_0\).

Then, in light of (5.1), we get that \({\mathcal {C}}\) is not a K-minimal set, thus completing the proof of Proposition 1.8. \(\square \)

6 Strictly starshaped domains and proof of Proposition 1.9

Proof of Proposition 1.9

We observe that, due to the assumption in (1.25), for every \(\lambda >0\), we have that there exists \(\delta _\lambda >0\) such that the distance between \(\partial E\) and \(\partial (\lambda E)\) is at least \(\delta _\lambda \). Therefore, for any \(\lambda >1\), from Corollary A.8 and Lemma A.13, we deduce that

Then for \(\lambda >1\),

Also, by Proposition A.12,

Therefore we get

This gives the desired statement. \(\square \)

7 Perturbed double droplet and proof of Theorem 1.10

In this section, the state space is \({\mathbb {R}}^2\). Recalling the notation in (1.26), given \(r\in \left( 0,\frac{1}{2}\right) \) we set

where \({{\mathcal {G}}}_0\) is the union in \({\mathbb {R}}^2\) of \({{\mathcal {B}}}^{+}\), which is the convex envelope between \(B_1(\sqrt{2},0)\) and the origin, and \({{\mathcal {B}}}^{-}\), which is the convex envelope between \(B_1(-\sqrt{2},0)\) and the origin, see Fig. 12.

Now, having fixed \(\delta \in (0,r)\), we denote by \({{\mathcal {B}}}_{\delta }^+\) the convex envelope between \(B_{1-\delta }(\sqrt{2},0)\) and the origin, and by \({{\mathcal {B}}}^{-}_\delta \) the convex envelope between \(B_{1-\delta }(-\sqrt{2},0)\) and the origin. We let

Then we can estimate the K-curvature of \({{\mathcal {G}}}_{\delta ,r}\) as follows:

Lemma 7.1

Assume that (1.2), (1.3) and (1.24) hold true in \({\mathbb {R}}^2\). Then, there exists \(c_\sharp \in (0,1)\) such that the following statement holds true. If \(r\in (0,c_\sharp )\) and \(\delta \in (0,c_\sharp ^4 r)\), then

for any \(p\in \partial { {{\mathcal {G}}}_{\delta ,r} }\). In addition, for any \(p\in (\partial { {{\mathcal {G}}}_{\delta ,r} })\cap ([-2r,2r]\times [-r,r])\),

Proof

Let \(\alpha (\delta ) \) be the angle at \(x=0\) in \({\mathcal {B}}_\delta ^+\). Observe that when \(\delta =0\), this angle is \(\pi /2\) and moreover there exist \(\delta _0\) and \(C_0>0\) such that \(|\alpha (\delta )-\frac{\pi }{2}| \leqslant C_0\delta \), for all \(0<\delta <\delta _0\). In particular, we may assume that \(\alpha (\delta )\geqslant \pi /3\). We then fix \(\delta \leqslant r<\delta _0\).
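For the reader's convenience, the bound on \(\alpha (\delta )\) can be made explicit by elementary trigonometry. The computation below is a sketch, under the reading that \(\alpha (\delta )\) is the full opening angle at the origin of the cone spanned by the two tangent lines from the origin to \(\partial B_{1-\delta }(\sqrt{2},0)\); the explicit constants are not claimed to be the ones used by the authors.

$$\begin{aligned} \alpha (\delta )&=2\arcsin \left( \frac{1-\delta }{\sqrt{2}}\right) ,\qquad \alpha (0)=2\arcsin \left( \frac{1}{\sqrt{2}}\right) =\frac{\pi }{2},\\ \alpha '(\delta )&=-\frac{2}{\sqrt{2-(1-\delta )^2}}\in \left[ -2,-\sqrt{2}\,\right] \quad \text{for } \delta \in [0,1],\\ \left| \alpha (\delta )-\frac{\pi }{2}\right|&\leqslant 2\delta ,\qquad \alpha (\delta )\geqslant \frac{\pi }{3}\ \Longleftrightarrow \ \delta \leqslant 1-\frac{1}{\sqrt{2}}. \end{aligned}$$

With this reading, one admissible choice is \(C_0=2\) and any \(\delta _0\leqslant 1-\frac{1}{\sqrt{2}}\).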

First of all note that for all \(p=(p_1,p_2)\in \partial { {{\mathcal {G}}}_{\delta ,r} }\), with \(p_1\geqslant \sqrt{2}-\frac{(1-\delta )^2}{\sqrt{2}}\) (resp. \(p_1\leqslant -\sqrt{2}+\frac{(1-\delta )^2}{\sqrt{2}}\)), then \(p\in \partial B_{1-\delta }(\sqrt{2},0)\) (resp. \(p\in \partial B_{1-\delta }(-\sqrt{2},0)\)), and then

where \(c(1)= H^s_{B_1}\).

We take \(c_\sharp \in (0,1)\), to be chosen conveniently small in what follows. We notice that \({{\mathcal {S}}}:=(\partial {{\mathcal {G}}}_{\delta ,r})\cap \{|x_2|=r\}\) consists of four points. We take \(p=(p_1,p_2)\in \partial { {{\mathcal {G}}}_{\delta ,r} }\) such that there exists \(q\in {{\mathcal {S}}}\) with \(|p-q|<c_\sharp r\) (see e.g. Fig. 13 for a possible configuration).

Then,

while

As a consequence,

as long as \(c_\sharp \) is sufficiently small, which implies (7.3) (and also (7.2)) in this case.

Now consider \(p \in \partial { {{\mathcal {G}}}_{\delta ,r} }\) such that \(p_2\ne r\) and \(d(p,{\mathcal {S}})\geqslant c_\sharp r\). If \(p\in \partial B_{1-\delta }(\pm \sqrt{2},0)\), we are done; otherwise, note that we can define a set \({ { {{\mathcal {G}}} } }'\) with \(C^{1,1}\)-boundary (uniformly in \(\delta \) and r) such that \({ { {{\mathcal {G}}}_{\delta ,r} } }\subset { { {{\mathcal {G}}} } }'\) and \({ { {{\mathcal {G}}} } }'{\setminus } B_{1/8}= { { {{\mathcal {G}}}_{\delta ,r} } }{\setminus } B_{1/8}\). Then, we obtain that

for some \(C'\), \(C''>0\), depending only on the local \(C^{1,1}\)-norms of the boundary of \({ { {{\mathcal {G}}} } }'\), and this gives (7.2) in this case.

Finally, note that \( {{\mathcal {G}}}_{\delta ,r}\subseteq {\mathcal {C}}_r\), where \({\mathcal {C}}_r\) is the perturbed cross defined in (3.1). So, if \(p\in \partial { {{\mathcal {G}}}_{\delta ,r} } \cap ([-r,r]\times [-r,r])\), then \(p\in \partial {\mathcal {C}}_r\). Moreover, by Lemma 3.2 and the definition of \(\Psi \) in (1.12),

where \(C>0\) is a universal constant. In this case, we notice that \({ {{\mathcal {G}}}_{\delta ,r} }\) and \({{\mathcal {C}}}_r\) coincide in \(B_r\), and, outside such a neighborhood of the origin, they differ by four portions of cones (passing in the vicinity of \({{\mathcal {S}}}\)) with opening bounded by \(C_0\delta \). That is, if we set

we have that

thanks to our assumption on \(\delta \). Consequently

and so, making use of (3.3) and (1.30),

for a suitable \(c_*>0\), as long as \(c_\sharp >0\) is sufficiently small. This establishes (7.3) (and also (7.2)) in this case. \(\square \)

With these auxiliary computations, we can now complete the proof of Theorem 1.10, by arguing as follows.

Proof of Theorem 1.10

Let \(c_\sharp >0\) be as in Lemma 7.1, \(0<\varepsilon <c_\sharp /2\) and \(c_\star :=((c_\sharp -\varepsilon )\,(1+s))^{1/(1+s)}\). We define r(t) such that \(\dot{r}(t)= (c_\sharp -\varepsilon ) r(t)^{-s}\), with \(r(0)=0\). So, we have that \(r(t)=c_\star t^{1/(1+s)}\). Let also

We now estimate the outer normal velocity of \({ { { {{\mathcal {G}}}_{\delta (t),r(t)} } } }\) via Lemma 7.1. First of all, from (7.3) at \(p\in (\partial { { { {{\mathcal {G}}}_{\delta (t),r(t)} } } })\cap \{|x_2|=r(t), |x_1|<\sqrt{2}\}\) we get

Moreover, the shrinking velocity at \(x\in (\partial { { { {{\mathcal {G}}}_{\delta (t),r(t)} } } }) {\setminus }\{|x_2|=r(t)\}\) is at least \(r(t){{\dot{\delta }}}(t)=1/(c_\sharp -\varepsilon )\). This implies that at every \(x\in (\partial { { { {{\mathcal {G}}}_{\delta (t),r(t)} } } }) {\setminus }\{|x_2|=r(t)\}\) we get

by (7.2). Therefore, by Proposition A.10, we get that

Conversely, since \({{\mathcal {G}}}\) is contained in the cross \({{\mathcal {C}}}\), it follows from Corollary A.8 and Theorem 1.4 that

From this and (7.5) it follows that

with \({\hat{c}}:=\min \{ c_\star ,\,c_o\}\), which proves (1.28). \(\square \)
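As a quick consistency check of the power law for r(t) used in the proof above, one can integrate the defining equation by separation of variables. The computation below is only a sketch, and it selects the positive solution emanating from \(r(0)=0\):

$$\begin{aligned} r(t)^{s}\,\dot{r}(t)=c_\sharp -\varepsilon \quad&\Longrightarrow \quad \frac{d}{dt}\left( \frac{r(t)^{1+s}}{1+s}\right) =c_\sharp -\varepsilon \\&\Longrightarrow \quad r(t)^{1+s}=(c_\sharp -\varepsilon )\,(1+s)\,t\\&\Longrightarrow \quad r(t)=\big ((c_\sharp -\varepsilon )\,(1+s)\big )^{1/(1+s)}\,t^{1/(1+s)}=c_\star \,t^{1/(1+s)}. \end{aligned}$$

This agrees with the definition of \(c_\star \) given at the beginning of the proof.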

8 Perturbation of tangent balls and proof of Theorem 1.12

In this section too, the state space is \({\mathbb {R}}^2\). The idea to prove Theorem 1.12 is to construct inner barriers using “almost tangent” balls and take advantage of the scale invariance given by the homogeneous kernels in (1.24). For this, given \(\delta \in \left[ 0,\frac{1}{8}\right] \), we consider the set

Then, we have that the nonlocal curvature of \({{\mathcal {Z}}}_{\delta ,r}\) is always controlled from above by that of the ball, and it becomes negative in the vicinity of the origin. More precisely:

Lemma 8.1

Assume (1.24) with \(n=2\). Then, for any \(p\in \partial {{\mathcal {Z}}}_{\delta ,r}\) we have that

for some \(C>0\). In addition, there exists \(c\in (0,1)\) such that if \(\delta \in (0,c^2)\) and \( p\in (\partial {{\mathcal {Z}}}_{\delta ,r})\cap B_{cr}\) then

Proof

Notice that \(\partial {{\mathcal {Z}}}_{\delta ,r}\subseteq \big ( \partial B_r\big ( (1+\delta )r,0\big )\big )\cup \big (\partial B_r\big ( (-1-\delta )r,0\big ) \big )\). Moreover, \({{\mathcal {Z}}}_{\delta ,r}\supseteq B_r\big ( (1+\delta )r,0\big )\), as well as \({{\mathcal {Z}}}_{\delta ,r}\supseteq B_r\big ( (-1-\delta )r,0\big )\), hence, in view of (1.4), the nonlocal curvature of \({{\mathcal {Z}}}_{\delta ,r}\) is less than or equal to that of \(B_r\), which proves (8.1).

Now we prove (8.2). For this, up to scaling, we assume that \(r:=1\) and we take \(p\in (\partial {{\mathcal {Z}}}_{\delta ,1})\cap B_{c}\). Without loss of generality, we also suppose that \(p_1\), \(p_2>0\) and we observe that

as long as c is small enough. Indeed if \(x\in B_c(2c,0)\) then we can write \(x=-2ce_1+ce\), for some \(e\in {\mathbb {S}}^1\), and so

This proves (8.3).

Hence, from (1.4) and (8.3), the nonlocal curvature of \({{\mathcal {Z}}}_{\delta ,1}\) at p is less than or equal to the nonlocal curvature of \(B_r\big ( (1+\delta ),0\big )\), which is bounded by some \(C>0\), minus the contribution coming from \(B_c(-2c,0)\). That is,

Also, if \(y\in B_c(2ce_1+p_1, p_2)\), we have that \(|y|\leqslant |y-2ce_1-p|+|2ce_1+p|\leqslant c+2c+|p|\leqslant 4c\), and so

for some \(c_0>0\). So we insert this information into (8.4) and we obtain

as long as c is sufficiently small. This completes the proof of (8.2), as desired. \(\square \)

From Lemma 8.1, we can control the geometric flow of the double tangent balls from inside with barriers that shrink the sides of the picture and make the origin emanate some mass:

Lemma 8.2

There exist \(\delta _0\in (0,1)\), and \({{\bar{C}}}>0\) such that if \(\delta \in (0,\delta _0)\), then

Proof

Fix \(\varepsilon \in (0,1)\), to be taken arbitrarily small in what follows. Let \(\mu \in [0,\sqrt{\varepsilon }]\) and let, for any \(t\in [0,(1-\varepsilon )/C_0)\),

with \(C_0>0\) to be chosen conveniently large. We consider an inner barrier consisting of two balls of radius r(t) which, for any \(t\in [0,(1-\varepsilon )/C_0)\), remain at distance \(2\varepsilon (t)\). Namely, we set

Notice that

We also observe that the vectorial velocity of this set is the superposition of a normal velocity \(-\dot{r} \nu \), being \(\nu \) the interior normal, and a translation velocity \(\pm (\dot{r}+{{\dot{\varepsilon }}}_\mu )e_1\), with the plus sign for the ball on the right and the minus sign for the ball on the left. The normal velocity of this set is therefore equal to

Now, given a point p on \(\partial {{\mathcal {F}}}_{\varepsilon ,\mu }(t)\), we distinguish two cases. Either \(p\in B_c\), where c is the one given in Lemma 8.1, or \(p\in {\mathbb {R}}^2{\setminus } B_c\). In the first case, we have that

This and (8.7) give that the normal velocity of \({{\mathcal {F}}}_{\varepsilon ,\mu }(t)\) at p is larger than \(-c\), and therefore greater than \(H^K_{ {{\mathcal {Z}}}_{\delta ,r} }(p)\), thanks to (8.2).

If instead \(p\in {\mathbb {R}}^2{\setminus } B_c\), we have that \(|\nu _1(p)|\leqslant 1-c_0\), for a suitable \(c_0\in (0,1)\), depending on c, and therefore

as long as \(C_0\) is sufficiently large. This and (8.7) give that the inner normal velocity of \({{\mathcal {F}}}_{\varepsilon ,\mu }(t)\) at p is strictly larger than \(\frac{C_0\,c_0}{2^{s+1} \,(r(t))^s}\), which, if \(C_0\) is chosen conveniently big, is in turn strictly larger than \(H^K_{ {{\mathcal {Z}}}_{\delta ,r} }(p)\), thanks to (8.1).

In any case, we have shown that the inner normal velocity of \({{\mathcal {F}}}_{\varepsilon ,\mu }(t)\) at p is strictly larger than \(H^K_{ {{\mathcal {Z}}}_{\delta ,r} }(p)\). This implies that \({{\mathcal {F}}}_{\varepsilon ,\mu }(t)\) is a strict subsolution according to Proposition A.10.

Then, by (8.6) and Proposition A.10

for any \(t\in [0,(1-\varepsilon )/C_0)\).

Now, taking \(\mu :=\sqrt{\varepsilon }\) in (8.5), we see that

for all \(t\in [0,\,(1-\varepsilon )/C_0]\). In particular, taking \(t:=\sqrt{\varepsilon }\),

and the latter are two tangent balls at the origin. From this and (8.8), we deduce that

and this implies the desired result by choosing \(\delta :=\sqrt{\varepsilon }\) and \({{\bar{C}}}:= 2(C_0+1)\). \(\square \)

We can now complete the proof of Theorem 1.12 in the following way:

Proof of Theorem 1.12

We observe that, in the setting of Lemma A.13, the result in Lemma 8.2 can be written as

for all \(\delta \in (0,\delta _0)\).

Fix now \(C\geqslant {{\bar{C}}}\) and let \({{\mathcal {U}}}:=(1-C\delta ){{\mathcal {O}}}^+(0)\). Then, by Corollary A.8, we have

for all \(t\geqslant 0\).

Now, in view of Lemma A.13,

and so, combining with (8.9),

Consequently, for any \(t\geqslant \delta \), we can estimate the measure of the fattening set as

We now fix \(t_0\geqslant \delta \) and choose \(C=C(t_0)\geqslant {{\bar{C}}}\) such that

So, by Proposition A.12, we get that

This and (8.10) yield that, for \(t\geqslant t_0\),

Since \(t_0\) was chosen arbitrarily, this completes the proof of Theorem 1.12. \(\square \)

Notes

The pictures of this paper have just a qualitative and exemplifying purpose, to favor the intuition and make the reading simpler. They are sketchy, not quantitatively accurate and they are not the outcome of any rigorous simulation.

As customary, a point of a piecewise \(C^{1,1}\) curve is called “angular” if the tangent directions from different sides are different.

References

Barles, G., Soner, H.M., Souganidis, P.E.: Front propagation and phase field theory. SIAM J. Control Optim. 31(2), 439–469 (1993). https://doi.org/10.1137/0331021

Bellettini, G., Caselles, V., Chambolle, A., Novaga, M.: Crystalline mean curvature flow of convex sets. Arch. Ration. Mech. Anal. 179(1), 109–152 (2006). https://doi.org/10.1007/s00205-005-0387-0

Bellettini, G., Paolini, M.: Two examples of fattening for the curvature flow with a driving force. Atti Accad. Naz. Lincei Cl. Sci. Fis. Mat. Natur. Rend. Lincei (9) Mat. Appl. 5(3), 229–236 (1994)

Biton, S., Cardaliaguet, P., Ley, O.: Nonfattening condition for the generalized evolution by mean curvature and applications. Interfaces Free Bound. 10(1), 1–14 (2008). https://doi.org/10.4171/IFB/177

Bucur, C., Valdinoci, E.: Nonlocal Diffusion and Applications. Lecture Notes of the Unione Matematica Italiana, vol. 20. Springer, Bologna (2016)

Caffarelli, L., Roquejoffre, J.-M., Savin, O.: Nonlocal minimal surfaces. Commun. Pure Appl. Math. 63(9), 1111–1144 (2010). https://doi.org/10.1002/cpa.20331

Cesaroni, A., Novaga, M.: The isoperimetric problem for nonlocal perimeters. Discrete Contin. Dyn. Syst. Ser. S 11(3), 425–440 (2018). https://doi.org/10.3934/dcdss.2018023

Chambolle, A., Morini, M., Ponsiglione, M.: Nonlocal curvature flows. Arch. Ration. Mech. Anal. 218(3), 1263–1329 (2015). https://doi.org/10.1007/s00205-015-0880-z

Chambolle, A., Novaga, M., Ruffini, B.: Some results on anisotropic fractional mean curvature flows. Interfaces Free Bound. 19(3), 393–415 (2017). https://doi.org/10.4171/IFB/387

Chen, Y.G., Giga, Y., Goto, S.: Uniqueness and existence of viscosity solutions of generalized mean curvature flow equations. J. Differ. Geom. 33(3), 749–786 (1991)

Cinti, E., Serra, J., Valdinoci, E.: Quantitative flatness results and BV-estimates for stable nonlocal minimal surfaces. J. Differ. Geom. (2018)

Cinti, E., Sinestrari, C., Valdinoci, E.: Neckpinch singularities in fractional mean curvature flows. Proc. Am. Math. Soc. 146(6), 2637–2646 (2018). https://doi.org/10.1090/proc/14002

Evans, L.C., Spruck, J.: Motion of level sets by mean curvature. I. J. Differ. Geom. 33(3), 635–681 (1991)

Giga, Y.: Surface Evolution Equations: A Level Set Approach. Monographs in Mathematics, vol. 99. Birkhäuser, Basel (2006)

Ilmanen, T.: Generalized flow of sets by mean curvature on a manifold. Indiana Univ. Math. J. 41(3), 671–705 (1992). https://doi.org/10.1512/iumj.1992.41.41036

Imbert, C.: Level set approach for fractional mean curvature flows. Interfaces Free Bound. 11(1), 153–176 (2009). https://doi.org/10.4171/IFB/207

Maz’ya, V.: Lectures on Isoperimetric and Isocapacitary Inequalities in the Theory of Sobolev Spaces, Heat Kernels and Analysis on Manifolds, Graphs, and Metric Spaces (Paris, 2002), Contemp. Math., vol. 338, pp. 307–340. Amer. Math. Soc., Providence (2003). https://doi.org/10.1090/conm/338/06078

Sáez, M., Valdinoci, E.: On the evolution by fractional mean curvature. Commun. Anal. Geom. 27(1) (2019)

Savin, O., Valdinoci, E.: Regularity of nonlocal minimal cones in dimension 2. Calc. Var. Partial Differ. Equ. 48(1–2), 33–39 (2013). https://doi.org/10.1007/s00526-012-0539-7

Soner, H.M.: Motion of a set by the curvature of its boundary. J. Differ. Equ. 101(2), 313–372 (1993). https://doi.org/10.1006/jdeq.1993.1015

Acknowledgements

This work has been supported by the Australian Research Council Discovery Project DP170104880 “NEW – Nonlocal Equations at Work”, and by the University of Pisa Project PRA 2017 “Problemi di ottimizzazione e di evoluzione in ambito variazionale”. The authors are members of the INdAM-GNAMPA.

Additional information

Communicated by Y. Giga.

Appendix A: Viscosity solutions and geometric barriers

In this appendix, we recall the existence and uniqueness results about the level set flow associated to the nonlocal evolution (1.1), and we provide some auxiliary results which will be useful in the proof of the main theorems. All the results hold in \({\mathbb {R}}^n\) for \(n\geqslant 2\).

Before introducing the level set equation and the notion of viscosity solutions, we briefly discuss the evolution of balls according to the setting in (1.1)–(1.4).

Lemma A.1

Assume that (1.2) and (1.3) hold true. Then for every \(R>0\) there exists \(c(R)> 0\) such that

Moreover the function

is continuous, nonincreasing and such that

Furthermore, if K is a fractional kernel, that is \(K(x)=\frac{1}{|x|^{n+s}}\), then \(c(R)= c(1) R^{-s}\).

Proof

We observe that, by virtue of (1.2) and (1.4), and the fact that \(K(x)\not \equiv 0\), we have that \(H^K_{B_R}(x)>0\) for \(x\in \partial B_R\), that this quantity does not depend on x, and finally that \(H^K_{B_R}(x)\geqslant H^K_{B_{R'}}(x)\) if \(R'>R\). Condition (1.3) ensures that \(H^K_{B_R}(x)\) is finite for every \(R>0\) and also that

see [8, 16]. For the computation in the case of fractional kernels, see [18]. \(\square \)
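The scaling law \(c(R)=c(1)R^{-s}\) for fractional kernels can also be seen directly by a change of variables. The sketch below assumes that the curvature in (1.4) has the usual principal value form \(H^K_E(x)=\lim _{\varepsilon \searrow 0}\int _{{\mathbb {R}}^n{\setminus } B_\varepsilon (x)}\big (\chi _{{\mathbb {R}}^n{\setminus } E}(y)-\chi _E(y)\big )K(x-y)\,dy\); the argument only uses the homogeneity of K and does not depend on the sign or normalization convention. For \(x\in \partial B_1\), substituting \(y=Rz\),

$$\begin{aligned} H^K_{B_R}(Rx)&=\lim _{\varepsilon \searrow 0}\int _{{\mathbb {R}}^n{\setminus } B_\varepsilon (Rx)}\frac{\chi _{{\mathbb {R}}^n{\setminus } B_R}(y)-\chi _{B_R}(y)}{|Rx-y|^{n+s}}\,dy\\&=R^{n}\,R^{-n-s}\lim _{\varepsilon \searrow 0}\int _{{\mathbb {R}}^n{\setminus } B_{\varepsilon /R}(x)}\frac{\chi _{{\mathbb {R}}^n{\setminus } B_1}(z)-\chi _{B_1}(z)}{|x-z|^{n+s}}\,dz\\&=R^{-s}\,H^K_{B_1}(x), \end{aligned}$$

that is, \(c(R)=c(1)R^{-s}\).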

Remark A.2

Using Lemma A.1, we study the evolution of a ball \(B_R\) according to the flow in (1.1). Such evolution is given by a ball \(B_{R(t)}\), where

with initial datum \(R(0)=R\). We define

Then C(R) is a monotone increasing function, and the solution R(t) to (A.1) is given implicitly by the formula

Let also

By (A.1) and the monotonicity of \(c(\cdot )\), it is easy to check that

Moreover, from (A.1) we have that

If K is a fractional kernel, that is \(K(x)=\frac{1}{|x|^{n+s}}\), then \(C(R)=\frac{1}{c(1)(s+1)}(R^{s+1}-1)\) and \(T_R=\frac{R^{s+1}}{c(1)(s+1)}\).
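The fractional case lends itself to a simple numerical sanity check. The sketch below assumes that (A.1) is the radial ODE \(\dot{R}(t)=-c(R(t))\), which for \(c(R)=c(1)R^{-s}\) integrates to \(R(t)^{s+1}=R^{s+1}-c(1)(s+1)t\), and it compares this closed form with a Runge-Kutta integration. The function names and the value used for \(c(1)\) are hypothetical: the true constant depends on n, s and the normalization of the kernel.

```python
def ball_radius(t, R0, s, c1):
    """Closed-form radius, assuming dR/dt = -c1 * R**(-s) and R(0) = R0."""
    value = R0 ** (s + 1) - c1 * (s + 1) * t
    return max(value, 0.0) ** (1.0 / (s + 1))


def extinction_time(R0, s, c1):
    """Time at which the closed-form radius reaches zero."""
    return R0 ** (s + 1) / (c1 * (s + 1))


def integrate_radius(t_end, R0, s, c1, steps=2000):
    """Classical fourth-order Runge-Kutta integration of dR/dt = -c1 * R**(-s).

    Only meaningful for t_end strictly before the extinction time.
    """
    def f(R):
        return -c1 * R ** (-s)

    h = t_end / steps
    R = R0
    for _ in range(steps):
        k1 = f(R)
        k2 = f(R + 0.5 * h * k1)
        k3 = f(R + 0.5 * h * k2)
        k4 = f(R + h * k3)
        R += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return R


if __name__ == "__main__":
    R0, s, c1 = 1.0, 0.5, 1.0  # c1 is a placeholder value for c(1)
    print("extinction time:", extinction_time(R0, s, c1))
    print("closed form at t=0.3:", ball_radius(0.3, R0, s, c1))
    print("RK4 at t=0.3:", integrate_radius(0.3, R0, s, c1))
```

The extinction time returned by `extinction_time` is the expression for \(T_R\) stated above, evaluated with the placeholder constant.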

We introduce now the notion of viscosity solutions for the level set equation

For more details, we refer to [8, 16]. The viscosity theory for the classical mean curvature flow is contained in [10] and [13], see also [14] for a comprehensive level set approach for classical geometric flows.

Definition A.3

(Viscosity solutions)

(i) An upper semicontinuous function \(u:{\mathbb {R}}^n\times (0, T)\rightarrow {\mathbb {R}}\) is a viscosity subsolution of (A.3) if, for every smooth test function \(\phi \) such that \(u-\phi \) admits a global maximum at (x, t), we have that either \(\partial _t \phi (x,t)\leqslant 0\) if \(D\phi (x,t)=0\), or

$$\begin{aligned} \partial _t \phi (x,t)+|D\phi (x,t)| H^K_{\{y| \phi (y,t)\geqslant \phi (x,t)\}}(x)\leqslant 0 \end{aligned}$$

if \(D\phi (x,t)\ne 0\).

(ii) A lower semicontinuous function \(u:{\mathbb {R}}^n\times (0, T)\rightarrow {\mathbb {R}}\) is a viscosity supersolution of (A.3) if, for every smooth test function \(\phi \) such that \(u-\phi \) admits a global minimum at (x, t), we have that either \(\partial _t \phi (x,t)\geqslant 0\) if \(D\phi (x,t)=0\), or

$$\begin{aligned} \partial _t \phi (x,t)+|D\phi (x,t)| H^K_{\{y| \phi (y,t)> \phi (x,t)\}}(x)\geqslant 0 \end{aligned}$$

if \(D\phi (x,t)\ne 0\).

(iii) A continuous function \(u:{\mathbb {R}}^n\times (0, T)\rightarrow {\mathbb {R}}\) is a solution to (A.3) if it is both a subsolution and a supersolution.

Remark A.4

It is easy to verify that any smooth subsolution (respectively supersolution) is in particular a viscosity subsolution (respectively supersolution).

Now, we recall the Comparison Principle and the existence and uniqueness results for viscosity solutions to (A.3).

Theorem A.5

Suppose that \(u_0\) is a bounded and uniformly continuous function. Let u (respectively v) be a bounded viscosity subsolution (respectively supersolution) of (A.3). If \(u(x,0)\leqslant u_0(x) \leqslant v(x,0)\) for any \(x\in {\mathbb {R}}^n\), then \(u\leqslant v\) on \({\mathbb {R}}^n\times [0, +\infty )\).

In particular, there exists a unique continuous viscosity solution u to (A.3) such that \(u(x,0)=u_0(x)\) for any \(x\in {\mathbb {R}}^n\).

Moreover if \(u_0\) is Lipschitz continuous then \(u(\cdot , t)\) is Lipschitz continuous, uniformly with respect to t, and

for all \(x,y\in {\mathbb {R}}^n\) and \(t>0\).

Proof

For the proof of the existence and uniqueness result, and for the Comparison Principle, we refer to [16, Theorems 2 and 3], see also [8].

Finally the Lipschitz continuity is a consequence of the Comparison Principle. Indeed, for any \(h\in {\mathbb {R}}^n\), we define

Then, if u is a viscosity solution to (A.3), we have that also \(v_+\) and \(v_-\) are viscosity solutions to the same equation. Moreover,

which implies the desired Lipschitz bound. \(\square \)

Remark A.6

Let \(E\subset {\mathbb {R}}^n\) be a closed set and let \(u_E(x)\) be a bounded Lipschitz continuous function such that

Let \(u_E(x,t)\) be the unique viscosity solution to (A.3) with initial datum \(u_E(x)\) and define

The level set flow is defined as

Due to the fact that the operator in (A.3) is geometric, which means that if u is a subsolution (resp. a supersolution) then also f(u) is a subsolution (resp. a supersolution) for all monotone increasing functions f, the following result holds: if \(v_0\) is a Lipschitz continuous function which satisfies (A.4) and v is the viscosity solution to (A.3) with initial datum \(v_0\), then \(E^+(t)=\{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)\geqslant 0\}\) and \(E^-(t)=\{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)> 0\}\).

In particular, the inner flow, the outer flow and the level set flow do not depend on the choice of the initial datum \(u_E\) but only on the set E.

Remark A.7

In the setting of Remark A.2, one can show that \(u(x,t)=R(t)-|x|\), for \(t\in [0, T_R)\), is a viscosity solution to (A.3). Therefore, in this case we have that \(E= B_R\) and \(E^+(t)=\overline{E^-(t)}=B_{R(t)}\).

An important consequence of the Comparison Principle stated in Theorem A.5 is the following result (in which we also use the notation for the distance function introduced in (1.31) and (1.32)).

Corollary A.8

(i) Let \(F\subset E\) be two closed sets in \({\mathbb {R}}^n\) such that \(d(F,E)=\delta >0\). Then \(F^{+}(t)\subset E^{-}(t)\) for all \(t>0\), and the map \(t\rightarrow d(F^{+}(t), E^{-}(t))\) is nondecreasing.

(ii) Let \(v:{\mathbb {R}}^n\times [0, T)\rightarrow {\mathbb {R}}\) be a bounded uniformly continuous viscosity supersolution to (A.3), and assume that

$$\begin{aligned} F\subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,0)\geqslant 0\}. \end{aligned}$$

Then

$$\begin{aligned} F^+(t)\subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)\geqslant 0\}, \end{aligned}$$

for all \(t\in (0, T)\). Moreover, if

$$\begin{aligned} d(F, \;\{x\in {\mathbb {R}}^n\text { s.t. } v(x,0)> 0\})=\delta >0, \end{aligned}$$

then

$$\begin{aligned} F^+(t)\subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)>0\}, \end{aligned}$$

for all \(t\in (0, T)\), and

$$\begin{aligned} d\Big (F^{+}(t), \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)>0\}\Big )\geqslant \delta . \end{aligned}$$

(iii) Let \(w:{\mathbb {R}}^n\times [0, T)\rightarrow {\mathbb {R}}\) be a bounded uniformly continuous viscosity subsolution to (A.3), and assume that

$$\begin{aligned} E\supseteq \{x\in {\mathbb {R}}^n\text { s.t. } w(x,0)\geqslant 0\}. \end{aligned}$$

Then

$$\begin{aligned} E^+(t)\supseteq \{x\in {\mathbb {R}}^n\text { s.t. } w(x,t)\geqslant 0\}, \end{aligned}$$

for all \(t\in (0, T)\). Moreover, if

$$\begin{aligned} d(E,\{x\in {\mathbb {R}}^n\text { s.t. } w(x,0)\geqslant 0\})=\delta >0, \end{aligned}$$

then

$$\begin{aligned} E^{-}(t)\supseteq \{x\in {\mathbb {R}}^n\text { s.t. } w(x,t)\geqslant 0\}, \end{aligned}$$

for all \(t \in (0,T)\), and

$$\begin{aligned} d\Big (E^{-}(t), \;\{x\in {\mathbb {R}}^n\text { s.t. } w(x,t)\geqslant 0\}\Big )\geqslant \delta . \end{aligned}$$

Proof

First, we prove (i). Since \(F\subseteq E\) and \(d(E, F)=\delta \), it is easy to check that \(d_E(x)\geqslant d_F(x)+\delta \).

Let now \(C>2 \delta \) and define

So, again we obtain that \(u_F(x)+\delta \leqslant u_E(x)\). Therefore, by the Comparison Principle in Theorem A.5, we get that \(u_E(x,t)\geqslant u_F(x,t)+\delta \) for every \(t>0\).

This in turn implies that \(F^+(t)\subset E^{-}(t)\) and moreover that \(d(F^+(t), E^-(t))\geqslant \delta \), due to the fact that \(u_E(x,t)\) and \(u_F(x,t)\) are 1-Lipschitz in x, by Theorem A.5.

If we repeat the same argument with initial data \(E^{-}(t)\) and \(F^{+}(t)\), we obtain the desired statement in (i).

We prove now (ii). For this, we distinguish two cases: if

we let

Then, by item (i), we get that \(F^+(t)\subseteq E^{-}(t)\) and \(d(F^{+}(t), E^{-}(t))\geqslant \delta \). Let \(u_E\) be the unique viscosity solution to (A.3) with \(u_E(x,0)=v(x,0)\). Then, by the Comparison Principle in Theorem A.5, we get that \(u_{E}(x,t)\leqslant v(x,t)\) for all \(t\in (0, T)\). In turn, this implies that \(E^{-}(t)\subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)>0\}\), and this allows us to conclude that (ii) holds true, under the assumption in (A.5).

If, on the other hand, we have that (A.5) does not hold, we write

Then, by the uniform continuity of \(v(\cdot ,0)\), we have that for every \(\varepsilon >0\) there exists \(\delta _\varepsilon >0\) such that

So we repeat the argument above (based on (A.5)) substituting v(x, t) with the function \(v(x,t)+\varepsilon \) and E with \(\overline{ \{x\in {\mathbb {R}}^n\text { s.t. } v(x,0)>-\varepsilon \}}\). This gives that \(F^+(t)\subseteq E^{-}(t)\), and \(E^{-}(t) \subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)>-\varepsilon \}\) for all \(\varepsilon >0\). Therefore \(F^+(t)\subseteq \{x\in {\mathbb {R}}^n\text { s.t. } v(x,t)\geqslant 0\}\).

This completes the proof of (ii). The proof of (iii) is completely analogous, and we omit it. \(\square \)

Remark A.9

Observe that if E is a compact set and in particular \(E\subseteq B_R\) for some \(R>0\), then by Remark A.7 and Corollary A.8 we have that \(E^{+}(t)\subseteq B_{R(t)}\) where \(R(t)<R\) has been defined in Remark A.2. In particular, there exists \(T_E\leqslant T_R\) such that \(T_E=\sup \{t>0 \text { s.t. } \mathrm {int}\,E^+(t)\ne \varnothing \}\).

Now, we define the lower and upper semicontinuous envelopes of a family of sets \(C(t)\subseteq {\mathbb {R}}^n\) as follows:

We have that \(C_\star (t)\subseteq C(t)\subseteq C^\star (t)\). Moreover, for any sequence \((x_n, t_n)\rightarrow (x,t)\), if \(x_n\in \overline{C^\star (t_n)}\) then \(x\in \overline{C^\star (t)}\), whereas, if \(x_n\not \in \mathrm {int}\,(C_\star (t_n))\), then \(x\not \in \mathrm {int}\,(C_\star (t))\).

If \(C^\star (t)=C(t)=C_\star (t)\) for every t, we say that the family is continuous.

We also need a result to compare geometric sub and supersolutions to (1.1) with the level set flow, see [8].

Proposition A.10

Let \(C(t)\subseteq {\mathbb {R}}^n\), for \(t\in [0,T]\), be a continuous family of sets with compact Lipschitz boundaries, which are piecewise of class \(C^{1,1}\) outside a finite number of angular points.

Fix \(E\subset {\mathbb {R}}^2\) and \(u_E\) a bounded Lipschitz continuous function such that

Consider the inner and outer flows associated to E, according to (1.8).

(i) Assume that there exists \(\delta >0\) such that at every \( x\in \partial C(t)\) where \(\partial C(t)\) is \(C^{1,1}\) there holds

$$\begin{aligned} \partial _t x\cdot \nu (x)\geqslant -H^K_{C(t)}(x)+\delta . \end{aligned}$$ (A.6)

Moreover, assume that

$$\begin{aligned} \begin{aligned}&\text{at every angular point } x\in \partial C(t) \text{ there exists } r_0>0 \text{ such that}\\&\text{the set } B(x,r)\cap C(t) \text{ is convex for all } r<r_0. \end{aligned} \end{aligned}$$ (A.7)

Then, if \(E \subseteq C(0)\), with \(d(E, C(0))=k\geqslant 0\), it holds that \(E^+(t)\subset C(t)\) for all \( t\in [0,T)\), with \(d(E^+(t), C(t))\geqslant k\geqslant 0\).

(ii) Assume that there exists \(\delta >0\) such that at every \(x\in \partial C(t)\) where \(\partial C(t)\) is \(C^{1,1}\) it holds

$$\begin{aligned} \partial _t x\cdot \nu (x)\leqslant -H^K_{C(t)}(x)-\delta . \end{aligned}$$ (A.8)

Moreover, assume that

$$\begin{aligned} \begin{aligned}&\text{at every angular point } x\in \partial C(t) \text{ there exists } r_0>0 \text{ such that}\\&\text{the set } B(x,r)\cap ({\mathbb {R}}^n{\setminus } C(t)) \text{ is convex for all } r<r_0. \end{aligned} \end{aligned}$$ (A.9)

Then, if \(E \supseteq C(0)\), it holds that \(E^+(t)\supseteq C(t)\) for all \( t\in [0, T)\).

Moreover, if \(d(C(0), \{x\in {\mathbb {R}}^n\text { s.t. }u_E(x)> 0\})=k>0\), it holds that \(E^-(t)\supset C(t)\) for all \( t\in [0,T)\), with \(d(E^-(t), C(t))\geqslant k\).

Proof

We give just a sketch of the proof of (i), since it relies on classical arguments in viscosity solution theory and level set methods (the proof of (ii) is analogous), see [8].

For \(\varepsilon >0\) sufficiently small, we define the function

We claim that for \(\varepsilon >0\) sufficiently small (depending on \(\delta \) in (A.6)) the function \(u_\varepsilon \) is a viscosity supersolution to (A.3). If the claim is true, then the statement in (i) is a direct consequence of the Comparison Principle in Corollary A.8.

To prove the claim, for every \(\lambda \in [0, \varepsilon ]\), we define

Note that \(u_\varepsilon =0\) on \(\overline{{\mathbb {R}}^n{\setminus } C(t)}\), \(u_\varepsilon =\lambda \) on \(\partial C_\lambda (t)\) and \(u_\varepsilon =\varepsilon \) on \(C_\varepsilon (t)\).
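For concreteness, one choice of \(C_\lambda (t)\) and \(u_\varepsilon \) compatible with the three properties just listed is sketched here. It is an illustrative assumption, modeled on the definition of \(C_\lambda (t)\) used in Corollary A.11, with \(d_{C(t)}\) understood as the signed distance to \(\partial C(t)\), taken positive inside \(C(t)\):

$$\begin{aligned} C_\lambda (t):=\{x\in C(t)\text { s.t. } d_{C(t)}(x)\geqslant \lambda \},\qquad u_\varepsilon (x,t):=\max \big \{0,\,\min \{d_{C(t)}(x),\,\varepsilon \}\big \}. \end{aligned}$$

Under this assumption, \(d_{C(t)}\leqslant 0\) on \(\overline{{\mathbb {R}}^n{\setminus } C(t)}\) gives \(u_\varepsilon =0\) there, \(d_{C(t)}=\lambda \) on \(\partial C_\lambda (t)\) gives \(u_\varepsilon =\lambda \) for \(\lambda \in [0,\varepsilon ]\), and \(d_{C(t)}\geqslant \varepsilon \) on \(C_\varepsilon (t)\) gives \(u_\varepsilon =\varepsilon \).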

Due to the regularity assumption on C(t), we have that for every \( \lambda \in [0, \varepsilon ]\), the sets \(C_\lambda (t)\) are Lipschitz continuous, piecewise \(C^{1,1}\) outside a finite number of angular points and satisfy the following property: at every angular point \(x\in \partial C_\lambda (t)\) there exists \(r_0>0\) such that the set \(B(x,r)\cap C_\lambda (t)\) is convex for all \(r<r_0\). Therefore assumption (A.7) is satisfied for every \(C_\lambda \), with \( \lambda \in [0, \varepsilon ]\).

Now we observe that, due to the regularity assumptions and to (A.7), for every \(x_\varepsilon \in \partial C_\varepsilon (t)\) there exists \(x_0\in \partial C (t)\) such that \(|x_0-x_\varepsilon |=\varepsilon \) (\(x_0\) is unique if \(\partial C_\varepsilon (t)\) is \(C^{1,1}\) at \(x_\varepsilon \), and it may fail to be unique if \(x_\varepsilon \) is an angular point). Moreover \(\partial C(t)\) is \(C^{1,1}\) around \(x_0\).

Assume first that \(x_\varepsilon \) is an angular point of \(\partial C_\varepsilon (t)\). We fix \(\zeta (\varepsilon , x_\varepsilon , t)=\zeta _\varepsilon >0\) such that \(\partial C_\varepsilon (t)\) is \(C^{1,1}\) at every \(x\in B(x_\varepsilon , \zeta _\varepsilon )\cap \partial C_\varepsilon (t)\), \(x\ne x_\varepsilon \), \(\partial C(t)\) is \(C^{1,1}\) at every \(x\in B(x_0, \zeta _\varepsilon )\cap \partial C (t)\) (so that the K-curvature is well defined) and moreover there holds

Since the angular points \(x_\varepsilon \) of \(\partial C_\varepsilon (t)\) are finitely many for every \(t\in [0,T]\), and the interval [0, T] is compact, we can choose \(\zeta _\varepsilon \) independent of \(x_\varepsilon \) and t. Now consider the case in which \(\partial C_\varepsilon (t)\cap B(x_\varepsilon , \zeta _\varepsilon )\) is \(C^{1,1}\). Then we use the continuity of the K-curvature as \(\varepsilon \rightarrow 0\) (see [8]) to see that there exists \(\eta _\varepsilon =\eta (\varepsilon , x_\varepsilon , \zeta _\varepsilon , t)>0\) such that

Finally, due to the compactness of

where \(x_i\) are the angular points of \(\partial C_\varepsilon (t)\), and due to compactness of the time interval [0, T], we observe that we may choose \(\eta _\varepsilon =\eta (\varepsilon , \zeta _\varepsilon )\) independent of \(x_\varepsilon \) and t. In conclusion we get that there exists \(\eta _\varepsilon >0\) depending on \(\varepsilon \) such that for all \(x_\varepsilon \in \partial C_\varepsilon (t)\) which are not angular points there holds

The same argument can be repeated for all \(\lambda \in (0, \varepsilon )\), and so for every \(\lambda \) there exists \(\eta _\lambda >0\) such that (A.10) holds. We define

Now we distinguish different cases according to the position of the point x, in order to prove that \(u_\varepsilon \) is a viscosity supersolution to (A.3).

If \(x\in \,\)int\(\,({\mathbb {R}}^n{\setminus } C(t))\), or \(x\in \) int\((C_\varepsilon (t))\), then actually the equation in (A.3) is trivially satisfied since \(|D u_\varepsilon (x,t)|=0\) and \(\partial _t u_\varepsilon (x,t)= 0\) by the continuity properties of the families C(t) and \(C_\varepsilon (t)\).

Now we suppose that \(x\in \partial C_\varepsilon (t)\). Then it is easy to show that the set of test functions is empty, so again the equation in (A.3) is trivially satisfied.

We finally assume that \(x \in \partial C_\lambda (t)\) for some \(\lambda \in [0,\varepsilon )\). Observe that at every angular point \(x \in \partial C_\lambda (t)\), by the assumption (A.7) (which holds also for \(C_\lambda (t)\) as proved above), the set of test functions is empty, so the equation in (A.3) is trivially satisfied. So assume that \(C_\lambda (t)\) is locally of class \(C^{1,1}\) around x. We fix \(x_0\in \partial C(t)\) such that \(|x-x_0|=\lambda \). If \(\nu (x)\) is the outer normal to \(\partial C_\lambda (t)\) at x, then \(\nu (x)=\frac{x_0-x}{|x-x_0|}\) and \(\nu (x)=\nu (x_0)\), so it coincides with the outer normal to \(\partial C(t)\) at \(x_0\), and \( \partial _t x_0\cdot \nu (x_0)= \partial _t x\cdot \nu (x)\). Moreover, due to (A.10) and the definition of \(\eta \) in (A.11), we get

Let \(\phi \) be a test function for \(u_\varepsilon \) at (x, t). Then \(D\phi (x,t)=-\rho \nu (x) \) for some \(\rho \in [0,1]\) if \(\lambda =0\), and \(D\phi (x,t)=-\nu (x)\) if \(\lambda >0\), whereas \(\phi _t(x,t)=\rho \partial _t x\cdot \nu (x)\) (with \(\rho =1\) when \(\lambda >0\)). Moreover

Therefore, computing the equation at (x, t), we get, using (A.12), (A.13) and (A.6),

So, if we choose \(\varepsilon >0\) sufficiently small, according to \(\delta \), so that \(\eta =\eta (\varepsilon )\leqslant \delta \), then the previous inequality gives that \(u_\varepsilon \) is a supersolution to (A.3), as we claimed. \(\square \)
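The last step of the proof can be sketched as follows, under assumptions that are stated here for illustration only: that (A.3) has the level set form \(\partial _t u+|Du|\,H^K_{\{u(\cdot ,t)\geqslant u(x,t)\}}(x)=0\), that the superlevel set of \(u_\varepsilon (\cdot ,t)\) through x is \(C_\lambda (t)\), and that the curvature estimate obtained from (A.10) and (A.11) reads \(H^K_{C_\lambda (t)}(x)\geqslant H^K_{C(t)}(x_0)-\eta \). The comparison between the level sets of \(\phi \) and those of \(u_\varepsilon \) is not reproduced in this sketch. Writing \(|D\phi (x,t)|=\rho \) and \(\phi _t(x,t)=\rho \,\partial _t x_0\cdot \nu (x_0)\), and using (A.6) at \(x_0\), one would obtain

$$\begin{aligned} \phi _t(x,t)+|D\phi (x,t)|\,H^K_{C_\lambda (t)}(x)&=\rho \,\big (\partial _t x_0\cdot \nu (x_0)+H^K_{C_\lambda (t)}(x)\big )\\&\geqslant \rho \,\big (-H^K_{C(t)}(x_0)+\delta +H^K_{C(t)}(x_0)-\eta \big )\\&=\rho \,(\delta -\eta )\geqslant 0, \end{aligned}$$

where the last inequality uses \(\rho \geqslant 0\) and \(\eta \leqslant \delta \). This is the supersolution inequality in the assumed form of (A.3).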

Now we present the following extension to the noncompact case of Proposition A.10.

Corollary A.11

Let \(C(t)\subseteq {\mathbb {R}}^n\) for \(t\in [0, T)\), be a continuous family of sets with Lipschitz boundaries, which are piecewise of class \(C^{1,1}\) outside a finite number of angular points, and such that there exists \(R>0\) such that \(C(t)\cap ({\mathbb {R}}^n{\setminus } B_R)\) is of class \(C^{1,1}\) for all t.

Fix \(E\subset {\mathbb {R}}^n\) and \(u_E\) a bounded Lipschitz continuous function such that

and consider the inner and outer flows associated to E, according to (1.8).

(i) Assume that there exists \(\delta >0\) such that (A.6) holds for every \(x\in \partial C(t)\cap B_{4R}\). Suppose also that (A.7) holds true.

Moreover, assume that there exists \(\lambda _0\) such that, for all \(\lambda \in [0, \lambda _0]\), it holds that

$$\begin{aligned} \partial _t x\cdot \nu (x)\geqslant -H^K_{C_\lambda (t)}(x) \end{aligned}$$ (A.14)

for all \(x\in \partial C_\lambda (t)\cap ({\mathbb {R}}^n{\setminus } B_{2R})\), where

$$\begin{aligned} C_\lambda (t):=\{x\in C(t)\text { s.t. } d_{C(t)}(x)\geqslant \lambda \}. \end{aligned}$$

Then, if \(E \subset C(0)\), with \(d(E, C(0))=k\geqslant 0\), it holds that \(E^+(t)\subset C(t)\) for all \( t>0\), with \(d(E^+(t), C(t))\geqslant k\geqslant 0\).

(ii) Assume that there exists \(\delta >0\) such that (A.8) holds for every \(x\in \partial C(t)\cap B_{4R}\). Suppose also that (A.9) holds true.

Moreover, assume that there exists \(\lambda _0\) such that, for all \(\lambda \in [0, \lambda _0]\), it holds that

$$\begin{aligned} \partial _t x\cdot \nu (x)\leqslant -H^K_{C^\lambda (t)}(x) \end{aligned}$$ (A.15)

for all \(x\in \partial C^\lambda (t)\cap ({\mathbb {R}}^n{\setminus } B_{2R})\), where

$$\begin{aligned} C^\lambda (t):=\{x\in {\mathbb {R}}^n\text { s.t. } d_{C(t)}(x)\geqslant - \lambda \}. \end{aligned}$$

Then, if \(E \supseteq C(0)\), it holds that \(E^+(t)\supseteq C(t)\) for all \( t>0\).

In addition, if \(d(C(0), \{x\in {\mathbb {R}}^n\text { s.t. }u_E(x)> 0\})=k>0\), it holds that \(E^-(t)\supset C(t)\) for all \( t>0\), with \(d(E^-(t), C(t))\geqslant k\).

The proof of Corollary A.11 is similar to that of Proposition A.10, and we omit the details.

We also have the following semicontinuity type result for the outer evolutions.

Proposition A.12

There holds

Proof

We claim that for any fixed \(t>0\) and a.e. in \({\mathbb {R}}^n\),

To show (A.17), it is enough to consider a point \(x\in {\mathrm{int}}(\{u_E\left( \cdot , t\right) \geqslant 0\})\), so that \(\{u_E\left( \cdot , t\right) \geqslant 0\}\supset B_r(x)\) for some \(r>0\). Then, recalling formula (A.2) in Remark A.2, we have that \(C(r(\eta ))=C(r)-\eta \), for \(\eta \in \left( 0, T_r\right) \), where \(T_r>0\) is the extinction time of the ball \(B_r\) under the flow (1.1).

Hence, by Remark A.7 and Corollary A.8 we get

In particular, it follows that

which implies (A.17).

Then, by (A.17) and the Fatou Lemma, for all \(t>0\) we obtain

establishing (A.16). \(\square \)

In the case of homogeneous kernels, i.e. under the assumption in (1.24), the geometric flow possesses a useful time scaling property as follows.

Lemma A.13

Assume that \(K(x)=\frac{1}{|x|^{n+s}}\) for some \(s\in (0,1)\). Let \(\lambda >0\), \(M>0\), \(E\subseteq {\mathbb {R}}^n\) and \(u_{E,\lambda }(x,t)\) be the viscosity solution to (A.3) with initial condition given by

Let also \(E_\lambda ^+(t):=\{x\in {\mathbb {R}}^n { \text{ s.t. } } u_{E,\lambda }(x,t)\geqslant 0\}\) and \(E_\lambda ^-(t):=\{x\in {\mathbb {R}}^n { \text{ s.t. } } u_{E,\lambda }(x,t)>0\}\). Then

Proof

For every \(x\in {\mathbb {R}}^n\) such that \(-M\leqslant d_E(x)\leqslant M\), we have that

Moreover, if \(d_E(x)\geqslant M\), then \(\lambda u_{E,1}(x)=\lambda M= u_{E,\lambda }(\lambda x)\), and analogously for \(d_E(x)\leqslant -M\). Therefore we get that \(\lambda u_{E,1}\left( \frac{x}{\lambda }\right) =u_{E,\lambda }(x)\). Moreover, by the scaling properties of K, we have that \(H^s_{\lambda E}(\lambda x)=\lambda ^{-s} H^s_{E}(x)\). Therefore the function \( \lambda u_{E,1}\left( \frac{x}{\lambda }, \frac{t}{\lambda ^{1+s}}\right) \) is a viscosity solution to (A.3), with initial datum \(u_{E,\lambda }(x)\). By the uniqueness of viscosity solutions, given in Theorem A.5, we get that \(\lambda u_{E,1}\left( \frac{x}{\lambda },\frac{t}{\lambda ^{1+s}}\right) =u_{E,\lambda }(x,t)\). From this we deduce the desired statement. \(\square \)
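The scaling of the fractional curvature used in this proof can be checked by a change of variables. The sketch below assumes the usual principal value definition \(H^s_E(x)=\mathrm {P.V.}\int _{{\mathbb {R}}^n}\frac{\chi _{{\mathbb {R}}^n{\setminus } E}(y)-\chi _E(y)}{|x-y|^{n+s}}\,dy\), which is not restated in this appendix; a multiplicative normalization constant would not affect the computation. Substituting \(z=\lambda y\), so that \(dz=\lambda ^n\,dy\), one finds

$$\begin{aligned} H^s_{\lambda E}(\lambda x)&=\mathrm {P.V.}\int _{{\mathbb {R}}^n}\frac{\chi _{{\mathbb {R}}^n{\setminus } \lambda E}(z)-\chi _{\lambda E}(z)}{|\lambda x-z|^{n+s}}\,dz\\&=\mathrm {P.V.}\int _{{\mathbb {R}}^n}\frac{\chi _{{\mathbb {R}}^n{\setminus } E}(y)-\chi _{E}(y)}{\lambda ^{n+s}\,|x-y|^{n+s}}\,\lambda ^n\,dy =\lambda ^{-s}\,H^s_E(x). \end{aligned}$$

Consequently, if \(w(x,t):=\lambda \,u\left( \frac{x}{\lambda },\frac{t}{\lambda ^{1+s}}\right) \), then \(\partial _t w=\lambda ^{-s}\,\partial _t u\) and \(|Dw|=|Du|\), while the superlevel sets of \(w(\cdot ,t)\) are the \(\lambda \)-dilations of those of \(u\left( \cdot ,\frac{t}{\lambda ^{1+s}}\right) \), so their curvature at x is \(\lambda ^{-s}\) times the curvature of the corresponding level set of u at \(\frac{x}{\lambda }\). Assuming that (A.3) has the form \(\partial _t u+|Du|\,H^s=0\), with \(H^s\) evaluated on the superlevel set through the point, every term then carries the same factor \(\lambda ^{-s}\), which is consistent with the time rescaling \(t\mapsto t/\lambda ^{1+s}\).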

Cesaroni, A., Dipierro, S., Novaga, M. et al. Fattening and nonfattening phenomena for planar nonlocal curvature flows. Math. Ann. 375, 687–736 (2019). https://doi.org/10.1007/s00208-018-1793-6

DOI: https://doi.org/10.1007/s00208-018-1793-6