Abstract

In this paper we consider stochastic optimization problems for an ambiguity averse decision maker who is uncertain about the parameters of the underlying process. In the first part we consider problems of optimal stopping under drift ambiguity for one-dimensional diffusion processes. Analogously to the case of ordinary optimal stopping problems for one-dimensional Brownian motions, we reduce the problem to the geometric problem of finding the smallest majorant of the reward function in a two-parameter function space. In the second part we solve optimal stopping problems when the underlying process may crash. These problems are reduced to one optimal stopping problem and one Dynkin game. Examples are discussed.


1 Introduction

In most articles dealing with stochastic optimization problems, one major assumption is that the decision maker has full knowledge of the parameters of the underlying stochastic process. This does not seem to be a realistic assumption in many real-world situations. Therefore, different multiple prior models have been studied in the economic literature in recent years. Here, we want to mention Duffie and Epstein (1992) and Epstein and Schneider (2003), and refer to Cheng and Riedel (2010) for an economic discussion and further references.

In this setting it is assumed that the decision maker deals with the uncertainty via a worst-case approach, that is, she optimizes her reward under the assumption that the “market” chooses the worst possible prior. This is a natural assumption, and we also want to pursue this approach.

A very important class of stochastic optimization problems is given by optimal stopping problems. These problems arise in many different fields, e.g., in pricing American-style options, in portfolio optimization, and in sequential statistics. Discrete time problems of optimal stopping in a multiple prior setting were first discussed in Riedel (2009) and analogous results to the classical ones were proved. In this setting a generalization of the classical best choice problem was treated in detail in Chudjakow and Riedel (2009). In continuous time the case of an underlying diffusion with uncertainty about the drift is of special interest. The general theory (including adjusted Hamilton-Jacobi-Bellman equations) is developed in Cheng and Riedel (2010). Some explicit examples are given there, but no systematic way for finding an analytical solution is described. In Alvarez (2007) the case of monotonic reward functions for one-dimensional diffusion processes is considered. The restriction to monotonic reward functions simplifies the problem since only two different worst-case measures can arise.

Another class of stochastic optimization problems under uncertainty was dealt with in a series of papers starting with Korn and Wilmott (2002): Portfolio optimization problems are considered under the assumption that the underlying asset price process may crash at a certain (unknown) time point. The decision maker is again considered to be ambiguity averse in the sense that she tries to choose the best possible stopping policy out of the worst possible realizations of the crash date. See Korn and Seifried (2009) for an overview of existing results.

The aim of this article is to treat optimal stopping problems under uncertainty for underlying one-dimensional diffusion processes. These kinds of problems are of special interest since they arise in many situations and often allow for an explicit solution.

The structure of this article is as follows: In Sect. 2 we first review some well-known facts about the solution of ordinary optimal stopping problems for an underlying Brownian motion. These problems can be solved graphically by characterizing the value function as the smallest concave majorant of the reward function. Then we treat the optimal stopping problem under ambiguity about the drift in a similar way: The result is that the value function can be characterized as the smallest majorant of the reward function in a two-parameter class of functions. The main tool is the use of generalized \(r\)-harmonic functions. The proof is inspired by the ideas first described in Beibel and Lerche (1997). It seems that other standard methods for dealing with optimal stopping problems for diffusions without drift ambiguity [such as Martin boundary theory as in Salminen (1985), generalized concavity methods as in Dayanik and Karatzas (2003), or linear programming arguments as in Helmes and Stockbridge (2010)] are not applicable with minor modifications due to the nonlinear structure coming from drift ambiguity. After giving an example and characterizing the worst-case measure, we generalize the results to general one-dimensional diffusion processes.

In Sect. 3 we introduce the optimal stopping problem under ambiguity about crashes of the underlying process in the spirit of Korn and Seifried (2009). In this situation the optimal strategy can be described by two simple strategies: one pre-crash and one post-crash strategy. These strategies can be found as solutions of a one-dimensional Dynkin game and an ordinary optimal stopping problem, which can both be solved using standard methods. We want to point out that this model is a natural situation where Dynkin games arise and the theory developed in recent years can be used fruitfully. As an explicit example we study the valuation of American call options in the model with crashes. Here, the post-crash strategy is the well-known threshold strategy in the standard Black-Scholes setting. The pre-crash strategy is of the same type, but the optimal threshold is lower.

2 Optimal stopping under drift ambiguity

2.1 Graphical solution of ordinary optimal stopping problems

Problems of optimal stopping in continuous time are well-studied and the general theory is well-developed. Nonetheless, the explicit solution to such problems is often hard to find and the class of explicit examples is very limited. Most of them are generalizations of the following situation, which allows for an easy geometric solution:

Let \((W_t)_{t\ge 0}\) be a standard Brownian motion on a compact interval \([a,b]\) with absorbing boundary points \(a\) and \(b\). We consider the problem of optimal stopping given by the value function

$$\begin{aligned} v(x)=\sup _\tau \mathbb{E }_x(g(W_\tau )),\quad x\in [a,b], \end{aligned}$$

where the reward function \(g:[a,b]\rightarrow [0,\infty )\) is continuous and the supremum is taken over all stopping times w.r.t. the natural filtration for \((W_t)_{t\ge 0}\). Here and in the following, \(\mathbb{E }_x\) denotes taking expectation for the process conditioned to start in \(x\). In this case it is well-known that the value function \(v\) can be characterized as the smallest concave majorant of \(g\), see Dynkin and Yushkevich (1969). This means that the problem of optimal stopping can be reduced to finding the smallest majorant of \(g\) in an easy class of functions. For finding the smallest concave majorant of a function \(g\) one only has to consider affine functions, i.e., for each fixed point \(x\in [a,b]\) the value of the smallest concave majorant is given by

$$\begin{aligned} \inf \{h_{c,d}(x):c,d\in \mathbb{R },\ h_{c,d}\ge g\}, \end{aligned}$$

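where \(h_{c,d}\) is an element of the two-parameter class of affine functions of the form \(h_{c,d}(y)=cy+d\). This problem can be solved geometrically, see Fig. 1. We want to remark that this problem is indeed a semi-infinite linear programming problem: for fixed \(x\),

$$\begin{aligned} \text{minimize}\quad cx+d\quad \text{over } (c,d)\in \mathbb{R }^2\quad \text{subject to}\quad cy+d\ge g(y)\ \text{ for all } y\in [a,b]. \end{aligned}$$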

This gives rise to an efficient method for solving these problems, which can be generalized in an appropriate way, see Helmes and Stockbridge (2010) for an analytical method and Christensen (2012) for a numerical point of view.
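The geometric characterization above can be tried out numerically. The following is a minimal sketch, not the method of the cited references: it discretizes \([a,b]\) and computes the smallest concave majorant of the sampled reward as the upper convex hull of the points \((x_i,g(x_i))\). The function name `smallest_concave_majorant` and the grid are illustrative assumptions.

```python
import numpy as np

def smallest_concave_majorant(xs, gs):
    """Upper concave envelope of the points (xs[i], gs[i]), evaluated on xs.

    xs must be strictly increasing. A monotone-chain scan keeps only the
    vertices of the upper convex hull; linear interpolation between them
    gives the smallest concave function dominating the sampled reward.
    """
    xs = np.asarray(xs, dtype=float)
    gs = np.asarray(gs, dtype=float)
    hull = []  # indices of the current hull vertices
    for i in range(len(xs)):
        while len(hull) >= 2:
            i0, i1 = hull[-2], hull[-1]
            # cross >= 0 means vertex i1 lies on or below the chord i0 -> i
            cross = (xs[i1] - xs[i0]) * (gs[i] - gs[i0]) \
                  - (gs[i1] - gs[i0]) * (xs[i] - xs[i0])
            if cross >= 0:
                hull.pop()
            else:
                break
        hull.append(i)
    return np.interp(xs, xs[hull], gs[hull])

if __name__ == "__main__":
    grid = np.linspace(0.0, 1.0, 101)
    reward = np.abs(grid - 0.5)          # a V-shaped reward on [0, 1]
    value = smallest_concave_majorant(grid, reward)
    print(value.min(), value.max())
```

On a grid, the points where the returned values coincide with the reward approximate the stopping set of the Brownian problem.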

The example described above is important both for theory and applications of optimal stopping since by studying it one can obtain an intuition for more complex situations such as finite time horizon problems and multidimensional driving processes, where numerical methods have to be used in most situations of interest.

The goal of this section is to handle optimal stopping problems with drift ambiguity for diffusion processes similarly to the ordinary case discussed above. This gives rise to an easy to handle geometric method for solving optimal stopping problems under drift ambiguity explicitly.

2.2 Special case: Brownian motion

In the following we use the notation of Cheng and Riedel (2010): Let \((X_t)_{t\ge 0}\) be a Brownian motion under the measure \(Q\), fix \(\kappa \ge 0\) and denote by \(\mathcal P ^\kappa \) the set of all probability measures that are equivalent to \(Q\) with density process of the form

$$\begin{aligned} \exp \left(\int \limits _0^t\theta _sdX_s-\frac{1}{2}\int \limits _0^t\theta _s^2ds\right) \end{aligned}$$

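for a progressively measurable process \((\theta _t)_{t\ge 0}\) with \(|\theta _t|\le \kappa \) for all \(t\ge 0\). We want to find the value function

$$\begin{aligned} v(x)=\sup _\tau \inf _{P\in \mathcal P ^\kappa }\mathbb{E }_x^P(e^{-r\tau }g(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}}) \end{aligned}$$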

for some fixed discounting rate \(r>0\) and a measurable reward function \(g:\mathbb{R }\rightarrow [0,\infty )\), where \(\mathbb{E }_x^P\) means taking expectation under the measure \(P\) when the process is started in \(x\). Instead of taking affine functions as in Sect. 2.1 we construct another class of appropriate functions based on the minimal \(r\)-harmonic functions (introduced below) for the Brownian motion with drift \(-\kappa \) resp. \(\kappa \) as follows:

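Denote the roots of the equation

$$\begin{aligned} \frac{1}{2}z^2-\kappa z-r=0 \end{aligned}$$

by \(\alpha _1<0<\alpha _2\) and the roots of

$$\begin{aligned} \frac{1}{2}z^2+\kappa z-r=0 \end{aligned}$$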

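by \(\beta _1<0<\beta _2\). Then \(e^{\alpha _ix},i=1,2,\) are the minimal \(r\)-harmonic functions for a Brownian motion with drift \(-\kappa \), and \(e^{\beta _ix},i=1,2,\) the corresponding functions for a Brownian motion with drift \(\kappa \). Note that \(\beta _1\le \alpha _1\le 0\le \beta _2\le \alpha _2\) and \(\beta _1=-\alpha _2\) and \(\beta _2=-\alpha _1\). For all \(c\in \mathbb{R }\) define the functions \(h_c:\mathbb{R }\rightarrow [0,\infty )\) via

$$\begin{aligned} h_c(x)={\left\{ \begin{array}{ll} \frac{\beta _2}{\beta _2-\beta _1}e^{\beta _1(x-c)}-\frac{\beta _1}{\beta _2-\beta _1}e^{\beta _2(x-c)},&x\le c,\\ \frac{\alpha _2}{\alpha _2-\alpha _1}e^{\alpha _1(x-c)}-\frac{\alpha _1}{\alpha _2-\alpha _1}e^{\alpha _2(x-c)},&x>c, \end{array}\right. } \end{aligned}$$

and

$$\begin{aligned} h_{-\infty }(x)=e^{\alpha _2x},\qquad h_{\infty }(x)=e^{\beta _1x}. \end{aligned}$$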

For \(c\in \mathbb{R }\), the function \(h_c\) is constructed by smoothly merging \(r\)-harmonic functions for the Brownian motion with drift \(\kappa \) (for \(x\le c\)) and \(-\kappa \) (for \(x> c\)) at their minimum in \(c\). By taking derivatives and taking into account that \(\beta _1=-\alpha _2\) and \(\beta _2=-\alpha _1\), one sees that the function \(h_c\) is indeed \(C^2\).
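The construction can be checked numerically. The sketch below is illustrative only and rests on assumptions: the exponents are taken as the roots of \(\frac{1}{2}z^2\mp \kappa z-r=0\), and \(h_c\) is normalized so that \(h_c(c)=1\) and \(h_c^{\prime }(c)=0\) (any positive multiple would serve equally well, since only the cone \(\{\lambda h_c\}\) matters). The names `exponents` and `h` are hypothetical.

```python
import numpy as np

def exponents(kappa, r):
    """Return ((alpha1, alpha2), (beta1, beta2)).

    alpha: roots of z^2/2 - kappa*z - r = 0 (Brownian motion with drift -kappa)
    beta:  roots of z^2/2 + kappa*z - r = 0 (Brownian motion with drift +kappa)
    """
    s = np.sqrt(kappa ** 2 + 2.0 * r)
    return (kappa - s, kappa + s), (-kappa - s, -kappa + s)

def h(c, x, kappa, r):
    """Assumed normalization: h_c(c) = 1 and h_c'(c) = 0 for finite c."""
    (a1, a2), (b1, b2) = exponents(kappa, r)
    x = np.asarray(x, dtype=float)
    if c == np.inf:            # decreasing, drift +kappa everywhere
        return np.exp(b1 * x)
    if c == -np.inf:           # increasing, drift -kappa everywhere
        return np.exp(a2 * x)
    d = x - c
    left = (b2 * np.exp(b1 * d) - b1 * np.exp(b2 * d)) / (b2 - b1)
    right = (a2 * np.exp(a1 * d) - a1 * np.exp(a2 * d)) / (a2 - a1)
    return np.where(d <= 0, left, right)

if __name__ == "__main__":
    kappa, r, c, eps = 0.5, 0.1, 0.3, 1e-3
    # A central second difference at the gluing point approximates h_c''(c);
    # with this normalization both one-sided values equal 2r.
    second_diff = (h(c, c + eps, kappa, r) - 2.0 * h(c, c, kappa, r)
                   + h(c, c - eps, kappa, r)) / eps ** 2
    print(float(second_diff), 2.0 * r)
```

The product of the roots of either quadratic is \(-2r\), which is why both one-sided second derivatives at \(c\) agree.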

The set \(\{\lambda h_c:c\in [-\infty ,\infty ],\lambda \ge 0\}\) does not form a convex cone for \(\kappa >0\). This is the main difference compared to the case without drift ambiguity. Therefore, the standard techniques for optimal stopping are not applicable immediately. Nonetheless, this leads to the right \(\mathcal P ^\kappa \)-supermartingales to work with:

Lemma 1

(i) For all \(a,b,x\in \mathbb{R }\) with \(a\le x\le b,\,c\in [-\infty ,\infty ],\,P\in \mathcal P ^\kappa \) and \(\tau =\inf \{t\ge 0:X_t\not \in [a,b]\}\) it holds that

$$\begin{aligned} \mathbb{E }_x^{P}(e^{-r\tau }h_c(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})\ge h_c(x) \quad \text{and}\quad \mathbb{E }_x^{P_c}(e^{-r\tau }h_c(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})=h_c(x), \end{aligned}$$

where the measure \(P_c\) is such that

$$\begin{aligned} dX_t=-\kappa \mathrm{sgn}(X_t-c)dt+dW^c_t \end{aligned}$$

for a Brownian motion \(W^c\) under \(P_c\).

(ii) For all \(c\in [-\infty ,\infty ]\) and all stopping times \(\tau \) it holds that

$$\begin{aligned} \mathbb{E }_x^{P_c}(e^{-r\tau }h_c(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})\le h_c(x). \end{aligned}$$

(iii) For all \(a,b,x\in \mathbb{R }\) with \(a<x<b,\,P\in \mathcal P ^\kappa \), and \(\tau _a=\inf \{t\ge 0:X_t=a\}, \tau _b=\inf \{t\ge 0:X_t=b\}\) it holds that

$$\begin{aligned}&\mathbb{E }_x^{P}(e^{-r\tau _a}h_\infty (X_{\tau _a}) 1\!\!\!\mathrm{l}_{\{\tau _a<\infty \}})\ge h_\infty (x),\\&\mathbb{E }_x^{P_\infty }(e^{-r\tau _a}h_\infty (X_{\tau _a}) 1\!\!\!\mathrm{l}_{\{\tau _a<\infty \}})=h_\infty (x), \end{aligned}$$and

$$\begin{aligned}&\mathbb{E }_x^{P}(e^{-r\tau _b}h_{-\infty }(X_{\tau _b}) 1\!\!\!\mathrm{l}_{\{\tau _b<\infty \}})\ge h_{-\infty }(x),\\&\mathbb{E }_x^{P_{-\infty }}(e^{-r\tau _b}h_{-\infty }(X_{\tau _b}) 1\!\!\!\mathrm{l}_{\{\tau _b<\infty \}})=h_{-\infty }(x). \end{aligned}$$

Proof

(i) For \(P\in \mathcal P ^\kappa \) with density process given by \(\theta \), by Girsanov’s theorem, we may write

$$\begin{aligned} X_t=W_t^P+\int \limits _0^t\theta _sds, \end{aligned}$$where \(W^P\) is a Brownian motion under \(P\). Since \(h_c\in C^2\) we can apply Itô’s lemma and obtain

$$\begin{aligned} dh_c(X_t)=h_c^{\prime }(X_t)dW^P_t+(h_c^{\prime }(X_t)\theta _t+1/2h_c^{\prime \prime }(X_t))dt. \end{aligned}$$By construction of \(h_c\), it holds that

$$\begin{aligned} 1/2h_c^{\prime \prime }(X_t)-\kappa \mathrm{sgn}(X_t-c)h_c^{\prime }(X_t)-rh_c(X_t)=0, \end{aligned}$$hence

$$\begin{aligned} e^{-rt}h_c(X_t)&= h_c(X_0)+\int \limits _0^t e^{-ru}(\kappa \mathrm{sgn}(X_u-c)+\theta _u)h_c^{\prime }(X_u)du\\&+\int \limits _0^t e^{-ru}h_c^{\prime }(X_u)dW^P_u. \end{aligned}$$Noting that \((\kappa \mathrm{sgn}(X_u-c)+\theta _u)\ge 0\) iff \(h_c^{\prime }(X_u)\ge 0\), we obtain that the process \((e^{-r(t\wedge \tau )}h_c(X_{t\wedge \tau }))_{t\ge 0}\) is a bounded \(P\)-submartingale. Therefore, by the optional sampling theorem,

$$\begin{aligned} \mathbb{E }_x^P(e^{-r\tau }h_c(X_{\tau }))\ge \mathbb{E }_x^P(h_c(X_{0}))=h_c(x). \end{aligned}$$

Under \(P_c\) the drift term vanishes, so \((e^{-r(t\wedge \tau )}h_c(X_{t\wedge \tau }))_{t\ge 0}\) is a bounded local martingale and hence a martingale. Therefore, the optional sampling theorem yields equality.

(ii) By the calculation in \((i)\) the process \((e^{-rt}h_c(X_t))_{t\ge 0}\) is a positive local \(P_c\)-martingale and hence also a \(P_c\)-supermartingale. The optional sampling theorem for non-negative supermartingales is therefore applicable.

(iii) Since \(h_\infty \) is decreasing and \(h_{-\infty }\) is increasing, the same arguments as in \((i)\) apply.

The following theorem shows that the geometric solution described in Sect. 2.1 can indeed be generalized to the drift ambiguity case. Moreover, we give a characterization of the optimal stopping set in terms of the maximum points of explicitly given functions.

Theorem 1

(i) It holds that

$$\begin{aligned} v(x)=\inf \{\lambda h_{c}(x):c\in [-\infty ,\infty ],\lambda \in [0,\infty ], \lambda h_{c}\ge g\} \text{ for} \text{ all}\,x\in \mathbb{R }. \end{aligned}$$Furthermore, the infimum in \(c\) is indeed a minimum.

(ii) A point \(x\in \mathbb{R }\) is in the optimal stopping set \(\{y:v(y)=g(y)\}\) if and only if there exists \(c\in [-\infty ,\infty ]\) such that

$$\begin{aligned} x\in \mathrm{argmax}\frac{g}{h_c}. \end{aligned}$$

Proof

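For each \(x\in \mathbb{R },\,c\in [-\infty ,\infty ]\) and each stopping time \(\tau \) we obtain using Lemma 1 (ii)

$$\begin{aligned} \inf _P\mathbb{E }_x^P(e^{-r\tau }g(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})&\le \mathbb{E }_x^{P_c}(e^{-r\tau }h_c(X_\tau )\frac{g}{h_c}(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})\\&\le \sup \left(\frac{g}{h_c}\right)\mathbb{E }_x^{P_c}(e^{-r\tau }h_c(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})\le \sup \left(\frac{g}{h_c}\right)h_c(x). \end{aligned}$$

Since \(\lambda h_c\ge g\) holds if and only if \(\lambda \ge \sup \left(\frac{g}{h_c}\right)\) we obtain that

$$\begin{aligned} v(x)\le \inf \{\lambda h_{c}(x):c\in [-\infty ,\infty ],\lambda \in [0,\infty ], \lambda h_{c}\ge g\}. \end{aligned}$$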

For the other inequality consider the following cases:

Case 1:

$$\begin{aligned} \sup _{y\in \mathbb{R }}\frac{g(y)}{h_\infty (y)}=\sup _{y\le x}\frac{g(y)}{h_\infty (y)}. \end{aligned}$$Take a sequence \((y_n)_{n\in \mathbb{N }}\) with \(y_n\le x\) such that \({g(y_n)}/{h_\infty (y_n)}\rightarrow \sup _{y\in \mathbb{R }}\frac{g(y)}{h_\infty (y)}\). Then for \(\tau _n=\inf \{t\ge 0: X_t=y_n\}\) using Lemma 1 (iii) we obtain

$$\begin{aligned} v(x)&\ge \inf _P\mathbb{E }_x^P(e^{-r\tau _n}g(X_{\tau _n}) 1\!\!\!\mathrm{l}_{\{\tau _n<\infty \}})\\&= \inf _P\mathbb{E }_x^P(e^{-r\tau _n}h_\infty (X_{\tau _n}) \frac{g}{h_\infty }(X_{\tau _n})1\!\!\!\mathrm{l}_{\{\tau _n<\infty \}})\\&= \frac{g}{h_\infty }(y_n)E_x^{P_\infty }(e^{-r\tau _n}h_\infty (X_{\tau _n}) 1\!\!\!\mathrm{l}_{\{\tau _n<\infty \}})\\&= \frac{g}{h_\infty }(y_n)h_\infty (x)\\&\rightarrow \sup _{y\in \mathbb{R }}\frac{g(y)}{h_\infty (y)} h_\infty (x)~~~~~\text{ for}\;n\rightarrow \infty . \end{aligned}$$Therefore, \(v(x)\ge \inf \{\lambda h_{c}(x):c\in [-\infty ,\infty ],\lambda \in [0,\infty ], \lambda h_{c}\ge g\}\). Moreover, if \(x\) is in the stopping set, i.e. \(v(x)=g(x)\), then we see that \(g(x)/h_\infty (x)=\sup _{y\in \mathbb{R }}\frac{g(y)}{h_\infty (y)}\), i.e. \(x\) is a maximum point of the function \({g}/h_{\infty }\), i.e. \((ii)\).

Case 2: The case \(\sup _{y\in \mathbb{R }}{g(y)}/{h_{-\infty }(y)}=\sup _{y\ge x}{g(y)}/{h_{-\infty }(y)}\) can be handled in the same way.

Case 3:

$$\begin{aligned} \sup _{y\le x}\frac{g(y)}{h_\infty (y)}>\sup _{y\ge x}\frac{g(y)}{h_\infty (y)}~~~\text{ and}~~~\sup _{y\le x}\frac{g(y)}{h_{-\infty }(y)}<\sup _{y\ge x}\frac{g(y)}{h_{-\infty }(y)}. \end{aligned}$$

First we show that there exists \(c^*\in \mathbb{R }\) such that

To this end, write

By construction of \(h_c\) it holds that \(h_c=\min (h_{c,1},h_{c,2})\). Therefore,

Since the functions

and

are continuous as concave functions, we obtain that the function \(c\mapsto \sup _{y\le x}\frac{g(y)}{h_c(y)}\) is continuous. By the same argument, the function \(c\mapsto \sup _{y\ge x}\frac{g(y)}{h_c(y)}\) is also continuous. By the intermediate value theorem applied to the function

there exists \(c^*\) with \(\sup _{y\le x}\frac{g(y)}{h_{c^*}(y)}=\sup _{y\ge x}\frac{g(y)}{h_{c^*}(y)}\) as desired.

Now take sequences \((y_n)_{n\in \mathbb{N }}\) and \((z_n)_{n\in \mathbb{N }}\) with \(y_n\le x\le z_n\) such that

Using \(\tau _n=\inf \{t\ge 0:X_t\not \in [y_n,z_n]\}\) we obtain by Lemma 1 (i)

This yields the result \((i)\). As above we furthermore see that if \(x\) is in the optimal stopping set, then it is a maximum point of \(g/h_{c^*}\), i.e. \((ii)\). \(\square \)

Remark 1

1. We would like to emphasize that we do not need any continuity assumptions on \(g\). This is remarkable, because even for the easy case described at the beginning of this section most standard techniques do not lead to such a general result.

2. A characterization of the optimal stopping points as in Theorem 1 (ii) for the problem without ambiguity can be found in Christensen and Irle (2011).

2.3 Worst-case prior

Theorem 1 leads to the value of the optimal stopping problem with drift ambiguity and also provides an easy way to find the optimal stopping time. Another important topic is to determine the worst-case measure for a process started in a point \(x\), i.e. we would like to determine the measure \(P\) such that \(v(x)=\sup _\tau \mathbb{E }^P_x(e^{-r\tau }g(X_\tau )1\!\!\!\mathrm{l}_{\{\tau <\infty \}})\). Using the results described above the worst-case measure can also be found immediately:

Theorem 2

Let \(x\in \mathbb{R }\) and let \(c\) be a minimizer as in Theorem 1 (i). Then \(P^c\) is a worst-case measure for the process started in \(x\).

Proof

This is immediate from the proof of Theorem 1. \(\square \)
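Theorems 1 and 2 suggest a simple numerical recipe: for each candidate \(c\), the smallest admissible multiplier is \(\lambda _c=\sup (g/h_c)\), and minimizing \(\lambda _ch_c(x)\) over \(c\) yields both \(v(x)\) and a worst-case measure. The sketch below approximates this on finite grids. It is an illustration under assumptions, not the author's implementation: \(h_c\) is normalized by \(h_c(c)=1\), the suprema are taken over a truncated grid, and the names `h` and `value_and_minimizer` are hypothetical. The reward used in the demo is discontinuous, in line with Remark 1.

```python
import numpy as np

def h(c, x, kappa, r):
    """h_c with the assumed normalization h_c(c) = 1 (c may be +-inf)."""
    s = np.sqrt(kappa ** 2 + 2.0 * r)
    a1, a2 = kappa - s, kappa + s        # drift -kappa exponents
    b1, b2 = -kappa - s, -kappa + s      # drift +kappa exponents
    x = np.asarray(x, dtype=float)
    if c == np.inf:
        return np.exp(b1 * x)
    if c == -np.inf:
        return np.exp(a2 * x)
    d = x - c
    left = (b2 * np.exp(b1 * d) - b1 * np.exp(b2 * d)) / (b2 - b1)
    right = (a2 * np.exp(a1 * d) - a1 * np.exp(a2 * d)) / (a2 - a1)
    return np.where(d <= 0, left, right)

def value_and_minimizer(x, g, kappa, r, grid, cs):
    """Approximate v(x) = min_c sup_y g(y)/h_c(y) * h_c(x) and the argmin c.

    grid: points over which the supremum of g/h_c is approximated
    cs:   candidate values of c (may include -inf and +inf)
    """
    gy = g(grid)
    best_val, best_c = np.inf, None
    for c in cs:
        lam = np.max(gy / h(c, grid, kappa, r))   # smallest lambda with lam*h_c >= g on grid
        val = float(lam * h(c, x, kappa, r))
        if val < best_val:
            best_val, best_c = val, float(c)
    return best_val, best_c

if __name__ == "__main__":
    kappa, r = 0.5, 0.1
    # Discontinuous reward: pays 1 on [-1, 1] and 0 elsewhere.
    g = lambda y: np.where(np.abs(y) <= 1.0 + 1e-9, 1.0, 0.0)
    grid = np.linspace(-6.0, 6.0, 1201)
    cs = np.concatenate(([-np.inf], np.linspace(-5.0, 5.0, 201), [np.inf]))
    for x0 in (0.0, 2.0):
        print(x0, value_and_minimizer(x0, g, kappa, r, grid, cs))
```

For a start to the right of the reward region the minimizer is \(c=\infty \): the worst case pushes the process away from \([-1,1]\) with drift \(\kappa \) everywhere.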

2.4 Example: American straddle in the Bachelier market

Because it is simple and instructive, we revisit the example discussed in Cheng and Riedel (2010) in the light of our method:

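We consider a variant of the American straddle option in a Bachelier market model as follows: As a driving process we consider a standard Brownian motion under \(P^0\) with reward function \(g(x)=|x|\). Our aim is to find the value in 0 of the optimal stopping problem

$$\begin{aligned} v(x)=\sup _\tau \inf _{P\in \mathcal P ^\kappa }\mathbb{E }_x^P(e^{-r\tau }|X_\tau |1\!\!\!\mathrm{l}_{\{\tau <\infty \}}). \end{aligned}$$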

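Using Theorem 1 we have to find the majorant of \(|\cdot |\) in the set

$$\begin{aligned} \{\lambda h_c:c\in [-\infty ,\infty ],\lambda \in [0,\infty ]\}. \end{aligned}$$

One immediately sees that if \(\lambda h_c(\cdot )\ge |\cdot |\), then \(\lambda h_0(\cdot )\ge |\cdot |\) and furthermore \(\lambda h_0(0)\le \lambda h_c(0)\). Therefore, we only have to consider majorants of \(|\cdot |\) in the set

$$\begin{aligned} \{\lambda h_0:\lambda \in [0,\infty ]\}. \end{aligned}$$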

This one-dimensional problem can be solved immediately. For \(\lambda =\max (|\cdot |/h_0(\cdot ))\) one obtains \(v(0)=\lambda h_0(0)\).

In fact, if \(-b,b\) denote the maximum points of \(|\cdot |/h_0(\cdot )\) we obtain that \(v(x)=\lambda h_0(x)\) for \(x\in [-b,b]\). Moreover, for \(x\not \in [-b,b]\) one immediately sees that there exists \(c\in \mathbb{R }\) such that \(x\) is a maximum point of \(|\cdot |/h_c(\cdot )\) and we obtain

Moreover, the worst-case measure is \(P_0\), i.e. the process \(X\) has positive drift \(\kappa \) on \((-\infty ,0)\) and drift \(-\kappa \) on \([0,\infty )\).
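The one-dimensional problem for \(v(0)\) is easy to evaluate numerically. The following sketch is illustrative and assumes the normalization \(h_0(0)=1\), under which \(h_0\) is symmetric and \(v(0)=\lambda =\max _y|y|/h_0(y)\). The function names and the truncation `y_max` are assumptions. For \(\kappa =0\) the function \(h_0\) reduces to \(\cosh (\sqrt{2r}\,y)\), which gives a convenient sanity check.

```python
import numpy as np

def h0(x, kappa, r):
    """h_0 under the assumed normalization h_0(0) = 1; symmetric in x."""
    s = np.sqrt(kappa ** 2 + 2.0 * r)
    a1, a2 = kappa - s, kappa + s        # exponents for drift -kappa
    y = np.abs(np.asarray(x, dtype=float))
    return (a2 * np.exp(a1 * y) - a1 * np.exp(a2 * y)) / (a2 - a1)

def straddle_value_at_zero(kappa, r, y_max=20.0, n=200001):
    """Return (v(0), b): lambda = max_y y / h_0(y) on [0, y_max] and its argmax b."""
    ys = np.linspace(0.0, y_max, n)
    ratio = ys / h0(ys, kappa, r)
    i = int(np.argmax(ratio))
    return float(ratio[i]), float(ys[i])

if __name__ == "__main__":
    for kappa in (0.0, 0.25, 0.5):
        v0, b = straddle_value_at_zero(kappa, r=0.5)
        print(f"kappa={kappa}: v(0)={v0:.4f}, stop outside (-{b:.3f}, {b:.3f})")
```

Increasing \(\kappa \) enlarges the set of priors, so the computed value at 0 should not increase with \(\kappa \).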

2.5 General diffusion processes

The results obtained before can be generalized to general one-dimensional diffusion processes. The only problem is to choose appropriate functions \(h_c\) carefully. After these functions are constructed the same arguments as in the previous subsections work.

Let \((X_t)_{t\ge 0}\) be a regular one-dimensional diffusion process on some interval \(I\) with boundary points \(a<b,a,b\in [-\infty ,\infty ]\), that is characterized by its generator

$$\begin{aligned} A=\frac{1}{2}\sigma ^2(x)\frac{d^2}{dx^2}+\mu (x)\frac{d}{dx} \end{aligned}$$

for some continuous functions \(\sigma >0,\mu \). For convenience we furthermore assume that the boundary points \(a, b\) of \(I\) are natural. For a generalization to other boundary behaviors see the discussion in Beibel and Lerche (2000, Section 6). Again denote by \(\mathcal P ^\kappa \) the set of all probability measures that are equivalent to \(Q\) with density process of the form

for a progressively measurable process \((\theta _t)_{t\ge 0}\) with \(|\theta _t|\le \kappa \) for all \(t\ge 0\). We denote the fundamental solutions of the equation

by \(\psi _+^\kappa \) resp. \(\psi _-^\kappa \) for the increasing resp. decreasing positive solution, cf. Borodin and Salminen (2002, II.10) for a discussion and further references. Analogously, denote the fundamental solutions of

by \(\psi _+^{-\kappa }\) resp. \(\psi _-^{-\kappa }\). Note that for each positive solution \(\psi \) of one of the above ODEs it holds that

hence all extremal points are minima, so that \(\psi \) has at most one minimum. Therefore, for each \(s\in (0,1)\) the function \(\psi =s\psi _+^{\pm \kappa }+(1-s)\psi _-^{\pm \kappa }\) has a unique minimum point and each \(c\in I\) arises as such a minimum point. Therefore, for each \(c\in (a,b)\) we can find constants \(\gamma _1,...,\gamma _4\) such that the function

is \(C^1\) with a unique minimum point in \(c\) with the standardization \(h_c(c)=1\). More explicitly, \(\gamma _1,...,\gamma _4\) are given by

where

Furthermore, write \(h_a=\psi ^{-\kappa }_+\) and \(h_b=\psi ^{\kappa }_-\). First, we show that the functions \(h_c\) are always \(C^2\).

Lemma 2

For each \(c\in [a,b]\), the function \(h_c\) is \(C^2\).

Proof

For \(c\in \{a,b\}\) the claim obviously holds. Let \(c\in (a,b)\). We only have to prove that \(h_c^{\prime \prime }(c-)=h_c^{\prime \prime }(c+)\). Using Eq. (1), we obtain that

By the choice of \(\gamma _1,\gamma _2\), we obtain

and analogously

This proves the claim.\(\square \)

Now all the arguments given in Sects. 2.2 and 2.3 apply and we again obtain the following results (compare Theorems 1 and 2):

Theorem 3

(i) It holds that

$$\begin{aligned} v(x)=\inf \{\lambda h_{c}(x):c\in [a,b],\lambda \in [0,\infty ], \lambda h_{c}\ge g\} \text{ for} \text{ all}\,x\in I. \end{aligned}$$

(ii) A point \(x\in I\) is in the optimal stopping set \(\{y:v(y)=g(y)\}\) if and only if there exists \(c\in [a,b]\) such that

$$\begin{aligned} x\in \mathrm{argmax}\frac{g}{h_c}. \end{aligned}$$

Theorem 4

Let \(x\in I\) and let \(c\) be a minimizer as in Theorem 3 (i). Then \(P^c\) is a worst-case measure for the process started in \(x\).

2.6 Example: an optimal decision problem for Brownian motions with drift

The following example illustrates that our method also works in the case of a discontinuous reward function \(g\), where differential equation techniques cannot be applied immediately. Furthermore, we see that our approach can be used for all parameters in the parameter space, although the structure of the solution changes.

Let \(X_t=\sigma W_t+\mu t\) denote a Brownian motion with drift \(\mu \in (-\infty ,\infty )\) and volatility \(\sigma \) under \(P^0\), and let

The fundamental solutions are given by

where \(\alpha _1<0<\alpha _2\) and \(\beta _1<0<\beta _2\) are the roots of

Using Eq. (2) we obtain

We consider

and \(l^*(c):=\sup _{y\ge 0}\frac{y}{h_c(y)}=\frac{y_c}{h_c(y_c)}\), where \(y_c\) denotes the unique maximum point of \(y/h_c(y),y\ge 0\).

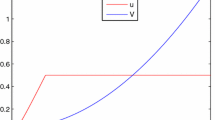

We first consider the case \(1=l_*(0)\ge l^*(0)\). By Theorem 3 (ii), we obtain that \(x=0\) is in the optimal stopping set \(S\) as a maximizer of \(y\mapsto g(y)/h_0(y)\). Furthermore, by decreasing \(c\) to \(-\infty \), we see that \((-\infty ,0]\subseteq S\). Since \(l_*(c)\rightarrow 0\) and \(l^*(c)\rightarrow \infty \) for \(c\rightarrow \infty \), there exists a unique \(c^*\ge 0\) such that \(l_*(c^*)=l^*(c^*)\). Therefore, by Theorem 3 (ii) again, \(x^*:=y_{c^*}\in S\) and by increasing \(c\) to \(\infty \), we obtain that \(S=(-\infty ,0]\cup [x^*,\infty )\). Theorem 3 (i) yields

see Fig. 2 below. By Theorem 4 we furthermore obtain that \(P^{c^*}\) is a worst-case measure for the process started in \(x\in (0,x^*)\). That is, under the worst-case measure, the process has drift \(\mu +\kappa \) on \([0,c^*)\) and drift \(\mu -\kappa \) on \([c^*,x^*]\). Now, we consider the case \(1=l_*(0)< l^*(0)\). By a similar reasoning as in the first case, we see that there exists \(c^*<0\) such that \(l_*(c^*)=l^*(c^*)\). Write \(x_*=c^*<0,x^*=y_{c^*}\). Then, \(S=(-\infty ,x_*]\cup [x^*,\infty )\) is the optimal stopping set and the value function is given by

see Fig. 3 below. The worst-case measure is given by \(P^{c^*}\), which means that the process has drift \(\mu -\kappa \) on \([x_*,x^*]\).

3 Optimal decision for models with crashes

Now denote by \(Y\) a one-dimensional regular diffusion process on an interval \(I\). Denote by \(\mathcal F \) the natural filtration generated by \(Y\). In this section we assume that all parameters of this process are known. This process represents the price process of the underlying asset if no crash occurs; therefore, for economic plausibility, it is reasonable to assume \(I=(0,\infty )\).

Now we modify the process such that at a certain random time point \(\sigma \) a crash of bounded height occurs. To be more precise, let \(c\in (0,1)\) be a given constant that describes an upper bound for the height of the crash. For a given stopping time \(\sigma \) and an \(\mathcal F _\sigma \)-measurable and \([c,1]\)-valued random variable \(\zeta \) we consider the modified process \(X^{\sigma ,\zeta }\) given by

Now we consider the optimal stopping problem connected to the pricing of perpetual American options in this market, i.e., let \(g:(0,\infty )\rightarrow [0,\infty )\) be a continuous reward function. We furthermore assume \(g\) to be non-decreasing, so that a crash always leads to a lower payoff. We fix a constant discounting rate \(r>0\) and furthermore assume that the holder of the option knows that the process will crash once in the future. We assume the crash to be observable for the decision maker, so she will specify her action by a pre-crash stopping time \({\underline{\tau }}\) and a post-crash stopping time \(\overline{\tau }\), i.e. given \(\sigma \) she takes the strategy

where \(\theta _\cdot \) denotes the time-shift operator. As before we assume the holder of the option to be ambiguity averse in the sense that she maximizes her expected reward under the worst-case scenario, i.e. she tries to solve the problem

where \(\tau =\tau _\sigma \) is given as in (3).

Remark 2

Obviously by the monotonicity of the reward function we always have

where \(X^{\sigma }:=X^{\sigma ,c}\).

We obtain the following reduction of the optimal stopping problem under ambiguity about the crashes: It shows that the problem can be reduced to one optimal stopping problem and one Dynkin game for the diffusion process \(Y\) (without crashes).

Theorem 5

(i) Let \(\hat{g}\) be the value function for the optimal stopping problem for \(cY\) with reward \(g\), i.e.

$$\begin{aligned} \hat{g}(y)=\sup _{{\overline{\tau }}}\mathbb{E }_y(e^{ -r{\overline{\tau }}}g(cY_{\overline{\tau }})) \text{ for} \text{ all}\,y\in (0,\infty ) \qquad (5) \end{aligned}$$

and let \(\hat{g}<\infty \). Then it holds that

$$\begin{aligned} v(x)= \sup _{{\underline{\tau }}}\inf _\sigma \mathbb{E }_x(e^{ -r{\underline{\tau }}}g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}} \le \sigma \}}+e^{-r\sigma }\hat{g}(Y_\sigma ) 1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}}>\sigma \}}). \qquad (6) \end{aligned}$$

(ii) If \(\overline{\tau }\) is optimal for (5) and \({\underline{\tau }},\sigma \) is a Nash-equilibrium for (6), then \(({\underline{\tau }},\overline{\tau }),\,(\sigma ,c)\) is a Nash-equilibrium for (4).

Proof

(i) First fix \({\underline{\tau }},\,\overline{\tau }\). Then for all \(\sigma \) by conditioning on \(\mathcal F _\sigma \) we obtain

$$\begin{aligned} \mathbb{E }_x(e^{-r{\tau }}g(X^{\sigma }_\tau ))&= \mathbb{E }_x(e^{-r{\underline{\tau }}}g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{\underline{\tau }}\le \sigma \}}+e^{-r(\sigma +\overline{\tau }\circ \theta _\sigma )}g(cY_{\sigma +\overline{\tau }\circ \theta _\sigma })1\!\!\!\mathrm{l}_{\{{\underline{\tau }}>\sigma \}})\\&= \mathbb{E }_x(e^{-r{\underline{\tau }}}g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{\underline{\tau }} \le \sigma \}}\\&\quad \quad +e^{-r\sigma }\mathbb{E }_x(e^{-r(\overline{\tau }\circ \theta _\sigma )} g(cY_{\sigma +\overline{\tau }\circ \theta _\sigma })|\mathcal F _\sigma ) 1\!\!\!\mathrm{l}_{\{{\underline{\tau }}>\sigma \}}). \end{aligned}$$By the strong Markov property we furthermore obtain

$$\begin{aligned} \mathbb{E }_x(e^{-r(\overline{\tau }\circ \theta _\sigma )}g(cY_{\sigma +\overline{\tau }\circ \theta _\sigma })|\mathcal F _\sigma ) =\mathbb{E }_{Y_\sigma }(e^{-r{\overline{\tau }}}g(cY_{\overline{\tau }}))\le \hat{g}(Y_\sigma ). \end{aligned}$$Therefore,

$$\begin{aligned} \mathbb{E }_x(e^{-r\tau }g(X^{\sigma }_\tau ))\le \mathbb{E }_x(e^{-r{\underline{\tau }}}g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{\underline{\tau }}\le \sigma \}} +e^{-r\sigma }\hat{g}(Y_\sigma )1\!\!\!\mathrm{l}_{\{{\underline{\tau }}>\sigma \}}), \end{aligned}$$showing that

$$\begin{aligned} v(x)\le \sup _{{\underline{\tau }}}\inf _\sigma \mathbb{E }_x(e^{-r{\underline{\tau }}} g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}}\le \sigma \}}+e^{-r\sigma }\hat{g}(Y_\sigma )1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}} >\sigma \}}). \end{aligned}$$Now take a sequence of \(1/n\)-optimal stopping times \((\overline{\tau }_n)_{n\in \mathbb{N }}\) for the problem (5), i.e.

$$\begin{aligned} \hat{g}(y)\le \mathbb{E }_y(e^{-r\overline{\tau }_n}g(cY_{\overline{\tau }_n}))+\frac{1}{n} \text{ for} \text{ all}\,n\in \mathbb{N }\; \text{ and} \text{ all}\,y. \end{aligned}$$Then

$$\begin{aligned} \mathbb{E }_{Y_\sigma }(e^{-r{\overline{\tau }_n}}g(cY_{\overline{\tau }_n}))\le \hat{g}(Y_\sigma )+\frac{1}{n}, \end{aligned}$$and hence considering the post-crash strategy \(\overline{\tau }_n\) and arbitrary \({\underline{\tau }},\sigma \) we see that

$$\begin{aligned} v(x)+\frac{1}{n}\ge \sup _{{\underline{\tau }}}\inf _\sigma \mathbb{E }_x(e^{ -r{\underline{\tau }}}g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}} \le \sigma \}}+e^{-r\sigma }\hat{g}(Y_\sigma )1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}} >\sigma \}}), \end{aligned}$$proving equality.

(ii) This is obvious from the proof of (i).

\(\square \)

Remark 3

Note that the arguments used so far have nothing to do with diffusion processes, but can be applied in the same way for general nice one-dimensional strong Markov processes, like one-dimensional Hunt processes. Nonetheless we decided to consider this more special setup because of its special importance and since the theory for explicitly solving optimal stopping problems and Dynkin games is well established.

The previous reduction theorem solves the optimal stopping problem (4) since both problems (6) and (5) are well-studied for diffusion processes, see e.g. the references given above for optimal stopping problems and Ekström and Villeneuve (2006), Alvarez (2008), and Peškir (2011) for Dynkin games. It is interesting to see that the optimal stopping problem under crash scenarios naturally leads to Dynkin games, which have been studied extensively in recent years. The financial applications studied so far were based on Israeli options, which are (at least at first glance) of a different nature, see Kifer (2000).

3.1 Example: call-like problem with crashes

As an example we consider a geometric Brownian motion given by the dynamics

and we take \(g:(0,\infty )\rightarrow [0,\infty )\) given by \(g(x)=(x-K)^+\), where \(K>0\) is a constant. To exclude trivial cases we assume that \(\mu <r\). Then a closed-form solution of the optimal stopping problem

is well known (see e.g. Peškir and Shiryaev (2006), Chapter VII) and is given by

where \(\gamma \) is the positive solution to

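and \(x^*\) and \(d\) are appropriate constants. Moreover, the optimal stopping time is given by \(\overline{\tau }:=\inf \{t\ge 0: X_t\ge x^*\}\). By Theorem 5 we are faced with the Dynkin game

$$\begin{aligned} v(x)=\sup _{{\underline{\tau }}}\inf _\sigma \mathbb{E }_x(e^{-r{\underline{\tau }}} g(Y_{{\underline{\tau }}})1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}}\le \sigma \}}+e^{-r\sigma }\hat{g}(Y_\sigma )1\!\!\!\mathrm{l}_{\{{{\underline{\tau }}} >\sigma \}}). \qquad (7) \end{aligned}$$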

To solve this problem first note that there exists \(x^{\prime }\in (K,x^*/c)\) such that \(g(x)\le \hat{g}(x)\) for \(x\in (0,x^{\prime }]\) and \(g(x)\ge \hat{g}(x)\) for \(x\in [x^{\prime },\infty )\); indeed, \(x^{\prime }\) is the unique positive solution to

see Fig. 4.
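The constants of this example can be computed numerically. The following is a minimal sketch, not the paper's own computation: it assumes the standard closed form for the perpetual call on a geometric Brownian motion, i.e. \(\gamma \) is the positive root of \(\tfrac{1}{2}\texttt{vol}^2\gamma (\gamma -1)+\mu \gamma -r=0\), \(x^*=\gamma K/(\gamma -1)\), and \(\hat{g}(y)=d\,(cy)^\gamma \) for \(cy<x^*\) and \(\hat{g}(y)=cy-K\) otherwise. The name `vol` for the volatility, the helper function names, and all numerical parameter values are hypothetical choices made for illustration only.

```python
import math


def positive_root(mu, r, vol):
    """Positive root gamma of 0.5*vol^2*gamma*(gamma-1) + mu*gamma - r = 0."""
    a = 0.5 * vol ** 2
    b = mu - a
    return (-b + math.sqrt(b * b + 4.0 * a * r)) / (2.0 * a)


def post_crash_value(y, c, K, gamma):
    """Assumed closed form of g_hat(y) = sup_tau E_y[e^{-r tau} (c*Y_tau - K)^+]."""
    x_star = gamma * K / (gamma - 1.0)       # post-crash exercise level
    d = (x_star - K) / x_star ** gamma       # constant from continuity at x_star
    x = c * y                                # state after a crash of relative size c
    return d * x ** gamma if x < x_star else x - K


def pre_crash_threshold(c, K, gamma, tol=1e-12):
    """Bisection for x' in (K, x_star/c) solving x - K = g_hat(x)."""
    x_star = gamma * K / (gamma - 1.0)
    lo, hi = K, x_star / c                   # g - g_hat is < 0 at lo and > 0 at hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if (mid - K) - post_crash_value(mid, c, K, gamma) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical parameters with mu < r and crash factor 0 < c < 1.
r, mu, vol, K, c = 0.05, 0.02, 0.3, 1.0, 0.8
gamma = positive_root(mu, r, vol)
x_star = gamma * K / (gamma - 1.0)
x_prime = pre_crash_threshold(c, K, gamma)
print(gamma, x_star, x_prime)
```

Bisection is used here only because \(g-\hat{g}\) changes sign exactly once on \((K,x^*/c)\) under these assumptions; any bracketing root finder would serve equally well.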

We could use the general theory to solve the optimal stopping game (7), but here we can also solve it by elementary means:

First let \(x>x^{\prime }\). Then for all stopping times \(\sigma ^*\) with \(\sigma ^*=0\) under \(P(\cdot | Y_0=x)\) and each stopping time \({\underline{\tau }}\) we obtain

with equality if \(\tau =0\) holds \(P(\cdot | Y_0=x)\)-a.s. On the other hand, for \(\tau ^*=0\) the payoff is \(g(x)\), independent of \(\sigma \).

For \(x\le x^{\prime }\), by taking \({\underline{\tau }}= \inf \{t\ge 0: X_t\ge x^{\prime }\}\) we have for each stopping time \(\sigma \), by definition of \(x^{\prime }\),

where the last equality holds by the fundamental properties of the minimal \(r\)-harmonic functions, see e.g. Borodin and Salminen (2002), II.9. The same calculation holds for any stopping time \(\sigma ^*\le \inf \{t\ge 0:Y_t\ge x^{\prime }\}\) and any stopping time \(\tau \).
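For geometric Brownian motion the property of the minimal \(r\)-harmonic functions used here can be written out explicitly. As a sketch, assume that \(\gamma \) is the positive root from the closed-form solution of the post-crash problem, so that the increasing minimal \(r\)-harmonic function is \(x\mapsto x^\gamma \), and write \(\tau _{x^{\prime }}:=\inf \{t\ge 0: Y_t\ge x^{\prime }\}\) (a notation introduced only for this illustration). The standard formula for the Laplace transform of a first hitting time then gives, for \(0<x\le x^{\prime }\),

$$\begin{aligned} \mathbb{E }_x(e^{-r\tau _{x^{\prime }}})=\left( \frac{x}{x^{\prime }}\right) ^{\gamma }, \qquad \text{and hence}\qquad \mathbb{E }_x(e^{-r\tau _{x^{\prime }}}g(Y_{\tau _{x^{\prime }}}))=(x^{\prime }-K)\left( \frac{x}{x^{\prime }}\right) ^{\gamma }, \end{aligned}$$

where the second identity uses \(Y_{\tau _{x^{\prime }}}=x^{\prime }\) by continuity of the paths and \(x^{\prime }>K\).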

Putting the pieces together, we obtain that \({\underline{\tau }},\sigma ^*\) is a Nash-equilibrium of the Dynkin game (7) for any stopping time \(\sigma ^*\le {\underline{\tau }}\).

By applying Theorem 5 we get

Proposition 1

The value function \(v\) is given by

and for

and

and any stopping time \(\sigma ^*\le \tau \) it holds that \(({\underline{\tau }},\overline{\tau }),\,(\sigma ^*,c)\) is a Nash-equilibrium of the problem.

The solution to this example is very natural: if the investor expects a crash in the market, then she exercises the option as soon as the asset price reaches the level \(x^{\prime }\) (pre-crash strategy). After the crash, i.e. once the investor no longer expects further crashes, she uses the ordinary stopping time, i.e. she stops when the process reaches the level \(x^*>x^{\prime }\) (post-crash strategy).

References

Alvarez LHR (2007) Knightian uncertainty, \(\kappa \)-ignorance, and optimal timing. Technical report discussion paper No. 25, Aboa Centre for Economics

Alvarez LHR (2008) A class of solvable stopping games. Appl Math Optim 58(3):291–314. doi:10.1007/s00245-008-9035-z

Beibel M, Lerche HR (1997) A new look at optimal stopping problems related to mathematical finance. Stat Sinica 7(1):93–108

Beibel M, Lerche HR (2000) A note on optimal stopping of regular diffusions under random discounting. Teor Veroyatnost i Primenen 45(4):657–669

Borodin AN, Salminen P (2002) Handbook of Brownian motion–facts and formulae. Probability and its applications, 2nd edn. Birkhäuser Verlag, Basel

Cheng X, Riedel F (2010) Optimal stopping under ambiguity in continuous time. Technical report 429, IWM Bielefeld

Christensen S (2012) A method for pricing American options using semi-infinite linear programming. Math Financ. doi:10.1111/j.1467-9965.2012.00523.x

Christensen S, Irle A (2011) A harmonic function technique for the optimal stopping of diffusions. Stoch Int J Probab Stoch Process 83(4–6):347–363. doi:10.1080/17442508.2010.498915

Chudjakow T, Riedel F (2009) The best choice problem under ambiguity. Technical report 413, IWM Bielefeld

Dayanik S, Karatzas I (2003) On the optimal stopping problem for one-dimensional diffusions. Stoch Process Appl 107(2):173–212

Duffie D, Epstein LG (1992) Asset pricing with stochastic differential utility. Rev Financ Stud 5(3):411–436. http://www.jstor.org/stable/2962133

Dynkin EB, Yushkevich AA (1969) Markov processes: theorems and problems. Translated from the Russian by James S. Wood. Plenum Press, New York

Ekström E, Villeneuve S (2006) On the value of optimal stopping games. Ann Appl Probab 16(3):1576–1596. doi:10.1214/105051606000000204

Epstein LG, Schneider M (2003) Recursive multiple-priors. J Econ Theory 113(1):1–31. http://ideas.repec.org/a/eee/jetheo/v113y2003i1p1-31.html

Helmes K, Stockbridge RH (2010) Construction of the value function and optimal rules in optimal stopping of one-dimensional diffusions. Adv Appl Probab 42(1):158–182

Karatzas I, Shreve SE (1998) Methods of mathematical finance. Applications of Mathematics, vol 39. Springer, New York

Kifer Y (2000) Game options. Financ Stoch 4(4):443–463. doi:10.1007/PL00013527

Korn R, Seifried FT (2009) A worst-case approach to continuous-time portfolio optimisation. In: Albrecher H et al (eds) Advanced financial modelling, Radon Series on Computational and Applied Mathematics, vol 8. Walter de Gruyter, Berlin, pp 327–345

Korn R, Wilmott P (2002) Optimal portfolios under the threat of a crash. Int J Theor Appl Financ 5(2):171–187. doi:10.1142/S0219024902001407

Peškir G (2011) A duality principle for the Legendre transform. J Convex Anal (to appear)

Peškir G, Shiryaev AN (2006) Optimal stopping and free-boundary problems. Lectures in Mathematics ETH Zürich. Birkhäuser Verlag, Basel

Riedel F (2009) Optimal stopping with multiple priors. Econometrica 77(3):857–908

Salminen P (1985) Optimal stopping of one-dimensional diffusions. Math Nachr 124:85–101

Acknowledgments

This paper was partly written during a stay at Åbo Akademi in the project Applied Markov processes: fluid queues, optimal stopping and population dynamics, Project number: 127719 (Academy of Finland). I would like to express my gratitude for the hospitality and support. Furthermore, I am grateful to the anonymous reviewers for their valuable comments.

Cite this article

Christensen, S. Optimal decision under ambiguity for diffusion processes. Math Meth Oper Res 77, 207–226 (2013). https://doi.org/10.1007/s00186-012-0425-2

DOI: https://doi.org/10.1007/s00186-012-0425-2