Abstract

This paper considers estimation of a functional partially linear quantile regression model whose parameters include an infinite-dimensional slope function as well as finite-dimensional slope parameters. We show asymptotic normality of the estimator of the finite-dimensional parameter and derive the rate of convergence of the estimator of the infinite-dimensional slope function. In addition, we show the rate of the mean squared prediction error for the proposed estimator. A simulation study is provided to illustrate the numerical performance of the resulting estimators.

1 Introduction

Since it was first proposed by Koenker and Bassett (1978), quantile regression has emerged as an important statistical methodology. By estimating various conditional quantile functions, quantile regression complements the focus of classical least squares regression on the conditional mean and explores the effects of covariates on the location, scale and shape of the distribution of the response variable. It has been used in a wide range of applications, including economics, biology, and finance. A comprehensive review of the theory of quantile regression and some of the most recent developments can be found in Koenker (2005).

To reduce the severe modeling biases caused by misspecified parametric models, there has been an upsurge of interest and effort in nonparametric models. Although the nonparametric approach is useful for exploring hidden structures and reducing modeling biases, it can be too flexible to yield concise conclusions, and it suffers from the curse of dimensionality when the number of covariates is large. To overcome these shortcomings, Engle et al. (1986) proposed the partially linear regression model (PLRM), which allows some explanatory variables to act in a nonparametric manner while the others have a linear relation with the response variable. PLRM not only avoids the curse of dimensionality in nonparametric regression, but also retains the interpretability of the effects of the explanatory variables in linear regression. Therefore, PLRM has received considerable attention. For example, several papers have been published on this class of models for the independent and identically distributed case (see Speckman 1988; Shi and Li 1994; Mammen and Geer 1997) as well as for dependent data (see Gao 1995; Fan and Li 1999; Liu 2011); for more details see Härdle et al. (2000). The estimation methods in the above-mentioned papers are mainly based on mean regression. For the partially linear quantile regression model, He and Shi (1996) used bivariate tensor-product B-splines to approximate the nonparametric function, and He and Liang (2000) introduced partially linear quantile regression in errors-in-variables models.

There is a large functional data literature on mean regression (see Ramsay and Silverman 2005 for a review), but relatively few studies take a quantile regression perspective. An exception is Cardot et al. (2005), who introduced penalized quantile regression when the covariates are functions. It is well known that the quantile regression approach is insensitive to outliers and more robust than the ordinary least squares method. Moreover, it may still work well when the variance of the random error is infinite, a setting in which the least squares method breaks down. In addition, fitting data at a set of quantiles provides a more comprehensive description of the response distribution than the mean does. In many applications, the functional impacts of the covariates on the response may vary at different percentiles of the distribution (see Wang et al. 2009). In many practical applications, we are interested in the low or high quantiles of the response variable when some explanatory covariates are functions and others may be categorical variables. In conditional growth charts, for example, one often uses gender as a categorical variable, because adult men are generally taller than adult women, and uses the entire growth phase as a functional covariate. The outcome of ultimate interest is the adult height. We are particularly concerned with the low quantiles of adult height and want to determine which factors stunt height growth and which factors promote it. All of these considerations motivate us to combine the quantile and semiparametric approaches with functional regression, which results in the functional partially linear quantile regression model (FPLQRM). The proposed FPLQRM is more flexible than the functional linear quantile regression model of Cardot et al. (2005), since it contains not only the nonparametric function but also linear covariates, which may be categorical.

To the best of our knowledge, no existing work combines the semiparametric and quantile approaches in a functional semiparametric model. We attempt to address this problem, which poses several difficulties. Firstly, unlike the squared loss function, the quantile loss function is not differentiable at the origin, which makes it difficult to derive large sample properties of the resulting estimators. Secondly, one needs to estimate simultaneously the parametric and nonparametric components, which have different convergence rates. In this paper, we develop new estimators for the parameters of the functional partially linear quantile regression model based on the Karhunen-Loève expansion and show the consistency and asymptotic normality of the resulting estimators.

The remainder of the paper is organized as follows. Section 2 introduces the functional partially linear quantile regression model. Section 3 develops the estimation method. The large sample properties of the proposed estimators are given in Sect. 4. Simulation studies are presented in Sect. 5. The proofs of the main results are presented in the “Appendix”.

2 Functional partially linear quantile regression model

Let \(Y\) be a real-valued random variable defined on a probability space \((\Omega ,\mathcal{B },P)\). Let \({{\varvec{Z}}}=(Z_1,Z_2,\ldots ,Z_p)\) be a \(p\)-dimensional random vector and let \(\{X(t): t \in \mathcal{F }\}\) be a zero mean, second-order stochastic process defined on \((\Omega ,\mathcal{B },P)\) with sample paths in \(L^2(\mathcal{F })\), the Hilbert space of square integrable functions with inner product \(\langle x, y\rangle =\int _\mathcal{F } x(t)y(t)dt, \forall x, y \in L^2(\mathcal{F })\) and norm \(\Vert x\Vert = \langle x, x\rangle ^{1/2}\). Without loss of generality, we suppose throughout the paper that \( \mathcal{F }=[0,1]\). At a given quantile level \(\tau \in (0, 1)\), the dependence between \(Y\) and \((X,{{\varvec{Z}}})\) is expressed as

\[
Y=\int _0^1\beta (t,\tau )X(t)dt+{{\varvec{Z}}}^T{\varvec{\theta }}(\tau )+\varepsilon (\tau ), \tag{1}
\]

where \(\varepsilon (\tau )\) is a random error whose \(\tau \)th quantile equals zero, \(\beta (t,\tau )\) is a square integrable function on [0,1], and \({\varvec{\theta }}(\tau )\) is a \(p\)-dimensional unknown real vector. In the rest of the article, we suppress \(\tau \) in \({\varvec{\theta }}(\tau )\) and \(\beta (t,\tau )\) for notational simplicity.

Remark 1

Model (1) generalizes both the linear quantile regression model and the functional linear model, which correspond to the cases \(\beta =0\) and \({\varvec{\theta }}=0\), respectively. If \(\tau = 0.5\) and \(\varepsilon \) has a symmetric distribution with a finite mean, then the conditional median in FPLQRM coincides with the conditional mean. Therefore, model (1) also includes the partially functional linear regression model proposed by Shin (2009), as well as the semi-functional partially linear regression model in Aneiros-Pérez and Vieu (2006) given by \(Y={\varvec{\beta }}^T{{\varvec{z}}} + m(X) +\varepsilon \) when \(m(X)=\int _0^1\gamma (t)X(t) dt\).

3 Estimation methods

Let \(\{(X_i,{{\varvec{Z}}}_i, Y_i),i=1,\ldots ,n\}\) be an independent and identically distributed sample generated from model (1). Define the covariance function and the empirical covariance function respectively as

\[
K(s,t)=\text{Cov}(X(s),X(t))
\]

and

\[
\hat{K}(s,t)=\frac{1}{n}\sum _{i=1}^{n}X_i(s)X_i(t).
\]

The covariance function \(K\) defines a linear operator which maps a function \(f\) to \(Kf\) given by \((Kf)(u) =\int K(u,v)f(v)dv\). We shall assume that the linear operator with kernel \(K\) is positive definite. Let \(\lambda _1>\lambda _2>\cdots >0\) and \(\hat{\lambda }_1\ge \hat{\lambda }_2\ge \cdots \ge 0\) be the ordered eigenvalue sequences of the linear operators with kernels \(K\) and \(\hat{K}\), and let \(\{\phi _j\}\) and \(\{\hat{\phi }_j\}\) be the corresponding orthonormal eigenfunction sequences, respectively. It is clear that the sequences \(\{\phi _j\}\) and \(\{\hat{\phi }_j\}\) each form an orthonormal basis in \(L^2([0, 1])\). Then, the spectral decompositions of the covariance functions \(K\) and \(\hat{K}\) can be written as

\[
K(s,t)=\sum _{j=1}^{\infty }\lambda _j\phi _j(s)\phi _j(t)
\]

and

\[
\hat{K}(s,t)=\sum _{j=1}^{\infty }\hat{\lambda }_j\hat{\phi }_j(s)\hat{\phi }_j(t),
\]

respectively.
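As a rough numerical illustration of the empirical covariance operator and its eigenpairs, the following sketch discretizes the curves on an equally spaced grid of \([0,1]\) and approximates integrals by the midpoint rule. The function name `fpca_scores`, the grid, the toy curves and the use of NumPy are assumptions made for illustration only; they are not part of the paper.

```python
import numpy as np

def fpca_scores(X, m):
    """Leading m eigenpairs of the empirical covariance and the scores.

    X : (n, T) array; row i holds X_i evaluated at T equally spaced
        midpoints of [0, 1] (an assumed discretization).
    """
    n, T = X.shape
    h = 1.0 / T                                # quadrature weight on [0, 1]
    K_hat = X.T @ X / n                        # K_hat(s, t) = n^{-1} sum_i X_i(s) X_i(t)
    evals, evecs = np.linalg.eigh(h * K_hat)   # discretized integral operator
    order = np.argsort(evals)[::-1][:m]        # largest eigenvalues first
    lam_hat = evals[order]
    phi_hat = evecs[:, order] / np.sqrt(h)     # rescale so that h * sum(phi^2) = 1
    U = h * (X @ phi_hat)                      # U[i, j] approximates <X_i, phi_hat_j>
    return lam_hat, phi_hat, U

# Toy curves built from two sine basis functions (illustrative only).
rng = np.random.default_rng(0)
n, T, m = 200, 100, 2
t = (np.arange(T) + 0.5) / T
basis = np.sqrt(2) * np.sin(np.outer([0.5, 1.5], np.pi * t))   # shape (2, T)
xi = rng.normal(size=(n, 2)) * np.array([1.0, 0.5])
X = xi @ basis
lam_hat, phi_hat, U = fpca_scores(X, m)
```

With this normalization the discretized eigenfunctions are orthonormal with respect to the quadrature rule, and the sample second moment of each score column equals the corresponding estimated eigenvalue.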

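According to the Karhunen-Loève representation, we have

\[
X(t)=\sum _{i=1}^{\infty }\xi _i\phi _i(t)\quad \text{and}\quad \beta (t)=\sum _{i=1}^{\infty }\gamma _i\phi _i(t), \tag{2}
\]

where the \(\xi _i=\langle X,\phi _i\rangle \) are uncorrelated random variables with mean 0 and variance \(E[\xi _i^2]=\lambda _i\), and \(\gamma _i=\langle \beta , \phi _i \rangle \); for more details see Ramsay and Silverman (2005). Substituting (2) into model (1) and using the orthonormality of the \(\phi _i\), we get

\[
Y=\sum _{j=1}^{\infty }\gamma _j\xi _j+{{\varvec{Z}}}^T{\varvec{\theta }}+\varepsilon . \tag{3}
\]

Therefore, the regression model in (3) can be well approximated by

\[
Y\approx \sum _{j=1}^{m}\gamma _j\langle X,\phi _j\rangle +{{\varvec{Z}}}^T{\varvec{\theta }}+\varepsilon , \tag{4}
\]

where \(m\le n\) is the truncation level that trades off approximation error against variability and typically diverges with \(n\). Replacing the \(\phi _j\) by \(\hat{\phi }_j\) for \(j=1,\ldots ,m\), model (4) can be rewritten as

\[
Y\approx {{\varvec{U}}}^T{\varvec{\gamma }}+{{\varvec{Z}}}^T{\varvec{\theta }}+\varepsilon ,
\]

where \({{\varvec{U}}}=\{\langle X,\hat{\phi }_j \rangle \}_{j=1,\ldots ,m}\) and \({\varvec{\gamma }}=(\gamma _1,\ldots ,\gamma _m)^T\). The quantile coefficient estimates of \({\varvec{\gamma }}\) and \({\varvec{\theta }}\) can be obtained by minimizing

\[
\sum _{i=1}^{n}\rho _\tau \left( Y_i-{{\varvec{U}}}_i^T{\varvec{\gamma }}-{{\varvec{Z}}}_i^T{\varvec{\theta }}\right) , \tag{5}
\]

where \(\rho _\tau (u) = u\left\{ \tau -I(u < 0)\right\} \) is the quantile loss function. The solution to (5) satisfies the following gradient condition: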

where \(\psi _\tau \) is the score function of \(\rho _\tau \).
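To make the quantile loss concrete, the following minimal sketch codes \(\rho _\tau \) as defined above and, as an assumption, takes the score to be \(\psi _\tau (u)=\tau -I(u<0)\), the usual derivative of \(\rho _\tau \) away from the origin. It then checks on a toy sample that the constant minimizing the summed loss is a sample quantile. All function names and the data are hypothetical.

```python
def rho(u, tau):
    """Quantile (check) loss: rho_tau(u) = u * (tau - 1{u < 0})."""
    return u * (tau - (1.0 if u < 0 else 0.0))

def psi(u, tau):
    """Assumed score: psi_tau(u) = tau - 1{u < 0}."""
    return tau - (1.0 if u < 0 else 0.0)

def total_loss(q, ys, tau):
    """Summed check loss of the residuals y - q for a constant fit q."""
    return sum(rho(y - q, tau) for y in ys)

def best_constant(ys, tau):
    """Minimize the summed loss over constants.

    The objective is convex and piecewise linear with kinks at the data
    points, so it suffices to search over the observed values.
    """
    return min(ys, key=lambda q: total_loss(q, ys, tau))

ys = [1.0, 2.0, 3.0, 4.0, 10.0]          # toy data with one large value
median_fit = best_constant(ys, 0.5)      # 3.0, the sample median
lower_fit = best_constant(ys, 0.25)      # 2.0, a lower sample quantile
```

The large observation 10.0 does not move either fit, which illustrates the robustness of the quantile loss. At the minimizer the scores sum to a value close to zero rather than exactly zero, because the loss is not differentiable at the origin.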

4 Large sample properties

Before presenting the main asymptotic results, we first introduce some conditions required for our asymptotic properties. Throughout this paper, the constant \(C\) may change from line to line for convenience.

C1: \(E\Vert X\Vert ^4<C<\infty \).

C2: For each \(j\), \(E[U_j^4]\le C \lambda _j\). For the eigenvalues \(\lambda _j\) and Fourier coefficients \(\gamma _j\), we require that \(\lambda _j-\lambda _{j+1}\ge C^{-1}j^{-a-1}\) and \(|\gamma _j|\le Cj^{-b}\) for \(j>1\), \(a>1\) and \(b>a/2+1\).

C3: For the tuning parameter \(m\), we assume that \(m\sim n^{1/(a+2b)}\).

C4: \(E\Vert {{\varvec{Z}}}\Vert ^4<\infty \).

Conditions C1–C3 are standard in functional linear regression models (see Hall and Horowitz 2007; Shin 2009). Condition C4 is common in functional partially linear regression models (see Shin 2009).

One complicating issue for FPLQRM comes from the dependence between \({{\varvec{Z}}}\) and \(X\). Similar to Shin (2009), we let \({{\varvec{Z}}}={\varvec{\eta }}+\langle {{\varvec{g}}}, X \rangle \), where \({\varvec{\eta }}=(\eta _{1},\ldots , \eta _{p})\) is a zero-mean random vector and \({{\varvec{g}}}=(g_{1},\ldots , g_{p})\) with \(g_j \in L^2([0,1]), j=1,\ldots ,p.\)

C5: \(E[{\varvec{\eta }}] = 0\) and \( E[{{\varvec{\eta }}} {\varvec{\eta }}^T ]=\Sigma \). Furthermore, we need that \(\Sigma \) is a positive definite matrix.

Condition C5 controls the limiting behaviour of the variance of \(\hat{{\varvec{\theta }}}\). Speckman (1988), Moyeed and Diggle (1994), He et al. (2002) and Shin (2009) used a similar device for modeling the dependence between the parametric and nonparametric components.

The following Theorem 1 describes the rate of convergence of the functional slope parameter \(\beta \) and the asymptotic normality of constant slope parameters \({\varvec{\theta }}\).

Theorem 1

Under conditions C1–C5, we have

and

where \(\delta _n^2=n^{-(2b+1)/(a+2b)}\) and \(\varpi =\frac{\tau (1-\tau )}{f^2(0)}\), with \(f\) denoting the density function of the random error.
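As a worked illustration of the constant \(\varpi \), the sketch below evaluates \(\tau (1-\tau )/f^2(0)\) for two error laws. It assumes the random error has the form \(\varepsilon -F^{-1}(\tau )\), so that its density at zero equals the density of \(\varepsilon \) at its \(\tau \)th quantile. The function names are hypothetical.

```python
import math
from statistics import NormalDist

def varpi_normal(tau):
    """tau(1 - tau) / f(0)^2 when epsilon is standard normal."""
    q = NormalDist().inv_cdf(tau)          # tau-th quantile of epsilon
    f0 = NormalDist().pdf(q)               # density of epsilon - q at zero
    return tau * (1.0 - tau) / f0 ** 2

def varpi_cauchy(tau):
    """tau(1 - tau) / f(0)^2 when epsilon is standard Cauchy."""
    q = math.tan(math.pi * (tau - 0.5))    # Cauchy quantile function
    f0 = 1.0 / (math.pi * (1.0 + q * q))   # Cauchy density at q
    return tau * (1.0 - tau) / f0 ** 2

values = {tau: (varpi_normal(tau), varpi_cauchy(tau)) for tau in (0.25, 0.5, 0.75)}
```

At the median, the constant equals \(\pi /2\) for standard normal errors and \(\pi ^2/4\) for standard Cauchy errors, so it remains finite even when the error has no finite variance.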

5 Simulation studies

In this section, we investigate the finite sample performance of the proposed estimation method with Monte Carlo simulation studies. We consider two sample sizes, \(n=100\) and \(n=400\), and three quantile levels, \(\tau = 0.25\), \(0.5\) and \(0.75\).

The data are generated from the following quantile regression model

\[
Y=\int _0^1\beta (t)X(t)dt+\theta _1z_1+\theta _2z_2+\varepsilon (\tau ),
\]

where \(z_{1}\) follows the standard normal distribution, \(z_{2}\) follows a Bernoulli distribution with probability 0.5 of being 1, \(\theta _1=2\) and \(\theta _2=1\). The error is \(\varepsilon (\tau ) = \varepsilon -F^{-1}(\tau )\), with \(F\) being the CDF of \(\varepsilon \). Here, \(F^{-1}(\tau )\) is subtracted from \(\varepsilon \) to make the \(\tau \)th quantile of \(\varepsilon (\tau )\) zero for identifiability. For the functional linear component, we take the same form as Shin (2009), that is, the functional coefficient is \(\beta (t)=\sqrt{2} \sin (\pi t/2)+3 \sqrt{2} \sin (3 \pi t/2)\) and \(X(t)=\sum \nolimits _j \xi _j \phi _j(t)\), where the \(\xi _j\) are independent normal random variables with mean 0 and variance \(\lambda _j=((j-0.5)\pi )^{-2}\), and \(\phi _j(t)=\sqrt{2} \sin ((j-0.5)\pi t)\).
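The data-generating design described above can be sketched as follows. The additive form of the response, the truncation of the expansion of \(X\) at `J = 50` terms, the helper names and the restriction to normal and Cauchy errors are assumptions made for this illustration; the \(t(3)\) case is omitted because the Python standard library has no \(t\) quantile function.

```python
import math
import random
from statistics import NormalDist

def phi(j, t):
    """Basis function phi_j(t) = sqrt(2) sin((j - 0.5) pi t)."""
    return math.sqrt(2) * math.sin((j - 0.5) * math.pi * t)

def beta(t):
    """Functional coefficient; note that beta = phi_1 + 3 * phi_2."""
    return (math.sqrt(2) * math.sin(math.pi * t / 2)
            + 3 * math.sqrt(2) * math.sin(3 * math.pi * t / 2))

def inner(f, g, T=2000):
    """Midpoint-rule approximation of the L2 inner product on [0, 1]."""
    return sum(f((k + 0.5) / T) * g((k + 0.5) / T) for k in range(T)) / T

def simulate(n, tau, dist="normal", J=50, seed=0):
    """Draw n triples (scores of X_i, (z1, z2), y) at quantile level tau."""
    rng = random.Random(seed)
    if dist == "normal":
        q_tau = NormalDist().inv_cdf(tau)
    else:                                   # standard Cauchy
        q_tau = math.tan(math.pi * (tau - 0.5))
    data = []
    for _ in range(n):
        # xi_j ~ N(0, lambda_j) with lambda_j = ((j - 0.5) pi)^(-2)
        xi = [rng.gauss(0.0, 1.0 / ((j - 0.5) * math.pi)) for j in range(1, J + 1)]
        z1 = rng.gauss(0.0, 1.0)
        z2 = 1.0 if rng.random() < 0.5 else 0.0
        if dist == "normal":
            eps = rng.gauss(0.0, 1.0)
        else:
            eps = math.tan(math.pi * (rng.random() - 0.5))
        # By orthonormality, the integral of beta * X_i equals xi_1 + 3 * xi_2.
        y = xi[0] + 3.0 * xi[1] + 2.0 * z1 + 1.0 * z2 + (eps - q_tau)
        data.append((xi, (z1, z2), y))
    return data

sample = simulate(n=100, tau=0.5)
```

Because \(\beta \) lies in the span of the first two basis functions, the functional term reduces to a combination of two scores, which avoids numerical integration inside the sampling loop.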

We consider three cases for generating random error \(\varepsilon \).

Case 1. \(\varepsilon \) follows a standard normal distribution.

Case 2. \(\varepsilon \) follows a \(t(3)\) distribution. This yields a model with heavy-tailed errors.

Case 3. \(\varepsilon \) follows a standard Cauchy distribution. This yields a model in which the expectation of the response does not exist.

Throughout our numerical studies, we choose the number of eigenfunctions as the minimizer to the following Schwarz-type information criterion,

where \(p=2\), and \({\hat{\varvec{\theta }}}_{(m)}\) and \({\hat{\varvec{\gamma }}}_{(m)}\) are the \(\tau \)th quantile estimators obtained from minimizing (5) with \(m\) eigenfunctions; see He et al. (2002) and Wang et al. (2009) for a similar criterion for tuning parameter selection. Based on Condition C3, the optimal \(m\) should have the same order as \(n^{1/(a+2b)}\) with \(a>1\) and \(b>a/2+1\). Similar to Zhang and Liang (2011), who select an optimal knot number, we propose to choose the optimal truncation level \(m\) from a neighborhood of \(n^{1/5.5}\). In our simulation studies, we have used the range \([2N_r/3, 4N_r/3]\), where \(N_r =\text{ceiling}(n^{1/5.5})\) and the function ceiling\((\cdot )\) returns the smallest integer not less than its argument. Then the optimal truncation level, \(m_{opt}\), is the one which minimizes the SIC value. That is,

\[
m_{opt}=\arg \min _{m\in [2N_r/3,\,4N_r/3]}\text{SIC}(m).
\]

Based on 1,000 Monte Carlo replications, Table 1 summarizes the bias (Bias) and mean squared error (MSE) of the estimated \(\theta \) for the different sample sizes, quantile levels and error distributions. The bias is reasonably small in general. The results of this simulation study are consistent with the asymptotic theory derived in Sect. 4.
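The candidate set for the number of eigenfunctions used in these simulations, namely the integers in \([2N_r/3, 4N_r/3]\) with \(N_r\) the ceiling of \(n^{1/5.5}\), can be enumerated with a few lines of code. Rounding the lower endpoint up and the upper endpoint down is an assumption, since the text does not say how non-integer endpoints are handled; the function name is hypothetical.

```python
import math

def candidate_truncation_levels(n, exponent=1 / 5.5):
    """Integers m with 2*Nr/3 <= m <= 4*Nr/3, where Nr = ceil(n ** exponent)."""
    nr = math.ceil(n ** exponent)
    lo = -(-2 * nr // 3)        # ceiling of 2*Nr/3 in integer arithmetic
    hi = 4 * nr // 3            # floor of 4*Nr/3
    return list(range(max(lo, 1), hi + 1))

grid_100 = candidate_truncation_levels(100)
grid_400 = candidate_truncation_levels(400)
```

For both sample sizes considered here the ceiling of \(n^{1/5.5}\) is 3, so the search runs over \(m\in \{2,3,4\}\) and the criterion only has to be evaluated three times per replication.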

Figure 1 shows the boxplots for standard normal random errors with different sample sizes and quantile levels. For the Student t distribution with 3 degrees of freedom and the standard Cauchy error distribution, the figures are similar to those for the standard normal distribution, so we omit them to save space. The boxplots suggest that the proposed method is consistent.

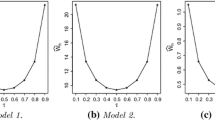

Figures 2, 3 and 4 demonstrate the performance of the curve estimation of the slope parameter \(\beta (\cdot )\) for different cases under different levels with \(n=100\) and show that the estimated curves are very close to the true curve \(\beta (\cdot )\). We may conclude that the proposed estimation of the function \(\beta (\cdot )\) performs reasonably well.

References

Aneiros-Pérez G, Vieu P (2006) Semi-functional partial linear regression. Stat Prob Lett 76:1102–1110

Cardot H, Crambes C, Sarda P (2005) Quantile regression when the covariates are functions. J Nonparametr Stat 17:841–856

Engle R, Granger C, Rice J, Weiss A (1986) Semiparametric estimates of the relation between weather and electricity sales. J Am Stat Assoc 81:310–320

Fan YQ, Li Q (1999) Root-n-consistent estimation of partially linear time series models. J Nonparametr Stat 11:251–269

Gao JT (1995) Asymptotic theory for partly linear models. Commun Stat Theory Methods 24:1985–2009

Hall P, Horowitz JL (2007) Methodology and convergence rates for functional linear regression. Ann Stat 35:70–91

Härdle W, Liang H, Gao JT (2000) Partially linear models. Physica Verlag, Heidelberg

He X, Liang H (2000) Quantile regression estimates for a class of linear and partially linear errors-in-variables models. Statistica Sinica 10:129–140

He X, Shi PD (1996) Bivariate tensor-product B-spline in a partly linear model. J Multivar Anal 58:162–181

He X, Zhu ZY, Fung WK (2002) Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika 89:579–590

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Koenker R, Bassett G (1978) Regression quantiles. Econometrica 46:33–51

Liu Q (2011) Asymptotic normality for the partially linear EV models with longitudinal data. Commun Stat Theory Methods 40:1149–1158

Mammen E, Geer S (1997) Penalized quasi-likelihood estimation in partial linear models. Ann Stat 25:1014–1035

Moyeed RA, Diggle PJ (1994) Rate of convergence in semiparametric modeling of longitudinal data. Aust J Stat 36:75–93

Ramsay J, Silverman B (2005) Functional data analysis, 2nd edn. Springer, New York

Shi P, Li G (1994) On the rate of convergence of minimum \(L_1\)-norm estimates in a partly linear model. Commun Stat Theory Methods 23:175–196

Shin H (2009) Partial functional linear regression. J Stat Plan Inference 139:3405–3418

Speckman P (1988) Kernel smoothing in partial linear models. J R Stat Soc Ser B 50:413–436

Stone CJ (1985) Additive regression and other nonparametric models. Ann Stat 13:689–705

Wang H, Zhu ZY, Zhou JH (2009) Quantile regression in partially linear varying coefficient models. Ann Stat 37:3841–3866

Zhang X, Liang H (2011) Focused information criterion and model averaging for generalized additive partial linear models. Ann Stat 39:174–200

Acknowledgments

The authors would like to thank the referees for their helpful comments, which led to an improvement of an earlier version of the manuscript.

Additional information

Jiang Du’s work is supported by the National Natural Science Foundation of China (No. 11271039, No. 11101015, No. 11261025), the specialised research fund for the doctoral program of higher education (No. 20091103120012) and the fund from the government of Beijing (No. 2011D005015000007). Sun’s research was supported by grants from the Foundation of Academic Discipline Program at Central University of Finance and Economics and National Statistics Research Projects in 2012.

Appendix

Let \({{\varvec{Z}}}=({{\varvec{Z}}}_1^T,\ldots , {{\varvec{Z}}}^T_n)^T\) and \({{\varvec{U}}}=({{\varvec{U}}}_1,\ldots , {{\varvec{U}}}_n)^T\) be the \(n\) by \(p\) design matrix for the parametric component and the \(n\) by \(m\) design matrix for the functional component, respectively. Also let \({{\varvec{P}}}= {{\varvec{U}}}({{\varvec{U}}}^T{{\varvec{U}}})^{-1}{{\varvec{U}}}^T, {{\varvec{Z}}}^*=({{\varvec{I}}}-{{\varvec{P}}}){{\varvec{Z}}}\), \({{\varvec{Z}}}^* =({{\varvec{Z}}}^*_1,\ldots ,{{\varvec{Z}}}^*_n)^T\), \({{\varvec{S}}}_n={{{\varvec{Z}}}^*}^T{{\varvec{Z}}}^*\).

Lemma 1

Under conditions C1–C5, one has

Proof

Let \({\varvec{\eta }}=({\varvec{\eta }}_1,\ldots ,{\varvec{\eta }}_n)^T\) and \({{\varvec{Z}}} = ({{\varvec{Z}}}- {\varvec{\Delta }}) +{\varvec{\Delta }}={\varvec{\eta }}+{\varvec{\Delta }}\), where \({\varvec{\Delta }}=(\langle {{\varvec{g}}}, X_1 \rangle ,\ldots ,\langle {{\varvec{g}}}, X_n \rangle )^T\) and \({{\varvec{g}}}=(g_1,\ldots ,g_p)^T\) is as introduced before Condition C5. We can write

where

Invoking the central limit theorem, one has

Invoking (7), to prove Lemma 1, it is enough to show that

By Condition C2, the fact \(\Vert \phi _{j}-\hat{\phi }_{j}\Vert ^2=O_p(n^{-1}j^2)\) and the Karhunen-Loève representation, one has

where \(\lambda _{lj}^{^{\prime }}=\langle \hat{\phi }_{j},g_{l}\rangle \) and \(\lambda _{lj}=\langle \phi _{j},g_{l}\rangle , l=1,\ldots ,p.\)

By Condition C3, there exists a matrix \({{\varvec{M}}}\) such that \(\Vert {\varvec{\Delta }}-{{\varvec{U}}}{{\varvec{M}}}\Vert ^{2}=O_p\left( n^{a_1/(a+2b)}\right) \), where \(a_1=\max (3, a+1)\). In addition, as \({{\varvec{P}}}\) is a projection matrix, we have

By Condition C4 and the strong law of large numbers, one has \(\frac{1}{n}{\varvec{\eta }}^T{\varvec{\eta }}\) converges almost surely to \(\Sigma \). For \(k\ne l\), one has

In addition, as \({{\varvec{P}}}\) is a projection matrix, this expression is \(O(m)\). Since \({{\varvec{P}}}\) is a positive semidefinite matrix, when \(k=l\),

Invoking (9) and (10), we have

Similarly, we have

Invoking (11) and (12) and Condition C2, we have

and

The proof is hence complete. \(\square \)

Lemma 2

Under conditions C1–C5, we have

where \({ {\varvec{\psi }}({\varvec{\varepsilon }})}=(\psi (\varepsilon _{1}),...,\psi (\varepsilon _{n}))^{T}.\)

Proof

Invoking \({{\varvec{Z}}}={\varvec{\eta }}+{\varvec{\Delta }},\) we have

By Lemma 1, (8) and (11), we have

Thus,

By condition C5 and the central limit theorem, one has

\(\square \)

Proof of Theorem 1

Let

where \({{\varvec{H}}}_{m}=m{{\varvec{U}}}^{T}{{\varvec{U}}}\). Let \(\hat{{\varvec{\xi }}}={\varvec{\xi }}(\hat{{\varvec{\theta }}},\hat{{\varvec{\gamma }}})=(\hat{{\varvec{\xi }}}_{1}^{T},\hat{{\varvec{\xi }}}_{2}^{T})^{T}.\) Now, we show that \(\Vert \hat{{\varvec{\xi }}}\Vert =O_{p}(\delta _{n}).\) To do so, let \(\tilde{{{\varvec{Z}}}}_{i}=\frac{1}{f(0)}{{\varvec{S}}}_{n}^{-\frac{1}{2}}{{\varvec{Z}}}_{i},\) \(\tilde{{{\varvec{U}}}}_{i}={{\varvec{H}}}_{m}^{-1}{{\varvec{U}}}_{i}\) and \(R_{i}=\sum _{j=1}^{m}\langle x_{i},\hat{\phi }_{j}\rangle \gamma _{j0}-\int \nolimits _{0}^{1}\beta (t)x_{i}(t)dt\).

Since \(\Vert \phi _{j}-\hat{\phi }_{j}\Vert ^2=O_p(n^{-1}j^2)\), one has

Thus, one has

which is minimized at \(\hat{{\varvec{\xi }}}\).

By arguments similar to those of Lemma 1 of Cardot et al. (2005), for any \(\kappa >0\), there exists \(L_{\kappa }\) such that

On the other hand, we have

Thus, we have

Then connecting this with Eq. (13), we obtain

Thus, \(\Vert \hat{{\varvec{\xi }}}\Vert =O_{p}(\delta _{n}).\) Combining this with Lemma 1 and the definition of \(\hat{{\varvec{\xi }}}\), one has

Note that

Now we consider \(K_{n1}\). By the fact that the sequence \(\{\hat{\phi }_j\}\) forms an orthonormal basis in \(L^2([0, 1])\), one has

By Lemma 1 of Stone (1985), it is easy to show that \({{\varvec{H}}}_{m}\) is positive definite for sufficiently large \(n\). Therefore, one has

As a result, we have \( K_{n1}=O_{p}\left( \delta _{n}^{2}\right) \).

Therefore, one has

Next we show the asymptotic normality of \(\hat{{\varvec{\theta }}}\). Let \({\varvec{\xi }}_{1}^{*}=\frac{1}{f(0)}{{\varvec{S}}}_{n}^{-\frac{1}{2}}\sum _{i=1}^{n}{{\varvec{Z}}}_{i}^{*}\psi _{\tau }(\varepsilon _{i})\). According to Lemmas 1 and 2, \({\varvec{\xi }}_{1}^{*}\) is asymptotically normal with variance-covariance matrix \(\frac{\tau (1-\tau )}{f^{2}(0)}I_{p}\).

On the other hand, similar to He and Shi (1996), we can prove that \(\Vert \hat{{\varvec{\xi }}}_{1}^{*}-\hat{{\varvec{\xi }}}_{1}\Vert =o_{p}(1).\) Thus,

Obviously,

This completes the proof of Theorem 1.

Lu, Y., Du, J. & Sun, Z. Functional partially linear quantile regression model. Metrika 77, 317–332 (2014). https://doi.org/10.1007/s00184-013-0439-7

DOI: https://doi.org/10.1007/s00184-013-0439-7