Abstract

This paper considers the problem of estimating a nonparametric stochastic frontier model with shape restrictions and when some or all regressors are endogenous. We discuss three estimation strategies based on constructing a likelihood with unknown components. One approach is a three-step constrained semiparametric limited information maximum likelihood, where the first two steps provide local polynomial estimators of the reduced form and frontier equation. This approach imposes the shape restrictions on the frontier equation explicitly. As an alternative, we consider a local limited information maximum likelihood, where we replace the constrained estimation from the first approach with a kernel-based method. This means the shape constraints are satisfied locally by construction. Finally, we consider a smooth-coefficient stochastic frontier model, for which we propose a two-step estimation procedure based on local GMM and MLE. Our Monte Carlo simulations demonstrate attractive finite sample properties of all the proposed estimators. An empirical application to the US banking sector illustrates empirical relevance of these methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In most applications in stochastic frontier model, researchers typically assume that the functional form of the frontier takes a specific parametric form (e.g., Cobb–Douglas, translog, constant elasticity of substitution, etc.) for the data they want to analyze. However, economic theories rarely predict specific functional form for the frontier, and consequently, misspecification of the functional form can lead to biased and inconsistent parameter estimators as well as to misleading inference on inefficiencies. In such cases, researchers may wish to adopt a semi- or nonparametric specification.Footnote 1

Nonparametric stochastic frontier models have been previously considered by Fan et al. (1996), Martins-Filho and Yao (2007), Kumbhakar et al. (2007), among others. However, to the best of our knowledge, all of the above papers assumed that the regressors are exogenous. When some or all of the regressors are endogenous, their proposed estimation approaches are no longer valid and need to be modified. An issue that arises from nonparametric estimation and that is specific to stochastic frontier models is that economic theory leads to shape restrictions such as monotonicity, concavity, symmetry and linear homogeneity of the estimated function. Thus, it is important to incorporate these restrictions in nonparametric estimation.

In the context of parametric stochastic frontier models, the problem of endogeneity has been addressed by Kutlu (2010), Tran and Tsionas (2013, (2015), Griffiths and Hajargasht (2016), Karakaplan and Kutlu (2015), Amsler et al. (2016, (2017); Kutlu and Tran (2019), providing more references. In the context of nonparametric regression estimation with shape restrictions and exogenous regressors, some recent works include Hall and Huang (2001), Henderson and Parmeter (2009), Henderson et al. (2012), Du et al. (2013), Sun (2015) and Malikov et al. (2016a), just to name a few. The only paper that we are aware of that allows for endogenous regressors in a nonparametric setting is Freyberger and Horowitz (2015) where they propose monotone restrictions to identify an unknown regression with endogenous discrete covariates under the assumption that the discrete instruments have fewer mass points than covariates.

In this paper, we address the problem of endogeneity of regressors and shape restrictions in estimation of nonparametric stochastic frontier models. We propose several estimation procedures. Our first approach is to extend the nonparametric simultaneous equation models of Su and Ullah (2008) to a stochastic frontier framework where we impose shape restrictions using the method of Du et al. (2013). We propose a three-step estimation algorithm which provides a consistent estimator of the frontier and error variances and can be used to obtain inefficiency predictions. Our second approach is to use a local specification of a parametric frontier in the spirit of Gozalo and Linton (2000) so that the shape restrictions are satisfied locally, and we construct a similar three-step estimation procedure as in the first approach. Our Monte Carlo results show that the two approaches perform equally well in finite samples.

Additionally, we extend the smooth-coefficient stochastic frontier models of Sun and Kumbhakar (2013) to allow for endogenous regressors and develop a two-step estimation procedure for these models. Our approach here is different from the recent work by Kumbhakar et al. (2016) where they consider the estimation of a smooth-coefficient stochastic frontier production system.

The rest of the paper is organized as follows: Section 2 presents the nonparametric stochastic frontier model with endogenous regressors and provides detailed discussion of the two estimation approaches. A smooth-coefficient stochastic frontier model with endogenous regressors and the two-step estimation algorithm are presented in Sect. 3. Section 4 presents the Monte Carlo simulations to assess the performance of the proposed estimators discussed in Sects. 2 and 3. An empirical application from the US banking sector is given in Sect. 5. Section 6 concludes.

2 Nonparametric stochastic frontier model

First, we consider a nonparametric stochastic frontier model (SFM) with endogenous regressors in the simultaneous equation framework of the form:

where \(Y_{i}\) is an observable scalar random variable representing output of firm i, \(g(\cdot )\) denotes the true, unknown structural frontier, \(X_{i}\) is \(d\times 1\) vector of inputs that are endogenous, \(Z_{1i}\) and \(Z_{2i}\) are \(l_{1}\times 1\) and \(l_{2}\times 1\) (where \(l_{2}\ge d)\) vectors of exogenous inputs and instrumental variables, respectively, \(h(\cdot )=(h_{1}(\cdot ),\ldots ,h_{d}(\cdot ))^{'}\) is a \(d\times 1\) vector of functions of the instruments \(Z_{i}\), \(v_{i}\) is a two-sided symmetric random noise, and \(u_{i}\) is a one-sided error representing technical inefficiency of firm i. We are interested in estimating \(g(\cdot )\) consistently so that it satisfies the requisite axioms of production (or cost), and in using this estimate to predict the firm-specific inefficiency levels.

In this paper, we assume that \(u_{i}\) is independent of \(\xi _{i}=(v_{i},\eta _{i})^{'}\) and that \(E(\varepsilon _{i}\vert Z_{i},\eta _{i})=E(\varepsilon _{i}\vert \eta _{i})\), where \(\varepsilon _{i}=v_{i}-u_{i}\), so that \(\varepsilon _{i}\) is uncorrelated with \(Z_{i}\) given \(\eta _i\); we further assume that \(v_{i}\sim N(0,\sigma _{v}^{2})\), \(u_{i}\sim N^{+}(0,\sigma _{u}^{2})\) and, conditional on \(Z_{i}\), \(\xi _{i}\sim N(0,\Omega )\), where \(\Omega =\left( \begin{array}{cc} \sigma _{v}^{2} &{} \Omega _{v\eta }\\ \Omega _{\eta v} &{} \Omega _{\eta \eta } \end{array}\right) \). The assumption that the inefficiency term \(u_i\) is independent of the other error terms can be relaxed by introducing a copula function to model the joint distribution of \((u_i, \xi _i)\) similarly to Amsler et al. (2016). Other assumptions on the marginal distributions of \(u_i\) and \(\xi _i\), and/or allow for \(u_{i}\) to depend on a set of environmental variables can also be considered.Footnote 2 However, these extensions are beyond the scope of this paper and we leave them for future exploration.

Under these assumptions, the density of the endogenous variables conditional on the instruments can be decomposed as follows:

Following the derivations of Amsler et al. (2016), we have:

where \(\eta _{i}=X_{i}-h(Z_{i})\), \(\varepsilon _{i}=Y_{i}-g(X_{i},Z_{1i})\), \(\mu _{ci}=\Omega _{v\eta }\Omega _{\eta \eta }^{-1}\eta _{i}\), \(\lambda =\frac{\sigma _{u}}{\sigma _{c}}\), \(\sigma _{c}^{2}=\sigma _{v}^{2}-\Omega _{v\eta }\Omega _{\eta \eta }^{-1}\Omega _{v\eta }\), \(\sigma ^{2}=\sigma _{u}^{2}+\sigma _{c}^{2}\), \(\phi (\cdot )\) and \(\Phi (\cdot )\) are, respectively, the standard normal density and CDF. Then, the log-likelihood function is given by:

where

and

Unlike Amsler et al. (2016), this likelihood contains nonparametric terms.

2.1 Estimation: semiparametric LIML

Since the functions \(g(\cdot )\) and \(h(\cdot )\) are unknown, the log-likelihood function given in (4)–(6) is not feasible to maximize in practice. First, note that since u is independent of \(\eta \) and uncorrelated with Z, we have from (1):

where the second term on the right-hand side of the last equality in (7) comes from on our assumption of joint normality of v and \(\eta \), and where \(g^{*}(X,Z_{1})=g(X,Z_{1})-\mu _{u}\), with \(\mu _{u}=E(u)=\sqrt{\frac{2}{\pi }}\sigma _{u}\). Thus, from the law of iterated expectations, we have

and Eq. (8) resembles a standard partially linear regression model. In the spirit of Fan et al. (1996), if we can obtain consistent estimates of \(g^{*}(X,Z_{1})\), \(\Omega _{v\eta }\) and \(\Omega _{\eta \eta }\) then by replacing \(g(X,Z_{1})\) by \(g^{*}(X,Z_{1})+\mu _u\) and using the estimates of \(\Omega _{v\eta }\), \(\Omega _{\eta \eta }\) in (5), the remaining parameters can be obtained by maximizing (4). However, the frontier function \(g(X,Z_{1})\) must satisfy relevant shape restrictions such as monotonicity and concavity for it to be considered a production function. Consequently, these restrictions must be imposed in the estimation of \(g(X,Z_{1})\).

In the context of nonparametric simultaneous equation models, Su and Ullah (2008) discuss how to obtain a consistent estimator of the unrestricted function \(g^{*}(X,Z_{1})\) based on a three-step estimation procedure. Thus, one approach is to extend their estimation procedure to partially linear model that allows for resulting frontier to satisfy these restrictions. We call this approach constrained semiparametric limited information maximum likelihood (CSPLIML) method. Alternatively, we can follow Gozalo and Linton (2000) (see also McManus 1994) and approximate the function \(g(X,Z_{1})\) locally by a parametric production function that satisfied these restrictions. That is, \(g(X,Z_{1}) \simeq m(X,Z_{1},\alpha )\). A natural candidate for \(m(\cdot ,\cdot ,\alpha )\) would be the Cobb–Douglas production function, albeit other production functions such as constant elasticity of substitution (CES) or Leontief can also be used.Footnote 3 We call this approach local LIML (LLIML). We now provide a detailed discussion of the two approaches.

2.1.1 CSPLIML

To obtain the constrained estimator of \(g^{*}(X,Z_{1})\), let \(W=(X,Z_{1})\) and Q be a deterministic weighting function such that \(\int _{\mathfrak {R}^{d}}dQ(\xi )=1\), and q be the density of Q with respect the Lebesgue measure in \(\mathfrak {R}^{d}\). Then, the constrained three-step procedure can be constructed as follows.

Step 1 Obtain a consistent estimator of \(h(Z_{i})\) by local linear (or general local polynomial) smoothing of \(X_{i}=(X_{1i},\ldots ,X_{di})^{'}\) on \(Z_{i}\) with kernel \(K_{1}\) and bandwidth sequence \(b_{1}(n)\). Denote the estimates of \(h(Z_{i})\) by \({\hat{h}}(Z_{i})=({\hat{h}}_{1}(Z_{i}),\ldots ,{\hat{h}}_{d}(Z_{i}))^{'}\), and calculate the estimated residuals \({\hat{\eta }}_{i}=({\hat{\eta }}_{i1},\ldots ,{\hat{\eta }}_{id})^{'}\) where \({\hat{\eta }}_{ij}=X_{ij}-{\hat{h}}_{j}(Z_{i})\) for \(j=1,\ldots ,d\). In addition, compute the estimate of \(\Omega _{\eta \eta }\) by \({\hat{\Omega }}_{\eta \eta }=n^{-1}\sum \limits _{i=1}^{n}{\hat{\eta }}_{i}{\hat{\eta }}_{i}^{'}\), and let \({\hat{\eta }}^{*}_{i}={\hat{\Omega }}_{\eta \eta }^{-1}{\hat{\eta }}_{i}\). Note that this step is equivalent to maximizing the log-likelihood function (6).

Step 2 Obtain a consistent restricted estimator of \(m(W,{\hat{\eta }})=g^{*}(W)+\Omega _{v\eta }{\hat{\eta }}^{*}\) by constrained profile likelihood method (or more precisely, constrained profile least squares in the current context) with the corresponding kernel \(K_{2}\) and bandwidth sequence \(b_{2}(n)\). In order to describe the estimator in detail, we need more notation. Let \({\varvec{A}}\) be an \((n\times n)\) matrix with elements \(A_{ij}=A_{i}(W_{j})\), where \(A_{i}(w)\) is the kernel weight (e.g., for a local constant estimator, \(A_{i}(w)=K_{b_{2}}(W_{i}-w)/\sum _{i=1}^{n}K_{b_{2}}(W_{i}-w))\), and let \({\varvec{p}}=(p_{1},\ldots ,p_{n})\) and \({\varvec{p}}_{u}=(\frac{1}{n},\ldots ,\frac{1}{n})\). In the spirit of Du et al. (2013) and Parmeter and Racine (2013), the constrained kernel smoothing estimator is based on the solution \(({\hat{p}}_{1},\ldots ,{\hat{p}}_{n})\) of a standard quadratic programming problem in which the objective function \(D({\varvec{p}})=({\varvec{p}}-{\varvec{p}}_{u})^{'}({\varvec{p}}-{\varvec{p}}_{u})\) is minimized subject to the relevant monotonicity and concavity constraints, as initially proposed by Hall and Huang (2001) in a univariate, single-constraint setting. Specifically, let \(Y=(Y_{1},\ldots ,Y_{n})^{'}\), \(W=(W_{1}^{'},\ldots ,W_{n}^{'})^{'}\), \(W^{*}=({\varvec{I}}_{n}-{\varvec{A}})W\) and \(Y^{*}=({\varvec{I}}_{n}-{\varvec{A}})Y\). Further denote \({\hat{\Omega }}_{v\eta }=(W^{*'}W^{*})^{-1}W^{*'}Y^{*}\) and \({\tilde{Y}}_{i}=Y_{i}-{\hat{\Omega }}_{v\eta }{\hat{\eta }}_{i}^{*}\). Then, the monotonicity and concavity constraints we impose are of the form:

-

(i)

\(\sum _{i=1}^{n}p_{i}A_{i}(w){\tilde{Y}}_{i}-{\tilde{Y}}_{i}\ge 0\)

-

(ii)

\(\sum _{i=1}^{n}p_{i}A_{i}^{({\varvec{s}}_{1})}(w){\tilde{Y}}_{i}\ge 0\), for \({\varvec{s}}_{1} \in {\varvec{S}}_{1}\),

-

(iii)

\(\sum _{i=1}^{n}p_{i}A_{i}^{({\varvec{s}}_{2})}(w){\tilde{Y}}_{i}\le 0\) for \({\varvec{s}}_{2} \in {\varvec{S}}_{2}\),

where \({\varvec{S}}_{1}=\{(1,0,\ldots ,0),(0,1,\ldots ,0),\ldots ,(0,\ldots ,1)\}\); \({\varvec{S}}_{2}=\{(2,0,\ldots ,0), (0,2,\ldots ,0),\ldots ,(0,\ldots ,2)\}\), and \(A_{i}^{({\varvec{s}}_{r})}(w)\) is the rth order partial derivative of \(A_{i}(w)\) with respect to the j-th element of w, \(j=1,\ldots ,d+l_{1}\). Given the solution \(({\hat{p}}_{1},\ldots ,{\hat{p}}_{n})\), we can obtain the restricted estimator of \(g^{*}(w)\) as \({\hat{g}}^{*}(w)=\sum _{i=1}^{n}{\hat{p}}_{i}A_{i}(w){\tilde{Y}}_{i}\).

Step 3 Once the estimates \({\hat{g}}^{*}(X,Z_{1})\) are obtained, by replacing \(g(X,Z_{1})\) in (5) with \({\hat{g}}^{*}(X,Z_{1})+\mu _{u}\), the remaining variance parameters \((\sigma _{v}^{2},\sigma _{u}^{2})\) can be obtained by maximizing Eq. (5). Let \(({\hat{\sigma }}_{v}^{2},{\hat{\sigma }}_{u}^{2})\) denote the resulting estimates, the structural frontier \(g(X,Z_{1})\) can be estimated by \({\hat{g}}(X,Z_{1})={\hat{g}}^{*}(X,Z_{1})+{\hat{\mu }}_{u}\) where \({\hat{\mu }}_{u}={\hat{\sigma }}_{u}\sqrt{2/\pi }\).

The following remarks are worth noting:

Remark 1

As is the case with all nonparametric estimations, implementing the estimator \({\hat{g}}^{*}(\cdot )\) (and hence \({\hat{g}}(\cdot )\)) requires specification of a kernel function and a method of choosing the values for the two bandwidth parameters \(b_{1}(n)\) and \(b_{2}(n)\). Let \(p_1\) and \(p_2\) denote the order of the local polynomials in Steps 1 and 2, respectively. As Su and Ullah (2008) pointed out for the case \(p_{2}=1\), as long as Assumption A5 in their paper is satisfied, the bandwidth parameter \(b_{1}\) does not affect the asymptotic distribution of \({\hat{g}}(\cdot )\), while \(b_{2}\) does. They suggest a systematic method for choosing \(b_{2}\) and a rule of thumb for choosing \(b_{1}\). We follow these suggestions. For the kernel function, we use a product of second-order univariate kernels, and the rule of thumb for selecting \(b_{1}\) is given by \({\hat{b}}_{1}={\hat{b}}_{2}n^{-(\alpha _{2}-\alpha _{1})/(2\zeta )}\) where \(\alpha _{1}=\max \left\{ \frac{p_{2}+1}{p_{1}+1},\frac{p_{2}+3}{2(p_{1}+1)}\right\} \), \(\alpha _{2}=\frac{p_{2}+d+l_{1}-1}{l_{1}+l_{2}}\) , \(\zeta =2(p_{2}+1)+d+l_{1}\), and \({\hat{b}}_{2}\) is the optimal “plug-in” bandwidth which is given by \({\hat{b}}_{2}=\left\{ \frac{(\gamma _{02})^{d+l_{1}}(d+l_{1}){\tilde{c}}_{2}}{(\gamma _{21})^{2} {\tilde{c}}_{1}}\right\} ^{1/(4+d+l_{1})}\times n^{-1/(4+d+l_{1})}\), where \(\gamma _{ij}\)=\(\int _{\mathfrak {R}}t^{i}K_{2}(t)^{j}dt\), for \(i=0,2\) and \(j=1,2\); \({\tilde{c}}_{1}\) and \({\tilde{c}}_{2}\) are defined in equations (2.11) and (2.13) of Su and Ullah (2008).

Remark 2

Footnote 4 The estimation of \(E(Y|X, Z_1, \eta )\) requires an estimate of \(h(Z_i)\). However, it is possible in principle to use the information contained in the constraints on \(E(Y|X, Z_1, \eta )\) during the Step 1 estimation of \(h(Z_i)\). This may provide an improvement to the Step 1 estimator, but its asymptotics does not depend on these constraints and so any potential gains would be limited to finite samples. Also, for large n, the computation in Step 2 can be cumbersome and expensive due to the localization of the entire sample. To reduce this computation burden, Henderson and Parmeter (2015) show that by using a smaller (random) sample of, say, 20 to 30 percent of n, there is almost no loss in the estimation precision. We follow the suggestion of Henderson and Parmeter (2015) in our Monte Carlo simulations and empirical application below.

2.1.2 LLIML

In this section, we describe an alternative estimation procedure for \(m(W,{\hat{\eta }})\) in Step 2 of CSPLIML described above. In what follows, Step 1 and Step 3 are the same as in CSPLIML but Step 2 is different.

Now, instead of directly imposing the constraints in the estimation of \(g^{*}(W)\) in Step 2, we follow Gozalo and Linton (2000) and use an anchoring parametric specification in which all the theoretical restrictions are satisfied in a local approximation of \(g^{*}(W)\). In this paper, we use the Cobb–Douglas production function as the anchoring model. McManus (1994) showed that the nonparametric estimator based on the local version of the Cobb–Douglas specification is efficient among the class of all linear nonparametric estimators and nearly efficient among all nonparametric estimators. McManus (1994) provides a detailed discussion of this point and an empirical application.

We use the following approximation: \(g^{*}(W,{\varvec{\alpha }})\simeq \alpha _{0}W_{1}^{\alpha _{1}}\ldots W_{p}^{\alpha _{p}}\) or its log-linear version, \(g^{*}(W,{\varvec{\alpha }})\simeq \ln {\alpha }_{0}+\sum _{j=1}^{p} \alpha _{j}\ln {W}_{j}\), where \(p=(d+l_{1})\). Note that the Cobb–Douglas functional form explicitly imposes the restriction that \(g^{*}(0, {\varvec{\alpha }})=0\) and the monotonicity and concavity are automatically satisfied. Moreover, one can also impose a constant return to scale, if desired, by writing \(\alpha _{p}=1-\sum _{j=2}^{p-1} \alpha _{j}\).

The estimate of \(g^{*}(W,{\varvec{\alpha }})\) can be obtained by minimizing the following criterion:

where \(K_{H}(\zeta )=\det (H)^{-1}K(H^{-1}\zeta )\), with H being a \((d\times d)\) nonsingular bandwidth matrix, while \(K(\cdot )\) is a real value kernel function. The estimate of \(g^{*}(W)\) is \({\hat{g}}^{*}(w)=g^{*}(w,\hat{{\varvec{\alpha }}}(w))\). Note that for this estimator, the optimal bandwidth can be selected by cross-validation and the bandwidth for the first stage can be selected using the rule of thumb as discussed in Sect. 2.1.1.

2.2 Predicting inefficiency

Once the estimates of the frontier and the variances parameters are obtained, a popular predictor of \(u_{i}\) is \({\hat{u}}_{i}=E(u_{i}|\epsilon _{i})\) as suggested by Jondrow et al. (1982). Amsler et al. (2016) improve upon the predictor by pointing out that given the reduced form equation, a better predictor of \(u_{i}\) can be constructed based on the additional information contained in \(\eta _i\), namely \({\hat{u}}_{i}=E(u_{i}|\epsilon _{i},\eta _{i})\). That is, albeit \(\eta _{i}\) is independent of \(u_{i}\), it is correlated with \(v_{i}\) and hence informative about \(v_{i}\). Following Amsler et al. (2016), we estimate \({\hat{u}}_{i}\) as follows:

where \(\hat{{\tilde{\varepsilon }}}_{i}={\hat{\varepsilon }}_{i}-{\hat{\mu }}_{ci}\) and \({\hat{\sigma }}_{*}^{2}={\hat{\sigma }}_{u}^{2}{\hat{\sigma }}_{c}^{2}/{\hat{\sigma }}^{2}\). Equation (10) provides a better estimate than the original formulation, \(E({\hat{u}}_{i}|\epsilon _{i})\), due to the fact that \(\sigma _{c}^{2}<\sigma _{v}^{2}\). However, the main trade-off or disadvantage is that normality of errors in the reduced form equations is assumed to be correctly specified.

Simar et al. (2017) propose an ingenious alternative by estimating \(E(u_i|Z_{i}, X_i)\) using \({\hat{\sigma }}^2_u\), a nonparametric estimator of \(\sigma ^2_u\), which in their case is allowed to depend on \(Z_i\) and \(X_i\).Footnote 5 An attractive feature of this estimator is that it conditions on observed values of covariates, rather than on the unobserved errors. They obtain \({\hat{\sigma }}^2_u(Z_i, X_i)\) from local estimators of second and third-order (conditional) moments of \(v-u+E(u|X, Z)\), which is a zero-mean error. The higher-order moments of this error can be expressed in terms of (conditional) moments of u which are well known functions of \(\sigma ^2_u(Z_i, X_i)\) if u is half-normal.

This estimator does not require normality of v. Under the additional assumption that v is normal, Simar et al. (2017) mention the option of using a heteroskedastic version of Jondrow et al. (1982). In this case, the predictor of \(u_i\) becomes \(E(u_i|\varepsilon _i, Z_i X_i)\) and we compute it using the formula for \(E(u_i|\varepsilon _i)\) from Jondrow et al. (1982) after replacing \(\sigma _u^2\) with the local estimator \({\hat{\sigma }}_u^2(Z_i, X_i)\).

In a similar manner, we can further improve upon the estimators proposed by Simar et al. (2017) by considering a heteroskedastic version of Amsler et al. (2016). In this case, the predictor of \(u_i\) can be written as \(E(u_i|\varepsilon _i, \eta _i, Z_i X_i)\), where we use \({\hat{\sigma }}_u^2(Z_i, X_i)\) in place of \({\hat{\sigma }}_u^2\) in (11).

3 Semiparametric SFM: smooth coefficients frontier

In Sect. 2, we considered nonparametric specifications of the frontier which are quite flexible and robust to misspecification of the functional form. However, the main drawback is that when the number of regressors is large, the curse of dimensionality prohibits precise estimation in such models. In this section, we propose an alternative class of semiparametric frontiers that are still flexible and yet, more resistant to the curse of dimensionality.

Consider the following semiparametric smooth coefficients (SC) stochastic frontier model:

where \(Y_{i}\), \(X_{i}\), \(v_{i}\) and \(u_{i}\) are defined as in previous section; \(Z_{i}\) is a \(p\times 1\) vector of environmental factors, \(\alpha (Z_{i})\) is an intercept and \(\beta ({Z}_{i})\) is a \(d\times 1\) vector; both are assumed to be unknown but smooth functions of \(Z_{i}\). As before, we allow for some or all components in \(X_{i}\) to be correlated with \(v_{i}\) but assume that \(Z_{i}\) are uncorrelated with \(v_{i}\) and \(u_{i}\), and \(v_{i}\) and \(u_{i}\) are independent. Moreover, we assume that \(v_{i}\sim \hbox {i.i.d.}\, \,N(0,\sigma _{v}^{2})\), \(u_{i}\sim \hbox {i.i.d.}\,\,N^{+}(0,\sigma _{u}^{2}(Z_{i}))\), where \(\sigma _{u}(Z_{i})=\exp (\delta _{0}+Z_{i}^{'}\delta _{1})\). Model (11) is essentially an extension of the model considered by Sun and Kumbhakar (2013) by allowing for the endogeneity of \(X_{i}\).

It is important to note that in practice, the assignment of relevant variables \(X_{i}\) and \(Z_{i}\) is the practitioner’s prerogative, and it may be done on the basis of practical convenience or on the basis of economic theory. Nevertheless, our results do not impose any restrictions on the relationship between \(X_{i}\) and \(Z_{i}\). That is, \(X_{i}\) may or may not be different from or unrelated to \(Z_{i}\).

To estimate the unknown coefficient functions and the variance parameters, we suggest in the spirit of Sun and Kumbhakar (2013), a two-step estimation approach. We now provide details of this estimator.

Let \(\epsilon _{i}=v_{i}-(u_{i}-E(u_{i}|Z_{i}))=v_{i}-u_{i}^{*}\), where the definition of \(u_{i}^{*}\) is apparent, and under our assumption, \(E(u_{i}|Z_{i})=\sqrt{2/ \pi }\sigma _{u}(Z_{i})\). Then, (11) can be expressed as:

where \(\alpha ^{*}(Z_{i})=\alpha (Z_{i})-\sqrt{2/ \pi }\sigma _{u}(Z_{i})\) and \(E(\epsilon _{i})=0\). Define \(\theta (Z_{i})=\left[ \alpha ^{*}(Z_{i}),\beta (Z_{i})^{'}\right] ^{'}\) and \({\tilde{X}}_{i}=\left[ 1,X_{i}^{'}\right] ^{'}\), then (12) can be compactly written as:

Thus, Eq. (13) resembles the smooth-coefficient stochastic frontier model of Sun and Kumbhakar (2013). However, due to the endogeneity of \(X_{i}\), their approach cannot be directly used. To obtain consistent estimators of the unknown functions and parameters of the model, we suggest a two-step estimation procedure where in the first two steps, a local generalized method of moments (LGMM) is used to obtain consistent estimator for the unknown coefficient function \(\theta (Z_{i})\); while in the second step, the MLE is used to obtain the remaining unknown parameters. A detailed description of the two-step estimation procedure is given below.

Step 1: LGMM Estimation of \(\theta (Z_{i}\)).

Suppose there exists an \(1\times l\) (where \(l\ge d\)) vector of instruments \(W_{i}\) (including a constant) such that \(W_{i}\) are uncorrelated with \(\epsilon _{i}\) so that \(\mathrm {E}(W_{i}^{'}\epsilon _{i})=0\). Assume that the elements of \(\theta (Z_{i})\) are twice continuously differentiable in the neighborhood of z. Then, for \(Z_{i}\) in the neighborhood of z, we have the following locally kernel-weighted orthogonality conditions:

where \(K_{hi}(z)=\prod _{j=1}^{p}h_{j}^{-1}k((Z_{i}-z)/h_{j})\) is a product kernel function in which \(k(\cdot )\ge 0\) is a bounded univariate symmetric kernel satisfying the conditions \(\int k(\psi )d\psi =0\), \(\int \psi ^{2}k(\psi )d\psi =c_1>0\) and \(\int k^{2}(\psi )d\psi =c_2>0\), and \(h=(h_{1},\ldots ,h_{p})^{'}\) is a \((p\times 1)\) vector of bandwidths. Thus, Eq. (14) provides the moment restrictions to construct the following local GMM criterion function:

Minimizing (15) with respect to \(\theta (\cdot )\) yields the following local GMM estimator:

where \(\Psi _{h}(z)=K_{hi}(z)W_{i}W_{i}^{'}K_{hi}(z)\). Let \({\hat{\epsilon }}_{i}=Y_{i}-{\tilde{X}}_{i}{\hat{\theta }}(Z_{i})\) be the estimated residuals from (13), then the remaining parameters, namely \(\gamma =(\sigma _{v},\delta ^{'})^{'}\) where \(\delta =(\delta _{0},\delta _{1})^{'}\), can be estimated using the ML procedure below.

Step 2: ML Estimation of \(\gamma \). First, recall that \(\epsilon _{i}\) was defined as \(\epsilon _{i}=v_{i}-u_{i}^{*}=v_{i}-u_{i}+\sqrt{2/ \pi }\sigma _{u}(Z_{i})\), where \(\sigma _{u}(Z_{i})=\exp (\delta _{0}+Z_{i}^{'}\delta _{1})\), and hence can be re-expressed as:

where \({\tilde{u}}_{i}\sim N^{+}(0,1)\). Let \(\sigma _{i}=\sigma _{v}+\sigma _{u}(Z_{i})=\sigma _{v}+\exp (\delta _{0}+Z_{i}^{'} \delta _{1})\), and \(\lambda _{i}=\sigma _{u}(Z_{i})/\sigma _{v}\). Then, under our assumptions, the log-likelihood function associated with (17) is given by:

The MLE of \(\gamma \) is then given by:

where \(\Gamma \) is the parameter space of \(\gamma \) which is assumed to be compact. The estimators of \(\sigma _{u}(Z_{i})\), \(\lambda _{i}\) and \(\mathrm {E}(u_{i}|Z_{i})\) can be obtained as \({\hat{\sigma }}_{u}(Z_{i})=\exp ({\hat{\gamma }}_{0}+Z_{i}^{'}{\hat{\gamma }}_{1})\), \({\hat{\lambda }}_{i}={\hat{\sigma }}_{u}(Z_{i})/{\hat{\sigma }}_{v}\) and \(\hat{\mathrm {E}}(u_{i}|Z_{i})=\sqrt{2/ \pi }{\hat{\sigma }}_{u}(Z_{i})\), respectively. The estimator of \(\hat{\mathrm {E}}(u_{i}|Z_{i})\) is needed to identify the intercept term in (12).

We make the following remarks:

Remark 3

The model given in (13) is identical to the model considered by Tran and Tsionas (2009) and hence, the asymptotic properties of the LGMM estimator \({\hat{\theta }}(Z_{i})\) can be drawn directly from the results of Theorem 1 in their paper. As for the MLE estimator \({\hat{\gamma }}\), it can also be shown to be consistent and asymptotically normal.

Remark 4

It is clear from (13) that the variance structure of \(\epsilon =(\epsilon _{1},\ldots ,\epsilon _{n})^{'}\) is heteroskedastic since \(\hbox {Var}(\epsilon )=\Omega =\sigma _{v}^{2}I_{n}+\Sigma _{u}\) where \(\Sigma _{u}=\hbox {diag}\left( \sigma _{u}^{2}(Z_{1}),\ldots ,\sigma _{u}^{2}(Z_{n})\right) \). Thus, it is tempting to construct an improved (i.e., more efficient) LGMM estimator of \(\theta (Z_{i})\) by modifying the objective function in (15) to account for this variance structure. However, such a LGMM estimator can be problematic for the following two reasons.Footnote 6 First, as the literature has shown (see, e.g., Martins-Filho and Yao 2009; Su et al. 2013; Parmeter and Racine 2019), incorporating the variance-covariance structure in a nonparametric regression model is a delicate balance of bias and efficiency that does not always lead to improvements even in large samples. Second, as argued by Lin and Carroll (2000) for a typical random effects panel data models, when a standard kernel-based estimator is used in estimating the regression, it is often better to use the “working independence” approach by ignoring the correlation structure within a cluster. Thus, additional work on this topic will be fruitful and we leave it for future research.

We now turn to estimation of the marginal effects of inefficiency determinants and inefficiency predictions.

Recall that \(\mathrm {E}(u_{i}|Z_{i})=\mu _{u}(Z_{i})=\sqrt{2/\pi }\exp (\delta _{0}+Z_{i}^{'}\delta _{1})\); hence, the marginal effect of \(\mu _{u}(Z_{i})\) with respect to the \(j^{th}\) element of \(Z_{i}\) (which we will denote as \(z_{ij}\)) can be estimated as:

where \({\hat{\delta }}_{1j}\) is the MLE estimate from Step 2.

Next, given the estimates of the model’s parameters, we compute the efficiency, \({\hat{\xi }}_{i}\), using the following Jondrow et al. (1982) approach to predict \(u_{i}\) conditional on \(\epsilon _{i}\):

where \({\hat{\xi }}_{i}=exp(-{\hat{u}}_{i})\), \({\hat{\sigma }}_{ui}={\hat{\sigma }}_{u}(Z_{i})\), \({\hat{\phi }}_{i}=\phi \left( -\frac{{\hat{\lambda }}_{i}{\hat{\epsilon }}_{i}}{{\hat{\sigma }}_{i}}\right) \) and \({\hat{\Phi }}_{i}=\Phi \left( -\frac{{\hat{\lambda }}_{i}{\hat{\epsilon }}_{i}}{{\hat{\sigma }}_{i}}\right) \).

As alternatives, it is possible to use the estimators of Simar et al. (2017) and the other improved estimators discussed in Sect. 2.2 to obtain \({\hat{\delta }}_{j}\) and \({\hat{\xi }}_{i}\) but we do not pursue these alternatives here.

4 Monte Carlo simulations

4.1 Data generating processes (DGPs)

To examine the finite sample performance of the proposed estimators,

we conduct Monte Carlo experiments. We consider three data generating processes (DGPs):

a. Nonparametric Frontier:

where the errors \(v_{i}\) and \(\eta _{i}\) are generated as

Here, \(\rho =\{0.2, 0.5, 0.8\}\) indicate weak, medium and strong endogeneity, respectively. The one-sided error \(u_{i}\) is generated as \(u_{i}\sim \hbox {i.i.d.}\,N^{+}(0,\lambda ^{2}\sigma _{c}^{2})\), where \(\sigma _{c}^{2}=1-\rho ^{2}\) and we set \(\lambda =\{1.0,2.0\}\). The exogenous variable \(Z_{1i}\) and the instrument \(Z_{2i}\) are generated as i.i.d. each uniformly distributed on the interval [0,2]. It can be easily verified that the above three DGPs satisfy the monotonicity and concavity conditions.

b. Smooth Coefficient Frontier:

where \(\alpha (Z_{i})=\exp (Z_{i})\), \(\beta _{j}=\exp (a_{j}Z_{i})\) for \(j=1,2\) with \(a_{1}=0.5\) and \(a_{2}=0.75\). The term \(u_i\) is generated as \(u_{i} \sim N^{+}(0,\sigma _{u}^{2}(Z_{i}))\), where \(\sigma _{u}^{2}(Z_{i})=\exp (\delta Z_{i})\), and the values of \(\delta \) are implied by \(\lambda =\{1,2\}\). All the remaining variables are generated as in DGP 1. For each DGP, we consider two sample sizes, \(n=\{100, 200, 400, 800\}\), and the number of replications we run is 500.

For comparison purposes, we also include, along with our proposed estimators, the estimators from the following scenarios for DGP 1 and DGP 2: (1) a correct specification of the frontier with endogeneity which we denote as MLE-CE, and this is our benchmark; (2) a correct specification of the frontier but ignoring endogeneity which we call MLE-C; and (3) the Fan et al. (1996) semiparametric estimator that ignores endogeneity which we label as FLW.

4.2 Simulation results

To assess the performance of the proposed estimators, we report, for DGP 1 and DGP 2, the following measures: (i) the average mean squared errors (AMSE) of the estimated CSPLIML and LLIML estimators over the number of replications; (ii) the ratio of AMSE for each estimator, described at the end of previous subsection, relative to that of the proposed estimators over the realized values of X and \(Z_{1}\) and over the number of replications. The values of the ratios that are larger than 1 indicate superior AMSE performance of the proposed approaches; and (iii) the proportion of each constraint being violated. For DGP 3, we report only the average of MSE for the proposed two-step estimator over the number of replications.

For DGP 1 and 2, to obtain the estimator \({\hat{g}}\), we set \(p_{1}=3\) and \(p_{2}=1\), and we use a product of the second-order Epanechnikov kernels standardized to have unit variance:

The bandwidth sequences \((b_{1},b_{2})\) are chosen as previously described. We also conduct experiments with different bandwidth choices with \({\hat{b}}_{1}=c{\hat{b}}_{2}n^{-(\alpha _{2}-\alpha _{1})/(2\zeta )}\) where c is constant and \(c=\{0.5,0.75, 1.25, 1.5, 2.0\}\), and \({\hat{b}}_{2}\) is defined as in Remark 1. Our results indicated that the estimates are similar for various values of c, with the value of \(c=1\) providing the smallest AMSE. Consequently, we set \(c=1\) throughout the simulations.Footnote 7 For DGP 3, the bandwidths are selected based on leave-one-out cross-validation.

The simulation results for DGPs 1 and 2 (nonparametric frontier) are reported in Tables 1 and 2. Note that the row “Violation” contains two entries where the top entry shows the proportion of points violating the monotonicity (m) and the bottom entry shows the proportion of points violating the concavity constraints (c) when we use the proposed CSPLIML and LLIML methods.Footnote 8

First, we discuss the results from the nonparametric frontier. From Tables 1 and 2, we observe that the MSEs for the function \(g(\cdot )\) are very similar for both approaches, and the same is observed for the variance and correlation parameters. As the sample size doubles, the MSEs for \(g(\cdot )\) reduce and, for variance and correlation parameters, the MSEs decrease roughly to half indicating the root-n consistency. The last row of Tables 1 and 2 shows the proportion of violations of the constraints. We observe that the CSPLIML method has more points violating both constraints than the LLIML method, albeit the proportions of both constraints violations are relatively small.

Next, to illustrate the usefulness of nonparametric specification of the frontier when some regressors are endogenous, Tables 3 and 4 provide the comparison of our proposed model/approach to some of the parametric models/approaches discussed at the end of Sect. 4.1 for DGP 1 and 2 (with \(\lambda = 2\) and \(\rho = 0.5\)), respectively. Compared to the benchmark case, we see that our proposed estimators perform reasonably well in terms of MSEs, and as the sample size increases, our proposed semiparametric methods converge to the correctly specified parametric model with endogeneity for both DGPs considered (see MLE-CE column as n increases). In addition, as expected, our results also show that our proposed estimators outperform both the correctly specified parametric model but ignoring endogeneity (MLE-C), and the semiparametric model of Fan et al. (1996) that is also ignoring endogeneity (FLW). The main implication of our results is that ignoring endogeneity when it is present can have serious consequences for the estimation of productivity measures and efficiency.

For the smooth-coefficient frontier model, the results in Table 5 show the proposed two-step estimator is well behaved in finite samples in the sense that when the sample size increases, the MSE of the estimated coefficient functions reduces. In addition, when the sample sizes doubled, the MSE of the estimated variance parameters reduces to roughly half indicating \(\sqrt{n}\)-consistency is achieved for these estimated parameters.

5 Empirical illustration

5.1 Data and model

As an application, we use an input distance function of large US banks based on the same data as in Malikov et al. (2016b), from whom we borrow heavily in this discussion. The data on commercial banks come from Call Reports from the Federal Reserve Bank of Chicago for 2001:Q1–2010:Q4. We use a selected sub-sample of homogeneous large banks, namely those with total assets in excess of one billion dollars (in 2005 US dollars) in the first 3 years of observation. The sample is an unbalanced panel with 2397 bank–year observations for 285 banks.

We define the following desirable outputs of a bank’s production process: consumer loans (\(y_1\)), real estate loans (\(y_2\)), commercial and industrial loans (\(y_3\)) and securities (\(y_4\)). We also include off-balance-sheet income (\(y_5\)) as an additional output. The variable inputs are labor, i.e., the number of full-time equivalent employees (\(x_1\)), physical capital (\(x_2\)), purchased funds (\(x_3\)), interest-bearing transaction accounts (\(x_4\)) and non-transaction accounts (\(x_5\)).

Malikov et al. (2016b) also have input price information which we take into account here as instruments in \(Z_{2i}\) (which includes four log relative prices normalized by the price of \(x_1\)). Moreover, we follow Färe et al. (2005) and standardize all variables prior to the estimation by subtracting their respective sample means and dividing by their sample standard deviations.

We use the above data to estimate both the nonparametric frontier and the smooth-coefficient frontier models. However, our objective is not to determine and compare the performance of both models but merely to illustrate the usefulness of our proposed approaches. Moreover, it is important to note that since we are using panel data, whereas our proposed models are designed for cross-section data, and we need to make assumptions on the temporal behavior of inefficiency, \(u_{it}\) and noise, \(v_{it}\). For simplicity, we assume both \(u_{it}\) and \(v_{it}\) to be independent and identically distributed for nonparametric specification of the frontier model, and independently distributed for the smoothing coefficient frontier model. In addition, we assume no specific temporal behavior on inefficiency which implies a bank can be fully efficient in one year but not in others.

The first model we consider is the smooth-coefficient input distance function in which the translog functional form is used for the specification of the frontier. The translog distance function (IDF) has the form:

The variables in \(Z_{\mathrm{it}1}\) are a time trend and the log equity capital to account for possible size effects. On the other hand, \(Z_{\mathrm{it}2}\) includes \(Z_{\mathrm{it}1}\) plus the four log relative prices and non-performing loans in the previous period. We expect \(\beta _{m}(Z_{\mathrm{it}1})<0\) (\(m=K+1,\ldots ,K+M\)) and \(\beta _{k}(Z_{\mathrm{it}1})>0\) (\(k=1,\ldots ,K\)).

5.2 Empirical results

Our empirical results are summarized in Figs. 1, 2 and 3. In Fig. 1, we plot \(\beta _{j}\)s as a function of log equity (Z) which we normalize in the interval [0, 1]. In panel (a) we report the output-related coefficients and in panel (b) the input-related coefficients. As necessitated by the theory, the output-related coefficients are negative and the input-related coefficients are positive. Moreover, the input distance function should be linearly homogeneous with respect to inputs. Technical change is mostly negative except for values of equity near the median, where it also attains its maximum. As a function of equity, these coefficients show a variety of patterns which makes it necessary to use nonparametric estimation.

Next, we report the estimates of returns to scale (RTS) and the results are given in Fig. 2. The RTS can be estimated using the following equation:

The results in Fig. 2 indicate that large US banks exhibit, in general, decreasing returns to scale except for relatively small banks that are in, approximately, the 30-th percentile of the log-equity distribution. Returns to scale are lower at the median and are close to one for larger banks; see also the evidence in Restrepo-Tobón and Kumbhakar (2015).

Figure 3 reports the sampling distributions of the estimated technical efficiency (panel (a) and productivity measures such as technical change (panel (b)), efficiency change (panel (c)) and productivity growth (panel (d)) which is defined as the sum of technical change and efficiency change. The results in Fig. 3 show that both efficiency change and productivity growth are mainly positive, averaging about 2.4% and 2.7%, respectively, while technical change is close to zero on average and extends from roughly -1.8% to 1.6%. The estimated technical efficiency scores are ranging from 74% to 88% with an average of 83% indicating that for some banks, there is more room for improvement.

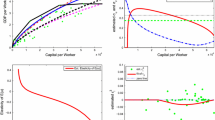

Next, we consider the estimation of nonparametric input distance frontier model where the frontier is left unspecified. For this model, we use both the local LIML and constrained LIML approaches discussed in Sects. 2.1.1 and 2.1.2. Moreover, for comparison purposes, we also estimate a parametric translog frontier as well as a nonparametric frontier model assuming all regressors are exogenous. These comparisons are particularly important as they highlight the impact of endogeneity. For simplicity and ease of presentation, we summarize the results in the form of sample distributions and these results are given in Fig. 4.

Panel (a) in Fig. 4 reports sample distributions of efficiency obtained using LLIML, CSPLIML, a nonparametric model without endogeneity and a parametric (translog) specification that also does not allow for endogeneity. Efficiency distributions are rather similar for LLIML and CSPLIML, and the same is true for the measures of technical change (panel (b)), efficiency change (panel (c)), and productivity growth (panel (d)). Technical change and efficiency change distributions are quite different when endogeneity is ignored. For example, according to local LIML and constrained LIML, technical change averages -0.5% and extends from roughly -1% to slightly over zero, whereas according to the translog and nonparametric model without endogeneity, it averages at 0.5% and spreads from -0.5% to nearly 1%. For efficiency changes, our new techniques give an average close to 1.5%, but the prediction of the models that do not account for endogeneity is that efficiency change averages -0.5% and ranges from roughly -1.5% to slightly over 0.5%. Therefore, there are important differences in terms of productivity growth as local LIML and constrained LIML imply an average value close to 1%, ranging from about zero to nearly 2%. The models that ignore endogeneity imply an average value close to zero, ranging from -1% to 1%, approximately.

The drastic impact of accounting for endogeneity on the average estimated productivity growth in Fig. 4 agrees with what was found in earlier studies. For example, Malikov et al. (2016b) report an average growth rate of 1.1% and a similar sensitivity of estimates to controlling for endogeneity of inputs. They obtain their results using a Bayesian estimation of a directional technology distance function and cost-minimization conditions on the same data set.

6 Concluding remarks

In this paper, we consider the problem of estimating a nonparametric stochastic frontier model with shape restrictions and when some or all regressors are endogenous. The endogeneity is caused by correlation between the symmetric error terms in the production function equation and in the reduced form.

First we propose two estimation procedures that share common features. Both are implemented in a three-step algorithm. Specifically, in the first procedure, we develop a three-step nonparametric constrained limited information maximum likelihood estimator. In the second procedure, we construct a local parametric limited information maximum likelihood estimator, where the constrained nonparametric estimation in the first procedure (Step 2) is replaced by a local parametric approximation.

Additionally, we consider estimation of semiparametric smooth-coefficient stochastic frontier models with endogenous regressors and propose a two-step procedure based on local (or nonparametric) GMM and MLE estimators.

Our Monte Carlo simulations demonstrate an attractive finite sample performance for all estimators proposed in this paper. An empirical application to US banks is presented to illustrate the usefulness of our proposed methods in practice.

Finally, the paper does not pursue several interesting extensions. For example, we do not consider further sources of endogeneity such as dependence between \(u_i\) and \(\xi _i\); we do not consider the problem of testing for parametric versus nonparametric specification of the frontier; nor do we consider the problem of testing constancy of parameters in the smooth-coefficient model. These problems merit a separate paper and are left for future work.

Notes

The term “nonparametric” used in this paper refers specifically to the assumptions on the functional form of the frontier. That is, no specific functional form of the frontier is assumed.

In the parametric stochastic frontier literature, the Cobb–Douglas specification has been used most frequently in practice.

We thank the Special Issue editors for bringing these points to our attention.

We thank an anonymous referee for pointing out this estimator to us.

We would like to thank an anonymous referee for pointing these out.

For conservation of space, we do not report these results here but they are available from the authors upon request.

Let \(x_{(1)} \le x_{(2)} \le \cdots \le x_{(n)}\) be rank ordered values. We say that the function \(g(\cdot )\) violates the monotonically increasing condition at x(i) if \(g(x_{(i)}) < g(x_{(i-1)})\). Moreover, we say that the function \(g(\cdot )\) violates the concavity condition at \(x_{i}\) if its Hessian matrix is positive definite.

References

Amsler C, Prokhorov A, Schmidt P (2014) Using copulas to model time dependence in stochastic frontier models. Econ Rev 33(5–6):497–522

Amsler C, Prokhorov A, Schmidt P (2016) Endogeneity in stochastic frontier models. J Econom 190:280–288

Amsler C, Prokhorov A, Schmidt P (2017) Endogenous environmental variables in stochastic frontier models. J Econom 199:131–140

Battese GE, Coelli TJ (1995) A model for technical inefficiency effects in a stochastic frontier production function for panel data. Empirical Econ 20:325–332

Caudill SB, Ford JM, Gropper DM (1995) Frontier estimation and firm-specific inefficiency measures in the presence of heteroscedasticity. J Bus Econ Stat 13(1):105–111

Du P, Parmeter CF, Racine JS (2013) Nonparametric kernal regression with multiple predictors and multiple shape constraints. Stat Sin 23:1347–1371

Fan Y, Li Q, Weersink A (1996) Semiparametric estimation of stochastic production frontier models. J Bus Econ Stat 14:460–68

Freyberger J, Horowitz JL (2015) Identification and shape restrictions in nonparametric instrumental variables estimation. J Econom 189:41–53

Färe R, Grosskopf S, Noh D-W, Weber W (2005) Characteristics of a polluting technology: theory and practice. J Econom 126:469–492

Gozalo P, Linton O (2000) Local nonlinear least squares: using parametric information in nonparametric regression. J Econom 99:63–106

Griffiths WE, Hajargasht G (2016) Some models for stochastic frontiers with endogeneity. J Econom 190:341–348

Hall P, Huang L-S (2001) Nonparametric kernel regression subject to monotonicity constraints. Ann Stat 624–647

Henderson DJ, List JA, Millimet DL, Parmeter CF, Price MK (2012) Empirical implementation of nonparametric first-price auction models. J Econom 168:17–28

Henderson DJ, Parmeter CF (2009) Imposing economic constraints in nonparametric regression: survey, implementation, and extension. Adv Econom 25:433–69

Henderson DJ, Parmeter CF (2015) Model averaging over nonparametric estimators. In: Essays in Honor of Aman Ullah, advances in econometrics, vol 36

Huang CJ, Liu J (1994) Estimation of a non-neutral stochastic frontier production function. J Prod Anal 5:171–180

Jondrow J, Lovell CK, Materov IS, Schmidt P (1982) On the estimation of technical inefficiency in the stochastic frontier production function model. J Econom 19:233–238

Karakaplan M, Kutlu L (2015) Handling endogeneity in stochastic frontier analysis. Available at SSRN 2607276

Kumbhakar SC, Ghosh S, McGuckin JT (1991) A generalized production frontier approach for estimating determinants of inefficiency in U.S. dairy farms. J Bus Econ Stat 9(3):279–286

Kumbhakar SC, Park BU, Simar L, Tsionas EG (2007) Nonparametric stochastic frontiers: a local maximum likelihood approach. J Econom 137:1–27

Kumbhakar SC, Sun K, Zhang R (2016) Semiparametric smooth coefficient estimation of a production system. Pac Econ Rev 21:464–482

Kutlu L (2010) Battese–Coelli estimator with endogenous regressors. Econ Lett 109:79–81

Kutlu L, Tran KC (2019) Heterogeneity and endogeneity in panel stochastic frontier models. In: Panel data econometrics. Elsevier, pp 131–146

Lin X, Carroll RJ (2000) Nonparametric function estimation for clustered data when the predictor is measured without/with error. J Am Stat Assoc 95:520–534

Malikov E, Kumbhakar SC, Sun Y (2016a) Varying coefficient panel data model in the presence of endogenous selectivity and fixed effects. J Econom 190:233–251

Malikov E, Kumbhakar SC, Tsionas MG (2016b) A cost system approach to the stochastic directional technology distance function with undesirable outputs: the case of US banks in 2001–2010. J Appl Econom 31:1407–1429

Martins-Filho C, Yao F (2007) Nonparametric frontier estimation via local linear regression. J Econom 141:283–319

Martins-Filho C, Yao F (2009) Nonparametric regression estimation with general parametric error covariance. J Multivar Anal 100:309–333

McManus DA (1994) Making the Cobb–Douglas functional form an efficient nonparametric estimator through localization

Parmeter C, Racine J (2019) Nonparametric estimation and inference for panel data models

Parmeter CF, Racine JS (2013) Smooth constrained frontier analysis. Springer, New York, pp 463–488

Restrepo-Tobón D, Kumbhakar SC (2015) Nonparametric estimation of returns to scale using input distance functions: an application to large US banks. Empir Econ 48:143–168

Simar L, Van Keilegom I, Zelenyuk V (2017) Nonparametric least squares methods for stochastic frontier models. J Prod Anal 47:189–204

Su L, Ullah A (2008) Local polynomial estimation of nonparametric simultaneous equations models. J Econom 144:193–218

Su L, Ullah A, Wang Y (2013) Nonparametric regression estimation with general parametric error covariance: a more efficient two-step estimator. Empir Econ 45:1009–1024

Sun K (2015) Constrained nonparametric estimation of input distance function. J Prod Anal 43:85–97

Sun K, Kumbhakar SC (2013) Semiparametric smooth-coefficient stochastic frontier model. Econ Lett 120:305–309

Tran KC, Tsionas EG (2009) Local GMM estimation of semiparametric panel data with smooth coefficient models. Econom Rev 29:39–61

Tran KC, Tsionas EG (2013) GMM estimation of stochastic frontier model with endogenous regressors. Econ Lett 118:233–236

Tran KC, Tsionas EG (2015) Endogeneity in stochastic frontier models: copula approach without external instruments. Econ Lett 133:85–88

Wang H (2002) Heteroscedasticity and non-monotonic efficiency effects of a stochastic frontier model. J Prod Anal 18:241–253

Acknowledgements

We would like to thank the Editors: Subal Kumbhakar, Christopher Parmeter and Amir Malikov, and two anonymous referees to helpful comments and suggestions that led to substantial improvement of the paper. All the remaining errors are our responsibilities. Artem Prokhorov’s research for this paper was supported by a grant from the Russian Science Foundation (Project No.20-18-00365).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Prokhorov, A., Tran, K.C. & Tsionas, M.G. Estimation of semi- and nonparametric stochastic frontier models with endogenous regressors. Empir Econ 60, 3043–3068 (2021). https://doi.org/10.1007/s00181-020-01941-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00181-020-01941-0

Keywords

- Constrained semiparametric limited information MLE

- Efficiency

- Endogeneity

- Local limited information MLE

- Smooth coefficient

- Stochastic frontier