Abstract

With the emergence of numerical sensors in many aspects of everyday life, there is an increasing need in analyzing multivariate functional data. This work focuses on the clustering of such functional data, in order to ease their modeling and understanding. To this end, a novel clustering technique for multivariate functional data is presented. This method is based on a functional latent mixture model which fits the data into group-specific functional subspaces through a multivariate functional principal component analysis. A family of parsimonious models is obtained by constraining model parameters within and between groups. An Expectation Maximization algorithm is proposed for model inference and the choice of hyper-parameters is addressed through model selection. Numerical experiments on simulated datasets highlight the good performance of the proposed methodology compared to existing works. This algorithm is then applied to the analysis of the pollution in French cities for 1 year.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The modern technologies ease the collection of high frequency data which is of interest to model and understand the studied phenomenon for further analyses. For example in sports, athletes wear devices that collect data during their training to improve their performances and follow their physical constants in order to prevent injuries. This kind of data can be classified as functional data: a quantitative entity evolving along time. For instance in the univariate case, a functional data X is represented by a single curve, \(X(t)\in \mathbb {R}\), \(\forall t \in [0,T]\).

With the growth of smart device market, more and more data are collected for the same individual, such as runner heartbeat and the altitude of his travel. An individual is then represented by several curves. The corresponding multivariate functional data can be written: \(\mathbf{X } = \mathbf{X }(t)_{t\in [0,T]}\) with \(\mathbf{X} (t)=(X^{1}(t),\ldots ,X^{p}(t))'\in \mathbb {R}^{p}, p\ge 2\). We refer to Ramsay and Silverman (2005) for univariate and bivariate examples.

Because of this amount of collected data, there is an increasing need for methods able to identify homogeneous subgroups of data, to make better individualized predictions for example. The clustering of functional data can be addressed with different methods, that can be split into 4 categories according to Jacques and Preda (2014a): the raw data methods that consists of clustering directly the curves on their finite set of points ; the filtering methods that need a first step of smoothing curves into a basis of functions and a second step of clustering the obtained expansion coefficients ; the adaptive methods where clustering and expression of the curves into a finite dimensional space are performed simultaneously ; and the distance-based methods where usual clustering algorithms are applied with specific distances for functional data. Among these categories, there exist numerous works for the clustering of univariate functional data as for instance (James and Sugar 2003; Tarpey and Kinateder 2003; Chiou and Li 2007; Bouveyron and Jacques 2011; Jacques and Preda 2013; Bouveyron et al. 2015; Bongiorno and Goia 2016).

Conversely, only a few exists for clustering multivariate functional data. Singhal and Seborg (2005) and Ieva et al. (2013) use a k-means algorithm based on specific distances between multivariate functional data. Kayano et al. (2010) consider Self-Organizing Maps based on the coefficients of multivariate curves into an orthonormalized Gaussian basis expansions. Tokushige et al. (2007) extend crisp and fuzzy k-means algorithms for multivariate functional data by considering a specific distance between functions. Those methods cluster data by considering that they lie in the same subspace. A new method has been recently published based on a hypothesis testing k-means (Zambom et al. 2019). At each step of the k-means algorithm, the curve belonging decision is based on the combination of two hypothesis test statistics. The performance of their algorithm is compared to distance-based methods and some dimension reduction based methods. Those dimension reduction techniques main principle is to obtain a low-dimensional representation of functions. For example, Ieva and Paganoni (2016) present a generalized functional linear regression model that cluster individuals in two categories. The first step consist of a multivariate functional principal component analysis applied on the variance-covariance matrix on the functional data and their first derivatives. Then, the obtained scores are used as covariates in a generalized linear model to predict the outcome. Yamamoto and Hwang (2017) propose a clustering method that combines a subspace separation technique with functional subspace clustering, named FGRC, that is less sensible to data variance than functional principal component k-means developed by Yamamoto (2012) and functional factorial k-means (Yamamoto and Terada 2014). Finally, Jacques and Preda (2014b) present a Gaussian model-based clustering method based on a principal component analysis for multivariate functional data (MFPCA). One of the benefits of this method is that the dependency between functional variables is managed thanks to the MFPCA. More recently, new methods based on a mix between dimension reduction and nonparametric approaches appear. Indeed, Traore et al. (2019) propose a clustering technique for nuclear safety experiment where one individual curve is decomposed into two new curves that are used in the decision making process. The first step consists in doing a dimension reduction technique on the first curves and applying a hierarchical clustering on those obtained values. Then, a semi-metric is build to compare the second curves, and the clusters are refining thanks to this comparison. But, even if this method is developped to deal with two curves for a same individual, at first the functional data are univariate.

In Jacques and Preda (2014b), MFPCA scores are considered as random variables whose probability distributions are cluster specific. Although this model is far more flexible than other methods due to its probabilistic modeling, it suffers nevertheless from some limitations. Indeed, using an approximation of the notion of density distribution for functional data, the authors modeled only a given proportion of principal components and thus a significant part of the available information is ignored. In this paper, we propose a model which extends Jacques and Preda (2014b) work by modeling all principal components whose estimated variance are non-null. All available information is therefore taken into account. This is a significant advantage because it will give a finner modeling and, consequently, a better clustering in most cases. Moreover, our model allows to use an Expectation Maximisation (EM) algorithm for its inference, with the theoretical guaranties it implies, whereas Jacques and Preda (2014b) use an heuristic pseudo-EM algorithm with no theoretical guaranties. The resulting model can be also viewed as an extension of Bouveyron and Jacques (2011) method to the multivariate case, that is why we will refer to it as the funHDDC model in the following.

The paper is organized as follows. A quick reminder of function data analysis is done in Sect. 2. Section 3 presents principal component analysis for multivariate functional data, as introduced in Jacques and Preda (2014b). Section 4 introduces the mixture model allowing the clustering of multivariate functional data. Section 5 discusses parameters estimation via an EM algorithm, proposes criteria for the selection of number of clusters and computational details. Comparisons between the proposed method and existing ones on simulated and real datasets are presented in Sects. 6 and 7. A discussion concludes the paper in Sect. 8.

2 Functional data analysis

Let us first assume that the observed curves \(\mathbf{X} _{1},\ldots ,\mathbf{X} _{n}\) are independent realizations of a \(L_2\)-continuous multivariate stochastic process \(\mathbf{X } = \{\mathbf{X }(t),t\in [0,T]\}\)\(=\{(X^1(t),\ldots ,X^p(t))\}_{t\in [0,T]}\) for which the sample paths, i.e. the observed curves \(\mathbf{X} _{i}=(X_{i}^{1},\ldots ,X_{i}^{p})\), belong to \(L_2[0,T]\). Without loss of generality, let assume that \(E(\varvec{X})=0\).

In practice, the functional expressions of the observed curves are not known and it is only possible to have access to discrete observations at a finite set of times \({X}_{i}^j(t_1),\ldots ,{X}_{i}^j(t_s)\) with \(0\le t_1\le \cdots ,\le t_s\le 1\) for every \(1\le i \le n\) and \(1\le j \le p\). The first task, when working with functional data, is therefore to convert these discretely observed values to a function \(X_i^j(t)\), computable for any desired argument value \(t\in [0,T]\). One way to do that is interpolation, which is used if the observed values are assumed to be errorless. However, if there is some noise that needs to be removed, a common way to reconstruct the functional form is to assume that the curves \(X_i^j(t)\) can be decomposed into a finite dimensional space, spanned by a basis of functions (Ramsay and Silverman 2005):

where \((\phi _{r}^{j}(t))_{1\le r\le R_{j}}\) is the basis of functions for the jth component of the multivariate curve and \(R_{j}\) the number of basis functions. In order to ease the description of the model, let us introduce the following notations. The coefficients \(c_{ir}^{j}\) can be gathered in the matrix \(\varvec{C}\):

Let also introduce the matrix \(\varvec{\phi (t)}\), gathering the basis functions:

With these notations, Eq. (1) can be rewritten as follows:

The estimation of \(\varvec{C}\) is usually done through least square smoothing (see Ramsay and Silverman 2005). The choice of the basis functions, contained in \(\varvec{\phi }\), has to be made by the user. There is no straight rules about how to choose the appropriate ones (Jacques and Preda 2014a). We can nevertheless recommend the use of a Fourier basis in the case of data with a repetitive pattern, and B-spline functions in most other cases.

3 Multivariate functional principal component analysis

Principal component analysis for multivariate functional data has already been suggested by various authors. Ramsay and Silverman (2005) propose to concatenate observations of the functions measured on a fine grid of points into a single vector and then to perform a standard principal component analysis (PCA) on these concatenated vectors. They also propose to express observations into a known basis of functions and apply PCA on the vector of concatenated coefficients. Both approaches may be problematic when the functions correspond to different observed phenomena. Moreover, the interpretation of multivariate scores for one individual is usually difficult. In Berrendero et al. (2011), the authors propose instead to summarize the curves with functional principal components. For this purpose, they carry out classical PCA for each value of the domain on which the functions are observed and suggest an interpolation method to build their principal functional components. In a different approach, Jacques and Preda (2014b) suggest the MFPCA method, with a normalization step if the units of measurement differ between functional variables. Their method relies on the multidimensional version of the Karhunen–Loève expansion (Saporta 1981). Chiou et al. (2014) also present a normalized multivariate functional principal component analysis which takes into account the differences in degrees of variability and units of measurement among the components of the multivariate random functions. As in Jacques and Preda (2014b), it leads to a single set of scores for each individual. Chen and Jiang (2016) present a multi-dimensional functional principal component analysis and ? a multivariate functional principal component analysis that both can handle data observed on more than one-dimensional domain. Happ and Greven (2015) method can be applied to sparse functional data and includes the MFPCA proposed by Jacques and Preda (2014b) when the interval is [0, T] and steady.

Because our data are collected on the one-dimensional interval [0,T] and with a regular sampling scheme, the MFPCA proposed by Jacques and Preda (2014b) is used in combination with a fine probabilistic modeling of the group-specific densities. The MFPCA method is therefore summarized hereafter. MFPCA aims at finding the eigenvalues and eigenfunctions that solve the spectral decomposition of the covariance operator \(\nu \):

with \(\lambda _{l}\) a set of positive eigenvalues and \(\varvec{f}_{l}\) the set of associated multivariate eigenfunctions. The estimator of the covariance operator can be written as:

Let suppose that each principal factor \(\varvec{f}_{l}\) belongs to the linear space spanned by the matrix \(\varvec{\phi }\):

with \(\varvec{b}_{l}=(b_{l11},\ldots ,b_{l1R_1},b_{l21},\ldots ,b_{l2R_{2}},\ldots ,b_{lp1},\ldots ,b_{lpR_{p}})\). Using Equation (4), the eigen problem (3) becomes:

where \(\varvec{W}=\int _{0}^{T}\varvec{\phi }'(t)\varvec{\phi }(t)\) is a \(R \times R\) matrix, where \(R=\sum _{j=1}^{p}R_{j}\), which contains the inner products between the basis functions. The MFPCA then reduces to eigendecomposition of the matrix \(\frac{1}{\sqrt{n-1}}\varvec{CW}^{1/2}\). Thus, each multivariate curve \(\varvec{X}_{i}\) is identified by its score \(\varvec{\delta }_{i}=(\delta _{il})_{l\ge 1}\) into the basis of multivariate eigenfunctions \((\varvec{f}_{l})_{l\ge 1}\). Scores are obtained from \(\delta _{il}= \varvec{C}_i\varvec{W}\varvec{b'}_l\) where \(\varvec{C}_i\) is the ith row of matrix \(\varvec{C}\).

In practice, due to the fact that each component \(X_{i}^{j}\) of \(\varvec{X}_{i}\) is approximated into a finite basis of functions of size \(R_{j}\), the maximum number of scores which can be computed is \(R=\sum _{j=1}^{p}R_{j}\).

4 A generative model for multivariate functional data clustering

Our goal is to group the observed multivariate curves \(\varvec{X}_{1},\ldots ,\varvec{X}_{n}\) into K homogeneous clusters. At this stage, K is fixed a priori and an estimation procedure for this parameter will be suggested in Sect. 5.3. Let \(Z_{ik}\) be the latent variable such that \(Z_{ik}=1\) if \(\varvec{X}_{i}\) belongs to cluster k and 0 otherwise. In order to ease the presentation of the modeling, let us assume at first that the values \(z_{ik}\) of \(Z_{ik}\) are known for all \(1\le i\le n\) and \(1\le k\le K\) (our goal is in practice to recover them from the data). Let \(n_{k}=\sum _{i=1}^{n}z_{ik}\) be the number of curves within cluster k.

Let suppose that the curves of each cluster can be described into a low-dimensional functional latent subspace specific to each cluster, with intrinsic dimensions \(d_{k}< R\), \(k=1,\ldots ,K\). Curves can be expressed into a group-specific basis \(\{\varphi _{r}^{k}\}_{1\le r\le d_{k}}\), which is determined thanks to the model, and is obtained from \(\{\phi _{r}^{j}\}_{1\le j\le p, 1\le r\le R}\) through a linear transformation:

where \(Q_{k}=(q_{kr\ell })_{1\le r,\ell \le R}\) is the orthogonal \(R\times R\) matrix containing the basis expansion coefficients of the eigenfunctions. \(Q_{k}\) is split for later use into two parts: \(Q_{k}=[U_{k},V_{k}]\) with \(U_{k}\) of size \(R\times d_{k}\) and \(V_{k}\) of size \(R\times (R-d_{k})\), \(U'_{k}U_{k}=I_{d_{k}}\), \(V'_{k}V_{k}=I_{R-d_{k}}\) and \(U'_{k}V_{k}=0\).

Let \((\varvec{\delta }_{i}^{k})_{1\le i \le n_{k}}\) be the MFPCA scores of the \(n_{k}\) curves of cluster k. These scores are assumed to follow a Gaussian distribution

with \(\varvec{\mu }_{k}\in \mathbb {R}^{R}\) the mean function and \(\varvec{\Delta }_{k}\) the covariance matrix with the following form:

The assumption on \(\varvec{\Delta }_{k}\) allows to finely model the variance of the first \(d_{k}\) principal components, while the remaining ones are considered as noise components and modeled by a unique parameter \(b_{k}\). This model will be referred to as \([a_{kj}b_{k}Q_{k}d_{k}]\) hereafter. The model of Jacques and Preda (2014b) is similar but with the constraint \(b_k=0\), for all \(k=1,\ldots ,K\). The latter leads to ignore information contained in the last eigenfunctions, whereas we propose to model it in a parsimonious way.

In addition, different sub-models can be defined depending on the constraints we apply on model parameters, within or between groups, leading to more parsimonious models. This possibility allows to fit into various situations. The following 5 sub-models can be derived from the most general one:

-

\([a_{k}b_{k}Q_{k}d_{k}]\) this model is used if the first \(d_{k}\) eigenvalues are assumed to be common within each group. In this case, there is only 2 eigenvalues in \(\varvec{\Delta }_{k}\), \(a_{k}\) and \(b_{k}\).

-

\([a_{kj}bQ_{k}d_{k}]\) the parameters \(b_{k}\) are fixed to be common between groups. It assumes that the variance outside the group-specific subspaces is common, a usual hypothesis when data are obtained in a common acquisition process.

-

\([a_{k}bQ_{k}d_{k}]\) the parameters \(a_{k}\) are fixed to be common within each group and \(b_{k}\) are fixed to be common between groups.

-

\([ab_{k}Q_{k}d_{k}]\) the parameters \(a_{kj}\) are fixed to be common between and within groups.

-

\([abQ_{k}d_{k}]\) the parameters \(a_{kj}\) and \(b_{k}\) are fixed to be common between and within groups.

In practice, the \(z_{ik}\)’s are not known and our goal is to predict them. That is why an EM algorithm is proposed below in order to estimate model parameters and then to predict the \(z_{ik}\)’s.

5 Model inference and choice of the number of clusters

5.1 Model inference through an EM algorithm

In model-based clustering, the estimation of model parameters is traditionally done by maximizing the likelihood through the EM algorithm (Dempster et al. 1977). The EM algorithm alternates between two steps: the expectation (E) and maximization (M) steps. The E step aims at computing the conditional expectation of the complete log-likelihood using the current estimate of parameters. Then, the M step computes parameter estimates maximizing the expected complete log-likelihood found in the E step.

This section presents the update formulae of the EM algorithm in the case of the \([a_{kj}b_{k}Q_{k}d_{k}]\) model. Update formulae can be easily derived in the same manner for other models. The following proposition provides the expression of the complete log-likelihood associated with the model described above. Proof of this result is provided in “Appendix A.1”.

Proposition 1

The complete log-likelihood of the observed curves under the \([a_{kj}b_{k}Q_{k}d_{k}]\) model can be written as:

where \(\theta =(\pi _k,\varvec{\mu }_k,a_{kj},b_k,q_{kj})_{kj}\) for \(1\le k\le K\) and \(1 \le j \le d_k\), \(q_{kj}\) is the jth column of \(Q_k\), \(C_{k}=\frac{1}{n_{k}}\sum _{i=1}^{n}Z_{ik}(c_{i}-\varvec{\mu }_{k})^{t}(c_{i}-\varvec{\mu }_{k})\) and \(c_i=(c_{ir}^1,\ldots ,c_{ir}^p)\) is a vector of coefficients.

As the group memberships \(Z_{ik}\) are unknown, the EM algorithm starts by computing their conditional expectation (E step) before maximizing the expected complete likelihood (M step).

E step This step aims at computing the conditional expectation of the complete log-likelihood and reduces to the computing of the conditional expectation \(E[Z_{ik}|c_{i},\theta ^{(q-1)}]\), which can be computed as follows.

Proposition 2

For the model \([a_{kj}b_{k}Q_{k}d_{k}]\), the posterior probability that each curve belongs to the kth cluster can be written:

where \(H_{k}^{(q-1)}(c)\) is the cost function defined for \(c \in \mathbb {R}^{R}\) as:

where \(||.||_{\mathcal {D}_{k}}^{2}\) is a norm on the latent space \(\mathbb {E}_{k}\) defined by \(||y||_{\mathcal {D}_{k}}^{2}=y^{t}\mathcal {D}_{k}y\), \(\mathcal {D}_{k}=\tilde{Q}\varvec{\Delta }_{k}^{-1}\tilde{Q}^{t}\) and \(\tilde{Q}\) is a matrix containing the \(d_{k}\) vectors of \(U_{k}\) completed by zeros such as \(\tilde{Q}=[U_{k},0_{R-d_{k}}]\), \(P_{k}\) is the projection operator on the functional latent space \(\mathbb {E}_{k}\) defined by \(P_{k}(c)=\varvec{W}U_{k}U_{k}^{t}\varvec{W}^{t}(c-\varvec{\mu }_k)+\varvec{\mu }_k\).

Proof of this result is provided in “Appendix A.2”.

M step This step estimates the model parameters by maximizing the expectation of the complete log-likelihood conditionally on the posterior probabilities \(t_{ik}^{(q)}\) computed in the previous step. The following proposition provides update formulae for mixture parameters. Proof of these results are provided in “Appendix A.3”.

Proposition 3

For the model \([a_{kj}b_{k}Q_{k}d_{k}]\), the maximization of the conditional expected complete log-likelihood leads to the following update:

-

\(\pi ^{(q)}_{k}=\frac{\eta _{k}^{(q)}}{n},\,\,\,\mu ^{(q)}_{k}=\frac{1}{\eta _{k}^{(q)}}\sum _{i=1}^{n}t_{ik}^{(q)}c_{i}\),

-

the \(d_{k}\) first columns of the orientation matrix \(Q_{k}\) are updated by the eigenfunctions coefficients associated with the largest eigenvalues of \(\varvec{W}^{1/2}C_{k}^{(q)}\varvec{W}^{1/2}\),

-

the variance parameters \(a_{kj}\), \(j=1,\ldots ,d_{k}\), are updated by the \(d_{k}\) largest eigenvalues of \(\varvec{W}^{1/2}C_{k}^{(q)}\varvec{W}^{1/2}\),

-

the variance parameters \(b_{k}\) are updated by \(b_{k}^{(q)}=\frac{1}{R-d_{k}}[tr(\varvec{W}^{1/2}C_{k}^{(q)}\varvec{W}^{1/2})-\sum _{j=1}^{d_{k}}\widehat{a}_{kj}^{(q)}]\).

where \(\eta _{k}^{(q)}=\sum _{i=1}^{n}t_{ik}^{(q)}\) and \(C_{k}^{(q)}=\frac{1}{\eta _{k}^{(q)}}\sum _{i=1}^{n}t_{ik}^{(q)}(c_{i}-\mu _{k}^{(q)})^{t}(c_{i}-\mu _{k}^{(q)})\) is the sample covariance matrix of group k.

To summarize, the algorithm introduced above, named hereafter funHDDC, clusters multivariate functional data through their projection into low dimensional subspaces. Those projections are obtained by performing a MFPCA per cluster thank to an iterative algorithm.

5.2 Estimation of intrinsic dimensions

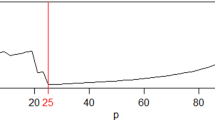

In order to choose the intrinsic dimensions \(d_{k}\) of each cluster, the Cattell’s scree-test (Cattell 1966) is used. This test looks for a drop in the eigenvalues scree. The selected dimension is the one for which the subsequent eigenvalues differences are smaller than a threshold provided by the user or selected using Bayesian information criterion, Akaike information criterion, integrated completed likelihood or slope heuristic (described below).

This estimation of the number of intrinsic dimensions is done within the M step of EM algorithm. It may allow the estimated intrinsic dimensions to vary along the iterations in order to fit well data.

5.3 Choice of the number of clusters

We now focus on the choice of the hyper-parameter K, the number of clusters. The choice of this hyper-parameter is here viewed as model selection problem. Classical model selection tools include the Akaike information criterion (AIC, Akaike 1974), the Bayesian information criterion (BIC, Schwarz 1978) and the integrated completed likelihood criterion (ICL, Biernacki et al. 2000). In the context of mixture model, BIC is certainly the most popular. The BIC criterion can be computed as follows:

with \(l(\hat{\theta })\) the maximum log-likelihood value, m the number of model parameters and n the number of individuals. The criterion penalizes the log-likelihood through model complexity. The model maximizing the criterion is chosen.

Another criterion, that has proved its usefulness, is the slope heuristic (SH, Birge and Massart 2007). This data-driven criterion penalty has a multiplicative factor provided by the linear part of the log-likelihood:

where s is the slope of the linear part of the maximum log-likelihood value \(l(\hat{\theta })\) when plotted against the model complexity. It has to be noticed that this method requires to test a large number of clusters number, or a large number of models, so that there is enough points in the log-likelihood versus model complexity plot (bottom left plot of Fig. 4) to detect a plateau in the log-likelihood.

For either of those criteria, different values for K need to be tested. Then, the one that maximizes the chosen criterion’s value is the best one that has to be kept.

5.4 Computational details

As explained in Sect. 5.1, funHDDC algorithm relies on an EM algorithm. The EM algorithm needs to be initialized, by setting initial values for the partitions. To this end, two initialization strategies are considered: random and kmeans initializations. In the case of random initialization, the partitions are randomly sampled using a multinomial distribution with uniform probabilities. The kmeans strategy consists in initializing the partitions with those obtained by a kmeans algorithm applied directly on the whole set of discretized observations. With kmeans initialization, the EM algorithm usually converges quicker than with random initialization. For both initialization, it is highly recommended, in order to prevent the convergence to a local maximum, to perform multiple initializations of the algorithm and keep the solution maximizing the log-likelihood. The number of initializations is a parameter of funHDDC algorithm that can be tuned by the user.

The funHDDC algorithm is stopped when the difference between two consecutive log-likelihood values is lower than a given threshold \(\epsilon \) or after a maximal number of iterations.

Running times for different sizes of datasets will be presented later, in Sect. 6.4.

6 Numerical experimentation on simulated data

This section presents numerical experiments to illustrate the behavior of the proposed methodology and confront it to competitors from the literature. Firstly, the quality of the model inference algorithm is illustrated on simulated data. Secondly, the sensitivity of the proposed approach to sample size is investigated in term of correct classification rate as well as in term of computational time. Thirdly, BIC and SH are compared for selecting the number of clusters. Finally, funHDDC is confronted to competitors on several datasets. The R code (R Core Team 2017) for our multivariate functional clustering algorithm is available on CRAN in the funHDDC package.

6.1 Simulation setup

In order to ease the reproducibility of the results, we consider 3 simulation scenarios designed as follows.

Scenario A For this first scenario, a sample of 1000 bivariate curves are simulated based on \([a_kb_kQ_kD_k]\) model. To do so, scores are simulated according to a Gaussian model with mean \(\mu \) and diagonal variance \(\Delta \). Curves coefficients can be rebuild based on \((\delta _{il})_{l\ge 1}=\varvec{CWb'}_l\) as shown in Sect. 2. The number of clusters is fixed to \(K=3\) and mixing proportions are equal. Scores are generated from a multivariate normal distribution with the following parameters:

where d is the intrinsic dimension of subgroups, \(\mu \) is the mean vector of size 70, a is the value of the d-first diagonal elements of \(\Delta \) and b the value of the (70-d)-last ones. Curves are smoothed using a basis of 35 Fourier functions (cf. top panel of Fig. 1).

Scenario B The second simulation setting is inspired by the data simulation process of Ferraty and Vieu (2003), Preda (2007) and Bouveyron et al. (2015), and therefore will not favor our approach in the comparison. For this simulation, the number of clusters is fixed to \(K=4\). A sample of 1000 bivariate curves is simulated according to the following model for \(t\in [1,21]\):

where \(U\sim \mathcal {U}(0,0.1)\) and \(\epsilon (t)\) is a white noise independent of U and such that \(\mathbb {V}ar(\epsilon (t))=0.25\). The functions \(h_1\) and \(h_2\) are defined, for \(t\in [1,21]\), by \(h_1(t)=(6-|t-7|)_+\) and \(h_2(t)=(6-|t-15|)_+\) where \((\cdot )_+\) means the positive part. The mixing proportions are equal, and the curves are observed in 101 equidistant points. The functional form of the data is reconstructed using a cubic B-spline basis smoothing with 25 basis functions (cf. middle panel of Fig. 1).

Scenario C For this third simulation scenario, the number of clusters is fixed to \(K=4\). A sample of 1000 bivariate curves is simulated according to the following model for \(t\in [1,21]\):

where \(U, \epsilon (t), h_1\) and \(h_2\) are defined as before. The mixing proportions are equal, and the curves are observed in 101 equidistant points. The functional form of the data is reconstructed using a cubic B-splines basis smoothing with 25 basis functions. As shown in Fig. 1 (bottom), the 4 groups cannot be distinguished with one variable only: indeed group 3 (green) is similar to group 1 (black) for variable \(X_1(t)\) and similarly group 4 (blue) is similar to group 1 (black) for variable \(X_2(t)\). Consequently, any univariate functional clustering methods applied either on variable \(X_1(t)\) or \(X_2(t)\) should fail.

For each scenario, the estimated partitions are compared to the true partition with the adjusted Rand index (ARI, Rand 1971). This criterion value is less than or equal to 1, with 1 representing a perfect agreement between the true partition and the one estimated by the algorithm, and 0 a random agreement. The algorithm settings used for all simulations are the following: the threshold of the Cattell’s scree-test for the selection of intrinsic dimensions \(d_k\) is fixed to 0.2 (the optimal threshold value should be chosen using BIC or slope heuristic), the stopping criterion for the EM algorithm is a growth of the log-likelihood lower than \(\epsilon =10^{-3}\) or a maximal number of iterations of 200, the initialization of the algorithm is done through a random partition in the introductory example, and with the kmeans strategy the model selection and benchmark experiments, in order to speed up the convergence.

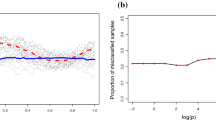

6.2 An introductory example

In order to illustrate the good behavior of the inference algorithm, we first consider a single simulation according to Scenario A, which is overall a difficult situation. The algorithm is run for \(K=3\) groups with the model \([a_k b_k Q_k D_k]\) which has been used to generate the data and the simulation setting is repeated 50 times. Figure 2 allows the comparison of the fitted values with the actual ones of model parameters. Parameter a turns out to be well estimated for all 3 clusters, whereas parameters b and d are only well estimated for 2 clusters out of 3. Indeed, this simulation scenario has one mixture component with a low signal-to-noise ratio which disturbs the estimation of \(d_k\) for this component, and therefore also perturb the estimation of the noise variance \(b_k\). Nevertheless, the fact that our model actually models the variance within the whole space (and not only a part as in Jacques and Preda 2014b), allows us to correctly recover the cluster partition even in difficult estimation conditions.

To assess the clustering quality, the funHDDC algorithm is now run for \(K=3\) groups with all 6 sub-models and the simulation setting is repeated 50 times. The quality of the estimated partitions is evaluated using the ARI and results are given in Table 1. As expected, the best result is obtained for the model \([a_k b_k Q_k D_k]\) which has been used to generate data and it shows that the algorithm correctly recovers the cluster pattern. It is worth noticing that the other models also have satisfying performances.

6.3 Sample size influence

In order to evaluate the sensitivity of the proposed approach to the sample size, we now consider 50 simulations according to Scenario B, and with different sample size: 1000, 500, 200 and 30. Table 2 presents the corresponding results. The impact of the sample size is not really significant between 1000 to 200. For very small sample size, 30 observations for 3 clusters, the quality of the partition estimation significantly decreases, but such small sample size are seldom used in practice for clustering studies.

6.4 Computational time and cost

When dealing with multivariate functional data, a big issue consists of scalability and computational effort in performing analyses. We will present here the impact of sample size and number of functional variables on running time.

Firstly, funHDDC algorithm is applied for \(K=4\) groups on scenario B bivariate functional data. Then, a second scenario with four functional variables is built based on scenario B with the two additional functional variables be cosinus and sinus functions. The computer used for the experiments has a Windows 10 operating system, Intel(R) Core(TM) i7-6700 CPU 3.40GHz processor and 8.00 Go of RAM memory. The associated running times, estimated with Sys.time R function, are shown in Table 3.

6.5 Model selection

In this section, the selection of the number of clusters is investigated. As previously mentioned, two criteria are used: BIC and the slope heuristic. Data are generated from Scenario A. This simulation setting has been repeated 50 times and the 6 sub-models have been estimated for a number of clusters from 2 to 10.

Figures 3 and 4 show for one simulation with the model \([a_{k} b_k Q_{k}D_{k}]\), the values of BIC and the slope heuristic in view of the number of clusters. For this simulation, both the slope heuristic and BIC succeed in selecting the right number of clusters. On Fig. 4, the left plot corresponds to the log-likelihood function with respect to the number of free model parameters. The red line is estimated using a robust linear regression and its slope coefficient is used to compute the penalized log-likelihood function shown on the right plot.

Table 4 summarized the results of the 50 simulations for the BIC and the slope heuristic. The BIC criterion has some difficulties to estimate the actual number of clusters K. Indeed, depending on the simulation, BIC selects between 2 and 3 clusters and succeed in 46% of simulations in the case of \([a_kb_kQ_kD_k]\) model. The slope heuristic is conversely more efficient to recover the actual number of groups, in about 66% of simulations in the case of \([a_kb_kQ_kD_k]\) model.

6.6 Benchmark with existing methods

In this section, the proposed clustering algorithm is compared to competitors of the literature: Funclust (from Funclustering package, Jacques and Preda 2014b), kmeans-d1 and kmeans-d2 (our own implementation of Ieva et al. 2013) and FGRC (provided at our request by the authors Yamamoto and Hwang 2017). All algorithms are applied for \(K=3\) groups for Scenario A and \(K=4\) groups for Scenario B and Scenario C. These methods are compared on the basis of the 3 simulation settings and according to the adjusted Rand index (ARI).

Table 5 presents clustering accuracies for the 10 tested models and the best funHDDC model selected at each iteration by slope heuristic or BIC. These scenarios seem to be hard situations since only funHDDC performs well for the 3 of them. Those good results for funHDDC are due to the fact that the MFPCA are carried out cluster per cluster. FGRC performed well for 2 out of 3 scenarios, it is the second best method behind funHDDC. Let also remark that both kmeans methods have a high variance. The SH does not perform as well as in the previous example. SH seems to be a good criterion to select the number of clusters, but, with a number of clusters fixed, the BIC seems to be a better criterion for model selection. One can also wonder if this counter performance of the SH is not linked to the small number of tested models.

7 Case study: analysis of pollution in French cities

This section focuses on the analysis of pollution data in French cities. The monitoring and the analysis of such data is of course important in the sense that they could help cities in designing their policy against pollution. As a reminder, pollution kills at least nine million people and costs trillions of dollars every year, according to the most comprehensive global analyses to date.Footnote 1

7.1 Data

This dataset deals with pollution in some French cities (available on 3 different websites).Footnote 2 It has been documented by Atmo France, a federation which monitor air quality in France. It gathered 5 categories of pollutants measured hourly since 1985. In this study we choose to work on Ozone value (\(\upmu \) g/m\(^3\)) and PM10 particles (\(\upmu \) g/m\(^3\)) measured in 84 France southern cities. The regions affected are Nouvelle Aquitaine, Auvergne Rhône Alpes and Provence Alpes Côte d’Azur (cf. Fig. 5). A period of 1 year from 1/01/2017 to 31/12/2017 is considered. The goal of this study is to characterize the daily evolution of these polluants. In order to do this, data are split into daily curves, and the clustering algorithm has been carried out on all the daily curves for all the cities. Doing this, geographical and temporal dependencies between the daily curves are ignored in this preliminary study. Finally, we remove from the analysis the daily curves which have more than 4 missing values or for which there is missing values at the beginning or at the end of the period. The number of bivariate curves to analyse is thus 25,658.

The functional form of the data is reconstructed using a cubic B-spline smoothing with 10 basis functions. As we can see in Fig. 6 (bottom), the presence of missing values does not disrupt smoothing. Data are collected through calibrated meteorological stations, we consider that data are obtained in a common acquisition process, then the noise is assumed to be the same for all stations. So, our algorithm has been applied with \([a_{kj}bQ_{k}D_{k}]\) model on smoothed data with a varying number of clusters, from 2 to 20. The BIC criteria is used to choose an appropriate number of clusters because there is here not enough models to use the slope heuristic criteria.

7.2 Results

According to BIC, the best partition for \([a_{kj}bQ_kD_k]\) model is with 6 clusters (cf. Table 6).

The main sources of variation of Ozone and particles PM10 overall mean curves can be studied thanks to the MFPCA performed for each subgroup. The solid curve on Figs. 7 and 8 represents the overall mean curve and the blue and red ones show the effect of adding (resp. subtracting) a multiple of the first eigenfunction and can be interpret as the first source of variation of the overall mean. According to this plot the first source is an amplitude variation for Ozone. For PM10 particles it is the peaks size that varies the most between groups.

Looking at the groups mean curves (cf. Fig. 7 for Ozone and Fig. 8 for PM10 particles) we can see that Ozone mean curves are more variable than PM10 particles ones. We can also see a common pattern between groups. For the PM10 particles, the mean curves of each group have a wavy shape with a first summit at night and a second at mid-afternoon. There is two main patterns in the O3 curves. During a day, the Ozone concentration has a tendency to decrease from midnight to midday and to increase until reaching a plateau between 5 p.m. and 8 p.m. for the first pattern. For the second one, the Ozone concentration is stable from midnight to 2 p.m. and increases until reaching a maximum at 8 p.m. But it is the level of concentration that varies the most from a group to another.

The first group is characterized by the lower concentration of Ozone along the day. This group gathers winter days (cf. Fig. 9) for cities mostly in urban area (cf. Table 7). Ozone is a product of photochemical reaction between various pollutants when there is a lot of sunlight. The low duration of sunshine during winter can explain those low values. Its Ozone mean curve is very close to the third group one, they differ from their PM10 particle concentration. Indeed, first group average curve is three times higher than third group one. It gathers days the most contaminated by particles PM10 (with the highest concentration along the day). For that matter, the European Union recommends that PM10 concentrations should not to be higher than 50 \(\upmu \) g/m\(^3\) in daily mean more than 35 days per year and in this group the mean value is above this threshold at any time. Whereas the third group gathers fall and winter days (cf. Fig. 9). The black group has the highest values of Ozone (cf. Fig. 7 group 5). Its maximum is reached between 5 p.m. and 10 p.m. This can be due to exhaust gas when people commute from their work to their home.

To conclude, the use of multiple variables to cluster cities allow the distinction between different pollution profiles. Those results enable local councils to have a look at the daily pollution of their towns along the year. Those results have especially highlighted critical days in particles PM10 pollution, that can lead to recommendations in order to try to lower these levels the next year. However we have to stay vigilant about the interpretation of those results. In fact, the measurement of contaminating elements are very localized, some sensors are located near companies and thus are not always representative of the pollution of the whole city in which they are located.

8 Discussion and conclusion

This work was motivated by the will to provide a new clustering method for multivariate functional data, called funHDDC, which takes into account the possibility that data live in subspaces of different dimensions. The method is based on a multivariate functional principal component analysis and a functional latent mixture model. Its efficiency has been demonstrated on simulated datasets and the proposed technique outperforms state-of-the-art methods for clustering multivariate functional data. Notice also that this new algorithm works in the univariate case as well and, therefore, generalizes the original funHDDC algorithm (Bouveyron and Jacques 2011). It is available on CRAN as the funHDDC package. The proposed methodology has been applied to analyze 1-year pollution records in 84 cities in France, with meaningful results.

Among possible further work, it could be interesting to consider wavelet smoothing instead of Fourier or B-spline smoothing. Indeed, wavelet smoothing may keep more information in the case of peaked data than the B-spline smoothing, which was used in this paper. However, the proposed model will have to be adapted to this smoothing because of the specific nature of wavelets. Similarly, further developments of the proposed approach could be investigated in order to take into account dependency between observations, following the large literature about dependent functional data. Finally, it would be interesting to study the question of clustering multivariate functional data in the presence of outliers. The presence of outliers may indeed bias the model inference if they are not correctly detected and managed. An extension of this work to the case of robust clustering for multivariate functional data may fellow the literature about robust clustering (Byers and Raftery 1998; Hennig and Coretto 2007; Gallegos and Ritter 2005; Basso et al. 2010; Gallegos and Ritter 2009 and, for a review, Bouveyron et al. 2019, Chapter 3).

References

Akaike H (1974) A new look at the statistical model identification. IEEE Tran Autom Control 9:716–723

Basso RM, Lachos VH, Cabral CRB, Ghosh P (2010) Robust mixture modeling based on scale mixtures of skew-normal distributions. Comput Stat Data Anal 54(12):2926–2941

Berrendero J, Justel A, Svarc M (2011) Principal components for multivariate functional data. Comput Stat Data Anal 55:2619–263

Biernacki C, Celeux G, Govaert G (2000) Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans PAMI 22:719–725

Birge L, Massart P (2007) Minimal penalties for Gaussian model selection. Probab Theory Relat Fields 138:33–73

Bongiorno EG, Goia A (2016) Classification methods for hilbert data based on surrogate density. Comput Stat Data Anal 99(C):204–222

Bouveyron C, Jacques J (2011) Model-based clustering of time series in group-specific functional subspaces. Adv Data Anal Classif 5(4):281–300

Bouveyron C, Come E, Jacques J (2015) The discriminative functional mixture model for the analysis of bike sharing systems. Ann Appl Stat 9(4):1726–1760

Bouveyron C, Celeux G, Murphy T, Raftery A (2019) Model-based clustering and classification for data science: with applications in R. Statistical and probabilistic mathematics. Cambridge University Press, Cambridge

Byers S, Raftery AE (1998) Nearest-neighbor clutter removal for estimating features in spatial point processes. J Am Stat Assoc 93(442):577–584

Cattell R (1966) The scree test for the number of factors. Multivar Behav Res 1(2):245–276

Chen L, Jiang C (2016) Multi-dimensional functional principal component analysis. Stat Comput 27:1181–1192

Chiou J, Chen Y, Yang Y (2014) Multivariate functional principal component analysis: a normalization approach. Stat Sin 24:1571–1596

Chiou JM, Li PL (2007) Functional clustering and identifying substructures of longitudinal data. J R Stat Soc Ser B Stat Methodol 69(4):679–699

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc 39(1):1–38

Ferraty F, Vieu P (2003) Curves discrimination: a nonparametric approach. Comput Stat Data Anal 44:161–173

Gallegos MT, Ritter G (2005) A robust method for cluster analysis. Ann Stat 33(1):347–380

Gallegos MT, Ritter G (2009) Trimming algorithms for clustering contaminated grouped data and their robustness. Adv Data Anal Classif 3:135–167

Hennig C, Coretto P (2007) The noise component in model-based cluster analysis. Springer, Berlin, pp 127–138

Ieva F, Paganoni AM (2016) Risk prediction for myocardial infarction via generalized functional regression models. Stat Methods Med Res 25:1648–1660

Ieva F, Paganoni A, Pigoli D, Vitelli V (2013) Multivariate functional clustering for the morphological analysis of ECG curves. J R Stat Soc Series C (Appl Stat) 62(3):401–418

Jacques J, Preda C (2013) Funclust: a curves clustering method using functional random variable density approximation. Neurocomputing 112:164–171

Jacques J, Preda C (2014a) Functional data clustering: a survey. Adv Data Anal Classif 8(3):231–255

Jacques J, Preda C (2014b) Model based clustering for multivariate functional data. Comput Stat Data Anal 71:92–106

James G, Sugar C (2003) Clustering for sparsely sampled functional data. J Am Stat Assoc 98(462):397–408

Kayano M, Dozono K, Konishi S (2010) Functional cluster analysis via orthonormalized Gaussian basis expansions and its application. J Classif 27:211–230

Petersen KB, Pedersen MS (2012) The matrix cookbook. http://www2.imm.dtu.dk/pubdb/p.php?3274, version 20121115

Preda C (2007) Regression models for functional data by reproducing kernel hilbert spaces methods. J Stat Plan Inference 137:829–840

R Core Team (2017) R: a language and environment for statistical computing. R foundation for statistical computing, Vienna, Austria, https://www.R-project.org/

Ramsay JO, Silverman BW (2005) Functional data analysis, 2nd edn. Springer series in statistics. Springer, New York

Rand WM (1971) Objective criteria for the evaluation of clustering methods. J Am Stat Assoc 66(336):846–850

Saporta G (1981) Méthodes exploratoires d’analyse de données temporelles. Cahiers du Bureau universitaire de recherche opérationnelle Série Recherche 37–38:7–194

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6(2):461–464

Singhal A, Seborg D (2005) Clustering multivariate time-series data. J Chemom 19:427–438

Tarpey T, Kinateder K (2003) Clustering functional data. J Classif 20(1):93–114

Tokushige S, Yadohisa H, Inada K (2007) Crisp and fuzzy k-means clustering algorithms for multivariate functional data. Comput Stat 22:1–16

Traore OI, Cristini P, Favretto-Cristini N, Pantera L, Vieu P, Viguier-Pla S (2019) Clustering acoustic emission signals by mixing two stages dimension reduction and nonparametric approaches. Comput Stat 34(2):631–652

Yamamoto M (2012) Clustering of functional data in a low-dimensional subspace. Adv Data Anal Classif 6:219–247

Yamamoto M, Terada Y (2014) Functional factorial k-means analysis. Comput Stat Data Anal 79:133–148

Yamamoto M, Hwang H (2017) Dimension-reduced clustering of functional data via subspace separation. J Classif 34:294–326

Zambom AZ, Collazos JA, Dias R (2019) Functional data clustering via hypothesis testing k-means. Comput Stat 34(2):527–549

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We would like to thank the LabCom ’CWD-VetLab’ for its financial support. The LabCom ’CWD-VetLab’ is financially supported by the Agence Nationale de la Recherche (Contract ANR 16-LCV2-0002-01).

Appendix: Proofs

Appendix: Proofs

1.1 Proof of Proposition 1

where \(z_{ki}{=}1\) if \(x_i\) belongs to the cluster k and \(z_{ki}=0\) otherwise. \(f(x_i,\theta _k)\) is a Gaussian density, with parameters \(\theta _k=\{\mu _k,\Sigma _k\}\). So the complete log-likelihood is written:

For the \([a_{kj}b_kQ_kd_k]\) model, we have:

Let \(n_k=\sum _{i=1}^nz_{ki}\) be the number of curves within cluster k, the complete log-likelihood is then written:

The quantity \((x_i-\mu _k)^tQ_k\Delta _k^{-1}Q_k^t(x_i-\mu _k)\) is a scalar, so it is equal to it trace:

Well \(tr([(x_i-\mu _k)^tQ_k]\times [\Delta _k^{-1}Q_k^t(x_i-\mu _k)])=tr([\Delta _k^{-1}Q_k^t(x_i-\mu _k)]\times [(x_i-\mu _k)^tQ_k])\), consequently:

where \(C_k=\frac{1}{n_k}\sum _{i=1}^{n}z_{ki}(x_i-\mu _k)^t(x_i-\mu _k)\) is the empirical covariance matrix of the kth element of the mixture model. The \(\Delta _k\) matrix is diagonal, so we can write:

where \(q_{kj}\) is jth column of \(Q_k\). Finally,

1.2 Proof of Proposition 2

But, \(\Sigma _k=Q_k\Delta _k Q_k^t\) and \(Q_k^tQ_k=I_R\), hence:

Let \(Q_k=\tilde{Q_k}+\bar{Q_k}\) where \(\tilde{Q_k}\) is the \(R\times R\) matrix containing the \(d_k\) first columns of \(Q_k\) completed by zeros and where \(\bar{Q_k}=Q_k-\tilde{Q_k}\). Notice that \(\tilde{Q_k}\Delta _k^{-1}\bar{Q_k^t}=\bar{Q_k}\Delta _k^{-1}\tilde{Q_k^t}=O_p\) where \(O_p\) is the null matrix. So,

Hence,

With definitions \(\tilde{Q_k}[\tilde{Q_k^t}\tilde{Q_k}]=\tilde{Q_k}\) and \(\bar{Q_k}[\bar{Q_k^t}\bar{Q_k}]=\bar{Q_k}\), we can rephrase \(H_k(x)\) as:

We define \(\mathcal {D}_k=\tilde{Q_k}\Delta _k^{-1}\tilde{Q_k^t}\) and the norm \(||.||_{\mathcal {D}_k}\) on \(\mathbb {E}_k\) such as \(||x||_{\mathcal {D}_k}=x^t\mathcal {D}_kx\). So, on one hand:

On the other hand:

Consequently,

Knowing \(P_k\), \(P_k^{\bot }\) and \(||\mu _k-P_k^{\bot }||^2=||x-P_k(x)||^2\), we have:

Moreover, \(log|\Sigma _k|=\sum _{j=1}^{d_k}log(a_{kj})+(R-d_k)log(b_k)\). Finally,

1.3 Proof of Proposition 3

Parameter\(Q_{k}\) We have to maximise the log-likelihood under the constraint \(q_{kj}^tq_{kj}=1\), which is equivalent to looking for a saddle point of the Lagrange function:

where \(\omega _{kj}\) are Lagrange multiplier. So we can write:

Therefore, the gradient of \(\mathcal {L}\) in relation to \(q_{kj}\) is:

As a reminder, when W is symmetric, then \(\frac{\partial }{\partial x}(x-s)^TW(x-s)=2W(x-s)\) and \(\frac{\partial }{\partial x}(x^Tx)=2x\) (cf. Petersen and Pedersen (2012)), so:

where \(\sigma _{kj}\) is the jth diagonal term of matrix \(\Delta _k\).

So,

\(q_{kj}\) is the eigenfunction of \(W^{1/2}C_kW^{1/2}\) which match the eigenvalue \(\lambda _{kj}=\frac{\omega _{kj}\sigma _{kj}}{\eta _k}=W^{1/2}C_kW^{1/2}\). We can write \(q_{kj}^tq_{kl}=0\) if \(j\ne l\). So the log-likelihood can be written:

we substitute the equation \(\sum _{j=d_k+1}^R\lambda _{kj}=tr(W^{1/2}C_kW^{1/2})-\sum _{j=1}^{d_k}\lambda _{kj}\):

In order to minimize \(-2l(\theta )\) compared to \(q_{kj}\), we minimize the quantity \(\sum _{k=1}^K\eta _k\sum _{j=1}^{d_k}\lambda _{kj}(\frac{1}{a_{kj}}-\frac{1}{b_k})\) compared to \(\lambda _{kj}\). Knowing that \((\frac{1}{a_{kj}}-\frac{1}{b_k})\le 0, \forall j=1,\ldots ,d_k,\)\(\lambda _{kj}\) has to be as high as feasible. So, the jth column \(q_{kj}\) of matrix Q is estimated by the eigenfunction associated to the jth highest eigenvalue of \(W^{1/2}C_kW^{1/2}\).

Parameter\(a_{kj}\) As a reminder \((ln(x))'=\frac{x'}{x}\) and \((\frac{1}{x})'=-\frac{1}{x^2}\). The partial derivative of \(l(\theta )\) in relation to \(a_{kj}\) is:

The condition \(\frac{\partial l(\theta )}{\partial a_{kj}}=0\) is equivalent to:

Parameter\(b_k\) The partial derivative of \(l(\theta )\) in relation to \(b_{k}\) is:

But,

so:

The condition \(\frac{\partial l(\theta )}{\partial b_{k}}=0\) is equivalent to:

Rights and permissions

About this article

Cite this article

Schmutz, A., Jacques, J., Bouveyron, C. et al. Clustering multivariate functional data in group-specific functional subspaces. Comput Stat 35, 1101–1131 (2020). https://doi.org/10.1007/s00180-020-00958-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-020-00958-4