Abstract

With the rapid development of artificial intelligence and intelligent manufacturing, the traditional teaching-playback mode and the off-line programming (OLP) mode can no longer meet the demands of flexible, fast-paced modern manufacturing. Intelligent welding robots have therefore been widely developed and applied in industrial production lines to improve manufacturing efficiency. The sensing system is one of the key technologies for intelligent robot welding. Owing to its good robustness and high precision, the structured light sensor has been widely adopted in intelligent welding robots. To suit the different measurement tasks of welding robots, many researchers have designed different structured light sensors and integrated them into intelligent welding robots. This paper analyzes and summarizes the latest research and applications of structured light sensors in intelligent welding robots, covering initial weld position identification, parameter extraction, seam tracking, monitoring of the welding pool, and welding quality detection, to provide a comprehensive reference for researchers working in these areas.

1 Introduction

In modern manufacturing, welding robot technology has been widely applied in many scenarios, such as automobile manufacturing, shipbuilding, and industrial production. It is a typical representative of current intelligent manufacturing. It can greatly improve the automation level of the manufacturing industry, protect the safety of staff, maintain production quality, and raise the technical level of industrial production.

At present, the common path programming modes of welding robots in both production and research can be divided into three categories: the teaching-playback mode, the OLP mode [1,2,3], and the intelligent path programming mode [4]. The traditional teaching-playback mode still plays an important role in current production. However, in actual welding production, the pose of the welding target often varies because of installation and processing errors, and disturbances inevitably arise during welding. The teaching-playback mode cannot perceive these changes and lacks the flexibility to cope with a changing working environment. With the development of computer-aided design (CAD) technology and computer graphics, the OLP mode has gradually been applied to the path programming of welding robots. Through construction of the working environment and tool-center-point (TCP) calibration, the OLP mode can accomplish task simulation, collision detection, motion instruction generation, and path planning for welding robots. However, the OLP mode is only suitable for structured production environments. As manufacturing modes change, the working environment is becoming more complex and unstructured. This makes high-precision, real-time construction of the virtual welding environment challenging and reduces the efficiency of three-dimensional (3D) path programming in the OLP mode. Moreover, like the teaching-playback mode, the OLP mode cannot perceive changes in the industrial environment and lacks flexibility. Intelligent path programming is the main development direction for welding robots in modern manufacturing. Combined with different robot sensors, intelligent welding robots can complete autonomous welding through environmental perception. However, the intelligent welding robot is a complex system with many important links, such as initial weld position identification, parameter extraction, seam tracking, monitoring of the welding pool, and welding quality detection. Because of the technical difficulties, it is still under development by researchers and companies, and no mature, fully intelligent path programming system exists yet.

The sensor system is the key link of intelligent welding robots. Over the past two decades, many researchers have sought to develop and improve robot sensors to adapt to different welding tasks. The common sensors on welding robots include arc sensors [5,6,7], ultrasound sensors [8,9,10,11], infrared sensors [12,13,14,15], sound sensors [16,17,18], magneto-optical sensors [19,20,21,22], and vision sensors [23,24,25,26,27]. Compared with other sensors, vision sensors offer non-contact measurement and high precision, and they can capture a large amount of information about the welding environment. Vision-based detection has therefore been widely applied to many welding tasks, such as seam tracking [28,29,30,31], seam extraction [32,33,34], welding quality control [35,36,37], and defect detection [38,39,40]. Because of these advantages, it has become an important research direction for automatic welding robots.

According to their light source, vision sensors can be divided into two major categories: active light vision sensors and passive light vision sensors. Compared with passive light vision sensors, active light vision sensors are more robust in complex welding environments. The structured light sensor is a typical active light vision sensor. Owing to its good robustness and high precision, it has been widely applied in intelligent welding robots. To handle the different measurement tasks of welding robots, researchers have designed and improved different types of structured light vision sensors to raise measurement precision and efficiency. This paper therefore summarizes and analyzes the latest research and applications of structured light vision sensors in intelligent welding robots, as a reference for researchers working in this direction.

The rest of this paper is organized as follows. Section 2 introduces the common structured light vision sensors. Section 3 analyzes and summarizes the research status of structured light vision sensors in intelligent welding robots. Section 4 discusses the structured light sensors. The last section presents the conclusion and prospects of this paper.

2 Sensing system

The vision sensor is the core component of intelligent welding robots. Based on the vision sensing system, researchers have built different experimental welding robot platforms for different welding tasks. A typical system framework of the intelligent welding robot is shown in Fig. 1. It mainly consists of three parts: the industrial robot system, the vision system, and the arc welding system. The vision system consists of a vision sensor and a vision computer and is used to perceive the welding environment. The industrial robot system consists of an industrial manipulator, a robot controller, and a teaching box, and is used for task execution. The arc welding system, which welds the workpiece, is composed of a digital welding machine, welding shield gas, and a wire feeder.

Because structured light vision sensors offer high precision and good robustness, many researchers have continuously improved and optimized the sensor structures and designed different types of structured light vision sensors in order to realize intelligent welding robots. These sensors are applied to different 3D measurement tasks in robot welding to raise the automation level of welding robots and meet the needs of flexible, high-precision modern manufacturing.

At present, across the large body of research on intelligent welding robots, the common types of structured light sensors are laser structured light, cross structured light, multi-line structured light, circle structured light, grid structured light, lattice structured light, etc. Figure 2 shows some typical examples of the common structured light sensors.

3 Sensor applications

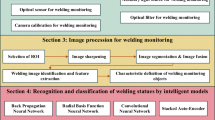

Combined with structured light sensors, intelligent welding robots can realize autonomous 3D path programming and robot welding through perception of the working environment. However, the fully intelligent welding robot is a relatively complex system, and it is still at the research stage because of the technical difficulties posed by complex welding environments. As shown in Fig. 3, to realize an autonomous welding task, the intelligent welding robot system involves many key technologies, such as initial point guidance, seam tracking, parameter extraction, monitoring of the welding pool, and welding quality detection.

A considerable amount of literature on intelligent welding robots has been published to solve one or more of the key problems shown in Fig. 3. Owing to their good robustness and high precision, structured light sensors have many applications in these areas. For these research objects, researchers have done much work and designed different types of structured light sensors.

Here, the latest application work of the structured light sensors in these aspects is summarized in detail to provide a reference for the researchers engaged in this research direction.

3.1 Initial weld position identification

The initial position alignment of the weld seam is one of the basic but key technologies for intelligent welding robots, and it is also the basis of seam tracking. The image-based method is the common initial point guiding method: it finds the initial seam point through image processing of welding images, and it has been widely applied to initial position alignment [49,50,51,52]. However, it has poor robustness against complicating factors in the welding environment, such as rust, environmental changes, surface defects, and scratches. Some researchers have therefore proposed initial position alignment methods based on structured light sensors.

At present, there is much research on initial point guiding for butt joints, but relatively little on fillet joints. To realize robust initial point finding for fillet joints, as shown in Fig. 4, a laser structured light sensor was applied to automatic welding production in ref. [53]. A slope difference algorithm was adopted to find the initial point of the weld seam without any prior information. This method can also find the end point of the weld seam. Experimental verification showed that the proposed algorithm can meet the needs of real industrial production.

To realize initial point guiding for micro-gap welds, an initial point guiding algorithm based on a laser structured light sensor was proposed in ref. [54] and applied to a mobile welding robot, as shown in Fig. 5a. The image coordinates of the initial point were calculated by image processing of welding images. Then, the 3D coordinates of the seam points were obtained from the camera model and the distance between the feature points and the initial point. The flow chart of the initial point finding is shown in Fig. 5b. Finally, the initial point guiding of the micro-gap weld was realized by the proportion-integration-differentiation (PID) controller in Fig. 5c.
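The PID guiding step described above can be illustrated with a minimal sketch. The gains, the time step, and the one-dimensional ideal-integrator motion model are illustrative assumptions, not values or models taken from ref. [54], and the names `PID` and `guide_to_initial_point` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the derivative by finite difference.
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def guide_to_initial_point(start_mm, target_mm, pid, dt=0.05, steps=400):
    """Drive a 1-D torch coordinate toward the detected initial point.

    Assumption: the controller output is a velocity command and the axis
    follows it perfectly (ideal integrator), which a real robot does not.
    """
    pos = start_mm
    for _ in range(steps):
        velocity = pid.step(target_mm - pos, dt)
        pos += velocity * dt
    return pos
```

For example, `guide_to_initial_point(0.0, 10.0, PID(2.0, 0.5, 0.05))` moves the simulated axis from 0 mm to close to the 10 mm target. In a real system the error would come from the vision measurement of the initial point in each control cycle.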

a Mobile welding robot. b The controller for initial point guiding [54]

In ref. [55], an initial point finding method based on point cloud processing was proposed. The laser structured light sensor was used to scan the whole workpiece to obtain its 3D point cloud. To isolate the workpiece from the point cloud, a KD-tree was used to remove the complex background before seam path extraction. In real welding production, a workpiece model can usually be approximated by a set of planes. Point cloud segmentation was performed with the Random Sample Consensus (RANSAC) algorithm to extract these planes. Based on the geometric model of the weld seam, the initial point can then be found through point cloud processing. Figure 6 shows the main procedures and results of initial point finding on fillet joints.
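The RANSAC plane segmentation mentioned above can be sketched as follows. This is a generic single-plane RANSAC under stated assumptions, not the implementation of ref. [55]: the distance threshold, iteration count, and fixed random seed are illustrative, and the function names are hypothetical.

```python
import math
import random


def plane_from_points(p, q, r):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(c * c for c in n))
    if length < 1e-12:  # collinear sample: no unique plane
        return None
    n = tuple(c / length for c in n)
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d


def ransac_plane(points, threshold=0.5, iterations=200, seed=0):
    """Return the plane with the most inliers and the indices of those inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        # A point is an inlier if its distance to the candidate plane is small.
        inliers = [i for i, pt in enumerate(points)
                   if abs(n[0] * pt[0] + n[1] * pt[1] + n[2] * pt[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

To segment several planes of a fillet joint, one would typically remove the inliers of the first plane and run the search again on the remaining points; the seam then lies near the intersection line of the two dominant planes.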

3.2 Seam extraction

Automatic 3D path extraction with vision sensors avoids tedious manual path teaching and offers better programming flexibility and faster programming speed [56,57,58]. Real-time, high-precision 3D seam extraction is also the basis of seam tracking and control for welding robots.

The laser structured light sensor is a local sensor and is only applied to local search tasks, such as seam type recognition or seam tracking. In contrast, the grating projection sensor can acquire the 3D information of the whole welding work piece and is suitable for global seam extraction. To avoid cumbersome manual 3D path teaching, in our previous work, a grating projection system based on a digital light processing (DLP) projector was designed as the vision sensor of a welding robot to realize off-line 3D path teaching [59]. Figure 7a shows the flow chart of 3D reconstruction based on the grating projection system. According to the spatial structure of the welding work pieces, a seam extraction algorithm based on point cloud processing was proposed. Firstly, to overcome the effect of weak texture and weak contrast, the grating projection system based on grid code patterns was used for 3D reconstruction of the work pieces. Secondly, the 3D seam points were extracted based on the spatial shape of V-type butt joints. Thirdly, a back-propagation (BP) neural network fused with a genetic algorithm (GA) was used for path fitting to guarantee a smooth seam path. Finally, the pose of the weld seam was estimated to determine the pose of the welding gun. For complex work pieces, the welding robot can adjust its pose in real time according to the seam pose, which helps maintain welding quality. Figure 7b–g show the specific results of seam extraction and pose estimation.

a The grating projection system. b, c 3D reconstruction and feature extraction. d, e Path fitting. f, g Pose estimation [59]

In ref. [59], the grating projection sensor must cover the whole welding work piece, and its measurement precision is limited over such a broad field of view. To realize high-precision seam extraction, a coarse-to-fine “global-local” seam extraction method was proposed on the basis of the seam extraction in Fig. 7, as shown in Fig. 8a [60]. Through coarse extraction followed by local fine scanning, the proposed algorithm can realize high-precision seam path teaching for different weld seams. Here, combined with stereo vision, the DLP projector was programmed to project triple stripes, forming a line structured light sensor that serves as a local sensor for high-precision 3D measurement. Meanwhile, the kernelized correlation filters (KCF) algorithm was used for real-time feature extraction from welding images, and it can handle different seam types, such as lap joints, butt joints, and fillet joints. Figure 8b and c show the feature extraction results for a lap joint and a fillet joint, respectively.

a The flow chart of 3D seam path teaching. b, c Feature extraction [60]

Zeng et al. proposed a 3D off-line path teaching method for narrow butt joints based on a laser structured light sensor [61]. Because of the narrow gap formed by the two butting steel plates, part of the structured light stripe passes through the gap of the butt joint, as shown in Fig. 9a. The reflected brightness of the stripe therefore differs between the narrow gap and the surface of the steel sheet. Using this brightness difference, the seam can be extracted by image processing of the laser stripe. Through close-range scanning of the butt joint, the normal vectors of the seam points can also be acquired through point cloud processing. Figure 9b shows the 3D position and pose of the narrow butt joint.

a The stripe reflection situation of narrow seam. b 3D seam path and normal vectors of seam points [61]

To obtain the parameters of multi-layer, multi-pass welding, a grid structured light sensor was designed to locate the weld seam; it can acquire more information than the laser structured light sensor [45], as shown in Fig. 10. Through average smoothing, thresholding, image thinning, and feature extraction, the image coordinates of the seam points can be obtained. Experiments showed that the measurement error of the grid structured light sensor was less than 0.5 mm.

a Grid images. b The procedures of image processing [45]

3.3 Seam tracking

Seam tracking remains a research focus in intelligent welding robots. The conventional seam extraction methods are mainly based on morphological image processing. Researchers have done much work and proposed many different algorithms to realize real-time, high-precision seam tracking. As shown in Fig. 11, the core links mainly include different morphological processing operators, such as image pre-processing, region-of-interest (ROI) extraction, stripe thinning, linear fitting, and feature extraction [62,63,64,65,66]. Image differencing is also necessary when strong arc light is present.
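As an illustration of the stripe thinning step in such a pipeline, the sketch below reduces a laser stripe to one sub-pixel center per image column using the gray-centroid (intensity-weighted mean) method. This is one common choice and is not claimed to be the method of refs. [62,63,64,65,66]; the threshold value and the plain list-of-lists image format are assumptions made to keep the example self-contained.

```python
def stripe_centerline(image, threshold=50):
    """Return one sub-pixel row coordinate per column, or None where no stripe is found.

    image: list of rows, each a list of gray values (assumed 0-255).
    Pixels below `threshold` are treated as background.
    """
    rows, cols = len(image), len(image[0])
    centers = []
    for c in range(cols):
        weight = 0.0
        moment = 0.0
        for r in range(rows):
            v = image[r][c]
            if v >= threshold:
                weight += v
                moment += v * r
        # Intensity-weighted mean row index of the stripe in this column.
        centers.append(moment / weight if weight > 0 else None)
    return centers
```

The resulting center line is the input to the later linear fitting and feature extraction steps. A real system would first restrict the search to the ROI and suppress arc light, for instance by image differencing.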

However, there are many types of weld seam in real industrial production, and a seam extraction method based on morphological image processing is usually designed for one particular type. To extract seams of different types, the feature extraction operator must be redesigned according to the shape of each weld seam, so the flexibility of the algorithm is relatively poor. In addition, the many morphological processing steps reduce the real-time performance of seam tracking.

In ref. [67], a seam tracking system based on a cross mask structured light sensor was proposed. The cross mask structured light sensor simplifies the calculation of the ROI. Within the ROI, a modified template matching method was proposed for feature extraction. For V-type butt joints, three templates were designed to capture the key features of the joint, as shown in Fig. 12a. A proportional-derivative (PD) controller was adopted to realize seam tracking. Figure 12b shows the 3D profile of the V-type butt joint. Experiments showed that the processing time for each welding image could reach 100.76 ms. Compared with seam extraction based on morphological operations, the processing speed of the proposed algorithm was greatly improved.
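The idea of locating a seam feature by template matching can be sketched with a plain sum-of-squared-differences (SSD) search. This is a generic baseline, not the modified template matching of ref. [67]; the exhaustive search, the SSD score, and the function name are assumptions for illustration.

```python
def match_template(image, template):
    """Exhaustive SSD template search.

    image, template: lists of rows of gray values.
    Returns ((row, col), score) of the best-matching top-left position.
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            # Sum of squared gray-level differences over the template window.
            score = sum((image[r + i][c + j] - template[i][j]) ** 2
                        for i in range(th) for j in range(tw))
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score
```

Restricting the search to the ROI, as the cross mask sensor allows, shrinks the two outer loops and is the main lever for speed in this kind of approach.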

a Templates of seam features. b 3D profile of V-type butt joint [67]

For seam tracking of different weld grooves, Lü et al. investigated the relationship between the feature points and the shapes of different weld seams and proposed a feature extraction algorithm based on slope change [68], as shown in Fig. 13. The center line of the laser stripe is obtained through image processing of welding images, including image pre-processing, ROI extraction, direction templates, and ridge line tracking. On this basis, the seam point is extracted by slope analysis. Experimental tests showed that the calculation time of the proposed algorithm could reach 22 ms, which meets the requirement of real-time seam tracking. However, because the algorithm extracts features from the shape of the stripe on the workpiece, it has relatively poor robustness against strong arc light.
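Slope analysis of a stripe center line can be sketched as follows: the feature point is taken as the sample where the slope on the left and the slope on the right differ the most. This is a simplified illustration, not the algorithm of ref. [68]; the window size and the assumption of a single dominant corner are choices made here.

```python
def slope_change_feature(profile, window=3):
    """Index of the profile sample with the largest left/right slope difference.

    profile: stripe center heights (or image rows), one value per column.
    window:  number of samples used on each side to estimate the slope.
    """
    best_idx, best_change = None, 0.0
    for i in range(window, len(profile) - window):
        left = (profile[i] - profile[i - window]) / window
        right = (profile[i + window] - profile[i]) / window
        change = abs(right - left)
        if change > best_change:
            best_idx, best_change = i, change
    return best_idx
```

On a V-groove profile this returns the groove bottom. The groove edges could be found in the same way by searching the remaining local maxima of the slope change.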

The schematic diagram of experiment system [68]

With the rapid development of machine learning and computer vision, the precision and speed of object tracking have been greatly improved [69,70,71,72]. Building on the good detection performance of object trackers, Zou et al. investigated their behavior on welding images and proposed a seam tracking system based on object tracking. Object trackers were used for feature extraction from welding images, including the continuous convolution operator tracker (CCOT) [73], the KCF tracker [74], and the spatiotemporal context tracker (STC) [75]. They can overcome the influence of strong arc light and estimate the positions of seam points, as shown in Fig. 14. They also showed faster processing speed than morphological image processing. With an adaptive fuzzy controller, seam tracking of different welding grooves can be done. Experiments showed that the tracking error on different weld seams was less than 0.4 mm. The object tracker thus adapts well to different work pieces, but it relies on manual marking of the object in the initial frame. In general, the object tracker provides a good solution for real-time seam tracking.

3.4 Monitoring of welding pool

Monitoring of the welding pool is also a key link in the intelligent welding robot system for maintaining welding quality. Through monitoring, the parameters of the welding pool can be obtained, such as weld pool width, length, area, interception area, and convexity [76,77,78,79]. These parameters can be used as the feedback signal of the welding controller to realize weld joint penetration control.

To realize intelligent welding robots modeled on the behavior of welders, a lattice structured light sensor was designed [46], as shown in Fig. 15a. The vision sensor projected lattice patterns onto the surface of the welding pool. To acquire the distorted patterns, an image plane was installed in front of the welding torch and a camera was installed behind the image plane to capture the reflected dot matrix. Through image processing of the distorted dot matrix, 3D reconstruction and parameter extraction of the welding pool can be done, as shown in Fig. 15b. An Adaptive Neural Fuzzy Inference System (ANFIS) was adopted to simulate the behavior of welders and realize penetration control.

a The framework of welding robot system. b Image processing and 3D reconstruction [46]

In ref. [43], a multi-line structured light sensor was designed to investigate the behavior of welders, as shown in Fig. 16a. As in ref. [46], the multi-line stripes were projected onto the surface, the camera captured the distorted stripes on the image plane, and the parameters of the welding pool were obtained by image processing of the distorted stripes. Meanwhile, the wire inertial measuring unit (IMU) sensor was installed in the welder to record the pose of the welding torch. On this basis, an improved Unscented Kalman Filter (UKF) algorithm was proposed to remove the noise of the IMU sensor. Figure 16b shows the welding experiment data of a novice welder, including IMU data, the parameters of the welding pool, and the top and backside appearance of the weld beads. Through statistics and analysis of the behavior of welders with different skill levels, the experience of welders can be captured and used both in research on intelligent welding robots and in the training of new welders.
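The role of Kalman filtering in suppressing IMU noise can be illustrated with a much simpler relative of the UKF: a scalar linear Kalman filter with a constant-value model. This is a deliberate simplification and not the improved UKF of ref. [43], which handles nonlinear orientation dynamics; the noise variances below are assumed values.

```python
def kalman_smooth(measurements, process_var=1e-4, meas_var=0.04):
    """Scalar Kalman filter assuming the true signal changes slowly.

    process_var: assumed variance of the random drift per step.
    meas_var:    assumed variance of the sensor noise.
    """
    estimate, error_var = measurements[0], 1.0
    filtered = []
    for z in measurements:
        error_var += process_var                   # predict: uncertainty grows
        gain = error_var / (error_var + meas_var)  # Kalman gain
        estimate += gain * (z - estimate)          # update with the innovation
        error_var *= (1.0 - gain)
        filtered.append(estimate)
    return filtered
```

A larger `process_var` makes the filter follow fast torch motion more closely at the cost of passing more noise, which is the same trade-off that has to be tuned in a UKF.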

a The experimental system. b Experiments of novice welder [43]

In real welding, welders cope with some changes in the welding process more robustly than machine algorithms, whereas machine algorithms respond faster than welders. To improve the intelligence of welding robots, machine algorithms and human intelligence were fused [80]. A lattice structured light sensor was designed and installed at the end of a Universal Robot UR-5. Meanwhile, a virtual welding torch was designed to realize augmented reality (AR) welding, as shown in Fig. 17a. The auto regression moving average model, the ANFIS model, and the iterative local ANFIS model were used to model the super welder, the AR welder, and the NoAR welder, respectively. The three models performed differently under different welding situations. To obtain better weld beads, a fuzzy weighting-based fusion algorithm was proposed to fuse the three models. Figure 17b shows the robustness test results under welding current change. Experiments showed that the proposed algorithm achieved better robustness to welding current change and input noise.

In refs. [81, 82], a frequency-based method was proposed to monitor the welding pool. Unlike other research, this work investigated the frequency characteristics of the welding pool. To perceive the whole welding pool, a welding robot system based on a laser structured light sensor was designed, as shown in Fig. 18a. The frequency value was obtained by combining image processing with the fast Fourier transform (FFT). Through extensive experimental verification, the relationship between frequency and the penetration status of the welding pool (full penetration, partial penetration, and critical penetration) was established. Figure 18b and c show the frequency ranges of partial penetration and full penetration, respectively. The penetration status can be determined from the frequency value, which can also be used for penetration control. However, because the frequency characteristics are computed from a sequence of welding images, the proposed algorithm is not suitable for real-time monitoring of the welding pool.
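Extracting a dominant frequency from an image-derived signal can be sketched as below. The input is assumed to be a scalar measured in each frame (for example a stripe displacement), which is a hypothetical choice and not necessarily the quantity used in refs. [81, 82]. For self-containment the sketch evaluates the discrete Fourier transform directly; an FFT computes the same spectrum faster.

```python
import cmath
import math


def dominant_frequency(signal, sample_rate_hz):
    """Frequency (Hz) of the strongest non-DC component of a real signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the DC offset
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        # Direct DFT coefficient for frequency bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n
```

The frequency resolution is `sample_rate_hz / n`, so a finer estimate needs a longer image sequence, which is consistent with the real-time limitation noted above.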

a The experimental system. b, c The relationship between frequency and different penetration status [81]

3.5 Welding quality detection

Welding quality affects not only the appearance of products but also the strength of the weld beads. Poor welding quality can even create hazards for safe production [83, 84]. Therefore, welding quality detection is necessary for safe production.

In real welding, some parameters are necessary for welding quality control and detection, such as bead width, filling depth, and groove width. In ref. [85], a measurement and detection method for welding defects was proposed based on a laser structured light sensor. By combining the vision sensor with the vision model, the 3D profile of the weld beads can be acquired. Figure 19 shows the 3D reconstruction results of weld beads with different welding quality. Based on the geometric models of the weld beads, the key parameters listed above can be extracted.
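How a vision model turns a stripe pixel into a 3D point can be sketched with the standard laser triangulation geometry: the pixel is back-projected through a pinhole camera model and intersected with the calibrated laser plane. The intrinsic parameters, the plane coefficients, and the absence of lens distortion are assumptions for illustration, not the calibration of ref. [85].

```python
def pixel_to_3d(u, v, fx, fy, cx, cy, plane):
    """Intersect the camera ray through pixel (u, v) with the laser plane.

    fx, fy, cx, cy: pinhole intrinsics in pixels (assumed, no distortion).
    plane: (a, b, c, d) with a*X + b*Y + c*Z + d = 0 in the camera frame.
    Returns (X, Y, Z) in the units of d.
    """
    # Normalized ray direction (x, y, 1) for this pixel.
    x = (u - cx) / fx
    y = (v - cy) / fy
    a, b, c, d = plane
    denom = a * x + b * y + c
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the laser plane")
    z = -d / denom
    return (x * z, y * z, z)
```

Applying this to every stripe center in an image gives one cross-section of the bead. Stacking cross-sections along the scan direction yields the 3D profile.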

Chu et al. proposed a post-welding quality detection method based on a laser structured light sensor [86]. The sensor was used to scan the weld bead to obtain its point cloud, as shown in Fig. 20a. A mathematical model of the weld bead was established, as shown in Fig. 20b. It is composed of key parameters such as weld width, reinforcement, undercut, and plate displacement. Figure 20c shows the curves of different parameters of the weld bead. The method can serve on-line detection of welding defects such as plate displacement and undercut.
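A minimal sketch of extracting such parameters from one bead cross-section follows. It assumes the profile has already been leveled so that the plate surface lies at z = 0, and it uses simple threshold rules; the tolerance and these definitions are illustrative assumptions, not the mathematical model of ref. [86].

```python
def bead_parameters(xs, zs, tol=0.05):
    """Width, reinforcement, and undercut of a leveled bead cross-section.

    xs, zs: lateral positions and heights of the profile samples (same units).
    tol:    height above the plate (z = 0) that counts as bead material.
    Returns None if no sample rises above `tol`.
    """
    bead = [i for i, z in enumerate(zs) if z > tol]
    if not bead:
        return None
    return {
        "width": xs[bead[-1]] - xs[bead[0]],  # extent of samples above the plate
        "reinforcement": max(zs),             # highest point above the plate
        "undercut": max(0.0, -min(zs)),       # deepest point below the plate
    }
```

Evaluating this for each scanned cross-section produces parameter curves along the seam, and thresholds on those curves could then flag sections for inspection.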

a Point cloud of weld bead. b Typical parameters of weld bead. c Measurement results of weld bead [86]

In ref. [87], a low-cost inspection method for weld beads based on a structured light sensor was proposed, as shown in Fig. 21a. The structured light sensor was used to scan the whole welding work piece to obtain the 3D point cloud. On this basis, point cloud segmentation was used to separate the bead from the rest of the point cloud, as shown in Fig. 21b. This removes the other parts so that processing can focus on the weld beads. From the segmentation results, a depth map can be obtained and used for fast qualitative evaluation of the weld beads.

a The special procedure of the proposed algorithm. b The segmentation of weld bead. c Depth map of weld bead [87]

4 Discussion

This paper reviews advanced structured light sensors in intelligent welding robots. Given the complexity of the welding environment, to the best of our knowledge there is no mature, fully intelligent welding robot system. In current research, researchers usually focus on one or a few specific problems of the intelligent welding system. Therefore, the major contribution of this paper is a review of the latest research on structured light sensors for these key issues of intelligent welding robots, such as initial point guidance, seam tracking, parameter extraction, monitoring of the welding pool, and welding quality detection.

Different researchers use many different experimental platforms. Because of the differences in robot platforms, experimental conditions, vision sensors, camera products, calibration precision, etc., there is no effective tool to fairly evaluate and compare the performance of these systems or methods. Therefore, this paper only reviews the proposed algorithms and the designed structured light sensors.

5 Conclusion

In this paper, a detailed review of advanced techniques for structured light sensing in intelligent welding robots has been presented. The paper has aimed to provide a comprehensive study of structured light sensing in intelligent welding robots as a reference for researchers engaged in related work.

In addition to structured light sensors, researchers have proposed and designed other sensors to adapt to the complex welding environment and overcome the influence of strong arc light, metallurgy, heat transfer, chemical reaction, arc physics, and deformation, such as passive vision sensors, time of flight (TOF) sensors, arc sensors, sound sensors, and magneto-optical sensors. This paper only reviews structured light vision techniques in welding robots. In the future, a more comprehensive study of sensing techniques in intelligent welding robots will be carried out.

References

Mitsi S, Bouzakis K-D, Mansour G, Sagris D, Maliaris G (2005) Off-line programming of an industrial robot for manufacturing. Int J Adv Manuf Technol 26(3):262–267

Chan SF, Kwan R (2003) Post-processing methodologies for off-line robot programming within computer integrated manufacture. J Mater Process Tech 139(1–3):8–14

Maiolino P, Woolley R, Branson D, Benardos P, Popov A, Ratchev S (2017) Flexible robot sealant dispensing cell using RGB-D sensor and off-line programming. Robotics Comput Integr Manuf 48:188–195

Ong SK, Chong JWS, Nee AYC (2010) A novel AR-based robot programming and path planning methodology. Robotics Comput Integr Manuf 26(3):240–249

Le J, Zhang H, Xiao (2017) Circular fillet weld tracking in GMAW by robots based on rotating arc sensors. Int J Adv Manuf Technol 88(9-12):2705–2715

Le J, Zhang H, Chen XQ (2018) Realization of rectangular fillet weld tracking based on rotating arc sensors and analysis of experimental results in gas metal arc welding. Robotics Comput Integr Manuf 49:263–276

Zhang S, Shengsun Hu, Wang Z (2016) Weld penetration sensing in pulsed gas tungsten arc welding based on arc voltage. J Mater Process Tech 229:520–527

Russell AM, Becker AT, Chumbley LS, Enyart DA, Bowersox BL, Hanigan TW, Labbe JL, Moran JS, Spicher EL, Zhong L (2016) A survey of flaws near welds detected by side angle ultrasound examination of anhydrous ammonia nurse tanks. J Loss Prevent Proc 43:263–272

Chen C, Fan C, Lin S, Cai X, Yang C, Zhou L (2019) Influence of pulsed ultrasound on short transfer behaviors in gas metal arc welding. J Mater Process Tech 267:376–383

Petcher PA, Dixon S (2015) Weld defect detection using PPM EMAT generated shear horizontal ultrasound. NDT & E Int 74:58–65

Klimenov VA, Abzaev YuA, Potekaev AI, Vlasov VA, Klopotov AA, Zaitsev KV, Chumaevskii AV, Porobova SA, Grinkevich LS, Tazin ID (2016) Structural state of a weld formed in aluminum alloy by friction stir welding and treated by ultrasound. Russ Phys J+ 59(7):971–977

Zhu J, Wang J, Su N, Xu G, Yang M (2017) An infrared visual sensing detection approach for swing arc narrow gap weld deviation. J Mater Process Tech 243:258–268

Yu P, Xu G, Gu X, Zhou G, Tian Y (2017) A low-cost infrared sensing system for monitoring the MIG welding process. Int J Adv Manuf Technol 92(9–12):4031–4038

Wikle HC III, Kottilingam S, Zee RH, Chin BA (2001) Infrared sensing techniques for penetration depth control of the submerged arc welding process. J Mater Process Tech 113(1–3):228–233

Bai P, Wang Z, Hu S, Ma S, Liang Y (2017) Sensing of the weld penetration at the beginning of pulsed gas metal arc welding. J Manuf Process 28:343–350

Bo C, Wang J, Chen S (2010) A study on application of multi-sensor information fusion in pulsed GTAW. Ind Robot 37(2):168–176

Pal K, Pal SK (2010) Study of weld joint strength using sensor signals for various torch angles in pulsed MIG welding. CIRP J Manuf Sci Technol 3(1):55–65

Bo C, Chen S (2010) Multi-sensor information fusion in pulsed GTAW based on fuzzy measure and fuzzy integral. Assembly Autom 30(3):276–285

Gao X, Liu Y, You D (2014) Detection of micro-weld joint by magneto-optical imaging. Opt Laser Technol 62:141–151

Gao X, Chen Y (2014) Detection of micro gap weld using magneto-optical imaging during laser welding. Int J Adv Manuf Technol 73(1–4):23–33

Gao X, Mo L, Xiao Z, Chen X, Katayama S (2016) Seam tracking based on Kalman filtering of micro-gap weld using magneto-optical image. Int J Adv Manuf Technol 83(1–4):21–32

Gao X, Zhen R, Xiao Z, Katayama S (2015) Modeling for detecting micro-gap weld based on magneto-optical imaging. J Manuf Syst 37:193–200

Sun J, Li C, Wu X, Palade V, Fang W (2019) An effective method of weld defect detection and classification based on machine vision. IEEE T Ind Inform

Zhao Z, Deng L, Bai L, Yi Z, Han J (2019) Optimal imaging band selection mechanism of weld pool vision based on spectrum analysis. Opt Laser Technol 110:145–151

Xiong J, Zou S (2019) Active vision sensing and feedback control of back penetration for thin sheet aluminum alloy in pulsed MIG suspension welding. J Process Contr 77:89–96

Abu-Nabah BA, ElSoussi AO, Alami A, ElRahman KA (2018) Virtual laser vision sensor environment assessment for surface profiling applications. Measurement 113:148–160

Abu-Nabah BA, ElSoussi AO, Alami A, ElRahman KA (2016) Simple laser vision sensor calibration for surface profiling applications. Opt Laser Eng 84:51–61

Rout A, Deepak BBVL, Biswal BB (2019) Advances in weld seam tracking techniques for robotic welding: a review. Robotics Comput Integr Manuf 56:12–37

Wang X, Li B, Zhang T (2018) Robust discriminant correlation filter-based weld seam tracking system. Int J Adv Manuf Technol 98(9–12):3029–3039

Zhang Y-x, You D-y, Gao X-d, Na S-J (2018) Automatic gap tracking during high power laser welding based on particle filtering method and BP neural network. Int J Adv Manuf Technol 96(1–4):685–696

Xu Y, Gu F, Chen S, Ju JZ, Ye Z (2014) Real-time image processing for vision-based weld seam tracking in robotic GMAW. Int J Adv Manuf Technol 73(9–12):1413–1425

Zhang K, Yan M, Huang T, Zheng J, Li Z (2019) 3D reconstruction of complex spatial weld seam for autonomous welding by laser structured light scanning. J Manuf Process 39:200–207

He Y, Xu Y, Chen Y, Chen H, Chen S (2016) Weld seam profile detection and feature point extraction for multi-pass route planning based on visual attention model. Robotics Comput Integr Manuf 37:251–261

Xu Y, Gu F, Lv N, Chen S, Ju JZ (2015) Computer vision technology for seam tracking in robotic GTAW and GMAW. Robotics Comput Integr Manuf 32:25–36

Yang L, Li E, Long T, Fan J, Mao Y, Fang Z, Liang Z (2018) A welding quality detection method for arc welding robot based on 3D reconstruction with SFS algorithm. Int J Adv Manuf Technol 94(1–4):1209–1220

Xiong J, Zou S (2019) Active vision sensing and feedback control of back penetration for thin sheet aluminum alloy in pulsed MIG suspension welding. J Process Contr 77:89–96

Zhang Z, Chen H, Xu Y, Zhong J, Lv N, Chen S (2015) Multisensor-based real-time quality monitoring by means of feature extraction, selection and modeling for Al alloy in arc welding. Mech Syst Signal Pr 60:151–165

Han Y, Fan J, Yang X (2020) A structured light vision sensor for on-line weld bead measurement and weld quality inspection. Int J Adv Manuf Technol 106(5):2065–2078

Ye D, Hong GS, Zhang Y, Zhu K, Fuh JYH (2018) Defect detection in selective laser melting technology by acoustic signals with deep belief networks. Int J Adv Manuf Technol 96(5–8):2791–2801

Lin J, Yu Y, Ma L, Wang Y (2018) Detection of a casting defect tracked by deep convolution neural network. Int J Adv Manuf Technol 97(1–4):573–581

Fan J, Jing F, Yang L, Long T, Tan M (2019) An initial point alignment method of narrow weld using laser vision sensor. Int J Adv Manuf Technol, 1–12

Zhang L, Ye Q, Yang W, Jiao J (2014) Weld line detection and tracking via spatial-temporal cascaded hidden Markov models and cross structured light. IEEE T Instrum Meas 63(4):742–753

Zhang G, Yu S, Gu YF, Fan D (2017) Welding torch attitude-based study of human welder interactive behavior with weld pool in GTAW. Robotics Comput Integr Manuf 48:145–156

Xu P, Tang X, Yao S (2008) Application of circular laser vision sensor (CLVS) on welded seam tracking. J Mater Process Tech 205(1–3):404–410

Zhang C, Li H, Jin Z, Gao H (2017) Seam sensing of multi-layer and multi-pass welding based on grid structured laser. Int J Adv Manuf Technol 91(1–4):1103–1110

Liu YK, Zhang WJ, Zhang YM (2014) A tutorial on learning human welder’s behavior: sensing, modeling, and control. J Manuf Process 16(1):123–136

Iakovou D, Aarts R, Meijer J (2005) Sensor integration for robotic laser welding processes. In: International Congress on Applications of Lasers & Electro-Optics. LIA, pp 2301–2309

Xiao Z (2011) Research on a trilines laser vision sensor for seam tracking in welding. In: Robotic welding, intelligence and automation. Springer, pp 139–144

Zhu YZh, Lin T, Piao YJ, Chen SB (2005) Recognition of the initial position of weld based on the image pattern match technology for welding robot. Int J Adv Manuf Technol 26(7–8):784–788

Chen X, Chen S, Lin T, Lei Y (2006) Practical method to locate the initial weld position using visual technology. Int J Adv Manuf Technol 30(7–8):663–668

Chen X, Chen S (2010) The autonomous detection and guiding of start welding position for arc welding robot. Ind Robot 37(1):70–78

Fang Z, Xu D, Tan M (2013) Vision-based initial weld point positioning using the geometric relationship between two seams. Int J Adv Manuf Technol 66(9–12):1535–1543

Liu FQ, Wang ZY, Yu J (2018) Precise initial weld position identification of a fillet weld seam using laser vision technology. Int J Adv Manuf Technol 99(5–8):2059–2068

Fan J, Jing F, Yang L, Long T, Tan M (2018) A precise initial weld point guiding method of micro-gap weld based on structured light vision sensor. IEEE Sens J 19(1):322–331

Zhang L, Xu Y, Du S, Zhao W, Hou Z, Chen S (2018) Point cloud based three-dimensional reconstruction and identification of initial welding position. In: Transactions on intelligent welding manufacturing. Springer, pp 61–77

Dinham M, Gu F (2013) Autonomous weld seam identification and localisation using eye-in-hand stereo vision for robotic arc welding. Robotics Comput Integr Manuf 29(5):288–301

Li J, Jing F, Li E (2016) A new teaching system for arc welding robots with auxiliary path point generation module. In: 2016 35th Chinese Control Conference (CCC). IEEE, pp 6217–6221

Jin Z, Li H, Zhang C, Wang Q, Gao H (2017) Online welding path detection in automatic tube-to-tubesheet welding using passive vision. Int J Adv Manuf Technol 90(9–12):3075–3084

Yang L, Li E, Long T, Fan J, Liang Z (2019) A novel 3-D path extraction method for arc welding robot based on stereo structured light sensor. IEEE Sens J 19(2):763–773

Yang L, Li E, Long T, Fan J, Liang Z (2018) A high-speed seam extraction method based on the novel structured-light sensor for arc welding robot: a review. IEEE Sens J 18(21):8631–8641

Zeng J, Chang B, Du D, Peng G, Chang S, Hong Y, Li W, Shan J (2017) A vision-aided 3D path teaching method before narrow butt joint welding. Sensors 17(5):1099

Gu WP, Xiong ZY, Wan W (2013) Autonomous seam acquisition and tracking system for multi-pass welding based on vision sensor. Int J Adv Manuf Technol 69(1–4):451–460

Luo H, Chen X (2005) Laser visual sensing for seam tracking in robotic arc welding of titanium alloys. Int J Adv Manuf Technol 26(9–10):1012–1017

Shen H, Lin T, Chen S, Li L (2010) Real-time seam tracking technology of welding robot with visual sensing. J Intell Robot Syst 59(3–4):283–298

Gao X, You D, Katayama S (2012) Seam tracking monitoring based on adaptive Kalman filter embedded Elman neural network during high-power fiber laser welding. IEEE T Ind Electron 59(11):4315–4325

Fang Z, Xu D, Tan M (2011) A vision-based self-tuning fuzzy controller for fillet weld seam tracking. IEEE-ASME T Mech 16(3):540–550

Kiddee P, Fang Z, Tan M (2016) An automated weld seam tracking system for thick plate using cross mark structured light. Int J Adv Manuf Technol 87(9–12):3589–3603

Lü X, Gu D, Wang Y, Qu Y, Qin C, Huang F (2018) Feature extraction of welding seam image based on laser vision. IEEE Sens J 18(11):4715–4724

He Z, Yi S, Cheung Y-M, You X, Tang YY (2017) Robust object tracking via key patch sparse representation. IEEE T Cybernetics 47(2):354–364

Čehovin L, Leonardis A, Kristan M (2016) Visual object tracking performance measures revisited. IEEE T Image Process 25(3):1261–1274

Comaniciu D, Ramesh V, Meer P (2003) Kernel-based object tracking. IEEE T Pattern Anal 25(5):564–575

Bolme DS, Ross Beveridge J, Draper BA, Lui YM (2010) Visual object tracking using adaptive correlation filters. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. IEEE, pp 2544–2550

Zou Y, Chen T (2018) Laser vision seam tracking system based on image processing and continuous convolution operator tracker. Opt Laser Eng 105:141–149

Zou Y, Wang Y, Zhou W, Chen X (2018) Real-time seam tracking control system based on line laser visions. Opt Laser Technol 103:182–192

Zou Y, Chen X, Gong G, Li J (2018) A seam tracking system based on a laser vision sensor. Measurement 127:489–500

Pinto-Lopera J, Motta JST, Alfaro SA (2016) Real-time measurement of width and height of weld beads in GMAW processes. Sensors 16(9):1500

Wang Z, Zhang YM, Yang R (2013) Analytical reconstruction of three-dimensional weld pool surface in GTAW. J Manuf Process 15(1):34–40

Zhang WJ, Liu YK, Wang X, Zhang YM (2012) Characterization of three dimensional weld pool surface in GTAW. Weld J 91(7):195s–203s

Zhang WJ, Zhang X, Zhang YM (2015) Robust pattern recognition for measurement of three dimensional weld pool surface in GTAW. J Intell Manuf 26(4):659–676

Liu YK, Zhang YM (2017) Fusing machine algorithm with welder intelligence for adaptive welding robots. J Manuf Process 27:18–25

Li C, Yu S, Gu YF, Yuan P (2018) Monitoring weld pool oscillation using reflected laser pattern in gas tungsten arc welding. J Mater Process Tech 255:876–885

Yu S, Li C, Du L, Gu YF, Ming Z (2016) Frequency characteristics of weld pool oscillation in pulsed gas tungsten arc welding. J Manuf Process 24:145–151

He K, Li X (2016) A quantitative estimation technique for welding quality using local mean decomposition and support vector machine. J Intell Manuf 27(3):525–533

Zhang H, Hou Y, Zhao J, Wang L, Xi T, Li Y (2017) Automatic welding quality classification for the spot welding based on the Hopfield associative memory neural network and Chernoff face description of the electrode displacement signal features. Mech Syst Signal Pr 85:1035–1043

Li Y, Li YF, Wang QL, Xu D, Tan M (2010) Measurement and defect detection of the weld bead based on online vision inspection. IEEE T Instrum Meas 59(7):1841–1849

Chu H-H, Wang Z-Y (2016) A vision-based system for post-welding quality measurement and defect detection. Int J Adv Manuf Technol 86(9–12):3007–3014

Rodríguez-Martín M, Rodríguez-Gonzálvez P, González-Aguilera D, Fernández-Hernández J (2017) Feasibility study of a structured light system applied to welding inspection based on articulated coordinate measure machine data. IEEE Sens J 17(13):4217–4224

Acknowledgments

The authors would like to thank the anonymous referees for their valuable suggestions and comments.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 61473265, 61803344, 61773351), the Science and Technology Research Project in Henan Province of China (No. 202102210098), the Robot Perception and Control Support Program for Outstanding Foreign Scientists in Henan Province of China (No. GZS201908), and the Innovation Research Team of Science & Technology in Henan Province of China (No. 17IRTSTHN013).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Yang, L., Liu, Y. & Peng, J. Advances techniques of the structured light sensing in intelligent welding robots: a review. Int J Adv Manuf Technol 110, 1027–1046 (2020). https://doi.org/10.1007/s00170-020-05524-2

DOI: https://doi.org/10.1007/s00170-020-05524-2