Abstract

Ever-increasing functionality and complexity of products and systems challenge development companies in achieving high and consistent quality. A model-based approach is used to investigate the relationship between system complexity and system robustness. The measure for complexity is based on the degree of functional coupling and the level of contradiction in the couplings. Whilst Suh’s independence axiom states that functional independence (uncoupled designs) produces more robust designs, this study shows that this is not the case for max-/min-is-best requirements and is true only in the general sense for nominal-is-best requirements. In specific cases, the independence axiom has exceptions, as illustrated with a machining example in which a coupled solution is more robust than its uncoupled counterpart. This study also shows, with statistical significance, that for max- and min-is-best requirements the robustness is most affected by the level of contradiction between coupled functional requirements (p = 1.4e−36). In practice, the results imply that if the main influencing factors for each function in a system are known in the concept phase, an evaluation of the contradiction level can be used to evaluate concept robustness.


1 Introduction

Many products, from hairdryers to systems like spacecraft, are becoming more and more complex and integrated. Functionality is added with every product generation as technology advances. For example, Figs. 1 and 2 show the evolution of car safety features and the technology added with every generation of the Apple iPhone, respectively. Not only the performance of the functions but also their robustness against variation and noise factors is of high importance.

Evolution of car safety features (Jackson 2013)

Added functionality for every generation of the Apple iPhone (Apple 2015)

The pursuit of robustness, i.e. insensitivity to variation in noise (type I Robust Design) and design parameters (type II Robust Design), challenges the developing companies. “Small changes to a complex coupled system can result in large unexpected changes in behaviour, possibly taking the system outside of its designers’ expected operating regime” (Gribble 2001). The analysis of large-scale design/engineering networks points towards the same conclusion: complexity, i.e. “design coupling”, tends to negatively influence system robustness (Braha and Bar-Yam 2004, 2007). The question arises how large the impact of complexity on robustness is and whether generalizations can be made.

Various design guidelines are available that foster a “good” and robust design. One of them, axiomatic design (AD) by Nam P. Suh (2001), addresses the complexity and coupling of the design. The first axiom promotes independence of functions, which is said to produce inherently more robust designs (Suh 2001, p. 125, 126). A designer should first and foremost seek an uncoupled or decoupled design and then, in adherence to the second axiom, minimize the information content. Slagle (2007) investigated the influence of the system architecture on robustness and proposed nine principles. Among those are the principles of “Independence” and “Simplicity”, in accordance with the notion of Suh. However, it is not always practically possible to uncouple or decouple functions due to other conflicting DfX requirements. Furthermore, with respect to robustness, the first axiom is not always true in reality, as there are instances where a coupled design has a lower information content and therefore a higher probability of success and robustness.

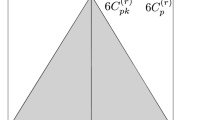

Consider a machine that can position a drill with an accuracy of 0.02 mm (μ = 0, σ = 0.02 mm) in the x direction and 0.005 mm (μ = 0, σ = 0.005 mm) in the y direction. Let us also say that the tolerances on the position of the hole are ±0.04 mm in the x and ±0.03 mm in the y direction. If the workpiece is oriented square to the axes, the mapping from design parameter (DP) to functional requirement (FR) is diagonal and hence the design uncoupled (design 1). The probability of success is about p = 95 %. However, if the part is reoriented at an angle of about 30 degrees, the FR-DP mapping is coupled (design 2) and the probability of success rises to about p = 97.5 %, which is roughly a factor of 2 drop in failure rate (see Figs. 3, 4). This example provides proof that axiom 1 is not always true; however, the authors believe that axiom 1 is still an incredibly valuable design principle (Ebro and Howard 2016) that should be used and taught despite the odd exception.
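The two success probabilities quoted above can be checked approximately with a small Monte Carlo simulation. The sketch below is an illustration under stated assumptions, not the authors' calculation: the positioning errors along the machine axes are taken to be independent and normally distributed, the tolerance box is taken to be fixed to the part and to rotate with it, and the function name `success_probability`, the sample size and the seed are hypothetical choices.

```python
import math
import random


def success_probability(angle_deg, sigma_x=0.02, sigma_y=0.005,
                        tol_x=0.04, tol_y=0.03, trials=200_000, seed=1):
    """Estimate P(hole position lies inside the tolerance box) by Monte Carlo.

    Assumption: machine errors are independent zero-mean normals and the
    tolerance box is defined in the part frame, rotated by angle_deg.
    """
    rng = random.Random(seed)
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    hits = 0
    for _ in range(trials):
        # Positioning errors along the machine axes (mm).
        ex = rng.gauss(0.0, sigma_x)
        ey = rng.gauss(0.0, sigma_y)
        # The same error expressed in the rotated part frame.
        px = c * ex + s * ey
        py = -s * ex + c * ey
        if abs(px) <= tol_x and abs(py) <= tol_y:
            hits += 1
    return hits / trials


p_uncoupled = success_probability(0.0)   # design 1: part square to the axes
p_coupled = success_probability(30.0)    # design 2: part rotated by 30 degrees
print(f"design 1 (uncoupled): {p_uncoupled:.3f}")
print(f"design 2 (coupled):   {p_coupled:.3f}")
```

Under these assumptions the rotation trades some of the generous y tolerance for the tight x tolerance, which is why the coupled orientation comes out ahead.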

2 Research delimitation and methodology

The aim of this research is to investigate the link between the complexity of a design and its robustness. To understand this link, several terms first need to be defined. The robustness of a design is a key factor in achieving the desired quality of a product, where yield = f (robustness, variation). Therefore, in order to increase the yield, either the variation (coming from manufacturing, assembly, ambient conditions, time, load, the material and signal) needs to be reduced, or the robustness of the design (inherent in the product architecture, geometry and dimensions) needs to be increased.

In this research, we have chosen numerical analysis as a means of simulating the yield values, since collecting the empirical data necessary to obtain meaningful statistical results would be infeasible. The variation of the different design parameters has been modelled using a Monte Carlo simulation. Because the same variation is applied to all designs, the designs that produce the greatest yield are the most robust.

In order to create the designs, 250 different design architectures have been modelled based on the hierarchical probability model by Frey and Li (2008) (see the complex systems modelling approach later), each with differing complexity. In this paper, the authors define complexity to be related to the degree of coupling of the functions in the design (directly related to axiom 1) (Summers and Shah 2010) and the level of contradiction of the couplings. The definitions of coupling and contradiction are laid out in previous research by Göhler and Howard (2015) as follows:

- A coupling with low level of contradiction (positive coupling): When changing a design parameter can lead to improvements in both of its coupled functions (Fig. 5).

- A coupling with high level of contradiction (negative coupling): When changing a design parameter will only positively affect one of the coupled functions as the other will be negatively affected (Fig. 6).

- RQ1: Is there an association between the degree of coupling in a design and its robustness?

- RQ2: Is there an association between the level of contradiction in a design and its robustness?

2.1 A practical example case

To illustrate the practical implication of contradicting and positive couplings, consider an automobile diaphragm spring clutch as shown in Fig. 7. The release bearing pushes the diaphragm spring inwards forcing it to buckle and release the pressure plate from pressing clutch plate and flywheel together.

Schematic of a diaphragm spring clutch. Adapted from Hillier and Coombes (2004)

Assuming the main functional requirements and design parameters to be the ones listed in Table 1, a simplified model using response surface methodology (RSM) (Box and Wilson 1951) yields the governing Eqs. (1–5).

In linearized and simplified form, the functional dependencies of the five main functions of the clutch (Eqs. 1–5) can be summarized using Suh’s design matrix (DM), either quantitatively using partial derivatives or qualitatively as shown in Eq. (6). The arrows next to the FRs and the entries in the DM show the desired tendency of the value for the FRs and associated DPs. The design is coupled and is not easy to decouple or uncouple without changing the whole concept. However, since many of the requirements tend in the “same direction”, the couplings are supporting (positive) couplings with no negative impact. Only the force required to disengage the clutch is in contradiction to the other requirements. However, solutions with, for example, an increased length of the lever arm or a hydraulic actuation could decrease the maximum required force.

3 A complex systems model

3.1 Assumptions

A product or system usually comprises multiple functions and sub-functions that interact and are more or less coupled through the structural realization of the product or system (compare the simplified diaphragm spring clutch example with its five functions, which are coupled through the design parameters). For the presented model, a system is defined by the governing equations of its functions. All information and dependencies between design parameters, noise factors and functional outputs are assumed to be known. For real-world examples, however, obtaining this information would be unrealistically resource intensive. The probabilistic modelling approach used in this study enables the investigator to generalize from a population of systems, but also to easily alter assumptions of the model to match new findings and to check the robustness of the results. It is further assumed that the random parameter set \(x_{1} \ldots x_{n}\) is a valid solution to the design problem and that all \(m\) functions are satisfactorily fulfilled at that point. An optimization for maximum robustness is out of scope for this study. In a real design situation, there would also be weighting factors for each function, meaning that certain functions are more important or critical than others. The nature of the functions may also differ, some being more binary in nature, either functioning or non-functioning, whereas others would have a continuous spectrum of performance. For the purpose of this study, it is assumed that all functions are equally weighted and have a continuous nature. It is also assumed that the relative variation is the same for all influencing factors.

3.2 Model characteristics and set-up

In a previous study, Frey and Li (2008) adapted the hierarchical probability model (HPM) developed by Chipman et al. (1997) to assess the effectiveness of parameter design methods. The HPM is solely a model for single functions and has in this work been extended to complex products and systems. Looking only at a small number of systems can be misleading, since there are examples of complex but robust systems (aero engines, see also Carlson and Doyle 2000) as well as simple but non-robust systems (GM ignition switch; Eifler et al. 2014). The purpose of the surrogate model presented in the following is to analyse a population of systems quickly and inexpensively in order to probabilistically assess the association between complexity and robustness. The model builds upon the nature of functional dependencies as observed in real-world systems. Three main characteristics and regularities can be seen from empirical data that have also widely been used in the design of experiments (DoE) context (see for example Box and Meyer 1986; Wu and Hamada 2011).

1. Sparsity of effects: Experiments have shown that “the responses [of functions] are driven largely by a limited number of main effects and lower-order interactions in most of the systems, and that higher-order interactions usually are relatively unimportant” (Kutner et al. 2004). In other words, there are usually only a small number of factors or parameters in a system that actually influence the performance of the functions. These are called “active” (Lenth 1989). This agrees with the well-known Pareto principle, also commonly referred to as the 80/20 rule, which states that 80 % of the effects come from 20 % of all influencing factors. In terms of modelling, this characteristic reduces the complexity and eases the representation of a function.

2. Hierarchy: Another common observation is that main effects are typically stronger than second-order interactions, which are in turn usually larger than third-order interactions, and so on (Wu and Hamada 2011).

3. Inheritance: Empirical data reveal that interaction effects are more likely to be active if the interacting parameters’ main effects are active (Wu and Hamada 2011).

To capture the entire product or system, which can be seen as a set of coupled functions, the model of Frey and Li (2008) has been extended. Equations (7) through (14) describe the main structure of the hierarchical probability systems model (HPSM). The HPSM describes functional response hyper-surfaces of multi-factor–multi-function systems that reflect observed functional regularities of sparsity, hierarchy and inheritance. In the way it is set up, it allows the investigator to adjust model parameters and probabilities to match assumptions and empirical data.

The hierarchical probability model by Frey & Li has been augmented by a functional dimension. There are \(m\) functions in a system with the lth function’s performance \(y_{l} \left( {x_{1} , \ldots ,x_{n} } \right)\) expressed by a third-order polynomial equation that covers the effects of all \(n\) parameters \(x_{1} \ldots x_{n}\) and their interactions up to third order (Eq. 7). Modelling up to the third order is a sensible way of covering the most common effects without over-complicating the model. \(x_{1} \ldots x_{n}\) are the influencing parameters to the entire system (Eq. 8). These can be design parameters or part properties that can be controlled by the designer, or environmental effects outside of the control of the engineers. In contrast to the model by Frey and Li, the differentiation between design parameters and noise factors is not necessary, since the analysis of couplings and contradictions is independent of the nature of the influencing factor. However, the distinction can easily be reintegrated into the model. For the remainder of the article, design parameters and noise factors will be referred to as influencing parameters (IPs).

The IPs are described by \(\bar{x}\), a vector of continuous variables each randomly assigned between 0…1 to be able to vary the parameters for the assessment of the system robustness. The hierarchical probability model by Frey & Li has only two levels [0, 1] for \(x\). It reflects the original experiments the model is based on, which chose the candidate range for \(x\) to cover the highest and lowest anticipated \(x\). The experimental error from observations ε is irrelevant for this model and has been omitted. The probability \(p\) that a main effect is active \(\left( {\delta_{i} = 1} \right)\) is described by Eq. (9). \(p\) is a probability value that incorporates the sparsity characteristic into the system. Equations (10) and (11) provide the probabilities that second- and third-order effects are active, dependent on their parental main effects’ activity. This introduces the characteristic of inheritance into the system. Lastly, Eqs. (12–14) prescribe the \(\beta\) coefficients, i.e. the magnitudes of the effects on the functional output \(y\), dependent on the associated effect being active or not (\(\delta = 1\) or \(\delta = 0\), respectively). As opposed to IPs, the effect magnitudes solely depend on the underlying natural laws and are therefore theoretically unbounded (see example Fig. 8). To reflect that, the coefficients are normally distributed random values with mean \(\mu = 0\) and variance \(\sigma^{2} = d^{2}\) for active effects and \(0\) for inactive effects. Note that even active effects can have insignificant effects on the function since the mean of β is set to zero. In contrast to the underlying model, inactive effects have been omitted from this model to avoid coupling in all possible parameters and to allow for independence of the functions in the system, as this is good design practice. Depending on the investigation, the constant \(\beta_{0}\) can be chosen to ensure non-zero or positive values of \(y\) or simply be set to zero without loss of generality. The hierarchical structure of effects is described by Eqs. (13) and (14), which scale the second- and third-order effects by \(\frac{1}{{s_{1} }}\) and \(\frac{1}{{s_{2} }}\), respectively.
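The sampling scheme described above (sparsity through \(p\), inheritance through the conditional activation probabilities, hierarchy through \(s_{1}\) and \(s_{2}\)) can be sketched as follows. This is a minimal illustration, not the authors' MATLAB implementation. The numerical values of `P_MAIN`, `P_TWO`, `P_THREE`, `D`, `S1` and `S2` are placeholders chosen for the example and are not the values of Table 2, and restricting interactions to distinct parameters is an assumption.

```python
import itertools
import random

# Placeholder model constants (hypothetical, NOT the paper's Table 2).
P_MAIN = 0.4                                   # P(main effect is active)
P_TWO = {0: 0.01, 1: 0.05, 2: 0.30}            # keyed by number of active parents
P_THREE = {0: 0.01, 1: 0.03, 2: 0.07, 3: 0.15}
D, S1, S2 = 10.0, 3.6, 7.3                     # effect scale and hierarchy factors


def sample_function(n, rng):
    """Draw one function as {index tuple: beta}; inactive effects are omitted."""
    active = [rng.random() < P_MAIN for _ in range(n)]
    beta = {}
    for i in range(n):
        if active[i]:
            beta[(i,)] = rng.gauss(0.0, D)
    # Inheritance: activation probability depends on the parents' activity.
    for i, j in itertools.combinations(range(n), 2):
        if rng.random() < P_TWO[active[i] + active[j]]:
            beta[(i, j)] = rng.gauss(0.0, D) / S1      # hierarchy: scaled by 1/s1
    for i, j, k in itertools.combinations(range(n), 3):
        if rng.random() < P_THREE[active[i] + active[j] + active[k]]:
            beta[(i, j, k)] = rng.gauss(0.0, D) / S2   # hierarchy: scaled by 1/s2
    return beta


def evaluate(beta, x, beta0=0.0):
    """Evaluate the third-order polynomial y(x) for one function."""
    y = beta0
    for idx, b in beta.items():
        term = b
        for i in idx:
            term *= x[i]
        y += term
    return y


def sample_system(n, m, rng):
    """A system is a list of m functions over the same n influencing parameters."""
    return [sample_function(n, rng) for _ in range(m)]


rng = random.Random(0)
system = sample_system(n=5, m=5, rng=rng)
x_nominal = [rng.random() for _ in range(5)]   # IPs drawn between 0 and 1
y_nominal = [evaluate(f, x_nominal) for f in system]
```

Storing only the active effects in a dictionary mirrors the decision to omit inactive effects, so two functions are coupled only where their key sets share an influencing parameter.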

3.3 Types of functional requirements

There are three types of functional requirements as described in Taguchi et al. (2005): maximum is best, minimum is best and nominal is best. The first two differ only in the sign and can be described in the same manner. These requirements are functionally bound only by a minimum (for maximum is best) or maximum (for minimum is best) requirement. However, physical constraints limit the maximum performance. An example of a minimum-is-best requirement is the weight of an airplane. The weight determines the fuel consumption and the lift needed. However, since a certain payload capability is required, which in turn requires a certain lift and thrust, the structural rigidity sets the lower bound for the empty weight of the airplane. An example of a maximum-is-best requirement is a simple pair of scissors, where the length of the lever arm determines the cutting force. The lower limit of the size of the pair of scissors is set by the minimum required cutting force, the upper bound by the sheer size and ergonomics. Nominal-is-best requirements are functionally constrained on both the upper and the lower bound. A push button of a device, for example, should be neither too easy nor too hard to push, since the user would associate both with a malfunction.

In the presented surrogate system model, the functions have requirements of the type maximum is best and minimum is best. Nominal-is-best requirements can be modelled as two separate functions, one minimum is best and the other maximum is best, with the same set of beta coefficients.

4 System evaluation

The developed surrogate model realistically describes a product or system with multiple functions and multiple influencing parameters. For this study, the system properties of interest are the complexity, i.e. the couplings and their level of contradiction, and the robustness. However, the model is not limited to complexity and robustness studies but can also be used for other investigations like optimization or design of experiments investigations.

4.1 Coupling and contradiction

A system as described in the presented model consists of multiple functions with multiple influencing parameters and their interactions. A common way to measure the complexity of the system is to evaluate the degree of coupling between the single functions, i.e. how many parameters are shared and what the influence of these parameters on the individual function is (Summers and Shah 2010). In axiomatic design (AD), Nam P. Suh distinguishes between three different types of systems and stresses the importance of the independence of functions for a predictable and high performance (design axiom 1).

1. Uncoupled systems

2. Decoupled systems

3. Coupled systems

However, no further distinction between systems of the same type is made on the conceptual level. This means that in cases where uncoupling or decoupling of the system cannot be achieved due to, for example, other DfX constraints, there is no means to further screen and compare the goodness of concepts. Suh’s second design axiom, the information axiom, aims at the probability of achieving the required performances of the designed functions, which requires further and more detailed insights about the requirements on the one hand and the production capabilities on the other hand. A sensible extension of the independence axiom is to assess a system’s complexity by evaluating the level of contradiction imposed onto the design, as discussed earlier in this paper.

In the presented study, the contradiction of a function in a system is described by the comparison of the influences \(\gamma_{ijk}\) of the single parameters on the different functions (Eq. 15). For this purpose, the weighted ratio was taken, reflecting the correlation of two functions in a particular parameter. The contradiction \(c_{{ijk_{l} }}\) of a function \(l\) with respect to a parameter \(x_{i} x_{j} x_{k}\) is then defined as the maximum of the weighted ratios evaluated against all other functions \(w\) (Eq. 16). Note that a minus sign is included to obtain a positive contradiction value.

The highest possible value of contradiction in an IP is therefore \(c_{{ijk_{l} }} = 1\) in the case that two functions share the same IP which accounts for 100 % of the functions’ performance with opposite signs on the betas and therefore opposite requirements for this parameter or property. To describe the contradiction of a function, the sum is taken over all the individual contradictions in the IPs (Eq. 17).

As for the contradiction value of functions to single IPs, the function contradiction \(c_{l}\) is bounded to 1 (or 100 %). \(c_{l}\) = 100 % relates to a fully contradicted function meaning that the function shares all of its IPs with other functions with entirely contradicting requirements towards the IPs. To evaluate the contradiction level of a system, the most contradicted function was taken (Eq. 18).
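To make the structure of the metric concrete (per-IP contradiction, sum over IPs, maximum over functions), the sketch below computes a contradiction score for a system with linear main effects only. It is an illustrative stand-in and not the paper's Eqs. (15)–(18): the normalisation in `normalised_influence` and the use of the smaller of the two influence shares as the "weighted" contradiction are assumptions chosen so that the stated bounds hold (each per-IP value and each function value is at most 1). All functions are assumed to be max-is-best, and all names are hypothetical.

```python
def normalised_influence(betas):
    """Scale one function's coefficients so their absolute values sum to 1.

    Assumes the function is not a "zero" function (at least one non-zero beta).
    """
    total = sum(abs(b) for b in betas)
    return [b / total for b in betas]


def system_contradiction(beta_matrix):
    """Return (system contradiction, list of per-function contradictions).

    beta_matrix[l][i] is the main-effect coefficient of IP i on function l.
    Assumed stand-in metric: two functions contradict in an IP when their
    influences have opposite signs; the contradiction is weighted by the
    smaller of the two influence shares.
    """
    gamma = [normalised_influence(row) for row in beta_matrix]
    per_function = []
    for l, g_l in enumerate(gamma):
        c_l = 0.0
        for i, g_li in enumerate(g_l):
            worst = 0.0  # maximum over all other functions w
            for w, g_w in enumerate(gamma):
                if w != l and g_li * g_w[i] < 0:
                    worst = max(worst, min(abs(g_li), abs(g_w[i])))
            c_l += worst  # sum over the IPs of function l
        per_function.append(c_l)
    # The system is characterised by its most contradicted function.
    return max(per_function), per_function


print(system_contradiction([[1.0, 0.0], [0.0, 1.0]]))    # uncoupled
print(system_contradiction([[1.0, 1.0], [2.0, 2.0]]))    # positive coupling
print(system_contradiction([[3.0, 0.0], [-2.0, 0.0]]))   # full contradiction
```

In this stand-in, an uncoupled system and a positively coupled system both score zero, which reflects the distinction drawn in the text between coupling as such and contradicting coupling.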

The example of the diaphragm spring clutch introduced in Sect. 2 yields a contradiction value of 0.7 (70 %). The force to disengage the clutch (F) and the responsiveness (R) have strongly contradicting requirements with respect to the relevant IPs (k and t). However, as mentioned before, the force to disengage the clutch can be addressed by a supporting function like a lever arm or hydraulic actuator. This essentially decouples the functions, leading also to a lower contradiction score. Neglecting the disengagement force as a function leads to a contradiction value of 0.1 (10 %).

It has to be noted that this is a simplification for the description of a system’s contradiction level to ensure applicability in practice. There are instances where couplings and contradictions span multiple functions complicating the metric significantly.

4.2 Robustness

The robustness level of a product or system describes its functional insensitivity to variation of any kind whilst satisfactorily meeting all functional requirements. The sources of variation can be categorized into manufacturing, assembly, load, environment, material, signal and time-dependent variation (Ebro et al. 2012). Robustness can be evaluated in many ways (Göhler et al. 2016). However, most metrics to describe robustness only address single functions. Among those are, for example, the signal-to-noise ratio (Taguchi et al. 2005) and derivative-based, variance-based and regression-based sensitivity indices (Saltelli et al. 2008). In robust design optimization (RDO), the trade-off between multiple functions is addressed with multi-objective optimization algorithms. For example, Bras and Mistree (1993) utilize the methodology of compromise decision support problems (cDSP).

For this study, the evaluation of the system robustness is based on the idea that a robust system is less sensitive to ingoing variation and therefore has a larger design space, also called the common range, which is the overlap between the design range and the system range (Suh 2001). Monte Carlo analysis (MCA) is used to alter all influencing parameters simultaneously in order to cover the entire system range. However, the definition of the common range is dependent on a “goodness” criterion for the systems’ individual functional performance. Using this criterion to judge whether a parameter set leads to the system being acceptable or unacceptable is similar to reliability assessments, where a system can also only have two states: working or failed. The number of successes in the MCA is a measure of how big the common range is and therefore how robust the system is to variation. In the remainder of this paper, we will refer to this robustness score as the yield \(Y\).

The MCA comprises \(b\) iterations (trials) in which the parameters \(x_{1} \ldots x_{n}\) are varied randomly in a specified interval \(v_{DP}\) for allowed variations to derive the varied parameter set \(x_{i}^{'}\) (Eq. 19). The performance ratio \(pr_{l}\) for the individual functions \(y_{l}\) is computed and compared to the yield criterion \(z\) (Eqs. 20 and 21). If the performance ratio of every function is greater than or equal to the yield criterion, this iteration (in design terms: combination of varied parameters) is considered a “success”. As discussed earlier in this article, only max-is-best and min-is-best requirements are taken into account for this study. In this case, \(z\) can be interpreted as the minimum required performance relative to the nominal performance. The yield, i.e. the ratio of successes to trials, is normalized with the number of influential factors \(a\) in the system (Eqs. 22 and 23). Even though a parameter is active, its contribution can be very low. By normalizing with the number of influencing factors, the robustness scores can be made comparable.
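The yield estimation can be sketched as a short Monte Carlo loop. The example below is illustrative only. The performance ratio is assumed to be the relative change against nominal performance, `(y(x') - y(x)) / |y(x)|`, the variation is assumed to be uniform and relative to the nominal parameter value, all functions are treated as max-is-best, and the normalisation by the number of influential factors (Eqs. 22 and 23) is not reproduced. The two small example systems and all names are hypothetical.

```python
import random


def yield_estimate(functions, x_nominal, v_dp=0.10, z=0.0,
                   trials=20_000, seed=0):
    """Fraction of trials in which every function meets the yield criterion z.

    Assumptions: uniform relative variation of +/- v_dp on every IP,
    max-is-best functions, non-zero nominal performance, and
    pr = (y(x') - y(x)) / |y(x)| as the performance ratio.
    """
    rng = random.Random(seed)
    nominal = [f(x_nominal) for f in functions]
    successes = 0
    for _ in range(trials):
        x_var = [xi * (1.0 + rng.uniform(-v_dp, v_dp)) for xi in x_nominal]
        ok = True
        for f, y0 in zip(functions, nominal):
            pr = (f(x_var) - y0) / abs(y0)
            if pr < z:
                ok = False
                break
        if ok:
            successes += 1
    return successes / trials


# Two functions that both benefit from larger x0 and x1 (positive coupling).
supporting = [lambda x: x[0] + x[1], lambda x: 2.0 * x[0] + x[1]]
# Two functions with opposite requirements on the shared IP x0.
contradicting = [lambda x: x[0], lambda x: 1.0 - x[0]]

x0 = [0.5, 0.5]
y_support = yield_estimate(supporting, x0)
y_contra = yield_estimate(contradicting, x0)
y_contra_relaxed = yield_estimate(contradicting, x0, z=-0.1)
print(y_support, y_contra, y_contra_relaxed)
```

With `z = 0` the contradicting pair has essentially no common range, whereas relaxing the criterion to the size of the ingoing variation removes the penalty, which matches the qualitative behaviour the model is meant to capture.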

5 Model execution

MATLAB is used to compute a data set of \(q\) systems with the presented hierarchical probability system model and to evaluate their functional contradiction and robustness. Analysing a population of systems has the advantage that trends and correlations can be detected. Due to the probabilistic set-up of the model, there is a chance of “zero” functions, where all coefficients \(\beta\) are zero for a function. In that case, the product or system would fail to accomplish one of the required functions. Those systems are considered incomplete and are removed from the data set. Furthermore, there is a chance of the parameter set \(\bar{x}\) being the only solution for \(z = 0\) and low numbers of influencing factors. These cases have also been disregarded.

The values for the probabilities and factors for the single functions in the model have been adapted from Frey and Li (2008), who investigated various empirical examples to extract those, to ensure the link to real-world systems. Table 2 states all probabilities and factors used in the model.

Given the probabilities in Table 2, the system model has been set up for n = 5 influencing parameters and m = 5 functions in a population of q = 250 systems. These model parameters have been chosen to keep the computational effort reasonable whilst ensuring well-distributed data points across the whole range of contradiction and level of robustness, and therefore ensuring the power of the data. The dependence of the outcome on the selected number of influencing parameters and functions will be investigated and discussed later in the paper. The MCA sample size has been set to \(b = 1000000\) following a study of the convergence of the slope and the intercept in the linear regression model (for the case \(v_{\text{DP}} = 10\,\%\), \(z = 0\)) as a balance between computational time and accuracy (see Fig. 9).

6 Results

6.1 Association between coupling and robustness

Figure 10 shows a scatter plot of the normalized yield, and therefore of the system robustness, against the number of couplings in a system for \(q = 250\) simulated systems with a uniformly distributed variation \(v_{\text{DP}} = 10\,\%\) in the IPs and a yield limit of \(z = 0\). In the context of manufacturing variation, a normal distribution is often used to reflect the nature of the variation. With the focus on the system and common range, i.e. the robustness, a uniform distribution of the variation in the IPs was chosen to cover the system range as efficiently as possible. Two data points for the example of the diaphragm spring clutch (with and without the function for the disengagement force) have been added to the plot to illustrate the connection to a real-world design example. Pearson’s and Spearman’s tests for independence have been conducted to quantify the association. With p values of 0.13 and 0.11, respectively, the tests suggest independence. This means that considering the number of couplings alone does not give any insight into how robust a system is, which addresses Research Question 1.

6.2 Association between functional contradiction and robustness

The scatter plot in Fig. 11 shows the normalized yield against the functional contradiction as defined in the previous section for the same model run as before. To describe the association, the linear least-squares fit (Eq. 24) with its 95 % confidence bounds is included in the plot. Again, the data points for the example of the diaphragm spring clutch have been added to the plot.

As can be seen from the scatter plot (Fig. 11), there is a strong association between the level of contradiction in the functional requirements and the yield, i.e. the robustness of the system. With a p value of the F-statistic of 1.4e−36, the association is statistically significant. Pearson’s and Spearman’s tests confirm this. Also, the 95 % confidence bounds do not include a zero slope, which would potentially mean independence. This result addresses Research Question 2.
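The check that the confidence bounds of the slope exclude zero can be illustrated with an ordinary least-squares fit. The sketch below uses synthetic data generated inside the script, so the numbers it produces are not the paper's results. The true slope of -0.5, the intercept of 0.8 and the noise level are made-up values for demonstration, and the confidence interval uses a normal quantile as a large-sample approximation to Student's t, which is reasonable for a sample of a few hundred systems.

```python
import math
import random
from statistics import NormalDist, mean


def linear_fit(xs, ys, confidence=0.95):
    """Ordinary least-squares line with an approximate CI for the slope."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance and standard error of the slope.
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    # Large-sample approximation: normal quantile instead of Student's t.
    q = NormalDist().inv_cdf(0.5 + confidence / 2)
    return slope, intercept, (slope - q * se_slope, slope + q * se_slope)


# Synthetic stand-in for (contradiction, normalized yield) pairs of 250 systems.
rng = random.Random(0)
contradiction = [rng.random() for _ in range(250)]
norm_yield = [0.8 - 0.5 * c + rng.gauss(0.0, 0.1) for c in contradiction]

slope, intercept, (ci_low, ci_high) = linear_fit(contradiction, norm_yield)
print(f"slope = {slope:.3f}, 95 % CI = [{ci_low:.3f}, {ci_high:.3f}]")
print("zero slope excluded:", not (ci_low <= 0.0 <= ci_high))
```

If the interval contained zero, the data would be compatible with no linear association between contradiction and yield.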

6.3 Sensitivity to assumptions and model set-up

To verify the validity of the outcome and its independence of the model assumptions, some variations of the model have been investigated. Table 3 summarizes the model variants with their parameters and results.

As can be seen from Table 3, the association between the level of contradiction and the normalized yield is statistically significant for the model variants 1–7. In particular, the results show that the association also holds for systems with higher numbers of IPs \(n\) and functions \(m\). Furthermore, the results from variant 4 suggest that the association is independent of the setting of the variation interval \(v_{\text{DP}}\). However, the yield is strongly associated with the minimum required performance of the functions \(z\). Since main effects with linear correlations to the functional performance are most likely and most powerful in the presented model, it is expected that as the yield limit decreases to values equal or close to the magnitude of the ingoing variation \(v_{\text{DP}}\), the yield increases and becomes independent of the level of contradiction. In simple terms, this means that as the specification window becomes wider, finding a design solution becomes easier, with a wider range of values to choose from. At a certain point, the specification windows are so large that the contradictions cause a relatively minor limitation to the parameter selection range and therefore have little impact on the yield. The results for variants 5–8 confirm this. Figure 12 shows the linear fits for a decreasing yield limit \(z = 0 \ldots - 0.1\). The ingoing variation is in all cases \(v_{\text{DP}} = 10\;\%\). All other model parameters are kept unchanged.

7 Discussion

Various scholars have investigated the relation between robustness to variation and complexity. However, there is no universal definition of complexity, and therefore the studies have often had different foci and levels of detail. One of the most influential frameworks in this field is axiomatic design, as discussed in this paper. Suh (2001) defines complexity “as a measure of uncertainty on achieving the specified FRs”. The metric of the information content, which is defined as the logarithm of the inverse of the probability of success, is used as a metric for both robustness and complexity (El-Haik and Yang 1999). Magee and de Weck (2004) describe a complex system as “a system with numerous components and interconnections, interactions or interdependence […]”. In accordance with this definition, some complexity metrics can be found in the literature that are based on the part and interface count as well as the number of part and interface types (Slagle 2007). These, however, are very simplified metrics and not appropriate for use in the context of robustness because they lack the functional dimension. In the original robust design approach by Taguchi, system complexity plays a minor role. Implicitly, a less complex design can easily be optimized in the parameter design phase. On the other hand, a certain complexity is necessary to be able to find more robust parameter settings (Taguchi et al. 2005). Taguchi’s view on complexity, however, refers more to the sheer number of parameters. Summers and Shah (2010) distinguish between complexity metrics based on the size (“information that is contained within a problem”), coupling (“connections between variables at multiple levels”) and solvability (the difficulty of solving a design problem) for the evaluation of parametric and geometric embodiment design problems (see also Braha and Maimon 1998).
In this study, we define complexity through the degree of coupling and the level of contradiction between functional requirements. This is an extension of the ideas of the independence axiom. Suh presents a mathematical argument showing that for deterministic worst-case considerations, the allowable variations in the design parameters \(\Delta {\text{DP}}\) for specified variation limits of the functional requirements \(\Delta {\text{FR}}\) are greatest for uncoupled designs (Suh 2001). However, these robustness calculations depend on fixed \(\Delta {\text{FRs}}\), implying that all FRs are of the nominal-is-best type, which cannot always be assumed, as shown in the clutch example case. Furthermore, an example of a more robust coupled design has been presented in the opening of this paper (Figs. 3, 4).
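
The worst-case argument can be sketched for a design with two FRs and two DPs. The following is an illustrative reconstruction, not a reproduction of Suh's derivation: it assumes a linearized design matrix with elements \(A_{ij}\) (a symbol introduced here for illustration only), \(A_{11} \ne 0\), and treats \(\Delta {\text{FR}}_i\) and \(\Delta {\text{DP}}_j\) as non-negative tolerance half-widths.

```latex
% Linearized design equation for small variations
\[
\begin{pmatrix} \Delta\text{FR}_1 \\ \Delta\text{FR}_2 \end{pmatrix}
=
\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}
\begin{pmatrix} \Delta\text{DP}_1 \\ \Delta\text{DP}_2 \end{pmatrix}
\]

% Worst-case condition for FR_1: all contributions add up unfavourably
\[
|A_{11}|\,\Delta\text{DP}_1 + |A_{12}|\,\Delta\text{DP}_2 \le \Delta\text{FR}_1
\]

% Uncoupled design (A_{12} = 0): the full tolerance is available to DP_1
\[
\Delta\text{DP}_1 \le \frac{\Delta\text{FR}_1}{|A_{11}|}
\]

% Coupled design (A_{12} \neq 0, \Delta\text{DP}_2 > 0): part of the tolerance is consumed by DP_2
\[
\Delta\text{DP}_1 \le \frac{\Delta\text{FR}_1 - |A_{12}|\,\Delta\text{DP}_2}{|A_{11}|}
< \frac{\Delta\text{FR}_1}{|A_{11}|}
\]
```

The bound presupposes a two-sided tolerance \(\Delta {\text{FR}}_1\) around a target value, which is the nominal-is-best assumption questioned above. For a max- or min-is-best requirement only one side of the window is limiting, so the sign of \(A_{12}\) matters and the absolute values in the worst-case condition no longer apply in the same way.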

To the authors’ knowledge, the presented study is the first attempt to relate robustness and complexity quantitatively using a model-based probabilistic approach. We found that for max- and min-is-best requirements, it is not the coupling of functions itself, but rather the level of contradiction of the couplings that influences robustness. As long as contradictions in the requirements imposed on the parameters, properties and dimensions of the system can be avoided, coupling does not inherently harm the robustness. Descriptive studies like the one by Frey et al. (2007) support this finding with an empirical analysis of complex systems. They assessed part counts and complexity of airplane engines against their reliability and found that despite the constantly increasing degree of coupling and integration, the reliability of aero engines improved. Braha and Bar-Yam (2007, 2013) studied the network topology of four large-scale product development networks. They defined coupling with the concept of assortativity, which describes the tendency of nodes (IPs in the case of engineering design networks) with high connectivity to connect with other nodes with high connectivity. Networks with high assortativity are inherently more complex, which tends to reduce system robustness. Contradiction as defined in this study can be seen as a measure of assortativity in the domain of unipartite networks. Furthermore, it was found that systems are robust and error tolerant to variation in random nodes but vulnerable to perturbation in the highly connected central nodes (“design hubs”) (Albert and Barabási 2002; Braha et al. 2013; Sosa et al. 2011). In the engineering design context, this refers to the necessity to control the design but also the variation of the most influential parameters with contradicting requirements (Braha and Bar-Yam 2004, 2007). Carlson and Doyle (2000) proposed and discussed the framework of HOT (Highly Optimized Tolerance). They argue that evolving complex systems which underwent numerous generations are extremely robust to designed-for variation, but “hypersensitive to design flaws and unanticipated perturbations”. The increase in robustness is driven by continuous development and improvement, including the resolution of known imperfections and contradictions. This view supports the results of the presented study. An implication of these findings is that the TRIZ contradiction matrix (Altshuller 1996) is likely to be a suitable method for increasing system robustness at a conceptual level. The method suggests that the contradicting parameters are the limiting factors of a design and that inventive principles can be identified to overcome the contradictions “without compromise”.

A limitation of the presented study is that as of now, the model features only maximum-is-best and minimum-is-best requirements. Nominal-is-best requirements have been neglected. To extend the insight to all types of requirements, further investigations are needed. Further, this study is based on the analysis of a population of complex systems generated with the model proposed in this paper. We found clear correlations between the level of contradiction and robustness. Whilst definite predictions for the robustness of single systems cannot be made, it can be concluded that the chance that a contradicted system is less robust is high.

8 Concluding remarks

In this study, we extended the hierarchical probability model by Frey and Li (2008) to model complex systems and their functional responses for the case of maximum-is-best and minimum-is-best requirements. The model was used to assess how a system’s robustness to variation is influenced by design complexity in terms of the degree of functional coupling and the level of contradiction between the functional requirements.

In answer to Research Question 1, the correlation between the number of couplings in the system and the system robustness was found not to be statistically significant.

In answer to Research Question 2, a statistically significant association between the level of contradiction and system robustness was found (p = 1.4e−36) where an increase in contradiction is associated with a decrease in robustness.

These results have important implications for our understanding of the nature of complexity and robustness. Suh suggests two design axioms which can be, to an extent, “accepted without proof” (as per the definition of an axiom). The robustness claims of the independence axiom are based on assumptions about the fill of the design matrices and the nature of the functional requirements, which are not always fulfilled in real-world examples. The results in this study challenge Suh’s theory about the negative impact of coupling in systems with max- and min-is-best requirements, stressing that it is actually the level of contradiction of the couplings that determines the level of robustness. Uncoupled designs are by definition free from coupling and therefore from contradictions, and as a result are, in general, inherently robust relative to coupled designs. However, there are specific examples where this does not hold. Since coupling can be used to reduce the number of influencing factors, it is possible to reduce the overall variability, and therefore improve the robustness, by introducing positive couplings (couplings without contradiction).
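
The point about positive couplings can be made concrete with a simple counting argument. The sketch below is an assumption-laden illustration and not the machining example or the model of this study: each function is taken to equal its influencing parameter (unit sensitivity), every parameter varies uniformly and independently with half-width `v` around the same nominal value, and all requirements are max-is-best with limit `z`. The function names are hypothetical.

```python
def pass_probability(x_nom, v, z):
    """P(x >= z) for x uniformly distributed on [x_nom - v, x_nom + v]."""
    lo, hi = x_nom - v, x_nom + v
    if z <= lo:
        return 1.0
    if z >= hi:
        return 0.0
    return (hi - z) / (hi - lo)


def yield_uncoupled(x_nom, v, z, n_functions):
    """Each function has its own independently varying parameter,
    so the individual pass probabilities multiply."""
    return pass_probability(x_nom, v, z) ** n_functions


def yield_positively_coupled(x_nom, v, z):
    """All functions share one parameter with the same sign of influence,
    so they pass or fail together and only one variation source counts."""
    return pass_probability(x_nom, v, z)


if __name__ == "__main__":
    x_nom, v, z = 0.5, 0.1, 0.42
    print("uncoupled, 2 functions:", yield_uncoupled(x_nom, v, z, 2))
    print("positively coupled:    ", yield_positively_coupled(x_nom, v, z))
```

Under these assumptions the shared-parameter design has the higher joint yield whenever the individual pass probability lies strictly between 0 and 1, simply because fewer independent sources of variation have to stay within limits at the same time. Real designs will differ in sensitivities and nominal values, so this only indicates the direction of the effect.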

In practical terms, the knowledge of the association between system robustness and functional coupling can be used in early design stages. When the first concepts and embodiments are produced, engineers are often able to identify the most influential properties and dimensions for the performance of the single functions, making it possible to evaluate contradictions to a certain level. A robustness evaluation can therefore be conducted on the different concepts based on the level of contradiction identified within the concepts. Further, the design focus and control should lie on the coupled and contradicted parameters. However, for precise evaluations of functional performances, yield and reliability, detailed models and experiments are necessary. Knowing about contradicting and competing requirements provides insight into the robustness characteristics of complex products or systems that can be utilized to minimize risk and make more educated concept selections.

References

Albert R, Barabási A-L (2002) Statistical mechanics of complex networks. Rev Mod Phys 74(1):47–97. doi:10.1103/RevModPhys.74.47

Altshuller G (1996) And suddenly the inventor appeared: TRIZ, the theory of inventive problem solving. Technology

Apple (2015) Apple iPhone technical specifications. https://support.apple.com/specs/iphone

Box GEP, Meyer RD (1986) An analysis for unreplicated fractional factorials. Technometrics. doi:10.2307/1269599

Box GEP, Wilson KB (1951) On the experimental attainment of optimum conditions. J R Stat Soc Ser B 13(1):1–45. doi:10.1007/978-1-4612-4380-9_23

Braha D, Bar-Yam Y (2004) Information flow structure in large-scale product development organizational networks. J Inf Technol 19(4):244–253. doi:10.1057/palgrave.jit.2000030

Braha D, Bar-Yam Y (2007) The statistical mechanics of complex product development: empirical and analytical results. Manag Sci 53(7):1127–1145. doi:10.1287/mnsc.1060.0617

Braha D, Maimon O (1998) The measurement of a design structural and functional complexity. IEEE Trans Syst Man Cybern Part A Syst Hum 28(4):527–535. doi:10.1109/3468.686715

Braha D, Brown DC, Chakrabarti A, Dong A, Fadel G, Maier JRA, Wood K (2013) DTM at 25: essays on themes and future directions. In: Proceedings of the 2013 ASME international design engineering technical conferences & computers and information in engineering conference IDETC/CIE. Portland, Oregon, pp 1–17

Bras BA, Mistree F (1993) Robust design using compromise decision support problems. Eng Optim. doi:10.1080/03052159308940976

Carlson JM, Doyle J (2000) Highly optimized tolerance: robustness and power laws in. Complex Systems. doi:10.1103/PhysRevE.60.1412

Chipman H, Hamada M, Wu CFJ (1997) Variable-selection Bayesian approach for analyzing designed experiments with complex aliasing. Technometrics 39:372–381. doi:10.1080/00401706.1997.10485156

Ebro M, Howard TJ (2016) Robust design principles for reducing variation in functional performance. J Eng Des. doi:10.1080/09544828.2015.1103844

Ebro M, Howard TJ, Rasmussen JJ (2012) The foundation for robust design: enabling robustness through kinematic design and design clarity. In: Proceedings of international design conference, DESIGN, vol DS 70, pp 817–826

Eifler T, Olesen JL, Howard TJ (2014) Robustness and reliability of the GM ignition switch—a forensic engineering case. In: 1st International symposium on robust design, pp 51–58

El-Haik B, Yang K (1999) The components of complexity in engineering design. IIE Trans 31(10):925–934. doi:10.1080/07408179908969893

Frey DD, Li X (2008) Using hierarchical probability models to evaluate robust parameter design methods. J Qual Technol 40(1):59–77

Frey D, Palladino J, Sullivan J, Atherton M (2007) Part count and design of robust systems. Syst Eng 10(3):203–221. doi:10.1002/sys.20071

Göhler SM, Howard TJ (2015) The contradiction index—a new metric combining system complexity and robustness for early design stages. In: Proceedings of the ASME 2015 international design engineering technical conferences & computers and information in engineering conference, pp 1–10

Göhler SM, Eifler T, Howard TJ (2016) Robustness metrics: consolidating the multiple approaches to quantify robustness. J Mech Des. doi:10.1115/1.4034112

Gribble SD (2001) Robustness in complex systems. In: Proceedings eighth workshop on hot topics in operating systems, pp 17–22. doi:10.1109/HOTOS.2001.990056

Hillier VAW, Coombes P (2004) Hillier’s fundamentals of motor vehicle technology. Nelson Thornes, Cheltenham

Jackson A (2013) A road to safety: evolution of car safety features. http://visual.ly/evolution-car-safety-features

Kutner MH, Nachtsheim C, Neter J (2004) Applied linear regression models. McGraw-Hill/Irwin, New York

Lenth R (1989) Quick and easy analysis of unreplicated factorials. Technometrics. doi:10.2307/1269997

Magee CL, de Weck OL (2004) Complex System Classification. Incose. doi:10.1002/j.2334-5837.2004.tb00510.x

Saltelli A, Ratto M, Andres T, Campolongo F, Cariboni J, Gatelli D, Tarantola S (2008) Global sensitivity analysis: the primer. Wiley, Chichester

Slagle JC (2007) Architecting complex systems for robustness. Master's thesis, Massachusetts Institute of Technology

Sosa M, Mihm J, Browning T (2011) Degree distribution and quality in complex engineered systems. J Mech Des 133(10):101008. doi:10.1115/1.4004973

Suh NP (2001) Axiomatic design: advances and applications. Oxford University Press, New York

Summers JD, Shah JJ (2010) Mechanical engineering design complexity metrics: size, coupling, and solvability. J Mech Des. doi:10.1115/1.4000759

Taguchi G, Chowdhury S, Wu Y (2005) Taguchi’s quality engineering handbook. Wiley, Hoboken

Wu CJ, Hamada MS (2011) Experiments: planning, analysis, and optimization. Wiley, Hoboken

Acknowledgments

The authors would like to thank Novo Nordisk for their support for this research project.

Cite this article

Göhler, S.M., Frey, D.D. & Howard, T.J. A model-based approach to associate complexity and robustness in engineering systems. Res Eng Design 28, 223–234 (2017). https://doi.org/10.1007/s00163-016-0236-1

DOI: https://doi.org/10.1007/s00163-016-0236-1