Abstract

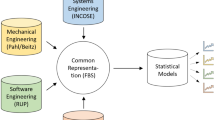

This paper presents a quantitative method for analysing process models of designing independently of the specific design domain. The method uses the situated function–behaviour–structure framework as the basis for a simulation model of a designer acting according to these models. The results of these simulations are sequences of design issues that are analysed using cumulative occurrence graphs with associated quantitative measures. The paper illustrates the approach by analysing and comparing three models of designing from different domains: Pahl and Beitz’ model of engineering design, the rational unified process of software design and a model of design for six sigma in service design. The quantitative results indicate some commonalities across the different models. These commonalities are related to the start of cognitive effort spent on design issues, the continuity of the cognitive effort throughout the design process and the constancy of the speed with which design issues are generated.

1 Introduction

Designing is a complex activity that has attracted a significant amount of attention from different research domains, trying to demystify its manifold processes. One of the biggest challenges is to define designing as a unique activity while it is used in a vast range of domains such as engineering, software, graphical interfaces and electronics, to name a few. Understanding the commonalities amongst different expressions of designing is a foundational step in developing a universal understanding of design (Asimow 1962; Lawson 1980; Cross 1982; Dym 1994; Visser 2009).

We hypothesize that designing is an act that is independent of the domain of its application, in the sense that different domains have the same understanding of this act even if they use different terms to describe it. This paper presents an approach to testing this hypothesis based on analysing and comparing models of designing from different domains. There have been similar efforts in the past. For example, in 1998 an international workshop organized by Grabowski et al. (1998) brought together design theorists from different disciplines, aiming to build a unified or universal design theory. Discussions concentrated on finding out whether differences between the models were caused by different concepts or just by different terms for the same concept. Such discussions have continued until today (Sim and Duffy 2003; Frey and Dym 2006; Boon and Knuuttila 2009; Vermaas 2009, 2014; Eder 2012; Chakrabarti and Blessing 2014; Lindemann 2014).

Approaches to extracting commonalities across different models of designing have been limited to qualitative analyses. In this paper, we propose a quantitative approach to analysing and comparing models of designing. It is based on simulations of the design process using the domain-independent function–behaviour–structure (FBS) ontology and its derivative, the situated FBS framework. The simulation models are constructed by mapping the models of designing onto the 20 processes defined in the situated FBS framework and aggregating them to the six design issues of requirement issues, function issues, expected behaviour issues, structure behaviour issues, structure issues and description issues. The cumulative occurrence of the six design issues over the course of a simulation is analysed in terms of their first occurrence (representing the start of cognitive effort spent on design issues), their continuity (characterizing the cognitive effort spent on design issues towards the end of designing), their linearity (representing the constancy of the speed with which design issues are produced) and their slope (representing the rate at which design issues are produced). The quantitative approach presented in this paper is applied to Pahl and Beitz’ model of engineering design, the rational unified process of software design and the design for six sigma model of service design.

This paper is structured as follows: Sect. 2 presents the three models of designing that will be used to demonstrate the approach and outlines their common overall process structure qualitatively. Section 3 develops a simulation model for these domain-specific models of designing based on the steps contained within them. Section 4 presents the results derived from running the simulation for each of the three models of designing. Section 5 describes the commonalities found, and Sect. 6 discusses some conclusions that can be drawn from the results. Appendices include the situated FBS framework and the mappings between the three models and the FBS design issues.

2 Three domain-specific models of designing

Domain-specific models differ from each other mostly in the concepts they use for describing the respective artefacts to be designed. These models commonly represent designing as a phase-based activity (Tate and Nordlund 1996) where the state of the design gradually progresses from abstract to concrete. We chose three phase-based models of designing from disparate design domains as a basis for our analyses: engineering design, software design and service design.

Engineering design is a design discipline with a long tradition in developing models of designing. One of the most detailed and established models in this discipline is Pahl and Beitz’ (2007) systematic approach, which was first published in its German edition in 1977. It describes designing as a sequence of four phases: (1) task clarification, (2) conceptual design, (3) embodiment design and (4) detail design. Task clarification is concerned with collecting, formulating and documenting the requirements of the product to be designed. Conceptual design aims to identify the basic principles and outline of a design solution (or concept). Embodiment design then elaborates the design into a layout that satisfies various technical and economic criteria. Detail design finalizes the design and prepares production documents. Each of the four phases comprises a sequence of activities that may be executed iteratively. After every phase, a “decision-making step” is performed to assess the results of the phase and decide whether the subsequent phase can be started or whether the phase needs to reiterate. Here, “[t]he smallest possible iteration loop is desirable” (ibid, p. 129). Pahl and Beitz do not explicitly exclude iterations across different phases. On the other hand, the “phase-based” character of the systematic approach clearly favours a “waterfall” view where iterations are to occur only within a phase (Tate and Nordlund 1996; Unger and Eppinger 2011). Table 1 shows the phases of Pahl and Beitz’ systematic approach and the activities associated with each phase.

The discipline of software design has also brought about several models of designing. Here, one of the most widely used models is the rational unified process (RUP). Although it was primarily developed as a commercial product, its basic concepts outlined by Kruchten (2004) form a publicly available and highly cited model of designing. RUP defines the following phases for software design processes: (1) inception, (2) elaboration, (3) construction and (4) transition. Inception deals with understanding the requirements and defining the scope of the design. Elaboration specifies and prototypes the main features and architecture of the software design solution. Construction elaborates this solution by developing the complete set of features and implementing all the components of the software. Transition focuses on verifying design quality, manufacturing and delivering the software to the user. Kruchten (2004) suggests this four-phase process be executed iteratively. He also suggests that the specific activities within each phase are to be configured depending on the needs of the individual design project. On the other hand, he describes “typical iteration plans” (ibid, Chapter 16) that can be viewed as a representative sequence of activities that is likely to cover most instances of software design processes. Table 2 summarizes the phases and activities in such a “typical” configuration of RUP.

Service design is a more recent discipline with few existing process models. One of them is design for six sigma (DFSS), which has been used to describe both designing products and designing services (or processes). One of the many variants of DFSS that is specific to designing services is the identify–conceptualize–optimize–validate (ICOV) model presented by El-Haik and Roy (2005). We will refer to this model as DFSS–ICOV in this paper. It proposes the following phases: (1) identify, (2) conceptualize, (3) optimize and (4) validate. The identify phase collects and analyses the requirements for the service to be designed, by listening to both the “voice of the customer” and the “voice of the business”. The conceptualize phase determines the technical requirements and basic components of the service. The optimize phase aims to configure the service in a way to achieve the best possible performance. The validate phase tests and refines the service and prepares its launch. At the end of every phase in DFSS–ICOV, there is a review to decide whether to proceed to the next phase or whether to rework some decisions. Table 3 shows the phases and activities described in this model.

While there are obvious domain-specific differences between the three models, we can already extract a first commonality: all three models use four sequential phases with similar goals, Table 4. As designing proceeds through the four phases, its focus ultimately shifts from the design problem (phase 1) to the design solution (phase 4), with two intermediate stages: one stage (phase 2) generates a list of general concepts that have the potential of being used as starting points for synthesis of variations (“concept structure”). The other stage (phase 3) turns these general concepts into specific solutions with respect to formulated goals, constraints or resources (“solution structure”). This general four-phase model is consistent with the widely held understanding of designing as a progression from the abstract to the concrete (Roozenburg and Cross 1991; Welch and Dixon 1994; Hubka and Eder 1996).

Despite these commonalities, there is also a significant difference between the three models: while the number of activities in the systematic approach (29) and RUP (35) is quite similar, the number of activities in DFSS–ICOV (7) is much smaller. This raises doubts about the usefulness of choosing DFSS–ICOV for comparison against the other models of designing. In Sect. 3.2, we show that applying the FBS coding scheme makes DFSS–ICOV more fine-grained and thus more comparable with the other models of designing. In addition, Sect. 3.3 will show that the measures we use for analysing and comparing different models are independent of the number of activities or steps described within a model.

3 Developing a simulation model

Models of designing are generally understood as guidelines to be used by designers when tackling a design task. If we can describe the activities of a designer who follows the guidelines provided by a specific model, we can simulate the design process represented in the model. This section presents how such a simulation model can be produced in two steps: generalizing the concepts and terms used by a specific model of designing into FBS design issues and mapping the model onto the situated FBS framework.

3.1 Generalising model-specific concepts into FBS design issues

Each of the three models of designing describes sequences of activities within the four design phases. The models differ not only in the number of these activities, but also in the terms and concepts they use to describe the output of every activity. For a more detailed analysis, we need to map the specific concepts used in the models onto a uniform, generic coding schema. One such schema is the FBS design issue schema that has previously been used for analysing design protocols (Gero and McNeill 1998; Kan and Gero 2005). It consists of six design issues: requirements, function, expected behaviour, behaviour derived from structure (or, shorthand, structure behaviour), structure and description.

Requirements include all expressions of customer or market needs, demands, wishes and constraints that are explicitly provided to the designers at the outset of a design task. For example, requirement issues include “technical performance requirements […] articulated by the customer” (Pahl and Beitz 2007, p. 150), “stakeholder requests” (Kruchten 2004, p. 166) and “customer needs and wants” (El-Haik and Roy 2005, p. 84).

Function includes teleological representations that can cover any expression related to potential purposes of the artefact. These representations may be flow based or state based (Chittaro and Kumar 1998). Unlike requirement issues, function issues are not directly provided to the designer; they are generated by the designer based on interpretations of requirement issues. Function issues in the systematic approach include “the intended input/output relationship of a system” (Pahl and Beitz 2007, p. 31) and some examples of needs related to safety, aesthetics or economic properties. Function issues in RUP include the notion of a use case as a “sequence of actions a system performs that yields an observable result of value to a particular actor” (Kruchten 2004, p. 98), and some “nonfunctional requirements” that “deliver the desired quality to the end user” (ibid, p. 159). Function issues in DFSS–ICOV include “service and process functional requirements” that are derived from those requirements provided by the customer (El-Haik and Roy 2005, p. 87).

Expected behaviour includes attributes that describe the artefact’s expected interaction with the environment. They can be used as guidance and measurable assessment criteria for potential design solutions. Expected behaviour issues in the systematic approach include “physical effects” describing the “working principles” of the interactions between different parts of the design object (Pahl and Beitz 2007, p. 40), as well as “technical, economic and safety criteria” used for design evaluation (ibid, p. 193). Similarly, expected behaviour issues in RUP are captured by the “design model” that “consists of a set of collaborations of model elements that provide the behaviour of the system” (Kruchten 2004, p. 177), and “measurable testing goals” (ibid, p. 253) that are often subsumed in “nonfunctional requirements”. Expected behaviour issues in DFSS–ICOV include “CTSs (critical-to-satisfaction requirements, also known as big Ys)” (El-Haik and Roy 2005, p. 33) and some “functional requirements” such as the (expected) “service time” (ibid, p. 96). CTSs are considered expected behaviour (rather than function) because they specify measurable objectives with “acceptable performance levels” (Yang and El-Haik 2003).

Structure behaviour (or “behaviour derived from structure”) includes those attributes of the artefact that are measured, calculated or derived from the observation of a specific design solution and its interaction with the environment. Instances of structure behaviour must be of the same type as instances of expected behaviour, so as to allow for the comparison and evaluation of design solutions. As a result, structure behaviour issues cover the same notions in the three models of designing as outlined for expected behaviour issues.

Structure includes the components of an artefact and their relationships. They can appear either as a “concept structure” or as a “solution structure”, which are the outputs of phases 2 and 3 in Table 1. The former includes Pahl and Beitz’ (2007, p. 40) “working surfaces” and “working materials”, Kruchten’s (2004, p. 174) “classes and subsystems” and El-Haik and Roy’s (2005, p. 6) “design parameters”. The latter includes Pahl and Beitz’ (2007, p. 227) “layout” and “form”, Kruchten’s (2004, p. 256) “code” and El-Haik and Roy’s (2005, p. 7) “detail designs”.

Description includes any form of design-related representations produced by a designer, at any stage of the design process. The descriptions presented in the systematic approach include sketches, CAD models, requirements lists, physical prototypes, calculations and other documentation produced by mechanical engineers. Descriptions in RUP include storyboards, UML models, code files, test plans and other representations produced by software designers. Descriptions in DFSS–ICOV include house of quality diagrams, FMEA worksheets, process maps and concept selection matrices, among many others.

3.2 Mapping the models of designing onto the situated FBS framework

Every activity described in the three models of designing is concerned with generating one or more design issues. These activities may be mapped onto the eight fundamental processes defined in the FBS framework (Gero 1990), labelled 1–8 in Fig. 1:

1. Formulation transforms requirements into functions (R → F) and functions into expected behaviour (F → Be).
2. Synthesis transforms expected behaviour into structure (Be → S).
3. Analysis transforms structure into structure behaviour (S → Bs).
4. Evaluation compares expected behaviour with structure behaviour (Be ↔ Bs).
5. Documentation transforms structure into a description (S → D).
6. Reformulation type 1 transforms structure into new structure (S → S′).
7. Reformulation type 2 transforms structure into new expected behaviour (S → Be′).
8. Reformulation type 3 transforms structure into new function (S → F′ via Be).
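The eight processes listed above can be written down as a small lookup structure. The following is a minimal sketch in Python; the names `FBSProcess`, `FBS_PROCESSES` and `processes_from` are illustrative assumptions and not part of the FBS framework itself. Evaluation is recorded as a (Be, Bs) pair even though it is a comparison rather than a transformation.

```python
from typing import NamedTuple, Tuple


class FBSProcess(NamedTuple):
    """One of the eight fundamental FBS processes (hypothetical container)."""
    number: int
    name: str
    # (source, target) pairs; for evaluation the pair denotes a comparison.
    links: Tuple[Tuple[str, str], ...]


FBS_PROCESSES = [
    FBSProcess(1, "formulation", (("R", "F"), ("F", "Be"))),
    FBSProcess(2, "synthesis", (("Be", "S"),)),
    FBSProcess(3, "analysis", (("S", "Bs"),)),
    FBSProcess(4, "evaluation", (("Be", "Bs"),)),
    FBSProcess(5, "documentation", (("S", "D"),)),
    FBSProcess(6, "reformulation type 1", (("S", "S'"),)),
    FBSProcess(7, "reformulation type 2", (("S", "Be'"),)),
    FBSProcess(8, "reformulation type 3", (("S", "F'"),)),
]


def processes_from(source: str) -> list:
    """Return the names of all processes that take `source` as input."""
    return [p.name for p in FBS_PROCESSES
            if any(src == source for src, _ in p.links)]


print(processes_from("S"))
```

Querying the structure for "S" lists analysis, documentation and the three reformulation types, which reflects the central role of structure as input in the list above.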

For simulating the design process, however, these processes are still too coarse-grained as they do not include the situation in which they are performed. A more detailed view is provided by the situated FBS (sFBS) framework (see Appendix 1) that represents designing as the interaction of a designer with the design situation (Gero and Kannengiesser 2004). This framework defines 20 discrete processes that include a number of cognitive and physical activities, such as the interpretation of requirement lists and design representations, the reflection on current or past design experiences, the decision-making regarding the current design state space and physical actions including sketching, calculating and documenting.

Mapping the activities described in a model of designing onto the sFBS framework allows considering the designer’s situated interactions in the simulation model. At the same time, the basic representation of designing in terms of the six design issues is maintained. This is because the results of executing the 20 processes are specialized classes of design issues that can be aggregated back to the original six categories. The aggregation of the 20 sFBS processes to the six FBS design issues is shown in Table 5.

The mappings onto the sFBS framework require some interpretation of each model of designing in terms of elementary steps and the logical sequences of these steps. The three models presented in Sect. 2 provide sufficient elaboration and illustration to support this interpretation for most of their defined activities. Take the first activity, “Define basic market demands”, described within Pahl and Beitz’ design phase of task clarification. This activity requires as input the interpretation of a “development order” or “product proposal” that contains the product’s desired “functionality and performance”, which in the FBS design issue system is a requirement issue (interpreted by process 1 in the sFBS framework). Next, “basic market demands”, such as “suitable for tropical conditions” and “P > 20 kW” (Pahl and Beitz 2007, p. 147), are constructed by the designer as “implicit requirements, i.e. they are not articulated by the customer” (ibid, p. 150). We map these market demands onto function and expected behaviour issues (constructed by processes 4 and 5). They are compiled in a “requirements list” and “Quality Function Deployment (QFD)” diagrams (ibid, p. 145) that represent description issues (produced by processes 18 and 17). As shown in Table 6, these mappings result in five elementary design steps, each of which produces one design issue, and their logical sequence (more detailed comments for each of the mappings in the three models of designing can be found in Appendices 2, 3 and 4).
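The worked example above can be sketched as a small coding routine. This is only an illustration: the dictionary below contains just the five sFBS processes named in the example (the full aggregation of all 20 processes is the subject of Table 5), the pairing of processes 4 and 5 with function and expected behaviour is read in the order given in the text, and the names `PROCESS_TO_ISSUE` and `code_activity` are assumptions introduced here.

```python
# Partial aggregation of sFBS processes to FBS design issues, limited to the
# processes mentioned in the "Define basic market demands" example.
PROCESS_TO_ISSUE = {
    1: "R",    # interpretation of requirements
    4: "F",    # construction of function
    5: "Be",   # construction of expected behaviour
    17: "D",   # production of a description
    18: "D",   # production of a description
}


def code_activity(process_sequence):
    """Translate a sequence of sFBS process numbers into design issues."""
    return [PROCESS_TO_ISSUE[p] for p in process_sequence]


# Logical sequence of elementary steps for "Define basic market demands".
define_basic_market_demands = [1, 4, 5, 18, 17]
print(code_activity(define_basic_market_demands))  # ['R', 'F', 'Be', 'D', 'D']
```

Each elementary step yields exactly one design issue, so the activity contributes five entries to the simulated sequence.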

This method of coding and mapping was applied to all three models of designing, which was done in consensus between the two authors of this paper who are experts in FBS coding. The systematic approach has 87 mappings, RUP has 100 mappings, and DFSS–ICOV has 41 mappings.

The three sets of mappings of elementary steps can be viewed as a basis for simulation models that need to be complemented with assumptions regarding:

1. the number of occurrences of every elementary step, and
2. the number of iterations within a design phase (we assume that no cross-phase iterations will occur, given the “waterfall” nature of the models).

The first of these assumptions cannot be made without knowledge of specific instances of designing including knowledge about the novelty and complexity of the design task. Staying on the model level rather than the instance level, our working assumption is that every elementary step occurs only once within the same iteration. This assumption is used for each of the three models and will be revisited in the discussion of results.

The second assumption is similarly based on task- and designer-specific knowledge that is not available at this general level. However, in the case of RUP, Kruchten (2004, p. 133) states that there are three typical scenarios regarding the number of iterations for each of the four phases within RUP (phase 1: inception; phase 2: elaboration; phase 3: construction; phase 4: transition; see Table 4). These scenarios are shown in Table 7. They account for various influences on the design process, including task-related, technical, organizational, personal, market-related and other factors.

For the systematic approach and DFSS–ICOV, no concrete scenarios are detailed in the literature. Based on the high-level structural similarity of our three models (as shown in Sect. 2), an initial working assumption is that the scenarios in Table 7 will be used across all three models. We will revisit this assumption in the discussion of results.

Applying the three generic scenarios to each model of designing produces the data sets shown in Table 8. The number of steps for each model is calculated based on multiplying the number of elementary steps in each phase according to the number of iterations defined for the specific scenario. For example, DFSS–ICOV for scenario 1 has:
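In general terms, the calculation described above can be sketched as follows. The per-phase step counts and iteration numbers in the usage lines are hypothetical placeholders chosen for illustration; they are not the values from Tables 7 and 8. The function names are likewise assumptions. The sketch also assumes, as stated earlier, that iterations repeat a whole phase and never cross phase boundaries.

```python
def total_steps(steps_per_phase, iterations_per_phase):
    """Total number of design steps: sum over phases of steps * iterations."""
    if len(steps_per_phase) != len(iterations_per_phase):
        raise ValueError("one iteration count is needed per phase")
    return sum(s * k for s, k in zip(steps_per_phase, iterations_per_phase))


def expand_sequence(phase_sequences, iterations_per_phase):
    """Repeat each phase's issue sequence k times, keeping phase order."""
    expanded = []
    for sequence, k in zip(phase_sequences, iterations_per_phase):
        expanded.extend(list(sequence) * k)
    return expanded


# Hypothetical four-phase model (NOT data from the paper).
hypothetical_steps = [4, 3, 2, 1]
hypothetical_iterations = [1, 2, 2, 1]
print(total_steps(hypothetical_steps, hypothetical_iterations))  # 15
```

`expand_sequence` produces the full simulated issue sequence whose length equals the value returned by `total_steps`.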

3.3 Quantitative analysis

Having pre-processed the models of designing as sequences of steps, each of which produces an FBS design issue, allows applying cumulative occurrence analysis (Gero et al. 2014). This analysis has previously been applied to coded design protocols where designing is represented as a sequence of segments each producing one ontological design issue (Kannengiesser et al. 2013). The cumulative occurrence \(c\) of design issue \(x\) at design step \(n\) is defined as \(c = \sum_{i=1}^{n} x_{i}\), where \(x_{i}\) equals 1 if design step \(i\) is coded as \(x\) and 0 if design step \(i\) is not coded as \(x\). Plotting the results of this equation on a graph with the design steps \(n\) on the horizontal axis and the cumulative occurrence \(c\) on the vertical axis will visualize the occurrence of the design issues. Figure 2 shows a general representation of such a graph.
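The definition of cumulative occurrence translates directly into a running sum over an indicator sequence. The sketch below assumes that a simulated design process is available as a list of issue codes; the function name `cumulative_occurrence` and the short example sequence are illustrative.

```python
from itertools import accumulate


def cumulative_occurrence(steps, issue):
    """Return c for n = 1..N, where c is the number of steps coded as `issue`
    among the first n steps."""
    return list(accumulate(1 if s == issue else 0 for s in steps))


# Example: the five elementary steps of one activity, coded as design issues.
steps = ["R", "F", "Be", "D", "D"]
print(cumulative_occurrence(steps, "D"))  # [0, 0, 0, 1, 2]
```

Plotting the returned list against the step index gives one curve of the kind shown in a cumulative occurrence graph.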

Drawing on Gero et al. (2014), four measures are used for analysing the cumulative occurrence-based representations of the different models of designing:

- First occurrence at start: This measure indicates whether design issues first occur near the start of designing or at a later stage.
- Continuity: This measure indicates whether design issues occur throughout designing or only up to a certain point.
- Linearity: This measure indicates whether the speed at which design issues are generated (or the cognitive effort expended on these design issues) is constant. It is measured using the coefficient of determination (\(R^{2}\)) that indicates linear fit and ranges from 0 to 1. As a threshold for linear fit, we set the commonly used value of 0.95; i.e. if \(R^{2}\) is at least 0.95, the graph is considered linear.
- Slope: This measure represents the rate at which design issues are generated. It is calculated only for graphs that are found to be linear.

The two measures of first occurrence at start and continuity are direct characterizations of cumulative occurrence, both available from a qualitative assessment of the graph. They can tell us whether a design issue is focused on from the very start of the design process and whether it is continuously focused on throughout the design activity, respectively. The measures of slope and linearity have been derived using a grounded theory approach, through a process of discovery by looking at the results of preliminary simulation runs where some design issue graphs appeared to be linear.
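Although these two measures are assessed qualitatively from the graphs, they can be approximated programmatically once phase boundaries are known. The sketch below rests on two assumptions that are not taken from the paper: "at start" is read as "first occurs within phase 1", and "continuous" is read as "still occurs in the final phase". The function names, the phase boundary indices and the example sequence are hypothetical.

```python
def first_occurrence_at_start(steps, issue, phase1_end):
    """True if `issue` occurs within phase 1, i.e. in steps[:phase1_end]."""
    return issue in steps[:phase1_end]


def is_continuous(steps, issue, last_phase_start):
    """True if `issue` still occurs in the final phase,
    i.e. in steps[last_phase_start:]."""
    return issue in steps[last_phase_start:]


# Hypothetical coded sequence; phase 1 covers the first four steps and the
# final phase starts at index 8.
steps = ["R", "F", "Be", "D", "S", "Bs", "S", "D", "S", "Bs", "D"]
for issue in ["R", "S", "D"]:
    print(issue,
          first_occurrence_at_start(steps, issue, 4),
          is_continuous(steps, issue, 8))
```

A stricter reading of continuity, for instance requiring at least one occurrence in every phase, could be substituted without changing the interface.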

All of these measures are independent of the number of design steps. This allows comparing models of designing that have different levels of detail and different numbers of iterations. We pose only one restriction on calculating slope and linearity to ensure sufficient statistical significance: that the number of occurrences per design issue is at least 10.
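The linearity and slope measures, together with the restriction on the minimum number of occurrences, can be sketched with an ordinary least-squares fit. The sketch assumes that the fit regresses the cumulative occurrence \(c\) on the step index \(n = 1, \ldots, N\) over the whole sequence; the function names are illustrative, and the defaults of 0.95 and 10 are the threshold and the minimum stated above.

```python
def linear_fit(c):
    """Least-squares fit of c against n = 1..N; returns (slope, r_squared)."""
    n = len(c)
    if n < 2:
        raise ValueError("at least two design steps are needed for a fit")
    xs = range(1, n + 1)
    mean_x = sum(xs) / n
    mean_y = sum(c) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, c))
    syy = sum((y - mean_y) ** 2 for y in c)
    slope = sxy / sxx
    # For simple linear regression, R^2 equals the squared correlation.
    r_squared = (sxy ** 2) / (sxx * syy) if syy > 0 else 0.0
    return slope, r_squared


def linearity_and_slope(c, threshold=0.95, min_occurrences=10):
    """Return None if the issue occurs fewer than `min_occurrences` times;
    otherwise report R^2, the linearity verdict and, if linear, the slope."""
    if not c or c[-1] < min_occurrences:
        return None
    slope, r_squared = linear_fit(c)
    linear = r_squared >= threshold
    return {"r_squared": r_squared, "linear": linear,
            "slope": slope if linear else None}


# Illustrative input: an issue that occurs at every second step of 24 steps.
c = [(i + 1) // 2 for i in range(24)]
print(linearity_and_slope(c))
```

Because the slope is reported only when the fit passes the threshold, non-linear graphs are excluded from any later comparison of slopes.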

4 Simulation results

In this section, we present the measures we derived from analysing the three models of designing. These measures are presented in Tables 9, 10, 11, 12, 13 and 14. In addition, to allow readers to carry out their own qualitative assessments, we also provide the raw data in the form of graphs representing the cumulative occurrence of design issues for scenario S2. These graphs are shown in Figs. 3, 4 and 5. The vertical lines in these figures separate the four phases in each model. They help in locating the occurrence of design issues within the respective model of designing, which is useful for deriving the measures of “first occurrence at start” and “continuity”.

As a first observation, we note that there are no or only small differences among the three scenarios within each model of designing. All qualitative measures (first occurrence at start, continuity, and linearity) are the same for S1, S2 and S3 of each model. Differences in slope are not significant across the three scenarios.

When comparing the three models of designing with each other, we can make the following observations:

- First occurrence at start: In all three models, requirement issues, function issues, expected behaviour issues and description issues occur at the start (or in phase 1) of the design process. And in all three models, structure behaviour issues and structure issues occur later (in phase 2).
- Continuity: The cumulative occurrence of requirement issues, function issues and expected behaviour issues is discontinuous in all three models. Structure behaviour issues, structure issues and description issues are continuous in all three models.
- Linearity: The cumulative occurrence of function issues in all three models is nonlinear, whereas the cumulative occurrence of structure issues and description issues in all three models is linear. For expected behaviour issues and structure behaviour issues, the results are inconsistent across the models.
- Slope: Slopes could not be compared for requirement issues (insufficient data), function issues (no linear graphs) and expected behaviour issues (linearity only for RUP). The slopes for the other design issues were compared using one-way ANOVA tests, which found significant differences between the models, and hence no commonality, for structure behaviour issues (\(F_{2,4} = 319.341\), p < 0.05), structure issues (\(F_{2,6} = 11.889\), p < 0.05) and description issues (\(F_{2,6} = 220.841\), p < 0.05).
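For readers unfamiliar with the test, the F statistic of a one-way ANOVA can be computed as sketched below. The slope values in the example are hypothetical and serve only to show the grouping (one group per model, one value per scenario), which yields the degrees of freedom (2, 6) reported for structure and description issues. The function name is an assumption, and the sketch does not compute a p value.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variance")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical slopes (NOT the paper's data): three models, scenarios S1-S3.
hypothetical_slopes = [
    [0.30, 0.31, 0.32],  # model A
    [0.40, 0.41, 0.42],  # model B
    [0.50, 0.51, 0.52],  # model C
]
f_stat, df1, df2 = one_way_anova_f(hypothetical_slopes)
print(f_stat, df1, df2)
```

A large F relative to the critical value for the given degrees of freedom indicates that the group means differ, which in this context means that the models do not share a common slope. Obtaining the p value requires the F distribution; a statistics library routine such as `scipy.stats.f_oneway` could be used for that purpose.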

5 Discussion of results

The results can be discussed in terms of the commonalities found across the three models of designing and in terms of the assumptions underlying the simulation models.

5.1 Identifying commonalities across the three models of designing

Our analysis has uncovered a number of commonalities among the three models of designing, independent of the number of iterations in each model (see Sect. 5.2 for an explanation of why they are independent). Table 15 summarizes our findings, using “+” and “−” symbols, to indicate the existence of a commonality: “+” indicates commonalities as specified on top of the table, while “−” indicates commonalities as the negation of what is specified on top. These “negative commonalities” can only be derived from binary measures such as “first occurrence at start”; the negations of the other measures are too broad to allow similar inverse conclusions. Empty spaces in the table mean that there is no commonality.

Commonalities regarding the first occurrence of design issues near the start were found for all design issues. While requirements issues, function issues, expected behaviour issues and description issues occur near the start, structure behaviour issues and structure issues occur later. Commonalities regarding the continuity of the graph were found for structure behaviour issues, structure issues and description issues. The commonality of linearity was identified for structure issues and description issues. There are no commonalities regarding slope.

Some of the commonalities are consistent with the general goals of each of the four phases of the models, as introduced in Sect. 2. In the three models, requirement issues, function issues, expected behaviour issues and description issues start occurring in phase 1 as they are needed to define and document the design problem. The occurrence of these issues, except for description issues that continue to occur until the end, tends to diminish later as the focus of designing shifts towards possible design solutions. Structure issues and structure behaviour issues start occurring later and continue to occur until the final design solution is determined, validated and documented. The existence of linearity of structure and description issues in all three models of designing is the most surprising outcome of our analysis. It implies that during designing, a uniform cognitive effort is expended on these issues, which has not been explicitly stated in previous work. Yet, the amount of cognitive effort differs as suggested by non-existence of a common slope for structure and description issues across different models of designing.

5.2 Revisiting assumptions for the simulation models

The results of applying our approach shed some light on the validity of the assumptions used for constructing the simulation models (see Sect. 3.2).

Our first assumption was that every design step occurs only once within the same iteration. In common design practice, this assumption is not realistic, because incomplete knowledge and design complexity often require repeating the same or similar design activities multiple times (Wynn et al. 2007). However, these task- and designer-specific variables cannot be taken into account for analysing models of designing that are independent of particular instances. Therefore, the validity of the one-execution-per-step assumption must be based on its usefulness in analysing and comparing different models rather than its relation to the practice of designing. Increasing the number of executions per step, uniformly across all steps of a model, would not lead to changes in the four measures except for changed values for slopes. Even if the number of executions can vary for different steps in a model, only the shape of the graph would be affected in terms of its linearity or nonlinearity, not a change from one shape to another. As a result, our assumption of one execution per step seems to be a useful and valid choice.

Our second assumption was related to the number of iterations of the different phases within a model of designing. We took Kruchten’s (2004) three “typical” scenarios for RUP, each of which defines different numbers of iterations for the four phases, and applied them to the other models. The results show that the behaviour of the cumulative occurrence graphs in all three models of designing did not vary for the different scenarios. We might therefore simplify the assumption to include only one simple scenario where there are no iterations for any of the four phases. This would also facilitate the application of our approach to models that cannot be mapped onto the four-phase process structure. For example, the VDI-2221 model (VDI 1985) has seven phases, and some variants of DFSS such as define–measure–analyse–design–verify (DMADV) and identify–define–design–optimize–validate (IDDOV) have five phases.

6 Conclusion

This paper proposed a quantitative approach for the analysis of domain-specific models of designing. Its application to three models of designing demonstrates its applicability to domains as different as engineering, software and service design. Based on its ontological foundations, the approach allows comparisons between models from different design domains. The comparison of the models analysed in this paper shows that there are some strong commonalities that provide support for the hypothesis that designing is an act that is independent of the domain of its application. This may have important implications for design education: If designing is foundational and is shown to be domain independent and different to science and humanities, then consideration should be given to teaching design in parallel with science and humanities.

The work presented in this paper should be regarded as preliminary, both in terms of the method used and the findings regarding commonalities across design domains. The method presented is limited to the descriptive capacities of the FBS ontology. We have used this ontology since many researchers have already used it as a coding scheme for representing design processes in various domains. Other design ontologies could be used for coding models of designing if they have the capacity to provide a coding scheme for design processes. However, to the best of our knowledge, no other ontological coding scheme exists with similar domain independence as the FBS coding scheme. Further research in the analysis method is therefore more likely to focus on the particular measures used, including their definition and completeness. Graphs other than those based on cumulative occurrence may be used with different associated measures.

The findings of our comparisons of engineering, software and service design are limited by the choice of models of designing. We chose the systematic approach, RUP and DFSS–ICOV because of their popularity and their detailed level of description. Yet it remains to be tested whether analysing other models in the same domain produces similar results. A difficulty here is that many domain-specific models of designing are quite coarse-grained and cannot easily be represented in the detail needed for the proposed simulation model and associated statistical analyses.

The results presented in this paper provide a starting point for future research. For example, they could be compared with empirical research, as there are many protocol studies available using the same FBS design issue scheme. Such comparisons would provide the basis to examine differences between models of designing and designing as practised.

Notes

For the inception phase we use the workflow defined for the requirements discipline and omit the design project management activities that are included in Kruchten’s “typical” Inception phase. We view these management activities as beyond the scope of a model of designing. For the Transition phase, where there are no “typical” activities defined, we use Kruchten’s deployment workflow.

References

Asimow M (1962) Introduction to design. Prentice-Hall, Englewood Cliffs

Boon M, Knuuttila T (2009) Models as epistemic tools in engineering sciences. In: Meijers (ed) Philosophy of technology and engineering sciences. Elsevier, Amsterdam, pp 693–726

Chakrabarti A, Blessing LTM (2014) Theories and models of design: a summary of findings. In: Chakrabarti A, Blessing LTM (eds) An anthology of theories and models of design. Springer, London, pp 1–46

Chittaro L, Kumar AN (1998) Reasoning about function and its applications to engineering. Artif Intell Eng 12(4):331–336

Cross N (1982) Designerly ways of knowing. Des Stud 3(4):221–227

Dym C (1994) Engineering design: a synthesis of views. Cambridge University Press, Cambridge

Eder WE (2012) Comparison of several design theories and methods with the legacy of Vladimir Hubka. Public Report, The Design Society

El-Haik B, Roy DM (2005) Service design for six sigma: a roadmap for excellence. Wiley, Hoboken

Frey DD, Dym CL (2006) Validation of design methods: lessons from medicine. Res Eng Des 17(1):45–57

Gero JS (1990) Design prototypes: a knowledge representation schema for design. AI Mag 11(4):26–36

Gero JS, Kannengiesser U (2004) The situated function–behaviour–structure framework. Des Stud 25(4):373–391

Gero JS, McNeill T (1998) An approach to the analysis of design protocols. Des Stud 19(1):21–61

Gero JS, Kannengiesser U, Pourmohamadi M (2014) Commonalities across designing: empirical results. In: Gero JS (ed) Design computing and cognition’12. Springer, Berlin, pp 285–302

Grabowski H, Rude S, Grein G (1998) Universal design theory. Shaker Verlag, Aachen

Hubka V, Eder WE (1996) Design science: introduction to the needs, scope and organization of engineering design knowledge. Springer, London

Kan J, Gero JS (2005) Design behaviour measurement by quantifying linkography in protocol studies of designing. In: Gero JS, Lindemann U (eds) Human behaviour in designing’05. Key Centre of Design Computing and Cognition, University of Sydney, Sydney, pp 47–58

Kannengiesser U, Williams C, Gero JS (2013) What do the concept generation techniques of TRIZ, morphological analysis and brainstorming have in common? In: DS 75-7: Proceedings of the 19th international conference on engineering design (ICED13), design for harmonies, vol 7: human behaviour in design, Seoul, Korea

Kruchten P (2004) The rational unified process: an introduction. Addison-Wesley, Upper Saddle River

Lawson B (1980) How designers think: the design process demystified. Architectural Press, Amsterdam

Lindemann U (2014) Models of design. In: Chakrabarti A, Blessing LTM (eds) An anthology of theories and models of design. Springer, London, pp 121–132

Pahl G, Beitz W (2007) Engineering design: a systematic approach. Springer, Berlin

Roozenburg NFM, Cross NG (1991) Models of the design process: integrating across the disciplines. Des Stud 12(4):215–220

Sim SK, Duffy AHB (2003) Towards an ontology of generic engineering design activities. Res Eng Des 14(4):200–223

Tate D, Nordlund M (1996) A design process roadmap as a general tool for structuring and supporting design activities. In: Proceedings of the second world conference on integrated design and process technology (IDPT-Vol. 3). Society for Design and Process Science, Austin, TX, pp 97–104

Unger D, Eppinger S (2011) Improving product development process design: a method for managing information flows, risks, and iterations. J Eng Des 22(10):689–699

VDI (1985) VDI-Richtlinie 2221 (Entwurf): Methodik zum Entwickeln und Konstruieren technischer Systeme und Produkte. VDI-Verlag, Düsseldorf

Vermaas PE (2009) The flexible meaning of function in engineering. In: Norell Bergendahl M et al (eds) Proceedings of the 17th international conference on engineering design (ICED’09), vol 2. The Design Society, pp 113–124

Vermaas PE (2014) Design theories, models and their testing: on the scientific status of design research. In: Chakrabarti A, Blessing LTM (eds) An anthology of theories and models of design. Springer, London, pp 47–66

Visser W (2009) Design: one, but in different forms. Des Stud 30(3):187–223

Welch RV, Dixon JR (1994) Guiding conceptual design through behavioral reasoning. Res Eng Des 6(3):169–188

Wynn DC, Eckert CM, Clarkson PJ (2007) Modelling iteration in engineering design. In: International conference on engineering design (ICED’07), Paris, France, pp 561/1–561/11

Yang K, El-Haik B (2003) Design for six sigma: a roadmap for product development. McGraw-Hill, New York

Acknowledgments

This research is supported in part by the US National Science Foundation under Grant Nos. IIS-1002079, CMMI-1400466 and CMMI-1161715. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Appendices

Appendix 1: The situated FBS framework

See Fig. 6.

The external world contains objects and representations in the environment of the designer.

The interpreted world contains experiences, percepts and concepts produced by the designer’s interactions with the external world.

The expected world contains the designer’s hypotheses, goals and expected results of actions.

1.1 Explanation of symbols

- Fei: Expected function
- Fi: Interpreted function
- Fe: External function
- FRe: Requirement on function
- Bei: Expected behaviour
- Bi: Interpreted behaviour
- Be: External behaviour
- BRe: Requirement on behaviour
- Sei: Expected structure
- Si: Interpreted structure
- Se: External structure
- SRe: Requirement on structure

Appendix 2: Pahl and Beitz’ systematic approach

Appendix 3: Rational unified process

Appendix 4: DFSS–ICOV

Cite this article

Kannengiesser, U., Gero, J.S. Is designing independent of domain? Comparing models of engineering, software and service design. Res Eng Design 26, 253–275 (2015). https://doi.org/10.1007/s00163-015-0195-y
