Abstract

Polynomial chaos (PC) methods with Gauss-type quadrature formulae have been widely applied for robust design optimization. During the robust optimization, gradient-based optimization algorithms are commonly employed, where the sensitivities of the mean and variance of the output response with respect to design variables are calculated. For robust optimization with computationally expensive response functions, although the PC method can significantly reduce the computational cost, the direct application of the classical finite difference method for the analysis of the design sensitivity is impractical with a limited computational budget. Therefore, in this paper, a semi-analytical design sensitivity analysis method based on the PC method is proposed, in which the sensitivity is directly derived based on the Gauss-type quadrature formula without additional function evaluations. Comparative studies conducted on several mathematical examples and an aerodynamic robust optimization problem revealed that the proposed method can reduce the computational cost of robust optimization to a certain extent with comparable accuracy compared with the finite difference-based PC method.


1 Introduction

The objective of robust design optimization is to optimize product performance while minimizing the sensitivity of that performance to uncertainties. It has been widely applied in aerospace engineering (Xiong et al. 2015a; Li et al. 2013; Wu et al. 2018), automobile engineering (Zhang et al. 2007), and civil engineering (Cheng et al. 2016). A key component of robust design optimization is the estimation of the mean and variance of the output response, which can be achieved by replacing the original output response with a metamodel (Rehman et al. 2014; Janusevskis and Rodolphe 2013). An alternative is to develop efficient uncertainty propagation approaches, among which the polynomial chaos (PC) method has been widely studied and applied because it offers a solid mathematical foundation and a fast convergence rate (Xiu and Karniadakis 2002). With the PC method, a random output is represented as a stochastic metamodel, from which its mean and variance can be obtained analytically. Wu et al. (Wu et al. 2017) and Dodson and Parks (Dodson and Parks 2015) applied the PC method to aerodynamic robust optimization design. Suryawanshi and Ghosh employed the PC method to analyze the aeroelastic stability of a rectangular unswept cantilever wing model (Suryawanshi and Ghosh 2016). Xia et al. applied an adaptive PC method to quantify the performance impact of flow and geometric uncertainties on a turbine blade (Xia et al. 2019). Fisher and Bhattacharya, Xiong et al., and Wang et al. applied the PC method to robust trajectory optimization (Fisher and Bhattacharya 2011; Xiong et al. 2015b; Wang et al. 2019a), increasing the robustness of the planned trajectory to various disturbances, which benefits closed-loop control.
Beyond aleatory uncertainty, the PC method has also been used for robust design optimization under mixed (aleatory and epistemic) uncertainties, together with a method for evaluating the robustness index under such uncertainties (Zhang and Serhat 2013; Zhang 2013). This study focuses on PC-based robust optimization under aleatory uncertainties.

During gradient-based robust optimization, the design sensitivity must be calculated, i.e., the sensitivities of the first two statistical moments (mean and standard deviation) of the output response with respect to the design variables. The most commonly used method for this is the finite difference method (FDM). Although the PC method significantly reduces the cost of statistical moment calculation compared with the traditional Monte Carlo simulation (MCS) method, the traditional FDM still requires additional function evaluations for the design sensitivity analysis, and their number grows substantially with the problem dimension. Practical engineering design problems, however, typically involve nonlinear, high-dimensional, and time-consuming high-fidelity simulations, such as finite element analysis (FEA) and computational fluid dynamics (CFD). Consequently, applying the FDM directly to design sensitivity analysis during robust optimization is impractical with a limited computational budget.

Design sensitivity analysis has previously been investigated for robust design optimization (Keshavarzzadeh et al. 2016; Keshavarzzadeh et al. 2017; Kumar et al. 2018; Miranda et al. 2016; Du and Leifsson 2019; Rumpfkeil 2013) and reliability-based design optimization (Keshavarzzadeh et al. 2016; Keshavarzzadeh et al. 2017; Cho et al. 2016; Lee et al. 2011a; Lee et al. 2011b) to improve the efficiency and accuracy of design. The studies by Cho et al., Lee et al., and Choi et al. (Cho et al. 2016; Lee et al. 2011a; Lee et al. 2011b) focused on reliability-based design optimization, in which MCS was employed for reliability analysis and the design sensitivity of the probability of failure was derived using a first-order score function. Rumpfkeil developed a gradient-based robust optimization algorithm based on kriging, in which the design sensitivity was derived analytically from the kriging metamodel (Rumpfkeil 2013). For PC-based design optimization under uncertainties, several design sensitivity approaches have been developed to save computational cost (Keshavarzzadeh et al. 2016; Keshavarzzadeh et al. 2017; Kumar et al. 2018; Miranda et al. 2016; Du and Leifsson 2019). In these works, the PC method was applied for uncertainty quantification, and the design sensitivity was derived from the PC model using the adjoint method. Because they rely on adjoint sensitivity information, however, these approaches are limited to systems with known governing equations and require the user to be familiar with the mechanism of the physical system.
In practical engineering, the response models in design optimization are often computationally expensive black-box functions, such as a CFD/FEA simulation model calibrated by specialists, or a complex coupled multidisciplinary car or flight vehicle system involving simulation models from different disciplines, for which it is difficult or even impossible to derive the governing equations of the entire system. The abovementioned design sensitivity methods for PC-based design optimization under uncertainties (Keshavarzzadeh et al. 2016; Keshavarzzadeh et al. 2017; Kumar et al. 2018; Miranda et al. 2016; Du and Leifsson 2019) may therefore be difficult to apply in such cases.

To construct the PC model for statistical moment estimation, a commonly used method for calculating the PC coefficients is least-squares estimation (Ghisu and Shahpar 2017; Ghisu and Shahpar 2018). An alternative is the Galerkin projection method (Xiu and Karniadakis 2003), in which the PC coefficients are calculated using Gaussian-type quadrature formulae, such as full factorial numerical integration (Lee et al. 2009; Lee et al. 2008) and sparse grid numerical integration (Xiong et al. 2010). Because Gaussian quadrature is a weighted sum of the function response values at the Gaussian quadrature nodes, it offers a natural route to the design sensitivity of the statistical moments. In this study, therefore, a semi-analytical design sensitivity analysis method is developed for PC-based robust optimization to reduce the computational cost. The design sensitivities of the first two statistical moments with respect to the design variables are derived directly from the Gaussian quadrature formula used for moment estimation within the PC method, by applying the chain rule together with a finite difference strategy on the existing quadrature nodes, rather than by performing additional function evaluations as the direct FDM does. Moreover, compared with the existing approach of Keshavarzzadeh et al. (Keshavarzzadeh et al. 2016; Keshavarzzadeh et al. 2017), the proposed method can be applied more conveniently to systems with black-box response functions.

The remainder of this paper is organized as follows. In Section 2, the Gaussian quadrature-based PC method is briefly reviewed. In Section 3, the proposed semi-analytical design sensitivity analysis method is described in detail. Section 4 presents comparative studies on the robust optimization of several mathematical examples and an airfoil aerodynamic optimization problem to verify the effectiveness of the proposed method. Conclusions are presented in Section 5.

2 Review of Gaussian quadrature-based PC method in robust optimization

In this study, since probability-based robust optimization under random uncertainty is considered, a typical and widely applied robust design optimization formulation belonging to the “sensitivity robustness approach” (Beyer and Sendhoff 2007) is addressed, which can be expressed as follows:

where x = [x1, ⋯, xd] is the random design vector with mean values \( {\mu}_{x_1},\cdots, {\mu}_{x_d} \); q = [q1, ⋯, qm] is the random parameter vector; μ and σ represent the mean and standard deviation of a variable, respectively; the subscripts f and g denote the objective and constraint functions of the original deterministic optimization problem, respectively; k* is a constant that ensures the robustness of the optimal solution and is typically set as k* = 3; \( {\mu}_f^{\ast } \) and \( {\sigma}_f^{\ast } \) are constant scaling factors, specified by the user, that bring μf and σf to the same magnitude, which helps the search for the optimum; and w1 and w2 are weight coefficients defined by the user according to preferences or a priori assumptions.
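Assembled from the definitions above, the formulation can be sketched as follows. This is a reconstruction consistent with the symbols defined in the text and with the constraint form G = μg − 3σg ≥ 0 used in Section 4; the design bounds \( {\boldsymbol{x}}^L \), \( {\boldsymbol{x}}^U \) and the number of constraints \( n_g \) are our notation.

```latex
\begin{aligned}
\min_{\mu_{x_1},\cdots,\mu_{x_d}} \quad & F = w_1\,\frac{\mu_f}{\mu_f^{\ast}} + w_2\,\frac{\sigma_f}{\sigma_f^{\ast}} \\
\text{s.t.} \quad & G_i = \mu_{g_i} - k^{\ast}\,\sigma_{g_i} \ge 0, \quad i = 1,\cdots,n_g \\
& \boldsymbol{x}^L \le \boldsymbol{\mu}_x \le \boldsymbol{x}^U
\end{aligned}
```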

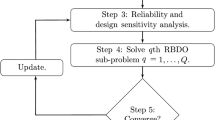

Figure 1 presents the flowchart of PC-based robust design optimization with a gradient-based optimization algorithm. In each optimization iteration, the means and standard deviations of the original objective f(x) and constraint functions gi(x) (μf, σf, \( {\mu}_{g_i} \), and \( {\sigma}_{g_i} \)) are calculated with the PC method at the current design point. The procedure is summarized below, using the response function y = g(x) for illustration.

Step 1: represent the output response y as a pth-order PC model with respect to the random design variables x and random parameters q using the generalized PC method (Xiu and Karniadakis 2003). Here, p represents the maximum order of the polynomial terms (Φi(ξ), i = 0, ⋯, P) in the PC model.

where ξ = [ξ1, ⋯, ξd, ξd + 1, ⋯, ξd + m] represents the standard random vector transformed from the original random vector X = [x1, ⋯, xd, q1, ⋯, qm] based on their distribution information (Xiu and Karniadakis 2002); the number of polynomial terms is P + 1 = (p + d + m)!/(d + m)!/p! = (p + D)!/D!/p! with D as the sum of the dimensions of x and q; and \( {\Phi}_i\left(\boldsymbol{\upxi} \right)=\prod \limits_{k=1}^D{\varphi}_i^k\left({\xi}_k\right) \) is the product of the one-dimensional orthogonal polynomial basis function (\( {\varphi}_i^k\left({\xi}_k\right) \)) that corresponds to the standard random variable in each dimension.
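The term count P + 1 can be checked by enumerating the basis directly. The following is a minimal sketch, assuming the usual total-degree truncation implied by the formula above; each multi-index lists the degree of the one-dimensional polynomial \( {\varphi}_i^k \) in each dimension, and the function names are hypothetical.

```python
from itertools import product
from math import comb


def total_degree_indices(D, p):
    """Multi-indices alpha (degree per dimension) with alpha_1 + ... + alpha_D <= p."""
    return [a for a in product(range(p + 1), repeat=D) if sum(a) <= p]


def num_pc_terms(D, p):
    """Closed form P + 1 = (p + D)! / (D! p!)."""
    return comb(p + D, D)


if __name__ == "__main__":
    # Two inputs, second order: 1, xi2, xi2^2, xi1, xi1*xi2, xi1^2
    print(total_degree_indices(2, 2))
    print(num_pc_terms(2, 2), num_pc_terms(10, 2))
```

Enumeration scales as (p + 1)^D before filtering, so it is only meant as a check on the closed form for small D.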

Step 2: calculate the PC coefficient bi(i = 0, ⋯, P).

Using the Galerkin projection method, the two sides of Eq. (2) are projected onto each orthogonal polynomial Φi(ξ). Then, each PC coefficient is calculated as follows using the orthogonality of the orthogonal polynomials.

where E[·] indicates the expectation.
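For reference, the standard generalized PC forms of the truncated expansion in Step 1 and the Galerkin projection in Step 2 are sketched below in the notation of the surrounding text:

```latex
y \approx \sum_{i=0}^{P} b_i\,\Phi_i(\boldsymbol{\xi}), \qquad
b_i = \frac{E\left[y\,\Phi_i(\boldsymbol{\xi})\right]}{E\left[\Phi_i^2(\boldsymbol{\xi})\right]}, \quad i = 0,\cdots,P
```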

The computational cost of Eq. (3) is mainly due to the calculation of the numerator, which can be carried out using a Gaussian quadrature-type numerical integration method such as the commonly used full factorial numerical integration (FFNI) (Lee et al. 2009; Lee et al. 2008) for lower-dimensional problems, or sparse grid numerical integration (SGNI) for higher-dimensional problems (Ghisu and Shahpar 2017). With FFNI, the numerator can be calculated using a direct full tensor product Gaussian quadrature formula, as expressed below.

where \( {\omega}_{j.k} \) and \( {x}_{j.k} \) are the kth weight and one-dimensional node for the jth input random variable of X = [x1, ⋯, xd, q1, ⋯, qm], respectively; mj is the corresponding number of nodes; and the value of ξ used in Φi(ξ) is obtained by transforming the D-dimensional node \( \left({x}_{1.{j}_1},\cdots, {x}_{D.{j}_D}\right) \) according to the distribution information of X.
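A minimal sketch of the FFNI projection for independent normal inputs follows. It assumes probabilists' Hermite polynomials as the basis and the transformation \( x_j = \mu_{x_j} + \sigma_{x_j}\xi_j \); `pc_coefficients_ffni` and `gauss_rule` are hypothetical helpers, while NumPy's `hermegauss` and `hermeval` supply the one-dimensional nodes, weights, and polynomial values (the weights are rescaled here so that they sum to one).

```python
import itertools
import math
import numpy as np
from numpy.polynomial.hermite_e import hermegauss, hermeval


def gauss_rule(m):
    """m-point Gauss rule for a standard normal variable (weights rescaled to sum to 1)."""
    xi, w = hermegauss(m)
    return xi, w / math.sqrt(2.0 * math.pi)


def pc_coefficients_ffni(g, mu, sigma, p=2, m=3):
    """Hermite PC coefficients b_i = E[y Phi_i] / E[Phi_i^2] via a full tensor Gauss rule.

    Assumes independent normal inputs x_j = mu_j + sigma_j * xi_j.
    Returns the multi-indices, b, E[Phi_i^2], and the number of calls to g.
    """
    mu, sigma = np.asarray(mu, float), np.asarray(sigma, float)
    xi1, w1 = gauss_rule(m)
    alphas = [a for a in itertools.product(range(p + 1), repeat=len(mu)) if sum(a) <= p]
    num = np.zeros(len(alphas))
    calls = 0
    for idx in itertools.product(range(m), repeat=len(mu)):
        xi = xi1[list(idx)]                 # standard-normal node
        w = math.prod(w1[list(idx)])        # product of the 1D weights
        y = g(mu + sigma * xi)              # one evaluation of the original response
        calls += 1
        for i, a in enumerate(alphas):
            phi = math.prod(hermeval(x, [0.0] * k + [1.0]) for k, x in zip(a, xi))
            num[i] += w * y * phi
    # For probabilists' Hermite polynomials, E[He_k^2] = k!
    norms = np.array([math.prod(math.factorial(k) for k in a) for a in alphas], float)
    return alphas, num / norms, norms, calls
```

For y = x1² + x1x2 with means (1, 2) and standard deviations 0.5, the nine nodes give b0 = 3.25 and a variance of 4.4375; both are exact because every integrand is a polynomial of degree at most five in each dimension, which the three-point rule integrates exactly.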

With SGNI, the D-dimensional sparse grid nodes with an accuracy level K are first obtained using a special tensor product operation, as follows:

where i1, i2, ⋯, iD determine the number of nodes in each dimension, mj (j = 1, ⋯, D); |i| = i1 + i2 + ⋯ + iD; Q = K + D; and \( {U}_D^K \) represents the collection of sparse grids, i.e., the union of all the multi-dimensional nodes produced by a number of smaller direct tensor products.

For each combination {i1, ⋯, iD} that satisfies K + 1 ≤ |i| ≤ Q, the direct full tensor product is used to generate D-dimensional nodes. Based on Eq. (5), a total of N D-dimensional nodes {x1, ⋯, xk, ⋯, xN} can be obtained, and the weight coefficient for \( {\boldsymbol{x}}_k=\left[{x}_{1.{j}_1}^k,\cdots, {x}_{D.{j}_D}^k\right] \) is calculated as follows:

where \( {\omega}_{1.{j}_1}\kern0.5em \cdots \kern0.5em {\omega}_{D.{j}_D} \) denotes the product of the one-dimensional weights.
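The sparse-grid construction can be sketched as follows for standard normal inputs. The sketch makes two assumptions that the text does not pin down: level i_j uses an i_j-point Gauss-Hermite rule (m_j = i_j), and each group's nodes receive the standard Smolyak combination coefficient \( (-1)^{Q-|\boldsymbol{i}|}\binom{D-1}{Q-|\boldsymbol{i}|} \) times the product of one-dimensional weights. Nodes repeated across groups are kept as separate entries rather than merged, and the function names are hypothetical.

```python
import itertools
import math
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def gauss_rule(m):
    """m-point Gauss rule for a standard normal variable (weights sum to 1)."""
    xi, w = hermegauss(m)
    return xi, w / math.sqrt(2.0 * math.pi)


def sparse_grid(D, K):
    """Smolyak-type sparse grid for D standard normal inputs at accuracy level K.

    Assumption: level i_j uses an i_j-point Gauss-Hermite rule (m_j = i_j).
    Each group {i_1, ..., i_D} with K + 1 <= |i| <= Q = K + D is a small full
    tensor product whose nodes are weighted by the combination coefficient
    (-1)**(Q - |i|) * C(D - 1, Q - |i|) times the product of the 1D weights.
    """
    Q = K + D
    nodes, weights = [], []
    for levels in itertools.product(range(1, K + 2), repeat=D):
        s = sum(levels)
        if not K + 1 <= s <= Q:
            continue
        coef = (-1) ** (Q - s) * math.comb(D - 1, Q - s)
        rules = [gauss_rule(m) for m in levels]
        for idx in itertools.product(*(range(m) for m in levels)):
            nodes.append([rules[j][0][k] for j, k in enumerate(idx)])
            weights.append(coef * math.prod(rules[j][1][k] for j, k in enumerate(idx)))
    return np.array(nodes), np.array(weights)
```

Under these assumptions, D = 2 and K = 2 yield five groups, {1,2}, {2,1}, {1,3}, {2,2}, and {3,1}, which agrees with the five groups mentioned for Fig. 3 in Section 3.2. Low-order moments such as E[ξ1²] = 1 and E[ξ1⁴] = 3 are reproduced exactly.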

Thus, E[yΦi(ξ)] can be calculated as follows:

Step 3: when the PC coefficients are obtained, estimate the first two statistical moments of the random output response y.
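With an orthogonal basis whose zeroth term is \( {\Phi}_0 = 1 \), the first two moments follow directly from the coefficients. The standard expressions are:

```latex
\mu_y = b_0, \qquad
\sigma_y = \sqrt{\sum_{i=1}^{P} b_i^2\, E\left[\Phi_i^2(\boldsymbol{\xi})\right]}
```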

3 Proposed semi-analytical design sensitivity analysis method based on PC

As shown in Fig. 1, in addition to the statistical moment calculation, the design sensitivity (\( \frac{\mathrm{d}F}{\mathrm{d}{\mu}_{x_j}} \), \( \frac{\mathrm{d}{G}_i}{\mathrm{d}{\mu}_{x_j}} \), j = 1, ⋯, d) should also be obtained. To reduce the computational cost, a semi-analytical design sensitivity analysis method based on PC was developed. Based on Eqs. (8), (9), and (3), the design sensitivity of the mean (μy) and standard deviation (σy) of the output response with respect to the design variable \( {\mu}_{x_j} \) can be calculated as follows.

Equations (10) and (11) can be further expressed as the following equations:

where \( {x}_{j.{i}_j} \) and \( {\omega}_{j.{i}_j} \) are the node and weight in the jth dimension, respectively, corresponding to the random variable xj.

In Eqs. (12) and (13), because the weight \( {\omega}_{j.{i}_j} \) depends only on the number of nodes mj and the distribution of xj, \( \frac{\mathrm{d}{\omega}_{j.{i}_j}}{\mathrm{d}{\mu}_{x_j}}=0 \). Moreover, because the nodes \( {x}_{j.{i}_j}\left({i}_j=1,\cdots, {m}_j\right) \) in each dimension are centered around the mean \( {\mu}_{x_j} \) with fixed spacing, so that they shift rigidly with the mean, \( \frac{\mathrm{d}{x}_{j.{i}_j}}{\mathrm{d}{\mu}_{x_j}} \) = 1. The critical task is therefore the calculation of the partial derivative \( \frac{\mathrm{\partial E}\left[y{\Phi}_i\left(\boldsymbol{\upxi} \right)\right]}{\partial {x}_{j.{i}_j}} \).
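Putting these observations together, the chain-rule step can be sketched as follows. This is our own derivation from the standard moment expressions μy = b0 and σy² = Σ_{i≥1} b_i² E[Φi²], with ξ held fixed at each node, and is meant to show the structure of the computation rather than the exact form of Eqs. (12) and (13):

```latex
\begin{aligned}
\frac{\mathrm{d}\mu_y}{\mathrm{d}\mu_{x_j}} &= \frac{\mathrm{d}E\left[y\,\Phi_0(\boldsymbol{\xi})\right]}{\mathrm{d}\mu_{x_j}}, \qquad
\frac{\mathrm{d}\sigma_y}{\mathrm{d}\mu_{x_j}} = \frac{1}{\sigma_y}\sum_{i=1}^{P} b_i\,\frac{\mathrm{d}E\left[y\,\Phi_i(\boldsymbol{\xi})\right]}{\mathrm{d}\mu_{x_j}},\\
\frac{\mathrm{d}E\left[y\,\Phi_i(\boldsymbol{\xi})\right]}{\mathrm{d}\mu_{x_j}} &= \sum_{i_j=1}^{m_j}\frac{\partial E\left[y\,\Phi_i(\boldsymbol{\xi})\right]}{\partial x_{j.i_j}}\,\frac{\mathrm{d}x_{j.i_j}}{\mathrm{d}\mu_{x_j}}
= \sum_{i_j=1}^{m_j}\frac{\partial E\left[y\,\Phi_i(\boldsymbol{\xi})\right]}{\partial x_{j.i_j}}
\end{aligned}
```

The σy expression follows from differentiating σy² = Σ_{i≥1} b_i² E[Φi²] and using db_i = dE[yΦi]/E[Φi²]; the weight terms drop out because their derivatives vanish.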

3.1 Design sensitivity analysis method based on PC-FFNI

When FFNI is used for the PC coefficient calculation based on Eq. (4), the partial derivatives \( \frac{\mathrm{\partial E}\left[y{\Phi}_i\left(\boldsymbol{\upxi} \right)\right]}{\partial {x}_{j.{i}_j}} \) in Eqs. (12) and (13) can be expressed as follows.

The partial derivative with respect to the node in Eq. (14) can be approximated with a finite difference strategy using the data (nodes) already employed for the statistical moment estimation, so no additional function evaluations are required. Taking two-dimensional nodes as an example, the one-dimensional nodes in each dimension are assumed to be ordered from left to right by their sequence numbers (i.e., their subscripts). Based on the three-point Lagrange interpolation polynomial, the partial derivative at any location can then be calculated with the forward, central, or backward difference method as follows.

Three adjacent nodes l1, l2, and l3 with their corresponding function response values g1, g2, and g3 are selected, based on which two approximate partial derivatives \( {r}_1=\frac{g_2-{g}_1}{l_2-{l}_1} \) and \( {r}_2=\frac{g_3-{g}_2}{l_3-{l}_2} \) that correspond to nodes \( {l}_1^{\hbox{'}}=\frac{l_1+{l}_2}{2} \) and \( {l}_2^{\hbox{'}}=\frac{l_2+{l}_3}{2} \), respectively, can be obtained. The partial derivatives ra at any location la can then be approximately calculated using the Lagrange linear interpolation polynomial (Abramowitz et al. 1966), as follows:
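Written out from the definitions of r1, r2, \( {l}_1^{\prime} \), and \( {l}_2^{\prime} \) above, the linear interpolation takes the form below. Substituting la = l1, l2, or l3 (with h1 = l2 − l1 and h2 = l3 − l2) gives, by our algebra, the forward, central, and backward expressions listed after it. These coincide with the derivative of the quadratic through the three points, so they are exact for quadratic responses even on unequal spacing.

```latex
\begin{aligned}
r_a &= r_1\,\frac{l_a - l_2^{\prime}}{l_1^{\prime} - l_2^{\prime}} + r_2\,\frac{l_a - l_1^{\prime}}{l_2^{\prime} - l_1^{\prime}},\\
r_a\big|_{l_a=l_1} &= \frac{(2h_1+h_2)\,r_1 - h_1 r_2}{h_1+h_2}, \qquad
r_a\big|_{l_a=l_2} = \frac{h_2 r_1 + h_1 r_2}{h_1+h_2}, \qquad
r_a\big|_{l_a=l_3} = \frac{-h_2 r_1 + (h_1+2h_2)\,r_2}{h_1+h_2}
\end{aligned}
```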

According to the locations of nodes l1, l2, and l3, there are three possible cases with respect to the calculation of Eq. (15).

Case 1: when ij = 1 (j = 1, ⋯, d), as shown in Fig. 2a, let l1 = xj.1, l2 = xj.2, l3 = xj.3, and la = l1. The partial derivative can be approximated by the forward difference method based on Eq. (15), as follows.

Case 2: when ij = mj, as shown in Fig. 2b, let \( {l}_1={x}_{j.{i}_j-2} \), \( {l}_2={x}_{j.{i}_j-1} \), \( {l}_3={x}_{j.{i}_j} \), and la = l3. Similar to the derivation of Eq. (16), the partial derivative can be approximated using the backward difference method as follows.

Case 3: when ij ≠ 1 and ij ≠ mj, as shown in Fig. 2c, let \( {l}_1={x}_{j.{i}_j-1} \), \( {l}_2={x}_{j.{i}_j} \), \( {l}_3={x}_{j.{i}_j+1} \), and la = l2. The partial derivative can be approximated using the central difference method as follows.

In Eqs. (16)–(18), h1 = l2 − l1, h2 = l3 − l2, and

For higher-dimensional cases (D ≥ 3), the partial derivative can be derived for each dimension in the same way. The proposed design sensitivity analysis method requires at least three one-dimensional nodes in each dimension (mj ≥ 3), a condition that is satisfied in practical applications of the PC method with FFNI. Note that the design sensitivity obtained by the proposed method is not exact when the performance function g(x) is highly nonlinear. However, because the local sensitivity only involves a very small design region, the error induced by the Lagrange linear interpolation is not excessively large, as verified in the comparative robust optimization studies presented later in this paper.
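The whole PC-FFNI procedure can be sketched end to end for independent normal inputs and a Hermite basis. This is a minimal illustration under those assumptions, not the authors' implementation, and all function names are hypothetical. The m^D responses computed for the moments are reused along each grid line with the three-point forward/central/backward formulas, and the chain rule (dx/dμ = 1, dω/dμ = 0) turns them into sensitivities of μy and σy. A central-difference FDM-PC reference is included for comparison.

```python
import itertools
import math
import numpy as np
from numpy.polynomial.hermite_e import hermegauss, hermeval


def gauss_rule(m):
    """m-point Gauss rule for a standard normal variable (weights sum to 1)."""
    xi, w = hermegauss(m)
    return xi, w / math.sqrt(2.0 * math.pi)


def basis(alpha, xi):
    """Multivariate Hermite basis term: product of He_{alpha_k}(xi_k)."""
    return math.prod(hermeval(x, [0.0] * a + [1.0]) for a, x in zip(alpha, xi))


def three_point_slope(l, g, k):
    """Derivative at node k of one grid line (needs >= 3 nodes):
    forward at the first node, backward at the last, central otherwise."""
    m = len(l)
    s = 0 if k == 0 else (m - 3 if k == m - 1 else k - 1)
    l1, l2, l3 = l[s:s + 3]
    g1, g2, g3 = g[s:s + 3]
    h1, h2 = l2 - l1, l3 - l2
    r1, r2 = (g2 - g1) / h1, (g3 - g2) / h2
    if k == 0:
        return ((2 * h1 + h2) * r1 - h1 * r2) / (h1 + h2)
    if k == m - 1:
        return (-h2 * r1 + (h1 + 2 * h2) * r2) / (h1 + h2)
    return (h2 * r1 + h1 * r2) / (h1 + h2)


def dsa_pc_ffni(g, mu, sigma, p=2, m=3):
    """PC-FFNI moments and their sensitivities to the means, reusing the m**D responses."""
    mu, sigma = np.asarray(mu, float), np.asarray(sigma, float)
    D = len(mu)
    xi1, w1 = gauss_rule(m)
    alphas = [a for a in itertools.product(range(p + 1), repeat=D) if sum(a) <= p]
    norms = np.array([math.prod(math.factorial(a) for a in al) for al in alphas], float)
    grid = list(itertools.product(range(m), repeat=D))
    y = {idx: g(mu + sigma * xi1[list(idx)]) for idx in grid}  # the only calls to g
    num = np.zeros(len(alphas))           # E[y Phi_i]
    dnum = np.zeros((D, len(alphas)))     # dE[y Phi_i] / d mu_j
    for idx in grid:
        xi = xi1[list(idx)]
        w = math.prod(w1[list(idx)])
        phi = np.array([basis(al, xi) for al in alphas])
        num += w * y[idx] * phi
        for j in range(D):
            # Grid line through idx along dimension j (other indices fixed)
            line = [idx[:j] + (k,) + idx[j + 1:] for k in range(m)]
            dy = three_point_slope(mu[j] + sigma[j] * xi1, [y[t] for t in line], idx[j])
            dnum[j] += w * dy * phi       # dx/dmu = 1 and dw/dmu = 0
    b = num / norms
    std = math.sqrt(np.sum(b[1:] ** 2 * norms[1:]))
    return b[0], std, dnum[:, 0], dnum[:, 1:] @ b[1:] / std, len(grid)


def fdm_pc(g, mu, sigma, delta=1e-5):
    """Reference FDM-PC: central differences of the PC moments (2*D extra PC runs)."""
    mu = np.asarray(mu, float)
    dmean, dstd = [], []
    for j in range(len(mu)):
        e = np.zeros_like(mu)
        e[j] = delta
        mp, sp = dsa_pc_ffni(g, mu + e, sigma)[:2]
        mm, sm = dsa_pc_ffni(g, mu - e, sigma)[:2]
        dmean.append((mp - mm) / (2 * delta))
        dstd.append((sp - sm) / (2 * delta))
    return np.array(dmean), np.array(dstd)


if __name__ == "__main__":
    g = lambda x: x[0] ** 2 + x[0] * x[1]
    print(dsa_pc_ffni(g, [1.0, 2.0], [0.5, 0.5]))
    print(fdm_pc(g, [1.0, 2.0], [0.5, 0.5]))
```

For this quadratic test function the semi-analytical sensitivities agree with the FDM-PC values to finite-difference precision. The DSA-PC uses only the 9 original responses, whereas the FDM-PC sketch performs 2 × 2 × 9 = 36 additional evaluations. For strongly nonlinear responses, the three-point slopes become approximations, as the paragraph above notes.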

3.2 Design sensitivity analysis method for PC-SGNI

When SGNI is employed for the PC coefficient calculation, the partial derivatives in Eqs. (12) and (13) can be calculated as follows, based on Eq. (7).

where Ω represents the collection of all the multi-index combinations {i1, ⋯, iD} that satisfy K + 1 ≤ |i| ≤ Q; and \( {x}_{j.{i}_j} \) and \( {\omega}_{j.{i}_j} \) are the node and weight coefficients in the jth dimension, respectively, which correspond to one small-size direct full tensor product.

The partial derivative with respect to the node in Eq. (20) can be calculated in the same manner as for FFNI. However, SGNI uses a special tensor product, namely a combination of small direct full tensor products that removes several unimportant integration nodes. As a result, a dimension of a small full tensor product may contain only mj = 1 or 2 one-dimensional nodes, which violates mj ≥ 3, so minor modifications are needed. Taking two-dimensional nodes with K = 2 as an example, SGNI generates five groups of small full tensor products (see Fig. 3). The partial derivative with respect to xj is then calculated for the following two cases.

Case 1: the jth dimension xj of a given group contains only one or two nodes, as illustrated in Fig. 3 (Case 1). The three-point partial derivative cannot be calculated directly in this case. Evaluating additional nodes with the original response function would require many extra function evaluations, especially for higher-dimensional problems. Therefore, to reduce the computational cost, the response values at the added nodes are approximated by the PC model, and the partial derivative is then calculated in the same manner as for FFNI above. Because the PC model is a metamodel in the stochastic domain, approximating the original response at these few nodes inevitably introduces some error into the design sensitivity, especially when the response function contains nonlinear terms of higher polynomial order. This error can be reduced to some extent by increasing the order of the PC model. The added nodes are chosen as Gaussian quadrature nodes to ensure high accuracy.

Case 2: the jth dimension contains at least three nodes, as shown in Fig. 3 (Case 2). The partial derivative is then calculated in the same way as for FFNI.
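The two cases can be sketched for a single grid line of one sparse-grid group. This is one plausible reading: in Case 1 the line is replaced by the three-point Gauss-Hermite nodes \( \mu_{x_j} \pm \sqrt{3}\sigma_{x_j} \) and \( \mu_{x_j} \), whose responses come from the PC surrogate. The text does not specify which Gaussian nodes are added, so this choice, the normal-input assumption, and the names `slope_at`, `line_slope`, and `surrogate` are all hypothetical. The slope is obtained by the linear interpolation of midpoint slopes, evaluated at the location of the original node.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def slope_at(l, g, la):
    """Interpolate the midpoint slopes r1, r2 of three nodes linearly to location la."""
    l1, l2, l3 = l
    g1, g2, g3 = g
    r1, r2 = (g2 - g1) / (l2 - l1), (g3 - g2) / (l3 - l2)
    c1, c2 = (l1 + l2) / 2, (l2 + l3) / 2   # l1' and l2'
    return r1 * (la - c2) / (c1 - c2) + r2 * (la - c1) / (c2 - c1)


def line_slope(nodes, values, k, surrogate, mu_j, sigma_j):
    """dg/dx_j at nodes[k] on one grid line of a sparse-grid group.

    Case 2 (>= 3 nodes): three-point estimate from the original responses.
    Case 1 (1 or 2 nodes): hypothetical fill with 3-point Gauss-Hermite nodes,
    whose responses are predicted by the PC surrogate (no new calls to g).
    """
    if len(nodes) >= 3:
        s = min(max(k - 1, 0), len(nodes) - 3)   # forward / central / backward window
        return slope_at(nodes[s:s + 3], values[s:s + 3], nodes[k])
    xi, _ = hermegauss(3)
    fill = mu_j + sigma_j * xi
    return slope_at(fill, [surrogate(x) for x in fill], nodes[k])


if __name__ == "__main__":
    pc_model = lambda x: x ** 2        # stand-in for a fitted PC surrogate
    print(line_slope([0.5, 1.5], [None, None], 0, pc_model, 1.0, 0.5))
```

Because the interpolation reproduces the derivative of a quadratic exactly, any remaining error in Case 1 comes from the surrogate itself, consistent with the discussion of PC approximation error above.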

Compared with FFNI, applying SGNI within the proposed method reduces the number of function calls and alleviates the curse of dimensionality, but at the cost of accuracy. Because fewer sample points are used, the PC model constructed by SGNI is less accurate. This lowers the accuracy of both the statistical moments and the response predictions and, ultimately, of the design sensitivity analysis.

For larger-scale problems with very high-dimensional random inputs, adaptive methods have been developed that remove unimportant orthogonal polynomials from the full PC model, or that adaptively place the integration nodes used to calculate the PC coefficients, thereby reducing the computational cost (Blatman and Sudret 2011; Perkó et al. 2014; Lucor and Karniadakis 2004). In such cases, the design sensitivity can be calculated from the resulting PC model in the same manner, without modification. In this paper, a Gaussian-type quadrature formula is used to construct the PC model. However, owing to its high flexibility and low computational cost, least-squares estimation is increasingly applied in the PC method for uncertainty propagation and robust optimization. Extending the design sensitivity analysis to least-squares-based PC for robust optimization is therefore left for future work.

4 Comparative studies

To demonstrate the effectiveness of the proposed semi-analytical design sensitivity analysis method, Section 4.1 evaluates the design sensitivity of two mathematical examples, and Section 4.2 presents the robust optimization of three mathematical examples using the proposed method. The order of the PC models was set as p = 2, the number of one-dimensional Gaussian quadrature nodes for FFNI as m = 3, and the accuracy level for SGNI as K = 2. Using the data employed for statistical moment estimation with the PC method, the design sensitivity was derived with the proposed semi-analytical design sensitivity analysis (DSA) method (denoted DSA-PC). For comparison, the traditional finite difference method (FDM) combined with the PC method was also employed (denoted FDM-PC). In FDM-PC, the PC method was used for the statistical moment calculation and the FDM for the sensitivity analysis, by performing uncertainty propagation (UP) with the PC method at two points adjacent to the current design point. To make the FDM as accurate as possible, the central difference method was used to calculate the design sensitivity at xj in Section 4.1.
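The function-call bookkeeping can be sketched as follows. The accounting is our reading: FDM-PC is charged only the two perturbed PC runs per design variable. We chose it because it reproduces the counts reported in Section 4.1 (9 vs. 36 for the two-dimensional FFNI example and 231 vs. 4620 for the ten-dimensional SGNI example). The function names are hypothetical.

```python
def ffni_nodes(m, D):
    """Full tensor grid: m one-dimensional nodes in each of D dimensions."""
    return m ** D


def calls_dsa_pc(n_up):
    """DSA-PC: moments and sensitivities both reuse the n_up responses of one PC run."""
    return n_up


def calls_fdm_pc(n_up, d):
    """FDM-PC: one PC run at mu_j + delta and one at mu_j - delta per design variable."""
    return 2 * d * n_up


if __name__ == "__main__":
    n_up = ffni_nodes(3, 2)
    print(calls_dsa_pc(n_up), calls_fdm_pc(n_up, 2))
```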

The step size has a substantial impact on the calculated partial derivative. Before calculating the design sensitivity in Section 4.1, therefore, a step convergence test (computing the design sensitivities with different step sizes) was conducted to select a suitable step size for each example. For the robust optimization in Sections 4.2 and 4.3, where the MATLAB optimization toolbox was used, the step size Δ and the finite difference scheme (central, backward, or forward difference) were assigned automatically by the MATLAB optimization algorithm. The design sensitivity was also calculated by the FDM based on statistical moments estimated with MCS (denoted FDM-MCS). In FDM-MCS, MCS was used for statistical moment estimation and the FDM for sensitivity analysis, by performing UP with MCS at two points adjacent to the current design point. The FDM-MCS results served as reference values. The total number of function calls (N) required for moment estimation and design sensitivity analysis was compared. Sequential quadratic programming (SQP) in MATLAB was employed as the optimization algorithm because of its high efficiency and good performance. Because SQP is a gradient-based algorithm, it may converge to a local optimum if a poor initial point is provided. Therefore, as is common practice, optimization was run from different starting points, and the best feasible solution was selected as the final optimum.
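The step convergence test can be illustrated on a stand-in function. This is a minimal sketch that assumes a smooth scalar function (`math.exp` here, in place of a statistical moment). The central-difference truncation error shrinks roughly as Δ², while floating-point round-off grows as Δ becomes very small, so the estimate settles only over an intermediate range of step sizes. The function name is hypothetical.

```python
import math


def step_convergence(f, x, steps):
    """Central-difference estimates of f'(x) for each step size in `steps`."""
    return {h: (f(x + h) - f(x - h)) / (2.0 * h) for h in steps}


if __name__ == "__main__":
    for h, d in step_convergence(math.exp, 0.0, [1e-1, 1e-2, 1e-4, 1e-8, 1e-12]).items():
        print(f"step {h:.0e}: derivative {d:.12f}, error {abs(d - 1.0):.1e}")
```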

4.1 Design sensitivity analysis of mathematical examples

A two-dimensional problem with normally distributed random inputs was tested first:

FFNI was employed to calculate the PC coefficients. For this example, a convergence test of MCS with different numbers of sample points (n) was first conducted to determine a suitable n, and the 95% confidence interval was estimated by running the MCS 20 times. The results of the convergence test are shown in Fig. 4. When n = 10⁷, the confidence interval was very small and the mean and standard deviation had stabilized, indicating that the results are sufficiently accurate and very close to the true values. Therefore, to ensure a fair comparison, MCS with n = 10⁷ was used to estimate the statistical moments for FDM-MCS. For all the remaining mathematical examples (Sections 4.1 and 4.2), the same convergence test was first conducted to determine a suitable n for FDM-MCS; owing to space limitations, those results are not shown. Following the step convergence test, the step size was set as Δ = 10⁻⁴ for accuracy.
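The MCS convergence check can be sketched as follows, assuming independent normal inputs and a vectorized response. The 95% interval here is taken as the empirical 2.5th and 97.5th percentiles of the 20 repeated estimates; the text does not state how the interval was formed, so this choice and the name `mcs_convergence` are assumptions. Increasing n until the interval is negligibly narrow mirrors the test described above.

```python
import numpy as np


def mcs_convergence(g, mu, sigma, n, repeats=20, seed=0):
    """Repeat an n-sample MCS `repeats` times for independent normal inputs.

    Returns the average (mean, std) estimate and the empirical 2.5th and
    97.5th percentiles of the repeated estimates.
    """
    rng = np.random.default_rng(seed)
    mu, sigma = np.asarray(mu, float), np.asarray(sigma, float)
    est = np.empty((repeats, 2))
    for r in range(repeats):
        x = mu + sigma * rng.standard_normal((n, mu.size))
        y = g(x)                           # g acts row-wise on an (n, D) array
        est[r] = y.mean(), y.std(ddof=1)
    lo, hi = np.percentile(est, [2.5, 97.5], axis=0)
    return est.mean(axis=0), lo, hi


if __name__ == "__main__":
    g = lambda x: x[:, 0] ** 2 + x[:, 0] * x[:, 1]
    for n in (10**3, 10**4, 10**5):
        print(n, *mcs_convergence(g, [1.0, 2.0], [0.5, 0.5], n))
```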

The results obtained by the three approaches (DSA-PC, FDM-PC, and FDM-MCS) are shown in Table 1. The proposed DSA-PC method generally produced the same results as the FDM-PC, and both were similar to those of the FDM-MCS. However, the DSA-PC required significantly fewer function calls than the FDM-PC (9 vs. 36) because it needs no additional function calls to calculate the design sensitivity.

A higher-dimensional problem (d = 10) with normally distributed random inputs was then tested to verify the effectiveness of the proposed method for higher-dimensional design sensitivity analysis. The step size was set as Δ = 10⁻⁴.

For the DSA-PC and FDM-PC, the PC coefficients were calculated by SGNI because the dimension is relatively high. For FDM-MCS, the number of MCS sample points was again set as n = 10⁷ based on the convergence test. The results obtained by DSA-PC, FDM-PC, and FDM-MCS are shown in Table 2. The proposed DSA-PC method produced results very similar to those of the FDM-PC, and both were similar to those of the FDM-MCS. The FDM-PC, however, was generally slightly more accurate than the DSA-PC. This can be attributed to the use of SGNI for the PC coefficients in the DSA-PC: to reduce the computational cost, several response values were approximated by the PC model instead of being evaluated with the original response function. This introduced some error into the design sensitivity analysis, but its influence was slight because the PC approximation was relatively accurate. The exception is the design sensitivity with respect to x4. Measured against the FDM-MCS reference, the DSA-PC result for x4 was clearly less accurate, and its difference relative to the FDM-PC reached 15.43%. This is because the response function is more nonlinear with respect to x4, so the PC model with order p = 2 produces a relatively large response prediction error, which in turn induces a large sensitivity error for x4 when SGNI is employed. Thus, the FDM-PC was more accurate than the DSA-PC, but the DSA-PC required significantly fewer function calls (231 vs. 4620) owing to the proposed design sensitivity method. To reduce this error, the order of the PC model was increased from p = 2 to p = 3, and the design sensitivities were recalculated.
Because the sensitivity errors with respect to x4 were relatively large with p = 2, only the results with respect to x4 are reported here: \( \mathrm{d}{\mu}_g/\mathrm{d}{\mu}_{x_4} \) = 0.1419 and \( \mathrm{d}{\sigma}_g/\mathrm{d}{\mu}_{x_4} \) = 0.1197. Clearly, increasing the PC order improves the accuracy of the design sensitivity obtained by the proposed DSA-PC method. The results of the two examples demonstrate the accuracy and effectiveness of the proposed semi-analytical design sensitivity method.

4.2 Robust optimization of mathematical examples

4.2.1 Example 1

The two random design variables x1 and x2 were assumed to be normally distributed with standard deviation σ = 0.4. The robust optimization was carried out using the DSA-PC, FDM-PC, and FDM-MCS (with n = 10⁷ for MCS), and FFNI was employed to calculate the PC coefficients. The starting point of the optimization was set as x0 = [3.0, 5.0]. After optimization, the confirmed results (Fc and Gc) were obtained by evaluating the original functions (f and g) at the optimal design variables with the MCS method (n = 10⁷) under the same uncertainties. The optimal design variables, the confirmed results, and the total numbers of function calls of the three methods are presented in Table 3.

As Table 3 shows, all three methods obtained feasible optimal solutions that satisfy the constraint G. The optimal design variables (\( {\mu}_{x1}^{\ast },{\mu}_{x2}^{\ast } \)) and the confirmed objectives of the FDM-PC and DSA-PC differed slightly from each other, but both were very similar to those of the FDM-MCS. The constraint G = μg − 3σg ≥ 0 depends on μg, and its magnitude is small (order 10⁻¹). A small difference in \( {\mu}_{x1}^{\ast } \) or \( {\mu}_{x2}^{\ast } \) can therefore induce a relatively large difference in Gc (FDM-MCS: 0.2149; DSA-PC: 0.2968; FDM-PC: 0.2580), which makes the error in Gc appear large. This error could be reduced by using a higher PC order for the constraint function g. In practical optimization problems, however, what matters to users is an effective optimal design that is feasible (i.e., satisfies the constraints), not the exact constraint values. Moreover, compared with the FDM-PC, the proposed DSA-PC method reduced the total number of function calls (N) during optimization by 27.8%.

4.2.2 Example 2

The distribution parameters of the random variables and random parameters are shown in Table 4. The robust design optimization was carried out using the DSA-PC, FDM-PC, and FDM-MCS (\( n={10}^7 \) for MCS). FFNI was employed to calculate the PC coefficients for moment estimation. The starting point of the optimization was set as x0 = [3.0, 0.1].

Table 5 presents the optimization results, in which \( {F}^c \), \( {G}_1^c \), and \( {G}_2^c \) are the results confirmed using the MCS method (\( n={10}^7 \)). As can be seen from Table 5, the results obtained by the FDM-PC and DSA-PC are approximately equal and agree well with those obtained by the FDM-MCS. Moreover, compared with the FDM-PC, the DSA-PC reduced the number of evaluations of both the original objective f and the constraints g by 38.9%. The proposed DSA-PC method thus significantly reduces the computational cost while maintaining adequate accuracy of the design sensitivity.
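To illustrate how a design sensitivity can be obtained from the quadrature nodes already used for moment estimation, without the perturbed re-evaluations an FDM needs, the sketch below uses the score-function identity for a normal design variable, \( \partial E[h(x)]/\partial \mu =E[h(x)(x-\mu )/{\sigma}^2] \). This is a standard identity swapped in for illustration and is not necessarily the exact semi-analytical formula of the DSA-PC; the one-dimensional setting, the five-node rule, and the cubic test response are assumptions.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def moments_and_sensitivities(func, mu, sigma, n_nodes=5):
    """Mean and variance of func(x), x ~ N(mu, sigma^2), and their derivatives
    with respect to mu, all from one set of Gauss-Hermite nodes.

    Illustrative score-function identity (not necessarily the paper's formula):
        d/dmu E[h(x)] = E[h(x) * (x - mu) / sigma^2]
    """
    xi, w = hermegauss(n_nodes)
    w = w / np.sqrt(2.0 * np.pi)                        # probability weights
    y = np.array([func(mu + sigma * t) for t in xi])    # the only response calls
    score = xi / sigma                                  # (x - mu) / sigma^2 at each node
    mean = np.sum(w * y)
    var = np.sum(w * y**2) - mean**2
    dmean = np.sum(w * y * score)                       # d mean / d mu
    dvar = np.sum(w * y**2 * score) - 2.0 * mean * dmean    # d var / d mu
    return mean, var, dmean, dvar


def f_demo(x):
    """Hypothetical cubic response used only for this check."""
    return x**3 - 2.0 * x


mean, var, dmean, dvar = moments_and_sensitivities(f_demo, mu=1.5, sigma=0.3)

# Central finite differences on the moments for comparison (four extra moment estimates).
h = 1e-4
plus = moments_and_sensitivities(f_demo, 1.5 + h, 0.3)
minus = moments_and_sensitivities(f_demo, 1.5 - h, 0.3)
fd_dmean = (plus[0] - minus[0]) / (2 * h)
fd_dvar = (plus[1] - minus[1]) / (2 * h)
print(dmean, fd_dmean, dvar, fd_dvar)
```

The analytic derivatives reuse the five response values, whereas the finite differences here cost 10 extra calls per perturbation pair. For this cubic response the quadrature is exact, so \( \partial {\mu}_f/\partial \mu =3({\mu}^2+{\sigma}^2)-2=5.02 \), matching the finite differences to within the differencing error.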

4.2.3 Example 3

The distribution parameters of the random variables are shown in Table 6, in which “Range” is the design region of each design variable during optimization, “Std” denotes the standard deviation of the distribution, and A and B are the upper and lower limits of the uniformly distributed random design variables, respectively. The robust design optimization was carried out using the DSA-PC, FDM-PC, and FDM-MCS (\( n={10}^7 \) for MCS). Because this is a relatively high-dimensional problem, SGNI was employed to calculate the PC coefficients for moment estimation. The starting point of the optimization was set as x0 = [8.0, 4.0, 2.0, 1.0, 3.0, 1.0, 5.0, 6.0].
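SGNI replaces the full tensor grid with a Smolyak combination of one-dimensional rules. The sketch below assumes standard-normal inputs in every dimension (Example 3 also has uniform variables, omitted here for simplicity), one-dimensional Gauss-Hermite rules with 2l − 1 nodes at level l, and the classical combination formula with q = d + K; the growth rule and the function names are assumptions and need not match the SGNI variant used in the paper. For d = 8 and K = 2 it merges coincident nodes and compares the node count with a three-node-per-dimension full factorial grid.

```python
import itertools
import math
from collections import defaultdict

import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def gauss_hermite_level(level):
    """1-D Gauss-Hermite rule with 2*level - 1 nodes (assumed growth rule),
    with probability weights for a standard normal variable."""
    xi, w = hermegauss(2 * level - 1)
    return xi, w / np.sqrt(2.0 * np.pi)


def smolyak_grid(dim, k):
    """Smolyak sparse grid of accuracy level k in `dim` standard-normal dimensions.

    Sums, over multi-indices l (l_j >= 1) with max(dim, k + 1) <= |l| <= dim + k,
    (-1)^(dim+k-|l|) * C(dim-1, dim+k-|l|) times the tensor rule of levels l.
    Coincident nodes are merged by adding their weights.
    """
    q = dim + k
    grid = defaultdict(float)                       # node tuple -> accumulated weight
    for levels in itertools.product(range(1, k + 2), repeat=dim):
        total = sum(levels)
        if total < max(dim, k + 1) or total > q:
            continue
        coef = (-1) ** (q - total) * math.comb(dim - 1, q - total)
        rules = [gauss_hermite_level(l) for l in levels]
        for combo in itertools.product(*(range(len(r[0])) for r in rules)):
            node = tuple(round(float(rules[j][0][c]), 12) for j, c in enumerate(combo))
            weight = math.prod(float(rules[j][1][c]) for j, c in enumerate(combo))
            grid[node] += coef * weight
    return grid


def expectation(grid, func):
    return sum(w * func(np.array(node)) for node, w in grid.items())


grid = smolyak_grid(dim=8, k=2)
print(len(grid), 3**8)                                   # sparse vs full factorial
print(expectation(grid, lambda x: x[0]**2 * x[1]**2))    # exact value 1
print(expectation(grid, lambda x: x[0]**4))              # exact value 3
```

Under these assumptions the level-2 grid uses 161 nodes instead of \( {3}^8=6561 \), and it still integrates the degree-4 test monomials exactly.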

As can be seen from the optimization results in Table 7, the optimal solutions obtained by the FDM-PC and DSA-PC are very similar, although the FDM-PC is slightly more accurate than the DSA-PC. This can be attributed to the additional nodes whose responses are approximated by the PC model, rather than evaluated with the original functions, when one of the sensitivity terms is calculated; this approximation introduces some error into the final design sensitivity. For this example with p = 2, the error has little impact on the optimal solution even though the highest order of the response functions is 4, which demonstrates the effectiveness of the PC approximation used by the DSA-PC for high-dimensional problems. Meanwhile, compared with the FDM-PC, the DSA-PC reduced the number of evaluations of the original objective f and constraint g by 43.0%. The proposed DSA-PC method thus significantly reduces the computational cost while maintaining adequate accuracy of the design sensitivity.
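The error from approximating an added node with the PC model, rather than calling the response function, can be illustrated in one dimension. The sketch below is a hypothetical illustration: it fits a second-order (p = 2) Hermite PC model by three-point Gauss-Hermite projection and evaluates it at an assumed added node x = μ + 2σ. The location of the added nodes in the paper's SGNI scheme is not reproduced here, and both test responses are hypothetical.

```python
import math

import numpy as np
from numpy.polynomial.hermite_e import hermegauss, hermeval


def fit_pc_1d(func, mu, sigma, order=2):
    """Coefficients b_i of a 1-D Hermite PC model y ~ sum_i b_i He_i(xi),
    xi = (x - mu) / sigma, by Gauss-Hermite projection with order + 1 nodes."""
    xi, w = hermegauss(order + 1)
    w = w / np.sqrt(2.0 * np.pi)
    y = np.array([func(mu + sigma * t) for t in xi])    # true response at quadrature nodes
    coeffs = np.zeros(order + 1)
    for i in range(order + 1):
        unit = np.zeros(i + 1)
        unit[i] = 1.0                                   # selects He_i in hermeval
        coeffs[i] = np.sum(w * y * hermeval(xi, unit)) / math.factorial(i)  # E[He_i^2] = i!
    return coeffs


def pc_predict(coeffs, x, mu, sigma):
    """Evaluate the PC surrogate at a physical point x (e.g. an added node)."""
    return hermeval((x - mu) / sigma, coeffs)


mu, sigma = 2.0, 0.4
x_added = mu + 2.0 * sigma                              # hypothetical off-grid node

for label, func in [("quadratic", lambda x: 2.0 * x**2 - x + 1.0), ("quartic", lambda x: x**4)]:
    b = fit_pc_1d(func, mu, sigma, order=2)
    error = func(x_added) - pc_predict(b, x_added, mu, sigma)
    print(label, error)
```

The quadratic response is reproduced exactly, while the quartic one leaves an error of 1.1264 at the added node (about 1.8% of the true value 61.4656), which is the kind of approximation error discussed above.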

To investigate the impact of the sensitivity estimation error induced by Lagrange linear interpolation on the convergence speed of the optimization, the evolution of the objective function (F) during the optimization process for the proposed DSA-PC and the FDM-MCS is shown in Figs. 5, 6, and 7 for the three examples, respectively. Three observations are noteworthy.

First, as the number of iterations increases, the values of F for both methods (DSA-PC and FDM-MCS) generally decrease rapidly in all three examples and remain almost unchanged at the end of the optimization, indicating that the optimal solutions have converged. The values of F produced by the two approaches are also close to each other, especially for Example 2. In general, the DSA-PC required only slightly more iterations (1 or 3 more).

Second, Example 3 required more iterations than Examples 1 and 2. This is because Example 3 is a relatively high-dimensional problem (d = 8) for which SGNI was used to calculate the PC coefficients, and the responses at some added nodes were approximated by the PC model, introducing an additional source of error into the sensitivity calculation. Nevertheless, Fig. 7 shows that F for the proposed DSA-PC method rapidly approaches the optimal solution.

Third, the difference in F between the two methods over the whole iteration process is largest for Example 1, and it is relatively large even at the initial design point. This is because the response function f(x1, x2) in Example 1 is more nonlinear and the standard deviation of the design variables is relatively large (σ = 0.4, i.e., 8% to 12% of the mean value), so the second-order PC introduces relatively large errors in the statistical moments and, in turn, in the sensitivity analysis. Even so, the optimal results in Table 3 show that the DSA-PC solution is close to that of the FDM-MCS, indicating that the sensitivity estimates remain accurate enough to locate the optimum.
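The dependence of the second-order PC error on the input spread can be illustrated with a hypothetical response exp(x), whose exact (lognormal) standard deviation is known. The sketch assumes a single normal input and a three-node Gauss-Hermite projection; it is not Example 1's function, and the name `pc2_std_error` is illustrative.

```python
import math

import numpy as np
from numpy.polynomial.hermite_e import hermegauss, hermeval


def pc2_std_error(sigma, mu=0.0):
    """Relative error of the standard deviation of exp(x), x ~ N(mu, sigma^2),
    estimated from a second-order Hermite PC model fitted with 3 Gauss-Hermite nodes."""
    xi, w = hermegauss(3)
    w = w / np.sqrt(2.0 * np.pi)
    y = np.exp(mu + sigma * xi)
    b = [np.sum(w * y * hermeval(xi, [0.0] * i + [1.0])) / math.factorial(i) for i in range(3)]
    pc_var = sum(b[i] ** 2 * math.factorial(i) for i in range(1, 3))      # Var = sum_{i>=1} b_i^2 i!
    exact_var = math.exp(2 * mu + sigma**2) * (math.exp(sigma**2) - 1.0)  # lognormal variance
    return abs(math.sqrt(pc_var) - math.sqrt(exact_var)) / math.sqrt(exact_var)


for s in (0.1, 0.2, 0.4, 0.8):
    print(s, pc2_std_error(s))
```

For this response the relative error grows from a negligible level at σ = 0.1 to under 1% at σ = 0.4 and several percent at σ = 0.8, mirroring the qualitative argument above.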

Together, these observations indicate that the sensitivity estimation error induced by the Lagrange linear interpolation in the proposed DSA-PC method has very little impact on the convergence of the optimization, owing to the high accuracy of the sensitivity estimation.

4.3 Airfoil robust optimization design

The proposed DSA-PC method was applied to an airfoil robust optimization problem, with the NACA 0012 airfoil as the baseline (Wang et al. 2019b). The objective was to determine the geometric parameters of the airfoil that maximize its lift-drag ratio subject to a thickness constraint. The nominal flow condition corresponds to an angle of attack α = 1.55°, Mach number Ma = 0.70, and Reynolds number \( Re=9\times {10}^6 \), a typical flight Reynolds number for a commercial aircraft. A B-spline curve (Wang and Liu 2016) with eight control points was employed to parameterize the airfoil shape; the horizontal locations of the eight control points were fixed at l = [0.2, 0.4, 0.6, 0.8, 0.8, 0.6, 0.4, 0.2], while their vertical locations were free to move, as shown in Fig. 8. The vertical locations of the control points for the baseline airfoil are zbaseline = [0.0574, 0.0580, 0.0456, 0.0262, −0.0262, −0.0456, −0.0580, −0.0574], and the vertical locations of the eight control points were taken as the design variables x = [x1, ⋯, x8].

Physically, Re and α have large impacts on the results of the CFD simulation. Because a study generally considers only one fluid, and it is impractical to investigate every possible kinematic viscosity, the effect of Re was considered mainly through the influence of Ma. For robust optimization, all design variables xi (i = 1, …, 8) were assumed to be uncertain owing to manufacturing error and to follow a normal distribution with standard deviation \( {\sigma}_{x_i}=0.0015 \). In addition, Ma and α were assumed to follow uniform distributions with variations of ±0.1 and ±0.5° around their nominal values (Ma = 0.70, α = 1.55°), respectively. The airfoil robust optimization was formulated as follows.

where \( {\mu}_{T_w} \) is the mean value of the maximum relative thickness Tw.
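The uniform flow parameters can enter the quadrature in the same way as the normal design variables, by mapping Gauss-Legendre nodes onto their ranges. The sketch below assumes three nodes per variable; it maps the nodes for Ma ∈ [0.60, 0.80] and α ∈ [1.05°, 2.05°], checks the first two moments of Ma, and counts the runs a three-node full factorial grid would need for the ten random inputs of this problem (eight control points plus Ma and α). The function name `uniform_rule` is illustrative.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def uniform_rule(center, half_width, n_nodes=3):
    """Gauss-Legendre nodes and probability weights for U(center - half_width, center + half_width)."""
    t, w = leggauss(n_nodes)                     # nodes on [-1, 1], weights sum to 2
    return center + half_width * t, w / 2.0     # affine map to the physical range


ma_nodes, ma_w = uniform_rule(0.70, 0.1)         # Mach number: 0.70 +/- 0.1
alpha_nodes, alpha_w = uniform_rule(1.55, 0.5)   # angle of attack (deg): 1.55 +/- 0.5

ma_mean = np.sum(ma_w * ma_nodes)
ma_var = np.sum(ma_w * (ma_nodes - ma_mean) ** 2)   # exact value (2 * 0.1)^2 / 12
alpha_mean = np.sum(alpha_w * alpha_nodes)

n_random_inputs = 8 + 2                          # eight control-point heights plus Ma and alpha
ffni_runs = 3 ** n_random_inputs                 # full factorial grid, 3 nodes per input
print(ma_nodes, alpha_nodes, ma_mean, ma_var, ffni_runs)
```

A full factorial grid would require 59049 CFD runs per design point, which is why a sparse grid is attractive for this problem.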

Computational fluid dynamics (CFD) was employed for the aerodynamic analysis to obtain the lift and drag. The mesh was generated using the CFD pre-processor Gambit, the k-ω two-equation turbulence model was employed, and Fluent 17.0 was used as the CFD solver. To verify the accuracy of the CFD simulation, a grid convergence test was first performed on the baseline airfoil at the nominal flow condition (α = 1.55°, Ma = 0.70, and \( Re=9\times {10}^6 \)); the results are shown in Table 8. Table 8 shows that once the grid density reached 150 far-field nodes and 300 airfoil boundary nodes, the lift-drag ratio remained almost unchanged. Therefore, 150 far-field nodes and 300 airfoil boundary nodes were used in the CFD simulations of this study.

Then, the lift (Cl) and drag (Cd) coefficients of the baseline NACA 0012 airfoil at the nominal flow condition (α = 1.55°, Ma = 0.70, and \( Re=9\times {10}^6 \)) calculated by CFD were compared with the experimental data from Harris’s tests in the Langley 8-Foot Transonic Pressure Tunnel (Harris 1981), as shown in Table 9. The CFD results are very close to the experimental data, which validates the CFD analysis used in this work.

Robust optimization was carried out using the DSA-PC and FDM-PC, and deterministic optimization (DO) without any uncertainties was also carried out for comparison. SGNI with an accuracy level of K = 2 was employed to calculate the PC coefficients. Because \( {\mu}_{T_w} \) does not require a computationally expensive CFD analysis, it was obtained directly using the MCS method.
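Because \( {\mu}_{T_w} \) depends only on geometry, an MCS estimate is cheap. The sketch below is a hypothetical reconstruction: it assumes that each surface is a clamped cubic B-spline with uniform interior knots whose control polygon is closed by a leading-edge point (0, 0) and a trailing-edge point (1, 0), which the text does not specify, and it uses the baseline control heights and \( {\sigma}_{x_i}=0.0015 \) from above with 2000 samples. It is not the parameterization of Wang and Liu (2016).

```python
import numpy as np

# Assumptions for illustration only: clamped cubic B-spline per surface, control polygon
# from (0, 0) through the four design points to (1, 0); the paper's scheme may differ.
X_CTRL = np.array([0.0, 0.2, 0.4, 0.6, 0.8, 1.0])
KNOTS = np.array([0.0, 0.0, 0.0, 0.0, 1 / 3, 2 / 3, 1.0, 1.0, 1.0, 1.0])
Z_BASELINE = np.array([0.0574, 0.0580, 0.0456, 0.0262, -0.0262, -0.0456, -0.0580, -0.0574])


def bspline_basis(t, knots, degree):
    """Cox-de Boor recursion: matrix of B-spline basis values, one row per parameter t."""
    t = np.asarray(t, dtype=float)
    basis = np.zeros((t.size, len(knots) - 1))
    for i in range(len(knots) - 1):                       # degree-0 indicator functions
        basis[:, i] = (knots[i] <= t) & (t < knots[i + 1])
    last_span = np.searchsorted(knots, knots[-1]) - 1     # let t = 1 belong to the last span
    basis[t == knots[-1], last_span] = 1.0
    for p in range(1, degree + 1):
        new = np.zeros((t.size, len(knots) - p - 1))
        for i in range(len(knots) - p - 1):
            den_left = knots[i + p] - knots[i]
            den_right = knots[i + p + 1] - knots[i + 1]
            if den_left > 0:
                new[:, i] += (t - knots[i]) / den_left * basis[:, i]
            if den_right > 0:
                new[:, i] += (knots[i + p + 1] - t) / den_right * basis[:, i + 1]
        basis = new
    return basis


BASIS = bspline_basis(np.linspace(0.0, 1.0, 201), KNOTS, degree=3)   # shape (201, 6)


def max_relative_thickness(z):
    """Maximum of (upper - lower) for unit chord. Both surfaces share the same x(t),
    so the thickness can be read off at equal parameter values."""
    upper = np.concatenate(([0.0], z[:4], [0.0]))          # points at x = 0.2 ... 0.8
    lower = np.concatenate(([0.0], z[4:][::-1], [0.0]))    # lower points are listed from x = 0.8
    return np.max(BASIS @ (upper - lower))


rng = np.random.default_rng(0)
samples = Z_BASELINE + 0.0015 * rng.standard_normal((2000, 8))   # sigma = 0.0015 per control point
tw = np.array([max_relative_thickness(z) for z in samples])
mu_tw = tw.mean()
print(max_relative_thickness(Z_BASELINE), mu_tw)
```

Because a B-spline stays inside its control polygon, this simplified baseline is thinner than the 12% of the NACA 0012 (at most 2 × 0.058); the sketch only shows how the MCS estimate of \( {\mu}_{T_w} \) is assembled.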

The optimal airfoil geometries generated using the DSA-PC, FDM-PC, and DO are shown in Fig. 9. Compared with the original airfoil, the airfoils optimized by the deterministic optimization (DO) and robust optimization (RO) methods are both more cambered, and the curvature of the upper surface is increased, especially for the DO airfoil, which increases the airflow speed over the upper surface. Consequently, the pressure on the upper surface decreases after optimization, resulting in a higher lift coefficient. In addition, the leading-edge thickness of the optimized airfoils is noticeably reduced compared with the original baseline, which would weaken the shock wave and thus reduce the drag coefficient. Overall, the optimization increased the lift-drag ratio. Furthermore, the airfoil obtained by robust optimization using the DSA-PC almost coincides with that of the FDM-PC, which demonstrates the effectiveness of the proposed DSA-PC method.

The static pressure contours are shown in Fig. 10. Compared with the baseline airfoil, the static pressures on the upper surfaces of the airfoils generated by the RO and DO methods are lower (the blue area increased after optimization), whereas the static pressures on the lower surfaces are higher (the green area increased after optimization). This indicates an increase in the pressure difference between the upper and lower surfaces and thus an increase in lift after optimization. Moreover, the static pressure contour produced by the DSA-PC is very similar to that of the FDM-PC, which further demonstrates the effectiveness of the proposed DSA-PC method.

The pressure coefficients on the upper and lower surfaces of the airfoils are shown in Fig. 11. Compared with the baseline airfoil, the upper-surface pressure coefficients of all the optimized airfoils decrease, while the lower-surface pressure coefficients increase. The gap between the lower- and upper-surface curves therefore widens after optimization, most of all for DO. These results indicate that the pressure difference between the upper and lower surfaces increases, resulting in higher lift after optimization.

The evolution of the objective function (F) during the optimization process is shown in Fig. 12. Because the computational cost of the MCS-based approach (in which MCS is used directly for the statistical moment calculation) is large, it was not tested for this example, and only the FDM-PC was compared. As the number of iterations increases, the values of F for both methods (DSA-PC and FDM-PC) generally decrease and remain almost unchanged at the end of the optimization, indicating that the optimal results have converged. The values of F produced by the DSA-PC and FDM-PC are similar, and the DSA-PC requires slightly more iterations, in agreement with the mathematical examples in Section 4.2. Because this is a relatively high-dimensional problem, SGNI was used for the DSA-PC, and the responses at some added nodes were approximated by the PC model, which introduces some error; this explains the larger number of iterations required by the DSA-PC. However, this approximation had a negligible impact on the obtained performance of the airfoil.

The optimal results of the different methods are shown in Table 10, in which N is the number of CFD evaluations used to calculate the lift-drag ratio during optimization. Several observations can be made from Table 10. First, both RO and DO increased the lift-drag ratio of the airfoil (\( {\mu}_f \)) and satisfied the thickness constraint, consistent with Figs. 9, 10, and 11, which show that the lift increased and the drag decreased after optimization. Second, the lift-drag ratio (\( {\mu}_f \)) obtained by DO is larger than those of the two ROs, whereas the standard deviations (\( {\sigma}_f \)) of the two ROs are significantly smaller, indicating better robustness with respect to uncertainties, as expected of robust optimization. Third, the leading-edge thickness of the airfoils from both ROs and DO is noticeably reduced compared with that of the baseline airfoil, and more so for the ROs, which would decrease the drag coefficient; this agrees with the decrease in the mean drag coefficient \( {\mu}_{C_d} \) after optimization. Taken together, Figs. 9, 10, 11, and 12 and Table 10 suggest that the increase in the lift-drag ratio is mainly due to increased lift for DO and mainly due to reduced drag for the ROs. Fourth, the results of the proposed DSA-PC are similar to those of the FDM-PC, while the DSA-PC reduces the computational cost (N) by approximately 41.6% owing to the semi-analytical design sensitivity analysis. All these results demonstrate the effectiveness of the proposed DSA-PC method.

In addition, optimization was also performed considering only the uncertainties of the design variables; the resulting optimized airfoils are shown in Fig. 13. The airfoils generated by DO and the ROs show no clear difference, indicating that the uncertainty in airfoil geometry has a small impact on the lift-drag ratio. Taken together, these results indicate that uncertainties in Ma and α have a large impact on airfoil performance and should be taken into account in airfoil design.

5 Conclusion

In this paper, a semi-analytical sensitivity method based on the PC method in conjunction with Gaussian quadrature formulae is proposed to reduce the computational cost of calculating local design sensitivities in robust design optimization with computationally expensive response functions, for which the adjoint method is difficult to apply. With the proposed method, the local design sensitivity at each optimization iteration is derived directly from the PC model using the nodes already employed for the statistical moment calculation, instead of requiring many additional function evaluations. Several mathematical examples and an aerodynamic robust optimization problem were used to demonstrate the effectiveness of the proposed design sensitivity analysis method. The results showed that the proposed method yields satisfactory design sensitivities and optimal solutions close to those produced by the traditional finite difference method, while clearly reducing the computational cost of robust optimization. For the problems studied, with random input dimensions of up to 10, the proposed method saved up to 43.0% of the function calls, and the saving tends to be more pronounced as the random input dimension increases. For problems with highly nonlinear response functions, the proposed method with a low PC order may yield noticeable errors, which can be reduced by increasing the PC order. Future work will focus on developing a design sensitivity analysis approach for the least-squares-estimation-based PC method for robust optimization.
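The trend of larger savings at higher dimension can be rationalized with an idealized count. The sketch assumes forward differences with one extra moment estimate per design variable and ignores line-search and other optimizer evaluations, so the resulting per-step saving d/(d + 1) is an optimistic figure under these assumptions and is much larger than the savings actually reported (27.8% to 43.0%); the function names and the node count of 161 are hypothetical.

```python
def calls_per_gradient_step(n_nodes, n_design, method):
    """Hypothetical, idealized count of response calls needed for the moments and their
    gradient at one design point. Line searches and other optimizer overheads are ignored."""
    base = n_nodes                           # one quadrature pass for the moments
    if method == "FDM-PC":
        return base + n_design * n_nodes     # one extra pass per forward-difference perturbation
    if method == "DSA-PC":
        return base                          # sensitivities reuse the same nodes
    raise ValueError(f"unknown method: {method}")


def idealized_saving(n_nodes, n_design):
    """Fraction of calls saved per gradient step under the idealized count."""
    fdm = calls_per_gradient_step(n_nodes, n_design, "FDM-PC")
    dsa = calls_per_gradient_step(n_nodes, n_design, "DSA-PC")
    return 1.0 - dsa / fdm


for d in (2, 4, 8, 10):
    print(d, f"{idealized_saving(n_nodes=161, n_design=d):.1%}")
```

The node count cancels, leaving d/(d + 1): about 66.7%, 80.0%, 88.9%, and 90.9% for d = 2, 4, 8, and 10.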

Abbreviations

- b: Coefficients of polynomial chaos model
- d: Dimension of design variables
- N: Number of function evaluations
- m: Dimension of random parameters
- E(•): Expectation operator
- f(x): Objective function
- g(x): Constraint function
- p: Order of polynomial chaos model
- K: Accuracy level of sparse grid
- x (boldface): Random design vector
- x: Random design variable
- q (boldface): Random parameter vector
- q: Random parameter variable
- ω: Gauss-type quadrature weight coefficient
- y(x): Output response
- μ: Mean value
- σ: Standard deviation
- Φ: Multi-dimensional orthogonal polynomial
- φ: One-dimensional orthogonal polynomial basis function
- DSA: Design sensitivity analysis
- FDM: Finite difference method
- FFNI: Full factorial numerical integration
- MCS: Monte Carlo simulation
- SGNI: Sparse grid numerical integration

References

Abramowitz M, Stegun IA, Romer RH (1966) Handbook of mathematical functions with formulas, graphs, and mathematical tables. Phys Today 19(1):120–121. https://doi.org/10.1115/1.3625776

Beyer HG, Sendhoff B (2007) Robust optimization–a comprehensive survey. Comput Methods Appl Mech Eng 196:3190–3218. https://doi.org/10.1016/j.cma.2007.03.003

Blatman G, Sudret B (2011) Adaptive sparse polynomial chaos expansion based on least angle regression. J Comput Phys 230(6):2345–2367. https://doi.org/10.1016/j.jcp.2010.12.021

Cheng Q, Wang SW, Yan CC (2016) Robust optimal design of chilled water systems in buildings with quantified uncertainty and reliability for minimized life-cycle cost. Energ Buildings 126:159–169. https://doi.org/10.1016/j.enbuild.2016.05.032

Cho H, Choi KK, Lee I et al (2016) Design sensitivity method for sampling-based RBDO with varying standard deviation. J Mech Des 138(1):011405. https://doi.org/10.1115/1.4031829

Dodson M, Parks GT (2015) Robust aerodynamic design optimization using polynomial chaos. J Aircraft 46(2):635–646. https://doi.org/10.2514/1.39419

Du X, Leifsson L (2019) Optimum aerodynamic shape design under uncertainty by utility theory and metamodeling. Aerosp Sci Technol 105464. https://doi.org/10.1016/j.ast.2019.105464

Fisher J, Bhattacharya R (2011) Optimal trajectory generation with probabilistic system uncertainty using polynomial chaos. J Dyn Syst Meas Control 133(1):014501. https://doi.org/10.1115/1.4002705

Ghisu T, Shahpar S (2017) Toward affordable uncertainty quantification for industrial problems: part I, theory and validation. In: ASME Turbo Expo 2017: Turbomachinery Technical Conference and Exposition, 26–30 June, North Carolina, USA

Ghisu T, Shahpar S (2018) Affordable uncertainty quantification for industrial problems: application to aero-engine fans. J Turbomach 140(6):061005–061005-12. https://doi.org/10.1115/1.4038982

Harris CD (1981) Two-dimensional aerodynamic characteristics of the NACA 0012 airfoil in the Langley 8-Foot Transonic Pressure Tunnel. NASA Langley Research Center, NASA TM 81927

Janusevskis J, Rodolphe LR (2013) Simultaneous kriging-based estimation and optimization of mean response. J Global Optim 55(2):313–336. https://doi.org/10.1007/s10898-011-9836-5

Keshavarzzadeh V, Meidani H, Tortorelli DA (2016) Gradient based design optimization under uncertainty via stochastic expansion methods. Comput Method Appl M 306:47–76. https://doi.org/10.1016/j.cma.2016.03.046

Keshavarzzadeh V, Fernandez F, Tortorelli DA (2017) Topology optimization under uncertainty via non-intrusive polynomial chaos expansion. Comput Method Appl M 318:120–147. https://doi.org/10.1016/j.cma.2017.01.019

Kumar D, Raisee M, Lacor C (2018) Combination of polynomial chaos with adjoint formulations for optimization under uncertainties. In: Hirsch C et al (eds) Uncertainty management for robust industrial design in aeronautics. Springer, Cham. https://doi.org/10.1007/978-3-319-77767-2_35

Lee SH, Choi HS, Kwak BM (2008) Multilevel design of experiments for statistical moment and probability calculation. Struct Multidiscip O 37(1):57–70. https://doi.org/10.1007/s00158-007-0215-2

Lee SH, Chen W, Kwak BM (2009) Robust design with arbitrary distributions using Gauss-type quadrature formula. Struct Multidiscip O 39(3):227–243. https://doi.org/10.1007/s00158-008-0328-2

Lee I, Choi KK, Zhao L (2011a) Sampling-based RBDO using the stochastic sensitivity analysis and dynamic kriging method. Struct Multidiscip O 44(3):299–317. https://doi.org/10.1007/s00158-011-0659-2

Lee I, Choi KK, Noh Y et al (2011b) Sampling-based stochastic sensitivity analysis using score functions for RBDO problems with correlated random variables. J Mech Des 133(2):021003. https://doi.org/10.1115/1.4003186

Li J, Gao ZH, Huang JY, Zhao K (2013) Robust design of NLF airfoils. Chin J Aeronaut 26(2):309–318. https://doi.org/10.1016/j.cja.2013.02.007

Lucor D, Karniadakis GE (2004) Adaptive generalized polynomial chaos for nonlinear random oscillators. SIAM J Sci Comput 26(2):720–735. https://doi.org/10.1137/S1064827503427984

Miranda J, Kumar D, Lacor C (2016) Adjoint-based robust optimization using polynomial chaos expansions. In: VII European congress on computational methods in applied sciences and engineering, 5–10 June, Crete Island, Greece

Perkó Z, Gilli L, Lathouwers D, Kloosterman JL (2014) Grid and basis adaptive polynomial chaos techniques for sensitivity and uncertainty analysis. J Comput Phys 260(3):54–84. https://doi.org/10.1016/j.jcp.2013.12.025

Rehman S, Langelaar M, van Keulen F (2014) Efficient kriging-based robust optimization of unconstrained problems. J Comput Sci 5(6):872–881. https://doi.org/10.1016/j.jocs.2014.04.005

Rumpfkeil MP (2013) Robust design under mixed aleatory/epistemic uncertainties using gradients and surrogates. J Uncertain Anal Appl 1:7. https://doi.org/10.1186/2195-5468-1-7

Suryawanshi A, Ghosh D (2016) Reliability based optimization in aeroelastic stability problems using polynomial chaos based metamodels. Struct Multidiscip O 53:1069–1080. https://doi.org/10.1007/s00158-015-1322-0

Wang GL, Liu PQ (2016) A method of shape parameterization based on B-spline for wing design. Civil Aircr Desig Res (3):6–15. (In Chinese). https://doi.org/10.19416/j.cnki.1674-9804.2016.03.002

Wang F, Yang S, Xiong F et al (2019a) Robust trajectory optimization using polynomial chaos and convex optimization. Aerosp Sci Technol 92:314–325. https://doi.org/10.1016/j.ast.2019.06.011

Wang F, Xiong F, Chen S et al (2019b) Multi-fidelity uncertainty propagation using polynomial chaos and Gaussian process modeling. Struct Multidiscip O 60(4):1583–1604. https://doi.org/10.1007/s00158-019-02287-7

Wu X, Zhang W, Song S (2017) Robust aerodynamic shape design based on an adaptive stochastic optimization framework. Struct Multidiscip O 57(3):1–13. https://doi.org/10.1007/s00158-017-1766-5

Wu X, Zhang W, Song S (2018) Robust aerodynamic shape design based on an adaptive stochastic optimization framework. Struct Multidiscip O 57:639–651. https://doi.org/10.1007/s00158-017-1766-5

Xia Z, Luo J, Liu F (2019) Performance impact of flow and geometric variations for a turbine blade using an adaptive NIPC method. Aerosp Sci Technol 90:127–139. https://doi.org/10.1016/j.ast.2019.04.025

Xiong FF, Xiong Y, Greene S et al (2010) A new sparse grid based method for uncertainty propagation. Struct Multidiscip O 27(3):1118–1139. https://doi.org/10.1007/s00158-009-0441-x

Xiong FF, Yang SX, Liu Y et al (2015a) Analysis method of engineering probability uncertainty. Science Press, Beijing (In Chinese)

Xiong F, Xiong Y, Xue B (2015b) Trajectory optimization under uncertainty based on polynomial chaos expansion. AIAA Guidance, Navigation, and Control Conference, 5-9 Jan, Kissimmee, Florida. AIAA 2015-1761. https://doi.org/10.2514/6.2015-1761

Xiu D, Karniadakis GE (2002) The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM J Sci Comput 24(2):619–644. https://doi.org/10.1137/S1064827501387826

Xiu D, Karniadakis GE (2003) Modeling uncertainty in flow simulations via generalized polynomial chaos. J Comput Phys 187:137–167. https://doi.org/10.1016/S0021-9991(03)00092-5

Zhang Y (2013) Efficient uncertainty quantification in aerospace analysis and design. PhD dissertation, Missouri University of Science and Technology

Zhang Y, Hosder S (2013) Robust design optimization under mixed uncertainties with stochastic expansions. J Mech Design 135(8):081005. https://doi.org/10.1115/1.4024230

Zhang Y, Zhu P, Chen GL (2007) Lightweight design of automotive front side rail based on robust optimization. Thin Wall Struct 45(7):670–676. https://doi.org/10.1016/j.tws.2007.05.007

Funding

The work was supported by the National Numerical Wind Tunnel Project (grant number NNW2020ZT7-B31) and Science Challenge Project (grant number TZ2018001).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

The results shown in the manuscript can be reproduced. Owing to the size limit for supplementary material, the codes for two of the mathematical examples for uncertainty propagation (UP) (Example 1 in Section 4.1 and Example 2 in Section 4.2) were uploaded as supplementary material. The remaining examples can be readily implemented by changing the response functions and sample points in the provided codes to obtain the results shown in the manuscript.

Additional information

Responsible Editor: Erdem Acar

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

ESM 1

(Zip 73.8 kb)


Cite this article

Ren, C., Xiong, F., Mo, B. et al. Design sensitivity analysis with polynomial chaos for robust optimization. Struct Multidisc Optim 63, 357–373 (2021). https://doi.org/10.1007/s00158-020-02704-2


DOI: https://doi.org/10.1007/s00158-020-02704-2