Abstract

Variable correlation commonly exists in practical engineering applications. However, most existing polynomial chaos (PC) approaches for uncertainty propagation (UP) assume that the input random variables are independent. To address variable correlation, an intrusive PC method has been developed for dynamic systems, but it is not applicable to problems with black-box functions. Therefore, building on the existing data-driven PC method, this paper develops a new non-intrusive data-driven polynomial chaos approach that directly considers variable correlation for UP of computationally expensive black-box problems. In the proposed method, the multivariate orthogonal polynomial basis of the correlated input random variables is constructed by solving moment-matching equations based on correlation statistical moments, so that the variable correlation is accounted for. A comprehensive comparative study on several numerical examples of UP and design optimization under uncertainty with correlated input random variables is conducted to verify the effectiveness and advantage of the proposed method. The results show that the proposed method is more accurate than the existing data-driven PC method with Nataf transformation when the variable distribution is known, and that it produces accurate results when the variable distribution is unknown.



1 Introduction

Uncertainty propagation (UP) methods, which quantify the uncertainty in system output performance caused by random or noisy inputs, are of great importance for design under uncertainty, especially for problems involving computationally expensive black-box simulation models (such as finite element analysis and computational fluid dynamics). A variety of probabilistic UP approaches have been developed (Lee and Chen 2009), among which the polynomial chaos (PC) technique is a rigorous one owing to its strong mathematical basis. With PC, a function of random inputs can be represented as a stochastic metamodel, from which the lower-order statistical moments and the reliability of the function output can be derived efficiently. This facilitates design optimization under uncertainty, such as robust design optimization (Xiong et al. 2011a) and reliability-based design optimization (Coelho and Bouillard 2011). Owing to its efficiency and accuracy for UP, the PC method has been extensively applied to engineering problems, such as uncertainty propagation in composite structures (Mukhopadhyay et al. 2016), robust aerodynamic optimization of airfoils (Dodson and Parks 2015), marine vessels (Wei et al. 2018), and flight vehicle trajectories (Prabhakar et al. 2010).

The original PC method employs Hermite orthogonal polynomials as the basis, which converge slowly when the input random variables follow non-Gaussian distributions (William and Meecham 1968). To address this issue, Xiu and Karniadakis extended the original PC method to a generalized PC (gPC) method that can directly deal with five typical distribution types based on the Askey scheme (Xiu and Karniadakis 2003). In addition, to handle UP problems with arbitrary distributions and improve the applicability of the PC method, a Gram-Schmidt PC method (GS-PC) based on Gram-Schmidt orthogonalization and a multi-element generalized PC method (ME-PC) were developed by Witteveen and Bijl (Witteveen and Bijl 2013) and Wan and Karniadakis (Wan and Karniadakis 2006), respectively. However, in practical engineering, cost limitations often leave only limited data, so the complete probability distribution of an input random variable may not be available.

To address this problem, non-probabilistic UP methods can be used, such as random sets (Zhang and Achari 2010), interval probabilities (Xiao et al. 2016; Liu et al. 2019), interval theory (Xia et al. 2017; Li et al. 2017), and fuzzy logic (Abou 2012). Alternatively, within classical probability theory, Oladyshkin and Nowak proposed a non-intrusive data-driven PC (DD-PC) method that does not require distribution functions: the univariate orthogonal polynomial basis is constructed by matching the statistical moments of the input random variable, up to a certain order, computed from the discrete data (Oladyshkin et al. 2011; Oladyshkin and Nowak 2012). Later, to improve the accuracy and efficiency of DD-PC, they applied a Bayesian method to calibrate the DD-PC model (Oladyshkin et al. 2013). To solve higher-dimensional UP problems, Wang et al. developed an enhanced DD-PC method by extending the Galerkin projection method to DD-PC, in which the quadrature nodes and weights required for PC coefficient calculation are obtained by solving the moment-matching equations (Wang et al. 2017). Guo et al. improved the accuracy of the DD-PC method with an equilibrium sampling strategy and a weighted least-squares method (Guo et al. 2019). DD-PC methods have been shown to have a wider scope of application than the existing PC approaches (gPC, GS-PC, ME-PC) that require complete probability distributions of the input random variables.

However, all existing PC methods, including gPC, GS-PC, ME-PC, and DD-PC, generally assume that the input random variables are mutually independent when constructing the PC model. In practical engineering applications, the input random variables are often statistically correlated, for example the material properties and fatigue properties in structural analysis (Socie 2003), and the length and width of a beam structure designed for earthquake resistance (Du 2008). To deal with correlated input random variables, transformation methods such as the Rosenblatt transformation (Rosenblatt 1952), the orthogonal transformation (Rackwitz and Fiessler 1978), and the Nataf transformation (Kiureghian and Liu 1986) can be employed to first transform the correlated input random variables into independent standard normal ones, after which UP with PC is conducted with respect to the transformed variables. Noh et al. compared the Rosenblatt and Nataf transformations and applied them to design optimization under uncertainty of a coil spring with correlation between the inner diameter and the wire diameter (Noh et al. 2009). Song et al. applied the Nataf transformation to statistical sensitivity analysis with correlated input random variables (Song et al. 2011). However, these transformation methods inevitably introduce errors, which may be large, especially when the correlated input random variables follow complex non-Gaussian distributions or the response function is highly nonlinear (Lebrun and Dutfoy 2009). Moreover, when a correlated input random variable has no complete distribution function because of cost limitations and is available only as data, these transformation approaches, which rely on the probability distributions of the variables, are clearly inapplicable.
In this case, the existing DD-PC methods cannot work, let alone the PC methods that require complete probability distributions (such as gPC, GS-PC, and ME-PC). To address this issue, Paulson et al. (Paulson et al. 2017) developed an intrusive arbitrary polynomial chaos method for UP of dynamic systems with correlated input random variables, in which the orthogonal polynomial basis of the correlated random variables was constructed by Gram-Schmidt orthogonalization based on their statistical moments, and Galerkin projection was employed to calculate the PC coefficients (Appendix). Wang et al. (Wang et al. 2019) later applied this method to probabilistic load flow. However, intrusive PC methods cannot be applied to computationally expensive black-box problems.

Therefore, as an improvement of the existing DD-PC method, this work develops a new non-intrusive DD-PC approach for computationally expensive black-box problems that directly considers the correlation of the input random variables when constructing the orthogonal polynomials (abbreviated as DD-PC-Corr in this paper). The multivariate orthogonal polynomial basis is constructed conveniently by solving moment-matching equations based on the correlation statistical moments of the input random variables, rather than through the considerably more complicated Gram-Schmidt orthogonalization process. In addition, a comprehensive comparative study of UP and design optimization under uncertainty, on problems with different nonlinearity and different random variable information (different distribution types and raw data), is conducted to fully explore the effectiveness and advantage of the proposed method. In the proposed method, the concept of a correlation statistical moment, defined as a statistical moment of a given order that accounts for variable correlation, is introduced and computed from the discrete data of the input random variables. By matching the correlation statistical moments from order zero up to a certain order, the multivariate orthogonal polynomials of the correlated input random variables are constructed directly from the moment-matching equations. Regression is employed to calculate the PC coefficients. When the probability distribution of the correlated random inputs is known, the proposed method requires no transformation, so the accuracy of UP is expected to improve compared with the existing PC approaches (gPC, GS-PC, ME-PC, and DD-PC), which rely on such a transformation. When the probability distributions of the correlated random inputs are unknown and only raw data exist, none of the existing PC approaches can be applied, while the proposed DD-PC-Corr method is still applicable.

The rest of this article is organized as follows. The proposed DD-PC-Corr method that can directly consider correlated input random variables is presented in Sect. 2. In Sect. 3, the proposed method is applied to several numerical examples for UP to explore its effectiveness and accuracy. In Sect. 4, the proposed method is further employed to robust design optimization of a mathematical example and reliability-based design optimization of a coil spring problem. Conclusions are drawn in Sect. 5.

2 The proposed DD-PC-Corr method

As an improvement of the existing DD-PC approach, a new non-intrusive DD-PC-Corr method is proposed in this paper to make PC theory applicable to UP problems whose correlated input random variables do not have complete probability distribution functions. In the existing DD-PC approaches, the univariate orthogonal polynomial basis of each input random variable is constructed without considering variable correlation, whereas in the proposed method the multivariate orthogonal polynomial basis of the correlated input random variables is constructed directly, without any transformation. A step-by-step description of the proposed DD-PC-Corr method is given below. For brevity, UP of a function y = g(x) with a d-dimensional input random vector x = [x1, …, xd] is used for illustration. It is assumed that x1, …, xz (z ≤ d) are correlated with each other, while the remaining variables \( {x}_{z+1},\dots, {x}_d \) are independent.


Step 1: Represent the stochastic output y as a PC model of order H:

where bi represents the ith PC coefficient; ⊗ denotes the tensor product operation; and Q + 1 (\( Q+1=\frac{\left(d+H\right)!}{d!H!} \)) is the number of polynomial terms in the PC model.

In (1), P(x1, …, xz) and \( \overline{P}\left({x}_{z+1},\dots, {x}_d\right) \) are the orthogonal polynomials corresponding to the correlated input random variables x1, …, xz and the independent random variables \( {x}_{z+1},\dots, {x}_d \), respectively. The numbers of terms in P(x1, …, xz) and \( \overline{P}\left({x}_{z+1},\dots, {x}_d\right) \) are \( \frac{\left(z+H\right)!}{z!H!} \) and \( \frac{\left(d-z+H\right)!}{\left(d-z\right)!H!} \), respectively. \( \overline{P}\left({x}_{z+1},\dots, {x}_d\right) \) is constructed as the tensor product of the univariate orthogonal polynomial bases:

\[ \overline{P}\left({x}_{z+1},\dots, {x}_d\right)={\phi_{z+1}}^{\left({\alpha}_{z+1}\right)}\left({x}_{z+1}\right)\otimes \cdots \otimes {\phi_d}^{\left({\alpha}_d\right)}\left({x}_d\right),\quad 0\le \sum \limits_{j=z+1}^d{\alpha}_j\le H \qquad (2) \]

where \( {\phi_j}^{\left({\alpha}_j\right)}\left({x}_j\right) \) represents the univariate orthogonal polynomial basis of xj, constructed by matching the statistical moments of xj from order 0 up to a given order.
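For readers unfamiliar with the existing DD-PC construction referred to here, the following Python sketch builds monic univariate polynomials orthogonal with respect to the empirical distribution of a data sample by moment matching, in the spirit of Oladyshkin and Nowak (2012). The function name `univariate_dd_pc_basis`, the monic normalization, and the use of plain sample moments are assumptions made for illustration; this is not the cited authors' code.

```python
import numpy as np


def univariate_dd_pc_basis(samples, max_degree):
    """Monic polynomials orthogonal w.r.t. the empirical distribution of `samples`.

    Hypothetical helper. Returns a list of coefficient arrays (lowest power
    first); entry k is the degree-k polynomial, obtained by raw-moment matching.
    """
    x = np.asarray(samples, dtype=float)
    # Raw sample moments mu_0 .. mu_{2*max_degree-1}
    mu = np.array([np.mean(x**m) for m in range(2 * max_degree)])
    basis = [np.array([1.0])]  # degree-0 polynomial
    for k in range(1, max_degree + 1):
        A = np.zeros((k + 1, k + 1))
        for i in range(k):
            # Row i: orthogonality of the degree-k polynomial to x**i
            A[i, :] = mu[i:i + k + 1]
        A[k, k] = 1.0  # monic normalization: leading coefficient = 1
        rhs = np.zeros(k + 1)
        rhs[k] = 1.0
        basis.append(np.linalg.solve(A, rhs))
    return basis


# Usage: degree-3 basis from a skewed (exponential) data sample
rng = np.random.default_rng(0)
data = rng.exponential(size=5000)
for k, c in enumerate(univariate_dd_pc_basis(data, 3)):
    print(k, np.round(c, 3))
```

Because only moments enter the linear system, the same code works whether the data come from a known distribution or from raw measurements, which is the property DD-PC relies on.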

The construction of \( {\phi_j}^{\left({\alpha}_j\right)}\left({x}_j\right) \) is exactly the same as in the existing DD-PC methods (Oladyshkin et al. 2011; Oladyshkin and Nowak 2012) and is therefore not detailed here. The main contribution of this work lies in the construction of P(x1, …, xz), which directly considers variable correlation. The kth (\( k=0,1,\dots, \frac{\left(z+H\right)!}{z!H!}-1 \)) multivariate orthogonal polynomial P(k)(x1, …, xz) is defined as

\[ {P}^{(k)}\left({x}_1,\dots, {x}_z\right)=\sum \limits_{s=0}^k{p}_s^{(k)}\prod \limits_{j=1}^z{\left({x}_j\right)}^{{\alpha}_j^s} \qquad (3) \]

where \( {p}_s^{(k)} \) is the polynomial coefficient to be solved and \( {\alpha}_j^s \) is the power of xj in the sth term, which satisfies \( 0\le \sum \limits_{j=1}^z{\alpha}_j^s\le H \).

Taking z = 2 and H = 2 as an example, there are 6 orthogonal polynomial terms of order no more than 2, and P(k)(x1, x2) (k = 0, 1, …, 5) can be constructed as below:
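These six terms correspond to the monomials of total degree at most 2 in x1 and x2. The exact ordering used in (4) cannot be recovered from this text, so the Python sketch below assumes a graded ordering (by total degree, with higher powers of earlier variables first). Under that assumption, P(k) has the form of (3) with the kth monomial as its leading term and coefficient \( {p}_k^{(k)}=1 \). The helper names are hypothetical.

```python
from itertools import product
from math import comb


def graded_multi_indices(z, H):
    """Exponent tuples (alpha_1, ..., alpha_z) with total degree <= H.

    Assumed ordering: by total degree, then higher powers of earlier variables first.
    """
    idx = [a for a in product(range(H + 1), repeat=z) if sum(a) <= H]
    return sorted(idx, key=lambda a: (sum(a), tuple(-v for v in a)))


def monomial_label(alpha):
    """Readable label such as 'x1^2' or 'x1*x2' for an exponent tuple."""
    parts = [f"x{j + 1}" + (f"^{p}" if p > 1 else "")
             for j, p in enumerate(alpha) if p > 0]
    return "*".join(parts) or "1"


alphas = graded_multi_indices(2, 2)
assert len(alphas) == comb(2 + 2, 2)  # (z+H)!/(z!H!) = 6 terms
print([monomial_label(a) for a in alphas])
# -> ['1', 'x1', 'x2', 'x1^2', 'x1*x2', 'x2^2']
```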


Step 2: Solve for the polynomial coefficients \( {p}_s^{(k)} \) in (3) to generate the multivariate orthogonal polynomial basis considering variable correlation.

By the orthogonality of the polynomials, we have

where \( {\delta}_{kl} \) is the Kronecker delta, \( {\Omega}_c \) stands for the original correlated random variable space, and Γ(x) represents the joint cumulative distribution function (CDF) of the correlated random variables.
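As a worked illustration of how the orthogonality condition carries the correlation into the basis, take z = 2 with the graded ordering 1, x1, x2, … (an assumption, since the ordering in (4) is not recoverable here) and the normalization \( {p}_k^{(k)}=1 \) introduced in the next paragraph. Write \( {\mu}_{a,b} \) for the expectation, under Γ, of the product of the ath and bth monomials, so that \( {\mu}_{0,0}=1 \), \( {\mu}_{1,0}={\mu}_{0,1}=E\left[{x}_1\right] \), \( {\mu}_{2,0}=E\left[{x}_2\right] \), \( {\mu}_{1,1}=E\left[{x}_1^2\right] \), and \( {\mu}_{2,1}=E\left[{x}_1{x}_2\right] \). Requiring P(1) to be orthogonal to P(0) = 1, and P(2) to be orthogonal to P(0) and P(1) (equivalently, to 1 and x1), gives:

```latex
\[
P^{(1)} = p_0^{(1)} + x_1:\quad
p_0^{(1)}\mu_{0,0} + \mu_{1,0} = 0
\;\Rightarrow\; P^{(1)} = x_1 - E[x_1]
\]
\[
P^{(2)} = p_0^{(2)} + p_1^{(2)} x_1 + x_2:\quad
\begin{cases}
p_0^{(2)}\mu_{0,0} + p_1^{(2)}\mu_{1,0} + \mu_{2,0} = 0\\
p_0^{(2)}\mu_{0,1} + p_1^{(2)}\mu_{1,1} + \mu_{2,1} = 0
\end{cases}
\]
\[
\Rightarrow\; P^{(2)} = \bigl(x_2 - E[x_2]\bigr)
 - \frac{\operatorname{Cov}(x_1,x_2)}{\operatorname{Var}(x_1)}\bigl(x_1 - E[x_1]\bigr)
\]
```

If x1 and x2 are uncorrelated, the second term vanishes and P(2) reduces to x2 − E[x2], exactly as in the independent-variable construction; otherwise the covariance enters the basis directly, with no transformation of the inputs.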

Assuming that all the polynomial coefficients \( {p}_s^{(k)}\left(k<\frac{\left(z+H\right)!}{z!H!}\right) \) in (3) are nonzero and setting \( {p}_k^{(k)}=1 \) for simplicity, a new set of equations is obtained as below by taking the inner product between P(r) (r = 0, 1, …, k − 1) and P(k) based on (5):

By expanding the second equation in (6), one can obtain

From the first equation in (6), it can be derived that

Substituting (8) into (7), (7) can be simplified as

Since \( {p}_k^{(k)}=1 \) by assumption, (9) can be further simplified to

Similarly, by expanding the third equation in (6), one can obtain

Based on the first equation in (6) and (9), it can be derived that

Substituting (12) and (13) into (11), (11) can be simplified as

As \( {p}_2^{(2)}=1 \), (14) can be further transformed as

Applying the same procedure to the 4th, 5th, …, kth equations in (6) yields k − 3 further simplified equations. Combining these simplified equations ((10), (15), …), a new set of equations is obtained as below:

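Define \( {\int}_{x_1,\dots, {x}_z\in {\Omega}_c}\underset{j=1}{\overset{z}{\Pi}}{\left({x}_j\right)}^{\left({\alpha}_j^a+{\alpha}_j^b\right)}\boldsymbol{d}\Gamma \left({x}_1,\dots, {x}_z\right)={\mu}_{a,b} \), and rewrite (16) in matrix form as

\[ \left[\begin{array}{cccc}{\mu}_{0,0}& {\mu}_{1,0}& \cdots & {\mu}_{k,0}\\ {}{\mu}_{0,1}& {\mu}_{1,1}& \cdots & {\mu}_{k,1}\\ {}\vdots & \vdots & \ddots & \vdots \\ {}{\mu}_{0,k-1}& {\mu}_{1,k-1}& \cdots & {\mu}_{k,k-1}\\ {}0& 0& \cdots & 1\end{array}\right]\left[\begin{array}{c}{p}_0^{(k)}\\ {}{p}_1^{(k)}\\ {}\vdots \\ {}{p}_{k-1}^{(k)}\\ {}{p}_k^{(k)}\end{array}\right]=\left[\begin{array}{c}0\\ {}0\\ {}\vdots \\ {}0\\ {}1\end{array}\right] \qquad (17) \]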

In (17), μa,b (a = 0, 1, …, k; b = 0, 1, …, k − 1) is named the correlation statistical moment of x1, …, xz in this paper; it directly takes the correlation among x1, …, xz into account. If the distribution is unknown, μa,b can be easily estimated from the given discrete data of x1, …, xz, for example as the sample average of \( \prod_{j=1}^{z}{\left({x}_j\right)}^{\left({\alpha}_j^a+{\alpha}_j^b\right)} \) over the data points. Solving (17) yields the unknown polynomial coefficients in (3), which completes the construction of the multivariate orthogonal polynomial P(k) for the correlated input random variables.
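The moment-matching system (17) is straightforward to implement once the correlation statistical moments are estimated from data. The Python sketch below is one reading of Steps 1 and 2, not the authors' code: it assumes the graded monomial ordering, estimates μa,b as sample averages of products of monomials, solves (17) for each k with \( {p}_k^{(k)}=1 \), and evaluates the resulting basis so that orthogonality on the data can be checked. All function names are hypothetical.

```python
import numpy as np
from itertools import product


def graded_multi_indices(z, H):
    """Exponent tuples of total degree <= H in an assumed graded ordering."""
    idx = [a for a in product(range(H + 1), repeat=z) if sum(a) <= H]
    return sorted(idx, key=lambda a: (sum(a), tuple(-v for v in a)))


def monomials(X, alphas):
    """M[i, s] = prod_j X[i, j] ** alphas[s][j] for each sample i and term s."""
    X = np.asarray(X, dtype=float)
    return np.column_stack([np.prod(X ** np.array(a), axis=1) for a in alphas])


def correlated_basis(X, H):
    """Solve (17) for every k; X holds raw data of the z correlated inputs (n x z)."""
    X = np.asarray(X, dtype=float)
    alphas = graded_multi_indices(X.shape[1], H)
    M = monomials(X, alphas)
    mu = M.T @ M / X.shape[0]  # mu[a, b]: sample correlation statistical moments
    coeffs = [np.array([1.0])]  # P^(0) = 1
    for k in range(1, len(alphas)):
        A = np.zeros((k + 1, k + 1))
        A[:k, :] = mu[:k, :k + 1]  # row b, column a holds mu_{a,b} (mu is symmetric)
        A[k, k] = 1.0              # normalization p_k^(k) = 1
        rhs = np.zeros(k + 1)
        rhs[k] = 1.0
        coeffs.append(np.linalg.solve(A, rhs))
    return alphas, coeffs


def evaluate_basis(X, alphas, coeffs):
    """Column k holds P^(k) evaluated at the rows of X."""
    M = monomials(X, alphas)
    return np.column_stack([M[:, :len(c)] @ c for c in coeffs])


# Usage: correlated Gaussian data (rho = 0.8) and H = 2, i.e. six basis terms
rng = np.random.default_rng(0)
X = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.8], [0.8, 1.0]], size=5000)
alphas, coeffs = correlated_basis(X, H=2)
Psi = evaluate_basis(X, alphas, coeffs)
print(np.round(Psi.T @ Psi / len(X), 6))  # empirical Gram matrix, ~diagonal
```

Raw-moment matrices of this kind become ill-conditioned as H grows or when the inputs have a small spread relative to their means, so centering and scaling the data before building the basis seems a sensible precaution; this is a suggestion, not a step stated in the paper.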


Step 3: Once P(x1, …, xz) and \( \overline{P}\left({x}_{z+1},\dots, {x}_d\right) \) are constructed, the regression (Isukapalli et al. 2000) or Galerkin projection (Xiu and Karniadakis 2002) techniques can be employed to calculate the PC coefficients bi in the PC model.

It should be pointed out that Galerkin projection generally relies on efficient Gaussian quadrature, which requires the Gaussian quadrature nodes. In the proposed method, however, a multivariate orthogonal polynomial is constructed for the correlated input random variables, and its zeros (the Gaussian quadrature nodes) are difficult to obtain. Other numerical integration methods would therefore have to replace Gaussian quadrature, but they require a large number of nodes to calculate the PC coefficients, which is generally unaffordable for problems with computationally expensive simulation models. Therefore, regression is recommended for calculating the PC coefficients in the proposed method. For sampling, if the distributions of the input random variables are known, the Latin hypercube sampling method considering sample weights (Xiong et al. 2011b) is employed to generate the sample points for regression. If only data exist for the input random variables, the input sample points are selected from the given raw data so as to ensure space-filling and spatial uniformity as far as possible.
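Step 3 with raw data can be sketched as follows. The paper does not specify its rule for selecting space-filling points from raw data, so this Python sketch assumes a greedy maximin (farthest-point) selection on standardized data, then fits the PC coefficients by ordinary least squares with `numpy.linalg.lstsq`. The basis matrix is passed in, so any basis can be used (a small monomial basis in the usage example; in the method it would be the correlated basis from Step 2). The helper names are hypothetical.

```python
import numpy as np


def maximin_subset(data, n_points, seed=0):
    """Greedy farthest-point selection of n_points row indices from raw data."""
    data = np.asarray(data, dtype=float)
    z = (data - data.mean(axis=0)) / data.std(axis=0)  # standardize so scales match
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(z)))]  # random starting point
    dist = np.linalg.norm(z - z[chosen[0]], axis=1)
    while len(chosen) < n_points:
        nxt = int(np.argmax(dist))  # point farthest from all chosen points
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(z - z[nxt], axis=1))
    return np.array(chosen)


def fit_pc_coefficients(Psi, y):
    """Least-squares PC coefficients b minimizing ||Psi @ b - y||_2."""
    b, *_ = np.linalg.lstsq(Psi, y, rcond=None)
    return b


def quad_basis(X):
    """Illustrative 6-term monomial basis in two inputs (stand-in for Step 2)."""
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([np.ones(len(X)), x1, x2, x1**2, x1 * x2, x2**2])


# Usage: 6 unknown coefficients -> 2 * 6 = 12 runs chosen from 1000 raw data points
rng = np.random.default_rng(0)
raw = rng.multivariate_normal([1.0, 0.15], [[0.01, 0.0008], [0.0008, 0.0001]], size=1000)
Xs = raw[maximin_subset(raw, 12)]
y = Xs[:, 0] * Xs[:, 1] ** 2  # stand-in for an expensive black-box response
print(np.round(fit_pc_coefficients(quad_basis(Xs), y), 4))
```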


Step 4: Once the PC coefficients are calculated, a cheap stochastic meta-model is constructed, based on which Monte Carlo simulation (MCS) is conducted to obtain the probabilistic characteristics of output y.

For the proposed method, the main computational cost comes from Step 3, which requires many function calls; the cost of the orthogonal polynomial construction in Steps 1 and 2 is almost negligible in comparison. As an improvement of the existing DD-PC method, the proposed method reduces to the existing DD-PC method when the variable correlation is zero.
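Step 4 amounts to cheap Monte Carlo sampling of the fitted metamodel. The Python sketch below computes the quantities reported in Sect. 3 (mean, standard deviation, skewness, kurtosis, failure probability) and the percentage error against a benchmark. Assumptions not fixed by the text: failure is taken as g ≤ 0, kurtosis is the non-excess (Pearson) kurtosis, equal to 3 for a normal output, and the surrogate is any vectorized callable. Names are hypothetical.

```python
import numpy as np


def output_statistics(surrogate, X):
    """Mean, std, skewness, kurtosis and P[g <= 0] of surrogate outputs at samples X."""
    y = np.asarray(surrogate(X), dtype=float)
    m = y.mean()
    s = y.std()
    ske = np.mean((y - m) ** 3) / s**3
    kur = np.mean((y - m) ** 4) / s**4  # non-excess kurtosis (3 for a normal)
    pf = np.mean(y <= 0.0)              # assumed failure convention: g <= 0
    return {"M": m, "Std": s, "Ske": ske, "Kur": kur, "Pf": pf}


def relative_error(estimate, reference):
    """Percentage error relative to the MCS benchmark (ill-defined if reference ~ 0)."""
    return 100.0 * abs(estimate - reference) / abs(reference)


# Usage: a linear surrogate of correlated Gaussian inputs gives a Gaussian output
rng = np.random.default_rng(0)
X = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.5], [0.5, 1.0]], size=10**5)
stats = output_statistics(lambda X: 1.0 + X[:, 0] + X[:, 1], X)
print({k: round(float(v), 4) for k, v in stats.items()})
```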

3 Numerical test for uncertainty propagation

In this section, the proposed DD-PC-Corr method is applied to four mathematical examples and one coil spring problem to verify its effectiveness and accuracy for UP with correlated input random variables. MCS results obtained with 10⁶ runs of the original response function serve as the benchmark. The first four statistical moments (mean, standard deviation, skewness, and kurtosis, abbreviated as M, Std, Ske, and Kur in this paper) and the probability of failure (Pf) of the output response are calculated, and their errors relative to MCS are reported.

Clearly, the polynomial order is an important tuning parameter for PC, and many works have addressed how to choose it systematically (Hampton and Doostan 2018; Sinou and Jacquelin 2015). However, the main contribution of the proposed method lies in the moment-matching construction of the orthogonal polynomial basis considering variable correlation, while the remaining procedures, such as the calculation of the PC coefficients, are basically the same as in existing PC approaches. Therefore, the order of the PC model is simply set to H = 3 as a compromise between accuracy and computational cost. The regression method (Isukapalli 1999) is employed to calculate the PC coefficients, with the sample size set to twice the number of unknown PC coefficients, as is common in the literature (Hosder et al. 2007).
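The number of PC coefficients, and hence the regression sample size, follows directly from the term-count formulas of Sect. 2. The Python helper below (a hypothetical name) evaluates them; for two correlated inputs, as in the coil spring problem of Sect. 3.2, with H = 3 it gives 10 coefficients and therefore 20 model runs.

```python
from math import comb


def pc_sizes(d, z, H):
    """Term counts from Sect. 2 and the regression sample size used in Sect. 3."""
    n_total = comb(d + H, H)      # Q + 1 = (d+H)!/(d!H!)
    n_corr = comb(z + H, H)       # terms of P(x1, ..., xz)
    n_indep = comb(d - z + H, H)  # terms of P-bar(x_{z+1}, ..., x_d)
    n_samples = 2 * n_total       # twice the number of unknown PC coefficients
    return n_total, n_corr, n_indep, n_samples


print(pc_sizes(d=2, z=2, H=3))  # -> (10, 10, 1, 20)
```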

3.1 Mathematical examples

To fully investigate the effectiveness of the proposed method, four mathematical examples with varying nonlinearity and different distribution forms of the input random variables are considered, as shown in Table 1. In Table 1, N, U, and Rayl denote the normal, uniform, and Rayleigh distributions, respectively. For Cases 1 to 3, the correlated input random variables have known probability distributions, and the existing DD-PC method with Nataf transformation is also tested for comparison. For Case 4, the correlated input random variables have no probability distributions and exist only as raw discrete data; the existing DD-PC method with Nataf transformation cannot work in this case, so only the proposed method is tested. For all cases, different correlation strengths (ρ = 0, 0.5, and 0.8, i.e., uncorrelated, medium, and strong) are considered.

The first four statistical moments (M, Std, Ske, and Kur) and the probability of failure (Pf) of the output response obtained by the proposed DD-PC-Corr method (denoted by Proposed), the existing DD-PC method with Nataf transformation (denoted by Existing), and MCS are shown in Figs. 1–16, in which the percentage errors of the proposed and existing methods relative to MCS are shown above the corresponding bars. Several noteworthy observations can be made from these figures.

First, for Cases 1 to 3, where the distributions of the input random variables are known, the results of the proposed DD-PC-Corr method are clearly much more accurate than those of the existing DD-PC method with Nataf transformation for the various correlation coefficients, owing to the direct consideration of variable correlation in the construction of the orthogonal polynomial basis. The existing method uses the Nataf transformation to map the correlated input random variables to independent standard normal ones, which introduces errors into UP. In particular, for Examples 2–4, whose functions are more nonlinear than Example 1 (linear), the errors of the existing method are clearly larger. For Example 1, since the function is linear, the results of the proposed method are almost identical to those of MCS.

Second, compared with Case 1, the errors of the existing method in Cases 2 and 3 are generally larger and the accuracy advantage of the proposed method is more obvious, especially for skewness and kurtosis. This is because Cases 2 and 3 involve uniform and Rayleigh distributions, for which the Nataf transformation introduces larger errors than in Case 1 with normal distributions. Moreover, in PC theory skewness and kurtosis are more nonlinear functions of the PC model than the mean and variance, so the errors induced by the Nataf transformation are larger for them.

Third, in Case 4, where the distributions of the input random variables are unknown, the proposed method considering variable correlation produces accurate results very close to MCS, while the existing DD-PC method with Nataf transformation cannot be applied at all.

All these results demonstrate the effectiveness and advantage of the proposed method.

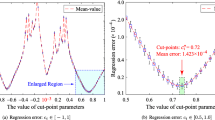

To study convergence, the relative errors (%) of the statistical moments and Pf obtained with different PC orders by the proposed DD-PC-Corr method and the existing DD-PC method with Nataf transformation are shown in Figs. 17, 18, 19, and 20. Owing to space limitations, only the results of Function 2 with strong correlation (ρ = 0.8) are shown. For both methods, the relative errors decrease significantly as the PC order increases, and they decline clearly faster for the proposed method, indicating better convergence. Meanwhile, for the same PC order (i.e., the same number of function calls), the errors of the proposed method are evidently smaller than those of the existing approach. These results demonstrate the effectiveness and good convergence of the proposed method.

3.2 Coil spring

A coil spring problem adopted from Arora (2004) (see Fig. 21) is further employed to investigate the effectiveness of the proposed method. It is originally a design problem and is adapted here as a UP example. In the manufacturing process, the inner diameter D (in inches) and the wire diameter d (in inches) may be physically correlated; they are assumed to follow normal distributions, D ∼ N(1, 0.1²) and d ∼ N(0.15, 0.01²). The remaining parameters are deterministic and are listed in Table 2.
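When the joint distribution is specified in this way, correlated samples of D and d (for MCS, or for estimating the correlation statistical moments) can be drawn with a Cholesky factor of the correlation matrix. This Python sketch assumes a bivariate normal joint distribution (normal marginals plus a correlation coefficient do not fix the joint distribution in general); the function name is hypothetical.

```python
import numpy as np


def sample_correlated_normals(means, stds, rho, n, seed=0):
    """Draw n samples of a bivariate normal with given means, stds and |rho| < 1."""
    means = np.asarray(means, dtype=float)
    stds = np.asarray(stds, dtype=float)
    L = np.linalg.cholesky(np.array([[1.0, rho], [rho, 1.0]]))
    u = np.random.default_rng(seed).standard_normal((n, 2))  # independent N(0, 1)
    return means + (u @ L.T) * stds  # correlate, then scale and shift


# Usage: D ~ N(1, 0.1^2), d ~ N(0.15, 0.01^2) with rho_Dd = 0.8
Dd = sample_correlated_normals([1.0, 0.15], [0.1, 0.01], rho=0.8, n=100_000)
print(Dd.mean(axis=0), Dd.std(axis=0), np.corrcoef(Dd.T)[0, 1])
```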

The quantity of interest is the mass of the coil spring, calculated as follows:

Similarly, different strengths of the correlation coefficient between D and d (ρDd) are considered. The first four statistical moments (M, Std, Ske, and Kur) of the output response obtained by the proposed DD-PC-Corr method (denoted by Proposed) and MCS are shown in Fig. 22, with the percentage errors of the proposed method relative to MCS shown above the corresponding bars. As Fig. 22 shows, all results calculated by the proposed method are very close to those of MCS for all correlation strengths, with relative errors basically within 1%. The relative errors of skewness and kurtosis are generally larger than those of the mean and standard deviation, because skewness and kurtosis are more nonlinear functions of the PC model. These results agree well with those of Sect. 3.1 and further demonstrate the effectiveness of the proposed method.

4 Numerical test for design optimization under uncertainty

In this section, the proposed DD-PC-Corr method is employed for robust optimization of a mathematical example and reliability-based design optimization of a coil spring problem to further verify its effectiveness and accuracy for UP with correlated input random variables. Optimization is also conducted with MCS-based UP, and its results are used as the benchmark. After optimization, MCS is conducted to obtain confirmed results, by substituting the optimal design variables obtained from DD-PC-Corr-based and MCS-based design optimization into the objective and constraint functions while accounting for the uncertainties of the input random variables. The order of the PC model is set to H = 3.

4.1 Mathematical example

Robust design optimization of the mathematical example in (19) is first performed, with the proposed DD-PC-Corr method used for statistical moment calculation. Different correlation coefficients (ρ = 0, 0.5, 0.8) are considered. Table 3 shows the results of robust optimization using the proposed method and MCS, in which FC and GC denote the confirmed objective and constraint function values. The table shows that the results of the proposed DD-PC-Corr method are very close to those of MCS for all correlation coefficients, with relative errors all within 5%, indicating the effectiveness and accuracy of the proposed method. Moreover, for all correlation coefficients, the obtained optimal solutions satisfy the constraints.

4.2 Coil spring

The reliability-based design optimization (RBDO) formulation of the coil spring problem mentioned in Sect. 3.2 is shown in (20):

where μ represents the mean of the variable, and Pi denotes the probability of failure of the ith limit state function.

The optimization of the coil spring aims to find the design variables (the means of the inner diameter D and the wire diameter d) that minimize the mass subject to constraints on the deflection δ, the shear stress τ, and the vibration frequency ω of the spring. D and d are uncertain and follow normal distributions with standard deviations of 0.1 and 0.01, respectively. In the manufacturing process, D and d may be physically correlated. Ignoring such correlation may significantly affect the predicted performance of the spring, so it needs to be considered. In this work, correlation coefficients ρDd = 0, 0.5, and 0.8 are tested. The proposed DD-PC-Corr method and MCS are employed to estimate the failure probabilities and the mean mass in RBDO.

The optimal RBDO results obtained with the proposed DD-PC-Corr method and MCS, together with the confirmed results of the original design (μD = 1, μd = 0.15), are listed in Table 4. For all correlation coefficients, the proposed method produces feasible optimal results very close to those of MCS, demonstrating its effectiveness. Compared with the original design, RBDO significantly reduces both the mean spring mass and the failure probability of the deflection constraint for all correlation coefficients, clearly improving reliability. For the original design, the failure probability of the deflection (P1) is almost 100%, i.e., the design is highly unreliable.

5 Conclusions

In this paper, a new non-intrusive data-driven polynomial chaos (DD-PC) method that directly incorporates variable correlation into the construction of the PC model is developed for uncertainty propagation with computationally expensive black-box functions. In the proposed method, the multivariate orthogonal polynomial basis accounting for variable correlation is constructed by solving moment-matching equations based on the correlation statistical moments of the correlated input random variables, and regression is employed to calculate the PC coefficients for computational efficiency. The proposed method is applied to several numerical examples with correlated input random variables for UP and design optimization under uncertainty. When the variable distribution is known, the proposed method, which requires no transformation, is more accurate than the existing DD-PC method with Nataf transformation; when the distribution is unknown, almost none of the existing PC methods can work, whereas the proposed method still obtains accurate results very close to those of Monte Carlo simulation. These results demonstrate the effectiveness and advantage of the proposed method.

Abbreviations

- bi: The ith coefficient of the PC model
- d: Dimension of random inputs
- x: Random input vector
- y: Stochastic response value
- H: Order of PC model
- P(k): The kth orthogonal polynomial for correlated variables
- \( \overline{P} \): The orthogonal polynomials for independent variables
- Q + 1: Number of PC coefficients
- μ: Mean value
- μa,b: Correlation statistical moment
- ρ: Correlation coefficient
- σ: Standard deviation
- Ωc: Original correlated random variable space
- Γ(x): Joint cumulative distribution function
- DD-PC: The data-driven polynomial chaos method
- gPC: The generalized polynomial chaos method
- GS-PC: The Gram-Schmidt polynomial chaos method
- ME-PC: The multi-element generalized polynomial chaos method
- PC: Polynomial chaos
- UP: Uncertainty propagation

References

Abou SC (2012) Fuzzy-logic-based network for complex systems risk assessment: application to ship performance analysis. Accid Anal Prev 45(2):305–316

Arora JS (2004) Introduction to optimum design, 2nd edn. Elsevier, Amsterdam

Coelho RF, Bouillard P (2011) Multi-objective reliability-based optimization with stochastic metamodels. Evol Comput 19(4):525–560

Dodson M, Parks GT (2015) Robust aerodynamic design optimization using polynomial chaos. J Aircr 46(2):635–646

Du XP (2008) Saddlepoint approximation for sequential optimization and reliability analysis. J Mech Des 130(1):842–849

Guo L, Liu YL, Zhou T (2019) Data-driven polynomial chaos expansions: a weighted least-square approximation. J Comput Phys 381(1):129–145

Hampton J, Doostan A (2018) Basis adaptive sample efficient polynomial chaos (BASE-PC). J Comput Phys 371(2):20–49

Hosder S, Walters RW, Balch M (2007) Efficient sampling for non-intrusive polynomial chaos applications with multiple uncertain input variables. AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference, USA

Isukapalli SS (1999) Uncertainty analysis of transport-transformation models. PhD thesis, The State University of New Jersey

Isukapalli SS, Roy A, Georgopoulos PG (2000) Efficient sensitivity/uncertainty analysis using the combined stochastic response surface method and automated differentiation: application to environmental and biological systems. Risk Anal 20(5):591–602

Kiureghian D, Liu PL (1986) Structural reliability under incomplete probability information. J Eng Mech 112(1):85–104

Lebrun R, Dutfoy A (2009) An innovating analysis of the Nataf transformation from the copula viewpoint. Probabilistic Eng Mech 24(3):312–320

Lee SH, Chen W (2009) A comparative study of uncertainty propagation methods for black-box-type problems. Struct Multidiscip Optim 37(3):239–253

Li Y, Wang X, Wang L et al (2017) Non-probabilistic stability reliability measure for active vibration control system with interval parameters. J Sound Vib 387:1–15

Liu X, Wang X, Xie J et al (2019) Construction of probability box model based on maximum entropy principle and corresponding hybrid reliability analysis approach. Struct Multidiscip Optim:1–19

Mukhopadhyay S, Khodaparast H, Adhikari S (2016) Fuzzy uncertainty propagation in composites using Gram–Schmidt polynomial chaos expansion. Appl Math Model 40(7–8):4412–4428

Noh Y, Choi KK, Liu D (2009) Reliability-based design optimization of problems with correlated input variables using a Gaussian copula. Struct Multidiscip Optim 38(1):1–16

Oladyshkin S, Nowak W (2012) Data-driven uncertainty quantification using the arbitrary polynomial chaos expansion. Reliab Eng Syst Saf 106(4):179–190

Oladyshkin S, Class H, Helmig R, Nowak W (2011) A concept for data-driven uncertainty quantification and its application to carbon dioxide storage in geological formations. Adv Water Resour 34(11):1508–1518

Oladyshkin S, Class H, Nowak W (2013) Bayesian updating via bootstrap filtering combined with data-driven polynomial chaos expansions: methodology and application to history matching for carbon dioxide storage in geological formations. Comput Geosci 17(4):671–687

Paulson JA, Buehler EA, Mesbah A (2017) Arbitrary polynomial chaos for uncertainty propagation of correlated random variables in dynamic systems. IFAC-PapersOnLine 50(1):3548–3553

Prabhakar A, Fisher J, Bhattacharya R (2010) Polynomial chaos-based analysis of probabilistic uncertainty in hypersonic flight dynamics. J Guid Control Dyn 33(1):222–234

Rackwitz R, Fiessler B (1978) Structural reliability under combined random load sequences. Comput Struct 9(5):489–494

Rosenblatt M (1952) Remarks on a multivariate transformation. Ann Math Stat 23(3):470–472

Sinou JJ, Jacquelin E (2015) Influence of polynomial chaos expansion order on an uncertain asymmetric rotor system response. Mech Syst Signal Process 50:718–731

Socie DF (2003) Seminar notes: probabilistic aspects of fatigue. University of Illinois Press, Illinois

Song SF, Lu ZZ, Song ZS (2011) Reliability sensitivity analysis involving correlated random variables by directional sampling. International Conference on Quality and Reliability, Thailand, pp 845–850

Wan X, Karniadakis GE (2006) Multi-element generalized polynomial chaos for arbitrary probability measures. SIAM J Sci Comput 28(3):901–928

Wang FG, Xiong FF, Jiang H, Song JM (2017) An enhanced data-driven polynomial chaos method for uncertainty propagation. Eng Optim 50(2):273–292

Wang GZ, Xin HH, Wu D et al (2019) Data-driven arbitrary polynomial chaos-based probabilistic load flow considering correlated uncertainties. IEEE Trans Power Syst 34(4):3274–3276

Wei X, Feng BW, Liu ZY (2018) Ship uncertainty optimization design based on multidimensional polynomial chaos expansion method. Ship Eng 40(1):42–47

William C, Meecham DTJ (1968) Use of the Wiener–Hermite expansion for nearly normal turbulence. J Fluid Mech 32(2):225–249

Witteveen JAS, Bijl H (2013) Modeling arbitrary uncertainties using Gram–Schmidt polynomial chaos. AIAA 2013 Aerospace Sciences Meeting & Exhibit, USA

Xia BZ, Qin Y, Chen N et al (2017) Optimization of uncertain acoustic metamaterial with Helmholtz resonators based on interval model. Sci China Technol Sci 60(3):385–398

Xiao Z, Han X, Jiang C et al (2016) An efficient uncertainty propagation method for parameterized probability boxes. Acta Mech 227(3):633–649

Xiong FF, Xue B, Yan Z (2011a) Polynomial chaos expansion based robust design optimization. IEEE 2011 International Conference on Quality, Reliability, Risk, Maintenance, and Safety Engineering, China, pp 868–873

Xiong FF, Chen W, Xiong Y et al (2011b) Weighted stochastic response surface method considering sample weights. Struct Multidiscip Optim 43(6):837–849

Xiu DB, Karniadakis GE (2002) The Wiener–Askey polynomial chaos for stochastic differential equations. SIAM J Sci Comput 24(2):619–644

Xiu DB, Karniadakis GE (2003) Modeling uncertainty in flow simulations via generalized polynomial chaos. J Comput Phys 187(1):137–167

Zhang K, Achari G (2010) Uncertainty propagation in environmental decision making using random sets. Procedia Environ Sci 2(1):584

Funding

Grant support was received from the Science Challenge Project (No. TZ2018001) and the Hongjian Innovation Foundation (No. BQ203-HYJJ-Q2018002).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: Byeng D Youn

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Replication of results

The results shown in the manuscript can be reproduced. Because of the size limit on uploaded supplementary material, only the code for one of the mathematical UP examples (Function 1 and Function 2 in Sect. 3.1) is provided as supplementary material. The remaining examples can easily be implemented by changing the response functions and sample points in the provided code to obtain the results shown in the manuscript.

Electronic supplementary material

ESM 1

(RAR 12.7 kb)

Appendix


The correlated statistical moments and the polynomial coefficients for Function 1 (Case 1, ρ = 0.8) in Sect. 3.1 are provided below.

For this example, there are two correlated random variables and the order of the PC model is set to H = 3. Therefore, there are ten two-dimensional orthogonal polynomial bases with total polynomial order no more than 3. The correlated statistical moments, arranged in the matrix form of (17), are shown below:
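As a hedged reconstruction for this example (not a reproduction of the paper's (17), whose ordering or scaling may differ), the basis count and the moment-matching system for the k-th basis can be written as follows. It assumes the monomials are listed in graded order and that each basis has a unit leading coefficient, as in data-driven (arbitrary) PC constructions.

```latex
N_b = \binom{n+H}{H} = \binom{2+3}{3} = \frac{5!}{2!\,3!} = 10

m_0 = 1,\; m_1 = x_1,\; m_2 = x_2,\; m_3 = x_1^2,\; m_4 = x_1 x_2,\; m_5 = x_2^2,\;
m_6 = x_1^3,\; m_7 = x_1^2 x_2,\; m_8 = x_1 x_2^2,\; m_9 = x_2^3

\mu_{a,b} = E\left[x_1^{a} x_2^{b}\right], \qquad
E[m_i m_j] = \mu_{a_i + a_j,\, b_i + b_j} \quad \text{for } m_i = x_1^{a_i} x_2^{b_i}

P_k(\mathbf{x}) = \sum_{j=0}^{k} p_j^{(k)} m_j(\mathbf{x}), \qquad k = 0, 1, \ldots, 9

\begin{bmatrix}
E[m_0 m_0] & \cdots & E[m_0 m_k] \\
\vdots & \ddots & \vdots \\
E[m_{k-1} m_0] & \cdots & E[m_{k-1} m_k] \\
0 & \cdots & 1
\end{bmatrix}
\begin{bmatrix} p_0^{(k)} \\ \vdots \\ p_{k-1}^{(k)} \\ p_k^{(k)} \end{bmatrix}
=
\begin{bmatrix} 0 \\ \vdots \\ 0 \\ 1 \end{bmatrix}
```

Under this form, the first k rows of the system for any k < 9 are an upper-left block of the k = 9 matrix, which involves μ_{a,b} with a + b ≤ 6. This is consistent with the observation that the moments for k = 0, 1, ..., 8 are contained in those for k = 9.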

Based on the probability distribution information of the correlated input random variables, one can obtain the correlated statistical moments used in the orthogonal polynomial basis construction. Since the correlated statistical moments for k = 0, 1, ..., 8 are all contained in those for k = 9, only those for k = 9 are given below:

Correspondingly, the polynomial coefficients of the ten two-dimensional orthogonal polynomial bases are listed below:
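The appendix lists the moments and the resulting coefficients without showing the step that connects them. The plain-Python sketch below illustrates that step under stated assumptions; it is not the authors' supplementary code. It assumes each basis P_k has leading coefficient 1 and is orthogonal to every lower monomial in graded order (the data-driven PC style of moment matching), estimates μ_{a,b} = E[x1^a x2^b] from samples, and uses hypothetical standard normal inputs with ρ = 0.8 as stand-ins, because the marginal distributions of Function 1 are not restated in this section. All function names are illustrative.

```python
import math
import random


def graded_exponents(H):
    """Exponents (a, b) of monomials x1**a * x2**b with a + b <= H, in graded
    order: 1, x1, x2, x1^2, x1*x2, x2^2, x1^3, ... (ordering is an assumption)."""
    exps = []
    for total in range(H + 1):
        for a in range(total, -1, -1):
            exps.append((a, total - a))
    return exps


def mixed_moment(samples, a, b):
    """Sample estimate of the correlation statistical moment mu_{a,b} = E[x1^a * x2^b]."""
    return sum(x1 ** a * x2 ** b for x1, x2 in samples) / len(samples)


def solve(A, rhs):
    """Solve A p = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    p = [0.0] * n
    for r in range(n - 1, -1, -1):
        p[r] = (M[r][n] - sum(M[r][c] * p[c] for c in range(r + 1, n))) / M[r][r]
    return p


def orthogonal_basis(samples, H):
    """For each k, solve E[P_k * m_i] = 0 (i < k) with p_k^(k) = 1, where
    P_k = sum_j p_j^(k) m_j. Returns the exponents and coefficient lists."""
    exps = graded_exponents(H)
    mu = {}  # cache of mu_{a,b}; total order up to 2H is needed

    def moment(a, b):
        if (a, b) not in mu:
            mu[(a, b)] = mixed_moment(samples, a, b)
        return mu[(a, b)]

    coeffs = []
    for k in range(len(exps)):
        # Row i < k uses E[m_i * m_j] = mu_{ai+aj, bi+bj} for j = 0..k.
        A = [[moment(ai + aj, bi + bj) for aj, bj in exps[:k + 1]] for ai, bi in exps[:k]]
        A.append([0.0] * k + [1.0])  # normalization row: leading coefficient is 1
        coeffs.append(solve(A, [0.0] * k + [1.0]))
    return exps, coeffs


def evaluate(exps, p, x1, x2):
    """Evaluate sum_j p_j m_j(x1, x2); zip stops after the len(p) monomials used by P_k."""
    return sum(c * x1 ** a * x2 ** b for c, (a, b) in zip(p, exps))


def correlated_normal_samples(n, rho, seed=0):
    """Hypothetical input data: standard normal pairs with correlation rho."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        out.append((z1, rho * z1 + math.sqrt(1.0 - rho ** 2) * z2))
    return out


if __name__ == "__main__":
    samples = correlated_normal_samples(5000, rho=0.8)
    exps, coeffs = orthogonal_basis(samples, H=3)
    print(len(coeffs))  # 10 bases for two variables and H = 3
    for (a, b), p in zip(exps, coeffs):
        print(f"leading monomial x1^{a} x2^{b}:", [round(c, 3) for c in p])
```

Because the moments are estimated from the same samples, the resulting bases are orthogonal with respect to the empirical distribution, which is what allows a data-driven construction to proceed without a known joint distribution. When the distributions are known, exact values of μ_{a,b} could be substituted for `mixed_moment`.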


About this article

Cite this article

Lin, Q., Xiong, F., Wang, F. et al. A data-driven polynomial chaos method considering correlated random variables. Struct Multidisc Optim 62, 2131–2147 (2020). https://doi.org/10.1007/s00158-020-02602-7


DOI: https://doi.org/10.1007/s00158-020-02602-7