Abstract

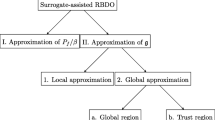

In the reliability-based design optimization (RBDO) process, surrogate models are frequently used to reduce the number of simulations because analysis of a simulation model takes a great deal of computational time. However, to obtain accurate surrogate models, the dimension of the RBDO problem has to be limited to mitigate the curse of dimensionality. Therefore, it is desirable to develop an efficient and effective variable screening method for reducing the dimension of the RBDO problem. In this paper, the requirements of variable screening methods for deterministic design optimization (DDO) and RBDO are compared, and it is found that output variance is critical for identifying important variables in the RBDO process. An approximation method based on the univariate dimension reduction method (DRM) is proposed to calculate output variance efficiently. For variable screening, the variables that induce larger output variances are selected as important variables. To determine important variables, hypothesis testing is used so that possible errors are kept within a user-specified error level. Also, an appropriate number of samples is proposed for calculating the output variance. Moreover, a quadratic interpolation method is studied in detail to calculate output variance efficiently. The performance of the proposed method is verified using numerical examples, which show that the proposed method finds important variables efficiently and effectively.


1 Introduction

The variable screening method is useful in the design optimization process because it can select the essential design variables needed for accurate surrogate models and effective design optimization. In the formulation of a design optimization problem, a set of design variables that describe the system needs to be identified (Arora 2004). Design variables are selected to be as independent of each other as possible in the design space. The number of independent design variables is known as the degrees of freedom, and this is the dimensionality of the optimization problem. To obtain an appropriate optimum design, at least a certain minimum number of design variables is required. For this reason, it is better to identify as many design variables as possible and then fix some of them at certain values according to the variable screening result. The variable screening method can play a key role especially in reliability-based design optimization (RBDO), because the RBDO process requires a larger number of analyses than the deterministic design optimization (DDO) process due to reliability analyses and the design sensitivities of probabilities of failure. To this end, surrogate models are usually used to reduce the number of analyses required in RBDO. Various surrogate model methods such as the radial basis function (RBF), polynomial response surface (PRS), support vector regression (SVR), Kriging, and dynamic Kriging (DKG) methods have been developed (Cressie 1991; Barton 1994; Jin et al. 2001; Simpson et al. 2001; Queipo et al. 2005; Wang and Shan 2007; Forrester et al. 2008; Forrester and Keane 2009; Zhao et al. 2011). However, even for surrogate models, the number of design variables is a critical factor because surrogate model generation is difficult for high-dimensional problems due to the curse of dimensionality.

Variable screening methods have been developed in various disciplines. In statistics, important variables were identified using the maximum likelihood estimator (MLE) of the correlation parameters of a Gaussian process in order to create an accurate surrogate model of a computer simulation for a deterministic problem (Welch et al. 1992). Using regression models, essential variables among candidate variables were efficiently identified from data (Duarte Silva 2001; Wang 2009). In statistical learning theory in particular, various feature selection methods have been developed to choose a reduced number of input variables that represent an output effectively (Guyon and Elisseeff 2003). In addition, methods such as manifold learning have been used to preserve input information in a reduced dimension for efficient statistical analysis (Izenman 2008). In physics, a variable screening model was developed for the quasi-molecular treatment of ion-atom collisions (Eichler and Wille 1975). In engineering, a confidence interval of the coefficients of a linear surrogate model was proposed to detect key variables for a car crash DDO problem (Craig et al. 2005). A sampling-based sensitivity measure using a small amount of data was introduced to rank the importance of variables and was applied to the long-term performance of a geologic repository for high-level radioactive waste (Wu and Mohanty 2006). Moreover, design sensitivity methods can be extended to variable screening because vital variables have larger design sensitivities. In a deterministic problem, the design sensitivity, which is the rate of change of the performance measure at the design point, can be obtained using various methods (Choi and Kim 2005a, b); this is called local sensitivity analysis (LSA) (Reedijk 2000; Chen et al. 2005). For a reliability problem, the variability of the input random variables should be incorporated to assess the design sensitivity of a probabilistic constraint. The design sensitivity of the probabilistic constraint obtained using the first-order reliability method (FORM) (Haldar and Mahadevan 2000; Ditlevsen and Madsen 1996; Hou 2004), the dimension reduction method (DRM) (Rahman and Wei 2008; Lee et al. 2010), or sampling-based stochastic sensitivity (Lee et al. 2011a, b) could be used to identify important design variables. In addition, global sensitivity analysis (GSA) methods, such as the correlation ratio (McKay et al. 1999), global sensitivity indices (Sobol 2001), and analytical GSA methods (Chen et al. 2005), can be used for variable screening as well (Mack et al. 2007).

However, previous works may have limitations when applied directly to RBDO with surrogate models. If a method depends entirely on existing data (Duarte Silva 2001; Wang 2009; Guyon and Elisseeff 2003; Izenman 2008), it may not be possible to use it for RBDO because the design variables change during the optimization process. Selecting, from data, the input variables that represent an output well when many candidate variables may be irrelevant to that output (Guyon and Elisseeff 2003) is not an issue from the RBDO perspective: the relationships and the relevant input variables are already known through computer-aided engineering (CAE) tools such as the finite element method (FEM) or computational fluid dynamics (CFD). A method that uses CAE to find the variables that significantly affect output reliability is more useful. Moreover, capturing the input information in reduced variables (Izenman 2008) is not an issue in RBDO either; the main interest is how much the output uncertainty is affected by the input variables. A method developed for a specific problem (Eichler and Wille 1975) will be inadequate for broad applications. Variable screening and design sensitivity methods for deterministic problems (Welch et al. 1992; Craig et al. 2005; Choi and Kim 2005a, b) may not be applicable to RBDO because input randomness is not considered. Methods that require a very large number of analyses (McKay et al. 1999; Sobol 2001) could be ineffective for RBDO of computationally demanding problems, and they could become unstable when a sufficient number of analyses is not available (Wu and Mohanty 2006). The design sensitivity of the probabilistic constraint using FORM or DRM (Haldar and Mahadevan 2000; Ditlevsen and Madsen 1996; Hou 2004; Rahman and Wei 2008; Lee et al. 2010) requires searching for the most probable point (MPP), which may be very difficult to obtain for a high-dimensional problem. If a method assumes that accurate full-dimensional surrogate models are available a priori (Chen et al. 2005; Lee et al. 2011a, b), its premise defeats the purpose of screening, because RBDO could then be carried out directly with those surrogate models, which provide accurate responses and sensitivities, unless the optimization algorithm limits the number of design variables, which is uncommon. From these previous works, key desirable properties of a variable screening method for RBDO with surrogate models can be identified: it should (1) be efficient, (2) consider input randomness, (3) not require a full-dimensional surrogate model, and (4) be applicable to a broad range of problems.

Therefore, the objective of this paper is to develop a variable screening method that satisfies the above desirable properties. The reliability analysis in RBDO captures the output variability induced by the input variability and the sensitivity of the performance function. A variable that induces larger output variability is important in the RBDO process. In this paper, the partial output variance, which is the output variance when one random variable varies while the others are fixed at their means, is used to find important design variables (Bae 2012). The partial output variance is simple to calculate and requires only a 1-D surrogate model for each design variable. The proposed method has strengths and weaknesses. Its main strengths are efficiency and practical applicability; its weakness is accuracy, because the interactions between the random variables are not fully captured. However, this paper focuses on practical applicability because it is very important for large-scale problems. In the following sections, the proposed method is explained in detail, and its strengths and weaknesses are discussed. To demonstrate the effectiveness of the proposed method, analytical examples and a large-scale industrial problem are used.

2 Variable screening

As explained in the introduction, screening out variables means finding important variables among all random variables. Here, the word “important” could have different meanings depending on the problem we are dealing with. In the following two sections, the difference between variable screening for DDO and RBDO will be explained. Based on the difference, the required properties of variable screening for RBDO will be introduced.

2.1 Variable Screening for DDO

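A DDO problem can be formulated as

\[
\begin{aligned}
&\text{minimize} && \mathrm{Cost}(\mathbf{d})\\
&\text{subject to} && G_{j}(\mathbf{d}) \le 0,\quad j=1,\ldots,NC\\
& && \mathbf{d}^{L} \le \mathbf{d} \le \mathbf{d}^{U},\quad \mathbf{d}\in\mathbb{R}^{NDV}
\end{aligned}
\tag{1}
\]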

where \(\mathbf{d}\), \(G_{j}\), NC, and NDV are the design variable vector, jth constraint function, number of constraints, and number of design variables, respectively.

In the DDO problem, the input design variables have no uncertainty, so the design sensitivity can be used as a barometer to rank the importance of design variables with respect to the performance measure. The question is where this importance ranking should be determined, that is, where the design sensitivity should be calculated.

LSA calculates the design sensitivity at a given design point (Reedijk 2000; Chen et al. 2005). LSA is usually used to provide the direction of design change in the optimization process. For variable screening, LSA can provide the importance ranking of design variables at the current design point. However, if the performance measure is a nonlinear function of the design variables, the ranking at the given design point could differ from the ranking at other design points. GSA, on the other hand, calculates an overall design sensitivity over the entire design domain; it can be viewed as a design sensitivity averaged over the domain. Because it is an average, the importance ranking obtained from GSA could be misleading at specific points or even in specific regions. Hence, LSA and GSA each have advantages and disadvantages for variable screening (Reedijk 2000).
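The disagreement between LSA and GSA rankings can be seen on a toy function. The sketch below is illustrative only: the function f(x1, x2) = x1² + 0.5·x2 on [−1, 1]², the finite-difference step, and the use of the mean absolute gradient as a GSA-style average are all assumptions, not methods taken from this paper.

```python
import random

def f(x1, x2):
    # Hypothetical performance measure: quadratic in x1, linear in x2
    return x1 ** 2 + 0.5 * x2

def local_sensitivity(func, point, h=1e-6):
    """LSA: central finite-difference gradient at one design point."""
    grad = []
    for i in range(len(point)):
        up, dn = list(point), list(point)
        up[i] += h
        dn[i] -= h
        grad.append((func(*up) - func(*dn)) / (2 * h))
    return grad

def averaged_sensitivity(func, n=10000, seed=0):
    """GSA-style measure: mean |gradient| over uniform samples in [-1, 1]^2."""
    rng = random.Random(seed)
    totals = [0.0, 0.0]
    for _ in range(n):
        g = local_sensitivity(func, [rng.uniform(-1, 1), rng.uniform(-1, 1)])
        totals = [t + abs(gi) for t, gi in zip(totals, g)]
    return [t / n for t in totals]

lsa = local_sensitivity(f, [0.0, 0.0])
gsa = averaged_sensitivity(f)
print("LSA at (0, 0):", lsa)          # x1 appears unimportant at this point
print("Averaged |gradient|:", gsa)    # x1 dominates over the whole domain
```

At the origin the local gradient ranks x2 first, while the domain-averaged measure ranks x1 first, which is the kind of disagreement described above.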

2.2 Variable Screening for RBDO

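A general RBDO problem can be formulated as

\[
\begin{aligned}
&\text{minimize} && \mathrm{Cost}(\mathbf{d})\\
&\text{subject to} && P\left[G_{j}(\mathbf{X})>0\right] \le P_{F_{j}}^{Tar},\quad j=1,\ldots,NC\\
& && \mathbf{d}^{L} \le \mathbf{d} \le \mathbf{d}^{U},\quad \mathbf{d}\in\mathbb{R}^{NDV},\ \mathbf{X}\in\mathbb{R}^{NRV}
\end{aligned}
\tag{2}
\]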

where d, \(G_{j},P_{F_{j} }^{Tar} \), NC, NDV, and NRV are the design variable vector, jth constraint function, jth target probability of failure, number of constraints, number of design variables, and number of random variables, respectively.

In the RBDO process, the design variable vector \(\mathbf{d}\) is the mean vector of the corresponding random variables in \(\mathbf{X}\). Although \(\mathbf{d}\) is deterministic, the design sensitivity for RBDO should account for the randomness of \(\mathbf{X}\), because the constraints are based on the probabilistic performance measure \(P[G_{j}(\mathbf{X})>0]\), as shown in (2). Therefore, the design sensitivity of the performance measure alone cannot be used as a barometer. The design sensitivity of the probabilistic performance measure can be obtained using several methods, such as FORM (Haldar and Mahadevan 2000; Ditlevsen and Madsen 1996; Hou 2004), DRM (Rahman and Wei 2008; Lee et al. 2010), and sampling-based stochastic sensitivity (Lee et al. 2011a, b), and it can be used for variable screening. The design sensitivities obtained by these methods are local (LSA) because they differ from design to design. GSA methods are also applicable to variable screening in RBDO problems, as they are in DDO problems. Again, both LSA and GSA methods have advantages and disadvantages.

Random parameters, i.e., random variables whose means are not design variables, do not increase the dimensionality of the optimization problem. However, the surrogate models must still include the random parameters because they affect the output distribution. The main objective of this paper is to select important design variables so that accurate surrogate models can be generated and, at the same time, an appropriate optimum design (i.e., not a suboptimal one) can be obtained in the RBDO process. Hence, once variable screening is done, the screened-out random design variables need to be fixed at deterministic values rather than treated as random parameters. However, fixing a random variable at a deterministic value reduces the total output variability.

Consider a simple example:

If the probabilistic performance measure is P[Y>60], then the reliability analysis result is

However, if one dimension is removed by fixing \(X_{10}\) at its mean \(\mu_{10}=5\) while the other variables remain random, the probabilistic performance measure changes to

As a consequence, the reliability analysis result yields

From (4) and (6), screening out one variable decreases the reliability analysis result by 0.0126 (1.26 %). A more fundamental problem is that this loss of 1.26 % cannot be estimated without the full-dimensional reliability analysis result in (4). On the other hand, assume that \(X_{10}\) has a smaller variance of one. Then, the full-dimensional reliability analysis yields

From (6) and (7), the difference is 0.0014 (0.14 %), which could be acceptable; in this case, \(X_{10}\) could be fixed at its mean value. As the example shows, the output variability decreases whenever a random variable is fixed at a deterministic value. However, some variables affect the output variability only slightly. The goal of variable screening for effective surrogate models in RBDO is to find those variables that have small effects on the output variability. Note that in this paper the random parameters are treated in the same way as the random design variables. Even though the random parameters do not change during the RBDO process, they influence the output variability. Hence, they should be included in the variable screening process so that the reliability of the performance measure can be accurately approximated in the reduced dimension.
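The effect of fixing a variable can be reproduced in closed form when Y is a sum of independent normal variables. The sketch below uses a hypothetical Y = X1 + … + X10 with all means equal to 5 and a threshold of 60. It is not the example in (3), whose definition is not reproduced here, so its numbers differ from those in (4) to (7).

```python
import math
from statistics import NormalDist

def prob_exceed(means, sds, threshold):
    """P[Y > threshold] for Y = sum of independent X_i ~ N(mean_i, sd_i^2)."""
    mu = sum(means)
    sd = math.sqrt(sum(s * s for s in sds))
    return 1.0 - NormalDist(mu, sd).cdf(threshold)

def loss_from_fixing(means, sds, threshold, k):
    """Decrease in P[Y > threshold] when X_k is fixed at its mean value."""
    fixed = list(sds)
    fixed[k] = 0.0  # a deterministic X_k contributes no variance
    return prob_exceed(means, sds, threshold) - prob_exceed(means, fixed, threshold)

means = [5.0] * 10
sds_large = [2.0] * 9 + [3.0]  # hypothetical X_10 with variance 9
sds_small = [2.0] * 9 + [1.0]  # hypothetical X_10 with variance 1

loss_large = loss_from_fixing(means, sds_large, 60.0, k=9)
loss_small = loss_from_fixing(means, sds_small, 60.0, k=9)
print(f"fixing high-variance X_10: P drops by {loss_large:.4f}")
print(f"fixing low-variance  X_10: P drops by {loss_small:.4f}")
```

As in the text, fixing the low-variance variable changes the exceedance probability much less than fixing the high-variance one.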

3 Variable screening with 1-D surrogate model

The probability of failure cannot be determined by the output variability alone. To obtain an accurate probability of failure, the output distribution, that is, all statistical information about the output, is needed. However, even when the input distribution is known, it is very difficult to obtain the complete output distribution, since the performance measure could be implicit, nonlinear, or both. For example, for a given normal input distribution, the output distribution could be bimodal as well as asymmetric. Consequently, it is impractical to select a reduced set of input variables based solely on the probability of failure. As discussed in previous sections, a screened-out variable will be fixed at its mean value, which keeps the change in the output mean small. As a result, the output variability becomes the primary measure that determines the probability of failure. Of course, other statistical moments, such as skewness and kurtosis, could also affect the probability of failure. However, neither of these moments can serve as a measure by itself. For example, a variable that induces a larger (or smaller) output skewness is not necessarily important, although skewness could matter when variables induce very similar output variabilities. A combination of moments could be considered as a measure, but there are too many possible combinations. Hence, under the assumption that the output mean changes little, the output variability is chosen in this paper as the measure for selecting vital variables for RBDO.

The output variability can be quantified by the output variance, as shown in the previous section. The exact output variance of a nonlinear implicit performance measure is very difficult to obtain. Hence, an approximated output variance is used in this paper. In the following sections, the output variance is decomposed into partial output variances, which are the output variances when one input variable is random and the others are fixed at their mean values. Then, a method to find the design variables that have a large impact on the output variance is developed using hypothesis testing.

3.1 Approximated output variance

The univariate DRM is a well-known method for approximating statistical moments using multiple 1-D integrations (Rahman and Xu 2004). Consider a performance measure Y and its realization y subject to the input random vector \(\mathbf{X}=\{X_1,\ldots,X_N\}^T\):

\[
Y = y(\mathbf{X})
\tag{8}
\]

Define a function \(Y_i\), which is the performance measure when \(X_i\) is random and the other variables are fixed at their mean values, as

\[
Y_i = y\left(\mu_1,\ldots,\mu_{i-1},X_i,\mu_{i+1},\ldots,\mu_N\right)
\tag{9}
\]

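The realization of the performance measure at the input mean point \(\boldsymbol{\mu}_{\mathbf{X}}\) is defined as

\[
y(\boldsymbol{\mu}_{\mathbf{X}}) = y\left(\mu_1,\ldots,\mu_N\right)
\tag{10}
\]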

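The lth statistical moment of Y, approximated using the univariate DRM, is (Rahman and Xu 2004)

\[
E\left[Y^{l}\right] \cong E\left[\left\{\sum_{i=1}^{N} Y_i-(N-1)\,y(\boldsymbol{\mu}_{\mathbf{X}})\right\}^{l}\right]
\tag{11}
\]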

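Then, the output variance \({\sigma _{Y}^{2}} \) can be approximated as

\[
\sigma_Y^2 \cong \sum_{i=1}^{N}\sigma_{Y_i}^2+\sum_{i=1}^{N}\sum_{\substack{j=1\\ j\ne i}}^{N}\rho_{Y_iY_j}\,\sigma_{Y_i}\sigma_{Y_j}
\tag{12}
\]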

where \(\sigma _{Y_{i} }^{2} \) is the variance of (9), i.e., the partial output variance when only \(X_i\) is random, and \(\rho _{Y_{i} Y_{j} } \) is the correlation coefficient between \(Y_i\) and \(Y_j\). As shown in (12), the partial output variances \(\sigma _{Y_{i} }^{2} \) are the main terms in the approximation of the output variance \({\sigma _{Y}^{2}} \). A partial output variance that is larger than the others accounts for the largest portion of \({\sigma _{Y}^{2}} \). Therefore, if some \(X_i\) produces a larger partial output variance than the others, \(X_i\) should be selected as an important variable. Note that calculating \(\sigma _{Y_{i} }^{2} \) requires only 1-D integration, and thus only 1-D surrogate models are required.
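A minimal sketch of the partial output variance in (9) and (12), assuming independent normal inputs and a hypothetical additive function y(x) = 3x1 + x2² + 0.1x3. For such a function the correlation terms in (12) vanish, so the partial variances should sum to the full output variance. Plain Monte Carlo stands in for the 1-D surrogate models here purely for illustration.

```python
import random
import statistics

def partial_output_variances(func, mu, sigma, n=100000, seed=1):
    """Monte Carlo estimate of sigma_{Y_i}^2: vary X_i only, others fixed at their means (Eq. 9)."""
    rng = random.Random(seed)
    result = []
    for i in range(len(mu)):
        x = list(mu)
        ys = []
        for _ in range(n):
            x[i] = rng.gauss(mu[i], sigma[i])
            ys.append(func(x))
        result.append(statistics.variance(ys))
    return result

def full_output_variance(func, mu, sigma, n=100000, seed=2):
    """Monte Carlo estimate of sigma_Y^2 with all inputs random."""
    rng = random.Random(seed)
    ys = [func([rng.gauss(m, s) for m, s in zip(mu, sigma)]) for _ in range(n)]
    return statistics.variance(ys)

def y(x):
    # Hypothetical additive performance measure
    return 3.0 * x[0] + x[1] ** 2 + 0.1 * x[2]

mu, sigma = [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]
partial = partial_output_variances(y, mu, sigma)
full = full_output_variance(y, mu, sigma)
ranking = sorted(range(len(mu)), key=lambda i: partial[i], reverse=True)
print("partial variances:", [round(v, 3) for v in partial])  # roughly 9, 2, 0.01
print("importance ranking:", ranking)
print("sum of partials:", round(sum(partial), 3), "full MC:", round(full, 3))
```

With interactions between variables, the sum of partial variances would no longer match the full variance. This is the accuracy limitation acknowledged in the introduction.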

Statistical correlation between \(X_i\) and \(X_j\) gives rise to the term \(\rho _{Y_{i} Y_{j} } \sigma _{Y_{i} } \sigma _{Y_{j} } \) in (12) and affects the output variance. When \(X_i\) and \(X_j\) are strongly correlated, one could be replaced by the other. Calculating the term \(\rho _{Y_{i} Y_{j} } \sigma _{Y_{i} } \sigma _{Y_{j} } \) requires a two-dimensional surrogate model. If there are only a few correlated pairs, calculating the correlation terms could be affordable. However, from a practical point of view, this paper focuses on the partial output variance \(\sigma _{Y_{i} }^{2} \). Because we are looking for important variables rather than the value of the output variance \({\sigma _{Y}^{2}} \), the partial output variances are sufficient for variable screening. Fig. 1 shows contours of independent, positively correlated (ρ=0.8), and negatively correlated (ρ=−0.8) probability density functions. Correlation determines how the random variables are distributed inside the box (dotted line), whereas the size of the box is determined by the variances of \(X_1\) and \(X_2\). It can be seen that the primary effect on the output variance is the box size, and the distribution inside the box is a secondary effect. Consequently, to perform variable screening efficiently, the box size, not the distribution of random variables inside the box, needs to be considered first. Hence, the correlation term is not considered in this paper, for efficiency and practicality. Note that the statistical correlation between \(X_i\) and \(X_j\) will be considered in the reduced-dimensional RBDO if both variables are selected.

The partial output variance \(\sigma _{Y_{i} }^{2} \) behaves like an LSA measure because it can take different values at different input mean points \(\boldsymbol{\mu}_{\mathbf{X}}\), i.e., at different design points in the RBDO process. Hence, the variable screening result could change as the design point changes. There are several recommended points at which to perform variable screening. The first is the DDO optimum: because the DDO optimum is usually close to the RBDO optimum, the screening result at the DDO optimum is likely to be similar to the result at the RBDO optimum. The design point at which most of the deterministic constraints are active is also a good candidate. Note that the DDO optimum, or a design point where the constraints are active, could be obtained using the finite difference method in a practical engineering problem. Alternatively, DDO could be performed using sensitivities obtained from the 1-D surrogate models, because DDO requires only the deterministic LSA, which is 1-D.

3.2 Variable screening using hypothesis testing

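Using the 1-D surrogate model, the partial output variance \(\sigma _{Y_{i} }^{2} \) can be calculated approximately as

\[
\sigma_{Y_i}^2 \cong s_{Y_i}^2=\frac{1}{ns-1}\sum_{j=1}^{ns}\left(y_i\left(x_i^{(j)}\right)-\overline{y}_i\right)^2
\tag{13}
\]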

where \(x_{i}^{\left (j \right )} \) is the jth realization of the input random variable \(X_i\), ns is the number of samples, and \(\overline y_{i} \) is the mean of \(y_i\):

\[
\overline{y}_i=\frac{1}{ns}\sum_{j=1}^{ns} y_i\left(x_i^{(j)}\right)
\tag{14}
\]

As explained in previous sections, the partial output variance \(s_{Y_{i} }^{2} \) can be used to determine important design variables. To make the variable screening procedure systematic, hypothesis testing is applied in this paper; it guards against undesirable choices that could occur during decision making. The calculated partial output variance \(s_{Y_{i} }^{2} \) depends on the number of samples ns. When ns is large enough, the variable screening result will be accurate, but the computational time could be large, and it is hard to know what value of ns is large enough. When ns is small, \(s_{Y_{i} }^{2} \) contains statistical error. If the calculated values of \(s_{Y_{i} }^{2} \) are clearly distinct from one another and from the screening threshold, the effect of ns may not be significant. However, a small ns could cause wrong decisions when some values of \(s_{Y_{i} }^{2} \) are close to each other or to the screening threshold. Hypothesis testing addresses this problem statistically by letting users control the error level.
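The dependence of \(s_{Y_i}^2\) on ns, and the averaging over repeated estimates used next, can be illustrated with a hypothetical 1-D surrogate y_i(x) = 3x and X_i ~ N(0, 1), for which the true partial variance is 9. The helper names and parameter values are assumptions for illustration, and the ns − 1 divisor in (13) is also assumed.

```python
import random
import statistics

def surrogate(x):
    # Hypothetical 1-D surrogate y_i(x_i); true partial variance is 9 for X_i ~ N(0, 1)
    return 3.0 * x

def sample_partial_variance(rng, ns, mu=0.0, sd=1.0):
    """One realization of s_{Y_i}^2 from ns samples, in the spirit of (13)-(14)."""
    ys = [surrogate(rng.gauss(mu, sd)) for _ in range(ns)]
    return statistics.variance(ys)  # divides by ns - 1

def repeated_estimates(ns, nr, seed=0):
    """Mean and standard deviation of nr independent repetitions of s_{Y_i}^2."""
    rng = random.Random(seed)
    reps = [sample_partial_variance(rng, ns) for _ in range(nr)]
    return statistics.fmean(reps), statistics.stdev(reps)

for ns in (10, 100, 1000):
    mean_v, sd_v = repeated_estimates(ns, nr=50)
    print(f"ns={ns:5d}: mean of s^2 = {mean_v:6.3f}, spread across repetitions = {sd_v:6.3f}")
```

The spread across repetitions shrinks roughly like 1/√ns. This is why a small ns can blur the comparison between partial variances that lie close to the threshold.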

Various hypothesis testing methods have been developed for decision-making problems (Rosner 2006). Among them, a method that is insensitive to the distribution type is needed, because the distribution type of \(Y_i\) or \(s_{Y_{i} }^{2} \) is generally unknown. The one-sample t-test relies on the central limit theorem, which states that the sample mean of a non-normal distribution approximately follows a normal distribution for a large number of samples. The one-sample t-test is therefore relatively insensitive to the underlying distribution type, so it is used in this paper. Because the t-test is a test on a sample mean, \(s_{Y_{i} }^{2} \) is calculated nr times, and its statistical moments are obtained as

\[
\overline v_i=\frac{1}{nr}\sum_{k=1}^{nr}s_{Y_i}^{2(k)}
\tag{15}
\]

\[
s_{v_i}^2=\frac{1}{nr-1}\sum_{k=1}^{nr}\left(s_{Y_i}^{2(k)}-\overline v_i\right)^2
\tag{16}
\]

where \(s_{Y_{i} }^{2\left (k \right )} \) is the kth repetition of \(s_{Y_{i} }^{2} \) and nr is the number of repetitions. Now, the hypothesis is constructed:

\[
H_0:\ \overline v_i \le \mu_0 \qquad H_1:\ \overline v_i > \mu_0
\tag{17}
\]

where \(\mu_0\) is the criterion of the hypothesis test. According to (17), a design variable whose \(\overline v_{i} \) is greater than \(\mu_0\) (i.e., \(H_1\) is true) is selected as an important variable. Using the one-sample t-test, the hypothesis can be tested by checking the following statement:

\[
q > t_{nr-1,\,1-\alpha} \quad\Rightarrow\quad \text{reject } H_0 \text{ and accept } H_1
\tag{18}
\]

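where α is the significance level, \(t_{nr-1,\bullet}\) denotes \(t_{nr-1}^{-1} \left (\cdot \right )\), the inverse cumulative distribution function of Student's t distribution with nr − 1 degrees of freedom, and the test statistic q is defined as

\[
q=\frac{\overline v_i-\mu_0}{s_{v_i}/\sqrt{nr}}
\tag{19}
\]

in which \(s_{v_i}\) is the standard deviation of the nr repetitions of \(s_{Y_i}^2\).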

In (15) and (16), the uncertainty induced by ns is transferred to nr. Hence, ns can be a fixed number, whereas nr should be chosen appropriately. Also, \(\mu_0\) in (17) and (19) needs to be determined. \(\mu_0\) is the key criterion that decides important variables, and it should be a value relative to \(\overline v_{i} \) because the relative differences among partial output variances should be checked for variable screening. At the same time, \(\mu_0\) needs to be statistically independent of \(\overline v_{i} \) for the hypothesis testing to be reasonable. In this paper, a preliminary test is proposed to obtain reasonable values of nr and \(\mu_0\) as follows. First, choose \(nr_0\) large enough that the central limit theorem holds. Then, calculate the initial statistical moments of \(s_{Y_{i} }^{2} \) as

Using the values from (20), the testing criterion \(\mu_0\) relative to \(\overline v_{i} \) can be calculated as

where γ is a constant selected by the user. nr is calculated by limiting the type II error (accepting \(H_0\) when \(H_1\) is true) at the false negative rate β as [35]

In (23), \(t_{nr-1,\bullet}\) should be used instead of \(t_{nr_{0} -1,\bullet } \) for an accurate calculation of nr. However, (23) would then require the value of nr on the right-hand side to calculate nr. To avoid this problem, \(t_{nr_{0} -1,\bullet } \) is used instead. It produces a conservative result because it is larger than \(t_{nr-1,\bullet}\), since nr is larger than \(nr_0\) in (23) and α is usually small. Finally, nr and \(\mu_0\) are determined so that the proposed hypothesis testing can be applied.
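The test in (18) and (19) needs only the mean and standard deviation of the nr repetitions plus a t quantile. The sketch below is self-contained (no SciPy) and evaluates the t quantile numerically. The `required_repetitions` helper assumes the usual one-sided sample-size rule nr ≈ ((t₁₋α + t₁₋β)·s / (v̄ − μ0))² with nr0 − 1 degrees of freedom. Because (23) is not reproduced in this text, treat that form as an assumption rather than the paper's exact expression. All function names are hypothetical.

```python
import math
import statistics

def t_pdf(x, dof):
    """Density of Student's t distribution with dof degrees of freedom."""
    log_c = math.lgamma((dof + 1) / 2) - math.lgamma(dof / 2) - 0.5 * math.log(dof * math.pi)
    return math.exp(log_c) * (1 + x * x / dof) ** (-(dof + 1) / 2)

def t_cdf(x, dof, steps=1000):
    """CDF via Simpson's rule on [0, |x|] plus symmetry about zero."""
    a = abs(x)
    h = a / steps
    s = t_pdf(0.0, dof) + t_pdf(a, dof)
    for k in range(1, steps):
        s += (4 if k % 2 else 2) * t_pdf(k * h, dof)
    area = s * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area

def t_ppf(p, dof):
    """Inverse CDF t_dof^{-1}(p) by bisection on [-100, 100]."""
    lo, hi = -100.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, dof) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def one_sample_t_test(reps, mu0, alpha):
    """Standard one-sided one-sample t-test: True (accept H1) if q > t_{nr-1, 1-alpha}."""
    nr = len(reps)
    q = (statistics.fmean(reps) - mu0) / (statistics.stdev(reps) / math.sqrt(nr))
    return q > t_ppf(1 - alpha, nr - 1)

def required_repetitions(sd0, delta, nr0, alpha, beta):
    """Assumed textbook sample-size rule; delta is the gap v_bar - mu0 to be detected."""
    t_a = t_ppf(1 - alpha, nr0 - 1)
    t_b = t_ppf(1 - beta, nr0 - 1)
    return math.ceil(((t_a + t_b) * sd0 / delta) ** 2)

reps = [10.2, 9.8, 10.5, 10.1, 9.9, 10.3]  # hypothetical repetitions of s_{Y_i}^2
print(one_sample_t_test(reps, mu0=9.0, alpha=0.025))
print(required_repetitions(sd0=1.0, delta=0.5, nr0=100, alpha=0.025, beta=0.025))
```

Using nr0 − 1 rather than nr − 1 degrees of freedom in `required_repetitions` mirrors the conservative choice described above.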

3.3 1-D surrogate model

In previous sections, the 1-D surrogate model was treated as given because it is not difficult to generate. However, creating 1-D surrogate models efficiently could still be an issue. For efficiency, quadratic interpolation is proposed as the basic 1-D surrogate model in this paper. Quadratic interpolation may not be adequate for a highly nonlinear performance measure over a large region; however, a nonlinear performance measure can be effectively approximated by a quadratic function over a sufficiently small region. If X follows a normal distribution, its domain is (−∞, ∞), whereas 99.73 % of X lies in \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\), which is much smaller than the infinite domain. Even if X does not follow a normal distribution, the region \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\) typically covers almost all (approximately 98 %) of X for common distribution types. Since this paper focuses on the calculation of the partial output variance, the region \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\) is large enough. Hence, the 1-D surrogate model needs to be accurate only in this region, and quadratic interpolation can be an appropriate method to approximate the performance measure there.
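The coverage figures above can be checked directly. This sketch computes the probability mass inside (μ_X − 3σ_X, μ_X + 3σ_X) for a normal, an exponential, and a uniform distribution. The choice of these three distributions is illustrative.

```python
import math
from statistics import NormalDist

def normal_coverage(k=3.0):
    """P(mu - k*sigma < X < mu + k*sigma) for a normal X (independent of mu and sigma)."""
    z = NormalDist()
    return z.cdf(k) - z.cdf(-k)

def exponential_coverage(k=3.0, rate=1.0):
    """Same probability for an exponential X, whose mean and standard deviation both equal 1/rate."""
    mu = sigma = 1.0 / rate
    lo, hi = max(0.0, mu - k * sigma), mu + k * sigma
    return math.exp(-rate * lo) - math.exp(-rate * hi)

def uniform_coverage(k=3.0, a=0.0, b=1.0):
    """Same probability for X ~ U(a, b), where sigma = (b - a) / sqrt(12)."""
    mu, sigma = (a + b) / 2, (b - a) / math.sqrt(12)
    lo, hi = max(a, mu - k * sigma), min(b, mu + k * sigma)
    return (hi - lo) / (b - a)

for name, p in [("normal", normal_coverage()), ("exponential", exponential_coverage()),
                ("uniform", uniform_coverage())]:
    print(f"{name:12s} {p:.4%}")
```

These are examples, not a bound. For an arbitrary distribution, Chebyshev's inequality guarantees only at least 1 − 1/3² ≈ 88.9 % inside ±3σ.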

Quadratic interpolation requires three design of experiments (DoE) samples, and the location of the DoE samples affects the accuracy of interpolation. DoE samples located at the roots of a Chebyshev polynomial are known to give a nearly uniform error over the domain (Rao 2002). Because only the region \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\) is of interest, the DoE samples are located at \(x_1=\mu_X-2.5981\sigma_X\), \(x_2=\mu_X\), and \(x_3=\mu_X+2.5981\sigma_X\). These are the roots of the third-degree Chebyshev polynomial (0 and \(\pm\sqrt{3}/2\)) scaled to this region, since \(3\sqrt{3}/2\approx 2.5981\). Since a random variable X may not be evenly distributed in its domain, a uniform interpolation error does not necessarily mean that the calculated partial output variance is accurate. However, because no single DoE location is best for an accurate partial output variance in general, the Chebyshev locations are used in this paper, as they yield reasonable results for various distribution types of X. If X has a closed and bounded domain [a, b], the domain can be used directly for calculating the partial output variance, and the Chebyshev sample locations are \(x_1=0.93301a+0.06699b\), \(x_2=(a+b)/2\), and \(x_3=0.06699a+0.93301b\).
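A minimal sketch of the DoE rule and the quadratic interpolant. It assumes the DoE points are the roots of the third-degree Chebyshev polynomial mapped linearly to the interval of interest. That mapping reproduces the constants 2.5981 and 0.93301/0.06699 quoted above. The Lagrange form of the interpolant is one of several equivalent choices, and the function names are hypothetical.

```python
import math

def chebyshev_doe(lo, hi, n=3):
    """Roots of the degree-n Chebyshev polynomial mapped from [-1, 1] to [lo, hi], ascending."""
    mid, half = (lo + hi) / 2, (hi - lo) / 2
    nodes = [mid + half * math.cos((2 * k - 1) * math.pi / (2 * n)) for k in range(1, n + 1)]
    return sorted(nodes)

def doe_unbounded(mu, sigma):
    """DoE for a variable with infinite support: restrict to (mu - 3 sigma, mu + 3 sigma)."""
    return chebyshev_doe(mu - 3 * sigma, mu + 3 * sigma)

def quadratic_interpolant(xs, ys):
    """Lagrange form of the quadratic through three DoE points."""
    (x1, x2, x3), (y1, y2, y3) = xs, ys
    def q(x):
        return (y1 * (x - x2) * (x - x3) / ((x1 - x2) * (x1 - x3))
                + y2 * (x - x1) * (x - x3) / ((x2 - x1) * (x2 - x3))
                + y3 * (x - x1) * (x - x2) / ((x3 - x1) * (x3 - x2)))
    return q

print([round(v, 3) for v in doe_unbounded(0.5, 0.333)])  # [-0.365, 0.5, 1.365]
print([round(v, 5) for v in chebyshev_doe(0.0, 1.0)])     # [0.06699, 0.5, 0.93301]
```

The interpolant q built from the three responses can then be evaluated on samples of \(X_i\) to obtain \(s_{Y_i}^2\) as in (13).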

To check the performance of a selected location of DoE samples, a nonlinear performance measure Y is used as

Assuming that the random variable X follows \(N(0.5,\,0.333^2)\), three sets of DoE locations are compared in terms of the accuracy of the partial output variance. The first set is {0.167, 0.5, 0.833}, i.e., \(\mu_X\) and \(\mu_X\pm\sigma_X\); the second set, from the Chebyshev polynomial, is {−0.365, 0.5, 1.365}; and the third, wider set is {−0.667, 0.5, 1.667}, i.e., \(\mu_X\) and \(\mu_X\pm3.5\sigma_X\). Partial output variances are calculated using 100,000 realizations of X, and the true partial output variance is calculated from (24) with the same realizations. To check the accuracy of the quadratic interpolation itself, the mean square error (MSE) is calculated at 100 uniformly distributed points in (−0.5, 1.5), which is \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\). The results are shown in Table 1, and the shapes of the quadratic interpolations are shown in Fig. 2, where asterisks (*) mark the DoE sample points. As shown in Table 1, the Chebyshev DoE locations produce a partial output variance closer to the true one and a smaller MSE than the other cases.

This example cannot represent all performance measures. When a highly nonlinear performance measure is expected, more sophisticated surrogate methods, such as the RBF, PRS, SVR, Kriging, and DKG methods (Cressie 1991; Barton 1994; Jin et al. 2001; Simpson et al. 2001; Queipo et al. 2005; Wang and Shan 2007; Forrester et al. 2008; Forrester and Keane 2009; Zhao et al. 2011), are more suitable. In any case, for a random variable X whose distribution has an infinite domain, it is recommended to sample inside the region \((\mu_X-3\sigma_X,\ \mu_X+3\sigma_X)\).

4 Numerical examples

Analytical examples and an engineering example are used to test the performance of the proposed variable screening method. Partial output variances are calculated to select important variables using the 1-D quadratic interpolation presented in Section 3.3. To use the variable screening method, users need to decide five parameters: the significance level α, the false negative rate β, the number of samples ns, the initial number of repetitions \(nr_0\), and the control parameter γ for the threshold value. Smaller α and β are better choices because they result in smaller statistical errors in variable screening. However, when they are too small, a very large nr could be required in (23) to maintain the error level specified by α and β. Hence, values from 0.025 to 0.05 would be reasonable. For \(nr_0\) and ns, small numbers could be chosen to reduce the computational cost; however, small values of \(nr_0\) and ns rapidly increase the nr needed to maintain the error level. Hence, appropriately large values should be used; they are set to 50 or 100 in the numerical examples. The parameter γ in (22) lets the user control the threshold value that determines important variables. In the numerical examples, γ is initially set to 1.0, and the variable screening procedure is performed. Then, the ratio of the sum of the partial output variances of the selected variables to that of all random variables, which estimates the fraction of output variance captured, is checked. If the ratio is less than 85 %, γ is lowered until 85 % is achieved. As explained before, the partial output variance is an approximation, so a ratio of 85 % does not necessarily mean that 85 % of the total output variance is actually captured by the selected variables. However, it is a good estimate at an affordable cost because it requires neither many DoE samples nor full-dimensional surrogate models.
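The γ-adjustment loop can be sketched as follows. The threshold formula (22) is not reproduced in this text, so the sketch substitutes a hypothetical rule: variable i is selected when its averaged partial variance exceeds γ times the mean over all variables. It also uses point estimates instead of the t-test. Only the 85 % captured-variance check follows the description above.

```python
def captured_ratio(partial_vars, selected):
    """Share of the summed partial output variances carried by the selected variables."""
    return sum(partial_vars[i] for i in selected) / sum(partial_vars)

def screen_with_gamma(partial_vars, gamma=1.0, target=0.85, step=0.05):
    """Lower gamma until the selected variables capture at least `target` of the summed variance.
    Hypothetical selection rule: keep i if partial_vars[i] > gamma * mean(partial_vars)."""
    mean_v = sum(partial_vars) / len(partial_vars)
    while True:
        selected = [i for i, v in enumerate(partial_vars) if v > gamma * mean_v]
        ratio = captured_ratio(partial_vars, selected)
        if ratio >= target or gamma <= 0.0:
            return gamma, selected, ratio
        gamma = max(0.0, gamma - step)

# Hypothetical averaged partial output variances for five random variables
gamma, selected, ratio = screen_with_gamma([9.0, 2.0, 0.5, 0.3, 0.2])
print(f"gamma={gamma:.2f}, selected={selected}, captured={ratio:.1%}")
```

In this toy case γ = 1.0 keeps only the first variable (75 % captured), and lowering γ to about 0.8 adds the second variable, which raises the captured ratio above 85 %.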

4.1 Analytical examples

Hartmann 6-D and Dixon-Price 12-D are well-known analytical functions. Because they are both high-dimensional and nonlinear, they are used to test the variable screening method. Constant terms are added to the original functions to make both functions active (i.e., G(X) = 0) at the mean point of the input random variables. Note that adding a constant term does not change the character of the functions. The input random variables have a variety of marginal distribution types and copula types, so the analytical examples can reveal the effects of different distribution types and correlations.

The functions are tested with three different methods. The first is the developed variable screening method. As mentioned before, the parameters α = β = 0.025, \(n_{r0} = n_s = 100\), and γ = 1.0 are used with a 1-D surrogate model based on quadratic interpolation; γ is lowered from its initial value of 1.0 when necessary. The second is screening with accurate partial output variances, computed directly from the analytical functions with 1,000,000 realizations of the random variables; these partial output variances are used as the reference. The third is based on linearization. When a performance measure is a linear function \(G = \sum_i \alpha_i X_i\) of independent input random variables \(X_i\), the output variance is \(\sum_i \alpha_i^2 \sigma_{X_i}^2\), where \(\sigma_{X_i}^2\) is the variance of \(X_i\). Hence, the partial output variance can be linearly approximated as \(\alpha_i^2 \sigma_{X_i}^2\) using the design sensitivity (gradient) \(\alpha_i\) and the input variance \(\sigma_{X_i}^2\). Important variables can then be selected based on these linearized partial output variances; this sensitivity-variance method is applied to the analytical functions for comparison.
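The weakness of the sensitivity-variance method when a gradient vanishes can be seen with a toy function. The sketch below assumes the hypothetical performance measure \(G = X_1 + X_2^2\) with independent standard normal inputs (indexed 0 and 1 in code) and compares the linearized estimate \(\alpha_i^2 \sigma_{X_i}^2\) with a sampled partial output variance. The gradient with respect to \(X_2\) is zero at the mean, so the linear estimate is zero, while the true partial output variance is \(\operatorname{Var}(X_2^2) = 2\).

```python
import numpy as np


def central_gradient(g, x, h=1e-4):
    """Central finite-difference gradient of g at x."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        grad[i] = (g(x + e) - g(x - e)) / (2.0 * h)
    return grad


def sensitivity_variance(g, mu, sigma):
    """Linearized partial output variances alpha_i**2 * sigma_i**2."""
    alpha = central_gradient(g, mu)
    return alpha ** 2 * np.asarray(sigma, dtype=float) ** 2


def sampled_partial_variance(g, mu, i, x_samples):
    """Var[G] with only X_i random and the other inputs at their means."""
    pts = np.tile(np.asarray(mu, dtype=float), (len(x_samples), 1))
    pts[:, i] = x_samples
    return np.var(g(pts))


# Hypothetical G = X_1 + X_2**2 (code indices 0 and 1); g works on (..., 2) arrays.
g = lambda x: x[..., 0] + x[..., 1] ** 2
mu, sigma = np.zeros(2), np.ones(2)
rng = np.random.default_rng(0)
print(sensitivity_variance(g, mu, sigma))               # linear estimate for X_2 is 0
print(sampled_partial_variance(g, mu, 1, rng.standard_normal(1_000_000)))  # about 2
```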

4.1.1 Hartmann 6-D

The first analytical example is the Hartmann 6-D, and a constant term is added as explained earlier. The analytical expression is shown as (Dixon and Szegö 1978)

where \(0 \le X_i \le 1\), m = 6, q = 4, and

Information about the input random variables is listed in Table 2. The input random variables have four different marginal distribution types: normal, lognormal, gamma, and Weibull. \(X_5\) and \(X_6\) are correlated through the Clayton copula with a Kendall’s tau of 0.5.
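Because (25) and its constants are not reproduced in this text, the sketch below implements the standard Hartmann 6-D function as commonly tabulated in the optimization literature, with q = 4 exponential terms in m = 6 variables. It omits the constant term that the paper adds to make G = 0 at the input mean point, and it does not implement the Table 2 distributions or the Clayton copula.

```python
import numpy as np

# Standard Hartmann 6-D constants. The constant term that the paper adds to
# make G = 0 at the input mean point is NOT included here.
ALPHA = np.array([1.0, 1.2, 3.0, 3.2])
A = np.array([
    [10.0, 3.0, 17.0, 3.5, 1.7, 8.0],
    [0.05, 10.0, 17.0, 0.1, 8.0, 14.0],
    [3.0, 3.5, 1.7, 10.0, 17.0, 8.0],
    [17.0, 8.0, 0.05, 10.0, 0.1, 14.0],
])
P = 1e-4 * np.array([
    [1312, 1696, 5569, 124, 8283, 5886],
    [2329, 4135, 8307, 3736, 1004, 9991],
    [2348, 1451, 3522, 2883, 3047, 6650],
    [4047, 8828, 8732, 5743, 1091, 381],
])


def hartmann6(x):
    """-sum_i alpha_i exp(-sum_j A_ij (x_j - P_ij)^2) for 0 <= x_j <= 1.

    Accepts one point of shape (6,) or a batch of shape (n, 6).
    """
    x = np.asarray(x, dtype=float)
    diff = x[..., None, :] - P                # shape (..., 4, 6)
    inner = np.sum(A * diff ** 2, axis=-1)    # shape (..., 4)
    return -np.sum(ALPHA * np.exp(-inner), axis=-1)


x_star = np.array([0.20169, 0.150011, 0.476874, 0.275332, 0.311652, 0.6573])
print(hartmann6(x_star))   # about -3.322, the known global minimum
```

The batch form makes it convenient to evaluate the function on large sets of realizations, as needed for the reference partial output variances.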

The result of variable screening is shown in Table 3. The second column shows the design sensitivity (gradient) of the Hartmann 6-D function in (25) at the input mean point, and the third through fifth columns show the partial output variances obtained with the sensitivity-variance method, the variable screening method, and the accurate method, respectively. Because the variable screening method calculates the partial output variances \(n_r\) times, its result is the mean of the calculated partial output variances. For each method, important variables are marked in bold font. The variable screening method finds the same variables as the accurate method, whereas the sensitivity-variance method misses \(X_4\) and \(X_5\). The sixth and seventh columns are the ratios of the partial output variances from the sensitivity-variance method and the variable screening method, respectively, to the accurate partial output variances. The sensitivity-variance method clearly cannot estimate the partial output variances accurately, whereas the variable screening method can. Overall, the variable screening method outperforms the sensitivity-variance method, which indicates that at least a quadratic approximation should be used for the 1-D surrogate model when calculating partial output variances.

In Table 3, the bottom four rows give additional information about each method. The first and second rows are the sums of the partial output variances of the selected variables and of all variables, respectively. The third row is the ratio of the first row to the second row. The last row is \(\mu_0\), the criterion used to select important variables. In the variable screening method, the important variables (\(X_4\), \(X_5\), and \(X_6\)) are those whose partial output variances are determined by hypothesis testing to be larger than \(\mu_0\). The sensitivity-variance method and the accurate method select a variable as important if its partial output variance is larger than \(\mu_0\). In the third row, the ratio for the variable screening method is larger than 85 %, so γ keeps its initial value of 1.0, and the equivalent \(\mu_0\) is applied to the other methods. The sensitivity-variance method estimates that 92.3 % of the output variance is contained in \(X_6\) alone. This is a very poor estimate, as only 36.7 % (= 1.66E−04/4.52E−04) of the output variance is captured by \(X_6\) according to the accurate method. On the other hand, the variable screening method estimates that 96.9 % of the output variance is contained in \(X_4\), \(X_5\), and \(X_6\), which agrees closely with the 97.1 % determined by the accurate method.
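The decision of whether a partial output variance exceeds \(\mu_0\) can be illustrated with a generic one-sided, one-sample t-test on \(n_r\) repeated estimates. This is an assumed formulation for illustration only: the exact test statistic, the role of β, and the rule (23) for the required \(n_r\) are not reproduced here. The sketch relies on SciPy's `scipy.stats.ttest_1samp` with its `alternative` argument (SciPy 1.6 or later), and the repeated estimates are synthetic.

```python
import numpy as np
from scipy import stats


def is_important(v_reps, mu0, alpha=0.025):
    """One-sided test of H0: E[v_i] <= mu0 against H1: E[v_i] > mu0.

    v_reps : n_r repeated estimates of one partial output variance
    alpha  : significance level (probability of a false positive)
    """
    res = stats.ttest_1samp(np.asarray(v_reps, dtype=float), popmean=mu0,
                            alternative="greater")
    return bool(res.pvalue < alpha)


rng = np.random.default_rng(0)
large = rng.normal(2.0, 0.1, size=100)   # hypothetical repeated estimates
small = rng.normal(0.5, 0.1, size=100)
print(is_important(large, mu0=1.0), is_important(small, mu0=1.0))
```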

However, the total and captured output variances in Table 3 are approximations based on the partial output variances and, as explained in Section 3.1, do not consider the correlation term. Because the analytical expression of the Hartmann 6-D example in (25) is available, the true total output variance induced by all input random variables can also be calculated. It is calculated using 1,000,000 realizations of all input random variables, and its value is 4.23E−04, as shown in Table 4. Recalling the approximate results in Table 3, the variable screening method (4.54E−04) and the accurate method (4.52E−04) approximate the true total output variance well, whereas the sensitivity-variance method (2.48E−04) does not. Table 4 also lists the true output variance captured by the selected variables. To calculate it, the realizations generated for the true total output variance are reused, with the realizations of the screened-out variables fixed at their mean values; the variance of the Hartmann 6-D function is then calculated from these modified realizations. The true output variance captured by \(X_6\) is 1.66E−04, which is the same as the partial output variance of \(X_6\) found by the accurate method (see Table 3). Hence, the sensitivity-variance method, which selects only \(X_6\), captures only 39.2 % (= 1.66E−04/4.23E−04) of the true total output variance. A reliability analysis based on this selection would therefore not estimate the probability of failure correctly. By contrast, the output variance captured by \(X_4\), \(X_5\), and \(X_6\) is 4.10E−04, which is 96.9 % (= 4.10E−04/4.23E−04) of the total output variance. This indicates that a reliability problem could be solved accurately using \(X_4\), \(X_5\), and \(X_6\). This example shows that the variable screening method works as intended.
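The procedure for the true captured output variance (reuse the full set of realizations, fix the screened-out columns at their means, and recompute the variance) can be sketched as below. The linear test function in the usage example is hypothetical; for it, the captured variance of any subset of independent inputs is simply the sum of \(\alpha_i^2 \sigma_{X_i}^2\) over that subset, which makes the result easy to check.

```python
import numpy as np


def captured_output_variance(g, samples, means, selected):
    """Variance of g when only the `selected` inputs remain random.

    samples  : (N, n) realizations of all input random variables
    means    : (n,) mean values used for the screened-out variables
    selected : indices of the variables kept random
    """
    fixed = np.array(samples, dtype=float, copy=True)
    screened_out = np.ones(fixed.shape[1], dtype=bool)
    screened_out[list(selected)] = False
    fixed[:, screened_out] = np.asarray(means, dtype=float)[screened_out]
    return np.var(g(fixed))


# Hypothetical linear G = X_1 + 2 X_2 + 0.1 X_3 with independent N(0, 1) inputs.
g = lambda x: x[:, 0] + 2.0 * x[:, 1] + 0.1 * x[:, 2]
rng = np.random.default_rng(0)
xs = rng.standard_normal((1_000_000, 3))
total = captured_output_variance(g, xs, np.zeros(3), [0, 1, 2])   # about 5.01
kept = captured_output_variance(g, xs, np.zeros(3), [1])          # about 4
print(total, kept, kept / total)
```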

4.1.2 Dixon-Price 12-D

The second analytical example is the Dixon-Price 12-D, and again a constant term is added to its original function. The analytical expression is shown as (Lee 2007)

where \(-10 \le x_i \le 10\), \(i = 1, 2, \ldots, m\), and m = 12. The input random variables shown in Table 5 are used for the test. They have five different marginal distribution types: normal, lognormal, Weibull, Gumbel, and gamma. \(X_1\) and \(X_2\) are correlated through the Frank copula with a Kendall’s tau of 0.7, and \(X_5\) and \(X_6\) are correlated through the FGM copula with a Kendall’s tau of 0.2.
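Specifying copulas by Kendall’s tau requires converting tau to each copula's parameter θ. The sketch below uses the standard one-parameter relations: \(\tau = \theta/(\theta+2)\) for the Clayton copula, \(\tau = 2\theta/9\) for the FGM copula, and \(\tau = 1 - \frac{4}{\theta}\left[1 - D_1(\theta)\right]\) for the Frank copula, with the Debye function \(D_1(\theta) = \frac{1}{\theta}\int_0^\theta \frac{t}{e^t - 1}\,dt\). It illustrates the conversion only and is not taken from the paper; the Frank inversion is done numerically with SciPy's `integrate.quad` and `optimize.brentq`.

```python
import numpy as np
from scipy import integrate, optimize


def clayton_theta(tau):
    """Clayton copula: tau = theta / (theta + 2), so theta = 2 tau / (1 - tau)."""
    return 2.0 * tau / (1.0 - tau)


def fgm_theta(tau):
    """FGM copula: tau = 2 theta / 9, attainable only for |tau| <= 2/9."""
    theta = 4.5 * tau
    if abs(theta) > 1.0:
        raise ValueError("the FGM copula cannot reach this Kendall's tau")
    return theta


def frank_tau(theta):
    """Frank copula (theta > 0): tau = 1 - 4/theta * (1 - D1(theta))."""
    integrand = lambda t: 1.0 if t == 0.0 else t / np.expm1(t)
    integral, _ = integrate.quad(integrand, 0.0, theta, epsabs=1e-12)
    d1 = integral / theta                  # Debye function of order 1
    return 1.0 - 4.0 / theta * (1.0 - d1)


def frank_theta(tau):
    """Solve frank_tau(theta) = tau; the bracket covers about 1e-4 < tau < 0.96."""
    return optimize.brentq(lambda th: frank_tau(th) - tau, 1e-3, 100.0)


print(clayton_theta(0.5), fgm_theta(0.2), frank_theta(0.7))
```

For the values used in the analytical examples, the Clayton copula with τ = 0.5 gives θ = 2, the FGM copula with τ = 0.2 gives θ = 0.9 (close to the FGM limit of τ = 2/9), and the Frank copula with τ = 0.7 gives θ of roughly 11.4.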

The test results for the Dixon-Price 12-D example are shown in Table 6, and the variables selected by each method are marked in bold font. In this example, γ is lowered to 0.7 so that the selected variables contain at least 85 % of the output variance; with this value, the variable screening method estimates that 86.6 % of the output variance is captured (Table 6). The design sensitivities with respect to \(X_9\)∼\(X_{12}\) are zero, and accordingly the partial output variances of \(X_9\)∼\(X_{12}\) from the sensitivity-variance method are zero. Hence, the sensitivity-variance method misses \(X_9\) and \(X_{12}\) even though they have large partial output variances. Moreover, the other partial output variances from the sensitivity-variance method are inaccurate compared to those from the accurate method (see the sixth column in Table 6). As a result, \(X_2\) and \(X_3\) are selected instead of \(X_6\), even though \(X_6\) actually has a larger partial output variance than \(X_2\) and \(X_3\). In contrast, the variable screening method estimates the partial output variances reasonably well and identifies the important variables correctly when compared with the accurate method. This confirms that at least a quadratic approximation is needed for the 1-D surrogate model to calculate partial output variances.

Using (29), the true total and captured output variances of the Dixon-Price 12-D example are calculated, as shown in Table 7. In Table 6, the variable screening method (3.14E−03) and the accurate method (3.06E−03) reasonably approximate the true total output variance (3.30E−03 in Table 7), while the sensitivity-variance method (1.95E−04) does not. In Table 7, the true output variance captured by the variables selected with the sensitivity-variance method is only 2.15E−03, which is 65.2 % of the true total output variance. By contrast, an output variance of 2.80E−03 is contained in \(X_4\)∼\(X_9\) and \(X_{12}\), which means that 84.8 % of the true total output variance is captured. Hence, the variable screening method correctly finds the important variables of the Dixon-Price 12-D example. The analytical examples show that the partial output variance is an effective measure for variable screening and that the proposed variable screening method finds important variables as intended.

4.2 Engineering example

A car noise, vibration, and harshness (NVH) and crash safety problem is considered to demonstrate the performance and efficiency of the proposed method. The problem includes full frontal impact, 40 % offset frontal impact, and NVH as constraints. There are a total of 11 performance measures as shown in Table 8: nine safety measures and two NVH measures.

In this example, the only source of uncertainty is assumed to be the thickness of the body plates. The 44 random variables shown in Table 9 represent these thicknesses. All random variables follow normal distributions and are statistically independent. The design variable vector \(\mathbf{d}^{B}\) is the mean vector of the 44 random variables, and there is no random parameter in this example. Among these random variables, six (\(X_1\)∼\(X_5\) and \(X_8\)) are common to both safety and NVH measures, two (\(X_6\) and \(X_7\)) affect only the safety measures, and the other 36 affect only the NVH measures.

In this problem, the impact dynamic analysis for crash safety and the modal analysis for NVH require three and a half hours, so the actual analysis takes too much time to test the proposed method thoroughly. Ford Motor Company provided full-dimensional global surrogate models (i.e., covering the entire design domain) so that we could use them to demonstrate the proposed variable screening method. These full-dimensional surrogate models may not be accurate, since 44 dimensions are too many to create accurate surrogate models, especially for RBDO. However, to test the proposed variable screening method, the responses from the 44-D global surrogate models are treated as true responses in this example. The maximum dimension at which accurate surrogate models can be generated depends on the computational power and the nonlinearity of a given problem. In this paper, the DKG method (Zhao et al. 2011) in the Iowa Reliability-Based Design Optimization (I-RBDO) code (Choi et al. 2012) is used to generate accurate surrogate models, and 18 is targeted as the maximum number of degrees of freedom of the DKG models. The I-RBDO code is also used to carry out RBDO in this paper.

4.2.1 Variable screening

At the baseline design \(\mathbf{d}^{B}\), which is the initial design shown in Table 9, all 11 performance measures in Table 8 are active; that is, the value of every performance measure at the baseline design equals its baseline value, \(G_i = \text{Baseline}_i\), \(i = 1, \ldots, 11\). Therefore, the proposed variable screening method is performed at the baseline design. The parameters \(n_s\), \(n_{r0}\), α, β, and γ are set to 50, 50, 0.05, 0.05, and 1.0, respectively. Four hundred eighty-four (44 design variables × 11 performance measures) 1-D surrogate models with quadratic interpolation are generated using 89 DoE samples (i.e., simulation samples); note that one DoE analysis yields the values of all 11 performance measures. The resulting partial output variances \(\overline v_{i}\) are listed in Tables 10 and 11 for every performance measure, and the partial output variances of the important variables for each performance measure are marked in bold font. Only the partial output variances of \(X_1\)∼\(X_8\) are listed in Table 10 because \(G_1\)∼\(G_9\) depend only on \(X_1\)∼\(X_8\); the variable screening method found zero partial output variances for all other random variables. In Table 11, \(X_6\) and \(X_7\) have zero partial output variances because \(G_{10}\) and \(G_{11}\) are not functions of \(X_6\) and \(X_7\). The last three rows of Tables 10 and 11 list the sums of the partial output variances of the selected variables, the sums of all partial output variances, and their ratios. As explained earlier, this ratio estimates the fraction of the total output variance captured by the selected variables. It is estimated that at least 90.3 % of the total output variance is captured by the selected variables. In total, 14 random variables, \(X_1\), \(X_2\), \(X_3\), \(X_4\), \(X_5\), \(X_6\), \(X_7\), \(X_8\), \(X_{10}\), \(X_{20}\), \(X_{23}\), \(X_{25}\), \(X_{26}\), and \(X_{N1}\), are selected as important variables. Accordingly, the 14 design variables that are the means of the selected random variables are considered important design variables.
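The count of 89 DoE samples is consistent with one baseline analysis plus two analyses along each of the 44 univariate cuts (1 + 2 × 44 = 89); because every analysis returns all 11 responses, the same runs can feed all 44 × 11 = 484 1-D quadratic surrogates. The sketch below generates such a plan under that assumption; the offsets \(\mu_i \pm k\sigma_i\) are a hypothetical choice, and the thickness values in the usage example are placeholders rather than Table 9 data.

```python
import numpy as np


def one_dimensional_doe(mu, sigma, k=2.0):
    """Baseline point plus two points along each univariate cut.

    Returns an array of shape (1 + 2n, n). The offsets mu_i +/- k*sigma_i are
    a hypothetical choice; only the sample count matters for this sketch.
    """
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    points = [mu.copy()]                    # shared baseline analysis
    for i in range(mu.size):
        for sign in (-1.0, 1.0):
            p = mu.copy()
            p[i] += sign * k * sigma[i]     # move one variable at a time
            points.append(p)
    return np.array(points)


n_vars, n_responses = 44, 11
doe = one_dimensional_doe(np.ones(n_vars), np.full(n_vars, 0.05))  # placeholder values
print(doe.shape[0], n_vars * n_responses)  # DoE runs, 1-D surrogates
```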

The sensitivity-variance method introduced in Section 4.1 is applied to performance measure \(G_5\), and the result is shown in Table 12. The random variables selected for \(G_5\) (bold font in Table 12) are \(X_1\)∼\(X_3\) and \(X_5\)∼\(X_8\) out of \(X_1\)∼\(X_8\). Among \(X_1\)∼\(X_8\), variables \(X_3\)∼\(X_7\) have the largest standard deviation of 0.06. However, \(X_4\) is not selected because its design sensitivity is small compared to those of the others. Here, the design sensitivities are calculated at the design point using the forward finite difference method (FDM) with a 0.1 % perturbation. By contrast, \(X_8\) is selected as an important variable even though it has the smallest standard deviation of 0.03, because it has a relatively large sensitivity and thus induces a large output variance.

Interestingly, the partial output variances of \(G_5\) from the sensitivity-variance method in Table 12 are close to those in the sixth column of Table 10. In fact, the sensitivity-variance method selects the same variables as the variable screening method for all 11 constraints. However, as shown by the analytical examples in Section 4.1, the sensitivity-variance method can select undesirable variables. In Fig. 3, the shape of \(G_5\) is shown when each \(X_i\) alone is random. From these shapes, it can be anticipated that the design sensitivity of \(G_5\) with respect to \(X_i\) could be very small or even zero, in which case the sensitivity-variance method would provide inaccurate partial output variances; the variable screening method avoids this problem.

The sensitivity-variance method requires accurate design sensitivities. In practical engineering problems, design sensitivities might be calculated using FDM. To use FDM, the user must choose the perturbation scheme (forward, backward, or central) and the perturbation size, and the result of the sensitivity-variance method could depend on these choices. Table 13 shows the partial output variance of \(X_2\) in \(G_6\) at the DDO optimum design obtained with various methods. \(X_2\) is not selected as an important variable when the design sensitivity is calculated using forward FDM with a 1 % perturbation; the resulting partial output variance is only 56.6 % of that found by the accurate method with 100,000 realizations of \(X_2\). As shown in Table 13, obtaining a more accurate design sensitivity with forward FDM requires a small perturbation. However, a small perturbation does not always provide an accurate design sensitivity, and determining an appropriate perturbation size would require extra DoE samples. Central FDM provides more accurate design sensitivities and is insensitive to the perturbation size. However, the partial output variance with central FDM sensitivity shows at most 76.7 % accuracy compared to the accurate method. Note that the variable screening method does not require the perturbation size to be determined. Moreover, the proposed variable screening method requires only one more DoE sample than the sensitivity-variance method with central FDM.
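The dependence of FDM sensitivities on the scheme and the perturbation size can be illustrated with a simple function whose derivative is known. The sketch uses \(f(x) = e^x\) at x = 1 as a hypothetical stand-in for a performance measure: the forward-difference error scales with h, while the central-difference error scales with \(h^2\).

```python
import math


def forward_diff(f, x, h):
    """First-order forward difference; truncation error O(h)."""
    return (f(x + h) - f(x)) / h


def central_diff(f, x, h):
    """Second-order central difference; truncation error O(h**2)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


x0 = 1.0
exact = math.exp(x0)
for rel in (1e-2, 1e-3):          # 1 % and 0.1 % perturbations
    h = rel * abs(x0)
    err_fwd = abs(forward_diff(math.exp, x0, h) - exact)
    err_cen = abs(central_diff(math.exp, x0, h) - exact)
    print(f"h = {h:g}: forward error {err_fwd:.2e}, central error {err_cen:.2e}")
```

For a gradient in n variables, forward FDM needs n + 1 analyses and central FDM needs 2n, while a univariate quadratic interpolation that shares one baseline analysis uses 2n + 1; this is consistent with the statement that the proposed method needs only one more DoE sample than central FDM.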

Since 44-D global surrogate models are available for this example, GSA can be carried out to verify the effectiveness of the proposed method. Among various GSA methods, the global sensitivity index method, which can identify the global effect of the variables of interest on the output, is used here. The main strength of the global sensitivity index method is that it can find interactions among all variables (as opposed to statistical correlation between random variables). All random variables are assumed to follow uniform distributions over their corresponding design domains between \(\mathbf{d}^{L}\) and \(\mathbf{d}^{U}\), and global sensitivity indices are calculated using the Monte Carlo simulation (MCS) method with 1 million MCS samples (Sobol 2001). Because there are many global sensitivity indices in this 44-D problem, the total sensitivity index \(S_{i}^{\text{tot}}\) is used for variable ranking and screening. The total sensitivity index \(S_{i}^{\text{tot}}\) is the “total influence of the ith random variable” on the output; that is, it represents the main effect plus all interactions of the ith random variable with the other random variables (Chen et al. 2005). The results are listed in Tables 14 and 15. To identify important random variables, the mean value of \(S_{i}^{\text{tot}}\) is calculated for each constraint, and any random variable whose \(S_{i}^{\text{tot}}\) is larger than this mean is selected as an important variable and marked in bold font in these tables. In Table 14, only \(S_{i}^{\text{tot}}\) for \(X_1\)∼\(X_8\) are listed because \(S_{i}^{\text{tot}}\) for the other variables are zero. The last three rows list the sum of \(S_{i}^{\text{tot}}\) for the selected random variables, the sum of \(S_{i}^{\text{tot}}\) for all random variables, and their ratio, respectively.
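Total sensitivity indices can be estimated by Monte Carlo with two independent sample matrices. The sketch below uses the Jansen estimator, a standard choice that may differ from the exact estimator used in this study; it assumes inputs already scaled to the unit hypercube (mapping to the design domain between \(\mathbf{d}^{L}\) and \(\mathbf{d}^{U}\) would happen inside `g`) and applies the mean-value threshold described above. The additive test function is hypothetical.

```python
import numpy as np


def total_sobol_indices(g, n_vars, n_samples, rng):
    """Jansen Monte Carlo estimator of total-effect indices for U(0, 1) inputs.

    g must map an (N, n_vars) array to N outputs.
    """
    a = rng.random((n_samples, n_vars))
    b = rng.random((n_samples, n_vars))
    ya = g(a)
    var_y = np.var(np.concatenate([ya, g(b)]))
    s_tot = np.empty(n_vars)
    for i in range(n_vars):
        ab = a.copy()
        ab[:, i] = b[:, i]                  # resample X_i only
        s_tot[i] = 0.5 * np.mean((ya - g(ab)) ** 2) / var_y
    return s_tot


def select_above_mean(s_tot):
    """Indices whose total index exceeds the mean index (screening rule above)."""
    return np.flatnonzero(s_tot > s_tot.mean())


# Hypothetical additive test function: exact indices are 0.2, 0.8, 0.
g = lambda x: x[:, 0] + 2.0 * x[:, 1] + 0.0 * x[:, 2]
s = total_sobol_indices(g, 3, 200_000, np.random.default_rng(0))
print(np.round(s, 3), select_above_mean(s))
```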

Using the global sensitivity index method, 16 random variables are selected, as shown in Table 16; these include all 14 random variables selected using the proposed method. Moreover, if the number of selected random variables is limited to 14, \(X_{12}\) and \(X_{27}\) will not be selected because they have the smallest \(S_{i}^{\text{tot}}\) among the variables selected for \(G_{11}\), as shown in Table 15. Thus, the 14 random variables selected by both methods are identical. The ratio between the sensitivity indices of the selected variables and those of all variables has no physical meaning; however, it indicates how much variance is captured by the selected random variables. These ratios are quite similar to those of the proposed method (Tables 14 and 15 compared with Tables 10 and 11). Hence, the proposed variable screening method is shown to be quite effective even though, unlike the global sensitivity index method, it does not require global surrogate models.

4.2.2 Reliability-based design optimization

For this example, RBDO is formulated as

For a comparison study, three cases are considered: (1) a set of 14 random variables selected based on experience without using the proposed variable screening method, (2) a set of 14 random variables selected using the proposed variable screening method as shown in Section 4.2.1, and (3) the 14 random variables of Case 2 plus four more random variables selected using the cost function sensitivity, for a total of 18 design variables. These cases test the effectiveness of the proposed variable screening method and the accuracy of the I-RBDO code. The selected design variables are listed in Table 17.

Because the cost function, which is the weight in this problem, is a function of the design variables d rather than the random variables X, it is deterministic. Therefore, the design sensitivity of the cost function with respect to each design variable is calculated by FDM, and the four design variables (related to the random variables \(X_{N4}\), \(X_{N9}\), \(X_{N10}\), and \(X_{N11}\)) with the largest sensitivities among the unselected design variables are chosen. RBDO is then carried out with the three sets of selected random variables. The optimum design results are summarized in Table 18, where bold font indicates the chosen design variables; the others are fixed at their baseline design values. The probabilities of failure, cost function values, and design iteration details are listed in Table 19. All RBDOs are carried out using the I-RBDO code with 500,000 MCS samples. For the three cases, I-RBDO generates DKG surrogate models for RBDO from the DoE sample responses obtained from the 44-D global surrogate models, which are treated as true responses. Since I-RBDO can carry out RBDO using surrogate models generated by other methods (i.e., the surrogate models generated by Ford in this example) without information on how those surrogate models were generated, full-dimensional RBDO is also performed using the 44-D surrogate models. The 44-D RBDO result is treated as the true RBDO optimum and is used to validate the RBDO results obtained for the three reduced-dimensional cases.

Indeed, the optimum design values for \(d_1\)∼\(d_8\) are very close to those of the full-dimensional (44-D) case, as shown in Table 18, which shows that the DKG surrogate models generated by the I-RBDO code are accurate. In all three cases, the random variables \(X_1\)∼\(X_8\) are selected because they have large partial output variances for performance measures \(G_1\)∼\(G_9\); that is, they contribute a large portion of the output variance. Hence, finding optimum values for them is the most effective way to reduce the probabilities of failure of \(G_1\)∼\(G_9\). Similarly, when \(d_{22}\) (corresponding to \(X_{26}\)) is selected, it moves to its upper bound of 1.1 because it has the largest partial output variances of \(G_{10}\) and \(G_{11}\). The design variables \(d_{27}\), \(d_{32}\), \(d_{33}\), and \(d_{34}\) (corresponding to \(X_{N4}\), \(X_{N9}\), \(X_{N10}\), and \(X_{N11}\)), which are selected by the sensitivity of the cost function, move to their lower bounds of 0.7, 0.6, 0.6, and 0.9, respectively, to reduce the cost function without significantly affecting the reliability of the optimum design. On the other hand, some design variables selected because of the partial output variances of certain constraints still move to their lower bounds. For example, \(d_{24}\) (corresponding to \(X_{N1}\)) moves to its lower bound of 0.7 because it has the largest sensitivity of the cost function, even though it has the third-largest partial output variance of \(G_{11}\). That is, through the trade-offs in the optimization process, it is moved to the lower bound to minimize the cost function rather than to reduce the probability of failure.

Table 19 lists the number of black-box calls. To exploit parallel computing, I-RBDO can process multiple samples simultaneously when creating a surrogate model; that is, one black-box call requests computational simulations at several sampling points, so the number of black-box calls reflects the wall-clock time required for the analyses in RBDO. Therefore, when parallel computing is used, the number of black-box calls represents the actual computational cost more realistically than the number of CAE analyses. In this example, five samples are added to the DKG model at a time, so the total number of CAE analyses is roughly five times the number of black-box calls.

To verify once again that the surrogate models generated by DKG in I-RBDO are accurate, the same three cases are run in I-RBDO using responses from the 44-D global surrogate models directly, with the screened-out variables fixed at their baseline design values. As shown in Table 20, the optimums found using the DKG and 44-D global surrogate models are very close to each other. This confirms that DKG in I-RBDO generated accurate surrogate models and that RBDO can be conducted based on accurate surrogate models even for moderately high-dimensional problems (14 and 18 dimensions).

For the three reduced-dimensional cases, the probabilities of failure are calculated using only the selected variables as random variables, since the other design variables are treated as deterministic, with values fixed at the baseline design, as explained in Section 2.2. As shown in Table 19, the target of 10 % probability of failure is closely satisfied at these optimum designs in all cases, as expected, since the RBDO considers only the selected variables as random. To check the true reliabilities, reliability analyses are carried out at these optimum designs treating all variables as random, using the 44-D surrogate models and MCS with 1 million samples, as shown in Table 21. The probabilities of failure of the full-dimensional optimum in Tables 19 and 21 differ, although the discrepancy is negligible. Theoretically, they should be the same; they differ because different numbers of MCS samples (500,000 and 1 million) are used, which induces MCS error. At the baseline design, all constraints have a probability of failure of approximately 50 %, which is reasonable because all constraints are active at the baseline design; they are not exactly 50 % because the constraint functions are nonlinear. The probabilistic constraints corresponding to \(G_1\)∼\(G_9\) are active or feasible in both Table 19 and Table 21. Because \(G_1\)∼\(G_9\) are functions of \(X_1\)∼\(X_8\) only and all of these are selected as important variables, the reduced-dimensional RBDO results and the full-dimensional reliability analysis results are very close to each other, considering MCS errors. The constraint \(G_{10}\) is inactive regardless of which set of selected design variables is used.
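The size of the MCS error mentioned above can be gauged from the binomial standard error of a failure-probability estimate, \(\sqrt{p_f(1-p_f)/N}\). The sketch below evaluates it at the 10 % target for the two sample sizes used here; it is a generic check, not a computation reported in the paper.

```python
import math


def mcs_standard_error(pf, n_samples):
    """Standard error of the MCS estimate of a failure probability pf."""
    return math.sqrt(pf * (1.0 - pf) / n_samples)


for n in (500_000, 1_000_000):
    se = mcs_standard_error(0.10, n)
    print(f"N = {n:>9,d}: standard error = {se:.2e} ({100 * se:.3f} percentage points)")
```

At \(p_f = 0.10\), the standard error is about 0.042 percentage points for N = 500,000 and 0.030 percentage points for N = 1,000,000, so small differences between estimates obtained with the two sample sizes are expected.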

All probabilities of failure for constraint \(G_{11}\) in Table 19 satisfy the 10 % target, which is expected because these are reliability analysis results of the reduced-dimensional problems. However, the full-dimensional reliability analyses at the optimum designs give quite different values, as shown in Table 21. The selection based on experience shows a 17.70 % probability of failure, which violates the target reliability significantly. The variables selected based on experience contain only 55.4 % (= 1.76E−02/3.18E−02 × 100 %) of the total output variance of \(G_{11}\); hence, with this reduced dimension, RBDO cannot find a truly safe design. On the other hand, the probabilities of failure for the proposed variable screening method (Case 2) and for the selection that also considers the cost function (Case 3) are close to the target probability of failure. The selected variables contain 93.4 % (= 2.97E−02/3.18E−02 × 100 %) and 93.7 % (= 2.98E−02/3.18E−02 × 100 %) of the total output variance of \(G_{11}\) for Case 2 and Case 3, respectively. Hence, these selections lead to a correct optimum even with reduced dimension.

5 Conclusion

A new efficient and effective variable screening method for RBDO is proposed in this paper. In the proposed method, the output variance is used as the measure for identifying important design variables. A partial output variance based on the univariate DRM is proposed to approximate the output variance efficiently and to identify the design variables that affect the output variance more significantly than others. The univariate DRM and the partial output variance require only multiple 1-D surrogate models, which is much more efficient than building full-dimensional surrogate models. Hence, the proposed method offers efficiency as well as effectiveness. To reduce computational time while maintaining a user-specified statistical error level, hypothesis testing is used in the variable screening process. In addition, a minimum number of samples required to calculate the output variance correctly is proposed based on the user-specified error level, and the quadratic interpolation method is tailored for efficient partial output variance calculation.

Two analytical examples and a 44-D industrial example are used to verify the performance of the proposed variable screening method. The analytical examples show that at least a quadratic approximation is required for the 1-D surrogate model and that the partial output variance is a good measure that successfully identifies important variables. In the industrial example, 14 design variables out of 44 are selected by considering the output variances of 11 constraints. For comparison, another set of 14 design variables selected based on experience is used. In addition, 18 design variables are obtained by adding to the 14 variables selected with the proposed method four design variables that affect the objective function significantly without much affecting the output variances. The selection based on experience reduces the cost by 7.6 %, but it violates the target probability of failure by 77 %. In contrast, the selection by the proposed method deviates from the target by only 12.3 % and reduces the cost by 3.6 %. Moreover, the selection of 18 design variables deviates from the target by 11.7 % and reduces the cost by 9.4 %. These results verify the performance of the proposed variable screening method.

References

Arora JS (2004) Introduction to optimum design, 2nd edn. Elsevier/Academic Press, San Diego

Bae S (2012) Variable screening method using statistical sensitivity analysis in RBDO. Master’s thesis, University of Iowa

Barton RR (1994) Metamodeling: a state of the art review. In: WSC ’94: Proceedings of the 26th conference on winter simulation. Society for Computer Simulation International, Orlando, pp 237–244

Chen W, Jin R, Sudjianto A (2005) Analytical variance-based global sensitivity analysis in simulation-based design under uncertainty. J Mech Des 127(5):875–886

Choi KK, Kim NH (2005a) Structural sensitivity analysis and optimization 1: linear systems. Springer, New York

Choi KK, Kim NH (2005b) Structural sensitivity analysis and optimization 2: nonlinear systems and applications. Springer, New York

Choi KK, Gaul N, Song H, Cho H, Lee I, Zhao L, Noh Y, Lamb D, Sheng J, Gorsich D, Yang R-J, Shi L, Epureanu B, Hong S-K (2012) Iowa reliability-based design optimization (I-RBDO) code and its applications. In: ARC conference. Ann Arbor, May 21–22

Craig KJ, Stander N, Dooge DA, Varadappa S (2005) Automotive crashworthiness design using response surface-based variable screening and optimization. Eng Comput 22(1):38–61

Cressie NAC (1991) Statistics for spatial data. Wiley, New York

Dixon LCW, Szegö GP (1978) Towards global optimisation, vol 2. North-Holland Publishing Company, Amsterdam

Ditlevsen O, Madsen HO (1996) Structural reliability methods. Wiley, Chichester

Duarte Silva AP (2001) Efficient variable screening for multivariate analysis. J Multivar Anal 76(1):35–62

Eichler J, Wille U (1975) Variable-screening model for the quasimolecular treatment of ion-atom collisions. Phys Rev A 11(6):1973–1982

Forrester AIJ, Keane AJ (2009) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45(1–3):50–79

Forrester AIJ, Sobester A, Keane AJ (2008) Engineering design via surrogate modeling: a practical guide. Wiley, Chichester

Guyon I, Elisseeff A (2003) An introduction to variable and feature selection. J Mach Learn Res 3:1157–1182

Haldar A, Mahadevan S (2000) Probability, reliability and statistical methods in engineering design. Wiley, New York

Hou GJ-W (2004) A most probable point-based method for reliability analysis, sensitivity analysis, and design optimization. NASA CR, 2004-213002

Izenman AJ (2008) Modern multivariate statistical techniques: regression, classification, and manifold learning. Springer, New York

Jin R, Chen W, Simpson T (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidiscip Optim 23(1):1–13

Lee J (2007) A novel three-phase trajectory informed search methodology for global optimization. J Glob Optim 38(1):61–77

Lee I, Choi KK, Gorsich D (2010) Sensitivity analyses of FORM-based and DRM-based performance measure approach (PMA) for reliability-based design optimization (RBDO). Int J Numer Methods Eng 82(1):26–46

Lee I, Choi KK, Zhao L (2011a) Sampling-based RBDO using the stochastic sensitivity analysis and dynamic kriging method. Struct Multidiscip Optim 44(3):299–317

Lee I, Choi KK, Noh Y, Zhao L, Gorsich D (2011b) Sampling-based stochastic sensitivity analysis using score functions for RBDO problems with correlated random variables. J Mech Des 133:021003

Mack Y, Goel T, Shyy W, Haftka R (2007) Surrogate model-based optimization framework: a case study in aerospace design. Stud Comput Intell 51:323–342

McKay MD, Morrison JD, Upton SC (1999) Evaluating prediction uncertainty in simulation models. Comput Phys Commun 117(1–2):44–51

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Kevin Tucker P (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41(1):1–28

Rahman S, Wei D (2008) Design sensitivity and reliability-based structural optimization by univariate decomposition. Struct Multidiscip Optim 35(3):245–261

Rahman S, Xu H (2004) A univariate dimension-reduction method for multi-dimensional integration in stochastic mechanics. Probab Eng Mech 19(4):393–408

Rao SS (2002) Applied numerical methods for engineers and scientists. Prentice Hall, Upper Saddle River

Reedijk CI (2000) Sensitivity analysis of model output: performance of various local and global sensitivity measures on reliability problems. Master’s thesis, Delft University of Technology

Rosner B (2006) Fundamentals of biostatistics, 6th edn. Thomson Brooks/Cole, Belmont

Simpson T, Poplinski J, Koch P (2001) Metamodels for computer-based engineering design: survey and recommendations. Eng Comput 17(2):129–150

Sobol IM (2001) Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math Comput Simul 55(1–3):271–280

Wang H (2009) Forward regression for ultra-high dimensional variable screening. J Am Stat Assoc 104(488):1512–1524

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129(4):370–380

Welch WJ, Buck RJ, Sacks J, Wynn HP, Mitchell TJ, Morris MD (1992) Screening, predicting, and computer experiments. Technometrics 34(1):15–25

Wu Y, Mohanty S (2006) Variable screening and ranking using sampling-based sensitivity measures. Reliab Eng Syst Saf 91(6):634–647

Zhao L, Choi KK, Lee I (2011) Metamodeling method using dynamic kriging for design optimization. AIAA J 49(9):2034–2046

Acknowledgments

This research is supported by the Automotive Research Center, which is sponsored by the U.S. Army TARDEC. This research was also partially supported by the World Class University Program through a National Research Foundation of Korea (NRF) grant funded by the Ministry of Education, Science and Technology (Grant Number R32-2008-000-10161-0 in 2009). This support is greatly appreciated. The authors also express appreciation to Lei Shi of Ford Motor Company for his modeling help and consultation.

Disclaimer

Reference herein to any specific commercial company, product, process, or service by trade name, trademark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or the Department of the Army (DoA). The opinions of the authors expressed herein do not necessarily state or reflect those of the United States Government or the DoA, and shall not be used for advertising or product endorsement purposes.

Cite this article

Cho, H., Bae, S., Choi, K.K. et al. An efficient variable screening method for effective surrogate models for reliability-based design optimization. Struct Multidisc Optim 50, 717–738 (2014). https://doi.org/10.1007/s00158-014-1096-9

DOI: https://doi.org/10.1007/s00158-014-1096-9