Abstract

The paper discusses the filtering of shape sensitivities as a mesh independent regularization method for very large problems of shape optimal design. The vertices of the simulation discretization grids are directly used as design morphing handles allowing for the largest possible design space. Still, however, there has been a lack of theory to consistently merging the sensitivity filtering into the standard optimization technology which is an ongoing topic of discussion in the community. The actual paper tries to overcome this burden. As a result it will be shown that there is a perfect transition between the sensitivity filtering and all the other shape parameterization techniques used for the shape optimization, as there are CAD-based techniques, subdivision surfaces or morphing box technologies. It appears that sensitivity filtering belongs to the most general and powerful control technologies available for shape optimal design. The success will be demonstrated by various illustrative examples which span from basic aspects to sophisticated applications in structural and fluid mechanics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Sensitivity filtering is a well-established and very successful procedure in discrete topology and shape optimization. It is used to regularize the optimization problem by introducing an additional filter length scale which is independent of the discretization. The filter is both, a design tool controlling local shape or density distribution and a mean to prevent numerical problems such as mesh distortion or checker board patterns. Together with adjoint sensitivity analysis to determine the discretized shape gradient, the filter technique is a most powerful optimization procedure and successively applied to the largest optimization problems known. Filtering is the key technology for using the vertices of even the finest discretization mesh directly as design handles for discrete shape optimization. This leads to what is called Vertex Assigned Morphing of Optimal Shape (VAMOS) throughout this paper. VAMOS is the most direct approach to modify geometry. In contrast to standard shape morphing techniques and CAD methodologies no other design handles are used than the mesh vertices together with filters.

Still, however, there has been a lack of theory to consistently merging the sensitivity filtering into the standard optimization technology which is an ongoing topic of discussion in the community (Sigmund and Maute 2012). The actual paper tries to overcome this burden with the special application to shape optimization. As a result it will be shown that there is a perfect transition between the sensitivity filtering and all the other shape and topology optimization techniques for problems of structural and fluid mechanics. Among those techniques which do not use CAD parameters to parameterize shape there are meshfree and node-based or parameter-free methods which means “free of CAGD parameters” (Kim et al. 2002; Le et al. 2011; Clausen and Pedersen 2006; Bletzinger et al. 2010; Firl and Bletzinger 2012; Firl et al. 2013; Arnout et al. 2012; Scherer et al. 2010; Hojjat et al. 2014), the traction method (Azegami and Takeuchi 2006) and filtering techniques as developed for topology optimization (Sigmund 1994, 2007; Bourdin 2001; Bruns and Tortorelli 2001) or for CFD problems (Pironneau 1984; Jameson 1995, 2003; Jameson and Vassberg 2000; Mohammadi and Pironneau 2004, 2009; Stuck and Rung 2011). CAGD based optimization (Imam 1982; Braibant and Fleury 1984, 1986; Bletzinger et al. 1991, 2005) and morphing techniques have been adapted from computer graphics technology (de Boer et al. 2007) and define the actual state of commercial application.

The common reason behind the development of all the mentioned methods is the properties of the inverse problem that is ill posed by nature, as it is well known. That is the case for the continuous formulation as well. There exists a vast amount of mathematical literature about dealing with regularization in the context of shape optimization and shape derivatives. The above referred work of Jameson, Pironneau and others as well as Sokolowski and Zolésio (1992) shall be mentioned as some few entry points into this topic. Discretization amplifies the situation as it adds all the well-known numerical deficiencies and artifacts. It is important to realize that discretization is not the only, and not even the major source of difficulties observed when solving optimization problems. One can distinguish all the above mentioned techniques by the regularization technology applied to overcome the numerical problems. This aspect is treated explicitly by the filtering methods as they are mentioned above. CAGD based methods or morphing methods regularize the problem in an implicit manner as shape functions and morphing strain fields have the similar effect as filters.

Looking at the history of structural optimization, the technological deficiencies have been observed as jagged surfaces in shape optimization and checkerboard patterns in topology optimization. Regarding shape optimization, that was an important argument to introduce CAGD-based techniques (Braibant and Fleury 1984). Alternatively, filtering has been introduced in topology optimization (Sigmund 1994). Both approaches take control of the basic modes of geometry or material distribution and regularize the problem by omitting the higher oscillating modes. This is done either by reducing the number of design parameters and introducing basic shape functions, as in the CAGD-based, morphing boxes and similar techniques, or, by directly filtering higher modes. The relation between filtering and discretization is obvious as it depends on the grid size which modes can be resolved. Consequently, the filter radius or the CAGD patches must be larger than a certain number of grid cells or elements.

In the context of topology optimization filtering is discussed in even further details which is motivated by the fact that clear separation fronts between the material phases should be generated. The original approach of Sigmund (1994) is known as ‘sensitivity filtering’ because it affects the filtering of the objective sensitivities with respect to the material density. The challenge of the original filtering formula is that it seems not to fit in any consistent theory but has proven to be most successful. Alternatively, Bruns and Tortorelli (2001) and Bourdin (2001) independently had suggested to filtering the material density instead which could be proven to be mathematically consistent. That technique became known as ‘density filtering’ At the same time Sigmund suggested a modified sensitivity filter which can be shown to be equivalent to the mentioned density filtering. Refer to Sigmund (2007) for an in depth discussion. The difference of the two approaches is that the density filter deals with the filtering of total actual values of the design variables whereas the sensitivity filters deal with the variable update, only. What follows in the sequel of the actual paper is a sensitivity filtering scheme for shape which consistently is derived from a ‘shape filtering’ procedure. That compares best to Sigmund’s modified sensitivity filter and affects the shape update, only. Consequently, the initial shape will not be smoothed at all throughout the entire optimization procedure. That is intended to deal with specific properties such as feature lines which are important to identify the product brand. In contrast, the parameter-free shape optimization approach by Le et al. (2011) is based on ‘shape filtering’ which is equivalent to the density filtering in topology optimization. Since the complete actual shape is filtered and not the shape update only, consequently, initial shape features will be smoothed as well.

It is important to realize that choosing a regularization method is a design decision which triggers the optimal design. That is obvious when for regularization the number of design parameters is reduced. Clearly, the optimal solution can only be found in the reduced design space. It is a real challenge to consider all optimization relevant design parameters while setting up the initial model. It is an art to find the right balance between a small number of design parameters and their relevance for the final optimum. It is even more challenging to implement a distributed work flow when design modeling and optimization are done by different departments as it is typical for engineering collaboration. One gets stuck between the demand of having a small number of design parameters necessary for regularization and the engineering insight which is necessary to take the right choice.

It appears that the filtering technique is an elegant and effective solution of the engineering challenge. A very large design space can easily be treated by definition of a filter size. Design modeling and optimization can be done separately. The large design space allows for finding even unexpected solutions that could never be found with dimension reduced design models. The filter, however, will not modify the solution. The filter helps to guide the optimization procedure towards that local optimum whose shape mode is characterized by the filter size. Hence, from the operational point of view the filter is nothing more than a smoother as it is called in the mathematical community. From the engineering point of view, the idea of a filter is appropriate because it is an easy to be applied handle to identify different designs as local optima. The design space is explored for local optima by repeatedly optimizing with different filter sizes but the same design model. It appears to be the ideal technology for preliminary design.

Also, the filter technique can be applied to dimension reduced design models. Then the properties of CAD and vertex assigned morphing are merged. In this case there are two different discretization grids for the design control field and the geometry. The design control grid serves as the convex hull of design as it is known from CAGD. Applying the filtering procedure to the coarse design control field appears to be identical to the procedure of the subdivision surface technology to generate geometry (Catmull and Clark 1978; Zorin et al. 1996; Kobbelt 1996; Litke et al. 2001). Indeed, the filtering technique can be seen as a further generalization of CAD methods with the possibility to have identical grids for design control and generated geometry. A proper combination of design control grid and filter function even generates cubic or other B-spline geometries as it is known since the pioneer work of Catmull and Clark (1978). Also, it will be shown that for the mentioned case of merged grids to discretize design control and geometry fields it is not necessary to introduce extra design control parameters. The coordinates of the surface coordinates are enough, the control parameters can be treated virtually. Le et al. (2011) had been very close to the results presented here, however, it seems that they did not realize the consistent relations between sensitivity filtering and using the design control field which they explained as the initial surface geometry.

Finally, regarding design, the filtering technology supports all relations to the isogeometric analysis (IGA) which the subdivision surface technology does as well. The difference to filtering is that IGA starts from a rather coarse mesh of the design controls. Then, by knot insertion, finer analysis meshes are derived which, still, are approximating the true geometry. As a fact IGA follows the classical CAD based optimization strategies by considerably reducing the dimension of the design space compared to the number of analysis degrees of freedom. There is a rapidly increasing amount of literature on the tracks of this line (Wall et al. 2008; Cho and Ha 2009; Ha et al. 2010; Seo et al. 2010; Nagy 2011; Kiendl 2011; Schmidt 2013; Kiendl et al. 2013). There are many advantageous aspects of IGA. However, the question of how to deal with large, non-convex design spaces remains. For an answer it might be an option to combining IGA and the filter technology for regularization. Filtering is a methodology on top of any simulation methodology including IGA.

To conclude, sensitivity filtering is the most general and powerful shape control technology available for shape optimal design. It has been applied to shape optimization problems for structures and fluids with a number of design parameters up to 3.5 million.

The paper is organized such that the theory is first developed and then be demonstrated for some simple one-dimensional cases. Finally, several real size and industrial examples will briefly demonstrate the impressive potentials of filtering and the vertex assigned morphing of design.

2 Continuous shape control

We start very general by introducing an additional field p. This serves as the control which steers the evolution of shape. In analogy to splines the control field can be identified as the continuous equivalent to the convex hull which is discretized by control nodes. As with splines where the coordinates of the control nodes are the design variables, now, the control field represents the design degrees of freedom which drive the shape.

The considered shape optimization problem states as:

where f and gj are the objective function and constraints and R are the state equations which may be non-linear. There are four fields describing the state u, the surface coordinate x, the geometry z as well as the design control field p, Fig. 1. To concentrate on the basic ideas of what follows and for the sake of clarity, (1) is formulated in 1D geometric space. As a consequence, the geometry z is a function of the one spatial surface coordinate x and the design control p. Extended to 3D, (1) represents the classical view at a surface controlled shape optimization problem following the ideas of Hadamard, e.g., refer to Sokolowski and Zolésio (1992). Then, the shape relevant modifications of geometry z are identified as in the normal direction to the surface spanned by surface coordinates x1 and x2. Since the field of coordinates z decides about the shape of the structure it will be called “geometry” or “shape” as well. In the case of topology optimization, z is a dealt with as a scalar parameter and may be identified as the field of material density.

Additionally, we introduce the material coordinate ξ which is attached to the material points of the surface. The surface coordinate x(ξ) is a function of the material coordinate. This relation might be inverted to generate ξ(x), which will be used later on. Finally, z and p are functions of the material coordinate through resolution of the surface coordinate, z(x(ξ)) and p(x(ξ)), respectively.

Applying appropriate discretization techniques, e.g., the finite element method for state and geometry or CAGD techniques for the design control, one arrives at vectors u, z, x and p of discrete parameters for state, shape as well as surface coordinates, and design control, respectively. Since the goal of the paper is to discuss the control of shape the following presentation will concentrate on the treatment of z as the shape coordinate and how filtering is introduced in that regard. Tangential terms as they are known from the Hadamard formulae are omitted here without loss of generality. The closing examples will show applications to the optimal shape design of surfaces embedded in three dimensions.

The geometry z at x0 is generated from the design control field p(x) by a smoothing filter operation:

F is the filter function also known as kernel function in terms of the meshfree method (Kim et al. 2002). It is related to the surface point x0 = x(ξ0) spanning over the filter domain of radius r, Fig. 1. The filter function and the filter radius are chosen to control the curvature or waviness of the generated shape z. They are design decisions. In topology optimization the equivalent approach is known as density filtering (Bruns and Tortorelli 2001; Bourdin 2001).

The (forward) filter function satisfies the property:

The adjoint or backward filter function A is defined as:

A filter function is defined to be symmetric around its center x0 or self-adjoint if

Finally, a dual filter function D may be defined to restore a suitable design control field p from the geometry z and is defined as:

Typically, when an optimization problem is set up, the initial shape z is given. The distribution of the related design control field p is unknown and has to be adjusted by applying the dual filter D if one is interested in it. Typically, dual filters refer to the dual shape functions of the applied surface discretization method. In this context the term ‘filter’ for D is used because the integrals (2) and (6) are formally identical and D in (6) plays the same role as F in (2). As a matter of fact, this operation is not necessary as the absolute state of the control field may remain unresolved. This is because during the process of optimization we only are interested in the change of the design control p to determine the change of geometry. The absolute values of the design control field have not to be known.

The variation of shape δz is determined to depend on the variation δp of the control straight forward as:

Also, from (2), we can identify the relation between z(x0) at x0 and p(x1) at x1 which allows to define the derivative of z at x0 with respect to p at x1 as:

Finally, applying the chain rule of differentiation the derivative of a response function f with respect to the design control p at x1 is given as

Formally comparing (9), (4) and (2), obviously, the geometry gradient df/dz is filtered backward by the adjoint filter function A. This procedure is what is defined as ‘sensitivity filtering’. In the sequel it will be shown how that serves as the basis for the design update.

3 Shape discretization and discrete sensitivity filtering

For numerical analysis, geometry and design control fields are discretized. CAGD techniques use different grids for control and geometry. Alternatively, both fields share the same grid as it is the case what we discuss here. Discretizing the control field by a fine mesh allows for as many as possible design degrees of freedom. Now, at every node j there are values for the geometry (xj, zj) as well as a value for the nodal design control parameter pj. The design control field is discretized using shape functions Nj related to each design parameter pj:

One can think of linear hat shape functions as the simplest case, Fig. 2, creating a piecewise linear approximation of the design control field, Fig. 3. The number n is the number of nodes within the half span of the shape function. We will see that this simple discretization is already good enough to produce C2-continuous geometry fields z using linear filters similar to the shape functions.

Applying (10) to discretize the design control field as well as the filter function Fi to generate the geometry value zi at node i we get from (2):

Additionally, Fi = F(x, xi, r) has been introduced to simplify notation.

From this equation we can determine the discrete derivative of shape coordinate zi with respect to the design parameter pj which is the point of departure of sensitivity analysis:

The matrix B is the forward filter operator matrix as the discrete equivalent to the forward filtering operation (2).

Let us now consider an unconstrained optimization problem with f the objective as a function of the geometry zi and design parameters p j as defined above:

Constraints may be added as necessary. However, it is advisable to consider techniques which support adjoint sensitivity methods for large numbers of design parameters, e.g., refer to Arnout et al. (2012) for further details.

The derivative of the objective with respect to the design parameter p j is straight forward to be determined as integral over the surface Γ:

The geometry gradient df/dzi is assumed to be determined by appropriate methods, e.g., by adjoint techniques.

We see that filter and shape functions exchange their role which explains the definition of the adjoint filter operator matrix B T as the discrete equivalent to (9). The derivatives df/dzi are filtered backward compared to the design parameters pj, (11), which we consider to be filtered forward.

For the special case of regular and equidistant grids, the filter operator matrix B is symmetric even if filter and shape functions are spanning over different supports n or m, respectively. Applying the transformation rule between F and the related shape function N, Fig. 4, we arrive at:

Here, the head index (n) of N(n) = N(ξ,n) refers to the number of nodes the shape function spans on each side.

Interesting enough, filtering the linear hat shape function by itself turns out to be the well-known cubic B-spline. Also, note that filtering on different grids for design control and geometry fields is strongly related to approximating the geometry from the convex hull polygon as it is done by standard CAGD methods using B- or other splines.

Finally, on regular grids and symmetric filters, we arrive at what is known as filtering rules. The same filter is applied to design control field p as well as to the shape derivatives df/dz:

For non-regular grids the non-symmetry of the filter operator matrix B has to be considered. In particular, at design edges filters are non-symmetric, as will be demonstrated later. Design parameters are to be filtered forward by B and the sensitivity parameters in backward direction by B T. All that proves the sensitivity filtering technique to be equivalent to the shape filtering and, consequently, as a theoretically consistent method for shape optimization.

In numerical practice additional effort is applied to carefully guarantee a high quality, less distorted mesh or, at the best, equally sized grid cells throughout the evolutionary process of shape optimization to support best simulation results (Stavropoulou et al. 2013). Then, applying the forward filter to the shape derivative which is equivalent to assuming B to be symmetric is a good choice. Also, as we will see later on, there is much more freedom to choose B or an equivalent filtering scheme without altering the optimization model as it might be anticipated, here.

4 Shape update rules

Let us start from the Taylor series expansion of the objective function with respect to the design parameters at a certain state of the solution evolution:

Next, the chain rule of differentiation is applied:

and from the stationary condition the well-known Newton parameter update formulae are derived where H z is the Hessian of f with respect to z and H p that one with respect to p:

Obviously, by the last operations the design control parameters have been eliminated although their effect remains in terms of the filter operator matrices B and B T.

By substituting the matrix M instead of the inverse of H p we can generate the general quasi-Newton family with α as the line search step length factor:

Replacing M by the identity matrix I gives the steepest decent method. For large and very large problems steepest decent and variants of the conjugate gradient methods are the methods of first choice because second derivatives or their approximations typically are far too costly to be evaluated. We see from (20) the direct effect of the filter on the algorithm. Through the related operator matrix B the parameter update is affected which results in the discussed smoothening of the geometry field but also in modifications of the search direction. Note, that (20) represents a two pass filtering of the sensitivity. Furthermore, filters might be generated which together with M approximate the inverse Hessian and can help to optimize the convergence speed of algorithms.

For the special case of the same number N of design and geometry parameters the B matrix is quadratic of size N × N. That is the standard case for the vertex assigned or node-based shape optimization as it is discussed here. Also, a reasonable filter shall be applied such that B is of full rank. Most of the common filters including the hat function share that property. Then, we can draw further conclusions:

First, choosing M as the inverse of B T, then we get the well-known sensitivity filtering technique where the shape update vector Δz is directly determined from the filtered gradient vector (the tilde represents the smoothing operation) which is the basis for the implementation of the algorithm:

Second, looking again at the Newton update rule (19), we get:

As it can be seen, the filter cancels out. That is not surprising, since a N × N matrix B of full rank represents nothing else than a transformation between alternative variables p or z. This means, that regarding global and local minima the optimization problem is not modified at all by the choice of a filter, may it be the simple hat filter as presented here or any other as e.g., a Gaussian distribution (Stück and Rung 2011). Instead, the problem will only be modified if it is re-parameterized, e.g., by re-meshing or introducing individual parameters for control grid and geometry grid as done by CAGD or standard morphing techniques. Even if canceling out during the optimization procedure, filters support the numerical quality by controlling the surface quality in terms of the smoothness of the discretized geometry.

As the filter modifies the gradient vector the filtering effect can be exploited best by first order gradient methods. Typically, those methods converge to that local minimum which is characterized by a shape mode wave length that is not smaller than the filter radius or the variance in case of Gaussian filters. The filter shape, however, appears not to be important at all. That allows to using any kind of filter for the sensitivity filtering as long as B remains non-singular. For example, forward sensitivity filtering instead of backward is a feasible alternative as done in (16). In turn, we can conclude that every simple gradient method with sensitivity filtering will converge to a solution of the original, unmodified problem. For non-convex problems, the choice of filter will affect which local optimum will finally be found. This is the intended effect which helps to efficiently explore the design space.

Also, we see that neither the control field p nor its update Δp must be explicitly introduced or evaluated although they are essential for the consistency of theory. That supports the finding that vertex assigned or node-based shape optimization directly deals with the grid node coordinates of shape discretization although the true variables are the discretization parameters of the design control field. Indeed, for application purposes the knowledge of p appears not to be necessary at all.

To conclude, gradient methods together with filtering are the first choice for large and very large problems. They allow to solving easily, flexibly and intuitively problems of up to several million design parameters. It is a minimal effort to set up optimization problems when design and geometry parameters share the same discretization mesh. If a mesh for simulation is available the optimization problem is readily generated by choosing the proper filter and to decide about objective and constraints.

5 Discretized filtering hierarchy

Alternatively, we approach at a discrete version of the filter integrals (16) through series expansion applying the trapezoidal integration rule. That is done for the example of the one dimensional case on a regular grid (2D cases and non-regular grids have to be treated considering the non-symmetry of the filter operator):

Here, the filter integral transforms into a sum of products of the discrete design control parameters pj and shape functions Ni evaluated at the related nodes. They are derived from scaling the filter function by r/n, where r is the filter radius r and n the number of integration intervals. That recalls the transformation between filter and shape functions as shown in Fig. 4 and the definition of Ni(ξj) below in (26).

Using the fact that the shape functions used are self adjoint, i.e., Ni(ξj) = Nj(ξi), we get the equivalent notation which is formally identical with CAGD and other standard discretization methods:

As an example, consider the simplest case of a piecewise linear hat filter function F:

And the equivalent shape function Ni:

For an example, refer to Fig. 5 with the choice of r = 4 and the filtering of a linear, hat-like distribution of the design control field p.

For any number n of node intervals within the filter radius and the simple hat filter function the equation of the filtered coordinate zi is:

In the limit n → ∞ the discrete filtered geometry as shown in Fig. 5c will converge to z0 = 2/3p0 and the curve will span from x = −8 to x = 8.

Furthermore, in the limit n → ∞ it appears that filtering the linear filter hat function (F(x, x0, r), spanning over 2r, Fig. 2a) by itself converges to the well-known cubic B-Spline function spanning over 4r:

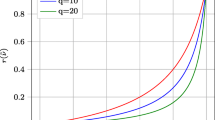

Figure 6 shows the convergence history with increasing n for the filtering of the hat function with radius r = 4 and height z0 = 1. Obviously, it converges to the cubic B-spline with maximum height of 2/3.

As a conclusion, we can state that filtering of polygonal design control fields with the linear hat function and a filter radius equivalent to the spacing of the control vertices will generate C2-continuous geometries in the limit. Refer to Zorin et al. (1996) for the generalization to meshes of arbitrary topologies. Discrete filtering by using the linear hat shape function with at least 4 node spans within the filter radius will generate very good approximating polygons of piecewise C2-continuous cubic functions and can be interpreted as generating a “moving B-Spline” shape approximation. In general, the field of continuous design control p(x) or the polygon defined by the discrete design parameters p j defines a convex hull around the shape. The convex hull is approximating the shape rather than interpolating as it is well known from classical CAGD techniques. Discrete design parameters interpolate the generated shape if linear hat shape functions are used and the filter radius is as large as the distance between nodes, i.e., overlap n = 1. In contrast, if the node spacing is given by the discretization mesh, increasing the filter radius will improve the smoothness of the generated shape.

6 Shape approximation and convex hull property

It is the nature of filtering that the job of the design control field is not more than approximating the generated geometry. The design control field is the equivalent to the control node polygon of B-splines. Moreover, as it has been shown above, if a polygonal design control field and a filter with radius as large as the control vertex spacing are used the polygon of the filtered geometry will approximate the cubic B-spline. It will converge to the B-spline as the number n of node intervals within the filter radius converges to infinity, Fig. 7. Using n = 1, the design control field is interpolated; with n = 4 the cubic polynomial is approximated already very well, Fig. 7. The design control field of the example shown in Fig. 7 is generated piecewise linear with the filter radius used as spacing between the vertices. When filtering (n − 1) additional nodes are generated between the vertices, Fig. 7.

Particularly, the design control field does not interpolate the edges of the generated shape, here, the left and the right end of the curve, Fig. 8. Again, the filtering approach shares this property with B-splines. In contrast, however, it is not straight forward to create an alternative filtering scheme which is interpolating the edges as it the case for open B-splines. The alternative is to extend the control field by one filter radius across the edges such that the generated geometry properly starts at the desired position, Fig. 8. For the case of a polygonal design control field—as for the presented example—the generated shape, its derivative and curvature at the free edge can exactly be controlled by defining the positions of the three control nodes at the edge and one node span to the left and right, nodes −1, 0, 1 as shown in Fig. 8:

Obviously, the effect of discretization is fading out in the continuous limit when n approaches to infinity. The right edge is controlled, equivalently. The example of Fig. 8 is generated with the specific values:

Note that filter and shape functions may have different node spans n. Filtering always improves the smoothness of the design control field, as it is demonstrated in Fig. 9.

7 Pragmatic discrete filtering using post-scaling

Instead of appropriate pre-scaling the shape functions by the inverse of the number of node spans within the filter radius as shown in Fig. 4 post-scaling can be done to insure the unit-integration-condition, Fig. 10:

That approach can be used also in the case of non-regular grids when pre-scaling is complicated. Then the summation is done over all neighbor nodes inside the filter radius. The extension to 2D surface design control and filter functions is straight forward. Regarding topology optimization, this technique is an essential part of the density filtering methods by Bruns and Tortorelli (2001) as well as Bourdin (2001) but also of the modified sensitivity filter by Sigmund (2007).

8 Discrete sensitivity filtering

The discrete sensitivity filtering is created directly applying (31) to the shape sensitivity for symmetric filter operators:

In the case of asymmetric filter operators backward filtering has to be applied:

And, finally, again forward:

9 Modified density and sensitivity filtering for topology optimization

As mentioned in the introduction, there is still a lack of theory to merge the original sensitivity filtering scheme of Sigmund (1994) into a consistent framework. Following the idea of the additional design control field a new motivating argument may be developed, again for the 1D case.

First, another filtering scheme is introduced which links the control field p to the density ρ at coordinate xi, compare to (2):

Straight forward, the derivative of ρ i at xi with respect to p j at x j is determined to be, compare to (8):

That in mind, the chain rule is applied to determine the derivative of the objective function f as function of the density ρ with respect to the parameters p j :

and, applying a symmetric filter we arrive at:

which, according to the previous paragraphs can be approximated as

The last expression is almost identical to Sigmund’s original sensitivity filter. The only difference is that the control parameter p j in the denominator of (39) should be replaced by p j which might be motivated that, typically, the control parameters are eliminated and not available:

Last not least, it can be argued that (40) is another filtering rule than from where started with (35). But as the number N of control and density parameters is identical this leads to a N × N filter operator matrix B, which we learned can be chosen freely as long as it is of full rank. As that is the case there is no reason to blame (40) as a heuristic suggestion.

10 Sensitivity filtering at the domain edges

As shown above domain edges can only be manipulated by design control fields which are extended across the edges by one filter radius span. In particular, additional nodes have to be generated across the edge to support the derivatives df/dpj:

where the domain is discretized by nodes with numbers from 0 to num.

In practice, however, it may be very difficult or even impossible to generate extra nodes across the domain edges. In particular, that is true for very large problems of industrial importance. That problem can be solved by using those domain nodes twice which are within a band of size r near the edges: First, as they are, and secondly, as the shadow of the virtual nodes from outside. Instead of spanning to the extra outside node, filters near the edge are reflected back into the domain spanning to the shadow nodes s j , Fig. 11. For the example in Fig. 11 the shape functions N0 and N1 are reflected back into the domain where the nodes 1 and 2 serve as shadows of nodes −1 and −2, respectively, to additionally store their data. The distance between a node and a shadow node has to be determined by the length of the reflected ray between those nodes. The extension to surfaces needs special considerations at corners.

Considering shadow nodes, (27) has to be modified:

11 Varying filters to interpolate edges

Alternatively, for the edges of the domain one can modify the filters towards the edges such that the filters will never leave the integration domain.Regarding linear hat functions the radius and the filter height have to be adjusted according to the unit integral condition (3), Fig. 12:

The reducing filter sizes towards the edges allow interpolating the geometry at the free edge. Consequently, different forward and backward filtering has to be considered. To carefully control the smoothness of the generated geometry, additional control may be superimposed to the discrete design parameters within the range of the filter size right or left to the edge. In Fig. 13 an example is given with the regular filter radius r = 4. The discrete design parameters p j at the edge between nodes j = 0 to 4 are interpolated cubical to exactly control design z0, first and second derivative at the edge j = 0. As the filters increase towards the interior of the domain the higher discontinuities at parameter j = 4 will be filtered away when the geometry is generated. The design parameter interpolation has to be considered as additional parameter linking by the chain rule of sensitivity analysis. Figure 14 shows a larger view of the last example. The extension to surfaces is straight forward as filters are modified at the edges. Refer e.g., to Litke et al. regarding equivalent techniques for the trimming of subdivision surfaces.

12 Numerical examples

12.1 A simple optimization model problem: best fit

Consider the model problem given in Fig. 15. Given is a control field p(x) which is discretized using the grid levels shown. The geometry is always generated on grid level 0 with varying filter radii from 1 to 8. The objective function f is the least square error determined from comparing the generated geometry z(x) and the function shown in Fig. 15.

First, the shapes of the best fitted cubic B-splines on grids 0 to 3 are determined, Fig. 16. On grids 0 and 1, they are roughly approximated since the grid sizes of geometry and control field differ only slightly. The solution on grid 0 even resembles the goal function itself.

Next, the steepest descent method together with filters is used. As for typical large scale optimization problems the design control field is discretized on the same finest grid level 0 as the generated geometry. Figure 17 shows the result of several optimization runs after the given number of iterations for different choices of filter radii. They are chosen in relation to the grid sizes of the four grid levels. As can clearly be seen from Fig. 17 at the beginning of the optimization procedure the filter guides the solution towards those best cubic fitted curves which are determined on that grid which is related to the applied filter radius. That is exactly the effect which is intended by the sensitivity filter technique: To approach at basic shape modes of different wave lengths using the same fine grid but varying the mesh independent filter. Further continuing optimizing the algorithm will converge to the goal curve for any choice of filter as has been shown in theory. The convergence might be very slow depending on how well the finer modes are represented by the filtering procedure. But they are there because the filter operator matrix B is non-singular.

12.2 Convergence to selected local minima

This example is constructed such that it consists of an unlimited number of local minima which are composed by arbitrary combinations of basic sinusoidal modes for k = {2, 4, 64}, where k reflects the frequency of the related mode. It will be shown that the method will converge to a local minimum which is characterized by the selected filter radius. The objective function is given as:

where

For the numerical solution the geometry field z(x) is discretized by linear shape functions as used before. The following solutions are determined for a domain of length n = 64, Fig. 18. The discretization grid is defined by equally distances of Δ x = 1. Applying the filter technique three results are determined for filter radii r ={1, 8, 16}. As can be seen, using the related filter the algorithm converges to solutions which represent the background noise (k = 64) or solutions which are related to combinations of the basic modes k = {2, 4}. According to the low pass filter properties the lower modes are always present. For problems of structural optimization, usually, different structural shapes are directly related to different structural behavior representing distinct local minima. In particular, that is true for the shape optimization of shells for maximum stiffness or other objective functions. One can easily imagine that selecting a certain filter radius will guide the algorithm to the related local minimum. It should be noted that for this example because the mentioned three modes are blended out from (44) the rank of the related B operator matrix is incomplete and reduced by 3. Inversion of B must be done through modal decomposition to check the Newton update schemes as introduced before.

12.3 Preserved design features

For typical industrial applications the product shape shall be optimized, however, small size shape characteristics must be preserved. For example, think of the feature lines which give car bodies the characteristic appearance of the brand. It is essential that shape optimization does not disturb the design identity. This is naturally supported by the presented technique as the shape update is filtered rather than the shape in total. Typically, an initial shape is given which is generated from a creative design process and which represents all product characteristics regarding large and small surface curvature as well as sharp kinks. During optimization until the final stage of the convergence history those properties will be preserved which are related to curvatures radii which are smaller than the selected filter radius. Figure 19 sketches a simple example. Again, a certain target curve has to be optimally fitted. Additionally, a small kink at x = 20 is defined which has to be preserved through optimization. That is the case if a filter radius is chosen which is large enough, e.g., r = 10, as done in Fig. 19. What is displayed are the initial shape and the shape after iteration steps 4, 12, and 24, including the kink at x = 20. At iteration step 24 the curve has nicely converged to the target curve except for the top with the forced kink and the base where one observes a small undershooting because of the smoothing. From an application point of view this intermediate solution may be accepted as the optimal shape including the kink as the intended design feature. This situation is immediately identified since the speed of convergence drops considerably when the optimization is continued. Then, as is to be expected and can be seen from Fig. 19 as well, the method will converge completely to the target curve. This is indicated in the figure by the tag ‘many iterations’.

12.4 Bead design of plates and shells

This and the following examples demonstrate the success of the technology for the application to thin structures and surfaces in 3D (Firl et al. 2013; Hojjat et al. 2014). The presented ideas are analogically applied moving nodes in shape normal direction whilst the filters are extended to be rotationally symmetric hat filters. Additionally, the mesh quality has been controlled by applying a weighted, anisotropic Laplace smoother which has been developed by the group applying the mechanical analogy of virtual surface stresses (Bletzinger et al. 2010; Stavropoulou et al. 2013). The optimization as well as the structural analysis has been done applying the own software CARA++, which is an efficient, object-oriented and parallel implementation, including highly efficient semi-analytical (Bletzinger et al. 2008) and adjoint sensitivity analysis, robust, gradient based optimization techniques (variants of conjugate gradients), and reliable non-linear finite element models in particular for shell and membrane structures.

All examples are highly non-convex and the results depend extremely on the applied regularization scheme, i.e., the chosen filter methodology, how it is implemented and, most important, how large the filter radius is chosen, not to mention the effects of the type of finite element discretization and the modeling of loads and supports. Therefore, the examples are not reported in all details. Instead, the focus is on potential fields of application and the success of the approach for challenging industrial problems as an alternative to CAD-based shape optimization, in particular for the first stages of product development when a large design space is mandatory.

The first example demonstrates the mesh independence of the method, Fig. 20. A quadratic plate is loaded in the center and supported at the corners. The question is to find the optimal topology of stiffening beads. A filter radius is chosen as large as half of the width of support. Additionally, a constraint on the maximum bead depth is given. As shown, the optimal solution is characterized by the filter but it is mesh independent. The choices of filter type and size are additional degrees of design freedom which may be used to explore the design space. Note the smooth final surface although only local radial filters are applied. No post-processing is applied.

Figure 21 shows the result of a joint project together with Adam Opel GmbH. The optimal distribution of beads has been determined to maximize the five lowest eigen-frequencies of a thin metal sheet. The number of iterations appears always to be not more than 40 for every problem size. The number of optimization variables has been appr. 50.000 lateral (shape relevant) and 100.000 tangential (mesh relevant) variables.

12.5 Optimal design of a cylindrical roof

The shape of a cylindrical roof (length = 2.000 mm) subjected to self-weight is optimized for stiffness, Figs. 22 and 23. Mass is indirectly controlled by setting the thickness constant. A very fine mesh is used for analysis and design with approximately 100.000 lateral and 200.000 tangential design degrees of freedom. The well-known shape of the inverted hanging chain is found as global optimum for any choice of filter radius if the Poisson effect is neglected (ν = 0). For ν ≠ 0 several local minima are found depending on the choice of filter radius r. Note the speed of convergence as well as the marginal differences of design objective values for the various different solutions, Fig. 23.

12.6 Staggered optimization of a fiber reinforced composite shell

The shape of a bend cantilever is determined, assuming a composite shell with two layers of fiber reinforcement, Figs. 24 and 25. The filter technique has been applied to regularize the fiber optimization as well. The objective is maximum stiffness; altogether there are about 80,000 shape and fiber angle variables.

12.7 CFD applications

The geometry of the VW-Passat side mirrors has been improved in several different scenarios, where the whole mirror or parts have been allowed to be modified, Fig. 26. In all cases the goal was to reduce the drag of the complete car by shape modifications of the mirrors only. Therefore, a complete model of the car had to be simulated in an appropriate numerical wind tunnel using OpenFoam, the adjoint solver of ICON, and CARAT++ for optimization. Regarding optimization, both mirrors had been treated individually, i.e., no symmetry conditions had been applied. That gives 32,000 design parameters for each mirror, i.e., 64,000 in total. Most important was to maintain the mirror feature lines throughout the optimization as to preserve the specific characteristics of a Passat mirror, Fig. 27. The total drag of the car could be reduced up to 0.6 %. The geometry has been provided by Volkswagen and the adjoint solvers by ICON who had been partners of the EU-project FLOWHEAD (refer to the acknowledgment). In further applications, which are not displayed here, the complete car body had been optimized which comes together with up to 3.5 Mio shape parameters.

Shape optimization of the side mirrors for drag reduction of the complete car referring to the center column of Fig. 26. Longitudinal section of the mirror body. The shape is morphed whilst the displayed feature lines are maintained. The shape of the mirror itself (left straight line) has been constrained to guarantee the usability. Therefore, the optimizer was prevented to simply remove the mirrors to reduce drag

13 Conclusion

Discrete design filtering is proven to be a variant of a most flexible design parameter approximation technology of infinite dimension. Applied to shape sensitivities, filtering is shown to be a mathematically consistent method which resembles the chain rule of differentiation. Choosing a filter radius independent of the mesh discretization allows to most easily and flexibly formulating and solving the largest possible optimal design problems.

References

Arnout S, Firl M, Bletzinger KU (2012) Parameter free shape and thickness optimization considering stress response. Struct Multidiscip Optim 45:801–814

Azegami H, Takeuchi K (2006) A smoothing method for shape optimization: traction method using the robin condition. Int J Comput Methods 3(1):21–34

Bletzinger KU, Kimmich S, Ramm E (1991) Efficient modeling in shape optimal design. Comput Syst Eng 2:483–495

Bletzinger KU, Wüchner R, Daoud F, Camprubí N (2005) Computational methods for form finding and optimization of shells and membranes. Comput Methods Appl Mech Eng 194:3438–3452

Bletzinger KU, Firl M, Daoud F (2008) Approximation of derivatives in semi-analytical structural optimization. Comput Struct 86:1404–1416

Bletzinger KU, Firl M, Linhard J, Wüchner R (2010) Optimal shapes of mechanically motivated surfaces. Comput Methods Appl Mech Eng 199:324–333

Bourdin B (2001) Filters in topology optimization. Int J Numer Methods Eng 50(9):2143–2158

Braibant V, Fleury C (1984) Shape optimal design using B-splines. Comput Methods Appl Mech Eng 44:247–267

Braibant V, Fleury C (1986) Shape optimal design: a CAD-oriented formulation. Engineering with Computers 1(4):193–204

Bruns TE, Tortorelli DA (2001) Topology optimization of nonlinear elastic structures and compliant mechanisms. Comput Methods Appl Mech Eng 190(26–27):3443–3459

Catmull E, Clark J (1978) Recursively generated B-spline surfaces on arbitrary topological meshes. Comput Aided Des 10:350–355

Cho S, Ha SH (2009) Isogeometric shape design optimization: exact geometry and enhanced sensitivity. Struct Multidiscip Optim 38:53–70

Clausen P, Pedersen C (2006) Non-parametric large scale structural optimization for industrial applications. In: Proceedings ECCM-2006. Lisbon

de Boer A, van der Schoot M, Bijl H (2007) Mesh deformation based on radial basis function interpolation. Comput Struct 85:784–795

Firl M, Bletzinger KU (2012) Shape optimization of thin walled structures governed by geometrically nonlinear mechanics. Comput Methods Appl Mech Eng 237:107–117

Firl M, Wüchner R, Bletzinger KU (2013) Regularization of shape optimization problems using FE-based parameterization. Struct Multidiscip Optim 47:507–521

Ha SH, Choi KK, Cho S (2010) Numerical method for shape optimization using T-spline based isogeometric method. Struct Multidiscip Optim 42:417–428

Hojjat M, Stavropoulou E, Bletzinger KU (2014) The vertex morphing method for node-based shape optimization. Comput Methods Appl Mech Eng 268:494–513

Imam MH (1982) Three dimensional shape optimization. Int J Numer Methods Eng 18(1982):661–677

Jameson A (1995) Optimum aerodynamic design using control theory. Comput Fluid Dyn Rev 3:495–528

Jameson A (2003) Aerodynamic shape optimization using the adjoint method. Lecture at the Von Karman Institute. Brussels

Jameson A, Vassberg JC (2000) Studies of alternative numerical optimization methods applied to the brachistochrone problem. Comput Fluid Dyn 9(3):281–296

Kiendl J (2011) Isogeometric analysis and shape optimal design of shell sructures. PhD thesis, Technische Universtität München

Kiendl J, Schmidt R, Wüchner R, Bletzinger KU (2013) submitted to Comput Methods Appl Mech Eng

Kim NH, Choi KK, Botkin ME (2002) Numerical method for shape optimization using meshfree method. Struct Multidiscip Optim 24:418–429

Kobbelt L (1996) A variational approach to subdivision. Comput Aid Geom Des 13:743–761

Le C, Bruns T, Tortorelli D (2011) A gradient-based, parameter-free approach to shape optimization. Comput Methods Appl Mech Eng 200(9):985–996

Litke N, Levin A, Schröder P (2001) Trimming for subdivision surfaces. Comput Aid Geom Des 18:463–481

Nagy AP (2011) Isogemetric design optimisation. PhD thesis, Technische Universiteit Delft, Delft

Mohammadi B, Pironneau O (2004) Shape optimization in fluid mechanics. Annu Rev Fluid Mech 36:255–279

Mohammadi B, Pironneau O (2009) Applied shape optimization for fluids. Oxford University Press, Oxford

Pironneau O (1984) Optimal shape design for elliptic systems. Springer series in computational physics. Springer, Berlin

Seo YD, Kim HJ, Youn SK (2010) Shape optimization and its extension to topological design based on isogeometric analysis. Int J Solids Struct 47:1618–1640

Scherer M, Denzer R, Steinmann P (2010) A fictitious energy approach for shape optimization. Int J Numer Methods Eng 82:269–302

Sigmund O (1994) Design of material structures using topology optimization. Ph.D. Thesis, Technical University of Denmark

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidiscip Optim 33:401–424

Sigmund O, Maute K (2012) Sensitivity filtering from a continuum mechanics perspective. Struct Multidiscip Optim 46:471–475

Schmidt R (2013) Trimming, mapping and optimization in isogeometric analysis of shell structures. PhD thesis, Technische Universtität München.

Sokolowski J, Zolésio J-P (1992) Introduction to shape optimization: shape sensitivity analysis. Springer, Berlin

Stavropoulou E, Hojjat M, Bletzinger KU (2013) In-plane mesh regularization for parameter-free shape optimization problems. Submitted to Comput Methods Appl Mech Eng

Stück A, Rung T (2011) Adjoint RANS with filtered shape derivatives for hydrodynamic optimisation. Comput Fluids 47(1):22–32

Wall WA, Frenzel MA, Cyron C (2008) Isogeometric structural shape optimization. Comput Methods Appl Mech Eng 197:2976–2988

Zorin D, Schröder P, Sweldens W (1996) Interpolating subdivision for meshes with arbitrary topology. In: Computer Graphics (SIGGRAPH ’96 Proceedings). pp 189–192

Acknowledgments

The author gratefully acknowledges the support of the Deutsche Forschungsgemeinschaft (DFG) under various grants as well as the support of the European Commission under THEME SST.2007-RTD-1: Competitive product development, as part of the project FLOWHEAD (Fluid Optimisation Workflows for Highly Effective Automotive Development Processes), from February 2009 to May 2012. Also, the support of Dr. Othmer (Volkswagen), Dr. Harzheim (Opel) as well as ICON shall be gratefully acknowledged,

Author information

Authors and Affiliations

Corresponding author

Additional information

Original version submitted to Structural and Multidisciplinary Optimization, May 10, 2013, Revision 2, submitted, November 10, 2013

Rights and permissions

About this article

Cite this article

Bletzinger, KU. A consistent frame for sensitivity filtering and the vertex assigned morphing of optimal shape. Struct Multidisc Optim 49, 873–895 (2014). https://doi.org/10.1007/s00158-013-1031-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-013-1031-5