Abstract

Although deterministic optimization has been successfully applied, to a considerable extent, in various crashworthiness designs to improve passenger safety and reduce vehicle cost, the resulting design could become less meaningful or even unacceptable once perturbations of the design variables and noise in the system parameters are considered. To overcome this drawback, we present a multiobjective robust optimization methodology that addresses the effects of parametric uncertainties on multiple crashworthiness criteria, in which several different sigma criteria are adopted to measure the variations. As an example, a full frontal impact of a vehicle is considered, with increased energy absorption and reduced structural weight as the design objectives and peak deceleration as the constraint. A multiobjective particle swarm optimization is applied to generate robust Pareto solutions, which no longer requires formulating a single cost function by using weighting factors or other means. The example shows a clear compromise between the deterministic and robust Pareto designs. The results demonstrate the advantages of multiobjective robust optimization: not only do the energy absorption increase and the structural weight decrease relative to a baseline design, but the robustness of the optimum also improves significantly.

1 Introduction

Crashworthiness is of primary interest in the automotive industry to ensure the structural integrity of the vehicle and, more importantly, the safety of occupants in the event of a crash (Kurtaran et al. 2002). Over the past two decades, finite element analysis (FEA) based optimization has been developed as an effective tool to seek the best possible crashworthiness design for a full vehicle or its components (Avalle et al. 2002; Duddeck 2008; Fang et al. 2005; Forsberg and Nilsson 2006; Gu et al. 2001; Hou et al. 2008; Jansson et al. 2003; Liao et al. 2008a, b; Marklund and Nilsson 2001; Redhe et al. 2002). Since impact modeling involves highly nonlinear mechanics, sensitivity analysis may be rather challenging. Thus, surrogate techniques such as the response surface method (RSM) and its variations (Kurtaran et al. 2002; Liao et al. 2008a, b) have been extensively adopted in this respect (Yang et al. 2005a).

To date, substantial studies on crashworthiness design have been focused on deterministic optimization, in which all the design variables and parameters involved are assumed to be certain and there is no variability in the simulation outputs. However, most real-life engineering problems involve some degree of uncertainty in loading conditions, material properties, geometries, manufacturing precision and actual usage, etc. It must be noted that usually a deterministic optimization tends to push a design toward one or more constraints until the constraints become active, thereby leaving very little or no room for tolerances in modeling, uncertainties, and/or manufacturing imperfections. In addition, optimization algorithms search for a “peak” solution, where even subtle perturbation in design variables and parameters can result in substantial loss of performance, making an “optimum” less meaningful or even misleading. Consequently, deterministic optimal design obtained without consideration of those uncertainties could lead to unreliable design.

To take various uncertainties into account, a number of non-deterministic methods have been developed over the last two decades; these can be classified into probability-based design optimization (PBDO) and non-probability-based design optimization (NPBDO). Reliability-based design and robust design are two typical PBDO methods (Zang et al. 2005). The former estimates the probability distribution of the system from the known probability distributions of the stochastic parameters, and is predominantly used for risk analysis by computing the failure probability of a system (Doltsinis and Kang 2004; Doltsinis et al. 2005; Siddall 1984; Zang et al. 2005). The latter improves the quality of a product by minimizing the effect of variations on system responses. Unlike reliability-based design, robust design optimizes the mean performance and minimizes its variation while satisfying given probabilistic constraints (Zang et al. 2005).

Some work has been published on PBDO for vehicle crashworthiness optimization. For example, Yang, Gu and their co-workers (Gu et al. 2001; Koch et al. 2004; Yang et al. 2000; Youn et al. 2004) proposed reliability-based design and six sigma-based robust optimization for vehicle side impact. Youn et al. (2004) developed a reliability-based optimization by integrating the hybrid mean value approach with RSM to improve the side impact crashworthiness of a vehicle.

NPBDO is mainly based on the Taguchi philosophy, which incorporates both the mean and the variability into the design so that the mean and variation of performance can be optimized (Kovach et al. 2008). Often, a dual response surface approach is adopted to model these two quantities in an optimization framework (Sun et al. 2010a; Vining and Myers 1990; Youn and Choi 2004) as:

\[ \min_{\mathbf{x}}\ \tilde{y}_\sigma(\mathbf{x}) \quad \text{s.t.} \quad \tilde{y}_\mu(\mathbf{x}) = T \tag{1} \]

where \(\tilde{y}_\mu\) denotes the mean response, \(\tilde{y}_\sigma\) is its standard deviation (SD), and T is the target value imposed on the mean. To relax the zero-bias requirement imposed by the mean constraint, Lin and Tu (1995) proposed a mean square error (MSE) approach:

\[ \min_{\mathbf{x}}\ \mathrm{MSE}(\mathbf{x}) = \big(\tilde{y}_\mu(\mathbf{x}) - T\big)^2 + \tilde{y}_\sigma^2(\mathbf{x}) \tag{2} \]

which allows a certain bias; the resulting process variance is less than or, at most, equal to the variance obtained from Eq. 1, thereby achieving a better (or at least equal) result.
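To make the difference between the zero-bias formulation (Eq. 1) and the MSE criterion of Lin and Tu (1995) concrete, the following minimal Python sketch uses two hypothetical one-dimensional surrogates, \(\tilde{y}_\mu(x) = 10 + 2x\) and \(\tilde{y}_\sigma(x) = 0.5 + (x-1)^2\), with target T = 11. These functions and numbers are illustrative assumptions, not models from this study, and a plain grid search stands in for a proper optimizer.

```python
# Hypothetical 1-D surrogates used only for illustration; they are not
# the crashworthiness models of this paper.
def y_mu(x: float) -> float:
    """Mean-response surrogate (hypothetical)."""
    return 10.0 + 2.0 * x


def y_sigma(x: float) -> float:
    """Standard-deviation surrogate (hypothetical)."""
    return 0.5 + (x - 1.0) ** 2


def mse(x: float, target: float) -> float:
    """Lin-Tu mean square error: squared bias plus variance."""
    return (y_mu(x) - target) ** 2 + y_sigma(x) ** 2


T = 11.0                                # target for the mean response
grid = [i / 1000 for i in range(2001)]  # candidate designs x in [0, 2]

# Zero-bias dual response formulation: minimize sigma subject to mean == T.
feasible = [x for x in grid if abs(y_mu(x) - T) < 1e-9]
x_dual = min(feasible, key=y_sigma)

# MSE formulation: a small bias is accepted if it buys a smaller sigma.
x_mse = min(grid, key=lambda x: mse(x, T))

for name, x in (("dual response", x_dual), ("MSE", x_mse)):
    print(f"{name}: x={x:.3f}, bias={y_mu(x) - T:+.3f}, sigma={y_sigma(x):.4f}")
```

With these assumed surrogates, the equality constraint pins the design at x = 0.5 (σ = 0.75), whereas the MSE criterion accepts a positive bias of roughly 0.24 in exchange for a smaller standard deviation, which is the behaviour described above.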

Most existing robust design studies have been concerned with single-objective optimization, whereas real-life engineering problems are typically characterized by a number of quality and/or performance indices, some of which may conflict with each other. Some attempts have been made to address such multiobjective robust optimization problems. For instance, Koksoy and co-workers (Koksoy 2006; Koksoy and Yalcinoz 2006) adopted the mean square error criterion for optimizing several different objectives. Gunawan and Azarm (2005) presented a multiobjective robust method that measures multiple sensitivities to design variation and then uses this measure to obtain a set of robust Pareto solutions. Kovach et al. (2008) proposed a physical programming model for experiment-based multiobjective robust design by linking RSM to a multi-response robust design problem. Yang et al. (Yang and Chou 2005; Yang et al. 2005b) solved a multi-response robust problem by first constructing proper dual response surfaces, then transforming the dual-response problem into a standard nonlinear programming formulation, and finally obtaining a robust design with a so-called scatter search method. Kapur and Cho (1996) also developed a multi-response technique by minimizing the deviation from the target and maximizing the robustness to noise. Shimoyama et al. (2005) developed a multiobjective robust design approach and applied it to a welded beam design problem. Kim and Lin (1998) adopted the dual RS model in a fuzzy optimization formulation with the goal of maximizing the lowest degree of satisfaction.

However, despite their practical value, few studies have been reported on multiobjective robust crashworthiness design of vehicles. This paper aims to tackle this problem by exploring how uncertainties, different sigma criteria and different emphases on the mean and deviation components affect the Pareto optimum.

2 Surrogate model for robust design

Conventional RSM focuses on the mean value of a response Y without considering its variance. However, a mean response model alone may not be adequate, and the resulting optimization could even become meaningless (Yeniay et al. 2006). The dual response surface method (DRSM) instead constructs two models, one for the mean (μ) and another for the standard deviation (σ), as:

\[ y_\mu(\mathbf{x}) = \tilde{y}_\mu(\mathbf{x}) + \varepsilon_\mu = \sum_{v=1}^{V} b_v\,\varphi_v(\mathbf{x}) + \varepsilon_\mu, \qquad y_\sigma(\mathbf{x}) = \tilde{y}_\sigma(\mathbf{x}) + \varepsilon_\sigma = \sum_{w=1}^{W} c_w\,\psi_w(\mathbf{x}) + \varepsilon_\sigma \tag{3} \]

where \(y_\mu\) and \(y_\sigma\) denote, respectively, the mean and standard deviation of the true responses from FEA; \(\tilde{y}_\mu\) and \(\tilde{y}_\sigma\) are the RS models for the mean and standard deviation; \(\varepsilon_\mu\) and \(\varepsilon_\sigma\) are the modeling errors; \(\mathbf{x} = [x_1, x_2, \ldots, x_n]^{\rm T}\) denotes the design variables; \(b_v\ (v = 1, \ldots, V)\) is the \(v\)th unknown coefficient corresponding to the \(v\)th basis function \(\varphi_v(\mathbf{x})\); and \(c_w\ (w = 1, \ldots, W)\) is the \(w\)th unknown coefficient corresponding to the \(w\)th basis function \(\psi_w(\mathbf{x})\). In theory, the basis functions can take any form; in most cases, polynomial functions have proven fairly effective for both the mean and standard deviation responses. For example, a quadratic polynomial dual RS model can be given as

\[ \tilde{y}_\mu(\mathbf{x}) = b_0 + \sum_{i=1}^{n} b_i x_i + \sum_{i=1}^{n} b_{ii} x_i^2 + \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} b_{ij} x_i x_j, \qquad \tilde{y}_\sigma(\mathbf{x}) = c_0 + \sum_{i=1}^{n} c_i x_i + \sum_{i=1}^{n} c_{ii} x_i^2 + \sum_{i=1}^{n-1}\sum_{j=i+1}^{n} c_{ij} x_i x_j \tag{4} \]
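where the unknown coefficients \(\mathbf{b}\) and \(\mathbf{c}\) can be determined by the least squares method. Specifically, if the FEA results \(\mathbf{y}_\mu = \big[y_\mu^{(1)}, y_\mu^{(2)}, \ldots, y_\mu^{(m)}\big]^{\rm T}\) and \(\mathbf{y}_\sigma = \big[y_\sigma^{(1)}, y_\sigma^{(2)}, \ldots, y_\sigma^{(m)}\big]^{\rm T}\) are obtained at m selected sample design points (m ≥ V and m ≥ W), the total squared errors between the analysis and the dual RS model are computed as:

\[ E_\mu(\mathbf{b}) = \sum_{p=1}^{m} \Big( y_\mu^{(p)} - \sum_{v=1}^{V} b_v\,\varphi_v(\mathbf{x}^{(p)}) \Big)^2, \qquad E_\sigma(\mathbf{c}) = \sum_{p=1}^{m} \Big( y_\sigma^{(p)} - \sum_{w=1}^{W} c_w\,\psi_w(\mathbf{x}^{(p)}) \Big)^2 \tag{5} \]

or in matrix form as:

\[ E_\mu(\mathbf{b}) = (\mathbf{y}_\mu - \boldsymbol{\Phi}_\mu \mathbf{b})^{\rm T} (\mathbf{y}_\mu - \boldsymbol{\Phi}_\mu \mathbf{b}), \qquad E_\sigma(\mathbf{c}) = (\mathbf{y}_\sigma - \boldsymbol{\Psi}_\sigma \mathbf{c})^{\rm T} (\mathbf{y}_\sigma - \boldsymbol{\Psi}_\sigma \mathbf{c}) \tag{6} \]

where \(\boldsymbol{\Phi}_\mu\) and \(\boldsymbol{\Psi}_\sigma\) are the matrices of the basis functions evaluated at the selected design points:

\[ \boldsymbol{\Phi}_\mu = \begin{bmatrix} \varphi_1(\mathbf{x}^{(1)}) & \cdots & \varphi_V(\mathbf{x}^{(1)}) \\ \vdots & \ddots & \vdots \\ \varphi_1(\mathbf{x}^{(m)}) & \cdots & \varphi_V(\mathbf{x}^{(m)}) \end{bmatrix}, \qquad \boldsymbol{\Psi}_\sigma = \begin{bmatrix} \psi_1(\mathbf{x}^{(1)}) & \cdots & \psi_W(\mathbf{x}^{(1)}) \\ \vdots & \ddots & \vdots \\ \psi_1(\mathbf{x}^{(m)}) & \cdots & \psi_W(\mathbf{x}^{(m)}) \end{bmatrix} \]

By minimizing \(E_\mu(\mathbf{b})\) and \(E_\sigma(\mathbf{c})\) in Eq. 6, i.e. by setting

\[ \frac{\partial E_\mu}{\partial \mathbf{b}} = -2\,\boldsymbol{\Phi}_\mu^{\rm T}(\mathbf{y}_\mu - \boldsymbol{\Phi}_\mu \mathbf{b}) = \mathbf{0}, \qquad \frac{\partial E_\sigma}{\partial \mathbf{c}} = -2\,\boldsymbol{\Psi}_\sigma^{\rm T}(\mathbf{y}_\sigma - \boldsymbol{\Psi}_\sigma \mathbf{c}) = \mathbf{0} \]

the coefficient vectors of the dual RS model are obtained as

\[ \mathbf{b} = (\boldsymbol{\Phi}_\mu^{\rm T}\boldsymbol{\Phi}_\mu)^{-1}\boldsymbol{\Phi}_\mu^{\rm T}\mathbf{y}_\mu, \qquad \mathbf{c} = (\boldsymbol{\Psi}_\sigma^{\rm T}\boldsymbol{\Psi}_\sigma)^{-1}\boldsymbol{\Psi}_\sigma^{\rm T}\mathbf{y}_\sigma \]

The flowchart for constructing the dual response surface is presented in Fig. 1.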
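The least squares fit of the dual RS model, i.e. solving the normal equations \(\boldsymbol{\Phi}_\mu^{\rm T}\boldsymbol{\Phi}_\mu \mathbf{b} = \boldsymbol{\Phi}_\mu^{\rm T}\mathbf{y}_\mu\) and likewise for \(\mathbf{c}\), can be sketched in plain Python without external libraries. The sketch assumes a full quadratic basis for the mean model and, to show that φ and ψ need not coincide, a linear basis for the standard deviation model. The data are synthetic values generated from known polynomials on a hypothetical 3 × 3 grid of two thicknesses, so the fitted coefficients should reproduce them; in the study itself, \(y_\mu^{(p)}\) and \(y_\sigma^{(p)}\) would be the mean and standard deviation of the FEA responses at each sample point. All helper names are hypothetical.

```python
from typing import Callable, List, Sequence

Basis = List[Callable[[Sequence[float]], float]]


def quadratic_basis(n: int) -> Basis:
    """Full quadratic basis: 1, x_i, x_i^2 and x_i*x_j (i < j)."""
    basis: Basis = [lambda x: 1.0]
    basis += [lambda x, i=i: x[i] for i in range(n)]
    basis += [lambda x, i=i: x[i] ** 2 for i in range(n)]
    basis += [lambda x, i=i, j=j: x[i] * x[j] for i in range(n) for j in range(i + 1, n)]
    return basis


def linear_basis(n: int) -> Basis:
    """Linear basis: 1, x_i."""
    return [lambda x: 1.0] + [lambda x, i=i: x[i] for i in range(n)]


def solve(A: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (M[i][n] - sum(M[i][j] * z[j] for j in range(i + 1, n))) / M[i][i]
    return z


def fit(basis: Basis, X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least squares coefficients from the normal equations (Phi^T Phi) b = Phi^T y."""
    Phi = [[phi(x) for phi in basis] for x in X]  # m x V matrix of basis values
    V = len(basis)
    PtP = [[sum(row[a] * row[b] for row in Phi) for b in range(V)] for a in range(V)]
    Pty = [sum(row[a] * yi for row, yi in zip(Phi, y)) for a in range(V)]
    return solve(PtP, Pty)


# Hypothetical data on a 3 x 3 grid of two thicknesses (mm).
X = [[a, b] for a in (1.0, 1.5, 2.0) for b in (1.0, 1.5, 2.0)]
y_mu = [1 + 2 * a - b + 0.5 * a * a + 0.3 * b * b - 0.4 * a * b for a, b in X]
y_sd = [0.2 + 0.05 * a + 0.02 * b for a, b in X]

b_coef = fit(quadratic_basis(2), X, y_mu)  # order: 1, x1, x2, x1^2, x2^2, x1*x2
c_coef = fit(linear_basis(2), X, y_sd)     # order: 1, x1, x2
print(b_coef)
print(c_coef)
```

Solving the normal equations directly is adequate for small, well-conditioned problems like this one; for larger or ill-conditioned designs, a QR-based least squares solver would be the safer choice.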

The choice of sample points can substantially influence the accuracy of the dual RS model. In this paper, the optimal Latin hypercube sampling (OLHS) method is adopted to explore the design space because of its proven efficiency (Sun et al. 2010a, b, c). Once the dual RS model has been constructed, it is critical to validate its fit. Errors in the dual RS model can arise from the selection of basis functions, the selection of sampling points and the least squares fitting itself (Hou et al. 2008). To evaluate the predictive ability of the dual RS model, a simple relative error (RE) can be evaluated as

\[ \mathrm{RE} = \frac{\tilde{y}(\mathbf{x}) - y(\mathbf{x})}{y(\mathbf{x})} \]

where \(\tilde{y}(\mathbf{x})\) represents the mean or standard deviation response predicted by the dual RS model and y(x) denotes the corresponding FEA result.

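Alternatively, statistical techniques such as analysis of variance can be used to check the fit of a dual RS model and to identify the main effects of the design variables on the responses. The major statistical measures for evaluating the fit of a dual RS model include

\[ R^2 = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}}, \qquad R_{adj}^2 = 1 - (1 - R^2)\,\frac{m-1}{m-p-1}, \qquad \mathrm{RMSE} = \sqrt{\frac{\mathrm{SSE}}{m-p-1}} \]

where m is the number of sample points and p is the number of non-constant terms in the mean or standard deviation response of the dual RS model. SSE and SST are calculated, respectively, as

\[ \mathrm{SSE} = \sum_{i=1}^{m} (y_i - \tilde{y}_i)^2, \qquad \mathrm{SST} = \sum_{i=1}^{m} (y_i - \bar{y})^2 \]

where \(y_i\) and \(\tilde{y}_i\) are the FEA and RS values at the ith sample point, and \(\bar{y}\) is the mean value of the \(y_i\).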

Note that these measures are not completely independent of each other (Fang et al. 2005; Wang and Shan 2007). Generally speaking, the larger the values of R² and \(R_{\it adj}^2\) and the smaller the value of RMSE, the better the fit. When the number of design variables is large, it is more appropriate to look at \(R_{\it adj}^2\), because R² keeps increasing as terms are added, whereas \(R_{\it adj}^2\) actually decreases if unnecessary terms are added to the model.
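The accuracy measures above can be computed in a few lines of Python. This sketch assumes the common definitions R² = 1 − SSE/SST, \(R_{adj}^2 = 1 - (1 - R^2)(m-1)/(m-p-1)\), RMSE = \(\sqrt{\mathrm{SSE}/(m-p-1)}\) (m sample points, p non-constant terms) and RE = (ỹ − y)/y; some texts normalize RMSE differently. The numbers in the usage lines are made up.

```python
import math
from typing import Dict, Sequence


def fit_metrics(y: Sequence[float], y_hat: Sequence[float], p: int) -> Dict[str, float]:
    """R^2, adjusted R^2 and RMSE of a surrogate at m points; p = number of non-constant terms."""
    m = len(y)
    y_bar = sum(y) / m
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    r2 = 1.0 - sse / sst
    r2_adj = 1.0 - (1.0 - r2) * (m - 1) / (m - p - 1)
    rmse = math.sqrt(sse / (m - p - 1))
    return {"R2": r2, "R2_adj": r2_adj, "RMSE": rmse}


def max_relative_error(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Largest |RE| over validation points, with RE = (y_hat - y) / y."""
    return max(abs((fi - yi) / yi) for yi, fi in zip(y, y_hat))


# Made-up FEA values and surrogate predictions.
metrics = fit_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.1, 3.9], p=1)
print(metrics)
print(max_relative_error([100.0, 200.0], [102.0, 196.0]))
```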

3 Multiobjective robust optimization method

Robust optimization aims at developing a solution that is insensitive to variations of the nominal design and remains feasible within an uncertainty range around it. As shown in Fig. 2, the horizontal axis represents the uncertain parameters, including design variables (control factors) and noise factors (uncontrollable factors), while the vertical axis represents the objective function f(x) to be minimized. Of the three marked solutions 1, 2 and 3, Solution 3 is considered robust, because a variation of ±Δx in the design variables does not change the objective function much, and the solution stays within the design constraint when the design variable is perturbed. Although Solution 2 also stays within the design space when the design variable varies by ±Δx, the perturbation causes a larger change in the objective function. Solution 1 is highly sensitive to parameter perturbation and usually cannot be recommended in practice, even though it has the best mean value of the three solutions.
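The sensitivity argument behind Fig. 2 can be reproduced numerically. The minimal sketch below assumes a hypothetical one-dimensional objective with a narrow, deep minimum near x = 1 (playing the role of Solution 1) and a broad, shallow minimum near x = 3 (like Solution 3), and measures how much f varies over a perturbation of ±Δx = 0.1 around each.

```python
import math


def f(x: float) -> float:
    """Hypothetical objective: narrow deep minimum near x = 1, broad shallow one near x = 3."""
    return -2.0 * math.exp(-((x - 1.0) / 0.05) ** 2) - math.exp(-((x - 3.0) / 0.8) ** 2)


def spread(x0: float, dx: float, n: int = 201) -> float:
    """Range (max - min) of f over the perturbation interval [x0 - dx, x0 + dx]."""
    values = [f(x0 - dx + 2.0 * dx * i / (n - 1)) for i in range(n)]
    return max(values) - min(values)


for label, x0 in (("sharp minimum (like Solution 1)", 1.0),
                  ("broad minimum (like Solution 3)", 3.0)):
    print(f"{label}: f = {f(x0):.3f}, spread over +/-0.1 = {spread(x0, 0.1):.3f}")
```

The sharp minimum has the better nominal value (about −2 versus −1), but f changes by nearly 2 over the perturbation interval, against less than 0.02 at the broad minimum, which is why a design like Solution 3 is preferred.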

Although determining a robust solution for a single objective is important, developing multiobjective robust optimization for sophisticated engineering problems is more significant from a practical point of view (Koksoy 2008; Koksoy and Yalcinoz 2006; Yang et al. 2005b). To this end, this paper presents a multiobjective robust optimization procedure that integrates the dual response surface model, sigma criteria and multiobjective particle swarm optimization.

3.1 Sigma criteria for robustness

In order to quantify the robustness of a design, different sigma criteria are introduced to measure performance variability. The term “sigma” refers to the standard deviation, σ, which measures the dispersion of a set of data around the mean value, μ. This property can be used to describe the known randomness of the variables and parameters that influence system responses, and thereby to measure the variability of performance. Performance variations can be characterized in terms of different numbers of standard deviations from the mean performance (Koch et al. 2004). Traditionally, many companies adopt ±3σ as the variation criterion, under which 99.73% of the variation (for a normally distributed response) is within the specification limits. Recently, some industries have been advocating zero product defects, although this could cost substantially more from design and production perspectives. In this paper, we use ±6σ as the performance variation criterion to ensure a higher level of acceptable quality. Nevertheless, other sigma levels (e.g. ±3σ) are also explored herein to investigate their effects on the multiobjective robust design.
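Assuming the response is normally distributed and centred (that is, without the 1.5σ long-term mean shift that industrial Six Sigma conventions often add), the fraction of outcomes inside ±ησ is erf(η/√2). The short sketch below evaluates this for the two sigma levels used in this paper.

```python
import math


def coverage(eta: float) -> float:
    """Probability that a normally distributed quantity lies within mean +/- eta*sigma."""
    return math.erf(eta / math.sqrt(2.0))


def outside_ppm(eta: float) -> float:
    """Expected parts per million outside +/- eta*sigma (no 1.5-sigma mean shift)."""
    # erfc avoids the cancellation error of computing 1 - erf near 1
    return math.erfc(eta / math.sqrt(2.0)) * 1e6


for eta in (3, 6):
    print(f"+/-{eta} sigma: inside = {coverage(eta):.9f}, outside = {outside_ppm(eta):.4g} ppm")
```

For η = 3 this gives the 99.73% quoted above (about 2,700 parts per million outside the limits), while η = 6 leaves only about 0.002 ppm outside.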

3.2 Multiobjective robust optimization mathematical model

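A general multiobjective optimization problem can be formulated as:

\[ \begin{aligned} \min_{\mathbf{x}}\ & \mathbf{F}(\mathbf{x}) = [f_1(\mathbf{x}), f_2(\mathbf{x}), \ldots, f_K(\mathbf{x})]^{\rm T} \\ \text{s.t.}\ & g_j(\mathbf{x}) \le 0, \quad j = 1, 2, \ldots, J \\ & \mathbf{x}^L \le \mathbf{x} \le \mathbf{x}^U \end{aligned} \]

where \(f_1(\mathbf{x}), f_2(\mathbf{x}), \ldots, f_K(\mathbf{x})\) are the K objective functions, \(g_j(\mathbf{x})\) denotes the jth constraint, J is the number of constraints, and \(\mathbf{x}^L\) and \(\mathbf{x}^U\) denote the lower and upper bounds of the design variables x, respectively. This formulation does not take into account the perturbations induced by parameter uncertainties. For this reason, a corresponding multiobjective robust optimization model can be formulated as: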

where \(Y_{\mu 1}(\mathbf{x}), Y_{\mu 2}(\mathbf{x}), \ldots, Y_{\mu K}(\mathbf{x})\) and \(Y_{\sigma 1}(\mathbf{x}), Y_{\sigma 2}(\mathbf{x}), \ldots, Y_{\sigma K}(\mathbf{x})\) are the mean values and standard deviations of the K objectives, respectively; \(g_{\mu j}(\mathbf{x})\) and \(g_{\sigma j}(\mathbf{x})\) are the mean and standard deviation of the jth constraint; the vectors \(\mathbf{x}_\mu\) and \(\mathbf{x}_\sigma\) are the mean and standard deviation of x, respectively; and η is the design sigma level, e.g. η = 6 denotes a six sigma design.
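A common way to impose an η-sigma requirement, in the spirit of the six sigma formulation of Koch et al. (2004), is to shift each constraint by η standard deviations, \(g_{\mu j}(\mathbf{x}) + \eta\,g_{\sigma j}(\mathbf{x}) \le 0\), and to shrink the variable bounds to \(\mathbf{x}^L + \eta\,\mathbf{x}_\sigma \le \mathbf{x}_\mu \le \mathbf{x}^U - \eta\,\mathbf{x}_\sigma\). Because the robust model itself is not reproduced in this text, treat this form as an assumption. The sketch uses the thickness range (1 to 2 mm), thickness standard deviation (0.01 mm) and 40 g deceleration limit of the vehicle example, together with a hypothetical peak deceleration of 37 ± 0.6 g.

```python
from typing import List, Sequence, Tuple


def robust_bounds(x_lower: Sequence[float], x_upper: Sequence[float],
                  x_sigma: Sequence[float], eta: float) -> List[Tuple[float, float]]:
    """Shrink each range so that x_mu +/- eta * x_sigma stays inside [x_L, x_U]."""
    return [(lo + eta * s, hi - eta * s) for lo, hi, s in zip(x_lower, x_upper, x_sigma)]


def robust_feasible(g_mu: float, g_sigma: float, eta: float) -> bool:
    """Constraint g <= 0 enforced at the eta-sigma level: g_mu + eta * g_sigma <= 0."""
    return g_mu + eta * g_sigma <= 0.0


# Thicknesses t1..t3 in [1, 2] mm with standard deviation 0.01 mm each.
bounds = robust_bounds([1.0] * 3, [2.0] * 3, [0.01] * 3, eta=6)
print(bounds)

# Hypothetical peak deceleration a: mean 37 g, SD 0.6 g; limit 40 g, so g = a - 40.
a_mu, a_sigma, a_max = 37.0, 0.6, 40.0
for eta in (3, 6):
    print(eta, robust_feasible(a_mu - a_max, a_sigma, eta))
```

With η = 6 each thickness range shrinks to about [1.06, 1.94] mm, and the hypothetical deceleration constraint, which holds at the 3σ level (37 + 3 × 0.6 = 38.8 g), fails at the 6σ level (37 + 6 × 0.6 = 40.6 g). This illustrates why a higher sigma level pushes the robust Pareto front away from the deterministic one.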

The objective vector in (18) often includes different types of components: some require maximization, some require minimization, and others seek a specific target. For this reason, three different robust objective functions are formulated as follows:

Case 1—Minimization The design aims to minimize the mean of response and the objective function is expressed as:

where λ is a weighting factor that places emphasis on either the mean or the standard deviation.

Case 2—Maximization The design aims to maximize the mean of response and the objective function is formulated as:

Case 3—Target The design aims to force the mean of response to a specific target and the objective function is given by:

where \(Y_{\mu I}^\ast \) denotes the target for the Ith objective mean.

Multiobjective particle swarm optimization (MOPSO) has drawn attention recently because it exhibits relatively fast convergence and a well-distributed Pareto front compared with other multiobjective optimization algorithms such as NSGA and PAES (Liu et al. 2007; Raquel and Naval 2005). Hence, we adopt MOPSO to solve the multiobjective robust optimization problem here. More details about MOPSO can be found in Raquel and Naval (2005).
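Two building blocks of MOPSO with crowding distance (MOPSO-CD, the variant of Raquel and Naval (2005) used later in this paper) are Pareto dominance, which decides which solutions enter the external archive, and the crowding distance, which, roughly speaking, favours less crowded archive members when leaders are selected and when the archive is pruned. The sketch below shows only these two pieces, not the full algorithm. All objectives are minimized, so a maximized objective such as the energy absorption U is negated; the objective values are hypothetical.

```python
from typing import List, Sequence

Point = Sequence[float]  # objective vector; every objective is minimized


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Point]) -> List[Point]:
    """Keep only points not dominated by any other (a point never dominates itself)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def crowding_distance(front: List[Point]) -> List[float]:
    """Crowding distance of each front member; boundary members get infinity."""
    n, k = len(front), len(front[0])
    dist = [0.0] * n
    for j in range(k):
        order = sorted(range(n), key=lambda i: front[i][j])
        lo, hi = front[order[0]][j], front[order[-1]][j]
        dist[order[0]] = dist[order[-1]] = float("inf")
        if hi == lo:
            continue  # objective is constant on this front
        for r in range(1, n - 1):
            i = order[r]
            dist[i] += (front[order[r + 1]][j] - front[order[r - 1]][j]) / (hi - lo)
    return dist


# Hypothetical candidates as (mass M, -U): mass minimized, energy absorption maximized.
candidates = [(10.0, -50.0), (11.0, -55.0), (10.5, -49.0), (12.0, -60.0), (11.5, -54.0)]
front = non_dominated(candidates)
print(front)
print(crowding_distance(front))
```

Here (10.5, −49) and (11.5, −54) are dominated and drop out; the two extreme members of the remaining front receive an infinite crowding distance, so they are always retained.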

4 Demonstrative example for vehicle crashworthiness design

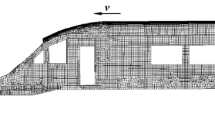

We used a full-scale FE model of a National Highway Traffic Safety Administration (NHTSA) vehicle to demonstrate the multiobjective robust optimization algorithm. The FE model of the vehicle was obtained from the public domain at www.ncac.gwu.edu/archives/model/index.html; it has a total mass of 1,373 kg. In a full frontal impact, the front part of the car absorbs most of the impact energy, whereas the rear part deforms very little. Therefore, an adaptive mesh was generated as shown in Fig. 3; its accuracy has been verified in the literature (Craig et al. 2005), so it is considered appropriate for the design optimization herein.

4.1 Design objectives and variables

In a crash, most of the impact energy is expected to be absorbed by the vehicle structure to reduce the risk to occupants. However, increasing the energy absorption capacity often leads to an unwanted increase in structural weight. In other words, energy absorption and lightweight design can conflict with each other, and the optimum has to be sought in a Pareto sense. Furthermore, the deceleration history is typically used as an indicator of impact severity, and the peak deceleration should be restricted to a certain level, for instance 40 g (g = 9.81 m/s²), in crashworthiness design. Thus, in this paper the energy absorbed by the car parts and the structural weight of the vehicle are chosen as the objectives, and the peak deceleration as the constraint.

The vehicle front-end structures are important structural components because of their role in energy absorption. Following a variable screening analysis (Craig et al. 2005), we found that the thicknesses of different parts of the frontal frame have a more significant effect on the energy absorption and deceleration of the vehicle (Liao et al. 2008a, b). Thus, the thicknesses \(t_1\), \(t_2\) and \(t_3\) of these parts are selected as design variables, each ranging between 1 and 2 mm (Fig. 4). The material properties, such as Young’s modulus E, density ρ and yield stress \(\sigma_y\), can be affected by the rolling process and are thus chosen as random parameters, which fluctuate within the ranges E = (198, 202) GPa, ρ = (7,700, 7,900) kg/m³ and \(\sigma_y\) = (213, 217) MPa, respectively.

4.2 Multiobjective robust optimization model

OLHS and orthogonal design are integrated to construct the design of experiments (DoE). The noise factors, sampled with an orthogonal design, are arranged in an outer array of 4 sample points, and the control factors, sampled with OLHS, are arranged in an inner array of 16 sample points. Each experiment in the inner array is repeated at the 4 points of the outer array to simulate the variability due to the uncertainties of the three noise factors, so the total number of simulations is 16 × 4 = 64. Although more sample points in the outer array would better populate the space of random variables, each additional outer-array point would add another 16 simulations in our case. Thus, as a compromise between sampling precision and computational cost, we adopted 4 sample points for the outer array herein.
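The crossed (inner × outer) array can be sketched as follows. Two substitutions are assumptions, not the paper's exact design: a plain random Latin hypercube stands in for the optimal Latin hypercube (OLHS), and the outer array is taken to be an L4(2³) orthogonal array with each noise factor set at the ends of its stated range. Each of the 16 inner points is paired with all 4 outer points, and the replicate responses of one inner point are then reduced to a mean and a standard deviation for the dual RS model (the sample standard deviation is assumed here).

```python
import random
from itertools import product
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def latin_hypercube(n_points: int, bounds: Sequence[Tuple[float, float]],
                    seed: int = 0) -> List[List[float]]:
    """Random Latin hypercube: each range is cut into n_points strata and every
    stratum is used exactly once per variable (stand-in for OLHS)."""
    rng = random.Random(seed)
    columns = []
    for lo, hi in bounds:
        strata = list(range(n_points))
        rng.shuffle(strata)
        columns.append([lo + (hi - lo) * (s + rng.random()) / n_points for s in strata])
    return [list(row) for row in zip(*columns)]


# Inner array: thicknesses t1, t2, t3 in [1, 2] mm, 16 points.
inner = latin_hypercube(16, [(1.0, 2.0)] * 3)

# Outer array (assumed): L4(2^3) orthogonal array for E (GPa), rho (kg/m^3), sigma_y (MPa).
L4 = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
levels = [(198.0, 202.0), (7700.0, 7900.0), (213.0, 217.0)]
outer = [[levels[f][lvl] for f, lvl in enumerate(run)] for run in L4]

# Crossed design: every inner point is run at every outer point.
runs = [t + noise for t, noise in product(inner, outer)]
print(len(inner), len(outer), len(runs))  # 16 4 64


def summarize(replicates: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample SD of one inner point's responses over the outer array."""
    return mean(replicates), stdev(replicates)
```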

For the crash simulations, we used LS-DYNA 970 (LS-DYNA 2003) on a personal computer with a 1.7 GHz Pentium 4 processor and 2 GB of RAM. A single simulation of a 100 ms frontal impact takes approximately three hours, so the 64 DoE runs require roughly 192 hours of computation. The arrangement of the crossed design matrix and the FEA parameters are given in Table 1. The mean and standard deviation of each response are summarized in Table 2.

Based on the results in Table 2, the dual RS models of the energy absorption U and the peak deceleration a are constructed as quadratic polynomials. Because the mass M is a linear function of the part thicknesses, the dual RS models of M are formulated as linear functions. The three resulting dual RS models are, respectively:

It is essential to evaluate the accuracy of the surrogate models obtained. In this paper, the R², \(R^{2}_{\rm adj}\) and max(RE) measures are used to evaluate the accuracy of the dual RS models of the objective and constraint functions, where max(RE) is computed at five additional sampling points randomly generated in the design space. The error results are listed in Table 3. It should be noted that, in this case, including the relatively small constant terms in Eqs. 24 and 25 makes the models more accurate. The results show that the dual response models are sufficiently accurate for the design optimization to be carried out properly.

After introducing the sigma criterion (η = 3 and η = 6 are considered herein), the multiobjective robust optimization problem is formulated as:

4.3 Optimization results and discussion

We first used MOPSO-CD to solve the deterministic multiobjective optimization problem, without considering the perturbations of the design variables and parametric noise, with inertia weight w = 0.4, acceleration constants \(C_1 = C_2 = 0.5\), population size a = 100 and external archive size A = 50. The optimal Pareto fronts after 10, 30, 50, 80, 100 and 200 generations are plotted in Fig. 5, which indicates that the front has converged fairly stably by 100 generations; this number is therefore considered adequate.
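For reference, the standard PSO velocity and position update with the settings quoted above (w = 0.4, \(C_1 = C_2 = 0.5\)) is sketched below. In MOPSO-CD the leader is drawn from the external archive rather than being a single global best, and the boundary handling here, simple clamping to the 1 to 2 mm thickness range, is an assumption rather than the published algorithm's rule. The particle values are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def update_particle(x: Sequence[float], v: Sequence[float], pbest: Sequence[float],
                    leader: Sequence[float], bounds: Sequence[Tuple[float, float]],
                    rng: random.Random, w: float = 0.4, c1: float = 0.5,
                    c2: float = 0.5) -> Tuple[List[float], List[float]]:
    """One PSO step: v <- w v + c1 r1 (pbest - x) + c2 r2 (leader - x); x <- x + v."""
    new_x, new_v = [], []
    for xi, vi, pi, gi, (lo, hi) in zip(x, v, pbest, leader, bounds):
        r1, r2 = rng.random(), rng.random()
        vi = w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi)
        new_v.append(vi)
        new_x.append(min(max(xi + vi, lo), hi))  # clamp to the variable range (assumed rule)
    return new_x, new_v


# One step for a hypothetical particle in the (t1, t2, t3) thickness space, in mm.
rng = random.Random(42)
x, v = update_particle(x=[1.5, 1.2, 1.8], v=[0.0, 0.0, 0.0],
                       pbest=[1.6, 1.1, 1.9], leader=[1.7, 1.3, 1.4],
                       bounds=[(1.0, 2.0)] * 3, rng=rng)
print(x, v)
```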

To take the uncertainties into account, the design variables are assumed to be normally distributed, with standard deviations of [0.01, 0.01, 0.01] mm based on typical manufacturing tolerances. Figure 6 gives the Pareto optimal fronts for different sigma levels. Although the shapes of the Pareto fronts remain similar at different sigma levels, their ranges change noticeably compared with the deterministic case. All the Pareto optimal fronts are obtained with the same population size (100) and number of generations (100). Each point represents one Pareto optimal solution, illustrating the trade-off between the mass M and the energy absorption U. The two objective functions strongly compete with each other: the greater the mass, the higher the energy absorption. Consequently, if the decision maker wishes to place more emphasis on the energy absorption of the structure, the mass must be compromised (i.e. become heavier), and vice versa. More importantly, at a higher sigma level, perturbations of the design variables and parametric noise have a lower probability of violating the constraint; that is, the Pareto solutions become more stable. However, the objective functions must then sacrifice more, as Fig. 6 shows. In addition, a greater standard deviation (i.e. a wider range of random parameters) could lead to a bigger gap between different sigma levels (e.g. 3 and 6 sigma).

Figure 7 presents the optimal Pareto fronts for the deterministic multiobjective optimization (λ = 1) and the means of the robust multiobjective optimizations with λ = 0.01 and λ = 0.1, respectively. Note that considering the randomness of the parameters sacrifices the Pareto optimum, i.e. the robust front lies farther from the origin in the Pareto space than its deterministic counterpart. Figure 8 gives the optimal Pareto fronts of the standard deviation in the multiobjective robust optimizations with λ = 0.01 and λ = 0.1. The ranges and shapes of the Pareto optimal fronts change with λ compared with the deterministic case. The smaller the λ (i.e. the less emphasis on the mean objective), the higher the robustness of the objective functions (see Fig. 8, where the deviation is much smaller); however, the mean objective functions become worse (see Fig. 7, where the Pareto front moves farther out). Hence, a compromise must be made between robustness and nominal performance in practice. The optimized Pareto set, which takes into account the perturbations of the design variables and noise parameters, does not violate the constraint, as shown in Fig. 9.

Although the Pareto set can provide designers with a large number of design solutions for decision-making at the early design stage, a decision must eventually be made for the most satisfactory solution (termed the “knee point”) from the Pareto set. Conventionally, the most satisfactory solution is often determined by the weighting method, which aggregates the objectives into a single cost function as a weighted average reflecting their relative importance. However, it can be difficult to assign a proper weight to each objective. In this paper, we present the minimum distance selection method (TMDSM), given mathematically below, which allows us to determine the most satisfactory solution from the Pareto set:

where K is the number of objective components, \(f_{c\tau}\) is the τth objective value of the cth Pareto solution, d = 2, 4, 6, ..., and D is the distance from the knee point to a “utopia point” given by the optimal values of the individual objectives (see Fig. 10), which are normally not attainable in practice when the objectives conflict.
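Because the TMDSM expression itself is not reproduced in this text, the following is only a sketch under stated assumptions: all objectives are minimized (energy absorption is negated), each objective is min-max normalized over the Pareto set, the utopia point is the vector of per-objective minima, and the knee point is the Pareto solution with the smallest \(L_d\) distance to it. The front values are hypothetical.

```python
from typing import List, Sequence


def knee_point(front: List[Sequence[float]], d: int = 2) -> int:
    """Index of the Pareto solution closest (L_d norm) to the utopia point.

    Assumes minimized objectives, min-max normalized to [0, 1] over the front.
    """
    k = len(front[0])
    f_min = [min(p[t] for p in front) for t in range(k)]  # utopia point
    f_max = [max(p[t] for p in front) for t in range(k)]

    def distance(p: Sequence[float]) -> float:
        total = 0.0
        for t in range(k):
            span = f_max[t] - f_min[t]
            z = (p[t] - f_min[t]) / span if span > 0 else 0.0
            total += abs(z) ** d
        return total ** (1.0 / d)

    return min(range(len(front)), key=lambda c: distance(front[c]))


# Hypothetical Pareto front as (mass M, -U).
front = [(10.0, -50.0), (11.0, -58.0), (12.0, -60.0)]
print(knee_point(front), knee_point(front, d=4))
```

For this made-up front, the middle solution is selected for both d = 2 and d = 4, since it is close to the best value in both normalized objectives, whereas each extreme solution is worst in one of them.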

The deterministic and robust knee points are obtained from the respective Pareto sets using TMDSM. The results are summarized in Table 4 and compared with the baseline model. Both the deterministic design and the robust design improve the performance of the vehicle. However, the optimal result of the deterministic multiobjective optimization lies on the boundary of the design space, so its reliability is low. Comparing \(\mathbf{x}_D\) with \(\mathbf{x}_R\), the mean objective performance of \(\mathbf{x}_D\) is better than that of \(\mathbf{x}_R\), but the robustness of \(\mathbf{x}_D\) is worse. The deterministic and robust optimal results are also verified by additional FE simulations. In terms of percentage error, the surrogate predictions at both optima are sufficiently accurate, which demonstrates the effectiveness of the DRSM-based optimization method presented.

5 Conclusions

Whereas previous reports have addressed either multiobjective deterministic optimization or single-objective robust optimization of crashworthiness designs, this paper presented a multiobjective robust optimization for vehicle design using a dual response surface model and sigma criteria, which allows the effects of system uncertainties on different objectives to be taken into account. The adopted multiobjective particle swarm optimization algorithm no longer requires formulating a single cost function in terms of a weighted average or other means of combining multiple objectives. The procedure proved fairly effective for a full-scale vehicle crash model, in which energy absorption and weight were taken as the objectives and the peak deceleration as the constraint. The example demonstrated that the multiobjective particle swarm optimization generates the Pareto points fairly efficiently and evenly. The comparison of the Pareto optima of the deterministic and robust designs clearly indicated that improving robustness comes at the cost of the Pareto optimum of the mean objectives. For this reason, a weight factor can be prescribed to balance the importance of the deterministic and robust components. The more the emphasis on robustness (a higher sigma level), the worse the objective means. The example showed that the energy absorption and weight of the car were improved while, at the same time, the robustness of the design was enhanced.

References

Avalle M, Chiandussi G, Belingardi G (2002) Design optimization by response surface methodology: application to crashworthiness design of vehicle structures. Struct Multidisc Optim 24(4):325–332

Craig KJ, Stander N, Dooge DA, Varadappa S (2005) Automotive crashworthiness design using response surface-based variable screening and optimization. Eng Comput 22(1–2):38–61

Doltsinis I, Kang Z (2004) Robust design of structures using optimization methods. Comput Methods Appl Mech Eng 193(23–26):2221–2237

Doltsinis I, Kang Z, Cheng GD (2005) Robust design of non-linear structures using optimization methods. Comput Methods Appl Mech Eng 194(12–16):1779–1795

Duddeck F (2008) Multidisciplinary optimization of car bodies. Struct Multidisc Optim 35(4):375–389

Fang H, Rais-Rohani M, Liu Z, Horstemeyer MF (2005) A comparative study of metamodeling methods for multiobjective crashworthiness optimization. Comput Struct 83(25–26):2121–2136

Forsberg J, Nilsson L (2006) Evaluation of response surface methodologies used in crashworthiness optimization. Int J Impact Eng 32(5):759–777

Gu L, Yang RJ, Tho CH, Makowski M, Faruque O, Li Y (2001) Optimization and robustness for crashworthiness of side impact. Int J Veh Des 26(4):348–360

Gunawan S, Azarm S (2005) Multi-objective robust optimization using a sensitivity region concept. Struct Multidisc Optim 29(1):50–60

Hou SJ, Li Q, Long SY, Yang XJ, Li W (2008) Multiobjective optimization of multi-cell sections for the crashworthiness design. Int J Impact Eng 35(11):1355–1367

Jansson T, Nilsson L, Redhe M (2003) Using surrogate models and response surfaces in structural optimization—with application to crashworthiness design and sheet metal forming. Struct Multidisc Optim 25(2):129–140

Kapur KC, Cho BR (1996) Economic design of the specification region for multiple quality characteristics. IIE Trans 28(3):237–248

Kim KJ, Lin DKJ (1998) Dual response surface optimization: a fuzzy modeling approach. J Qual Technol 30(1):1–10

Koch PN, Yang RJ, Gu L (2004) Design for six sigma through robust optimization. Struct Multidisc Optim 26(3–4):235–248

Koksoy O (2006) Multiresponse robust design: mean square error (MSE) criterion. Appl Math Comput 175(2):1716–1729

Koksoy O (2008) A nonlinear programming solution to robust multi-response quality problem. Appl Math Comput 196(2):603–612

Koksoy O, Yalcinoz T (2006) Mean square error criteria to multiresponse process optimization by a new genetic algorithm. Appl Math Comput 175(2):1657–1674

Kovach J, Cho BR, Antony J (2008) Development of an experiment-based robust design paradigm for multiple quality characteristics using physical programming. Int J Adv Manuf Technol 35(11–12):1100–1112

Kurtaran H, Eskandarian A, Marzougui D, Bedewi NE (2002) Crashworthiness design optimization using successive response surface approximations. Comput Mech 29(4–5):409–421

Liao XT, Li Q, Yang XJ, Li W, Zhang WG (2008a) A two-stage multi-objective optimization of vehicle crashworthiness under front impact. Int J Crashworthiness 13(3):279–288

Liao XT, Li Q, Yang XJ, Zhang WG, Li W (2008b) Multiobjective optimization for crash safety design of vehicles using stepwise regression model. Struct Multidisc Optim 35(6):561–569

Lin DKJ, Tu WZ (1995) Dual response-surface optimization. J Qual Technol 27(1):34–39

Liu DS, Tan KC, Goh CK, Ho WK (2007) A multiobjective memetic algorithm based on particle swarm optimization. IEEE Trans Syst Man Cybern B Cybern 37(1):42–50

LS-DYNA (2003) Keyword user’s manual, v. 970. Livermore Software Technology Corporation, Livermore

Marklund PO, Nilsson L (2001) Optimization of a car body component subjected to side impact. Struct Multidisc Optim 21(5):383–392

Raquel C, Naval P (2005) An effective use of crowding distance in multiobjective particle swarm optimization. In: Proceedings of the 2005 conference on genetic and evolutionary computation. Washington DC, USA

Redhe M, Forsberg J, Jansson T, Marklund PO, Nilsson L (2002) Using the response surface methodology and the D-optimality criterion in crashworthiness related problems—an analysis of the surface approximation error versus the number of function evaluations. Struct Multidisc Optim 24(3):185–194

Shimoyama K, Oyama A, Fujii K (2005) A new efficient and useful robust optimization approach—design for multi-objective six sigma. In: Proceedings of the 2005 IEEE congress on evolutionary computation. Edinburgh

Siddall JN (1984) A new approach to probability in engineering design and optimization. J Mech Transmissions Automation Des Trans ASME 106(1):5–10

Sun GY, Li GY, Gong ZH, Cui XY, Yang XJ, Li Q (2010a) Multiobjective robust optimization method for drawbead design in sheet metal forming. Mater Des 31(4):1917–1929

Sun GY, Li GY, Hou SJ, Zhou SW, Li W, Li Q (2010b) Crashworthiness design for functionally graded foam-filled thin-walled structures. Mater Sci Eng Struct Mater Prop Microstruct Process 527(7–8):1911–1919

Sun GY, Li GY, Stone M, Li Q (2010c) A two-stage multi-fidelity optimization procedure for honeycomb-type cellular materials. Comput Mater Sci 49(3):500–511

Vining GG, Myers RH (1990) Combining Taguchi and response-surface philosophies—a dual response approach. J Qual Technol 22(1):38–45

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129(4):370–380

Yang RJ, Akkerman A, Anderson DF, Faruque OM, Gu L (2000) Robustness optimization for vehicular crash simulations. Comput Sci Eng 2(6):8–13

Yang RJ, Wang N, Tho CH, Bobineau JP (2005a) Metamodeling development for vehicle frontal impact simulation. J Mech Des 127(5):1014–1020

Yang TH, Chou PH (2005) Solving a multiresponse simulation-optimization problem with discrete variables using a multiple-attribute decision-making method. Math Comput Simul 68(1):9–21

Yang TH, Kuo YY, Chou PH (2005b) Solving a multiresponse simulation problem using a dual-response system and scatter search method. Simul Model Pract Theory 13(4):356–369

Yeniay O, Unal R, Lepsch RA (2006) Using dual response surfaces to reduce variability in launch vehicle design: a case study. Reliab Eng Syst Saf 91(4):407–412

Youn BD, Choi KK (2004) A new response surface methodology for reliability-based design optimization. Comput Struct 82(2–3):241–256

Youn BD, Choi KK, Yang RJ, Gu L (2004) Reliability-based design optimization for crashworthiness of vehicle side impact. Struct Multidisc Optim 26(3–4):272–283

Zang C, Friswell MI, Mottershead JE (2005) A review of robust optimal design and its application in dynamics. Comput Struct 83(4–5):315–326

Acknowledgements

This work was supported by the National 973 Project of China (2010CB328005), the Key Project of the National Science Foundation of China (60635020) and the Program for Changjiang Scholar Innovative Research Team in Hunan University. The first author is also grateful for the support of the China Scholarship Council (CSC).

About this article

Cite this article

Sun, G., Li, G., Zhou, S. et al. Crashworthiness design of vehicle by using multiobjective robust optimization. Struct Multidisc Optim 44, 99–110 (2011). https://doi.org/10.1007/s00158-010-0601-z

DOI: https://doi.org/10.1007/s00158-010-0601-z