Abstract

We demonstrate how Game Theoretic concepts and formalism can be used to capture cryptographic notions of security. In the restricted but indicative case of two-party protocols in the face of malicious fail-stop faults, we first show how the traditional notions of secrecy and correctness of protocols can be captured as properties of Nash equilibria in games for rational players. Next, we concentrate on fairness. Here we demonstrate a Game Theoretic notion and two different cryptographic notions that turn out to all be equivalent. In addition, we provide a simulation-based notion that implies the previous three. All four notions are weaker than existing cryptographic notions of fairness. In particular, we show that they can be met in some natural setting where existing notions of fairness are provably impossible to achieve.

1 Introduction

Both Game Theory and the discipline of cryptographic protocols are dedicated to understanding the intricacies of collaborative interactions among parties with conflicting interests. Furthermore, the focal point of both disciplines is the same and is algorithmic in nature: designing and analyzing algorithms for parties in such collaborative situations. However, the two disciplines developed very different sets of goals and formalisms. Cryptography focuses on designing algorithms that allow those who follow them to interact in a way that guarantees some basic concrete properties, such as secrecy, correctness or fairness, in the face of adversarial, malicious behavior. Game Theory is more open-ended, concerning itself with understanding algorithmic behaviors of “rational” parties with well-defined goals in a given situation, and with designing rules of interaction that will “naturally” lead to behaviors with desirable properties.

Still, in spite of these differences, some very fruitful cross-fertilization between the two disciplines has taken place (see, e.g., [9, 25]). One very natural direction is to use cryptographic techniques to solve traditional Game Theoretic problems. In particular, the works of Dodis et al. [8], Ismalkov et al. [23, 24], Abraham et al. [1] and Halpern and Pass [22] take this path and demonstrate how a multi-party protocol using cryptographic techniques can be used to replace a trusted correlation device or a mediator in mechanism design. Another line of research is to extend the traditional Game Theoretic formalisms to capture, within the context of Game Theory, cryptographic concerns and ideas that take into account the fact that protocol participants are computationally bounded and that computational resources are costly [8, 19, 22].

Yet another line of work is aimed at using Game Theoretic concepts and approach to amend traditional cryptographic goals such as secure and fair computation. A focal point in this direction has been the concept of rational fair exchange of secrets (also known as rational secret sharing) [3, 10, 17, 21, 26–28, 30]. Here the goal is to design a protocol for exchanging secrets in a way that “rational players” will be “interested” in following the protocol, where it is assumed that players are interested in learning the secret inputs of the other players while preventing others from learning their own secrets. In fact, it is assumed that the participants have specific preferences and some quantitative prior knowledge on these preferences of the participants is known to the protocol designer. Furthermore, such prior knowledge turns out to be essential in order to get around basic impossibility results [3, 6].

These ingenious works demonstrate the benefit in having a joint theory of protocols for collaborative but competing parties, but at the same time they underline the basic incompatibility in the two formalisms. For instance, the (primarily Game Theoretic) formalisms used in the works on rational secret sharing do not seem to naturally capture basic cryptographic concepts, such as semantic security of the secrets. Instead, these works opt for more simplistic notions that are not always compatible with traditional cryptographic formalisms. In particular, existing modeling (that is used both by constructions and by impossibility results) treats the secret as an atomic unit and considers only the case where the parties either learnt or did not learn the secret entirely. Unlike traditional cryptographic modeling, the option where partial information about the secret is leaked through the execution is disregarded.

This Work We relate the two formalisms. In particular, we show how Game Theoretic formalism and concepts can be used to capture traditional cryptographic security properties of protocols. We concentrate on the setting of two-party protocols and fail-stop adversaries. While this setting is admittedly limited, it does incorporate the core aspects of secrecy, correctness and fairness in the face of malicious (i.e., not necessarily “rational”) aborts.

In this setting, we first show Game Theoretic notions of secrecy and correctness that are equivalent, respectively, to the standard cryptographic notions of secret and correct evaluation of deterministic functions in the fail-stop setting (see, e.g., [12]). We then turn to capturing fairness. Here the situation turns out to be more intricate. We formulate a natural Game Theoretic notion of fairness and observe that it is strictly weaker than existing cryptographic notions of fair two-party function evaluation. We then formulate new cryptographic notions of fairness that are equivalent to this Game Theoretic notion and a simulation-based notion of fairness that implies the above three. Furthermore, we show that these new notions can indeed be realized in some potentially meaningful settings where traditional cryptographic notions are provably unrealizable.

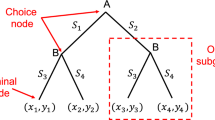

Our Results in More Detail The basic idea proceeds as follows. We translate a given protocol into a set of games, in such a way that the protocol satisfies the cryptographic property in question if and only if a certain pair of strategies (derived from the protocol) are in a (computational) Nash equilibrium in each one of the games. This allows the cryptographic question to be posed (and answered) in Game Theoretic language. More precisely, given a protocol, we consider the (extensive form with incomplete information) game where in each step the relevant party can decide to either continue running the protocol as prescribed, or alternatively abort the execution. We then ask whether the pair of strategies that instruct the players to continue the protocol to completion is in a (computational) Nash equilibrium. Each cryptographic property is then captured by an appropriate set of utilities and input distributions (namely distributions over the types). In particular:

Secrecy A given protocol is secret (as in, e.g., [12]) if and only if the strategy that never aborts the protocol is in a computational Nash equilibrium with respect to the following set of utilities and distributions over the types. For each pair of values in the domain, we define a distribution that chooses an input for one party at random from the pair. The party gets low payoff if the two values lead to the same output value and yet the other party managed to guess which of the two inputs was used. It is stressed that this is the first time where a traditional cryptographic notion of secrecy (in the style of [14]) is captured in Game Theoretic terms. In particular, the works on rational secret sharing do not provide this level of secrecy for the secret (indeed, the solution approaches taken there need the secret to be taken from a large domain).

Correctness A protocol correctly computes a deterministic function if and only if the strategy that never aborts the protocol is in a computational Nash equilibrium with respect to the set of utilities where the parties get high payoff only if they output the correct function value on the given inputs (types), or abort before the protocol starts; in addition, the players get no payoff for incorrect output.

Fairness Here we make the bulk of our contributions. We first recall the basic setting: Two parties interact by exchanging messages in order to evaluate a function f on their inputs. The only allowed deviation from the protocol is abortion, in which event both parties learn that the protocol was aborted. Consequently, a protocol in this model should specify, in addition to the next message to be sent, also a prediction of the output value in case the execution is aborted (although the setting makes sense for any function, it may be helpful to keep in mind the fair exchange function, where the output of each party is the input of the other).

Current notions of fairness for two-party protocols in this model (e.g., [16, 18]) require there to be a point in the computation where both parties move from a state of no knowledge of the output to a full knowledge of it. This is a strong notion, which is impossible to realize in many scenarios. Instead, we would like to investigate more relaxed notions of fairness, which allow parties to gradually learn partial information on their desired outputs—but do so in a way that is “fair.” Indeed, such an approach seems reasonable both from a Game Theoretic point of view (as a zero-sum game) and from a cryptographic point of view via the paradigm of gradual release (see, e.g., [3, 4, 11, 13, 18] and the references within).

A first thing to note about such a notion of fairness is that it is sensitive to the potential prior knowledge that the parties may have on each other’s inputs. Indeed, a “gradual release” protocol that is “fair” without prior knowledge may become “unfair” in a situation where one of the parties has far more knowledge about the possible values of the inputs of the second party than vice versa.

We thus explicitly model in our security notions the knowledge that each party has on the input of the other party. That is, we let each party have, in addition to its own input, some additional information on the input of the other party. Furthermore, to simplify matters and put the two parties on equal footing, we assume that the information that the parties have on the input of the other consists of two possible values for that input. That is, each party receives three values: its own input and two possible values for the input of the other party. Indeed, such information naturally captures situations where the domain of possible inputs is small (say, binary). The formalism can also be naturally extended to deal with small domains of size larger than two.

We first sketch our Game Theoretic notion. We consider the following set of distributions over inputs (types): Say that a quadruple of elements \((a_0,a_1,b_0,b_1)\) in the domain of function f is valid if for all \(i\in \{0,1\}\), \(f(a_0,b_i)\ne f(a_1,b_i)\) and \(f(a_i,b_0)\ne f(a_i,b_1)\). For each valid quadruple of values in the domain, we define a distribution that chooses an input for one party at random from the first two values and an input for the other party at random from the other two values. The utility function for a party is the following: When a party aborts the protocol, each party predicts its output. If the party predicts correctly and the other one does not, then it gets payoff +1. If it predicts incorrectly and the other party predicts correctly, then it gets payoff \(-1\). Else, it gets payoff 0. We say that a protocol is Game Theoretically fair if the strategy that never aborts the protocol is in a computational Nash equilibrium with respect to the above utility, applied to both parties, and any distribution from the above family.
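The validity condition and the payoff rule above can be made concrete with a small sketch. It is only an illustration under simplifying assumptions: the function f is taken to return a single common output rather than a pair of outputs, and the names `is_valid_quadruple`, `fairness_payoff`, `xor` and `and_` are hypothetical helpers that do not appear in the paper.

```python
def is_valid_quadruple(f, a0, a1, b0, b1):
    """Check the validity condition: f(a0,b_i) != f(a1,b_i) and f(a_i,b0) != f(a_i,b1) for i in {0,1}."""
    a, b = (a0, a1), (b0, b1)
    # Switching the first input must change the output, whichever b_i is used.
    first_input_matters = all(f(a0, b[i]) != f(a1, b[i]) for i in (0, 1))
    # Switching the second input must change the output, whichever a_i is used.
    second_input_matters = all(f(a[i], b0) != f(a[i], b1) for i in (0, 1))
    return first_input_matters and second_input_matters


def fairness_payoff(own_correct, other_correct):
    """Payoff of one party given whether each party predicted its output correctly."""
    if own_correct and not other_correct:
        return 1
    if other_correct and not own_correct:
        return -1
    return 0


def xor(x, y):
    """Illustrative function (assumption): exclusive-or of two bits."""
    return x ^ y


def and_(x, y):
    """Illustrative function (assumption): conjunction of two bits."""
    return x & y


if __name__ == "__main__":
    print(is_valid_quadruple(xor, 0, 1, 0, 1))   # XOR: every input change flips the output
    print(is_valid_quadruple(and_, 0, 1, 0, 1))  # AND: f(0,0) == f(1,0), so the quadruple is not valid
    print(fairness_payoff(True, False), fairness_payoff(False, True))
```

In this sketch the two payoffs always sum to zero, which matches the zero-sum reading of fairness mentioned earlier in the text.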

This formalism is perhaps the most natural way to define fairness using Game Theoretic tools, yet it also provides a very different point of view of fairness than the traditional cryptographic one. Specifically, in cryptography fairness is usually captured by requiring that in almost each execution, either both parties learn the secret, or neither of them does. In contrast, our new notion of fairness examines the protocol in a broader sense and requires that no party obtains an advantage. In particular, even if the protocol enables one of the parties to gain an advantage in some execution, it may still be considered “fair” if it balances this advantage and gives an equivalent superiority to the other party in subsequent executions. As a result, these cross advantages are eliminated and the parties are in an equilibrium state. This interesting notion of fairness may give hope for a much wider class of functions for which the standard cryptographic notion is impossible to achieve. In this work, we explore the feasibility of this new notion of cryptographic fairness, while proposing an appropriate equivalent definition that captures this Game Theory fairness using the conventional cryptographic language. Specifically, we consider three different cryptographic notions of fairness and study their relationships with the above Game Theoretic notion, see Fig. 1 for an illustration.

- First, we formulate a simple “game-based” notion of fairness that limits the gain of an arbitrary (i.e., not necessarily “rational”) fail-stop adversary in a game that closely mimics the above Game Theoretic interaction. The main difference between the notions is that in the cryptographic setting the adversary is arbitrary, rather than rational. Still, we show that the two notions are equivalent. Specifically, we consider a test for the protocol in a “fair” environment, where each party has two possible inputs and its effective input is chosen uniformly at random from this set. Moreover, both parties know the input tuple and the distribution over the inputs. This is due to the fact that we, untraditionally, assume that the protocol instructs the honest party to guess the output of the function based on its view and the information it recorded so far. By doing so, we are able to capture scenarios for which both parties learn the same incomplete information (in a computationally indistinguishable sense) regarding their output. In particular, as long as both parties hold the same partial information, with the same probability, then the protocol is fair. Below we further explore the feasibility of our new notion and present a protocol that realizes it under some restrictions.

- Next, we show that this notion in fact corresponds to a natural new concept of limited gradual release. That is, say that a protocol satisfies the limited gradual release property if at any round the probability of any party to predict its output increases only by a negligible amount. We show that a protocol is fair (as in the above notions) if and only if it satisfies the limited gradual release property. We note that the traditional notion of gradual release (where the predictions are non-negligibly increased, unlike our notion studied here) is in essence the basis of the classic protocols of Beaver and Goldwasser and Levin [4, 13].

- Then, we formulate an ideal-model-based notion of fairness that allows for limited gradual release of secrets. In this notion, the ideal functionality accepts a “sampling algorithm” M from the ideal-model adversary. The functionality then obtains the inputs from the parties and runs M on these inputs, and obtains from M the outputs that should be given to the two parties. The functionality then makes the respective outputs available to the two parties (i.e., once the outputs are available, the parties can access them at any time). The correctness and fairness guarantees of this interaction clearly depend on the properties of M. We thus require that M be both “fair” and “correct” in the sense that both parties get correct output with roughly equal (and substantial) probability. We then show that the new simulation-based definition implies our limited gradual release notion (we note that the converse does not necessarily hold with respect to secure computation in the fail-stop model, even disregarding fairness).

On the Feasibility of Game-Based Fairness Finally, we consider the realizability of our game-based notion. Notably, this notion is strictly weaker than the conservative cryptographic notion of fairness, as any protocol that is fair with respect to the latter definition is also fair with respect to our new notion. On the other hand, known impossibility results, such as of Cleve [6] and Asharov-Lindell [3], hold even with respect to this weaker notion, as long as both parties are required to receive an output. This demonstrates the meaningfulness of our new concept, as well as the benefits of translating definitions from one field to another. In particular, ruling out the feasibility of our Game Theoretic notion is derived by minor adaptations of existing impossibility results in cryptography.

We demonstrate it by designing a protocol where only one of the parties learns the correct output (even when both parties are honest), while guaranteeing that the expected number of times that the honest party learns its correct output is negligibly far from the number of times an arbitrary fail-stop adversary learns its output. This restricted setting is useful, for instance, for realizing the sampling problem where the goal is to toss two correlated coins. Interestingly, in a recent work [2] it was proven that sampling is impossible to achieve in the standard cryptographic setting in the presence of a fail-stop adversary. On the other hand, our protocol can be easily used to realize this task. We note that [2] is an independent work, yet it provides a good demonstration of the main motivation for our work. That is, by considering reasonable game theoretic notions of cryptographic primitives and objects (that are relaxations of the standard cryptographic notions), it is feasible to achieve meaningful realizations of tasks that are impossible according to the standard definition.

Consider a concrete application for our protocol of a repeated game where two parties have two shares of a long term secret that are used to jointly compute a session key, such that the first party that presents the session key gets a treasure. Then, the question is how can the parties collaborate fairly in order to get the treasure. This is exactly the type of scenarios captured by our protocol. Specifically, the condition under which our protocol guarantees correlation is that the parties become aware of their gain or loss only after the protocol execution is done. Therefore, any strategizing done during the protocol must be done without knowing the outcome of the execution.

To conclude, we view this result as a fruitful cross-fertilization between the two disciplines, where the Game Theoretic point of view provides different and new insights in cryptography. We stress that these feasibility results are indeed somewhat restricted and hard to generalize. Nevertheless, they do point to a surprising feasibility result. It is an interesting open problem to extend this protocol to more general settings and functions.

Related Work We stress that our Game Theoretic approach of fairness implies an entirely different notion than the cryptographic notions of fairness and is in particular different than “complete fairness” [16] where we do not allow any advantage of one party over the other. To overcome the impossibility of complete fairness, relaxed notions of “partial fairness” have been suggested in the cryptographic literature such as gradual release [4, 5, 7, 13] and optimistic exchange [29]. A notable recent example is the work of [18] that addresses the question of partial fairness using a new approach of the standard real-/ideal-world paradigm. Namely, this definition ensures fairness with probability at least \(1-1/p\) for some polynomial \(p(\cdot )\). Nevertheless, a drawback of this approach is that it allows a total break of security with probability \(1/p\). In this work, we demonstrate how our approach can capture some notion of partial fairness as well (where according to our approach, one party may have some limited advantage over the other party in a collection of executions and not necessarily in a single one). We further discuss these differences below in the main body.

The Work of [20] Following the proceedings version of our work, Groce and Katz [20] showed the following result that demonstrates the feasibility of rational fair computation in the two-party setting in the presence of fail-stop and malicious adversaries. Specifically, they showed that any mediated game where (1) the mediator is guaranteed to either give the full function output to both parties or give nothing to both, and (2) both parties have positive expected utility from participating in the game at a Nash equilibrium, can be transformed into a protocol without a mediator, while preserving the same incentive structure and Nash equilibria.

Our work and [20] complement each other in the sense that the latter work shows that whenever the parties have a strict incentive to compute the function in the ideal world, there exists a real-world protocol for computing the function fairly. On the other hand, our result shows an example where the parties do not have a strict incentive to compute the function in the ideal world, and there does not exist a real-world protocol for computing the function fairly. Moreover, our paper shows that a feasibility result can be recovered in that specific case by relaxing correctness.

On the Definitional Choices One question that comes to mind when considering our modeling is why use plain Nash equilibria to exhibit correspondence between cryptographic notions and Game Theoretic ones. Why not use, for instance, stronger notions such as Dominant Strategy, Survival Under Iterated Deletions or Subgame Perfect equilibria? It turns out that in our setting of two-party computation with fail-stop faults, Nash equilibria do seem to naturally correspond to cryptographic secure protocols. In particular, in the fail-stop case any Nash equilibrium is sub-game perfect, or in other words empty threats do not hold (see more discussion on this point in the next section).

Future Work Although considered in [20], it is still an interesting challenge to extend the modeling of this work to the Byzantine case. For one, in the Byzantine case there are multiple cryptographic notions of security, including various variants of simulation-based notions. Capturing these notions using Game Theoretic tools might shed light on the differences between these cryptographic notions. In particular, it seems that here the Game Theoretic formalism will have to be extended to capture arbitrary polynomial-time strategies at each decision point. In particular, it seems likely that more sophisticated Game Theoretic solution concepts such as sub-game perfect equilibria and computational relaxations thereof [19, 31] will be needed.

Another challenge is to extend the notions of fairness presented here to address also situations where the parties have more general, asymmetric a priori knowledge on each other’s inputs, and to find solutions that use minimal trust assumptions on the system. Dealing with the multi-party case is another interesting challenge.

Organization Section 2 presents the basic model, as well as both the cryptographic and the Game Theoretic “solution concepts.” In the cryptographic setting, we present both ideal-model-based definitions and indistinguishability-based ones. Section 3 presents our results for secrecy and correctness for deterministic functions. Section 4 presents the results regarding fairness, i.e., (i) the Game Theoretic notion, (ii) the equivalent cryptographic definition, (iii) a new simulation-based definition, (iv) the limited gradual release property and its relation to fairness and (v) the study of the fairness definition.

2 The Model and Solution Concepts

We review some basic definitions that capture the way we model protocols as well as the solution concepts from Game Theory.

2.1 Cryptographic Definitions

We review some standard cryptographic definitions of security for protocols. The definitions address secrecy and correctness using the indistinguishability approach.

Negligible Functions and Indistinguishability A function \(\mu (\cdot )\) is negligible if for every polynomial \(p(\cdot )\) there exists a value N such that for all \(n>N\) it holds that \(\mu (n)<\frac{1}{p(n)}\). Let \(X=\left\{ X(a,n)\right\} _{n\in {\mathbb {N}},a\in \{0,1\}^*}\) and \(Y=\left\{ Y(a,n)\right\} _{n\in {\mathbb {N}},a\in \{0,1\}^*}\) be distribution ensembles. Then, we say that X and Y are computationally indistinguishable, denoted \(X\mathop {\equiv }\limits ^\mathrm{c}Y\), if for every non-uniform probabilistic polynomial-time (ppt) distinguisher D there exists a negligible function \(\mu (\cdot )\) such that for all sufficiently long \(a\in \{0,1\}^*\),

$$\begin{aligned} \left| \Pr [D(X(a,n))=1]-\Pr [D(Y(a,n))=1]\right| <\mu (n). \end{aligned}$$

Protocols Our basic object of study is a two-party protocol, modeled as a pair of interacting Turing machines as in [15]. We formulate both the cryptographic and the Game Theoretic concepts in terms of two-party protocols. We restrict attention to ppt machines (to simplify the analysis, we consider machines that are polynomial in a globally known security parameter, rather than in the length of their inputs).

Two-Party Functions In general, a two-party function is a probability distribution over functions \(f:\{0,1\}^*\times \{0,1\}^*\times \mathbf{N}\rightarrow \{0,1\}^*\times \{0,1\}^*\). Here the first (second) input and output represent the input and output of the first (second) party, and the third input is taken to be the security parameter. In this work, we consider the restricted model of deterministic functions. We say that a function is efficiently invertible if, for \(i=0,1\), given \(1^n\), an input value \(x_i\) and an output value y, it is possible to compute in ppt a value \(x_{1-i}\) such that \(f(x_0,x_1)=y\).
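As an illustration of efficient invertibility, consider the following minimal sketch. It assumes a toy function, bitwise XOR on integers, with a single common output and no security parameter; the names `f` and `invert` are hypothetical and chosen only for this example.

```python
def f(x0, x1):
    """Toy two-party function (assumption): bitwise XOR with a single common output."""
    return x0 ^ x1


def invert(i, x_i, y):
    """Given party i's input x_i and an output y, return an input for party 1-i consistent with y.

    For XOR the answer does not depend on i, because XOR is symmetric and self-inverse.
    """
    if i not in (0, 1):
        raise ValueError("party index must be 0 or 1")
    return x_i ^ y


if __name__ == "__main__":
    x0, x1 = 5, 3
    y = f(x0, x1)
    # Recover an input for the other party from one input and the output.
    print(f(x0, invert(0, x0, y)) == y)
    print(f(invert(1, x1, y), x1) == y)
```

A function such as AND would not admit this kind of inverter for every output value, since for example no second input makes \(f(0,x_1)=1\).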

The Fail-Stop Setting The setting that we consider in this paper is that of two-party interaction in the presence of fail-stop faults. In this setting, both parties follow the protocol specification exactly, with the exception that any one of the parties may, at any time during the computation, decide to stop, or abort the computation. Specifically, it means that fail-stop adversaries do not change their initial input for the execution, yet they may arbitrarily decide on their output.

As seen momentarily, the Game Theoretic and the cryptographic approaches differ in the specifics of the abortion step. However, in both cases we assume that the abortion operation is explicit and public: as soon as one party decides to abort, the other party receives an explicit notification of this fact and can act accordingly (this is in contrast to the setting where one party decides to abort while the other party keeps waiting indefinitely for the next incoming message). This modeling of abortion as a public operation is easily justified in a communication setting with reasonable timeouts on the communication delays. Protocols in this model should specify the output of a party in case of early abortion of the protocol. We assume that this output has a format that distinguishes this output from output that does not result from early abortion. To this end, we denote the identity of the corrupted party by \(i^*\).

2.1.1 Cryptographic Security

We present game-based definitions that capture the notions of privacy and correctness. We restrict attention to deterministic functions. By definition [12], the \(\mathbf{View}\) of the ith party (\(i\in \{0,1\}\)) during an execution of \(\pi \) on \((x_0,x_1)\) is denoted \(\mathbf{View}_{\pi ,i}(x_0,x_1,n)\) and equals \((x_i,r^i,m_1^i,\ldots ,m_t^i)\), where \(r^i\) equals the contents of the ith party’s internal random tape, and \(m_j^i\) represents the jth message that it received.

Privacy We begin by introducing a definition of private computation [12]. Intuitively, it means that no party (that follows the protocol) should be able to distinguish any two executions when using the same inputs and seeing the same outputs. This holds even for the case that the other party uses different inputs. More formally:

Definition 2.1

(privacy) Let f and \(\pi \) be as above. We say that \(\pi \) privately computes f if the following holds:

1. For every non-uniform ppt adversary \(\mathcal{A}\) that controls party \(P_0\)

$$\begin{aligned}&\left\{ \mathbf{View}_{\pi ,\mathcal{A}(z),0}(x_0,x_1,n)\right\} _{x_0,x_1,x'_1,z\in \{0,1\}^*,n\in {\mathbb {N}}}\\&\quad \mathop {\equiv }\limits ^\mathrm{c}\left\{ \mathbf{View}_{\pi ,\mathcal{A}(z),0}(x_0,x'_1,n)\right\} _{x_0,x_1,x'_1,z \in \{0,1\}^*,n\in {\mathbb {N}}} \end{aligned}$$

where \(|x_0|=|x_1|=|x'_1|\) and \(f(x_0,x_1)=f(x_0,x'_1)\).

2. For every non-uniform ppt adversary \(\mathcal{A}\) that controls party \(P_1\)

$$\begin{aligned}&\left\{ \mathbf{View}_{\pi ,\mathcal{A}(z),1}(x_0,x_1,n)\right\} _{x_0,x'_0,x_1,z \in \{0,1\}^*,n\in {\mathbb {N}}} \\&\quad \mathop {\equiv }\limits ^\mathrm{c}\left\{ \mathbf{View}_{\pi ,\mathcal{A}(z),1}(x'_0,x_1,n)\right\} _{x_0,x'_0,x_1,z \in \{0,1\}^*,n\in {\mathbb {N}}} \end{aligned}$$

where \(|x_0|=|x'_0|=|x_1|\) and \(f(x_0,x_1)=f(x'_0,x_1)\).

Correctness Recall that we distinguish between output that corresponds to successful termination of the protocol and output generated as a result of an abort message. We assume that the two types have distinct formats (e.g., the second output starts with a \(\bot \) sign). The correctness requirement only applies to the first type of output. More precisely:

Definition 2.2

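(correctness) Let f and \(\pi \) be as above. We say that \(\pi \) correctly computes f if for all sufficiently large inputs \(x_0\) and \(x_1\) such that \(|x_0|=|x_1|=n\), we have:

$$\begin{aligned} \Pr \left[ \mathbf{Output}_{\pi ,i}(x_0,x_1,n)\in \left\{ \bot \circ \{0,1\}^*,f(x_0,x_1)\right\} \right] \ge 1-\mu (n) \end{aligned}$$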

where \(\mathbf{Output}_{\pi ,i}\ne \bot \) denotes the output returned by \(P_i\) upon the completion of \(\pi \) whenever the strategy of the parties is continue, and \(\mu \) is a negligible function.

Note that, for the fail-stop setting, it holds that privacy and correctness imply simulation-based security with abort. This follows by a simple extension of the proof from [12] that states the same for semi-honest adversaries.

2.2 Game Theoretic Definitions

We review the relevant concepts from Game Theory, and the extensions needed to put these concepts on equal footing with the cryptographic concepts (specifically, these extensions include introducing asymptotic, computationally bounded players and negligible error probabilities). For simplicity, we restrict attention to the case of two-player games. Traditionally, a two-player (normal form, full information) game \(\Gamma =(\{A_0,A_1\},\{u_0,u_1\})\) is determined by specifying, for each player \(P_i\), a set \(A_i\) of possible actions and a utility function \(u_i : A_0 \times A_1\rightarrow {\mathbb {R}}\). Letting \(A\mathop {=}\limits ^\mathrm{def}A_0 \times A_1\), we refer to a tuple of actions \(a = (a_0,a_1)\in A\) as an outcome. The utility function \(u_i\) of party \(P_i\) expresses this player’s preferences over outcomes: \(P_i\) prefers outcome a to outcome \(a'\) if and only if \(u_i(a) > u_i(a')\). A strategy \(\sigma _i\) for \(P_i\) is a distribution on actions in \(A_i\). Given a strategy vector \(\sigma =(\sigma _0,\sigma _1)\), we let \(u_i(\sigma )\) be the expected utility of \(P_i\) given that all the parties play according to \(\sigma \). We continue with a definition of Nash equilibria:

Definition 2.3

(Nash equilibria for normal form, complete information games) Let \(\Gamma =(\{A_0,A_1\},\{u_0,u_1\})\) be as above, and let \(\sigma =\sigma _0,\sigma _1\) be a pair of strategies as above. Then \(\sigma \) is in a Nash equilibrium if for all i and any strategy \(\sigma '_i\) it holds that \(u_i(\sigma ''_0,\sigma ''_1)\le u_i(\sigma )\), where \(\sigma ''_i=\sigma '_i\) and \(\sigma ''_{1-i}=\sigma _{1-i}\).
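The definition above can be checked mechanically for small finite games. The following is a minimal sketch, not part of the paper's formalism: utilities are given as dictionaries keyed by outcomes, mixed strategies as dictionaries from actions to probabilities, and the names `expected_utility` and `is_nash` are hypothetical. It relies on the standard fact that the expected utility of a mixed deviation is an average of the utilities of pure deviations, so testing pure deviations suffices.

```python
def expected_utility(u, sigma0, sigma1):
    """Expected utility when the players independently sample actions from sigma0 and sigma1."""
    return sum(p0 * p1 * u[(a0, a1)]
               for a0, p0 in sigma0.items()
               for a1, p1 in sigma1.items())


def is_nash(actions0, actions1, u0, u1, sigma0, sigma1, tol=1e-9):
    """Return True if no unilateral deviation improves a player's expected utility."""
    base0 = expected_utility(u0, sigma0, sigma1)
    base1 = expected_utility(u1, sigma0, sigma1)
    # Player 0 deviates to each pure action while player 1 keeps sigma1.
    ok0 = all(expected_utility(u0, {a: 1.0}, sigma1) <= base0 + tol for a in actions0)
    # Player 1 deviates to each pure action while player 0 keeps sigma0.
    ok1 = all(expected_utility(u1, sigma0, {a: 1.0}) <= base1 + tol for a in actions1)
    return ok0 and ok1


# Matching pennies (illustrative game): player 0 wins on a match, player 1 on a mismatch.
ACTIONS = ["H", "T"]
U0 = {(a0, a1): (1 if a0 == a1 else -1) for a0 in ACTIONS for a1 in ACTIONS}
U1 = {outcome: -value for outcome, value in U0.items()}

if __name__ == "__main__":
    uniform = {"H": 0.5, "T": 0.5}
    pure_heads = {"H": 1.0}
    print(is_nash(ACTIONS, ACTIONS, U0, U1, uniform, uniform))        # uniform play is an equilibrium
    print(is_nash(ACTIONS, ACTIONS, U0, U1, pure_heads, pure_heads))  # player 1 gains by switching to T
```

The tolerance `tol` only guards against floating-point rounding; it is unrelated to the negligible slack used in the computational variant of the definition.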

The above formalism is also naturally extended to the case of extensive form games, where the parties take turns when taking actions. Here the strategy is a probabilistic function of a sequence of actions taken so far by the players. The execution of a game is thus represented naturally via the history which contains the sequence of actions taken. Similarly, the utility function of each player is applied to the history. Another natural extension is games with incomplete information. Here each player has an additional piece of information, called type, that is known only to itself. That is, the strategy \(\sigma _i\) now takes as input an additional value \(x_i\). We also let the utility function depend on the types, in addition to the history. In fact, we let the utility of each player depend on the types of both players. This choice seems natural and standard and greatly increases the expressibility of the model. We note, however, that as a result, a party cannot necessarily compute its own utility. To extend the notion of Nash equilibria to deal with this case, it is assumed that an a priori distribution on the inputs (types) is known and fixed. The expected utility of players is computed with respect to this distribution.

Definition 2.4

(Nash equilibria for extensive form, incomplete information games) Let \(\Gamma =(\{A_0,A_1\},\{u_0,u_1\})\) be as above, and let D be a distribution over \((\{0,1\}^*)^2\). Also, let \(\sigma =\sigma _0,\sigma _1\) be a pair of extensive form strategies as described above. Then \(\sigma \) is in a Nash equilibrium for D if for all i and any strategy \(\sigma '_i\) it holds that \(u_i(x_0,x_1,\sigma ''_0(x_0),\sigma ''_1(x_1))\le u_i(x_0,x_1,\sigma _0(x_0),\sigma _1(x_1))\), where \((x_0,x_1)\) is taken from distribution D, and \(\sigma _i(x)\) denotes the strategy of \(P_i\) with type x, \(\sigma ''_i=\sigma '_i\) and \(\sigma ''_{1-i}=\sigma _{1-i}\).

Extensions for the Cryptographic Model We review the (by now standard) extensions of the above notions to the case of computationally bounded players. See e.g., [8, 25] for more details. The first step is to model a strategy as an (interactive) probabilistic Turing machine that algorithmically generates the next move given the type and a sequence of moves so far. Next, in order to capture computationally bounded behavior (both by the acting party and, more importantly, by the other party), we move to an asymptotic treatment and consider an infinite sequence of games. That is, instead of considering a single game, we consider an infinite sequence of games, one for each value of a security parameter \(n\in {\mathbb {N}}\) (formally, we give the security parameter as an additional input to each set of possible actions, to each utility function, and to the distribution over types). The only strategies we consider are those whose runtime is polynomial in \(n\) (see Footnote 1). The third and last step is to relax the notion of “greater or equal to” to “not significantly less than.” This is intended to compensate for the small inevitable imperfections of cryptographic constructs. That is, we have:

Definition 2.5

(computational Nash equilibria for extensive form, incomplete inf. games) Let \(\Gamma =(\{A_0,A_1\},\{u_0,u_1\})\) be as above, and let \(D=\{D_n\}_{n\in \mathbf{N}}\) be a family of distributions over \((\{0,1\}^*)^2\). Let \(\sigma =\sigma _0,\sigma _1\) be a pair of ppt extensive form strategies as described above. Then \(\sigma \) is in a Nash equilibrium for D if for all sufficiently large n’s, all i and any ppt strategy \(\sigma '_i\) it holds that \(u_i(n,x_0,x_1,\sigma ''_0(n,x_0),\sigma ''_1(n,x_1))\le u_i(n,x_0,x_1,\sigma _0(n,x_0),\sigma _1(n,x_1))+\mu (n)\), where \((x_0,x_1)\) is taken from distribution \(D_n\), \(\sigma _i(n,x)\) denotes the strategy of \(P_i\) with type x, \(\sigma ''_i=\sigma '_i\) and \(\sigma ''_{1-i}=\sigma _{1-i}\), and \(\mu \) is a negligible function.
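Definition 2.5 leaves the averaging over types implicit. Under the reading that utilities are averaged over \((x_0,x_1)\leftarrow D_n\) and over the coins of the strategies (an interpretation on our part, based on the earlier remark that the expected utility is computed with respect to the a priori type distribution), the condition can be written out as follows, with \(\sigma ''\) as in the definition:

```latex
\[
\mathop{\mathbb{E}}_{(x_0,x_1)\leftarrow D_n}
  \Big[u_i\big(n,x_0,x_1,\sigma''_0(n,x_0),\sigma''_1(n,x_1)\big)\Big]
\;\le\;
\mathop{\mathbb{E}}_{(x_0,x_1)\leftarrow D_n}
  \Big[u_i\big(n,x_0,x_1,\sigma_0(n,x_0),\sigma_1(n,x_1)\big)\Big] + \mu(n).
\]
```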

We remark that an alternative and viable definitional approach would use a parametric, non-asymptotic definition of cryptographic security. Another viable alternative is to use the notion of costly computation from [22]. We do not take these paths since our goal is to relate to standard cryptographic notions, as much as possible.

Our Setting We consider the following setting: Underlying the interaction there is a two-party protocol \(\pi =(\pi _0,\pi _1)\). At each step, the relevant party can make a binary decision: either abort the computation, in which case the other party is notified that an abort action has been taken, or else continue running the protocol \(\pi \) scrupulously. That is, follow all instructions of the protocol leading to the generation of the next message, including making all random choices as instructed. Namely, the strategic part of the action is very simple (a mere binary choice), in spite of the fact that complex computational operations may be involved. One may envision a situation in which a human has access to a physical device that runs the protocol, and can only decide whether or not to put the device into action in each round.

The traditional Game Theoretic modeling of games involving such “exogenous” random choices that are not controllable by the players involved introduces additional players (e.g., “Nature”) to the game. In our case, however, the situation is somewhat different, since the random choices may be secret, and in addition, each player also has access to local state that is preserved throughout the interaction and may affect the choices. We thus opt to capture these choices in a direct way: We first let each player have local history (initially, this local history consists only of the type of the player and its internal randomness). The notion of an “action” is then extended to include also potentially complex algorithmic operations. Specifically, an action may specify a (potentially randomized) algorithm and a configuration. The outcome of taking this action is that the output of running the said algorithm from the said configuration is appended to the history of the execution, and the new configuration of the algorithm is added to the local history of the player. Formally:

Definition 2.6

Let \(\pi =(P_0,P_1)\) be a two-party protocol (i.e., a pair of interactive Turing machines). Then, the local history of \(P_i\) (for \(i\in \{0,1\}\)), during an execution of \(\pi \) on input \((x_0,x_1)\) and internal random tape \(r^i\), is denoted by \(\mathbf{History}_{\pi ,i}(x_0,x_1,n)\) and equals \((x_i, r^i, m_1^i, \ldots , m_t^i)\), where \(m_j^i\) represents its jth message. The history of \(\pi \) during this execution is captured by \((m_1^0,m_1^1),\ldots ,(m_t^0,m_t^1)\) and is denoted by \(\mathbf{History}_\pi \). The configuration of \(\pi \) at some point during the interaction consists of the local configurations of \(P_0,P_1\).

Fail-Stop Games We consider games of the form \(\Gamma _{\pi ,u}=(\{A_0,A_1\},\{u_0,u_1\})\), where \(A_0=A_1=\{\mathtt{continue},\mathtt{abort}\}\). The decision is taken before the sending of each message. That is, first the program \(\pi _i\) is run from its current configuration, generating an outgoing message. Next, the party makes a strategic decision whether to continue or to abort. A continue action by player i means that the outgoing message generated by \(\pi _i\) is added to the history, and the new configuration is added to the local history. An abort action means that a special abort symbol is added to the configurations of both parties and then both \(\pi _0\) and \(\pi _1\) are run to completion, generating local outputs, and the game ends. We call such games fail-stop games.
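The mechanics of a fail-stop game can be sketched in a few lines. This is only an illustration under simplifying assumptions that are not part of the definition above: the parties alternate turns, the toy protocol merely exchanges input bits and outputs their XOR, and all names (`Party`, `run_fail_stop_game`, `make_party`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple

CONTINUE, ABORT = "continue", "abort"

@dataclass
class Party:
    step: Callable[[Any, List[Any]], Tuple[Any, Any]]  # (config, history) -> (message, new config)
    output: Callable[[Any, List[Any]], Any]             # (config, history) -> local output
    strategy: Callable[[List[Any]], str]                # local history -> CONTINUE or ABORT
    config: Any = None
    local_history: List[Any] = field(default_factory=list)

def run_fail_stop_game(parties, rounds):
    """Run the protocol; before each message the sender chooses continue or abort."""
    history = []
    for t in range(rounds):
        i = t % 2                                  # assumption: parties alternate turns
        p = parties[i]
        message, new_config = p.step(p.config, history)
        if p.strategy(p.local_history) == ABORT:
            break                                  # message is dropped, game ends
        history.append((i, message))
        p.config = new_config
        p.local_history.append(new_config)
    # Both programs are run to completion and produce local outputs.
    return history, tuple(q.output(q.config, history) for q in parties)

def make_party(me, x, strategy):
    """Toy protocol: send the input bit; output the XOR once the other bit arrived."""
    def step(config, history):
        return config, config
    def output(config, history):
        received = [m for (sender, m) in history if sender != me]
        return config ^ received[0] if received else None   # None stands for the bottom symbol
    return Party(step, output, strategy, config=x, local_history=[x])

always_continue = lambda local_history: CONTINUE
always_abort = lambda local_history: ABORT

honest_run = run_fail_stop_game(
    [make_party(0, 1, always_continue), make_party(1, 0, always_continue)], rounds=2)
aborted_run = run_fail_stop_game(
    [make_party(0, 1, always_continue), make_party(1, 0, always_abort)], rounds=2)
```

In the aborted run, \(P_1\) has already received \(P_0\)'s bit and can compute the output, while \(P_0\) is left with the empty output. This asymmetry is the kind of behavior the fairness discussion in Sect. 4 is concerned with.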

The utility functions in fail-stop games may depend on all the histories: the joint one, as well as the local histories of both players. In the following sections, it will be convenient to define utility functions that consider a special field of the local history, called the local output of a player \(P_i\). We denote this field by \(\mathbf{Output}_{\pi ,i}\). Also, denote by \(\sigma ^{\mathtt{continue}}\) the strategy that always returns \(\mathtt{continue}\). The basic Game Theoretic property of protocols that we will be investigating is whether the pair of strategies \((\sigma ^{\mathtt{continue}},\sigma ^{\mathtt{continue}})\) is in a (computational) Nash equilibrium in fail-stop games, with respect to a given set of utilities and input distributions. That is:

Definition 2.7

(Nash protocols) Let \(\mathcal{D}\) be a set of distribution ensembles over pairs of strings, and let \(\mathcal{U}\) be a set of extensive form binary utility functions. A two-party protocol \(\pi \) is called a Nash protocol with respect to \(\mathcal{U,D}\) if, for any \(u\in \mathcal{U}\) and \(D\in \mathcal{D}\), the pair of strategies \(\sigma =(\sigma ^{\mathtt{continue}},\sigma ^{\mathtt{continue}})\) is in a computational Nash equilibrium for the fail-stop game \(\Gamma _{\pi ,u}\) and distribution ensemble D.

On Subgame Perfect Equilibria and Related Solution Concepts An attractive solution concept for extensive form games (namely interactive protocols) is subgame perfect equilibria, which allow for analytical treatment which is not encumbered by “empty threats.” Furthermore, some variants of this notion that are better suited to our computational setting have been recently proposed (see [19, 31]). However, we note that in our limited case of fail-stop games any Nash equilibrium is subgame perfect. Indeed, once one of the parties aborts the computation, there is no chance for the other party to “retaliate”; hence, empty threats are meaningless (recall that the output generation algorithms are not strategic, only the decision whether to abort is).

3 Privacy and Correctness in Game Theoretic View

In this section, we capture the traditional cryptographic privacy and correctness properties of protocols using Game Theoretic notions. We restrict attention to the fail-stop setting and deterministic functions with a single output (fairness aside, private computation of functions with two distinct outputs can be reduced to this simpler case, see [12] for more details).

3.1 Privacy in Game Theoretic View

Our starting point is the notion of private computation. A protocol is private if no (fail-stop) PPT adversary is able to distinguish any two executions where the adversary’s inputs and outputs are the same, even when the honest party uses different inputs in the two executions. Our goal, then, is to define a set of utility functions that preserve this property for Nash protocols. We therefore restrict ourselves to input distributions over triples of inputs, where the input given to one of the parties is fixed, whereas the input of the other party is uniformly chosen from the remaining pair. This restriction captures the strength of cryptographic (semantic) security: Even if a party knows that the input of the other party can only be one out of two possible values, the game does not give it the ability to tell which is the case. We then have a distribution for each such triple.

We turn to defining the utility functions. At first glance, it may seem that one should define privacy by having each party gain whenever it learns something meaningful on the other party’s private input. Nevertheless, it seems that it is better to make a party lose if the other party learns anything about its secret information. Intuitively, the reason is that it must be worthwhile for the party who holds the data to keep it secret. In other words, having the other party gain any profit when breaking secrecy is irrelevant, since it does not introduce any incentive for the former party to prevent this leakage (note, however, that here the utility of a party depends on events that are not visible to it during the execution). Formally, for each n we consider a sequence of input distributions for generating inputs of this length. Each distribution is denoted by D and indexed by triples \((a_0,a_1,b)\), and is defined by picking \(x\leftarrow (a_0,a_1)\) and returning (x, b). More concretely,

Definition 3.1

(distribution ensembles for privacy) The distribution ensemble for privacy for \(P_0\) for a two-party function f is the ensemble \(\mathcal{D}^{\mathsf{p}}_f=\{D^{\mathsf{p}}_{f,n}\}_{n\in \mathbf{N}}\) where \(D^{\mathsf{p}}_{f,n}=\{D_{a_0,a_1,b}\}_{a_0,a_1,b\in \{0,1\}^n,f(a_0,b)=f(a_1,b)}\), and \(D_{a_0,a_1,b}\) outputs (x, b), where \(x\mathop {\leftarrow }\limits ^{R}(a_0,a_1)\).

Distribution ensembles for privacy for \(P_1\) are defined analogously.

Let \(\pi \) be a two-party protocol computing a function f. Then, for every \((n,a_0,a_1,b)\) as above and for every ppt algorithm \(\mathcal{B}\), let the augmented protocol for privacy for \(\pi \), with guess algorithm \(\mathcal{B}\), be the protocol that first runs \(\pi \) and then runs \(\mathcal{B}\) on the local state of \(\pi \) and two additional auxiliary values. We assume that \(\mathcal{B}\) outputs a binary value, denoted by \(\mathsf{guess}_{\pi _{\mathsf{Aug},\mathcal{B}}^\mathsf{p},i}\) where \(P_i\) is the identity of the attacker. This value is interpreted as a guess for which one of the two auxiliary values is the input value of the other party.

Definition 3.2

(utility function for privacy) Let \(\pi \) be a two-party protocol and f be a two-party function. Then, for every \(a_0,a_1,b\) such that \(f(a_0,b)=f(a_1,b)\), and for every guessing algorithm \(\mathcal{B}\), the utility function for privacy for party \(P_0\), on input \(x\in \{a_0,a_1\}\), is defined by:

The utility function for party \(P_1\) is defined analogously. Note that if the history of the execution is empty, i.e., no message has been exchanged between the parties, and the inputs of the parties are taken from a distribution ensemble for privacy, then \(u_0^\mathsf{p}\) equals at least \(-1/2\). This is due to the fact that \(P_1\) can only guess x with probability at most 1 / 2. Therefore, intuitively, it will be rational for \(P_0\) to participate in the protocol (rather than to abort at the beginning) if and only if the other party cannot guess the input of \(P_0\) with probability significantly greater than 1 / 2. The definition of Game Theoretic privacy is as follows:

Definition 3.3

(game theoretic private protocols) Let f and \(\pi \) be as above. Then, we say that \(\pi \) is Game Theoretic private for party \(P_0\) if \(\pi _{\mathsf{Aug},\mathcal{B}}^\mathsf{p}\) is a Nash protocol with respect to \(u_0^\mathsf{p},u_1^\mathsf{p}\) and \(\mathcal{D}^{\mathsf{p}}_f\) and all valid ppt \(\mathcal{B}\).

Game Theoretic privacy for \(P_1\) is defined analogously. A protocol is Game Theoretic private if it is Game Theoretic private both for \(P_0\) and for \(P_1\).
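The remark that \(u_0^\mathsf{p}\) is at least \(-1/2\) when no message has been exchanged can be illustrated numerically. The sketch below assumes a concrete shape for the utility of Definition 3.2, namely that \(P_0\) receives \(-1\) when the attacker's guess equals its input and \(0\) otherwise; this shape is our assumption, chosen to agree with the \(-1/2\) remark, and the `leak` and `guesser` helpers are hypothetical stand-ins for the attacker's view and for \(\mathcal{B}\).

```python
import random

def sample_privacy_inputs(a0, a1, b, rng):
    """D_{a0,a1,b}: P0's input x is uniform over (a0, a1); P1's input is fixed to b."""
    return rng.choice((a0, a1)), b

def u0_privacy(x, guess):
    """Assumed utility shape: -1 if the attacker guesses P0's input, 0 otherwise."""
    return -1 if guess == x else 0

def expected_u0(guesser, leak, a0, a1, b, trials=20_000, seed=0):
    """Monte Carlo estimate of P0's expected utility against a guessing algorithm."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        x, _b = sample_privacy_inputs(a0, a1, b, rng)
        view = leak(x)                       # what the attacker's local history reveals
        total += u0_privacy(x, guesser(view, a0, a1, rng))
    return total / trials

# Empty history: the view is independent of x, so the best guess is a coin flip.
blind = expected_u0(lambda view, a0, a1, rng: rng.choice((a0, a1)),
                    lambda x: None, a0=3, a1=5, b=7)
# A protocol that leaks x entirely: the attacker always guesses correctly.
informed = expected_u0(lambda view, a0, a1, rng: view,
                       lambda x: x, a0=3, a1=5, b=7)
```

Under these assumptions the blind estimate concentrates around \(-1/2\) and the fully leaking case gives exactly \(-1\), so for \(P_0\) continuing is no worse than aborting at the start only when the protocol leaks essentially nothing.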

Theorem 3.4

Let f be a deterministic two-party function, and let \(\pi \) be a two-party protocol that computes f correctly (cf. Definition 2.2). Then, \(\pi \) is Game Theoretic private if and only if \(\pi \) privately computes f in the presence of fail-stop adversaries.

Proof

We begin with the proof that a Game Theoretic private protocol implies privacy by indistinguishability. Assume by contradiction that \(\pi \) does not compute f privately (in the sense of Definition 3.1) with respect to party \(P_0\) (w.l.o.g.). This implies that there exist a ppt adversary \(\mathcal{A}\) that corrupts party \(P_0\), a ppt distinguisher D, a non-negligible function \(\epsilon \) and infinitely many tuples \((x_0,x_1^0,x_1^1,n)\) where \(|x_0|=|x_1^0|=|x_1^1|=n\) and \(f(x_0,x_1^0)=f(x_0,x_1^1)\) such that,

We assume, without loss of generality, that \(\mathcal{A}\) never aborts prematurely, or in fact, it plays honestly. This is because we can construct an equivalent distinguisher that ignores all the messages sent after the round in which \(\mathcal{A}\) would have originally aborted, and then applies D on the remaining view.

We prove that there exists a valid ppt \(\mathcal{B}\) for which \((\sigma ^{\mathtt{continue}}_0, \sigma ^{\mathtt{continue}}_1)\) is not a computational Nash equilibrium in the game \(\Gamma _{\pi _{\mathsf{Aug}, \mathcal{B}}^{\mathsf{p}}, u^\mathsf{p}}\). That is, there exist an alternative strategy \(\sigma _1'\) for \(P_1\), a non-negligible function \(\epsilon '(\cdot )\) and infinitely many distributions \(D_{x_0, x_1^0, x_1^1} \in \mathcal{D}_f^\mathsf{p}\) such that

contradicting the assumption that \((\sigma ^{\mathtt{continue}}_0, \sigma ^{\mathtt{continue}}_1)\) is a Nash equilibrium in the game \(\Gamma _{\pi _{\mathsf{Aug}, \mathcal{B}}^{\mathsf{p}}, u^\mathsf{p}}\).

Let \(\sigma _1^{\mathtt{abort}}\) be the strategy where \(P_1\) aborts the protocol before it starts (the initial abort strategy). Then, for any \(D_{x_0, x_1^0, x_1^1} \in \mathcal{D}_f^\mathsf{p}\), and any ppt valid algorithm \(\mathcal{B}\) it holds that:

Consider the following \(\mathcal{B}\) algorithm: On input \(\mathbf{History}_{\pi ,1}(x,c,n),x_0,x_1^0,x_1^1\), \(\mathcal{B}\) invokes the distinguisher D and outputs \(x_1^0\) if D outputs 1, and outputs \(x_1^1\) otherwise.

We now consider the expected utility of \(P_1\) in the game \(\Gamma _{\pi _{\mathsf{Aug}, \mathcal{B}}^{\mathsf{p}}, u^\mathsf{p}}\), when both parties run according to the prescribed strategy:

Then it holds that,

contradicting the assumption that \((\sigma _0^\mathtt{continue}, \sigma _1^\mathtt{continue})\) is in Nash equilibrium. The above implies that \(\pi \) is not a Nash protocol.

We now turn to the proof that privacy implies Nash with respect to \(u_0^\mathsf{p},u_1^\mathsf{p}\) and \(\mathcal{D}^{\mathsf{p}}_f\) and all valid \(\mathcal{B}\). Assume by contradiction that there exists a ppt \(\mathcal{B}\), such that the augmented protocol \(\pi _{\mathsf{Aug},\mathcal{B}}^\mathsf{p}\) is not a Nash protocol with respect to these parameters and party \(P_1\) (w.l.o.g.). This means that there exist an alternative strategy \(\sigma _1'\), infinitely many distributions \(D_{x_0,x_1^0,x_1^1,n}\in \mathcal{D}^{\mathsf{p}}_f\) and a non-negligible function \(\epsilon \) such that

Without loss of generality, we can assume that \(\sigma _1'\) is the initial abort strategy; this is because in any other strategy, some information regarding \(P_1\)’s input may be leaked (since it participates in the protocol), which lowers the utility. Moreover, for any valid ppt algorithm \(\mathcal{B}\) it holds that

From the contradiction assumption, it holds that

Next we show that this implies that there exists a ppt adversary \(\mathcal{A}\), a ppt distinguisher D, a non-negligible function \(\epsilon '\) and infinitely many tuples of equal length inputs \((x_0,x_1^0,x_1^1)\) with \(f(x_0,x_1^0)=f(x_0,x_1^1)\) such that,

Fix \((x_0,x_1^0,x_1^1,n)\) and consider an adversary \(\mathcal{A}\) that simply runs as an honest party. Then, define a distinguisher D that invokes the algorithm \(\mathcal{B}\) and outputs 1 if and only if \(\mathcal{B}\) outputs \(x_1^0\). Then formally,

which is a non-negligible probability. This concludes the proof. \(\square \)

3.2 Correctness in Game Theoretic View

We continue with a formulation of a utility function that captures the notion of correctness as formalized in Definition 2.2. That is, we show that a protocol correctly computes a deterministic function if and only if the strategy that never aborts the protocol is in a computational Nash equilibrium with respect to the set of utilities specified as follows. The parties get high payoff only if they output the correct function value on the given inputs (types), or abort before the protocol starts; in addition, the players get no payoff for incorrect output. More formally, we introduce the set of distributions for which we will prove the Nash theorem. The distribution ensemble for correctness is simply the collection of all point distributions on pairs of inputs:

Definition 3.5

(distribution ensemble for correctness) Let f be a deterministic two-party function. Then, the distribution ensemble for correctness is the ensemble \(\mathcal{D}^{\mathsf{c}}_f=\{D^{\mathsf{c}}_{n}\}_{n\in \mathbf{N}}\) where \(D^{\mathsf{c}}_{n}=\{D_{a,b}\}_{a,b\in \{0,1\}^n}\), and \(D_{a,b}\) outputs (a, b) w.p. 1.

Note that a fail-stop adversary cannot affect the correctness of the protocol as it plays honestly with the exception that it may abort. Then, upon receiving an abort message we have the following: (i) either the honest party already learnt its output and so, correctness should be guaranteed, or (ii) the honest party did not learn the output yet, for which it outputs \(\bot \) together with its guess for the output (which corresponds to a legal output by Definition 2.2). Note that this guess is different from the guess appended in Definition 3.2 (the utility function for privacy), as here, we assume that the protocol instructs the honest party how to behave in case of an abort. Furthermore, an incorrect protocol in the presence of a fail-stop adversary implies that the protocol is incorrect regardless of the parties’ actions (where the actions are continue or abort).

This suggests the following natural way of modeling a utility function for correctness: The parties gain a higher utility if they output the correct output, and lose if they output an incorrect output. Therefore, the continue strategy would not induce a Nash equilibrium in case of an incorrect protocol, as the parties gain a higher utility by not participating in the execution. More formally:

Definition 3.6

(utility function for correctness) Let \(\pi \) be a two-party fail-stop game as above. Then, for every a, b as above the utility function for correctness for party \(P_0\), denoted \(u_0^\mathsf{c}\), is defined by:

- \(u_0^{\mathsf{c}}(\mathbf{History}_{\pi ,0}^\phi )=1\).

- \(u_0^{\mathsf{c}}(\mathbf{Output}_{\pi ,0},a,b)\mapsto \left\{ \begin{array}{ll} 1 &{} \mathrm{if}~\mathbf{Output}_{\pi ,0}=f(a,b)\\ 0 &{} \mathrm{otherwise}\\ \end{array}\right. \)

where \(\mathbf{History}_{\pi ,0}^\phi \) denotes the case that the local history of \(P_0\) is empty (namely, \(P_0\) does not participate in the protocol).

The utility function \(u_1^\mathsf{c}\) is defined analogously, with respect to \(P_1\). Intuitively, a protocol is a Nash protocol with respect to these utilities if and only if it is correct. A formal statement follows below.
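The incentive created by Definition 3.6 can be seen in a small simulation. This is a sketch under illustrative assumptions: the function \(f\) is a single-bit AND, and `faulty_protocol` is a hypothetical protocol that flips its output with probability 1/4; neither comes from the text above.

```python
import random

def u_correctness(participated, output, a, b, f):
    """Definition 3.6 for P0: payoff 1 for staying out or for a correct output, else 0."""
    if not participated:                  # empty local history
        return 1
    return 1 if output == f(a, b) else 0

def expected_utility_continue(protocol, f, a, b, trials=10_000, seed=0):
    """Monte Carlo estimate of P0's utility when both parties always continue."""
    rng = random.Random(seed)
    hits = sum(u_correctness(True, protocol(a, b, rng), a, b, f) for _ in range(trials))
    return hits / trials

def f_and(a, b):
    return a & b

def correct_protocol(a, b, rng):
    return f_and(a, b)

def faulty_protocol(a, b, rng):
    # Hypothetical protocol that flips the output bit with probability 1/4.
    out = f_and(a, b)
    return out ^ 1 if rng.random() < 0.25 else out

abort_utility = u_correctness(False, None, 1, 1, f_and)          # initial abort
correct_utility = expected_utility_continue(correct_protocol, f_and, 1, 1)
faulty_utility = expected_utility_continue(faulty_protocol, f_and, 1, 1)
```

For the correct protocol, continuing matches the payoff of the initial abort, so no deviation helps. For the faulty protocol, continuing yields noticeably less than 1, so the initial abort is a profitable deviation and the continue strategies are not in equilibrium.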

Theorem 3.7

Let f be a deterministic two-party function, and let \(\pi \) be a two-party protocol. Then, \(\pi \) is a Nash protocol with respect to \(u_0^{\mathsf{c}},u_1^{\mathsf{c}}\) and \(\mathcal{D}_f^\mathsf{c}\) if and only if \(\pi \) correctly computes f in the presence of fail-stop adversaries.

Proof

We begin with the proof that a Nash protocol with respect to \(u_0^{\mathsf{c}},u_1^{\mathsf{c}}\) and \(\mathcal{D}_f^\mathsf{c}\) implies correctness. Assume by contradiction that \(\pi \) does not compute f correctly. That is, there exist infinitely many pairs of inputs \((x_0,x_1)\), \(i\in \{0,1\}\) and a non-negligible function \(\epsilon \) such that

We assume that the output of the protocol in case of a premature abort starts with \(\bot \). Thus, our contradiction assumption holds only for the case where the protocol terminates successfully but \(P_i\) outputs a value different from \(f(x_0, x_1)\). Without loss of generality, assume that \(i = 0\). We now show that \((\sigma _0^{\mathtt{continue}}, \sigma _1^{\mathtt{continue}})\) does not induce a computational Nash equilibrium in the game \(\Gamma _{\pi , u^\mathsf{c}}\). Namely, the expected utility for \(P_0\) when both parties play according to the prescribed strategy is,

Let \(\sigma _0^{\mathtt{abort}}\) be the strategy for which \(P_0\) initially aborts (i.e., does not participate in the protocol). Then, we have that

Thus, it holds that

contradicting the assumption that \((\sigma _0^\mathtt{continue}, \sigma _1^\mathtt{continue})\) induces a computational Nash equilibrium in game \(\Gamma _{\pi , u^\mathsf{c}}\).

We now turn to the proof that correctness implies Nash with respect to \(u_0^{\mathsf{c}},u_1^{\mathsf{c}}\) and \(\mathcal{D}_f^\mathsf{c}\). Assume by contradiction that \(\pi \) is not a Nash protocol with respect to \(u_0^{\mathsf{c}}\) (w.l.o.g.) and \(\mathcal{D}_f^\mathsf{c}\). This means that there exist an alternative strategy for \(P_0\), infinitely many distributions \(D_{x_0,x_1,n}\in \mathcal{D}_f^\mathsf{c}\) and a non-negligible function \(\epsilon \) such that

When both parties follow the prescribed strategy, the format of the output does not start with \(\bot \), and thus we have

Under the assumption that \(P_1\) plays according to \(\sigma _1^{\mathtt{continue}}\), once \(P_0\) starts the protocol, its utility can only decrease unless it outputs \(f(x_0, x_1)\) exactly. This can happen only when the protocol terminates successfully, i.e., it plays according to \(\sigma ^{\mathtt{continue}}_0\). Therefore, the only alternative strategy that yields a higher utility is \(\sigma _0^{\mathtt{abort}}\) (i.e., the initial abort). The expected utility for \(P_0\) when it plays according to \(\sigma _0^{\mathtt{abort}}\) and \(P_1\) plays according to \(\sigma _1^{\mathtt{continue}}\) is 1. Thus, we have that

This yields that \(\pi \) does not compute f correctly on these inputs. \(\square \)

4 Exploring Fairness in the Two-Party Setting

Having established the notions of privacy and correctness using Game Theoretic formalism, our next goal is to capture fairness in this view. However, this turns out to be tricky, mainly due to the highly “reciprocal” and thus delicate nature of this notion. To illustrate, consider the simplistic definition for fairness that requires that one party learns its output if and only if the second party does. However, as natural as it seems, this definition is lacking since it captures each party’s output as an atomic unit. As a result, it only considers the cases where the parties either learnt or did not learn their output entirely, and disregards the option in which partial information about the output may be gathered through the execution. So, instead, we would like to have a definition that calls a protocol fair if at any point in the execution both parties gather, essentially, the same partial information about their respective outputs.

Motivated by this discussion, we turn to the Game Theoretic setting with the aim to design a meaningful definition for fairness, as we did for privacy and correctness. This would, for instance, allow investigating known impossibility results in a new light. Our starting point is a definition that examines the information the parties gain about their outputs during the game, where each party loses in proportion to the success probability of the other party guessing its output (this is motivated by the same reasoning as in privacy). In order to obtain this, we first define a new set of utility functions for fairness for which we require that the game be Nash; see Sect. 4.1 for the complete details.

Having defined fairness for rational parties, we wish to examine its strength against cryptographic attacks. We therefore introduce a new game-based definition that formalizes fairness for two-party protocols and is, in fact, equivalent to the Game Theoretic definition. We then provide a new definition of the gradual release property that is equivalent to the Game Theoretic and game-based definitions. Finally, we present a simulation-based definition and explore the realizability of our notions. Specifically, we include a new definition of the gradual release property (cf. Sect. 4.3) and demonstrate a correlation between protocols with this property and protocols that are fair according to our notion of fairness. In particular, it shows that at any given round, the parties cannot improve their chances for guessing correctly “too much.” Otherwise, the protocol would not be fair. We then introduce in Sect. 4.4 a new notion of simulation-based definition for capturing security of protocols that follow our game-based notion of fairness, specified above. This new notion is necessary as (game-based) fair protocols are most likely not simulatable according to the traditional simulation-based definition [12]. We consider the notion of “partial information” in the ideal world alongside preserving some notion of privacy. We then prove that protocols that satisfy this new definition are also fair with respect to the game-based definition.

Finally, we consider the realizability of our notion of fairness. We then observe that our notion is meaningful even in the case where parties are not guaranteed to always learn the output when both parties never abort. Somewhat surprisingly, in cases where the parties learn the output with probability one half or smaller, our notion of fairness is in fact achievable with no set-up or trusted third parties. We demonstrate two-party protocols that realize the new notion in this setting. We also show that whenever this probability rises above one half, our notion of fairness cannot be realized at all.

4.1 Fairness in Game Theoretic View

In this section, we present our first definition for fairness that captures this notion from a Game Theoretic view. As for privacy and correctness, this involves definitions for utility functions, input distributions and a concrete fail-stop game (or the sequence of games). We begin with the description of the input distributions. As specified above, the input of each party is picked from a domain of size two, where all possible outputs are distinct. More formally,

Definition 4.1

(collection of distribution ensembles for fairness) Let f be a two-party function. Let \((x_0^0,x_0^1,x_1^0,x_1^1, n)\) be an input tuple such that: \(|x_0^0|=|x_0^1|=|x_1^0|=|x_1^1|=n\), and for every \(b \in \{0,1\}\) it holds that:

- \(f_0(x_0^0, x_1^b) \ne f_0(x_0^1, x_1^b)\) (in each run there are two possible outputs for \(P_0\)).

- \(f_1(x_0^b, x_1^0) \ne f_1(x_0^b, x_1^1)\) (in each run there are two possible outputs for \(P_1\)).

Then, a collection of distribution ensembles for fairness \(\mathcal{D}^{\mathsf{f}}_f\) is a collection of distributions \(\mathcal{D}^{\mathsf{f}}_f= \{D_{x_0^0,x_0^1,x_1^0,x_1^1,n}\}_{x_0^0,x_0^1,x_1^0,x_1^1,n}\) such that for every \((x_0^0,x_0^1,x_1^0,x_1^1,n)\) as above, \(D_{x_0^0,x_0^1,x_1^0,x_1^1,n}\) is defined by

Next, let \(\pi _{\mathcal{B}}\) be the protocol, where \(\mathcal{B}=(\mathcal{B}_0,\mathcal{B}_1)\). By this notation, we artificially separate between the protocol and the predicting algorithms in case of a premature abort. More precisely, in the case that \(P_0\) prematurely aborts, \(P_1\) invokes algorithm \(\mathcal{B}_1\) on its input, its auxiliary information and the history of the execution, and outputs whatever \(\mathcal{B}_1\) does. \(\mathcal{B}_0\) is defined in a similar manner. In fact, we can refer to these two algorithms by the instructions of the parties regarding the values they need to output after each round, capturing the event of an early abort. We stress that these algorithms are embedded within the protocol. However, this presentation enables us to capture scenarios where one of the parties follows the guessing algorithm as specified by the protocol, whereas the other party follows an arbitrary algorithm. That is, we can consider protocols \(\pi _{\mathcal{B}'}\) (with \(\mathcal{B}' = (\widetilde{\mathcal{B}}_0, \mathcal{B}_1)\)) that are equivalent to the original protocol \(\pi _\mathcal{B}\) except for the fact that \(P_0\) guesses its output according to \(\widetilde{\mathcal{B}}_0\) instead of \(\mathcal{B}_0\). We now describe the fairness game \(\Gamma _{\pi _\mathcal{B}, u^\mathsf{f}}\) for some \(\mathcal{B}=(\mathcal{B}_0,\mathcal{B}_1)\). The inputs of the parties, \(x_0, x_1\), are selected according to some distribution ensemble \(D_{x_0^0,x_0^1,x_1^0,x_1^1}\) as defined in Definition 4.1. Then, the parties run the fail-stop game, where their strategies instruct them in each step whether to \(\mathtt{abort}\) or \(\mathtt{continue}\). In case that a party \(P_i\) aborts, the outputs of both parties are determined by the algorithms \((\mathcal{B}_0, \mathcal{B}_1)\). Let \(\mathbf{Output}_{\pi _{\mathcal{B}'}, i}\) denote the output of \(P_i\) in game \(\pi _{\mathcal{B}'}\), and then a utility function for fairness is defined by:

Definition 4.2

(utility function for fairness) Let f be a deterministic two-party function, and let \(\pi \) be a two-party protocol. Then, for every \(x_0^0,x_0^1,x_1^0,x_1^1,n\) as above (cf. Definition 4.1), for every pair of strategies \((\sigma _0,\sigma _1)\) and for every ppt \(\widetilde{\mathcal{B}}_0\), the utility function for fairness for party \(P_0\), denoted by \(u_0^\mathsf{f}\), is defined by:

where \(x_0, x_1\) are as in Definition 4.1 and \(\mathcal{B}'=(\widetilde{\mathcal{B}}_0,\mathcal{B}_1)\). Moreover, the utility for \(P_1\) is fixed: \(u_1^\mathsf{f}=0\).

Since the utility function of \(P_1\) is fixed, \(P_1\) has no incentive to change its strategy. Moreover, we consider here the sequence of games where \(P_1\) always guesses its output according to \(\mathcal{B}_1\), the “original” protocol. This actually means that \(P_1\) always plays honestly, from the cryptographic point of view. We are now ready to define a protocol that is Game Theoretic fair for \(P_1\) as:

Definition 4.3

(game theoretic fairness for \(P_1\)) Let f and \(\pi _\mathcal{B}\) be as above. Then, we say that \(\pi _\mathcal{B}\) is Game Theoretic fair for party \(P_1\) if, for all ppt \(\widetilde{\mathcal{B}}_0\), \(\Gamma _{\pi _{\mathcal{B}'},(u_0^\mathsf{f},u_1^\mathsf{f})}\) is a Nash protocol with respect to \((u_0^\mathsf{f},u_1^\mathsf{f})\) and \(\mathcal{D}^{\mathsf{f}}_f\), where \(\mathcal{B}' = (\widetilde{\mathcal{B}}_0, \mathcal{B}_1)\). (Footnote 2)

Namely, if \(\pi _\mathcal{B}\) is not Game Theoretic fair for \(P_1\), then \(P_0\) (and only \(P_0\)) can come up with a better strategy, and some other guessing algorithm \(\widetilde{\mathcal{B}}_0\) (where both define an adversary in the cryptographic world). This is due to the fact that the utility for \(P_1\) is fixed, and so it cannot find an alternative strategy that yields a higher utility. In other words, \(P_1\) has no reason to invoke a different guess algorithm, and so this definition formalizes the case where \(P_1\) is honest in the protocol \(\pi _\mathcal{B}\). This, in particular, implies that a somewhat natural zero-sum game, where the total balance of utilities is zero, does not work here.

Similarly, we define Game Theoretic fairness for party \(P_0\), where we consider all the protocols \(\pi _{\mathcal{B}'}\), for all ppt \(\widetilde{\mathcal{B}}_1\) and \(\mathcal{B}' = (\mathcal{B}_0, \widetilde{\mathcal{B}}_1)\), and the utility functions are reversed (i.e., the utility for \(P_0\) is fixed to zero, whereas the utility of \(P_1\) is modified according to its guess). We conclude with the definition of a Game Theoretic fair protocol:

Definition 4.4

(game theoretic fair protocol) Let f and \(\pi \) be as above. Then, we say that \(\pi \) is a Game Theoretic fair protocol if it is Game Theoretic fair for both \(P_0\) and \(P_1\).

4.2 A New Indistinguishability-Based Definition of Fairness

Toward introducing our cryptographic notion of fairness, we first consider a basic, one-sided definition that guarantees fairness only for one of the two parties. A protocol is considered fair if the one-sided definition is satisfied with respect to both parties. We refer to this game as a test for the protocol in a “fair” environment, where each party has two possible inputs and its effective input is chosen uniformly at random from this set. Moreover, both parties know the input tuple and the distribution over the inputs. This is due to the fact that we, untraditionally, assume that the protocol instructs the honest party to guess the output of the function based on its view and the information it recorded so far. We note that these scenarios are not fair under the traditional simulation-based definition [12], since they do not follow the all-or-nothing approach, in which the parties can only learn the entire output at once. To illustrate this, consider a protocol that enables both parties to learn the correct output with probability about 3/4. This protocol is fair according to our definition below, although it is not simulatable according to the definition of [18]. Before introducing the game-based definition, we first introduce non-trivial functionalities, in order to exclude functionalities for which one of the parties may know the correct output without participating.

Definition 4.5

(non-trivial functionalities) Let f be a two-party function. Then, f is non-trivial if for all sufficiently large n, there exists an input tuple \((x_0^0,x_0^1,x_1^0,x_1^1,n)\) such that \(|x_0^0|=|x_0^1|=|x_1^0|=|x_1^1|=n\) and \(\{f_0(x_0^0,x_1^b),f_0(x_0^1,x_1^b)\}_{b \in \{0,1\}}\), \(\{f_1(x_0^b,x_1^0), f_1(x_0^b,x_1^1)\}_{b \in \{0,1\}}\) are distinct values.
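As a rough illustration, the following Python sketch tests whether a given input tuple witnesses non-triviality. It assumes one particular reading of the definition: for each party and each of its two possible inputs, the party's output takes different values on the two possible inputs of the other party. The function name `witnesses_nontriviality`, the string encoding of inputs and the two example functions are hypothetical and serve only the example.

```python
def witnesses_nontriviality(f0, f1, x0_pair, x1_pair):
    """Check one input tuple under the reading stated above (an assumption)."""
    inputs = list(x0_pair) + list(x1_pair)
    # All four inputs must have the same length n.
    if len({len(x) for x in inputs}) != 1:
        return False
    # P0 cannot determine f0 alone: its output depends on P1's input.
    p0_needs_p1 = all(f0(x0, x1_pair[0]) != f0(x0, x1_pair[1]) for x0 in x0_pair)
    # P1 cannot determine f1 alone: its output depends on P0's input.
    p1_needs_p0 = all(f1(x0_pair[0], x1) != f1(x0_pair[1], x1) for x1 in x1_pair)
    return p0_needs_p1 and p1_needs_p0


# Fair exchange: each party outputs the other party's input.
def exchange0(x0, x1):
    return x1


def exchange1(x0, x1):
    return x0


# A constant function: both parties know the output without participating.
def constant(x0, x1):
    return "0"


print(witnesses_nontriviality(exchange0, exchange1, ("00", "11"), ("01", "10")))  # True
print(witnesses_nontriviality(constant, constant, ("00", "11"), ("01", "10")))    # False
```

Under this reading the exchange function is non-trivial, whereas a constant function is not, which matches the stated purpose of excluding functions whose output a party can know in advance.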

We are now ready to introduce our formal definition for fairness:

Definition 4.6

(game-based definition for fairness) Let f be a non-trivial two-party function, and let \(\pi \) be a two-party protocol. Then, for every input tuple (cf. Definition 4.5) and any ppt fail-stop adversary \(\mathcal{A}\) controlling party \(P_{i^*}\), \(i^* \in \{0,1\}\), we define the following game:

Game \(\mathsf{Fair}_{\pi ,\mathcal{A}}{(x_0^0, x_0^1, x_1^0, x_1^1,n)}\):

1. Two bits \(b_0, b_1\) are picked at random.

2. Protocol \(\pi \) is run on inputs \(x_0^{b_0}\) for \(P_0\) and \(x_1^{b_1}\) for \(P_1\), where \(\mathcal{A}\) sees the view of \(P_{i^*}\).

3. Whenever \(\mathcal{A}\) outputs a value y, \(P_{1-i^*}\) is given an \(\mathtt{abort}\) message (at this point, \(P_{1-i^*}\) would write its guess for \(f_{1-i^*}(x_0^{b_0}, x_1^{b_1}, n)\) on its output tape).

4. The output of the game is:

- 1 if (i) \(y = f_{i^*}(x_0^{b_0}, x_1^{b_1}, n)\) and (ii) \(P_{1-i^*}\) does not output \(f_{1-i^*}(x_0^{b_0}, x_1^{b_1}, n)\).

- \(-1\) if (i) \(y \ne f_{i^*}(x_0^{b_0}, x_1^{b_1}, n)\) and (ii) \(P_{1-i^*}\) outputs \(f_{1-i^*}(x_0^{b_0}, x_1^{b_1}, n)\).

- 0 otherwise (i.e., either both parties output correct outputs or both output incorrect outputs).

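We say that \(\pi \) fairly computes f if for every ppt adversary \(\mathcal{A}\), there exists a negligible function \(\mu (\cdot )\) such that for all sufficiently large inputs it holds that \(\mathbb{E}\left[ \mathsf{Fair}_{\pi ,\mathcal{A}}{(x_0^0, x_0^1, x_1^0, x_1^1,n)}\right] \le \mu (n)\).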
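To make the game concrete, the following Python sketch computes the exact expected output of the game for two toy executions with an adversary controlling \(P_0\). Everything in it is an assumption made for illustration: the function is single-bit XOR, a protocol run is modelled as a function returning the adversary's output y and the honest party's guess, the honest party uses one fair coin, and the names `game_output`, `expected_fair`, `naive_exchange` and `abort_at_start` are hypothetical.

```python
from fractions import Fraction
from itertools import product


def game_output(y, honest_out, target_adv, target_honest):
    """Output of a single run of the game: 1, -1 or 0."""
    adv_correct = y == target_adv
    honest_correct = honest_out == target_honest
    if adv_correct and not honest_correct:
        return 1
    if honest_correct and not adv_correct:
        return -1
    return 0


def expected_fair(f0, f1, x0_pair, x1_pair, run):
    """Exact expectation over b0, b1 and one coin of the honest party P1.

    `run(x0, x1, coin)` is a hypothetical stand-in for an execution against
    an adversary controlling P0; it returns (y, output of P1).
    """
    total = Fraction(0)
    for b0, b1, coin in product((0, 1), repeat=3):
        x0, x1 = x0_pair[b0], x1_pair[b1]
        y, out1 = run(x0, x1, coin)
        total += game_output(y, out1, f0(x0, x1), f1(x0, x1))
    return total / 8


def xor(x0, x1):
    return x0 ^ x1


def naive_exchange(x0, x1, coin):
    # P1 sends x1 first; the adversary aborts right after receiving it,
    # so it knows the output while P1 can only guess with its coin.
    return x0 ^ x1, coin


def abort_at_start(x0, x1, coin):
    # The adversary aborts before any message and guesses as if x1 were 0;
    # P1 again guesses with its coin.
    return x0, coin


print(expected_fair(xor, xor, (0, 1), (0, 1), naive_exchange))  # 1/2
print(expected_fair(xor, xor, (0, 1), (0, 1), abort_at_start))  # 0
```

In the first toy execution the adversary always outputs the correct value while the honest party is correct only half of the time, so the expected output is 1/2 and such an exchange would not be fair. In the second, both parties are equally likely to be correct and the expected output is 0.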

At first sight, it may seem that Definition 4.6 is tailored for the fair exchange function, i.e., when the parties trade their inputs. This is due to the fact that the parties’ outputs completely reveal their inputs. Nevertheless, we note that the definition does not put any restriction on f in this sense and aims to capture fairness with respect to any non-trivial function. We continue with the following theorem:

Theorem 4.7

Let f be a two-party function and let \(\pi \) be a protocol that computes f correctly. Then, \(\pi \) is Game Theoretic fair (in the sense of Definition 4.4), if and only if \(\pi \) fairly computes f in the presence of fail-stop adversaries (in the sense of Definition 4.6).

Proof

We first write explicitly the guessing algorithm \(\mathcal{B}=(\mathcal{B}_0,\mathcal{B}_1)\) and denote the protocol \(\pi \) as \(\pi _\mathcal{B}\). Assume that \(\pi _\mathcal{B}\) is Game Theoretic fair for \(P_0\) and \(P_1\); we thus prove that \(\pi _\mathcal{B}\) is fair in the sense of Definition 4.6. Assume by contradiction that \(\pi _\mathcal{B}\) is not fair with respect to this latter definition. Thus, there exist infinitely many input tuples \(x_0^0,x_0^1,x_1^0,x_1^1,n\) which yield distinct outputs, a ppt adversary \(\mathcal{A}\) controlling \(P_0\) (w.l.o.g.) and a non-negligible function \(\epsilon \) for which

This implies that,

Namely, when \(P_1\) runs according to the protocol specifications and \(P_0\) runs according to \(\mathcal{A}\)’s strategy, the expected advantage of \(P_0\) over \(P_1\) in guessing successfully is non-negligible.

We now show that there exists \(\mathcal{B}' = (\mathcal{B}_0^\mathcal{A}, \mathcal{B}_1)\) for which the game \(\Gamma _{\pi _{\mathcal{B}'},u^\mathsf{f}}\) is not a Nash game. That is, consider an alternative strategy \(\sigma _0^\mathcal{A}\) for \(P_0\): This strategy invokes the adversary \(\mathcal{A}\), such that for each message that the strategy receives from the other party, it forwards this message to \(\mathcal{A}\). Furthermore, whenever it receives the message and guess for the following round from the game (together with the random coins that were used to generate these values), the strategy invokes the adversary \(\mathcal{A}\) with the appropriate random coins. If the adversary chooses to output this message, \(\sigma _0^{\mathcal{A}}\) outputs the action \(\mathtt{continue}\). If the adversary \(\mathcal{A}\) chooses to abort, \(\sigma _0^\mathcal{A}\) outputs \(\mathtt{abort}\).

Moreover, let \(\mathcal{B}^\mathcal{A}_0\) be the following guess algorithm: On input \(\left( \mathbf{History}_{\pi ,0}(x_0^{b_0},x_1^{b_1},n),x_0^0,x_0^1,x_1^0,x_1^1\right) \), \(\mathcal{B}^\mathcal{A}_0\) invokes the adversary \(\mathcal{A}\) on the above history. Upon completion, \(\mathcal{B}^\mathcal{A}_0\) outputs whatever \(\mathcal{A}\) does. Let \({\vec {x}}= (x_0^{b_0},x_1^{b_1})\). Then, in a run of \((\sigma ^\mathcal{A}_0, \sigma _1^\mathtt{continue})\) within game \(\Gamma _{\pi _{\mathcal{B}'}, u^\mathsf{f}}\) where \(\mathcal{B}' = (\mathcal{B}_0^\mathcal{A}, \mathcal{B}_1)\), we have that:

yielding that the expected utility of \(P_0\) when it follows \(\sigma ^{\mathcal{A}}_0\) (and assuming that \(P_1\) runs according to the prescribed strategy), is non-negligible. That is,

When both parties play according to the prescribed strategy \(\sigma _i^\mathtt{continue}\), both parties learn the correct output at the end of the protocol except with some negligible probability \(\mu (\cdot )\), and thus:

which is implied by the correctness of the protocol, and thus:

for infinitely many n, implying that there exists a non-negligible difference between the utilities of the alternative strategy and the prescribed strategy. Thus, \(\pi _{\mathcal{B}}\) is not Game Theoretic fair for \(P_1\), since it is \(P_0\) that gains by deviating (cf. Definition 4.3).

Next, assume that \(\pi _\mathcal{B}\) meets Definition 4.6; we prove that \(\pi _\mathcal{B}\) is Game Theoretic fair for \(P_0\) and \(P_1\). Assume by contradiction that \(\pi _\mathcal{B}\) is not Game Theoretic fair for \(P_1\) (w.l.o.g.), implying that there exist an alternative strategy \(\sigma _0'\), infinitely many distributions \(D_{x_0^0,x_0^1,x_1^0,x_1^1,n}\in \mathcal{D}^{\mathsf{f}}_f\), a ppt \(\widetilde{\mathcal{B}}_0\) and a non-negligible function \(\epsilon \) such that \(\pi _{\mathcal{B}'}\) is not a Nash protocol, where \(\mathcal{B}'=(\widetilde{\mathcal{B}}_0,\mathcal{B}_1)\). Namely,

Based on the same assumption as above, when both parties follow \(\sigma ^\mathtt{continue}\), both learn the correct output except with some negligible probability \(\mu (\cdot )\), and thus:

implying that,

Now, consider the following adversary \(\mathcal{A}\) which controls the party \(P_0\): \(\mathcal{A}\) invokes the strategy \(\sigma _0'\) with the random coins of \(\mathcal{A}\). Then, whenever \(\sigma _0'\) outputs \(\mathtt{continue}\), \(\mathcal{A}\) computes the next message (using the same random coins) and outputs it. Whenever \(\mathcal{A}\) receives a message from \(P_1\), it passes the message to \(\sigma _0'\). When \(\sigma _0'\) outputs \(\mathtt{abort}\), \(\mathcal{A}\) aborts, invokes the guess algorithm \(\widetilde{\mathcal{B}}_0\) and outputs \(\widetilde{\mathcal{B}}_0\)’s guess. An execution of the protocol \(\pi _\mathcal{B}\) with an honest party \(P_1\) and the adversary \(\mathcal{A}\) is therefore equivalent to an execution of the game \(\Gamma _{\pi _{\mathcal{B}'}, u^\mathsf{f}}\) with \(\mathcal{B}' = (\widetilde{\mathcal{B}}_0, \mathcal{B}_1)\) where the parties follow the \((\sigma _0', \sigma _1^\mathtt{continue})\) strategies. Therefore, we have that

and so,

contradicting the assumption that \(\pi _\mathcal{B}\) is fair. \(\square \)
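The two conversions used in the proof, from an adversary to a strategy with a guessing algorithm and back, can be sketched in Python as follows. This is a simplified illustration under stated assumptions: adversaries are deterministic functions of the history (the random coins handled in the proof are omitted), and the type names and the functions `strategy_from_adversary`, `adversary_from_strategy` and `toy_adversary` are hypothetical.

```python
from typing import Callable, Optional, Sequence, Tuple

History = Sequence[str]
# Hypothetical interfaces (not from the paper): an adversary maps the history
# seen so far to ("continue", None) or to ("abort", y), where y is its output.
Adversary = Callable[[History], Tuple[str, Optional[int]]]
Strategy = Callable[[History], str]            # "continue" or "abort"
Guess = Callable[[History], Optional[int]]     # guessing algorithm run upon abort


def strategy_from_adversary(adv: Adversary) -> Tuple[Strategy, Guess]:
    """First direction: derive a strategy and a guessing algorithm from A."""
    def sigma(history: History) -> str:
        action, _ = adv(history)
        return action

    def guess(history: History) -> Optional[int]:
        action, y = adv(history)
        if action != "abort":
            raise ValueError("the guess is only requested after an abort")
        return y

    return sigma, guess


def adversary_from_strategy(sigma: Strategy, guess: Guess) -> Adversary:
    """Second direction: derive an adversary from a strategy and a guess."""
    def adv(history: History) -> Tuple[str, Optional[int]]:
        if sigma(history) == "abort":
            return "abort", guess(history)
        return "continue", None

    return adv


def toy_adversary(history: History) -> Tuple[str, Optional[int]]:
    # Aborts once two messages were received and outputs the parity
    # of the last message (messages are decimal strings in this toy model).
    if len(history) >= 2:
        return "abort", int(history[-1]) % 2
    return "continue", None


sigma, guess = strategy_from_adversary(toy_adversary)
rebuilt = adversary_from_strategy(sigma, guess)
print(sigma(["1"]), sigma(["1", "3"]), guess(["1", "3"]))  # continue abort 1
```

In this toy model, converting an adversary into a strategy and back yields an adversary with the same behavior on every history, which mirrors the equivalence of executions that the proof relies on.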

4.3 The Limited Gradual Release Property

In this section, we show that the guesses of the parties at any given round, as well as every two consecutive guesses of each party, must be statistically close. Namely, we show that once there is a non-negligible difference between these, the protocol cannot be fair. Intuitively, this follows from the fact that any such difference immediately translates into an attack where the corresponding party aborts before completing this round, while gaining an advantage over the other party in learning the output.
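For intuition about "statistically close", the following Python sketch computes the statistical (total variation) distance between two guess distributions. The representation of a guess distribution as a dictionary and the name `statistical_distance` are assumptions made for this example; the numbers describe a hypothetical one-bit output that is fully revealed within a single round.

```python
from fractions import Fraction


def statistical_distance(p, q):
    """Total variation distance between two finite distributions (dicts)."""
    support = set(p) | set(q)
    return sum(abs(p.get(v, 0) - q.get(v, 0)) for v in support) / 2


half = Fraction(1, 2)
guess_round_i = {0: half, 1: half}     # uniform guess for a one-bit output
guess_round_next = {1: Fraction(1)}    # output fully revealed one round later

print(statistical_distance(guess_round_i, guess_round_next))  # 1/2
```

A distance of 1/2 between consecutive guesses is far from negligible, so a party that aborts right after such a round would hold a clear advantage over the other party.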

The significance of limited gradual release is in providing a new tool to study fairness in the two-party setting. Specifically, by proving that it implies fairness (in the sense of Definition 4.6; see Theorem 4.11), it enables us to gain more insight into the characterization of fairness. On the other hand, the fact that limited gradual release is implied by fairness (cf. Theorem 4.10) may shed light on the reason it is impossible to achieve limited gradual release under certain constraints. Notably, our notion of limited gradual release is in contrast to the typical meaning of gradual release specified in prior work (e.g., [7]), which refers to partially fair protocols in which the parties learn their output gradually such that the amount of information that is released regarding the output is limited, but non-negligible, and there always exists a party with a non-negligible advantage over the others.