Abstract

In the past decade, the sparsity prior of images has been widely investigated and exploited alongside the development of compressed sensing theory. Dictionary learning combined with convex optimization has made sparse representation one of the state-of-the-art techniques in image processing tasks such as denoising, super-resolution, deblurring, and inpainting. Empirically, the sparser the image representation, the better the image restoration. In this work, non-local clustering sparse representation is applied with optimized matching strategies for self-similar patches, which overcome the bottleneck of the local search window and improve the estimation of the sparse coefficients. Experimental results show that the proposed method suppresses noise effectively, preserves more image details, and yields visually more pleasing results.

1 Introduction

Vision is regarded as the most advanced source of information for human beings, and images play the most important role in human vision. Noise corruption is inevitable during the sensing process, and it may heavily degrade the visual experience. Removing noise is therefore an essential step in various image processing and vision tasks, such as image segmentation, image coding, and target detection. In particular, many image restoration problems can be addressed by solving a denoising sub-problem, which further broadens the significance of research on image denoising techniques.

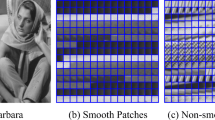

Many classical denoising methods have emerged in recent years [1,2,3,4,5,6,7,8], based on techniques such as wavelets, dictionary learning and multi-scale feature fusion. These methods can be roughly divided into two categories: spatial domain filtering and transform domain filtering. The former processes the image pixels directly, and its representative is non-local means (NLM) [1]. NLM is notable for its simplicity and effectiveness, and it creatively exploited the non-local self-similarity (NSS) of images for the denoising task. The core of NLM is to group similar patches and average them to suppress noise. Natural images often exhibit the NSS prior: as shown in Fig. 1, contours are not randomly distributed and show clear correlation/similarity at different positions. The NSS prior is widely used in image processing methods [2,3,4,5,6], such as the benchmark denoising algorithm block-matching and three-dimensional filtering (BM3D) [3].

The other category uses basis functions (atoms) to transform the image into another domain in which the noise and the useful image information can be separated. The representative method is sparse representation. For natural images, the meaningful information is usually sparse in a transform domain (e.g. the wavelet domain), where the signal is concentrated in a few large coefficients while the noise remains spread out. A simple thresholding method then removes the noise component and retains the useful information, and the inverse transform recovers the image in the spatial domain. There are two typical solutions: one is based on an analytic basis [9] and the other on dictionary learning. Owing to the flexibility of atom design, a redundant, over-complete dictionary can achieve higher sparsity than analytic bases and indeed obtains much better denoising performance, as in K-means Singular Value Decomposition (K-SVD) [10], Learned Simultaneous Sparse Coding (LSSC) [5], Expected Patch Log Likelihood (EPLL) [11] and Nonlocally Centralized Sparse Representation (NCSR) [12]. Furthermore, visual data often have an intrinsic low-rank structure [13]. The low-rankness of the patch matrix can be viewed as a 2D sparsity prior, in contrast to dictionary-based sparse representation, and some impressive restoration methods have been proposed [15,16,17,18,19,20,21,22,23,24] based on low-rank matrix completion theory [14]. The key to sparse methods is to improve the sparsity of the representation, and NSS is one of the most important and widely used priors for this purpose, especially when the image is corrupted by noise. Several very competitive algorithms [12, 16, 18] take full advantage of the NSS prior of natural images and have proven its effectiveness.

In this work, to better exploit the NSS prior of images and further improve denoising performance, an algorithm based on centralized sparse representation (CSR) [25] is proposed, with NSS enhanced in three aspects: (1) similar patches from external images are combined with the internal noisy patches to refine the dictionary; (2) a global matching strategy is adopted to facilitate the estimation of model parameters; (3) an improved similarity measure based on the cosine distance is used to eliminate the influence of dimensional differences.

Among sparse representation methods, the benchmarks [12, 25] introduced the sparse coding noise (SCN) into the objective function and minimized it together with the fidelity term to obtain superior performance. However, the sub-dictionaries are learned only from the centralized patches of the noisy image itself, neglecting external priors, and the latent clean image is estimated within a local window, which limits the quality of patch grouping under the NSS prior. By introducing external reference images and a global matching strategy, the clustering is improved, which leads to better dictionary learning and more accurate estimation of the latent clean patches, and hence an improvement in denoising performance is expected. In particular, the Euclidean distance (ED) easily causes mismatches due to dimensional (scale) differences, as shown in Fig. 2, so we adopt the cosine distance instead of ED to remove this difference and achieve better matching.

The paper is organized as follows. Section 2 introduces the proposed method, and Section 3 describes the NSS enhancement operations as well as the principle of the denoising method. Section 4 presents the denoising results and a comparison with several state-of-the-art algorithms on two kinds of datasets: standard test images with additive white Gaussian noise (AWGN), and actual low-light images with real noise. Section 5 summarizes the paper and points out what needs to be improved in the future.

2 The proposed method

For sparse representation, an image \(x \in \mathbb{R}^{N}\) is approximated as \(x \approx \Phi\alpha_{x}\), where \(\alpha_{x} \in \mathbb{R}^{M}\) (\(M \ll N\)) is the vector of sparse coefficients, most of whose entries are 0 under coding with the dictionary \(\Phi \in \mathbb{R}^{N \times M}\). The key step is the sparse decomposition with respect to \(\Phi\), which in general is obtained by solving the l0-norm minimization problem [26,27,28], \(\alpha_{x} = \arg\min_{\alpha} \|\alpha\|_{0}\), s.t. \(\|x - \Phi\alpha\|_{2} \le \varepsilon\). However, l0-norm minimization is NP-hard, and it is often replaced by its closest convex relaxation, l1-norm minimization:
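With the symbols defined above, this relaxation is commonly written in the Lagrangian form below. This is a reconstruction that assumes the usual squared l2 fidelity term, since the displayed equation is not reproduced here.

```latex
\begin{equation}
\alpha_{x} = \arg\min_{\alpha} \left\{ \| x - \Phi\alpha \|_{2}^{2} + \lambda \| \alpha \|_{1} \right\} \tag{1}
\end{equation}
```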

where the constant λ is the regularization parameter, which balances the fidelity term (approximation error) against the sparse prior. Eq. (1) can be minimized efficiently by an iterative shrinkage algorithm [29]. In particular, coding with dictionaries learned from natural images can perform better than coding with a fixed basis [30].
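As an illustration of iterative shrinkage, the following minimal NumPy sketch minimizes an l1-regularized least-squares objective of the kind described above. The function names, the step-size choice and the random test dictionary are assumptions made for this example and are not taken from [29] or from the authors' implementation.

```python
import numpy as np

def soft_threshold(v, tau):
    """Element-wise soft-thresholding (shrinkage) operator."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(x, Phi, lam, n_iter=200):
    """Minimize ||x - Phi @ a||_2^2 + lam * ||a||_1 by iterative shrinkage."""
    # L = 2 * ||Phi||_2^2 is the Lipschitz constant of the fidelity gradient,
    # so 1/L is a safe step size.
    L = 2.0 * np.linalg.norm(Phi, 2) ** 2
    a = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * Phi.T @ (Phi @ a - x)          # gradient of the fidelity term
        a = soft_threshold(a - grad / L, lam / L)   # gradient step, then shrinkage
    return a

# Hypothetical toy problem: a 3-sparse code under a random dictionary.
rng = np.random.default_rng(0)
Phi = rng.standard_normal((20, 50))
a_true = np.zeros(50)
a_true[[3, 17, 41]] = [1.5, -2.0, 1.0]
x = Phi @ a_true
a_hat = ista(x, Phi, lam=0.1, n_iter=500)
```

With this step size, each iteration does not increase the objective, which is why the scheme is attractive despite its simplicity.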

For image restoration, the degraded image y can generally be written as y = Hx + n, where H is the degradation operator (the identity matrix for denoising), x is the latent clean image and n is the additive noise, generally assumed to follow the Gaussian distribution \(N(0, \sigma_{n}^{2})\). Because noise and image signal differ in intensity in the sparse domain, denoising can be realized by a simple thresholding method, which removes the noise components and retains the structural information [31]. To recover the latent clean image x from its noisy version y with respect to the dictionary Φ, the sparse model can be written patch-wise as below:
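Following the patch-based formulation used in NCSR [12], a plausible form of this model is sketched below. The patch-extraction matrix \(R_i\) (so that \(x_i = R_i x\)), the concatenated code \(\alpha\) of all patch codes \(\alpha_i\), and the operator \(\circ\) are notation assumed from that reference rather than defined in the text above.

```latex
\begin{align}
\alpha_{y} &= \arg\min_{\alpha} \left\{ \| y - \Phi \circ \alpha \|_{2}^{2} + \lambda \| \alpha \|_{1} \right\}, \tag{2} \\
\hat{x} &= \Phi \circ \alpha_{y} = \Big( \sum_{i} R_{i}^{T} R_{i} \Big)^{-1} \sum_{i} R_{i}^{T} \Phi\, \alpha_{y,i} . \notag
\end{align}
```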

However, recovering the latent clean image simply by sparse coding of the degraded image is very challenging, because denoising is ill-posed and natural images are high-dimensional. On the other hand, it is known that the sparse coefficients of natural images are not randomly distributed: as shown in Fig. 1, they clearly exhibit the NSS feature. These strong correlations can be used to develop a more effective sparse model by exploiting nonlocal redundancies. Indeed, several classic methods are derived from this idea. The most representative is NCSR, which introduced the sparse coding noise (SCN) to incorporate the NSS prior and improve image restoration performance. The objective function of NCSR [12] is:
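With the l1 norm on the SCN term, the objective published in [12] has the form below. Here \(\alpha_i\) and \(\beta_i\) denote the code of the i-th patch and its estimate, and \(\Phi \circ \alpha\) denotes patch-wise synthesis followed by averaging of overlapping patches (notation assumed from [12]).

```latex
\begin{equation}
\alpha_{y} = \arg\min_{\alpha} \left\{ \| y - \Phi \circ \alpha \|_{2}^{2} + \lambda \sum_{i} \| \alpha_{i} - \beta_{i} \|_{1} \right\} \tag{3}
\end{equation}
```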

where the parameters Φ and β play the most important role in performance improvement. Φ is in fact a sub-dictionary learned from each cluster grouped over a global area, and β is the estimate for the latent clean patch used to form the SCN (α − β). It is obtained by coding an averaged patch, found by local matching, with the corresponding sub-dictionary. The regularization parameter λ can be derived from a maximum a posteriori (MAP) interpretation of the model [32].

The NCSR model achieved state-of-the-art performance at the time. However, later requirements have exceeded its capability. For example, under heavy noise, where noise components dominate the image, it is very difficult to extract effective features from the noisy image alone. There is also room to improve the estimate β by applying sub-dictionary coding to patches found by a global search instead of a local window. To improve the accuracy of sparse coding and cover more noise levels, we propose an NSS-enhanced sparse representation embedded in the basic NCSR framework to pursue better denoising performance.

3 External and global matching strategy

3.1 The effectiveness and feasibility of external images

Prior information can be extracted from different sources. In general, learning a dictionary from a natural image library is time-consuming. On many occasions, however, a large number of similar images are available, which simplifies the selection of external priors. With the help of such correlated images, the extracted sparse prior is much more accurate than one learned from the noisy image alone. For example, in surveillance, an image taken at night is corrupted by strong noise because of the low-light environment, whereas images of the same scene taken during the day have less noise while the major components remain the same. They can serve as a valuable reference and provide effective guidance for denoising the night image.

3.2 Similarity measurement

In image processing, patch-wise operation is widely adopted for computational efficiency. The patch matrix M of an image is therefore constructed with each column being a patch vector. To cluster the patches, the K-means method is adopted, with the cosine distance replacing the ED as the similarity measure, since ED ignores the correlation interference between patches (as shown in Fig. 2), which may lower the matching accuracy and in turn affect the quality of the sparse representation.

The cosine distance can be viewed as a normalized ED. It is insensitive to the absolute values of the patches and closer to human perception from a neuroscience perspective [33]. Based on the cosine distance, the following patch similarity measure is proposed:

where r denotes the reference patch, i denotes the test patch, \(d_{r,i}\) is the 'distance' between the two patches \(y_r\) and \(y_i\), and cos(·) is the cosine similarity of \(y_r\) and \(y_i\) treated as vectors. A patch is assigned to a class according to the value of \(d_{r,i}\).
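The difference between the two measures can be seen in a small NumPy sketch. It assumes the common form \(d_{r,i} = 1 - \cos(y_r, y_i)\), which may differ in detail from the measure in Eq. (4); the function names and the toy patches are hypothetical.

```python
import numpy as np

def cosine_distance(y_r, y_i, eps=1e-12):
    """Assumed form d = 1 - cos(y_r, y_i); eps guards against all-zero patches."""
    y_r = np.ravel(y_r).astype(float)
    y_i = np.ravel(y_i).astype(float)
    cos = y_r @ y_i / (np.linalg.norm(y_r) * np.linalg.norm(y_i) + eps)
    return 1.0 - cos

def euclidean_distance(y_r, y_i):
    """Plain Euclidean distance between two vectorized patches."""
    diff = np.ravel(y_r).astype(float) - np.ravel(y_i).astype(float)
    return float(np.linalg.norm(diff))

ref = np.array([[10, 20], [30, 40]])
scaled = 3 * ref                         # same structure, different intensity scale
other = np.array([[40, 10], [20, 30]])   # different structure, similar intensity range

# ED ranks `other` closer to `ref`; the cosine distance ranks `scaled` closer.
print(euclidean_distance(ref, scaled), euclidean_distance(ref, other))
print(cosine_distance(ref, scaled), cosine_distance(ref, other))
```

In this toy case the brightness-scaled copy of the reference patch is far away in ED but has essentially zero cosine distance, which is the kind of mismatch the cosine measure is meant to avoid.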

3.3 Global clustering for dictionary learning

For the noisy image, external priors are added to improve the dictionary learning of the corresponding features and thereby the denoising performance. The steps are:

(1) Image retrieval: select similar external images from ready-made datasets or from the web. For the test dataset set12, external images can be found easily with a search engine such as TinEye or Google Images. A more sophisticated image search method, such as [34], can also be used. For the real-noise dataset, the external similar images are captured under different conditions, such as light intensity, exposure time and viewing angle.

(2) Patch matrix formation: decompose the selected images into patches of a specified size and merge the patches sequentially to form a matrix M (each column is a patch vector);

(3) Feature extraction:

(a) Flat feature extraction: for a specific patch \(y_i = M(:, i)\) in M, calculate its variance:

where n is the number of pixels in a patch, p and q are the pixel coordinates, b is the side length of the (square) patch, and \(\tilde{y}_{i}\) is the Gaussian-filtered version of patch \(y_i\). A threshold is then applied to decide whether a patch belongs to a flat region. In natural images, flat areas generally account for a large proportion and their structure is simple, so a small local window is sufficient for extracting them and for learning the corresponding atom.

(b) Detail feature extraction: the detail patches remaining in M are grouped with the K-means method, using the similarity measure described in Sect. 3.2. Considering the instability of global dictionary learning [10], sub-dictionaries are learned with the PCA method [35], following Ref. [12]. The number of sub-dictionary atoms equals the number of final optimized cluster centers (denoted K). Including the flat-region atom, the total number of atoms is K + 1, and the final dictionary is represented as:
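Presumably the final dictionary is the collection of the flat-region sub-dictionary and the K detail sub-dictionaries \(\varphi_k\). The flat/detail split and the per-cluster PCA step can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: the Gaussian pre-filtering of each patch and the K-means grouping are omitted, the threshold value is arbitrary, and the function names are hypothetical.

```python
import numpy as np

def split_flat(M, thresh):
    """Split the patch columns of M into flat and detail sets by per-patch variance."""
    var = M.var(axis=0)            # variance over the pixels of each patch
    flat = var < thresh
    return M[:, flat], M[:, ~flat]

def pca_subdictionary(patches):
    """Orthonormal PCA basis (as columns) of one cluster of patch vectors."""
    centered = patches - patches.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / max(patches.shape[1] - 1, 1)
    eigval, eigvec = np.linalg.eigh(cov)    # eigenvalues in ascending order
    order = np.argsort(eigval)[::-1]        # strongest component first
    return eigvec[:, order]

# Hypothetical data: 200 vectorized 6x6 patches with varying contrast.
rng = np.random.default_rng(0)
M = rng.standard_normal((36, 200)) * rng.uniform(0.05, 2.0, size=200)
flat, detail = split_flat(M, thresh=0.1)
D = pca_subdictionary(detail)   # in the full method, one such basis per K-means cluster
```

Because each PCA basis is orthonormal, coding a patch with it reduces to a matrix transpose product, which keeps the later shrinkage step inexpensive.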

3.4 Global matching for sparse coefficient estimation

Searching for similar patches in a local window is widely used because it is simple and effective. However, in natural images, similar structures may be distributed at different locations across the whole image, as well as in similar external images. A local window excludes a large number of high-quality structures that lie outside it, as shown in Fig. 3. Therefore, we propose a matching method that searches for similar patches over a global area (the patch matrix M) instead of a local window.

Fig. 3 Comparison of finding similar patches with local and global matching: (a) similar patches found by local matching; (b) similar patches found by global matching (the reference patch is marked by a green square and the similar patches by red squares); (c) comparison of the averaging effect of local and global matching

For the i-th patch \(y_i\) of the target image, first find the class \(S_k\) it belongs to. For all patches \(y_j\) in \(S_k\), calculate the distance \(d_{i,j}\) using (4), and then choose the t patches \(y_{i,j}\) with the smallest distances as the similar patches of \(y_i\). The estimate of \(y_i\) is calculated as:

where the averaging weights \(w_{i,j}\) are determined by:

Finally, the key parameter of the sparse coefficient is calculated with the learned sub-dictionary D (\(\varphi_k\)):
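The matching, averaging and coding steps just described can be sketched in NumPy as follows. The exponential weighting kernel, its bandwidth `h`, the normalization of the weights and all function names are assumptions made for illustration, since the exact expressions are not reproduced here; the sub-dictionary is taken to be orthonormal, as a PCA basis would be.

```python
import numpy as np

def cosine_distances(y, Y, eps=1e-12):
    """1 - cosine similarity between vector y and every column of Y."""
    num = Y.T @ y
    den = np.linalg.norm(Y, axis=0) * np.linalg.norm(y) + eps
    return 1.0 - num / den

def estimate_patch(y_i, cluster, t=10, h=0.05):
    """Weighted average of the t patches in `cluster` closest to y_i."""
    d = cosine_distances(y_i, cluster)
    idx = np.argsort(d)[:t]          # indices of the t smallest distances
    w = np.exp(-d[idx] / h)          # assumed exponential kernel
    w /= w.sum()                     # normalize so the weights sum to 1
    return cluster[:, idx] @ w, w

def sparse_estimate(x_hat, D):
    """Code of the averaged patch under an orthonormal sub-dictionary D."""
    return D.T @ x_hat

# Hypothetical cluster: 40 noisy copies of one clean 6x6 patch.
rng = np.random.default_rng(0)
clean = rng.uniform(50.0, 200.0, size=36)
cluster = clean[:, None] + 10.0 * rng.standard_normal((36, 40))
y_i = clean + 10.0 * rng.standard_normal(36)

x_hat, w = estimate_patch(y_i, cluster, t=10)
D, _ = np.linalg.qr(rng.standard_normal((36, 36)))   # stand-in orthonormal basis
beta = sparse_estimate(x_hat, D)
```

In this sketch the search runs over all columns of the cluster, wherever in the image (or in which external image) the patches came from, which is the point of global rather than windowed matching.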

Additionally, the regularization parameter λ can be derived by applying maximum a posteriori (MAP) estimation [28] to the NCSR model:
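In NCSR [12], the MAP derivation under a Laplacian prior on the SCN leads to the expression below, which is presumably the one intended here. The symbols are those explained in the sentence that follows.

```latex
\begin{equation}
\lambda_{i,j} = \frac{2\sqrt{2}\,\sigma_{n}^{2}}{\sigma_{i,j}} \tag{10}
\end{equation}
```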

where \(\sigma_{n}^{2}\) is the noise level of the original image and \(\sigma_{i,j}\) is the noise level of the j-th pixel in the i-th patch. The objective function is:
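Assuming the NCSR form with element-wise weights \(\lambda_{i,j}\), and with \(\Phi \circ \alpha\) denoting patch-wise synthesis followed by averaging (notation assumed from [12]), the objective can be written as:

```latex
\begin{equation}
\alpha_{y} = \arg\min_{\alpha} \left\{ \| y - \Phi \circ \alpha \|_{2}^{2} + \sum_{i} \sum_{j} \lambda_{i,j} \left| \alpha_{i}(j) - \beta_{i}(j) \right| \right\} \tag{11}
\end{equation}
```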

The global matching strategy offers several distinct advantages: (1) the external prior enhances the NSS feature for both sub-dictionary learning and latent clean patch estimation; (2) the sparse coding of patches becomes consistent with the global nature of the sub-dictionary learning process; (3) the denoising performance improves remarkably across different noise levels.

The objective function (11) can be solved efficiently by an iterative shrinkage method [29], and the proposed algorithm with the global matching strategy is illustrated in Algorithm 1.
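For a single patch and an orthonormal sub-dictionary, one shrinkage step toward the estimate β has a closed form, sketched below in NumPy. This is an illustrative per-patch simplification, not a reproduction of Algorithm 1; the function names and the scalar threshold `tau` are assumptions.

```python
import numpy as np

def soft_threshold(v, tau):
    """Element-wise soft-thresholding operator."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def centralized_shrinkage(y_i, D, beta, tau):
    """Minimize ||y_i - D a||_2^2 + 2*tau*||a - beta||_1 for orthonormal D."""
    v = D.T @ y_i                                  # coefficients of the noisy patch
    alpha = soft_threshold(v - beta, tau) + beta   # shrink toward beta, not toward 0
    return alpha, D @ alpha                        # sparse code and reconstructed patch
```

Setting `tau = 0` returns the noisy patch unchanged, while a very large `tau` forces the code to equal `beta`; intermediate values trade data fidelity against agreement with the nonlocal estimate.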

4 Experimental results and discussions

4.1 Evaluation indicators and parameters setting

To verify the effectiveness of the NSS enhancement, the proposed method is tested on the standard test image dataset (set12) and on a real-noise photograph dataset. The results are compared with the classic denoising algorithms K-SVD, EPLL, BM3D, NCSR and WNNM. Peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) are used to evaluate denoising performance.

The parameters of K-SVD, EPLL, BM3D, NCSR and WNNM are left at their defaults, except for several patch-matching parameters in NCSR and the proposed method. Experimentally, the patch size is set to 6 × 6, 7 × 7, 8 × 8 and 9 × 9 for \(\sigma_n \le 20\), \(20 < \sigma_n \le 40\), \(40 < \sigma_n \le 70\) and \(\sigma_n > 70\), respectively, and T is set to 2, 3, 3 and 4 for the same noise ranges. Additionally, the threshold for flat classification is proportional to the total noise level, with a default scale coefficient of 1. For real photograph denoising, the noise level is estimated by the method in [37].
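For reference, PSNR can be computed as in the following NumPy sketch, which assumes 8-bit images with a peak value of 255. The function name and the `peak` parameter are choices made for this example.

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between a reference and an estimate."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

SSIM involves local means, variances and covariances over sliding windows and is usually taken from an existing image processing library rather than reimplemented.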
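The noise-dependent settings listed above can be captured in a small lookup function. The function name is hypothetical, and T is returned as given in the text without assuming what it controls.

```python
def matching_parameters(sigma_n):
    """Return (patch_size, T) for a given noise standard deviation sigma_n."""
    if sigma_n <= 20:
        return 6, 2
    if sigma_n <= 40:
        return 7, 3
    if sigma_n <= 70:
        return 8, 3
    return 9, 4
```

For example, `matching_parameters(50)` selects 8 × 8 patches with T = 3.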

4.2 Test on standard dataset (set12)

Tests are performed on 12 standard test images (shown in Fig. 4) with additive white Gaussian noise (AWGN). The AWGN has zero mean, and its standard deviation \(\sigma_n\) is set to 10, 30, 50, 70 and 100, respectively.
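Such noisy test inputs can be generated as in the following NumPy sketch. The function name and the seeding are assumptions, and the result is kept in floating point without clipping to [0, 255], which is one common convention and not necessarily the one used in these experiments.

```python
import numpy as np

def add_awgn(image, sigma_n, seed=None):
    """Add zero-mean white Gaussian noise with standard deviation sigma_n."""
    rng = np.random.default_rng(seed)
    image = np.asarray(image, dtype=float)
    return image + sigma_n * rng.standard_normal(image.shape)

# One noisy version per tested noise level, for a hypothetical constant image.
clean = np.full((256, 256), 128.0)
noisy = {s: add_awgn(clean, s, seed=0) for s in (10, 30, 50, 70, 100)}
```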

Table 1 and Table 2 show the PSNR and SSIM results of the various sparse representation algorithms, respectively, with bold values marking the best performance in each comparison. The proposed method achieves better performance at almost all noise levels, even under strong noise (\(\sigma_n \ge 50\)). The remarkable improvements under strong noise demonstrate the effectiveness of the NSS enhancement: the merged external images with similar details/structures offer extra useful information and improve the quality of the image prior, whereas the other methods, which rely on the single noisy image only, degrade heavily as the noise level increases and cannot recover the image effectively on their own.

For subjective evaluation, three standard images (Boat, Monarch, Peppers) under strong noise (\(\sigma_n = 100\)) were selected for display (Fig. 5). The same area of each image (marked by a green square) is enlarged to show details. Fig. 5 indicates that some details of the original image are almost indistinguishable because of the severe damage caused by strong noise. By comparison, the K-SVD result is blurred overall after denoising and image details are lost. For EPLL and BM3D, some visual artifacts are suppressed, but a water-ripple effect appears in the smooth areas of the image, mainly caused by the patch-wise processing, while NCSR and WNNM over-smooth the image and lose some texture information. Local window search limits the quantity and quality of matched patches, resulting in obvious errors in the reconstructed structures. With the proposed method and its global matching, more details of the original image are reconstructed, while the flat areas remain visually pleasant.

4.3 Test on real photograph dataset

To further verify the effectiveness of the proposed algorithm, we applied it to real-noise images taken under low-light conditions in a laboratory environment. The dataset contains 8 scenes; for each scene, 5 levels of light intensity below \(3 \times 10^{-3}\) lx and 3 exposure times were chosen, and 5 photos were taken under every condition. The total number of images in our self-made dataset is 8 × 5 × 3 × 5 = 600. Several typical low-light scenes are shown in Fig. 6.

The images are dark overall, with a low signal-to-noise ratio and dead pixels. Such images usually need to be pre-processed before denoising, mainly by defect correction and luminance enhancement. The dataset contains images taken under different ambient light levels with the same shooting parameters. In general, the lower the brightness, the lower the signal-to-noise ratio and the stronger the noise after digital amplification. Since the ground truth of these images is extremely difficult to obtain, we only make a subjective comparison, as shown in Fig. 7.

The comparison is similar to that on the standard dataset. Overall, the EPLL and BM3D results still show slight artifacts in flat regions and along edge structures, and the NCSR and WNNM results tend to be over-smoothed. In preserving details and textures such as characters and edge lines, EPLL, BM3D and NCSR are not as good as the proposed algorithm.

5 Conclusions

In this paper, the limitations of reported sparse representation methods for image denoising are studied. Based on the non-local clustering framework, we proposed an NSS-enhanced denoising algorithm. By combining external reference images with a global matching strategy, the quantity and quality of similar patches for the sparse prior are improved, making the NSS-based method more effective. Tests on the standard dataset and the real low-light dataset indicate that the proposed algorithm restores details and textures effectively during denoising and produces visually pleasant images. The proposed method surpasses previous methods of the same kind and achieves competitive performance even when compared with notable low-rank methods.

Methods based on sparse model optimization have promoted many advanced imaging techniques. However, they are time-consuming, which limits their application in the real world. In particular, with the fast development of machine learning, many deep learning-based denoising methods have been proposed [38,39,40,41]. These are classified as discriminative learning methods, with the advantages of excellent performance on specific problems and fast processing speed, and the disadvantages of poor generalization ability and the difficulty of collecting training pairs.

In contrast, model optimization methods such as sparse representation generalize well and provide relatively satisfactory denoising performance, and improving their processing speed is our next focus. Inspired by deep learning work on image denoising, a natural approach is to convert the solution of a sparse model into a network resembling a deep learning network. This is expected to combine both advantages: retaining the image prior for better generalization, and transferring the time-consuming work to the pre-training stage. The final inference could then be highly efficient with GPU hardware acceleration.

References

[1] Buades, A., Coll, B., Morel, J.M.: A review of image de-noising algorithms with a new one. J. Multiscale Model. Simul. 4(5), 490–530 (2010)

[2] Zhang, L., Dong, W., Zhang, D., Shi, G.: Two-stage image denoising by principal component analysis with local pixel grouping. Pattern Recogn. 43(4), 1531–1549 (2010)

[3] Dabov, K., Foi, A., Katkovnik, V., et al.: Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

[4] Xu, J., Zhang, L., Zuo, W., Zhang, D., Feng, X.: Patch group based nonlocal self-similarity prior learning for image denoising. Proc. IEEE International Conference on Computer Vision, October 2015, pp. 64–78

[5] Mairal, J., Bach, F., Ponce, J., Sapiro, G., Zisserman, A.: Non-local sparse models for image restoration. Proc. IEEE International Conference on Computer Vision, September 2009, pp. 2272–2279

[6] Dong, W., Shi, G., Li, X.: Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans. Image Process. 22(2), 700–711 (2013)

[7] Huang, W., Wang, Q., Li, X.L.: Denoising-based multiscale feature fusion for remote sensing image captioning. IEEE Geosci. Remote Sens. Lett. 18(3), 436–440 (2021)

[8] Yuan, Y., Lin, J.Z., Wang, Q.: Hyperspectral image classification via multitask joint sparse representation and stepwise MRF optimization. IEEE Trans. Cybern. 46(12), 2966–2977 (2016)

[9] Kaur, M., Sharma, K., et al.: Image denoising using wavelet thresholding. Proc. Int. J. Eng. Comput. Sci. 2(10), 2932–2935 (2012)

[10] Elad, M., et al.: Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 15(12), 3736–3745 (2006)

[11] Zoran, D., Weiss, Y.: From learning models of natural image patches to whole image restoration. Proc. IEEE International Conference on Computer Vision, November 2011, pp. 479–486

[12] Dong, W., Zhang, L., Shi, G., Li, X.: Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 22(4), 1620–1630 (2013)

[13] Wang, S., Zhang, L., Liang, Y.: Nonlocal spectral prior model for low-level vision. Proc. 11th Asian Conference on Computer Vision, November 2012

[14] Candes, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9, 717–772 (2009)

[15] Chen, F., Zhang, L., Yu, H.M.: External patch prior guided internal clustering for image denoising. Proc. IEEE International Conference on Computer Vision, December 2015, pp. 603–611

[16] Guo, Q., Zhang, C.M., et al.: An efficient SVD-based method for image denoising. IEEE Trans. Circuits Syst. Video Technol. 26(5), 868–880 (2016)

[17] Gu, S.H., Zhang, L., et al.: Weighted nuclear norm minimization with application to image denoising. Proc. IEEE Conference on Computer Vision and Pattern Recognition, June 2014, pp. 1–8

[18] Xie, Y., Gu, S.H., et al.: Weighted Schatten p-norm minimization for image denoising and background subtraction. IEEE Trans. Image Process. 25(10), 4842–4857 (2016)

[19] Wu, T., Zhang, R., Jiao, Z.H., et al.: Adaptive spectral rotation via joint cluster and pairwise structure. IEEE Trans. Knowl. Data Eng. (Early Access), April (2021)

[20] Zhang, R., Li, X.L., Zhang, H.Y., et al.: Geodesic multi-class SVM with Stiefel manifold embedding. IEEE Trans. Pattern Anal. Mach. Intell. (Early Access), March (2021)

[21] Zhang, R., Li, X.L.: Unsupervised feature selection via data reconstruction and side information. IEEE Trans. Image Process. 29, 8097–8106 (2020)

[22] Zhang, R., Zhang, H.Y., Li, X.L.: Robust multi-task learning with flexible manifold constraint. IEEE Trans. Pattern Anal. Mach. Intell. 43(6), 2150–2157 (2021)

[23] Zhang, R., Tong, H.H.: Robust principal component analysis with adaptive neighbors. 33rd Conference on Neural Information Processing Systems (2019)

[24] Gu, S.H., Xie, Q., et al.: Weighted nuclear norm minimization and its applications to low level vision. Int. J. Comput. Vision 121(7), 183–208 (2017)

[25] Dong, W., Zhang, L., et al.: Centralized sparse representation for image restoration. Proc. IEEE International Conference on Computer Vision, November 2011, pp. 1259–1266

[26] Candes, E.J.: Compressive sampling. Proceedings of the International Congress of Mathematicians, Madrid, Spain (2006)

[27] Candes, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

[28] Candes, E.J., Romberg, J.: Sparsity and incoherence in compressive sampling. Inverse Problems 23, 969–985 (2007)

[29] Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004)

[30] Zhang, L., Zuo, W.M.: Image restoration: from sparse and low-rank priors to deep priors. IEEE Signal Process. Mag. 34(5), 172–179 (2017)

[31] Donoho, D.L.: De-noising by soft-thresholding. IEEE Trans. Inf. Theory 41(3), 613–627 (1995)

[32] Sendur, L., Selesnick, I.W.: Bivariate shrinkage functions for wavelet-based denoising exploiting interscale dependency. IEEE Trans. Signal Process. 50(11), 2744–2756 (2002)

[33] Olshausen, B.A., Field, D.J.: Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381(13), 607–609 (1996)

[34] Gordo, A., Almazan, J., et al.: Deep image retrieval: learning global representations for image search. Proc. European Conference on Computer Vision, September 2016, pp. 241–257

[35] Shlens, J.: A tutorial on principal component analysis. arXiv:1404.1100, April (2014)

[36] Elad, M., Aharon, M.: Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 15(12), 3736–3745 (2006)

[37] Zoran, D., Weiss, Y., et al.: Scale invariance and noise in natural images. Proc. IEEE 12th International Conference on Computer Vision, October 2009, pp. 2209–2216

[38] Chen, Y., Pock, T.: Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1256–1272 (2017)

[39] Zhang, K., Zuo, W.M., et al.: Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 26(7), 3142–3155 (2017)

[40] Zhang, K., Zuo, W.M., Zhang, L.: FFDNet: toward a fast and flexible solution for CNN based image denoising. IEEE Trans. Image Process. 27(9), 4608–4622 (2018)

[41] Dong, W., Wang, P.Y., et al.: Denoising prior driven deep neural network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 41(10), 2305–2318 (2019)

Cite this article

Zhou, T., Li, C., Zeng, X. et al. Sparse representation with enhanced nonlocal self-similarity for image denoising. Machine Vision and Applications 32, 110 (2021). https://doi.org/10.1007/s00138-021-01232-3
