Abstract

Image mosaic is a useful preprocessing step for background subtraction in videos recorded by a moving camera. To avoid ghosting and mosaic failure caused by large exposure differences and large parallax between adjacent images, this paper proposes a mosaic algorithm named Combined SIFT and Dynamic Programming (CSDP). Based on SIFT matching and dynamic programming, CSDP uses an improved optimal seam searching criterion that provides “protection mechanisms” for moving objects through an edge-enhanced weighting intensity difference operator, and thereby resolves the ghosting and incomplete-object effects induced by moving objects. The proposed method was compared with three widely used mosaic software packages (AutoStitch, Microsoft ICE, and Panorama Maker) and with Mills’ approach in multiple scenes. Experimental results show the feasibility and effectiveness of the proposed method.

1 Introduction

Background subtraction is an active research topic in computer vision and has many applications in video surveillance, human-machine interaction and sports video analysis [1, 8, 25–27]. In real-world applications, videos are often recorded by a moving camera, in which case standard background subtraction algorithms do not work. A possible solution is to first generate wide-angle images with image mosaic software and then apply standard background subtraction algorithms to those images. Over the past few decades, a large number of mosaic algorithms have been proposed and achieve good performance [12, 22, 28]. Several mosaic software packages are also available, such as AutoStitch [2], Microsoft ICE, and Panorama Maker. However, to ensure a good mosaic result, most current algorithms and software packages require the following constraints to be met:

1. The scenes must be static. Most mosaic algorithms are designed for static scenes. If a scene contains moving objects such as vehicles, pedestrians, or leaves in the wind, ghosting appears in the mosaic result or, even worse, the mosaic fails because of the parallax of the moving objects [2, 14].

2. The camera should rotate about its optical center when capturing images. A large deviation from the optical center also causes ghosting and can result in mosaic failure [2, 4, 10, 17, 20].

3. Large exposure differences between images are not allowed. A large exposure difference leads to inaccurate registration and mosaic failure [3, 24].

Some work has tried to solve the dynamic mosaic problems mentioned above. Echigo et al. [7] and Irani et al. [13] used a median filtering operator to eliminate ghosting in their mosaic processes. However, these methods require large overlapping regions without obvious intensity differences between the images. Shum and Szeliski [21, 23] proposed a method that uses a set of transformations to construct a full-view panorama, but it is not suitable for mosaic conditions with strong motion parallax.

Davis [3] first used phase correlation to perform image registration, then calculated the intensity difference between the registered images in the overlapping regions, and finally searched for the optimal stitching line using the Dijkstra algorithm. However, if there are large exposure differences between the registered images, the optimal stitching line may not be found using intensity difference alone.

Mills and Dudek [19] proposed an optimal seam searching criterion that combines intensity difference and gradient difference. The Dijkstra algorithm was then used to optimize the seam line search. However, if a moving object is similar to the background of the adjacent image to be matched, the optimal seam line may divide the moving object into two parts. In this case, the composite image would contain ghosting.

Image registration is a critical step in image mosaic. The commonly used registration methods fall into three classes: template matching, mutual information (MI), and feature-based methods. Template matching methods first obtain a matching template by selecting a window from the overlapping regions [15], and then search the other image for the position with the highest matching degree. Such methods can solve image registration with low computational complexity when there is only pure translation. MI-based methods were originally proposed for the registration of multi-modality images and have been commonly used in medical image processing in recent years [29]. However, MI-based approaches often fail in the presence of serious occlusions. Mikolajczyk and Schmid [18] compared several popular feature point matching algorithms and concluded that the SIFT algorithm [16] outperforms other feature-based algorithms in many respects. The SIFT feature has many advantages: it is invariant to image scale and rotation, and robust to affine distortion, occlusion, noise, and illumination variations.

In this paper, to address the problems mentioned above, we propose an algorithm named CSDP, which is designed mainly for dynamic scenes with moving objects, large exposure differences and large parallax. First, SIFT matching is applied to perform image registration. Then we propose an optimal seam searching criterion modified from Mills and Dudek [19]. The criterion combines gradient difference with an edge-enhanced weighting intensity difference, which provides an effective mechanism for avoiding problems caused by moving objects, so that the stitching line bypasses them. Subsequently, we search for the stitching line under the improved criterion by applying dynamic programming [6], which is simpler than the Dijkstra algorithm. Finally, a multi-resolution image fusion method is used to minimize inconsistency near the stitching line.

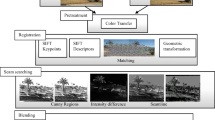

In the following sections, we give more details about the proposed method using the example images in Fig. 1. To verify the effectiveness of the proposed method, we compare it with three popular software packages (AutoStitch, Microsoft ICE, and Panorama Maker) as well as Mills’ approach.

2 CSDP algorithm for dynamic scene mosaic

There are mainly three steps in the proposed method: image registration, improved optimal seam searching, and multi-resolution image fusion.

2.1 Image registration

As mentioned in Sect. 1, we adopt SIFT feature matching to register two images, which involves the following four steps:

Step 1 Extracting SIFT descriptors from the two adjacent images to be registered.

Step 2 Applying an approximate nearest neighbor algorithm for SIFT descriptor matching.

Step 3 Deleting the mismatched points (outliers, called outline points here) with the RANSAC algorithm [9]. As shown in Fig. 2, the correctly matched points (inliers, called inline points here) are marked with red circles, and the mismatched points deleted by RANSAC are marked with green circles.

Step 4 Computing the projection between the two images from the matching points using the general linear least squares method or a more recently developed approach such as [30].
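Steps 3 and 4 can be illustrated with a minimal sketch. It is not the implementation used in this paper: for brevity it assumes a pure translation between the two images instead of a full projection, and the function name `ransac_translation`, the tolerance `tol` and the iteration count are hypothetical choices made for illustration only.

```python
import random

def ransac_translation(matches, tol=2.0, iterations=100, seed=0):
    """matches: list of ((x1, y1), (x2, y2)) point pairs between two images.

    Assumption: the images differ by a pure translation (a simplification
    of the projection estimated in Step 4).
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    best_inliers = []
    for _ in range(iterations):
        # A translation needs only one pair as its minimal sample.
        (x1, y1), (x2, y2) = rng.choice(matches)
        dx, dy = x2 - x1, y2 - y1
        # Pairs whose displacement agrees with the hypothesis are inline points.
        inliers = [
            m for m in matches
            if abs(m[1][0] - m[0][0] - dx) <= tol
            and abs(m[1][1] - m[0][1] - dy) <= tol
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Least-squares refit on the inline points: for a translation, the mean shift.
    n = len(best_inliers)
    dx = sum(m[1][0] - m[0][0] for m in best_inliers) / n
    dy = sum(m[1][1] - m[0][1] for m in best_inliers) / n
    return (dx, dy), best_inliers
```

The pairs that are not returned in the inline set play the role of the outline points removed in Step 3.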

2.2 Improved optimal seam searching

After registration (i.e., once the projection matrix has been computed), we can determine the overlapping region between two adjacent images. We then stitch the registered images together along a seam in the overlapping region. The ideal optimal seam should satisfy the following requirements [6]:

- The difference in intensity around the stitch line should be minimal.

- The difference in geometrical structure around the stitch line should be minimal.

In practice, it is very hard to meet both requirements at the same time, since exposure differences between images taken by a hand-held camera are inevitable. To overcome this problem, we first correct the exposure difference using the inline matching points mentioned in Sect. 2.1. After exposure correction, we introduce an edge-enhanced weighting intensity difference (EWID) operator to replace the traditional intensity difference used in Mills and Dudek [19]. The similarity of geometric structure in the overlapping region is formulated by the gradient difference. The detailed procedures are introduced in the following subsections.

2.2.1 Exposure correction

Exposure differences between images are unavoidable, whether the images are taken by the same camera at different times or by different cameras at the same time. Assuming that the optical reflection characteristics of objects in the overlapping regions remain constant, we can model the exposure correction between two images linearly:

$$\begin{aligned} pixel_{2}=\alpha \, pixel_{1}+\beta \end{aligned}$$(1)

where \(pixel_{1}\) and \(pixel_{2}\) represent the pixel values of the original image and of the image after exposure correction, respectively, \(\alpha \) is the gain and \(\beta \) is the bias.

To calculate the parameters \(\alpha \) and \(\beta \), we first denoise images \(I_{1}\) and \(I_{2}\) by Gaussian blur filtering. Let \(I'_{1} \) and \( I'_{2}\) denote the denoised images. We then compute \(\alpha \) and \(\beta \) by the least squares method based on the inline points mentioned in Sect. 2.1. Suppose that \(pixel'_{1} \in I'_{1}\) and \(pixel'_{2} \in I'_{2}\) are the pixel values of a pair of inline matching points from adjacent images after Gaussian blur filtering. The linear equation of \(\{ \alpha ,\beta \} \) is formulated as

where \(A=[pixel'_{2i},1]\), \(m=[\alpha ,\beta ]^{T}\), \(i=1,2,\ldots ,n \), and \(n\) is the total number of inline points. The results of the exposure correction are illustrated in Fig. 3a (without Gaussian blur filter) and Fig. 3b (with Gaussian blur filter). As can be seen, the exposure correction model with Gaussian blur is more robust and accurate.

Fig. 3 An illustration of the effectiveness of the proposed exposure correction. The red snowflake points represent the inline matching point pairs extracted by SIFT, and the straight line is fitted by linear least squares. a The linear fitting result without Gaussian blur preprocessing and b the linear fitting result after Gaussian blur preprocessing
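The least squares estimate of the gain and bias can be sketched as below. This is an illustrative sketch only: the function names are hypothetical, the inputs are assumed to be the (already blurred) pixel values sampled at the inline matching points, and which image serves as the source and which as the target is left to the caller.

```python
def fit_gain_bias(src, dst):
    """Fit dst ~ gain * src + bias by ordinary least squares.

    src, dst: equal-length lists of pixel values sampled at the inline
    matching points of the two (denoised) images.
    """
    n = len(src)
    mean_s = sum(src) / n
    mean_d = sum(dst) / n
    var = sum((s - mean_s) ** 2 for s in src)
    if var == 0:
        raise ValueError("source pixel values are constant; gain is undefined")
    cov = sum((s - mean_s) * (d - mean_d) for s, d in zip(src, dst))
    gain = cov / var
    bias = mean_d - gain * mean_s
    return gain, bias

def correct_exposure(pixel, gain, bias):
    """Apply the linear model and clamp to the 8-bit range (an assumption)."""
    return min(255.0, max(0.0, gain * pixel + bias))
```

With more matching points than unknowns, the fit averages out the noise of individual pairs, which is also why denoising the images before sampling helps.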

2.2.2 Edge-enhanced weighting intensity difference

In Mills and Dudek [19], the intensity difference is obtained by a pixel-by-pixel normalized ratio

$$\begin{aligned} \delta ^{I}_{ij}=\frac{abs(Im_{1_{ij}}-Im_{2_{ij}})}{max(Im_{1_{ij}},Im_{2_{ij}})} \end{aligned}$$(3)

where \(Im_{1_{ij}}\) and \(Im_{2_{ij}}\) are corresponding pixels in the overlapping regions of adjacent images, so that \(\delta ^{I}_{ij} \in [0,1]\). The intensity difference computed using Eq. 3 is shown in Fig. 4a.

In Fig. 4a, we can see multiple moving objects: a bus, cars, and a biker. Here, we focus on the moving bus, which overlaps with the static tree. The traditional intensity difference method divides the moving bus into multiple parts. To overcome this problem, we propose an improved edge-enhanced weighting intensity difference (EWID). The detailed procedures are summarized as follows:

Step 1 Denoising images \(Im_{1}\) and \(Im_{2}\) with a \(5\times 5 \) Gaussian blur kernel \(G(x,y,\delta )\), where \(*\) denotes convolution:

$$\begin{aligned} Im'_{1}&= G(x,y,\delta ) * Im_{1} \nonumber \\ Im'_{2}&= G(x,y,\delta ) * Im_{2} \end{aligned}$$(4)

Step 2 Building the intensity difference by

$$\begin{aligned} \delta ^{I}_{ij}=\frac{abs(Im'_{1_{ij}}-Im'_{2_{ij}})}{max(Im'_{1_{ij}},Im'_{2_{ij}})} \end{aligned}$$(5)

Step 3 Threshold binarization:

$$\begin{aligned} Im^{msk}_{ij}= {\left\{ \begin{array}{ll} 1 &{} (\delta ^{I}_{ij} \ge Thrd )\\ 0 &{} (\delta ^{I}_{ij} < Thrd ) \end{array}\right. } \end{aligned}$$(6)

Here, \(Im^{msk}_{ij}\) is the binary image of \(\delta ^{I}_{ij}\). We set the threshold \(Thrd\) to 0.7 in this paper, which achieved the best performance in the experiments.

Step 4 Applying a morphological dilation to the binary image \(Im^{msk}\) twice, and then connecting the dilated image with 4 pixels in both the horizontal and vertical directions to generate the image \(Im^{msk'}\), which is shown in Fig. 4b.

Step 5 A large intensity-difference value \(weight\) is assigned to the edges of moving objects by computing the average of image \(Im^{msk'}\). In this paper, we set the parameter \(weight\) to 5. After enhancing the weights on the edges, the intensity difference image \(\delta ^{I'}_{ij}\) is computed as

$$\begin{aligned} \delta ^{I'}_{ij}= {\left\{ \begin{array}{ll} weight &{} (Im^{msk'}_{ij}=1 \cap \delta ^{I}_{ij}<Thrd )\\ \delta ^{I}_{ij} &{} (else ) \end{array}\right. } \end{aligned}$$(7)

Figure 4c shows the EWID image \(\delta ^{I'}\), where the green pixels take the value \(weight\). In Fig. 4c, the edges of each moving object (bus, cars and biker) are connected as a whole. As described in the following sections, this improvement effectively prevents the seam from dividing moving objects into several parts.
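The core of the EWID computation (Eqs. 5 to 7) can be sketched on small grids as follows. The sketch makes several simplifying assumptions that are not taken from the paper: the Gaussian denoising of Step 1 and the 4-pixel connection of Step 4 are omitted, the dilation uses a \(3\times 3\) square structuring element, and pixels where both images are 0 are given a difference of 0. The function names are hypothetical.

```python
def dilate(mask):
    """One binary dilation with a 3x3 square structuring element (assumed)."""
    rows, cols = len(mask), len(mask[0])
    return [[1 if any(mask[a][b]
                      for a in range(max(i - 1, 0), min(i + 2, rows))
                      for b in range(max(j - 1, 0), min(j + 2, cols))) else 0
             for j in range(cols)] for i in range(rows)]

def ewid(im1, im2, thrd=0.7, weight=5.0, dilations=2):
    """Edge-enhanced weighting intensity difference for two aligned grids."""
    rows, cols = len(im1), len(im1[0])
    # Eq. 5: pixel-by-pixel normalized ratio (0 where both pixels are 0).
    diff = [[abs(a - b) / max(a, b) if max(a, b) > 0 else 0.0
             for a, b in zip(r1, r2)] for r1, r2 in zip(im1, im2)]
    # Eq. 6: threshold binarization.
    mask = [[1 if d >= thrd else 0 for d in row] for row in diff]
    # Step 4 (simplified): dilate the mask twice.
    for _ in range(dilations):
        mask = dilate(mask)
    # Eq. 7: pixels added by the dilation receive the large weight.
    return [[weight if mask[i][j] == 1 and diff[i][j] < thrd else diff[i][j]
             for j in range(cols)] for i in range(rows)]
```

Because \(\delta ^{I}\) never exceeds 1 while \(weight\) is 5, the ring produced around each strongly differing region is more expensive to cross than any ordinary pixel, which is what discourages the seam from cutting through a moving object.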

2.2.3 Gradient difference

If the exposure differences between two images are strongly nonlinear, the exposure correction becomes ineffective. In this case, the intensity differences cannot effectively express the similarity between images, so we set up the gradient images \(\Delta Im_{1}\) and \(\Delta Im_{2}\) for those images.

The gradient difference \(\delta ^{\Delta }\) in this paper is computed in the same way as the traditional intensity difference \(\delta ^{I}\), with the gradient images in place of the intensity images. An example of the gradient difference image is shown in Fig. 5.
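A small sketch illustrates why a gradient-based difference is less sensitive to exposure than an intensity-based one. The gradient operator used here (sum of absolute forward differences with replicated borders) is an assumption chosen for brevity, not the operator used in this paper, and the function names are hypothetical.

```python
def gradient_magnitude(img):
    """Assumed operator: |forward difference in x| + |forward difference in y|."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            gx = img[i][min(j + 1, cols - 1)] - img[i][j]
            gy = img[min(i + 1, rows - 1)][j] - img[i][j]
            out[i][j] = abs(gx) + abs(gy)
    return out

def normalized_difference(a, b):
    """Pixel-by-pixel normalized ratio, as for the intensity difference."""
    return [[abs(x - y) / max(x, y) if max(x, y) > 0 else 0.0
             for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
```

For two grids that differ only by a constant brightness offset, the gradient images coincide and the gradient difference is zero everywhere, whereas the intensity difference is not.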

2.2.4 Optimal seam searching criteria

Based on the combination of EWID and gradient difference, we establish the optimal seam line searching criteria as follows:

In this formula, we select \(w_{1} \) and \(w_{2} \) by adopting the weighting method in Duplaquet [6]:

Here, \(w_{2}\) is the weight of the gradient difference. During the mosaic process with huge exposure difference, the accuracy of exposure correction using a linear model will be reduced, and the reliability of the similarity measurement between intensity differences will also be decreased. In that case, the gradient difference will be assigned a greater weight.

where \(w_{1}\) is the weight of intensity difference. If the value is negative, we set it to be 0.

After the procedure above, we obtain the final seam searching criterion, as shown in Fig. 6. Because the exposure difference between these two example images is minimal, \(w_{1}\) tends to 1. Therefore, the image of the seam searching criterion is similar to the EWID image shown in Fig. 4c.
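The combination of the two difference maps can be sketched as below. This is only an illustration under the assumption that the criterion is a per-pixel weighted sum of the EWID map and the gradient difference map; the weights are passed in directly rather than derived by the weighting method of [6], and the function name `seam_cost` is hypothetical. The only rule taken from the text is that a negative \(w_{1}\) is set to 0.

```python
def seam_cost(ewid_map, grad_diff, w1, w2):
    """Per-pixel weighted sum of two equally sized cost maps (assumed form)."""
    w1 = max(0.0, w1)  # a negative intensity weight is set to 0
    return [[w1 * e + w2 * g for e, g in zip(row_e, row_g)]
            for row_e, row_g in zip(ewid_map, grad_diff)]
```

When \(w_{1}\) is close to 1 and \(w_{2}\) close to 0, the result is essentially the EWID map, which matches the observation about Fig. 6; when the intensity weight is clamped to 0, only the gradient difference remains.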

2.2.5 Optimal seam searching implementation

In this section, we use dynamic programming with Eq. 9 to find the optimal seam. Suppose that each pixel position in the first row of the overlapping region corresponds to a seam line; the optimal seam is then the one with the minimal value according to the seam searching criterion from the previous section. The main steps are summarized as follows:

- Initialization. As shown in Fig. 7a, each pixel on the first row corresponds to a seam line and is assigned the value calculated by Eq. 9.

- Expansion, row by row, until the last row is reached. For each seam line, the three neighboring pixels on the next row are compared, as shown in Fig. 7b; the column with the minimum value is taken as the extension direction, its value is added to the accumulated value of the seam, and the current point of the seam is updated to that column.

Fig. 7 An illustration of the optimal seam searching method. Each circle represents a pixel value obtained using Eq. 9. a Initialization. b Expanding for the first time

The complexity of the above process is \(O(mn)\), where \(m\) and \(n\) denote the numbers of rows and columns, respectively. The proposed method is more efficient than the Dijkstra algorithm [5], whose complexity is \(O(mn^2)\).
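The expansion procedure can be sketched as follows, under one reading of the steps above: every column of the first row starts a seam, each seam is extended greedily to the cheapest of its (up to) three neighbors on the next row, and the seam with the smallest accumulated value is kept. The function name and the tie-breaking rule (leftmost minimum) are assumptions; note that this differs from a cumulative-cost formulation, in which minima are propagated for every pixel.

```python
def find_seam(cost):
    """cost: 2-D list of criterion values for the overlapping region.

    Returns (path, total), where path[r] is the seam column on row r.
    """
    rows, cols = len(cost), len(cost[0])
    best_path, best_total = None, None
    for start in range(cols):            # initialization: one seam per column
        col, total, path = start, cost[0][start], [start]
        for r in range(1, rows):         # expansion, one row at a time
            neighbors = [c for c in (col - 1, col, col + 1) if 0 <= c < cols]
            col = min(neighbors, key=lambda c: cost[r][c])
            total += cost[r][col]
            path.append(col)
        if best_total is None or total < best_total:
            best_path, best_total = path, total
    return best_path, best_total
```

Each of the \(n\) seams visits \(m\) rows and inspects at most three pixels per row, which gives the \(O(mn)\) cost. Pixels carrying the large EWID weight raise the accumulated value of any seam that crosses them, so such seams are unlikely to be selected.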

In Fig. 8, the red line is the optimal seam obtained by dynamic programming. The optimal seam without the improvement is shown in Fig. 8a; this seam line cuts the moving bus into two parts. The optimal seam after the improvement is shown in Fig. 8b. Because of the edge-enhanced weighting intensity difference, the moving bus keeps its integrity, and there is no incomplete object in the mosaic image. Figure 9 shows the mosaic result of the example images in Fig. 1, where the stitch line is drawn in black. In Fig. 9a, the red box shows that the composited image contains a residual of the moving bus, whereas there is no such problem in Fig. 9b.

2.3 Multi-resolution mosaic based on optimal seam

To minimize the inconsistency near the optimal seam, we adopted the multi-resolution method [11] to stitch two images \(I_A\) and \(I_B\) along the optimal seam. We first generate a mask \(I_R\) based on the optimal seam: \(I_R\) is set to 1 on the left side of the seam line and 0 on the right side, so that in Eq. 12 the left image \(I_A\) contributes on the left of the seam. The steps of composition are summarized as follows:

Step 1 Generating Laplacian pyramids \(L_A\) and \(L_B\) for \(I_A\) and \(I_B\), respectively;

Step 2 Generating Gaussian pyramid \(G_R\) for \(I_R\);

Step 3 Compositing the image pyramid \(S\). Layer \(l\) of \(S\) is formulated as

$$\begin{aligned} LS_l(i, j)&= G_{Rl}(i, j)L_{Al}(i, j)\nonumber \\&\quad +\,(1-G_{Rl}(i, j))L_{Bl}(i, j) \end{aligned}$$(12)

where \(G_{Rl}(i, j)\) represents layer \(l\) of the Gaussian pyramid of the mask image, and \(L_{Al}(i, j)\) and \(L_{Bl}(i, j)\) represent layer \(l\) of the Laplacian pyramids of the left and right images, respectively.

Step 4 Rebuilding \(S\) from the top layer to the bottom layer.
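The composition steps can be sketched in one dimension, treating a single image row as the signal. This is a simplified illustration rather than the implementation used in this paper: the \([1, 2, 1]/4\) blur kernel, nearest-neighbor expansion and all function names are assumptions, and the mask value 1 selects the first signal, following the weighting in Eq. 12.

```python
def blur(x):
    """Smooth with a [1, 2, 1]/4 kernel, replicating the border samples."""
    n = len(x)
    return [(x[max(i - 1, 0)] + 2 * x[i] + x[min(i + 1, n - 1)]) / 4.0
            for i in range(n)]

def expand(x, n):
    """Nearest-neighbor upsampling of x to length n."""
    return [x[min(i // 2, len(x) - 1)] for i in range(n)]

def gaussian_pyramid(x, levels):
    pyr = [[float(v) for v in x]]
    for _ in range(levels - 1):
        pyr.append(blur(pyr[-1])[::2])   # blur, then keep every other sample
    return pyr

def laplacian_pyramid(x, levels):
    g = gaussian_pyramid(x, levels)
    lap = [[a - b for a, b in zip(g[l], expand(g[l + 1], len(g[l])))]
           for l in range(levels - 1)]
    lap.append(g[-1])                    # the top layer keeps the coarse signal
    return lap

def blend(a, b, mask, levels=2):
    la, lb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    gr = gaussian_pyramid(mask, levels)
    # Eq. 12 applied layer by layer.
    ls = [[m * p + (1 - m) * q for m, p, q in zip(gr[l], la[l], lb[l])]
          for l in range(levels)]
    out = ls[-1]
    for l in range(levels - 2, -1, -1):  # rebuild from the top layer down
        out = [u + v for u, v in zip(expand(out, len(ls[l])), ls[l])]
    return out
```

Since the mask is smoothed more strongly at coarser layers, low-frequency content such as a residual brightness offset is mixed over a wide band around the seam, while fine detail switches sharply at the seam itself.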

3 Experiment results

We implemented the proposed CSDP algorithm with OpenCV 2.0. To verify the effectiveness of CSDP, we compared it with three popular mosaic software packages (AutoStitch, Microsoft ICE, and Panorama Maker) and Mills’ approach on multiple groups of pictures from multiple scenes that contain moving objects, large exposure differences and strong motion parallax. Among them, Microsoft ICE, Panorama Maker and Mills’ approach have an optimal seam searching function, but AutoStitch does not. The following sections give more details for three different scenes.

3.1 General dynamic scene

Figure 10 shows the mosaic results of the example images in Fig. 1 obtained by AutoStitch, Microsoft ICE, Panorama Maker, Mills’ approach and our method (CSDP). From the comparison, we can draw the following conclusions: AutoStitch exhibits ghosting on moving objects, as shown in Fig. 10c; Mills’ approach and Microsoft ICE find only a locally optimal seam with no protection mechanism for moving objects, so the mosaic by Mills’ approach has a slightly incomplete object, identified by the red rectangle in Fig. 10d, and that by Microsoft ICE has an obviously incomplete moving bus, as shown in Fig. 10a; Panorama Maker and CSDP obtain good mosaic results, as shown in Fig. 10b, e, respectively.

Figure 11 shows the mosaic results of another group of pictures taken from the same scene as in Fig. 10. AutoStitch still exhibits ghosting on the moving object. Microsoft ICE and Panorama Maker produce an incomplete moving car, as shown in Fig. 11a, b. CSDP and Mills’ approach obtain good mosaic results in this scene, as shown in Fig. 11e, d, respectively.

3.2 Dynamic scene with exposure difference

Figure 12 shows a group of pictures taken by a hand-held camera in our laboratory. During shooting, one subject moved on purpose, and we artificially added a large exposure difference. As shown in Fig. 12b, e, ghosting caused by the moving head appears in the mosaic results of Microsoft ICE and of Mills’ approach, marked by the red rectangles. In this scene, Panorama Maker cannot register the images well, which causes severe dislocation in the composited image, as shown in Fig. 12c. As for AutoStitch, the red rectangle in Fig. 12d shows that its ghosting is worse than that of Microsoft ICE. Before improving the searching criterion, as shown in Fig. 12g, we obtain the same optimal seam line as Microsoft ICE and Mills’ approach. With the improved optimal seam shown in Fig. 12h, we obtain a good mosaic result, as shown in Fig. 12f.

3.3 Strong motion parallax scene

Figure 13a shows a group of images with strong motion parallax. As shown in Fig. 13b, c, because of inaccurate registration and their seam-searching strategies, both Microsoft ICE and Panorama Maker show dislocation, marked by the red rectangles. AutoStitch produces severe ghosting in multiple places, such as the lamp and the computer, as shown in Fig. 13d, while Mills’ approach and CSDP obtain good mosaic results, as shown in Fig. 13e, f, respectively.

Figure 14a contains two pictures taken by a hand-held camera in an outdoor environment with strong motion parallax. As shown in Fig. 14b, c, Panorama Maker, Mills’ approach and CSDP obtain effective mosaic results. However, Microsoft ICE and AutoStitch cannot register the images, which results in incomplete mosaics.

3.4 Experimental analysis

Since AutoStitch does not have an optimal seam searching function, it produces ghosting and mosaic failure in the presence of moving objects and strong motion parallax. Microsoft ICE, Panorama Maker and Mills’ approach have an optimal seam function, but with a limited scope of application. In general dynamic scenes and under some uncertain conditions, the mosaic images obtained by Microsoft ICE, Panorama Maker and Mills’ approach contain incomplete moving objects. In dynamic scenes with large exposure differences among images, Panorama Maker sometimes cannot finish the matching process. The optimal seam search used by Microsoft ICE and Mills’ approach reaches only a local optimum and does not consider the integrity of moving objects. In scenes with strong motion parallax, Panorama Maker shows dislocation due to misregistration, and Microsoft ICE and AutoStitch sometimes cannot finish the mosaic process because no matching images are found. In these experiments, our CSDP algorithm obtained effective mosaic results in every scene by using the improved optimal seam searching criterion.

4 Conclusion

Mosaic failure often happens in dynamic scenes due to large exposure differences and strong motion parallax. To solve these problems, we proposed the CSDP mosaic algorithm based on SIFT matching and dynamic programming. The main contributions are summarized as follows:

- SIFT descriptors are invariant to rotation, image scaling and brightness variations, and partially invariant to view angle, affine transformation and noise. For these reasons, we use the SIFT operator to match images. We further separate the inline points from the outline points among the matched point pairs with the RANSAC algorithm.

- Based on Mills and Dudek [19], we proposed an improved optimal seam line searching criterion. The criterion combines gradient difference with the proposed edge-enhanced weighting intensity difference operator, which provides an effective protection mechanism for moving objects so as to avoid ghosting and incomplete objects.

- We combined the multi-resolution fusion algorithm with the optimal seam line searching strategy to minimize inconsistency near the stitching lines.

References

1. Bouwmans, T.: Recent advanced statistical background modeling for foreground detection: a systematic survey. Recent Pat. Comput. Sci. 4(3) (2011)

2. Brown, M., Lowe, D.: Recognising panoramas. In: Proceedings of international conference on computer vision, pp. 1218–1225 (2003)

3. Davis, J.: Mosaics of scenes with moving objects. In: Proceedings of international conference on computer vision and pattern recognition, pp. 354–360 (1998)

4. Debevec, P., Malik, J.: Recovering high dynamic range radiance maps from photographs. In: Proceedings of the 24th annual conference on computer graphics and interactive techniques, pp. 369–378 (1997)

5. Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1(1), 269–271 (1959)

6. Duplaquet, M.: Building large image mosaics with invisible seam-lines. In: Proceedings of SPIE aerosense, pp. 369–377 (1998)

7. Echigo, T., Radke, R., Ramadge, P., Miyamori, H., Iisaku, S.: Ghost error elimination and superimposition of moving objects in video mosaicing. Proc. Int. Conf. Image Process. 4, 128–132 (1999)

8. Farcas, D., Marghes, C., Bouwmans, T.: Background subtraction via incremental maximum margin criterion: a discriminative subspace approach. Mach. Vis. Appl. 23(6), 1083–1101 (2012)

9. Fischler, M., Bolles, R.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

10. Garg, R., Seitz, S.M.: Dynamic mosaics. 3DIMPVT 2012, pp. 65–72 (2012)

11. Hartley, R., Zisserman, A.: Multiple view geometry in computer vision. Cambridge University Press, Cambridge (2004)

12. He, Y., Chung, R.: Image mosaicking for polyhedral scene and in particular singly visible surfaces. Pattern Recogn. 41(3), 1200–1213 (2008)

13. Irani, M., Anandan, P., Bergen, J., Kumar, R., Hsu, S.: Efficient representations of video sequences and their applications. Signal Process. Image Commun. 8, 327–351 (1996)

14. Jia, J., Tang, C.K.: Eliminating structure and intensity misalignment in image stitching. In: Proceedings of international conference on computer vision, pp. 1651–1658 (2005)

15. Kohandani, A., Basir, O., Kamel, M.: A fast algorithm for template matching. Image Anal. Recogn. 4142, 398–409 (2006)

16. Lowe, D.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

17. Mann, S., Picard, R.: Being ‘undigital’ with digital cameras: extending the dynamic range by combining differently exposed pictures. In: Proceedings of the 4th Annual Conference on IS&T, pp. 422–428 (1995)

18. Mikolajczyk, K., Schmid, C.: A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 27(10), 1615–1630 (2005)

19. Mills, A., Dudek, G.: Image stitching with dynamic elements. Image Vis. Comput. 27(10), 1593–1602 (2009)

20. Mitsunaga, T., Nayar, S.: Radiometric self calibration. In: Proceedings of international conference on computer vision and pattern recognition, pp. 374–380 (1999)

21. Shum, H., Szeliski, R.: Construction of panoramic image mosaics with global and local alignment. Int. J. Comput. Vis. 36(2), 101–130 (2000)

22. Szeliski, R.: Image alignment and stitching: a tutorial. Found. Trends Comput. Gr. Vis. 2(1), 1–105 (2006)

23. Szeliski, R., Shum, H.: Creating full view panoramic image mosaics and environment maps. In: Proceedings of the 24th annual conference on computer graphics and interactive techniques, pp. 251–258 (1997)

24. Zeng, L., Deng, D., Chen, X., Zhang, Y.: A self-adaptive and real-time panoramic video mosaicing system. J. Comput. 7(1), 218–225 (2012)

25. Zhang, S., Yao, H., Liu, S.: Dynamic background subtraction based on local dependency histogram. Int. J. Pattern Recogn. Artif. Intell. 23(7), 1397–1419 (2009)

26. Zhang, S., Yao, H., Sun, X., Lu, X.: Sparse coding based visual tracking: review and experimental comparison. Pattern Recogn. 46(7), 1772–1788 (2013a)

27. Zhang, S., Yao, H., Zhou, H., Sun, X., Liu, S.: Robust visual tracking based on online learning sparse representation. Neurocomputing 100(1), 31–40 (2013b)

28. Zhao, L., Yang, Y.: Mosaic image method: a local and global method. Pattern Recogn. 32(8), 1421–1433 (1999)

29. Zhou, H., Liu, T., Lin, F., Pang, Y., Wu, J., Wu, J.: Towards efficient registration of medical images. Comput. Med. Imaging Gr. 31(6), 374–382 (2007)

30. Zhou, H., Green, P.R., Wallace, A.M.: Estimation of epipolar geometry by linear mixed-effect modelling. Neurocomputing 72(16–18), 3881–3890 (2009)

Acknowledgments

This work was supported in part by Natural Science Foundation of China (No. 61300111) and Natural Scientific Research Innovation Foundation in Harbin Institute of Technology (HIT. NSRIF. 2014137). Jun Zhang was supported by Natural Science Foundation of China (No. 61273237).

Cite this article

Zeng, L., Zhang, S., Zhang, J. et al. Dynamic image mosaic via SIFT and dynamic programming. Machine Vision and Applications 25, 1271–1282 (2014). https://doi.org/10.1007/s00138-013-0551-8

DOI: https://doi.org/10.1007/s00138-013-0551-8