Abstract

Agricultural non-point sources of nutrients and sediments have caused eutrophication and other water quality issues in aquatic and marine ecosystems, such as the annual occurrence of hypoxia in the Gulf of Mexico. Management practices have been implemented adjacent to and in agricultural drainage ditches to promote their wetland characteristics and functions, including reduction of nitrogen, phosphorus, and sediment losses downstream. This review: (1) summarized studies examining changes in nutrient and total suspended solid concentrations and loads associated with management practices in drainage ditches (i.e., riser and slotted pipes, two-stage ditches, vegetated ditches, low-grade weirs, and organic carbon amendments) with emphasis on the Lower Mississippi Alluvial Valley, (2) quantified management system effects on nutrient and total suspended solid concentrations and loads, and (3) identified information gaps regarding water quality associated with these management practices and research needs in this area. In general, management practices used in drainage ditches at times reduced losses of total suspended solids, N, and P. However, management practices were often ineffective during uncommon storm events that were intense in duration and volume, and these types of events could increase in frequency and intensity with climate change. Studies on combined effects of management practices on drainage ditch water quality, along with research towards improved nutrient and sediment reduction efficiency during intense storm events, are urgently needed.

Introduction

Gulf of Mexico Hypoxic Zone

Efforts in modern agriculture, to meet consumer demands, include application of fertilizers to provide nutrients to crops. United States fertilizer application has increased four-fold since 1961, from 50 to 200 kg nitrogen (N) ha−1 year−1 allowing for a doubling in crop yield (Lassaletta et al. 2014). Various non-point sources of nutrients, particularly runoff of agricultural fertilizers, have increased the nutrient load discharged to freshwater and marine ecosystems, including the Gulf of Mexico (USEPA 2011). Agricultural sources contributed more than 70% of N and phosphorus (P) loads to the Gulf of Mexico via the Mississippi and Atchafalaya rivers (Alexander et al. 2008), with loading doubled in the second half of the twentieth century due to increased runoff of fertilizers (Pierce et al. 2012).

The annual occurrence of a hypoxic zone in the Gulf of Mexico is caused by this increased nutrient loading from the Mississippi and Atchafalaya river basins (USEPA 2008, 2011). The size of the hypoxic zone in 2017 was 22,720 km2, approximately the size of New Jersey and the largest ever recorded (LUMCON 2017), with a 5-year mean hypoxic zone size of 15,032 km2, over three times larger than the 5000 km2 targeted by the Gulf of Mexico Hypoxia Task Force (USEPA 2013; Scavia et al. 2017). Nitrogen loading, in particular nitrate–N (NO3 −–N), is proportional to the extent of bottom water hypoxia (LUMCON 2017). To achieve the hypoxic zone size goal, the Gulf of Mexico Hypoxia Task Force in 2013 predicted a reduction in total N loads of at least 45% would be necessary (USEPA 2008, 2013). To meet the goal of 45% N load reduction while minimizing impacts to agricultural production, McLellan et al. (2015) posited that N management practices in agricultural fields and innovative N removal practices in aquatic ecosystems would have to be used. Several management practices have been implemented in and adjacent to agricultural drainage ditches to reduce N in these systems.

Agricultural Drainage Ditches

In the Lower Mississippi Alluvial Valley (LMAV), agricultural drainage ditches are a prevalent management practice that maintains optimal soil moisture, in turn leading to increased crop yields and economic returns for farmers (Bouldin et al. 2004; Strock et al. 2007). Agricultural drainage ditches have also increased transport and discharge of excess N and P (Kröger et al. 2007, 2008b) and sediment (Kröger et al. 2008a) to natural receiving waters. As agricultural drainage ditches are ubiquitous across the landscape of the LMAV, many studies have investigated their use for pollutant mitigation (Kröger et al. 2011). It has also been observed that drainage ditches have similar biological and physical functions to linear wetlands under low flow conditions, whereas they function more like lotic, transport-dominated systems during high flow conditions (Moore et al. 2001; Strock et al. 2007). Wetland functionality in drainage ditches may provide areas of mitigation for non-point source contamination (e.g. excess nutrient loading), particularly with implementation of best management practices (Bouldin et al. 2004; Kröger and Moore 2011).

Several management practices have been implemented and examined in drainage ditches with the goal of reducing nutrient loads to the Gulf of Mexico. Some practices aim to achieve this goal by promoting and using wetland functions. The purposes of this review are to: (1) review literature describing effects of management practices (riser pipes and slotted pipes, two-stage ditches, vegetated ditches, low-grade weirs, and organic carbon amendments) on nutrient concentrations and loads reaching and/or leaving agricultural drainage ditches; (2) quantify percent change in nutrient concentrations and loads observed in studies in which these management practices have been implemented; and (3) identify information gaps regarding water quality associated with these management practices and research needs in this area.

To conduct this review, two databases, Digitop (USDA National Agricultural Library) and Google Scholar, were searched for water quality literature on each of these practices. While many of the studies cited are from the LMAV, some studies from the upper Mississippi River Basin were included due to limited information on management practices, particularly two-stage ditches and riser pipes. Percent change of nutrient and total suspended solids (TSS) concentrations and loads was calculated from data presented in the literature using Eq. 1. As a change in concentration resulting from implementation of a management practice may not necessarily result in the same change in load, percent change values are presented in separate figure panels for concentrations and loads where sample sizes allowed. For some management practices, however, percent change values for concentrations and loads are presented in the same figure to preserve sample size, because sample sizes were small (i.e., n ≤ 3) for some variables when concentrations and loads were presented individually.
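Eq. 1 is not reproduced in this text. As a minimal sketch, the calculation can be illustrated with the conventional definition of percent change relative to a reference value (e.g., inflow, control, or pre-implementation), which is an assumption here rather than the authors' confirmed equation. It is consistent with the sign convention used throughout the review, in which negative values denote reductions. The function name and the example concentrations are hypothetical.

```python
def percent_change(reference: float, treatment: float) -> float:
    """Percent change of a treatment value relative to a reference value.

    Assumed conventional form: (treatment - reference) / reference * 100.
    Negative results indicate a reduction in concentration or load.
    """
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return (treatment - reference) / reference * 100.0


# Hypothetical NO3-N concentrations (mg/L), for illustration only.
inflow_mg_per_l = 4.0
outflow_mg_per_l = 1.0
print(percent_change(inflow_mg_per_l, outflow_mg_per_l))  # -75.0
```

The same function applies to loads, provided the reference and treatment values share units.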

Riser and Slotted Pipes

Riser pipes have been implemented in two different fashions. When used in conjunction with tile drain pipes, primarily in the upper Mississippi River Basin, riser control structures manage the water level in the tile drainage system before subsurface water is discharged into a ditch/stream (Evans et al. 1995). In the LMAV, riser pipes are implemented using surface drainage pipes. Boards can be placed on the field side of the pipe during the dormant season to flood the field to depths of 5–50 cm (Maul and Cooper 2000). Slotted pipes retain water in a portion of fields such that sediment particles and nutrients have an opportunity to deposit before flowing into a drainage ditch or lake (Cullum et al. 2006). In all approaches, subsurface water (riser pipes in tile systems) and surface runoff water (riser and slotted pipes) are withheld for a period of time before reaching the receiving water body.

Riser control structures substantially reduced NO3 −–N loads in tile drainage and concentrations in surface runoff (median = − 57.2%) (Fig. 1). Reductions in tile drainage systems were due to 50% less water volume passing through tile drains, not a reduction in NO3 −–N concentrations (Gilliam et al. 1979; Lalonde et al. 1996). There is also limited evidence that riser control structures reduced ammonium (NH4 +), total nitrogen (TN), total phosphorus (TP), and TSS in surface and subsurface drainage (Cullum et al. 2006; Evans et al. 1995; Maul and Cooper 2000) (Fig. 1). Kröger et al. (2013c) observed that slotted pipes retained sediment and P. Time since pipe installation strongly affected sediment accumulation rates, and maximum accumulation occurred at 65% of slotted pipe volume. These results indicated slotted pipes became less effective over time as sediment that accumulated closer to the pipe opening was scoured (Kröger et al. 2013c). Reducing nutrient concentrations and/or loads before water reaches drainage ditches lessens the demand on drainage ditches to do so before water is discharged to receiving water bodies.
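The distinction between a load reduction and a concentration reduction can be sketched numerically. The example below assumes load is the product of concentration and water volume, and uses a hypothetical NO3 −–N concentration and drainage volume; only the 50% reduction in volume comes from the studies cited above.

```python
def load_kg(concentration_mg_per_l: float, volume_m3: float) -> float:
    """Nutrient load in kg from concentration (mg/L) and water volume (m^3).

    1 mg/L multiplied by 1 m^3 (1000 L) equals 1 g, so divide by 1000 for kg.
    """
    return concentration_mg_per_l * volume_m3 / 1000.0


# Hypothetical values, for illustration only.
no3_n_mg_per_l = 10.0          # same concentration with and without control
free_drainage_m3 = 2000.0      # volume leaving uncontrolled tile drains
controlled_m3 = free_drainage_m3 * 0.5  # 50% less volume with riser control

free_load = load_kg(no3_n_mg_per_l, free_drainage_m3)      # 20.0 kg
controlled_load = load_kg(no3_n_mg_per_l, controlled_m3)   # 10.0 kg

load_change = (controlled_load - free_load) / free_load * 100.0
print(load_change)  # -50.0, although the concentration is unchanged
```

Under these assumptions the load falls by half while the percent change in concentration is zero, which is why the two measures are reported separately where possible.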

Percent change in nitrate (NO3 −), ammonium (NH4 +), total nitrogen (TN), phosphate (PO4 3−), total phosphorus (TP), and total suspended solid (TSS) concentrations and loads for riser pipes in agricultural drainage ditches. n = number of percent change values represented. Numbers in parentheses represent the number of values greater than 200% and not presented in the figure. Line in each box is the median, edges of the box are 25th and 75th percentiles, error bars are 10th and 90th percentiles, and points are values beyond the 10th and 90th percentiles. Percent changes calculated from data presented in Cullum et al. (2006), Evans et al. (1995), Gilliam et al. (1979), Lalonde et al. (1996), and Maul and Cooper (2000)

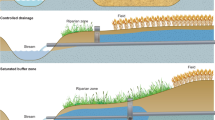
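The box plot summary described in the caption above can be sketched as follows. The percent change values are hypothetical, and the percentile interpolation method (Python's `statistics.quantiles` with `method="inclusive"`) is an assumption; the plotting software used for the figures may interpolate differently. The sketch also assumes that values above 200% are included in the summary statistics and only omitted from the plotted points.

```python
import statistics


def box_summary(values, cutoff=200.0):
    """Summarize percent change values as in the figure captions.

    Returns the median, 25th/75th percentiles (box edges), 10th/90th
    percentiles (error bars), outlying points, and the count of values
    above `cutoff` that would not be shown in the figure.
    """
    quartiles = statistics.quantiles(values, n=4, method="inclusive")
    deciles = statistics.quantiles(values, n=10, method="inclusive")
    p10, p90 = deciles[0], deciles[-1]
    outliers = [v for v in values if v < p10 or v > p90]
    return {
        "n": len(values),
        "median": statistics.median(values),
        "q25": quartiles[0],
        "q75": quartiles[2],
        "p10": p10,
        "p90": p90,
        "plotted_outliers": [v for v in outliers if v <= cutoff],
        "not_plotted": sum(1 for v in values if v > cutoff),
    }


# Hypothetical percent change values (%), for illustration only.
example = [-90, -60, -57, -40, -10, 5, 30, 250]
summary = box_summary(example)
print(summary["median"], summary["not_plotted"])
```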

Two-Stage Ditches

Generally, drainage ditches have been constructed or modified such that they are channelized, one-stage systems. Two-stage ditches were originally implemented to increase bank stability via creation of a floodplain in channeled agricultural streams (Davis et al. 2015). In doing so, pipes coming into the ditch are intended to empty onto the constructed floodplain rather than directly into the stream, mimicking adjacent wetlands, retaining water on the floodplain, increasing wetted channel width, and slowing water velocity (Davis et al. 2015). Design of the channel and floodplain width can affect fluvial stability and geomorphic changes in ditches (D’Ambrosio et al. 2015; Krider et al. 2017; Powell et al. 2007a, b). Connectivity between ditch channel and floodplain can increase: hydraulic retention time (HRT), contact between sediment and water, riparian vegetation development, and organic matter accumulation (Powell and Bouchard 2010).

These characteristics of two-stage ditches may provide for increased nutrient mitigation ability. Floodplain soils are hydrologically connected to surface water, resulting in higher soil moisture and redox conditions suitable for denitrification (Roley et al. 2012). Powell and Bouchard (2010) found denitrification rates did not differ between two-stage and one-stage ditches in the thalweg. However, rates were greater outside the channels on benches and slopes in two-stage, compared to one-stage ditches (Mahl et al. 2015; Powell and Bouchard 2010). Furthermore, denitrification rates were similar before and after two-stage ditch construction (Roley et al. 2012). These results demonstrate potential for increased NO3 − removal due to increased wetted width of two-stage ditches not present in one-stage ditches.

Few studies (Davis et al. 2015; Hodaj et al. 2017; Mahl et al. 2015; Roley et al. 2012) have examined nutrient concentrations or loads in two-stage ditches. These studies observed both increases and decreases in NO3 −–N concentrations and loads in two-stage ditches (median change = 7.1%) (Fig. 2). Roley et al. (2012) found the highest percentage of NO3 − removal occurred at the lowest loads, but increased loads during higher flow volumes limited overall removal ability. Davis et al. (2015) observed similar difficulties in which reductions of NO3 −–N were not as easily achieved under high loading. Results indicated water quality improvements depended on sizing two-stage ditches such that floodplain inundation is maximized (Davis et al. 2015). Ammonium concentrations of two-stage ditches were not different from reference reaches (median change = 13.9%). Mean soluble reactive phosphorus (SRP) (median change = − 11.4%) and TP (median change = − 17.6%) concentrations decreased in two-stage ditches compared to reference reaches, while TSS increases and decreases were observed (median change = 1.7%) (Davis et al. 2015) (Fig. 2). In addition to increasing HRT and denitrification potential, vegetation may have contributed to increased nutrient reduction as vegetation was more frequently observed on benches of two-stage ditches compared to one-stage ditch slopes (Powell and Bouchard 2010).

Percent change in nitrate (NO3 −), ammonium (NH4 +), soluble reactive phosphorus (SRP), total phosphorus (TP), and total suspended solids (TSS) concentrations and loads for two-stage agricultural drainage ditches. n = number of percent change values represented. Line in each box is the median, edges of the box are 25th and 75th percentiles, error bars are 10th and 90th percentiles, and points are values beyond the 10th and 90th percentiles. Percent changes calculated from data presented in Davis et al. (2015), Hodaj et al. (2017), Mahl et al. (2015), and Roley et al. (2012)

Vegetated Ditches

Vegetation in drainage ditches may increase nutrient and suspended solid removal because plants directly take up nutrients from water, increase HRT, oxygenate soil, and provide surface area for microbial biofilms (Deaver et al. 2005). Decreases in particulate P (PP) and inorganic P loads and concentrations (Kröger et al. 2008b) along with TSS loads (Bouldin et al. 2004; Moore et al. 2010) were observed in vegetated ditches (Fig. 3). Dissolved inorganic N load was reduced by 57% over 2 years in vegetated ditches (Kröger et al. 2007). Nitrogen load reductions from vegetated mesocosms (> 50%) were greater than those from unvegetated controls (26.9%) (Tyler et al. 2012b). Conversely, there were no differences in NH4 +, NO3 −, or dissolved inorganic phosphorus (DIP) mitigation between vegetated and non-vegetated ditches, while a non-vegetated ditch had a greater total inorganic phosphorus (TIP) load reduction (71%) compared to a vegetated ditch (36%) (Moore et al. 2010). This disparity in P load reduction capability of vegetated ditches is exhibited by the variability in percent change of DIP, PP, and TIP loads in Fig. 3b. Seasonal differences likely contributed to variability in nutrient removal associated with vegetation in drainage ditches, as nutrients assimilated by plants can be released back into the ditch system through senescence in the dormant season (Kröger et al. 2007) and removal by plant species was more effective in the summer than winter (Moore et al. 2013). Buffer strips next to drainage ditches may also be beneficial, as NO3 −, phosphate (PO4 3−), and TSS concentrations were highest at sites with limited or no buffer strips, regardless of ditch size. Conversely, sites with grass or forested buffer strips had no measurable NO3 − (Bouldin et al. 2004).

Percent change in nitrate (NO3 −), ammonium (NH4 +), phosphate (PO4 3−), dissolved inorganic phosphorus (DIP), and particulate phosphorus (PP) concentrations (a) and NO3 −, NH4 +, total nitrogen (TN), PO4 3−, DIP, PP, total inorganic phosphorus (TIP), and total phosphorus (TP) loads (b) for agricultural drainage ditches with vegetation. n = number of percent change values represented. Numbers in parentheses represent the number of values greater than 200% and not presented in the figure. Line in each box is the median, edges of the box are 25th and 75th percentiles, error bars are 10th and 90th percentiles, and points are values beyond the 10th and 90th percentiles. Percent change calculated from data presented in Deaver et al. (2005), Kröger et al. (2007), Kröger et al. (2013a, b), Moore and Kröger (2010), Moore et al. (2010), Moore et al. (2013), Tyler et al. (2012a), and Tyler et al. (2012b)

Presence of vegetation may not be sufficient to achieve increased reduction in nutrient and TSS concentrations and loads. The composition of the vegetative community also seems to be an important factor as changes from submerged to emergent vegetation can decrease sediment resuspension and nutrients in the water column (Bouldin et al. 2004). In a mesocosm study, Deaver et al. (2005) found that soft rush (Juncus effusus, L.), yellow primrose (Ludwigia peploides, Kunth) and cutgrass (Leersia oryzoides, L.) reduced nutrient concentrations relative to the control. Concentrations of NH4 + and NO3 − were most decreased by L. peploides, while PO4 3− was decreased most by L. oryzoides, which was least effective in NH4 + and NO3 − removal (Deaver et al. 2005). Several other mesocosm studies have also observed differing abilities to remove N nutrients compared to P nutrients. Species more efficient at removing N nutrients included L. oryzoides, broadleaf cattail (Typha latifolia L.) (Tyler et al. 2012b), creeping burhead (Echinodorus cordifolius L.), and Eurasian watermilfoil (Myriophyllum spicatum L.) (Moore and Kröger 2010). Species more efficient at removing P nutrients included L. oryzoides, T. latifolia, M. spicatum, E. cordifolius, and lizard’s tail (Saururus cernuus L.) (Moore and Kröger 2010; Tyler et al. 2012a). Leersia oryzoides, M. spicatum, and E. cordifolius performed well for both N and P nutrient removal (Moore and Kröger 2010; Tyler et al. 2012a, b). Moore et al. (2013) also observed differing seasonal removal efficiencies for plant species between summer and winter. Overall, results of these mesocosm studies indicate that no single plant species was most effective in removing both N and P and removal efficiencies were different depending on the season. 
Therefore, establishment of a more diverse vegetative community in drainage ditches with complementary nutrient reduction abilities for N and P during growing and dormant seasons is necessary for maintaining efficient nutrient mitigation year round (Deaver et al. 2005; Tyler et al. 2012b; Moore et al. 2013).

Low-Grade Weirs

One management practice implemented in the LMAV is low-grade weirs (hereafter weirs) in agricultural drainage ditches to increase HRT and decrease flow velocities (Pierce et al. 2012; Kröger et al. 2013a). Although no differences in water volume or HRT were observed between ditches with weirs and those with riser pipes (Kröger et al. 2011), weirs were able to provide drainage management year-round and on a spatially gradated basis compared to a single riser pipe usually used only during the dormant season (Kröger et al. 2012). Times to reach peak and base flows (approximately 7.5 h longer) were increased in ditches with vegetation and weirs (Kröger et al. 2008a; Prince Czarnecki et al. 2014). Zones of inundation were created upstream of weirs, aiding increased sedimentation, sediment-bound P removal, and anaerobic conditions for increased N removal through denitrification (Kröger et al. 2011, 2012) (Fig. 4).

Percent change in nitrate (NO3 −), ammonium (NH4 +), total Kjeldahl nitrogen (TKN), total nitrogen (TN), dissolved inorganic phosphorus (DIP), total inorganic phosphorus (TIP), total phosphorus (TP), and total suspended solids (TSS) concentrations (a) and NO3 −, NH4 +, TKN, total inorganic nitrogen (TIN), TN, DIP, TIP, TP, and TSS loads (b) for agricultural drainage ditches with low-grade weirs. n = number of percent change values represented. Numbers in parentheses represent the number of values greater than 200% and not presented in the figure. Line in each box is the median, edges of the box are 25th and 75th percentiles, error bars are 10th and 90th percentiles, and points are values beyond the 10th and 90th percentiles. Percent change calculated from data presented in Amatya and Gilliam (2003), Baker et al. (2016), Flora and Kröger (2014a, b), Kröger et al. (2011), Kröger et al. (2012), Littlejohn et al. (2014), and Faust et al. (2017b)

Denitrification rates were not different between sites with weirs and those without weirs. There were, however, seasonal differences with a trend of summer > winter > spring. Interestingly, denitrification rates also differed based on weir age, with greater rates in younger systems (< 1 year) compared to older systems (> 2 years) (Kröger et al. 2013a). In the Mississippi Delta, Baker et al. (2015) observed increased abundance of the nosZ gene [encoding nitrous oxide (N2O) reductase, which catalyzes the final step of denitrification, the reduction of N2O to atmospheric N2] 5–25 m upstream of weirs as compared to 50 m sampling intervals between weirs.

Substantial NO3 −–N concentration and load reductions were observed in systems with (79%) and without (73%) weirs, resulting in no statistical difference between systems (Kröger et al. 2012). Loads of NO3 −–N, NH4 +–N, DIP, and TIP decreased from inflow to outflow in systems with weirs and with riser pipes, with no difference in removal between systems (Kröger et al. 2011). Conversely, Littlejohn et al. (2014) found no differences between inflow and outflow loads in ditches with weirs, likely due to substantial variation in nutrient loads between storm events. Furthermore, few differences were observed between inflow and outflow concentrations because of variability between seasons, hydrology, and runoff volume (Fig. 4a). For example, NO3 − load was skewed by two dormant season storm events in which outflow load greatly exceeded inflow load. However, with exception of NO3 −, median loads decreased between inflow and outflow (Littlejohn et al. 2014). Baker et al. (2016) also observed large variability in nutrient and sediment load percent change in ditches with weirs, with ranges of − 96%–885% for total inorganic N and − 65%–1% for total inorganic P (Fig. 4b).

Weirs create zones of inundation for sedimentation, as supported by greater sediment and water depth upstream of weirs compared to reference sites. Sediment accumulation decreased as weirs became older than 1 year, indicating yearly maintenance is necessary for optimal sediment reduction (Kröger et al. 2013b), but this may counter nutrient mitigation, as Littlejohn et al. (2014) found that drainage ditches with weirs performed better in nutrient reduction 1 year after construction. Usborne et al. (2013) observed greater mean water depth upstream of weirs, but sediment deposition was not different between sites with and without weirs. Sediment deposited upstream of weirs can also remove sediment-bound P. Yet, P can also be released under anaerobic conditions behind weirs because non-bioavailable iron phosphate complexes are reduced to ferrous iron, releasing bioavailable P during periods of inundation. No differences between TP concentrations of sediments or any P fractions at 1-year-old weirs and reference sites were detected (Kröger et al. 2013b). Total P, soluble P, loosely bound P, and reductant-soluble P in sediment were not different between sites, while sediment aluminum phosphate and iron phosphate concentrations were different between sites (Usborne et al. 2013). Several studies have observed sediment organic carbon levels ranging between 1% and 6.8% (Kröger et al. 2013b; Usborne et al. 2013; Littlejohn et al. 2014). Smith et al. (2005) observed that sediment organic carbon and silt and clay fractions decreased as drainage area increased, with sediment organic carbon > 6% in small drainage areas and 1%–3% in large drainage areas. Kröger et al. (2013a) suggested that organic carbon seemed to limit denitrification in drainage ditches, even in frequently inundated sediments that would be expected to accumulate organic matter due to slower decomposition under anaerobic conditions.

Organic Carbon Amendments

Whereas weirs have allowed for increased N removal by forming areas of anaerobic conditions within drainage ditches conducive to denitrification, there are other factors that may influence the N cycle in aquatic ecosystems. Three major factors have been implicated in directly influencing denitrification rates of microbial communities: (1) presence of anaerobic conditions; (2) availability of NO3 −–N; and (3) availability of organic carbon (Knowles 1982). If we assume the presence of anaerobic conditions (due to use of low-grade weirs or other drainage control structures) and NO3 −–N are not limiting, then other factor(s) such as organic carbon become limiting.

In sediments from North Carolina agricultural drainage ditches, increasing organic matter (as straw) from 5% to 11% dry weight resulted in significant increases in NO3 −–N removal, but no further increase was observed when organic matter was increased to 16% (Burchell et al. 2007). Forms of C substances used in stream bioreactors include wood media, cornstalks, wheat, maize cobs, barley straw, and rice husks, with NO3 −–N removal in the order of cornstalks > cardboard > wood chips and oil > wood chips (Greenan et al. 2006; Liu et al. 2015). In a field trial of organic carbon barriers in drainage ditches in China, rice straw produced mean removal rates of 73% for NH4 +–N and 96% for NO3 −–N (Liu et al. 2015). Removal of NO3 −–N ranged from 83% to 100% in a laboratory microcosm study of drainage ditch sediments with amendments of dissolved organic carbon (DOC as glucose) and particulate organic carbon (POC as Bermuda grass (Cynodon dactylon L.) hay) (Faust et al. 2016) (Fig. 5). However, when DOC and POC amendments were scaled up for use in experimental drainage ditches with and without weirs, the maximum NO3 −–N removal rate was 31%. This result indicates that flow reduces the ability for N mitigation in drainage ditches even with weirs in place to increase HRT (Faust et al. 2017b). Finally, in a field scale study, Faust et al. (2017a) observed sediment organic carbon levels from 0.25% to 6.04%. Increased aromatic and molecular weight character of DOC in drainage ditches could indicate that organic carbon is less available to microbes performing denitrification (Faust et al. 2017a; Song et al. 2011). While much focus regarding organic carbon amendments has been on N removal, fibric organic carbon horizons at the ditch sediment surface had the greatest mean P, Fe, and Al concentrations (Vaughan et al. 2007), indicating drainage ditch sediments may serve as a sink for P.

Percent change in nitrate (NO3 −), ammonium (NH4 +), and total nitrogen (TN) concentrations and loads for agricultural drainage ditches with organic carbon amendments. n = number of percent change values represented. Line in each box is the median, edges of the box are 25th and 75th percentiles, error bars are 10th and 90th percentiles, and points are values beyond the 10th and 90th percentiles. Percent change calculated from data presented in Burchell et al. (2007), Faust et al. (2016), Faust et al. (2017b), and Liu et al. (2015)

Undoubtedly, limited bioavailability of organic carbon can reduce denitrification rates and thus N removal. Carbon and N cycles are often considered independently, but links and interactions between the two are important, especially regarding denitrification and other microbial-mediated processes (Holmes et al. 1996). Several studies have examined limitations to denitrification and thus NO3 −–N removal using carbon to N (C:N) ratios. The amount of rice straw added to drainage ditches was positively correlated with NH4 + and NO3 − removal (Liu et al. 2015). The optimal C:N ratio in drainage ditch sediments was determined to be 5.0 as NO3 −–N removal was similar to removal when organic carbon was amended to achieve ratios of 10, 15, and 20, but did not increase NH4 +–N concentrations as did higher C:N ratios (Faust et al. 2016). Various management practices, examined above, have been implemented in drainage ditches. Clearly, there are still questions to be answered and improvements to these management practices that can be achieved when used individually as well as jointly.

Research Needs

For each management practice reviewed above, studies have examined its use in decreasing nutrient and sediment concentrations and loads within drainage ditches. An array of water quality parameters was measured in these studies. Depending on specific goals and objectives, researchers should strive to measure TSS, TN, NH4 +–N, NO3 −–N, NO2 −–N, TP, PP, and PO4 3−–P concentrations and loads in order to distinguish how bioavailable and sequestered N and P are distributed as related to TN and TP. Measurement of runoff volume in order to calculate load is important since trends in concentration and load often differ (Figs. 3, 4). Measurement of runoff volume will further allow for determination of how runoff volume, along with variables affected by volume (e.g., rainfall intensity and duration, antecedent soil water content, antecedent drainage ditch water level), impacts nutrient and sediment loss to downstream aquatic ecosystems. Given the relationship between the C and N cycles, measurements of organic carbon content in sediment (% TOC) and water (total organic carbon, DOC, absorbance ratio, specific ultraviolet absorbance) will provide insight into possible limitation of denitrification and other N cycle processes due to low organic carbon availability.

Water quality data should be collected for several consecutive years since studies have found effects associated with management practices shift over time (Kröger et al. 2013b). For example, sediment build-up behind weirs and slotted pipes will change nutrient and sediment reduction efficiencies and hydrological characteristics in drainage ditches. Researchers should attempt to maintain long-term data collection efforts and report results both over shorter and longer time frames. At a minimum, studies in drainage ditches receiving runoff from agricultural landscapes should include several full crop rotations in order to capture a range of climatic conditions including wet years, dry years, and average years.

While not an exhaustive list, below are questions that remain to be answered with regard to the management practices reviewed herein:

-

1.

As demonstrated by the low sample sizes in Figs. 1 and 2, few studies have been conducted in drainage ditches with riser or slotted pipes and with two-stage ditches. Comprehensive studies examining nutrient and sediment concentrations and loads associated with these management practices are imperative.

-

2.

Due to typical weir heights used (5%–20% of bankfull), water above this level can flow unimpeded, meaning weirs may not possess nutrient mitigation effects during large storm events (Prince Czarnecki et al. 2014). If weir heights were increased to occupy more of the bankfull height, would nutrient and sediment removal increase and be less affected by large storm events?

-

3.

In bioreactors, forms of C substances used included wood media, cornstalks, wheat, maize cobs, barley straw, and rice husks (Liu et al. 2015). What forms of organic carbon result in the best efficiency of nutrient removal?

-

4.

Bioreactors required frequent C replenishment due to rapid C depletion. For example, C released from rice straw occurred primarily during the first 3 weeks with C loss accounting for less than 5% of C available (Liu et al. 2015). While optimizing release of organic carbon for denitrification, how long do various C substances amended to drainage ditches last before replenishment is necessary?

-

5.

How can management practices be used in concert or in combination? Two examples demonstrating possibilities of combining management practices include: (1) organic matter content in sediment of two-stage ditches (range = 3.7%–28.3%) was slightly higher than one-stage ditches (5.6%–24.2%), perhaps showing that presence of floodplains in two-stage ditches helps build organic matter (Roley et al. 2012; Davis et al. 2015) and be favorable for denitrification (Powell and Bouchard 2010); (2) use of organic carbon amendments at weirs in drainage ditches to induce anaerobic conditions and provide organic C for denitrification (Faust et al. 2017b).

6. An overarching theme, regardless of management practice, is that storm events intense in duration and volume limited the ability to reduce nutrient concentrations. The majority of nutrients (> 70% N and > 80% P) and sediments (up to 90%) were transported during intermittent, large storm events occurring less than 10% of the year (Kröger et al. 2007; Davis et al. 2015; Baker et al. 2016). Yet intense storm events are predicted to increase in the southeast U.S. under some climate change models (Carter et al. 2014; Wuebbles et al. 2014). What can be done to improve the nutrient and sediment removal efficacy of the management practices reviewed herein, particularly during large storm events?
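The storm-event shares cited in item 6 rest on simple load arithmetic: a load is a concentration multiplied by discharge and by the time interval the sample represents. The sketch below is a minimal illustration in Python, not a method taken from the studies reviewed. The `Sample` record, its field names, and the function names are hypothetical and serve only to show the calculation.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Sample:
    """One monitoring interval in a ditch (hypothetical record layout)."""
    discharge_l_per_s: float  # flow during the interval, L/s
    conc_mg_per_l: float      # nutrient or TSS concentration, mg/L
    duration_s: float         # length of the interval, s
    is_storm: bool            # True if the interval is flagged as a storm event


def load_kg(sample: Sample) -> float:
    """Load for one interval: mg/L * L/s * s gives mg, converted to kg."""
    mg = sample.conc_mg_per_l * sample.discharge_l_per_s * sample.duration_s
    return mg / 1e6


def storm_load_share(samples: Iterable[Sample]) -> float:
    """Fraction (0 to 1) of the total load carried during storm intervals."""
    samples = list(samples)
    total = sum(load_kg(s) for s in samples)
    if total <= 0:
        return 0.0  # no load recorded, so no meaningful share
    storm = sum(load_kg(s) for s in samples if s.is_storm)
    return storm / total


def percent_load_reduction(load_in_kg: float, load_out_kg: float) -> float:
    """Percent reduction between inflow and outflow loads of a practice."""
    if load_in_kg <= 0:
        raise ValueError("inflow load must be positive")
    return 100.0 * (load_in_kg - load_out_kg) / load_in_kg
```

As a purely hypothetical case, a record with 900 s of baseflow at 1 L/s and 1 mg/L plus 100 s of storm flow at 10 L/s and 3 mg/L places 10% of the time but roughly 77% of the load in the storm interval, which shows how a brief event can dominate annual export.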

This review found that management practices used in drainage ditches have at times been successful in decreasing concentrations and loads of nutrients and TSS. Research on nutrient management practices in fields and nutrient removal practices in drainage ditches will allow for assessment of how well practices work, identification of strategies to improve practices through adaptive management, and determination of which next steps are feasible if management practices do not perform as well as anticipated. Results from future studies should be used by producers to inform adaptive management decisions and improve management practices, reducing nutrient and sediment losses in drainage ditches and decreasing water quality impacts on downstream ecosystems.

References

Alexander RB, Smith RA, Schwarz GE et al (2008) Differences in phosphorus and nitrogen delivery to the Gulf of Mexico from the Mississippi River basin. Environ Sci Technol 42:822–830

Amatya DM, Gilliam JW (2003) Effects of orifice-weir outlet on hydrology and water quality of a drained forested watershed. South J Appl For 27:130–142

Baker BH, Kröger R, Brooks JP et al (2015) Investigation of denitrifying microbial communities within an agricultural drainage system fitted with low-grade weirs. Water Res 87:193–201

Baker BH, Kröger R, Prevost JD et al (2016) A field-scale investigation of nutrient and sediment reduction efficiencies of a low-technology best management practice: low-grade weirs. Ecol Eng 91:240–248

Bouldin JL, Farris JL, Moore MT, Cooper CM (2004) Vegetative and structural characteristics of agricultural drainages in the Mississippi Delta landscapes. Environ Pollut 132:403–411

Burchell MR, Skaggs RW, Evans RO et al (2007) Addition of organic matter to agricultural ditch soils to be used as constructed wetlands for nitrate treatment. ASCE, world environmental and water resources congress 2007: restoring our natural habitat

Carter LM, Jones JW, Berry L et al (2014) Chap. 17: Southeast and the Caribbean. In: Melillo JM, Richmond TC, Yohe GW (eds) Climate change impacts in the United States: the third national climate assessment. U.S. Global Change Research Program, Washington, DC, pp 396–417

Cullum RF, Knight SS, Cooper CM, Smith S (2006) Combined effects of best management practices on water quality in oxbow lakes from agricultural watersheds. Soil Tillage Res 90:212–221

D’Ambrosio JL, Ward AD, Witter JD (2015) Evaluating geomorphic change in constructed two-stage ditches. J Am Water Resour Assoc 51:910–922

Davis RT, Tank JL, Mahl UH et al (2015) The influence of two-stage ditches with constructed floodplains on water column nutrients and sediments in agricultural streams. J Am Water Resour Assoc 51:941–955

Deaver E, Moore MT, Cooper CM, Knight SS (2005) Efficiency of three aquatic macrophytes in mitigating nutrient run-off. Int J Ecol Environ Sci 31:1–7

Evans RO, Skaggs RW, Gilliam JW (1995) Controlled versus conventional drainage effects on water quality. J Irrig Drain Eng 121:271–276

Faust DR, Kröger R, Miranda LE, Rush SA (2016) Nitrate removal from agricultural drainage ditch sediments with amendments of organic carbon: potential for an innovative best management practice. Water Air Soil Pollut 227:378

Faust DR, Kröger R, Omer AR et al (2017a) Understanding nitrogen and organic carbon contents of agricultural drainage ditches of the Lower Mississippi Alluvial Valley. J Soil Water Conserv (in press)

Faust DR, Kröger R, Rogers JW et al (2017b) Investigating organic carbon amendments with low-grade weirs as a management practice for nitrogen removal in experimental agricultural drainage ditches. J Soil Water Conserv (in review)

Flora C, Kröger R (2014a) Use of vegetated drainage ditches and low-grade weirs for aquaculture effluent mitigation: I. Nutrients. Aquac Eng 60:56–62

Flora C, Kröger R (2014b) Use of vegetated drainage ditches and low-grade weirs for aquaculture effluent mitigation: II. Suspended sediment. Aquac Eng 60:68–72

Gilliam JW, Skaggs RW, Weed SB (1979) Drainage control to diminish nitrate loss from agricultural fields. J Environ Qual 8:137–142

Greenan CM, Moorman TB, Kaspar TC et al (2006) Comparing carbon substrates for denitrification of subsurface drainage water. J Environ Qual 35:824–829

Hodaj A, Bowling LC, Frankenberger JR, Chaubey I (2017) Impact of a two-stage ditch on channel water quality. Agric Water Manag 192:126–137

Holmes RM, Jones JB Jr, Fisher SG, Grimm NB (1996) Denitrification in a nitrogen-limited stream ecosystem. Biogeochemistry 33:125–146

Knowles R (1982) Denitrification. Microbiol Rev 46:43–70

Krider L, Magner J, Hansen B et al (2017) Improvements in fluvial stability associated with two-stage ditch construction in Mower County, Minnesota. J Am Water Resour Assoc 53:886–902

Kröger R, Moore MT (2011) Phosphorus dynamics within agricultural drainage ditches in the lower Mississippi Alluvial Valley. Ecol Eng 37:1905–1909

Kröger R, Holland M, Moore M, Cooper C (2007) Plant senescence: a mechanism for nutrient release in temperate agricultural wetlands. Environ Pollut 146:114–119

Kröger R, Cooper CM, Moore MT (2008a) A preliminary study of an alternative controlled drainage strategy in surface drainage ditches: low-grade weirs. Agric Water Manag 95:678–684

Kröger R, Holland MM, Moore MT, Cooper CM (2008b) Agricultural drainage ditches mitigate phosphorus loads as a function of hydrological variability. J Environ Qual 37:107

Kröger R, Moore MT, Farris JL, Gopalan M (2011) Evidence for the use of low-grade weirs in drainage ditches to improve nutrient reductions from agriculture. Water Air Soil Pollut 221:223–234

Kröger R, Pierce SC, Littlejohn KA et al (2012) Decreasing nitrate-N loads to coastal ecosystems with innovative drainage management strategies in agricultural landscapes: an experimental approach. Agric Water Manag 103:162–166

Kröger R, Dunne EJ, Novak J et al (2013a) Downstream approaches to phosphorus management in agricultural landscapes: regional applicability and use. Sci Total Environ 442:263–274

Kröger R, Usborne EL, Pierce SC (2013b) Sediment and phosphorus accumulation dynamics behind newly installed low-grade weirs in agricultural drainage ditches. J Environ Qual 42:1480–1485

Kröger R, Littlejohn KA, Pierce SC et al (2013c) Evidence toward sediment accumulation characteristics of slotted inlet pipes as best management practices on agricultural landscapes. Ecol Eng 51:249–255

Lalonde V, Madramootoo CA, Trenholm L, Broughton RS (1996) Effects of controlled drainage on nitrate concentrations in subsurface drain discharge. Agric Water Manag 29:187–199

Lassaletta L, Billen G, Grizzetti B et al (2014) 50 Year trends in nitrogen use efficiency of world cropping systems: the relationship between yield and nitrogen input to cropland. Environ Res Lett 9:105011

Littlejohn KA, Poganski BH, Kröger R, Ramirez-Avila JJ (2014) Effectiveness of low-grade weirs for nutrient removal in an agricultural landscape in the Lower Mississippi Alluvial Valley. Agric Water Manag 131:79–86

Liu F, Wang Y, Xiao R et al (2015) Influence of substrates on nutrient removal performance of organic channel barriers in drainage ditches. J Hydrol 527:380–386

Louisiana Universities Marine Consortium (LUMCON) (2017) 2017 Shelfwide Cruise: July 24–July 31. Press Release, pp 1–8

Mahl UH, Tank JL, Roley SS, Davis RT (2015) Two-stage ditch floodplains enhance N-removal capacity and reduce turbidity and dissolved P in agricultural streams. J Am Water Resour Assoc 51:923–940

Maul JD, Cooper CM (2000) Water quality of seasonally flooded agricultural fields in Mississippi, USA. Agric Ecosyst Environ 81:171–178

McLellan E, Robertson D, Schilling K et al (2015) Reducing nitrogen export from the corn belt to the Gulf of Mexico: agricultural strategies for remediating hypoxia. J Am Water Resour Assoc 51:263–289

Moore MT, Kröger R (2010) Evaluating plant species-specific contributions to nutrient mitigation in drainage ditch mesocosms. Water Air Soil Pollut 217:445–454

Moore MT, Bennett ER, Cooper CM et al (2001) Transport and fate of atrazine and lambda-cyhalothrin in an agricultural drainage ditch in the Mississippi Delta, USA. Agric Ecosyst Environ 87:309–314

Moore MT, Kröger R, Locke MA et al (2010) Nutrient mitigation capacity in Mississippi Delta, USA drainage ditches. Environ Pollut 158:175–184

Moore MT, Kröger R, Locke MA et al (2013) Seasonal and interspecific nutrient mitigation comparisons of three emergent aquatic macrophytes. Bioremediation J 17:148–158

Pierce SC, Kröger R, Pezeshki SR (2012) Managing artificially drained low-gradient agricultural headwaters for enhanced ecosystem functions. Biology 1:794–856

Powell KL, Bouchard V (2010) Is denitrification enhanced by the development of natural fluvial morphology in agricultural headwater ditches? J North Am Benthol Soc 29:761–772

Powell GE, Ward AD, Mecklenburg DE et al (2007a) Two-stage channel systems: part 2, case studies. J Soil Water Conserv 62:286–296

Powell GE, Ward AD, Mecklenburg DE, Jayakaran AD (2007b) Two-stage channel systems: part 1, a practical approach for sizing agricultural ditches. J Soil Water Conserv 62:277–286

Prince Czarnecki JM, Baker BH, Brison AM, Kröger R (2014) Evaluating flood risk and alterations to hydraulic patterns following installation of low-grade weirs in agricultural systems. Agric Water Manag 146:69–74

Roley SS, Tank JL, Stephen ML et al (2012) Floodplain restoration enhances denitrification and reach-scale nitrogen removal in an agricultural stream. Ecol Appl 22:281–297

Scavia D, Bertani I, Obenour DR et al (2017) Ensemble modeling informs hypoxia management in the northern Gulf of Mexico. Proc Natl Acad Sci USA 114:8823–8828

Smith DR, Haggard BE, Warnemuende EA, Huang C (2005) Sediment phosphorus dynamics for three tile fed drainage ditches in northeast Indiana. Agric Water Manag 71:19–32

Song CC, Wang LL, Guo YD et al (2011) Impacts of natural wetland degradation on dissolved carbon dynamics in the Sanjiang Plain, northeastern China. J Hydrol 398:26–32

Strock JS, Dell CJ, Schmidt JP (2007) Managing natural processes in drainage ditches for nonpoint source nitrogen control. J Soil Water Conserv 62:188–196

Tyler HL, Moore MT, Locke MA (2012a) Potential for phosphate mitigation from agricultural runoff by three aquatic macrophytes. Water Air Soil Pollut 223:4557–4564

Tyler HL, Moore MT, Locke MA (2012b) Influence of three aquatic macrophytes on mitigation of nitrogen species from agricultural runoff. Water Air Soil Pollut 223:3227–3236

Usborne EL, Kröger R, Pierce SC et al (2013) Preliminary evidence of sediment and phosphorus dynamics behind newly installed low-grade weirs in agricultural drainage ditches. Water Air Soil Pollut 224:1520

USEPA (2008) Gulf hypoxia action plan 2008 for reducing, mitigating, and controlling hypoxia in the Northern Gulf of Mexico and improving water quality in the Mississippi River Basin. Office of Wetlands, Oceans, and Watersheds, U.S. Environmental Protection Agency, Washington, DC, pp 1–64

USEPA (2011) Moving forward on Gulf hypoxia annual report 2011. Office of Wetlands, Oceans, and Watersheds, U.S. Environmental Protection Agency, Washington, DC, pp 1–24

USEPA (2013) Assessing progress made since 2008. Office of Wetlands, Oceans, and Watersheds, U.S. Environmental Protection Agency, Washington, DC, pp 1–86

Vaughan RE, Needelman BA, Kleinman PJA, Allen AL (2007) Vertical distribution of phosphorus in agricultural drainage ditch soils. J Environ Qual 36:1895–1903

Wuebbles D, Meehl G, Hayhoe K et al (2014) CMIP5 climate model analyses: climate extremes in the United States. Bull Am Meteorol Soc 95:571–583

Acknowledgements

The US Department of Agriculture (USDA) prohibits discrimination in all its programs and activities on the basis of race, color, national origin, age, disability, and, where applicable, sex, marital status, familial status, parental status, religion, sexual orientation, genetic information, political beliefs, reprisal, or because all or part of an individual’s income is derived from any public assistance program (not all prohibited bases apply to all programs). USDA is an equal opportunity provider and employer. Mention of trade names or commercial products in this paper is solely for the purpose of providing specific information and does not imply recommendation or endorsement by the US Department of Agriculture.

Cite this article

Faust, D.R., Kröger, R., Moore, M.T. et al. Management Practices Used in Agricultural Drainage Ditches to Reduce Gulf of Mexico Hypoxia. Bull Environ Contam Toxicol 100, 32–40 (2018). https://doi.org/10.1007/s00128-017-2231-2

DOI: https://doi.org/10.1007/s00128-017-2231-2