Abstract

Typical controlled drainage structures in drainage ditches provide drainage management strategies for isolated temporal periods. Innovative, low-grade weirs are anticipated to provide hydraulic control on an annual basis, as well as be installed at multiple sites within the drainage ditch for improved spatial biogeochemical transformations. This study provides evidence toward the capacity of low-grade weirs for nutrient reductions, when compared to the typical controlled drainage structure of a slotted riser treatment. Three ditches with weirs were compared against three ditches with slotted risers, and two control ditches for hydraulic residence time (HRT) and nutrient reductions. There were no differences in water volume or HRT between weired and riser systems. Nutrient concentrations significantly decreased from inflow to outflow in both controlled drainage strategies, but there were few statistical differences in N and P concentration reductions between controlled drainage treatments. Similarly, there were significant declines in N and P loads, but no statistical differences in median N and P outflow loads between weir (W) and riser (R) ditches for dissolved inorganic phosphate (W, 92%; R, 94%), total inorganic phosphate (W, 86%; R, 88%), nitrate-N (W, 98%; R, 96%), and ammonium (W, 67%; R, 85%) when nutrients were introduced as runoff events. These results indicate the importance of HRT in improving nutrient reductions. Low-grade weirs should operate as important drainage control structures in reducing nutrient loads to downstream receiving systems if the hydraulic residence time of the system is significantly increased with multiple weirs, as a result of ditch length and slope.

1 Introduction

Agriculture is a dominant driver of nonpoint source (NPS) pollution of receiving waters and other downstream environments (Wells 1992; Cooper 1993). Unfortunately, societies and scientists alike criticize agriculture for its lack of effort toward NPS pollutant reduction. Best management practices such as crop rotation, conservation tillage (i.e., no till or reduced till), terracing, cover crops, buffer zones, and sound land use are all tools that are becoming prominent within the agricultural industry, and thus farmers are unfairly stigmatized. Retention ponds and constructed wetlands have been suggested as excellent NPS pollutant mitigation tools to significantly reduce the potential for downstream aquatic system degradation (Hammer 1999). The construction of ponds and wetlands, however, requires land to be set aside, whereas farm profit depends on the acreage in production. Replacing farmland with a 1/4–1 ha constructed wetland means losing the profit from 1/4–1 ha. Recent research has highlighted the potential use of ubiquitous agricultural drainage ditches for pollutant mitigation.

Agricultural drainage ditches are primary intercept wetlands, capable of reducing pollutant loads into receiving waters, thus decreasing nutrient (Kröger et al. 2007, 2008b) and pesticide (Moore et al. 2001) pollution in downstream aquatic environments. In terms of nutrients, Kröger et al. (2007, 2008b) demonstrated that drainage ditches reduce nitrogen (N) species and phosphorus (P) species to about 50% of their original influent concentrations and loads. This leads to the question: Can agricultural drainage ditches be modified to increase nutrient reduction capacity? A theory suggests that the effectiveness of water quality improvement within aquatic systems, with respect to storm water runoff from adjacent agricultural land, depends on a system’s ability to delay flow velocities (i.e., controlled drainage) (Wesstrom et al. 2001).

Controlled drainage practices are used in many agricultural circumstances to reduce outflow volume and velocity, increase storm water mitigation and sedimentation, and decrease water table depths (Wright et al. 1992; Wesstrom et al. 2001; Needelman et al. 2007). A typical controlled drainage strategy used by farmers is the common slotted board riser (hereafter referred to as riser), normally implemented during isolated temporal periods when rainfall frequency and intensity are at their highest, thus reducing erosional impact of increased runoff. Risers are only placed at ditch outlets and can be configured to increase the level of the water within the drainage ditch by the specific height of the rise (Skaggs et al. 1994; Lalonde et al. 1996). The major disadvantage to this system is twofold: (1) the riser outlet is limited in temporal operation and (2) it is spatially specific (i.e., at the drainage outlet). An alternative controlled drainage strategy is the utilization of permanently installed low-grade weirs, spatially stratified throughout the drainage ditch. Low-grade weirs are small impoundments designed to be installed into the drainage ditch at several spatial locations. A low-grade weir is theoretically designed to retain a certain volume of water within the drainage ditch depending on the cross-sectional area occupied by the structure (i.e., 5–20% of bank full area). The structure will decrease flow velocities which should increase sedimentation rates and nutrient reduction with increased availability and likelihood of aquatic biogeochemical processes. Kröger et al. (2008a) showed an increase in chemical residence time between both vegetated and non-vegetated weired systems when compared to non-weired systems. The spatial allocation of low-grade weirs has the potential of decreasing water table depths uniformly throughout the agricultural landscape rather than just at the outflow. 
The practicality of weir installation (i.e., number and size) will be system driven. Fall or grade change within the ditch will drive weir height and weir placement, whilst cooperative agreements with landowners (e.g., Monsanto Delta F.A.R.M., program) will cost share materials for weir construction (i.e., typically US $1,000–1,500 per weir) if farmers enter into 10–15 years of cooperative contracts for maintenance. Drainage will still remain the primary function of agricultural ditches; however, increasing the residence time of the ditch at multiple spatial locations will provide increased opportunities for biological transformation, immobilization, and mitigation of NPS pollutants such as nutrients. For example, a 0.4-km drainage ditch reach (8–12-m deep) with a fall of approximately 1.15 m could potentially have two weirs that retain approximately 0.6 m of fall at two locations within the system. The heights would still only be between 5% and 10% of a bank’s full volume of the drainage ditch itself and not sufficiently high enough to impact upstream drainage areas.

This study took place at the Arkansas State University (ASU) agricultural facility where the primary objective was to compare replicated weired and riser ditch systems for nutrient (nitrogen and phosphorus) reduction during simulated storm runoff events. Two events were delivered sequentially to the systems, at two contrasting nitrogen concentrations, to determine reduction capacity at low and high nutrient runoff concentrations.

2 Materials and Methods

2.1 Experimental Design and System Setup

In the summer of 2008, two simulated nutrient runoff events, 3 weeks apart, were sequentially added to artificially constructed drainage ditch systems at ASU’s agricultural field research facility in Jonesboro, AR, USA (Fig. 1). The ASU facility consists of eight constructed drainage ditches, each with an independent, adjustable inflow and outflow hydrologic structure (Fig. 1). A groundwater well supplies a large retention pond which subsequently has eight standpipes attached to butterfly valves that serve as inflows to each respective ditch. The ditches had a mean width and length of 1.89 and 58.7 m, respectively. A Trimble Laser Theodolite with a fixed base station quantified ditch slopes, weir heights, and water depths, which were calculated into ditch water and weir volumes. Table 1 describes the inflow rates and water volumes for the respective ditches. Flow velocities were measured using a portable electromagnetic flow meter (Marsh and McBirney, HACH). Flow rates were derived mathematically using cross-sectional area, depth, and ditch width. Flow velocities were measured at the beginning of each runoff event. Butterfly valves controlling inflows were not changed between runoff events, and flow rates were remeasured to verify similarity. Three drainage ditches (ditch 3, 5, and 6) (Fig. 1) were randomly selected (random treatment drawn to each ditch) to contain weirs. Weirs were constructed with the use of rice spills (180 × 90-cm high density plastic sheet). A rice spill is typically used in rice agriculture to maintain and retain water levels between landformed pieces of farmland. These spills were secured and sealed with sandbags, riprap, and topsoil. A flow notch atop each weir was created by laying a stake vertically along the weir to depress the middle. There were two weirs per ditch, for a total of six weirs. 
Three drainage ditches were randomly selected as riser systems (ditches 2, 4, and 7), where no weirs were installed, but standpipes were set at maximum heights mirroring riser conditions at the outflow of a drainage ditch. The two remaining ditches (ditches 1 and 8) served as controls with no weirs and no nutrient runoff events. All ditches had standpipes (Fig. 1) set at maximum height to retain water volumes. Ditches with weirs were hypothesized to increase hydraulic volumes because weir crest heights and weir placements were higher than the standpipe height at the ditch outflows. Weir heights were also stair-stepped so that each weir was sequentially higher than the previous weir and standpipe.
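The flow-rate derivation described above can be sketched as follows. This is a minimal illustration, not the authors' calculation: it assumes a rectangular wetted cross-section, and it adds the conventional nominal residence time (volume divided by discharge), which the text does not state explicitly. The velocity and depth readings are hypothetical; only the mean ditch width (1.89 m) and the mean weir-ditch volume (28.2 m3) come from the paper.

```python
def flow_rate_m3_s(velocity_m_s: float, width_m: float, depth_m: float) -> float:
    """Discharge Q = v * A, assuming a rectangular wetted cross-section."""
    if min(velocity_m_s, width_m, depth_m) < 0:
        raise ValueError("velocity, width, and depth must be non-negative")
    return velocity_m_s * width_m * depth_m


def nominal_hrt_min(volume_m3: float, q_m3_s: float) -> float:
    """Nominal hydraulic residence time (minutes) = stored volume / discharge."""
    if q_m3_s <= 0:
        raise ValueError("discharge must be positive")
    return volume_m3 / q_m3_s / 60.0


# Hypothetical meter reading (0.02 m/s) and water depth (0.25 m);
# width and volume are the mean values reported in the paper.
q_example = flow_rate_m3_s(velocity_m_s=0.02, width_m=1.89, depth_m=0.25)
hrt_example = nominal_hrt_min(volume_m3=28.2, q_m3_s=q_example)
print(f"Q = {q_example:.5f} m3/s, nominal HRT = {hrt_example:.1f} min")
```

With a fixed inflow valve setting, any extra volume held behind weirs raises the nominal residence time proportionally, which is why ditch volume and flow rate are compared between treatments in the results.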

Fig. 1 Artificial drainage ditch setup at the Arkansas State University agricultural research facility. Eight primary drainage ditches were plumbed to receive inflow from a groundwater retention pond. All drainage ditches were vegetated with a mixture of annual and perennial herbaceous species and obligate wetland plants. Ditches 3, 5, and 6 were weired systems, with two weirs constructed in each system. Ditches 2, 4, and 7 were riser systems, with standpipes set at maximum height to maximize ditch volume retention.

2.2 Nutrient Runoff Simulations

Runoff events were simulated as nutrient slugs pulsing through each drainage ditch system. Ditches 2–7 were dosed with an ammonium nitrate (NH4NO3) and triple superphosphate (TSP) nutrient slurry. The first runoff event delivered a nutrient pulse at high nitrate-N concentrations (15 mg L−1), while the second runoff event was delivered at low nitrate-N (2 mg L−1). Phosphate concentrations were kept even (10 mg L−1) between runoff events. Nutrient pulses were calculated to be similar to potential runoff concentrations immediately leaving agricultural acreage (36 ha) postfertilization and runoff occurring 3 weeks postapplication for nitrate-N (NO3−-N), ammonium-N (NH4+-N), and total phosphorus. The high concentrations enabled toxicity analyses and the development of nutrient toxicity thresholds (unpublished data not herein discussed). Weights (kilograms) of NH4NO3 and TSP were individually determined per drainage ditch and mixing chamber volume to achieve the abovementioned targeted nutrient concentrations within the ditch. Large 190-L polyethylene mixing chambers were filled with groundwater from the detention ponds and mixed to 133 L. Nutrient slugs were dosed from the mixing chambers to each treatment drainage ditch in sequential fashion, with all doses delivered within 2 min of experiment initiation. All ditches were at maximum water volumes, with flow through occurring for at least 30 min and head pressure equilibrium being maintained in a detention pond prior to the nutrient runoff event.
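The dosing arithmetic implied above can be sketched as a stoichiometric calculation. This is an illustrative assumption rather than the authors' worksheet: it treats the fertilizer as pure NH4NO3, targets the concentration in the ditch volume alone (ignoring the 133-L mixing chamber and any background nitrate), and uses the mean riser-ditch volume reported later in the paper (29.2 m3).

```python
# Standard atomic and molar masses (g/mol).
N_ATOMIC_MASS = 14.007
NH4NO3_MOLAR_MASS = 80.043  # 2 N + 4 H + 3 O


def nh4no3_mass_g(target_no3_n_mg_l: float, volume_l: float) -> float:
    """Mass of pure NH4NO3 needed to reach a target nitrate-N concentration.

    Each mole of NH4NO3 supplies one mole of nitrate-N (and, equally,
    one mole of ammonium-N), so the salt mass is the required N mass
    scaled by the ratio of molar masses.
    """
    if target_no3_n_mg_l < 0 or volume_l <= 0:
        raise ValueError("concentration must be >= 0 and volume > 0")
    n_mass_g = target_no3_n_mg_l * volume_l / 1000.0  # mg -> g
    return n_mass_g * NH4NO3_MOLAR_MASS / N_ATOMIC_MASS


DITCH_VOLUME_L = 29.2 * 1000.0  # mean riser-ditch volume from the paper

high_dose_g = nh4no3_mass_g(15.0, DITCH_VOLUME_L)  # first (high) event
low_dose_g = nh4no3_mass_g(2.0, DITCH_VOLUME_L)    # second (low) event
print(f"high event: {high_dose_g:.0f} g, low event: {low_dose_g:.0f} g")
```

Because the salt delivers ammonium-N and nitrate-N in equal molar amounts, setting the nitrate-N target also fixes the ammonium-N dose under these assumptions.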

Water quality parameters of pH, dissolved oxygen (DO) (milligrams per liter), and conductivity (EC; microsiemens per centimeter) were recorded with a handheld YSI® 85 (Yellow Springs Instrumentation, OH, USA) and recorded every time water was sampled. Water samples were taken in duplicate every hour for 8 h, providing eight initial points on an outlet breakthrough curve. Subsequent water sampling took place at 16, 24, 48, and 168 h (7 days). Background samples were also recorded for each respective ditch (n = 3 per ditch) and for the detention pond (n = 6). Water samples were removed from their respective mixing chambers to analyze for initial slug concentration and load. Water samples were taken from the outflows of each respective drainage ditch with 230 ml polyethylene Fisher Scientific specimen containers. All water samples were placed on ice (≤4°C) and transported back to the United States Department of Agriculture—Agricultural Research Service, National Sedimentation Laboratory’s water quality laboratory. Within 24 h, all available water samples were filtered and aliquots disseminated for appropriate nutrient analysis.

2.3 Nutrient Analyses

Water samples were analyzed for NH4+-N, NO3−-N, dissolved inorganic orthophosphate (DIP), and total inorganic orthophosphate (TIP). Samples analyzed for NH4+-N, NO3−-N, and DIP were filtered through 0.45-μm Whatman cellulose nitrate membranes. The cadmium reduction method was used to analyze NO3−-N, whereas NH4+-N was analyzed using the phenate method (APHA 1998). Dissolved inorganic orthophosphate and TIP were analyzed using colorimetric reaction methods described by Murphy and Riley (1962) on digested filtered and unfiltered samples, respectively. All analyses were performed using a Thermo Spectronic Genesys 10 ultraviolet spectrophotometer.

2.4 Data and Statistical Analyses

Duplicate hourly water samples and instrument duplicates were held to 20% and 10% relative percentage differences, respectively, for all nutrient analyses to verify quality assurance on lab analyses and instrument readings. Duplicate outflow water samples were averaged and subtracted from inflow concentrations to determine nutrient concentration reduction percentages for each hour and ditch replicate. Outflow concentrations were individually plotted against time to create breakthrough curves for each ditch outlet. Relative concentration differences between inflow and outflow for each breakthrough curve were compared between controlled drainage treatments to observe differences. All breakthrough curves had mean control nutrient concentrations subtracted from outlet concentrations to determine changes to amended nutrient concentrations. Breakthrough curves for each runoff experiment had eight representative points, with an additional three sampled at 16, 24, and 48 h. One-way ANOVAs (α = 0.05) were conducted to determine statistical differences in volumes and flow rates between control, riser, and weir systems. Percentage reduction data were tested for normality using the Shapiro–Wilk W test in JMP 8 (SAS 2008). Percentage data that failed the Shapiro–Wilk W test were arcsine transformed to satisfy the parametric (Student's t test; ANOVA) statistical assumptions. If data still were not normally distributed, a Wilcoxon Mann–Whitney U test was used to test for differences between treatments. Maximum concentration was defined as the maximum concentration recorded on the breakthrough curve at the outlet of each respective ditch for the duration of each runoff event. Runoff events were analyzed independently for concentration reductions, but events were combined to analyze load reductions. Percent load reductions within each ditch were calculated with the following equation:

where I_c is inflow concentration (milligrams per liter), T_over is the time for the slug introduction, O_Cn and O_Cn+1 are outflow concentrations (milligrams per liter) at time n (T_n; seconds) and time n + 1 (T_n+1; seconds), and Q is flow (cubic meters per second) for each ditch. Loads were calculated by assuming inflow rates equaled outflow rates, with the systems at the beginning of both runoff events being in a state of hydrological equilibrium (loss to groundwater was assumed negligible). Load reductions were quantified as a range of percentage reductions based on the maximum and minimum percent reductions attained as a result of variability in reduction capacity between the replicated systems. Compared means between riser and weir treatments were considered statistically different below an alpha of 0.1.
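One way to read these definitions is as a trapezoidal integration of the outlet breakthrough curve compared against the dosed inflow load. The sketch below follows that reading. It is an assumption based only on the symbol definitions above, not a transcription of the published equation, and the sample values are hypothetical.

```python
L_PER_M3 = 1000.0


def inflow_load_mg(i_c_mg_l: float, t_over_s: float, q_m3_s: float) -> float:
    """Dosed load: inflow concentration x dosing time x flow."""
    return i_c_mg_l * t_over_s * q_m3_s * L_PER_M3


def outflow_load_mg(times_s, conc_mg_l, q_m3_s: float) -> float:
    """Trapezoidal sum over successive outlet samples (O_Cn, O_Cn+1)."""
    if len(times_s) != len(conc_mg_l) or len(times_s) < 2:
        raise ValueError("need matching series with at least two samples")
    load = 0.0
    for n in range(len(times_s) - 1):
        dt = times_s[n + 1] - times_s[n]
        mean_conc = (conc_mg_l[n] + conc_mg_l[n + 1]) / 2.0
        load += mean_conc * dt * q_m3_s * L_PER_M3
    return load


def percent_load_reduction(i_c_mg_l, t_over_s, times_s, conc_mg_l, q_m3_s):
    """100 x (inflow load - outflow load) / inflow load; Q is assumed
    equal at inflow and outflow, as stated in the text."""
    load_in = inflow_load_mg(i_c_mg_l, t_over_s, q_m3_s)
    load_out = outflow_load_mg(times_s, conc_mg_l, q_m3_s)
    return 100.0 * (load_in - load_out) / load_in


# Hypothetical slug: 500 mg/L dosed over 120 s at Q = 0.001 m3/s,
# with control-corrected outlet samples taken hourly.
example_reduction = percent_load_reduction(
    i_c_mg_l=500.0,
    t_over_s=120.0,
    times_s=[0, 3600, 7200, 10800],
    conc_mg_l=[0.0, 2.0, 1.0, 0.0],
    q_m3_s=0.001,
)
print(f"load reduction = {example_reduction:.1f}%")
```

Under this reading, a negative result would indicate that more mass left the ditch than was dosed, which corresponds to the net export behaviour reported for ammonium in the results.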

3 Results and Discussion

3.1 Physicochemical Conditions

There were no statistically significant differences (ANOVA, arcsine transformed) between control ditches and ditches with weirs and risers for DO, temperature, pH, and conductivity for either runoff event. Average DO (7.5 mg L−1) concentrations typically increased throughout the day within all ditches with increased radiation and microbial photosynthetic activity but were not significantly different from the groundwater supplied to each drainage ditch system. Temperature (29.9°C), pH (7.1), and conductivity (279 μS cm−1) showed no significant variability between, within, and among drainage ditches and nutrient runoff events. Comparing water volumes and flow rates, a one-way ANOVA (F_vol = 2.8, P = 0.14; F_flow = 0.25, P = 0.7) showed no statistically significant differences between control (C), riser (R), and weir (W) systems (C, 24.4 ± 1.5 m3; R, 29.2 ± 1.3 m3; W, 28.2 ± 1.3 m3) (Table 1), as a result of the high variability between replicates. This nonsignificant difference was expected from a theoretical standpoint because of a lack of statistical power (β = 0.07, sample size and effect) and from an agricultural standpoint because riser systems mimic high-water hydrological circumstances in a typical southern agricultural landscape. Larger drainage systems are anticipated to have a greater longitudinal gradient, allowing for an increased number of weirs, which would provide a larger water volume capacity than a single riser outflow ditch. The flow rate and volume of water were scaled with respect to ditch size, and thus the results would be transferable with commensurate increases in ditch size and residence. Residence times for breakthrough concentrations (i.e., maximum concentration at the outlet) varied between replicates for each runoff event (Figs. 2 and 3). In most cases, mean weir residence times were longer than those of riser ditches, except for nitrate in the low nutrient experiment (Figs. 2 and 3).
There were no significant differences between riser, weir, and control ditches in the hydraulic residence of breakthrough curves (ANOVA, F = 0.28, P = 0.3) as a result of high variability between treatment replicates.
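The residence time used here is the time at which the control-corrected outlet concentration peaks. A minimal sketch of that extraction, together with the percentage concentration reduction relative to the dosed concentration, is shown below. The hourly series and the background value are hypothetical, and the function names are illustrative rather than taken from the study.

```python
def breakthrough_peak(times_h, outlet_mg_l, control_mg_l: float):
    """Return (time of peak, peak concentration) after subtracting the
    mean control (background) concentration from each outlet sample."""
    if len(times_h) != len(outlet_mg_l) or not times_h:
        raise ValueError("need matching, non-empty series")
    corrected = [c - control_mg_l for c in outlet_mg_l]
    peak_index = max(range(len(corrected)), key=corrected.__getitem__)
    return times_h[peak_index], corrected[peak_index]


def percent_concentration_reduction(inflow_mg_l: float, peak_mg_l: float) -> float:
    """Positive values are reductions; negative values mean the outlet
    peak exceeded the dosed concentration (a net increase)."""
    if inflow_mg_l <= 0:
        raise ValueError("inflow concentration must be positive")
    return 100.0 * (inflow_mg_l - peak_mg_l) / inflow_mg_l


# Hypothetical hourly outlet nitrate-N series for one ditch (mg/L),
# with a hypothetical background of 0.3 mg/L and a 15 mg/L dose.
hours = [1, 2, 3, 4, 5, 6, 7, 8]
outlet = [0.4, 0.9, 2.1, 3.6, 2.8, 1.5, 0.8, 0.5]
peak_time_h, peak_conc = breakthrough_peak(hours, outlet, control_mg_l=0.3)
reduction_pct = percent_concentration_reduction(15.0, peak_conc)
print(f"peak at {peak_time_h} h, reduction = {reduction_pct:.0f}%")
```

With only hourly samples, the peak time is resolved to the nearest sampling interval, which may contribute to the replicate-to-replicate variability in residence shown by the horizontal error bars.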

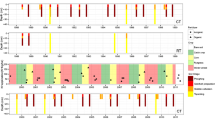

Fig. 2 Average maximum breakthrough concentrations and residence (±S.E.) for ammonium, nitrate, DIP, and TIP for weir and riser ditches for the high nutrient runoff experiment. Dashed lines represent an average amended nutrient concentration to each ditch. Control ditches did not receive any nutrient amendments. Vertical error bars highlight variance in outlet concentration, and horizontal error bars highlight variance in residence time for maximum outlet concentrations between treatment replicates.

Fig. 3 Average maximum breakthrough concentrations and residence (±S.E.) for ammonium, nitrate, DIP, and TIP for weir and riser ditches for the low nutrient runoff experiment. Dashed lines represent an average amended nutrient concentration to each ditch. Control ditches did not receive any nutrient amendments. Vertical error bars highlight variance in outlet concentration, and horizontal error bars highlight variance in residence time for maximum outlet concentrations between treatment replicates.

3.2 Nitrogen Concentrations

Figure 4 reports the lack of changes in the control ditches with time for NO3− and NH4+ for both runoff events (Figs. 4a, b). Relative breakthrough concentrations with time have been plotted for NO3− and NH4+ for the high (Fig. 2) and low (Fig. 3) runoff events. Error bars in the vertical plane denote variability between replicates for maximum breakthrough outlet concentrations, while error bars in the horizontal plane denote variability in the time taken to reach the peak in the breakthrough curve. Ammonium concentrations increased between mixing chambers and outflow in both weir and riser systems for both nutrient runoff events (Figs. 2 and 3). Riser ditches had a higher increase (−132 ± 66%) in NH4+ concentration over the weired system (22% ± 27%) in the first runoff event. In the second nutrient runoff event, however, weired ditches increased NH4+ concentrations by twofold (weir, −97% vs. riser, −18%). These increases in NH4+ over the amended concentration, as well as the role reversal between controlled drainage treatments, were hypothesized to be an artifact of the antecedent biogeochemical history of the previous nutrient addition, which loaded the system with positively charged, adsorbed NH4+ ions associated with fine, settled particulate matter. A second nutrient runoff event, causing re-inundation and flow, possibly resuspended and released adsorbed NH4+ ions and increased concentrations at the outflow. There are few studies that discuss NH4+ reduction associated with controlled drainage. In contrasting studies, Martin et al. (1997) showed that the release of NH4+ in histosols in the Everglades was not influenced by changes in water depth. Amatya et al. (1998), however, showed that in pine plantations the use of controlled drainage reduced outflows and thus reduced corresponding nutrient and sediment exports. This same study showed a variable decrease in NH4+ concentrations between 7% and 72%.

Fig. 4 Average (±S.E.) control nutrient concentrations for ammonium, nitrate, DIP, and TIP for the high (a) and low (b) amended nutrient experiments. Control concentrations were assumed background to all ditches and thus treatment concentrations were reflected as subtractions of control values. Spikes in TIP and DIP occurred at 60 and 120 min and 180 min, respectively.

There were significantly contrasting results for NO3− reduction between high (Fig. 2) and low (Fig. 3) concentration runoff events. Nitrate concentrations were significantly reduced between inflow and outflow in both weir and riser drainage systems in the initial, high concentration runoff event (Fig. 2). Reductions in NO3− were likely due to saturated soil conditions, driving a reduction in redox potential and inducing denitrification (Dinnes et al. 2002; Burchell et al. 2005; Evans et al. 2007; Sharpley et al. 2007). There was a significantly (z = 2.36; P = 0.018) higher decrease (97 ± 3.5%) in NO3− concentration between inflows and outflows for the weired system as compared to the riser ditches, which reduced NO3− by approximately 79 ± 18%. The riser reduction value was higher than what Kröger et al. (2007) found in field studies, where drainage ditches without a definitive controlled drainage strategy showed a 53% reduction in NO3− concentrations from fertilized cotton fields. Needelman et al. (2007) observed two drainage ditches in Minnesota under baseflow conditions. Results showed a greater decline in NO3− concentrations (71%) in the ditch with controlled drainage vs. that observed without a water control structure. Lalonde et al. (1996) reported that ditch drain flow was reduced by 58–95% with controlled drainage, which resulted in a 62–95% reduction in NO3−.

Interestingly, a second nutrient runoff event at a lower NO3− concentration flipped both weired and riser ditches from being NO3− sinks to NO3− sources, contrary to previous published studies on drainage ditch nutrient reduction capacity (Kröger et al. 2007; Moore et al. 2010). Outflow concentrations were higher than the dosed concentrations in both weired and riser ditches (Fig. 3). Since concentrations added were low (0.3 mg L−1) and similar to background concentrations reported in the control ditches, it seems that the amended concentrations had an additive effect on the system resulting in higher concentrations as peaks in the breakthrough curves. These results potentially highlight the lack of nutrient reduction at low concentrations of NO3−.

The success of controlled drainage in reducing NO3−, whether on an outflow volume or concentration/load basis, is well documented (Gilliam and Skaggs 1986; Gilliam et al. 1979; Evans et al. 1995). The effect of multiple storm events, however, on NO3− reduction capacity has drawn less attention. We caution the use of these data to extrapolate to concurrent storm events in time. This study looked at two runoff events with different inflow concentrations. Predictably, seasonality, influent concentration and load, and the antecedent history of the drainage ditch will more than likely lead to variable nitrogen reduction results. It can be hypothesized, however, what biogeochemical processes are occurring within the system. A multitude of factors could have contributed to the lack of NO3− transformation under the second, lower concentrated runoff event. Studies have shown that for denitrification to occur, a readily available carbon source and high effluent NO3− load are important (Sirivedhin and Gray 2006). Starr and Gillhorn (1993) failed to detect any denitrification in inundated areas where organic carbon was lacking. In addition to readily available carbon, Bachand and Horne (2000) and Seitzinger (2008) demonstrated that lower influent NO3− concentrations constrained the rate of denitrification in wetland systems. A high influent NO3− concentration, typical of these agricultural systems, would result in a greater rate of denitrification. These systems are more than likely N-limited, with NO3− concentrations constraining N production pathways.

3.3 Phosphorus Concentrations

Figure 4 highlights background concentrations of DIP and TIP. There were noticeable spikes, similar in magnitude, in background TIP concentrations in both runoff experiments (Figs. 4a, b). This is hypothesized to be a result of variations in suction and hydraulic head increasing turbidity with inflow from the detention basin. Phosphorus results were the opposite of the results obtained for NH4+ and NO3− and variable between runoff events (Figs. 2 and 3). In the initial nutrient runoff event, there were significant differences (z = 1.68, P = 0.09) in DIP concentrations (Fig. 3) between weired ditches (77 ± 29%) and riser ditches (47 ± 47%). High inflow rates, high soil clay contents (unpublished data), and low soil conductivity were hypothesized to influence the interaction of pore soil water and the overlying water column, thus improving reduction capacities in both drainage systems. Kröger et al. (2008b) showed that field drainage ditches reduced DIP concentrations and loads by 47% without any controlled drainage strategy, identical results to the riser ditches in this study. Total inorganic P concentrations significantly increased in riser ditches (10.5 mg L−1), as compared to weired ditches (4.6 mg L−1) (Fig. 2) over amended concentrations (W, 5.3 mg L−1; R, 2.8 mg L−1). Turbulence and generation of particulates with the slug dose, as well as a lack of sedimentation in the riser system, are hypothesized to have increased TIP concentrations (>100%). Interestingly, the second nutrient runoff event (Fig. 3) resulted in near identical DIP (z = −1.89; P = 0.1) and TIP (z = −0.93; P = 0.34) reductions between weired (DIP, 70 ± 17%; TIP, 58 ± 19%) and riser ditches (DIP, 80 ± 25%; TIP, 21 ± 110%). Seeing that both drainage strategies had similar substrate constituents, more than likely adsorptive capacities and biogeochemical exchange mechanisms were saturated in both systems from the first event, resulting in similar reduction potentials.
Phosphorus concentrations and load reductions in controlled drainage are interesting since, unlike N, P cycling is predominantly a biogeochemically mediated, as opposed to a biological, process. Phosphorus can sorb to or be desorbed from sediments provided adequate iron and aluminum oxides are present and redox conditions allow for sorption (Dunne et al. 2007a, b; Olli et al. 2009; Zhuan-Xi et al. 2009). Sedimentation, the reduction in flow velocities, and the settling of particulate P associated with fine suspended particulate matter are dominant processes for P mitigation, though temporary. Amatya et al. (1998) showed with controlled drainage that TP export was reduced as a result of a decrease in outflow rates. Evans et al. (1992), in field scale drainage systems, demonstrated that drainage control under poorly drained soils in North Carolina reduced P losses by 30–50% as compared to uncontrolled drainage systems. Similarly, Wesstrom and Messing (2007) noted that controlled drainage significantly lowered P loads in drain outflows.

3.4 Overall Nutrient Reductions and Load Comparisons

Tables 2 and 3 show the variability in load reductions for both weired and riser ditches. There was a lack of statistical power to compare loads between weired and riser ditches from mean percent reductions based on combining storm events using a Wilcoxon Mann–Whitney U test. In evaluating the 5% and 95% standard error percent reductions for nitrogen and phosphorus load reductions, there is significant variability and range in load reductions for both riser and weired ditches, and thus a high probability that there were no significant differences in reductions between nitrogen and phosphorus species. Ammonium was the only species to increase in load, but it occurred in both systems. It is difficult to understand the reason for increases in NH4+ concentrations and loads within both weired and riser ditches. It can only be hypothesized that controlled drainage and increased volumes within each system improved conditions for microbial mineralization of organic N, increasing the concentration of NH4+ and subsequent loads. Similar flooding and biogeochemical circumstances between riser and weir ditches also resulted in similar nitrate, DIP, and TIP loads being reduced (Tables 2 and 3). The increased residence time and increased ditch surface area of anaerobic substrate inundated under the weired system are hypothesized to have significantly increased denitrification potential and resulted in high reductions in NO3− load for weired systems and riser ditches. Similarly, the decreased flow velocities and similar sediment substrates between the controlled drainage systems resulted in equal TIP and DIP load reductions. These data highlight that drainage ditches are useful in nutrient reductions, confirming past work (Kröger et al. 2007, 2008b; Moore et al. 2010; Needelman et al. 2007), and also substantiate controlled drainage literature on the effectiveness of controlled drainage strategies in nutrient reductions (Shirmohammadi et al. 1995; Thomas et al. 1991; Borin et al. 2001; Thomas et al. 1995).

4 Conclusions

A comparison of weir vs. riser drainage systems showed variable overall differences (post two nutrient runoff events) in percentage nutrient concentration reductions between nitrogen and phosphorus species. Using weirs and risers as controlled drainage strategies, however, did significantly reduce outflow concentrations and loads from measured inflow concentrations and loads for NO3−, DIP, and TIP, highlighting the effectiveness of controlled drainage to reduce nutrients. Often there were unexplained variations in nutrient reductions in both drainage systems when a second nutrient event was dosed to the system. Future research aims to move comparisons of experimental nutrient reductions with weirs to field based research. Further information is needed on the field mitigation capacities of drainage strategies over the growing season, when systems typically have no controlled drainage, and on the role heterogeneous anthropogenic inputs have on mitigation capacity in these primary aquatic systems. Additionally, new weir designs will be evaluated between controlled, replicated events and natural field circumstances to evaluate best management practice (low-grade weirs) effectiveness in nutrient reductions.

References

Amatya, D. M., Gilliam, J. W., Skaggs, R. W., Lebo, M. E., & Campbell, R. G. (1998). Effects of controlled drainage on forest water quality. Journal of Environmental Quality, 27, 923–935.

APHA. (1998). Standard methods for the examination of water and wastewater (20th ed.). Washington D.C.: American Public Health Association.

Bachand, P. A. M., & Horne, A. J. (2000). Denitrification in constructed free-water surface wetlands: 1. Very high nitrate removal rates in macrocosm study. Ecological Engineering, 14, 9–15.

Borin, M., Bonaiti, G., & Giardini, L. (2001). Controlled drainage and wetlands to reduce agricultural pollution: a lysimetric study. Journal of Environmental Quality, 30, 1330–1340.

Burchell, M. R., Skaggs, R. W., Chescheir, G. M., Gilliam, J. W., & Arnold, L. A. (2005). Shallow subsurface drains to reduce nitrate losses from drained agricultural lands. Transactions of the ASAE, 48(3), 1079–1089.

Cooper, C. M. (1993). Biological effects of agriculturally derived surface water pollutants on aquatic systems—a review. Journal of Environmental Quality, 22, 402–408.

Dinnes, D. L., Karlen, D. L., Jaynes, D. B., Kaspar, T. C., Hatfield, J. L., Colvin, T. S., et al. (2002). Nitrogen management strategies to reduce nitrate leaching in tile-drained mid-western soils. Agronomy Journal, 94, 153–171.

Dunne, E. J., McKee, K. A., Clark, M. W., Grunwald, S., & Reddy, K. R. (2007a). Phosphorus in agricultural ditch soil and potential implications for water quality. Journal of Soil and Water Conservation, 62(4), 244–252.

Dunne, E. J., Smith, J., Perkins, D. B., Clark, M. W., Jawitz, J. W., & Reddy, K. R. (2007b). Phosphorus storages in historically isolated wetland ecosystems and surrounding pasture uplands. Ecological Engineering, 31, 16–28.

Evans, R. O., Parsons, J. E., Stone, K., & Wells, W. B. (1992). Water table management on a watershed scale. Journal of Soil and Water Conservation, 1, 58–64.

Evans, R. O., Skaggs, R. W., & Gilliam, J. W. (1995). Controlled versus conventional drainage effects on water quality. Journal of Irrigation and Drainage Engineering, 121(4), 271–275.

Evans, R. O., Bass, K. L., Burchell, M. R., Hinson, R. D., Johnson, R., & Doxey, M. (2007). Management alternatives to enhance water quality and ecological function of channelized streams and drainage canals. Journal of Soil and Water Conservation, 62(4), 308–320.

Gilliam, J. W., & Skaggs, R. W. (1986). Controlled agricultural drainage to maintain water quality. Journal of Irrigation and Drainage Engineering, 112(3), 254–263.

Gilliam, J. W., Skaggs, R. W., & Weed, S. B. (1979). Drainage control to diminish nitrate loss from agricultural fields. Journal of Environmental Quality, 8(1), 137–142.

Hammer, D. A. (1999). Designing constructed wetlands systems to treat agricultural nonpoint source pollution. In J. Vymazal (Ed.), Nutrient cycling and retention in natural and constructed wetlands. Leiden: Backhuys Publishers.

Kröger, R., Holland, M. M., Moore, M. T., & Cooper, C. M. (2007). Hydrological variability and agricultural drainage ditch inorganic nitrogen reduction capacity. Journal of Environmental Quality, 36, 1646–1652.

Kröger, R., Cooper, C. M., & Moore, M. T. (2008a). A preliminary study of an alternative controlled drainage strategy in surface drainage ditches: low-grade weirs. Agricultural Water Management, 95, 678–684.

Kröger, R., Holland, M. M., Moore, M. T., & Cooper, C. M. (2008b). Agricultural drainage ditches mitigate phosphorus loads as a function of hydrological variability. Journal of Environmental Quality, 37, 107–113.

Lalonde, V., Madramootoo, C. A., Trenholm, L., & Broughton, R. S. (1996). Effects of controlled drainage on nitrate concentrations in subsurface drain discharge. Agricultural Water Management, 29, 187–199.

Martin, H. W., Ivanoff, D. B., Graetz, D. A., & Reddy, K. R. (1997). Water table effects on histosol drainage water carbon, nitrogen and phosphorus. Journal of Environmental Quality, 26, 1062–1071.

Moore, M. T., Bennett, E. R., Cooper, C. M., Smith, S., Jr., Shields, F. D., Jr., Milam, C. D., et al. (2001). Transport and fate of atrazine and lambda-cyhalothrin in an agricultural drainage ditch in the Mississippi Delta, USA. Agriculture Ecosystems & Environment, 87, 309–314.

Moore, M. T., Kröger, R., Locke, M. A., Cullum, R. F., Steinriede, R. W., Jr., Testa, S., et al. (2010). Nutrient mitigation capacity in Mississippi Delta, USA drainage ditches. Environmental Pollution, 158, 175–184.

Murphy, J., & Riley, J. P. (1962). A modified single solution method for the determination of phosphate in natural waters. Analytica Chimica Acta, 27, 31–36.

Needelman, B. A., Kleinman, P. J. A., Strock, J. S., & Allen, A. L. (2007). Improved management of agricultural drainage ditches for water quality protection: an overview. Journal of Soil and Water Conservation, 62(4), 171–178.

Olli, G., Darracq, A., & Destouni, G. (2009). Field study of phosphorus transport and retention in drainage reaches. Journal of Hydrology, 365, 46–55.

SAS. (2008). JMP 8 introductory guide. Cary, NC, USA: SAS Institute Inc.

Seitzinger, S. (2008). Nitrogen cycle: out of reach. Nature, 452, 162–163.

Sharpley, A. N., Krogstad, T., Kleinman, P. J. A., Haggard, B. E., Shigaki, F., & Saporito, L. S. (2007). Managing natural processes in drainage ditches for nonpoint source phosphorus control. Journal of Soil and Water Conservation, 62(4), 197–206.

Shirmohammadi, A., Wenberg, R. D., Ritter, W. F., & Wright, F. S. (1995). Effect of agricultural drainage on water quality in Mid-Atlantic states. Journal of Irrigation and Drainage Engineering, 121(4), 302–306.

Sirivedhin, T., & Gray, K. A. (2006). Factors affecting denitrification rates in experimental wetlands: field and laboratory studies. Ecological Engineering, 26, 167–181.

Skaggs, R. W., Breve, M. A., & Gilliam, J. W. (1994). Hydrologic and water quality impacts of agricultural drainage. Critical Reviews in Environmental Science and Technology, 24(1), 1–32.

Starr, R. C., & Gillham, R. W. (1993). Denitrification and organic carbon availability in two aquifers. Groundwater, 31, 934–947.

Thomas, D. L., Shirmohammadi, A., Lowrance, R. R., & Smith, M. C. (1991). Drainage-subirrigation effect on water quality in Georgia flatwoods. Journal of Irrigation and Drainage Engineering, 117(1), 123–137.

Thomas, D. L., Perry, C. D., Evans, R. O., Izuno, F. T., Stone, K. C., & Gilliam, J. W. (1995). Agricultural drainage effects on water quality in southeastern U.S. Journal of Irrigation and Drainage Engineering, 121(4), 277–282.

Wells, H. W. (1992). Pollution prevention. Pollution Engineering, 24(1), 23–25.

Wesstrom, I., & Messing, I. (2007). Effects of controlled drainage on N and P losses and N dynamics in a loamy sand with spring crops. Agricultural Water Management, 87, 229–240.

Wesstrom, I., Messing, I., Linner, H., & Lindstrom, J. (2001). Controlled drainage—effects on drain outflow and water quality. Agricultural Water Management, 47, 85–100.

Wright, J. A., Shirmohammadi, A., Magette, W. L., Fouss, J. L., Bengston, R. L., & Parsons, J. E. (1992). Water table management practice effects on water quality. Transactions of the ASAE, 35(3), 823–831.

Zhuan-Xi, L., Bo, Z., Jia-Liang, T., & Tao, W. (2009). Phosphorus retention capacity of agricultural headwater ditch sediments under alkaline condition in purple soils area, China. Ecological Engineering, 35, 57–64.

Acknowledgments

The authors wish to thank Arkansas State University for the use of the artificial drainage systems, Renee Russell for nutrient analyses, and the staff, ecologists, and biologists in the Water Quality Ecology Unit for comments and reviews on earlier drafts of the manuscript. Special acknowledgments go to Lisa Brooks for the organization, management, and coordination of water quality samples. The authors also thank the anonymous reviewers, who generously gave their time and whose comments substantially improved the manuscript.

Cite this article

Kröger, R., Moore, M.T., Farris, J.L. et al. Evidence for the Use of Low-Grade Weirs in Drainage Ditches to Improve Nutrient Reductions from Agriculture. Water Air Soil Pollut 221, 223–234 (2011). https://doi.org/10.1007/s11270-011-0785-x


DOI: https://doi.org/10.1007/s11270-011-0785-x