Abstract

A distributed arithmetic (DA)-based decision feedback equalizer architecture for IEEE 802.11b PHY scenarios is presented. As the transmission data rate increases, the hardware complexity of the decision feedback equalizer increases due to requirement for large number of taps in feed forward and feedback filters. DA, an efficient technique that uses memories for the computation of inner product of two vectors, has been used since DA-based realization of filters can lead to great computational savings. For higher-order filters, the memory-size requirement in DA would be high, and so ROM decomposition has been employed. The speed is further increased by employing digit-serial input operation. Two architectures have been presented, namely the direct-memory architecture and reduced-memory architecture where the later is derived using the former. A third architecture has also been presented where the offset-binary coding scheme is employed along with the ROM decomposition and digit-serial variants of DA. Synthesis results on Altera Cyclone III EP3C55F484C6 FPGA show that the proposed DA-based implementations are free of hardware multipliers and use less number of hardware resources compared to the multiply-and-accumulate-based implementation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

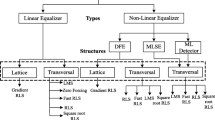

In telecommunications, intersymbol interference (ISI) occurs because of the multipath propagation of the transmitted signal and due to the band limited nature of channels. Equalizers [19, 20] are employed at the receiver end in order to cancel the effect of multipath channels. Linear equalizers can counter the ISI by estimating the inverse of the discrete time model of the channel. However, noise power would theoretically become infinity at those frequencies where the channel transfer function becomes zero. Even, in the absence of spectral nulls, there would be a substantial enhancement of noise power in the vicinity of those frequencies which are greatly attenuated within the bandwidth of the transmitted signal. Decision feedback equalizers (DFE) [7] are widely used for effectively equalizing the channels that exhibit nulls in their frequency spectrum . Unlike the linear equalizers, DFEs do not estimate the inverse of the channel directly. A DFE basically consists of a feed forward filter (FFF), a feedback filter (FBF) and a decision device (quantizer). The FFF works directly on the received data and equalizes the anti-causal part of the channel transfer function. The residual ISI at the FFF output is then cancelled out by subtracting the FBF output from the FFF output. The FBF whose coefficients are carefully chosen operates on the decisions made on the past symbols. The DFE works basically on the assumption that there are no errors at the output of the decision device. As long as the decisions are correct, the DFE can equalize the channel effectively with low noise enhancement.

The problem with DFEs is that the sizes of FFF and FBF increase as the transmission data rate increases. This is because of the fact that as the transmission data rate increases, more and more symbols get overlapped, which demands for large number of taps for FFF and FBF. Hence, when implementing the DFEs, more number of multiply-and-accumulate (MAC) units are to be employed and operated in parallel in order to cope up with the transmission speed. But, due to the presence of large number of multipliers, the system would become complex and the real-time implementation becomes difficult. Distributed arithmetic [5, 31], an efficient technique to compute the sum-of-product of two vectors, can be employed since it can realize vectors of any size without the presence of a hardware multiplier. Further, DA-based realization can lead to good throughput achievements since the computation speed depends on the bit-length of the input vectors unlike the MAC-based implementation where it depends on the length of the vectors. Most of the digital signal processing (DSP) algorithms such as convolution, correlation and fast transforms are principally the sum-of-product operations [15]. Hence, past work on DA-based realization mostly included finite and infinite impulse-response digital filters [2, 3, 28, 29, 34], transforms [8, 9, 22, 33], rotation operations [4, 27], adaptive digital filters [1, 10, 26, 30]. Recent works based on distributed arithmetic once again include transforms and filtering [11–14, 16–18, 21, 23, 32]. In the most recent work [24, 25], a block floating point realization (BFP) of DFE and adaptive DFE (ADFE) have been presented where the processing cost is marginally higher compared to the fixed point implementation. So far, DA technique has not been exploited for the efficient realization of the decision feedback equalizer. In this paper, we present a DA-based decision feedback equalizer architecture that consumes less hardware resources operating at high frequency compared to conventional (MAC-based) DFE implementations.

The paper is organized as follows: Section 2 presents the background of DA concept. Section 3 presents the proposed DA-based implementation of DFE where DA treatment to both FFF and FBF blocks are carried out separately. The analysis of hardware complexity and speed of MAC-based and DA-based schemes and their implementation details are discussed in Sect. 4, and the superiority of the proposed scheme over MAC-based scheme is also described here. In the entire paper, variables with the subscripts \(f\) and \(b\) represent the terms related to FFF and FBF, respectively.

2 Distributed Arithmetic Background

Distributed arithmetic is an efficient way of computing the inner product of two vectors in a fixed number of clock cycles irrespective of the length of vectors. DA works on the principle that the one-dimensional scalar convolution is equivalent to two-dimensional binary convolution [6]. In DA, the basic idea behind the computation of inner product is to store the partial products in memory and accessing and shift-accumulating them will compute the result. The computation of inner product of two vectors using DA is explained in the following paragraphs.

Consider the inner product of two vectors ‘\(\mathbf{c}\)’ and ‘\(\mathbf{x}\)’ \(\left( i=0,1,\ldots ,N-1\right) \) which may be given as

If every sample of \(x_{i}\) is written in fixed point \(Q1.B-1\) signed 2’s-complement representation,

where \(b_{i,j}\in \{0,1\}\) is the \(j\)-th bit in the binary representation of \(x_{i}\) . Now,

where,

Let

and

Hence, (5) becomes

From (6) and (7), it can be observed that, taking \(j\)-th bit from each of \(x_{i}\), the term \(C_{B-1-j}\) would take only one out of \(2^{N}\) possible combinations which are nothing but the partial products of elements of \(c_{i}\). Hence all these combinations can be stored in an LUT (usually a read-only memory (ROM)) whose address bits are formed by \(j\)-th bit of every \(x_{i}\). Then the output \(y\) can be computed by shifting the partial products taken from the memory for every \(j\)-th \(\left( j=0,1,\ldots ,B-1\right) \) set of bits of \(x_{i}\)’s and accumulating all of them using shifting operation. As there are \(B\) bits in all \(x_{i}\)’s counting from LSB to MSB, the system would take a total of \(B\) clock cycles to compute the inner product. Figure 1a shows the DA structure for computation of the inner product as per (8) with \(B=4\). Here, the address bits are indexed by \(j\) and the memory word is indexed by \(i\) .

As there are \(2^{N}\) partial products, the size of the LUT would be \(2^{N}\), and so if \(N\) is large, the LUT size requirement would be high. DA provides the flexibility for the usage of multiple small-sized LUTs if \(N\) is split as \(N=M\times L\) in which case (6) becomes

If \(s=i-mL\) and if the dummy variable \(s\) is replaced by \(i\), then

Hence, \(M\) number of LUTs can be used each of size \(2^{L}\), taking address lines from every \(K\) sets of \(x_{i}\)’s. The DA structure corresponding to (13) with \(L=2\) and \(B=4\) is shown in Fig. 1b where the address bits are indexed by \(j\), the LUT block is indexed by \(m\) and the LUT word is indexed by \(i\). The number of clock cycles to compute the inner product remains the same, but the number of arithmetic operations (adders) would increase. Specifically, the system requires a total of \(M\times 2^{L}\) LUT locations and \(M-1\) adders and computes the inner product in \(B\) clock cycles. \(L=N\) is same as (6), and \(L=1\) case will have \(N\) individual LUTs with two locations in each. In order to increase the speed of the system, multiple bits of \(x_{i}\)’s can be used in parallel as the address lines to the LUT at the cost of increase in its size. This is obtained by splitting \(B\) as \(B=P\times Q\) in which case (8) becomes

If \(s=j-pQ\) and if the dummy variable \(s\) is replaced by \(j\), then

The DA structure corresponding to (17) with \(P=2\) is shown in Fig. 1c. In such case, the size of the memory would be \(2^{PN}\) and the speed is increased by a factor of \(P\), and hence the inner product is computed in \(B/P\) clock cycles. Further it can be noted from (10) that the shifting is done for \(P\) units unlike the previous case where unit shifting is done on each of the partial product. If \(P=B\), then all the \(B\)-bits of \(x_{i}\)’s form the address lines and the inner product is computed in a single clock cycle while \(P=1\) is same as (8).

Hence, from (13) and (17), it can be noted that splitting \(N\) would result in the low memory-size requirement at the expense of increased combinational logic and employing digit-serial would speed up the system at the cost of increased parallelism. Alternately, one can employ the ROM splitting as well as the digit-serial nature of DA as shown in Fig. 1d for the trade-offs between speed and hardware complexity based on the parameters \(M\) and \(P\) as explained above. These techniques apply equally well when the partial products of \(x_{i}\)’s are stored and the bits of \(c_{i}\)’s are used as the address bits to the LUT. Further, the techniques work well for the signed \(2\)’s-complement representation of the elements of the vectors [15].

The ROM size in the basic DA architecture can be further reduced using the offset-binary coding (OBC) technique [15], which can be derived as follows:

Rewriting (2) as \(x_{n-i}=\frac{1}{2}[x_{n-i}-(-x_{n-i})]\) we have:

Choosing

Substituting (18), (19) in (1) and rearranging, we get

Defining

and

We arrive at

Now, for a given set of \(w_{i}\left( i=0,1,\ldots ,N-1\right) \), the terms \(C_{B-1-j}\)’s would take one out of \(2^{N}\) combinations, half of which would be the mirror image of other half [31]. Hence a \(2^{N-1}\)-sized ROM can be used the address bits of which can be obtained through the Ex-OR operation of all the LSB’s with the LSB of the newest sample as shown in Fig. 1e.

3 Proposed DA-Based Architecture

Consider a DFE shown in Fig. 2, with ‘\(N_{f}\)’ number of FFF coefficients and ‘\(N_{b}\)’ number of FBF coefficients which process the input signal \(u(n)\), \(\left( n\in Z\right) \), and the previous output decisions \(s(n)\), respectively. The equations describing the operation of the DFE are

where \(Q\left[ .\right] \) is the quantization operation.

and the output of FFF and FBF filters respectively is given as follows:

where \(\mathbf{w}^{T}=\left[ w_{0},w_{1},\ldots ,w_{N_{f}-1}\right] ,\) \(\mathbf{v}^{T}=\left[ v_{0},v_{1},\ldots ,v_{N_{b}-1}\right] \) are the coefficients of FFF and FBF respectively.

If each of \(u\left( n\right) \) and \(s\left( n\right) \) is represented by their signed \(2\)’s-complement representation, we have:

Employing the digit-serial arithmetic, using (17) we have

where \(f\left( w_{i},u_{i,P_{f}-1-j}\right) \) is given by

Now, if the ROM decomposition technique is also employed, then using (5) and (6) we have

Hence, Eqs. (29) and (31) describe the realization of FFF using both digit-serial input and ROM decomposition techniques. Similarly, for FBF we can have

The DA-based DFE architecture derived based on the above equations is shown in Fig. 3. It can be seen that each of the filters has their own DA block that works on both digit-serial and ROM decomposition techniques. The parameters \(P_{f}\) and \(P_{b}\) decide the number of digits used from each of input register for FFF and FBF, respectively. Similarly, the parameters \(M_{f}\) and \(M_{b}\) decide the number of ROMs operated in parallel for FFF and FBF, respectively. The filters FFF and FBF can be synchronized by choosing same number of digits from each of the input register i.e. by choosing \(P_{f}=P_{b}\). Hence, the output of FFF and FBF can be computed using just the shift and add operations, which makes the DFE free of hardware multipliers. The detailed internal structure of the FFF and/or FBF blocks in all the three cases, namely direct-memory architecture, reduced-memory architecture and OBC-memory architecture, are shown in Fig. 4. The internal structure consists of a register bank, a set of memory blocks, bank of adders and a shift-accumulator block. Input bits enter the register bank serially which form the address bits to the memories. The adder bank consists of a set of adders where the outputs from the memories are summed up. The result is then shift-accumulated to compute the output. Figure 4 shows the case where \(P=B\); however, the parameters \(P\) and \(L\) can be chosen based on the speed and complexity requirements. It can be seen from Fig. 4a that the contents of upper half of the memories look like the mirror image of the lower half, and hence a memory storing only one half of the contents can be used to perform DA filtering. Specifically, one half of the partial products of any memory in case of direct-memory architecture are nothing but the additive inverse of the other half. Hence, only one half of the partial products may be stored and the other half may be obtained live by taking their complement. This operation is depicted in Fig. 4b by the NOT gate used at the output of each ROM. The idea is particularly useful when the requirement for the memory size is large. When the ‘upper-half’ set of the partial products are stored in the memory, the lower half can be generated by using a multiplexer (MUX) as shown in Fig. 4b. Here, the most significant bit (MSB) is neglected in forming the address bits to the memory, instead it is used to find the effective address using the Ex-OR operation with each of the remaining address bits. In case of OBC-memory-based architecture, the size of the memories would be same as that of the reduced-memory architecture but contain more complex combinations of partial products. Further it needs an extra adder and a register for the storage and addition of the \(C_{{\text {initial}}}\) term to the accumulator output. The decision device takes an input signal that lies between a set of signal levels and quantizes it to a set of pre-defined levels that depends on the modulation scheme employed. The control circuitry sends the required timing signals for the operation of FFF and FBF.

4 Implementation Results

In order to test the proposed architecture, a DFE-based channel-equalizer system has been created like the one shown in Fig. 5 where a frequency-selective channel is taken. A set of message signals each modulated using binary phase shift keying (BPSK) has been transmitted. The received symbols (containing the ISI) are then passed through the FFF for the removal of anti-causal part of ISI. The remaining ISI is then cancelled out using the decisions taken on previous symbols, and as observed in Fig. 5, the original transmitted symbols can be retained if there is no error propagation in the DFE. For the ease of analysis, we chose \(N_{b}=N_{f}-1\). The input to the DFE and the weights of FFF and FBF are chosen to be fixed point Q2.6 signed \(2\)’s-complement representation, and the system is implemented using verilog HDL. To attain the maximum speed, \(P_{f}\) and \(P_{b}\) are chosen to be eight and the outputs of each memory are summed up by the adder bank, and hence one sample of output of both FFF and FBF is computed in one clock cycle. The decision device quantizes its input to either of the two symbols (as BPSK scheme is used). A rough estimations and comparisons of number of logic elementsFootnote 1, maximum usable frequency (\(F_{\text {max}}\)) and core static power consumption estimates for the MAC-based and DA-based implementations for various FBF taps implemented on Altera Cyclone III EP3C55F484C6 at different operating conditions (0, \(85\,^\circ \)C) are summarized in Tables 1, 2 and 3, respectively. From the table, it can be observed that the DA-based implementation outperforms the MAC-based implementation in all the cases. For the case of \(4\)-tap FBF, the difference in the number of logic elements for DA-based and MAC-based implementations is very less; however, the maximum usable frequency (\(F_{{\text {max}}}\)) is a bit morein case of DA-based implementation. Further, it can be seen that as the filter-order increases, the difference in the number of logic elements between the MAC-based and DA-based implementation is getting increased while the maximum usable frequency of DA-based implementation is approaching to that of MAC-based implementation. This can be explained as follows: For lower-order filters, the number of MAC units used in the MAC-based implementation is quite low, and therefore the number of memories replacing the multipliers in case of DA-based implementation will also be less. But as the filter order increases, the number of memories replacing the multipliers in DA-based implementation will be high, and hence the difference in the number of logic elements utilized will be high. The critical path (that decides the maximum usable frequency) of the FBF filter for the MAC-based and DA-based direct-memory implementation is given as \({\text {CP}}_{{\text {MAC}}}=T_{m}+{\text {NT}}_{A}\) and \({\text {CP}}_{{\text {DA-direct-memory}}}=T_{{\text {memory}}}+\left( N-1\right) T_{A}\) where \(T_{{\text {memory}}}\), \(T_{\mathrm{m}}\), and \(T_{\mathrm{A}}\) are the computation times of memory, multiplier and adder units respectively. For lower-order filters, because of the presence of less number of adder units, the critical path of MAC-based and DA-based implementation differs. As the filter order increases, the number of adders increase, and hence the critical path of the DA-based implementation approaches that of MAC-based implementation due to the fact that the terms \(T_{{\text {memory}}}\) and \(T_{M}\) become negligible compared to the term \(\left( N-1\right) T_{A}\). In case of reduced-memory architecture, even though the memory size is halved, the additional hardware overhead is added by the Ex-OR gates, multiplexer and adder units. The number of Ex-OR gates is fixed for a fixed \(P\) (which depends on \(B\)), while the number of adder units and MUXs depend upon the value of \(M\) and \(P\). The critical path in this case would be \({\text {CP}}_{{\text {DA-reduced-memory}}}=T_{{\text {reduced-memory}}}+T_{{\text {MUX}}}+{\text {NT}}_{A}\). Since the computation time of a memory does not vary greatly with respect to size of it, the additional computation time of reduced-memory architecture is just \(T_{{\text {MUX}}}+T_{A}\) units. The scheme with OBC-DA-based memory uses slightly more number of logic elements in which case, the critical path would be \({\text {CP}}_{{\text {DA-reduced-memory}}}=T_{{\text {OBC-memory}}}+T_{{\text {MUX}}}+(N+1)T_{A}\) .

5 Conclusion

A distributed arithmetic-based realization of the decision feedback equalizer has been presented. In order to attain the maximum speed, digit-serial input has been employed, apart from that ROM decomposition technique is also employed to eliminate the exponential increase in the size of memories as the FFF and FBF filter orders increase. Two architectures based on digit-serial and ROM decomposition variants of DA have been presented, and results show that both are efficient when compared to MAC-based implementation. A third architecture which uses DA-OBC scheme has also been presented which uses slightly more number of hardware resources compared to reduced-memory architecture. For lower-order filters, the maximum usable frequency is a bit high compared to that of MAC-based implementation while the number of logic elements is only few units less. For higher-order filters, the number of logic elements is found to be almost half that of the MAC-based implementation. The proposed architecture can be used in high-data-rate modems such as the case of IEEE 802.11b scenarios. The speed of the proposed implementation can be further increased by using techniques that reduce the number of adder units, which results in the reduction of critical path.

Notes

Logic elements (LEs) are the smallest units of logic in the Cyclone III device family architecture. Each logic element consists of four input lookup table, a programmable register and many other features. (www.altera.com/literature/hb/cyc3/cyc3_ciii51002)

References

D.J. Allred, H. Yoo, V. Krishnan, W. Huang, D.V. Anderson, LMS adaptive filters using distributed arithmetic for high throughput. IEEE Trans. Circuits Syst. I Regul. Pap. 52(7), 1327–1337 (2005)

M. Arjmand, R.A. Roberts, On comparing hardware implementations of fixed-point digital filters. IEEE Circuits Syst. Mag. 3(2), 2–8 (1981)

W.P. Burleson, L.L. Scharf, A VLSI design methodology for distributed arithmetic. J. VLSI Sig. Process. Syst. Signal Image Video Technol. 2(4), 235–252 (1991)

W.P. Burleson, L.L. Scharf, A VLSI implementation of a cellular rotator array, in IEEE Custom Integrated Circuits Conference, pp. 8.1/1–8.1/4 (1988)

W.P. Burleson, L.L. Scharf, VLSI design of inner-product computers using distributed arithmetic, in IEEE International Symposium Circuits Systems (ISCAS), pp. 158–161 (1989)

C.S. Burrus, Digital filter structures described by distributed arithmetic. IEEE Trans. Circuits Syst. 24(12), 674–680 (1977)

C.P. Callender, S. Theodoridis, C.F.N. Cowan, Adaptive non-linear equalisation of digital communications channels. Signal Process. 40(2–3), 325–333 (1994)

H.C. Chen, J.I. Guo, C.W. Jen, A new group distributed arithmetic design for the one dimensional discrete Fourier transform, in IEEE International Symposium Circuits Systems (ISCAS), vol. 1 (2002)

H.C. Chen, J.I. Guo, C.W. Jen, T.S. Chang, Distributed arithmetic realisation of cyclic convolution and its DFT application, in IEEE Proceedings on Circuits, Devices and Systems, pp. 615–629 (2005)

C.F.N. Cowan, J. Mavor, New digital adaptive-filter implementation using distributed-arithmetic techniques. IEEE Proc. Commun. Radar Signal Process. 128(4), 225–230 (1981)

S.P. Joshi, R. Paily, Distributed arithmetic based Split-Radix FFT. J. Signal Process. Syst. 75(1), 85–92 (2014)

P.K. Meher, Hardware-efficient systolization of DA-based calculation of finite digital convolution. IEEE Trans. Circuits Syst. II Exp. Br. 53(8), 707–711 (2006)

P.K. Meher, Unified systolic-like architecture for DCT and DST using distributed arithmetic. IEEE Trans. Circuits Syst. I Reg. Pap. 53(12), 2656–2663 (2006)

B.K. Mohanty, P.K. Meher, A high-performance energy-efficient architecture for FIR adaptive filter based on new distributed arithmetic formulation of block LMS algorithm. IEEE Trans. Signal Process. 61(4), 921–932 (2013)

K.K. Parhi, VLSI Digital Signal Processing Systems: Design And Implementation (Wiley India Pvt, Limited, 2007)

S.Y. Park, P.K. Meher, Low-power, high-throughput, and low-area adaptive FIR filter based on distributed arithmetic. IEEE Trans. Circuits Syst. II Exp. Br. 60(6), 346–350 (2013)

S.Y. Park, P.K. Meher, Efficient FPGA and ASIC realizations of a DA-based reconfigurable FIR digital filter. IEEE Trans. Circuits Syst. II Exp. Br. 61(7), 511–515 (2014)

M.S. Prakash, R.A. Shaik, Low-area and high-throughput architecture for an adaptive filter using distributed arithmetic. IEEE Trans. Circuits Syst. II Exp. Br. 60(11), 781–785 (2013)

J.G. Proakis, D.G. Manolakis, Digital Communications, vol. 3 (McGraw-hill, New York, 1995)

S.U.H. Qureshi, Adaptive equalization. IEEE Commun. Mag. 73(9), 1349–1387 (1985)

J. Ramìrez, A. Garcìa, U. Meyer-Bäse, F. Taylor, A. Lloris, Implementation of RNS-based distributed arithmetic discrete wavelet transform architectures using field-programmable logic. J. VLSI Signal Process. Syst. Signal Image Video Technol. 33(1–2), 171–190 (2003)

M. Rawski, M. Wojtynski, T. Wojciechowski, P. Majkowski, Distributed arithmetic based implementation of Fourier transform targeted at FPGA architectures, in International conference on Mixed Design of Integrated Circuits and Systems (MIXDES), pp. 152–156 (2007)

G. Seetharaman, B. Venkataramani, G. Lakshminarayanan, Design and FPGA implementation of self-tuned wave-pipelined filters with distributed arithmetic algorithm. Circuits Syst. Signal Process. 27(3), 261–276 (2008)

R.A. Shaik, M. Chakraborty, An efficient realization of the decision feedback equalizer using block floating point arithmetic, in IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), pp. 1047–1050 (2006)

R.A. Shaik, M. Chakraborty, A block floating point treatment to finite precision realization of the adaptive decision feedback equalizer. Signal Process. 93(5), 1162–1171 (2013)

G. Sicuranza, G. Ramponi, Adaptive nonlinear digital filters using distributed arithmetic. IEEE Trans. Acoust. Speech Signal Process. 34(3), 518–526 (1986)

S.G. Smith, S.A. White, Hardware approaches to vector plane rotation, in International Conference on Acoustics, Speech, and Signal Processing (ICASSP), vol. 4, pp. 2128–2131 (1988)

B.S. Tan, G.J. Hawkins, Speed-optimised microprocessor implementation of a digital filter. IEEE Proc. Comput. Dig. Tech. 128(3), 85–93 (1981)

L. Wanhammar, Implementation of wave digital filters using distributed arithmetic. Signal Process. 2(3), 253–260 (1980)

C.H. Wei, J.J. Lou, Multimemory block structure for implementing a digital adaptive filter using distributed arithmetic. IEEE Proc. Electron. Circuits Syst. 133(1), 19–26 (1986)

S.A. White, Applications of distributed arithmetic to digital signal processing: a tutorial review. IEEE Acoust. Speech Signal Process. Mag. 6(3), 4–19 (1989)

J. Xie, P.K. Meher, J. He, Hardware-efficient realization of prime-length DCT based on distributed arithmetic. IEEE Trans. Comput. 62(6), 1170–1178 (2013)

S. Yu, E.E. Swartziander, DCT implementation with distributed arithmetic. IEEE Trans. Comput. 50(9), 985–991 (2001)

J. Zeman, H.T. Nagle, A high-speed microprogrammable digital signal processor employing distributed arithmetic. IEEE J. Solid-State Circuits 15(1), 70–80 (1980)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Prakash, M.S., Shaik, R.A. & Koorapati, S. An Efficient Distributed Arithmetic-Based Realization of the Decision Feedback Equalizer. Circuits Syst Signal Process 35, 603–618 (2016). https://doi.org/10.1007/s00034-015-0076-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-015-0076-7