Abstract

This paper proposes a function-controlled variable step size least mean square (VSLMS) algorithm for channel estimation under low signal-to-noise ratio (SNR) conditions or with colored input signals. The proposed method couples the step size update to the steady-state error and alleviates the impact of high-level noise. It improves filter performance in terms of both convergence rate and misadjustment error. Simulation results demonstrate the effectiveness of the proposed VSLMS algorithm and verify the theoretical analysis.



1 Introduction

Because of their simplicity and robustness, least mean square (LMS) algorithms are widely used in applications such as echo cancellation, unknown channel estimation, and speech signal processing [1, 7–12]. The LMS weight coefficients are updated by stochastic gradient descent, so the step size directly determines the misadjustment error [7]: a large step size gives fast convergence but a high steady-state error, while a small step size gives a low steady-state error but does not ensure fast convergence. In practice, the convergence performance of LMS algorithms often deteriorates in the presence of high-level measurement noise or colored input signals [1].

In the past two decades, several variable step size algorithms have been developed to improve LMS performance [1–3, 5, 6, 8, 13–15]. For instance, Kwong and Johnston [8] used the squared instantaneous error to derive a variable step size LMS filter, which improves performance in processing correlated signals. To achieve better control of the step size, Ang and Farhang-Boroujeny [1] developed a scheme using the inner product between adjacent gradient vectors, and Zhang et al. [15] applied the squared Euclidean norm of the gradient vector. Costa and Bermudez [2] proposed a robust variable step size LMS (VSLMS) algorithm whose performance is less sensitive to the measurement noise power than that of [8]. Hwang and Li [6] proposed a scheme that uses a gradient-based weighted average to control the step size; it achieves a faster convergence rate and a lower misadjustment error than the VSLMS schemes reported in [1, 8, 15]. The averaged gradient vector used in [6], however, is affected by the noise power after the algorithm converges. Other related methods include the wavelet-based affine projection algorithm [14] and the normalized LMS algorithm with robust regularization [3].

This paper proposes a new VSLMS method that uses an appropriately selected function to control the step size. The proposed algorithm aims to attain lower steady-state misadjustment than the VSLMS algorithms mentioned above while converging at the same rate. Guidelines on the selection of the control function and the updating parameter are presented. In addition, the excess MSE of the proposed method is derived theoretically and verified by simulation.

2 Algorithm Formulation

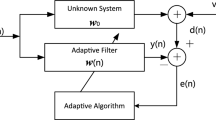

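The recurrence formula of the variable step size LMS algorithm is described as follows:

\[ {\bf{w}}(k+1) = {\bf{w}}(k) + \mu(k)\, e^{*}(k)\, {\bf{x}}(k) \qquad (1) \]

\[ e(k) = d(k) - {\bf{w}}^{H}(k)\, {\bf{x}}(k) \qquad (2) \]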

where e(k) is the instantaneous error, (⋅)∗ denotes the conjugate operator, and (⋅)H the conjugate transpose operator. \({\bf{x}}(k) = {[x(k), \ldots,x(k - M + 1)]^{T}}\) is the input signal vector, (⋅)T denotes the transpose operator, and M is the length of the filter. \({\bf{w}}(k) = {[w_{0}(k), \ldots,w_{M-1}(k)]^{T}}\) is the weight vector, d(k) is the desired signal, and μ(k) is the step size of the algorithm. The widely used step size control scheme is [7, 8]

\[ \mu(k+1) = \alpha\,\mu(k) + \gamma\,|e(k)|^{2} \qquad (3) \]

where 0<α<1 and γ>0. The updating parameter γ, which is a constant, plays an important role in determining both the convergence rate of the filter and the level of steady-state misadjustment error. However, better LMS performance can be achieved when γ is treated as a time-varying parameter [1, 2, 6, 8, 15]: a relatively large γ in the early iterations enables faster convergence, whereas a small γ in later iterations helps ensure a smaller misadjustment. Moreover, the step size should be as insensitive as possible to the noise power. Because the step size is driven by the squared error, whose steady-state mean is at least the noise power, a higher noise power results in a larger step size, which in turn increases the steady-state error and reduces the efficiency of the algorithm.
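The noise sensitivity of the conventional scheme μ(k+1) = αμ(k) + γe²(k) of [7, 8] can be seen in a short simulation. The sketch below is a minimal NumPy implementation for real-valued signals (so the conjugate has no effect and |e(k)|² = e²(k)), applied to the channel \({\bf{w}}_{o}\) of Section 4. The function name `vslms_kwong_johnston`, the values α = 0.97 and γ = 4.8×10⁻⁴, and the optional upper clip `mu_max` are illustrative assumptions, not settings from this paper. Taking expectations of the recursion gives a steady-state mean step size of γE{e²(k)}/(1−α), and E{e²(k)} is at least the noise variance, so the sketch compares two noise levels.

```python
import numpy as np

def vslms_kwong_johnston(x, d, M, alpha, gamma, mu0=0.0, mu_max=None):
    """Real-valued LMS with the step-size recursion
    mu(k+1) = alpha * mu(k) + gamma * e(k)**2.
    Returns the final weight vector and the step-size trajectory."""
    w = np.zeros(M)
    mu = mu0
    mus = np.empty(len(x) - M + 1)
    for n, k in enumerate(range(M - 1, len(x))):
        xk = x[k - M + 1:k + 1][::-1]    # regressor [x(k), ..., x(k-M+1)]
        e = d[k] - w @ xk                # instantaneous error e(k)
        w = w + mu * e * xk              # weight update with the current mu(k)
        mu = alpha * mu + gamma * e**2   # step-size update driven by e^2(k)
        if mu_max is not None:
            mu = min(mu, mu_max)         # optional upper clip (assumption)
        mus[n] = mu
    return w, mus

# Illustrative channel-identification run (parameter values are assumptions).
rng = np.random.default_rng(0)
w_o = np.array([0.307, 0.463, 0.617, 0.463, 0.307])
x = rng.standard_normal(20000)           # white, zero mean, unit variance
y = np.convolve(x, w_o)[:len(x)]         # y(k) = w_o^T x(k)
mean_mu, weights = {}, {}
for noise_var in (0.1, 1.0):
    v = np.sqrt(noise_var) * rng.standard_normal(len(x))
    w, mus = vslms_kwong_johnston(x, y + v, M=5, alpha=0.97, gamma=4.8e-4)
    mean_mu[noise_var] = mus[len(mus) // 2:].mean()   # steady-state average
    weights[noise_var] = w
    print(f"noise variance {noise_var}: mean steady-state mu = {mean_mu[noise_var]:.4f}")
```

With these settings the mean steady-state step size at noise variance 1.0 should come out roughly ten times that at 0.1, which is the noise sensitivity the proposed scheme is designed to reduce.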

The proposed function-controlled VSLMS scheme provides better control of the step size, so that the impact of high-level noise is alleviated. The idea is to use an appropriate function to control the updating parameter γ and, at the same time, to make the steady-state step size insensitive to the noise level by using the estimated mean square error (MSE). The proposed step size update recursion is expressed in the form

where 0<α<1, \(\gamma_{s} > 0\) is an updating parameter of the proposed algorithm, and \(\hat{e}_{\mathrm{ms}}^{2}(k)\) is the estimated MSE defined as

The weighting factor β lies in the range of 0<β<1 and is close to one.
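A common way to estimate the MSE with a forgetting factor β close to one is the exponentially weighted average \(\hat{e}_{\mathrm{ms}}^{2}(k) = \beta\,\hat{e}_{\mathrm{ms}}^{2}(k-1) + (1-\beta)\,e^{2}(k)\). The sketch below assumes that form for illustration (it may differ in detail from (5)), with real-valued errors and a zero initial value, and checks numerically that the recursion equals the equivalent weighted sum \((1-\beta)\sum_{i\le k}\beta^{k-i}e^{2}(i)\). The function names are hypothetical.

```python
import numpy as np

def mse_estimate_recursive(e, beta):
    """Assumed exponentially weighted MSE estimate:
    e2_ms(k) = beta * e2_ms(k-1) + (1 - beta) * e(k)**2, with e2_ms(-1) = 0."""
    out = np.empty(len(e))
    acc = 0.0
    for k, ek in enumerate(e):
        acc = beta * acc + (1.0 - beta) * ek**2
        out[k] = acc
    return out

def mse_estimate_direct(e, beta):
    """The same estimate as a weighted sum (O(n^2), for checking only):
    e2_ms(k) = (1 - beta) * sum_{i <= k} beta**(k - i) * e(i)**2."""
    k = np.arange(len(e))
    lag = k[:, None] - k[None, :]                     # k - i
    weights = np.where(lag >= 0, beta ** np.maximum(lag, 0), 0.0)
    return (1.0 - beta) * weights @ (np.asarray(e) ** 2)

rng = np.random.default_rng(1)
e = rng.standard_normal(300)
rec = mse_estimate_recursive(e, beta=0.92)
direct = mse_estimate_direct(e, beta=0.92)
print("max difference:", np.max(np.abs(rec - direct)))
```

With β = 0.92, the value used in Section 4, the weights β^(k−i) give the estimate an effective memory of about 1/(1−β) = 12.5 samples, so it tracks the error power much more smoothly than e²(k) alone.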

As the adaptation progresses, the influence of the instantaneous error e(k) of (1) on the step size should be gradually reduced. To achieve this, the control function f(k) is introduced and chosen as a decreasing function. The control function f(k) selected for the proposed VSLMS algorithm is

Here N is a relatively large constant, e.g., N>100. Other candidates for the control function are

3 Performance Analysis

The following assumptions are used to conduct the performance analysis [4, 5]:

(1) The input signal x(k) and the system noise v(k) are zero-mean, wide-sense stationary processes and are independent of each other.

(2) x(k) and d(k) are jointly Gaussian random processes.

(3) The weight vector \({\bf{w}}(k)\) is independent of the input signal vector \({\bf{x}}(k)\) and the desired signal d(k).

It follows from (5) that the estimated MSE \(\hat{e}_{\mathrm{ms}}^{2}(k)\) can be obtained recursively:

Taking the expectation on both sides, (9) becomes

In the steady state, i.e., as k→∞, (10) becomes

Similarly, for k≫N, E{μ(k)} can be obtained recursively:

Letting k→∞, we have

The excess MSE \(\xi_{\mathrm{ex}}\) of the LMS algorithm can be expressed as [7]

where \(\sigma_{x}^{2}\) is the variance of the input signal, \(\mu_{c}\) the steady-state step size, and \(\xi_{\min}\) the minimum MSE of the filter. Substituting (13) into (14), we obtain the excess MSE

and the misadjustment of the proposed algorithm

Let μ(0) and \(\hat{e}_{\mathrm{ms}}^{2}(0)\) be set to zero; from (10) and (12), we have

Then the upper bound of E{μ(k)} is

To guarantee the convergence of the LMS algorithm, a sufficient condition is [7]

where \(\bf{R}\) is the autocorrelation matrix of \({\bf{x}}(k)\). It follows from (17) and (19) that the parameter \(\gamma_{s}\) can be selected according to

Next, we discuss the condition that the control function f(k) should satisfy. From (9), assuming \(\hat{e}_{\mathrm{ms}}^{2}(0) = 0\), we have

Substituting (21) into (4) and taking the expectation yields

With μ(0)=0, the upper bound of E{μ(k)} can be obtained recursively:

It follows from (19) and (23) that the control function f(k) should be chosen to satisfy the following condition:

As for the computational complexity, the proposed algorithm requires 2M+9 multiplications and 2M+1 additions to compute one filter output. This complexity is slightly higher than that of [8], but lower than that of [6].

4 Simulation Results

In this section, the performance of the proposed algorithm is evaluated for unknown channel estimation, similar to the simulations in [8] and [13], and compared with the adaptive LMS algorithms reported in [1, 2, 6, 8, 14, 15]. The adaptive filter and the FIR system are assumed to have the same number of taps. The unknown channel is \({\bf{w}}_{o} = [0.307, 0.463, 0.617, 0.463, 0.307]^{T}\), and the noisy channel output, which serves as the desired signal, is d(k)=y(k)+v(k), where \(y(k) = {\bf{w}}_{o}^{T}{\bf{x}}(k)\) and v(k) is the measurement noise. The parameter values of the proposed algorithm used in the simulation are α=0.993, β=0.92, N=200, and \(\gamma_{s}=0.002\). The trace of the matrix \(\bf{R}\) is set to 5. Note that the upper bound of the updating parameter \(\gamma_{s}\), computed from (20), is 0.0448. The excess MSE of (15) is used as the performance metric for the various LMS algorithms. Results are averaged over 100 independent runs. The step size update equations and the parameter values of the other VSLMS algorithms are listed in Table 1.

Two types of input signals are considered. One is a white Gaussian signal of zero mean and unit variance; the other is a colored signal obtained by filtering zero-mean, unit-variance white Gaussian noise through a third-order system:

Results are compared at SNRs of 10 dB and 0 dB, where the signal-to-noise ratio (SNR) is calculated by

Simulation results on the excess MSE for the proposed VSLMS method and the other adaptive LMS algorithms are shown in Figs. 1 and 2. Figure 1 compares the excess MSE for a white Gaussian input signal, and Fig. 2 for the colored input signal. Performance in the low-SNR environment is shown in Figs. 1(b) and 2(b). Figures 1 and 2 show that the proposed VSLMS method converges as fast as the other VSLMS algorithms while achieving a lower misadjustment error. The excess MSE of the proposed method is −35 dB at SNR = 10 dB and −24.8 dB at SNR = 0 dB. These simulation results agree closely with the excess MSEs of −34.5 dB and −24.5 dB calculated by (15), which implies that the proposed method achieves a misadjustment of 0.0035, as expected from (16). In contrast, all the other VSLMS algorithms attain excess MSEs only in the neighborhood of −30 dB at SNR = 10 dB and −20 dB at SNR = 0 dB, which corresponds to misadjustments of around 0.01.
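The theoretical figures quoted above can be reproduced with a few lines of arithmetic. The sketch below assumes that (14) and (16) take the standard small-step-size LMS form [7], misadjustment ≈ μ_c tr(R)/2 and \(\xi_{\mathrm{ex}} \approx (\mu_{c}/2)\,\mathrm{tr}(\bf{R})\,\xi_{\min}\). It uses μ_c = 0.0014 (the steady-state step size expected from (13)) and tr(R) = 5, and takes ξ_min equal to the noise variance, which holds because the adaptive filter has the same length as the unknown channel. It also assumes the SNR is the ratio of E{y²(k)} = ‖w_o‖² ≈ 0.998 (unit-variance white input) to the noise variance. Under these assumptions the misadjustment is 0.0035 and the excess MSE is about −34.6 dB at SNR = 10 dB and −24.6 dB at SNR = 0 dB, consistent with the quoted −34.5 dB and −24.5 dB to within rounding. The function names are illustrative.

```python
import math

def lms_misadjustment(mu_c, trace_R):
    """Small-step-size approximation of LMS misadjustment: mu_c * tr(R) / 2."""
    return mu_c * trace_R / 2.0

def lms_excess_mse(mu_c, trace_R, xi_min):
    """Excess MSE as misadjustment times the minimum MSE."""
    return lms_misadjustment(mu_c, trace_R) * xi_min

def to_db(power):
    return 10.0 * math.log10(power)

w_o = [0.307, 0.463, 0.617, 0.463, 0.307]
signal_power = sum(c * c for c in w_o)   # E{y^2(k)} for unit-variance white input
mu_c, trace_R = 0.0014, 5.0              # values quoted in this section

print(f"misadjustment: {lms_misadjustment(mu_c, trace_R):.4f}")
excess_db = {}
for snr_db in (10, 0):
    xi_min = signal_power / 10 ** (snr_db / 10)   # minimum MSE = noise variance
    excess_db[snr_db] = to_db(lms_excess_mse(mu_c, trace_R, xi_min))
    print(f"SNR {snr_db} dB: predicted excess MSE = {excess_db[snr_db]:.1f} dB")
```

The same two functions also invert the comparison for the other algorithms: an excess MSE of −30 dB (0.001) against ξ_min ≈ 0.1 at SNR = 10 dB corresponds to a misadjustment of about 0.01.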

The step size adaptation of the different VSLMS algorithms is illustrated in Fig. 3, which shows that the steady-state step size of the proposed method approaches 0.0012, slightly smaller than the value of 0.0014 expected from (13). The step size of the proposed method is kept relatively large at the beginning for fast convergence and then decreases slowly, so that a lower level of misadjustment is reached.
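The link between the steady-state step size and the excess MSE can also be checked by simulation. The sketch below runs a plain fixed-step LMS filter (real-valued signals, a single run) on the white-input channel of this section with μ frozen at 0.0014, the steady-state value expected from (13). It does not implement the proposed recursion (4), and the function name `lms_excess_mse_sim` is hypothetical. The excess MSE is estimated as the time average of the squared noise-free error \((y(k) - {\bf{w}}^{T}(k){\bf{x}}(k))^{2}\) over the second half of the run, and should land near the −34.5 dB calculated by (15) at SNR = 10 dB.

```python
import numpy as np

def lms_excess_mse_sim(mu, snr_db, n_iter=30000, seed=0):
    """Fixed-step, real-valued LMS identification of the 5-tap channel w_o.
    Returns the time-averaged excess MSE over the second half of the run."""
    rng = np.random.default_rng(seed)
    w_o = np.array([0.307, 0.463, 0.617, 0.463, 0.307])
    M = len(w_o)
    x = rng.standard_normal(n_iter + M - 1)           # white, unit variance
    noise_var = (w_o @ w_o) / 10 ** (snr_db / 10)     # SNR = E{y^2} / sigma_v^2
    v = np.sqrt(noise_var) * rng.standard_normal(n_iter)
    w = np.zeros(M)
    eps2 = np.empty(n_iter)
    for k in range(n_iter):
        xk = x[k:k + M][::-1]          # regressor, newest sample first
        y = w_o @ xk                   # noise-free channel output
        y_hat = w @ xk                 # filter output with current weights
        e = y + v[k] - y_hat           # error against the noisy desired signal
        eps2[k] = (y - y_hat) ** 2     # excess (noise-free) squared error
        w = w + mu * e * xk            # LMS update with a fixed step size
    return eps2[n_iter // 2:].mean()

for snr_db in (10, 0):
    xi_ex = lms_excess_mse_sim(mu=0.0014, snr_db=snr_db)
    print(f"SNR {snr_db} dB: simulated excess MSE = {10 * np.log10(xi_ex):.1f} dB")
```

Because the estimate comes from a single run, it fluctuates by a fraction of a decibel around the prediction; averaging over independent runs, as done with 100 runs in this section, reduces that spread.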

The performance of different control functions is shown in Fig. 4. We observe that the control function (8) gives better performance than the other two when the input is a colored signal, whereas there is not much difference in performance among the three control functions for a white Gaussian input.

5 Conclusion

This paper has presented a function-controlled variable step size LMS algorithm. The proposed method uses an adjustable adaptation scheme to enhance LMS performance and to alleviate the influence of high noise power. Guidelines on the selection of the control functions and the updating parameter have been given. In addition, the excess MSE of the proposed method has been derived theoretically and verified by simulation. The simulation results demonstrate the improved performance of the proposed VSLMS scheme in terms of convergence speed and misadjustment level. These results indicate that the proposed VSLMS method is well suited to unknown channel estimation applications.

References

[1] W.P. Ang, B. Farhang-Boroujeny, A new class of gradient adaptive step-size LMS algorithms. IEEE Trans. Signal Process. 49, 805–810 (2001)

[2] M.H. Costa, J. Bermudez, A robust variable step size algorithm for LMS adaptive filters, in Proc. IEEE Int. Conf. Acoust. Speech Signal Process., vol. 3 (2006), pp. 93–96

[3] Y.S. Choi, H.C. Shin, W.J. Song, Robust regularization for normalized LMS algorithms. IEEE Trans. Circuits Syst. II, Express Briefs 53, 627–631 (2006)

[4] A. Feuer, E. Weinstein, Convergence analysis of LMS filters with uncorrelated Gaussian data. IEEE Trans. Acoust. Speech Signal Process. 33, 222–230 (1985)

[5] L.L. Horowitz, K.D. Senne, Performance advantage of complex LMS for controlling narrow-band adaptive arrays. IEEE Trans. Acoust. Speech Signal Process. 29, 722–736 (1981)

[6] J.K. Hwang, Y.P. Li, Variable step-size LMS algorithm with a gradient-based weighted average. IEEE Signal Process. Lett. 16, 1043–1046 (2009)

[7] S. Haykin, Adaptive Filter Theory (Prentice-Hall, Englewood Cliffs, 2002)

[8] R.H. Kwong, E.W. Johnston, A variable step size LMS algorithm. IEEE Trans. Signal Process. 40, 1633–1642 (1992)

[9] Y. Liu, R. Ranganathan, M.T. Hunter, W.B. Mikhael, Complex adaptive LMS algorithm employing the conjugate gradient principle for channel estimation and equalization. Circuits Syst. Signal Process. 31, 1067–1087 (2012)

[10] A. Mader, H. Puder, G.U. Schmidt, Step-size control for acoustic echo cancellation—an overview. Signal Process. 80, 1697–1719 (2000)

[11] R.M. Udrea, D.N. Vizireanu, S. Ciochina, An improved spectral subtraction method for speech enhancement using a perceptual weighting filter. Signal Process. 18, 581–587 (2008)

[12] R.M. Udrea, D.N. Vizireanu, S. Ciochina, S. Halunga, Nonlinear spectral subtraction method for colored noise reduction using multi-band Bark scale. Signal Process. 88, 1299–1303 (2008)

[13] B. Widrow, J.M. McCool, M. Larimore, C.R. Johnson, Stationary and nonstationary learning characteristics of the LMS adaptive filter. Proc. IEEE 64, 1151–1162 (1976)

[14] W.W. Wu, Y.S. Wang, Wavelet based affine projection adaptive filter. Adv. Intell. Soft Comput. 110, 487–494 (2012)

[15] Y. Zhang, N. Li, J.A. Chambers, Y. Hao, New gradient-based variable step size LMS algorithms. EURASIP J. Adv. Signal Process. (2008). doi:10.1155/2008/529480


Cite this article

Li, M., Li, L. & Tai, HM. Variable Step Size LMS Algorithm Based on Function Control. Circuits Syst Signal Process 32, 3121–3130 (2013). https://doi.org/10.1007/s00034-013-9598-z


DOI: https://doi.org/10.1007/s00034-013-9598-z