Abstract

This paper investigates the disturbance rejection problem for discrete-time Markov jump systems with bounded disturbance and actuator saturation, in the case where only part of the transition probabilities are known. A mode-dependent state feedback controller is designed so that the resulting closed-loop system is stochastically stable and achieves the optimal disturbance rejection index; meanwhile, despite the disturbance, the state of the system is kept in a small expected region containing the origin. Specifically, the stochastic stability conditions are formulated by a parameter-dependent Lyapunov methodology and further cast as linear matrix inequalities (LMIs). By sweeping the auxiliary parameters over their domain of definition, the global optimal disturbance rejection index is obtained. Finally, the disturbance tolerance capability is analyzed to evaluate the disturbance rejection level. Two numerical examples, a common linear system and a Markov jump system with completely known and partly unknown transition probabilities, respectively, are presented to illustrate the potential of the results.


1 Introduction

In practice, the structure and parameters of many engineering systems tend to vary because of random changes such as component failures and changes in subsystem interconnections. In this case, deterministic models cannot adequately represent such dynamics. Markov jump linear systems (MJSs) address this by describing a set of dynamics whose transitions among the models are governed by a Markov chain taking values in a finite set. Owing to this advantage in stochastic modeling, Markov chain models have been widely applied to describe system dynamics in engineering during the past decades [1, 6, 11], and many results have been obtained in the control field (see controller design [7, 12], 2D MJS filter design [15], Kalman filtering [13], saturated controller design [8, 9], and sliding mode control [10, 14, 16, 17]). Typically, MJSs evolve according to a Markov stochastic process (or chain). The transition probabilities (TPs), a crucial factor of the Markov process (or chain), determine the behavior and performance of MJSs. However, TPs are sometimes impractical to access fully because obtaining them may be expensive. A typical example can be found in communication networks [21, 24], in which packet dropouts may occur randomly in different intervals of the network and the TPs are costly to obtain. Fortunately, approaches that deal directly with partially unknown TPs have been shown to be feasible, and pioneering works on partially unknown TPs have been reported (see robust stabilization [18], fault detection [19, 20], and H∞ control and filtering [21, 22]).

Actuator saturation nonlinearity also generally exists in practice. Almost all actuators have a limited working range; once the control input exceeds the maximal capacity or falls below the minimal capacity of the actuator, saturation occurs. This nonlinearity is a complicated constraint that cannot be avoided, and it is very common in biochemical systems, networked control systems, and communication systems. Since it degrades system performance and may even lead to unstable behavior, actuator saturation may be the most dangerous nonlinearity in many systems. Consequently, saturation nonlinearity has persistently received attention over the past decades, and several control problems for linear systems subject to saturation have been addressed [2, 4, 5].

On the other hand, external disturbances often occur in MJSs because of the number of subsystems and the stochastic jumps of the transition process; this detrimental factor may degrade the performance of the controller or, worse, destabilize the closed-loop system. Plenty of results on disturbances in switched systems and MJSs have been reported (see H∞ control and filtering [3, 23]); in this literature, the H∞ index, a bounded input–output index, is widely used to evaluate system performance through the energy of the controlled output. However, the H∞ performance index assumes that the external disturbance is square integrable (or square summable), that is, it belongs to the L2 (or l2) space, whereas in most engineering fields a bound on the disturbance amplitude is easier to obtain. For bounded disturbances, the problem of disturbance attenuation for linear systems has been studied [4, 5]. Surprisingly, although many results have been reported on controller design for Markov jump systems, no attention has been paid to the problem of disturbance rejection for MJSs subject to saturation, which generally exists in practice, even when the TPs are fully accessible. This motivates us to address the disturbance rejection problem for discrete-time Markov jump systems subject to partly unknown TPs and saturation.

In this paper, we consider the problem of disturbance rejection for Markov jump systems with partly unknown TPs and saturation in the discrete-time domain. The approach followed gives a sufficient condition for designing a mode-dependent state feedback controller which guarantees that the resulting closed-loop system is stochastically stable and that the influence of the disturbance on the state is reduced to a minimal level. Moreover, the disturbance rejection indices of a common linear system and of Markov jump systems with completely known and partly unknown transition probabilities are compared. Finally, the disturbance tolerance capability is analyzed to evaluate the disturbance rejection level.

This paper is organized as follows. In Sect. 2, the system dynamics are defined and the purposes of the paper are stated. Section 3 discusses the stochastic stability of the closed-loop system. In Sect. 4, stabilization conditions for disturbance rejection are derived. In Sect. 5, the disturbance tolerance is analyzed in terms of LMIs to evaluate the disturbance rejection level. In Sect. 6, two numerical examples, a common linear system and a Markov jump system with completely known and partly unknown transition probabilities, respectively, are provided to illustrate the validity of the results. Section 7 concludes the paper.

Notation. In the sequel, \(\mathbb{R}^{n}\) denotes the n-dimensional Euclidean space; \(A^{\mathrm{T}}\) denotes the transpose of a matrix A; \(\mathrm{E}\{\cdot\}\) denotes the mathematical expectation of a stochastic process or vector; ∂ denotes the boundary of a set; P>0 denotes a positive-definite matrix; I is the identity matrix of appropriate dimension; and ∗ denotes a symmetric term in a symmetric matrix.

2 Problem Statement and Preliminaries

Consider a probability space (M,F,P), where M, F and P represent the sample space, the σ-algebra of events and the probability measure defined on F, respectively. The following discrete-time Markov jump systems (MJSs) are considered in this paper:

\[
x_{k+1}=A(r_{k})x_{k}+B(r_{k})\sigma(u_{k})+E(r_{k})w_{k},\qquad u_{k}=F(r_{k})x_{k},\tag{2.1}
\]

where \(x_{k}\in\mathbb{R}^{n}\) is the state vector, \(u_{k}\in\mathbb{R}^{m}\) is the input vector, and \(w_{k}\in\{w_{k}:w_{k}^{\mathrm{T}}w_{k}\leq1\}\) is the bounded external disturbance vector of the system. The saturation is \(\sigma(u_{k})=[\sigma(u_{1k})\;\sigma(u_{2k})\;\cdots\;\sigma(u_{mk})]^{\mathrm{T}}\) with \(\sigma(u_{lk})=\operatorname{sign}(u_{lk})\min\{1,|u_{lk}|\}\). The process \(\{r_{k},k\geq0\}\) is a discrete-time Markov chain taking values in the finite set \(\varGamma=\{1,2,\ldots,\tau\}\), and \(r_{0}\) represents the initial mode. The transition probability \(\pi_{ij}(k)=\Pr(r_{k+1}=j\mid r_{k}=i)\), \(i,j\in\varGamma\), from mode i at time k to mode j at time k+1 satisfies \(\pi_{ij}(k)\geq0\) and \(\sum_{j=1}^{\tau}\pi_{ij}(k)=1\). The transition probability matrix is defined by

\[
\pi=\begin{bmatrix}\pi_{11}&\pi_{12}&\cdots&\pi_{1\tau}\\ \pi_{21}&\pi_{22}&\cdots&\pi_{2\tau}\\ \vdots&\vdots&\ddots&\vdots\\ \pi_{\tau1}&\pi_{\tau2}&\cdots&\pi_{\tau\tau}\end{bmatrix}.\tag{2.2}
\]

The set Γ contains the τ modes of system (2.1). When \(r_{k}=i\in\varGamma\), the matrices \(A(r_{k})\), \(B(r_{k})\), \(E(r_{k})\) and \(F(r_{k})\) are denoted for simplicity by \(A_{i}\), \(B_{i}\), \(E_{i}\) and \(F_{i}\).
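To make the closed-loop model concrete, the Python sketch below simulates one sample path of system (2.1) under the mode-dependent feedback \(u_{k}=F_{i}x_{k}\). It assumes that (2.1) has the form \(x_{k+1}=A_{i}x_{k}+B_{i}\sigma(F_{i}x_{k})+E_{i}w_{k}\) with \(i=r_{k}\). The two-mode matrices A, B, E, F and the TP matrix Pi are hypothetical illustration data (not the example data of Sect. 6), chosen so that each closed-loop matrix \(A_{i}+B_{i}F_{i}\) has infinity norm below 1 and saturation stays inactive near the origin; the function names `sat` and `simulate_mjs` are ours.

```python
import numpy as np

def sat(u):
    """Componentwise unit saturation sigma(u_l) = sign(u_l) * min(1, |u_l|)."""
    return np.sign(u) * np.minimum(1.0, np.abs(u))

def simulate_mjs(A, B, E, F, Pi, x0, r0, w, steps, rng):
    """Simulate x_{k+1} = A_i x_k + B_i sat(F_i x_k) + E_i w_k, where i = r_k.

    A, B, E, F are lists indexed by mode (0-based), Pi is the TP matrix and
    w(k) returns the disturbance vector at time k.
    """
    x = np.asarray(x0, dtype=float)
    r = r0
    xs, modes = [x.copy()], [r]
    for k in range(steps):
        u = F[r] @ x                                # mode-dependent state feedback
        x = A[r] @ x + B[r] @ sat(u) + E[r] @ w(k)
        r = int(rng.choice(len(Pi), p=Pi[r]))       # draw r_{k+1} from row r of Pi
        xs.append(x.copy())
        modes.append(r)
    return np.array(xs), modes

# Hypothetical two-mode data (NOT the paper's example matrices).
A = [np.array([[0.5, 0.1], [0.0, 0.4]]), np.array([[0.3, 0.0], [0.2, 0.5]])]
B = [np.array([[0.0], [1.0]])] * 2
E = [np.array([[0.1], [0.1]])] * 2
F = [np.array([[-0.1, -0.2]]), np.array([[-0.1, -0.1]])]
Pi = np.array([[0.7, 0.3], [0.4, 0.6]])

rng = np.random.default_rng(0)
xs, modes = simulate_mjs(A, B, E, F, Pi, [-0.2, 0.15], 0,
                         lambda k: np.array([0.5 * np.sin(k)]), 100, rng)
```

With the paper's own matrices and gains, the same loop with \(w_{k}=0.5\sin(k)\) and \(x_{0}=[-0.2\;\;0.15]^{\mathrm{T}}\) should produce trajectories of the kind plotted in Sect. 6.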

In addition, a partly unknown transition probability matrix is one in which some elements of π are not available. For instance, for system (2.1) with four operation modes, the transition probability matrix π may take the form

where “?” represents the inaccessible transition probabilities.

For clarity, for each \(i\in\varGamma\) we split the column indices of the ith row of π as \(\varGamma=\pi^{k}_{i}\cup\pi^{uk}_{i}\), where \(\pi^{k}_{i}\) contains the indices j for which \(\pi_{ij}\) is known and \(\pi^{uk}_{i}\) those for which it is unknown. If \(\pi^{k}_{i}\neq\emptyset\), we further write \(\pi^{k}_{i}=\{\kappa^{1}_{i},\ldots,\kappa^{l}_{i}\}\), \(1\leq l\leq\tau\), where \(\kappa^{l}_{i}\) represents the index of the lth known element in the ith row of π, and we set \(\varPi^{k}_{i}=\sum_{j\in\pi^{k}_{i}}\pi_{ij}\). Before proceeding with the study, we briefly introduce some preliminaries.
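The bookkeeping behind \(\pi^{k}_{i}\), \(\pi^{uk}_{i}\) and \(\varPi^{k}_{i}\) can be illustrated with a small sketch. Unknown entries ("?") are represented here by `None`; the four-mode matrix is hypothetical, indices are 0-based rather than the 1-based modes of the text, and `split_row` is an illustrative name. Because each row sums to one, the unknown entries of row i share the remaining mass \(1-\varPi^{k}_{i}\).

```python
def split_row(row):
    """Split a TP row (None marks an unknown entry) into known/unknown index sets.

    Returns (known, unknown, Pi_k, remaining): Pi_k is the sum of the known
    probabilities and remaining = 1 - Pi_k is the total mass of the unknown ones.
    """
    known = [j for j, p in enumerate(row) if p is not None]
    unknown = [j for j, p in enumerate(row) if p is None]
    Pi_k = sum(row[j] for j in known)
    if Pi_k > 1.0 + 1e-12:
        raise ValueError("known transition probabilities exceed 1")
    return known, unknown, Pi_k, 1.0 - Pi_k

# Hypothetical four-mode TP matrix with unknown entries.
Pi = [
    [0.3, None, 0.2, None],
    [None, None, 0.5, 0.1],
    [0.1, 0.2, 0.3, 0.4],
    [None, None, None, None],
]
rows = [split_row(r) for r in Pi]
```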

Definition 2.1

For any initial mode \(r_{0}\) and any initial state \(x_{0}\) in a given initial state set, the discrete-time Markov jump system (2.1) with \(w_{k}=0\) is said to be stochastically stable if

Definition 2.2

Given a matrix \(H_{i}\) for system (2.1), let \(h_{qi}\) denote the qth row of \(H_{i}\); a symmetric polyhedron is then defined as follows:

Lemma 2.1

[4]

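Given vectors \(u_{k}\in\mathbb{R}^{m}\) and \(v_{k}\in\mathbb{R}^{m}\) for system (2.1), if every component of \(v_{k}\) satisfies \(|v_{lk}|\leq1\), \(l=1,\ldots,m\), then \(\sigma(u_{k})=\sum_{t=1}^{2^{m}}\theta_{t}(M_{t}u_{k}+M_{t}^{-}v_{k})\), where \(0\leq\theta_{t}\leq1\), \(\sum_{t=1}^{2^{m}}\theta_{t}=1\), \(M_{t}\), \(t=1,\ldots,2^{m}\), are the m×m diagonal matrices whose diagonal elements are either 1 or 0, and \(M_{t}^{-}=I-M_{t}\).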
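Lemma 2.1 is an existence statement. One constructive way to obtain the weights, sketched below as an illustration rather than as the construction of [4], is to write each saturated component as \(\sigma(u_{l})=\lambda_{l}u_{l}+(1-\lambda_{l})v_{l}\) with \(\lambda_{l}\in[0,1]\) (possible because \(|v_{l}|\leq1\) places \(\sigma(u_{l})\) between \(v_{l}\) and \(u_{l}\)) and then take \(\theta_{t}\) as the product of \(\lambda_{l}\) or \(1-\lambda_{l}\) according to the diagonal of \(M_{t}\). The vectors u and v are hypothetical.

```python
import itertools
import numpy as np

def sat(u):
    """Componentwise unit saturation sigma(u_l) = sign(u_l) * min(1, |u_l|)."""
    return np.sign(u) * np.minimum(1.0, np.abs(u))

def convex_weights(u, v):
    """Return pairs (theta_t, M_t) with sat(u) = sum_t theta_t (M_t u + (I - M_t) v).

    Requires |v_l| <= 1 for every component. This is one constructive choice of
    weights; Lemma 2.1 only asserts that such weights exist.
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    if np.any(np.abs(v) > 1.0):
        raise ValueError("Lemma 2.1 needs |v_l| <= 1")
    m = u.size
    lam = np.ones(m)
    for l in range(m):
        if not np.isclose(u[l], v[l]):
            # sat(u_l) lies between v_l and u_l, so this ratio is in [0, 1].
            lam[l] = (sat(u[l]) - v[l]) / (u[l] - v[l])
    pairs = []
    for bits in itertools.product([0.0, 1.0], repeat=m):
        M = np.diag(bits)
        # Product weight: lam_l if component l comes from u, 1 - lam_l if from v.
        theta = np.prod([lam[l] if b else 1.0 - lam[l] for l, b in enumerate(bits)])
        pairs.append((theta, M))
    return pairs

u = np.array([2.5, -0.4, -3.0])
v = np.array([0.3, 0.9, -0.5])
pairs = convex_weights(u, v)
I = np.eye(u.size)
recon = sum(theta * (M @ u + (I - M) @ v) for theta, M in pairs)
```

Here `recon` reproduces \(\sigma(u)=[1\;\;-0.4\;\;-1]^{\mathrm{T}}\) as a convex combination over all \(2^{3}=8\) matrices \(M_{t}\).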

Lemma 2.2

[5]

Given \(v_{k}=H_{i}x_{k}\) for system (2.1), if \(x_{k}\in\Theta(H_{i})\), that is, every component of \(v_{k}\) satisfies \(|v_{lk}|\leq1\), then \(\sigma(F_{i}x_{k})=\sum_{t=1}^{2^{m}}\theta_{t}(M_{t}F_{i}+M_{t}^{-}H_{i})x_{k}\). Here \(\{M_{t}F_{i}+M_{t}^{-}H_{i}: t\in[1,2^{m}]\}\) is a set of \(2^{m}\) matrices, each formed by choosing some rows from \(F_{i}\) and the remaining rows from \(H_{i}\).
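The vertex matrices of Lemma 2.2 can be enumerated directly. The sketch below assumes the usual polyhedron \(\Theta(H_{i})=\{x:|h_{qi}x|\leq1,\ q=1,\ldots,m\}\) from the saturation literature; the matrices F and H (with m=2 inputs) are hypothetical, and `vertex_gains` and `in_polyhedron` are illustrative names. For the single-input examples of Sect. 6 (m=1) the set reduces to the two matrices \(F_{i}\) and \(H_{i}\).

```python
import itertools
import numpy as np

def vertex_gains(F, H):
    """Return the 2^m matrices M_t F + (I - M_t) H of Lemma 2.2.

    Row q of each matrix is taken from F when the qth diagonal entry of M_t is 1
    and from H when it is 0.
    """
    m = F.shape[0]
    I = np.eye(m)
    gains = []
    for bits in itertools.product([0.0, 1.0], repeat=m):
        M = np.diag(bits)
        gains.append(M @ F + (I - M) @ H)
    return gains

def in_polyhedron(H, x):
    """Membership test for Theta(H) = {x : |h_q x| <= 1 for every row h_q}."""
    return bool(np.all(np.abs(H @ x) <= 1.0))

# Hypothetical gains with m = 2 inputs and n = 2 states.
F = np.array([[1.0, -2.0], [0.5, 0.3]])
H = np.array([[0.2, -0.4], [0.1, 0.1]])
gains = vertex_gains(F, H)
```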

Lemma 2.3

[4]

For given symmetric matrices \(P_{i}>0\), a series of ellipsoids can be defined as follows:

3 Stochastic Stability

This section discusses the stochastic stability of the closed-loop system (2.1).

Theorem 3.1

Consider system (2.1) with the partly unknown TP matrix (2.3) and \(w_{k}=0\). If there exist symmetric positive definite matrices \(P_{i}>0\) and matrices \(F_{i}\), \(H_{i}\), \(\forall i\in\varGamma\), such that

then the set \(\bigcap^{\tau}_{i=1}\varepsilon(P_{i},1)\) is contained in the domain of stochastically stable attraction of the closed-loop system (2.1).

Proof

Construct a Lyapunov function as

Recalling Lemmas 2.1 and 2.2, the difference \(\Delta V(x_{k},i)\) along system (2.1) is obtained as

For system (2.1), condition (3.1) implies

Denote \(\delta=\min_{i\in\varGamma,\,t\in[1,2^{m}]}\lambda_{\min}(-\varPhi_{i}(t))\), where \(\lambda_{\min}(-\varPhi_{i}(t))\) is the smallest eigenvalue of \(-\varPhi_{i}(t)\). Hence,

then

thus, the following inequality holds:

which implies

From Definition 2.1, the system (2.1) is stochastically stable. This completes the proof. □
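The omitted steps of this proof follow a standard telescoping argument. The derivation below is our reconstruction, not the authors' exact text: it assumes that the missing inequality bounds the expected Lyapunov difference by \(\max_{t}x_{k}^{\mathrm{T}}\varPhi_{i}(t)x_{k}\) and that the trajectory stays in \(\bigcap_{i}\varepsilon(P_{i},1)\), where the saturation representation of Lemma 2.2 is valid.

```latex
\begin{aligned}
\mathrm{E}\{V(x_{k+1},r_{k+1})\mid x_{k},r_{k}=i\}-V(x_{k},i)
  &\leq\max_{t}\,x_{k}^{\mathrm{T}}\varPhi_{i}(t)x_{k}\leq-\delta\,x_{k}^{\mathrm{T}}x_{k},\\
\mathrm{E}\{V(x_{N+1},r_{N+1})\}-V(x_{0},r_{0})
  &\leq-\delta\sum_{k=0}^{N}\mathrm{E}\{x_{k}^{\mathrm{T}}x_{k}\},\\
\mathrm{E}\Big\{\sum_{k=0}^{N}x_{k}^{\mathrm{T}}x_{k}\,\Big|\,x_{0},r_{0}\Big\}
  &\leq\frac{1}{\delta}\,V(x_{0},r_{0})\qquad\text{for all }N\geq0.
\end{aligned}
```

The second line sums the first over \(k=0,\ldots,N\) after taking expectations, and the third uses \(V\geq0\). Letting \(N\to\infty\) shows that the expected sum of squared state norms is finite, which is the condition typically used to define stochastic stability of discrete-time MJSs.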

4 Disturbance Rejection

In this section, we develop stabilization results guaranteeing that system (2.1) achieves the optimal disturbance rejection index in the discrete-time setting.

Theorem 4.1

For system (2.1) and given ellipsoids \(\varepsilon(P_{i},1)\), \(i\in\varGamma\), if there exist matrices \(H_{i}\) and a positive number η such that

and \(\varepsilon(P_{i},1)\subset\Theta(H_{i})\), then \(\bigcap^{\tau}_{i=1}\varepsilon(P_{i},1)\) is a strictly invariant set for system (2.1).
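The inclusion \(\varepsilon(P_{i},1)\subset\Theta(H_{i})\) required by Theorem 4.1 can be checked row by row. The sketch assumes \(\varepsilon(P,\rho)=\{x:x^{\mathrm{T}}Px\leq\rho\}\) (consistent with \(x_{k}^{\mathrm{T}}P_{i}x_{k}=1\) on \(\partial\varepsilon(P_{i},1)\)) and \(\Theta(H)=\{x:|h_{q}x|\leq1\}\), and uses the standard inclusion test from the saturation literature (e.g. [4]): since \(\max_{x\in\varepsilon(P,\rho)}|h_{q}x|=\sqrt{\rho\,h_{q}P^{-1}h_{q}^{\mathrm{T}}}\), the inclusion holds if and only if \(\rho\,h_{q}P^{-1}h_{q}^{\mathrm{T}}\leq1\) for every row. The matrices are hypothetical.

```python
import numpy as np

def in_ellipsoid(P, x, rho=1.0):
    """Membership test for eps(P, rho) = {x : x^T P x <= rho}."""
    return float(x @ P @ x) <= rho

def ellipsoid_in_polyhedron(P, H, rho=1.0):
    """eps(P, rho) is contained in Theta(H) iff rho * h_q P^{-1} h_q^T <= 1 for every row h_q."""
    Pinv = np.linalg.inv(P)
    return all(rho * float(h @ Pinv @ h) <= 1.0 for h in H)

P = np.diag([4.0, 1.0])                                     # eps(P, 1): 4 x1^2 + x2^2 <= 1
inside = ellipsoid_in_polyhedron(P, np.array([[1.0, 0.5]]))  # max |h x| = sqrt(0.5)
too_big = ellipsoid_in_polyhedron(P, np.array([[2.1, 0.0]])) # max |h x| = 1.05
```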

Proof

Consider \(V(x_{k})=x_{k}^{\mathrm{T}}P_{i}x_{k}\). We need to show that

Since \(w_{k}^{\mathrm{T}}w_{k}\leq1\), and using the fact that \((a+b)^{\mathrm{T}}(a+b)\leq(1+\eta)a^{\mathrm{T}}a+(1+\frac{1}{\eta})b^{\mathrm{T}}b\) for any η>0,

To prove strict invariance, one sufficient condition is that, for all \(t\in[1,2^{m}]\) and \(x_{k}\in\bigcap_{i}\varepsilon(P_{i},1)\), there exists an η such that

Noticing that \(x_{k}^{\mathrm{T}}P_{i}x_{k}=1\) on \(\partial\varepsilon(P_{i},1)\), we see that (4.4) is guaranteed by (4.1). □
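The elementary inequality used in this proof follows from completing a square. The derivation below is standard and is included only as a reading aid; a and b are arbitrary vectors and η>0.

```latex
\begin{aligned}
0 &\leq\Big(\sqrt{\eta}\,a-\tfrac{1}{\sqrt{\eta}}\,b\Big)^{\mathrm{T}}\Big(\sqrt{\eta}\,a-\tfrac{1}{\sqrt{\eta}}\,b\Big)
   =\eta\,a^{\mathrm{T}}a-2a^{\mathrm{T}}b+\tfrac{1}{\eta}\,b^{\mathrm{T}}b,\\
(a+b)^{\mathrm{T}}(a+b) &=a^{\mathrm{T}}a+2a^{\mathrm{T}}b+b^{\mathrm{T}}b
   \leq(1+\eta)\,a^{\mathrm{T}}a+\Big(1+\tfrac{1}{\eta}\Big)\,b^{\mathrm{T}}b.
\end{aligned}
```

Replacing every inner product \(a^{\mathrm{T}}b\) by \(a^{\mathrm{T}}Pb\) with P>0 gives the same bound for \((a+b)^{\mathrm{T}}P(a+b)\). In the proof, a presumably plays the role of the saturated closed-loop term and b that of the disturbance term \(E_{i}w_{k}\), whose weighted norm is then bounded through \(w_{k}^{\mathrm{T}}w_{k}\leq1\).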

Theorem 4.2

For a given initial state \(x_{0}\) and reference set \(\chi_{0}\), consider system (2.1) with the partly unknown TP matrix (2.3), and let α>0 be a scalar. If there exist symmetric positive definite matrices \(P_{i}>0\) and matrices \(F_{i}\), \(H_{i}\), \(\forall i\in\varGamma\), such that

then, when \(E_{i}=0\), system (2.1) is stochastically stable by Theorem 3.1; moreover, if α>1, then \(x_{0}\) lies in the domain of stochastically stable attraction by set inclusion.

Remark 4.1

For a given \(x_{0}\) in the domain of stochastically stable attraction, there are infinitely many choices of the feedback matrices \(F_{i}\). We use this extra freedom for disturbance rejection, that is, to drive the state of system (2.1) with partly unknown transition probabilities (2.3) and bounded disturbance into a region containing the origin that is as small as possible, and to keep it there.

Theorem 4.3

Consider a given initial state \(x_{0}\) and reference set \(\chi_{0}\) for system (2.1) with the partly unknown TP matrix (2.3), and let α>0 be a scalar. If there exist symmetric positive definite matrices \(P_{i}>0\) and matrices \(F_{i}\), \(H_{i}\), \(\forall i\in\varGamma\), such that

then system (2.1) is stochastically stable and achieves the prescribed disturbance rejection performance index α.

Remark 4.2

Theorems 4.2 and 4.3 only give a conceptual description of the domain of stochastically stable attraction and of the disturbance rejection level from a set-theoretic viewpoint. Theorem 4.4 provides specific conditions, with proof, for this description.

Theorem 4.4

Consider a given initial state \(x_{0}\) and reference set \(\chi_{0}\) for system (2.1) with the partly unknown TP matrix (2.3), and let \(\gamma=\alpha^{2}>0\) be a scalar. If there exist symmetric positive definite matrices \(Q_{i}=P_{i}^{-1}>0\), matrices \(Y_{i}=F_{i}Q_{i}\), \(Z_{i}=H_{i}Q_{i}\), and scalars η>0 and \(\lambda\in(0,\frac{\eta}{1+\eta})\), \(\forall i\in\varGamma\), such that

then system (2.1) with partly unknown transition probabilities (2.3) and bounded disturbance is stochastically stable and achieves the prescribed optimal disturbance rejection performance index α.

Proof

Let \(\gamma=\alpha^{2}\). From [5] and Lemma 2.3, when the reference set is an ellipsoid \(\varepsilon(R,1)\), condition (4.10) is equivalent to \(\frac{R}{\gamma}\leq P_{i}\), which is guaranteed by (4.14). Since \(\sum_{j\in\varGamma}\pi_{ij}=1\) always holds, the left-hand side of (4.11) can be rewritten as

Since \(\sum_{j\in\varGamma}\pi_{ij}=\sum_{j\in\pi^{k}_{i}}\pi_{ij}+\sum_{j\in\pi^{uk}_{i}}\pi_{ij}=\varPi^{k}_{i}+\sum_{j\in\pi^{uk}_{i}}\pi_{ij}\), (4.19) is equal to

which is equivalent to

By the Schur complement, (4.15) and (4.16) guarantee (4.21), and (4.17) implies the existence of \(\lambda_{\max}\). From [5], (4.12) is equivalent to (4.18). This completes the proof. □
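The first step of the proof reduces the reference-set condition to \(R/\gamma\leq P_{i}\). For fixed candidate matrices \(P_{i}\) (in Theorem 4.4 they are decision variables, so this only evaluates a given solution), the smallest admissible α follows from an eigenvalue computation: with \(P_{i}=L_{i}L_{i}^{\mathrm{T}}\), \(R\leq\gamma P_{i}\) holds if and only if \(\lambda_{\max}(L_{i}^{-1}RL_{i}^{-\mathrm{T}})\leq\gamma\). The sketch below uses hypothetical R and \(P_{i}\), and `min_alpha` is an illustrative name.

```python
import numpy as np

def min_alpha(R, P_list):
    """Smallest alpha > 0 with R / alpha^2 <= P_i (Loewner order) for every P_i.

    R <= gamma * P_i  <=>  L_i^{-1} R L_i^{-T} <= gamma * I, where P_i = L_i L_i^T,
    so gamma_min = max_i lambda_max(L_i^{-1} R L_i^{-T}) and alpha_min = sqrt(gamma_min).
    """
    gamma = 0.0
    for P in P_list:
        L = np.linalg.cholesky(P)        # lower-triangular factor, P = L L^T
        Linv = np.linalg.inv(L)
        S = Linv @ R @ Linv.T            # congruence transform of R
        gamma = max(gamma, float(np.linalg.eigvalsh(S).max()))
    return np.sqrt(gamma)

# Hypothetical reference shape R and candidate Lyapunov matrices P_i.
R = np.eye(2)
P_list = [np.diag([16.0, 4.0]), np.diag([8.0, 8.0])]
alpha = min_alpha(R, P_list)
```

Geometrically, this α is the smallest scaling for which every \(\varepsilon(P_{i},1)\) fits inside \(\alpha\,\varepsilon(R,1)\), which is why a smaller α means better disturbance rejection.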

Remark 4.3

Theorem 4.2 gives a method to check whether the initial state lies in the domain of stochastically stable attraction. For a given initial state \(x_{0}=[x^{1}_{0},\ldots,x^{n}_{0}]^{\mathrm{T}}\), condition (4.6) is converted to

if α>1, then \(x_{0}\) is in the domain of stochastically stable attraction.

Remark 4.4

Since the conditions in Theorem 4.4 are parameter-dependent LMIs, the two parameters η and λ need to be fixed before solving them. By sweeping these two parameters over their domain of definition, the global optimal (rather than locally optimal) disturbance rejection index can be obtained, up to the resolution of the sweep.
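The sweep in Remark 4.4 can be organized as a simple grid search. In the sketch below, `solve_gamma(eta, lam)` is a hypothetical user-supplied routine that solves the LMIs of Theorem 4.4 for fixed (η, λ) with an SDP solver and returns the optimal γ, or `None` if infeasible; only interior points of the open interval \(\lambda\in(0,\frac{\eta}{1+\eta})\) are tried. The toy objective in the usage lines merely stands in for such a solver and is not derived from the paper.

```python
import numpy as np

def sweep(solve_gamma, etas, n_lambda=20):
    """Grid search over eta > 0 and lambda in (0, eta / (1 + eta)).

    solve_gamma(eta, lam) -> optimal gamma for fixed (eta, lam), or None if the
    LMIs are infeasible (hypothetical solver interface).
    Returns (alpha, eta, lam) at the best grid point, or (None, None, None).
    """
    best = (np.inf, None, None)
    for eta in etas:
        upper = eta / (1.0 + eta)
        # Interior grid points only: the endpoints of the open interval are excluded.
        for lam in np.linspace(0.0, upper, n_lambda + 2)[1:-1]:
            gamma = solve_gamma(eta, lam)
            if gamma is not None and gamma < best[0]:
                best = (gamma, eta, lam)
    gamma, eta, lam = best
    if not np.isfinite(gamma):
        return None, None, None
    return float(np.sqrt(gamma)), float(eta), float(lam)

# Toy stand-in for an LMI solver (illustration only).
def toy_solve(eta, lam):
    return (eta - 1.0) ** 2 + (lam - 0.3) ** 2 + 0.04 if lam < 0.45 else None

alpha, eta, lam = sweep(toy_solve, np.linspace(0.5, 3.0, 26))
```

A grid only certifies the best value among the points it visits; refining the grid around the best point is a cheap way to check that the reported index has settled.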

5 Tolerance Analysis

In this section, the disturbance tolerance capability, which reveals the disturbance rejection capability from another perspective, is evaluated. The main question is: for a given disturbance rejection level α, what is the maximum disturbance amplitude β for which the state of the system can still be kept in the small region containing the origin that is associated with α?

Theorem 5.1

Consider a given initial state \(x_{0}\), reference set \(\chi_{0}\), and disturbances satisfying \(w_{k}^{\mathrm{T}}w_{k}\leq\beta^{2}=\omega\), β>0, for system (2.1) with the partly unknown TP matrix (2.3) and a given disturbance rejection level α. If there exist symmetric positive definite matrices \(Q_{i}=P_{i}^{-1}>0\), matrices \(Y_{i}=F_{i}Q_{i}\), \(Z_{i}=H_{i}Q_{i}\), and scalars η>0 and \(\lambda\in(0,\frac{\eta}{1+\eta})\), \(\forall i\in\varGamma\), such that

The proof of Theorem 5.1 is analogous to that of Theorem 4.4 and is therefore omitted.

Remark 5.1

Here, before solving the LMIs, we first fix the parameters ω≥1, η and λ, and then check the feasibility of LMIs (5.1)–(5.6) to obtain the upper bound of β.
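One way to organize the search for the upper bound of β is bisection on ω. This is our suggestion, not the procedure stated in the paper, and it assumes monotonicity: if LMIs (5.1)–(5.6) are feasible for some ω, they are feasible for every smaller ω. The oracle `feasible(omega)` is hypothetical (an SDP feasibility check for fixed η, λ and α); the toy oracle in the usage line only illustrates the mechanics.

```python
import math

def max_beta(feasible, omega_lo=1.0, omega_hi=100.0, tol=1e-4):
    """Largest beta = sqrt(omega) with feasible(omega) True, by bisection on omega.

    feasible(omega) is a hypothetical LMI feasibility oracle for fixed eta, lambda
    and alpha, assumed monotone (feasible at omega => feasible at smaller omega).
    Returns None if omega_lo is infeasible, sqrt(omega_hi) if the search range
    is too small to reach the bound.
    """
    if not feasible(omega_lo):
        return None
    if feasible(omega_hi):
        return math.sqrt(omega_hi)
    lo, hi = omega_lo, omega_hi          # invariant: feasible(lo) and not feasible(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if feasible(mid):
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo)

beta = max_beta(lambda omega: omega <= 6.25)   # toy oracle with true bound beta = 2.5
```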

6 Illustrative Examples

In this section, two numerical examples are investigated to evaluate the performance of the developed approach; the system parameters of the four modes are given as follows:

Example 1

Consider system (2.1) as a special case in which modes 1–4 have the same parameters. In this case, the jump system degenerates to a discrete-time linear system,

By solving LMIs (4.13)–(4.18), an admissible state feedback controller is obtained as \(F=[0.4572\;\; -2.8252]\).

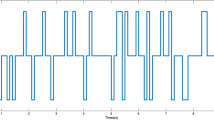

Figure 1 shows the state response of the corresponding closed-loop system for the initial condition \(x_{0}=[-0.2\;\; 0.15]^{\mathrm{T}}\) with the bounded disturbance \(w_{k}=0.5\sin(k)\). Applying Theorem 4.2, we obtain α>1, which means that \(x_{0}\) is in the domain of stochastically stable attraction, so the extra freedom of the feedback controller can be used to restrain the disturbance. Figure 1 shows that the influence of the disturbance is confined to a small, flat ellipsoid, which means that the disturbance is suppressed to a satisfactory level.

Remark 6.1

Note that Example 1 shows stable attraction with bounded disturbance and saturation in the case where the Markov model degenerates to a common linear system, that is, when the four different system modes reduce to one. This shows that the results in [5] can be viewed as a special case within this stochastic framework.

Example 2

Consider the discrete-time jump system with four modes and the following data:

Two different types of TP matrices are considered in Table 1. Our purpose here is to design a mode-dependent state feedback stabilizing controller such that the resulting closed-loop system is stochastically stable and the influence of the disturbance is restrained to a level as small as possible. By solving (4.13)–(4.18) in Theorem 4.4, the controller gains are calculated as

Case I: \(F_{1}=[0.9630\;\; -3.0605]\), \(F_{2}=[0.7432\;\; -5.9797]\), \(F_{3}=[1.0385\;\; -2.6259]\), \(F_{4}=[0.7095\;\; -3.8489]\);

Case II: \(F_{1}=[1.1194\;\; -3.1332]\), \(F_{2}=[0.6515\;\; -5.9469]\), \(F_{3}=[1.0074\;\; -2.6157]\), \(F_{4}=[0.7098\;\; -3.8491]\).

Figure 2 shows the two distinct state trajectories of the closed-loop systems with completely known and partly unknown transition probability matrices, respectively, for the initial state \(x_{0}=[-0.2\;\; 0.15]^{\mathrm{T}}\) and the external disturbance \(w_{k}=0.5\sin(k)\).

It can be seen that the state in Case I (completely known transition probability matrix) is finally restricted to a smaller region containing the origin than in Case II (partly unknown transition probability matrix), which means that disturbance rejection is more effective in the completely known case than in the partly unknown case. The main reason is that the completely known case makes full use of the transition information and hence reduces conservatism.

Furthermore, applying Theorem 4.4, we also obtain the minimum rejection level index α (see Table 2): \(\alpha_{\min}\) is 0.1698 in Case I and 0.2352 in Case II, which confirms the conclusion drawn from Fig. 2.

More interestingly, the trajectory of the linear system (Fig. 1) converges to a small ellipsoid, whereas that of the Markov jump system (Fig. 2) finally displays an irregular shape while still remaining in the expected region. This phenomenon reflects the jumps among the different system modes governed by the Markov chain.

From another perspective, Table 2 provides guidance on choosing α, namely \(\alpha_{\mathrm{Case\,I}}\geq0.1698\) and \(\alpha_{\mathrm{Case\,II}}\geq0.2352\); we therefore choose α from 0.25 to 0.45. Table 3 shows that, for the same disturbance rejection level, Case I has a larger disturbance tolerance margin (it tolerates a larger disturbance amplitude) than Case II. This result is consistent with Table 2.

Remark 6.2

Note that we also considered the disturbance tolerance capability of discrete-time Markov jump systems; that is, for a given disturbance rejection level α, we determined the maximal disturbance amplitude β that can be tolerated. This approach provides a new perspective on disturbance rejection.

7 Conclusions

This paper has addressed the problem of disturbance rejection for discrete-time Markov jump systems with partly unknown transition probabilities and saturation subject to bounded disturbance. It has also given a methodology for designing mode-dependent state feedback controllers and for evaluating the disturbance rejection level and the disturbance tolerance capability. Two numerical examples have been presented to illustrate the validity of the results. The developed results are expected to be extended to problems such as output feedback control and estimation in the presence of saturation.

References

[1] Q. Ahmed, A. Iqbal, I. Taj, K. Ahmed, Gasoline engine intake manifold leakage diagnosis/prognosis using hidden Markov model. Int. J. Innov. Comput. Inf. Control 8(7), 4661–4674 (2012)

[2] Y. Cao, Z. Lin, Y. Shamash, Set invariance analysis and gain-scheduling control for LPV systems subject to actuator saturation. Syst. Control Lett. 46(2), 137–151 (2002)

[3] H. Dong, Z. Wang, D. Ho, H. Gao, Robust H∞ filtering for Markovian jump systems with randomly occurring nonlinearities and sensor saturation: the finite-horizon case. IEEE Trans. Signal Process. 59(7), 3048–3057 (2011)

[4] T. Hu, Z. Lin, B. Chen, An analysis and design method for linear systems subject to actuator saturation and disturbance. Automatica 38(2), 351–359 (2002)

[5] T. Hu, Z. Lin, B. Chen, An analysis and design for discrete-time linear systems subject to actuator saturation and disturbance. Syst. Control Lett. 45(2), 97–112 (2002)

[6] F. Kojima, J.S. Knopp, Inverse problem for electromagnetic propagation in a dielectric medium using Markov chain Monte Carlo method. Int. J. Innov. Comput. Inf. Control 8(3), 2339–2346 (2012)

[7] N.N. Krasovskii, E.A. Lidskii, Analytical design of controllers in systems with random attributes. Autom. Remote Control 22(1), 1021–1025 (1961). Also 22(2), 1141–1146, 22(3), 1289–1294

[8] H. Liu, E.K. Boukas, F. Sun, D.W.C. Ho, Controller design for Markov jumping systems subject to actuator saturation. Automatica 42(3), 459–465 (2006)

[9] H. Liu, F. Sun, E.K. Boukas, Robust control of uncertain discrete-time Markovian jump systems with actuator saturation. Int. J. Control 79(7), 805–812 (2006)

[10] M. Liu, P. Shi, L. Zhang, X. Zhao, Fault tolerant control for nonlinear Markovian jump systems via proportional and derivative sliding mode observer. IEEE Trans. Circuits Syst. I, Regul. Pap. 58(11), 2755–2764 (2011)

[11] J. Peng, J. Aston, C. Liou, Modeling time series and sequences using Markov chain embedded finite automata. Int. J. Innov. Comput. Inf. Control 7(1), 407–432 (2011)

[12] P. Shi, E.K. Boukas, R. Agarwal, Control of Markovian jump discrete-time systems with norm bounded uncertainty and unknown delay. IEEE Trans. Autom. Control 44(11), 2139–2144 (1999)

[13] P. Shi, E.K. Boukas, R. Agarwal, Kalman filtering for continuous-time uncertain systems with Markovian jumping parameters. IEEE Trans. Autom. Control 44(8), 1592–1597 (1999)

[14] P. Shi, Y. Xia, G. Liu, D. Rees, On designing of sliding mode control for stochastic jump systems. IEEE Trans. Autom. Control 51(1), 97–103 (2006)

[15] L. Wu, P. Shi, H. Gao, C. Wang, Robust H∞ filtering for 2-D Markovian jump systems. Automatica 44(7), 1849–1858 (2008)

[16] L. Wu, P. Shi, H. Gao, State estimation and sliding-mode control of Markovian jump singular systems. IEEE Trans. Autom. Control 55(5), 1213–1219 (2010)

[17] L. Wu, X. Su, P. Shi, Sliding mode control with bounded L2 gain performance of Markovian jump singular time-delay systems. Automatica 48(8), 1929–1933 (2012)

[18] J. Xiong, J. Lam, H. Gao, D.W.C. Ho, On robust stabilization of Markovian jump systems with uncertain switching probabilities. Automatica 41(5), 897–903 (2005)

[19] Y. Yin, P. Shi, F. Liu, Gain-scheduled robust fault detection on time-delay stochastic nonlinear systems. IEEE Trans. Ind. Electron. 58(10), 4908–4916 (2011)

[20] Y. Yin, P. Shi, F. Liu, J.S. Pan, Gain-scheduled fault detection on stochastic nonlinear systems with partially known transition jump rates. Nonlinear Anal. Ser. B, Real World Appl. 13(1), 359–369 (2012)

[21] L. Zhang, E.K. Boukas, Mode-dependent H∞ filtering for discrete-time Markovian jump linear systems with partly unknown transition probabilities. Automatica 45(6), 1462–1467 (2009)

[22] L. Zhang, E.K. Boukas, H∞ control for discrete-time Markovian jump linear systems with partly unknown transition probabilities. Int. J. Robust Nonlinear Control 19(8), 868–883 (2009)

[23] L. Zhang, P. Shi, Stability, l2-gain and asynchronous H∞ control of discrete-time switched systems with average dwell time. IEEE Trans. Autom. Control 54(9), 2193–2200 (2009)

[24] L. Zhang, Y. Shi, T. Chen, B. Huang, A new method for stabilization of networked control systems with random delays. IEEE Trans. Autom. Control 50(8), 1177–1181 (2005)

Acknowledgements

This work was partially supported by National Natural Science Foundation of China (61273087, 61134007), Program for Excellent Innovative Team of Jiangsu Higher Education Institutions, Jiangsu Higher Education Institutions Innovation Funds (CXZZ12−0743), the Fundamental Research Funds for the Central Universities (JUDCF12029).


Cite this article

Liu, Y., Liu, F. Disturbance Rejection for Markov Jump Systems with Partly Unknown Transition Probabilities and Saturation. Circuits Syst Signal Process 32, 2783–2797 (2013). https://doi.org/10.1007/s00034-013-9593-4
