Abstract

The most general approach to the study of rare extreme events is based on extreme value theory. The fundamental Generalized Extreme Value (GEV) distribution lies at the basis of this theory, serving as the limit distribution for normalized maxima. It depends on three parameters. These are usually estimated by the method of maximum likelihood (ML), which possesses well-known optimal asymptotic properties. However, this method works efficiently only when the sample size is large enough (~200–500), whereas in many applications the sample size does not exceed 50–100. For such sizes, the efficiency advantage of the ML method is not guaranteed. We have found that in this situation the method of statistical moments (SM) is more efficient than the other methods considered. The details of the estimation for small samples are studied. The SM is applied to the study of extreme earthquakes in three large virtual seismic zones, representing the regime of seismicity in subduction zones, the intracontinental regime of seismicity, and the regime in mid-ocean ridge zones. The 68%-confidence domains for the parameter pairs (ξ, σ) and (σ, μ) are derived.


1 Introduction

The statistical study of extreme events is necessary in many applications. Such events are usually connected with the most powerful natural phenomena or with the most disastrous catastrophes. Arguably, the most general and best-justified approach to the statistical study of such events is based on extreme value theory (EVT) and its two main limit distributions: the Generalized Extreme Value distribution (GEV) and the Generalized Pareto Distribution (GPD). Each of these distributions depends on three parameters.

The GEV parameters:

- the form parameter ξ, −∞ < ξ < +∞;
- the location parameter μ, −∞ < μ < +∞;
- the scale parameter σ, 0 < σ < +∞.

The GPD parameters:

- the form parameter ξ, −∞ < ξ < +∞;
- the lower threshold parameter h, −∞ < h < +∞;
- the scale parameter s, 0 < s < +∞.

The GEV describes the limit distribution of normalized maxima of increasing samples, whereas the GPD arises as the limit distribution of scaled excesses over a sufficiently high threshold h. There is a close connection between these two distributions: if events form a Poisson flow and their sizes follow a GPD, then the maximum size over a fixed time interval follows a GEV distribution. In this case the form parameter ξ of both distributions is the same, but the two other parameters differ; simple formulas connect them (see Embrechts et al. 1997; Pisarenko and Rodkin 2010). The practical use of the GPD and the GEV is often hampered by the small size of the available samples. The small-sample situation for the GPD was studied by Pisarenko et al. (2017), where the quantile method (QM) was found to be the most efficient. In this paper we concentrate on the GEV.

The GEV distribution function has the form

$$ F(x) = \exp\left\{ -\left[ 1 + \xi\,\frac{x - \mu}{\sigma} \right]^{-1/\xi} \right\}, \qquad 1 + \xi\,\frac{x - \mu}{\sigma} > 0, \tag{1} $$

where the case ξ = 0 is understood as the limit \( F(x) = \exp\left\{ -\exp\left[ -\frac{x - \mu}{\sigma} \right] \right\} \).

If ξ > 0, the tail 1 − F(x) decreases as \( x^{-1/\xi} \). If ξ = 0, (1) is the Gumbel distribution, whose tail decreases exponentially: \( 1 - F(x) \approx \exp\left\{ -\frac{x - \mu}{\sigma} \right\} \) for large x. It should be remarked that maxima of normally distributed samples also lead to ξ = 0. If ξ < 0, the distribution is bounded from above by μ − σ/ξ; we denote this limit as \( M_{\max} = \mu - \sigma/\xi \). Note that the case of power-like decrease (ξ > 0) can be reduced to the case ξ = 0 by a logarithmic transform: if a random variable X has a power (Pareto) distribution \( F(x) = 1 - (h/x)^{b} \), x ≥ h, then log(X) has an exponential distribution. Thus, it is sufficient to consider only the case ξ ≤ 0.
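As an illustration of the three cases, a minimal Python sketch of the distribution function (1) and its density in the (ξ, μ, σ) parameterization used here is given below. The function names are hypothetical, chosen for this sketch. Note that SciPy's `scipy.stats.genextreme` uses the shape parameter c = −ξ, i.e., the opposite sign convention, so parameters must be converted before comparing with it.

```python
import math


def gev_cdf(x: float, xi: float, mu: float, sigma: float) -> float:
    """GEV distribution function, Eq. (1); xi == 0 is the Gumbel limit."""
    z = (x - mu) / sigma
    if xi == 0.0:
        return math.exp(-math.exp(-z))
    t = 1.0 + xi * z
    if t <= 0.0:
        # Outside the support: below the lower end point (xi > 0)
        # or above the upper bound M_max = mu - sigma/xi (xi < 0).
        return 0.0 if xi > 0.0 else 1.0
    return math.exp(-t ** (-1.0 / xi))


def gev_pdf(x: float, xi: float, mu: float, sigma: float) -> float:
    """GEV density, obtained by differentiating Eq. (1) with respect to x."""
    z = (x - mu) / sigma
    if xi == 0.0:
        return math.exp(-z - math.exp(-z)) / sigma
    t = 1.0 + xi * z
    if t <= 0.0:
        return 0.0
    return t ** (-1.0 / xi - 1.0) * math.exp(-t ** (-1.0 / xi)) / sigma


def gev_upper_bound(xi: float, mu: float, sigma: float) -> float:
    """M_max = mu - sigma/xi for xi < 0; the support is unbounded above otherwise."""
    return mu - sigma / xi if xi < 0.0 else math.inf
```

For example, with ξ = −0.2, μ = 6 and σ = 0.5, `gev_upper_bound` returns M_max = 8.5, and `gev_cdf` equals 1 for every x above it.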

Some examples of GEV probability densities with non-positive ξ are shown in Fig. 1.

The most widespread method of parameter estimation is the standard maximum likelihood method. However, its application to the GEV is complicated by several circumstances. First, the support of the distribution function depends on the unknown parameters [see Eq. (1)]. Second, it was shown that the classical optimal properties of the maximum likelihood estimators (MLE) of the GEV parameters hold whenever ξ > −0.5 and are lost for ξ ≤ −0.5. With this in mind, we restrict our consideration to the former case. Practically interesting cases usually meet this condition, so this restriction seems inessential. The third circumstance is the sample size: the MLE is not guaranteed to be the most efficient for small samples. The MLE for the GEV were studied in (Smith and Naylor 1987; Buishand 1989; Smith 1990; Embrechts et al. 1997). The method of probability-weighted moments and the method of moments were suggested by Hosking et al. (1985) and Christopeit (1994), respectively. However, the efficiency of these approaches for small samples still remains uncertain, and this case is very important for many practical situations where the sample size is only 30–100 [see (Pisarenko and Rodkin 2013)]. A particularly troublesome feature of small samples is the strong bias of the MLE and other estimators, so new approaches for this situation are needed. In this paper we study in detail the case of small samples and suggest some new modifications of the statistical moment (SM) estimation procedure based on bootstrap ideas. Pisarenko et al. (2017) found that the quantile method (QM) is more efficient for small samples in the case of the GPD. Here we study the estimation of the GEV parameters for small and moderate (20–500) samples. We have found that in this situation the method of statistical moments (SM) is more efficient than the ML and QM estimates. Below we restrict ourselves to comparing the efficiency of the SM and ML estimates.

2 The ML Estimates for the GEV

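The GEV log-likelihood for a sample \( \left( x_{1}, \ldots, x_{n} \right) \) has the form

$$ \ln L(\xi, \sigma, \mu) = -n \ln \sigma - \left( 1 + \frac{1}{\xi} \right) \sum_{k=1}^{n} \ln\left[ 1 + \xi\,\frac{x_k - \mu}{\sigma} \right] - \sum_{k=1}^{n} \left[ 1 + \xi\,\frac{x_k - \mu}{\sigma} \right]^{-1/\xi}. \tag{2} $$

The ML estimators are the values (ξ, σ, μ) maximizing (2) under the conditions

$$ \sigma > 0, \qquad 1 + \xi\,\frac{x_k - \mu}{\sigma} > 0, \quad k = 1, \ldots, n. \tag{3} $$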
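For concreteness, the log-likelihood (2) can be transcribed directly as follows. This is a minimal sketch with a hypothetical function name, not the estimation code used in the study. Returning −∞ whenever σ ≤ 0 or some 1 + ξ(x_k − μ)/σ ≤ 0 is one simple way to enforce the support conditions; the ML estimates would then be obtained by maximizing this function with a numerical optimizer (not shown).

```python
import math
from typing import Sequence


def gev_loglik(xs: Sequence[float], xi: float, mu: float, sigma: float) -> float:
    """GEV log-likelihood of Eq. (2); -inf outside the admissible region."""
    if sigma <= 0.0:
        return -math.inf
    n = len(xs)
    if xi == 0.0:
        # Gumbel limit of the log-likelihood as xi -> 0.
        zs = [(x - mu) / sigma for x in xs]
        return -n * math.log(sigma) - sum(zs) - sum(math.exp(-z) for z in zs)
    ts = [1.0 + xi * (x - mu) / sigma for x in xs]
    if min(ts) <= 0.0:
        return -math.inf  # some x_k lies outside the support
    return (
        -n * math.log(sigma)
        - (1.0 + 1.0 / xi) * sum(math.log(t) for t in ts)
        - sum(t ** (-1.0 / xi) for t in ts)
    )
```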

3 The SM Estimates for the GEV

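We denote the first three sample moments by \( M_1, M_2, M_3 \):

$$ M_j = \frac{1}{n} \sum_{k=1}^{n} x_k^{j}, \qquad j = 1, 2, 3. \tag{4} $$

Their theoretical analogs \( \Upsilon_j = E X^{j} \) are as follows (Γ is the gamma function):

$$ \Upsilon_1 = \mu - \frac{\sigma}{\xi} - \sigma\,\Gamma(-\xi), $$

$$ \Upsilon_2 = \left( \mu - \frac{\sigma}{\xi} \right)^{2} - 2\sigma \left( \mu - \frac{\sigma}{\xi} \right) \Gamma(-\xi) - 2\,\frac{\sigma^{2}}{\xi}\,\Gamma(-2\xi), $$

$$ \Upsilon_3 = \left( \mu - \frac{\sigma}{\xi} \right)^{3} - 3\sigma \left( \mu - \frac{\sigma}{\xi} \right)^{2} \Gamma(-\xi) - 6\,\frac{\sigma^{2}}{\xi} \left( \mu - \frac{\sigma}{\xi} \right) \Gamma(-2\xi) - 3\,\frac{\sigma^{3}}{\xi^{2}}\,\Gamma(-3\xi). \tag{5} $$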

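Equating (4) and (5), we get a system of three equations for the three unknown parameters. This system is easily solved. In fact, the skewness \( E\left( \frac{X - EX}{\mathrm{std}(X)} \right)^{3} \) depends only on ξ:

$$ E\left( \frac{X - EX}{\mathrm{std}(X)} \right)^{3} = \operatorname{sign}(\xi)\, \frac{\Gamma(1 - 3\xi) - 3\,\Gamma(1 - \xi)\,\Gamma(1 - 2\xi) + 2\,\Gamma^{3}(1 - \xi)}{\left[ \Gamma(1 - 2\xi) - \Gamma^{2}(1 - \xi) \right]^{3/2}}, \tag{6} $$

where E is the mathematical expectation and std is the standard deviation. Equating (6) to the sample skewness

$$ \hat{G} = \frac{M_3 - 3M_1M_2 + 2M_1^{3}}{\left( M_2 - M_1^{2} \right)^{3/2}}, \tag{7} $$

we get an equation for ξ:

$$ \operatorname{sign}(\xi)\, \frac{\Gamma(1 - 3\xi) - 3\,\Gamma(1 - \xi)\,\Gamma(1 - 2\xi) + 2\,\Gamma^{3}(1 - \xi)}{\left[ \Gamma(1 - 2\xi) - \Gamma^{2}(1 - \xi) \right]^{3/2}} = \hat{G}. \tag{8} $$

Equation (8) is solved numerically, which provides the estimate \( \hat{\xi} \) of the parameter ξ. Then we get estimators for the two other parameters:

$$ \hat{\sigma} = \left| \hat{\xi} \right| \left[ \frac{M_2 - M_1^{2}}{\Gamma(1 - 2\hat{\xi}) - \Gamma^{2}(1 - \hat{\xi})} \right]^{1/2}, \tag{9} $$

$$ \hat{\mu} = M_1 - \frac{\hat{\sigma}}{\hat{\xi}} \left[ \Gamma(1 - \hat{\xi}) - 1 \right]. \tag{10} $$

The third moment \( \Upsilon_3 \) is finite for ξ < 1/3. Since we assumed ξ ≤ 0, this condition is satisfied, and we also impose it in our numerical algorithm. Under this condition all three sample moments converge with probability one to the corresponding theoretical moments as n tends to infinity. Thus, the SM estimates (8)–(10) also converge to their true values with probability one.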
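The SM procedure can be sketched in Python as below. This is an illustrative implementation under our own assumptions, not the authors' code: the function names, the bisection root finder, and the search interval −0.99 ≤ ξ ≤ −0.001 are our choices (the closed-form moment expressions are singular at ξ = 0 and lose numerical precision near it), and estimates that would fall outside the interval are clamped to its ends. The sample skewness is computed from central moments, which is algebraically identical to the combination of \( M_1, M_2, M_3 \) used in the text.

```python
import math
from typing import Sequence, Tuple

# Assumed search interval for xi; the moment formulas are singular at xi = 0.
XI_LOW, XI_HIGH = -0.99, -1e-3


def gev_skewness(xi: float) -> float:
    """Theoretical GEV skewness, Eq. (6), valid for xi < 1/3, xi != 0."""
    g1, g2, g3 = (math.gamma(1.0 - k * xi) for k in (1, 2, 3))
    num = g3 - 3.0 * g1 * g2 + 2.0 * g1 ** 3
    return math.copysign(1.0, xi) * num / (g2 - g1 ** 2) ** 1.5


def sm_estimate(xs: Sequence[float]) -> Tuple[float, float, float]:
    """Method-of-moments estimates (xi, sigma, mu) following Eqs. (8)-(10)."""
    n = len(xs)
    m1 = sum(xs) / n
    c2 = sum((x - m1) ** 2 for x in xs) / n  # equals M2 - M1^2
    c3 = sum((x - m1) ** 3 for x in xs) / n  # equals M3 - 3 M1 M2 + 2 M1^3
    g_hat = c3 / c2 ** 1.5                   # sample skewness

    # Solve Eq. (8) by bisection; the skewness increases with xi here.
    lo, hi = XI_LOW, XI_HIGH
    if g_hat <= gev_skewness(lo):
        xi = lo
    elif g_hat >= gev_skewness(hi):
        xi = hi
    else:
        for _ in range(60):
            mid = 0.5 * (lo + hi)
            if gev_skewness(mid) < g_hat:
                lo = mid
            else:
                hi = mid
        xi = 0.5 * (lo + hi)

    g1 = math.gamma(1.0 - xi)
    g2 = math.gamma(1.0 - 2.0 * xi)
    sigma = abs(xi) * math.sqrt(c2 / (g2 - g1 ** 2))  # Eq. (9)
    mu = m1 - sigma / xi * (g1 - 1.0)                  # Eq. (10)
    return xi, sigma, mu
```

Bisection is used because the GEV skewness increases monotonically with ξ on this interval (from −2 at ξ = −1 to about 1.14 in the Gumbel limit), so the root of (8) is unique.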

We used the bootstrap with resampling with replacement (Efron 1979): in a bootstrap sample \( \left( y_{1}, \ldots, y_{n} \right) \), each \( y_k \) was randomly chosen from the initial sample \( \left( x_{1}, \ldots, x_{n} \right) \). We usually took 10,000 bootstrap samples and averaged the resulting estimates. The bootstrap samples are not independent, but the expectation of a sum equals the sum of expectations for dependent terms as well.
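Resampling with replacement and averaging over the bootstrap ensemble can be sketched as follows. The helper name is hypothetical; `estimator` stands for any function returning a tuple of estimates (for instance, an SM estimator of (ξ, σ, μ)). The default of 10,000 resamples follows the text, and the fixed seed is only for reproducibility.

```python
import random
from typing import Callable, List, Sequence, Tuple

Estimator = Callable[[Sequence[float]], Tuple[float, ...]]


def bootstrap_average(
    xs: Sequence[float], estimator: Estimator, n_boot: int = 10_000, seed: int = 0
) -> Tuple[float, ...]:
    """Average `estimator` over bootstrap samples drawn with replacement from xs."""
    rng = random.Random(seed)
    n = len(xs)
    totals: List[float] = []
    for _ in range(n_boot):
        ys = rng.choices(xs, k=n)  # each y_k is drawn uniformly from (x_1, ..., x_n)
        est = estimator(ys)
        if not totals:
            totals = [0.0] * len(est)
        totals = [t + e for t, e in zip(totals, est)]
    return tuple(t / n_boot for t in totals)
```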

We modified the SM by adding a useful procedure. To estimate the std, we divided each bootstrap sample into two equal subsamples \( \left( x_{1}^{(1)}, \ldots, x_{n/2}^{(1)} \right),\; \left( x_{1}^{(2)}, \ldots, x_{n/2}^{(2)} \right) \). Then we obtained the variance estimate

$$ \widehat{\mathrm{var}} = \frac{1}{n} \sum_{k=1}^{n/2} \left( x_{k}^{(1)} - x_{k}^{(2)} \right)^{2}, \tag{11} $$

taking into account that \( E\left( x_{k}^{(1)} - x_{k}^{(2)} \right)^{2} = 2\,\mathrm{var} \), so that \( \widehat{\mathrm{var}} \) is an unbiased estimate of the true variance var. Then we averaged the estimates (11) over all bootstrap ensembles. A similar method was used to estimate the standard deviation of the quantiles \( Q_q(\tau) \) (see below).
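Assuming that (11) averages the squared differences of paired observations from the two halves, as the identity \( E\left( x_{k}^{(1)} - x_{k}^{(2)} \right)^{2} = 2\,\mathrm{var} \) suggests, the estimate can be sketched as below. The function name is hypothetical, and dropping the last observation when n is odd is our own assumption.

```python
from typing import Sequence


def split_half_variance(xs: Sequence[float]) -> float:
    """Variance estimate from two equal subsamples (assumed form of Eq. (11))."""
    half = len(xs) // 2
    first, second = xs[:half], xs[half:2 * half]
    # For independent pairs E(x1 - x2)^2 = 2 var, so dividing the sum of
    # squared differences by 2 * half (= n for even n) gives an unbiased estimate.
    return sum((a - b) ** 2 for a, b in zip(first, second)) / (2 * half)
```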

Similarly, to estimate the significance level of the Anderson–Darling distance (ADD) used in our procedure, we inserted the parameter estimates derived from the first subsample into the DF and used this DF as the “theoretical DF” for the second subsample. Thus, the theoretical DF and the subsample it was tested on were always independent. Of course, there was some loss of efficiency (because the sample size is halved), so our procedure gives a slightly lowered significance value.
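The cross-validation idea (estimate on one half, test on the other) can be sketched as follows. This is an illustration under stated assumptions: `fit` and `make_cdf` are hypothetical user-supplied callables, the ADD is computed by the standard Anderson–Darling computing formula on the ordered sample, and in the usage part an exponential model stands in for the GEV only to keep the sketch short.

```python
import math
from typing import Callable, Sequence, Tuple

Cdf = Callable[[float], float]


def anderson_darling(sample: Sequence[float], cdf: Cdf) -> float:
    """Anderson-Darling distance via the usual computing formula on the ordered sample."""
    xs = sorted(sample)
    n = len(xs)
    eps = 1e-12  # keeps the logarithms finite if F(x) is numerically 0 or 1
    fs = [min(max(cdf(x), eps), 1.0 - eps) for x in xs]
    s = sum(
        (2 * k + 1) * (math.log(fs[k]) + math.log(1.0 - fs[n - 1 - k]))
        for k in range(n)
    )
    return -n - s / n


def cross_validated_add(
    sample: Sequence[float],
    fit: Callable[[Sequence[float]], Tuple[float, ...]],
    make_cdf: Callable[..., Cdf],
) -> float:
    """Estimate parameters on the first half and compute the ADD on the second half."""
    half = len(sample) // 2
    params = fit(sample[:half])
    return anderson_darling(sample[half:2 * half], make_cdf(*params))


# Usage with an exponential model standing in for the GEV (illustration only).
def fit_exponential(xs: Sequence[float]) -> Tuple[float]:
    return (len(xs) / sum(xs),)  # ML estimate of the rate


def exponential_cdf(rate: float) -> Cdf:
    return lambda x: 1.0 - math.exp(-rate * x)
```

Because the DF used for the second half never saw those observations, the resulting ADD can be compared with its null distribution without the optimistic bias caused by inserting estimates from the same data.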

4 Comparison of the MLE with the SM

We carried out the comparison on artificial GEV samples generated with a computer random number generator.

The quality of statistical estimates is characterized by three quantities:

1. The mean-square error \( \mathrm{MSE} = \left[ E\left( \hat{\xi} - \xi \right)^{2} \right]^{1/2} \), where E is the mathematical expectation.
2. The bias \( \mathrm{BIAS} = E\left( \hat{\xi} \right) - \xi \).
3. The standard deviation \( \mathrm{STD} = \left[ E\left( \hat{\xi} - E\left( \hat{\xi} \right) \right)^{2} \right]^{1/2} \).

These statistical characteristics are related by the equation

$$ \mathrm{MSE}^{2} = \mathrm{BIAS}^{2} + \mathrm{STD}^{2}. \tag{12} $$

Thus, knowing two of them one can determine the third. The quality of an estimate is fully characterized by the MSE, but the two other characteristics are sometimes of interest too. In analyzing the estimates of the GEV parameters, we concentrate mainly on the form parameter ξ, since it is the most difficult to estimate; the estimation of σ, and especially of μ, is much easier and more accurate. We estimated ξ within the interval −0.5 < ξ ≤ 0. Since the GEV depends on x only through the standardized term (x − μ)/σ, the statistical properties of the ξ-estimate do not depend on σ and μ, and we can study them for any fixed σ, μ.
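In a simulation study the expectations in MSE, BIAS and STD are replaced by averages over Monte Carlo replicates of the estimate. A minimal sketch (hypothetical function name) follows; with the 1/m normalization used here, the identity MSE² = BIAS² + STD² holds exactly for the empirical quantities, which makes a convenient consistency check.

```python
import math
from typing import Sequence, Tuple


def estimate_quality(estimates: Sequence[float], true_value: float) -> Tuple[float, float, float]:
    """Empirical (MSE, BIAS, STD) of Monte Carlo estimates of one parameter.

    As in the definitions above, MSE is the square root of the mean squared error.
    """
    m = len(estimates)
    mean = sum(estimates) / m
    bias = mean - true_value
    std = math.sqrt(sum((e - mean) ** 2 for e in estimates) / m)
    mse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / m)
    return mse, bias, std
```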

The results of the estimation are collected in Tables 1, 2, 3, 4, 5, and 6. The MSE and absolute bias averaged over −0.5 < ξ ≤ 0 for the maximum likelihood and moment methods are shown in Figs. 2 and 3. We see that ML has a larger average MSE for n below about 100 and a larger average bias over the whole considered range. The estimates of the two other parameters σ, μ behave similarly. Thus, we conclude that for small samples (n ≤ 100) the SM estimates are more efficient than the ML estimates, at least within the ξ-range (−0.5, 0], which is the most interesting for applications [see (Pisarenko and Rodkin 2010, 2013)].

5 The GEV Estimates for Different Seismic Zones

To illustrate the SM method described above, we formed three large samples of seismic magnitudes representing the seismic regime of three different seismotectonic settings: subduction zones, intracontinental seismicity, and mid-ocean ridge zones. Our aim was both to illustrate the application of the SM method and to check the conclusion of Kagan (1999) on the universality of the seismic moment–frequency relation in all seismic areas, except for the specific character of seismicity in mid-ocean ridge zones.

We restricted the depth to 70 km. The moment magnitudes \( m_w \) were taken from the GCMT seismic moment catalog (http://www.globalcmt.org/CMTsearch.html) for the time interval 01.01.1976–12.01.2016. There were 23,379 events with 4.65 ≤ \( m_w \) ≤ 9.08. We removed aftershocks and swarms from the catalog with the Kagan–Knopoff algorithm (Knopoff et al. 1982), which reduced the number of events to 8954 (we call them main shocks). In our method [see (Pisarenko and Rodkin 2010) for details], the catalog time interval is divided into n equal blocks, and the maximum event \( m_k \) is taken in each block. Thus, we get a sample \( \left( m_{1}, \ldots, m_{n} \right) \). According to the theory of extreme events, the distribution of \( m_k \) tends to the GEV as the block length goes to infinity. Thus, we fit a GEV to this sample while varying n, with the block length required to be sufficiently large. We used both the ML and SM methods with the bootstrap modification described above; the number of bootstrap samples was 10,000. The goodness-of-fit was measured by the Anderson–Darling distance ADD between the sample DF \( F_n(x) \) and the theoretical GEV \( F(x) \):

$$ \mathrm{ADD} = n \int_{-\infty}^{\infty} \left[ F_n(x) - F(x) \right]^{2} W(x)\, dF(x) = -n - \frac{1}{n} \sum_{k=1}^{n} (2k - 1) \left[ \ln F(x_k) + \ln\left( 1 - F(x_{n+1-k}) \right) \right], \tag{13} $$

where \( x_{1} < x_{2} < \ldots < x_{n} \) is the ordered sample \( \left( m_{1}, \ldots, m_{n} \right) \) and the weighting function is \( W(x) = \frac{1}{F(x)\left[ 1 - F(x) \right]} \) [see (Anderson and Darling 1954)]. We prefer the ADD to more traditional goodness-of-fit measures such as the Cramér–von Mises distance with \( W(x) \equiv 1 \) or the Kolmogorov–Smirnov distance \( n^{1/2} \max \left| F_n(x) - F(x) \right| \) because its weighting function emphasizes the fit at the tails of the distribution, which is important for our problem. We inserted both the ML and the SM sample estimates of the GEV parameters into (1) and used the resulting function as the theoretical F(x). We then computed the ADD for each bootstrap sample for a given n and averaged it over the bootstrap ensemble. Varying n, we chose the value \( n_0 \) giving the minimum averaged ADD. To estimate the significance level of the minimum ADD, one cannot use the standard ADD tables, since they apply only to an exactly known theoretical DF with no estimated parameters. To avoid this obstacle we used a simulation procedure, repeating our ADD estimation 10,000 times, and estimated the p value as the probability of exceeding the observed ADD under the null hypothesis that the GEV is valid. A hypothesis is usually rejected if the p value is less than 0.1. In our examples, the p values were smaller than the tabulated values but still exceeded 0.1 (see Table 7), which justifies the use of the GEV.
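The block-maxima construction and the choice of \( n_0 \) described above can be sketched as follows. The function names are hypothetical; empty blocks are simply dropped, which is our assumption, and `score` stands for a user-supplied criterion, for example one that fits a GEV to the block maxima and returns the bootstrap-averaged ADD.

```python
from typing import Callable, List, Optional, Sequence


def block_maxima(
    times: Sequence[float],
    mags: Sequence[float],
    t_start: float,
    t_end: float,
    n_blocks: int,
) -> List[float]:
    """Maximum magnitude in each of n_blocks equal time blocks covering [t_start, t_end)."""
    width = (t_end - t_start) / n_blocks
    maxima: List[Optional[float]] = [None] * n_blocks
    for t, m in zip(times, mags):
        if not t_start <= t < t_end:
            continue
        k = min(int((t - t_start) / width), n_blocks - 1)  # index of the block containing t
        if maxima[k] is None or m > maxima[k]:
            maxima[k] = m
    # Blocks without any event are dropped (an assumption of this sketch).
    return [m for m in maxima if m is not None]


def choose_block_count(
    times: Sequence[float],
    mags: Sequence[float],
    t_start: float,
    t_end: float,
    candidates: Sequence[int],
    score: Callable[[List[float]], float],
) -> int:
    """Return the n in `candidates` whose block maxima give the smallest score."""
    return min(
        candidates,
        key=lambda n: score(block_maxima(times, mags, t_start, t_end, n)),
    )
```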

We applied this procedure to the GCMT seismic moment catalog, studying the seismicity in three virtual zones representing intracontinental, subduction-zone, and mid-ocean ridge seismicity. The earthquakes in these three virtual zones are shown in Fig. 4. For each of the three tectonic settings, we chose areas of high seismic activity. The virtual subduction zone includes 18,299 events, the virtual intracontinental zone includes 2116 earthquakes, and the virtual mid-ocean ridge zone includes 4168 events.

The estimates of the GEV parameters for the three virtual zones are collected in Table 7. The corresponding distribution tails 1 − F(x) are shown in Figs. 5, 6, and 7. The optimal sample sizes were n = 66 (subduction), n = 60 (continents), and n = 60 (ridges).

[Figure: distribution tail for the virtual intracontinental seismicity; axes as in Fig. 5]

[Figure: distribution tail for the virtual mid-ocean ridge zone; axes as in Fig. 5]

We used the SM estimation. Standard deviations were estimated by the two-subsample method described above; these std estimates were close to those obtained from the bootstrap ensembles. The p values were also estimated by cross-validation on two subsamples. Note that the p values calculated directly from the bootstrap ensembles (which is not legitimate, because the parameter estimates are inserted into the DF) turned out to be much larger than the cross-validated p values in this case. Thus, the cross-validation modification proved quite valuable.

As seen from Table 7, the form parameter ξ is estimated with a large uncertainty in all three zones: the coefficient of variation \( C_v \) (the ratio of the sample std to the absolute sample mean) varies from 0.29 to 0.78. The scale parameter σ is estimated more confidently, with \( C_v \) ranging from 0.088 to 0.102. The location parameter μ is estimated most accurately: 0.007 ≤ \( C_v \) ≤ 0.011. The quantiles \( Q_q(\tau) \) of the maximum event in the future τ years (see below for details) are also sufficiently stable. The sample quantile \( Q_{0.5}(10) \) (the median of the maximum magnitude in the future 10 years) has 0.014 ≤ \( C_v \) ≤ 0.017, and \( Q_{0.90}(10) \) has 0.021 ≤ \( C_v \) ≤ 0.028. For comparison, the statistic corresponding to the maximum possible event, namely

$$ \hat{M}_{\max} = \hat{\mu} - \hat{\sigma}/\hat{\xi}, \tag{14} $$

is extremely uncertain: its \( C_v \) takes values much greater than one. Thus, its use for practical purposes is highly questionable.
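The arithmetic behind this instability is easy to reproduce. The sketch below (hypothetical function names and illustrative parameter values, not those of Table 7) computes \( C_v \) as defined above, using the sample std with the n − 1 divisor, and shows how \( M_{\max} = \mu - \sigma/\xi \) explodes as ξ approaches zero.

```python
import statistics
from typing import Sequence


def coefficient_of_variation(values: Sequence[float]) -> float:
    """C_v = sample std / |sample mean|, with the n - 1 divisor in the std."""
    return statistics.stdev(values) / abs(statistics.mean(values))


def m_max(xi: float, mu: float, sigma: float) -> float:
    """Upper bound M_max = mu - sigma / xi of a GEV with xi < 0."""
    return mu - sigma / xi


# Illustrative (hypothetical) values: mu = 6.5, sigma = 0.6.
for xi in (-0.3, -0.1, -0.03, -0.01):
    print(f"xi = {xi:6.2f}   M_max = {m_max(xi, 6.5, 0.6):6.1f}")
```

With these illustrative values, M_max grows from 8.5 for ξ = −0.3 to 66.5 for ξ = −0.01.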

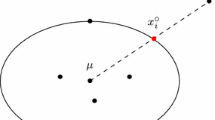

The statistical character of the SM estimates can be illustrated by 2D 68%-confidence domains for the pairs of parameters (ξ, σ) and (σ, μ). The 68% confidence level corresponds to the ±std interval of a Gaussian distribution; in our case the distribution of the estimates is asymmetric, so 68% domains are more adequate than ±std intervals. These domains were derived as follows. First, we found a non-parametric estimate of the 2D density of the pair of estimates in question in the form

$$ f(x, y) = \frac{1}{n\, s_x s_y} \sum_{k=1}^{n} K_{\sigma}\left( \frac{x - x_k}{s_x}, \frac{y - y_k}{s_y} \right), $$

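where n is the size of the artificial sample of estimates, generated using the SM estimates of the parameters in question (we took n = 10,000); \( K_\sigma \) is a 2D symmetric kernel depending on a scale parameter σ (not to be confused with the GEV scale parameter); \( (x_k, y_k) \), \( k = 1, \ldots, n \), is the sample; and \( s_x, s_y \) are the sample standard deviations of X, Y. We used the Gaussian kernel

$$ K_{\sigma}(u, v) = \frac{1}{2\pi\sigma^{2}} \exp\left\{ -\frac{u^{2} + v^{2}}{2\sigma^{2}} \right\}. $$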

The scale parameter σ should be chosen as a compromise between too smooth and too “sharp” estimates of the density; this choice depends on n and on the structure of the 2D sample. We took σ = 0.5, which seems to meet both conditions. Now let D(h) denote the domain on the (x, y)-plane where f(x, y) > h. We select \( h = h_q \) so that the probability of D(h) equals a given number q (0 < q < 1):

$$ P\{ D(h_q) \} = \iint_{D(h_q)} f(x, y)\, dx\, dy = q. $$

The 2D domain \( D(h_q) \) is the q%-confidence domain for the pair (X, Y); it is optimal in the sense that it consists of points of higher probability density than any other q%-domain. We chose q = 68% since in the 1D case this confidence level corresponds to the ±std interval. The 68%-confidence domains for the pairs (ξ, σ) and (σ, μ) are shown in Figs. 8 and 9.
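A minimal sketch of this construction is shown below. It is an illustration under our own assumptions: the function names are hypothetical, the kernel scale (called `bandwidth` to avoid a clash with the GEV σ) defaults to the value 0.5 used in the text, and \( h_q \) is approximated by the (1 − q) quantile of the estimated density at the sample points, so that roughly a fraction q of the points lie in \( D(h_q) \). Evaluating the density costs O(n) per point, so pure Python is practical only for moderate n.

```python
import math
import statistics
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]


def kde2d(points: Sequence[Point], bandwidth: float = 0.5) -> Callable[[float, float], float]:
    """Gaussian-kernel density estimate in coordinates scaled by the sample stds."""
    n = len(points)
    sx = statistics.stdev([p[0] for p in points])
    sy = statistics.stdev([p[1] for p in points])
    norm = n * 2.0 * math.pi * bandwidth ** 2 * sx * sy

    def f(x: float, y: float) -> float:
        total = 0.0
        for xk, yk in points:
            u, v = (x - xk) / sx, (y - yk) / sy
            total += math.exp(-(u * u + v * v) / (2.0 * bandwidth ** 2))
        return total / norm

    return f


def hpd_threshold(points: Sequence[Point], f: Callable[[float, float], float], q: float = 0.68) -> float:
    """Level h_q such that about a fraction q of the points satisfy f(x, y) > h_q."""
    dens = sorted(f(x, y) for x, y in points)
    return dens[int((1.0 - q) * len(dens))]
```

A point (x, y) then belongs to the estimated 68% domain when `f(x, y) > h`, with `h = hpd_threshold(points, f)`.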

We see that the pair (ξ, σ) clearly separates the ridge zone from the two others, whereas the pair (σ, μ) separates all three types of seismic zones. One can say that the parameters σ, μ have resolving power for zone separation, whereas the parameter ξ does not.

These estimates can be interpreted as follows. We cannot differentiate subduction-zone, intracontinental, and mid-ocean ridge seismicity by the ξ values, but these zones are well differentiated by the μ and σ values (Fig. 9). This means that the magnitude distribution functions in these zones are definitely different. Thus, based on the available data (a 40-year catalog), we cannot decide whether the ξ parameter is universal; longer catalogs may allow this question to be resolved.

For a full characterization of the seismic regime, one more parameter has to be added to the magnitude distribution discussed above: the intensity of the flow of earthquakes exceeding some threshold magnitude. This parameter appears to be rather robust. We removed aftershocks and swarms from the catalog to make the earthquake flow close to a Poisson point process. If the catalog of a seismic region covers a time span T, the intensity (the mean number of main events per unit time) can be estimated as \( \hat{\lambda} = n/T \), where n is the number of main events above a selected threshold h. Denoting the true intensity by \( \lambda_0 \), we have \( E(\hat{\lambda}) = \lambda_{0} \). The variance of the Poisson random number n equals its mean value, so

$$ \mathrm{var}(n) = \lambda_0 T, \qquad \mathrm{var}(\hat{\lambda}) = \frac{\lambda_0}{T}. $$

For practical use with large n, we can replace \( \lambda_0 \) by n/T:

$$ \mathrm{std}(\hat{\lambda}) \approx \frac{n^{1/2}}{T}. $$

The ratio \( \mathrm{std}(\hat{\lambda})/E(\hat{\lambda}) \approx 1/n^{1/2} \) characterizes the variability of the estimate. In our estimation of the intensity, this ratio was usually less than 0.05–0.1, which indicates a rather high stability and robustness of the intensity estimate. In our bootstrap experiments, the sample size was taken to be a Poisson random number with parameter n (the actual sample size), which accounts for the variation of the intensity.
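The intensity estimate, its approximate std, and the Poisson-distributed bootstrap sample size can be sketched as follows. The function names are hypothetical; to keep the sketch dependency-free we generate Poisson numbers with Knuth's multiplication method, which is adequate for the moderate means involved here.

```python
import math
import random
from typing import Tuple


def intensity_estimate(n_events: int, span: float) -> Tuple[float, float, float]:
    """Return (lambda_hat, approximate std of lambda_hat, their ratio)."""
    lam = n_events / span              # lambda_hat = n / T
    std = math.sqrt(n_events) / span   # std(lambda_hat) ~ sqrt(n) / T
    return lam, std, std / lam         # the ratio equals 1 / sqrt(n)


def poisson_draw(mean: float, rng: random.Random) -> int:
    """Poisson random number by Knuth's multiplication method."""
    threshold = math.exp(-mean)
    k, p = 0, 1.0
    while True:
        p *= rng.random()  # product of k + 1 uniform numbers
        if p <= threshold:
            return k
        k += 1
```

For example, `intensity_estimate(400, 40.0)` gives λ̂ = 10 events per year with std 0.5, i.e., a ratio of 0.05; a bootstrap sample size could then be drawn as `poisson_draw(n, rng)`.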

6 The Quantiles \( Q_{q}(\tau) \) as a Robust Estimate of the Seismic Hazard

The method described above can be used in seismic hazard assessment. For this purpose the maximum regional magnitude \( m_{\max} \) is usually used [see, e.g., (Kijko 2012)]. It is well known, however, that the \( m_{\max} \) estimate is very unstable [see (Pisarenko and Rodkin 2010)]. In the GEV distribution, the maximum possible magnitude is given by \( M_{\max} = \mu - \sigma/\xi \) [cf. (14)], and its instability is explained by the small (or even very small) denominator ξ in most cases connected with seismic hazard assessment. In practical examples, one can obtain highly implausible values of \( M_{\max} \), such as 20–100. Taking this into account, we have recommended (Pisarenko and Rodkin 2007, 2010) using, instead of \( m_{\max} \), the quantiles \( Q_{q}(\tau) \) of level q of the maximum event in a future time interval τ. If time blocks of length ΔT are used for measuring maxima, then the maximum over τ has the DF \( F^{\tau/\Delta T}(x) \), and the theoretical \( Q_{q}(\tau) \), the root of \( F^{\tau/\Delta T}(Q) = q \), has the form

$$ Q_{q}(\tau) = \mu + \frac{\sigma}{\xi} \left[ \left( -\frac{\Delta T}{\tau} \ln q \right)^{-\xi} - 1 \right]. $$
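Assuming independent block maxima with the DF (1) on blocks of length ΔT, the maximum over a future interval τ has the DF \( F^{\tau/\Delta T} \), so \( Q_q(\tau) \) follows in closed form. A minimal sketch (hypothetical function name; `block` stands for ΔT, in the same time units as τ) is:

```python
import math


def future_max_quantile(q: float, tau: float, block: float,
                        xi: float, mu: float, sigma: float) -> float:
    """Q_q(tau): solves F(Q) ** (tau / block) = q for GEV block maxima."""
    r = -math.log(q) * block / tau       # r = -ln F(Q)
    if xi == 0.0:
        return mu - sigma * math.log(r)  # Gumbel limit
    return mu + sigma / xi * (r ** (-xi) - 1.0)
```

As τ grows with ξ < 0, \( Q_q(\tau) \) approaches \( M_{\max} = \mu - \sigma/\xi \) from below.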

In our estimation, the block length was ΔT = T/\( n_0 \), where \( n_0 \) is the optimal number of blocks and T is the catalog time span. Figures 10, 11, and 12 show the quantiles \( Q_{q}(\tau) \) for our three zones with q = 0.50 (median) and q = 0.90, along with 68% confidence intervals. For long future time intervals τ = 1000 and 5000 years the confidence intervals become very large: for the subduction zone they reach 1.2–1.5 magnitude units (note also that the results for the ridge zone may be less accurate because of the lack of seismic stations near these zones). This shows that it is hardly possible to speak definitely about the maximum magnitudes occurring over such long future times. Quite satisfactory results can be obtained only for substantially shorter time intervals, e.g., τ = 10 years (short-term prediction) and τ = 50 years (the service life of ordinary structures). For τ = 10 years the 68% confidence intervals do not exceed 0.25 magnitude units, and for τ = 50 years they do not exceed 0.5 magnitude units, which is close to the standard error of most hazard assessment schemes.

7 Summary

The most general theoretical approach to characterizing the distribution tail in the range of rare extreme events is based on extreme value theory (EVT). This theory has two main limit distributions: the GEV and the GPD. To use these distributions, one has to estimate their parameters statistically. In many practical situations, however, only limited data are available for this purpose, which makes standard statistical tools such as the method of maximum likelihood (ML) questionable and unreliable. We studied this rather typical situation of small sample size. The GPD was studied in our earlier work (Pisarenko et al. 2017), and the GEV is considered in this paper. For the GPD, Pisarenko et al. (2017) found a good competitor to the ML for small samples: the method of quantiles. Here we have established that, in the same situation, the well-known method of statistical moments (SM) is preferable for the GEV. We tried the method of quantiles too, but for the GEV it turned out to be less efficient than the ML and SM methods. It was shown that for small samples (n < 100) the SM mostly outperforms the other methods.

Using the SM approach, we have examined the conclusion of Kagan (1999) on the universality of the seismic moment–frequency relation and found that three virtual seismic zones (the virtual subduction zone, the virtual intracontinental zone, and the virtual mid-ocean ridge zone) can be discriminated through their μ and σ values. This cannot be done, however, using the parameter ξ alone. The changes in the μ and σ values across these zones correlate well with the changes in the earthquake magnitudes typical of such zones.

We have suggested the quantiles \( Q_{q}(\tau) \) of the maximum event in a future time interval τ as a stable statistical characteristic of the seismic regime and seismic hazard. Their stability is explained by the fact that they are integral characteristics of the distribution law in question, as opposed to the unstable maximum possible magnitude \( M_{\max} \).

References

Anderson, T. W., & Darling, D. A. (1954). A test of goodness-of-fit. Journal of the American Statistical Association, 49, 765–769.

Buishand, T. (1989). Statistics of extremes in climatology. Statistica Neerlandica, 43, 1–30.

Christopeit, N. (1994). Estimating parameters of an extreme value distribution by methods of moments. Journal of Statistical Planning and Inference, 41, 173–186.

Efron, B. (1979). Bootstrap methods: another look at the jackknife. Annals of Statistics, 7(1), 1–26.

Embrechts, P., Klüppelberg, C., & Mikosch, T. (1997). Modelling extremal events (p. 645). Berlin: Springer.

Hosking, J. R., Wallis, J. R., & Wood, E. F. (1985). Estimation of the generalized extreme-value distribution by the method of probability-weighted moments. Technometrics, 27, 251–261.

Kagan, Y. Y. (1999). Universality of the seismic moment-frequency relation. Pure and Applied Geophysics, 155, 537–573.

Kijko, A. (2012). On Bayesian procedure of maximum earthquake magnitude estimation. Research in Geophysics, 2(7), 46–54.

Knopoff, L., Kagan, Y., & Knopoff, R. (1982). b-values for foreshocks and aftershocks in real and simulated earthquake sequences. Bulletin of the Seismological Society of America, 72, 1663–1675.

Pisarenko, V., & Rodkin, M. (2007). Distributions with heavy tails: Application to the disaster analysis. In: Computational Seismology, Issue 38 (p. 240). Moscow: GEOS. (in Russian).

Pisarenko, V., & Rodkin, M. (2010). Heavy-tailed distributions in disaster analysis. Advances in natural and technological hazards research, Volume 30. Dordrecht: Springer.

Pisarenko, V., & Rodkin, M. (2013). Statistical analysis of natural disasters and related losses. Dordrecht: Springer. (Springer briefs in earth sciences).

Pisarenko, V., Rodkin, M., & Rukavishnikova T. (2017). The Estimation of probability of rare extreme events for the case of small samples, methods and examples of analysis of earthquake catalog. Physics of the Earth. (in press).

Smith, R. (1990). Extreme value theory. In W. Ledermann (Ed.), Handbook of Applicable Mathematics, Supplement (pp. 437–472). Chichester: Wiley.

Smith, R., & Naylor, J. (1987). A comparison of maximum likelihood and Bayesian estimators for the three-parameter Weibull distribution. Applied Statistics, 36, 358–369.

Acknowledgements

The work was supported by the Russian Foundation for Basic Research, Grant Nos. 14-05-00866 and 14-05-00776.


Cite this article

Pisarenko, V.F., Rodkin, M.V. The Estimation of Probability of Extreme Events for Small Samples. Pure Appl. Geophys. 174, 1547–1560 (2017). https://doi.org/10.1007/s00024-017-1495-0
