Abstract

Automatic and accurate detection of myocardial infarction (MI) from electrocardiogram (ECG) signals is a challenging task in the diagnosis and treatment of heart disease. We therefore propose a hybrid convolutional neural network and long short-term memory network (CNN-LSTM) deep learning model for accurate, automatic prediction of MI from ECG data. A total of 14,552 ECG beats from the PTB diagnostic database are used to validate model performance. The ECG beat time interval and its gradient value are used directly as features and given as input to the proposed model. Because the classes are imbalanced, the synthetic minority oversampling technique (SMOTE) and Tomek link sampling are used to balance them. Model performance was verified using six evaluation metrics and compared with state-of-the-art methods. Experiments were performed with the CNN and CNN + LSTM models on both the imbalanced and the balanced data, and the highest accuracy, 99.8%, was achieved with the ensemble technique on the balanced dataset.

1 Introduction

Myocardial infarction (MI) is the most common and fatal cardiovascular disease (CVD); it impedes blood flow to the heart muscle through partial or complete blockage of the coronary arteries. The coronary arteries supply oxygenated blood to the cardiac muscle, and if they are obstructed, a segment of the heart muscle may die from lack of blood flow [1]. Damage to or death of cardiac muscle tissue alters the normal cardiac conduction system, resulting in life-threatening arrhythmias that may lead to sudden cardiac arrest. MI may present with symptoms such as chest pain, breathing problems, and unconsciousness, but many individuals experience no symptoms, which is why it is also called a “silent heart attack”. By one estimate, about 22–65% of MIs show no symptoms, which means that they are silent. Patients therefore have no time to prepare themselves, which makes the disease more dangerous, and the mortality rate of MI is very high as a result [2].

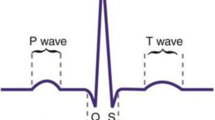

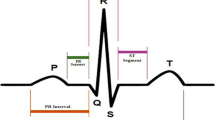

Hence, early detection of MI is essential to provide timely treatment and reduce the mortality rate. Electrocardiogram (ECG) signal analysis is the most appropriate technique for detecting MI at an early stage. Manual analysis, however, is prone to error, and incorrect detection of a cardiac abnormality may cost the life of a patient suffering from MI. The main objective of our work is to assist medical practitioners through automatic, accurate, and quick detection of MI from ECG signals.

The use of machine learning has increased enormously in every field of computing, including medicine and health care [3,4,5]. Over the past few years, the use of deep learning models has risen sharply, especially in medicine and health care. Many researchers, academicians, and scientists have proposed techniques for detecting cardiac arrhythmias such as MI. For automatic classification of arrhythmias into different classes, researchers have used neural networks (NN) [6], support vector machines (SVM) [7], decision trees [8], radial basis functions (RBF) [9], K-nearest neighbors (KNN) [10], and hybrid classifiers [11]. Reasat et al. [12] developed an automated method for detecting inferior MI using an inception-based shallow CNN and attained an average classification accuracy of 84.54%. Liu et al. [13] presented an MI detection algorithm using a convolutional neural network (CNN) on multi-lead ECG signals. Their ML-CNN model uses sub-2D convolution, which can exploit the characteristics of all leads, and it also uses 1D filters to generate locally optimal features. The algorithm was evaluated on the PTB diagnostic ECG dataset and achieved 95.40% sensitivity, 97.37% specificity, and 96% accuracy. Kayikcioglu et al. [14] proposed a technique that categorizes ST segments using time-frequency distribution features to detect MI from multi-lead ECG waveforms. The weighted KNN algorithm gave the best performance, with an average sensitivity of 95.72%, accuracy of 94.23%, and specificity of 98.18% using Choi–Williams frequency distribution (CWFD) features. Liu et al. [15] presented a new hybrid RNN (LSTM)-based method for predicting MI from 12-lead ECG and obtained an overall accuracy of 93.08%.

Despite these extensive efforts, the review of MI detection from ECG signals shows that there is still scope for improvement. Data imbalance is very common in medical signals and images, yet it has not been addressed in the works reviewed above. To resolve the imbalance, we apply SMOTE and Tomek links jointly, together with a hybrid CNN-LSTM model. With this approach, we detect MI on the PTB database with an accuracy of 99.8%.

The rest of this paper is organized as follows: Sect. 2 describes the materials and methods employed in this work. Section 3 explains the proposed methodology, and Sect. 4 presents the results and discussion. Finally, Sect. 5 provides concluding remarks and the future scope of this work.

2 Materials and Methods

2.1 ECG Dataset

The PTB diagnostic database (PTBDB) comprises 549 12-lead ECG recordings from 290 individuals and is publicly available for download from the PhysioNet website [16]. The database contains 148 individuals diagnosed with MI in 368 recordings and 52 healthy controls in 80 recordings; the remaining records are diagnosed with seven other types of arrhythmia [16]. For this work, we used ECG lead-II signals of only two classes, myocardial infarction (MI) and healthy ECG beats (N). The total number of ECG beats used for MI detection is 14,552, comprising 4046 healthy beats (N) and 10,506 MI beats; in other words, MI beats make up 72% and normal beats only 28% of the beats used. The ECG signals are filtered, preprocessed, segmented, and downsampled into beats of 187 samples, with zero padding so that all beats have the same size, and this dataset is publicly available in a Kaggle repository [17, 18]. Samples of normal and myocardial infarction (MI) beats are visualized in Fig. 1.

2.2 Data Balancing

If the two classes differ greatly in the number of samples, the data is said to be imbalanced. In imbalanced data, one class (the majority) dominates or has more influence than the other classes [19].

Imbalanced datasets therefore tend to degrade classifier performance, because classifiers are mainly designed for balanced class problems. A further issue is that the effect of the imbalance may not be visible in the overall classification accuracy, so the performance must also be validated with other evaluation metrics.
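To illustrate why overall accuracy can hide the imbalance, the short sketch below evaluates a hypothetical trivial classifier that labels every beat as MI, using the beat counts reported in Sect. 2.1. The classifier and the helper name `basic_metrics` are illustrative assumptions, not part of the proposed method.

```python
# Beat counts reported for the PTB subset used in this work.
N_MI, N_NORMAL = 10_506, 4_046


def basic_metrics(tp, fn, tn, fp):
    """Return (accuracy, recall, specificity) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return accuracy, recall, specificity


# Hypothetical classifier that predicts "MI" (the positive class) for every beat:
# all MI beats are true positives, all normal beats are false positives.
acc, rec, spec = basic_metrics(tp=N_MI, fn=0, tn=0, fp=N_NORMAL)
print(f"accuracy={acc:.3f} recall={rec:.3f} specificity={spec:.3f}")
```

Such a classifier reaches roughly 72% accuracy while never recognizing a single normal beat, which is why class-wise metrics such as specificity are reported alongside accuracy.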

Many methods have been suggested to overcome data imbalance; in this work, we employ SMOTE and Tomek links jointly to resample the dataset.

2.3 SMOTE and Tomek Links Sampling

The synthetic minority oversampling technique (SMOTE) is applied to oversample the minority class, which in this dataset is the normal (N) class. Instead of simply generating multiple copies, it creates synthetic samples close to existing minority samples. SMOTE is based on the K-nearest neighbors algorithm: for a minority sample, it selects a nearest neighbor from among the minority samples and then generates a new sample by linear interpolation between the two [20].
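The interpolation step can be sketched in a few lines of plain Python. This is a minimal illustration of the SMOTE idea under simple assumptions (Euclidean distance, uniform random choice of neighbor); the function name `smote` and the toy points are hypothetical, and this is not the implementation used in the experiments.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic samples by interpolating between a minority
    sample and one of its k nearest minority-class neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base sample (excluding itself)
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy 2D minority samples (illustrative only; real beats have 187 samples each).
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new=6, k=2)
```

Each synthetic point lies on the line segment between two existing minority samples, so it stays inside the region already occupied by that class.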

Tomek links are an undersampling technique that removes majority-class instances lying on the class borderline. A Tomek link is a pair of samples from different classes that are each other's nearest neighbors. The process is repeated, and deleting the majority samples in these pairs opens a gap between the majority and minority classes along the borderline [21].
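As a rough sketch of this step, the code below finds pairs of samples from different classes that are mutual nearest neighbors and drops the majority-class member of each pair. The function names and the toy one-dimensional data are illustrative assumptions; a single pass is shown rather than the repeated procedure.

```python
import math


def nearest(i, points):
    """Index of the sample closest to points[i] (excluding i itself)."""
    return min((j for j in range(len(points)) if j != i),
               key=lambda j: math.dist(points[i], points[j]))


def tomek_links(points, labels):
    """Pairs (i, j) of mutual nearest neighbours with different labels."""
    links = []
    for i in range(len(points)):
        j = nearest(i, points)
        if i < j and labels[i] != labels[j] and nearest(j, points) == i:
            links.append((i, j))
    return links


def remove_majority_in_links(points, labels, majority):
    """Drop the majority-class member of every Tomek link (one pass)."""
    drop = {idx for pair in tomek_links(points, labels)
            for idx in pair if labels[idx] == majority}
    keep = [i for i in range(len(points)) if i not in drop]
    return [points[i] for i in keep], [labels[i] for i in keep]


# Toy data: the MI sample at 1.0 and the N sample at 1.05 form a Tomek link.
points = [[0.0], [0.1], [1.0], [1.05], [2.0]]
labels = ["MI", "MI", "MI", "N", "N"]
clean_points, clean_labels = remove_majority_in_links(points, labels, majority="MI")
```

Only the borderline majority sample is removed, which widens the margin between the two classes without touching samples far from the boundary.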

2.4 Convolutional Neural Network (CNN) Model

The CNN was initially designed for pattern recognition tasks in computer vision, such as edge detection, segmentation, and object detection, but because of its versatility it is now used in almost every application area. The commonly used layers of a CNN are the convolution (Conv) layer, the ReLU layer, the pooling layer, and batch normalization. The convolution layer is the first layer of the CNN; it performs the convolution operation and is configured by the kernel size, the number of filters, and the padding. ReLU, which stands for “rectified linear unit”, is an activation function that introduces nonlinearity into the network. Two main types of pooling operation are available, max pooling and average pooling; pooling reduces the number of parameters in training, helps avoid overfitting, and increases the efficiency of the network. Batch normalization normalizes the input of every layer using the mean and variance of the current batch during training.
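The following minimal sketch shows how convolution, ReLU, and max pooling act on a short 1D signal. It is a plain-Python illustration with a hypothetical signal and kernel, not the layers of the proposed network, and it omits batch normalization.

```python
def conv1d_valid(signal, kernel, stride=1):
    """Sliding dot product without padding (the 'convolution' used in CNNs)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(0, len(signal) - k + 1, stride)]


def relu(values):
    """Rectified linear unit: negative activations are set to zero."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, size=2):
    """Non-overlapping max pooling; a trailing incomplete window is dropped."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, -1.0, 0.0]
kernel = [-1.0, 0.0, 1.0]  # hypothetical filter that responds to rising slopes

conv_out = conv1d_valid(signal, kernel)   # [3.0, 1.0, -3.0, -3.0, 0.0]
features = max_pool1d(relu(conv_out))     # [3.0, 0.0]
```

The seven input samples shrink to five after the unpadded convolution and to two after pooling, which shows how pooling reduces the size of the feature map passed to later layers.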

2.5 Long Short-Term Memory Network (LSTM)

The LSTM network is a widely used and often preferred DL model for sequential and time series data. It provides a solution to the short-term memory (STM) problem, which is why it is named “long short-term memory”. The basic concepts of LSTM operation are its gates and states: the forget gate, input gate, cell state, output gate, and hidden state [22]. In the forget gate ($f_t$), the present input ($x_t$) is concatenated with the previous hidden state ($h_{t-1}$) and passed through the sigmoid activation function ($\sigma$) to produce an output between 0 and 1. With weight $W_f$ and bias $b_f$, the forget gate is mathematically expressed as follows:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{1}$$

The input gate ($i_t$) is mainly used for updating the memory, or cell state. In this gate too, the sigmoid function is applied to the concatenation of the current input ($x_t$) and the previous hidden state ($h_{t-1}$), which is expressed by the equation

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{2}$$

The cell state ($C_t$) is also known as the memory state, and its update takes place in three steps. In the first step, the previous cell state ($C_{t-1}$) is multiplied pointwise by the forget gate value $f_t$ (from Eq. 1). In the second step, the candidate vector ($\tilde{C}_t$) is multiplied pointwise by the input gate vector $i_t$ (from Eq. 2). In the final step, these two values are added together to create the current cell state ($C_t$), which is fed to the next stage and is given by the equation:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{3}$$

The output gate ($o_t$) is calculated in the same way as the input and forget gates but with different weight and bias values; here too, the sigmoid activation function provides an output between 0 and 1. The output gate operation is mathematically given by

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{4}$$

Only the hidden state ($h_t$) and the cell state are passed to the next LSTM stage; all the gates are used for regulating and computing the values of these states. The hidden state is the pointwise product of the output gate value (from Eq. 4) and the cell state value (from Eq. 3) after applying the tanh activation, as presented in Eq. 5:

$$h_t = o_t \odot \tanh(C_t) \tag{5}$$

The overall visualization of a single-stage LSTM network, including all gates and states, is shown in Fig. 2.
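The gate and state updates described in this section can be sketched as a single LSTM step. For readability the sketch assumes a scalar input and scalar states, so each gate has one weight for the previous hidden state, one for the input, and one bias; the parameter values and the `params` layout are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step for scalar input and states.

    params maps a gate name ("f", "i", "c", "o") to a tuple (w_h, w_x, b),
    the weights applied to h_prev and x_t and the bias of that gate.
    """
    def pre_activation(gate):
        w_h, w_x, b = params[gate]
        return w_h * h_prev + w_x * x_t + b

    f_t = sigmoid(pre_activation("f"))        # forget gate
    i_t = sigmoid(pre_activation("i"))        # input gate
    c_tilde = math.tanh(pre_activation("c"))  # candidate cell value
    c_t = f_t * c_prev + i_t * c_tilde        # new cell state
    o_t = sigmoid(pre_activation("o"))        # output gate
    h_t = o_t * math.tanh(c_t)                # new hidden state
    return h_t, c_t


# Hypothetical parameters shared by all gates, applied to a short toy sequence.
params = {gate: (0.5, 0.5, 0.0) for gate in "fico"}
h, c = 0.0, 0.0
for x in [0.1, 0.4, 0.9, 0.3]:
    h, c = lstm_step(x, h, c, params)
```

Only `h` and `c` are carried from one step to the next, which mirrors the statement that the gates exist solely to regulate these two states.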

3 Proposed Methodology

This section presents the methodology employed for the prediction of myocardial infarction (MI) from ECG signals using CNN, CNN-LSTM, and an ensemble technique. The proposed methodology includes two deep learning models with different architectures, a CNN model and a CNN-LSTM model, followed by an ensemble technique in the final stage.

In this work, automatic MI detection is combined with resampling of the ECG beats. The ECG signals are first preprocessed by filtering and segmentation, and then the time interval and gradient of these time series data are calculated. In the next step, the CNN model and the CNN-LSTM model are trained directly on the preprocessed imbalanced training data. In the final step, the imbalanced dataset is balanced using the SMOTE-Tomek link method, and the CNN and CNN-LSTM models are trained on the balanced data. The predictions of the two models are combined into an ensemble by averaging them, and the final model performance is obtained. Instead of a data augmentation technique, resampling with SMOTE-Tomek links is applied to generate more samples of the minority class. Since the ECG signal is nonlinear time series data, we preferred LSTM in combination with CNN over other deep learning models.
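One plausible reading of the feature construction is that each beat is paired with its sample-wise gradient, giving a 187 × 2 array per beat. The sketch below follows that reading and assumes a central-difference gradient with one-sided differences at the ends; the exact gradient method is not stated in the text, and the toy beat is hypothetical.

```python
def gradient(beat):
    """Central differences in the interior, one-sided differences at the ends."""
    n = len(beat)
    grad = [0.0] * n
    grad[0] = beat[1] - beat[0]
    grad[-1] = beat[-1] - beat[-2]
    for i in range(1, n - 1):
        grad[i] = (beat[i + 1] - beat[i - 1]) / 2.0
    return grad


def make_features(beat):
    """Stack the beat and its gradient column-wise: one [amplitude, slope] row per sample."""
    return [[amplitude, slope] for amplitude, slope in zip(beat, gradient(beat))]


# Hypothetical zero-padded beat of 187 samples with a single triangular peak.
beat = [0.0] * 187
beat[89], beat[90], beat[91] = 0.5, 1.0, 0.5
features = make_features(beat)  # 187 rows, 2 columns
```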

3.1 Data Splitting (Distribution)

The data distribution also plays an important role in prediction tasks using classifiers. In this work, a total of 14,552 ECG beats from the PTB database, consisting of 10,506 MI beats and 4046 normal beats, are used for MI detection. The data is split 80:20 into train and test sets, i.e., 11,641 beats for training and 2911 beats for testing the model. The train set is further divided into training and validation parts in the ratio 80:20. Finally, 9312 ECG beats are used for learning the proposed models, and 2329 beats are used for validating the training performance.
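The reported split sizes can be reproduced with simple arithmetic, assuming the training share is rounded down at each 80:20 split (the rounding rule is an assumption; the text gives only the resulting counts).

```python
def split_counts(n_total, train_fraction=0.8):
    """Return (n_first, n_second) for a split, rounding the first part down."""
    n_first = int(n_total * train_fraction)
    return n_first, n_total - n_first


n_train_full, n_test = split_counts(14_552)   # train+validation vs. test
n_train, n_val = split_counts(n_train_full)   # training vs. validation
print(n_train_full, n_test, n_train, n_val)
```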

3.2 Proposed CNN Model

A 21-layer CNN deep learning model is proposed to detect MI from ECG signals accurately and automatically; its layer-wise structure is illustrated in Fig. 3. The input is the 1D ECG signal of sample size (187 × 1), which is reshaped to (187 × 2 × 1) to make it suitable for the 2D convolutional (Conv2D) layer. The complete structure is designed in four stages, where the first two stages contain a convolutional layer followed by batch normalization, ReLU nonlinearity, and max pooling with a dropout (0.5) layer. Throughout the model, the stride is constant (s = 1), and the Conv2D kernel size is (f = 3, 1) in every stage except the first, where (f = 3, 2) is used. The second stage, with 128 filters, is repeated to extract deep features, and the third and fourth stages contain no max pooling layer, so that the feature map is maintained and does not shrink further. The number of filters in the Conv2D layers from the first to the fourth stage is 256, 128, 64, and 64, respectively.

3.3 Proposed CNN-LSTM Model

A 19-layer hybrid CNN-LSTM model is designed for detecting MI from ECG signals; its layer-by-layer structure is shown in Fig. 3. The complete design is built up in four segments. The first two segments are exactly the same as in the CNN model, but the third and fourth segments are modified to obtain a better result. In this model too, the kernel size (f = 3, 1) is kept constant for the Conv2D layers except in the first and fourth layers, where (f = 3, 2) and (f = 6, 1) are used to match the feature size to the subsequent layers. The stride is one (s = 1) and the padding is valid throughout the model. The third and fourth segments are entirely different from those of the CNN model, as indicated by the red box; as in the CNN model, the third segment contains no max pooling layer, to avoid further reduction of the feature size. The fourth segment consists of a reshape followed by the LSTM layer, which is combined with the CNN module. The first to third segments represent the CNN part, and the fourth segment is added to embed the LSTM layer; the model is completed with an FC + softmax layer, as in the CNN model.
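With valid padding and a stride of one, each convolution shrinks the feature map by the kernel size minus one along each axis. The sketch below applies that rule to a 187 × 2 input, as stated for the CNN model, to show why a (3, 2) kernel fits only the first layer; the helper name is hypothetical, and the pooling layers between convolutions are omitted because their sizes are not given in the text.

```python
def conv2d_valid_shape(height, width, kernel, stride=1):
    """Output (height, width) of a 2D convolution with 'valid' padding."""
    k_h, k_w = kernel
    return ((height - k_h) // stride + 1, (width - k_w) // stride + 1)


# First layer: the (3, 2) kernel spans both input columns and collapses the width to 1.
first = conv2d_valid_shape(187, 2, kernel=(3, 2))
# Later layers: the width is already 1, so only (n, 1) kernels fit.
second = conv2d_valid_shape(*first, kernel=(3, 1))
print(first, second)
```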

3.4 Performance and Parameter Evaluation

There are several ways to evaluate the performance of a classifier; we use the confusion matrix and compute several evaluation metrics from it to verify the classification results. Six performance evaluation metrics are used to validate classifier performance: recall (Re%), specificity (Sp%), precision (Pr%), accuracy (Acc), F1-score (F1%), and classification error rate (CER%).
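The six metrics can be computed from the four confusion-matrix counts as sketched below. The standard definitions are used, with the classification error rate taken as one minus the accuracy, which is an assumption since the text does not give the formulas; the counts in the example are hypothetical and are not results from this work.

```python
def evaluation_metrics(tp, fn, tn, fp):
    """Return the six metrics (as fractions) from binary confusion-matrix counts."""
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    cer = 1.0 - accuracy  # classification error rate (assumed definition)
    return {"Re": recall, "Sp": specificity, "Pr": precision,
            "Acc": accuracy, "F1": f1, "CER": cer}


# Hypothetical counts for illustration only.
scores = evaluation_metrics(tp=90, fn=10, tn=80, fp=20)
for name, value in scores.items():
    print(f"{name}: {100 * value:.2f}%")
```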

4 Result and Discussion

The experiment was performed on a total of 14,552 ECG beats from the PTB database, consisting of 10,506 MI beats and 4046 normal beats. The models were validated in a Python environment using the TensorFlow and Keras libraries on Kaggle with GPU support. A laptop with 8 GB RAM, a 1 TB HDD, a Core i5 processor, and an NVIDIA graphics card was used for the experimentation. The hyperparameters used during training were the Adam optimizer, a batch size of 128, a momentum of 0.9, a learning rate of 1e-3, 100 epochs, and a decay of 1e-7. Predictions were made on all three datasets (training, validation, and test); the training and validation data had already been used for learning, whereas the test data was never exposed to the training process.
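To give a sense of how small the stated decay is, the sketch below assumes it is the time-based decay of the legacy Keras optimizers, where the learning rate at a given iteration is the initial rate divided by (1 + decay × iteration). That interpretation is an assumption, as is the use of the 9312 training beats of the original split to count iterations.

```python
import math


def decayed_lr(initial_lr, decay, iteration):
    """Time-based decay: lr / (1 + decay * iteration)."""
    return initial_lr / (1.0 + decay * iteration)


steps_per_epoch = math.ceil(9312 / 128)   # batches per epoch on the original training split
total_steps = steps_per_epoch * 100       # 100 epochs
final_lr = decayed_lr(1e-3, 1e-7, total_steps)
print(steps_per_epoch, total_steps, final_lr)
```

Under these assumptions the learning rate falls by less than 0.1% over the whole run, so training proceeds at an almost constant rate of 1e-3.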

The first experiment was performed on the imbalanced dataset using the CNN and CNN + LSTM models. Detection was carried out on the test dataset, and the predictions of the two models were combined into an ensemble to obtain the final result. The accuracy and loss history of training and validation on the original dataset using the CNN model are visualized in Fig. 4, and the training history of the proposed CNN + LSTM model is shown in Fig. 5. Both figures show that both proposed models learned well, with a slight improvement in the training history of the CNN + LSTM model.

Training was performed on the original (imbalanced) dataset, and after training the models were evaluated on the test dataset (2911 beats). The prediction results show that the ensemble of the two models performs better than either the CNN or the CNN + LSTM model alone. The overall accuracy achieved using the CNN, CNN + LSTM, and ensemble models is 99.3%, 99.5%, and 99.6%, respectively. The performance evaluation metrics of each model are presented in Table 1.
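Averaging the predictions of the two models can be sketched as follows. The class-probability values are hypothetical, the class order is assumed to be (N, MI), and the final label is taken as the class with the highest averaged probability, which is one common way to realize such an ensemble.

```python
def ensemble_average(probs_a, probs_b):
    """Element-wise mean of two models' class-probability outputs."""
    return [[(a + b) / 2.0 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(probs_a, probs_b)]


def predict(probs):
    """Index of the most probable class for each sample."""
    return [max(range(len(row)), key=row.__getitem__) for row in probs]


# Hypothetical softmax outputs for three beats, columns = (N, MI).
cnn_probs = [[0.60, 0.40], [0.45, 0.55], [0.20, 0.80]]
cnn_lstm_probs = [[0.70, 0.30], [0.65, 0.35], [0.10, 0.90]]

labels = predict(ensemble_average(cnn_probs, cnn_lstm_probs))  # [0, 0, 1]
```

For the second beat the two models disagree, and the averaged probabilities follow the more confident model.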

The second experiment was performed on the training dataset balanced by the SMOTE + Tomek link resampling method. The 11,641 ECG beats from both classes were resampled to 16,798 beats, with 8399 beats in each class. After balancing, both models were trained on this dataset, and predictions were made only on the reserved test dataset. The training performance of the CNN and CNN + LSTM models on the balanced dataset is depicted in Figs. 6 and 7, respectively.

The prediction results in Table 2 demonstrate that both the class (Cl) accuracy and the overall accuracy improve compared to the imbalanced dataset. The overall accuracy obtained using the CNN, CNN + LSTM, and ensemble models on the balanced dataset is 99.5%, 99.7%, and 99.8%, respectively. Hence, balancing the dataset with the SMOTE + Tomek link sampling method increases not only the overall accuracy but also the prediction values of each individual class. The results were also compared with state-of-the-art techniques to validate the performance of the proposed model, as presented in Table 3.

5 Conclusion and Future Scope

A hybrid CNN-LSTM-based deep learning model for automatic and accurate detection of myocardial infarction (MI) was proposed in this paper. A total of 14,552 ECG beats from the PTB diagnostic dataset were used to verify model performance. Two models, CNN and CNN + LSTM, were proposed for distinguishing MI beats from normal beats in ECG signals. The SMOTE + Tomek link resampling method was used to balance the data classes. The models were tested on 2911 ECG beats in addition to the training and validation datasets, and the ensemble of the two models obtained 99.8% overall accuracy, which compares favorably with the state-of-the-art techniques considered.

In future work, a generative adversarial network (GAN) will be used for data enrichment, and a larger dataset will be used with a faster deep learning model to reduce the computation time.

References

Acharya UR, Fujita H, Oh SL, Hagiwara Y, Tan JH, Adam M (2017) Application of deep convolutional neural network for automated detection of myocardial infarction using ECG signals. Inf Sci (Ny) 415–416:190–198. https://doi.org/10.1016/j.ins.2017.06.027

Lui HW, Chow KL (2018) Multiclass classification of myocardial infarction with convolutional and recurrent neural networks for portable ECG devices. Inform Med Unlocked 13:26–33. https://doi.org/10.1016/j.imu.2018.08.002

Singh PK, Kar AK, Singh Y, Kolekar MH, Tanwar S (2019) Recent innovations in computing. Springer Nature, Switzerland AG

Singh PK, Panigrahi BK, Suryadevara NK, Sharma SK, SAK (eds) (2020) Proceedings of ICETIT 2019. Springer International Publishing, Cham. https://doi.org/10.1007/978-3-030-30577-2

Singh PK, Pawłowski W, Tanwar S, Kumar N, Rodrigues JJPC, Obaidat MS (eds) (2020) Proceedings of first international conference on computing, communications, and cyber-security (IC4S 2019). Springer Singapore, Singapore. https://doi.org/10.1007/978-981-15-3369-3

Banerjee S, Mitra M (2013) ECG beat classification based on discrete wavelet transformation and nearest neighbour classifier. J Med Eng Technol 37:264–272. https://doi.org/10.3109/03091902.2013.794251

Khalaf AF, Owis MI, Yassine IA (2015) A novel technique for cardiac arrhythmia classification using spectral correlation and support vector machines. Expert Syst Appl 42:8361–8368. https://doi.org/10.1016/j.eswa.2015.06.046

Alarsan FI, Younes M (2019) Analysis and classification of heart diseases using heartbeat features and machine learning algorithms. J Big Data 6:1–15. https://doi.org/10.1186/s40537-019-0244-x

Singh R, Mehta R, Rajpal N (2018) Efficient wavelet families for ECG classification using neural classifiers. In: Procedia computer science, pp 11–21. Elsevier B.V. https://doi.org/10.1016/j.procs.2018.05.054

Savostin AA, Ritter DV, Savostina GV (2019) Using the K-Nearest neighbors algorithm for automated detection of myocardial infarction by electrocardiogram data entries. Pattern Recognit Image Anal 29:730–737. https://doi.org/10.1134/S1054661819040151

Hernandez-Matamoros A, Fujita H, Escamilla-Hernandez E, Perez-Meana H, Nakano-Miyatake M (2020) Recognition of ECG signals using wavelet based on atomic functions. Biocybern Biomed Eng 40:803–814. https://doi.org/10.1016/j.bbe.2020.02.007

Reasat T, Shahnaz C (2018) Detection of inferior myocardial infarction using shallow convolutional neural networks. In: 5th IEEE Region 10 humanitarian technology conference 2017, R10-HTC, pp 718–721. Institute of Electrical and Electronics Engineers Inc. https://doi.org/10.1109/R10-HTC.2017.8289058

Liu W, Zhang M, Zhang Y, Liao Y, Huang Q, Chang S, Wang H, He J (2018) Real-time multilead convolutional neural network for myocardial infarction detection. IEEE J Biomed Heal Inform 22:1434–1444. https://doi.org/10.1109/JBHI.2017.2771768

Kayikcioglu İ, Akdeniz F, Köse C, Kayikcioglu T (2020) Time-frequency approach to ECG classification of myocardial infarction. Comput Electr Eng 84. https://doi.org/10.1016/j.compeleceng.2020.106621

Liu W, Wang F, Huang Q, Chang S, Wang H, He J (2020) MFB-CBRNN: a hybrid network for MI DETECTION using 12-Lead ECGs. IEEE J Biomed Heal Inform 24:503–514. https://doi.org/10.1109/JBHI.2019.2910082

Bousseljot R, Kreiseler D, Schnabel AN (1995) The PTB diagnostic ECG database. Biomed Tech 40, 317. https://doi.org/10.13026/C28C71

Fazeli S (2020) ECG heartbeat categorization dataset. https://www.kaggle.com/shayanfazeli/heartbeat. Last accessed 2020/08/21

Kachuee M, Fazeli S, Sarrafzadeh M (2018) ECG heartbeat classification: a deep transferable representation. In: Proceedings of the 2018 IEEE international conference on healthcare informatics, ICHI 2018, pp 443–444. https://doi.org/10.1109/ICHI.2018.00092

Somasundaram A, Reddy US (2016) Data imbalance: effects and solutions for classification of large and highly imbalanced data. In: Proceeding 1st international conference on research in engineering, computers and technology (ICRECT 2016), pp 28–34

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) SMOTE: synthetic minority over-sampling technique

Younes Charfaoui: resampling to properly handle imbalanced datasets in machine learning. https://heartbeat.fritz.ai/resampling-to-properly-handle-imbalanced-datasets-in-machine-learning-64d82c16ceaa. Last accessed 2020/08/28

Phi M (2020) Illustrated guide to LSTM’s and GRU’s: a step by step explanation. https://towardsdatascience.com/illustrated-guide-to-lstms-and-gru-s-a-step-by-step-explanation-44e9eb85bf21. Last accessed 2020/08/23

Kora P (2017) ECG based myocardial Infarction detection using Hybrid Firefly algorithm. Comput Methods Programs Biomed 152:141–148. https://doi.org/10.1016/j.cmpb.2017.09.015

Dohare AK, Kumar V, Kumar R (2018) Detection of myocardial infarction in 12 lead ECG using support vector machine. Appl Soft Comput J 64:138–147. https://doi.org/10.1016/j.asoc.2017.12.001

Liu W, Huang Q, Chang S, Wang H, He J (2018) Multiple-feature-branch convolutional neural network for myocardial infarction diagnosis using electrocardiogram. Biomed Signal Process Control 45:22–32. https://doi.org/10.1016/j.bspc.2018.05.013

Sharma M, Tan RS, Acharya UR (2018) A novel automated diagnostic system for classification of myocardial infarction ECG signals using an optimal biorthogonal filter bank. Comput Biol Med 102:341–356. https://doi.org/10.1016/j.compbiomed.2018.07.005

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Rai, H.M., Chatterjee, K., Dubey, A., Srivastava, P. (2021). Myocardial Infarction Detection Using Deep Learning and Ensemble Technique from ECG Signals. In: Singh, P.K., Wierzchoń, S.T., Tanwar, S., Ganzha, M., Rodrigues, J.J.P.C. (eds) Proceedings of Second International Conference on Computing, Communications, and Cyber-Security. Lecture Notes in Networks and Systems, vol 203. Springer, Singapore. https://doi.org/10.1007/978-981-16-0733-2_51

DOI: https://doi.org/10.1007/978-981-16-0733-2_51

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-0732-5

Online ISBN: 978-981-16-0733-2

eBook Packages: Engineering, Engineering (R0)