Abstract

The simple or classical ordination methods mostly used by palaeoecologists and palaeolimnologists are principal component analysis (PCA) and correspondence analysis (CA), and, more rarely, principal coordinate analysis (PCoA) and non-metric multidimensional scaling (NMDS). These methods are reviewed in a geometric framework. They mostly differ by the types of distances among objects that they allow users to preserve during ordination. Canonical ordination methods are generalisations of the simple ordination techniques; the ordination is constrained to represent the part of the variation of a table of response variables (e.g., species abundances) that is maximally related to a set of explanatory variables (e.g., environmental variables). Canonical redundancy analysis (RDA) is the constrained form of PCA whereas canonical correspondence analysis (CCA) is the constrained form of CA. Canonical ordination methods have also been proposed that look for linear and polynomial relationships between the dependent and explanatory variables. Tests of statistical significance using permutation tests can be obtained in canonical ordination, just as in multiple regression. Canonical ordination serves as the basis for variation partitioning, an analytical procedure widely used by palaeolimnologists.


Keywords

- Canonical correspondence analysis

- Correspondence analysis

- Non-metric multidimensional scaling

- Ordination

- PCNM analysis

- Principal component analysis

- Principal coordinate analysis

- Redundancy analysis

- Variation partitioning

- Principal coordinates of neighbour matrices

Introduction

To ordinate is to arrange objects in some order (Goodall 1954). Ordination procedures are well-known to ecologists who wish to represent and summarise their observations along one, two, or a few axes. The simplest case is the ordination of sites along a single variable representing an environmental gradient (e.g., lake-water pH), or along a sampling variable such as depth in a sediment core or the estimated age of the levels in the core. Ordination diagrams are simply scatter plots of the objects (e.g., core levels) on two or sometimes three axes according to the values taken by the objects along the variables comprising the axes.

When the data are multivariate, the problem is to either choose two pertinent variables for plotting the observations, or to construct synthetic variables that represent, in some optimal mathematical way, the set of variables under study; these synthetic variables may then be used as the major axes for the ordination. The data matrix subjected to analysis may contain a set of environmental variables, or the multi-species composition of the assemblage under study. In such cases, we will say that we are performing an ordination in a space of reduced dimensionality, or an ordination in reduced space, since the original data-set has many more dimensions (variables) than the ordination graph we want to produce.

This chapter describes the choices that have to be made in order to obtain a meaningful and useful ordination diagram. It will also show how the methods of canonical ordination, which are widely used to relate species to environmental data in palaeolimnology, are extensions within the framework of regression modelling of two classical ordination methods. Some forms of ordination analysis, classical or canonical, are widely used by palaeolimnologists as tools in the handling, summarisation, and interpretation of palaeolimnological data, either modern assemblages or core fossil assemblages (Smol 2008). The various types of use of ordination analysis in palaeolimnology are summarised in Table 8.1. No attempt is made here to provide a comprehensive review of palaeolimnological applications of ordination methods. Emphasis is placed instead on basic concepts and the critical methodological questions that arise in the use of ordination methods in palaeolimnology. Birks (2008, 2010) provides a short overview of the range of ordination methods currently available and of the general use and value of ordination techniques in ecology and palaeoecology. Borcard et al. (2011) discuss classical (unconstrained) and canonical (constrained) ordinations and their implementation with R.

Basic Concepts in Simple Ordination

The simple ordination methods mostly used by (palaeo)ecologists and (palaeo)limnologists are principal component analysis (PCA), correspondence analysis (CA) and its relative, detrended correspondence analysis (DCA), principal coordinate analysis (PCoA), and non-metric multidimensional scaling (NMDS) (Prentice 1980, 1986). These methods will be reviewed here in a geometric framework. They mostly differ in the types of distances among objects that they attempt to preserve in the ordination.

Simple ordination is used in palaeolimnology to address two main types of questions. (1) In a study of sediment cores, ordination is used to identify the main gradients in the species assemblage data, which are multivariate by nature, and to interpret these gradients using species loadings on the ordination axes (see Birks 2012b: Chapter 11). Ordinations are also used as graphical templates to draw groups of sampling units obtained by clustering, as well as trajectories of the multivariate species data through time to estimate the magnitude and rates of change in species assemblage composition (Birks and Gordon 1985; Jacobson and Grimm 1986; Birks 1992, 2012b, Chapter 11). (2) Ordination of modern objects from various locations is also used as a basis on which fossil objects can be projected as passive objects for comparison between modern and fossil assemblages (Lamb 1984; Birks and Gordon 1985; Birks 1992, 2012b, Chapter 11).

Starting with a data-set, several choices have to be made before obtaining an ordination (Table 8.2). These choices will be described in some detail because a good understanding of their implications is likely to produce more informative and useful ordination diagrams. Users of ordination methods should not let themselves be guided blindly by the implicit choices that are inherent to some methods or computer programs. The critical decisions to be made are the following:

- Do the data (environmental or assemblage data) need to be transformed prior to ordination analysis?

- Which distance measure should be preserved by the ordination method?

- Should a metric or non-metric ordination method be used?

- How many axes are required?

These decisions will now be discussed in some detail.

Transformation of Physical Data

Physical, chemical, or geological variables are often used as explanatory variables in palaeolimnological studies. They may also be used directly to obtain ordinations of the objects or sites on the basis of these variables (Table 8.1). Three problems may require pre-processing of the data before ordination: (a) if the distributions of the data along the variables are not symmetrical, skewness may need to be reduced; (b) if the variables are not all expressed in the same physical units, they need to be transformed to eliminate their physical units; (c) multistate qualitative variables (e.g., rare, common, abundant) may require, in some cases, transformation into dummy variables prior to ordination. Possible solutions to these three problems are as follows.

1. An ordination in which some of the points are clumped in a big mass while other points are stretched across the diagram is not very useful or informative. It is better to have the points scattered in a fairly homogeneous fashion across the diagram, with perhaps some clumping in the centre of the diagram, or in some areas of higher density if the data are clumped; the latter case may suggest that a cluster analysis might produce a more interesting and useful multivariate description of the data (see Legendre and Birks 2012: Chapter 7).

The data should be initially examined using univariate methods, such as computing skewness statistics, or drawing frequency histograms (see Juggins and Telford 2012: Chapter 5). Depending on the type of asymmetry found, various transformations can be applied, such as square root, double square root, or log transformation. General methods, such as the Box-Cox transformations, are available to find automatically the most efficient normalising transformation; see Sokal and Rohlf (1995), Legendre and Legendre (1998), and Juggins and Telford (2012: Chapter 5). These are often referred to as normalising transformations because removing the asymmetry is an important step towards obtaining normally-distributed data. We emphasise, however, that the objective prior to ordination is not to obtain a multinormal distribution of the data, but simply to reduce the asymmetry of the distributions. Tests of normality may be useful to screen the data and identify the variables whose distributions should be examined more closely in order to find, if possible, a skewness-reducing transformation (see Juggins and Telford 2012: Chapter 5).

Scientists often worry about transforming variables. Is it permissible? The original physical unit in which an environmental variable is measured imposes a scale on the data that is as unlikely to be related to the response of the species to this variable as any other scale that we may impose by applying a nonlinear transformation to the data. In order to relate a physical variable to the response of the species, physiological studies would be required to determine the most appropriate transformation. Short of having such information available to them, users of ordination methods are left with statistical criteria only, such as skewness of the distributions, to decide on the transformation of physical variables.

2. In most cases, physical variables are not expressed in the same physical units; some may be in cm, others in μg L⁻¹, in °C, or in pH units. Such variables need to be transformed to eliminate the physical dimensions before being used together to produce an ordination. Note that log-transformed data are dimensionless because logarithms are exponents of a base and exponents are dimensionless. There are two main methods for eliminating physical dimensions: standardisation and ranging. They both eliminate the physical units by dividing the original data by a value possessing the same physical units.

- Standardise variable y to z:

$$ z_i = \frac{y_i - \bar{y}}{s_y} $$ (8.1)

where \( y_i \) is the original value of variable y for object i, \( \bar{y} \) is the mean value of y, and \( s_y \) is the estimated standard deviation of y; \( z_i \) is the standardised value of variable y for object i. Variable standardisation is available in the decostand() function of the vegan R-language package (method = "standardize").

- Relative-scale variables: range variable y to y′ using the equation

$$ y'_i = \frac{y_i}{y_{\max}} $$ (8.2)

where \( y'_i \) is the ranged value of y for object i and \( y_{\max} \) is the maximum value of y in the whole data table. This form of ranging is used for relative-scale variables, where 'zero' means the absence of the characteristic of interest. This transformation is available in the decostand() function of the vegan R-language package (method = "max").

- Interval-scale variables: range variable y to y′ using the equation

$$ y'_i = \frac{y_i - y_{\min}}{y_{\max} - y_{\min}} $$ (8.3)

where \( y'_i \) is the ranged value of y for object i, whereas \( y_{\min} \) and \( y_{\max} \) are, respectively, the minimum and maximum values of y in the whole data table. This form of ranging is used for interval-scale variables, in which the value 'zero' is chosen arbitrarily and whose range may include negative values. Temperatures in °C are an example of an interval-scale variable. This transformation is available in the decostand() function of the vegan R-language package (method = "range").

Variables may also be standardised in order to bring their variances to unity. It is preferable to apply skewness-reducing transformations before standardising the data. If the opposite is done, standardisation would produce negative values which are incompatible with square root, log, or Box-Cox transformations. Ranging, which brings all values of a variable into the interval [0,1], may be used before or after applying a skewness-reducing transformation.

3. Multistate qualitative variables may be handled in different ways. If the ordination is to be obtained through a method requiring the prior calculation of a distance matrix (PCoA, NMDS), resemblance coefficients are available that are capable of handling mixtures of quantitative and qualitative variables, as discussed in the section below on Choice of an appropriate distance function and in Simpson (2012, Chapter 15). If, on the other hand, the ordination is to be obtained through a method that will implicitly preserve the Euclidean distance among objects (PCA, redundancy analysis (RDA)), the qualitative data must be transformed in some way prior to being subjected to the ordination method because a qualitative variable is not a metric or measurement variable; in other words, the distance between states 1 and 3 of a qualitative variable is not twice as large as the distance between states 1 and 2. Variables from which Euclidean distances are calculated must be metric (or quantitative). The transformation can be done in one of two ways:

- A qualitative variable possessing p states can be recoded into p binary (0–1) variables, called dummy variables, using one dummy variable for each state of the qualitative variable. The coding method is described in Legendre and Legendre (1998: Chapter 1). Dummy variables can be used in PCA or RDA only if the program provides a possibility for weighting the variables. Indeed, if the variables are standardised or ranged prior to the ordination, a qualitative variable recoded into p dummy variables occupies p dimensions in the full-dimensional representation of the data. Each dummy variable should be downweighted to have a weight of 1/p in the analysis while the other quantitative variables have a weight of 1. The program canoco (ter Braak 1988a; ter Braak and Šmilauer 2002) offers the possibility of specifying weights for variables in PCA or RDA.

- Redundancy analysis (RDA) or canonical correspondence analysis (CCA) can be used to find a transformation of a qualitative multistate variable into a quantitative variable which is optimal with respect to a table of assemblage composition data (Legendre and Legendre 1998: p. 597). This is done as follows. Recode the qualitative variable into dummy variables as in the previous paragraph. Remove one of the dummy variables because, with all p dummy variables, the variance-covariance matrix of the dummy variables is singular and cannot be inverted; this is an obligatory step for explanatory variables in multiple regression and canonical analysis. For RDA or CCA, use the table of species composition data as the response matrix and the table of dummy variables as the explanatory matrix. If the first canonical ordination axis explains most of the canonical variance, it can be used in further analyses as a quantitative representation of the original qualitative variable. [Note: in the program canoco, the last of a set of dummy variables is automatically removed from the calculations. In the same way, the last state of a 'factor' variable is removed from the calculations in the rda() and cca() functions of the vegan R-language package, but the centroids of all states are drawn in the biplot.]
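The pre-processing steps for physical data described above (standardisation, the two forms of ranging, and dummy coding of a qualitative variable) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the vegan or canoco implementation: the function names and the small data vectors are hypothetical, and the sample standard deviation (divisor n − 1) is assumed for the standardisation.

```python
import math

def standardise(y):
    """Centre on the mean and divide by the sample standard deviation (n - 1)."""
    n = len(y)
    mean = sum(y) / n
    s = math.sqrt(sum((v - mean) ** 2 for v in y) / (n - 1))
    return [(v - mean) / s for v in y]

def range_relative(y):
    """Relative-scale ranging: divide every value by the maximum."""
    y_max = max(y)
    return [v / y_max for v in y]

def range_interval(y):
    """Interval-scale ranging: subtract the minimum, divide by the range."""
    y_min, y_max = min(y), max(y)
    return [(v - y_min) / (y_max - y_min) for v in y]

def dummy_code(states):
    """Recode a qualitative variable with p states into p binary columns."""
    levels = sorted(set(states))
    table = [[1 if s == lev else 0 for lev in levels] for s in states]
    return levels, table

# Hypothetical example data (not taken from the chapter).
temperature = [-2.0, 0.0, 4.0, 8.0]        # interval scale, degrees C
depth_cm = [10.0, 20.0, 40.0]              # relative scale
abundance_class = ["rare", "common", "abundant", "common"]

z = standardise(temperature)               # mean 0, variance 1
ranged_t = range_interval(temperature)     # values in [0, 1]
ranged_d = range_relative(depth_cm)        # largest value becomes 1
levels, dummies = dummy_code(abundance_class)
dummy_weight = 1.0 / len(levels)           # weight 1/p suggested in the text

print(z)
print(ranged_t)
print(ranged_d)
print(levels, dummies, dummy_weight)
```

The interval-scale ranging handles the negative temperature without difficulty, whereas dividing by the maximum alone would leave a negative ranged value; this is why the two kinds of variables are treated separately.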


Transformation of Assemblage Composition Data

Assemblage composition data (species abundances) for short gradients, which contain relatively few zeros, can be ordinated by PCA or RDA: in that case the Euclidean distance is a meaningful measure of the ecological distance among the observations. These variables may, however, have asymmetric distributions because species tend to have exponential growth when conditions are favourable. This well-known fact has been embedded in the theory of species-abundance models; see He and Legendre (1996, 2002) for a synthetic view of these models. To reduce the asymmetry of the distributions, species abundance y may be transformed to y' by taking the square root or the fourth root (which is equivalent to taking the square root twice), or by using a log transformation:

$$ y' = \log(y + c) $$ (8.4)

where y is the species abundance and c is a constant. Usually, c = 1 in species log transformations, so that an abundance y = 0 is transformed into y′ = log(0 + 1) = 0 for any logarithmic base. Michael Palmer (http://www.okstate.edu/artsci/botany/ordinate/) does not recommend this transformation for absolute biomass data because it gives different values depending on the mass units (e.g., g or kg) used to record biomass. Another transformation that reduces the asymmetry of heavily skewed abundance data is the one proposed by Anderson et al. (2006). The abundance data \( y_{ij} \) are transformed to a logarithmic scale that makes allowance for zeros: \( y'_{ij} = \log_{10}(y_{ij}) + 1 \) when \( y_{ij} > 0 \), or \( y'_{ij} = 0 \) when \( y_{ij} = 0 \). Hence, for \( y_{ij} \) = {0, 1, 10, 100, 1000}, the transformed values are {0, 1, 2, 3, 4}. This transformation is available in the decostand() function of the vegan package (method = "log").
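As a small worked illustration of the two log-type transformations just described, the following plain-Python sketch applies log(y + c) with c = 1 and the zero-preserving transformation attributed above to Anderson et al. (2006). The function names are hypothetical, and base 10 is assumed for the second transformation, as in the example values given in the text.

```python
import math

def log_shift(y, c=1.0):
    """y' = log(y + c); with c = 1 an abundance of 0 stays 0."""
    return math.log(y + c)

def log_with_zeros(y):
    """y' = log10(y) + 1 when y > 0, and y' = 0 when y = 0."""
    return math.log10(y) + 1.0 if y > 0 else 0.0

abundances = [0, 1, 10, 100, 1000]
shifted = [log_shift(y) for y in abundances]
zero_preserving = [log_with_zeros(y) for y in abundances]

print(shifted)
print(zero_preserving)
```

The second list reproduces the sequence 0, 1, 2, 3, 4 quoted in the text (up to floating-point rounding), so each tenfold increase in abundance adds one unit while absences remain at zero.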

Community composition data sampled along long ecological gradients typically contain many zero values because species are known to have generally unimodal responses along environmental gradients (ter Braak and Prentice 1988). The proportion of zeros is greater when the sampling has crossed a long environmental gradient. This is because species have optimal niche conditions, where they are found in greater abundances along environmental variables (see Juggins and Birks 2012: Chapter 14). The optimum for a species along an environmental variable corresponds to the centre of its theoretical Hutchinsonian niche along that factor. These propositions are discussed in most texts of community ecology and, in particular, in Whittaker (1967) and ter Braak (1987a). Because ordination methods use a distance function as their metric to position the objects with respect to one another in ordination space, it is important to make sure that the chosen distance is meaningful for the objects under study. Choosing an appropriate distance measure means trying to model the relationships among the sites appropriately for the assemblage composition data at hand. The choice of a distance measure is an ecological, not a statistical decision.

An example presented in Legendre and Legendre (1998: p. 278) shows that the Euclidean distance function may produce misleading results when applied to assemblage composition data. Alternative (dis)similarity functions described in the next section, which were specifically designed for assemblage composition data, do not have this drawback. In some cases, distance measures that are appropriate for assemblage composition data can be obtained by a two-step procedure: first, transform the species abundance data in some appropriate way, as described below; second, compute the Euclidean distance among the sites using the transformed data (Fig. 8.1). This also means that assemblage composition data transformed in these ways can be directly used to compute ordinations by the Euclidean-based methods of PCA or RDA; this approach is called transformation-based PCA (tb-PCA) or transformation-based RDA (tb-RDA). The transformed data matrices can also be used in K-means partitioning, which is also a Euclidean-based method (see Legendre and Birks 2012: Chapter 7). Legendre and Gallagher (2001) have shown that the following transformations can be used in that context (some of these transformations have been in use in community ecology and palaeoecology for a long time, e.g., by Noy-Meir et al. (1975) and by Prentice (1980)).

Fig. 8.1 The role of the data transformations as a way of obtaining a given distance function. The example uses the chord distance (Modified from Legendre and Gallagher 2001)

1. Transform the species abundances from each object (sampling unit) into a vector of length 1, using the equation:

$$ y'_{ij} = \frac{y_{ij}}{\sqrt{\sum_{j=1}^{p} y_{ij}^2}} $$ (8.5)

where \( y_{ij} \) is the abundance of species j in object i. This equation, called the 'chord transformation' in Legendre and Gallagher (2001), is one of the transformations available in the program canoco (Centring and standardisation for 'samples': Standardise by norm) and in the decostand() function of the vegan R-language package (method = "normalize"). If we compute the Euclidean distance

$$ D_{\text{Euclidean}}(\mathbf{x}'_1, \mathbf{x}'_2) = \sqrt{\sum_{j=1}^{p} \left( y'_{1j} - y'_{2j} \right)^2} $$ (8.6)

between two rows \( (\mathbf{x}'_1, \mathbf{x}'_2) \) of the transformed data table, the resulting value is identical to the chord distance (Eq. 8.18) that could be computed between the rows of the original (untransformed) species abundance data table (Fig. 8.1). The interest of this transformation is that the chord distance, proposed by Orlóci (1967) and Cavalli-Sforza and Edwards (1967), is one of the distances recommended for species abundance data. Its value is maximum and equal to √2 when two objects have no species in common. As a consequence, after the chord transformation, the assemblage composition data are suitable for PCA or RDA, which are methods preserving the Euclidean distance among the objects.

2. In the same vein, if the data \( [y_{ij}] \) are subjected to the 'chi-square distance transformation' as follows:

$$ y'_{ij} = \sqrt{y_{++}} \, \frac{y_{ij}}{y_{i+} \sqrt{y_{+j}}} $$ (8.7)

where \( y_{i+} \) is the sum of the row (object) values, \( y_{+j} \) is the sum of the column (species) values, and \( y_{++} \) is the sum of values of the whole data table, then Euclidean distances computed among the rows of the transformed data table \( [y'_{ij}] \) are equal to chi-square distances (Eq. 8.19) among the rows of the original, untransformed data table. The chi-square distance, preserved in correspondence analysis, is another distance often applied to species abundance data. Its advantage or disadvantage, depending upon the circumstances, is that it gives higher weight to the rare than to the common species. The chi-square distance transformation is available in the decostand() function of the vegan R-language package (method = "chi.square").

3. The data can be transformed into profiles of relative species abundances through the equation:

$$ y'_{ij} = \frac{y_{ij}}{y_{i+}} $$ (8.8)

This is a widespread method of data standardisation prior to analysis, especially when the sampling units are not all of the same size, as is commonly the case in palaeolimnology. Data transformed in that way are called compositional data. In palaeolimnology and community ecology, the species assemblage is considered to represent the response of the community to environmental, historical, or other types of forcing; the variation of any single species has no clear interpretation. Compositional data are used because ecologists and palaeoecologists believe that the vectors of relative proportions of species can lead to meaningful interpretations. Many fossil or recent assemblage data-sets are presented as profiles of relative abundances, for example, in palynology and palaeolimnology, or as percentages if the values \( y'_{ij} \) are multiplied by 100. Computing Euclidean distances among rows (objects) of a data table transformed in this way produces 'distances among species profiles' (Eq. 8.20). The transformation to profiles of relative abundances is available in the decostand() function of the vegan R-language package (method = "total"). Statistical criteria investigated by Legendre and Gallagher (2001) show that this is not the best transformation; the Hellinger transformation (next paragraph) is preferable. Log-ratio analysis has been proposed as a way of analysing compositional data (Aitchison 1986). This method is, however, only appropriate for data that do not contain many zeros (ter Braak and Šmilauer 2002).

4. A modification of the species profile transformation is the Hellinger transformation:

$$ y'_{ij} = \sqrt{\frac{y_{ij}}{y_{i+}}} $$ (8.9)

Computing Euclidean distances among rows (objects) of a data table transformed in this way produces a matrix of Hellinger distances among sites (Eq. 8.21). The Hellinger distance, described in more detail below, is a measure recommended for clustering or ordination of species abundance data (Prentice 1980; Rao 1995). It has good statistical properties as assessed by the criteria investigated by Legendre and Gallagher (2001). The Hellinger transformation is available in the decostand() function of the vegan R-language package (method = ‘hellinger’).

Before using these transformations, one may apply a square root or log transformation to the species abundances in order to reduce the asymmetry of the species distributions (Table 8.2). The transformations described above can also be applied to presence-absence data. The chord and Hellinger transformations appear to be the best for general use. The chi-square distance transformation is interesting when one wants to give more weight to the rare species; this is the case when the rare species are considered to be good indicators of special ecological conditions. We will come back to the use of these transformations in later sections. Prior to these transformations, any of the standardisations investigated by Noy-Meir et al. (1975), Prentice (1980), and Faith et al. (1987) may also be used if the study justifies it: species adjusted to equal maximum abundances or equal standard deviations, sites standardised to equal totals, or both.
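The two-step procedure described in this section (transform the abundances, then compute Euclidean distances among the transformed rows) can be illustrated with a minimal plain-Python sketch of the chord, chi-square, profile, and Hellinger transformations. The function names and the three-site abundance table are hypothetical, and the sketch assumes that no row or column of the table sums to zero; in practice one would normally use the decostand() function mentioned above.

```python
import math

def chord(table):
    """Scale each row (object) to a vector of length 1."""
    out = []
    for row in table:
        norm = math.sqrt(sum(v * v for v in row))
        out.append([v / norm for v in row])
    return out

def profiles(table):
    """Relative abundances: divide each value by its row sum."""
    return [[v / sum(row) for v in row] for row in table]

def hellinger(table):
    """Square root of the relative abundances."""
    return [[math.sqrt(v / sum(row)) for v in row] for row in table]

def chi_square(table):
    """Chi-square distance transformation using row, column and grand totals."""
    col_sums = [sum(col) for col in zip(*table)]
    total = sum(col_sums)
    return [[math.sqrt(total) * v / (sum(row) * math.sqrt(cs))
             for v, cs in zip(row, col_sums)]
            for row in table]

def euclidean(a, b):
    """Euclidean distance between two rows."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical table: sites 1 and 2 share no species;
# site 3 has the same composition as site 1 at double the abundance.
sites = [[10, 5, 0, 0],
         [0, 0, 3, 4],
         [20, 10, 0, 0]]

c, h, x = chord(sites), hellinger(sites), chi_square(sites)
print(euclidean(c[0], c[1]))   # chord distance, no species in common
print(euclidean(c[0], c[2]))   # chord distance, identical proportions
print(euclidean(h[0], h[1]))   # Hellinger distance, no species in common
print(euclidean(x[0], x[2]))   # chi-square distance, identical proportions
```

In this example the chord and Hellinger distances between the two sites with no species in common both reach their maximum of √2, while sites with identical proportions are at distance zero whatever their total abundances; raw Euclidean distances on the untransformed table would not behave in this way.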

Choice of an Appropriate Distance Function

Most statistical and numerical analyses assume some form of distance relationship among the observations. Univariate and multivariate analyses of variance and covariance, for instance, assume that the Euclidean distance is the appropriate way of describing the relationships among objects; likewise for methods of multivariate analysis such as K-means partitioning and PCA (see Legendre and Birks 2012: Chapter 7). It is the responsibility of the scientist doing the analyses to either make sure that this assumption is met by the data, or to model explicitly relationships of other forms among the objects by computing particular distance functions and using them in appropriate methods of data analysis.

Many similarity or distance functions have been used by ecologists; they are reviewed by Legendre and Legendre (1998: Chapter 7), Borcard et al. (2011: Chapter 3) and other authors. We will only mention here those that are most commonly used in the ecological, palaeoecological, and palaeolimnological literature.

1. The Euclidean distance (Eq. 8.6) is certainly the most widely used coefficient to analyse tables of physical descriptors, although it is not always the most appropriate. This is the coefficient preserved by PCA and RDA among the rows of the data matrix (objects), so that if the Euclidean distance is considered appropriate to the data, these methods can be applied directly to the data matrix, perhaps after one of the transformations described above, to obtain a meaningful ordination.

2. For physical or chemical data, an alternative to the Euclidean distance is to compute the Gower (1971) coefficient of similarity, followed by a transformation of the similarities to distances. The Gower coefficient is particularly important when one is analysing a table containing a mixture of quantitative and qualitative variables. In this coefficient, the overall similarity is the mean of the similarities computed for each descriptor j (see Simpson 2012: Chapter 15). Each descriptor is treated according to its own type. The partial similarity \( s_j \) between objects \( \mathbf{x}_1 \) and \( \mathbf{x}_2 \) for a quantitative descriptor j is computed as follows:

$$ s_j(\mathbf{x}_1, \mathbf{x}_2) = 1 - \frac{\left| y_{1j} - y_{2j} \right|}{R_j} $$ (8.10)

where \( R_j \) is the range of the values of descriptor j across all objects in the study. The partial similarity \( s_j \) is a value between 0 (completely dissimilar) and 1 (completely similar). For a qualitative variable j, \( s_j = 1 \) if objects \( \mathbf{x}_1 \) and \( \mathbf{x}_2 \) have the same state of the variable and \( s_j = 0 \) if they do not. The Gower similarity between \( \mathbf{x}_1 \) and \( \mathbf{x}_2 \) is obtained from the equation:

$$ S(\mathbf{x}_1, \mathbf{x}_2) = \sum_{j=1}^{p} \frac{s_j(\mathbf{x}_1, \mathbf{x}_2)}{p} $$ (8.11)

where p is the number of variables. The variables may receive different weights in this coefficient; see Legendre and Legendre (1998: p. 259) for details. See also the note at the end of this section about implementations in R.

For presence-absence of physical descriptors, one may use the simple matching coefficient:

$$ S(\mathbf{x}_1, \mathbf{x}_2) = \frac{a + d}{a + b + c + d} = \frac{a + d}{p} $$ (8.12)

where a is the number of descriptors for which the two objects are coded 1, d is the number of descriptors for which the two objects are coded 0, whereas b and c are the numbers of descriptors for which the two objects are coded differently; p is the total number of physical descriptors in the table.

There are different ways of transforming similarities (S) into distances (D). The most commonly used equations are:

$$ D(\mathbf{x}_1, \mathbf{x}_2) = 1 - S(\mathbf{x}_1, \mathbf{x}_2) $$ (8.13)

and

$$ D(\mathbf{x}_1, \mathbf{x}_2) = \sqrt{1 - S(\mathbf{x}_1, \mathbf{x}_2)} $$ (8.14)

For the coefficients described in Eqs. 8.11, 8.12, 8.15, 8.16 and 8.17, Eq. 8.14 is preferable for transformation prior to ordination because the distances so obtained produce a fully Euclidean representation of the objects in the ordination space, except possibly in the presence of missing values; Eq. 8.13 does not guarantee such a representation (Legendre and Legendre 1998: Table 7.2). The concept of Euclidean representation of a distance matrix is explained below in the section on Euclidean or Cartesian space, Euclidean representation. Equation 8.14 is used for transformation of all binary coefficients computed by the dist.binary() function of the ade4 R-language package.
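The Gower and simple matching coefficients, followed by the square-root transformation of similarities into distances (Eq. 8.14), can be sketched as follows. This is a minimal plain-Python illustration with equal weights for all descriptors and no missing values; the function names, the descriptor types, the ranges, and the example objects are all hypothetical.

```python
import math

def gower_similarity(x1, x2, kinds, ranges):
    """Mean of the partial similarities over all descriptors.

    kinds[j] is "quant" or "qual"; ranges[j] is the range R_j of a
    quantitative descriptor over all objects (ignored for "qual").
    """
    partial = []
    for a, b, kind, r in zip(x1, x2, kinds, ranges):
        if kind == "quant":
            partial.append(1.0 - abs(a - b) / r)
        else:
            partial.append(1.0 if a == b else 0.0)
    return sum(partial) / len(partial)

def simple_matching(x1, x2):
    """(a + d) / p for two binary (0/1) descriptor vectors."""
    agreements = sum(1 for u, v in zip(x1, x2) if u == v)
    return agreements / len(x1)

def similarity_to_distance(s):
    """D = sqrt(1 - S), the transformation recommended before ordination."""
    return math.sqrt(1.0 - s)

# Hypothetical objects described by pH, temperature and substrate type.
kinds = ["quant", "quant", "qual"]
ranges = [4.0, 10.0, None]          # assumed ranges over the whole study
lake_1 = [6.5, 12.0, "sand"]
lake_2 = [7.5, 12.0, "silt"]

s_gower = gower_similarity(lake_1, lake_2, kinds, ranges)
s_match = simple_matching([1, 0, 1, 0], [1, 1, 0, 0])

print(s_gower, similarity_to_distance(s_gower))
print(s_match, similarity_to_distance(s_match))
```

Here the partial similarities are 0.75 (pH), 1 (temperature) and 0 (substrate), so the Gower similarity is their mean, 1.75/3; the simple matching example has one joint presence and one joint absence out of four descriptors, giving 0.5.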

3. For species presence-absence data, the Jaccard coefficient:

$$ S(\mathbf{x}_1, \mathbf{x}_2) = \frac{a}{a + b + c} $$ (8.15)

and the Sørensen coefficient of similarity:

$$ S(\mathbf{x}_1, \mathbf{x}_2) = \frac{2a}{2a + b + c} $$ (8.16)

are widely used. In these coefficients, a is the number of species that the two objects have in common, b is the number of species found at site or sample 1 but not at site or sample 2, and c is the number of species found at site or sample 2 but not at site or sample 1. In order to obtain a fully Euclidean representation of the objects in the ordination space, these similarities should be transformed into distances using Eq. 8.14.

The Ochiai (1957) coefficient:

$$ S(\mathbf{x}_1, \mathbf{x}_2) = \frac{a}{\sqrt{(a + b)(a + c)}} $$ (8.17)

deserves closer attention on the part of palaeoecologists since it is monotonically related to the binary form of the widely used chord and Hellinger distances described below (Eqs. 8.18 and 8.21).

For ordination analysis, the three similarity coefficients described above (Eqs. 8.15, 8.16, and 8.17) can be transformed into Euclidean-embeddable distances using the transformation D(x 1,x 2) = √(1 − S(x 1,x 2)) (Eq. 8.14). These distances will not produce negative eigenvalues in principal coordinate analysis and will thus be entirely represented in Euclidean space.

An interesting similarity coefficient among sites, applicable to presence-absence data, has been proposed by the palaeontologists Raup and Crick (1979): the coefficient is the probability of the data under the hypothesis of no association between objects. The number of species in common in two sites, a, is tested for significance under the null hypothesis H0 that there is no association between sites x 1 and x 2 because each site in a region (or each level in a core) receives a random subset of species from the regional pool (or the whole sediment core). The association between objects, estimated by a, is tested using permutations. The probability (p) that the data conform to the null hypothesis is used as a measure of distance, or (1 − p) as a measure of similarity. The permutation procedure of Raup and Crick (1979) was re-described by Vellend (2004). Legendre and Legendre (2012: coefficient S 27) describe two different permutational procedures that can be used to test the significance of the number of species in common between two sites (i.e., the statistic a). These procedures correspond to different null hypotheses. Birks (1985) discusses the application of this and other probabilistic similarity measures in palaeoecology.

4. Several coefficients have been described by ecologists for the analysis of quantitative assemblage composition data. The property that these coefficients share is that the absence of any number of species from the two objects under comparison does not change the value of the coefficient. This property avoids producing high similarities, or small distances, between objects from which many species are absent. The Euclidean distance function, in particular, is not appropriate for assemblage composition data obtained from long environmental gradients because the data table then contains many zeros, and the objects that have many zeros in common have small Euclidean distance values; this is considered to be an inappropriate answer in most ecological and palaeoecological problems. This question is discussed at length in many texts of quantitative community ecology. The coefficients most widely used by ecologists for species abundance data tables are:

1. The chord distance, occasionally called the cosine-θ distance:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \sqrt{\sum\limits_{j = 1}^p \left( \frac{y_{1j}}{\sqrt{\sum\limits_{j = 1}^p y_{1j}^2}} - \frac{y_{2j}}{\sqrt{\sum\limits_{j = 1}^p y_{2j}^2}} \right)^2} = \sqrt{2\left( 1 - \frac{\sum\limits_{j = 1}^p y_{1j} y_{2j}}{\sqrt{\sum\limits_{j = 1}^p y_{1j}^2 \sum\limits_{j = 1}^p y_{2j}^2}} \right)} $$ (8.18)

which consists of subjecting the species data to the chord transformation (Eq. 8.5) followed by calculation of the Euclidean distance (Eq. 8.6). The chord distance is closely related to the Hellinger distance (Eq. 8.21).

2. The chi-square distance:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \sqrt{y_{++}} \sqrt{\sum\limits_{j = 1}^p \frac{1}{y_{+j}} \left( \frac{y_{1j}}{y_{1+}} - \frac{y_{2j}}{y_{2+}} \right)^2} $$ (8.19)

where \( y_{i+} \) is the sum of the frequencies in row i, \( y_{+j} \) is the sum of the frequencies in column j, and \( y_{++} \) is the sum of all frequencies in the data table. It is equivalent to subjecting the species data to the chi-square distance transformation (Eq. 8.7) followed by calculation of the Euclidean distance (Eq. 8.6).

3. The distance between species profiles:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \sqrt{\sum\limits_{j = 1}^p \left( \frac{y_{1j}}{y_{1+}} - \frac{y_{2j}}{y_{2+}} \right)^2} $$ (8.20)

is equivalent to subjecting the species data to the transformation to profiles of relative abundances (Eq. 8.8) followed by calculation of the Euclidean distance (Eq. 8.6).

4. The Hellinger distance (Rao 1995) is also called the chord distance by Prentice (1980), although it differs from Eq. 8.18:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \sqrt{\sum\limits_{j = 1}^p \left[ \sqrt{\frac{y_{1j}}{y_{1+}}} - \sqrt{\frac{y_{2j}}{y_{2+}}} \right]^2} $$ (8.21)

It is equivalent to subjecting the species data to the Hellinger transformation (Eq. 8.9) followed by calculation of the Euclidean distance (Eq. 8.6). The name 'chord distance' is occasionally applied to this equation (Prentice 1980) because the Hellinger distance is the chord distance (Eq. 8.18) computed on square-root transformed frequencies. In the Hellinger distance, the relative species abundances ('compositional data', used directly in Eq. 8.20) are square-root transformed in order to lower the importance of the most abundant species, which may grow exponentially when they encounter favourable conditions. This coefficient thus increases the importance given to the less abundant species (Prentice 1980). The chord (Eq. 8.18) and Hellinger (Eq. 8.21) functions produce distances in the range [0, √2]. For presence-absence data, they are both equal to

$$ \sqrt {2} \sqrt {{1 - \frac{a}{{\sqrt {{(a + b)(a + c)}} }}}} $$

where

$$ \frac{a}{{\sqrt {{(a + b)(a + c)}} }} $$

is the Ochiai (1957) similarity coefficient for binary data, described above.

5. A coefficient first described by Steinhaus (in Motyka 1947) and rediscovered by other authors, such as Odum (1950) and Bray and Curtis (1957), is:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \frac{\sum\limits_{j = 1}^p |y_{1j} - y_{2j}|}{\sum\limits_{j = 1}^p (y_{1j} + y_{2j})} $$ (8.22)

This coefficient has excellent descriptive properties for community composition data (Hajdu 1981; Gower and Legendre 1986). Taking the square root of this distance will avoid negative eigenvalues and complex principal axes in principal coordinate analysis. A particular form of this coefficient, for data transformed into percentages by sites (\( y'_{ij} \) of Eq. 8.8 multiplied by 100), has been described by Renkonen (1938). When presence-absence data are used in Eq. 8.22, the resulting coefficient is the one-complement of the Sørensen coefficient of similarity (Eq. 8.16) computed over the same data (i.e., D (Eq. 8.22) = 1 − S (Eq. 8.16)).

6. Whittaker’s (1952) index of association:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \frac{1}{2}\sum\limits_{j = 1}^p \left| \frac{y_{1j}}{y_{1+}} - \frac{y_{2j}}{y_{2+}} \right| $$ (8.23)

7. Clark’s (1952) coefficient of divergence:

$$ D({\mathbf{x}}_1,{\mathbf{x}}_2) = \sqrt{\frac{1}{p}\sum\limits_{j = 1}^p \left( \frac{y_{1j} - y_{2j}}{y_{1j} + y_{2j}} \right)^2} $$ (8.24)

is a form of the Canberra metric (Lance and Williams 1967) rescaled to the [0, 1] range.
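A short Python sketch may help to check some of the relationships stated in this list. It computes the chord (Eq. 8.18), Hellinger (Eq. 8.21) and Steinhaus/Odum/Bray-Curtis (Eq. 8.22) distances, then verifies on presence-absence data that the first two reduce to √2 √(1 − Ochiai) and that the third equals 1 − Sørensen. The function names and data vectors are hypothetical, and each object is assumed to contain at least one non-zero abundance.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors (Eq. 8.6)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def chord_distance(y1, y2):
    """Chord distance (Eq. 8.18): scale each object to unit length, then Eq. 8.6."""
    n1 = math.sqrt(sum(v * v for v in y1))
    n2 = math.sqrt(sum(v * v for v in y2))
    return euclidean([v / n1 for v in y1], [v / n2 for v in y2])

def hellinger_distance(y1, y2):
    """Hellinger distance (Eq. 8.21): square-rooted relative abundances, then Eq. 8.6."""
    t1, t2 = sum(y1), sum(y2)
    return euclidean([math.sqrt(v / t1) for v in y1],
                     [math.sqrt(v / t2) for v in y2])

def bray_curtis_distance(y1, y2):
    """Steinhaus/Odum/Bray-Curtis distance (Eq. 8.22)."""
    numerator = sum(abs(a - b) for a, b in zip(y1, y2))
    denominator = sum(a + b for a, b in zip(y1, y2))
    return numerator / denominator

# Hypothetical presence-absence data: a = 2, b = 2, c = 1.
site1 = [1, 1, 1, 0, 0, 1]
site2 = [1, 0, 1, 1, 0, 0]
a, b, c = 2, 2, 1
ochiai = a / math.sqrt((a + b) * (a + c))
sorensen = 2 * a / (2 * a + b + c)
binary_form = math.sqrt(2) * math.sqrt(1 - ochiai)

print(chord_distance(site1, site2), hellinger_distance(site1, site2), binary_form)
print(bray_curtis_distance(site1, site2), 1 - sorensen)

# Hypothetical abundance data for two core levels.
level1 = [40, 0, 12, 3, 0]
level2 = [5, 22, 0, 3, 1]
print(chord_distance(level1, level2), hellinger_distance(level1, level2))
```

For the abundance data the chord and Hellinger values differ from one another, as expected, but both stay within the range [0, √2].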

Most of the distances described in this section can be computed using the R-language functions dist() (stats package), vegdist() (vegan), dist.binary() (ade4), gowdis() (FD) and daisy() (cluster); see footnote of Table 8.3 for references. This statement calls for some remarks. (1) These functions do not all produce the same results for the binary Jaccard coefficient: dist() and vegdist() use the transformation D = (1 − S) (Eq. 8.13) whereas dist.binary() uses \( D = \sqrt {{1 - S}} \) (Eq. 8.14) to transform similarities into distances. The latter guarantees that a fully Euclidean representation, without negative eigenvalues and complex eigenvectors, will result from principal coordinate analysis. (2) The chord, chi-square and Hellinger distances are not obtained directly but after two calculation steps: transformation of the data (Eqs. 8.5, 8.7 and 8.9) followed by calculation of the Euclidean distance (Eq. 8.6). (3) Several functions offer the Gower distance: vegdist() (vegan), daisy() (cluster), and gowdis() (FD). Of these, gowdis() is the only function that can handle missing values and variables of all precision levels, including multistate qualitative variables (‘factors’ in R), and that allows users to give different weights to the variables involved in a calculation.
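Remark (2) can be illustrated for the chi-square distance with a minimal Python sketch. The transformation is written here as y′ij = √(y++) · yij / (yi+ · √(y+j)), which is the form implied by Eq. 8.19; this form, the function names and the small frequency table are assumptions made for the illustration, and every row and column is assumed to have a non-zero sum.

```python
import math

def chi_square_transform(Y):
    """Transform a frequency table so that Euclidean distances among its rows
    are chi-square distances (assumed form: sqrt(y++) * y_ij / (y_i+ * sqrt(y_+j)))."""
    row_sums = [sum(row) for row in Y]
    col_sums = [sum(row[j] for row in Y) for j in range(len(Y[0]))]
    total = sum(row_sums)
    return [[math.sqrt(total) * row[j] / (row_sums[i] * math.sqrt(col_sums[j]))
             for j in range(len(row))]
            for i, row in enumerate(Y)]

def euclidean(u, v):
    """Euclidean distance (Eq. 8.6)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def chi_square_distance(Y, h, i):
    """Chi-square distance between rows h and i, computed directly from Eq. 8.19."""
    row_sums = [sum(row) for row in Y]
    col_sums = [sum(row[j] for row in Y) for j in range(len(Y[0]))]
    total = sum(row_sums)
    inner = sum((Y[h][j] / row_sums[h] - Y[i][j] / row_sums[i]) ** 2 / col_sums[j]
                for j in range(len(col_sums)))
    return math.sqrt(total) * math.sqrt(inner)

# Hypothetical table: 3 objects (rows) by 3 species (columns).
Y = [[10, 0, 5],
     [2, 3, 0],
     [1, 4, 6]]

T = chi_square_transform(Y)
for h in range(len(Y)):
    for i in range(h + 1, len(Y)):
        print(h, i, chi_square_distance(Y, h, i), euclidean(T[h], T[i]))
```

The two columns of printed distances agree, which is the sense in which a transformation followed by the Euclidean distance reproduces the target distance.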

Table 8.3 Computer programs for ordination. The list makes no pretence at being exhaustive

Euclidean or Cartesian Space, Euclidean Representation

A Cartesian space, named after René Descartes (1596–1650), French mathematician and philosopher, is a space with a Cartesian system of coordinates. It is also called a Euclidean space because the distances among points are measured by Eq. 8.6 in that space. The multidimensional ordination spaces of PCA, CA, PCoA, NMDS, etc., are Cartesian or Euclidean spaces; hence the distances among points embedded in these spaces are measured by the Euclidean distance formula. A few dimensions that represent a good deal of the variance of the data will be chosen from these multidimensional spaces to create a reduced-space ordination.

A distance function is said to have the Euclidean property, or (in short) to be Euclidean, if it always produces distance matrices that are fully embeddable in a Euclidean space. The test, available in the R-language package ade4 (function is.euclid()), is that a principal coordinate analysis (PCoA) of a Euclidean distance matrix produces no negative eigenvalues. This is not always the case in ordination. Some distance functions are not Euclidean, meaning that the distances in the matrix cannot be fully represented in a Euclidean ordination space. A principal coordinate analysis of the distance matrices produced by these coefficients may generate negative eigenvalues; these eigenvalues indicate the non-Euclidean nature of the distance matrix (Gower 1982). They measure the amount of variance that needs to be added to the distance matrix to obtain a full Euclidean representation. To be a metric is a necessary but not a sufficient condition for a distance coefficient to be Euclidean. Many of the commonly-used similarity coefficients are not Euclidean when transformed into distances using Eq. 8.13. The transformation described by Eq. 8.14 often solves the problem, however. For instance, the similarity coefficients of Gower, simple matching, Jaccard, Sørensen, Ochiai, and Steinhaus, described above, all become Euclidean when transformed into distances using Eq. 8.14 (Gower and Legendre 1986; Legendre and Legendre 1998: Table 7.2).
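The statement that being a metric is necessary but not sufficient can be illustrated with a small constructed example (hypothetical, not taken from any data-set). Three objects lie at distance 2 from one another and a fourth lies at distance 1.1 from each of them. All triangle inequalities hold, yet the fourth object would have to sit closer to the other three than the centre of their equilateral triangle allows (2/√3 ≈ 1.155). The Python sketch below applies the centring used in PCoA and evaluates the quadratic form of the centred matrix for one trial vector; a negative value implies that at least one eigenvalue is negative, without requiring a full eigenvalue decomposition.

```python
def gower_centre(D):
    """Gower-centre a distance matrix: a_hi = -0.5 * d_hi**2, then remove
    row means and column means and add back the grand mean."""
    n = len(D)
    A = [[-0.5 * D[h][i] ** 2 for i in range(n)] for h in range(n)]
    row_means = [sum(row) / n for row in A]
    col_means = [sum(A[h][i] for h in range(n)) / n for i in range(n)]
    grand_mean = sum(row_means) / n
    return [[A[h][i] - row_means[h] - col_means[i] + grand_mean
             for i in range(n)] for h in range(n)]

def is_metric(D, tol=1e-12):
    """Check the triangle inequality for every triplet of objects."""
    n = len(D)
    return all(D[h][i] <= D[h][k] + D[k][i] + tol
               for h in range(n) for i in range(n) for k in range(n))

def rayleigh_quotient(M, x):
    """x'Mx / x'x; the smallest eigenvalue of a symmetric M cannot exceed it."""
    n = len(M)
    Mx = [sum(M[h][i] * x[i] for i in range(n)) for h in range(n)]
    return sum(x[h] * Mx[h] for h in range(n)) / sum(v * v for v in x)

# Hypothetical distance matrix: metric, but not embeddable in Euclidean space.
D = [[0.0, 2.0, 2.0, 1.1],
     [2.0, 0.0, 2.0, 1.1],
     [2.0, 2.0, 0.0, 1.1],
     [1.1, 1.1, 1.1, 0.0]]

delta = gower_centre(D)
rq = rayleigh_quotient(delta, [1.0, 1.0, 1.0, -3.0])
print(is_metric(D), rq)
```

In practice one would inspect the eigenvalues directly, for example with the is.euclid() function mentioned above; the quadratic form is used here only to keep the sketch free of external libraries.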

If the analysis is carried out to produce a PCoA ordination in a few (usually 2 or 3) dimensions, negative eigenvalues do not matter as long as their absolute values are not large when compared to the positive eigenvalues used for the reduced-space ordination. If the analysis requires that all coordinates be kept, as will be the case when testing multivariate hypotheses using the db-RDA method (see the subsection below on Linear RDA), negative eigenvalues should either be avoided or corrected for. They can be avoided by selecting a distance coefficient that is known to be Euclidean. When a non-Euclidean coefficient is used (for example, the Steinhaus/Odum/Bray-Curtis coefficient of Eq. 8.22), there are ways of correcting for negative eigenvalues in PCoA to obtain a fully Euclidean solution; see Legendre and Legendre (1998: pp. 432–438) for details. These corrections are available in some PCoA computer programs, including function pcoa() of the ape R-language package.

Metric or Non-metric Ordination?

Metric ordinations are obtained by the methods of principal component analysis (PCA), correspondence analysis (CA), and principal coordinate analysis (PCoA). These methods all proceed by eigenvalue decomposition. The eigenvalues measure the amount of variation of the observations along the ordination axes. The distances in the full-dimensional ordination space are projected onto the space of reduced dimensionality (usually two dimensions) chosen for ordination. Non-metric ordinations are obtained by non-metric multidimensional scaling (NMDS) which is not an eigenvalue method. This method only preserves the rank-order of the original distances in the reduced ordination space.

PCA is the method of choice to preserve Euclidean distances among objects, and CA when the chi-square distance is to be preserved. For other forms of distance, users have to choose between PCoA (also called metric scaling) and NMDS. PCoA is the preferred method (1) when one wishes to preserve the original distances in full-dimensional space, (2) when many (or all) ordination axes are sought, or (3) when the data-set is fairly large. NMDS may be preferred when the user wants to represent as much as possible of the distance relationships among objects in a few dimensions, at the cost of preserving only the rank-order of the distances and not the distances themselves.

The size of the data-sets is also of importance. PCA and CA can easily be computed on very large data-sets (tens or hundreds of thousands of objects) as long as the number of variables is small (up to a few hundred), because the eigenvalue decomposition is done on the covariance matrix, which is of size p × p, where p is the number of variables in the data-set.

For tables containing assemblage composition data, three paths can be followed. (1) One can transform the data using one of the transformations described by Eqs. 8.5, 8.7, 8.8, or 8.9, and produce the ordination by PCA (tb-PCA approach). (2) One can compute a distance matrix using one of Eqs. 8.15 to 8.24, followed by PCoA or NMDS. For data-sets of intermediate sizes (a few hundred objects), PCoA will produce the ordination solution faster than NMDS. For very large data-sets, PCA should be used. (3) For data-sets of any size, one can produce the ordination using CA if the chi-square distance is appropriate.

An alternative and biologically useful approach to deciding between ordinations based on PCA (Euclidean distance) of untransformed data and CA (chi-square distance) of multivariate species assemblage data is that emphasised by ter Braak (1987a) and ter Braak and Prentice (1988), namely the underlying species response model that is assumed when fitting either PCA or CA and extracting synthetic latent variables that are then used as the major ordination axes. PCA assumes an underlying linear response model, whereas CA assumes an underlying unimodal response model between the variables and the unknown but to be determined latent variables or ordination axes. The question is thus how to know whether a linear-based or a unimodal-based ordination is appropriate for a given data-set. The detrended relative of CA, detrended correspondence analysis (DCA: Hill and Gauch 1980; ter Braak 1987a), is a heuristic modification of CA designed to minimise two of the disadvantages of CA, namely the so-called arch-effect and the so-called edge-effect (ter Braak and Prentice 1988). As a result of the non-linear rescaling of the axes that removes the edge-effect, the object scores are scaled and standardised in a particular way. The lengths of the resulting ordination axes are given by the range of object scores and are expressed in ‘standard deviation units’ (SD) or units of compositional turnover. The tolerance or amplitude of the species’ curves along the rescaled DCA axes are close to 1; each curve will therefore rise and fall over about 4 SD (ter Braak and Prentice 1988). Objects that differ by 4 SD can be expected to have no species in common. A preliminary DCA of an assemblage data-set, with detrending by segments and non-linear rescaling, provides an estimate of the underlying gradient length. If the gradient length is less than about 2.5 SD, the assemblage variation is within a relatively narrow range, and the linear approach of PCA is appropriate. 
If the gradient length is 3 or more SD, the assemblage variation is over a larger range, and the unimodal-based approach of CA is appropriate (ter Braak and Prentice 1988). Transformation-based PCA (tb-PCA) is also appropriate in that case.

How Many Axes Are Required?

In most instances, ordination analysis is carried out to obtain an ordination in two, sometimes three, dimensions. The ordination is then used to illustrate the variability of the data along the ordination axes and attribute it to the variables that are most highly correlated with those axes. Simple interpretation of the variability in the ordination diagram can be obtained by projecting interpretative variables in the ordination plane, or by representing other properties of the data (for instance, the groups produced by cluster or partitioning analysis), or some other grouping of the objects known a priori (for example, the type of lake, or the nature of the sediment) (see Lepš and Šmilauer 2003).

There are instances where ordination analysis is carried out as a pre-treatment, or transformation, of the original data, before carrying out some further analysis. For example, one may wish to preserve the Steinhaus/Odum/Bray-Curtis distance in a canonical redundancy analysis (RDA) or K-means partitioning (see Legendre and Birks 2012: Chapter 7). To achieve that, one may compute the distance matrix using Eq. 8.22 (or its square root) and carry out a PCoA of that matrix. One then keeps all eigenvectors from this analysis (after perhaps a correction for negative eigenvalues) and uses that matrix of eigenvectors as input to redundancy analysis (RDA) or K-means partitioning. This is an example of distance-based RDA (db-RDA) described in more detail in the subsection on Linear RDA.

Tests of significance for individual eigenvalues are available for PCA; see the review papers of Burt (1952) and Jackson (1993). They are not often useful because, in most instances, ecologists do not have a strong null hypothesis to test; they rather use PCA for an exploratory representation of their data. Also, the parametric tests of significance assume normality of all descriptors, which is certainly a drawback for palaeolimnological data. Users most often rely on criteria that help them determine how many axes represent ‘important’ variation with respect to the original data table. The two best criteria at the moment are the simple broken-stick model proposed by Frontier (1976) as well as the bootstrapped eigenvalue method proposed by Jackson (1993).
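The broken-stick criterion is simple enough to sketch in Python. In its usual statement, the expected share of variance of axis j among p axes is (1/p) Σ 1/k, summed over k = j, …, p; axes are retained as long as their observed share exceeds this expectation. The eigenvalues below are hypothetical and the stopping rule (stop at the first axis that fails) is one common convention, given here as an assumption rather than as the only possible reading of the criterion.

```python
def broken_stick(p):
    """Expected proportions of variance for p axes under the broken-stick model."""
    return [sum(1.0 / k for k in range(j, p + 1)) / p for j in range(1, p + 1)]

def axes_to_keep(eigenvalues):
    """Count the leading axes whose share of the total variance exceeds
    the corresponding broken-stick expectation."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    kept = 0
    for eigenvalue, expected in zip(ordered, broken_stick(len(ordered))):
        if eigenvalue / total > expected:
            kept += 1
        else:
            break  # stop at the first axis that does not exceed its expectation
    return kept

# Hypothetical PCA eigenvalues for five variables.
eigenvalues = [4.2, 2.1, 0.9, 0.5, 0.3]
print([round(b, 4) for b in broken_stick(5)])
print(axes_to_keep(eigenvalues))
```

With these values the first two axes exceed their expectations and the third does not, so two axes would be interpreted.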

Simple Ordination Methods: PCA, CA, PCoA, NMDS

The simple ordination methods mostly used by palaeoecologists and palaeolimnologists (Table 8.1) are the following.

1. Principal component analysis (PCA) is the oldest (Hotelling 1933) and best-known of all ordination methods. Consider a group of data points in multidimensional space, placed at Euclidean distances (Eq. 8.6) from one another. Imagine a lamp behind the cloud of points, and the shadows of the points projected onto a white wall. The geometric problem consists of rotating the points in such a way that the shadows have as much variance as possible on the wall. The mathematics of eigenvalues and eigenvectors, which is part of matrix algebra, is the way to find the rotation that maximises the variance of the projection in any number of dimensions. The variables are first transformed if required (Table 8.2), then centred by column, forming matrix Y. One computes the dispersion (or variance-covariance) matrix S among the variables, followed by the eigenvalues (\( \lambda_j \)) and eigenvectors of S. The eigenvectors are assembled in matrix U. The principal components, which provide the coordinates of the points on the successive ordination axes, are the columns of matrix F = YU. The eigenvalues measure the variance of the points along the ordination axes (the columns of matrix F). The first axis has the highest eigenvalue λ1, hence the largest variance; and so on for the following axes, with the constraint that all axes are orthogonal and uncorrelated to one another.
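The sequence of steps just described (centring, dispersion matrix S, eigenvalues and eigenvectors, F = YU) can be followed by hand for two variables, where the eigenvalues of S have a closed form. The Python sketch below is restricted to that two-variable case as a simplifying assumption; real analyses would call a general eigenvalue routine. The function name and the small data table are illustrative only.

```python
import math

def pca_two_variables(data):
    """PCA of an n x 2 table: returns eigenvalues, eigenvectors (U) and scores (F)."""
    n = len(data)
    means = [sum(row[j] for row in data) / n for j in range(2)]
    # Centre the variables by column to form matrix Y.
    Y = [[row[0] - means[0], row[1] - means[1]] for row in data]
    # Dispersion (variance-covariance) matrix S.
    s11 = sum(r[0] * r[0] for r in Y) / (n - 1)
    s22 = sum(r[1] * r[1] for r in Y) / (n - 1)
    s12 = sum(r[0] * r[1] for r in Y) / (n - 1)
    # Closed-form eigenvalues of a symmetric 2 x 2 matrix.
    half_trace = (s11 + s22) / 2.0
    root = math.sqrt(max(half_trace ** 2 - (s11 * s22 - s12 ** 2), 0.0))
    lam1, lam2 = half_trace + root, half_trace - root
    # First eigenvector, normalised to unit length; the second is orthogonal to it.
    if abs(s12) > 1e-12:
        norm = math.hypot(s12, lam1 - s11)
        u1 = [s12 / norm, (lam1 - s11) / norm]
    else:
        u1 = [1.0, 0.0] if s11 >= s22 else [0.0, 1.0]
    u2 = [-u1[1], u1[0]]
    # Principal components F = YU (coordinates of the objects on the axes).
    F = [[r[0] * u1[0] + r[1] * u1[1], r[0] * u2[0] + r[1] * u2[1]] for r in Y]
    return [lam1, lam2], [u1, u2], F

# Illustrative table: 5 objects by 2 variables.
data = [[2.0, 1.0], [3.0, 4.0], [5.0, 0.0], [7.0, 6.0], [9.0, 2.0]]
eigenvalues, U, F = pca_two_variables(data)
n = len(data)
axis_variances = [sum(f[k] ** 2 for f in F) / (n - 1) for k in range(2)]
print(eigenvalues, axis_variances)
```

The variance of the object scores along each axis equals the corresponding eigenvalue, and the two eigenvalues sum to the total variance of the table. The signs of the eigenvectors are arbitrary, so the scores may be mirrored relative to another program's output.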

A scatter diagram with respect to the first two ordination axes, using the coordinates in the first two columns of matrix F, accounts for an amount of variance equal to λ1 + λ2. The distances among points in two dimensions are projections of their original, full-dimensional Euclidean distances. The contributions of the variables to the ordination diagram can be assessed by drawing them using the loadings found in matrix U. For two dimensions again, the first two columns of matrix U provide the coordinates of the end-points of vectors representing the successive variables. A graph presenting the variables (as arrows) on top of the dispersion of the points, as described above, is called a distance biplot. Another type of biplot, called a correlation biplot, can also be produced by many PCA programs; the correlations among variables are represented by the angles of projection of the variables, in two dimensions, after rescaling the eigenvectors to the square root of their respective eigenvalues (ter Braak 1994; Lepš and Šmilauer 2003). Supplementary or passive objects and variables can be projected onto a PCA ordination diagram. This option is available in most of the programs offering a PCA procedure listed in Table 8.3. The mathematics behind such projections is described in Legendre and Legendre (1998: Section 9.1.9) and ter Braak and Šmilauer (2002).

The approach of fitting fossil objects as supplementary objects onto a PCA ordination has been used by palaeoecologists (e.g., Lamb 1984) as an aid in detecting similarities between modern and fossil assemblages. It is important, however, to calculate the residual distances when adding additional supplementary objects into any low-dimensional ordination, as new objects may appear to be positioned close to other objects on the first few axes and yet be located some distance from the other objects when further dimensions are considered (Birks and Gordon 1985). Gower (1968) discusses the calculation and interpretation of the residual distances from the true position of the added points to the fitted plane giving the best two-dimensional representation of the objects.

Alternatively, one may perform a PCA of fossil assemblage data and add modern objects into the ordination (e.g., Ritchie 1977), or perform a PCA of fossil and modern assemblage data combined (MacDonald and Ritchie 1986). Prentice (1980) and Birks and Gordon (1985) discuss the advantages and disadvantages of fitting objects, modern or fossil, into low-dimensional PCA representations.

The most common application of PCA in palaeolimnology is to produce biplot diagrams of the objects (sites, lakes, core subunits, etc.) with respect to physical or chemical variables (e.g., Jones et al. 1993) or assemblage composition data (after appropriate transformation: Table 8.1) (e.g., Birks and Peglar 1979). Another useful representation of PCA results of core assemblages is to plot the object scores on the first few principal components in stratigraphical order for each axis (e.g., Birks and Berglund 1979; Birks 1987; Lotter and Birks 2003; Wall et al. 2010; Wang et al. 2010; Birks 2012b: Chapter 11), thereby providing a summarisation of the major patterns of variation in the stratigraphical data in two or three axes. PCA can also be used to detect outliers in data, which may correspond to legitimate outliers, or to erroneous data. PCA may be used to identify groups of variables that are highly correlated and, thus, form bundles of arrows in the ordination diagram; look, in particular, for variables that are highly but negatively correlated: their arrows are opposite in the diagram (e.g., Gordon 1982; MacDonald and Ritchie 1986). Another application is to simplify data-sets containing many highly collinear variables; the PCA axes that account for, say, 95% of the total variance form a simplified set of variables and allow discarding of the remaining 5%, which can be regarded as noise (Gauch 1982; Lotter et al. 1992).

2. Correspondence analysis (CA) is a form of PCA that preserves the chi-square distance (Eq. 8.19) among the objects or variables. CA is appropriate for frequency data, and in particular for species presence-absence or abundance data, subject to the caveat that the chi-square distance gives high weights to rare species. There are several ways of presenting CA (Hill 1974). We will look at it here as the eigenanalysis of a table of components of chi-square. The assemblage composition data matrix Y is transformed into a matrix of components of chi-square \( \mathbf{Q} = [q_{ij}] \) where

$$ q_{ij} = \left[ \frac{O_{ij} - E_{ij}}{\sqrt{E_{ij}}} \right] \Big/ \sqrt{y_{++}} $$ (8.25)

The part inside the square parentheses is easily recognised as the component of the chi-square statistic computed in each cell of a frequency (or contingency) table; they are obtained from the observed (O ij ) and the expected values (E ij ) of cell ij of the table. These components can be added to produce the Pearson chi-square statistic used to test the hypothesis of absence of relationship between the rows and columns of a contingency table. Here, the components of chi-square are divided by a constant, the square root of the sum of values in the whole table (y ++), which turns them into the values [q ij ] of the transformed data table Q. From this point, one can compute a cross-product matrix (the covariance matrix computed in PCA is also a cross-product matrix, but it is computed here without further centring since centring is part of Eq. 8.25), and from it the eigenvalues and eigenvectors. An alternative approach is to carry out singular value decomposition of the matrix Q, as explained in Legendre and Legendre (1998: Section 9.4). The eigenvalues measure the amount of inertia accounted for by each ordination axis. Matrices are obtained that contain the positions of the objects (rows) and species (columns) along the successive axes of the ordination space. Two types of scaling can be used for biplots: one can (1) preserve the chi-square distances among objects (rows), the objects being at the centroids of the species (columns); or (2) preserve the chi-square distance among the variables (columns), the variables being at the centroids of the objects (rows) (ter Braak and Verdonschot 1995). The most common application of CA in palaeolimnology is to produce biplot diagrams of species and objects or other sampling units (e.g., Jones and Birks 2004). As in PCA, supplementary objects and variables can be projected onto a CA ordination diagram (e.g., Jones and Birks 2004). This option is available, for instance, in the program canoco. 
In R, functions to that effect are also available in vegan and ade4. In vegan, predict.rda() and predict.cca() add new points to PCA, RDA, CA and CCA ordinations, and envfit() adds supplementary variables to all of the above (envfit() does weighted fitting in CCA so that it is consistent with the original analysis). In ade4, suprow() adds supplementary objects and supcol() adds supplementary variables to PCA and CA plots.
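The transformation of Y into the matrix Q of chi-square components can be sketched in Python as follows. The sketch assumes, as described above, that each cell of Q is the chi-square component (O − E)/√E divided by √(y++), with the expected value taken as E = (row sum × column sum)/y++; it stops before the eigenanalysis and only checks that the sum of squares of Q, the total inertia, equals the Pearson chi-square statistic divided by y++. The table is hypothetical and must have no empty row or column.

```python
import math

def expected_values(Y):
    """Expected frequencies under independence of rows and columns."""
    row_sums = [sum(row) for row in Y]
    col_sums = [sum(row[j] for row in Y) for j in range(len(Y[0]))]
    total = sum(row_sums)
    E = [[row_sums[i] * col_sums[j] / total for j in range(len(col_sums))]
         for i in range(len(row_sums))]
    return E, total

def chi_square_components(Y):
    """Matrix Q: chi-square components divided by the square root of y++."""
    E, total = expected_values(Y)
    return [[(Y[i][j] - E[i][j]) / math.sqrt(E[i][j]) / math.sqrt(total)
             for j in range(len(Y[0]))] for i in range(len(Y))]

def pearson_chi_square(Y):
    """Pearson chi-square statistic of the frequency table."""
    E, _ = expected_values(Y)
    return sum((Y[i][j] - E[i][j]) ** 2 / E[i][j]
               for i in range(len(Y)) for j in range(len(Y[0])))

# Hypothetical table: 3 objects (rows) by 3 species (columns).
Y = [[10, 0, 5],
     [2, 3, 0],
     [1, 4, 6]]

Q = chi_square_components(Y)
total_inertia = sum(q * q for row in Q for q in row)
y_total = sum(sum(row) for row in Y)
print(total_inertia, pearson_chi_square(Y) / y_total)
```

The eigenvalues of CA, obtained from the cross-product matrix of Q or from its singular value decomposition, partition this total inertia among the ordination axes.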

Usually, ecologists who see the organisms they are sampling consider rare species as potential indicators of rare environmental conditions, whereas those who have to sample blindly or use traps are more wary of the interpretation of rare species. In animal ecology, a single presence of a species at a site may be due to a species that does not belong to the site but was travelling between two other favourable sites. In palynology, likewise, pollen may be brought by far transport from distant sites. In aquatic ecology, rare species may appear in spurious ways in sampling units from sites where they are found at low abundance. Because of their influence on the chi-square distance (Eq. 8.19), one should pay special attention to rare species in CA. One must understand that rare species affect the ordination of objects very little, but these species will be represented by points located far from the origin. Users of CA who are worried about the interpretation of rare species often decide to remove, not the species that have low abundance, but those that occur in the data-set very rarely. One may try removing first the species that occur only once in the data-set, then those that occur once or twice, and so on, repeating the analysis every time. One can remove the rarest species up to the point where the first few eigenvalues, expressed as percentages of the inertia (= variation) in the original data-set, are little affected by the removal. This approach has been suggested by Daniel Borcard, Université de Montréal.

Palaeolimnologists often use the detrended relative of CA, detrended correspondence analysis (DCA), as a preliminary tool in establishing the extent of compositional turnover in modern calibration data-sets as a guide as to whether to use calibration procedures that assume linear or unimodal responses of species to environmental gradients (Birks 1995). Detrending by segments is an arbitrary method for which no theoretical justification has been offered, while the assumptions behind the nonlinear rescaling procedure have not been fully substantiated (Wartenberg et al. 1987, but see ter Braak 1985). Jackson and Somers (1991) showed that DCA ordinations of sites greatly varied with the number of segments one arbitrarily decides to use, so that the ecological interpretation of the results may vary widely. In simulation studies conducted on artificial data representing unimodal species responses to environmental gradients in one or two dimensions, DCA did not perform particularly well in recovering complex gradients (Kenkel and Orlóci 1986; Minchin 1987). For these reasons, detrended correspondence analysis (DCA) should generally be avoided for the production of ordination plots. Palaeolimnologists (e.g., Birks et al. 2000; Birks and Birks 2001; Bradshaw et al. 2005) have plotted the object scores on the first DCA axis in stratigraphical order for different palaeolimnological variables (e.g., diatoms, chironomids) as a means of comparing the major trends and compositional turnover between different proxies within the same stratigraphical sequence (see Birks 2012b: Chapter 11).

3. In principal coordinate analysis (PCoA), the objective is to obtain an ordination, in any number of dimensions, representing as much as possible of the variation of the data while preserving the distance that has explicitly been computed. The algebra used to find a solution to the geometric problem proceeds directly from a pre-computed square, symmetric distance matrix D. The first step is to transform the distances \( d_{hi} \) of D into values \( {a_{{hi}}} = - 0.5\,d_{{hi}}^2 \), then to centre the resulting matrix A to produce a third matrix \( \boldsymbol{\Delta} = [\delta_{hi}] \) using the equation:

$$ \delta_{hi} = a_{hi} - \bar{a}_h - \bar{a}_i + \bar{a} $$

where ā h and ā i are the means of row h and column i corresponding to element \( {a_{{hi}}} \), whereas ā is the mean of all \( {a_{{hi}}} \) values in the matrix. Eigenvalue decomposition is applied to matrix Δ, producing eigenvalues and eigenvectors. When the eigenvectors are normalised to the square root of their respective eigenvalues, they directly provide the coordinates of the objects on the given ordination axis. The eigenvalues give the variance (not divided by degrees of freedom) of the objects along that axis. If some eigenvalues are negative and all ordination axes are needed for subsequent analyses, corrections can be applied to the distance matrix; this was mentioned in the section on Euclidean or Cartesian space, Euclidean representation.

A simple example may help explain PCoA. From an object-by-variable data matrix Y, compute matrix D of Euclidean distances among the objects. Run PCA using matrix Y and PCoA using matrix D. The eigenvalues of the PCoA of matrix D are proportional to the PCA eigenvalues computed for matrix Y (they differ by the factor (n − 1)), while the eigenvectors of the PCoA of D are identical to matrix F of the PCA of Y. Normally, one would not compute PCoA on a matrix of Euclidean distances since PCA is a faster method to obtain an ordination of the objects in Y that preserves the Euclidean distance among the objects. This was presented here simply as a way of understanding the relationship between PCA and PCoA in the Euclidean distance case. The real interest of PCoA is to obtain an ordination of the objects from some other form of distance matrix more appropriate to the data at hand—for example, a Steinhaus/Odum/Bray-Curtis distance matrix in the case of assemblage composition data. Surprisingly, PCoA has rarely been used in palaeoecology (e.g., Birks 1977; Engels and Cwynar 2011) in contrast to the extensive use of PCA, CA, and DCA.
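The reason for the factor (n − 1) can be checked numerically. For Euclidean distances, the centred matrix Δ used by PCoA is equal to the cross-product matrix of the centred data, YY′, whose non-zero eigenvalues are those of Y′Y = (n − 1)S. The Python sketch below verifies this identity and the resulting trace on a small illustrative table; the function names are hypothetical and no eigenvalue routine is needed for the check.

```python
import math

def euclidean_distance_matrix(Y):
    """Matrix D of Euclidean distances (Eq. 8.6) among the rows of Y."""
    return [[math.sqrt(sum((a - b) ** 2 for a, b in zip(r1, r2))) for r2 in Y]
            for r1 in Y]

def gower_centre(D):
    """Transform D into a_hi = -0.5 * d_hi**2 and centre by rows and columns."""
    n = len(D)
    A = [[-0.5 * D[h][i] ** 2 for i in range(n)] for h in range(n)]
    row_means = [sum(row) / n for row in A]
    col_means = [sum(A[h][i] for h in range(n)) / n for i in range(n)]
    grand_mean = sum(row_means) / n
    return [[A[h][i] - row_means[h] - col_means[i] + grand_mean
             for i in range(n)] for h in range(n)]

def centred_cross_product(Y):
    """Cross-product matrix YY' computed after centring Y by column."""
    n, p = len(Y), len(Y[0])
    means = [sum(row[j] for row in Y) / n for j in range(p)]
    Yc = [[row[j] - means[j] for j in range(p)] for row in Y]
    return [[sum(Yc[h][j] * Yc[i][j] for j in range(p)) for i in range(n)]
            for h in range(n)]

# Illustrative table: 5 objects by 2 variables (total variance 8.2 + 5.8 = 14).
Y = [[2.0, 1.0], [3.0, 4.0], [5.0, 0.0], [7.0, 6.0], [9.0, 2.0]]
n = len(Y)

delta = gower_centre(euclidean_distance_matrix(Y))
gram = centred_cross_product(Y)
max_gap = max(abs(delta[h][i] - gram[h][i]) for h in range(n) for i in range(n))
trace_delta = sum(delta[h][h] for h in range(n))
print(max_gap, trace_delta)
```

The trace of Δ, which is the sum of the PCoA eigenvalues, equals (n − 1) times the total variance of the table, here 4 × 14 = 56.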

4. Non-metric ordinations are obtained by non-metric multidimensional scaling (NMDS); several variants of this method have been proposed (Prentice 1977, 1980). The distances in the low-dimensional space are not rigid projections of the original distances in full-dimensional space. In NMDS, the user sets the dimensionality of the space in which the ordination is to be produced; the solution sought is usually two-dimensional. The computer program proceeds by successive iterations, trying to preserve in the ordination the rank-order of the original distances. Different functions, called Stress() (formula 8.1 or 8.2), Sstress(), or Strain(), may be used to measure the goodness-of-fit of the solution in reduced space. Non-metric ordinations are rarely used in palaeoecology. Early applications include Birks (1973), Gordon and Birks (1974), and Prentice (1978), whereas more recent applications include Brodersen et al. (1998, 2001), Simpson et al. (2005), Soininen and Weckström (2009), Tremblay et al. (2010), Wiklund et al. (2010), Allen et al. (2011), and Wischnewski et al. (2011). Overall, there seem to be few theoretical advantages in using NMDS in palaeoecology (Prentice 1980).

Introduction to Canonical Ordination

The methods of canonical ordination are generalisations of simple ordination methods; the ordination is forced or constrained to represent the part of the variation in a table of response variables (e.g., species abundances) that is maximally related to a set of explanatory variables (e.g., environmental variables). Canonical redundancy analysis (RDA) is the constrained form of PCA whereas canonical correspondence analysis (CCA) is the constrained form of CA. Canonical ordination is a hybrid between regression and ordination, as will be described below. The classical forms of RDA and CCA use multiple linear regression between the variables in the two data tables. Canonical ordination methods have also been described that look for polynomial relationships between the dependent (response) and explanatory (predictor) variables. Tests of statistical significance of the relationship between the species and environmental data can be performed in canonical ordination, just as in multiple regression.

Canonical ordination methods are widely used in palaeolimnological studies. The Birks et al. (1998) bibliography on the use of canonical analysis in ecology for the period 1986–1996 contained 804 titles, 96 of which are in the fields of palaeobotany, palaeoecology, and palaeolimnology. Applications of these methods in palaeoecology (Table 8.1) try to establish links between species assemblages and environmental factors, or use canonical analysis as a first step in calibration studies to guide the selection of significant environmental variables that may be estimated by biological assemblages (ter Braak and Juggins 1993; Birks 1995) (see Juggins and Birks 2012: Chapter 14). Palaeolimnologists also try to estimate how much of the assemblage variation can be attributed to different groups of environmental factors, such as sediment types, geology, climatic factors, geography, topography, land-use, etc. (e.g., Lotter et al. 1997; Simpson and Hall 2012: Chapter 19).

Canonical Ordination Methods

The types of canonical ordination methods that palaeoecologists are mostly interested in are redundancy analysis (RDA) and canonical correspondence analysis (CCA). They are asymmetric forms of analysis, combining regression and ordination. These analyses focus on a clearly identified table of response variables (containing, very often, assemblage composition data), which is related to a table of explanatory variables (e.g., environmental variables). Other forms of canonical analysis are available in the major statistical packages: canonical correlation analysis (CCorA) and canonical variates analysis (CVA), also called multiple discriminant analysis (see ter Braak 1987a). These forms will not be discussed in this chapter because they do not treat matrix Y as a response data table; they are briefly outlined in Chapter 2 (this volume). Other more general approaches to the linking of two or more ecological data tables are co-inertia analysis (Dolédec and Chessel 1994; Dray et al. 2003) and multiple factor analysis (Escofier and Pagès 1994); they allow the analysis of a wide range of different data tables (Dray et al. 2003), with no constraints on the number of species and environmental variables in relation to the number of objects or on the role of the different tables as response and predictor variables. All these methods of canonical analysis are described and illustrated in Chapter 6 of Borcard et al. (2011).

In the asymmetric forms of canonical analysis, after regressing the Y variables on X, an ordination is computed on the regression fitted values. The preliminary questions that have to be resolved before ordination (Table 8.1) will also have to be answered about the data in Y prior to canonical ordination: the choice of transformations for the physical or species data, and of an appropriate distance measure among objects. The table of explanatory variables, called X, contains the independent (or constraining) variables used in the regression part of the analysis. The decisions normally made prior to or during regression will have to be considered prior to canonical analysis: transformation of the regressors; coding of multi-state qualitative variables into dummy (binary or orthogonal) variables; coding the factors of experiments into (orthogonal) dummy variables; and choice of a linear or polynomial regression model. We do not have to worry about (multi)normality since the tests of significance in canonical analysis are carried out by Monte Carlo permutation tests (see Legendre and Legendre 1998, 2012; Lepš and Šmilauer 2003; Birks 2012a: Chapter 2; Lotter and Anderson 2012: Chapter 18).
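A permutation test of the relationship between Y and X can be sketched as follows. The sketch makes several assumptions that are not taken from the text: it uses a pseudo-F statistic (explained sum of squares per explanatory variable divided by residual sum of squares per residual degree of freedom), it permutes the rows of Y freely, it assumes that X has full column rank, and the data are simulated. The function names are hypothetical.

```python
import numpy as np

def pseudo_F(Y, X):
    """Pseudo-F statistic for the multivariate regression of Y on X (assumed statistic)."""
    n, m = X.shape                       # m explanatory variables, assumed linearly independent
    Yc = Y - Y.mean(axis=0)
    Xc = X - X.mean(axis=0)
    B, *_ = np.linalg.lstsq(Xc, Yc, rcond=None)
    Yhat = Xc @ B                        # fitted values
    ss_fit = (Yhat**2).sum()
    ss_res = ((Yc - Yhat)**2).sum()
    return (ss_fit / m) / (ss_res / (n - m - 1))

def permutation_test(Y, X, n_perm=199, seed=0):
    """Permute the rows of Y and count statistics at least as large as the observed one."""
    rng = np.random.default_rng(seed)
    F_obs = pseudo_F(Y, X)
    n_ge = sum(pseudo_F(Y[rng.permutation(len(Y))], X) >= F_obs
               for _ in range(n_perm))
    # The observed value is counted among the permutations
    return F_obs, (n_ge + 1) / (n_perm + 1)

# Simulated example: three response variables linearly related to two predictors
rng = np.random.default_rng(42)
X = rng.normal(size=(30, 2))
coef = np.array([[1.0, 0.5, 0.0], [0.0, 1.0, 2.0]])
Y = X @ coef + 0.5 * rng.normal(size=(30, 3))
F_obs, p_value = permutation_test(Y, X)
```

Since the reference distribution is built from the data themselves, no assumption of (multi)normality is required.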

Linear RDA

Canonical redundancy analysis (RDA) combines two steps: linear regression and PCA. The analysis is schematically described in Fig. 8.2. (1) Each variable (column) of Y is regressed on X, which contains the explanatory variables. The fitted values of the multiple regressions are assembled in matrix Ŷ, whereas the residuals are placed in the columns of matrix \( {\mathbf{Y}_{\mathrm{res}}} \). Ŷ thus contains that part of Y that is explained by linear models of X, whereas \( {\mathbf{Y}_{\mathrm{res}}} \) contains that part of Y that is linearly independent of (or orthogonal to) X. At this point, the matrices Ŷ and \( {\mathbf{Y}_{\mathrm{res}}} \) have the same number of columns as Y. (2) The matrix of fitted values Ŷ usually contains (much) less information, measured by its total variance, than Y. A PCA of Ŷ is computed to reduce its dimensionality, producing eigenvalues (that are now called canonical eigenvalues), a matrix of eigenvectors U (now called canonical eigenvectors, which will be used as the matrix of response variable scores for the biplot), and a matrix Z of principal components, obtained in the same way as matrix F of the principal components in PCA, which contains the sampling unit scores for the ordination biplot; for details, see the description of PCA in the previous section on Simple ordination methods. In some applications, ecologists prefer to use, for biplots, the sampling unit scores obtained by the operation F = YU (upper-right in Fig. 8.2). These scores are not the direct result of the PCA of the fitted values Ŷ; they are based on the original data Y, which contain the fitted values plus the residuals (noise). These sampling unit scores (column vectors of matrix F) are not orthogonal to one another, unlike the vectors of matrix Z, which are orthogonal as in any PCA. (3) In some applications, the effect of the explanatory variables on Y is already well documented and understood; for instance, the effect of water depth on aquatic macroinvertebrates. RDA can be used to go beyond what is already known, by examining the residuals of the regression, found in matrix \( {\mathbf{Y}_{\mathrm{res}}} \). In those cases, one is interested in obtaining an ordination of the matrix of residual variation: a PCA is performed on matrix \( {\mathbf{Y}_{\mathrm{res}}} \), as shown in the lower part of Fig. 8.2.

Fig. 8.2 Redundancy analysis involves two steps: regression, which produces fitted values \( {\mathbf{\hat{Y}}} \) and residuals \( {\mathbf{Y}_{\mathrm{res}}} \), followed by principal component analysis (PCA) of the matrix of fitted values. PCA of the matrix of residuals may also be of interest (Modified from Legendre and Legendre 1998). Var. = variables
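The two steps of linear RDA, multivariate regression followed by PCA of the fitted values, can be sketched as below. This is a minimal illustration of the global (regression plus PCA) approach and not the code of any specific program; the function name `rda`, the tolerance for dropping null eigenvalues, and the simulated data are assumptions.

```python
import numpy as np

def rda(Y, X):
    """Sketch of linear RDA: regression of Y on X, then PCA of the fitted values."""
    n = Y.shape[0]
    Yc = Y - Y.mean(axis=0)                    # centre response variables
    Xc = X - X.mean(axis=0)                    # centre explanatory variables
    # Step 1: regress every column of Y on X
    B, *_ = np.linalg.lstsq(Xc, Yc, rcond=None)
    Yhat = Xc @ B                              # fitted values
    Yres = Yc - Yhat                           # residuals, orthogonal to X
    # Step 2: PCA of the fitted values
    S = Yhat.T @ Yhat / (n - 1)
    eigvals, U = np.linalg.eigh(S)
    order = np.argsort(eigvals)[::-1]
    eigvals, U = eigvals[order], U[:, order]
    keep = eigvals > 1e-10 * eigvals[0]        # assumed tolerance for null axes
    eigvals, U = eigvals[keep], U[:, keep]     # canonical eigenvalues, eigenvectors
    Z = Yhat @ U                               # sampling unit scores from fitted values
    F = Yc @ U                                 # sampling unit scores from original data
    return eigvals, U, Z, F, Yres

# Simulated example: 20 sampling units, 5 response and 2 explanatory variables
rng = np.random.default_rng(3)
Y = rng.normal(size=(20, 5))
X = rng.normal(size=(20, 2))
Y[:, 0] += 2 * X[:, 0]                         # make one response depend on X
eigvals, U, Z, F, Yres = rda(Y, X)
```

A PCA of the returned `Yres` matrix would give the ordination of the residual variation described in step (3).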

Scalings in RDA biplots follow the same rules as in PCA: one may be primarily interested in an ordination preserving the Euclidean distances among sampling unit fitted values (distance biplot), or in illustrating the correlations among the columns of Ŷ (correlation biplot) (ter Braak 1994). The explanatory environmental variables can also be represented in the ordination diagrams, which become triplots, by using their correlations with the canonical ordination axes. The correlation coefficients must be slightly modified to account for the stretching of the canonical ordination axes; the biplot scores of environmental variables are obtained by multiplying the correlation coefficients by \( \sqrt{\lambda_k / \text{total variance in } \mathbf{Y}} \). States of binary or multistate qualitative variables can be usefully represented in triplots by the centroids (mean coordinates) of the sampling units that possess the given state (ter Braak 1994).

The number of canonical axes is limited by either the number of variables in Y or the number of variables in X. Example 1: if Y contains a single variable, regressing it on X produces a single vector of fitted values and, hence, a single canonical axis. Example 2: if X contains a single column, regressing Y (which contains p columns) on X will produce a matrix Ŷ with p columns, but since they are the result of regression on the same explanatory variable, matrix Ŷ is actually one-dimensional. So, the PCA will come up with a single non-zero eigenvalue that will contain all the variance of Y explained by X. The analysis of a matrix Y (n × p) by a matrix X (n × m) produces at most (n − 1), p, or m canonical axes, whichever is the minimum.
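The limit on the number of canonical axes can be illustrated by computing the rank of the matrix of fitted values, which equals the number of non-zero canonical eigenvalues. The helper name `n_canonical_axes` and the random data are assumptions made for this sketch.

```python
import numpy as np

def n_canonical_axes(Y, X):
    """Rank of the fitted values from the regression of centred Y on centred X."""
    Yc = Y - Y.mean(axis=0)
    Xc = X - X.mean(axis=0)
    B, *_ = np.linalg.lstsq(Xc, Yc, rcond=None)
    return int(np.linalg.matrix_rank(Xc @ B))

rng = np.random.default_rng(1)
n = 12
Y = rng.normal(size=(n, 5))                      # p = 5 response variables
X = rng.normal(size=(n, 3))                      # m = 3 explanatory variables

axes_single_y = n_canonical_axes(Y[:, :1], X)    # Example 1: a single response variable
axes_single_x = n_canonical_axes(Y, X[:, :1])    # Example 2: a single explanatory variable
axes_general = n_canonical_axes(Y, X)            # limited here by m

# Few objects: n = 4, p = 6, m = 5, so that (n - 1) is the limiting value
Y_small = rng.normal(size=(4, 6))
X_small = rng.normal(size=(4, 5))
axes_small_n = n_canonical_axes(Y_small, X_small)
```

With data in general position, each call returns the minimum of (n − 1), p, and m.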

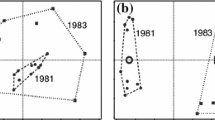

Like PCA, RDA can be tricked into preserving some distance that is appropriate to assemblage composition data, instead of the Euclidean distance (Fig. 8.3). Figure 8.3b shows that assemblage composition data can be transformed using Eqs. 8.5 or 8.7, 8.8 and 8.9 (transformation-based RDA, or tb-RDA, approach). RDA computed on data transformed by these equations will actually preserve the chord, chi-square, profile, or Hellinger distance among sites. One can also directly compute one of the distance functions appropriate for assemblage composition data (Eqs. 8.15, 8.16, 8.17, 8.18, 8.19, 8.20, 8.21, 8.22, 8.23 and 8.24), carry out a principal coordinate analysis of the distance matrix, and use all the PCoA eigenvectors as input to RDA (Fig. 8.3c). This is the distance-based RDA approach (db-RDA) advocated by Legendre and Anderson (1999).

Fig. 8.3 Comparison of (a) classical redundancy analysis (RDA) and canonical correspondence analysis (CCA) to (b, c) alternative approaches forcing canonical analyses to preserve other distances adapted to assemblage composition data (Modified from Legendre and Gallagher 2001)
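As an illustration of the transformation-based approach, the sketch below applies the chord and Hellinger transformations to a small table of counts, assuming their usual definitions (division of each row by its norm, and square root of the relative abundances, respectively). The count table is hypothetical and every row is assumed to contain at least one non-zero value. The Euclidean distance computed on the Hellinger-transformed data is, by construction, the Hellinger distance among sites.

```python
import numpy as np

def hellinger(Y):
    """Hellinger transformation: square root of the row-wise relative abundances."""
    Y = np.asarray(Y, dtype=float)
    return np.sqrt(Y / Y.sum(axis=1, keepdims=True))

def chord(Y):
    """Chord transformation: each row is scaled to unit length."""
    Y = np.asarray(Y, dtype=float)
    return Y / np.sqrt((Y**2).sum(axis=1, keepdims=True))

# Hypothetical assemblage counts: 3 sites by 3 taxa
counts = np.array([[10, 0, 5],
                   [2, 8, 0],
                   [0, 3, 7]])
H = hellinger(counts)
C = chord(counts)

# Euclidean distances among the transformed rows = Hellinger distances among sites
D_hel = np.sqrt(((H[:, None, :] - H[None, :, :])**2).sum(axis=2))
```

The transformed table (`H` or `C`) would then replace Y in an ordinary RDA.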

Partial RDA offers a way of controlling for the effect of a third data-set, called the matrix of covariables W. Computationally, the analysis first calculates the residuals \( {\mathbf{X}_{\mathrm{res}}} \) of the explanatory variables X on the covariables W; then an RDA of Y on \( {\mathbf{X}_{\mathrm{res}}} \) is computed; see details in Legendre and Legendre (2012). This is quite different from the PCA of \( {\mathbf{Y}_{\mathrm{res}}} \) mentioned at the end of the introductory paragraph of the present section. Partial RDA is a generalisation of partial linear regression to multivariate data, for example, species assemblages. It is used in many different situations, including the following: (1) controlling for the effect of W (e.g., geographic positions) in tests of the relationship between Y (e.g., modern biological assemblages) and X (e.g., modern environmental data) (Peres-Neto and Legendre 2010); (2) determining the partial, singular effect of an explanatory variable of interest (e.g., environmental), and testing its significance, while controlling for the effect of all the other explanatory variables in the study; (3) testing the significance of single factors and interaction terms in two-way or multi-way experimental designs where species assemblages are the response variable (see Testing hypotheses in (multi-)factorial experiments below); (4) testing the significance of individual fractions in variation partitioning (see Spatial or temporal analysis through variation partitioning below).
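The computation outlined above, residuals of X on W followed by an RDA of Y on those residuals, can be sketched as follows. The function names and the simulated data are hypothetical, and the sketch returns only the canonical eigenvalues; it is not meant to reproduce the output of any particular program.

```python
import numpy as np

def residualise(M, W):
    """Residuals of the centred columns of M after regression on the centred covariables W."""
    Mc = M - M.mean(axis=0)
    Wc = W - W.mean(axis=0)
    B, *_ = np.linalg.lstsq(Wc, Mc, rcond=None)
    return Mc - Wc @ B

def partial_rda_eigenvalues(Y, X, W):
    """Canonical eigenvalues of the RDA of Y on the residuals of X given W."""
    n = Y.shape[0]
    Xres = residualise(X, W)                     # X with the effect of W removed
    Yc = Y - Y.mean(axis=0)
    B, *_ = np.linalg.lstsq(Xres, Yc, rcond=None)
    Yhat = Xres @ B                              # fitted values of the partial model
    eigvals = np.linalg.eigvalsh(Yhat.T @ Yhat / (n - 1))[::-1]
    return eigvals[eigvals > 1e-10 * eigvals[0]] # assumed tolerance for null axes

# Simulated example: X is partly correlated with the covariables W
rng = np.random.default_rng(4)
n = 25
W = rng.normal(size=(n, 2))
X = 0.5 * W[:, :1] + rng.normal(size=(n, 2))
Y = X @ rng.normal(size=(2, 4)) + W @ rng.normal(size=(2, 4)) + rng.normal(size=(n, 4))

Xres = residualise(X, W)
eigvals = partial_rda_eigenvalues(Y, X, W)
```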

In terms of algorithms, RDA and CCA can be obtained either by global regression and PCA, as described here, or by the iterative algorithm described by ter Braak (1987a) and used in the CANOCO program. In large analyses, the global algorithm produces more precise results when many canonical ordination axes are to be extracted and used in further analyses; the iterative algorithm is computationally faster when one is only interested in obtaining the first few (4–8) canonical axes.

Linear CCA

Canonical correspondence analysis (CCA) (ter Braak 1986, 1987b) differs from RDA in only two respects. First, it is the matrix Q of CA (see Simple ordination methods above) that is used as the response data matrix, instead of the data matrix Y. This ensures that the chi-square distance is preserved by CCA among the rows of the response data table and the assumption of unimodal species responses is made as in CA. Second, the regression step is carried out using weights \( {p_{{i+}}} \), where \( {p_{{i+}}} \) is the sum of frequencies in row i (\( {y_{{i+}}} \)) divided by the grand total (\( {y_{{++}}} \)) of all frequencies in the table. Using these weights is tantamount to repeating each row of the response and explanatory data tables \( {y_{{i+}}} \) times before computing the regressions. Scalings for biplots or triplots are the same as in CA (see ter Braak and Verdonschot 1995). Just as one can compute a partial RDA, it is possible to perform a partial CCA (ter Braak 1988b; ter Braak and Prentice 1988). Odgaard (1994) provides an illustrative application of partial CA in palaeoecology and Bradshaw et al. (2005) provide a detailed application of partial CCA in palaeolimnology.
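The statement about row weights can be verified on a small example. The sketch below does not implement CCA; it only compares a weighted least-squares regression, with weights equal to the relative row sums, to an ordinary regression computed after repeating each row as many times as its row sum. The row totals, the explanatory variables, and the response column are hypothetical values chosen for the illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
row_totals = np.array([3, 1, 4, 2, 5])              # hypothetical row sums y_i+
X = np.column_stack([np.ones(5),                    # intercept
                     rng.normal(size=(5, 2))])      # two explanatory variables
q = rng.normal(size=5)                              # one column of the response table

# Weighted regression with weights p_i+ = y_i+ / y_++
w = row_totals / row_totals.sum()
sw = np.sqrt(w)[:, None]
b_weighted, *_ = np.linalg.lstsq(sw * X, sw[:, 0] * q, rcond=None)

# Unweighted regression after repeating row i exactly y_i+ times
X_rep = np.repeat(X, row_totals, axis=0)
q_rep = np.repeat(q, row_totals)
b_repeated, *_ = np.linalg.lstsq(X_rep, q_rep, rcond=None)

coefficients_agree = np.allclose(b_weighted, b_repeated)
```

The two sets of regression coefficients coincide because rescaling all weights by a common constant (here the grand total) does not change a weighted least-squares solution.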