Abstract

In this paper we provide a simple random-variable example of inconsistent information, and analyze it using three different approaches: Bayesian, quantum-like, and negative probabilities. We then show that, at least for this particular example, both the Bayesian and the quantum-like approaches have less normative power than the negative probabilities one.


Keywords

- Expert Judgment

- Joint Probability Distribution

- Quantum Formalism

- Paraconsistent Logic

- Negative Probability


1 Introduction

In recent years the quantum-mechanical formalism (mainly from non-relativistic quantum mechanics) has been used to model economic and decision-making processes (see [1, 2] and references therein). The success of such models may originate from several related issues. First, the quantum formalism leads to a propositional structure that does not conform to classical logic [3]. Second, the probabilities of quantum observables do not satisfy Kolmogorov’s axioms [4]. Third, quantum mechanics describes experimental outcomes that are highly contextual [5–9]. Such issues are connected because the logic of quantum mechanics, represented by a quantum lattice structure [3], leads to upper probability distributions and thus to non-Kolmogorovian measures [10–12], while contextuality leads to the nonexistence of a joint probability distribution [13, 14].

Both from a foundational and from a practical point of view, it is important to ask which aspects of quantum mechanics are actually needed for social-science models. For instance, the Hilbert space formalism leads to non-standard logic and probabilities, but the converse is not true: one cannot derive the Hilbert space formalism solely from weaker axioms of probabilities or from quantum lattices. Furthermore, the quantum formalism yields non-trivial results such as the impossibility of superluminal signaling with entangled states [15]. These types of results are not necessary for a theory of social phenomena [16], and we should ask what are the minimalistic mathematical structures suggested by quantum mechanics that reproduce the relevant features of quantum-like behavior.

In a previous article, we used reasonable neurophysiological assumptions to create a neural-oscillator model of behavioral Stimulus-Response theory [17]. We then showed how to use such a model to reproduce quantum-like behavior [18]. Finally, in a subsequent article, we remarked that the same neural-oscillator model could be used to represent a set of observables that could not correspond to quantum mechanical observables [19], in a sense that we formalize later in Sect. 3. These results suggest that one of the main quantum features relevant to social modeling is contextuality, represented by a non-Kolmogorovian probability measure, and that imposing a quantum formalism may be too restrictive. This non-Kolmogorovian characteristic would arise when two contexts providing incompatible information about observable quantities are present.

Here we focus on the incompatibility of contexts as the source of a violation of standard probability theory. We then ask the following question: what formalisms are normative with respect to such incompatibility? This question comes from the fact that, in its origin, probability was devised as a normative theory, not a descriptive one. For instance, Richard Jeffrey [20] explains that “the term ‘probable’ (Latin probabilis) meant approvable, and was applied in that sense, univocally, to opinion and to action. A probable action or opinion was one such as sensible people would undertake or hold, in the circumstances.” Thus, it should come as no surprise that humans actually violate the rules of probability, as shown in many psychology experiments. However, if a person is to be considered “rational,” according to Boole, he/she should follow the rules of probability theory.

Since inconsistent information, as mentioned above, violates the theory of probability, how do we provide a normative theory of rational decision-making? There are many approaches, such as Bayesian models or the Dempster-Shafer theory, but here we focus on two non-standard ones: quantum-like and negative probability models. We start by presenting a simple case where expert judgments lead to inconsistencies. Then, we approach this problem first with a standard Bayesian probabilistic method, followed by a quantum model. Finally, we use negative probability distributions as a third alternative. We then compare the different outcomes of each approach, and show that the use of negative probabilities seems to provide the most normative power among the three. We end this paper with some comments.

2 Inconsistent Information

As mentioned, the use of the quantum formalism in the social sciences originates from the observation that Kolmogorov’s axioms are violated in many situations [1, 2]. Such violations in decision-making seem to indicate a departure from a rational view, and in particular to point to thought processes that may involve irrational or contradictory reasoning, as is the case in non-monotonic reasoning. Thus, when dealing with quantum-like social phenomena, we are frequently dealing with some type of inconsistent information, usually arrived at as the end result of some non-classical (or incorrect, to some) reasoning. In this section we examine the case where inconsistency is present from the beginning.

Though in everyday life inconsistent information abounds, standard classical logic has difficulties dealing with it. For instance, it is a well-known fact that if we have a contradiction, i.e. \( A \& \left( \lnot A\right) \), then the logic becomes trivial, in the sense that any formula in such a logic is a theorem. To deal with this difficulty, logicians have proposed modified logical systems (e.g. paraconsistent logics [21]). Here, we will discuss how to deal with inconsistencies not from a logical point of view, but instead from a probabilistic one.

Inconsistencies of expert judgments are often represented in the probability literature by measures corresponding to the experts’ subjective beliefs [22]. It is frequently argued that this subjective nature is necessary, as each expert makes statements about outcomes that are, in principle, available to all experts, and disagreements come not from sampling a certain probability space, but from personal beliefs. For example, let us assume that two experts, Alice and Bob, are examining whether to recommend the purchase of stocks in company \(X\), and each gives different recommendations. Such differences do not emerge from objective data (i.e. the actual future prices of \(X\)), but from each expert’s interpretations of current market conditions and of company \(X\). In some cases the inconsistencies are evident, as when, say, Alice recommends buy, and Bob recommends sell; in this case the decision maker would have to reconcile the discrepancies.

The above example provides a simple case. A more subtle one is when the experts have inconsistent beliefs that seem to be consistent. For example, each expert, with a limited access to information, may form, based on different contexts, locally consistent beliefs without directly contradicting other experts. But when we take the totality of the information provided by all of them and try to arrive at possible inferences, we reach contradictions. Here we want to create a simple random-variable model that incorporates expert judgments that are locally consistent but globally inconsistent. This model, inspired by quantum entanglement, will be used to show the main features of negative probabilities as applied to decision making.

Let us start with three \(\pm 1\)-valued random variables, \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\), with zero expectation. If such random variables have correlations that are too strong then there is no joint probability distribution [13]. To see this, imagine the extreme case where the correlations between the random variables are \(E\left( \mathbf {XY}\right) =E\left( \mathbf {YZ}\right) =E\left( \mathbf {XZ}\right) =-1.\) Imagine that in a given trial we draw \(\mathbf {X}=1\). From \(E\left( \mathbf {XY}\right) =-1\) it follows that \(\mathbf {Y}=-1\), and from \(E\left( \mathbf {YZ}\right) =-1\) that \(\mathbf {Z}=1\). But this is in contradiction with \(E\left( \mathbf {XZ}\right) =-1\), which requires \(\mathbf {Z}=-1\). Of course, the problem is not that there is a mathematical inconsistency, but that it is not possible to find a probabilistic sample space for which the variables \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) have such strong correlations. Another way to think about this is that the \(\mathbf {X}\) measured together with \(\mathbf {Y}\) is not the same one as the \(\mathbf {X}\) measured with \(\mathbf {Z}\): values of \(\mathbf {X}\) depend on its context.
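The contradiction in the extreme case can also be checked by brute force. The following is a minimal Python sketch, not part of the original analysis; the function name `max_anticorrelated_pairs` is introduced here for illustration only. It enumerates all eight deterministic assignments of the three variables and counts how many of the pairwise products can equal \(-1\) at once.

```python
from itertools import product

def max_anticorrelated_pairs():
    """Largest number of pairs among (XY, YZ, XZ) whose product is -1
    in a single deterministic assignment of X, Y, Z in {-1, +1}."""
    best = 0
    for x, y, z in product((-1, 1), repeat=3):
        count = sum(1 for prod in (x * y, y * z, x * z) if prod == -1)
        best = max(best, count)
    return best

# If this is smaller than 3, no single assignment realizes
# XY = YZ = XZ = -1, which is the contradiction described above.
print(max_anticorrelated_pairs())
```

Since no sample point can carry all three perfect anticorrelations, no probability distribution over sample points can reproduce them either.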

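The above example posits a deterministic relationship between all random variables, but the inconsistencies persist even when weaker correlations exist. In fact, Suppes and Zanotti [13] proved that a joint probability distribution for \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) exists if and only if

\[ -1\le E\left( \mathbf {XY}\right) +E\left( \mathbf {YZ}\right) +E\left( \mathbf {XZ}\right) \le 1+2\min \left\{ E\left( \mathbf {XY}\right) ,E\left( \mathbf {YZ}\right) ,E\left( \mathbf {XZ}\right) \right\} . \tag{1} \]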

The above case violates inequality (1).
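As an illustration, the existence condition can be tested numerically. The sketch below assumes the Suppes-Zanotti condition in the form \(-1\le s\le 1+2\min \) (with \(s\) the sum of the three correlations) and compares it with a direct check: for zero-mean \(\pm 1\)-valued variables, every atom can be written as \(\left( 1+xy\,E(\mathbf {XY})+xz\,E(\mathbf {XZ})+yz\,E(\mathbf {YZ})+xyz\,t\right) /8\), where \(t\) stands for the triple moment, so a proper joint exists exactly when some \(t\) keeps all atoms nonnegative. The function names are ours, not the paper's.

```python
from itertools import product

def suppes_zanotti_holds(exy, exz, eyz):
    """Check -1 <= sum <= 1 + 2*min for the three pairwise correlations."""
    total = exy + exz + eyz
    return -1 <= total <= 1 + 2 * min(exy, exz, eyz)

def triple_moment_interval(exy, exz, eyz):
    """Interval (lo, hi) of t = E(XYZ) keeping every atom nonnegative.

    With zero means, each atom is
    p(x, y, z) = (1 + xy*exy + xz*exz + yz*eyz + xyz*t) / 8,
    so a proper joint exists exactly when lo <= hi.
    """
    lo, hi = float("-inf"), float("inf")
    for x, y, z in product((-1, 1), repeat=3):
        base = 1 + x * y * exy + x * z * exz + y * z * eyz
        if x * y * z == 1:
            lo = max(lo, -base)  # atom >= 0 requires t >= -base
        else:
            hi = min(hi, base)   # atom >= 0 requires t <= base
    return lo, hi

# Extreme case from the text: all three correlations equal to -1.
print(suppes_zanotti_holds(-1, -1, -1))
lo, hi = triple_moment_interval(-1, -1, -1)
print(lo <= hi)
```

Both checks agree on every input we tried, which is expected: the four distinct values of `base` are nonnegative precisely when the inequality holds.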

Let us now consider the example we want to analyze in detail. Imagine \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) as corresponding to future outcomes in a company’s stocks. For instance, \(\mathbf {X}=1\) corresponds to an increase of the stock value of company \(X\) in the following day, while \(\mathbf {X}=-1\) a decrease, and so on. Three experts, Alice (\(A\)), Bob (\(B\)), and Carlos (\(C\)), have the following beliefs about those stocks. Alice is an expert on companies \(X\) and \(Y\), but knows little or nothing about \(Z\), so she only tells us what we don’t know: her expected correlation \(E_{A}\left( \mathbf {XY}\right) \). Bob (Carlos), on the other hand, is only an expert in companies \(X\) and \(Z\) (\(Y\) and \(Z\)), and he too only tells us about their correlations. Let us take the case where

where the subscripts refer to each expert. For such a case, the sum of the correlations is \(-1\frac{1}{2}\), and according to (1) no joint probability distribution exists. Since there is no joint, how can a rational decision-maker decide what to do when faced with the question of how to bet in the market? In particular, how can she get information about the joint probability, and specifically about the unknown triple moment \(E\left( \mathbf {XYZ}\right) \)? In the next sections we will show how we can try to answer these questions using three possible approaches: quantum, Bayesian, and signed probabilities.

3 Quantum Approach

We start with a comment about the quantum-like nature of correlations (2)–(4). The random variables \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) with correlations (2)–(4) cannot be represented by a quantum state in a Hilbert space for the observables corresponding to \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\). This claim can be expressed in the form of a simple proposition.

Proposition 1

Let \(\hat{X}\), \(\hat{Y}\), and \(\hat{Z}\) be three observables in a Hilbert space \(\mathcal{H}\) with eigenvalues \(\pm 1\), let them pairwise commute, and let the \(\pm 1\)-valued random variables \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) represent the outcomes of possible experiments performed on a quantum system \(|\psi \rangle \in \mathcal{H}\). Then, there exists a joint probability distribution consistent with all the possible outcomes of \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\).

Proof

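Because \(\hat{X}\), \(\hat{Y}\), and \(\hat{Z}\) are observables and they pairwise commute, it follows that their combinations, \(\hat{X}\hat{Y}\), \(\hat{Y}\hat{Z}\), \(\hat{X}\hat{Z}\), and \(\hat{X}\hat{Y}\hat{Z}\) are also observables, and they commute with each other. For instance,

\[ \left( \hat{X}\hat{Y}\right) ^{\dagger }=\hat{Y}^{\dagger }\hat{X}^{\dagger }=\hat{Y}\hat{X}=\hat{X}\hat{Y}. \]

Furthermore,

\[ \left[ \hat{X}\hat{Y},\hat{Y}\hat{Z}\right] =\hat{X}\hat{Y}\hat{Y}\hat{Z}-\hat{Y}\hat{Z}\hat{X}\hat{Y}=\hat{X}\hat{Y}\hat{Y}\hat{Z}-\hat{X}\hat{Y}\hat{Y}\hat{Z}=0. \]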

Therefore, quantum mechanics implies that all three observables \(\hat{X}\), \(\hat{Y}\), and \(\hat{Z}\) can be simultaneously measured. Since this is true, for the same state \(|\psi \rangle \) we can create a full data table with all three values of \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) (i.e., no missing values), which implies the existence of a joint.
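The content of Proposition 1 can be illustrated numerically in a simple special case. The sketch below assumes that the three observables are diagonal in a shared eigenbasis labelled by their joint eigenvalues \((x,y,z)\) (pairwise-commuting Hermitian operators can always be diagonalized simultaneously), draws a random state, and reads off the Born-rule weights as a joint distribution. The helper names `born_joint` and `correlations` are hypothetical and introduced only for this example.

```python
import random
from itertools import product

def born_joint(seed=0):
    """Joint distribution of (X, Y, Z) for a random state, assuming the
    three observables are diagonal in a shared eigenbasis."""
    rng = random.Random(seed)
    basis = list(product((-1, 1), repeat=3))  # joint eigenvalues (x, y, z)
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in basis]
    norm = sum(abs(a) ** 2 for a in amps)
    # Born rule: probability of each joint outcome is |amplitude|^2.
    return {b: abs(a) ** 2 / norm for b, a in zip(basis, amps)}

def correlations(p):
    """Pairwise correlations E(XY), E(XZ), E(YZ) of a joint distribution."""
    exy = sum(w * x * y for (x, y, z), w in p.items())
    exz = sum(w * x * z for (x, y, z), w in p.items())
    eyz = sum(w * y * z for (x, y, z), w in p.items())
    return exy, exz, eyz

p = born_joint()
exy, exz, eyz = correlations(p)
# The sum of correlations of a proper joint can never fall below -1.
print(exy + exz + eyz >= -1 - 1e-12)
```

Whatever state is drawn, the weights are nonnegative and sum to one, so a single state with commuting observables cannot reproduce correlations whose sum is below \(-1\).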

So, what would a quantum-like model of correlations (2)–(4) look like? The above result depends on the use of the same quantum state \(|\psi \rangle \) throughout the many runs of the experiment, and to circumvent it we would need to use different states for the system. In other words, if we want to use a quantum formalism to describe the correlations (2)–(4), a \(|\psi \rangle \) would have to be selected for each run such that a different state would be used when we measure \(\hat{X}\hat{Y}\), e.g. \(|\psi \rangle _{xy}\), than when we measure \(\hat{X}\hat{Z}\), e.g. \(|\psi \rangle _{xz}\). Then, the quantum description could be accomplished by the state

This state would model the correlations in the following way. When Alice makes her choice, she uses a projector onto her “state of knowledge” \(\hat{P}_{A}=|A\rangle \langle A|\), and gets the correlation \(E_{A}\left( \mathbf {XY}\right) \), and similarly for Bob and Carlos.

In the above example, all correlations and expectations are given, and the only unknown is the triple moment \(E\left( \mathbf {XYZ}\right) \). Furthermore, since we do not have a joint probability distribution, we cannot compute the range of values for such a moment based on the experts’ beliefs. But the question still remains as to what would be our best bet given what we know, i.e., what is our best guess for \(E\left( \mathbf {XYZ}\right) \). The quantum mechanical approach does not address this question, as it is not clear how to get it from the formalism given that any superposition of the states preferred by Alice, Bob, and Carlos is acceptable (i.e., we can choose any values of \(c_{A}\), \(c_{B}\), and \(c_{C}\)).

4 Bayesian Approach

Here we focus again on the unknown triple moment. As we mentioned before, there are many different ways to approach this problem, such as paraconsistent logics, consensus reaching, or information revision to restore consistency. Common to all those approaches is the complexity of how to resolve the inconsistencies, often with the aid of ad hoc assumptions [22]. Here we show how a Bayesian approach would deal with the issue [23, 24].

In the Bayesian approach, a decision maker, Deanna (\(D\)), needs to assess what the joint probability distribution is from a set of inconsistent expectations. To set the notation, let us first look at the case when there is only one expert. Let \(P_{A}(x)=P_{A}(\mathbf {X}=x|\delta _{A})\) be the probability assigned to event \(x\) by Alice conditioned on Alice’s knowledge \(\delta _{A}\), and let \(P_{D}(x)=P_{D}(\mathbf {X}=x|\delta _{D})\) be Deanna’s prior distribution, also conditioned on her knowledge \(\delta _{D}\). Furthermore, let \(\mathbf {P}_{A}=P_{A}\left( x\right) \) be a continuous random variable, \(\mathbf {P}_{A}\in [0,1],\) such that its outcome is \(P_{A}\left( x\right) \). The idea behind \(\mathbf {P}_{A}\) is that consulting an expert is similar to conducting an experiment where we sample the expert’s opinion by observing a distribution function, and therefore we can talk about the probability that an expert will give an answer for a specific sample point. Then, for this case, Bayes’s theorem can be written as

where \(P_{D}'\left( x|\mathbf {P}_{A}=P_{A}\left( x\right) \right) \) is Deanna’s posterior distribution revised to take into account the expert’s opinion. As is the case with Bayes’s theorem, the difficulty lies in determining the likelihood function \(P_{D}\left( \mathbf {P}_{A}\right) \), as well as the prior. This likelihood function is, in a certain sense, Deanna’s model of Alice, as it is what Deanna believes are the likelihoods of each of Alice’s beliefs. In other words, she should have a model of the experts. Such a model of experts is akin to giving each expert a certain measure of credibility, since an expert whose model doesn’t fit Deanna’s would be assigned lower probability than an expert whose model fits.

The extension for our case of three experts and three random variables is cumbersome but straightforward. For Alice, Bob, and Carlos, Deanna needs to have a model for each one of them, based on her prior knowledge about \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\), as well as Alice, Bob, and Carlos. Following Morris [23], we construct a set \(E\) consisting of our three experts’ joint priors:

Deanna is now faced with the problem of determining the posterior \(P'_{D}\left( x|E\right) ,\) using Bayes’s theorem, given her new knowledge of the experts’ priors.

In a Bayesian approach, the decision maker should start with a prior belief on the stocks of \(X\), \(Y\), and \(Z\), based on her knowledge. There is no recipe for choosing a prior, but let us start with the simple case where Deanna’s lack of knowledge about \(X\), \(Y\), and \(Z\) means she starts with the initial belief that all combinations of values for \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\) are equiprobable. Let us use the following notation for the probabilities of each atom: \(p_{xyz}=P\left( \mathbf {X}=+1,\mathbf {Y}=+1,\mathbf {Z}=+1\right) \), \(p_{xy\overline{z}}=P\left( \mathbf {X}=+1,\mathbf {Y}=+1,\mathbf {Z}=-1\right) \), \(p_{\overline{x}y\overline{z}}=P\left( \mathbf {X}=-1,\mathbf {Y}=+1,\mathbf {Z}=-1\right) \), and so on. Then Deanna’s prior probabilities for the atoms are

\[ p_{xyz}^{D}=p_{xy\overline{z}}^{D}=p_{x\overline{y}z}^{D}=\cdots =p_{\overline{xyz}}^{D}=\frac{1}{8}, \]

where the superscript \(D\) refers to Deanna.

When reasoning about the likelihood function, Deanna asks what would be the probable distribution of responses of Alice if somehow she (Deanna) could see the future (say, by consulting an Oracle) and find out that \(E\left( XY\right) =-1\). For such case, it would be reasonable for Alice to think it more probable to have, say, \(\overline{x}y\) than \(xy\), since she was consulted as an expert. So, in terms of the correlation \(\epsilon _{A}\), Deanna could assign the following likelihood function:

where the minus sign represents the negative, i.e. \(p_{xy\cdot }^{A}=p_{\overline{xy}\cdot }=\frac{1}{4}\left( 1+\epsilon _{A}\right) \) and \(p_{\overline{x}y\cdot }=p_{x\overline{y}\cdot }=\frac{1}{4}\left( 1-\epsilon _{A}\right) \). Notice that the choice of likelihood function is arbitrary.

Deanna’s posterior, once she knows that Alice thought the correlation to be zero (cf. (2)), constitutes, as we mentioned above, an experiment. To illustrate the computation, we find its value below, from Alice’s expectation \(E_{A}\left( \mathbf {XY}\right) =-1\). From Bayes’s theorem

where the normalization constant \(k\) is given by

and we use the notation \(p^{D|A}\) to explicitly indicate that this is Deanna’s posterior probability informed by Alice’s expectation. Similarly, we have

and

If we apply Bayes’s theorem twice more, to take into account Bob’s and Carlos’s opinions given by correlations (3) and (4), using likelihood functions similar to the one above, we compute the following posterior joint probability distribution,

and

Finally, from the joint, we can compute all the moments, including the triple moment, and obtain \(E\left( \mathbf {XYZ}\right) =0\).

It is interesting to notice that the triple moment from the posterior is the same as the one from the prior. This is no coincidence. Because the revisions from Bayes’s theorem only modify the values of the correlations, nothing is changed with respect to the triple moment. In fact, if we compute Deanna’s posterior distribution for any values of the correlations \(\epsilon _{A}\), \(\epsilon _{B}\), and \(\epsilon _{C}\), we obtain the same triple moment, as it comes solely from Deanna’s prior distribution. Thus, the Bayesian approach, though providing a proper distribution for the atoms, does not in any way provide further insights on the triple moment.
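The invariance of the triple moment can be checked with a short computation. The sketch below makes two assumptions that go beyond the text: the likelihood for each expert is taken proportional to \(1+\epsilon \cdot (\text {product of the pair})\), mirroring the \(\frac{1}{4}\left( 1\pm \epsilon _{A}\right) \) form quoted above, and the three sequential updates are collapsed into a single product of likelihoods. The correlation values in the usage lines are illustrative only (one assignment with Alice's correlation at zero and a total of \(-1\frac{1}{2}\)); all function names are ours.

```python
from itertools import product

def posterior(eps_a, eps_b, eps_c):
    """Deanna's posterior over the eight atoms, starting from a uniform prior.

    Assumed likelihoods (not taken from the source): each expert's
    likelihood is proportional to 1 + eps * (product of that expert's pair),
    with Alice on (X, Y), Bob on (X, Z), and Carlos on (Y, Z).
    """
    weights = {}
    for x, y, z in product((-1, 1), repeat=3):
        prior = 1 / 8
        like = (1 + eps_a * x * y) * (1 + eps_b * x * z) * (1 + eps_c * y * z)
        weights[(x, y, z)] = prior * like
    k = sum(weights.values())  # normalization constant
    if k == 0:
        raise ValueError("likelihoods rule out every atom")
    return {atom: w / k for atom, w in weights.items()}

def triple_moment(p):
    """E(XYZ) under the distribution p."""
    return sum(w * x * y * z for (x, y, z), w in p.items())

# Illustrative correlations: 0 for Alice, -1/2 for Bob, -1 for Carlos.
p = posterior(0.0, -0.5, -1.0)
print(abs(triple_moment(p)) < 1e-12)
```

The reason is a symmetry: the assumed likelihoods depend only on pairwise products, which are unchanged when all three signs are flipped, while the product \(xyz\) changes sign. A uniform prior shares that symmetry, so the posterior triple moment vanishes for any \(\epsilon _{A}\), \(\epsilon _{B}\), \(\epsilon _{C}\).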

5 Negative Probabilities

We now want to see how we can use negative probabilities to approach the inconsistencies from Alice, Bob, and Carlos. The first person to seriously consider using negative probabilities was Dirac in his Bakerian Lectures on the physical interpretation of relativistic quantum mechanics [25]. Ever since, many physicists, most notably Feynman [26], tried to use them, with limited success, to describe physical processes (see [27] or [28] and references therein). The main problem with negative probabilities is their lack of a clear interpretation, which limits their use to that of a purely computational tool. It is the goal of this section to show that, at least in the context of a simple example, negative probabilities can provide useful normative information.

Before we discuss the example, let us introduce negative probabilities in a more formal way (see Note 1). Let us propose the following modifications to Kolmogorov’s axioms.

Definition 1

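Let \(\Omega \) be a finite set, \(\mathcal{F}\) an algebra over \(\Omega \), \(p\) and \(p'\) real-valued functions, \(p:\mathcal{F}\rightarrow \mathbb {R}\), \(p':\mathcal{F}\rightarrow \mathbb {R}\), and \(M^{-}=\sum _{\omega _{i}\in \Omega }\left| p\left( \left\{ \omega _{i}\right\} \right) \right| \). Then \(\left( \Omega ,\mathcal{F},p\right) \) is a negative probability space if and only if:

A. \(\forall p'\left( M^{-}\le \sum _{\omega _{i}\in \Omega }\left| p'\left( \left\{ \omega _{i}\right\} \right) \right| \right) \),

B. \(\sum _{\omega _{i}\in \Omega }p\left( \left\{ \omega _{i}\right\} \right) =1\),

C. \(p\left( \left\{ \omega _{i},\omega _{j}\right\} \right) =p\left( \left\{ \omega _{i}\right\} \right) +p\left( \left\{ \omega _{j}\right\} \right) \), \(i\ne j\).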

Remark 1

If it is possible to define a proper joint probability distribution, then \(M^{-}=1\), and A-C are equivalent to Kolmogorov’s axioms.
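To make Remark 1 concrete, the following sketch computes \(M^{-}\) as written in Definition 1 (the sum of the absolute values of the atom probabilities) for a proper and for a signed distribution. The toy distributions and the helper names `l1_mass` and `negative_mass` are illustrative assumptions, not objects from the paper.

```python
def l1_mass(p):
    """M^- of Definition 1: sum of |p({omega})| over all atoms."""
    return sum(abs(v) for v in p.values())

def negative_mass(p):
    """Total weight carried by the negative atoms (zero if there are none)."""
    return sum(v for v in p.values() if v < 0)

# Toy distributions over three atoms; both sum to one.
proper = {"a": 0.5, "b": 0.25, "c": 0.25}
signed = {"a": 0.75, "b": 0.5, "c": -0.25}

for name, p in (("proper", proper), ("signed", signed)):
    print(name, l1_mass(p), negative_mass(p))
```

For any \(p\) that sums to one, `l1_mass(p)` equals `1 - 2 * negative_mass(p)`, so minimizing \(M^{-}\) and minimizing the magnitude of the total negative weight select the same distributions.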

Going back to our example, we have the following equations for the atoms.

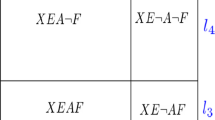

where (7) comes from the fact that all probabilities must sum to one, (8)–(10) from the zero expectations for \(\mathbf {X}\), \(\mathbf {Y}\), and \(\mathbf {Z}\), and (11)–(13) from the pairwise correlations. Of course, this problem is underdetermined, as we have seven equations and eight unknowns (we don’t know the unobserved triple moment). A general solution to (7)–(13) is

where \(\delta \) is a real number. From (14)–(17) it follows that, for any \(\delta \), some probabilities are negative. First, we notice that we can use the joint probability distribution to compute the expectation of the triple moment, which is \(E(\mathbf {XYZ})=-\frac{1}{4}-4\delta .\) Since \(-1\le E\left( \mathbf {XYZ}\right) \le 1\), it follows that \(-1\frac{1}{4}\le \delta \le \frac{3}{4}\). Of course, \(\delta \) is not determined by the lower moments, as we should expect, but axiom A requires \(M^{-}\) to be minimized. So, to minimize \(M^{-}\), we focus only on the terms that contribute to it: the negative ones. To do so, let us split the problem into several different cases. Let us start with \(\delta \ge 0\), which gives \(M_{\delta \ge 0}^{-}=-\frac{1}{8}-2\delta ,\) having a minimum of \(-\frac{1}{8}\) when \(\delta =0\). For \(-1/8\le \delta <0\), \(M_{-\frac{1}{8}\le \delta <0}^{-}=\delta -\frac{1}{8}+\delta =-\frac{1}{8},\) which is a constant value. Finally, for \(\delta <-1/8\), the mass for the negative terms is given by \(M_{\delta <-\frac{1}{8}}^{-}=\frac{1}{8}-2\delta .\) Therefore, negative mass is minimized when \(\delta \) is in the following range

Now, going back to the triple correlation, we see that by imposing a minimization of the negative mass we restrict its values to the following range:

But Eqs. (7)–(13) and the fact that the random variables are \(\pm 1\)-valued allow any correlation between \(-1\) and \(1\), and we see that the minimization of the negative mass offers further constraints to a decision maker.
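The minimization can be sketched numerically. The code below is a hedged illustration rather than the paper's own derivation: it parametrizes the signed atoms directly by the triple moment \(t=E(\mathbf {XYZ})\) instead of \(\delta \), using the standard moment expansion for zero-mean \(\pm 1\)-valued variables, and scans \(t\) over an exact rational grid on \([-1,1]\). The correlation values are illustrative assumptions (Alice's correlation at zero and a total of \(-1\frac{1}{2}\)), so the printed interval pertains to these inputs only; function names are ours.

```python
from fractions import Fraction
from itertools import product

def atoms(exy, exz, eyz, t):
    """Signed atom probabilities with zero means, the given pairwise
    correlations, and triple moment t (moment expansion on {-1, +1}^3)."""
    return {
        (x, y, z): (1 + x*y*exy + x*z*exz + y*z*eyz + x*y*z*t) / Fraction(8)
        for x, y, z in product((-1, 1), repeat=3)
    }

def l1_mass(p):
    """M^- of Definition 1: sum of absolute values of the atoms."""
    return sum(abs(v) for v in p.values())

def minimizing_interval(exy, exz, eyz, steps=64):
    """Scan t on a grid of spacing 1/steps over [-1, 1]; return the smallest
    and largest grid points attaining the minimal mass, and that mass.
    The mass is convex and piecewise linear in t, so the minimizers form
    an interval; the grid locates it only up to its resolution."""
    grid = [Fraction(k, steps) for k in range(-steps, steps + 1)]
    masses = [l1_mass(atoms(exy, exz, eyz, t)) for t in grid]
    best = min(masses)
    hits = [t for t, m in zip(grid, masses) if m == best]
    return hits[0], hits[-1], best

# Illustrative inputs (assumed, not quoted from the source).
exy, exz, eyz = Fraction(0), Fraction(-1, 2), Fraction(-1)
t_lo, t_hi, mass = minimizing_interval(exy, exz, eyz)
print(t_lo, t_hi, mass)
```

Using `Fraction` keeps every comparison exact, so ties in the minimal mass are detected reliably; with floating-point inputs a tolerance would be needed instead.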

Before we proceed, we need to address the meaning of negative probabilities, as well as the minimization of \(M^{-}\). We saw from Remark 1 that when \(M^{-}\) is one, i.e. when no atom carries negative weight, we obtain a standard probability measure. Thus, the value of \(M^{-}\) is a measure of how far \(p\) is from a proper joint probability distribution, and minimizing it is equivalent to asking \(p\) to be as close as possible to a proper joint, while at the same time keeping the marginals. This point in itself should be sufficient to suggest some normative use to negative probabilities: a negative probability (with \(M^{-}\) minimized) gives us the most rational bet we can make given inconsistent information. But the question remains as to the meaning of negative probabilities.

To give them meaning, let us redefine the probabilities from \(p\) to \(p^{*}\) such that \(p^{*}\left( \left\{ \omega _{i}\right\} \right) =0\) when \(p\left( \left\{ \omega _{i}\right\} \right) \le 0\). It follows from this redefinition that \(\sum _{\omega _{i}\in \Omega }p^{*}\left( \left\{ \omega _{i}\right\} \right) \ge 1\). This newly defined probability would not violate Kolmogorov’s nonnegativity axiom, but instead would violate B above. The \(p^{*}\)’s correspond to de Finetti’s upper probability measures, and axiom A above guarantees that such an upper probability is as close to a proper distribution as possible. Thus, according to a subjective interpretation, the negative probability atoms correspond to impossible events, and the positive ones to an upper probability measure consistent with the marginals. Once again, the triple moment corresponds to our best bet.
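The passage from \(p\) to \(p^{*}\) is easy to illustrate. The sketch below uses a toy signed distribution (an assumption made for the example, not data from the paper) and a hypothetical helper `upper_probability` that sets every non-positive atom to zero and leaves the positive atoms unchanged.

```python
def upper_probability(p):
    """p*: keep positive atoms, replace non-positive atoms by zero."""
    return {atom: (v if v > 0 else 0.0) for atom, v in p.items()}

# Toy signed distribution that sums to one.
signed = {"a": 0.75, "b": 0.5, "c": -0.25}
upper = upper_probability(signed)

# The total of p* exceeds one by exactly the removed negative weight.
print(sum(upper.values()))
```

Because the original atoms sum to one, the total of \(p^{*}\) is one plus the magnitude of the negative weight, which is why a smaller \(M^{-}\) yields an upper probability closer to a proper distribution.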

6 Conclusions

The quantum mechanical formalism has been successful in the social sciences. However, one of the questions we raised elsewhere was whether some minimalist versions of the quantum formalism which do not include a full version of Hilbert spaces and observables could be relevant [19]. In this paper we adapted the example modeled with neural oscillators in [19] to a different case where each random variable could be interpreted as outcomes of a market, and where the inconsistencies between the correlations could be interpreted as inconsistencies between experts’ beliefs. Such inconsistencies result in the impossibility to define a standard probability measure that allows a decision-maker to select an expectation for the triple moment. The computation of the triple moment from the inconsistent information was done in this paper using three different approaches: Bayesian, quantum-like, and negative probabilities.

With the Bayesian approach, we showed that not only does it rely on a model of the experts (the likelihood function), but also that no new information is gained from it, as the triple moment from the prior is not changed by the application of Bayes’s rule. Therefore, the Bayesian approach had nothing to say about the triple moment.

Similar to the Bayesian, the quantum approach also had nothing to say about the triple moment, as the arbitrariness of choices for quantum superpositions (without any additional constraints) results in all values of triple moments being possible. In fact, the quantum approach above could be similarly implemented using a contextual theory. For instance, Dzhafarov [29] proposes the use of an extended probability space where different random variables (say, \(\mathbf {X}_{z}\) and \(\mathbf {X}_{y}\)) are used, and where we then ask how similar they are to each other (for instance, what is the value of \(P\left( \mathbf {X}_{z}\ne \mathbf {X}_{y}\right) \)). However, as with the quantum case, the meaning given to \(P\left( \mathbf {X}=1\right) \) in our example does not fit with this model, as it corresponds to the expectation of an increase in the stock value of company \(X\) in the future, and the \(X\) that Alice is talking about is exactly the same one for Bob and Carlos, as it corresponds to the increase in the objective value (in the future) of a stock in the same company. Furthermore, as expected due to its similar features, this approach has the same problem as the quantum one in terms of dealing with the triple moment, but it has the advantage of making it clearer what the problem is: the triple moment does not exist because we have nine random variables instead of three, as we have three different contexts.

The negative probability approach, on the other hand, led to a nontrivial constraint on the possible values of the triple moment. When used as a computational tool, a joint probability distribution, and with it the triple moment, could be obtained. Together with the minimization of the negative mass \(M^{-}\), this joint leads to a nontrivial range of possible values for the triple moment. Given the interpretation of negative probabilities with respect to uppers, it follows that this range is our best guess as to where the values of the triple moment should lie, given our inconsistent information. Thus, negative probabilities provide the decision maker with some normative information that is unavailable in either the Bayesian or the quantum-like approaches.

Notes

- 1. We limit our discussion to finite spaces.

References

Khrennikov, A.: Ubiquitous Quantum Structure. Springer, Heidelberg (2010)

Haven, E., Khrennikov, A.: Quantum Social Science. Cambridge University Press, Cambridge (2013)

Birkhoff, G., von Neumann, J.: The logic of quantum mechanics. Ann. Math. 37(4), 823–843 (1936)

von Weizsäcker, C.F.: Probability and quantum mechanics. Br. J. Philos. Sci. 24(4), 321–337 (1973)

Bell, J.S.: On the Einstein-Podolsky-Rosen paradox. Physics 1(3), 195–200 (1964)

Bell, J.S.: On the problem of hidden variables in quantum mechanics. Rev. Mod. Phys. 38(3), 447–452 (1966)

Kochen, S., Specker, E.P.: The problem of hidden variables in quantum mechanics. In: Hooker, C.A. (ed.) The Logico-Algebraic Approach to Quantum Mechanics, pp. 293–328. D. Reidel Publishing Co., Dordrecht (1975)

Greenberger, D.M., Horne, M.A., Zeilinger, A.: Going beyond Bell’s theorem. In: Kafatos, M. (ed.) Bell’s Theorem, Quantum Theory, and Conceptions of the Universe. Fundamental Theories of Physics, vol. 37, pp. 69–72. Kluwer Academic, Dordrecht (1989)

de Barros, J.A., Suppes, P.: Inequalities for dealing with detector inefficiencies in Greenberger-Horne-Zeilinger type experiments. Phys. Rev. Lett. 84, 793–797 (2000)

Holik, F., Plastino, A., Sánz, M.: A discussion on the origin of quantum probabilities. arXiv:1211.4952, November 2012

de Barros, J.A., Suppes, P.: Probabilistic results for six detectors in a three-particle GHZ experiment. In: Bricmont, J., Dürr, D., Galavotti, M.C., Ghirardi, G., Petruccione, F., Zanghi, N. (eds.) Chance in Physics. Lecture Notes in Physics, vol. 574, pp. 213–223. Springer, Berlin (2001)

de Barros, J.A., Suppes, P.: Probabilistic inequalities and upper probabilities in quantum mechanical entanglement. Manuscrito 33, 55–71 (2010)

Suppes, P., Zanotti, M.: When are probabilistic explanations possible? Synthese 48(2), 191–199 (1981)

Suppes, P., de Barros, J.A., Oas, G.: A collection of probabilistic hidden-variable theorems and counterexamples. In: Pratesi, R., Ronchi, L. (eds.) Waves, Information, and Foundations of Physics: A Tribute to Giuliano Toraldo di Francia on his 80th Birthday. Italian Physical Society, Italy (1996)

Dieks, D.: Communication by EPR devices. Phys. Lett. A 92(6), 271–272 (1982)

de Barros, J.A., Suppes, P.: Quantum mechanics, interference, and the brain. J. Math. Psychol. 53(5), 306–313 (2009)

Suppes, P., de Barros, J.A., Oas, G.: Phase-oscillator computations as neural models of stimulus–response conditioning and response selection. J. Math. Psychol. 56(2), 95–117 (2012)

de Barros, J.A.: Quantum-like model of behavioral response computation using neural oscillators. Biosystems 110(3), 171–182 (2012)

de Barros, J.A.: Joint probabilities and quantum cognition. In: Khrennikov, A., Migdall, A.L., Polyakov, S., Atmanspacher, H. (eds.) AIP Conference Proceedings, vol. 1508, pp. 98–107. American Institute of Physics, Sweden (2012)

Jeffrey, R.: Probability and the Art of Judgment. Cambridge University Press, Cambridge (1992)

da Costa, N.C.A.: On the theory of inconsistent formal systems. Notre Dame J. Formal Logic 15(4), 497–510 (1974)

Genest, C., Zidek, J.V.: Combining probability distributions: a critique and an annotated bibliography. Stat. Sci. 1(1), 114–135 (1986)

Morris, P.A.: Decision analysis expert use. Manage. Sci. 20(9), 1233–1241 (1974)

Morris, P.A.: Combining expert judgments: a Bayesian approach. Manage. Sci. 23(7), 679–693 (1977)

Dirac, P.A.M.: Bakerian lecture. The physical interpretation of quantum mechanics. Proc. R. Soc. Lond. A 180, 1–40 (1942)

Feynman, R.P.: Negative probability. In: Hiley, B.J., Peat, F.D. (eds.) Quantum Implications: Essays in Honour of David Bohm, pp. 235–248. Routledge, London (1987)

Mückenheim, G.: A review of extended probabilities. Phys. Rep. 133(6), 337–401 (1986)

Khrennikov, A.: Interpretations of Probability. Walter de Gruyter, New York (2009)

Dzhafarov, E.N.: Selective influence through conditional independence. Psychometrika 68(1), 7–25 (2003)

Acknowledgments

Many of the details about negative probabilities were developed in collaboration with Patrick Suppes, Gary Oas, and Claudio Carvalhaes in the context of a seminar held at Stanford University in Spring 2011. I am indebted to them as well as the seminar participants for fruitful discussions. I would also like to thank Tania Magdinier, Niklas Damiris, Newton da Costa, and the anonymous referees for comments and suggestions.


© 2014 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

de Barros, J.A. (2014). Decision Making for Inconsistent Expert Judgments Using Negative Probabilities. In: Atmanspacher, H., Haven, E., Kitto, K., Raine, D. (eds) Quantum Interaction. QI 2013. Lecture Notes in Computer Science, vol 8369. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-54943-4_23


DOI: https://doi.org/10.1007/978-3-642-54943-4_23


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-54942-7

Online ISBN: 978-3-642-54943-4

eBook Packages: Computer Science (R0)