Abstract

This chapter discusses the potential meaning of the term social in relation to human–agent interaction. Based on the sociological theory of object-centred sociality, four aspects of sociality, namely forms of grouping, attachment, reciprocity, and reflexivity, are presented and transferred to the field of human–humanoid interaction studies. Six case studies with three different types of humanoid robots are presented, in which the participants answered a questionnaire containing several items on these four aspects. The case studies are followed by a section on lessons learned for human–agent interaction, which introduces a “social agent matrix” for categorizing human–agent interactions according to their main sociality aspect. A reflection on this matrix and on the future (social) human–agent relationship closes the chapter.

1.1 Introduction

Several studies in the research fields of Human–Computer Interaction (HCI) and Human–Robot Interaction (HRI) indicate that people tend to respond differently towards autonomous interactive systems than towards “normal computing systems” [8]. In the 1990s, the Media Equation Theory already revealed that people treat media and computing systems in a social manner, like real people and places [31]. The responses differ, and expectations also shift in a more social direction the more anthropomorphic the system design is [26]. For instance, when an inexperienced user interacts with a robot for the first time, the first impression of the robot is paramount for successfully initiating and maintaining the interaction [24]. It is therefore important that the robot’s appearance matches its task, which increases its believability. Exploratory studies in HRI indicate that people have very clear assumptions that anthropomorphic robots should be able to perform social tasks and follow social norms and conventions [26]. These assumptions about the relation between social cues and anthropomorphic design can also be found among developers and engineers. The Wakamaru robot, developed by Mitsubishi Heavy Industries, for instance, was deliberately given a human-like shape. It was meant to (1) live with family members, (2) speak spontaneously in accordance with family members’ requirements, and (3) play its own role in a family (http://www.wakamura.net).

But what do we actually mean when we talk about social cues in the human–agent relationship? The WordNet entry (Footnote 1) for the term “social” lists a wide variety of meanings. “Social” can relate to human society and its members, to living together or enjoying life in communities and organized groups, or to what is characteristic of high society. The term can also refer to non-human domains, such as the tendency to live together in groups or colonies of the same kind; ants, for example, are considered social insects. All these meanings show that “social” is used very broadly in everyday language, yet there is no established definition of the term in human–agent interaction. In HCI and HRI we find several research topics related to “social interaction”. These include social software, social computing, CMC (Computer-Mediated Communication), CSCW (Computer-Supported Cooperative Work), the above-mentioned Media Equation Theory, and research on social presence and social play.

In traditional psychology, “social” is mainly understood as interpersonal interaction. Taking this definition as the starting point raises the question of whether human–agent interaction can be considered interpersonal. An experiment by Heider and Simmel in 1944 demonstrated for the first time that animated objects can be perceived as social if they move at specific speeds and in specific patterns [17]. A perception of agency and interpersonality in the interaction with animated agents can therefore be assumed.

In the following, we present an overview of related literature on social human–agent interaction and then discuss in detail how the concepts of believability and sociality interrelate. We present the concept of object-centred sociality [25] as a theoretical baseline. From it we derive four general aspects of social interaction with agents, namely forms of grouping, attachment, reciprocity, and reflexivity. Based on these four aspects, a questionnaire was developed and used in six user studies with humanoid robots, which are described subsequently. The results and lessons learned from these studies lead to a “social agent matrix” that allows (autonomous) interactive agents to be categorized in terms of their sociality. The chapter ends with a reflection on the social agent matrix and the future (social) human–agent relationship.

1.2 Believability, Sociality, and Their Interrelations

Research on the believability of media content and computer agents has a long history. Believability has been studied especially in communication science. Examples include comparisons of radio, TV, and newspaper content (e.g. Gaziano et al. [15]) and more recent research that includes online media (e.g. Abdulla et al. [2]).

Hovland et al. showed that believability consists of two main components, trustworthiness and expertise [18]. Building on this research, Fogg and Tseng [13] investigated to what extent believability matters in Human–Computer Interaction, and they proposed a set of basic terms for assessing computer believability. Bartneck [4] adapted Fogg and Tseng’s concept of believability [13] to his model of convincingness and showed that convincingness and trustworthiness are highly correlated.

One of the most famous experiments on the believability and persuasiveness of computing systems was conducted by Weizenbaum with a virtual agent called ELIZA [45]. ELIZA simulated a psychotherapist and kept the conversation going by passively asking leading questions. Throughout the study, the participants did not notice that they were actually talking to a computer program. When this was disclosed at the end of the experiment, it turned out that they had believed they were talking to a human who understood their problems. A computing system can hardly achieve a higher degree of perceived sociality.

Sundar and Nass conducted a similar study, in which people preferred interacting with the computer over the human interaction partner [35]. Recent studies have asked participants about the trustworthiness and believability of screen characters and fully embodied robots (see e.g. [4, 32]). To assess robots in terms of believability, researchers either adapted existing scales, as Shinozawa et al. did [33], or developed new ones, as Bartneck et al. did [5]. Similar scales have often been used to assess the believability of an information source (TV, newspapers, web pages, etc.) or of an individual agent.

Shinozawa et al. showed that the 3D model of a robotic agent was rated higher in source believability than a 2D on-screen agent, measured with McCroskey’s believability scale [28]. Kidd also used a believability scale [6] originally developed for media assessment (like McCroskey’s scale) to rate a robot’s believability [23]. He found that women tended to rate believability higher than men. In addition, people trusted robots that were physically present to a greater extent, which is in line with the findings of Shinozawa et al.

The physical appearance of robots also plays a major role in social HRI. Powers and Kiesler found that certain physical attributes of a robot can change the human’s mental model of it. They also showed that people’s intentions are linked to the traits of this mental model [3]. For instance, physical characteristics such as the robot’s voice and physiognomy created the impression of a sociable robot. This impression in turn predicted the intention to accept the robot’s advice and changed people’s perception of the robot’s human-likeness, knowledge, and sociability.

Furthermore, it makes a difference whether or not a robot recommends something. Imai and Narumi conducted a user study with a robot that gave recommendations [19]. The participants tended to want what the robot recommended, e.g. when it stated that one cup of tea was delicious and the other was not. Recommendation in human–agent interaction is tightly interwoven with trustworthiness and believability [13].

Desai et al. researched another approach: an interface in which the user can adjust the level of trust in a robot so that the robot can decide more autonomously [11]. For instance, suppose the user controls a robot with a joystick and the robot registers many user errors. The robot then suggests that the user switch to a higher level of trust, in which the robot makes more decisions without asking the human operator.
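The adjustment mechanism described above can be sketched as a simple threshold rule. This is a minimal illustration under our own assumptions and is not taken from Desai et al. [11]. The level names, the sliding window of ten commands, and the 30 % error threshold are all hypothetical. The robot only suggests a higher level; the operator still decides.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical autonomy ("trust") levels, ordered from least to most autonomous.
LEVELS = ["manual", "assisted", "shared", "autonomous"]


@dataclass
class TrustAdvisor:
    """Suggests a higher trust level when the operator makes many errors.

    window and error_threshold are illustrative values, not published ones.
    """
    window: int = 10              # number of most recent commands considered
    error_threshold: float = 0.3  # error rate that triggers a suggestion
    level: int = 0                # index into LEVELS; starts fully manual
    history: List[bool] = field(default_factory=list)

    def record(self, was_error: bool) -> None:
        """Log the outcome of one operator command, keeping only the last `window`."""
        self.history.append(was_error)
        if len(self.history) > self.window:
            self.history.pop(0)

    def suggestion(self) -> Optional[str]:
        """Return the next level to suggest, or None if no change is warranted."""
        if len(self.history) < self.window or self.level == len(LEVELS) - 1:
            return None  # too little evidence, or already at the top level
        error_rate = sum(self.history) / len(self.history)
        if error_rate >= self.error_threshold:
            return LEVELS[self.level + 1]
        return None
```

If the operator accepts a suggestion, the interface would simply increment `level`. The suggestion is then re-evaluated against the next window of commands.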

To what extent, however, can a believable computing system, a robot, or any kind of anthropomorphic agent be considered social? Robotics and Artificial Intelligence are moving in a direction where engineers develop robots following anthropomorphic images. As a result, social interaction between humans seems, in some areas, to become comparable to social interaction between a human and a machine. Some researchers go even beyond anthropomorphic images and work on artificial behaviour traits by developing cognitive systems (see for instance Chap. 8). Such systems have already passed the false-belief test (Leonardo, [34]) and the mirror test (Nico, [1]). According to technology assessment research, it is hoped (and feared) that humanoid robots will in future act like humans and be an integral part of society [42]. Such cognitive systems have a model of the self and a model of others. By continuously updating these models and relating them to each other, cognitive systems can “socially” interact. We are convinced that these circumstances call for further research on social acceptance in human–robot interaction in particular and in human–agent relations in general.
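The idea of separate, continuously updated models of the self and of others can be illustrated with the false-belief (“Sally–Anne”) scenario. The sketch below is a toy example of our own; the class and method names are hypothetical. It does not describe how Leonardo [34] or any system in Chap. 8 is implemented. Each agent’s belief is updated only when that agent witnesses a change. The tracker can therefore predict that an absent agent will search in the wrong place, while keeping its own (correct) belief separate.

```python
from typing import Dict, Iterable, Optional, Set


class BeliefTracker:
    """Keeps one belief model per agent (the self included) about object locations.

    A toy illustration of false-belief reasoning; not the architecture of any
    robot mentioned in the text.
    """

    def __init__(self, agents: Iterable[str]) -> None:
        agents = list(agents)
        self.world: Dict[str, str] = {}  # true object locations
        self.beliefs: Dict[str, Dict[str, str]] = {a: {} for a in agents}
        self.present: Set[str] = set(agents)  # agents currently observing

    def leave(self, agent: str) -> None:
        self.present.discard(agent)

    def enter(self, agent: str) -> None:
        self.present.add(agent)

    def move(self, obj: str, location: str) -> None:
        """Change the world; only agents who witness the move update their model."""
        self.world[obj] = location
        for agent in self.present:
            self.beliefs[agent][obj] = location

    def where_will_search(self, agent: str, obj: str) -> Optional[str]:
        """Predict an agent's behaviour from its belief, not from the true state."""
        return self.beliefs[agent].get(obj)


# Sally-Anne scenario, observed by the robot itself.
tracker = BeliefTracker(["robot", "sally", "anne"])
tracker.move("marble", "basket")
tracker.leave("sally")
tracker.move("marble", "box")
tracker.enter("sally")
print(tracker.where_will_search("sally", "marble"))  # basket (false belief)
print(tracker.where_will_search("robot", "marble"))  # box (robot's own belief)
```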

1.3 The Concept of Sociality in Human–Agent Interaction

Reviewing the state-of-the-art literature on social HRI (good overviews can be found in [14, 44]) and looking at the data gathered in the case studies presented later in this chapter (see Sect. 1.4), it becomes apparent that people perceive anthropomorphic agents as social actors. But what could serve as a basis for assessing the degree of sociality of interactive computing systems? One approach can be found in Chap. 8, entitled ConsScale FPS: Cognitive Integration for Improved Believability in Computer Game Bots. For ConsScale, social refers to the Theory of Mind (ToM) cognitive function, i.e. the ability to attribute mental (intentional) states to oneself and to other selves. In other words, artificial agents that are capable of attributing intentional states could be perceived as social.

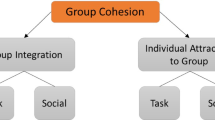

But which behavioural embodiments of cognitive processes do humans perceive as social? Studies in the sociology of knowledge suggest that humans tend to show similar behaviours towards artefacts and objects as towards humans, such as talking to a robot or expressing emotions. Knorr-Cetina [25] speaks of a “post-social” world in this context, in which non-human entities enter the social domain. According to her, these “post-social” interactions with objects include four aspects that explain their sociality towards humans: forms of grouping, attachment, reciprocity, and reflexivity.

1.3.1 Forms of Grouping

Forming groups is a core element of human social behaviour. The aspect “forms of grouping” describes the fact that humans define their own identity by sharing common characteristics with others and by distinguishing themselves from other groups. Knorr-Cetina showed that humans also ascribe personal characteristics to objects with which they often closely interact, and in a sense form a group with these objects [25]. The question that arises is whether humans show similar behaviours when cooperating with an anthropomorphic agent.

1.3.2 Attachment

Attachment as a psychological concept explains the bond a human infant develops to its caregiver [7]. However, Knorr-Cetina showed that humans also develop an emotional bond to objects (e.g. “my favourite book”) [25]. The idea of emotional attachment towards technology has already found its way into HCI and HRI research [21, 22, 36]. For human–agent interaction, attachment could be understood as an affectional tie that a person forms between him/herself and an agent. One main aspect of emotional attachment is that it endures over time. Accordingly, Norman explains emotional attachment as the sum of accumulated emotional episodes in users’ experiences with a computing system in various contexts [30]. These experiences are categorized into three dimensions: a visceral level (first impression), a behavioural level (usage of the device), and a reflective level (interpretation of the device).

1.3.3 Reciprocity

Following Gouldner, reciprocity can be seen as a pattern of social exchange, i.e. a mutual exchange of performance and counter-performance. It can also be seen as a general moral principle of give-and-take in a relationship [16]. In HRI research, reciprocity became relevant with the rise of social robot design [20]. Humans can quickly respond socially to robotic agents (see e.g. [31]), but it is an open question whether they experience “robotic feedback” as adequate reciprocal behaviour. In a human–agent relationship, reciprocity could thus be understood as the perceived give-and-take in a human–agent interaction scenario.

1.3.4 Reflexivity

Reflexivity describes the fact that an object or behaviour and its description cannot be separated from each other; rather, they have a mirror-like relationship. Reflexivity can be a property of behaviour, settings, and talk, which makes the ongoing construction of social reality necessary [27]. In human–agent interaction, reflexivity could be understood as the sense-making of a turn-taking interaction whose success depends on the actions of an interaction partner.

Our assumption is therefore that focussing on one of these aspects in the interaction design of a human–humanoid interaction scenario increases the perception of sociality on the user side. In other words, from an actor-centred sociological perspective, sociality (and consequently the degree of believability that an interactive agent displays) is manifested not in the agent’s appearance (visual cues) but in its interaction (behavioural cues).

1.4 The Case Studies

In the following, we present the insights gained in six human–humanoid interaction studies. Four of these were laboratory-based case studies: two were conducted with the humanoid HRP-2 robot [37, 38] and two with the humanoid HOAP-3 robot [40, 43]. The remaining two studies were field trials, both conducted with the anthropomorphically designed ACE robot [39, 41]. In all these studies, we investigated sociality in the interaction with these robots on a reflective level. We used questionnaire data, complemented by observational data in the two field trials.

1.4.1 Investigating Social Aspects in Human–Humanoid Interaction

To gather quantitative data on social aspects of the perception of humanoid robots, a questionnaire of 13 items was developed. Each item was rated on a 5-point Likert scale ranging from 1 \(=\) “strongly disagree” to 5 \(=\) “strongly agree”. In this questionnaire, the aspects reciprocity and reflexivity were combined into one concept, because the reflected sense-making of the give-and-take interaction was assessed as successful task completion. The participants filled in the questionnaire after completing the tasks together with the robot. All items are presented in Table 1.1.
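The chapter reports a “summative overall factor rating” per aspect. The sketch below shows one plausible way to compute it. Each participant’s factor score is taken as the mean of their ratings on the items of that factor, identified by the “FG”, “AT”, and “RE” suffixes of the item labels. These scores are then summarized as mean and sample standard deviation across participants. This aggregation is our assumption, since the chapter does not specify it, and the example data are hypothetical.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Tuple

FACTORS = {"FG": "forms of grouping", "AT": "attachment", "RE": "reciprocity & reflexivity"}


def factor_scores(responses: List[Dict[str, int]]) -> Dict[str, List[float]]:
    """Per-participant factor score = mean of that participant's items in the factor.

    responses: one dict per participant, mapping item labels such as "1 FG"
    to a rating on the 1-5 Likert scale.
    """
    scores: Dict[str, List[float]] = defaultdict(list)
    for answers in responses:
        by_factor: Dict[str, List[int]] = defaultdict(list)
        for item, rating in answers.items():
            if not 1 <= rating <= 5:
                raise ValueError(f"{item}: rating {rating} is outside the 1-5 scale")
            code = item.split()[-1]  # "1 FG" -> "FG"
            if code not in FACTORS:
                raise ValueError(f"unknown factor in item label {item!r}")
            by_factor[code].append(rating)
        for code, ratings in by_factor.items():
            scores[code].append(statistics.mean(ratings))
    return scores


def summarize(responses: List[Dict[str, int]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample SD of the factor scores across participants (needs >= 2)."""
    return {code: (statistics.mean(vals), statistics.stdev(vals))
            for code, vals in factor_scores(responses).items()}


# Two hypothetical participants; item labels follow the "1 FG" pattern.
data = [{"1 FG": 4, "2 FG": 2, "1 AT": 3},
        {"1 FG": 5, "2 FG": 3, "1 AT": 1}]
print(summarize(data))
```

An alternative reading of “summative” would pool all item ratings of a factor across participants before taking mean and SD. The two approaches coincide in the mean only when every participant answers the same items.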

Before and directly after the interaction with the humanoid robot, the participants were asked to fill in the Negative Attitude towards Robots Scale (NARS). This showed whether the interaction with the robot changed their general attitude towards robots. This psychological scale, originally developed by Nomura et al. [29], measures the negative attitudes of humans towards robots and aims to reveal which factors keep individuals from interacting with robots. It consists of 14 questions, each rated on a 5-point Likert scale ranging from 1 \(=\) “strongly disagree” to 5 \(=\) “strongly agree”. The 14 questions form three subscales: S1 \(=\) negative attitude toward situations of interaction with robots; S2 \(=\) negative attitude toward social influence of robots; S3 \(=\) negative attitude toward emotions in interaction with robots.
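Scoring NARS amounts to summing item ratings per subscale. The sketch below encodes an assumed item-to-subscale assignment and set of reverse-scored items, based on our understanding of Nomura et al. [29]. Both should be verified against the original publication before use. Summing rather than averaging is likewise an assumption.

```python
from typing import Dict

# Item numbers (1-14) per NARS subscale and the reverse-scored items.
# ASSUMPTION: verify this assignment against Nomura et al. [29] before use.
SUBSCALES = {
    "S1": [4, 7, 8, 9, 10, 12],  # situations of interaction with robots
    "S2": [1, 2, 11, 13, 14],    # social influence of robots
    "S3": [3, 5, 6],             # emotions in interaction with robots
}
REVERSED = {3, 5, 6}


def nars_scores(answers: Dict[int, int]) -> Dict[str, int]:
    """Subscale sums for one participant; higher means a more negative attitude.

    answers maps item number (1-14) to a 1-5 Likert rating. Reverse-scored
    items are recoded as 6 - rating before summing.
    """
    if sorted(answers) != list(range(1, 15)):
        raise ValueError("expected exactly one rating for each of items 1-14")
    if any(not 1 <= r <= 5 for r in answers.values()):
        raise ValueError("ratings must lie on the 1-5 scale")
    recoded = {i: (6 - r if i in REVERSED else r) for i, r in answers.items()}
    return {name: sum(recoded[i] for i in items) for name, items in SUBSCALES.items()}
```

To compare attitudes, the function would be applied to each participant’s pre- and post-interaction answers separately. The resulting subscale scores then form paired samples.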

1.4.2 First Study with the HRP-2

The first case study was carried out as a Wizard-of-Oz user study [9] based on a mixed-reality simulation. The simulation used a 3D model of the HRP-2 robot and was implemented with the Crysis game engine (more details on the technical implementation can be found in [38]). A hidden human wizard controlled it during the interaction trials. The human–humanoid collaboration was based on the task of carrying and mounting an object together. The object (a board) existed in the virtual as well as in the real world and served as the contact point for the interaction. The research question was: “How do differently simulated feedback modalities influence the perception of the human–robot collaboration in terms of sociality?” The four experimental conditions were:

- Con0: interaction without feedback
- Con1: interaction with visual feedback (a blinking light showing that the robot understood the command)
- Con2: interaction with haptic feedback
- Con3: interaction with visual and haptic feedback combined

The study was conducted with 24 participants in August 2008 at the University of Applied Sciences in Salzburg, Austria.
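The four conditions form a 2 × 2 combination of visual and haptic feedback. The chapter does not describe how participants were allocated. The sketch below therefore assumes a balanced between-subjects design with six participants per condition. This assumption is consistent with the error degrees of freedom later reported for the ANOVA (\(24 - 4 = 20\)). The participant IDs and the seed value are illustrative.

```python
import random
from typing import Dict, List, Optional

# Con0-Con3 of the first HRP-2 study as a 2 x 2 feedback design.
CONDITIONS = {
    "Con0": {"visual": False, "haptic": False},
    "Con1": {"visual": True, "haptic": False},
    "Con2": {"visual": False, "haptic": True},
    "Con3": {"visual": True, "haptic": True},
}


def assign(participants: List[str], seed: Optional[int] = None) -> Dict[str, str]:
    """Balanced random assignment: every condition receives the same number of people."""
    names = sorted(CONDITIONS)
    if len(participants) % len(names):
        raise ValueError("participant count must be a multiple of the condition count")
    slots = names * (len(participants) // len(names))
    random.Random(seed).shuffle(slots)  # reproducible if a seed is given
    return dict(zip(participants, slots))


groups = assign([f"P{i:02d}" for i in range(1, 25)], seed=2008)
```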

1.4.2.1 Study Setting

This user study was based on one task which had to be conducted together with the simulated robot via a mobile board as “input modality” (see Fig. 1.1). The task was introduced by the following scenario:

Imagine you are working at a construction site and you get the task from your principal constructor to mount a gypsum plaster board together with a humanoid robot. You can control the robot with predefined voice commands to carry out the following action sequences.

1.4.2.1.1 Task:

Lift, move, and mount a gypsum plaster board together with a humanoid robot. This task consists of the following action sequences:

1. Start the interaction by calling the robot.
2. Lift the board together with the robot.
3. Move the board together with the robot to the right spot.
4. Tilt the board forward to the column together with the robot.
5. Tell the robot to screw the board.

1.4.2.2 Findings on Social Aspects

Table 1.2 shows the mean values for the items on forms of grouping, attachment, and reciprocity & reflexivity. They indicate that the aspect forms of grouping was perceived most intensely (summative overall factor rating: mean: 3.10, SD: 0.91). However, the statement about the importance of a humanoid robot as a future working colleague was rated rather low. In terms of attachment, the participants rated item “1 AT” the highest, indicating that they could imagine robots taking on a special role in society in the future (summative overall factor rating: mean: 2.21, SD: 0.92). The aspect reciprocity & reflexivity was rated higher than attachment (summative overall factor rating: mean: 2.92, SD: 0.98). Its highest-rated item was “1 RE”, which demonstrates the importance of turn-taking for simulated sociality. Moreover, an ANOVA on the overall factor rating revealed that the experimental conditions influenced the ratings of the aspect forms of grouping (\(F(3,20) = 6.26, p < 0.05\)). A post-hoc test (LSD) showed that forms of grouping was rated significantly lower in Con1 (interaction with visual feedback) than in all other conditions. This is further support for the importance of turn-taking translated into multimodal feedback on the side of the agent. The NARS questionnaire did not reveal any significant changes in any of the three attitude scales due to the interaction with the virtual HRP-2 robot.
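For readers less familiar with the analysis reported above, the sketch below computes a one-way between-subjects ANOVA and Fisher’s LSD pairwise t statistics from scratch. It is a textbook version, not the authors’ analysis script. With four groups of six participants it yields the \(F(3,20)\) degrees of freedom reported above. The LSD tests use the pooled within-group mean square from the ANOVA as their error term, and each t has \(N - k\) degrees of freedom.

```python
import math
import statistics
from itertools import combinations
from typing import Dict, List, Tuple


def one_way_anova(groups: Dict[str, List[float]]) -> Tuple[float, int, int, float]:
    """Between-subjects one-way ANOVA; returns (F, df_between, df_within, MS_within)."""
    means = {g: statistics.mean(xs) for g, xs in groups.items()}
    scores = [x for xs in groups.values() for x in xs]
    grand = statistics.mean(scores)
    k, n = len(groups), len(scores)
    ss_between = sum(len(xs) * (means[g] - grand) ** 2 for g, xs in groups.items())
    ss_within = sum((x - means[g]) ** 2 for g, xs in groups.items() for x in xs)
    df_between, df_within = k - 1, n - k
    ms_within = ss_within / df_within
    return (ss_between / df_between) / ms_within, df_between, df_within, ms_within


def lsd_pairwise(groups: Dict[str, List[float]],
                 ms_within: float) -> Dict[Tuple[str, str], float]:
    """Fisher's LSD: t statistic for every pair, using the pooled ANOVA error term."""
    t = {}
    for a, b in combinations(sorted(groups), 2):
        se = math.sqrt(ms_within * (1 / len(groups[a]) + 1 / len(groups[b])))
        t[(a, b)] = (statistics.mean(groups[a]) - statistics.mean(groups[b])) / se
    return t
```

As a quick check with made-up data, `one_way_anova({"A": [1, 2, 3], "B": [4, 5, 6]})` gives F = 13.5 with (1, 4) degrees of freedom. The single LSD t statistic squares to the same value, since \(t^2 = F\) for two groups. If SciPy is available, p-values follow from `scipy.stats.f.sf(F, df_between, df_within)` and `2 * scipy.stats.t.sf(abs(t), df_within)`.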

1.4.3 Second Study with the HRP-2

As in the previously described case study with the simulated HRP-2 (see Sect. 1.4.2), the task in this case study was to carry an object together with the robot. The focus, however, was on (1) differences in the human–humanoid collaboration between a tele-operated and an autonomous robot and (2) cultural differences in perception between Western and Asian participants. The research questions were “How does the participant experience the relationship towards (1) the autonomous HRP-2 robot and (2) the tele-operated HRP-2 robot?” and “Is there a difference in terms of sociality perception between participants of (1) Asian origin and (2) Western origin?” A total of 12 participants (6 Asian, 6 Western) took part in this study. It was conducted together with CNRS/AIST at Tsukuba University, Japan, and the Technical University Munich, Germany, in October 2009.

1.4.3.1 Study Setting

This study was based on direct human–humanoid interaction, in which the HRP-2 robot acted partly autonomously and was partly remotely controlled by a human operator located in Germany. The participants (acting as the human operator in Japan) had to lift, carry, and put down a table collaboratively with the HRP-2 robot. While the table was being lifted and put down, HRP-2 was tele-operated; while it was being carried, HRP-2 walked autonomously. The study was based on the following scenario:

Imagine you are working at a construction site and you receive a task from your principal constructor: carrying an object from one place to another together with a humanoid robot which is partly acting autonomously and partly operated by a human expert operator.

1.4.3.1.1 Task:

The task is to carry a table from place A to place B together with the humanoid robot HRP-2. This task is split into four action sequences:

- Action sequence 0: The robot is sent to the table by the principal instructor
- Action sequence 1: Lift the table together with HRP-2
- Action sequence 2: Walk together with HRP-2 from place A to place B
- Action sequence 3: Put down the table together with HRP-2

The interaction between the human and the robot took place in action sequences 1–3; sequence 0 does not require any interaction between the human and the robot.

1.4.3.2 Findings on Social Aspects

Table 1.3 shows the mean values for the items on forms of grouping, attachment, and reciprocity & reflexivity. As in the first HRP-2 study, they indicate that the aspect forms of grouping was perceived most intensely (summative overall factor rating: mean: 3.64, SD: 0.52). However, the statement about the importance of a humanoid robot as a future working colleague (“4 FG”) was again rated rather low. In terms of attachment, the participants again rated item “1 AT” the highest. Compared to the previous study with the simulated robot, however, they rated the attachment items better overall for the embodied agent (summative overall factor rating: mean: 3.23, SD: 0.69). Similarly, the aspect reciprocity & reflexivity was rated better than in the first HRP-2 study (summative overall factor rating: mean: 3.18, SD: 0.59). Unlike in the first study, the items “1 RE” and “4 RE” were rated equally high. This could be because the robot and the user were directly linked through the table during the interaction. Moreover, the robot was tele-operated while the table was lifted and put down, which directly demonstrated the interdependence of every single move. No significant differences in the perception of sociality due to Western or Asian origin were found in this study. The analysis of the NARS questionnaire revealed a decrease in all three scales after the interaction with the HRP-2 robot. However, only the scale “Negative Attitude toward Social Influence of Robots” decreased significantly (\(t(11) = 2.88, p < 0.05\)).
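The pre/post NARS comparisons are reported as \(t(11)\), i.e. 11 degrees of freedom for 12 participants, which corresponds to a paired-samples t-test. A minimal sketch of the statistic follows; the function name is ours. P-values can be obtained from a t table or, if SciPy is available, from `scipy.stats.ttest_rel`, which computes the same statistic.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic for the same participants measured twice.

    Returns (t, df) with df = n - 1. A positive t means scores decreased from
    pre to post, as for the NARS subscales above.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equally long score lists with n >= 2")
    diffs = [a - b for a, b in zip(pre, post)]
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs))), len(diffs) - 1
```

A paired test is appropriate here, rather than an independent-samples test, because each participant contributes both a pre- and a post-interaction score. Subtracting within participants removes between-person differences in baseline attitude.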

In particular, when comparing the first HRP-2 study (virtual robot) with the second HRP-2 study (embodied robot), we have to consider the notion of a co-production between the embodiment of the robot and the perception of sociality in the human–agent relationship. The virtual HRP-2 robot understood voice commands immediately (as long as the participant uttered the command correctly), because it was wizarded behind the scenes. Moreover, the virtual robot moved more quickly and smoothly than the embodied one, as it was simulated. These factors should have increased the perception of its sociality, whereas the missing embodiment and immersion in the interaction may have lowered it again.

1.4.4 First Study with HOAP-3

In this user study, the participants had to carry out two “learning by demonstration” tasks with the robot: they had to teach the robot’s arm (1) to push a box and (2) to close a box. Twelve participants took part in this study, which was conducted together with the Learning Algorithms and Systems Laboratory, EPFL, Lausanne, Switzerland, in August 2008. The research question of this study was: “How do novice users experience the collaboration with the humanoid robot HOAP-3 in terms of sociality when the interaction is based on learning by demonstration?”

1.4.4.1 Study Setting

This user study was based on two tasks that the participants had to conduct together with the HOAP-3 robot (see Figs. 1.3 and 1.4). The tasks were introduced by the following scenario:

Imagine you are working at an assembly line in a big fabrication plant. A new robot is introduced, which should support you in completing tasks. You can teach the robot specific motions by demonstrating them (i.e. by moving the robot’s arm the way you expect it to move later on its own); the robot will repeat the learned motion. You can repeat this demonstration-repetition cycle until you are pleased with the result.

1.4.4.1.1 Task 1:

This task is to teach the robot to push this box from its working space into yours on its own. The task is split up into the following action sequences:

1. Show the robot the specific task card by putting it on the table in front of the robot (move it around until the robot recognizes it).
2. Demonstrate to the robot how to push the box with its right arm by putting the box very close in front of the robot and moving its arm.
3. Let the robot repeat what it learned.
4. (If necessary) repeat sequences 2 and 3 until you are pleased with the way the robot pushes the box.

- The interaction with the robot is based on speech commands. Just follow the commands of the robot and answer them with yes or no (or any other answer proposed by the robot).
- You only need to teach the right arm of the robot by moving its elbow.

1.4.4.1.2 Task 2:

This task is to teach the robot to close this box on its own. The task is split up into the following action sequences:

1. Show the robot the specific task card by putting it on the table in front of the robot (move it around until the robot recognizes it).
2. Demonstrate to the robot how to close the box by putting the box very close in front of the robot and moving its arm.
3. Let the robot repeat what it learned.
4. (If necessary) repeat sequences 2 and 3 until you are pleased with the way the robot closes the box.

- The interaction with the robot is based on speech commands. Just follow the commands of the robot and answer them with yes or no (or any other answer proposed by the robot).
- You only need to teach the right arm of the robot by moving its elbow.
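The demonstration-repetition cycle in both tasks can be sketched as a loop. The learning step here is deliberately simple and was chosen only for illustration. Demonstrations of the elbow joint are resampled to a common length and averaged point by point. The learning algorithm actually used in the study is not described in this chapter. The callback names (`demonstrate`, `reproduce`, `satisfied`) are hypothetical stand-ins for the robot interface and the spoken yes/no dialogue.

```python
from typing import Callable, List, Sequence

Trajectory = List[float]  # hypothetical: elbow joint angle samples over time


def resample(traj: Sequence[float], n: int) -> Trajectory:
    """Linearly resample a trajectory to n points so demonstrations can be aligned."""
    if n < 2 or len(traj) < 2:
        raise ValueError("need at least two samples and n >= 2")
    out = []
    for k in range(n):
        pos = k * (len(traj) - 1) / (n - 1)  # fractional index into traj
        i = min(int(pos), len(traj) - 2)
        frac = pos - i
        out.append(traj[i] * (1 - frac) + traj[i + 1] * frac)
    return out


def teach(demonstrate: Callable[[], Sequence[float]],
          reproduce: Callable[[Trajectory], None],
          satisfied: Callable[[], bool],
          n: int = 50, max_rounds: int = 10) -> Trajectory:
    """Demonstration-repetition cycle (steps 2-4 of the task lists)."""
    demos: List[Trajectory] = []
    model: Trajectory = []
    for _ in range(max_rounds):
        demos.append(resample(demonstrate(), n))              # step 2: user moves the arm
        model = [sum(col) / len(col) for col in zip(*demos)]  # point-wise mean of demos
        reproduce(model)                                      # step 3: robot repeats
        if satisfied():                                       # step 4: stop when pleased
            break
    return model
```

Averaging all demonstrations so far means that later, corrected demonstrations only gradually override earlier ones. A real system would more likely weight or replace demonstrations, or fit a probabilistic model.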

Because a pre-test showed that the two tasks were perceived as differing in difficulty (task 1 was rated more difficult than task 2), the order of the tasks was counterbalanced across participants to reduce potential learning effects.
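Counterbalancing two tasks means that half of the participants start with task 1 and the other half with task 2. The sketch below cycles through all task orders; with two tasks and twelve participants, it assigns each order six times. The exact assignment scheme used in the study is not reported, so this is an assumption.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def counterbalanced_orders(tasks: Sequence[str], n_participants: int) -> List[Tuple[str, ...]]:
    """Cycle through all task orders so that each order is used equally often.

    Full balance requires n_participants to be a multiple of len(tasks)!.
    """
    orders = list(permutations(tasks))
    return [orders[i % len(orders)] for i in range(n_participants)]


schedule = counterbalanced_orders(["task 1", "task 2"], 12)
```

With more than two or three tasks, the number of full permutations grows quickly. A Latin square is then the usual, cheaper alternative to full counterbalancing.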

1.4.4.2 Findings on Social Aspects

Table 1.4 shows the mean values for the items on forms of grouping, attachment, and reciprocity & reflexivity. Again, they indicate that the aspect forms of grouping was perceived most intensely (summative overall factor rating: mean: 3.18, SD: 0.68). Item “5 FG” was rated second best after item “1 FG”. This suggests that teamwork was experienced even more strongly in a learning-by-demonstration scenario than in a “pure” collaboration task. In terms of attachment, the participants rated the same item (“1 AT”) the highest, as in the two previous studies (summative overall factor rating: mean: 2.74, SD: 0.94). The higher rating for item “3 AT” could be explained by the smaller size and “cuteness” of the HOAP-3 robot compared to the HRP-2 (see also the results of the second study with HOAP-3). Regarding reciprocity & reflexivity, item “2 RE” was rated best (summative overall factor rating: mean: 3.15, SD: 0.54). This indicates that learning by demonstration plus the “cuteness aspect” fosters a willingness to care about the agent. The NARS questionnaire revealed a significant decrease for the scale “Negative Attitude toward Social Influence of Robots” (\(t(11) = 3.17, p < 0.05\)), i.e. the participants rated this scale significantly lower after interacting with HOAP-3.

1.4.5 Second Study with HOAP-3

This user study was also conducted with the HOAP-3 robot. The main difference, however, was that the robot was remote-controlled via a computer interface, so this scenario involved no direct physical contact with the robot. The participants had to carry out two tasks via the computer interface: (1) move the robot through a maze and find the exit, and (2) let the robot check all antennas and detect the broken one. Twelve participants took part in this study, which was conducted together with the Robotics Lab at the University Carlos III, Madrid, Spain, in September 2008. The research question of this study was: “How do novice users experience the collaboration with the humanoid robot HOAP-3 when interacting via a computer interface?”

1.4.5.1 Study Setting

This user study was based on two tasks that the participants had to conduct via a computer interface with the HOAP-3 robot (see Fig. 1.5). The first task was introduced by the following scenario:

Your space shuttle has been hit by an asteroid and you were forced to make an emergency landing. Your communication and internal ship monitoring systems do not work, probably due to damage caused by the crash. The good news is that you have the necessary material to replace the broken antenna of your communication system and send for help. You are the only human survivor on the ship, and the environment could be dangerous for human beings. You therefore decide to let the ship’s robot HOAP-3 do this dangerous work. First, you have to navigate HOAP-3 to the exit of the shuttle.

1.4.5.1.1 Task 1:

Help the robot to find its way through the corridor and find the door to the outside. The task is to move the robot by means of the computer interface. It is completed if you see the door through the interface and say “door found”. The interaction with the robot is based on a computer interface, with which you can control the robot.

The second task was introduced by the following scenario:

Now that you have accomplished the first task and got HOAP-3 out of the shuttle, you have to help HOAP-3 find the broken antenna. The problem is that your shuttle has several antennas of different shapes and colours and you cannot distinguish the defective one from the others by sight, but HOAP-3 can. Inside the robot there is a mechanism that enables it to detect malfunctioning parts.

1.4.5.1.2 Task 2:

Your task is to control the movements of HOAP-3 again, while it is processing and checking the different antennas. In this task, you have to help the robot to recognize the broken antenna. If HOAP-3 has recognized a malfunctioning device, it will put a square around it on the interface. The task is finished if you recognize the broken antenna through the interface and say “broken antenna recognized”.

1.4.5.2 Findings on Social Aspects

The questionnaire data analysis revealed the mean values for the items on forms of grouping, attachment, and reciprocity & reflexivity shown in Table 1.5. They indicate that the aspect forms of grouping was again perceived most intensely (summative overall factor rating: mean: 3.43, SD: 0.62), closely followed by attachment (summative overall factor rating: mean: 3.31, SD: 0.52) and reciprocity & reflexivity (summative overall factor rating: mean: 3.15, SD: 0.80). Items “3 FG” and “5 FG” were rated equally high, reflecting the team-building aspect of the “collaborative explorer task” in this study. As in the first HOAP-3 study, the robot was rated high in terms of attachment (see item “3 AT” and “3 AT”), which could again be due to the “cuteness aspect”. In terms of reciprocity, item “3 RE” was rated best. This could be because it was the only item that did not directly address mutuality, which was hard to perceive in an interaction scenario without direct contact interaction with the robot. Regarding the attitude towards interacting with the robot, the NARS questionnaire revealed a decrease for all three scales, which was statistically significant only for the scale “Negative Attitude toward Emotions in Interaction with Robots” (\(t(11)=2.25, p<0.05\)): the participants rated this scale significantly lower after interacting with HOAP-3.
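The NARS comparisons in both HOAP-3 studies report \(t(11)\) for twelve participants, which matches a paired-samples t-test on pre- and post-interaction scores (df = n − 1). The sketch below assumes that design; the function name and the data in the usage note are hypothetical, and the sign convention (pre minus post, so that a decrease gives a positive t, as in the values reported above) is an assumption.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired-samples t statistic for the same participants measured twice.

    Differences are taken as pre - post, so a drop in a negative-attitude
    scale after the interaction yields a positive t. Returns (t, df)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [a - b for a, b in zip(pre, post)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("t is undefined when all differences are equal")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1  # df = n - 1, i.e. 11 for 12 participants
```

For example, `paired_t([3, 4, 5, 4], [2, 3, 5, 3])` returns a t of 3.0 with 3 degrees of freedom. If SciPy is available, `scipy.stats.ttest_rel(pre, post)` computes the same statistic together with a p-value.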

1.4.6 First and Second Study with ACE

The ACE robot (Autonomous City Explorer) is a robot whose mission is to find its way autonomously to predefined places through proactive communication with passers-by.

In the first study (see Fig. 1.6), the ACE robot was remote-controlled across Karlsplatz, a busy public square in Munich situated at the end of a shopping street, with access to local public transport (metro). Although the robot was remote-controlled for safety reasons, the illusion of an autonomous system was preserved, as the operator was hidden from the pedestrians. Three researchers accompanied the experiment: one conducted the unstructured observation and two conducted the interviews. The study lasted two hours.

In the second study (see Fig. 1.6), the ACE robot had to move autonomously from Odeonsplatz to Marienplatz and back by asking pedestrians for directions. Pedestrians could tell ACE where to go by first pointing in the right direction and then showing ACE the route (e.g. how far from here) on the map on its touch-screen. For safety reasons, the robot’s development team stayed nearby, but remained hidden from pedestrians in the busy environment. The study lasted five hours and was accompanied by four researchers, one of whom performed the unstructured passive observation while the other three conducted the interviews.

In both settings, the participants could interact with the robot via its touch-screen (in the first study to get more information about the robot itself and about Karlsplatz, in the second study to show the robot the way on the map). In the first study, 18 participants completed the social interaction questionnaire; in the second study, 52 did.

1.4.6.1 Findings on Social Aspects

Due to the public setting of the investigations, specialized questionnaires were used in the ACE studies, which nevertheless sought to incorporate additional items on the concept of sociality (the complete questionnaires can be found in [37]). Tables 1.6 and 1.7 show the items and the corresponding results for the two studies.

In terms of the attitude towards interacting with the robot, the observational data of the first ACE study revealed the behaviour pattern “investigating the engineering of ACE” six times, exclusively among male pedestrians. For the second field trial, the analysis of the observational material showed that people were very curious about this new technology, and many expressed positive surprise: “It is able to go around me. I would not have thought that”. However, some people also seemed scared. Most of the time, curiosity prevailed over anxiety (people not only watched, but also decided to interact with ACE), which may be due to the so-called “novelty effect”. In the first field trial, the participants rated the item on forms of grouping rather positively (mean: 4.39, SD: 0.85). The observational data revealed the interesting finding that pedestrians formed “interaction groups”: a group of 10–15 strangers stood in front of ACE, and each member of this “coincidental” group stepped forward to interact with ACE while the rest of the group waited and watched. In the second field trial, the participants rated the aspect of forms of grouping in the street survey rather positively (mean: 3.45, SD: 0.12). This aspect was rated significantly better by those participants who actually interacted with ACE (\(t(35.96)=4.09, p<0.05\)).
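The fractional degrees of freedom reported for the group comparisons in the ACE street survey (e.g. \(t(35.96)\), \(t(24.85)\), \(t(43.80)\)) are characteristic of Welch’s unequal-variance t-test, which we assume was used here. A minimal sketch of that test; the function name and the example groups are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's unequal-variance t-test for two independent groups.

    Returns (t, df). df follows the Welch-Satterthwaite approximation,
    so it is usually not a whole number."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two observations")
    va = variance(a) / len(a)  # squared standard error of group a's mean
    vb = variance(b) / len(b)  # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

A negative t means that group `a` scored lower than group `b`, which is how the age comparisons read if the younger participants are passed as `a`. With SciPy, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and also returns the p-value.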

In the first field trial, the participants rated the aspect attachment rather positively for both items (“1 AT” and “2 AT”). In the second field trial, however, the participants rated the overall aspect of attachment rather low (mean: 2.85, SD: 0.14), owing to the rating of item “3 AT”. The items on trusting the robot’s advice (“1 AT” and “2 AT”) were rated similarly to the first study. Interestingly, participants younger than 50 years rated this aspect significantly lower than older participants (\(t(43.80)=-2.36, p<0.05\)). Moreover, in the second field trial, some people showed companion-like behaviour towards the robot (e.g. “Let’s have a look ... Oh yes ... come on ... take off”) and addressed it directly as a social actor. However, not everyone addressed the robot in the second person, even when standing right in front of it; this behaviour would be considered extremely impolite when interacting with a human and indicates that not everyone perceived the robot as a partner.

Regarding reciprocity & reflexivity, the participants of the first field trial rated the items (“1 RE” and “2 RE”) rather low. A reason for this could be that only 54 % of the participants experienced the robot as interactive, owing to its limited interaction possibilities in the first study set-up. In the second field trial, the participants rated the aspect of reciprocity & reflexivity in the street survey rather positively (mean: 3.50, SD: 0.16). This aspect was rated significantly better by those participants who actually interacted with ACE (\(t(24.85)=2.44, p<0.05\)). Furthermore, reciprocity & reflexivity was the only factor that men rated significantly better than women (\(t(19.99)=-2.42, p<0.05\)). Moreover, participants younger than 50 years rated this aspect significantly lower than older participants (\(t(37)=-3.27, p<0.05\)).

1.5 Lessons Learned for Interaction Scenarios with Anthropomorphic Agents

The main goal of comparing the six case studies was to explore participants’ perception of sociality during interaction with humanoid robots. Overall, the studies have shown how deeply the social interaction paradigm is embedded in the interaction with anthropomorphic robotic systems. All participants in the case studies were novice users: they had no prior experience with robots and received no information on how to interact with the robots beyond that given in the scenario and task description. Nevertheless, in all laboratory-based case studies (studies 1–4) almost all participants completed the task successfully (task completion rate \(>\)80 %), which indicates a high degree of sense-making on the user side and reflexivity on the robot side. An overview of all results is given in Table 1.8.

But what can we learn from this for future interaction scenarios with anthropomorphic agents (physical and virtual)? The first study with the HRP-2 robot showed the importance of multimodal feedback and turn taking for perceiving the sociality aspect forms of grouping. It also showed that even a virtual on-screen representation of a humanoid robot can be perceived as a social actor, as long as it reacts proactively to the user’s actions. The second study with the HRP-2 robot revealed that a humanoid robot can even mediate sociality between two humans who are not co-located during the interaction. The robot served as an embodiment of social presence, so that the participants experienced a grouping with the robot as well as with the human operator behind the scenes.

The first study with the HOAP-3 robot showed that an anthropomorphic design perceived as “cute” can foster the social aspect attachment. Moreover, a learning by demonstration scenario based on interactive tutelage is perceived as reciprocal and reflexive. The second HOAP-3 study also supported the assumption that a design perceived as “cute” fosters attachment, even in a completely different situation in which the user had no direct contact interaction with the robot but remote-controlled it. Furthermore, this study indicated that cooperative problem solving offers a suitable basis for fostering the aspect forms of grouping.

The two studies with the ACE robot showed that sociality is also perceived when the perspectives are inverted, i.e. when the robot proactively starts an itinerary request and depends on the users’ input to achieve its goal. The importance of interactivity for the perception of reciprocal behaviour became obvious when comparing the two studies, as reciprocity was rated lower in the first study. The second study also showed that actually interacting with the robot (as opposed to merely observing it) had a significant impact on the perception of sociality, specifically on the aspects forms of grouping and reciprocity. The NARS results, taken together, also support this finding: a significant change in the general attitude towards robots after interacting with them was identified twice as a decreased rating of the “Negative Attitude toward Social Influence of Robots” and once as a decreased rating of the “Negative Attitude toward Emotions in Interaction with Robots”.

Reflecting on how the results for the four aspects of sociality affect interaction not only with physical agents but also with virtual ones, we can derive a “social agent matrix”, which distinguishes between direct human–agent interaction (see case studies 1, 3, 4, 5, and 6) and human–human interaction mediated by an agent (see case study 2). This matrix allows agents to be categorized according to their “focus of sociality”. Based on the empirical data gained in the studies, the matrix can be filled in as shown in Table 1.9.
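The two dimensions of the matrix can be written down as a small data structure. A minimal sketch: the interaction mode of each case study follows the text (study 2 is the only agent-mediated one), while the focus-of-sociality assignment is left as an input, because the chapter’s actual cell assignments are those of Table 1.9 and are not reproduced in this code. All type and function names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Interaction(Enum):
    DIRECT = "direct human-agent interaction"
    MEDIATED = "human-human interaction mediated by an agent"


class Aspect(Enum):
    FORMS_OF_GROUPING = "forms of grouping"
    ATTACHMENT = "attachment"
    RECIPROCITY = "reciprocity"
    REFLEXIVITY = "reflexivity"


@dataclass(frozen=True)
class CaseStudy:
    number: int
    robot: str
    interaction: Interaction


# Interaction mode per case study as stated in the text.
STUDIES = [
    CaseStudy(1, "HRP-2", Interaction.DIRECT),
    CaseStudy(2, "HRP-2", Interaction.MEDIATED),
    CaseStudy(3, "HOAP-3", Interaction.DIRECT),
    CaseStudy(4, "HOAP-3", Interaction.DIRECT),
    CaseStudy(5, "ACE", Interaction.DIRECT),
    CaseStudy(6, "ACE", Interaction.DIRECT),
]


def build_matrix(studies, focus):
    """Place each study number in the cell (interaction mode, focus of sociality).

    `focus` maps a study number to an Aspect and must be supplied by the
    analyst; this sketch does not encode the assignments of Table 1.9."""
    matrix = {(i, a): [] for i in Interaction for a in Aspect}
    for s in studies:
        matrix[(s.interaction, focus[s.number])].append(s.number)
    return matrix
```

Keeping the focus as an explicit input separates what the study design fixes (direct versus mediated interaction) from what is an interpretive judgement (the main sociality aspect), which is the distinction the matrix is meant to make visible.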

Certain notions were inscribed in the embodiment of the robots, as well as in the human–robot interaction scenarios as a whole, and these influenced participants’ perception of sociality. Even though different scenarios were performed with different robots, the aspect forms of grouping was rated best in all case studies except one, which suggests a relatively low influence of the robots’ anthropomorphic embodiment. Apart from the possibility that questionnaire and item design or wording caused this result, we have to assume a co-production between the embodiment of the robot, the interaction scenario, and the perception of sociality in the human–agent relationship. Had the embodiments of the robots and the interaction scenarios been more distinctive, the perception of sociality would probably have differed. For instance, the underlying narrative of the learning by demonstration scenario with HOAP-3, or of the mixed-reality scenario with the virtual HRP-2 robot, points more towards reflexivity than towards forms of grouping as the key social aspect.

1.6 Reflection on the Relevance of Social Cues in Human–Agent Relationship

Reflecting on the four aspects of sociality, it becomes obvious that designing for the social as an end in itself cannot be the overall solution for establishing a working human–agent relationship. Rather, sociality as a design paradigm has to be interpreted as a modular concept in which the most relevant aspect is chosen to increase sociality for a specific interaction scenario. Giving agents an anthropomorphic appearance increases the degree to which they are perceived as social actors, as Fogg has shown in his work “Computers as Persuasive Social Actors” [12]. However, anthropomorphic design carries considerable baggage in terms of specific end-user expectations, such as intelligence, adaptation to user behaviour, adherence to social norms, and human-oriented perception cues [10].

In our view, the one (most important) social aspect for the agent’s functionality has to be identified first and should form the basis for the design. For instance, reflexivity could be most important when playing a game against an agent, as the user’s moves are always a reflection on the agent’s gameplay. Forms of grouping will be most relevant in cooperative tasks, such as solving a problem or task together as a team (e.g. a wizard agent that guides the user through an installation process). Reciprocity will be highly relevant in care-giving (comparable to the “Tamagotchi Effect”) or persuasive tasks, in which users expect the agent to adapt its behaviour in response to their own behavioural adaptation. Attachment will be relevant in all cases in which we want the user to establish a long-term relationship with the agent. In this case, however, variation in the agent’s behaviour is essential to ensure that the interaction does not become monotonous and annoying.
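The guideline above amounts to a lookup from scenario type to a single primary aspect, plus a reminder that attachment-oriented agents need behavioural variation. A minimal sketch, assuming hypothetical scenario labels taken from the examples in the paragraph; the variation helper is one simple way (an assumption, not the authors’ method) to avoid immediately repeating a behaviour.

```python
import random

# Hypothetical scenario labels derived from the examples in the text.
PRIMARY_ASPECT = {
    "game against the agent": "reflexivity",
    "cooperative task": "forms of grouping",
    "care-giving": "reciprocity",
    "persuasion": "reciprocity",
    "long-term relationship": "attachment",
}


def primary_aspect(scenario):
    """Return the single most important sociality aspect for a scenario type."""
    try:
        return PRIMARY_ASPECT[scenario]
    except KeyError:
        raise ValueError(f"no guideline for scenario {scenario!r}") from None


def next_behaviour(options, last, rng=random):
    """Choose a behaviour other than the previous one, so that a long-term
    (attachment-oriented) interaction does not become monotonous."""
    candidates = [o for o in options if o != last]
    if not candidates:  # only one behaviour available: nothing to vary
        return last
    return rng.choice(candidates)
```

Raising an error for unknown scenarios, rather than falling back to a default aspect, reflects the point that the main aspect has to be identified deliberately for each agent instead of defaulting to “more social”.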

Looking again at all the studies presented in this chapter, how could we increase the degree of sociality for the specific interaction scenarios? In accordance with our underlying “one (most important) social aspect rule”, the social agent matrix for the studies should look like Table 1.10.

Thus, if we aim for a user-centred design approach for an agent, the first relevant question is which social aspect it should predominantly exhibit; the interaction and screen/embodiment design should then focus on that aspect. However, users’ perception of sociality seems to depend more on the agent’s interaction scenario than on its appearance alone, even though an anthropomorphic appearance fosters the perception of an agent’s social abilities. As the theory of object-centred sociality suggests (and as the Media Equation Theory [31] and the experiment of Heider and Simmel [17] also indicate), interaction with all kinds of agents, including zoomorphic or functionally designed ones, can be perceived as social. The most important thing is that the interaction itself is a social one, and that the design focuses on the main specific aspect of its sociality to increase the social perception on the user side. One could go one step further and propose a “social interface matrix” that categorizes all kinds of interactive computing systems, irrespective of their embodiment and design, in terms of their degree of sociality; some self-explanatory examples are presented in Table 1.11.

1.7 Conclusion and Outlook

Table 1.11 demonstrates the power of the “post-social world” [25], in which non-human entities enter the social domain. It shows that human–system interaction can be social in many types of interaction paradigms, going far beyond interaction with anthropomorphic agents. However, in designing the future human–agent relationship it will be crucial to identify whether the underlying interaction paradigm is a “post-social” one. There is no need to socialize every computing system we use, e.g. word processors or mobile phones, on the assumption that this would improve the human–system relationship.

There is an observable trend towards “post-social” interaction design strategies that focus primarily on anthropomorphic appearance. However, as the case studies presented in this chapter indicate, fostering a social human–agent relationship is more a matter of interaction design than of appearance design. Functionally designed robots in a factory context might therefore be perceived as social actors in collaboration scenarios just as humanoid robots are, as long as the interaction is designed in a social manner.

As a next step, we want to explore the impact of appearance on the perception of sociality further by transferring the concept of the four sociality aspects to human–robot interaction with functionally designed robots in an industrial setting. We believe that future interaction scenarios for human–robot collaboration in the factory context need to combine the strengths of human beings (e.g. creativity, problem-solving capabilities, and the ability to make decisions) with the strengths of robotics (e.g. efficient fulfilment of recurring tasks). Single robotic work cells are not sufficient for a sustainable increase in productivity. Thus, we want to use the “social agent matrix” to inform the interaction scenarios of joint human–robot collaboration in turn-taking tasks, to explore whether and how sociality may positively influence performance measures.

Notes

1. WordNet is an online lexical reference system, developed at Princeton University. Its design is inspired by current psycholinguistic theories of human lexical memory. English nouns, verbs, adjectives and adverbs are organized into synonym sets, each representing one underlying lexical concept (http://wordnet.princeton.edu/).

References

Gold, K., Scassellati, B.: A Bayesian robot that distinguishes “self” from “other”. In: Proceedings of the 29th Annual Meeting of the Cognitive Science Society (CogSci2007). Psychology Press, New York (2007)

Abdulla, R.A., Garrison, B., Salwen, M., Driscoll, P., Casey, D.: The Credibility of Newspapers, Television News and Online News. In: Journalism and Mass Communication, Miami (2002)

Powers, A., Kiesler, S.: The advisor robot: tracing people’s mental model from a robot’s physical attributes. In: Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human–Robot Interaction (HRI’06), pp. 218–225. ACM, New York (2006) doi:10.1145/1121241.1121280

Bartneck, C.: Affective expressions of machines. In: Extended Abstracts on Human Factors in Computing Systems (CHI’01), pp. 189–190. ACM, New York (2001). doi:10.1145/634067.634181

Bartneck, C., Croft, E., Kulic, D.: Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots. In: Metrics for Human–Robot Interaction Workshop in Affiliation with the 3rd ACM/IEEE International Conference on Human–Robot Interaction (HRI 2008), Technical Report 471, pp. 37–44. University of Hertfordshire, Amsterdam (2008)

Berlo, D.K., Lemert, J.B., Mertz, R.J.: Dimensions for evaluating the acceptability of message sources. Public Opin. Q. 33(4), 563–576 (1969)

Bowlby, J.: The nature of the child’s tie to his mother. Int. J. Psychoanal. 39, 350–373 (1958)

Cramer, H.: People’s responses to autonomous and adaptive systems. Ph.D. thesis, University of Amsterdam (2010)

Dahlbäck, N., Jönsson, A., Ahrenberg, L.: Wizard of Oz studies: why and how. In: Proceedings of the 1st International Conference on Intelligent User Interfaces (IUI’93), pp. 193–200. ACM, New York (1993)

Dautenhahn, K.: Design spaces and niche spaces of believable social robots. In: Proceedings of the 11th IEEE International Workshop on Robot and Human Interactive Communication (RO-MAN2002), pp. 192–197 (2002)

Desai, M., Stubbs, K., Steinfeld, A., Yanco, H.: Creating trustworthy robots: lessons and inspirations from automated systems. In: Proceedings of the AISB Convention: New Frontiers in Human–Robot Interaction (2009)

Fogg, B.J.: Computers as persuasive social actors. In: Fogg, B.J. (ed.) Persuasive Technology: Using Computers to Change What We Think and Do, Chap. 5, pp. 89–120. Morgan Kaufmann Publishers, San Francisco (2003)

Fogg, B.J., Tseng, H.: The elements of computer credibility. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’99), pp. 80–87. ACM, New York (1999) doi: 10.1145/302979.303001

Fong, T., Nourbakhsh, I., Dautenhahn, K.: A survey of socially interactive robots. Robot. Auton. Syst. 42, 143–166 (2003)

Gaziano, C., McGrath, K.: Measuring the concept of credibility. Journal. Q. 63(3), 451–462 (1986)

Gouldner, A.W.: The norm of reciprocity: A preliminary statement. Am. Sociol. Rev. 25, 161–178 (1960)

Heider, F., Simmel, M.: An experimental study of apparent behavior. Am. J. Psychol. 57, 243–249 (1944)

Hovland, C.I., Janis, I.L., Kelley, H.H.: Communication and Persuasion. Yale University Press, New Haven (1953)

Imai, M., Narumi, M.: Immersion in interaction based on physical world object. In: Proceedings of the 2005 International Conference on Active Media Technology (AMT2005), pp. 523–528 (2005)

Kahn, P.H., Jr., Freier, N., Friedman, B., Severson, R., Feldman, E.: Social and moral relationships with robotic others? In: Proceedings of the 13th IEEE International Workshop on Robot and Human Interactive Communication (RO-MAN2004), pp. 545–550 (2004)

Kaplan, F.: Free creatures: The role of uselessness in the design of artificial pets. In: Proceedings of the 1st Edutainment Robotics Workshop (2000)

Kaplan, F.: Artificial attachment: Will a robot ever pass the Ainsworth’s strange situation test? In: Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids 01), pp. 125–132 (2001)

Kidd, C.D.: Sociable robots: The role of presence and task in human–robot interaction. Master’s thesis, Massachusetts Institute of Technology (2003)

Kiesler, S.B., Goetz, J.: Mental models of robotic assistants. In: CHI Extended Abstracts, pp. 576–577, Minneapolis (2002)

Knorr-Cetina, K.: Sociality with objects: Social relations in postsocial knowledge societies. Theor. Cult. Soc. 14(4), 1–30 (1997)

Lohse, M., Hegel, F., Wrede, B.: Domestic applications for social robots – a user study on appearance and function. J. Phys. Agents 2, 21–32 (2008)

Lynch, M., Peyrot, M.: Introduction: A reader’s guide to ethnomethodology. In: Qualitative Sociology. Springer-Verlag (2005)

McCroskey, J.C., Hamilton, P.R., Weiner, A.N.: The effect of interaction behavior on source credibility, homophily, and interpersonal attraction. Human Commun. Res. 1, 42–52 (1974)

Nomura, T., Kanda, T., Suzuki, T.: Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI Soc. 20(2), 138–150 (2006)

Norman, D.A.: Emotional Design: Why We Love (or Hate) Everyday Things. Basic Books, New York (2004)

Reeves, B., Nass, C.: The Media Equation: How People Treat Computers, Televisions, and New Media Like Real People and Places. Cambridge University Press, New York (1996)

Reichenbach, J., Bartneck, C., Carpenter, J.: Well done, robot! The importance of praise and presence in human–robot collaboration. In: Dautenhahn, K. (ed.) The 15th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2006), pp. 86–90. Hatfield (2006). doi:10.1109/ROMAN.2006.314399

Shinozawa, K., Reeves, B., Wise, K., Lim, S., Maldonado, H.: Robots as new media: A cross-cultural examination of social and cognitive responses to robotic and on-screen agents. International Communication Association (2002)

Smith, L., Breazeal, C.: The dynamic life of developmental process. Dev. Sci. 10(1), 61–68 (2007)

Sundar, S.S., Nass, C.: Source orientation in human–computer interaction. Commun. Res. 27(6), 683–703 (2000). doi:10.1177/009365000027006001

Wehmeyer, K.: Assessing users’ attachment to their mobile devices. In: Proceedings of the International Conference on the Management of Mobile Business (2007)

Weiss, A.: Validation of an evaluation framework for human–robot interaction. The impact of usability, social acceptance, user experience, and societal impact on collaboration with humanoid robots. Ph.D. thesis, University of Salzburg (2010)

Weiss, A., Bernhaupt, R., Schwaiger, D., Altmaninger, M., Buchner, R., Tscheligi, M.: User experience evaluation with a Wizard of Oz approach: Technical and methodological considerations. In: Proceedings of the 9th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2009), pp. 303–308 (2009)

Weiss, A., Bernhaupt, R., Tscheligi, M., Wollherr, D., Kuhnlenz, K., Buss, M.: A methodological variation for acceptance evaluation of human–robot interaction in public places. In: Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2008), pp. 713–718 (2008)

Weiss, A., Igelsböck, J., Pierro, P., Buchner, R., Balaguer, C., Tscheligi, M.: User perception of usability aspects in indirect HRI – a chain of translations. In: Proceedings of the 19th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2010), pp. 574–580 (2010)

Weiss, A., Igelsböck, J., Tscheligi, M., Bauer, A., Kühnlenz, K., Wollherr, D., Buss, M.: Robots asking for directions – the willingness of passers-by to support robots. In: Proceedings of the 5th ACM/IEEE International Conference on Human Robot Interaction (HRI’10), pp. 23–30. ACM, New York (2010)

Weiss, A., Igelsböck, J., Wurhofer, D., Tscheligi, M.: Looking forward to a “Robotic Society”? Imaginations of future human–robot relationships. Special issue on the Human Robot Personal Relationship Conference in the International Journal of Social Robotics (2010)

Weiss, A., Igelsböck, J., Calinon, S., Billard, A., Tscheligi, M.: Teaching a humanoid: A user study on learning by demonstration with HOAP-3. In: Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2009), pp. 147–152 (2009)

Weiss, A., Tscheligi, M.: Special issue on robots for future societies: evaluating social acceptance and societal impact of robots. Int. J. Soc. Robot. 2(4), 345–346 (2010)

Weizenbaum, J.: ELIZA – a computer program for the study of natural language communication between man and machine. Commun. ACM 9(1), 36–45 (1966). doi:10.1145/365153.365168

Acknowledgments

This work was conducted in the framework of the EU-funded FP6 project ROBOT@CWE. Moreover, the financial support by the Federal Ministry of Economy, Family and Youth and the National Foundation for Research, Technology and Development is gratefully acknowledged (Christian Doppler Laboratory for “Contextual Interfaces”).


Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Weiss, A., Tscheligi, M. (2013). Rethinking the Human–Agent Relationship: Which Social Cues Do Interactive Agents Really Need to Have?. In: Hingston, P. (eds) Believable Bots. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-32323-2_1


DOI: https://doi.org/10.1007/978-3-642-32323-2_1


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-32322-5

Online ISBN: 978-3-642-32323-2

eBook Packages: Computer Science, Computer Science (R0)