Abstract

Given β > 0 and δ > 0, the function t −β may be approximated for t in a compact interval [δ, T] by a sum of terms of the form we−at, with parameters w > 0 and a > 0. One such an approximation, studied by Beylkin and Monzón (Appl. Comput. Harmon. Anal. 28:131–149, 2010), is obtained by applying the trapezoidal rule to an integral representation of t −β, after which Prony’s method is applied to reduce the number of terms in the sum with essentially no loss of accuracy. We review this method, and then describe a similar approach based on an alternative integral representation. The main difference is that the new approach achieves much better results before the application of Prony’s method; after applying Prony’s method the performance of both is much the same.

Dedicated to Ian H. Sloan on the occasion of his 80th birthday.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

1 Introduction

Consider a Volterra operator with a convolution kernel,

and suppose that we seek a numerical approximation to \(\mathbb {K}u\) at the points of a grid \(0=t_0<t_1<t_2<\cdots <t_{N_t}=T\). For example, if we know U n ≈ u(t n) and define (for simplicity) a piecewise-constant interpolant \(\tilde U(t)=U^n\) for t ∈ I n = (t n−1, t n), then

The number of operations required to compute this sum in the obvious way for 1 ≤ n ≤ N t is proportional to \(\sum _{n=1}^{N_t}n\approx N_t^2/2\), and this quadratic growth can be prohibitive in applications where each U j is a large vector and not just a scalar. Moreover, it might not be possible to store U j in active memory for all time levels j.

These problems can be avoided using a simple, fast algorithm if the kernel k admits an exponential sum approximation

provided sufficient accuracy is achieved using only a moderate number of terms L, for a choice of δ > 0 that is smaller than the time step Δt n = t n − t n−1 for all n. Indeed, if Δt n ≥ δ then δ ≤ t n − s ≤ T for 0 ≤ s ≤ t n−1 so

where

Thus,

and by using the recursive formula

we can evaluate \(\mathbb {K}\tilde U(t_n)\) for 1 ≤ n ≤ N to an acceptable accuracy with a number of operations proportional to LN t—a substantial saving if L ≪ N t. In addition, we may overwrite \(\varTheta ^{n-1}_l\) with \(\varTheta ^n_l\), and overwrite U n−1 with U n, so that the active storage requirement is proportional to L instead of N t.

In the present work, we study two exponential sum approximations to the kernel k(t) = t −β with β > 0. Our starting point is the integral representation

which follows easily from the integral definition of the Gamma function via the substitution p = y∕t (if y is the original integration variable). Section 2 discusses the results of Beylkin and Monzón [3], who used the substitution p = e x in (4) to obtain

Applying the infinite trapezoidal rule with step size h > 0 leads to the approximation

where

We will see that the relative error,

satisfies a uniform bound for 0 < t < ∞. If t is restricted to a compact interval [δ, T] with 0 < δ < T < ∞, then we can similarly bound the relative error in the finite exponential sum approximation

for suitable choices of M > 0 and N > 0.

The exponents a n = e nh in the sum (9) tend to zero as n →−∞. In Sect. 3 we see how, for a suitable threshold exponent size a ∗, Prony’s method may be used to replace \(\sum _{a_n\le a^*}w_ne^{-a_nt}\) with an exponential sum having fewer terms. This idea again follows Beylkin and Monzón [3], who discussed it in the context of approximation by Gaussian sums.

Section 4 introduces an alternative approach based on the substitution \(p=\exp (x-e^{-x})\), which transforms (4) into the integral representation

where

Applying the infinite trapezoidal rule again leads to an approximation of the form (6), this time with

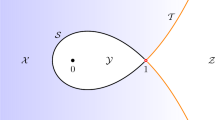

As x →∞, the integrands in both (5) and (10) decay like \(\exp (-te^x)\). However, they exhibit different behaviours as x →−∞, with the former decaying like e βx = e −β|x| whereas the latter decays much faster, like \(\exp (-\beta e^{-x})=\exp (-\beta e^{|x|})\), as seen in Fig. 1 (note the differing scales on the vertical axis).

Li [5] summarised several alternative approaches for fast evaluation of a fractional integral of order α, that is, for an integral operator of the form (1) with kernel

where the integral representation follows from (4), with β = 1 − α, and the reflection formula for the Gamma function, \(\varGamma (\alpha )\varGamma (1-\alpha )=\pi /\sin \pi \alpha \). She developed a quadrature approximation,

which again provides an exponential sum approximation, and showed that the error can be made smaller than 𝜖 for all t ∈ [δ, ∞) with Q of order \((\log \epsilon ^{-1}+\log \delta ^{-1})^2\).

More recently, Jiang et al. [4] developed an exponential sum approximation for t ∈ [δ, T] using composite Gauss quadrature on dyadic intervals, applied to (5), with Q of order

In other applications, the kernel k(t) is known via its Laplace transform,

so that instead of the exponential sum (2) it is natural to seek a sum-of-poles approximation,

for z in a suitable region of the complex plane; see, for instance, Alpert et al. [2] and Xu and Jian [7].

2 Approximation Based on the Substitution p = e x

The nature of the approximation (6) is revealed by a remarkable formula for the relative error [3, Section 2]. For completeness, we outline the proof.

Theorem 1

If the exponents and weights are given by (7), then the relative error (8) has the representation

where R(ξ) and Φ(ξ) are the real-valued functions defined by

Moreover, \(R(\xi )\le e^{-2\pi \theta |\xi |}/(\cos \theta )^\beta \) for 0 ≤ θ < π∕2 and −∞ < ξ < ∞.

Proof

For each t > 0, the integrand \(f(x)=\exp (-te^x+\beta x)\) from (5) belongs to the Schwarz class of rapidly decreasing C ∞ functions, and we may therefore apply the Poisson summation formula to conclude that

where the Fourier transform of f is

The substitution p = te x gives

so, with a n and w n defined by (7),

The formula for ρ(t) follows after noting that \(\overline {\varGamma (\beta +i2\pi \xi )}=\varGamma (\beta -i2\pi \xi )\) for all real ξ; hence, R(−ξ) = R(ξ) and Φ(−ξ) = −Φ(ξ).

To estimate R(ξ), let y > 0 and define the ray \(\mathbb {C}_\theta =\{se^{i\theta }:0<s<\infty \}\). By Cauchy’s theorem,

and thus

implying the desired bound for R(ξ). □

In practice, the amplitudes R(n∕h) decay so rapidly with n that only the first term in the expansion (15) is significant. For instance, since [1, 6.1.30]

if β = 1∕2 then \(R(\xi )=(\cosh 2\pi ^2\xi )^{-1/2}\le \sqrt {2}e^{-\pi ^2\xi }\) so, choosing h = 1∕3, we have R(1∕h) = 1.95692 × 10−13 and R(2∕h) = 2.70786 × 10−26. In general, the bound \(R(n/h)\le e^{-2\pi \theta n/h}/(\cos \theta )^\beta \) from the theorem is minimized by choosing \(\tan \theta =2\pi n/(\beta h)\), implying that

Since we can evaluate only a finite exponential sum, we now estimate the two tails of the infinite sum in terms of the upper incomplete Gamma function,

Theorem 2

If the exponents and weights are given by (7), then

and

Proof

For each t > 0, the integrand \(f(x)=\exp (-te^x+\beta x)\) from (5) decreases for \(x>\log (\beta /t)\). Therefore, if \(Nh\ge \log (\beta /t)\), that is, if te Nh ≥ β, then

where, in the final step, we used the substitution p = te x. Similarly, the function \(f(-x)=\exp (-te^{-x}-\beta x)\) decreases for \(x>\log (t/\beta )\) so if \(Mh\ge \log (t/\beta )\), that is, if te −Mh ≤ β, then

where, in the final step, we used the substitution p = te −x. □

Given 𝜖 RD > 0 there exists h > 0 such that

and by Theorem 1,

so 𝜖 RD is an upper bound for the relative discretization error. Similarly, given a sufficiently small 𝜖 RT > 0, there exist x δ > 0 and X T > 0 such that \(\delta e^{x_\delta }\ge \beta \) and \(Te^{-X_T}\le \beta \) with

Thus, by Theorem 2,

and

showing that 2𝜖 RT is an upper bound for the relative truncation error. Denoting the overall relative error for the finite sum (9) by

we therefore have

The estimate for R(ξ) in Theorem 1, together with the asymptotic behaviours

and

imply that (19) can be satisfied with

Figure 2 shows the relation between 𝜖 RD and 1∕h given by (16), and confirms that 1∕h is approximately proportional to \(\log \epsilon _{\text{RT}}^{-1}\). In Fig. 3, for each value of 𝜖 we computed h by solving (16) with 𝜖 RD = 𝜖∕3, then computed x δ and X T by solving (17) with 𝜖 RT = 𝜖∕3, and finally put M = ⌈X T∕h⌉ and N = ⌈x δ∕h⌉.

The bound 𝜖 RD for the relative discretization error, defined by (16), as a function of 1∕h for various choices of β

The growth in M and N as the upper bound for the overall relative error (18) decreases, for different choices of T and δ

3 Prony’s Method

The construction of Sect. 2 leads to an exponential sum approximation (9) with many small exponents a n. We will now explain how the corresponding terms can be aggregated to yield a more efficient approximation.

Consider more generally an exponential sum

in which the weights and exponents are all strictly positive. Our aim is to approximate this function by an exponential sum with fewer terms,

whose weights \(\tilde w_k\) and exponents \(\tilde a_k\) are again all strictly positive. To this end, let

We can hope to find 2K parameters \(\tilde w_k\) and \(\tilde a_k\) satisfying the 2K conditions

so that, by Taylor expansion,

The approximations here require that the g j and the \(\tilde a_kt\) are nicely bounded, and preferably small.

Algorithm 1 Prony(a 1, …, a L, w 1, …w L, K)

Require: 2K − 1 ≤ L

Compute \(g_j=\sum _{l=1}^Lw_la_l^j\) for 0 ≤ j ≤ 2K − 1

Find q 0, …, q K−1 satisfying \(\sum _{m=0}^{K-1}g_{j+m}q_m=-g_{j+K}\) for 0 ≤ j ≤ K − 1, and put q K = 1

Find the roots \(\tilde a_1\), …, \(\tilde a_K\) of the polynomial \(Q(z)=\sum _{k=0}^K q_kz^k\)

Find \(\tilde w_1\), …, \(\tilde w_K\) satisfying \(\sum _{k=1}^K\tilde a_k^j\tilde w_k\approx g_j\) for 0 ≤ j ≤ 2K − 1

return \(\tilde a_1\), …, \(\tilde a_K\), \(\tilde w_1\), …, \(\tilde w_K\)

In Prony’s method, we seek to satisfy (20) by introducing the monic polynomial

and observing that the unknown coefficients q k must satisfy

for 0 ≤ j ≤ K − 1 (so that j + m ≤ 2K − 1 for 0 ≤ m ≤ K), with q K = 1. Thus,

which suggests the procedure Prony defined in Algorithm 1. We must, however, beware of several potential pitfalls:

-

1.

the best choice for K is not clear;

-

2.

the K × K matrix [g j+k] might be badly conditioned;

-

3.

the roots of the polynomial Q(z) might not all be real and positive;

-

4.

the linear system for the \(\tilde w_k\) is overdetermined, and the least-squares solution might have large residuals;

-

5.

the \(\tilde w_k\) might not all be positive.

We will see that nevertheless the algorithm can be quite effective, even when K = 1, in which case we simply compute

Example 1

We took β = 3∕4, δ = 10−6, T = 10, 𝜖 = 10−8, 𝜖 RD = 0.9 × 10−8 and 𝜖 RT = 0.05 × 10−8. The methodology of Sect. 2 led to the choices h = 0.47962, M = 65 and N = 36, and we confirmed via direct evaluation of the relative error that \(|\rho ^N_M(t)|\le 0.92\times 10^{-8}\) for δ ≤ t ≤ T. We applied Prony’s method to the first L terms of the sum in (9), that is, those with − M ≤ n ≤ L − M, thereby reducing the total number of terms by L − K. Table 1 lists, for different choices of L and K, the additional contribution to the relative error, that is, max1≤p≤P|η(t p)| where

and we use a geometric grid in [δ, 1] given by t p = T (p−1)∕(P−1)δ (P−p)∕(P−1) for 1 ≤ p ≤ P with P = 751. The largest reduction consistent with maintaining overall accuracy was when L = 65 and K = 6, and Fig. 4 (Top) plots |η(t)| in this case, as well as the overall relative error (Bottom) for the resulting approximation,

In this way, the number of terms in the exponential sum approximation was reduced from M + 1 + N = 102 to (M + K − L) + 1 + N = 43, with the maximum absolute value of the relative error growing only slightly to 1.07 × 10−8. Figure 4 (Bottom) shows that the relative error is closely approximated by the first term in (15), that is, \(\rho ^M_N(t)\approx -2R(h^{-1})\cos \bigl (2\pi h^{-1}\log t-\varPhi (h^{-1}) \bigr )\) for δ ≤ t ≤ T.

4 Approximation Based on the Substitution \(p=\exp (x-e^{-x})\)

We now consider the alternative exponents and weights given by (12). A different approach is needed for the error analysis, and we define

so that \(\mathbb {Q}(f,h)\) is an infinite trapezoidal rule approximation to \(\mathbb {I}(f)\). Recall the following well-known error bound.

Theorem 3

Let r > 0. Suppose that f(z) is continuous on the closed strip |ℑz|≤ r, analytic on the open strip |ℑz| < r, and satisfies

with

Then, for all h > 0,

Proof

See McNamee et al. [6, Theorem 5.2]. □

For t > 0, we define the entire analytic function of z,

where φ(z, t) is the analytic continuation of the function defined in (11). In this way, \(t^{-\beta }=\mathbb {I}(f)/\varGamma (\beta )\) by (10).

Lemma 1

If 0 < r < π∕2, then the function f defined in (23) satisfies the hypotheses of Theorem 3 with A r ≤ Ct −β for 0 < t ≤ 1, where the constant C > 0 depends only on β and r.

Proof

A short calculation shows that

and that if 0 < 𝜖 < π∕2 − r, then

Thus, if x ≥ x ∗ then \(\cos {}(r+e^{-x}\sin r)\ge \cos {}(\pi /2-\epsilon )=\sin \epsilon \) so

where \(c=\exp (-e^{-x^*}\cos r)\sin \epsilon >0\). If necessary, we increase x ∗ so that x ∗ > 0. Since |1 + e −(x±ir)|≤ 1 + e −x,

and the substitution p = e x then yields, with \(p^*=e^{x^*}\),

Also, if x ≥ 0 then

so

which is bounded for 0 < t ≤ 1. Similarly, if x ≤ 0 then \(\exp (x-e^{-x}\cos r)\le 1\) so \(\Re \varphi (x\pm ir,t)\ge -t+\beta e^{-x}\cos r\) and therefore, using again the substitution p = e x,

which is also bounded for 0 < t ≤ 1. The required estimate for A r follows.

If x ≥ x ∗, then the preceding inequalities based on (24) show that

which tends to zero as x →∞ for any t > 0. Similarly, if x ≤ 0, then \(\Re \varphi (x\pm iy)\ge -t+\beta e^{-x}\cos r\) for | y|≤ r, so

which again tends to zero as x →−∞. □

Together, Theorem 3 and Lemma 1 imply the following bound for the relative error (8) in the infinite exponential sum approximation (6).

Theorem 4

Let h > 0 and define a n and w n by (12). If 0 < r < π∕2, then there exists a constant C 1 (depending on β and r) such that

Proof

The definitions above mean that \(hf(nh)=w_ne^{-a_nt}\). □

Thus, a relative accuracy 𝜖 is achieved by choosing h of order \(1/\log \epsilon ^{-1}\). Of course, in practice we must compute a finite sum, and the next lemma estimates the two parts of the associated truncation error.

Lemma 2

Let h > 0, 0 < θ < 1 and 0 < t ≤ 1. Then the function f defined in (23) satisfies

and

When 0 < β ≤ 1, the second estimate holds also with θ = 1.

Proof

If n ≤ 0, then φ(nh, t) ≥−t + βe −nh so

The function g 1(x) decreases for \(x>\log (\beta ^{-1}-1)\) if 0 < β < 1∕2, and for all x ≥ 0 if β ≥ 1∕2, so

and the substitution p = e x gives

so the first estimate holds with C 2 = 2e∕Γ(β + 1).

If n ≥ 0 we have \(\varphi (nh,t)\ge t\exp (nh-1)-\beta nh\) and 1 + e −nh ≤ 2, so

The function g 2(x) decreases for \(x>1+\log (\beta t^{-1})\), so

and the substitution p = e x gives

Since te Nh−1 ≥ β, if 0 < β ≤ 1 then the integral on the right is bounded above by \(\beta ^{\beta -1}\exp (-te^{Nh-1})\). If β > 1, then p β−1e −(1−θ)p is bounded for p > 0 so

completing the proof. □

It is now a simple matter to see that the number of terms L = M + 1 + N needed to ensure a relative accuracy 𝜖 for δ ≤ t ≤ 1 is of order \((\log \epsilon ^{-1})\log (\delta ^{-1}\log \epsilon ^{-1})\).

Theorem 5

Let a n and w n be defined by (12). For 0 < δ ≤ 1 and for a sufficiently small 𝜖 > 0, if

then

Proof

The inequalities for h, M and N imply that each of C 1e −2πr∕h, \(C_2\exp (-\beta e^{Mh})\) and \(C_3\exp (-\theta te^{Nh-1})\) is bounded above by 𝜖t −β∕3, so the error estimate is a consequence of Theorem 4, Lemma 2 and the triangle inequality. Note that the restrictions on M and N in (25) and (26) will be satisfied for 𝜖 sufficiently small. □

Although the error bounds above require t ∈ [δ, 1], a simple rescaling allows us to treat a general compact subinterval [δ, T]. If \(\check a_n=a_n/T\) and \(\check w_n=w_n/T^\beta \), then

for δ ≤ t∕T ≤ 1, or in other words for δ ⋅ T ≤ t ≤ T. Moreover, the relative error \(\check \rho ^N_M(t)=\rho ^N_M(t/T)\) is unchanged by the rescaling.

Example 2

We took the same values for β, δ, T, 𝜖, 𝜖 RD and 𝜖 RT as in Example 1. Since the constant C 1 of Theorem 4 is difficult to estimate, we again used (16) to choose h = 0.47962. Likewise, the constant C 3 in Lemma 2 is difficult to estimate, so we chose N = ⌈h −1x δ∕T⌉ = 40. However, knowing C 2 = 2e∕Γ(β + 1) we easily determined that \(C_2\exp (-\beta e^{Mh})\le \epsilon _{\text{RT}}\) for M = 8. The exponents and weights (12) were computed for the interval [δ∕T, 1], and then rescaled as above to create an approximation for the interval [δ, T] with M + 1 + N = 49 terms and a relative error whose magnitude is at worst 2.2 × 10−8.

The behaviour of the relative error \(\rho ^N_M(t)\), shown in Fig. 5, suggests a modified strategy: construct the approximation for [δ, 10T] but use it only on [δ, T]. We found that doing so required N = 45, that is, 5 additional terms, but resulted in a nearly uniform amplitude for the relative error of about 0.97 × 10−8. Finally, after applying Prony’s method with L = 17 and K = 6 we were able to reduce the number of terms from M + 1 + N = 54 to 43 without increasing the relative error.

The relative error for the initial approximation from Example 2

To compare these results with those of Li [5], let 0 < α < 1 and let k(t) = t α−1∕Γ(α) denote the kernel for the fractional integral of order α. Taking β = 1 − α we compute the weights w l and exponents a l as above and define

The fast algorithm evaluates

and our bound \(|\rho ^N_M(t)|\le \epsilon \) implies that \(|k_M^N(t)-k(t)|\le \epsilon t^{\alpha -1}/\varGamma (\alpha )\) for δ ≤ t ≤ T, so

provided Δt n ≥ δ and t n ≤ T. Similarly, the method of Li yields \((\mathbb {K}_QU)^n\) but with a bound for the absolute error in (14), so that |k Q(t) − k(t)|≤ 𝜖′ for δ′≤ t < ∞. Thus,

provided Δt n ≥ δ. Li [5, Fig. 3 (d)] required about Q = 250 points to achieve an (absolute) error 𝜖′≤ 10−6 for t ≥ δ′ = 10−4 when α = 1∕4 (corresponding to β = 1 − α = 3∕4). In Examples 1 and 2, our methods give a smaller error 𝜖 ≤ 10−8 using only M + 1 + N = 43 terms with a less restrictive lower bound for the time step, δ = 10−6. Against these advantages, the method of Li permits arbitrarily large t n.

5 Conclusion

Comparing Examples 1 and 2, we see that, for comparable accuracy, the approximation based on the second substitution results in far fewer terms because we are able to use a much smaller choice of M. However, after applying Prony’s method both approximations are about equally efficient. If Prony’s method is not used, then the second approximation is clearly superior. Another consideration is that the first approximation has more explicit error bounds so we can, a priori, more easily determine suitable choices of h, M and N to achieve a desired accuracy.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions. Dover, New York (1965)

Alpert, B., Greengard, L., Hagstrom, T.: Rapid evaluation of nonreflecting boundary kernels for time-domain wave propagation. SIAM J. Numer. Anal. 37, 1138–1164 (2000)

Beylkin, G., Monzón, L.: Approximation by exponential sums revisited. Appl. Comput. Harmon. Anal. 28, 131–149 (2010)

Jiang, S., Zhang, J., Zhang, Q., Zhang, Z.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun. Comput. Phys. 21(3), 650–678 (2017)

Li, J.R.: A fast time stepping method for evaluating fractional integrals. SIAM J. Sci. Comput. 31, 4696–4714 (2010)

McNamee, J., Stenger, F., Whitney, E.L.: Whittaker’s cardinal function in retrospect. Math. Comput. 25, 141–154 (1971)

Xu, K., Jiang, S.: A bootstrap method for sum-of-poles approximations. J. Sci. Comput. 55, 16–39 (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

McLean, W. (2018). Exponential Sum Approximations for t−β. In: Dick, J., Kuo, F., Woźniakowski, H. (eds) Contemporary Computational Mathematics - A Celebration of the 80th Birthday of Ian Sloan. Springer, Cham. https://doi.org/10.1007/978-3-319-72456-0_40

Download citation

DOI: https://doi.org/10.1007/978-3-319-72456-0_40

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-72455-3

Online ISBN: 978-3-319-72456-0

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)