Abstract

Positron emission tomography (PET) imaging is widely used for staging and monitoring treatment in a variety of cancers, including the lymphomas and lung cancer. Recently, there has been a marked increase in the accuracy and robustness of machine learning methods and in their application to computer-aided diagnosis (CAD) systems, e.g., the automated detection and quantification of abnormalities in medical images. Successful machine learning methods require large amounts of training data; hence, synthesis of PET images could play an important role in enlarging training data and ultimately improve the accuracy of PET-based CAD systems. Existing approaches, such as atlas-based methods or methods based on simulated or physical phantoms, have difficulty synthesizing the low resolution and low signal-to-noise ratio inherent in PET images. In addition, these methods usually have limited capacity to produce a variety of synthetic PET images with large anatomical and functional differences. Hence, we propose a new method to synthesize PET data via multi-channel generative adversarial networks (M-GAN) to address these limitations. In contrast to existing medical image synthesis methods that rely on low-level features, our M-GAN approach captures feature representations with a high level of semantic information through adversarial learning. In a single framework, our M-GAN takes input from the annotation (label) to synthesize regions of high uptake, e.g., tumors, and from the computed tomography (CT) images to constrain the appearance consistency based on the CT-derived anatomical information, and it outputs the synthetic PET images directly. Our experimental data from 50 lung cancer PET-CT studies show that our method provides more realistic PET images compared to conventional GAN methods. Further, the PET tumor detection model trained with our synthetic PET data performed competitively when compared to the detection model trained with real PET data (2.79% lower in terms of recall). We suggest that our approach, when its synthetic images are used in combination with real images, can boost the training data for machine learning methods.

1 Introduction

[18F]-Fluorodeoxyglucose (FDG) positron emission tomography (PET) is widely used for staging and for monitoring the response to treatment in a wide variety of cancers, including lymphoma and lung cancer [1,2,3]. This is attributed to the ability of FDG PET to depict regions of increased glucose metabolism in sites of active tumor relative to normal tissues [1, 4]. Recently, advances in machine learning methods have been applied to medical computer-aided diagnosis (CAD) [5], where algorithms such as deep learning and pattern recognition can provide automated detection of abnormalities in medical images [6,7,8]. Machine learning methods depend on the availability of large amounts of annotated data for training and for the derivation of learned models [7, 8]. There is, however, a scarcity of annotated training data for medical images, which relates to the time involved in manual annotation and the confirmation of the imaging findings [9, 10]. Further, the training data need to encompass the wide variation in the imaging findings of a particular disease across a number of different patients. Hence, effort has been directed at deriving other sources of training data such as ‘synthetic’ images. Early approaches used simulations, e.g., Monte Carlo approaches [24, 25], or physical phantoms that consisted of simplified anatomical structures [11]. Unfortunately, phantoms are unable to generate high-quality synthetic images and cannot simulate a wide variety of complex interactions, e.g., the deformations introduced by disease. Other investigators used atlases [12], where different transformation maps were applied to the atlas with an intensity fusion technique to create new images. However, atlas-based methods usually require many pre-/post-processing steps and a priori knowledge for tuning a large number of transformation parameters, which limits their wider adoption.
Further, the image registration used to create the transformation maps affects the quality of the synthetic images.

In this paper, we propose a new method to produce synthetic PET images using a multi-channel generative adversarial network (M-GAN). Our method exploits the state-of-the-art GAN image synthesis approach [13,14,15,16] with a novel adaptation for PET images and key improvements. The success of GANs is based on their ability to capture feature representations that contain a high level of semantic information using the adversarial learning concept. A GAN has two competing neural networks: the first is trained to find an optimal mapping from the input data to the synthetic images, while the second is trained to distinguish the generated synthetic images from the real images. The optimal feature representation is therefore acquired during the adversarial learning process. Although GANs have had great success in the generation of natural images, their application to PET images is not trivial. There are three main ways to conduct PET image synthesis with a GAN: (1) PET-to-PET; (2) Label-to-PET; and (3) Computed tomography (CT)-to-PET. For PET-to-PET synthesis, it is challenging to create new variations of the input PET images, since the mapping from the input to the synthetic PET cannot be markedly different. Label-to-PET synthesis usually has limited constraints in synthesizing PET images, so the synthesized PET images can lack spatial and appearance consistency, e.g., a lung tumor may appear outside the thorax. CT-to-PET synthesis is not usually able to synthesize high uptake regions, e.g., tumors, since the high uptake regions may not always be visible as an abnormality on the CT images. Both PET-to-PET and CT-to-PET synthesis require new annotations for the newly synthesized PET images before they can be used for machine learning.
Our proposition to address these limitations is a multi-channel GAN that takes the annotations (labels) to synthesize the high uptake regions and the corresponding CT images to constrain the appearance consistency, and outputs the synthetic PET images. The label does not need to be derived from the corresponding CT image; a user can draw any high uptake regions to be synthesized on the CT images. The novelty of our method, compared to prior approaches, is as follows: (1) it harnesses high-level semantic information for effective PET image synthesis in an end-to-end manner that does not require pre-/post-processing or parameter tuning; (2) we propose a new multi-channel generative adversarial network (M-GAN) for PET image synthesis. During training, M-GAN is capable of learning the integration of both CT and label to synthesize the high uptake regions and the anatomical background. During prediction, M-GAN uses the label and the estimated synthetic PET images derived from CT to gradually improve the quality of the synthetic PET image; and (3) our synthetic PET images can be used to boost the training data for machine learning methods.

2 Methods

2.1 Multi-channel Generative Adversarial Networks (M-GANs)

GANs [13] have two main components: a generative model G (the generator) that captures the data distribution and a discriminative model D (the discriminator) that estimates the probability that a sample came from the training data rather than from G. The generator is trained to produce outputs that cannot be distinguished from the real data by the adversarially trained discriminator, while the discriminator is trained to detect the synthetic data created by the generator.

Therefore, the overall objective is the min-max loss function, which is defined as:

\[ \min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log (1 - D(G(z)))] \]

where x is the real data and z is the input random noise. \(p_{data}\) and \(p_z\) represent the distributions of the real data and the input noise, respectively. D(x) represents the probability that x came from the real data, while G(z) represents the mapping from the noise z to synthetic data that resembles the real data.
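To make the min-max objective concrete, the following minimal Python sketch estimates the value function from a batch of discriminator outputs. The function name `gan_value`, the list-based inputs and the clipping constant `eps` are illustrative assumptions and are not part of the paper's implementation.

```python
import math

def gan_value(d_real, d_fake, eps=1e-12):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each in [0, 1].
    d_fake: discriminator outputs D(G(z)) on synthetic samples, each in [0, 1].
    eps:    hypothetical clipping constant that avoids log(0).
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that cannot tell real from synthetic outputs 0.5 everywhere.
undecided = gan_value([0.5, 0.5], [0.5, 0.5])
# A perfect discriminator outputs 1 on real and 0 on synthetic samples.
perfect = gan_value([1.0, 1.0], [0.0, 0.0])
```

The discriminator tries to push this value up (towards 0 in the perfect case), whereas the generator tries to push it down; when the discriminator is undecided the value equals \(-\log 4\).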

For our M-GAN, we embed the label and the CT image for training and testing, as shown in Fig. 1. During training, the generator takes the label and the CT as input and learns a mapping to synthesize the real PET images. The synthesized PET images, together with the real PET images, then enter the discriminator for separation as:

\[ \mathcal{L}_{M\text{-}GAN}(G, D) = \mathbb{E}_{l,c,t \sim p_{data}(l,c,t)}[\log D(l,c,t)] + \mathbb{E}_{l,c \sim p_{data}(l,c)}[\log (1 - D(l,c,G(l,c)))] \]

where l is the label, c is the CT image and t is the PET image. Conceptually, training the M-GAN seeks an optimal generator \(G^*\) by maximizing the objective over D while minimizing it over G, which can be defined as:

\[ G^* = \arg \min_G \max_D \mathcal{L}_{M\text{-}GAN}(G, D) \]

Based on the latest empirical data reported by van den Oord et al. [14], we used the L1 distance to encourage less blurring in the synthetic images during training. Therefore, the optimization process becomes:

\[ G^* = \arg \min_G \max_D \mathcal{L}_{M\text{-}GAN}(G, D) + \lambda \, \mathbb{E}_{l,c,t \sim p_{data}(l,c,t)}[\Vert t - G(l,c) \Vert_1] \]

where \(\lambda \) is a hyper-parameter that balances the contribution of the two terms; we set it to 100 empirically. Following Isola et al. [15], we used a U-net [17] architecture for the generator G and a five-layer convolutional network for the discriminator D.
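As a rough illustration of how the adversarial term and the L1 term are combined with the weight \(\lambda = 100\), the sketch below evaluates the generator's side of the objective for a single sample. The function names, the flat pixel lists and the per-pixel averaging of the L1 distance are assumptions made for this example; the paper does not specify these details.

```python
import math

def l1_distance(real_pet, synthetic_pet):
    """Mean absolute difference between two equally sized flat pixel lists."""
    if len(real_pet) != len(synthetic_pet) or not real_pet:
        raise ValueError("images must be non-empty and of equal size")
    total = sum(abs(t - g) for t, g in zip(real_pet, synthetic_pet))
    return total / len(real_pet)

def generator_objective(d_fake, real_pet, synthetic_pet, lam=100.0, eps=1e-12):
    """Generator-side loss: log(1 - D(l, c, G(l, c))) + lam * L1(t, G(l, c)).

    d_fake: discriminator output for the synthetic PET image, in [0, 1].
    lam:    weight of the L1 term (100 in the text).
    eps:    hypothetical clipping constant that avoids log(0).
    """
    adversarial = math.log(max(1.0 - d_fake, eps))
    return adversarial + lam * l1_distance(real_pet, synthetic_pet)

# Toy two-pixel example: the synthetic image is off by 0.5 at each pixel.
loss = generator_objective(0.5, [0.0, 1.0], [0.5, 0.5])
```

With \(\lambda = 100\) the L1 term dominates unless the synthetic image is already close to the real one, which is consistent with its stated role of discouraging blurred outputs.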

2.2 Materials and Implementation Details

Our dataset consisted of 50 PET-CT studies from 50 lung cancer patients provided by the Department of Molecular Imaging, Royal Prince Alfred (RPA) Hospital, Sydney, NSW, Australia. All studies were acquired on a 128-slice Siemens Biograph mCT scanner; each study had a CT volume and a PET volume. The reconstructed volumes had a PET resolution of 200 \(\times \) 200 pixels at 4.07 mm\(^2\), a CT resolution of 512 \(\times \) 512 pixels at 0.98 mm\(^2\) and a slice thickness of 3 mm. All data were de-identified. Each study contained between 1 and 7 tumors. Tumors were initially detected with connected thresholding at 40% of the peak SUV (standardized uptake value) to find ‘hot spots’. We used the findings from the clinical reports to make manual adjustments to ensure that the segmented tumors were accurate. The reports provided the location of the tumors and any involved lymph nodes in the thorax. All scans were read by an experienced clinician who has read 60,000 PET-CT studies.
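The initial 40% peak SUV connected thresholding can be sketched as a region-growing step. The example below is a simplified assumption rather than the procedure used in the study: it works on a single 2D slice stored as nested lists, seeds the region at the global peak, and uses 4-connectivity; the function name `connected_threshold` is hypothetical.

```python
from collections import deque

def connected_threshold(suv, fraction=0.4):
    """Grow a region from the peak SUV pixel, keeping 4-connected pixels
    whose SUV is at least `fraction` of the peak value."""
    rows, cols = len(suv), len(suv[0])
    # Seed at the pixel with the highest SUV (first one found on ties).
    peak = max(((r, c) for r in range(rows) for c in range(cols)),
               key=lambda rc: suv[rc[0]][rc[1]])
    cutoff = fraction * suv[peak[0]][peak[1]]
    mask = [[False] * cols for _ in range(rows)]
    mask[peak[0]][peak[1]] = True
    queue = deque([peak])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and not mask[nr][nc] and suv[nr][nc] >= cutoff:
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask

# Toy slice: peak of 10 at (1, 1); the 9 at (3, 3) exceeds the cutoff of 4
# but is not connected to the peak, so it is left out of this region.
toy_slice = [
    [1, 1, 1, 1],
    [1, 10, 6, 1],
    [1, 5, 1, 1],
    [1, 1, 1, 9],
]
toy_mask = connected_threshold(toy_slice)
```

A disconnected hot spot such as the one at (3, 3) would need its own seed, which is one reason manual adjustment against the clinical reports remains necessary.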

To evaluate our approach, we carried out experiments only on trans-axial slices that contained tumors, and so analyzed 876 PET-CT slices from 50 patient studies. We randomly separated these slices into two groups, each containing 25 patient studies. We used the first group for training and tested on the second group, and then reversed the roles of the groups. We ensured that no patient had PET-CT slices in both the training and test groups. Our method took 6 h to train over 200 epochs with a 12 GB Maxwell Titan X GPU using the Torch library [18].
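The patient-level two-fold protocol described above can be sketched as follows. This is a minimal illustration under assumptions: slices are identified by their index, each slice carries a patient identifier, and the function name `two_fold_patient_split` and the fixed random seed are hypothetical.

```python
import random

def two_fold_patient_split(slice_patient_ids, seed=0):
    """Split slice indices into two folds so that all slices of a patient
    fall in the same fold, then return both train/test role assignments."""
    patients = sorted(set(slice_patient_ids))
    rng = random.Random(seed)
    rng.shuffle(patients)
    first_group = set(patients[: len(patients) // 2])
    fold_a = [i for i, p in enumerate(slice_patient_ids) if p in first_group]
    fold_b = [i for i, p in enumerate(slice_patient_ids) if p not in first_group]
    # First run trains on fold A and tests on fold B; second run swaps them.
    return [(fold_a, fold_b), (fold_b, fold_a)]

# Toy data: 50 patients with 3 tumor-bearing slices each.
toy_ids = [patient for patient in range(50) for _ in range(3)]
runs = two_fold_patient_split(toy_ids)
train_idx, test_idx = runs[0]
```

Splitting by patient rather than by slice prevents neighbouring slices of the same tumor from appearing on both sides of the split, which would otherwise inflate the test results.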

3 Evaluation

3.1 Experimental Results for PET Image Synthesis

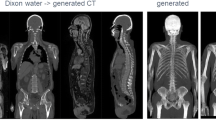

We compared our M-GAN to single-channel variants: the LB-GAN (using labels) and the CT-GAN (using CTs). We used mean absolute error (MAE) and peak signal-to-noise ratio (PSNR) to evaluate the different methods [19]. MAE measures the average absolute difference between corresponding pixels of the synthetic and the real PET image. PSNR measures the ratio between the maximum possible intensity value and the mean squared error between the synthetic and the real PET image. The results are shown in Table 1, where the M-GAN had the best performance across all measurements, with the lowest MAE and the highest PSNR.
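For reference, the two image-quality measures can be computed as in the sketch below. It assumes images given as flat lists of pixel intensities and uses the common decibel form of PSNR, \(10 \log_{10}(MAX^2 / MSE)\); the default `max_value` of 255 is an assumption, since the intensity range used in the experiments is not stated in the text.

```python
import math

def mae(real, synthetic):
    """Mean absolute error between corresponding pixels."""
    return sum(abs(r - s) for r, s in zip(real, synthetic)) / len(real)

def psnr(real, synthetic, max_value=255.0):
    """Peak signal-to-noise ratio in decibels.

    max_value is the maximum possible intensity (assumed to be 255 here).
    Returns infinity when the two images are identical.
    """
    mse = sum((r - s) ** 2 for r, s in zip(real, synthetic)) / len(real)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

# Toy example: a uniform error of 5 intensity levels at every pixel.
toy_real = [100.0, 120.0, 140.0, 160.0]
toy_synthetic = [105.0, 125.0, 145.0, 165.0]
toy_mae = mae(toy_real, toy_synthetic)
toy_psnr = psnr(toy_real, toy_synthetic)
```

Lower MAE and higher PSNR both indicate a synthetic image that is closer to the real one; because both are averaged over the whole image, small regions such as tumors contribute little to either score.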

3.2 Using Synthetic PET Images for Training

In the second experiment, we analysed the contribution of the synthetic PET images to training a fully convolutional network (FCN), a deep convolutional network widely used for object detection tasks [20,21,22]. We trained the FCN model with synthetic PET images produced by (i) LB-GAN, (ii) CT-GAN or (iii) M-GAN, or with (iv) real PET images. We then applied the trained FCN model to detect tumors on real PET images. Specifically, we used the first group to build the GAN model and applied the GAN model to the second group to produce the synthetic PET images. The synthetic PET images were then used to build the FCN model. Finally, the trained FCN model was tested on the real PET images of the first group for tumor detection. We then reversed the roles of the two groups and applied the same procedure. Our evaluation was based on the overlap ratio between the detected tumor and the ground truth annotations [23]. A detected tumor with >50% overlap with the annotated tumor (ground truth) was considered a true positive; any additional detected tumor was considered a false positive. We regarded an annotated tumor that was not detected, or whose overlap with the detected tumor was smaller than 50%, as a false negative. We measured the overall precision, recall and f-score.
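The counting rule for true positives, false positives and false negatives can be sketched as below. Several details are assumptions made for illustration: regions are represented as sets of pixel coordinates, the overlap ratio is taken as the fraction of the annotated tumor covered by the detection (the exact definition in [23] is not reproduced in the text), and each annotation can be matched by at most one detection.

```python
def evaluate_detection(detections, annotations, min_overlap=0.5):
    """Return (precision, recall, f_score) for one set of detections.

    detections, annotations: lists of sets of (row, col) pixel coordinates.
    A detection is a true positive if it covers more than `min_overlap`
    of a not-yet-matched annotated tumor; otherwise it is a false positive.
    Annotated tumors left unmatched are false negatives.
    """
    matched = set()
    tp = fp = 0
    for det in detections:
        hit = None
        for idx, ann in enumerate(annotations):
            if idx in matched or not ann:
                continue
            if len(det & ann) / len(ann) > min_overlap:
                hit = idx
                break
        if hit is None:
            fp += 1
        else:
            matched.add(hit)
            tp += 1
    fn = len(annotations) - len(matched)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score

# Toy case: one tumor found (3 of 4 pixels covered), one spurious detection,
# and one annotated tumor missed.
toy_annotations = [{(0, 0), (0, 1), (1, 0), (1, 1)}, {(5, 5), (5, 6)}]
toy_detections = [{(0, 0), (0, 1), (1, 0)}, {(9, 9)}]
toy_scores = evaluate_detection(toy_detections, toy_annotations)
```

In this toy case there is one true positive, one false positive and one false negative, so precision, recall and f-score are all 0.5.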

Table 2 shows the detection and segmentation performance. The results indicate that, for tumor detection, the FCN trained with M-GAN synthesized PET images performed competitively with the FCN trained with real PET images.

4 Discussion

Table 1 indicates that the M-GAN output is much closer to the real images than that of the other GAN variants, achieving the lowest MAE of 4.60 and the highest PSNR of 28.06. The best scores in both MAE and PSNR can be taken to indicate the most useful synthetic images. In general, LB-GAN may be employed to boost the training data. However, due to the lack of the spatial and appearance constraints that could be derived from CT, LB-GAN usually results in poor anatomical definition, as exemplified in Fig. 2d, where the lung boundaries were missing and the mediastinum regions were synthesized wrongly.

CT-GAN achieved competitive results in terms of MAE and PSNR (Table 1). However, it cannot reconstruct the lesions, because that information is only available in the label images (or PET), as exemplified in Fig. 2e, where two tumors were missing and one additional tumor appeared spuriously in the heart region of the synthetic image. The relatively small difference between the proposed M-GAN method and the CT-GAN method arises because tumor regions occupy only a small portion of the whole image and therefore carry little weight in the overall evaluation. In general, CT-GAN cannot synthesize the high uptake tumor regions, especially for tumors adjacent to the mediastinum. This is further evident in Table 2: the PET images synthesized by CT-GAN have inconsistent labeling of the tumors, which resulted in the trained FCN producing the lowest detection results.

In Table 2, the small difference between the detection results obtained with the M-GAN synthetic images and those obtained with the real PET images demonstrates the advantage of integrating the label, to synthesize the tumors, and the CT, to constrain the appearance consistency, in a single framework for training.

5 Conclusion

We propose a new M-GAN framework for synthesizing PET images by embedding a multi-channel input in a generative adversarial network, thereby enabling the learning of PET high uptake regions such as tumors together with the spatial and appearance constraints from the CT data. Our preliminary results on 50 lung cancer PET-CT studies demonstrate that the images produced by our method were much closer to the real PET images than those of the conventional GAN approaches. More importantly, the PET tumor detection model trained with our synthetic PET images performed competitively with the same model trained with real PET images. In this work, we only evaluated the use of synthetic images to replace the original PET; in our future work, we will investigate novel approaches to optimally combine the real and synthetic images to boost the training data. We suggest that our framework can potentially boost the training data for machine learning algorithms that depend on large PET-CT data collections, and that it can also be extended to support other multi-modal data sets, such as PET-MRI synthesis.

References

1. Nestle, U., et al.: Comparison of different methods for delineation of 18F-FDG PET-positive tissue for target volume definition in radiotherapy of patients with non-small cell lung cancer. J. Nuclear Med. 46, 1342–1348 (2005)

2. Li, H., et al.: A novel PET tumor delineation method based on adaptive region-growing and dual-front active contours. Med. Phys. 35(8), 3711–3721 (2008)

3. Hoh, C.K., et al.: Whole-body FDG-PET imaging for staging of Hodgkin’s disease and lymphoma. J. Nuclear Med. 38(3), 343 (1997)

4. Bi, L., et al.: Automatic detection and classification of regions of FDG uptake in whole-body PET-CT lymphoma studies. Comput. Med. Imaging Graph. (2016). http://dx.doi.org/10.1016/j.compmedimag.2016.11.008. ISSN 0895-6111

5. Doi, K.: Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput. Med. Imaging Graph. 31(4), 198–211 (2007)

6. Gulshan, V., et al.: Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316, 2402–2410 (2016)

7. Kooi, T., et al.: Large scale deep learning for computer aided detection of mammographic lesions. Med. Image Anal. 35, 303–312 (2017)

8. Esteva, A., et al.: Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639), 115–118 (2017)

9. Chen, H., et al.: DCAN: Deep contour-aware networks for object instance segmentation from histology images. Med. Image Anal. 36, 135–146 (2017)

10. Bi, L., et al.: Dermoscopic image segmentation via multi-stage fully convolutional networks. IEEE Trans. Biomed. Eng. 64, 2065–2074 (2017)

11. Mumcuoglu, E.U., et al.: Bayesian reconstruction of PET images: methodology and performance analysis. Phys. Med. Biol. 41, 1777 (1996)

12. Burgos, N., et al.: Attenuation correction synthesis for hybrid PET-MR scanners: application to brain studies. IEEE Trans. Med. Imaging 12, 2332–2341 (2014)

13. Goodfellow, I., et al.: Generative adversarial nets. In: Neural Information Processing Systems (2014)

14. van den Oord, A., et al.: Conditional image generation with PixelCNN decoders. In: Neural Information Processing Systems (2016)

15. Isola, P., et al.: Image-to-image translation with conditional adversarial networks. In: Computer Vision and Pattern Recognition (2017)

16. Shrivastava, A., et al.: Learning from simulated and unsupervised images through adversarial training. arXiv preprint arXiv:1612.07828 (2016)

17. Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). doi:10.1007/978-3-319-24574-4_28

18. Collobert, R., Bengio, S., Mariéthoz, J.: Torch: a modular machine learning software library. No. EPFL-REPORT-82802. Idiap (2002)

19. Gholipour, A., et al.: Robust super-resolution volume reconstruction from slice acquisitions: application to fetal brain MRI. IEEE Trans. Med. Imaging 29(10), 1739–1758 (2010)

20. Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Computer Vision and Pattern Recognition (2015)

21. Bi, L., et al.: Stacked fully convolutional networks with multi-channel learning: application to medical image segmentation. Vis. Comput. 33(6), 1061–1071 (2017)

22. Kamnitsas, K., et al.: Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 36, 61–78 (2017)

23. Song, Y., et al.: A multistage discriminative model for tumor and lymph node detection in thoracic images. IEEE Trans. Med. Imaging 31(5), 1061–1075 (2012)

24. Kim, J., et al.: Use of anatomical priors in the segmentation of PET lung tumor images. In: Nuclear Science Symposium Conference Record, vol. 6, pp. 4242–4245 (2007)

25. Papadimitroulas, P., et al.: Investigation of realistic PET simulations incorporating tumor patient’s specificity using anthropomorphic models: creation of an oncology database. Med. Phys. 40(11), 112506-(1-13) (2013)

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Bi, L., Kim, J., Kumar, A., Feng, D., Fulham, M. (2017). Synthesis of Positron Emission Tomography (PET) Images via Multi-channel Generative Adversarial Networks (GANs). In: Cardoso, M., et al. Molecular Imaging, Reconstruction and Analysis of Moving Body Organs, and Stroke Imaging and Treatment. RAMBO 2017, CMMI 2017, SWITCH 2017. Lecture Notes in Computer Science, vol 10555. Springer, Cham. https://doi.org/10.1007/978-3-319-67564-0_5

DOI: https://doi.org/10.1007/978-3-319-67564-0_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-67563-3

Online ISBN: 978-3-319-67564-0

eBook Packages: Computer Science (R0)