Abstract

UML and OCL are frequently employed languages in model-based engineering. OCL is supported by a variety of design and analysis tools with different scopes, aims and technological cornerstones. The spectrum ranges from formal proof techniques to testing approaches, from validation to verification, and from logic programming and rewriting to SAT-based technology.

This paper presents steps towards a well-founded benchmark for assessing UML and OCL validation and verification techniques. It puts forward a set of UML and OCL models together with particular questions centered around OCL and the notions of consistency, independence, consequences, and reachability. Furthermore, aspects of integer arithmetic and aggregation functions (in the spirit of SQL functions such as COUNT or SUM) are discussed. The paper does not claim to present a complete benchmark. Rather, the intention is to continue developing further UML and OCL models and accompanying questions within the modeling community, with the aim of obtaining a generally accepted benchmark.

1 Introduction

Model-driven engineering (MDE) as a paradigm for software development is gaining more and more importance. Models and model transformations are central notions in modeling languages like UML, SysML, or EMF and transformation languages like QVT or ATL. In these approaches, the Object Constraint Language (OCL) can be employed for expressing constraints and operations and therefore OCL plays a central role in MDE.

A variety of OCL tools and verification/validation/testing techniques around OCL are currently available (e.g. [1, 2, 3, 4, 6, 8, 9, 10, 11, 14, 15, 16, 17, 19, 21, 22, 23]), but it is an open issue how to compare such tools and how to support developers in choosing the OCL tool most appropriate for their project. In many other areas of computer science this comparison is performed by evaluating the tools over a set of standardized benchmarks that provide a reasonably fair comparison environment. Unfortunately, such benchmarks are largely missing for UML and practically nonexistent for OCL.

In this sense, this paper continues the initial proposal of a set of UML/OCL benchmarks [13] and puts forward a couple of complementary benchmark models and a few ideas to encourage the community to participate actively in the creation and acceptance of the benchmark. The two new scenarios focus on integer arithmetic (an area that has a significant effect on tool efficiency, depending on the underlying formalism used in the reasoning tasks) and on large models with heavy use of aggregate functions, a topic for which the OCL language itself has limited coverage [7].

The rest of this paper is structured as follows. The next section gives a short introduction to OCL. Section 3 reviews the initial set of models in our benchmark. Section 4 puts forward additional benchmark models, while Sect. 5 discusses possible actions to further extend the benchmark. Section 6 closes the paper with concluding remarks.

2 OCL in a Nutshell

The Object Constraint Language (OCL) is a textual, descriptive expression language. OCL is side-effect free and is mainly used for phrasing constraints and queries in object-oriented models. Most OCL expressions rely on a class model which is expressed in a graphical modeling language like UML, MOF or EMF. The central concepts in OCL are objects, object navigation, collections, collection operations and boolean-valued expressions, i.e., formulas. Let us consider these concepts in connection with the object diagram in Fig. 1, which belongs to the class diagram in Fig. 2. This class diagram captures part of the submission and reviewing process of conference papers. The class diagram defines classes with attributes (and operations, not used in this example) and associations with roles and multiplicities which restrict the number of possible connected objects.

- Objects::

An OCL expression will often begin with an object literal or an object variable. For the system state represented in the object diagram, one can use the objects ada, bob, cyd of type Researcher and sub_17, sub_18 of type Paper. Furthermore, variables like p:Paper and r:Researcher can be employed.

- Object navigation::

-

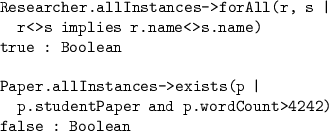

Object navigation is realized by using role names from associations (or object-valued attributes, not occurring in this example) which are applied to objects or object collections. In the example, the following navigation expressions can be stated. The first line(s) shows the OCL expression and the last line the evaluation result and the type of the expression and the result.

- Collections::

Collections can be employed in OCL to merge different elements into a single structure containing the elements. There are four collection kinds: sets, bags, sequences and ordered sets. Sets and ordered sets can contain an element at most once, whereas bags and sequences may contain an element more than once. In sets and bags the element order is insignificant, whereas sequences and ordered sets are sensitive to the element order. For a given class, the operation allInstances yields the set of current objects in the class.

- Collection operations::

There are a number of collection operations which contribute essentially to the expressiveness of OCL and which are applied with the arrow operator. Among other operations, collections can be tested for emptiness (isEmpty, notEmpty), the number of elements can be determined (size), the elements can be filtered (select, reject), elements can be mapped to a different item (collect) or can be sorted (sortedBy), set-theoretic operations may be employed (union, intersection), and collections can be converted into other collection kinds (asSet, asBag, asSequence, asOrderedSet). Above, we have already used the collection operations union, sortedBy, and asOrderedSet.

- Boolean-valued expressions::

Because OCL is a constraint language, boolean expressions which formalize model properties play a central role. Apart from typical boolean connectives (and, or, not, =, implies, xor), universal and existential quantification are available (forAll, exists).

Boolean expressions are frequently used to describe class invariants and operation pre- and postconditions.
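For readers more familiar with a general-purpose language than with OCL, the collection kinds, collection operations and quantifiers described above can be approximated with Python built-ins. This is only a rough sketch: the sample values and all Python names are assumptions made for illustration, a Python list merely stands in for a bag, and the OCL undefined value is not modelled.

```python
# Rough Python analogues of OCL collections; values and names are illustrative assumptions.
bag = [3, 1, 3, 2]                                  # Bag{3, 1, 3, 2}; a list stands in for a bag

as_set = set(bag)                                   # bag->asSet(): duplicates removed
as_sequence = sorted(bag)                           # bag->sortedBy(e | e): ordered, duplicates kept
as_ordered_set = list(dict.fromkeys(as_sequence))   # ...->asOrderedSet(): ordered, no duplicates

selected = [e for e in bag if e > 1]                # bag->select(e | e > 1)
rejected = [e for e in bag if not e > 1]            # bag->reject(e | e > 1)
collected = [e * 10 for e in bag]                   # bag->collect(e | e * 10)
united = as_set | {4}                               # aSet->union(Set{4})

all_positive = all(e > 0 for e in bag)              # bag->forAll(e | e > 0)
some_large = any(e > 2 for e in bag)                # bag->exists(e | e > 2)


def implies(a, b):
    """Two-valued reading of the OCL connective `implies`."""
    return (not a) or b
```

The analogy is deliberately loose: OCL distinguishes the four collection kinds in its type system, whereas the sketch relies on how the Python values are used.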

3 Previous Benchmarks

The previous benchmark posed general questions that concerned the validation and verification of properties in UML and OCL models. The questions came hand in hand with precise models in which the questions were made concrete. Questions were given names in order to reference them. The following questions were stated:

- ConsistentInvariants::

Is the model consistent? Is there at least one object diagram satisfying the UML class model and the explicit OCL invariants?

- Independence::

Are the invariants independent? Is there an invariant which is a consequence of the conditions imposed by the UML class model and the other invariants?

- Consequences::

Is it possible to show that a stated new property is a consequence of the given model?

- LargeState::

Is it possible to automatically build valid object diagrams in a parameterized way with a medium-sized number of objects, e.g. 10 to 30 objects and appropriate links, where all attributes take meaningful values and all links are established in a meaningful way? These larger object diagrams are intended to explain the used model elements (like classes, attributes and associations) and the constraints upon them by non-trivial, meaningful examples to domain experts not necessarily familiar with formal modeling techniques.

- InstantiateNonemptyClass::

Can the model be instantiated with non-empty populations for all classes?

- InstantiateNonemptyAssoc::

Can the model be instantiated with non-empty populations for all classes and all associations?

- InstantiateDisjointInheritance::

Can all classes be populated in the presence of UML generalization constraints like disjoint or complete?

- InstantiateMultipleInheritance::

Can all classes be populated in the presence of multiple inheritance?

- ObjectRepresentsInteger::

Given a representation of the integers in terms of a UML class model where an integer is captured as a connected component in an object diagram, is it true that any connected component of a valid object diagram corresponds either to the term zero, to a term of the form \(succ^n(zero)\) with \(n>0\), or to a term of the form \(pred^n(zero)\)?

- IntegerRepresentsObject::

Is it true that any term of the form zero or of the form \(succ^n(zero)\) or of the form \(pred^n(zero)\) corresponds to a valid object diagram for the model?

The four concrete UML and OCL models that were used to make the questions precise were: CivilStatus (CS) [see Fig. 3], WritesReviews (WR) [see Fig. 2], DisjointSubclasses (DS) [see Fig. 4], and ObjectsAsIntegers (OAI) [see Fig. 5]. All details can be found in [13].
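On very small, bounded state spaces, the questions ConsistentInvariants and Independence can be made concrete by plain enumeration. The sketch below is an illustration under strong assumptions, not a description of how any of the cited tools works: the toy model (two integer attributes x and y), the three invariants and all function names are hypothetical.

```python
from itertools import product


def is_consistent(states, invariants):
    """ConsistentInvariants: does some state satisfy every invariant?"""
    return any(all(inv(s) for inv in invariants.values()) for s in states)


def is_independent(states, invariants, name):
    """Independence: does some state satisfy all other invariants but violate `name`?"""
    others = [inv for n, inv in invariants.items() if n != name]
    target = invariants[name]
    return any(all(inv(s) for inv in others) and not target(s) for s in states)


# Hypothetical toy model: a state is a pair (x, y) with both values in 0..3.
states = list(product(range(4), repeat=2))
invariants = {
    "xPositive": lambda s: s[0] > 0,
    "yAboveX": lambda s: s[1] > s[0],
    "yPositive": lambda s: s[1] > 0,   # follows from the other two invariants
}

consistent = is_consistent(states, invariants)
independence = {name: is_independent(states, invariants, name) for name in invariants}
```

A witness state found this way does show that an invariant is independent, whereas the absence of a witness only holds for the chosen bounds.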

4 Additional Benchmarks

The two new benchmarks described in this section complement the old benchmarks with regard to the use of integer arithmetic and the construction of larger models for which aggregate functions (in the sense of SQL functions such as count or min) are needed.

4.1 Integer Arithmetic

As indicated in Fig. 6, the class diagram for the integer arithmetic benchmark has only one class with three integer attributes a, b, and c. In essence, the benchmark asks for solutions of the equation a = b op c. The operator op is one of the basic OCL integer operators +, -, *, div. Exactly one of the four invariants from the lower left of Fig. 6 will be active.

The benchmark asks for the construction of a number of C objects (in the example exactly 31) in which the respective operator invariant is valid. The other two invariants guarantee that the solutions in the found C objects are mutually distinct solutions, i.e., each solution appears only once.
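To make the task concrete, the following sketch enumerates distinct solutions of a = b op c by brute force over a bounded integer range. It is not the SAT-based search of the USE model validator; the bounds, the function names and the reading of `div` as division truncating toward zero are assumptions made for illustration.

```python
from itertools import product


def ocl_div(b, c):
    """Integer division truncating toward zero (assumed reading of OCL `div`)."""
    quotient = abs(b) // abs(c)
    return quotient if (b >= 0) == (c > 0) else -quotient


OPERATORS = {
    "+": lambda b, c: b + c,
    "-": lambda b, c: b - c,
    "*": lambda b, c: b * c,
    "div": ocl_div,
}


def find_solutions(op, count, lo, hi):
    """Return up to `count` distinct triples (a, b, c) with a = b op c, all in [lo, hi]."""
    solutions = []
    for b, c in product(range(lo, hi + 1), repeat=2):
        if op == "div" and c == 0:
            continue  # division by zero has no defined integer result
        a = OPERATORS[op](b, c)
        if lo <= a <= hi:
            solutions.append((a, b, c))  # distinct (b, c) pairs give distinct triples
            if len(solutions) == count:
                break
    return solutions


# 31 objects as in the example; the range -8..7 is a hypothetical bound.
solutions = find_solutions("*", 31, -8, 7)
```

Because a is determined by b and c, enumerating distinct pairs (b, c) plays the role of the two invariants that enforce mutually distinct solutions.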

We have used this benchmark to compare the efficiency of different SAT solvers that can be employed for the model validator available in the USE tool. The different SAT solvers (SAT4J, LightSAT4J, MiniSat, MiniSatProver) available under Windows show significantly different performance under this benchmark. Another instantiation of the benchmark for available SAT solvers under Linux confirmed the observations made for Windows.

4.2 Larger Model with Aggregation Functions

The second new benchmark handles global invariants restricting many classes and concerns the construction of object diagrams for a State-District-Community world as shown in Fig. 7. States consist of districts, which in turn consist of communities. Individual persons with four statistical attributes (female, young, degree, married) live in communities. The task is to construct an object diagram where in each geographical area (State, District, Community) the statistical distribution of the attributes follows the percentages (Pc) stated in the Config object.

An example object diagram is presented in Fig. 8. There, for example, the Config object requires that in each state, district and community the percentage of young people lies between 25% and 75% (minPcYoung and maxPcYoung). This example object diagram uses 2 states, 3 districts, and 4 communities. The number of Person objects is also stated in the Config object.

The underlying invariants concern the three geographical areas and the four statistical attributes. The invariants also include a fair amount of integer arithmetic in order to restrict the statistical distribution of the attributes correctly. It took the USE model validator about 6 minutes to construct the example object diagram. This benchmark is well suited for comparing the abilities of UML and OCL analysis tools with regard to global constraints, integer arithmetic and the construction of medium-sized object diagrams.
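The kind of aggregate constraint involved can be sketched as a check over a nested population. The example below only verifies a given population and does not construct one; the nested-dictionary representation and all function names are assumptions, while the attribute names and the 25% and 75% bounds are taken from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    female: bool
    young: bool
    degree: bool
    married: bool


def within_percentage(persons, attribute, min_pc, max_pc):
    """Check min_pc% <= share of `attribute` <= max_pc% using integer arithmetic only."""
    total = len(persons)
    count = sum(1 for p in persons if getattr(p, attribute))
    return min_pc * total <= 100 * count <= max_pc * total


def persons_in(area):
    """Collect the persons of a community (list) or of a nested area (dict)."""
    if isinstance(area, list):
        return area
    return [p for sub in area.values() for p in persons_in(sub)]


def all_areas(area):
    """Yield an area and, recursively, all areas nested inside it."""
    yield area
    if isinstance(area, dict):
        for sub in area.values():
            yield from all_areas(sub)


def distribution_ok(states, attribute, min_pc, max_pc):
    """True if every state, district and community respects the percentage bounds."""
    return all(
        within_percentage(persons_in(area), attribute, min_pc, max_pc)
        for state in states.values()
        for area in all_areas(state)
    )


# Hypothetical population: one state, one district, two communities.
young = Person(female=True, young=True, degree=False, married=False)
old = Person(female=False, young=False, degree=True, married=True)
states = {"s1": {"d1": {"c1": [young, old], "c2": [young, old, old]}}}
result = distribution_ok(states, "young", 25, 75)
```

Multiplying both sides by the population size avoids division, which is one way to keep such percentage constraints within integer arithmetic.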

5 Community Roadmap

Completing the benchmark is not something we can do on our own. And we shouldn’t either. The next subsections discuss three different community-driven actions to bring our proposal closer to reality.

5.1 Improving Benchmark Coverage

Our initial collection of benchmark models already covers a good number of interesting OCL expressions and scenarios, but it is far from complete. Generally speaking, an OCL tool faces challenges in two dimensions: (a) challenges related to the expressiveness of OCL (i.e., the complete and accurate handling of OCL) and (b) challenges related to the computational complexity of evaluating OCL for a given problem (verification, testing, code generation, ...).

Therefore, beyond increasing the number of benchmark models, we also require several variations of the same model, e.g. in terms of size and of the specific constructs used in the OCL constraints, to be part of the benchmark and thereby improve its coverage. Each of these variations can in turn be decomposed into a number of relevant subvariations. For instance, with respect to size, we can enlarge a model by adding more classes, adding more attributes per class, increasing its density (number of associations between classes), increasing the number of constraints, or doing all of these at the same time. Some underlying formalisms are more sensitive than others to some of these variations, so a fair evaluation would require varying all of these extension variables.

This could easily lead to a combinatorial explosion. Still, based on our own experience we believe that at least the following scenarios should be added to our current collection of benchmark models:

1. Models with tractable constraints, i.e., constraints that can be solved ‘trivially’ by simple propagation steps.

2. Models with hard, non-tractable constraints, e.g., representations of NP-hard problems.

3. Unsatisfiable models, i.e., models that cannot even be instantiated in a way that satisfies all constraints.

4. Highly symmetric problems, i.e., problems that require symmetry breaking to efficiently detect unsatisfiability.

5. Intensive use of Real arithmetic.

6. Intensive use of String values and operations on strings. So far, String attributes are mostly ignored [5] or simply regarded as integers, which prohibits the verification of OCL expressions including String operations other than equality and inequality.

7. Many redundant constraints: is the approach able to detect the redundancies and benefit from them to speed up the evaluation?

8. Sparse models: instances with comparably few links offer optimization opportunities that could be exploited by tools.

9. Support for recursive operations, e.g. in the form of fixpoint detection or static unfolding.

10. Intensive use of the ‘full’ semantics of OCL (like the undefined value or collection semantics); this poses a challenge for the lifting to two-valued logics.

Recent research developments (e.g. [20]) could be enhanced to deal with OCL expressions and be employed to automatically generate some of these benchmark models, especially variations in size or density given a “seed” model. Nevertheless, making an effort to find and contribute industrial models is still key to making sure that tools face realistic models.
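The combinatorial growth of size variations mentioned above is easy to quantify. The sketch below derives variation descriptors from a seed model by scaling each size dimension independently; the seed figures, the scaling factors and the function name are hypothetical, and the descriptors only state target sizes without generating any model.

```python
from itertools import product

# Hypothetical seed model sizes and scaling factors.
seed = {"classes": 4, "attributes_per_class": 3, "associations": 5, "constraints": 6}
factors = [1, 2, 4]


def size_variations(seed, factors):
    """Yield one size descriptor per combination of scaling factors."""
    dimensions = list(seed)
    for combo in product(factors, repeat=len(dimensions)):
        yield {dim: seed[dim] * factor for dim, factor in zip(dimensions, combo)}


variations = list(size_variations(seed, factors))
print(len(variations))  # 3 ** 4 = 81 variations from a single seed model
```

Even three factors on four dimensions yield 81 variants of one model, which is why a selection of representative scenarios seems preferable to exhaustive coverage.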

5.2 OCL Repository

The easiest way to share and contribute models to a common benchmark is by storing them all in a single repository. This is not a new idea; several initiatives like MDEForge [18] or ReMoDD [12] have been proposed before, but with limited success, mainly due to their ambitious goal: a repository for all kinds of models (and other modeling artifacts) in any format, shape or size.

We aim for a less ambitious but more feasible goal: a repository for OCL-focused models. Since OCL is a textual language, the standard infrastructure of code hosting services and version control systems can be largely reused. We still need to store the models accompanying those OCL expressions but, in our scenario, they are basically only UML models and mostly limited to class diagrams, which greatly simplifies their management.

Nowadays, the online coding platform GitHub (with over 30 million hosted projects) is the only reasonable choice to host such a repository, since it offers all the functionality we need and is very well known, which lowers the entry barrier for potential contributors, who are not forced to invest time in learning a new environment. Therefore, we have initialized our OCL repository there and added some basic instructions on how to contribute new UML/OCL models.

5.3 OCL Competitions

Competition is in our blood. Therefore, one way to increase awareness of the benchmark is to organize yearly competitions of OCL tools where tool vendors evaluate their tools against each other by executing them on the same set of benchmark models.

This format is very successful in the SAT community, where winning a competition is considered a very prestigious achievement for a SAT solver and therefore something that vendors and researchers strive for. In the MDE community we have the successful example of the Transformation Tool Contest, which focuses on comparing the expressiveness, usability and performance of transformation tools to get a deeper understanding of their relative merits and to identify open problems. We propose to replicate these successes in the OCL community.

Typically, competitions are organized in different tracks depending on the properties we want to measure and, more importantly, include an initial call for problems/case studies to use in the competition itself. These proposals are perfect candidates to extend our benchmark.

6 Conclusions

This paper emphasizes the increasing need for a reliable set of OCL benchmarks that can be used to consistently evaluate and compare OCL tools. We believe such benchmarks would encourage the development of new OCL tools, which would then have a way to evaluate their progress, to contrast it with more established tools, and to distinguish themselves by focusing on those aspects where others may be failing. Such benchmarks would also increase the user base of OCL and similar languages, since users would be more confident in the tools’ capabilities.

This is still work in progress, and thus we have also outlined how the community as a whole should (and could) push these ideas forward by, for instance, contributing to a common repository or organizing and participating in specific events on this topic. We hope to see these actions taking place in the near future.

References

1. Anastasakis, K., Bordbar, B., Georg, G., Ray, I.: On challenges of model transformation from UML to Alloy. Softw. Syst. Model. 9(1), 69–86 (2010)

2. Beckert, B., Giese, M., Hähnle, R., Klebanov, V., Rümmer, P., Schlager, S., Schmitt, P.H.: The KeY system 1.0 (Deduction Component). In: Pfenning, F. (ed.) CADE 2007. LNCS (LNAI), vol. 4603, pp. 379–384. Springer, Heidelberg (2007). doi:10.1007/978-3-540-73595-3_26

3. Boronat, A., Meseguer, J.: Algebraic semantics of OCL-constrained metamodel specifications. In: Oriol, M., Meyer, B. (eds.) TOOLS EUROPE 2009. LNBIP, vol. 33, pp. 96–115. Springer, Heidelberg (2009). doi:10.1007/978-3-642-02571-6_7

4. Brucker, A.D., Wolff, B.: HOL-OCL: a formal proof environment for UML/OCL. In: Fiadeiro, J.L., Inverardi, P. (eds.) FASE 2008. LNCS, vol. 4961, pp. 97–100. Springer, Heidelberg (2008). doi:10.1007/978-3-540-78743-3_8

5. Büttner, F., Cabot, J.: Lightweight string reasoning for OCL. In: Vallecillo, A., Tolvanen, J.-P., Kindler, E., Störrle, H., Kolovos, D. (eds.) ECMFA 2012. LNCS, vol. 7349, pp. 244–258. Springer, Heidelberg (2012). doi:10.1007/978-3-642-31491-9_19

6. Cabot, J., Clarisó, R., Riera, D.: UMLtoCSP: a tool for the formal verification of UML/OCL models using constraint programming. In: Stirewalt, R.E.K., Egyed, A., Fischer, B. (eds.) ASE, pp. 547–548. ACM (2007)

7. Cabot, J., Mazón, J.-N., Pardillo, J., Trujillo, J.: Specifying aggregation functions in multidimensional models with OCL. In: Parsons, J., Saeki, M., Shoval, P., Woo, C., Wand, Y. (eds.) ER 2010. LNCS, vol. 6412, pp. 419–432. Springer, Heidelberg (2010). doi:10.1007/978-3-642-16373-9_30

8. Calì, A., Gottlob, G., Orsi, G., Pieris, A.: Querying UML class diagrams. In: Birkedal, L. (ed.) FoSSaCS 2012. LNCS, vol. 7213, pp. 1–25. Springer, Heidelberg (2012). doi:10.1007/978-3-642-28729-9_1

9. Castillos, K.C., Dadeau, F., Julliand, J., Taha, S.: Measuring test properties coverage for evaluating UML/OCL model-based tests. In: Wolff, B., Zaïdi, F. (eds.) ICTSS 2011. LNCS, vol. 7019, pp. 32–47. Springer, Heidelberg (2011). doi:10.1007/978-3-642-24580-0_4

10. Chimiak-Opoka, J.D., Demuth, B.: A Feature Model for an IDE4OCL. ECEASST 36 (2010)

11. Clavel, M., Egea, M., de Dios, M.A.G.: Checking unsatisfiability for OCL constraints. Electron. Commun. EASST 24, 1–13 (2009)

12. France, R.B., Bieman, J.M., Mandalaparty, S.P., Cheng, B.H.C., Jensen, A.C.: Repository for model driven development (ReMoDD). In: 34th International Conference on Software Engineering, ICSE 2012, pp. 1471–1472 (2012)

13. Gogolla, M., Büttner, F., Cabot, J.: Initiating a benchmark for UML and OCL analysis tools. In: Veanes, M., Viganò, L. (eds.) TAP 2013. LNCS, vol. 7942, pp. 115–132. Springer, Heidelberg (2013). doi:10.1007/978-3-642-38916-0_7

14. Gogolla, M., Büttner, F., Richters, M.: USE: a UML-based specification environment for validating UML and OCL. Sci. Comput. Program. 69, 27–34 (2007)

15. Gonzalez, C.A., Büttner, F., Clariso, R., Cabot, J.: EMFtoCSP: a tool for the lightweight verification of EMF models. In: Gnesi, S., Gruner, S., Plat, N., Rumpe, B. (eds.) Proceedings of ICSE 2012 Workshop Formal Methods in Software Engineering: Rigorous and Agile Approaches (FormSERA) (2012)

16. Hußmann, H., Demuth, B., Finger, F.: Modular architecture for a toolset supporting OCL. Sci. Comput. Program. 44(1), 51–69 (2002)

17. Queralt, A., Artale, A., Calvanese, D., Teniente, E.: OCL-lite: finite reasoning on UML/OCL conceptual schemas. Data Knowl. Eng. 73, 1–22 (2012)

18. Rocco, J.D., Ruscio, D.D., Iovino, L., Pierantonio, A.: Collaborative repositories in model-driven engineering. IEEE Softw. 32(3), 28–34 (2015)

19. Roldán, M., Durán, F.: Dynamic Validation of OCL Constraints with mOdCL. ECEASST 44 (2011)

20. Semeráth, O., Vörös, A., Varró, D.: Iterative and incremental model generation by logic solvers. In: Stevens, P., Wąsowski, A. (eds.) FASE 2016. LNCS, vol. 9633, pp. 87–103. Springer, Heidelberg (2016). doi:10.1007/978-3-662-49665-7_6

21. Wille, R., Soeken, M., Drechsler, R.: Debugging of inconsistent UML/OCL models. In: Rosenstiel, W., Thiele, L. (eds.) DATE, pp. 1078–1083. IEEE (2012)

22. Willink, E.D.: Re-engineering eclipse MDT/OCL for Xtext. ECEASST 36 (2010)

23. Yatake, K., Aoki, T.: SMT-based enumeration of object graphs from UML class diagrams. ACM SIGSOFT Softw. Eng. Notes 37(4), 1–8 (2012)

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Gogolla, M., Cabot, J. (2016). Continuing a Benchmark for UML and OCL Design and Analysis Tools. In: Milazzo, P., Varró, D., Wimmer, M. (eds) Software Technologies: Applications and Foundations. STAF 2016. Lecture Notes in Computer Science(), vol 9946. Springer, Cham. https://doi.org/10.1007/978-3-319-50230-4_22

DOI: https://doi.org/10.1007/978-3-319-50230-4_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-50229-8

Online ISBN: 978-3-319-50230-4

eBook Packages: Computer Science, Computer Science (R0)