Abstract

Personalization and adaptivity can promote motivated usage, increased user acceptance, and user identification in serious games. This applies to heterogeneous user groups in particular, since they can benefit from customized experiences that respond to the individual traits of the players. In the context of games, adaptivity describes the automatic adaptation of game elements, i.e., of content, user interfaces, game mechanics, game difficulty, etc., to customize or personalize the interactive experience. Adaptation processes follow an adaptive cycle, adapting a deployed system to the needs of its users. They can work with various techniques ranging from simple threshold-based parameter adjustment heuristics to complex evolving user models that are continuously updated over time. This chapter provides readers with an understanding of the motivation behind using adaptive techniques in serious games and presents the core challenges around designing and implementing such systems. Examples of how adaptability and adaptivity may be put into practice in specific application scenarios, such as motion-based games for health or personalized learning games, are presented to illustrate approaches to the aforementioned challenges. We close with a discussion of the major open questions and avenues for future work.




1 Introduction

Serious games can become tiresome for users when activities are repetitive or redundant, or when the challenge the game presents is poorly balanced against the players' skill level. An example from educational games is a player who already has solid knowledge of a topic, e.g., relativity theory, yet still has to complete an entire introductory level on it. This could be strenuous for the user and could lead to impatience with the game. An adaptive game, however, can react to the player's individual prior experience or background by offering context-adaptive modifications, e.g., in the present example, a shortcut to skip the introductory level.

Personalized and adaptive serious games offer great opportunities for a wide range of application areas, since they can promote motivated usage, user acceptance, and user identification within and outside of the games. Potential application areas range from general learning games to games for health, training, and games for specific target groups such as children with dyslexia or people with Parkinson’s disease. Examples of motion-based serious games for health are so-called exergames, such as ErgoActive and BalanceFit for prevention and rehabilitation [38]. Their promise lies in motivating players to perform exercises that might otherwise be perceived as dull, repetitive, or strenuous. However, since physical and gaming abilities vary notably among players, especially within heterogeneous target groups such as those mentioned above, providing a personalized experience is crucial to achieving the targeted positive outcomes.

Personalized learning games, as a further example, could offer all learners in a heterogeneous user group the possibility to make progress in a motivating and rewarding manner, and offer better chances and a more equal basis for individual preparation towards standardized learning outcomes, such as passing an exam. The following often-cited anonymous quote (see Footnote 1), which underlines the need for qualified individualized assessment in heterogeneous groups, is in line with the basic promise of adaptive e-learning:

Everybody is a genius. But if you judge a fish by its ability to climb a tree, it will live its whole life believing that it is stupid. (Anonymous (see Footnote 1))

In predefined, static (software) systems, an extensive adaptation to individual user potentials and needs is typically not possible, because these systems can only act within their predefined limits. Therefore, when talking about personalized and adaptive games, one often thinks of games which actually can adapt - or be adapted - beyond a limited set of predefined settings through an intelligently acting engine. At this point, reference is often made to intelligent games and Artificial Intelligence (AI). However, despite remarkable progress in AI in recent years, as evidenced by projects such as the Human Brain Project [57] and prominent advances in deep learning with neural networks [53], adding adaptivity or personalization features to serious games in a fully automated manner (e.g., automatically producing adequate content for an individual player of a learning game without employing predefined manually selected sets or parametrized collections) is not yet easily feasible.

The building blocks of modern artificial intelligence, such as machine learning and data mining techniques, are, however, beginning to play an important role in the development of adaptive and personalized serious games, since the digital nature of serious games allows for recording and analyzing usage data. The possibility to monitor interactions is the foundation of new research fields like game analytics or, in the educational context, learning analytics and educational data mining. Furthermore, such data could be used to synthesize objective information about the state and progress of a user regarding the serious aim of a game (e.g., progress on English vocabulary while exercising).
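
To make the idea of turning recorded usage data into progress information more concrete, the following Python sketch aggregates logged answer events into per-item success rates, in the spirit of the vocabulary example above. The `AnswerEvent` schema and the `summarize_progress` function are hypothetical; real game analytics or learning analytics pipelines record far richer data and use more robust estimators than raw success rates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AnswerEvent:
    """One logged interaction (hypothetical schema)."""
    player_id: str
    item: str      # e.g., a vocabulary word
    correct: bool


def summarize_progress(events):
    """Return {player_id: {item: success_rate}} computed from raw answer events."""
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [correct, total]
    for event in events:
        tally = tallies[event.player_id][event.item]
        tally[0] += int(event.correct)
        tally[1] += 1
    return {
        player: {item: correct / total for item, (correct, total) in items.items()}
        for player, items in tallies.items()
    }


log = [
    AnswerEvent("p1", "apple", True),
    AnswerEvent("p1", "apple", False),
    AnswerEvent("p1", "house", True),
]
print(summarize_progress(log))  # {'p1': {'apple': 0.5, 'house': 1.0}}
```

Raw success rates ignore effects such as guessing or forgetting, so they are only a first step towards the user models discussed in this chapter.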

In order to allow these potential benefits to unfold, players must remain engaged over prolonged periods of time. This requires adequate game user experience design that matches the player type, play style, and preferences of as many users as possible. In addition to this requirement, which also applies to general game development, serious games must ensure the success of the desired serious outcome, which requires further careful balancing. In many cases, user-centered iterative design and a small number of predefined difficulty modes for manual adaptation (such as “easy”, “medium” and “hard”) do not provide enough flexibility. Additional manual settings can be made available via settings interfaces to allow for a more personalized experience. However, players do not always want to interact with manual settings, and such settings may interfere with the player experience by, e.g., breaking the magic circle [46]. Automatic adaptivity can reduce the potential negative impact of extensive manual adaptability options by automatically tuning games to optimize and personalize game experiences as well as to personalize interactions regarding the serious outcome.

For instance, digital game-based learning systems could utilize the learners’ intrinsic motivation for interaction and learning to keep them motivated and ultimately increase the learning outcome. Dynamic adaptive systems can help achieve these goals by adapting educational games to the knowledge level, skill, and experience of the users. Techniques for personalization can adjust the content and interaction schemes of virtual environments to make them more attractive to the users. Digital game-based learning systems must consider the heterogeneity of the users and their varying knowledge levels, cultural backgrounds, usage surroundings, skills, etc. In a well-defined and controlled environment, the interaction schemes could be very homogeneous and modeled in a deterministic fashion. For learning games this is usually not the case, because everyone tends to learn differently. In the real world, one-to-one tutoring or well-guided group learning that respects the heterogeneous properties of groups of learners and individual learners can arguably produce the best educational outcomes. Thus, aiming to replicate the customization and personalization present in these prototypical scenarios in the development of serious games appears a reasonable pursuit. Moving towards that goal, however, requires advanced adaptable and adaptive techniques, since the optimal setup cannot be determined prior to situated use.

One problem in the case of adaptive digital game-based learning systems is that current systems offer few or no concepts for adapting educational techniques and content to the learners’ needs. Didactic adaptivity requires didactic models based on learning theories, e.g., behaviorism, constructivism, cognitivism, etc. [96]. Such models have already been created for Intelligent Tutoring Systems (ITS) [96]. Ideally, these models would be designed in a generic, interoperable way to allow for transfer to other ITS. Transferring mature ITS models to virtual environments seems to be the next logical step towards adaptive game systems [40, 92].

However, the development of such adaptive, or partially (automatically) adaptive and partially (manually) adaptable systems is not trivial, as the approach comes with a broad range of challenges. In the remainder of this chapter, we provide a more detailed overview of the general objectives of adaptivity based on initial terms and definitions, together with a discussion of the main challenges. These challenges include, but are not limited to, cold-start problems and co-adaptation. General approaches to implementation as well as two implementation scenarios (game-based learning and games for health) are presented to provide a structured discussion of specific implementation challenges. We close with an overview of the most pressing open research questions and indicate directions for future work.

1.1 Chapter Overview

This chapter is structured as follows.

-

Introduction. The introduction (Sect. 1) gives a short overview of basic adaptivity principles and defines the scope and objectives. Furthermore, to delimit the scope of dynamic artificial adaptivity, the introduction also includes some remarks on the broadly used term Artificial Intelligence (AI).

-

State-of-the-Art. Section 2 on the state-of-the-art provides the reader with starting points on the topic in general as well as with references to distinct work and current research topics.

-

Adaptation. Section 3 introduces the general principles of adaptation towards personalized and adaptive serious games. This includes a clarification of the terms personalization, customization, adaptation, and adaptivity. We then differentiate between the concepts behind each of these terms and align them with the general topic of this chapter.

-

Games for Health. A manifestation of the adaptation concepts is provided along the example of adaptive motion-based games for health (Sect. 4).

-

Application Examples. Subsequently, applications of some of the presented methods, principles, and techniques are presented in exemplary use cases from learning games and games for health (Sect. 5).

-

Challenges. Personalization and adaptivity in games is a young research area that is difficult to aptly define due to the large number of involved disciplines. Accordingly, the sections on technical challenges (Sect. 6) and research questions (Sect. 7) mark directions for future research.

-

Conclusion. This chapter finishes with a conclusion (Sect. 8) and gives recommendations for further reading (Sect. “Further Reading”).

1.2 Scope of this Chapter

This chapter aims to provide readers with an initial understanding of the motivation for using adaptive techniques in serious games and of the core challenges that designing and implementing such systems entails. Examples illustrate how adaptability and adaptivity may be realized in more specific application scenarios, and major open challenges and paths for future work are presented. Dynamic adaptivity involves multiple disciplines, ranging from initial game design, through software design and engineering, artificial intelligence, and modeling, to aspects of game evaluation. Because of this multiplicity and interdisciplinarity, only a subset of the disciplines involved can be presented here. More details can be found in the remaining chapters of this book and in the recommended literature (cf. Sect. “Further Reading”).

1.3 Remarks on AI

The term Artificial Intelligence (AI) [76] is widely used, but interpretations of what exactly artificial intelligence means differ considerably. In the gaming industry, AI has become a popular marketing term stretched to encompass anything from simple if-then-else rules to advanced self-learning models. Nevertheless, AI techniques are commonly used in computer simulations and games; typical examples are pathfinding and planning [59, 76]. Behavior trees have become very popular for modeling the behavior of Non-Player Characters (NPCs), as seen in the high-profile video games Halo 2, BioShock, and Spore. In serious games, however, the goal is to facilitate gameplay that optimally assists users in their endeavors for training, learning, etc., and AI offers promising approaches to this end. Regarding NPC behavior, for example, AI-driven NPCs could be designed to produce more human-like behavior, either cooperative or competitive, with the goal of allowing players to relate more naturally to these artificial agents. In an educational context, this could mean that the program attempts to mimic a tutor to provide individualized learning assistance. The same is true for serious games in general, where intelligent, artificial, cooperative players (e.g., NPCs) mimic supporting peers to achieve a common task. Of course, this is still an emerging field of research. Truly human-like behavior in games falls under the umbrella of Strong AI or Artificial General Intelligence (AGI). Strong AI aims at establishing systems whose behavior cannot be distinguished from that of humans, i.e., perfect mimicry in the sense of passing the Turing test [76]. “Intelligent” NPCs, which could directly embody the role that a human tutor plays outside of serious games, can occur in a wide variety of serious games, and they can benefit heavily from AI techniques [40, 45, 87, 96].
However, numerous other elements of serious games (e.g., training intensity, prediction of difficulty parameters, complexity of a given task, etc.) could arguably also benefit from the wide array of AI techniques. This does not necessarily encompass the full range of AGI techniques, but could include advanced AI techniques which are less symbolic and complex, such as automatically learned probabilistic models (e.g., dynamic Bayesian networks) or natural language understanding (as an extension of basic natural language processing) [76]. Since related work is often vague in describing the role and extent of the AI techniques used to implement adaptivity and personalization in serious games, future work should direct effort towards (1) applying modern AI or AGI technologies like cognitive architectures [54] in the context of personalized adaptive serious games; (2) being very specific about the term AI; and (3) clearly stating which technologies are implemented and whether they reasonably match the understanding of intelligent behavior.

2 State of the Art

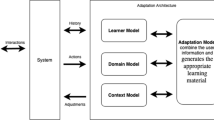

Adaptivity and personalization have long been an active topic for applications that target heterogeneous user groups. With the advent of the World Wide Web, Brusilovsky laid the foundation for adaptive and user model-based interfaces in his work on adaptive hypermedia [16]. He described how adaptive hypermedia systems can build a model of the user and apply it to adapt to that user. Adaptation could be the personalization of content to the user’s knowledge and goals, or the recommendation of links to other hypermedia pages that promise to be most relevant to the user [16]. This concept has been driven forward ever since, for instance in the development of adaptive e-learning systems, so-called Intelligent Tutoring Systems (ITS) [96]. It is a logical step to transfer the established models and principles from ITS to serious games. Lopes and Bidarra (2011) give a thorough overview of techniques for adaptivity in games and simulations [55]. They surveyed the research on adaptivity in general and discussed the main challenges, concluding that, among other methods, procedural content generation and semantic modeling were promising research directions [55].

In a broader view, adaptivity in entertainment games is often masked behind the term Artificial Intelligence (AI). However, the term AI is often overstretched as a pure marketing term, as noted above (cf. Sect. 1.3). Nevertheless, entertainment games and serious games have at least one goal in common: to motivate the user to play the game in a given session, and to keep playing it over prolonged periods. AI has always played a role in games [17], and listing all AI techniques that have been used in games is far beyond the scope of this chapter (cf. Sect. “Further Reading”). In 2007, the webpage aigamedev.com by A. Champandard listed prominent examples of influential AI in games. These include (1) SimCity as an example of complex simulations; (2) The Sims as an example of emotional modeling; (3) Creatures, showing the first application of machine learning in games; (4) Halo for intelligent enemy behavior with behavior trees; (5) F.E.A.R. for an implementation of AI planning for context-sensitive behavior; and (6) the strategy game Black & White, which uses Belief-Desire-Intention (BDI) modeling inspired by cognitive science research as well as machine learning techniques like decision trees and neural networks. Another game AI example is the cooperative first-person shooter Left 4 Dead, whose game intensity is adapted following psychological models to create tension and surprising moments. Other titles employ heuristics to optimize the difficulty settings for individual players, for instance the survival horror game Resident Evil 5, where the player's manual difficulty choice determines a sub-range of a more fine-grained difficulty scale. The exact setting on this subscale, which affects multiple aspects of game difficulty, is dynamic and based on player performance.
Such techniques are most commonly referred to as dynamic difficulty adjustment or dynamic game difficulty balancing.
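
The combination of a manual choice and a dynamic subscale described above can be sketched as follows. This is a minimal illustration under assumed values: the internal scale from 1 to 10, the sub-range boundaries in `SUB_RANGES`, and the performance thresholds are hypothetical and do not reflect how Resident Evil 5 is actually implemented.

```python
# Hypothetical mapping from a manual difficulty choice to a sub-range of a
# finer-grained internal difficulty scale (1 = easiest, 10 = hardest).
# These numbers are illustrative only, not taken from any actual game.
SUB_RANGES = {"easy": (1, 4), "normal": (3, 7), "hard": (6, 10)}


def adjust_level(level, choice, performance, low=0.3, high=0.7):
    """Move the internal level one step based on a performance score in [0, 1],
    but never leave the sub-range selected manually by the player."""
    lo, hi = SUB_RANGES[choice]
    if performance > high:
        level += 1  # player is doing well: make it harder
    elif performance < low:
        level -= 1  # player is struggling: make it easier
    return max(lo, min(hi, level))


level = 3  # start at the bottom of the "normal" sub-range
for score in [0.9, 0.8, 0.9, 0.95, 0.9]:
    level = adjust_level(level, "normal", score)
print(level)  # 7: capped at the top of the "normal" sub-range
```

Bounding the dynamic adjustment by the manual choice keeps the player in control of the overall difficulty band while still letting the game fine-tune within it.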

Dynamic Difficulty Adjustment (DDA) is the automatic adaptation of the difficulty level to the current level of the user, based on predefined general parameter ranges or according to a user model. DDA is predominantly used in entertainment games to increase the difficulty of the game along with the increasing capabilities of the player. A commonly used manifestation of DDA is rubber banding [69], which artificially boosts the options available to players (expressed in game resources) to increase their performance when their actual performance drops below a certain threshold. Rubber banding is often used in racing games, a popular example being Nintendo’s Mario Kart. In the case of Mario Kart, DDA has arguably helped make the game very inviting for novice players, but the very visible boosts provided to trailing players and NPCs can harm the experience of advanced players, since weaker players receive advantages that may be perceived as unfair [35].
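
A rubber-banding rule of the kind described above can be expressed in a few lines. The sketch below assumes that performance in a race is measured as the distance behind the leader, so "performance drops below a threshold" becomes "the gap exceeds a threshold"; the `threshold`, `gain`, and `max_boost` values are hypothetical tuning parameters, not values from Mario Kart or any other game.

```python
def rubber_band_boost(distance_to_leader, threshold=50.0, gain=0.002, max_boost=0.25):
    """Return a speed multiplier for a trailing racer (hypothetical parameters).

    No boost is applied while the racer is within `threshold` distance units of
    the leader; beyond that, the boost grows linearly with the gap and is capped.
    """
    excess = distance_to_leader - threshold
    if excess <= 0:
        return 1.0
    return 1.0 + min(max_boost, gain * excess)


for gap in [0.0, 100.0, 500.0]:
    print(f"gap {gap:>5}: speed x{rubber_band_boost(gap):.2f}")
```

The cap (`max_boost`) is one simple lever against the fairness problem mentioned above, since it limits how visible the advantage for trailing players can become.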

DDA has also been implemented in the context of learning games. For game-based intelligent tutoring systems, DDA has been employed by Howell and Veale (2006) [45]. Their game-based ITS for learning linguistic abilities was designed to attempt to keep learners immersed in the game, in a state of flow, by dynamically adjusting the difficulty level, while at the same time respecting educational constraints to achieve learning targets. This balancing of serious games has also been studied by Kickmeier-Rust and Albert (2012) in their work on adaptive educational games [52]. Their report emphasizes the importance of creating an “educational game AI” which dynamically balances educational serious games to “achieve superior gaming experience and educational gains” [52].

Further examples of adaptive game balancing have been demonstrated in the EU research projects ELEKTRA and 80 Days [37, 71]. ELEKTRA focuses on assessment and adaptation in a 3D adventure game: NPCs give educational and motivational guidance by providing students with situation-adaptive problem-solving support [51]. The goal of the project was to utilize the advantages of computer games and their design principles to achieve adaptive educational games. People from various disciplines worked together, including pedagogy, cognitive science, neuroscience, and computer science. The project developed a methodology for designing educational games, which was applied to a 3D adventure game demonstrator teaching physics, more precisely optics. This methodology led to the development of an adaptive engine [71] which uses the outcomes of evaluating the learner’s performance based on Bloom’s taxonomy [15]. The results are stored in a learner model that reflects the learners' skill levels. A further example of adaptive guidance is the Prime Climb game by Conati and Maske (2009) [20]. Their study showed the educational effectiveness of a pedagogical virtual agent, embodied as a magician NPC in the game world, in a mathematics learning game.
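
The idea of a learner model that stores assessment outcomes classified according to Bloom's taxonomy can be illustrated with a small data structure. The following Python sketch is hypothetical and is not the ELEKTRA adaptive engine: it simply records, per skill, the highest level of Bloom's original cognitive taxonomy (knowledge through evaluation) at which the learner has passed an assessment, and suggests the next level to target. The class name, method names, and skill names are assumptions.

```python
BLOOM_LEVELS = ["knowledge", "comprehension", "application",
                "analysis", "synthesis", "evaluation"]


class LearnerModel:
    """Per skill, stores the highest Bloom level at which an assessment was passed."""

    def __init__(self):
        self.levels = {}  # skill -> index into BLOOM_LEVELS

    def record(self, skill, level, passed):
        # Only passed assessments raise the stored level; failures leave it unchanged.
        if passed:
            index = BLOOM_LEVELS.index(level)
            self.levels[skill] = max(self.levels.get(skill, -1), index)

    def next_target(self, skill):
        """Suggest the Bloom level that the next task for this skill should address."""
        index = self.levels.get(skill, -1) + 1
        return BLOOM_LEVELS[min(index, len(BLOOM_LEVELS) - 1)]


model = LearnerModel()
model.record("reflection", "knowledge", passed=True)
model.record("reflection", "application", passed=False)
print(model.next_target("reflection"))  # comprehension
```

Storing only the highest passed level keeps the model trivial to inspect; a real engine would likely also track uncertainty and forgetting.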

In the 80 Days project, concepts for adaptive, interactive storytelling were developed to teach students geography. Göbel et al. (2010) introduce the concept of Narrative Game-based Learning Objects (NGLOBs) to dynamically adjust narratives for adventure-like, story-based educational games, and demonstrate an implementation of adaptive, interactive storytelling with NGLOBs in 80 Days [39]. Another popular example of an interactive story is the digital interactive fiction game Façade by Mateas and Stern (2005) [58]. Façade uses Natural Language Processing (NLP) to allow the player to interact naturally with a couple who have invited the player to their home for a cocktail party. The combination of an NLP interface and a broad spectrum of possible story outcomes positively influences the game experience and immersion. Whereas the technical aspects of the game have been received well, the drama aspects have received mixed reviews [61].

An often cited example for an effective serious game is the DARPA-funded Tactical Language and Cultural Training System (TLCTS) [50] for the US military. However, as TLCTS is a combination of an adaptive ITS component for skill development (the Skill Builder) and two games, only the ITS component shows adaptive behavior [50].

The dynamic adjustment of game mechanics or content is another field of research. Niehaus and Riedl (2009) present an approach to customize scenarios by dynamically inserting or removing events from a scenario that relate to learning objectives [63].
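
A strongly simplified version of customizing a scenario by removing and inserting events tied to learning objectives is sketched below. It is an illustration under assumptions, not the method of Niehaus and Riedl: events are plain records tagged with one learning objective (all names are hypothetical), events for mastered objectives are dropped, and one event from a library is added for each unmastered objective that the scenario does not yet cover.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    objective: str  # learning objective this scenario event trains


def customize_scenario(scenario, event_library, mastered):
    """Drop events whose objective is already mastered and append one library
    event for every unmastered objective the scenario does not yet cover."""
    kept = [e for e in scenario if e.objective not in mastered]
    covered = {e.objective for e in kept}
    for e in event_library:
        if e.objective not in mastered and e.objective not in covered:
            kept.append(e)
            covered.add(e.objective)
    return kept


scenario = [Event("triage_drill", "triage"), Event("radio_check", "communication")]
library = [Event("bandage_task", "first_aid"), Event("radio_relay", "communication")]
result = customize_scenario(scenario, library, mastered={"communication"})
print([e.name for e in result])  # ['triage_drill', 'bandage_task']
```

Appending inserted events at the end ignores narrative ordering and causal dependencies between events, which a real scenario adaptation system would have to respect.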

A major problem arises when the player first starts a game and the system has no information on the user yet. A commonly used approach for the initial phase of adaptation is stereotyping or classification [96]. At the beginning, the system utilizes questionnaires, or very early player performance observations, to classify the knowledge or skill level of the users. Based on the results the system can map the learner to predefined class-stereotypes, like “beginner”, “intermediate” or “expert”. This form of personalization is mostly applied to static games with predefined learning pathways for each stereotype and does not consider adaptation based on current learning contexts. An example for stereotyping in serious games is S.C.R.U.B. which aims at teaching microbiology concepts to university students [56].
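
The stereotyping approach described above reduces to a classification step followed by a lookup of a predefined pathway. The following sketch assumes a pre-game questionnaire that yields a numeric score; the `thresholds` and the level names in the hypothetical `PATHWAYS` table are illustrative assumptions.

```python
def classify_stereotype(questionnaire_score, max_score, thresholds=(0.4, 0.75)):
    """Map a pre-game questionnaire result to a predefined learner stereotype."""
    ratio = questionnaire_score / max_score
    if ratio < thresholds[0]:
        return "beginner"
    if ratio < thresholds[1]:
        return "intermediate"
    return "expert"


# Each stereotype is bound to a predefined, static learning pathway (hypothetical levels).
PATHWAYS = {
    "beginner": ["intro", "basics", "practice"],
    "intermediate": ["basics", "practice", "challenge"],
    "expert": ["challenge", "boss"],
}

profile = classify_stereotype(questionnaire_score=8, max_score=10)
print(profile, PATHWAYS[profile])  # expert ['challenge', 'boss']
```

Because each stereotype is bound to a fixed pathway, the learner's later behavior does not change the sequence, which is exactly the limitation regarding current learning contexts noted above.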

On the technical side, various software architectures and middlewares support the development of adaptive serious games [21, 66, 67, 71]. The ALIGN system architecture by Peirce et al. (2008) presents a way to introduce adaptivity to games non-invasively [71]. It has been applied in the educational adventure game of the ELEKTRA project. The ALIGN system architecture can decouple the adaptation logic from the actual game without compromising the gameplay. It is divided into four conceptual processes: the accumulation of context information about the game state; the interpretation of the current learner state; the search for matching intervention constraints; and a recommendation engine, which applies the adaptation rules to the game. Further architecture examples are TENA [66], a distributed architecture for testing, training, and simulation in the military domain, and the CIGA middleware for distributed intelligent virtual agents [67]. The extensive software architecture of TENA focuses on interoperability for military test and training systems. The U.S. Department of Defense (DoD) uses TENA for distributed testing and training. At its core, the TENA middleware interconnects various applications and tools for the management, monitoring, analysis, etc., of military assets. Via a gateway service, they can be linked with other conformant simulators that provide real sensor data or data from live, virtual, and constructive (LVC) simulations for the TENA environment. The CIGA middleware [67] is an architecture for connecting multi-agent systems to game engines using ontologies as a design contract. It mediates between the game engine on the physical layer and the multi-agent system on the cognitive layer [67].
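
The four conceptual processes of the ALIGN architecture can be mirrored in a small Python class to show how the adaptation logic stays decoupled from the game: the game only pushes events in and receives recommendations back. This is a hypothetical sketch, not the ALIGN implementation; the event format, the learner-state features, and the `Rule` representation are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Rule:
    """Intervention constraint: a condition on the learner state and the adaptation it recommends."""
    condition: Callable[[dict], bool]
    intervention: str


@dataclass
class AdaptationEngine:
    rules: list
    context: list = field(default_factory=list)

    def accumulate(self, game_event):
        # (1) Accumulate context information about the game state.
        self.context.append(game_event)

    def interpret(self):
        # (2) Interpret the current learner state from the accumulated context.
        attempts = [e for e in self.context if e["type"] == "attempt"]
        errors = sum(1 for e in attempts if not e["success"])
        rate = (len(attempts) - errors) / len(attempts) if attempts else None
        return {"errors": errors, "success_rate": rate}

    def match(self, state):
        # (3) Search for intervention constraints that match the learner state.
        return [rule for rule in self.rules if rule.condition(state)]

    def recommend(self):
        # (4) Recommend adaptations, which the game then applies.
        return [rule.intervention for rule in self.match(self.interpret())]


engine = AdaptationEngine(rules=[
    Rule(lambda s: s["errors"] >= 3, "show_hint"),
    Rule(lambda s: s["success_rate"] is not None and s["success_rate"] > 0.9,
         "raise_difficulty"),
])
for success in [False, False, True, False]:
    engine.accumulate({"type": "attempt", "success": success})
print(engine.recommend())  # ['show_hint']
```

Keeping rules as data rather than game code means designers can change adaptation behavior without touching the game itself, which is the point of the non-invasive approach.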

Most game engines come with built-in AI procedures for common problems such as pathfinding and NPC behavior. However, since game engines are generic by design, allowing the creation of a variety of game types across different genres, their AI is often not tailored to the specific needs of serious games. When additional AI functionality is needed, various game AI middlewares exist for incorporating specific AI functions into existing games. Examples range from more sophisticated path planning algorithms for steering massive numbers of NPCs, to dynamically extendable models like behavior trees for intelligence-akin behavior, to machine learning algorithms for learning human-like behavior. However, AI packages for adaptivity and personalization in serious games development are not yet commonly available.
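
Behavior trees, mentioned above and in Sect. 1.3, compose NPC behavior from small reusable nodes. The following minimal Python sketch implements the two classic composite nodes, sequence and selector, and uses them for a hypothetical tutor NPC that offers a hint when the player appears stuck. It is deliberately simplified: production behavior trees typically also support a "running" status for actions that span several frames, which is omitted here, and the blackboard keys are assumptions.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Condition:
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, blackboard):
        return Status.SUCCESS if self.predicate(blackboard) else Status.FAILURE


class Action:
    def __init__(self, name):
        self.name = name

    def tick(self, blackboard):
        # Record the executed action on the shared blackboard.
        blackboard.setdefault("log", []).append(self.name)
        return Status.SUCCESS


class Sequence:
    """Succeeds only if all children succeed, evaluated left to right."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Selector:
    """Returns success at the first child that succeeds (a prioritized fallback)."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


# Hypothetical tutor NPC: offer a hint if the player seems stuck, otherwise keep observing.
tutor = Selector(
    Sequence(Condition(lambda bb: bb["seconds_idle"] > 30), Action("offer_hint")),
    Action("observe"),
)

bb = {"seconds_idle": 45}
tutor.tick(bb)
print(bb["log"])  # ['offer_hint']
```

New behaviors can be added by inserting further subtrees into the selector, which is what makes behavior trees "dynamically extendable".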

3 General Principles of Adaptation

This section introduces the general principles of personalized and adaptive serious games. The general objectives of adaptivity are discussed, i.e., why one may want to make a game adaptive at all. The section includes clarifications regarding the understanding of the differentiation between the varying terms around adaptation, i.e., adaptivity, adaptation, personalization, and customization. In a broader view and in general applications these terms are often used as synonyms to describe the user-centered adjustments of systems; but in the context of this chapter, with its discussions of the core principles of adaptation, we employ more nuanced definitions and differentiations. We discuss several approaches to adaptability and adaptivity and introduce concepts for the dimensions of adaptation. Since adaptation is an ongoing process we describe the adaptive cycle and one possible manifestation of this model for adaptive serious games. Related to that is the question when and how to start an adaptive cycle; this is discussed in the sections on the cold-start problem and on co-adaptation.

3.1 Objectives of Adaptivity

Adequately matching challenges presented in a game with the capabilities and needs of its players is a prerequisite to good player experience. Furthermore, the “serious” purpose of a serious game must be taken into account, i.e., the desired successful outcome of playing a serious game, such as achieving individual learning or health goals. This challenge has been discussed in an early work which stood at the beginning of a field that is now called game user research [23].

Since then, game user researchers have connected such game-related considerations to candidate psychological models, mostly from motivational and behavioral psychology, including flow by Csikszentmihalyi [24], Behavior Change by Fogg [32], and Self-Determination Theory (SDT) by Deci [27]. As a needs-satisfaction model, SDT explains (intrinsic) motivation based on preconditions that lie in a set of needs that must be satisfied, namely competence, autonomy, and relatedness. Rigby and Ryan provide a detailed argument of how video games have managed to fulfill these needs better and better over the last 30 years of video game history [73]. Due to their common relation to motivation, SDT and flow have similarities, although SDT is arguably concerned with conditions that enable intrinsic motivation to arise, while flow is more concerned with conditions that enable sustained periods of intrinsically motivated action [86]. In the remainder of this section, we will focus on how these theoretical models relate to adaptability and adaptivity in serious games.

Fig. 1. The Flow model (based on Csikszentmihalyi [24]). Adaptivity (dotted arrow-lines) can support keeping the user’s interaction route through the game (arrow-lines) in the flow channel between perceived skill and challenge level.

Flow theory by Csikszentmihalyi [24] is one of the most prominently cited theories discussing the psychological foundations of motivation. It introduces so-called enabling factors, such as a balance of challenges and skills. According to Csikszentmihalyi, flow is a state of being fully “in the zone” that can occur when one engages in an activity, and that may have very positive effects. As an underlying reason, he discusses an enjoyment that may be explained by the evolutionary benefits of performing activities that trigger the state of flow, which in turn leads to increased motivation to perform an activity repeatedly [24]. Flow theory has been connected both to games (by Csikszentmihalyi himself and also by Chen [18]) and explicitly to serious games (e.g., by Ritterfeld et al. [74]). This connection is easy to understand when considering the conditions under which flow can occur: Csikszentmihalyi argues that nine preconditions need to be present in order to attain a state of flow when performing an activity. The most prominent precondition is an optimal balance between the risk of failure (i.e., “challenge”, as mentioned above, which in games may translate to the risk of losing a life, a level, or an entire game) and the chance of attaining a goal (i.e., “skill”, which in games may mean winning some points, a level, a bonus, or the whole game). A visual representation of this balance can be seen in Fig. 1, which also highlights that this balance is subject to change over time due to the developing, deteriorating, or temporarily boosted or hindered skill of each individual player. This is an early indicator of the need for a dynamic level of challenge (e.g., adaptive difficulty) in games.
While increasing levels of challenge are usually achieved to a certain extent by predefined game progression, this is rarely optimal with regard to the actual skill development of any specific individual (since user-centered design optimizes game experiences for groups of users). Returning to the remaining prerequisites for flow according to Chen, the activity must also

-

lead to or present clear goals (e.g., “save at least five bees”, “open the door by solving a puzzle”),

-

give immediate feedback (e.g., “the door opens given the right pass-code”),

-

allow action and awareness to merge (e.g., no need to look at the controller in order to move),

-

allow concentration on the task at hand (e.g., little to no interruption of the actual game content, for example by non-matching menus),

-

convey a sense of potential and control (e.g., “I [my avatar] can jump incredibly far”),

-

induce a loss of self-consciousness (in many games, players may get almost fully transported “out of their physical bodies”),

-

alter the sense of time (e.g., in games, players often only notice how much time they actually spent on them after a game session has finished), and

-

appear autotelic (most players play games because they like to, not because they are told to or because they are paid to do so) [24].

More examples of conditions in games that can act as facilitating factors for flow have been discussed in related work [22, 89]. Considering the preconditions mentioned above, it does not come as a surprise that Csikszentmihalyi explicitly refers to games as a good example of activities that can lead to flow experiences. While all the aforementioned preconditions are subject to differences between individuals, the balance of challenges and skills presents a tangible model that can serve as the foundation for approaches to adaptivity in games and serious games [74]. Balancing a game so that it captures not only some players some of the time, but most players most of the time, is a complex challenge; this also explains why manual difficulty choices, such as those presented in settings menus, are found in many video games, including very early ones.

The ultimate goal of an adaptive educational game is to support players in making progress towards individual learning goals. Since technical measurement methods for the direct and effective assessment of knowledge gain in the human brain are not available [2, 14], learning efficiency has to be evaluated indirectly through assessment tests, other forms of interaction, or data analysis processes. The idea behind adaptive educational games is that users experience increased flow, resulting in greater game immersion, which in turn increases the user’s intrinsic motivation to interact, play, and learn, and ultimately leads to an increased learning outcome.

Oftentimes, the ingrained purpose of serious games is not just to perform a specific activity (often repeatedly), but also to have a lasting effect on the players’ traits or behavior. A prominent model for behavioral change is the Fogg Behavior Model (FBM) (Fig. 2). The FBM has been developed in relation to interactive media in general and stems from research on persuasive design [32]. While flow and other motivational theories focus on motivational support, the FBM and related skill development theories also address ability as an enabling factor. The FBM thus includes factors that are not considered in the flow model, framing the broader view that is necessary to explain the arguably broader outcome of behavioral change (as opposed to momentary motivation, which is addressed by the flow model).

An illustration of the action line of the Fogg Behavior Model (FBM); it conveys the principle that certain amounts of motivation and ability to change behavior are required in order to allow provided triggers for change to succeed [32].
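The FBM is a qualitative model, so any code version of it is an interpretation. As a minimal sketch, assuming motivation and ability on a normalized 0 to 1 scale and approximating the action line as the hyperbola motivation × ability = k (the product form and the value of `k` are our assumptions, not part of Fogg's model), the principle in Fig. 2 can be expressed as a check that a trigger is present while the current point lies above the action line:

```python
from dataclasses import dataclass


@dataclass
class Moment:
    """State of a user at one moment (hypothetical 0..1 scales)."""
    motivation: float
    ability: float
    trigger: bool  # whether a trigger (prompt to act) is present


def above_action_line(motivation: float, ability: float, k: float = 0.25) -> bool:
    # Assumption: the action line is approximated by motivation * ability == k,
    # so points with a larger product lie above it.
    return motivation * ability >= k


def behavior_occurs(moment: Moment, k: float = 0.25) -> bool:
    # A trigger only succeeds if motivation and ability together suffice.
    return moment.trigger and above_action_line(moment.motivation, moment.ability, k)
```

Under this reading, high motivation can partly compensate for low ability and vice versa, but without a trigger no behavior occurs.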

In the larger picture of serious games, it is worth noting that it will likely be desirable to include phases of serious game interaction that are not spent in a state of flow, in order to allow for self-aware reflection (for example, after completing an exercise in a motion-based game for health). Reflection can play an important role when learning to complete complex tasks or exercises [7, 30], and it can also play a key role in behavioral change.

3.2 Personalization and Adaptivity

Since the terms personalization and adaptivity, as well as customization and adaptability are used differently and sometimes interchangeably, it is important to provide an understanding of how the terms are used in the context of this chapter. Regarding their general meaning, we define the terms as specified in Table 1.

In this understanding, adaptability and adaptivity can be means to achieve customization or personalization. Furthermore, personalization and adaptivity are at times used in very similar contexts, but they differ in emphasis: personalization stresses the change to accommodate an individual, whereas adaptivity stresses that the change happens automatically. Accordingly, constructions such as automatic personalization or personal adaptivity can be formed to describe the same concept from different angles. Notably, these terms describe apparent properties of systems and do not define the techniques that are employed to achieve these ends.

In the context of serious games, a personalized game is adapted to the learners’ individual situation, characteristics, and needs, i.e., it offers a personalized experience. The psychological background is that personalized content can cause a significantly higher engagement and a more in-depth cognitive elaboration (in-depth information processing) [72, 90].

As indicated in the definitions above (Table 1), personalization can be achieved either manually or automatically by adapting the content, appearance, or any other aspect of the system. Different users have different preferences, and many computer games offer options for adjustment, e.g., brightness level, sound volume, or input device settings on the technical side, and, on the content side, difficulty levels or game character or avatar profiles. Adaptivity, on the other hand, means the automatic adjustment of game software over time, be it of technical parameters or at the content level. Technical adaptivity can, for example, be realized through the automatic setup of a game to best fit the user’s surroundings.

Adaptivity of serious game content can mean the dynamic adjustment of learning paths or the dynamic creation of personalized game content, e.g., with procedural generation or user- or task-centered recommendations. Adaptivity of content is typically achieved by applying techniques from the field of AI. It is important to note that adaptivity typically involves a temporal component, i.e., adaptive systems evolve over time by adjusting their internal parameters based on data acquired in former states. This concept results in the adaptive cycle, where each cycle refines the system [91]. The mechanisms behind the refinement process can be manifold and often stem from the field of AI, since the system employs some form of optimization.

Customization is another term that is frequently used in the context of serious games, and while it is sometimes used interchangeably with personalization, there is a subtle difference. In many cases, personalization relates to automatically individualized experiences (i.e., akin to adaptivity as described above), meaning that a system is configured or adjusted implicitly without interaction by the user, whereas customization relates to manual, explicit adjustments and choices made by the users to optimize their experience (i.e., employing adaptability as described above). Furthermore, customization is not necessarily tailored towards the needs of individual players; it may also target specific player groups or other user groups in the ecosystem around serious games.

Lastly, it is important to realize that many systems employ designs that mix elements of adaptivity and adaptability: users may have a say in the adjustments considered by the adaptive system, or the choices made available in an interface for adaptability may have been produced dynamically by adaptive components. We provide a more detailed discussion of the dimensions of adaptive systems in Sect. 3.5.

3.3 Approaches to Adaptability

When designing and implementing adaptable serious games, meaning games that allow for manual adjustments, the focus lies on achieving good usability and user experience in the interaction with the settings interfaces used for explicit manual adjustments. This means that typical goals such as efficiency and effectiveness, as key elements of usability [42], play a role, while interacting with the adjustment options should also be enjoyable (a key component of user experience [42]).

In order to achieve these goals, methods from interaction design, such as iterative user-centered [5] or participatory [78] design, play an increasingly important role in the development of settings interfaces and settings integration for serious games [84]. As a first step, the parameters that will be presented to the players must be isolated. Regular games often employ monolithic, one-dimensional difficulty settings (such as “easy”, “medium”, and “hard”), although each setting typically maps to a number of game variables (such as amount of energy, number of opponents, power-ups, etc.). Serious games should take considerable measures to optimize the match between the heterogeneous capabilities and needs of the players and the content and challenges that are presented. Settings interfaces in this area therefore often present more fine-grained parameters for tuning, in order to provide a more direct influence on the resulting game experience. A detailed example of such a development will be presented below in the section on motion-based games for health (cf. Chap. 4).
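To illustrate how a monolithic setting fans out into several game variables, the following sketch maps hypothetical presets to equally hypothetical variables (`player_energy`, `opponents`, `power_up_rate`); in a real game, these values would be determined through iterative testing. A serious game could instead expose such fields directly as fine-grained parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GameVariables:
    player_energy: int
    opponents: int
    power_up_rate: float  # power-ups spawned per minute


# Hypothetical mapping from a one-dimensional setting to several game variables.
DIFFICULTY_PRESETS = {
    "easy": GameVariables(player_energy=150, opponents=3, power_up_rate=2.0),
    "medium": GameVariables(player_energy=100, opponents=5, power_up_rate=1.0),
    "hard": GameVariables(player_energy=75, opponents=8, power_up_rate=0.5),
}


def apply_difficulty(level: str) -> GameVariables:
    """Resolve a menu choice such as "hard" into concrete game variables."""
    try:
        return DIFFICULTY_PRESETS[level]
    except KeyError:
        raise ValueError(f"unknown difficulty level: {level!r}") from None
```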

Furthermore, game developers need to decide whether to use player-centric or game-centric parametrization.

-

Game-centric parametrization means that settings are made per game, and the parameters typically represent aspects of the specific game. The level of abstraction can vary from terms as general and abstract as simply “difficulty” to specific descriptions of in-game parameters with a deep influence on gameplay (e.g., the “amount of health” of a player character).

-

Player-centric parametrization means that settings are made on a per-user basis. In such scenarios, the parameters typically relate to the psychophysiological abilities and needs of a user (e.g., “range of motion” in a motion-based game for health). This model is frequently used when a suite of rather small (“mini” or “casual” style) games targets a serious purpose, since the settings can be transferred from one game to another without additional configuration overhead. However, in such cases, an additional technical challenge arises in mapping the general per-user settings to the specific parameters of the different games. Such mappings are often nonlinear, may be difficult to generalize across user populations, and generally require careful testing and balancing.

Mixed approaches, in which, e.g., settings are made on player-centric parameters but still per game, are also possible, as are group-based settings.
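The following sketch illustrates player-centric parametrization under stated assumptions: a single per-user setting, `range_of_motion_deg` (interpreted here as the comfortable shoulder abduction angle), is translated by two hypothetical mini-games into their own parameters. The first mapping is nonlinear in the angle; the second quantizes it. Both mappings and all names are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class PlayerProfile:
    """Per-user settings shared by all mini-games (player-centric)."""
    range_of_motion_deg: float  # comfortable shoulder abduction, 0-180 degrees


def _clamped_angle(profile: PlayerProfile) -> float:
    return min(max(profile.range_of_motion_deg, 0.0), 180.0)


def reach_game_target_height(profile: PlayerProfile, arm_length_m: float) -> float:
    """Game A (hypothetical): height of the highest target relative to the shoulder.

    With the arm hanging down (0 degrees), the hand is arm_length_m below the
    shoulder; the height follows -cos(angle), i.e., it is nonlinear in the angle.
    """
    return -arm_length_m * math.cos(math.radians(_clamped_angle(profile)))


def paddle_game_lane_count(profile: PlayerProfile, max_lanes: int = 5) -> int:
    """Game B (hypothetical): number of reachable lanes, quantized from the angle."""
    fraction = _clamped_angle(profile) / 180.0
    return 1 + math.floor((max_lanes - 1) * fraction)
```

Even in this toy case, the same setting change (e.g., from 90 to 135 degrees) has a different effect size in each game, which is why such mappings need balancing per game.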

3.4 Approaches to Adaptivity

When designing adaptive serious games, meaning games that automatically adjust to the players' abilities and needs, the focus lies on good playability and player experience, and it can be argued, perhaps somewhat counter-intuitively, that getting these aspects right is even more important than in the case of purely adaptable games. While many aspects of computation are inherently about automation [79], the most basic principles of usability and user experience can easily be violated if the automation does not respect the intentions of the user, or even hinders them. This has been discussed extensively in the general literature on human-computer interaction, especially regarding the challenges with early embodied conversational agents. One prominent example of such an agent is the notorious “Clippy”, which was present in some versions of Microsoft Word and led to numerous problems, especially with more advanced users [60]. The same principles also apply to (serious) games, even though a playful setting may render players more accepting of inefficiencies. Many adaptive systems address these concerns by implementing some form of explicit preliminary, live, or retroactive interaction with the automated system, which we discuss in detail in the section on the dimensions of adaptation (cf. Sect. 3.5); nevertheless, all adaptive systems in the context of serious games are at least partially automated. This means that they must implement some form of performance evaluation in order to measure or estimate the impact of the current parameter settings on player performance. Adaptive systems must also implement some form of adjustment mechanism that changes the parameter settings depending on the outcome of the performance evaluation [1].

The exact realization of such an automated optimization process can take many forms, and the adjustments do not have to be limited to tuning existing parameters. As examples from learning games show, additionally prepared or generated content can serve as a means of adjustment, next to more traditional approaches such as heuristics or machine-learning techniques for tuning game variables.

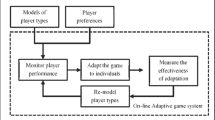

One of the most common forms of adaptivity in games is heuristics-based adaptivity for dynamic game difficulty balancing, i.e., Dynamic Difficulty Adjustment (DDA). As illustrated in Fig. 3, the difficulty is either increased or decreased depending on the player performance, which can be measured on one or multiple variables. If the performance falls below a certain threshold, indicating that the game may be too hard, the difficulty is decreased. Conversely, if the performance exceeds a certain threshold, indicating that the game is currently too easy, the difficulty is increased.
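A minimal sketch of the threshold-based scheme in Fig. 3, separating the two components of adaptive systems named above: a performance evaluation (here, the success rate over a window of attempts) and an adjustment mechanism (a fixed step up or down). The class name, thresholds, and step size are hypothetical placeholders that would normally be tuned through playtesting.

```python
from dataclasses import dataclass


@dataclass
class ThresholdDDA:
    """Hypothetical threshold-based dynamic difficulty adjustment."""
    difficulty: float = 0.5
    lower: float = 0.4   # below this success rate, the game is likely too hard
    upper: float = 0.8   # above this success rate, the game is likely too easy
    step: float = 0.1
    min_difficulty: float = 0.0
    max_difficulty: float = 1.0

    def evaluate(self, successes: int, attempts: int) -> float:
        """Performance evaluation: success rate over the last window of attempts."""
        if attempts <= 0:
            raise ValueError("need at least one attempt")
        return successes / attempts

    def adjust(self, performance: float) -> float:
        """Adjustment mechanism: one step down or up when outside the corridor."""
        if performance < self.lower:
            self.difficulty -= self.step
        elif performance > self.upper:
            self.difficulty += self.step
        # Keep the difficulty within its valid range.
        self.difficulty = min(max(self.difficulty, self.min_difficulty), self.max_difficulty)
        return self.difficulty
```

For example, a success rate of 1 out of 5 (0.2) lowers the difficulty from 0.5 to 0.4, whereas 9 out of 10 (0.9) raises it by one step; rates inside the corridor leave it unchanged.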

Adjustments can be performed on the basis of a single parameter, or with a direct influence on multiple variables. The mappings between difficulty and game variables are usually determined and fine-tuned through iterative testing. The same applies to the thresholds, which can be discrete, fuzzy, or quasi-continuous. Depending on the number of adjustments and the type of parameters that are adjusted, the difficulty settings can be either visible or more or less invisible. This is closely linked to one of the largest challenges with straightforward dynamic difficulty adjustment: since the goal of the adaptive system is to notably impact player performance or experience, the adjustments usually lead to notable changes in the game, which in turn produce a resulting difficulty that oscillates around the current theoretically “optimal” setting for any given player. This effect is called rubber banding [69], and it can be perceived as annoying, or even unfair, especially when it affects other player or non-player entities in games, e.g., a computer-controlled opponent in a car racing game. A frequently referenced example of this rubber banding effect is the Mario Kart series. Despite these notable challenges, more subtle adjustments, such as slightly supporting the user with aiming (which is widely used in first-person shooters on gaming consoles) [93], or a more readily available supply of resources (e.g., health packs) [47], have been shown to successfully improve the overall game experience or performance.
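The oscillation described above can be reproduced with a deliberately idealized, hypothetical player model in which the success rate equals 0.6 exactly when the difficulty matches the player's skill. A fixed-step adjustment toward a target success rate of 0.6 then keeps overshooting the optimum, whereas a quasi-continuous (proportional) adjustment, swapped in here as one possible mitigation, settles on it. Real players are noisy and change over time, so this only illustrates the mechanism.

```python
from typing import List


def player_performance(difficulty: float, skill: float) -> float:
    """Hypothetical player model: success rate is 0.6 when difficulty == skill."""
    return min(max(0.6 + (skill - difficulty), 0.0), 1.0)


def step_dda(difficulty: float, skill: float, rounds: int,
             step: float = 0.1, target: float = 0.6) -> List[float]:
    """Fixed-step adjustment; returns the difficulty after each round (rounded)."""
    history = []
    for _ in range(rounds):
        perf = player_performance(difficulty, skill)
        if perf < target:
            difficulty -= step
        elif perf > target:
            difficulty += step
        history.append(round(difficulty, 3))
    return history


def proportional_dda(difficulty: float, skill: float, rounds: int,
                     gain: float = 0.5, target: float = 0.6) -> List[float]:
    """Adjustment proportional to the deviation from the target success rate."""
    history = []
    for _ in range(rounds):
        perf = player_performance(difficulty, skill)
        difficulty += gain * (perf - target)
        history.append(difficulty)
    return history
```

With a skill of 0.55 and a start at 0.5, the fixed-step variant alternates between 0.6 and 0.5 indefinitely, while the proportional variant halves the remaining gap to 0.55 in every round.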

The resemblance of Fig. 3 to the flow model shows very clearly how dynamic difficulty adjustment in games directly aims at the prerequisite of an adequate balance of challenges relative to the skills of an individual user. This connection has been discussed by Chen [18], who not only underlines the connection between DDA and flow but also remarks on the potential challenges when control is taken away from the player in fully automated systems. Chen [18] suggests a system in which manual game difficulty choices are available but integrated as credible choices in the game world, in order to avoid “breaking the magic circle” of a game [86]. Embedded difficulty choices have been shown to increase users’ perceived autonomy compared to fully automated DDA [86].

Since user skills are not constant, both adaptable and adaptive systems face further challenges, as both users and the game undergo complex changes over time. Various forms of user models [31] have been explored to aggregate and estimate changes in players over time [19, 49]. Machine-learning techniques, such as self-organizing maps [28], decision trees [94], neural networks [12, 95], or reinforcement learning [6], have been employed to facilitate more complex optimizations or general decision making, especially for many-to-many and nonlinear parameter mappings.
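As one very simple example of a user model that aggregates changes over time, the sketch below tracks a per-player skill estimate with an exponentially weighted moving average. This technique is swapped in for illustration and is not one of the cited approaches; the class name and the smoothing factor `alpha` are hypothetical. A larger `alpha` follows short-term changes (e.g., fatigue) more quickly, while a smaller one is more robust to noise.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SkillModel:
    """Hypothetical user model tracking a skill estimate over sessions."""
    alpha: float = 0.3                    # weight of the newest observation
    estimate: Optional[float] = None      # None until the first observation
    history: List[float] = field(default_factory=list)

    def update(self, observed_skill: float) -> float:
        if self.estimate is None:
            # First observation: nothing to smooth against yet.
            self.estimate = observed_skill
        else:
            # Exponentially weighted moving average of all observations so far.
            self.estimate = self.alpha * observed_skill + (1 - self.alpha) * self.estimate
        self.history.append(self.estimate)
        return self.estimate
```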

Next, we will discuss the multidimensional aspects of adaptivity and user involvement and the multiple layers of skill-influencing factors.

3.5 Dimensions of Adaptation

Making a system adaptive is a specific form of automation in which the system takes over individual actions or larger activities that would otherwise have to be handled manually. In many cases, this opens possibilities for more fine-grained, frequent, or complex operations than would have been possible with manual control. On the other hand, as mentioned above, getting automation “right” is crucial in order to avoid negative impacts on the usability, user experience, or intended serious goals of a system. Related work on automation has framed such considerations in ways that are relevant to the development of adaptive serious games. Parasuraman, Sheridan and Wickens [70] have produced a model of types and levels of automation that describes the general process of designing and implementing automation systems (Fig. 4).

1. The model begins with the question “What should be automated?”, which in the case of serious games can range from general “difficulty settings”, over specific challenging aspects, such as the required range of motion in a game for therapy, up to very complex aspects, such as assemblies of learning materials.

2. The next step, “identify types of automation”, distinguishes four types (information acquisition, information analysis, decision and action selection, and action implementation), all of which are frequently present in parallel in adaptive serious games, highlighting the complexity of these adaptive systems.

3. The model then calls for a decision on the level of automation, which can range from fully automatic execution, without informing the user about changes or offering options to influence the actions taken by the adaptive system, to a system that offers a range of alternative suggestions for adjustments from which the user must manually choose (see 4 for a summary of levels of automation).

4. In the next step, the model suggests a primary evaluation based on performance criteria that are closely linked to usability in terms of interaction design.

5. Depending on these criteria, an initial selection of types and levels of automation is made, which is then evaluated against secondary criteria, such as reliability and outcome-related costs, leading to a final selection of types and levels of automation.

We propose an adaptation of the model in order to make it fit for adaptive serious games. In this light, the first decision would be phrased as “Which aspects of the serious game should be adaptive?”. Again, it could be a single parameter that influences difficulty, such as the maximum speed in a racing game, or more complex aspects, such as the content or the game mechanics. The layer of types of automation can be related directly to the basic components of adaptive games by Fullerton et al. [34]: Information acquisition and information analysis are components of the performance evaluation. Decision and action selection, as well as action implementation, are parts of the adjustment mechanism. Notably, some aspects of these steps are usually fixed or thresholded in the design phase of a game while some flexibility remains which then gives room to adaptive change during use.

Due to the importance of player experience as a prerequisite for successful outcomes regarding the serious intent, the level of automation requires further consideration. On closer inspection, the scale suggested by Sheridan [79] mixes multiple aspects of automation that should be discussed separately, although in most cases they are interrelated. One dimension is the basic level of automation, which can range from fully automatic to fully manual. Additional aspects are the frequency with which adjustments are made (ranging from constantly to never), the extent of the changes (ranging from a single, non-central element of the experience to the whole interaction experience), the visibility or explicitness [35] of the changes (ranging from completely invisible to fully visible or salient), and user control over the changes (ranging from no control at all to full manual control over every step, as indicated by Sheridan [79]). Other aspects that represent similar dimensions for design choices are (1) long-termedness; (2) target user group size; (3) game variable granularity; (4) explicitness of the feedback provided by the user; (5) implicitness of the feedback data taken into account for adaptivity; (6) explorativeness of the adaptive system; (7) parameter complexity; and (8) the system’s inherentness regarding the feedback.
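To make this design space more concrete, the following sketch records one design point along the main dimensions named above. The enumeration collapses Sheridan's finer-grained scale into four hypothetical levels, all continuous dimensions use an assumed 0 to 1 scale, and `needs_review` is a hypothetical heuristic rather than an established rule: it flags frequent, far-reaching automatic changes that the player cannot influence, since these are the cases where automation most easily works against the user's intentions.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    MANUAL = 0       # user makes all adjustments
    SUGGEST = 1      # system proposes alternatives, user decides
    AUTO_INFORM = 2  # system acts and informs the user
    AUTO_SILENT = 3  # system acts without informing the user


@dataclass(frozen=True)
class AdaptationDesign:
    """One point in the design space of an adaptive serious game."""
    level: Level
    frequency: float     # 0 = never, 1 = constantly
    extent: float        # 0 = single peripheral element, 1 = whole experience
    visibility: float    # 0 = invisible, 1 = fully salient
    user_control: float  # 0 = none, 1 = full manual control over every step

    def needs_review(self) -> bool:
        """Hypothetical heuristic: flag frequent, far-reaching automatic
        changes that the player has (almost) no control over."""
        automatic = self.level in (Level.AUTO_INFORM, Level.AUTO_SILENT)
        return (automatic and self.frequency > 0.5
                and self.extent > 0.5 and self.user_control < 0.2)
```

A subtle, always-on aiming assistance, for instance, would be automatic and invisible but small in extent, and would therefore not be flagged by this heuristic.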

While there are likely more aspects that become evident when designing for a specific use-case, this shows that any system must take a carefully designed position or subspace in a very complex, high-dimensional design space of adaptive systems. Additional complications are added with complex application areas such as serious games. This has implications not only for the design, implementation, and testing of adaptive systems, but also for any research on them, since these aspects can influence the outcomes of studies on the functioning or the acceptance of adaptive systems.

Following the next step of the model, the usability-centric criteria presented in the general model of automation must be augmented with more specific criteria that form a notion of playability and player experience. In many use cases of serious games, accessibility criteria also play an important role. Lastly, outcomes with regard to the serious intent of the game must become part of the primary evaluative criteria.

The secondary outcome criteria should be augmented with evaluations of ecological applicability and validity, meaning that it must be clear whether the solution can effectively be applied in real usage contexts (and not only in laboratory tests), and whether the optimizations still function with regard to the primary goals.

Given the complexity of adaptive systems in the context of serious games, arriving at a near-optimal system is likely to require multiple design iterations based on both primary and secondary evaluative criteria. In this light, it also becomes clear that this model is a detailed realization of a general iterative design model (typically a cycle of analysis, design, implementation, and evaluation), as presented, for instance, by Hartson et al. [42].

3.6 The Adaptive Cycle

The grounding principle of evolving adaptation is the general cycle of adaptive change, or “Panarchy” as Gunderson and Holling describe it [41]. Panarchy is a cycle of adaptive change, proceeding through “forward-loop” stages of exploitation, consolidation and predictability, followed by “back-loop” phases of novel recombination and reorganization (Fig. 5). The principle of adaptive management alone is inherent to almost all human and natural systems where decisions have been made iteratively using feedback acquired from observations. A famous technical application of an adaptive cycle is seen in the Kalman Filter and its derivatives [76]. This algorithm has prediction and update steps to refine estimates of unknown variables based on a set of noisy or inaccurate measurements observed over time. An example would be to determine the exact position of a moving object, e.g., a vehicle, when observing its movement.

The Adaptive Cycle by Gunderson [41]; temporal changes in a system iteratively proceed through the phases of reorganization, growth, conservation and release.
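The prediction and update steps of the Kalman filter mentioned above can be sketched with a scalar filter. This is a simplified illustration assuming a quantity that stays roughly constant (modelled as a random walk with variance `process_variance` per step); tracking a moving vehicle, as in the example above, would additionally require velocity in the state. Function and variable names are our own.

```python
from typing import List, Sequence, Tuple


def kalman_1d(measurements: Sequence[float], initial_estimate: float,
              initial_variance: float, process_variance: float,
              measurement_variance: float) -> Tuple[List[float], float]:
    """Scalar Kalman filter for a quantity modelled as a random walk.

    Returns the estimate after each measurement and the final estimate variance.
    """
    x, p = initial_estimate, initial_variance
    estimates: List[float] = []
    for z in measurements:
        # Prediction: the state is assumed unchanged, but uncertainty grows.
        p = p + process_variance
        # Update: the Kalman gain weighs the prediction against the new measurement.
        k = p / (p + measurement_variance)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates, p
```

With a large initial variance and no process noise, the estimate essentially becomes the running mean of the noisy measurements (about 5.02 for the readings 4.8, 5.3, 4.9, 5.1, 5.0); a larger `process_variance` lets the filter follow changes at the cost of passing through more noise.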

The Panarchy principle can also be found in a 4-phased adaptive cycle for software systems. A typical 4-phased adaptive cycle proceeds from the acquisition and processing phases (capture and analysis) to the phases of selecting and presenting new or modified (adapted) content (Fig. 6). Shute et al. [80] present such a 4-phased adaptive cycle for adaptive educational systems, which also includes a learner model. Without loss of generality, this model can be applied to serious games in general: the learner model then becomes a user model without didactic and pedagogical concepts, and the phases can be related to the performance evaluation (capture and analyze) and the adjustment mechanism (select and present) as the general components of any adaptive game discussed above.

4-process adaptive cycle for adaptive learning systems (based on [80]); the dynamic system is updated through the phases capture, analyze, select and present.
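The four phases can be sketched as methods of one small class. This is a toy illustration, not the system by Shute et al.: the class and method names, the event format, and the rule "mastery equals the share of correct answers per topic" are all assumptions, and `analyze` recomputes everything from the full log for simplicity.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UserModel:
    # Hypothetical user model: estimated mastery per topic, 0..1.
    mastery: Dict[str, float] = field(default_factory=dict)


@dataclass
class AdaptiveCycle:
    content_pool: Dict[str, List[str]]  # topic -> available content ids
    model: UserModel = field(default_factory=UserModel)
    log: List[Dict[str, object]] = field(default_factory=list)

    def capture(self, event: Dict[str, object]) -> None:
        """Capture: store a raw interaction event."""
        self.log.append(event)

    def analyze(self) -> None:
        """Analyze: mastery = share of correct answers per topic."""
        totals: Dict[str, int] = {}
        correct: Dict[str, int] = {}
        for e in self.log:
            topic = str(e["topic"])
            totals[topic] = totals.get(topic, 0) + 1
            correct[topic] = correct.get(topic, 0) + int(bool(e["correct"]))
        for topic, n in totals.items():
            self.model.mastery[topic] = correct[topic] / n

    def select(self) -> str:
        """Select: content from the topic with the lowest estimated mastery."""
        topic = min(self.content_pool, key=lambda t: self.model.mastery.get(t, 0.0))
        return self.content_pool[topic][0]

    def present(self, content_id: str) -> str:
        """Present: here simply a textual placeholder for showing content."""
        return f"showing {content_id}"

    def step(self, event: Dict[str, object]) -> str:
        """One pass through capture, analyze, select, and present."""
        self.capture(event)
        self.analyze()
        return self.present(self.select())
```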

Before implementing each phase, the general goals of the targeted adaptive system must be well-defined. One design pattern is to start with the outcome, i.e., the presentation phase, and proceed backwards to the selection, analysis, and capturing phases. A clear understanding of the expected output is often helpful in defining the input and the processes.

The first phase captures the interaction data of the users. For games, this could include mouse click positions, performance data, identifier names of selected game objects, other interaction events like starting or stopping the game, or technical data like changes in network connection bandwidth. With additional sensors or input devices, more data can be captured; for example, the loudness of the surroundings can be determined using a microphone, or an eye tracker can record eye fixations on specific objects in a game [52]. Other suitable types of data include speech, gesture, posture, or haptic data. Of course, not everything that can be captured is necessary for the adaptation process. The nature of the captured data has to be specified in the early phases of the software design process. With regard to data reduction and data economy, privacy and ethical constraints must be kept in mind when implementing data acquisition methods [29], although such problems can be mitigated by implementing anonymization techniques or reduced personalization components [8].
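A minimal sketch of a capture step that applies data economy: only whitelisted fields are kept, and the user identifier is replaced by a salted hash. The field names, the whitelist, and the function names are hypothetical. Note that a salted hash is pseudonymization, not anonymization: whoever holds the salt can recompute the pseudonym for a known user ID and re-link records.

```python
import hashlib
import time
from typing import Any, Dict, Optional

# Hypothetical whitelist: only fields the adaptation process actually needs.
ALLOWED_FIELDS = {"event", "object_id", "success", "duration_ms"}


def pseudonymize(user_id: str, salt: str) -> str:
    """Salted SHA-256 pseudonym, truncated to 16 hex characters for brevity."""
    return hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()[:16]


def capture_event(raw: Dict[str, Any], user_id: str, salt: str,
                  timestamp: Optional[float] = None) -> Dict[str, Any]:
    """Reduce a raw interaction event to the whitelisted fields."""
    record = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    record["user"] = pseudonymize(user_id, salt)
    record["t"] = time.time() if timestamp is None else timestamp
    return record
```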

The second phase is the analysis of the captured data. In this phase, the actual “intelligence” happens, i.e., an adaptation engine must attempt to “read” the captured data to infer the user’s state or performance. This process can include qualitative or quantitative analysis techniques, analysis of cognitive or non-cognitive variables, machine learning, data mining, or other techniques from artificial intelligence. For well-defined domains, Bayesian networks could be used to probabilistically infer a cognitive state from observed (non-cognitive) variables [76]. The deduced information is then stored in the user model as the basis for the selection phase. After multiple interaction cycles, the analysis typically improves (higher precision), since (historic) data already stored in the user model can be used in addition to any data from the current interaction. Initially, however, a system can only act on the data captured in the first run. One strategy for this cold-start problem is to provide the adaptation engine with more data in the first cycle, e.g., by directly asking the user a set of questions. Additionally, when the system has access to other user models, these could be used to classify the user: data mining techniques like pattern matching or collaborative filtering could be used to find similar patterns in the user models of other users.
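As a minimal example of such probabilistic inference, the sketch below applies Bayes' rule to a single hidden variable (whether a skill is mastered) given one observed answer. This corresponds to the observation step used in Bayesian Knowledge Tracing and is a strongly reduced special case of a Bayesian network; the function name and the guess and slip probabilities are hypothetical defaults. A prior obtained from an initial questionnaire is one way to address the cold-start problem mentioned above.

```python
def update_mastery(prior: float, correct: bool,
                   guess: float = 0.2, slip: float = 0.1) -> float:
    """Return P(mastered | observed answer) using Bayes' rule.

    guess: P(correct answer | skill not mastered)
    slip:  P(incorrect answer | skill mastered)
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be a probability")
    # Likelihood of the observation under both hypotheses.
    p_obs_given_mastered = (1 - slip) if correct else slip
    p_obs_given_not = guess if correct else (1 - guess)
    evidence = p_obs_given_mastered * prior + p_obs_given_not * (1 - prior)
    return p_obs_given_mastered * prior / evidence
```

Starting from an uninformative prior of 0.5, one correct answer raises the estimate to about 0.82 and one incorrect answer lowers it to about 0.11; repeated updates accumulate evidence in the user model.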

After the captured data have been analyzed, the result is stored in the user model (or learner model). This model is dynamic and represents the user’s current state in the space of all possible interaction combinations. In the third phase, selection, the system has to determine when and how to adapt. This is the actual adaptation (adaptivity) process: based on the collected and analyzed data, the system selects the content that best fits the user’s context. The selection process can either select matching content from a pool of available resources or generate new content, e.g., with techniques like procedural content generation [55].
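Selecting from a pool of available resources can be sketched as follows, assuming each item carries a difficulty on the same scale as the skill estimate stored in the user model. The Rasch-style logistic success model and the target success rate of 0.7 are our assumptions for illustration, not something prescribed by the adaptive cycle; all names are hypothetical.

```python
import math
from typing import Dict


def success_probability(skill: float, difficulty: float) -> float:
    """Rasch-style logistic model (an assumption for this sketch)."""
    return 1.0 / (1.0 + math.exp(-(skill - difficulty)))


def select_content(skill: float, pool: Dict[str, float], target: float = 0.7) -> str:
    """Pick the item whose predicted success probability is closest to `target`."""
    return min(pool, key=lambda item: abs(success_probability(skill, pool[item]) - target))
```

For a pool with difficulties −2.0, −0.8, and 1.5, a player with skill 0.0 receives the middle item (predicted success about 0.69), while a player with skill 2.0 receives the hardest one (about 0.62).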

After the selection process, a response has to be presented to the user. The simplest case would be a direct presentation, i.e., a newly selected content could directly be shown to the user without modifications. In other cases, system adjustments can be more subtle, or can be enacted over time, following the range of design choices suggested in the previous section on the dimensions of adaptation (Sect. 3.5).
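Enacting an adjustment over time rather than directly can be as simple as limiting how far a parameter may move per update. The sketch below assumes a numeric game parameter and a hypothetical per-update limit `max_change`; spreading a change in this way tends to make it less salient to the player.

```python
def step_towards(current: float, target: float, max_change: float) -> float:
    """Move `current` towards `target` by at most `max_change` per update."""
    if max_change < 0:
        raise ValueError("max_change must be non-negative")
    delta = target - current
    if abs(delta) <= max_change:
        return target
    return current + max_change if delta > 0 else current - max_change
```

Called once per frame or per round, this moves, e.g., an opponent speed from 10 to a newly selected 20 over four updates with `max_change=3` (13, 16, 19, 20).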

3.7 When, What and How to Adapt

This section discusses the main challenges that arise when asking when, what, and how to adapt. Answers to these questions concern multiple disciplines, e.g., game design, software engineering, cognitive science, pedagogy, and evaluation methodology. An adaptive system typically evolves over time [41, 80]. This temporal aspect is reflected in the adaptive cycle, where each cycle brings the system one step closer to being personalized to the user’s needs (cf. Sect. 3.6 and Figs. 5 and 6). Each of the following questions can be mapped to the 4-phased adaptive cycle, although some of the questions involve more than one phase.

-

When to adapt is a key question of adaptivity, because adaptivity must be justified at every step while a user is playing an adaptive game. An adaptive software system must have some kind of reaction model that determines when to start the adaptation process. For an adaptive serious game, the trigger could be a decrease in motivation (e.g., captured indirectly through a number of unsuccessful repetitions) or an assessed effectiveness below a certain threshold (e.g., learning progress measured by questionnaires). Hence, the software must constantly measure the current state of the user to be able to react to deviations that hinder the user from reaching the targeted goals. In the 4-phased adaptive cycle, this is done in the selection phase, after the system has analyzed the observed interaction data.

-

What to adapt concerns the question of which media, game mechanics, rules, assets, etc. can be designed and utilized in an adaptive way. This involves game design aspects, for example, modularized content that can be rearranged dynamically and that can sometimes exist independently of context, i.e., stand-alone. For games, the content often cannot be directly divided into atomic parts because of narrative constraints: breaking up the storyline typically also breaks narrative coherence and therefore has negative impacts on immersion. A comprehensive overview of game elements that could be adapted is given by Lopes and Bidarra [55]. They list examples from commercial games and academic research along the dimensions of game worlds, mechanics, AI or NPCs, narratives, and scenarios or quests.

-

How to adapt involves the mechanics behind the adaptation, i.e., the possibilities given by the underlying software architecture or by techniques from artificial intelligence. In the 4-phased adaptive cycle, this question typically concerns the presentation phase, but it depends strongly on the result of the preceding selection phase.
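The reaction model described under “when to adapt” can be sketched as a small trigger that observes each attempt. The class name and all thresholds are hypothetical: it fires after a number of consecutive unsuccessful repetitions (used as an indirect proxy for decreasing motivation) or when the success rate over a sliding window of attempts falls below a minimum.

```python
from collections import deque


class AdaptationTrigger:
    """Hypothetical reaction model deciding *when* to start adapting."""

    def __init__(self, max_failures: int = 3, window: int = 10, min_rate: float = 0.3):
        self.max_failures = max_failures
        self.window = window
        self.min_rate = min_rate
        self.recent = deque(maxlen=window)  # outcomes of the last `window` attempts
        self.consecutive_failures = 0

    def observe(self, success: bool) -> bool:
        """Record one attempt and return True if adaptation should start."""
        self.recent.append(success)
        self.consecutive_failures = 0 if success else self.consecutive_failures + 1
        if self.consecutive_failures >= self.max_failures:
            return True
        if len(self.recent) == self.window:
            return sum(self.recent) / self.window < self.min_rate
        return False
```

A real system would typically also reset or pause the trigger after an adaptation, so that the next decision is based on data collected under the new settings.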

3.8 Cold-Start Problem

Two challenges that frequently arise with adaptive systems are cold-start and co-adaptation. Cold-start is a common challenge known from machine learning and describes the problem of making first predictions in the absence of sufficient data. This problem is prevalent in adaptive serious games, since systems are usually designed to adapt to individual players, who, at one point in time, are all new users for whom no performance data have been recorded. A common approach to this challenge is, first of all, to ensure careful user-centered iterative design and balancing, creating a game that works comparatively well for an average population. This can be augmented by

-

calibration procedures;

-

models that require manual settings before first play sessions;

-

taking into account the performance and development of user groups that are very similar to the new user (similarity can be determined by prior tests, questionnaires, or very early performance data);

-

taking into account established models of development based on the application use-case (e.g., a typical rehabilitation curve after a prosthetic implant with motion-based games for the support of rehabilitation); or simply

-

refraining from any adaptivity until enough data has been captured to inform the model in order to avoid maladaptations.
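Several of these strategies can be combined in a single fallback chain. The sketch below is one possible arrangement, assuming a difficulty setting in [0, 1]: a balanced population default when nothing is known, the settings of the most similar existing players when a profile from prior tests or questionnaires is available, and the player's own data once enough sessions exist. The threshold `MIN_SESSIONS`, the default value, and the use of Euclidean distance as a similarity measure are all hypothetical choices for illustration.

```python
import math
from typing import List, Sequence, Tuple

MIN_SESSIONS = 5          # hypothetical: sessions needed before personal adaptation
POPULATION_DEFAULT = 0.5  # hypothetical setting balanced for an "average" player


def initial_setting(
    new_profile: Sequence[float],
    new_sessions: List[float],
    known_players: List[Tuple[Sequence[float], float]],
    k: int = 2,
) -> float:
    """Return a difficulty in [0, 1] for a player with little or no history.

    new_profile:   features from prior tests or questionnaires
    new_sessions:  the player's own per-session performance so far
    known_players: (profile, learned setting) pairs of existing players
    """
    # Enough personal data: rely on the player's own recent performance.
    if len(new_sessions) >= MIN_SESSIONS:
        return sum(new_sessions[-MIN_SESSIONS:]) / MIN_SESSIONS
    # No comparable players yet: stay with the balanced, non-adaptive default.
    if not known_players:
        return POPULATION_DEFAULT
    # Otherwise borrow the settings of the k most similar existing players.
    nearest = sorted(known_players, key=lambda p: math.dist(new_profile, p[0]))[:k]
    return sum(setting for _, setting in nearest) / len(nearest)


others = [([0.0, 0.0], 0.2), ([0.0, 1.0], 0.4), ([5.0, 5.0], 0.9)]
start = initial_setting([0.0, 0.0], [], others)  # average of the two nearest players
```

Returning the population default is the code equivalent of refraining from adaptivity; a model based on an established development curve (e.g., a typical rehabilitation trajectory) could replace that branch.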

3.9 Co-Adaptation

The challenge of co-adaptation can be explained well in relation to the problem of rubber banding [69]. In prolonged real-world use of any serious game, the player's capabilities and needs are not only complex and multivariate; they also change (adapt) over time in a nonlinear fashion, and the user adapts to the changes made by the adaptive system. This can lead to situations where a system adapts settings in a specific way and the user learns to handle these settings even though they are not objectively optimal; in worst-case scenarios, this may even lead to harmful interactions. One way to tackle co-adaptation is careful user-centered iterative design. It is important that test groups are changed frequently so that problems can be found: this way, testers do not have enough interaction time with the system to adjust to the problems before the design problems have been detected and fixed. Since the most common motivation for using adaptivity in serious games is to optimize the experience for individual users, manual means to interfere with or correct the changes made by the adaptive system can also help avoid harmful co-adaptation. However, this challenge remains elusive, and controlling for problems with co-adaptation requires detailed observation of both the system and the user behavior, during formative iterative testing as well as during more summative evaluations and studies.
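Two of the mitigations mentioned here, manual correction and detailed observation, can be supported directly in code. The following sketch assumes a single adaptive parameter whose changes are capped per step (so that drift is gradual and visible), which a therapist or player can override at any time, and whose history is logged for later inspection. The class, the step cap of 0.05, and the logging format are hypothetical; bounding the step size does not by itself prevent co-adaptation, it only makes it easier to observe and correct.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AdaptiveParameter:
    value: float
    max_step: float = 0.05            # hypothetical cap on change per adaptation
    override: Optional[float] = None  # manual setting by a therapist or the player
    history: List[Tuple[str, float]] = field(default_factory=list)

    def propose(self, target: float) -> float:
        """Apply one adaptation step towards the model's target value."""
        if self.override is not None:
            # A manual correction always wins over the adaptive model.
            self.value = self.override
            self.history.append(("override", self.value))
            return self.value
        # Bounded step: large jumps are spread over several adaptations, which
        # keeps changes gradual and easier to observe.
        step = max(-self.max_step, min(self.max_step, target - self.value))
        self.value += step
        self.history.append(("adaptive", self.value))
        return self.value

    def set_override(self, value: Optional[float]) -> None:
        """Set a manual value, or pass None to hand control back to the system."""
        self.override = value


speed = AdaptiveParameter(0.5)
speed.propose(1.0)        # moves only one bounded step towards the target
speed.set_override(0.3)   # therapist pins the value
speed.propose(1.0)        # stays at the manual setting
```

The logged `history` distinguishes adaptive from manual changes, which is the kind of record needed to compare system and user behavior during formative and summative evaluations.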

4 Adaptive Motion-based Games for Health

The need for adaptivity is frequently discussed in the context of Motion-based Games for Health (MGH). This section provides some background on why this is the case and outlines a number of major design concerns and challenges in implementing adaptability and adaptivity for MGH.

Games for Health (GFH) are one of the major categories of serious games. The class encompasses a number of sub-classes, such as games for the health education of professionals or the public, games for tracking general health behavior, or games for practical health applications [77]. One common class of health applications in serious games is motion-based games for health (MGH).

4.1 Adapting to the Players

As detailed in the chapter on games for health in this book, MGH bear the potential to motivate people to perform movements and exercises that would otherwise be perceived as strenuous or repetitive, they offer the potential to provide feedback regarding the quality of motion execution especially in the absence of a human professional (e.g., when executing physiotherapy exercises at home), and they offer the potential to gather objective information concerning the medium- to long-term development of the users. Adaptability and adaptivity are important aspects of MGH due to the often very heterogeneous abilities and needs even within specific application areas. MGH have been developed for a range of application areas such as children with cerebral palsy [43], people in stroke recovery [4, 44], people with multiple sclerosis [65], or people with Parkinson’s disease [82]. In a game designed to support the rehabilitation of stroke patients, for example, it may be necessary to facilitate game play while a player is unable to employ a specific limb. It may, in fact, even be the target of the serious game to support training of a largely non-functional limb.

With GFH it is also very apparent that the abilities and needs of an individual player are not fixed over time, but instead fluctuate constantly. Recognizing the following three general temporal classes of fluctuation can help designers with structured considerations [83]:

-

Long-term developments, such as age-related limitations [36], state of fitness, chronic disease, etc., form an underlying base influence on the abilities and needs of an individual.

-

However, they are also influenced by medium-term trends such as learning effects, a temporary sickness, environmental factors such as season and weather, etc.

-

Lastly, short-term influences such as the current mood, potentially forgotten medication, etc. can also play a considerable role.

Figure 7 outlines a visual summary of these aspects. While similar aspects also play a role in other application areas of serious games, MGH make for a comprehensible concrete example.
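One way to operationalize these temporal classes is to track a performance score at several time scales. The sketch below uses two exponential moving averages with different smoothing factors: a slow one as the long-term baseline, a faster one for medium-term trends, and the remaining difference between the latest session and the medium-term average as the short-term deviation. This decomposition is a swapped-in illustrative technique, not the model of [83], and the smoothing factors are hypothetical; smoothing alone cannot tell, for example, a temporary sickness from a bad day, so such estimates would need to be combined with contextual information.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class MultiScaleTracker:
    alpha_long: float = 0.02   # hypothetical; small alpha = long memory (baseline)
    alpha_medium: float = 0.2  # hypothetical; reacts within a few sessions (trends)
    long_term: Optional[float] = None
    medium_term: Optional[float] = None
    last: Optional[float] = None

    def update(self, score: float) -> None:
        """Feed one session's performance score into both moving averages."""
        if self.long_term is None or self.medium_term is None:
            self.long_term = self.medium_term = score
        else:
            self.long_term += self.alpha_long * (score - self.long_term)
            self.medium_term += self.alpha_medium * (score - self.medium_term)
        self.last = score

    def components(self) -> Tuple[float, float, float]:
        """Split the latest score into baseline, medium-term trend and short-term deviation."""
        if self.long_term is None or self.medium_term is None or self.last is None:
            raise ValueError("no sessions recorded yet")
        baseline = self.long_term
        trend = self.medium_term - self.long_term
        deviation = self.last - self.medium_term
        return baseline, trend, deviation  # the three parts sum to the latest score


tracker = MultiScaleTracker()
for score in [0.8, 0.82, 0.79, 0.6]:  # last session noticeably worse than usual
    tracker.update(score)
baseline, trend, deviation = tracker.components()
```

An adaptive MGH could then respond differently to each component, e.g., adjusting base settings only when the baseline moves and offering a lighter session when only the short-term deviation is large.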

4.2 Adapting to Many Stakeholders

The considerations mentioned so far are framed by considerations of the player as an individual. However, GFH typically have multiple stakeholders, and modern GFH are designed with multiple stakeholders in mind. Beyond the players, at whom the primary outcomes are usually targeted, doctors, therapists or other caregivers, family members, guardians, or other third parties closely related to the player are involved in the larger context of GFH. Adaptive functions may be employed with regard to their interests as well [81]. For example, personalized reports based on GFH performance may be tailored for the parents of a child with cerebral palsy who regularly uses a GFH. Further parties, such as developers and researchers, are also interested in the interaction with GFH or their subsystems, and the design of some GFH is beginning to take the interests of these groups into account. Additional challenges arise when GFH feature a multiplayer mode. Adaptive functions can have a strong impact on the balancing and on the players' perception of the balancing, which in turn may interfere with the player experience [35]. While the simplest recommendation in many cases will be to avoid adaptivity in multiplayer settings, games without strong adaptability and adaptivity are likely limited in the heterogeneity of co-players they can support. Notably, balancing in the form of, for instance, "handicaps" is common in analog physical activities (such as golf). However, it is reasonable to expect measurable psychological impacts if games adapt very visibly, e.g., with clearly noticeable rubber banding. In a study comparing rather visible with mostly invisible adjustment methods in an established motion-based game, Gerling et al. [35] found that explicitly noticeable adjustments can reduce self-esteem and feelings of relatedness in player pairs, whereas hidden balancing appears to improve self-esteem and reduce score differential without affecting the game outcome.
It is also important to keep in mind that non-competitive multiplayer settings will likely result in different patterns of technique acceptance and resulting game experience [93] and adaptive techniques may, for example, be less intrusive when the player roles are not symmetrical.
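As an illustration of hidden balancing, the following sketch derives a per-player assistance multiplier from the skill gap between co-players. The weaker player receives a more forgiving movement tolerance (for example, a larger target zone), while scores and the visible game state are left untouched. The skill estimates, the cap `max_boost`, and the idea of applying the multiplier to target size are hypothetical assumptions; this is not the adjustment method evaluated by Gerling et al. [35].

```python
from typing import Dict


def hidden_assistance(skill: Dict[str, float], max_boost: float = 0.3) -> Dict[str, float]:
    """Return a per-player tolerance multiplier (1.0 means no assistance).

    skill: per-player skill estimates in [0, 1] (hypothetical input).
    The multiplier could, for example, enlarge target zones for the weaker
    player; it is never shown on the scoreboard, so the balancing stays hidden.
    """
    best = max(skill.values())
    # The strongest player gets exactly 1.0; others get help proportional to the gap.
    return {player: 1.0 + max_boost * (best - s) for player, s in skill.items()}


multipliers = hidden_assistance({"player_a": 0.8, "player_b": 0.3})
```

Capping the boost keeps the assistance subtle, which matters given the finding that clearly noticeable adjustments can reduce self-esteem and relatedness.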

4.3 Adapting to Further Context and Devices

There are various further aspects that can be important surrounding the usage of adaptive systems. Examples are the need to adequately serve potential multiplayer situations, or the context of gameplay, i.e., where the game is played, how much space is needed, and whether it happens at home or in a professional context, such as a physiotherapy practice. In order to control for such impacts, the possible target environments can be modeled and simulated beforehand. However, this is still an area in need of further research. The same applies to additional sensor or control devices, which again hints at the complexity of adapting adequately in real gaming situations, even if "simple" heuristics are used. These challenges underline the need for medium- to long-term studies and for studies that make ecological validity a primary target.

Taken together, these aspects explain why commercial movement-based games (e.g., Xbox Kinect, PlayStation Move, EyeToy, or Wii titles) cannot simply be used for most serious health applications: they lack matching design, target-group orientation, and adaptability and adaptivity tailored towards supporting a good game experience while also supporting the targeted serious outcomes.

5 Application Examples

In the following, examples of applying the adaptation principles described in this chapter are presented. These include a digital learning game for image interpretation as well as games for health with a focus on kinesiatrics.

5.1 Lost Earth 2307 - Learning Game for Image Interpretation

The serious game Lost Earth 2307 (LE) is a digital learning game for education and training in image interpretation. The game is part of ongoing research projects to find solutions for an effective and lasting knowledge transfer in professional image interpretation. One element of the approach is to introduce adaptive concepts to match the requirements of heterogeneous user groups and the additional requirement of efficient training at the workplace. Lost Earth 2307 was developed by the Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB for the Air Force Training Center for Image Reconnaissance (AZAALw) of the German Armed Forces [33].

The application domain of this game is image interpretation for reconnaissance, i.e., the identification and analysis of structures and objects by experts (image interpreters) according to a given task. The image data could be optical, radar, infrared, hyperspectral, etc. Radar image interpretation, for example, is used in search and rescue operations to find missing earthquake victims. However, peculiar effects of the radar imaging technology (e.g., compression of distances by the foreshortening effect, or ghosting artifacts for moving objects) make it hard for non-experts to interpret the resulting image data; hence, expert knowledge and experience are needed and people have to be trained [75]. Education and training facilities have to handle very heterogeneous groups of students varying in age, education, and technical background. The target group of LE consists of students, mainly from Generation Y, who are eager to play and are going to be trained as image interpreters.

Fig. 8. Serious game Lost Earth 2307 for image interpretation training: (a) bridge scene for mission tasking; (b) adaptive storyboard pathways (the red X indicates elimination of a formerly optional, now mandatory path); (c) dynamic difficulty adjustment with image modifications; (d) virtual agent with help and recommendations.

Lost Earth 2307 (Fig. 8) is a 4X strategy game (4X as in eXplore, eXpand, eXploit, and eXterminate) for training purposes. The explorative and exploiting characteristics are mirrored in its game mechanics and are congruent with the job description of an image interpreter. This encompasses the systematic identification of all kinds of objects in challenging image data from various sensor types.

Besides effective knowledge transfer through personalized learning and recommendations for heterogeneous user groups, the rationale for adaptivity is the introduction of an intelligent tutoring agent that yields advantages by interlinking multiple software products for learning. Since this game is part of a digital ecosystem of learning management systems, computer simulators, and serious games, the goal is an intelligent tutoring component for adaptivity that can be attached to each learning system and that allows for data interoperability to facilitate learning and training across multiple software products [88]. Intelligent game-based learning systems are typically not designed in interoperable ways. For adaptive serious games and adaptive simulations in image interpretation, the intelligent tutoring component is therefore designed as an external software system that follows commonly used interoperability standards for data acquisition and information exchange. The solution approach is the development of an adaptive interoperable tutoring agent, called "E-Learning AI" (ELAI), which consists of multiple game engine adapters, a standardized communication layer, and an external intelligent tutoring agent that interprets the collected data to adjust the game or simulation mechanics [88]. The interoperability lies in the communication layer and its data format. Various computer simulation systems and serious games can be interlinked by using general-purpose communication architectures and protocols, for instance, the High Level Architecture (HLA) for distributed simulation systems interoperability.
Additionally, by using standardized data specifications like the Experience API (xAPI; also known as Tin Can API) [3] and Activity Streams [64], the interlinked systems not only gain a common, learning-oriented data exchange language (for intra-communication), but they can also be integrated into networks of other learning systems that use xAPI or Activity Streams (for inter-communication).
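To give an impression of such a common data exchange language, the sketch below builds an xAPI statement (actor, verb, object, result) that a game engine adapter might emit when a trainee completes a mission. The statement structure and the ADL verb IRI for "completed" follow the xAPI specification; the e-mail address, the activity IRI under `example.org`, the mission naming, and the function itself are hypothetical placeholders and do not describe the actual data format used by Lost Earth 2307 or ELAI. Sending the statement to a Learning Record Store is deliberately left out.

```python
import json
from datetime import datetime, timezone


def mission_statement(player_email: str, mission_id: str, scaled_score: float, success: bool) -> dict:
    """Build an xAPI-style statement for a completed mission (hypothetical IDs)."""
    if not -1.0 <= scaled_score <= 1.0:
        raise ValueError("xAPI scaled scores must lie in [-1, 1]")
    return {
        # Actor: identified here by an mbox IRI (one of the xAPI agent identifiers).
        "actor": {"objectType": "Agent", "mbox": f"mailto:{player_email}"},
        # Verb: taken from the ADL verb vocabulary.
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        # Object: placeholder activity IRI for one mission of the game.
        "object": {
            "objectType": "Activity",
            "id": f"https://example.org/le2307/missions/{mission_id}",
            "definition": {"name": {"en-US": f"Mission {mission_id}"}},
        },
        "result": {"success": success, "score": {"scaled": scaled_score}},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


statement = mission_statement("trainee@example.org", "radar-01", 0.85, True)
print(json.dumps(statement, indent=2))
```

Because every adapter emits the same statement shape, an external tutoring agent can interpret data from a game and a simulator alike without engine-specific parsing.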

The adaptive aspects developed for Lost Earth 2307 are (1) dynamic adaptation of storyboard pathways, achieved by dynamically modifying the underlying state-transition models (e.g., eliminating crossings with optional paths to retain only mandatory pathways); (2) modification of imagery content in the sense of Dynamic Difficulty Adjustment (DDA) (e.g., dynamically inserting simple effects like clouds or, in the radar case, partial blurring, to make the identification of objects more difficult); and (3) dynamic injection of an Intelligent Virtual Agent (IVA) [88], which gives context-sensitive guidance by providing the help and learning material that fits best (i.e., is most relevant) to the current working context of the user [87]. Figure 8 b–d outline these aspects. Considering the problematic outcomes of overly active or intrusive IVAs (like "Clippy" [60]), the ELAI IVA must be actively triggered by the users to provide context-related help and learning recommendations [87].
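The first of these aspects can be pictured with a small state-transition model. In the sketch below, a storyboard is a directed graph whose transitions are tagged as mandatory or optional; adaptation removes the optional transitions and then checks that the final state is still reachable. The scene names, the tagging scheme, and the reachability check are hypothetical illustrations of the idea described above, not the actual storyboard model of Lost Earth 2307.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Hypothetical storyboard: state -> list of (next_state, is_mandatory)
Storyboard = Dict[str, List[Tuple[str, bool]]]


def prune_optional(board: Storyboard) -> Storyboard:
    """Drop optional transitions, keeping only the mandatory pathways."""
    return {state: [(nxt, m) for nxt, m in edges if m] for state, edges in board.items()}


def reachable(board: Storyboard, start: str) -> Set[str]:
    """Breadth-first search over the transitions that remain."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt, _ in board.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


board: Storyboard = {
    "bridge": [("briefing", True), ("side_quest", False)],
    "briefing": [("radar_mission", True)],
    "side_quest": [("radar_mission", False)],
    "radar_mission": [("debriefing", True)],
    "debriefing": [],
}
lean = prune_optional(board)
assert "debriefing" in reachable(lean, "bridge")  # the goal must stay reachable
print(sorted(reachable(lean, "bridge")))  # ['bridge', 'briefing', 'debriefing', 'radar_mission']
```

The reachability check guards against an adaptation that accidentally cuts the learner off from mandatory content; when to apply the pruning (e.g., for a learner who has already demonstrated the skills of the optional path) would be decided in the selection phase.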

5.2 Motion-based Games for Health