Abstract

In this paper we present a robot supervision system designed to be able to execute collaborative tasks with humans in a flexible and robust way. Our system is designed to take into account the different preferences of the human partners, providing three operation modalities to interact with them. The robot is able to assume a leader role, planning and monitoring the execution of the task for itself and the human, to act as assistent of the human partner, following his orders, and also to adapt its plans to the human actions. We present several experiments that show that the robot can execute collaborative tasks with humans.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

In Human-Robot Interaction robots must be equipped with a complex set of skills, which allows them to reason about human agents intentions and statuses and to act accordingly. When interacting with a robot, different users will have different preferences. The robot must be able to take into account these preferences and provide different operation modalities to allow more natural interaction with the human. We have designed a system able to cooperate with humans to complete joint goals.

We present an object manipulation scenario, composed by tasks, such as fetching, giving objects and other collaborative operations, which are typical of domestic environments. In this scenario a human and a robot must cooperate to complete a joint goal, which is a kind of goal that requires both agents to work together. This joint goal is known by both partners at the start of the scenario, perhaps because it has being agreed in a first interaction process. We have identified several ways—or modalities—to envisage how the robot planning and decisional abilities for task achievement can be used:

-

Human Plans. The robot is not aware of the global plan or does not reason about the long-term global goal. The human decides when to ask the robot to perform individual tasks. The robot then acts by performing, in the context, the requested task. Decisional autonomy here is limited to how the robot refines and performs the task in the context. The robot is not completely passive when not executing an operation since it will continuously monitor and update the state of the environment.

-

Robot plans. There is a joint goal between the human and the robot. The robot builds the ‘best’ plan to achieve this goal, taking into account the world status, the abilities of the two agents and the preferences of the human, then it verbalizes it and achieves it by doing its ‘part of the job’ and monitoring the human activity. This modality corresponds to a fully agreed upon plan that is built on-line or even predefined and known to both agents.

-

Robot adapts. There is a joint goal between the human and the robot. The robot monitors what the human is doing and whenever possible, tries to achieve an action or a set of actions that advances the plan toward the goal. This could be seen as an intelligent robot reaction to the context including the possibility to have the robot proactively facilitating the action of the human whenever possible.

We think that the interaction process should be flexible, allowing eventually the robot to switch modality depending on the task status and of the human actions or simply upon request. To be able to interact with a human agent in a natural way, the robot needs to possess a number of different mechanisms, such as joint attention, action observation, task-sharing and action coordination. Using these mechanisms the robot can create shared plans, that take into account the capacities of both agents, and execute them in a coordinated way. We have developed an architecture and decisional components which allow us to run these different interaction modalities. The goal of the paper is not to provide a detailed description of any of these components but rather to provide a global view of the system and to show its flexibility.

2 Related Work

The topic of joint actions has been studied by different authors in the field of psychology. In [1] Bratman proposes a definition of the topic, giving several conditions deemed necessary to perform a joint action. Other researchers [17, 23] have studied a number of key mechanisms necessary to support joint actions between different partners: joint attention, action observation, task-sharing, action coordination and perception of agency.

Individual engaging in joint action use several ways, like language, gaze cues and gestures to establish a common ground. This mechanism appears to be crucial in successfully performing joint actions. Perspective taking, which is studied in psychology literature [5, 30], is a critical mechanism when interacting with people by allowing one to reason on others’ understanding of the world in terms of visual perception, spatial descriptions, affordances and beliefs, etc. In [22] perspective taking is used to solve ambiguities in a human-robot interaction scenario by reasoning from the partner’s point of view. In [29] perspective taking is integrated in a robotic system and applied to several kinds of problems.

Spatial reasoning [20], has been used for natural language processing for applications such as direction recognition [9, 15] or knowledge grounding [12]. Reference [26] presented a spatial reasoner integrated in a robot which computes symbolic positions of objects.

Understanding what other agents are doing is necessary to perform a joint action. In [7] the authors allow a robot to monitor users’ actions by simulating their behaviors with the robot’s motor, goal and perceptual levels. In [4] the authors present the HAMMER architecture, based on the idea of using inverse and forward models arranged in hierarchical and parallel manners. With this architecture the authors are able to use the same model to execute and recognize actions, an idea compatible with several biological evidences.

Other agents’ actions can be predicted in different ways. By observing other agents we can predict the outcomes of their actions and understand what they’re going to do next. Participants in a joint action form a shared representation of a task, used to predict other partners’ actions. In [8] human intentions are estimated using a POMDP (Partially Observable Markov Decision Process) and a set of MDP (Markov Decision Process), that simulate human policies related to different intentions.

However, predicting other agents’ actions is not enough to participate in a joint action, because the agent needs also to choose appropriate complementary actions, adapting them to the capability of the participants. The agents need to create a coordinated plan, giving particular care to timing information. In [19] the idea of cross-training is applied to shared-planning. A human and a robot iteratively switch roles to learn a shared plan for a collaborative task. This strategy is compared to standard reinforcement learning techniques, showing improvements in performances. These results support the idea of modeling practices for human teamwork in human robot interaction. In [24] a shared plan is executed using Chaski, a task-level executive which is used to adapt the robot’s actions to the human partners. Plans can be executed in two different modalities: equal partners or leader and assistant. The authors show that this system reduces human idle time. In [10] an anticipatory temporal conditional random field is used to estimate possible users’ actions, based on the calculation of object affordances and possible user trajectories. With this knowledge the robot can anticipate users’ actions and react accordingly. In [2] the authors present a framework for human aware planning where the robot can observe and estimate human plans to coordinate its activites with those of the human partners. The framework doesn’t support direct human-robot interaction.

Communication between partners in a joint task is crucial. The robot needs to be able to give information to its partners in a clear and socially acceptable way. Reference [27] proposes different strategies to modulate robot speech in order to produce more socially acceptable messages. In [13] the authors study how to generate socially appropriate deictic behaviors in a robot, balancing understandability and social appropriateness.

Few robotic architectures take humans into account to allow the execution of human-robot joint actions. Reference [28] presents ACT-R/E, a cognitive architecture, based on the ACT-R architecture, used for human robot interaction tasks. The architecture aims at simulating how humans think, perceive and act in the world. ACT-R/E has being tested in different scenarios, such as theory of mind and hide and seek, to show its capacity of modeling human behaviors and tought. In [6] the authors present HRI/OS, an agent-based system that allows humans and robots to work in teams. The system is able to produce and schedule tasks to different agents, based on their capacities, and allows the agents to interact mostly in a parallel and independent way, with loose coordination between them. Cooperation mainly takes place when one agent asks for help while dealing with a situation. In this case the HRI/OS will look for the best agent to help, based on their availability and capacities. In [3] the authors build SHARY, a supervision system for human robot interaction, tested in domestic environments to perform tasks such as serving a drink to a person. Our system is an evolution of Shary which includes new aspects, like spatial reasoning and modeling of joint actions.

3 Technical Approach

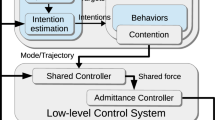

Our robot is controlled by an architecture composed of different components as shown in Fig. 1.

Supervision System. The component in charge of commanding the other components of the system in order to complete a task. After receiving a goal the supervision system will use the various planners of the system to obtain a list of actions for the robot and for the human. It will then be in charge of executing the robot actions and to monitor the human part of the plan. Specific treatment is reserved to joint actions (see Collaboration Planners). The supervision system aims at being flexible enough to be used in different robotic systems and robust so that it can recover from plan failures and adapt to human behaviors that are not expected, according to the current plan.

HATP. The Human-Aware Task Planner [11], based on a Hierarchical Task Network (HTN) refinement which performs an iterative task de-composition into sub-tasks until reaching atomic actions [18]. HATP is able to produce plans for the robot as well as for the other participants (humans or robots). By setting a different range of parameters the plans can be tuned to adapt the robot behavior to the desired level of cooperation. HATP is able to take into account the different beliefs of each agents when producing a plan, eventually including actions that help creating joint attention [31].

Collaboration Planners. This set of planners are based on POMDP models used in joint actions, such as handovers, to estimate the user intentions and select an action to perform. In order to mantain a simple and flexible domain, the POMDP selects high level actions (like continue plan or wait for the user), which are adapted by the supervision system to the current situation. We can picture the interaction between HATP, the collaborative planners and the supervision system in the following way. HATP creates a plan composed by different actions to achieve a goal. The supervision system refines and executes each action in the plan, using the collaborative planners to adapt its actions to those of the other agents during a joint action.

SPARK. The Spatial Reasoning and Knowledge component, responsible for geometric information gathering [16]. SPARK embeds a number of decisional activities linked to abstraction (symbolic facts production) and inference based on geometric and temporal reasoning. SPARK maintains all geometric positions and configurations of agents, objects and furniture coming from perception and previous or a priori knowledge. SPARK computes perspective taking, allowing the system to reason on other agents’ beliefs and capacities.

Knowledge Base. The facts produced by SPARK are stored in a central symbolic knowledge base. This base mantains a different model for each agent, allowing to represent divergent beliefs. For example, the fact representing the position of an object could point to a different location in two agent models in our knowledge base, representing the different information that the two agents possess.

A set of Human aware motion, placement and manipulation planners. These planners are in charge of choosing trajectories for the robot, taking into account the environment and the present agents [14, 21, 25].

4 Results

By using the capacities of its different components, our system is able to produce several results in human-robot interaction.

-

1.

Situation Assesment and Planning. Using SPARK and its sensors, the robot is able to create different representations of the world for itself and for the other agents, which are then stored in the Knowledge Base. In this way the robot can take into account what it and the other agents can see, reach and know when creating plans. Using HATP the robot can create a plan constituted by different execution streams for every present agent.

As said before, there are three operation modalities in the system: robot plans, user plans and robot adapts.

Robot plans. In the first modality the robot will, using information present in the Knowledge Base and HATP, produce a plan to complete the joint goal. After that the robot will verbalize the plan to the user, explaining which actions will be performed by each agent and in which order. The robot will monitor the execution process, informing the human of which actions it’s about to execute and also on when the human should execute its part of the plan. This modality, where the robot is the leader, can be helpful when interacting with naive users or in tasks where the robot has a better knowledge of the domain or of the environment than the other agents.

Human plans. The human can also create plans, interacting with the robot by using a tablet application. This application allows the user to select different actions and parameters. The user can issue both high level goals (e.g. clean the table) and simpler actions (e.g. take the grey tape, give me the walle tape, stop your current action). The robot will simply observe the surroundings and wait for user inputs. This modality is always available and has a priority over the other two modalities. If the robot receives a command from the application while it is in another modality, it will abandon its current plan, stopping its actions at a safe point, and then execute the users’ command. We feel that this interaction modality is important for two different reasons. First, some users will simply prefer to be in charge of the execution process, for a matter of personal preference or because they feel they have a deeper knowledge on how to realize the current task than the robot. We can picture, for example, industrial or medical scenarios, where the human is the leader and asks the robot to perform different tasks to help him, when needed. A second use of this modality is in situations where the robot doesn’t have a clear estimation of the users’ intentions and goals. For example, in a domestic environment, a user could decide to order a robot to bring him a drink, a need that the robot can’t always anticipate.

Robot adapts. In the last presented operation modality the robot will try to help the human to complete a task. At the start of the scenario, the robot will stand still and observe the environment. After the user takes an action the robot will calculate a plan and try to help as it can, by performing actions related to that task and by giving helpful information to the user. In our implementation the robot will start this modality in a ‘passive’ role, simply observing the human until he takes the first action. We could also picture a more pro-active role for the robot, where the robot chooses a goal on its own and starts acting toward its completion, eventually asking for the help of the human when he can’t complete an action.

This modality corresponds to what we feel is a very natural way of interaction between different partners, in particular in non-critical tasks, where defining an accurate plan between the partners is not fundamental. This situation relates quite easily to the scenario we will present in details in Sect. 5, where the two partners must clean a set of furnitures together. In this situation the two partners could simply choose to start executing the actions that they prefer, continuously adapting their plans to the other partners’ actions.

The robot is able to switch from one modality to another during execution. For example, if the robot is in the ‘robot plans’ modality and the users’ actions differ from the calculated plan the robot will interrupt its current action, create a new plan, and switch to the ‘robot adapts’ modality.

-

2.

Human Intention Estimation and Reactive Action Execution. Using the Collaboration Planners we can execute joint actions in a reactive way. For example, in the case of an handover, a POMDP receives as input a set of observations, representing the distance between the human and the robot, the posture of the human arm and the user’s orientation, used to estimate the current user intentions (i.e. user engaged, not engaged, not interested in the task). Using this information and the other variables that model the task status, the POMDP selects high level actions that are adapted by the supervisor. For example, the POMDP could decide to wait for an user that is not currently engaged in the joint action, but hasn’t abandoned the task yet. The supervision system at this point will decide in which posture and for how long to wait for the user. If the user shows again interest in the task, the POMDP could select a ‘continue plan’ action, and the supervision system could choose to extend the robot arm as response.

-

3.

Human Action Monitor. Using SPARK, the system is able to monitor human actions by creating Monitor Spheres associated to items considered interesting in a given context. A monitor sphere is a spheric area surrounding a point that can be associated to different events, like the hand of a human entering into it. Using this system and the vision capabilities of the robot we can monitor interesting activities, like the fact that a human takes an object or throws it into a trashbin. The monitor spheres for a human agent are created when he enters the scene, considering object affordances. If the human doesn’t have items in his hands, the supervision system will use SPARK to create a monitor sphere associated to every pickable object. After a user takes an item, monitor spheres for pickable objects will be erased and the robot will create new spheres for containers, such as thrashbins, where the user can throw its items. For the moment we consider only these two kind of affordances, but we plan to include others in the future, allowing, for example, users to place objects on furnitures, such as tables.

-

4.

Robustness and Safety. Our system incorporates different robustness and safety mechanisms. The robot can deal with failed actions by updating its knowledge of the environment and replanning accordingly. For example, if the robot tries to take an item and fails, it will update its knowledge introducing the information that the item is not reachable from the current position. The robot can then replan, for example by asking the user to take the item. The robot has the ability to stop its current action, for example because of unexpected changes in the environment. The robot is also able to pause and resume the execution of an action, for example because the arm of the human is in its planned trajectory.

-

5.

Flexibility. Our system is designed to be generic and easily expanded. New scenarios can be added by creating a new planning domain (for HATP and the Collaboration Planners), and eventually adding new actions to the system repertoire.

5 Experiments

For our experiments, we present a scenario where the robot and a human have a joint goal: cleaning a set of furniture. The two partners must place the tapes present on the furniture in a trashbin. We will use two pieces of furniture, identified as TABLE_4 and IKEA_SHELF and three tapes, identified as GREY_TAPE, LOTR_TAPE and WALLE_TAPE. We will use one trashbin, named PINK_TRASHBIN. We will place these items differently in each example, depending on our needs and won’t necessary use all of them together.

We will present a set of runs of the system, which show its capacitiesFootnote 1:

-

Robot adapts: In this scenario (Fig. 2) the user is asked to clean the table, without agreeing before the start on a clear plan with the robot. The user is informed that the robot will try to help as it can. The user moves to the table and takes the WALLE_TAPE. At this point the robot notices that the user has completed an action and understands that he wants to clean the table.

The robot creates a plan and executes its part of it while monitoring the human, which executes its part without deviating from the plan calculated by the robot.

Robot adapts.This figure shows the robot’s representation of the scenario. The white tape is the WALLE_TAPE, while the blue one is the LOTR_TAPE. The round shapes represent the agents’ rechabilities, with red shapes representing robot reachabilities and green shapes human reachabilities. In this case only the human can reach the WALLE_TAPE while both agents can reach the LOTR_TAPE and the PINK_TRASHBIN. After the human takes the WALLE_TAPE the robot produces a plan where the human must throw the tape in the thrashbin while the robot can take the LOTR_TAPE and throw it in the trashbin

-

Modality switch and user plans: In this scenario (Fig. 3) the robot is the only agent able to reach both tapes, but it can’t reach the trashbin, which can instead be reached by the human. We tested this scenario in two different runs. In the first one we start with the robot in ‘robot plans’ modality. After exploring the environment the robot produces a plan and starts its execution.

While the robot is taking the LOTR_TAPE the human moves to take the WALLE_TAPE. This deviates from the robot plan, so it switches to the ‘robot adapts’ modality, communicating the change to the user. The user throws the WALLE_TAPE in the PINK_TRASHBIN and meanwhile the robot takes the LOTR_TAPE and handles it to the user. The user takes the LOTR_TAPE and throws it in the PINK_TRASHBIN, completing the task.

In the second run the robot is in the ‘user plans’ mode. The user is asked to clean the table as he wishes. The user asks the robot to take each tape and give it to him, throwing them in the trashbin.

-

Replanning after failed action: In this scenario (Fig. 4) the robot is the only agent able to reach the trashbin, while both agents can reach the two tapes. The robot is in ‘robot plans’ modality and, after examining the environment, produces a plan.

After taking and throwing the LOTR_TAPE, the robot tries to take the WALLE_TAPE, but fails because it’s too far. The robot informs the user and replans. The agents execute the plan, completing the task.

-

Replanning after human inactivity: In this run the robot computes that the GREY_TAPE and PINK_TRASHBIN are reachable only by the human, while the WALLE_TAPE is reachable only by the robot. The robot computes a plan and starts executing it, observing the human reactions. After an initial stage when the human is commited to the task, he doesn’t execute a part of the plan (taking the final tape and throwing it), so the robot looks for another plan. The only solution to the problem is the one already computed at the beginning, so the robot decides to ask the human to take the tape and throw it. A run of this scenario is shown in Fig. 5.

Modality switch and user plans. Another configuration of the environment, where the robot can reach the two tapes and the human can reach the thrashbin. The robot generates an initial plan from this situation. The block surrounding the give and receive actions means that they are considered a single joint action

The picture shows a run of our ‘replanning after human inactivity scenario’. The different rows show, starting from top to bottom: the real world picture, the world state representation built by the robot, symbolic facts input in the knowledge base at each time step, action taken by each agent at each time step, the current plan calculated by the robot

6 Conclusions

The studied experiment shows that our system is able to exhibit the capacities discussed in Sect. 3. Also, an interesting aspect of our system is that it’s generic enough to be adapted to other manipulation scenarios, even involving more than one human. We review some of the main results of our experiments:

-

The system is able to handle joint goals. The system is able to create shared plans with different users, taking into account the capabilities of each agent. When unexpected changes in the world or task status arise, the system is able to quickly replan, adapting to new scenarios. The system is able to execute this joint goal in a human aware way.

-

The system is able to handle joint actions. The system is able to estimate user intentions in collaborative tasks and to choose appropriate actions, using a set of POMDP models.

-

The system is able to handle user preferences. The system is able to adapt itself to user preferences, allowing the human partner to give commands or to be more passive in its role and switching from one modality to the other.

-

The system is able to handle each agent beliefs. The system is able to represent different belief states for different agents and to take into accout what users can see, reach and know when creating a plan.

-

The system is able to monitor human actions. The system is able to monitor human actions using a mechanism that is simple, but fast and efficient for the studied scenarios.

To further understand the advantages and disadvantages of these different modalities, and also in which conditions one or the others are pertinent, we need to conduct user studies, which will be done in the near future.

Notes

- 1.

Videos from our experiments can be seen at http://homepages.laas.fr/mfiore/iser2014.html.

References

Bratman, M.E.: Shared agency. In: Philosophy of the Social Sciences: Philosophical Theory and Scientific Practice, pp. 41–59 (2009)

Cirillo, M., Karlsson, L., Saffiotti, A.: A Framework for human-aware robot planning. In: Proceedings of the Scandinavian Conference on Artificial Intelligence (SCAI), Stockholm, SE (2008). http://www.aass.oru.se/~asaffio/

Clodic, A., Cao, H., Alili, S., Montreuil, V., Alami, R., Chatila, R.: Shary: a supervision system adapted to human-robot interaction. In: Experimental Robotics, pp. 229–238. Springer (2009)

Demiris, Y., Khadhouri, B.: Hierarchical attentive multiple models for execution and recognition of actions. Robot. Auton. Syst. 54(5), 361–369 (2006)

Flavell, J.: Perspectives on Perspective Taking, pp. 107–139. L. Erlbaum Associates (1992)

Fong, T.W., Kunz, C., Hiatt, L., Bugajska, M.: The human-robot interaction operating system. In: 2006 Human-Robot Interaction Conference. ACM, March 2006

Gray, J., Breazeal, C., Berlin, M., Brooks, A., Lieberman, J.: Action parsing and goal inference using self as simulator. In: IEEE International Workshop on Robot and Human Interactive Communication, 2005. ROMAN 2005, pp. 202–209. IEEE (2005)

Karami, A.-B., Jeanpierre, L., Mouaddib, A.-I.: Human-robot collaboration for a shared mission. In: 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2010, pp. 155–156. IEEE (2010)

Kollar, T., Tellex, S., Roy, D., Roy, N.: Toward understanding natural language directions. In: HRI, pp. 259–266 (2010)

Koppula, H.S., Saxena, A.: Anticipating human activities using object affordances for reactive robotic response. In: Robotics: Science and Systems, Berlin (2013)

Lallement, R., de Silva, L., Alami, R.: HATP: an HTN planner for robotics. In: 2nd ICAPS Workshop on Planning and Robotics, PlanRob 2014 (2014)

Lemaignan, S., Sisbot, A., Alami, R.: Anchoring interaction through symbolic knowledge. In: Proceedings of the 2011 Human-Robot Interaction Pioneers Workshop (2011)

Liu, P., Glas, D.F., Kanda, T., Ishiguro, H., Hagita. N.: It’s not polite to point: generating socially-appropriate deictic behaviors towards people. In: Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction, pp. 267–274. IEEE Press (2013)

Mainprice, J., Sisbot, E.A., Jaillet, L., Cortes, J., Alami, R., Simeon, T.: Planning human-aware motions using a sampling-based costmap planner. In: IEEE International Conference on Robotics and Automation (2011)

Matuszek, C., Fox, D., Koscher, K.: Following directions using statistical machine translation. In: International Conference on Human-Robot Interaction, ACM Press (2010)

Milliez, G., Warnier, M., Clodic, A., Alami, R.: A framework for endowing interactive robot with reasoning capabilities about perspective-taking and belief management. In: Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication (2014)

Mutlu, B., Terrell, A., Huang, C.-M.: Coordination mechanisms in human-robot collaboration. In: ACM/IEEE International Conference on Human-Robot Interaction (HRI)-Workshop on Collaborative Manipulation, pp. 1–6 (2013)

Nau, D., Au, T.C., Ilghami, O., Kuter, U., Murdock, J.W., Wu, D, Yaman, F: SHOP2: an HTN planning system. J. Artif. Intell. Res. 379–404 (2003)

Nikolaidis, S., Shah, J.: Human-robot cross-training: computational formulation, modeling and evaluation of a human team training strategy. In: Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction, pp. 33–40. IEEE Press (2013)

O’Keefe, J.: The Spatial Prepositions. MIT Press, Cambridge (1999)

Pandey, A.K., Alami, R.: Mightability maps: a perceptual level decisional framework for co-operative and competitive human-robot interaction. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (2010)

Ros, R., Lemaignan, S., Sisbot, E.A., Alami, R., Steinwender, J., Hamann, K., Warneken, F.: Which one? grounding the referent based on efficient human-robot interaction. In: 19th IEEE International Symposium in Robot and Human Interactive Communication (2010)

Sebanz, N., Bekkering, H., Knoblich, G.: Joint action: bodies and minds moving together. Trends Cogn. Sci. 10(2), 70–76 (2006)

Shah, J., Wiken, J., Williams, B., Breazeal, C.: Improved human-robot team performance using chaski, a human-inspired plan execution system. In: Proceedings of the 6th International Conference on Human-Robot Interaction, pp. 29–36. ACM (2011)

Sisbot, E.A., Clodic, A, Alami, R., Ransan, M.: Supervision and Motion Planning for a Mobile Manipulator Interacting with Humans (2008)

Skubic, M., Perzanowski, D., Blisard, S., Schultz, A., Adams, W., Bugajska, M., Brock, D.: Spatial language for human-robot dialogs. IEEE Trans. Syst. Man Cybern. Part C: Appl. Rev. 34(2), 154–167 (2004)

Torrey, C., Fussell, S.R., Kiesler, S.: How a robot should give advice. In: 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2013, pp. 275–282. IEEE (2013)

Trafton, G., Hiatt, L., Harrison, A., Tamborello, F., Khemlani, S., Schultz, A.: ACT-R/E: an embodied cognitive architecture for human-robot interaction. J. Hum. Rob. Interact. 2(1), 30–55 (2013)

Trafton, J., Cassimatis, N., Bugajska, M., Brock, D., Mintz, F., Schultz, A.: Enabling effective human-robot interaction using perspective-taking in robots. IEEE Trans. Syst. Man Cybern. Part A, 460–470 (2005)

Tversky, B., Lee, P., Mainwaring, S.: Why do speakers mix perspectives? Spat. Cogn. Comput. 1(4), 399–412 (1999)

Warnier, M., Guitton, J., Lemaignan, S., Alami, R.: When the robot puts itself in your shoes. Managing and exploiting human and robot beliefs. In: Proceedings of the 21th IEEE International Symposium in Robot and Human Interactive Communication (2012)

Acknowledgments

This work was conducted within the EU SAPHARI project (www.saphari.eu) funded by the E.C. division FP7-IST under contract ICT-287513.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Fiore, M., Clodic, A., Alami, R. (2016). On Planning and Task Achievement Modalities for Human-Robot Collaboration. In: Hsieh, M., Khatib, O., Kumar, V. (eds) Experimental Robotics. Springer Tracts in Advanced Robotics, vol 109. Springer, Cham. https://doi.org/10.1007/978-3-319-23778-7_20

Download citation

DOI: https://doi.org/10.1007/978-3-319-23778-7_20

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-23777-0

Online ISBN: 978-3-319-23778-7

eBook Packages: EngineeringEngineering (R0)