Abstract

The problem of fitting logistic regression to binary model allowing for missppecification of the response function is reconsidered. We introduce two-stage procedure which consists first in ordering predictors with respect to deviances of the models with the predictor in question omitted and then choosing the minimizer of Generalized Information Criterion in the resulting nested family of models. This allows for large number of potential predictors to be considered in contrast to an exhaustive method. We prove that the procedure consistently chooses model \(t^{*}\) which is the closest in the averaged Kullback-Leibler sense to the true binary model t. We then consider interplay between t and \(t^{*}\) and prove that for monotone response function when there is genuine dependence of response on predictors, \(t^{*}\) is necessarily nonempty. This implies consistency of a deviance test of significance under misspecification. For a class of distributions of predictors, including normal family, Rudd’s result asserts that \(t^{*}=t\). Numerical experiments reveal that for normally distributed predictors probability of correct selection and power of deviance test depend monotonically on Rudd’s proportionality constant \(\eta \).

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

We consider a general binary regression model in which responses \(y\in \{0,1\}\) are related to explanatory variables \(\mathbf {x}=(1,x_1,\ldots ,x_p)'\in R^{p+1}\) by the equation

where vector \(\varvec{\beta }=(\beta _0,\beta _1,\ldots ,\beta _p)'\) is an unknown vector of parameters and \(q: R\rightarrow (0,1)\) is a certain unknown response function. To the data pertaining to (1) we fit the logistic regression model i.e. we postulate that the posterior probability that \(y=1\) given \(\mathbf {x}\) is of the form

where \(\varvec{\upgamma }\in R^{p+1}\) is a parameter. Our main interest here is the situation when the logistic model is misspecified i.e. \(p\ne q\). Let \(t=\{0\}\cup \{1\le k\le p:\beta _k\ne 0\}\) be the true model i.e. consisting of indices of nonzero coefficients corresponding to true predictors and of the intercept denoted by 0. Our task may be either to identify model t when incorrectly specified model (2) is fitted or, less ambitiously, to verify whether t contains indices corresponding to predictors i.e. whether response depends on predictors at all. The situation of incorrect model specification is of importance because of obvious reasons as in real applications usually we have no prior knowledge about data generation process and, moreover, goodness-of-fit checks may yield inconclusive results. Thus investigating to what extent selection and testing procedures are resistant to response function misspecification is of interest. This is especially relevant with large number of possible features and sparsity when selecting true predictors is a challenge in itself and is further exacerbated by possible model misspecification. Moreover, some data generation mechanisms lead directly to misspecified logistic model. As an example we mention [6] who consider the case of logistic model when each response is mislabeled with a certain fixed probability.

In the paper we consider selection procedures specially designed for large p scenario which use Generalized Information Criterion (GIC). This criterion encompasses, for specific choices of parameters, such widely used criteria as Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC). AIC is known to overestimate the dimension of the true model (see e.g. [4]) whereas BIC in the case of correctly specified linear model with fixed p is consistent [7]. There are many modifications of AIC and BIC which among others are motivated by the phenomenon that for large p depending on the sample size BIC also choses too large number of variables. We mention in particular modified BIC [3, 23], Extended BIC (EBIC) which consists in adding a term proportional to \(\log p\) to BIC [8, 9] and Risk Inflation Criterion [15]. Qian and Field [20] consider GIC and proved its consistency under correct specification. In this line of research [9] propose minimization of EBIC over all possible subsets variables of sizes not larger than k when k is some sufficiently large number. However, this approach becomes computationally prohibitive for even moderate k. Other important approach is based on \(l_1\)-penalized loglikelihood and its extensions and modifications such as Elastic Net (see [24]) and SCAD [14]. It is known that \(l_1\)-penalization leads to cancelation of some coefficients and thus can be considered as model selection method. For discussion of other approaches we refer to [5, 10, 17] and references there.

The aims of the paper are twofold. We first introduce two-step modification of a procedure based on GIC, the minimizer of which over the family of all possible models is used as a selector of relevant variables. In the case when number of possible predictors is large such an approach is practically unfeasible due to high computational cost of calculating GIC for all possible subsets. This is a reason, likely the only one, why these methods are not frequently used and sequential greedy methods are applied in practice. However, greedy methods lack theoretical underpinning and it is known that they may miss true predictors. We thus propose a specific two-stage greedy method which consists in first ranking the predictors according to residual deviances of the models containing all variables but the considered one. Then in the second stage GIC is minimized over the nested family of models pertaining to increasing sets of the most important variables. We prove that such procedure picks with probability tending to 1 the logistic model \(t^{*}\) which minimizes averaged Kullback-Leibler distance from the binary model (1). This is to the best of our knowledge the first formal result on the consistency of greedy selection procedure for logistic regression even in the case when \(p=q\). As a by-product we obtain the known result concerning behaviour of GIC optimized over the family of all models due to [22]. As in their paper the very general framework is considered for which stringent assumptions are needed we note that it is possible to prove the result under much weaker conditions (cf. their Proposition 4.2 (i), (ii) and Theorem 2 below). In view of the result the nature of the interplay between \(t^{*}\) and t becomes relevant. However, it seems that the problem, despite its importance, has failed to attract much attention. Addressing this question, admittedly partially, is the second aim of the paper. We discuss Rudd’s (1983) result in this context which states that for certain distributions of predictors \(\varvec{\beta }^{*}=\eta \varvec{\beta }\) for some \(\eta \in R\), where \(\varvec{\beta }^*\) which minimizes averaged Kullback-Leibler distance from the binary model to logistic regressions. This obviously implies that \(t^{*}=t\) if \(\eta \ne 0\). As our main result in this direction we prove in Theorem 4 if t contains genuine regressors so does \(t^*\) provided that q is monotone and not constant. This implies in particular that in such a case significance test for regressors constructed under logistic model is consistent under misspecification. We also discuss the relevance of proved results in practice by investigating probability of correct model selection for two-stage procedure and power of test of significance for moderate sample sizes. In particular, we empirically verify that, surprisingly, misspecification of the model may lead to larger probabilities of correct selection and positive selection rate than for correct specification and stress the importance of the proportionality constant \(\eta \) in this context. Namely, it turns out that this phenomenon occurs mostly in the cases when \(\eta >1\). Moreover, we established that probability of correct selection and power of deviance test depend monotonically on \(\eta \).

Generalization to the case when p is large in comparison to n is left for further study. As the fitting of the full model in the first stage of the procedure excludes its application when \(p>n\) an initial screening of variables which is commonly done in applications (see e.g. [9]) would be necessary.

The paper is structured as follows. Section 2 contains preliminaries, in Sect. 3 we introduce and prove consistency of two-step greedy GIC procedure. Interplay between t and \(t^*\) is discussed in Sect. 4 together with its consequence for consistency of deviance test under misspecification. In Sect. 5 we describe our numerical experiments and Appendix contains proofs of auxiliary lemmas.

2 Preliminaries

Observe that the first coordinate of \(\varvec{\beta }\) in (1) corresponds to the intercept and remaining coefficients to genuine predictors which are assumed to be random variables. We assume that \(\varvec{\beta }\) is uniquely defined. The data consists of n observations \((y_i,\mathbf {x}_i)\) which are generated independently from distribution \(P_{\mathbf {x},y}\) such that conditional distribution \(P_{y|\mathbf {x}}\) is given by Eq. (1) and distribution of attribute vector \(\mathbf {x}\) is \((p+1)\)-dimensional with first coordinate equal to 1. We consider the case when \(\mathbf {x}\) is random since in this situation behaviour of \(\varvec{\beta }^*\) of maximum likelihood estimator \(\hat{\varvec{\beta }}\) for incorrect model specification can be more easily described (cf. definition (6) below, see however [13] for analogous development for deterministic predictors).

As a first remark note that as distribution \(P_{\mathbf{x},y}\) which satisfies (1) with parameters q and \(\varvec{\beta }\) satisfies also (1) for parameters \(\tilde{q}\) and \(c\varvec{\beta }+\alpha \) where \(c>0\) and \(\tilde{q}(s)=q((s-\alpha )/c)\). It follows that when q is unknown only the direction of the vector \({\tilde{\varvec{\beta }}}=(\beta _1,\ldots ,\beta _p)'\) may be possibly recovered.

Let \(\mathbf {X}\) be \(n\times (p+1)\) design matrix with rows \(\mathbf {x}_1,\ldots ,\mathbf {x}_n\) and \(\mathbf {Y}=(y_1,\ldots ,y_n)'\) be a response vector. Under the logistic regression model, the conditional log-likelihood function for the parameter \(\varvec{\upgamma }\in R^{p+1}\) is

Note that we can alternatively view \(l(\varvec{\upgamma },\mathbf {Y}|\mathbf {X})\) defined above as an empirical risk corresponding to the logistic loss. Define also the score function for the parameter \(\varvec{\upgamma }\in R^{p+1}\)

where \(\mathbf {p}(\varvec{\upgamma })=(p(\mathbf {x}_1'\varvec{\upgamma }),\ldots ,p(\mathbf {x}_n'\varvec{\upgamma }))'\). The negative Hessian matrix will be denoted by

where \(\varPi (\varvec{\upgamma })=\text {diag}\{p(\mathbf {x}_1'\varvec{\upgamma })(1-p(\mathbf {x}_1'\varvec{\upgamma })),\ldots ,p(\mathbf {x}_n'\varvec{\upgamma })(1-p(\mathbf {x}_n'\varvec{\upgamma }))\}\). Under assumption \(\mathbf {E}(x_{k}^{2})<\infty \), for \(k=1,\ldots ,p\) it follows from the Law of Large Numbers that

Observe that in the case of incorrect model specification \(\text {cov}[s_n(\varvec{\upgamma })|\mathbf {x}_1,\ldots ,\mathbf {x}_n]=\sum _{i=1}^{n}\{q(\mathbf {x}_i'\varvec{\upgamma })[1-q(\mathbf {x}_i'\varvec{\upgamma })]\}\mathbf {x}_i\mathbf {x}_i'\) is not equal to negative Hessian \(J_{n}(\varvec{\upgamma })\) as in the case of correct model specification when \(p(\cdot )=q(\cdot )\).

The maximum likelihood estimator (ML) \(\hat{\varvec{\beta }}\) of parameter \(\varvec{\beta }\) is defined to be

Moreover define

where

is the Kulback-Leibler distance from the true Bernoulli distribution with the parameter \(q(\mathbf {x}'\varvec{\beta })\) to the postulated one with the parameter \(p(\mathbf {x}'\varvec{\upgamma })\). Thus \(\varvec{\beta }^{*}\) is the parameter corresponding to the logistic model closest to binary model with respect to Kullback-Leibler divergence. It follows from [16] that

Using the fact that \(\partial p(\mathbf {x}'\varvec{\upgamma })/\partial \varvec{\upgamma }=p(\mathbf {x}'\varvec{\upgamma })[1-p(\mathbf {x}'\varvec{\upgamma })]\mathbf {x}\) it is easy to see that

and

is positive-semidefinite. Thus from the first of the above equations we have

Note that as the first coordinate of \(\mathbf {x}\) is equal one which corresponds to intercept, the pertaining equation is

We also have

Let \(K_{n}(\varvec{\upgamma })=\text {cov}[s_n(\varvec{\upgamma })]\) be covariance matrix of score function \(s_n(\varvec{\upgamma })\). From above facts we have

The form of \(K_n(\varvec{\beta }^*)\) will be used in the proof of Lemma 2. From (6) it is also easy to see that

It follows from [19] that \(\varvec{\beta }^*\) exists provided \(0<q(\varvec{\beta }'x)<1\) almost everywhere with respect to \(P_\mathbf{x}\) and is unique provided \(E||\mathbf{x}||<\infty \). In the following we will always assume that \(\varvec{\beta }^*\) exists and is unique. In the case of correct specification, when \(p(\cdot )=q(\cdot )\) we have \(\varvec{\beta }^*=\varvec{\beta }\). In general \(\varvec{\beta }^*\) may be different from \(\varvec{\beta }\). The most immediate example is when \(q(s)=p(-s)\) which corresponds to logistic model with switched classes. In this case \(\varvec{\beta }^*=-\varvec{\beta }\). Li and Duan [19], p. 1019 give an example when supports of \(\beta \) and \(\beta ^*\) are disjoint for a loss different than logistic. Let \(t^*=\{0\}\cup \{1\le k\le p:\beta _k^*\ne 0\}\). In Sect. 4 we discuss the relationships between \(\varvec{\beta }\) and \(\varvec{\beta }^*\) as well as between t and \(t^*\) in more detail. In Sect. 3 we give conditions under which set \(t^*\) is identified consistently. Under certain assumptions we can also have \(t^*=t\) and thus identification of set t is possible.

Let us discuss the notation used in this paper. Let \(m\subseteq f:=\{0,1,\ldots ,p\}\) be any subset of variable indices and |m| be its cardinality. Each subset m is associated with a model with explanatory variables corresponding to this subset. In the following f stands for the full model containing all available variables and by null we denote model containing only intercept (indexed by 0). We denote by \(\hat{\varvec{\beta }}_m\) a maximum likelihood estimator calculated for model m and by \(\varvec{\beta }^*_m\) the minimizer of averaged Kullback-Leibler divergence when only predictors belonging to m are considered. Thus \(\varvec{\beta }^*=\varvec{\beta }^*_f\). Moreover, \(\varvec{\beta }^*(m)\) stands for \(\varvec{\beta }^*\) restricted to m. Depending on the context these vectors will be considered as |m|-dimensional or as their \((p+1)\)-dimensional versions augmented by zeros. We need the following fact stating that when \(m\supseteq t^*\) then \(\varvec{\beta }_{m}^*\) is obtained by restricting \(\varvec{\beta }^*\) to m.

Lemma 1

Let \(m\supseteq t^*\) and assume \(\varvec{\beta }^*\) is unique. Then \(\varvec{\beta }^*_m=\varvec{\beta }^*(m).\)

Proof

The following inequalities hold

From the definition of projection the above inequality is actually equality and from the uniqueness the assertion follows.

3 Consistency of Two-Step Greedy GIC Procedure

We consider the following model selection criterion

where m is a given submodel containing |m| variables, \(\hat{\varvec{\beta }}_m\) is a maximum likelihood estimator calculated for model m (augmented by zeros to p-dimensional vector) and \(a_n\) is penalty. Observe that \(a_n=\log (n)\) corresponds to Bayesian Information Criterion and \(a_n=2\) corresponds to Akaike Information Criterion. GIC was considered e.g. by [22]. We would like to select a model which minimizes GIC over a family

i.e. the family of all submodels of f containing intercept. Denote the corresponding selector by \(\hat{t}^*\). As \(\mathcal {M}\) consists of \(2^{p}\) models and determination of \(\hat{t}^*\) requires calculation of GIC for all of them this becomes computationally unfeasible for large p. In order to restrict the space of models over which the optimal value of criterion function is sought we propose the following two-stage procedure.

Step 1. The covariates \(\{1,\ldots ,p\}\) are ordered with respect to the residual deviances

Step 2. The considered model selection criterion GIC is minimized over a family

We define \(\hat{t}^*_{gr}\) as the minimizer of GIC over \(\mathcal {M}_{\text {nested}}\). The intuition behind the first step of the procedure is that by omitting the true regressors from the model their corresponding residual deviances are increased significantly more than when spurious ones are omitted. Thus the first step may be considered as screening of the family \(\mathcal {M}\) and reducing it to \(\mathcal {M}_{\text {nested}}\) by whittling away elements likely to be redundant.

The following assumption will be imposed on \(P_{\mathbf {x}}\) and penalization constants \(a_n\)

-

(A1)

\(J(\varvec{\beta }^*)\) is positive definite matrix.

-

(A2)

\(E(x_k^2)<\infty \), for \(k=1,\ldots ,p\).

-

(A3)

\(a_n\rightarrow \infty \) and \(a_n/n\) is nonincreasing and tends to 0 as \(n\rightarrow \infty \).

The main result of this section is the consistency of the greedy procedure defined above.

Theorem 1

Under assumptions (A1)–(A3) greedy selector \(\hat{t}^*_{gr}\) is consistent i.e. \(P(\hat{t}^*_{gr}=t^*)\rightarrow 1\) when \(n\rightarrow \infty \).

The following two results which are of independent interest constitute the proof of Theorem 1. The first result asserts consistency of \(\hat{t}^*\). This is conclusion of Proposition 4.2 (i) and (iii) in [22]. However, as the framework in the last paper is very general, it is possible to prove the assertions there under much milder assumptions without assuming e.g. that loglikelihood satisfies weak law of large numbers uniformly in \(\beta \) and similar assumption on \(J_n\). Theorem 3 states that after performing the first step of the procedure relevant regressors will precede the spurious ones with probability tending to 1. Consistency of GIC in the almost sure sense was proved by [20] for deterministic regressors under some extra conditions.

Theorem 2

Assume (A1)–(A3). Then \(\hat{t}^*\) is consistent i.e.

Consider two models j and k and denote by

deviance of the model k from the model j.

Theorem 3

Assume conditions (A1)–(A2). Then for all \(i\in t^*\setminus \{0\}\) and \(j\not \in t^*\setminus \{0\}\) we have

Proof

(Theorem 1 ) As the number of predictors is finite and does not depend on n the assertion in Theorem 3 implies that with probability tending to one model \(t^*\) will be included in \(\mathcal {M}_{\text {nested}}\). This in view of Theorem 2 yields the proof of Theorem 1.

The following lemmas will be used to prove Theorem 2. Define sequence

Remark 1

It follows from Lemma 6 that under assumptions (A2) and (A3) if \(t^*\setminus {0}\ne \emptyset \) we have \(nd_n^2/a_n\xrightarrow {P}\infty \).

Two lemmas below are pivotal in proving Theorem 2. The proofs are in the appendix.

Lemma 2

Let \(c\supseteq m\supseteq t^*\). Assume (A1)–(A2). Then \(D_{mc}=O_{P}(1)\).

Lemma 3

Let \(w\not \supseteq t^*\) and \(c\supseteq t^*\). Assume (A1)–(A2). Then \(P(D_{wc}>\alpha _1nd_{n}^2)\rightarrow 1\) as \(n\rightarrow \infty \), for some \(\alpha _1>0\).

Proof

(Theorem 3 ) It follows from Lemma 3 that for \(i\in t\) we have \(P[D^{n}_{f\setminus \{i\} f}>\alpha _{1}nd_n^2]\rightarrow 1\), for \(\alpha _1>0\) and by Remark 1 \(nd_n^2\xrightarrow {P}\infty \). By Lemma 2 we have that \(D_{f\setminus \{j\}f}=O_{P}(1)\) for \(j\in t^*\), which end the proof.

Proof

(Theorem 2 ) Consider first the case \(t^*=\{0\}\cup m\), \(m\ne \emptyset \). We have to show that for all models \(m\in \mathcal {M}\) such that \(m\ne t^{*}\)

as \(n\rightarrow \infty \) which is equivalent to \(P[D_{mt^{*}}>a_n(|t^{*}|-|m|)]\rightarrow 1\). In the case of \(m\not \supseteq t^{*}\) this follows directly from Lemma 3 and \(nd_n^{2}/a_n\xrightarrow {P}\infty \). Consider the case of \(m\supset t^{*}\). By Lemma 2 \(D_{mt^{*}}=O_{P}(1)\). This ends the first part of the proof in view of \(a_n(|t^{*}|-|m|)\rightarrow -\infty \). For \(t^*=\{0\}\) we only consider the case \(m\supset t^*\) and the assertion \(P[D_{mt^{*}}>a_n(1-|m|)]\rightarrow 1\) follows again from Lemma 2.

4 Interplay Between t and \(t^*\)

In view of the results of the previous section \(t^*\) can be consistently selected by two-step GIC procedure. As we want to choose t not \(t^*\), the problem what is the connection between these two sets naturally arises. First we study the problem whether it is possible that \(t^*\) is \(\{0\}\) whereas t does contain genuine regressors. Fortunately, the answer under some mild conditions on the distribution \(P_{\mathbf{x},y}\), including monotonicity of response function q, is negative. We proceed by reexpressing the fact that \(t^*=\{0\}\) in terms of conditional expectations and then showing that the obtained condition for monotone q can be satisfied only in the case when y and \(\mathbf{x}\) are independent.

Let \(\tilde{\varvec{\beta }}=(\beta _1,\ldots ,\beta _p)\), \({\tilde{\varvec{\beta }}}^{*}=(\beta _1^*,\ldots ,\beta _p^*)\) and \(\tilde{\mathbf {x}}=(x_1,\ldots ,x_p)\). The first proposition (proved in the appendix) gives the simple equivalent condition for \(t^*=\{0\}\).

Proposition 1

\(E(\mathbf {x}|y=1)=E(\mathbf {x}|y=0)\) if and only \(t^*=\{0\}\).

Let \(f(\tilde{\mathbf {x}}|y=1)\) and \(f(\tilde{\mathbf {x}}|y=0)\) be the density functions of \(\tilde{\mathbf {x}}\) in classes \(y=1\) and \(y=0\), respectively and denote by \(F(\tilde{\mathbf {x}}|y=1)\) and \(F(\tilde{\mathbf {x}}|y=0)\) the corresponding probability distribution functions. Note that the above proposition in particular implies that in the logistic model for which expectations of \(\mathbf{x}\) in both classes are equal we necessarily have \(\tilde{\varvec{\beta }}=0\). The second proposition asserts that this is true for a general binary model under mild conditions. Thus in view of the last proposition under these conditions \(t^*=\{0\}\) is equivalent to \(t=\{0\}\).

Proposition 2

Assume that q is monotone and densities \(f(\tilde{\mathbf {x}}|y=1)\), \(f(\tilde{\mathbf {x}}|y=0)\) exist. Then \(E(\tilde{\mathbf {x}}|y=1)=E(\tilde{\mathbf {x}}|y=0)\) implies \(f(\tilde{\mathbf {x}}|y=1)=f(\tilde{\mathbf {x}}|y=0)\) a.e., i.e. y and \(\tilde{\mathbf {x}}\) are independent.

Proof

Define \(h(\tilde{\mathbf {x}})\) as the density ratio of \(f(\tilde{\mathbf {x}}|y=1)\) and \(f(\tilde{\mathbf {x}}|y=0)\). Observe that as

we have that \(h(\tilde{\mathbf {x}})=w(\tilde{\mathbf {x}}'\tilde{\beta })\) and w is monotone.

Consider first the case \(p=1\). It follows from the monotone likelihood ratio property (see [18], Lemma 2, Sect. 3) that since \(h(\tilde{\mathbf {x}})\) is monotone then conditional distributions \(F(\tilde{\mathbf {x}}|y=1)\) and \(F(\tilde{\mathbf {x}}|y=0)\) are ordered and as their expectations are equal this implies \(F(\tilde{\mathbf {x}}|y=1)=F(\tilde{\mathbf {x}}|y=0)\) and thus the conclusion for \(p=1\).

For \(p>1\) assume without loss of generality that \(\beta _1\ne 0\) and consider the transformation \(\mathbf {z}=(z_1,\ldots ,z_p)=({\tilde{\varvec{\beta }}}'{\tilde{\mathbf {x}}},x_2,\ldots ,x_p)'\). Denote by \(\tilde{f}(\mathbf {z}|y=1)\) and \(\tilde{f}(\mathbf {z}|y=0)\) densities of \(\mathbf {z}\) in both classes. It is easy to see that we have

and

It follows from (13) that marginal densities \(\tilde{f}_{1}(z_1|y=1)\), \(\tilde{f}_{1}(z_1|y=0)\) satisfy \(\tilde{f}_{1}(z_1|y=1)/\tilde{f}_{1}(z_1|y=0)=w(z_1)\) and the first part of the proof yields \(\tilde{f}_{1}(z_1|y=1)=\tilde{f}_{1}(z_1|y=0)\).

Thus we have for fixed \(z_1\)

which implies that for any \(z_1\) we have \(\tilde{f}(z_2,\ldots ,z_p|z_1,{y\,\,=\,\,1})=\tilde{f}(z_2,\ldots ,z_p|z_1,y=0)\) and thus \(\tilde{f}(\mathbf {z}|y=1)=\tilde{f}(\mathbf {z}|y=0)\) and consequently \(f(\tilde{\mathbf {x}}|y=1)=f(\tilde{\mathbf {x}}|y=0)\) which ends the proof.

Observe now that in view of (12) if \(f(\tilde{\mathbf {x}}|y=1)=f(\tilde{\mathbf {x}}|y=0)\) then \(q(\beta _0+\tilde{\mathbf {x}}'\tilde{\varvec{\beta }})\) is constant and thus \(\tilde{\varvec{\beta }}=0\) if \(1, x_1,\ldots ,x_p\) are linearly independent with probability 1 i.e. \( \mathbf {x}'\mathbf {b}=b_0\) a.e. implies that \(\mathbf {b}=0\) (or equivalently that \(\varSigma _{\mathbf {x}}>0)\). Thus we obtain

Theorem 4

If q is monotone and not constant and \(1,x_1,\ldots ,x_p\) are linearly independent with probability 1 then \(t^*=\{0\}\) is equivalent to \(t= \{0\}\) or, \(\tilde{\varvec{\beta }}^*\ne 0\) is equivalent to \( \tilde{\varvec{\beta }}\ne \mathbf {0}\).

Now we address the question when \(t=t^*\). The following theorem has been proved in [21], see also [19] for a simple proof based on generalized Jensen inequality.

Theorem 5

Assume that \({\varvec{\beta }}^*\) is uniquely defined and there exist \(\varvec{\theta }_{0}\), \(\varvec{\theta }_{1}\in R^{p}\) such that

-

(R)

\(E(\tilde{\mathbf {x}}|\tilde{\mathbf {x}}'\varvec{\beta }=z)=\varvec{\theta }_{0}+\varvec{\theta }_{1}z\).

Then \(\tilde{\varvec{\beta }}^*=\eta \tilde{\varvec{\beta }}\), for some \(\eta \in R\).

It is well known that Rudd’s condition (R) is satisfied for eliptically contoured distributions. In particular multivariate normal distribution satisfies this property (see e.g. [19], Remark 2.2). The case when \(\eta \ne 0\) plays an important role as it follows from the assertion of Theorem 5 that then \(t^*=t\). Note that in many statistical problems we want to consistently estimate the direction of vector \(\varvec{\beta }\) and not its length. This is true for many classification methods when we look for direction such that projection on this direction will give maximal separation of classes. Theorem 4 implies that under its conditions \(\eta \) in the assertion of Theorem 5 is not equal zero. Thus we can state

Corollary 1

Assume (A1)–(A3), (R) and conditions of Theorem 4. Then

i.e. two-stage greedy GIC is consistent for t.

Proof

Under (R) it follows from Theorem 5 that \(\tilde{\varvec{\beta }}^*=\eta \tilde{\varvec{\beta }}\) and as q is monotone and not constant it follows from Theorem 4 that \(\eta \ne 0\) and thus \(t=t^*\). This implies the assertion in view of Theorem 2.

In the next section by means of numerical experiments we will indicate that magnitude of \(\eta \) plays an important role for probability of correct selection. In particular we will present examples showing that when regressors are jointly normal and thus Ruud’s condition is satisfied, probability of correct selection of t by two-step greedy GIC can be significantly larger under misspecification than under correct specification.

The analogous result to Corollary 1 follows for \(\hat{t}^*\) when GIC is minimized over the whole family of \(2^p\) models.

The important consequence of Theorem 4 is that power of significance test will increase to 1 when there is dependence of y on \({\mathbf {x}}\) even when logistic model is misspecified and critical region is constructed for such model. Namely, consider significance test for \(H_{0}:\tilde{\varvec{\beta }}= 0\) with critical region

where \(\chi ^{2}_{k,1-\alpha }\) is quantile of order \(1-\alpha \) of chi-squared distribution with k degrees of freedom. Observe that if \(p=q\) it follows from Theorem 2 and [12] that under null hypothesis \(P(C_{1-\alpha }|H_0)\rightarrow \alpha \) what explains the exact form of the threshold of the rejection region when the logistic model is fitted. We have

Corollary 2

Assume that conditions of Theorem 4 are satisfied and \(\tilde{\varvec{\beta }}\ne 0\). Consider test of \(H_{0}:\tilde{\varvec{\beta }}=\mathbf {0}\) against \(H_{1}:\tilde{\varvec{\beta }}\ne \mathbf {0}\) with critical region \(\mathcal{C}_{1-\alpha }\) defined in (14). Then the test is consistent i.e. \(P(D_{null,\hat{t}^{*}_{gr}}\in C_{1-\alpha }|H_1)\rightarrow 1\).

Observe that if \(\tilde{\varvec{\beta }}^*\ne \mathbf {0}\) then in view of Remark 1 \(nd_n^2\rightarrow \infty \). Then the main results and Lemma 3 imply that when \(\tilde{\varvec{\beta }}^*\ne \mathbf {0}\) \(P[D_{null,\hat{t}^{*}_{gr}}>\chi ^{2}_{|\hat{t}^{*}_{gr}|-1,1-\alpha }]\rightarrow 1\) for any \(\alpha >0\) and the test is consistent. But in view of Theorem 4 \(\tilde{\varvec{\beta }}^*\ne \mathbf {0}\) is implied by \({\tilde{\varvec{\beta }}}\ne 0\).

5 Numerical Experiments

In this section we study how the incorrect model specification affects the model selection and testing procedures, in particular how it influences probability of correct model selection, positive selection rate, false discovery rate and power of a test of significance. In the case when attributes are normally distributed we investigate how these measures depend on proportionality constant \(\eta \) appearing in Rudd’s theorem.

Recall that t denotes the minimal true model. Convention that \(\varvec{\beta }_t\) is subvector of \(\varvec{\beta }\) corresponding to t is used throughout. We consider the following list of models.

-

(M1)

\(t=\{10\}\), \(\beta _{t}=0.2\),

-

(M2)

\(t=\{2,4,5\}\), \(\varvec{\beta }_t=(1,1,1)'\),

-

(M3)

\(t=\{1,2\}\), \(\varvec{\beta }_t=(0.5,0.7)'\),

-

(M4)

\(t=\{1,2\}\), \(\varvec{\beta }_t=(0.3,0.5)'\),

-

(M5)

\(t=\{1,\ldots ,8\}\), \(\varvec{\beta }_t=(0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9)'\).

Models (M3)–(M5) above are considered in [9]. The number of all potential attributes is initially set to be \(p=15\) so the proportion of relevant variables varies from \(6.66\,\%\) (for model M1) to \(53.33\,\%\) (for model M5). Recall that \(q(\cdot )\) denotes a true response function, i.e. for a given \(\mathbf {x}\), y is generated from Bernoulli distribution with success probability \(q(\mathbf {x}'\varvec{\beta })\). The logistic model defined in (2) is fitted. Let \(F_{N(0,1)}(\cdot )\) denote distribution function of standard normal random variable and \(F_{Cauchy(u,v)}(\cdot )\) distribution function of Cauchy distribution with location u and scale v. In the case of incorrect model specification, the following response functions are considered:

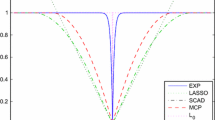

Studied response functions are shown in Fig. 1. Dashed line there corresponds to fitted logistic response function \(p(\cdot )\).

We consider two distributions of attributes, in both cases attributes are assumed to be independent. In the first scenario \(x_j\) have N(0, 1) distribution and in the second \(x_j\) are generated from Gaussian mixture \(0.95N(0,1)+\,0.05N(5,1)\). Thus in the first case condition (R) of Theorem 5 is satisfied. This implies \(\tilde{\varvec{\beta }}^*=\eta \tilde{\varvec{\beta }}\), for some \(\eta \in R\). One of our main goals is to investigate how the value of \(\eta \) affects the performance of model selection and testing procedures.

Recall that although Rudd’s condition is a property of distribution of predictors and \(\varvec{\beta }\) it follows from definition of \(\varvec{\beta }^*\) that \(\eta \) depends on the model as well as on misspecified response \(q(\cdot )\). Table 1 shows values of estimated proportionality constant \(\eta \), denoted by \(\hat{\eta }\). To calculate \(\hat{\eta }\), for each variable \(k\in t\), the value \(\hat{\beta }_k/\beta _k\), where \(\hat{\varvec{\beta }}\) is based on \(n=10^6\) observations is computed and then the values are averaged over all attributes. The first column corresponds to \(\eta =1\) and it allows to gauge the variability of \(\hat{\eta }\). Note also that the smallest value of \(\hat{\eta }\) equal 0.52 and the second largest (equal 1.74) are obtained for the model M2 and responses \(q_6\) and \(q_1\), respectively. It follows that in the first case estimated \(\varvec{\beta }\) is on average two times smaller than the true one and around 1.7 times larger in the second case. Observe also that when \(\hat{\varvec{\beta }}\) and \(\varvec{\beta }\) are approximately proportional, for q(s) such that \(q(s)>p(s)\) for \(s>0\) we can expect that \(\hat{\varvec{\beta }}>\varvec{\beta }\) as we try to match \(q(\mathbf {x}_i'\varvec{\beta })\) with \(p(\mathbf {x}_i'\hat{\varvec{\beta }})\). This results in \(\hat{\eta }>1\). Thus as expected for \(q_1\), \(\hat{\eta }\) is greater than 1, whereas for \(q_6\) it is smaller than 1.

It is noted in [2] (Sect. 4.2) that the probit function can be approximated by the scaled logit function as \(q_1(s)\approx p(a\cdot s)\), where the scaling constant \(a=\sqrt{8/\pi }\approx 1.6\) is chosen so that the derivatives of the two curves are equal for \(s=0\). Observe that constant a is very close to \(\hat{\eta }\) calculated for \(q_1\) (see Table 1).

In order to select the final model we use the two-step greedy procedure with Bayesian Information Criterion (BIC) described in Sect. 3. All fitted models include intercept.

Let \(\hat{t}^{*}\) denote the model selected by a given selection criterion. As the measures of performance we use the following indices:

-

probability of correct model selection (CS): \(P(\hat{t}^{*}=t)\),

-

positive selection rate (PSR): \(\mathbf {E}(|\hat{t}^{*}\cap t|/|t|)\),

-

false discovery rate (FDR): \(\mathbf {E}(|\hat{t}^{*}\setminus t|/|\hat{t}^{*}|)\),

-

power of significance test (POWER): \(P(D_{null,\hat{t}^{*}}\in C_{1-\alpha }|H_1)\), where \(\mathcal{C}_{1-\alpha }\) is critical region and \(H_{1}\) corresponds to models M1–M5. Level \(\alpha =0.05\) was adopted throughout.

Empirical versions of the above measures are calculated and the results are averaged over 200 simulations. In the case of difficult models containing several predictors with small contributions CS can be close to zero and thus PSR and FDR are much more revealing measures of effectiveness. Observe that PSR is an average fraction of correctly chosen variables with respect to all significant ones whereas FDR measures a fraction of false positives (selected variables which are not significant) with respect to all chosen variables. Thus \(\text {PSR}=1\) means that all significant variables are included in the chosen model whereas \(\text {FDR}=0\) corresponds to the case when no spurious covariates are present in the final model. Instead of using critical region based on asymptotic distribution defined in (14) for which the significance level usually significantly exceeded assumed one, Monte Carlo critical value is calculated. For a given n and p 10000 datasets from null model are generated, for each one \(\hat{t}^{*}\) and \(D_{null,\hat{t}^{*}}\) is computed and this yields distribution of \(D_{null,\hat{t}^{*}}\). The critical value is defined as empirical quantile of order \((1-\alpha )\) for \(D_{null,\hat{t}^{*}}\).

Table 2 shows the results for \(n=200\). The highlighted values are maximal value in row (minimal values in case of FDR) and the last column pertains to maximal standard deviation in row. Observe that the type of response function influences greatly all considered measures of performance. Values of POWER are mostly larger than CS as detection of at least one significant variable usually leads to rejection of the null hypothesis. The most significant differences are observed for model M5 for which it is difficult to identify all significant variables as some coefficients are close to zero but it is much easier to reject the null model. However, when there is only one significant variable in the model, the opposite may be true as it happens for model M1. Note also that CS, PSR and POWER are usually large for large \(\hat{\eta }\). To make this point more clear Fig. 2 shows the dependence of CS, PSR, POWER on \(\hat{\eta }\). Model M1 is not considered for this graph as it contains only one significant predictor. In the case of CS, PSR and POWER monotone dependence is evident. However FDR is unaffected by the value of \(\eta \) which is understandable in view of its definition.

Table 3 shows the results for \(n=200\) when attributes \(x_j\) are generated from Gaussian mixture \(0.95N(0,1)+0.05N(5,1)\). Observe that the greatest impact of the change of \(\mathbf{x}\) on CS occurs for truncated probit responses \(q_2\) and \(q_3\) for which in the case of M2–M5 CS drops dramatically. The change affects also PSR but to a lesser extent.

To investigate this effect further we consider the probit function truncated at levels c and \(1-c\)

which is a generalization of \(q_2\) and \(q_3\). Figure 7 shows how parameter c influences CS, PSR and FDR when the response is generated from \(q_{7}\) and attributes are generated from Gaussian mixture \(0.95N(0,1)+0.05N(5,1)\).

To illustrate the result concerning the consistency of greedy two-step model selection procedure stated in Corollary 1 we made an experiment in which dependency on n is investigated. Figures 3 and 4 show considered measures of performance with respect to n for models M4 and M5. Somehow unexpectedly in some situations the results for incorrect model specification are better than for the correct specification, e.g. for model (M4) CS is larger for \(q_1\), \(q_2\) and \(q_4\) than for \(q(\cdot )=p(\cdot )\) (cf. Fig. 3). The results for \(q_6\) are usually significantly worse than for p, which is related to the fact that \(\hat{\eta }\) for this response is small (see again Table 1). Observe also that the type of response function clearly affects the PSRs whereas FDRs are similar in all cases.

Figure 5 shows how the power of the test of significance for the selected model and for the full model depends on the value of coefficient corresponding to the relevant variable in model M1. We see that for both correct and incorrect specification the power for selected model is slightly larger than for the full model for sufficiently large value of coefficient \(\beta _{10}\). The difference is seen for smaller values of \(\varvec{\beta }\) in case of misspecification.

Finally we analysed how the number of potential attributes p influences the performance measures. The results shown in Fig. 6 for model M1 and \(n=500\) indicate that FDR increases significantly when spurious variables are added to the model. At the same time CS decreases when p increases, however, PSR is largely unaffected.

In conclusion we have established that when predictors are normal quality of model selection and power of the deviance test depend on the magnitude of Rudd’s constant \(\eta \). When \(\eta >1\) one can expect better results than for correct specification. Moreover, values of CS, PSR and POWER depend monotonically on \(\eta \).

In addition to tests on simulated data we performed an experiment on real data. We used Indian Liver Patient Dataset publicly available at UCI Machine Learning Repository [1]. This data set contains 10 predictors: age, gender, total Bilirubin, direct Bilirubin, total proteins, albumin, A/G ratio, SGPT, SGOT and Alkphos. The binary response indicates whether the patient has a liver disease or not. Our aim was to use real explanatory variables describing the patients to generate an artificial response from different response functions. This can mimic the situation in which the liver disease cases follow some unknown distribution depending on explanatory variables listed above. We applied the following procedure. Predictors chosen by stepwise backward selection using BIC were considered. Estimators pertaining to 3 chosen variables (1st-age, 4th-direct Bilirubin and 6th-albumin) are treated as new true parameters corresponding to significant variables whereas the remaining variables are treated as not significant ones. Having the new parameter \(\varvec{\beta }\) and vectors of explanatory variables \(\mathbf {x}_1,\ldots ,\mathbf {x}_n\) in the data we generate new \(y_1,\ldots ,y_n\) using considered response functions \(p,q_1,\ldots ,q_6\).

Table 4 shows fraction of simulations in which the given variable was selected to the final model when the two-step procedure was applied. Note that this measure is less restrictive than CS used in previous experiments. Observe that the choice of response function affects the probabilities, e.g. direct Bilirubin is chosen in \(80\,\%\) simulations for correct specification and only in \(12\,\%\) simulations for \(q_3\). The significant variables are most often chosen to the final model for p and \(q_1\). It is seen that direct Bilirubin is less likely to be selected in the case of most of the considered response functions (Fig. 7).

References

Bache K, Lichman M (2013) UCI machine learning repository. University of California, Irvine

Bishop CM (2006) Pattern recognition and machine learning. Springer, New York

Bogdan M, Doerge R, Ghosh J (2004) Modifying the Schwarz Bayesian Information Criterion to locate multiple interacting quantitative trait loci. Genetics 167:989–999

Bozdogan H (1987) Model selection and Akaike’s information criterion (AIC): the general theory and its analitycal extensions. Psychometrika 52:345–370

Burnham K, Anderson D (2002) Model selection and multimodel inference. A practical information-theoretic approach. Springer, New York

Carroll R, Pederson S (1993) On robustness in the logistic regression model. J R Stat Soc B 55:693–706

Casella G, Giron J, Martinez M, Moreno E (2009) Consistency of Bayes procedures for variable selection. Ann Stat 37:1207–1228

Chen J, Chen Z (2008) Extended Bayesian Information Criteria for model selection with large model spaces. Biometrika 95:759–771

Chen J, Chen Z (2012) Extended BIC for small-n-large-p sparse glm. Statistica Sinica 22:555–574

Claeskens G, Hjort N (2008) Model selection and model averaging. Cambridge University Press, Cambridge

Czado C, Santner T (1992) The effect of link misspecification on binary regression inference. J Stat Plann Infer 33:213–231

Fahrmeir L (1987) Asymptotic testing theory for generalized linear models. Statistics 1:65–76

Fahrmeir L (1990) Maximum likelihood estimation in misspecified generalized linear models. Statistics 4:487–502

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Stat Assoc 96:1348–1360

Foster D, George E (1994) The risk inflation criterion for multiple regression. Ann Stat 22:1947–1975

Hjort N, Pollard D (1993) Asymptotics for minimisers of convex processes. Unpublished manuscript

Konishi S, Kitagawa G (2008) Information criteria and statistical modeling. Springer, New York

Lehmann E (1959) Testing statistical hypotheses. Wiley, New York

Li K, Duan N (1991) Slicing regression: a link-free regression method. Ann Stat 19(2):505–530

Qian G, Field C (2002) Law of iterated logarithm and consistent model selection criterion in logistic regression. Stat Probab Lett 56:101–112

Ruud P (1983) Sufficient conditions for the consistency of maximum likelihood estimation despite misspecification of distribution in multinomial discrete choice models. Econometrica 51(1):225–228

Sin C, White H (1996) Information criteria for selecting possibly misspecified parametric models. J Econometrics 71:207–225

Zak-Szatkowska M, Bogdan M (2011) Modified versions of Baysian Information Criterion for sparse generalized linear models. Comput Stat Data Anal 5:2908–2924

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc B 67(2):301–320

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix A: Auxiliary Lemmas

Appendix A: Auxiliary Lemmas

This section contains some auxiliary facts used in the proofs. The following theorem states the asymptotic normality of maximum likelihood estimator.

Theorem 6

Assume (A1) and (A2). Then

where J and K are defined in (5) and (9), respectively.

The above Theorem is stated in [11] (Theorem 3.1) and in [16] ((2.10) and Sect. 5B).

Lemma 4

Assume that \(\max _{1\le i\le n}|\mathbf {x}_i'(\varvec{\upgamma }-\varvec{\beta })|\le C\) for some \(C>0\) and some \(\varvec{\upgamma }\in R^{p+1}\). Then for any \(\mathbf {c}\in R^{p+1}\)

Proof

It suffices to show that for \(i=1,\ldots ,n\)

Observe that for \(\varvec{\upgamma }\) such that \(\max _{i\le n}|\mathbf {x}_i'(\varvec{\upgamma }-\varvec{\beta })|\le C\) there is

By replacing \(\varvec{\beta }\) and \(\varvec{\upgamma }\) in (15) we obtain the upper bound for \(\mathbf {c}'J_{n}(\varvec{\upgamma })\mathbf {c}\).

Lemma 5

Assume (A1) and (A2). Then \(l(\hat{\varvec{\beta }},\mathbf {Y}|\mathbf {X})-l(\varvec{\beta }^{*},\mathbf {Y}|\mathbf {X})=O_{P}(1)\).

Proof

Using Taylor expansion we have for some \(\bar{\varvec{\beta }}\) belonging to the line segment joining \(\hat{\varvec{\beta }}\) and \(\varvec{\beta }^{*}\)

Define set \(A_{n}=\{\varvec{\upgamma }: ||\varvec{\upgamma }-\varvec{\beta }^{*}||\le s_n\}\), where \(s_n\) is an arbitrary sequence such that \(ns_n^2\rightarrow 0\). Using Schwarz and Markov inequalities we have for any \(C>0\)

Thus using Lemma 4 the quadratic form in (16) is bounded with probability tending to 1 from above by

which is \(O_{P}(1)\) as \(\sqrt{n}(\hat{\varvec{\beta }}-\varvec{\beta }^{*})=O_{P}(1)\) in view of Theorem 6 and \(n^{-1}J_{n}(\varvec{\beta }^*)\xrightarrow {P}J(\varvec{\beta }^*)\).

1.1 A.1 Proof of Lemma 2

As \(\varvec{\beta }^*_{m}=\varvec{\beta }^*_c\) we have for \(c\supseteq m \supseteq t^*\)

which is \(O_{P}(1)\) in view of Remark 1 and Lemma 5.

1.2 A.2 Proof of Lemma 3

The difference \(l(\hat{\varvec{\beta }}_c,\mathbf {Y}|\mathbf {X})-l(\hat{\varvec{\beta }}_w,\mathbf {Y}|\mathbf {X})\) can be written as

It follows from Lemma 5 and Remark 1 that the first term in (17) is \(O_{P}(1)\). We will show that the probability that the second term in (17) is greater or equal \(\alpha _1nd_n^2\), for some \(\alpha _1>0\) tends to 1. Define set \(A_n=\{\varvec{\upgamma }:||\varvec{\upgamma }-\varvec{\beta }^*||\le d_n\}\). Using the Schwarz inequality we have

with probability one. Define \(H_n(\varvec{\upgamma })=l(\varvec{\beta }^*,\mathbf {Y}|\mathbf {X})-l(\varvec{\upgamma },\mathbf {Y}|\mathbf {X})\). Note that \(H(\varvec{\upgamma })\) is convex and \(H(\varvec{\beta }^*)=0\). For any incorrect model w, in view of definition (11) of \(d_n\), we have \(\hat{\varvec{\beta }}_w\notin A_n\) for sufficiently large n. Thus it suffices to show that \(P(\inf _{\varvec{\upgamma }\in \partial A_n}H_n(\varvec{\upgamma })> \alpha _1 nd_n^{2})\rightarrow 1\), as \(n\rightarrow \infty \), for some \(\alpha _1>0\). Using Taylor expansion for some \(\bar{\varvec{\upgamma }}\) belonging to the line segment joining \(\varvec{\upgamma }\) and \(\varvec{\beta }^*\)

and the last convergence is implied by

It follows from Lemma 4 and (18) that for \(\varvec{\upgamma }\in A_n\)

Let \(\tau =\exp (-3)/2\). Using (20), the probability in (19) can be bounded from above by

Let \(\lambda _{1}^{-}=\lambda _{\min }(J(\varvec{\beta }))/2\). Assuming \(\alpha _1<\lambda _{1}^{-}\tau \), the first probability in (21) can be bounded by

Consider the first probability in (22). Note that \(s_n(\varvec{\beta }^*)\) is a random vector with zero mean and the covariance matrix \(K_n(\varvec{\beta }^*)\). Using Markov’s inequality, the fact that \(\text {cov}[s_{n}(\varvec{\beta }^{*})]=nK(\varvec{\beta }^{*})\) and taking \(\alpha _1<\lambda ^{-}\tau \) it can be bounded from above by

where the last convergence follows from \(a_n\rightarrow \infty \).

The convergence to zero of the second probability in (22) follows from \(nd_n^2/a_n\xrightarrow {P}\infty \). As eigenvalues of a matrix are continuous functions of its entries, we have \(\lambda _{\min }(n^{-1}J_{n}(\varvec{\beta }^*))\xrightarrow {P}\lambda _{\min }(J(\varvec{\beta }^*))\). Thus the convergence to zero of the third probability in (22) follows from the fact that in view of (A1) matrix \(J(\varvec{\beta }^*)\) is positive definite. The second term in (21) is bounded from above by

where the last convergence follows from Lemma 4 and (18).

Lemma 6

Assume (A2) and (A3). Then we have \(\max _{i\le n}||\mathbf {x}_i||^2a_n/n\xrightarrow {P}0\).

Proof

Using Markov inequality, (A2) and (A3) we have that \(||\mathbf {x}_n||^{2}a_n/n\xrightarrow {P}0\). We show that this implies the conclusion. Denote \(g_n:=\max _{1\le i\le n}||\mathbf {x}_i||^{2}a_n/n\) and \(h_n:=||\mathbf {x}_n||^{2}a_n/n\). Define sequence \(n_k\) such that \(n_1=1\) and \(n_{k+1}=\min \{n>n_k:\max _{i\le n}||\mathbf {x}_i||^{2}>\max _{i\le n_k}||\mathbf {x}_i||^{2}\}\) (if such \(n_{k+1}\) does not exist put \(n_{k+1}=n_k\)). Without loss of generality we assume that for \(A=\{n_k\rightarrow \infty \}\) we have \(P(A)=1\) as on \(A^c\) the conclusion is trivially satisfied. Observe that \(g_{n_k}=h_{n_k}\) and \(h_{n_k}\xrightarrow {P}0 \) as a subsequence of \(h_n\xrightarrow {P}0\) and thus also \(g_{n_k}\xrightarrow {P}0\). This implies that for any \(\epsilon >0\) there exists \(n_0\in \mathbf {N}\) such that for \(n_k>n_0\) we have \(P[|g_{n_k}|\le \epsilon ]\ge 1-\epsilon \). As for \(n\in (n_k,n_{k+1})\) \(g_n\le g_{n_k}\) since \(a_n/n\) is nonincreasing we have that if \(n\ge n_0\) \(P[|g_n|\le \epsilon ]\ge 1-\epsilon \) i.e. \(g_n\xrightarrow {P}0\).

1.3 A.3 Proof of Proposition 1

Assume first that \(\tilde{\varvec{\beta }}^{*}=0\) and note that this implies \(p(\beta _{0}+\tilde{\mathbf {x}}'\tilde{\varvec{\beta }}^{*})=p(\beta _{0})=C\in (0,1)\). From (8) we have

From (24) we also have

Comparing the last equation and right-side term in (25) we obtain \(\mathbf {E}(\tilde{\mathbf {x}}|y=1)=E{\tilde{\mathbf {x}}}=\mathbf {E}(\tilde{\mathbf {x}}|y=0)\). Assume now \(\mathbf {E}(\tilde{\mathbf {x}}|y=1)=\mathbf {E}(\tilde{\mathbf {x}}|y=0)\) which implies as before that that \(\mathbf {E}(\tilde{\mathbf {x}}|y=1)=\mathbf {E}(\tilde{\mathbf {x}})\). Thus

Since \((\beta _{0}^{*},\tilde{\varvec{\beta }}^{*})\) is unique it suffices to show that (7) and (8) are satisfied for \(\tilde{\varvec{\beta }}^{*}=0\) and \(\beta _{0}^*\) such that \(Ep(\beta _{0}^*)=P(Y=1)\). This easily follows from (26).

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Mielniczuk, J., Teisseyre, P. (2016). What Do We Choose When We Err? Model Selection and Testing for Misspecified Logistic Regression Revisited. In: Matwin, S., Mielniczuk, J. (eds) Challenges in Computational Statistics and Data Mining. Studies in Computational Intelligence, vol 605. Springer, Cham. https://doi.org/10.1007/978-3-319-18781-5_15

Download citation

DOI: https://doi.org/10.1007/978-3-319-18781-5_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18780-8

Online ISBN: 978-3-319-18781-5

eBook Packages: EngineeringEngineering (R0)