Abstract

Lattice-based cryptography is one of the leading proposals for post-quantum cryptography. The Shortest Vector Problem (SVP) is arguably the most important problem for the cryptanalysis of lattice-based cryptography, and many lattice-based schemes have security claims based on its hardness. The best quantum algorithm for the SVP is due to Laarhoven [Laa16] and runs in (heuristic) time \(2^{0.2653d + o(d)}\). In this article, we present an improvement over Laarhoven’s result and present an algorithm that has a (heuristic) running time of \(2^{0.2570 d + o(d)}\) where d is the lattice dimension. We also present time-memory trade-offs where we quantify the amount of quantum memory and quantum random access memory of our algorithm. The core idea is to replace Grover’s algorithm used in [Laa16] in a key part of the sieving algorithm by a quantum random walk in which we add a layer of local sensitive filtering.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

1 Introduction

Lattice-based cryptography is one of the most appealing modern public-key cryptography. It has worst case to average case reductions [Ajt96], efficient schemes and allows more advanced primitives such as fully homomorphic encryption [Gen09]. Another important aspect is that lattice based problems are believed to be hard even for quantum computers. Lattice-based cryptography is therefore at the forefront of post-quantum cryptography, especially in the NIST post-quantum standardization process. It is therefore very important to put a large effort on quantum cryptanalysis and to understand the quantum hardness of lattice problems in order to increase our trust in these post-quantum solutions.

For a given lattice \(\mathcal {L}\), the Shortest Vector Problem (SVP) asks to find a short vector of this lattice. Solving the SVP is arguably the most important problem for the cryptanalysis of lattice-based cryptography. Additionally to its own importance, it is used as a subroutine in the BKZ algorithm, which is often the best attack on lattice-based schemes. There are two main families of algorithms for SVP: enumeration algorithms [FP85, Kan83, Poh81] which are asymptotically slow but have small memory requirements, and sieving algorithm which have the best asymptotic complexities but have large memory requirements. For finding very small vectors, which is required by the BKZ algorithm, sieving algorithms are currently the most efficient algorithms despite their large memory requirements. Indeed, in the SVP challenge, the 10 top performances are done by sieving algorithms and the current record (since February 2021) solves SVP for \(d = 180\)Footnote 1. However, for large approximation factors, enumeration algorithms are more efficient and these 2 methods are both of wide interest.

For a lattice \(\mathcal {L}\) of dimension d, sieving algorithms solve SVP classically in time \(2^{0.292d + o(d)}\) (with a heuristic analysis) using the local filtering technique introduced in [BDGL16]. Laarhoven presented a quantum equivalent of this algorithm that runs in time \(2^{0.265d + o(d)}\) while using as much space as in the classical setting, namely \(2^{0.208d + o(d)}\). The BKZ algorithm is the most efficient known attack against all lattice-based schemes which were chosen at the third round of NIST standardization processFootnote 2. These two exponents are used for determining the number of bits of security in all these schemes hence improving the time exponent for SVP has direct implications on the security claims of these schemes.

Related Work. Heuristic sieving algorithms were first introduced by Nguyen and Vidick [NV08] that presented an algorithm running in time \(2^{0.415d + o(d)}\) and using \(2^{0.2075d}\) memory. A more efficient sieve in practice but with the same asymptotic running time was presented in [MV10]. Then, there has been improvements by considering k-sieve algorithms [WLTB11, ZPH14, Laa16]. Also, several works showed how to use nearest neighbor search to improve sieving algorithms [LdW15, Laa15, BL16]. The best algorithm [BDGL16] runs in time \(2^{0.292d + o(d)}\) and uses locality-sensitive filtering.

In the quantum setting, quantum analogues of the main algorithms for sieving were studied [LMvdP15, Laa16]. The best algorithm runs in time \(2^{0.265d + o(d)}\) and is the quantum analogue of [BDGL16]. There has been two more recent works on quantum sieving algorithms. First, quantum variants of the k-sieve were studied in [KMPM19], giving interesting time-space trade-off and a recent article [AGPS20] studied more practical speedups of these quantum algorithms, i.e. when do these gains in the exponent actually translate to quantum speedups.

Contributions. In this article, we study and improve the asymptotic complexity of quantum sieving algorithm for SVP. This is the first improvement on the asymptotic running timeFootnote 3 of quantum sieving algorithms since the work of Laarhoven [Laa16].

It is not a priori clear how to use quantum random walks to adapt the algorithm from [BDGL16]. This algorithm is divided into a pre-processing phase and a query phase. In this query phase, we have several points that are in a filter F, which means here that there are close to a specific point. We are then given a new point \(\vec {v}\) and we want to know whether there exists a point \(\vec {w}\in F\) such that \(\left\Vert \vec {v}\pm \vec {w}\right\Vert \) is smaller than \(\min \{\left\Vert \vec {v}\right\Vert ,\left\Vert \vec {w}\right\Vert \}\)Footnote 4. Then we do not know how to do better here than Grover’s algorithm, which takes time \(\sqrt{|F|}\). On the other hand, if instead of this query framework, we start from a filter F and we want to find all the pairs \(\vec {v},\vec {w}\), then we can apply a quantum random walk.

Even within this framework, there are many ways of constructing quantum random walks and most of them do not give speedups over [Laa16]. What we show is that by adding proper additional information in the vertices of the random walk, in particular by adding another layer of filters within the vertices of the graph on which we perform the quantum walk, we can actually get some improvement over Grover’s algorithm and achieve our speedups.

Presentation of Our Contributions. Our main theorem is an improvement of the best asymptotic quantum heuristic running time for the SVP bringing down the asymptotic running time from \(2^{0.2653d + o(d)}\) (Laarhoven’s algorithm [Laa16]) to \( 2^{0.2570 d + o(d)}\).

Theorem 1

Our algorithm using quantum random walks solves the SVP on dimension d which heuristically solves SVP on dimension d in time \( 2^{0.2570 d + o(d)}\), uses QRAM of maximum size \( 2^{0.0767d}\), a quantum memory of size \(2^{0.0495d}\) and a classical memory of size \({\mathsf {poly}}(d) \cdot 2^{0.2075d}\).

Our results are in the QRAM model where QRAM operations can be done efficiently. Notice that Laarhoven’s result is in this model so our results are directly comparable to his. We can see that additionally to improving the best asymptotic running time, this algorithm uses much less quantum resources (both quantum memory and quantum RAM) than its running time which makes it fairly practical. This theorem can also be helpful if we want to optimize other performance measures. For example, it has been argued that having efficient QRAM operations is too strong and that performing a QRAM operation should require time at least \(r^{1/3}\) where r is the number of QRAM registers. In this model, the best running time was \(2^{0.2849d + o(d)}\) (this is still using Laarhoven’s algorithm but the result has been explicitly stated in [AGPS20]). As a consequence of Theorem 1, we have the following

Corollary 1

In the model where quantum random access to a memory of size r can be done in time \(r^{1/3}\), our quantum algorithm solves SVP on dimension d in time \(2^{0.2826d + o(d)}\).

Proof

This is a direct consequence of Theorem 1 where in this model, the running time is \(2^{0.2570d + o(d)} {\cdot }\left( 2^{0.0767d}\right) ^{1/3} = 2^{0.2826d + o(d)}\). \(\square \)

We also present two trade-offs: a quantum memory-time trade-off and a QRAM-time trade-off. For a fixed amount of quantum memory, our algorithm performs as follows.

Theorem 2

(Trade-off for fixed quantum memory). Our algorithm using quantum random walks solves the SVP on dimension d which, for a parameter \(M \in [0,0.0495]\), heuristically runs in time \(2^{\tau _M d + o(d)}\), uses QRAM of maximum size \(2^{\gamma _M d}\) and quantum memory of size \(2^{\mu _M d}\) and a classical memory of size \({\mathsf {poly}}(d) \cdot 2^{0.2075d}\) where

With this theorem, we obtain for \(M = 0\) the quantum running time of Laarhoven’s quantum algorithm and, for \(M = 0.0495\), the result of Theorem 1. We now present our second trade-off theorem where we fix the amount of QRAM.

Theorem 3

(Trade-off for fixed QRAM). Our quantum algorithm using quantum random walks solves SVP on dimension d which for a parameter \(M' \in [0,0.0767]\) heuristically runs in time \(2^{\tau _{M'} d + o(d)}\), uses QRAM of maximum size \(2^{\gamma _{M'} d}\), a quantum memory of size \(2^{\mu _{M'} d}\) and uses a classical memory of size \({\mathsf {poly}}(d) \cdot 2^{0.2075d}\) where

With this theorem, we obtain for \(M' = 0\), the best classical exponent of [BDGL16] (we can actually show the algorithm uses no quantum resources in this case). For \(M' = 0.0577\), we retrieve Laarhoven’s quantum exponent and for \(M' = 0.0767\), we get Theorem 1.

Organisation of the Paper. In Sect. 2, we present preliminaries on Quantum computing. In Sect. 3, we then present sieving algorithm, as well as useful statements on lattices. In Sect. 4, we present the general framework we use for sieving algorithm. Next, we use and perform a first study of its complexity in Sect. 5, whose Sect. 6 improves. In Sect. 7, we present the space-time trade-offs. We perform a final discussion in Sect. 8 and talk about parallelization of our algorithm as well as possible improvements.

2 Quantum Computing Preliminaries

2.1 Quantum Circuits

We consider here quantum circuits consisting of 1 and 2 qubit gate, without any locality constraint, meaning that we can apply a 2 qubit gate from a universal set of gates to any pair of qubits in timeFootnote 5 1. We use the textbook gate model where the running time of a quantum circuit is just the number of gates used. The width of a circuit is the number of qubits it operates on, including the ancilla qubits. This quantity is important as it represents the number of qubits that have to be manipulated simultaneously and coherently. We will also call this quantity quantum memory.

When we will know much more precisely how quantum architectures look like, it will be possible to make these models more precise and replace the gate model with something more adequate. The gate model is still the most widely used in the scientific community and is very practical to compare different algorithms. We will use the gate model as our main model for computing quantum times but we will also include other interesting quantum figures of merit, such as quantum memory or Quantum Random Access Memory usage.

2.2 Quantum Random Access Memory

Quantum Random Access Memory (denoted hereafter QRAM) is a type of quantum operation which is not captured by the circuit model. Consider N registers \(x_1,\dots ,x_N \in \{0,1\}^d\) stored in memory. A QRAM operation consists of applying the following unitary

We say that we are in the \(\mathrm {QRAM}\) model if the above unitary can be constructed efficiently, typically in time \(O(d + \log (N))\).

\(\mathrm {QRAM}\) operations are theoretically allowed by the laws of quantum mechanics and there are some proposals for building efficiently \(\mathrm {QRAM}\) operations, such as [GLM08], even though its robustness has been challenged in [AGJO+15]. The truth is that it very premature to know whether \(\mathrm {QRAM}\) operations will be efficiently available in quantum computers. This would definitely require a major hardware breakthrough but as does quantum computing in general.

While our results are mainly in the QRAM model, we also discuss other metrics where the cost of a QRAM operation is not logarithmic in N but has a cost of \(N^x\) for a constant x.

2.3 Grover Algorithm

One formulation of Grover’s search problem [Gro96] is the following. We are given a list of data \(x_1, \dots , x_r\), with \(x_i \in {E}\). Given a function \(f : {E} \rightarrow \{0,1\}\), the goal is to find an i such that \(f(x_i)=1\), and to output “no solution” if there are no such i. Let \(\mathrm {Sol}= \{i \in [r]: f(x_i) = 1\}\).

Classically, we cannot solve this problem with a better average complexity than \(\varTheta (\frac{r}{|\mathrm {Sol}|})\) queries, which is done by examining random \(x_i\) one by one until we find one whose image is 1 through f. Quantum computing allows a better complexity. Grover’s algorithm solves this search problem in \(O\left( \sqrt{\frac{r}{|\mathrm {Sol}|}}\right) \) queries to f. Applying Grover’s algorithm this way requires efficient QRAM access to the data \(x_1,\dots ,x_r\).

2.4 Quantum Random Walks

We present here briefly quantum random walks (QRW). There are several variants of QRW and we will use the \(\mathrm {MNRS}\) framework, first presented in [MNRS11].

We start from a graph \(G = (V,E)\) where V is the set of vertices and \(E \subseteq V \times V\) is the set of edges. We do not allow self loops which means that \(\forall x \in V, (x,x) \notin E\) and the graph will be undirected so \((x,y) \in E \Rightarrow (y,x) \in E\). Let also \(N(x) = \{y : (x,y) \in E\}\) be the set of neighbors of x. We have a set \(M \subseteq V\) of marked elements and the goal of a QRW is to find \(v \in M\).

Let \(\varepsilon = \frac{|M|}{|V|}\) be the fraction of marked vertices and let \(\delta \) be the spectral gap of GFootnote 6. For any vertex x, we define \(|p_x\rangle = \sum _{y \in N(x)} \frac{1}{\sqrt{|N(x)|}} |y\rangle \). We also define \(|U\rangle = \frac{1}{\sqrt{|V|}} \sum _{x \in V} |x\rangle |p_x\rangle \). We now define the following quantities:

-

\(\mathrm {SETUP}\) cost \(\mathbf {\mathcal {S}}\): the \(\mathrm {SETUP}\) cost \(\mathbf {\mathcal {S}}\) is the cost of constructing \(|U\rangle \).

-

\(\mathrm {UPDATE}\) cost \(\mathcal {U}\): here, it is the cost of constructing the unitary

$$U_{\mathrm {UPDATE}} : |x\rangle |0\rangle \rightarrow |x\rangle |p_x\rangle . $$ -

\(\mathrm {CHECK}\) cost \(\mathcal {C}\): it is the cost of computing the function \(f_{\mathrm {CHECK}} : V \rightarrow \{0,1\}\) where \(f_{\mathrm {CHECK}}(v) = 1 \Leftrightarrow v \in M\).

Proposition 1

[MNRS11]. There exists a quantum random walk algorithm that finds a marked element \(v \in M\) in time

Quantum Random Walks on the Johnson Graph. A very standard graph on which we can perform QRW is the Johnson graph J(n, r). Each vertex v consists of r different (unordered) points \(x_1,\dots ,x_r \in [n]\) as well as some additional data D(v) that depends on the QRW we want to perform.

\(v = (x_1,\dots ,x_r,D(v))\) and \(v' = (x'_1,\dots ,x'_r,D(v'))\) form an edge in J(n, r) iff. we can go from \((x_1,\dots ,x_r)\) to \((x'_1,\dots ,x'_r)\) by removing exactly one value and then adding one value. The Johnson graph J(n, r) has spectral gap \(\delta = \frac{n}{r(n-r)} \approx \frac{1}{r}\) when \(r \ll n\) [dW19].

The additional data D(v) here is used to reduce the checking time \(\mathcal {C}\) with the drawback that it will increase the update time \(\mathcal {U}\). Johnson graphs were often used, for example when trying to solve the element distinctness problem [Amb07], but also for the subset-sum problem [BJLM13, HM18, BBSS20] or for code-based problems [KT17].

Quantum Data Structures. A time analysis of quantum random walks on the Johnson graph was done in [Amb07] when studying the element distinctness problem. There, Ambainis presented a quantum data structure that uses efficient QRAM that allows in particular insertion and deletion in \(O(\log (n))\) time where n is the database size while maintaining this database in quantum superposition. Another paper on quantum algorithm for the subset problem using quantum random walks [BJLM13] also presents a detailed analysis of a quantum data structure based on radix trees to perform efficient insertion and deletion in quantum superposition. All of these data structures require as much QRAM registers as the number of registers to store the whole database and this running time holds only in the QRAM model. In our work, we will use such a quantum data structure and refer to the above two papers for explicit details on how to construct them.

3 Lattice and Geometric Preliminaries

Notations. Let d be a positive integer. The norm \(\left\Vert \cdot \right\Vert \) we use throughout this paper is the Euclidian norm, so for a vector \(\vec {v}= (v_1,\dots ,v_d) \in \mathbb {R}^d\), \(\Vert \vec {v}\Vert = \sqrt{\sum _{i = 1}^d v_i^2}\). The inner product of \(\vec {v}= (v_1,\dots ,v_d)\) and \(\vec {w}= (w_1,\dots ,w_d)\) is \(\langle \vec {v}, \vec {w}\rangle :=\sum _{i = 1}^d v_i w_i\). The non-oriented angle between \(\vec {v}\) and \(\vec {w}\) is denoted \(\theta (\vec {v}, \vec {w}) :=\arccos \left( \frac{\langle {\vec {v}},{\vec {w}}\rangle }{\left\Vert \vec {v}\right\Vert \left\Vert \vec {w}\right\Vert }\right) \). We denote the d-dimensional sphere of radius R by \(\mathcal {S}^{d-1}_R := \{\vec {v}\in \mathbb {R}^d : \Vert \vec {v}\Vert =R \}\), and \(S^{d-1} := S^{d-1}_1\). Throughout the paper, for a known integer d, we will write \(N :=(\sqrt{4/3})^{d}\). The meaning of this number N is detailed in Sect. 3.1.

Lattices and SVP. The d-dimensional lattice \(\mathcal {L} \subset \mathbb {R}^m\) generated by the basis \(B = (b_1, ... , b_n)\) with \(\forall i, b_i \in \mathbb {R}^m\) is the set of all integer linear combinations of its basis vectors: \( \mathcal {L}(B) = \Big \{ \sum _{i=1}^{d} \lambda _i ~ b_i , ~ \lambda _i \in \mathbb {Z} \Big \}\). Given a basis of a lattice \(\mathcal {L}\), the Shortest Vector Problem (SVP) asks to find a non-zero vector in \(\mathcal {L}\) of minimal norm. SVP is known to be NP-hard [Ajt98]. This problem and its derivatives (SIS, LWE) have been used in several public-key cryptosystems, specifically as candidate for quantum-resistant cryptography [DKL+19, FHK+19, CDH+19]. Thereby, one of the most important ways to know their security and choose parameters is to estimate the computational hardness of the best SVP-solving algorithms.

3.1 An Overview of Sieving Algorithms for SVP

The algorithm LLL [LLL82] returns a reduced basis of a lattice in a polynomial time. However it is not sufficient to solve SVP. All the fastest known algorithms to solve SVP run in exponential time. A first method is enumeration [Kan83], that solves deterministically SVP using low space but in super-exponential time in the lattice dimension d.

Another method, which will interest us in this article, is lattice sieving [NV08, MV10]. They are heuristic algorithms that probably solve SVP in time and space \(2^{\varOmega (d)}\). To this day, the best complexity for sieving in the QRAM model is obtained by quantum hypercone LSF [Laa16] in \(2^{0.2653d + o(d)}\) time and \(2^{0.2075d + o(d)}\) space. Another algorithm [KMPM19] uses k-lists to solve SVP in \(2^{0.2989d + o(d)}\) time and \(2^{0.1395d + o(d)}\) space.

The NV-sieve. The NV-sieve [NV08] is a heuristic algorithm. It starts with a list of lattice vectors, that we can consider of norm at most 1 by normalization. Given this list and a constant \(\gamma <1\), the NV-sieve returns a list of lattice vectors of norm at most \(\gamma \). It iteratively builds lists of shorter lattice vectors by applying a sieve. This sieve step consists in computing all the sums (plus and minus) of two list vectors, and fills the output list with those which have norm at most \(\gamma \). For \(\gamma \) tending to 1, two vectors form a reducing pair - i.e. their sum is of norm at most \(\gamma \) - iff. they are of angle at most \(\pi /3\). The first list of lattice vectors can be sampled with Klein’s algorithm [Kle00] for example. A list size of \(N^{1 + o(1)} = (\sqrt{4/3})^{d+o(d)}\) suffices to have about one reducing vector in the list for each list vector, as stated in [NV08]. Because of the norms of the list vectors reduces with a factor by \(\gamma < 1\) at each application of the algorithm, the output list will hopefully contain a non-zero shortest lattice vector after a polynomial number of application of the NV-sieve.

NNS and Application to Lattice Sieving. A logic improvement of this algorithm is to use Neighbor Nearest Search (NNS) [IM98] techniques. The NNS problem is: given a list L of vectors, preprocess L such that one can efficiently find the nearest vector in L to a target vector given later. Used in the NV-sieve, the preprocessing partitions the input list in several buckets of lattice points, each bucket being associated with a hash function. The algorithm will only sum vectors from a same bucket, which are near to each other, instead of trying all pairs of vectors.

Locality-Sensitive Hashing (LSH). A method to solve NNS is locality-sensitive hashing (LSH) [IM98]. An LSH function is a hash-function that have high probability to collide for two elements if they are close, and a low one if they are far. Several categories of LSH functions exists: hyperplane LSH [Cha02], hypercone or spherical LSH [AINR14, AR15] and cross-polytope LSH [TT07].

Locality-Sensitive Filtering (LSF). More recently, [BDGL16] improved NNS solving by introducing locality-sensitive filtering (LSF). LSF functions, called filters, map a vector \(\vec {v}\) to a boolean value: 1 if \(\vec {v}\) survives the filter, and 0 otherwise. These filters are instantiated by hypercone filters, which correspond to spherical caps centered around points which form an efficiently decodable code on the sphere (the efficient decodability part ensures that we can efficiently determine whether a point is in a filter or not). We present now geometrical preliminaries that will allow us to describe more formally these algorithms.

3.2 Geometrical Preliminaries

Spherical Caps/Hypercone Filters. We define the spherical cap of center \(\vec {v}\) and angle \(\alpha \) as follows:

We will use spherical caps to filter points that are close to a center \(\vec {v}\) and we will sometimes use the term filter or hypercone filter to descibe a spherical cap.

Proposition 2

[MV10]. For an angle \(\alpha \in [0, \pi /2]\) and \(\vec {v}\in \mathcal {S}^{d-1}\), the ratio of the volume of a spherical cap \(\mathcal {H}_{\vec {v}, \alpha }\) to the volume of the sphere \(\mathcal {S}^{d-1}\) is

Proposition 3

[BDGL16]. For an angle \(\alpha \in [0,\pi /2]\) and two vectors \(\vec {v}, \vec {w}\in \mathcal {S}^{d-1}\) such that \(\theta (\vec {v}, \vec {w}) = \theta \), the ratio of the volume of a wedge \(\mathcal {H}_{\vec {v}, \alpha } \cap \mathcal {H}_{\vec {w}, \alpha }\) to the volume of the sphere \(\mathcal {S}^{d-1}\) is

If we consider any vector \(\vec {w}\in \mathbf {\mathcal {S}}^{d-1}\) and \(N^{\rho _0}\) random points \(\vec {s}_1,\dots ,\vec {s}_{N^{\rho _0}}\) in \(\mathcal {H}_{\vec {v},\alpha }\) for \(\rho _0 := \frac{\mathbf {\mathcal {V}}_d(\alpha )}{\mathbf {\mathcal {W}}_d(\alpha ,\theta )}\) ; then this proposition implies that there exists, with constant probability, an \(i \in [N^{\rho _0}]\) such that \(\vec {s}_i \in \mathcal {H}_{\vec {w},\alpha }\).

Reducing Vectors at the Border of a Spherical Cap. The idea a first putting vectors in a spherical cap is that 2 vectors \(\vec {x}_0,\vec {x}_1 \in \mathcal {H}_{\vec {s},\alpha }\) have a larger probability of being reducible (i.e. \(\theta (\vec {x}_0,\vec {x}_1) \le \pi /3\)) than random vectors. We quantify this now. We first define the border of a filter

Working with points on the border makes the calculations easier. We argue later that random points in a spherical cap \(\mathcal {H}_{\vec {v}, \alpha }\) are actually very close to the border, so this result will approximately be also true for random points in a spherical cap.

Proposition 4

Let \(\vec {x}_0,\vec {x}_1 \in \mathcal {B}_{\vec {s}, \alpha }\). This means we can write

with \(\vec {y}_0,\vec {y}_1\) of norm 1 and orthogonal to \(\vec {s}\). We have for \(\alpha \in (0,\pi /2]\)

Proof

We denote for simplicity \(\theta _y := \theta (\vec {y}_0,\vec {y}_1)\). By subtracting Eqs. 2 from 1 and then by squaring, we have

\(\square \)

These \(\vec {y}_0,\vec {y}_1\) will be called residual vectors. If \(\vec {x}_0,\vec {x}_1\) are random points in the border then the \(\vec {y}_0,\vec {y}_1\) are called the residual points and are random points of \(\mathbf {\mathcal {S}}_{d-1}\) (the sphere of dimension \(d-1\) of points orthogonal to \(\vec {s}\)). From there, we have

Corollary 2

Let \(\alpha \in (0,\pi /2]\) and a random pair of vectors \(\vec {x}_0,\vec {x}_1 \in \mathcal {B}_{\vec {s},\alpha }\). The probability that the pair is reducing is equal to \(\mathbf {\mathcal {V}}_{d-1}(\theta ^*_\alpha )\), with

Notice that we have \(\mathbf {\mathcal {V}}_{d-1}\) because we work with residual vectors (orthogonal to \(\vec {s}\)) but since \(\mathbf {\mathcal {V}}_d\) and \(\mathbf {\mathcal {V}}_{d-1}\) are asymptotically equivalent, we will keep writing \(\mathbf {\mathcal {V}}_d(\theta ^*_\alpha )\) everywhere for simplicity.

Probabilistic Arguments. We first recall the multiplicative Chernoff bound.

Proposition 5

(Multiplicative Chernoff bound, see for example [TKM+13]). Suppose \(X_1, ..., X_M\) are independent random variables taking values in \(\{0,1\}\), \(Y = \sum _{i = 1}^M\) and \(\delta > 0\). We have

We now present a direct application of this bound which we will use in our analysis. Consider a set \(S = \vec {s}_1,\dots ,\vec {s}_M\) points taken from the uniform distribution on the sphere \(S^{d-1}\) and \(\vec {v}\) another point randomly chosen on the sphere. Fix also an angle \(\alpha \in (0,\pi /2)\). We have the following statements:

Proposition 6

\(\forall i \in [M], \ \Pr [\vec {v}\in \mathcal {H}_{\vec {s}_i,\alpha }] = \Pr [\vec {s}_i \in \mathcal {H}_{\vec {v},\alpha }] = \mathbf {\mathcal {V}}_d(\alpha )\).

Proof

Immediate by definition of \(\mathbf {\mathcal {V}}_d(\alpha )\) considering that both \(\vec {v}\) and \(\vec {s}_i\) are uniform random points on the sphere. \(\square \)

From the above proposition, we immediately have that \(\mathbb {E}[|S \cap \mathcal {H}_{\vec {v},\alpha }|] = M\mathbf {\mathcal {V}}_d(\alpha )\). We now present a standard concentration bound for this quantity.

Proposition 7

Assume we have \(M\mathbf {\mathcal {V}}_d(\alpha ) = N^x\) with \(x > 0\) an absolute constant. Then

Proof

Let \(X_i\) be the random variable which is equal to 1 if \(\vec {s}_i \in \mathcal {H}_{\vec {v},\alpha }\) and is equal to 0 otherwise. Let \(Y = \sum _{i = 1}^M X_i\) so \(\mathbb {E}[Y] = N^x\). Y is equal to the quantity \(|S \cap \mathcal {H}_{\vec {v},\alpha }|\). A direct application of the multiplicative Chernoff bound gives

which is the desired result. \(\square \)

Random Product Codes (RPC). We assume \(d=m \cdot b\), for \(m=O({\mathsf {polylog}}(d))\) and a block size b. The vectors in \(\mathbb {R}^d\) will be identified with tuples of m vectors in \(\mathbb {R}^b\). A random product code C of parameters [d, m, B] on subsets of \(\mathbb {R}^d\) and of size \(B^m\) is defined as a code of the form \(C = Q \cdot (C_1 \times C_2 \times \cdots C_m),\) where Q is a uniformly random rotation over \(\mathbb {R}^d\) and the subcodes \(C_1, ..., C_m\) are sets of B vectors, sampled uniformly and independently random over the sphere \(\sqrt{1/m} \cdot \mathbf {\mathcal {S}}^{b-1}\), so that codewords are points of the sphere \(S^{d-1}\). We can have a full description of C by storing mB points corresponding to the codewords of \(C_1,\dots ,C_m\) and by storing the rotation Q. When the context is clear, C will correspond to the description of the code or to the set of codewords. Random product codes can be easily decoded in some parameter range:

Proposition 8

([BDGL16]). Let C be a random product code of parameters [d, m, B] with \(m = \log (d)\) and \(B^m = N^{O(1)}\). For any \(\vec {v}\in S^{d-1}\) and \(\alpha \in [0,\pi /2]\), one can compute \(\mathcal {H}_{\vec {v},\alpha } \cap C \) in time \(N^{o(1)} {\cdot }|\mathcal {H}_{\vec {v},\alpha } \cap C|\).

4 General Framework for Sieving Algorithms Using LSF

We present here a general framework for sieving algorithms using LSF. We present here one sieving step where we start from a list L of \(N' = N^{1 + o(1)}\) lattice vectors of norm 1 and output \(N'\) lattice vectors of norm \(\gamma < 1\). Sieving algorithms for SVP then consists of applying this subroutine \({\mathsf {poly}}(d)\) times (where we renormalize the vectors at each step) to find at the end a small vector. We can actually take \(\gamma \) very close to 1 at each iteration, and we refer for example to [NV08] for more details. This framework will encompass the best classical and quantum sieving algorithms.

The \(\mathbf {FindAllSolutions}(f_\alpha (\vec {s}_i),\gamma )\) subroutine starts from a list of vectors \(\vec {x}_1,\dots ,\vec {x}_{N^c} \in f_\alpha (\vec {s}_i)\) and outputs all vectors of the form \(\vec {x}_i \pm \vec {x}_j\) (with \(i \ne j\)) of norm less than \(\gamma \). We want to find asymptotically all the solutions and not strictly all of them. Let’s say here we want to output half of them. Sometimes, there are no solutions so the algorithm outputs an empty list.

4.1 Analysis of the Above Algorithm

Heuristics and Simplifying Assumptions. We first present the heuristic arguments and simplifying assumptions we use for our analysis.

-

1.

The input lattice points behave like random points on the sphere \(\mathbf {\mathcal {S}}^{d-1}\). Also, at each step of the sieving process, the points of the list L behave like random points on the sphere. The relevance of this heuristic has been studied and confirmed in a few papers starting from the initial NV-sieve [NV08].

-

2.

The code points of C behave like random points of the sphere \(\mathbf {\mathcal {S}}^{d-1}\). This was argued in [BDGL16], see for instance Lemma 5.1 and Appendix C therein.

-

3.

We assume that a random point in \(f_\alpha (\vec {s}_i)\) is on the border of the filter, i.e. that it can be written \(\vec {x}= \cos (\alpha ) \vec {s}_i + \sin (\alpha ) \vec {y}\) with \(\vec {y}\bot \vec {s}_i\) and of norm 1. As we argue below, this will be approximately true with very high probability.

In order to argue point 3, notice that for any angle \(\alpha \in (\pi /4, \pi /2)\) and \(\varepsilon > 0\), we have \(\mathbf {\mathcal {V}}_d(\alpha ) \gg \mathbf {\mathcal {V}}_d(\alpha -\epsilon )\). Indeed, for an angle \(\epsilon >0\), \(\mathbf {\mathcal {V}}_d(\alpha -\epsilon )=\sin ^d(\alpha -\epsilon ) = \mathbf {\mathcal {V}}_d(\alpha ) \cdot (\epsilon ')^d\) with \(\epsilon ' = \cos \epsilon - \frac{\sin \epsilon \cos \alpha }{\sin \alpha } < 1\) for \(\alpha > \epsilon \). So the probability for a point to be at angle \(\alpha \) with the center of the cap is exponentially higher than to be at angle \(\alpha -\epsilon \). That justifies that with very high probability, points in \(f_\alpha (\vec {s}_i)\) lie very close to the border of the cap and hence justifies point 3.

Completion. We start from a list L of \(N'\) points. The heuristic states that each point in L is modeled as a random point on the sphere \(S^{d-1}\) so each pair of points \(\vec {x},\vec {x}' \in L\) reduces with probability \(\mathbf {\mathcal {V}}_d(\pi /3) = \frac{1}{N}\). Since there are \(\frac{N'(N'-1)}{2}\) pairs of points in L, we have on average \(\frac{N'(N'-1)}{2N}\) pairs in L that are reducible. We can take for example \(N' = 6N\) to ensure that there are on average \(\approx 3N'\) pairs. Therefore, each time we find a random reducible pair, with probability at least \(\frac{3N' - |L'|}{3N'} \ge 2/3\), it wasn’t already in the list \(L'\).

Time Analysis. From Corollary 2, we have that for an \(\alpha \)-filter that has \(N^c\) points randomly distributed in this filter, the expected number of reducing pairs is \(N^{2c} \cdot \mathbf {\mathcal {V}}_{d-1}(\theta ^*_\alpha )\). We now present the full time analysis of the above algorithm.

Proposition 9

Consider Algorithm 1 with parameter \(c \in [0,1]\) and associated angle \(\alpha \in [\pi /3, \pi /2]\) satisfying \(\mathbf {\mathcal {V}}_d(\alpha ) = N^{-(1-c)}\). Let \(\zeta \) such that \(N^{\zeta } = N^{2c} {\cdot }\mathbf {\mathcal {V}}_{d-1}(\theta ^*_\alpha )\). The above algorithm runs in time \(T = \mathrm {NB}_{\mathrm {REP}}{\cdot }(\mathrm {INIT}+ \mathrm {FAS})\) where

where \(\mathrm {FAS}_1\) the running time of a single call to the \({\mathbf {FindAllSolutions}}\) subroutine.

Proof

We first analyze the two for loops. \(\mathrm {INIT}\) is the running time of the first loop. For each point \(\vec {v}\in L\), we need to compute \(\mathcal {H}_{\vec {v},\alpha } \cap C\) and update the corresponding buckets \(f_\alpha (\vec {s}_i)\). We have \(|C| = N^{1-c}\) and we chose \(\alpha \) such that \(\mathbf {\mathcal {V}}_d(\alpha ) = N^{-(1-c)}\), so the expected value of \(|\mathcal {H}_{\vec {v},\alpha } \cap C|\) is 1. For each point \(\vec {v}\), we can compute \(\mathcal {H}_{\vec {v},\alpha } \cap C\) in time \(N^{o(1)}|\mathcal {H}_{\vec {v},\alpha }|\) using Proposition 8. From there, we can conclude that we compute the filter for the \(N'\) points in time \(\mathrm {INIT}= N^{1 + o(1)}\).

The second loop runs in time \(\mathrm {FAS}= N^{1-c} \mathrm {FAS}_1\) by definition. After this loop, the average number of solutions found is \(N^{\zeta }\) for each call to \(\mathbf {FindAllSolutions}\) so \(N^{1-c+\zeta } \) in total (notice that we can have \(\zeta < 0\), which means that we can find on average much less that one solution for each call of \(\mathbf {FindAllSolutions}\)). We run the while loop until we find \(N'\) solutions so we must repeat this process \(\mathrm {NB}_{\mathrm {REP}}= \max \{1,N^{1 - (1 - c + \zeta ) + o(1)}\} = \max \{1,N^{c - \zeta + o(1)}\}\) times. \(\square \)

This formulation of sieving algorithms is easy to analyze. Notice that the above running time depends only on c (since \(\alpha \) can be derived from c and \(\zeta \) can be derived from \(c,\alpha \)) and on the \(\mathbf {FindAllSolutions}\) subroutine. We now retrieve the best known classical and quantum sieving algorithm in this framework.

Best Classical Algorithm. In order to retrieve the time exponent of [BDGL16], we take \(c \rightarrow 0\), which implies \(\alpha \rightarrow \pi /3\). We can compute \(\theta ^*_{\pi /3} \approx 1.23\text {rad} \approx 70.53^{\circ }\) and \(\zeta = -0.4094\). In this case, we have \(\mathrm {FAS}_1 = O(1)\). From the above proposition, we get a total running time of \(T = N^{1.4094 + o(1)} = 2^{0.2925d + o(d)}\).

Best Quantum Algorithm. In order to retrieve the time exponent of [Laa16], we take \(c = 0.2782\). This value actually corresponds to the case where \(\zeta = 0\), so we have on average one solution per \(\alpha \)-filter. For the \(\mathbf {FindAllSolutions}\) subroutine, we can apply Grover’s algorithm on pairs of vectors in the filter to find this solution in time \(\sqrt{N^{2c}} = N^{c}\) (there are \(N^{2c}\) pairs) so \(\mathrm {FAS}_1 = N^c\). Putting this together, we obtain \(T = N^{1+c + o(1)} = N^{1.2782 + o(1)} = 2^{0.2653d + o(d)}\).

In the next section, we show how to improve the above quantum algorithm. Our main idea is to replace Grover’s algorithm used in the \(\mathbf {FindAllSolutions}\) subroutine with a quantum random walk. In the next section, we present the most natural quantum walk which is done over a Johnson graph and where a vertex is marked if the points of a vertex contain a reducible pair, in a similar way than for element distinctness. We then show in a later section how this random walk can be improved by relaxing the condition on marked vertices.

5 Quantum Random Walk for the FindAllSolutions Subroutine: A First Attempt

5.1 Constructing the Graph

We start from an unordered list \(\vec {x}_1,\dots ,\vec {x}_{N^c}\) of distinct points in a filter \(f_\alpha (\vec {s})\) with \(\alpha \) satisfying \(\mathbf {\mathcal {V}}_d(\alpha ) = \frac{1}{N^{1-c}}\). Let \(L_x\) be this list of \(\vec {x}_i\). For each \(i \in [N^c]\), we write \(\vec {x}_i = \cos (\alpha ) \vec {s}+ \sin (\alpha )\vec {y}_i\) where each \(\vec {y}_i\) is of norm 1 and orthogonal to \(\vec {s}\). Recall from Proposition 4 that a pair \((\vec {x}_i,\vec {x}_j)\) is reducible iff. \(\theta (\vec {y}_i,\vec {y}_j) = \theta ^*_\alpha = 2\arcsin (\frac{1}{2\sin (\alpha )})\). We will work only on the residual vectors \(\vec {y}_i\) and present the quantum random walk that finds pairs \(\vec {y}_i,\vec {y}_j\) such that \(\theta (\vec {y}_i,\vec {y}_j) = \theta ^*_\alpha \) more efficiently than with Grover’s algorithm. Let \(L_y = \vec {y}_1,\dots ,\vec {y}_{N^c}\) be the list of all residual vectors.

The quantum walk has two extra parameters \(c_1 \in [0,c]\) and \(c_2 \in [0,c_1]\). From these two parameters, let \(\beta \in [\pi /3,\pi /2]\) st. \(\mathbf {\mathcal {V}}_{d}(\beta ) = N^{c_2 - c_1}\) and \({\rho _0}\) st. \(N^{\rho _0} = \frac{\mathbf {\mathcal {V}}_d(\beta )}{\mathbf {\mathcal {W}}_d(\beta ,\theta ^*_\alpha )}\). We start by sampling a random product code \(\mathcal {C}_2\) with parameters \([(d-1),\log (d-1),N^{\frac{{\rho _0} + c_1 - c_2}{\log (d-1)}}]\) which has therefore \(N^{{\rho _0} + c_1 - c_2} = \frac{1}{\mathbf {\mathcal {W}}_d(\beta ,\theta ^*_\alpha )}\) points denoted \(\vec {t}_1,\dots ,\vec {t}_{N^{{\rho _0} + c_1 - c_2}}\). We perform our quantum random walk on a graph \(G = (V,E)\) where each vertex \(v \in V\) contains:

-

An unordered list \(L^v_y = \vec {y}_1,\dots ,\vec {y}_{N^{c_1}}\) of distinct points taken from \(L_y\).

-

For each \(\vec {t}_i \in \mathcal {C}_2\), we store the list of elements of \( J^v(\vec {t}_i) := f_{\beta }(\vec {t}_i) \cap L^v_y\). For each \(\vec {t}_i\), we do this using a quantum data structure that stores \(J^v(\vec {t}_i) \) where we can add and delete efficiently in quantum superposition. This can be done with QRAM. Notice that we have on average

$$|J^v(\vec {t}_i) |= N^{c_1} {\cdot }\mathbf {\mathcal {V}}_{d}(\beta ) = N^{c_2},$$and we need to store in total \(|\mathcal {C}_2| {\cdot }N^{c_2} = N^{c_1 + {\rho _0}}\) such elements in total for each vertex.

-

A bit that says whether the vertex is marked (we detail the marked condition below).

The vertices of G consists of the above vertices for all possible lists \(L^v_y\). We have \((v,w) \in E\) if we can go from \(L_y^v\) to \(L_y^w\) by changing exactly one value. In order words

This means the graph G is exactly a Johnson graph \(J(N^c,N^{c_1})\) where each vertex also has some additional information as we described above. Once we find a marked vertex, it contains a pair \((\vec {y}_i,\vec {y}_j)\) such that \(\theta (\vec {y}_i,\vec {y}_j) \le \theta ^*_\alpha \) from which we directly get a reducible pair \((\vec {x}_i,\vec {x}_j)\).

Condition for a Vertex to be Marked. We define the following subsets of vertices. We first define the set \(M_0\) vertices for which there exists a pair of points which is reducible.

Ideally, we would want to mark each vertex in \(M_0\), however this would induce a too large update cost when updating the bit that specifies whether the vertex is marked or not. Instead, we will consider as marked vertices subsets of \(M_0\) but for which the update can be done more efficiently, but losing only a small fraction of the marked vertices. For each \(J^v(\vec {t}_i)\), we define \(\widetilde{J}^v(\vec {t}_i)\) which consists of the first \(2N^{c_2}\) elements of \(J^v(\vec {t}_i)\)Footnote 7 and if \(|J^v(\vec {t}_i)| \le 2N^{c_2}\), we have \(\widetilde{J}^v(\vec {t}_i) = J^v(\vec {t}_i)\). We define the set of marked elements M as follows:

The reason for using such a condition for marked vertices is that when we will perform an update, hence removing a point \(\vec {y}_{old}\) from a vertex and adding a point \(\vec {y}_{new}\), we will just need to look at the points in \(\widetilde{J}^v(\vec {t})\) for \(\vec {t}\in f_\beta (\vec {y}_{new}) \cap \mathcal {C}_2\) which can be done faster than by looking at all the points of the vertex. If we used \(J^v(\vec {t})\) instead of \(\widetilde{J}^v(\vec {t})\) then the argument would be simpler but we would only be able to argue about the average running time of the update but the quantum walk framework require to bound the update for any pair of adjacent verticesFootnote 8. Also notice that each vertex still contain the sets \(J^v(\vec {t}_i)\) (from which one can easily compute \(\widetilde{J}^v(\vec {t}_i)\)).

5.2 Time Analysis of the Quantum Random Walk on This Graph

We are now ready to analyze our quantum random walk, and compute its different parameters. Throughout our analysis, we define \(K(\vec {y}_i) :=f_\beta (\vec {y}_i) \cap \mathcal {C}_2\) and we have on average

Using Proposition 7, we have for each i,

and using a union bound, we have for any absolute constant \(\rho _0 > 0\):

So for a fixed \(\alpha \)-filter, we have with high probability that each \(|K(\vec {y}_i)|\) is bounded by \(2N^{\rho _0}\) and we assume we are in this case. The sets \(K(\vec {y}_i)\) can hence be constructed in time \(N^{{\rho _0} + o(1)}\) using the decoding procedure (Proposition 8) for \(\mathcal {C}_2\).

Setup Cost. In order to construct a full vertex v from a list \(L^v_y = \vec {y}_1,\dots ,\vec {y}_{N^{c_1}}\), the main cost is to construct the lists \(J^v(\vec {t}_i) = f_\beta (\vec {t}_i) \cap L^v_y\). To do this, we start from empty lists \(J^v(\vec {t}_i)\). For each \(\vec {y}_i \in L^v_y\), we construct the list \(K(\vec {y}_i) = f_\beta (\vec {y}_i) \cap \mathcal {C}_2\) and for each codeword \(\vec {t}_j \in K(\vec {y}_i)\), we add \(\vec {y}_i\) in \(J^v(\vec {t}_i)\).

This takes time \(N^{c_1} {\cdot }N^{{\rho _0} + o(1)}\). We can perform a uniform superposition of the vertices by performing the above procedure in quantum superposition. This can also be done in \(N^{c_1} {\cdot }N^{{\rho _0} + o(1)}\) since we use a quantum data structure that performs these insertions in \(J^v(\vec {t}_i)\) efficiently. So in conclusion,

Update Cost. We show here how to go from a vertex v with associated list \(L_y^v\) to a vertex w with \(L_y^w = \left( L_y^v\backslash \{\vec {y}_{old}\}\right) \cup \{\vec {y}_{new}\}\). We start from a vertex v so we also have the lists \(J^v(\vec {t}_i)= f_\beta (\vec {t}_i) \cap L^v_y\).

In order to construct the lists \(J^w(\vec {t}_i)\), we first construct \(K(\vec {y}_{old}) = f_\beta (\vec {y}_{old}) \cap \mathcal {C}_2\) and for each \(\vec {t}_i\) in this set, we remove \(\vec {y}_{old}\) from \(J^v(\vec {t}_i)\). Then, we construct \(K(\vec {y}_{new}) \) and for each \(\vec {t}_i\) in this set, we add \(\vec {y}_{new}\) to \(J^v(\vec {t}_i)\), thus obtaining all the \(J^w(\vec {t}_i)\). Constructing the two lists takes time on average \(N^{{\rho _0} + o(1)}\) and we then perform at most \(2N^{{\rho _0}}\) deletion and insertion operations which are done efficiently. These operations take \(N^{{\rho _0} + o(1)}\) deletions and insertions, which can be done efficiently.

If v was marked and \(\vec {y}_{old}\) is not part of the reducible pair then we do not change the last registers for \(L_y^w\). If v was not marked, then we have to ensure that adding \(\vec {y}_{new}\) doesn’t make it marked. So we need to check whether there exists \(\vec {y'} \ne \vec {y}_{new}\) such that

If such a point \(\vec {y}_0\) exists, it necessarily lies in the set \(\cup _{\vec {t}\in K(\vec {y}_{new})} \widetilde{J}^v(\vec {t})\) which is of size at most \(2N^{\rho }{\cdot }2N^{c_2} = 4N^{\rho _0 + c_2}\). We perform a Grover search on this set to determine whether there exists a \(\vec {y}_0 \in \cup _{\vec {t}\in \mathcal {C}_2} \widetilde{J}^v(\vec {t})\) that reduces with \(\vec {y}_{new}\), and this takes time \(N^{\frac{{\rho _0} + c_1 + o(1)}{2}}\). In conclusion, we have that the average update time is

Checking Cost. Each vertex has a bit that says whether it is marked or not so we have

Computing the Fraction of Marked Vertices Epsilon. We prove here the following proposition

Proposition 10

\(\varepsilon \ge \varTheta \left( \min \left\{ N^{2c_1}\mathbf {\mathcal {V}}_d(\beta ),1\right\} \right) \).

Proof

We consider a random vertex in the graph and lower bound the probability that it is marked. A sufficient condition for a vertex v to be marked is if it satisfies the following 2 events :

-

\(E_1:\)\(\exists \vec {t}\in \mathcal {C}_2, \exists \vec {y}_i,\vec {y}_j \ne \vec {y}_i \in J^v(\vec {t}), \text { st. } \theta (\vec {y}_i,\vec {y}_j) \le \theta ^*_{\alpha }\).

-

\(E_2 :\)\(\forall \vec {t}\in \mathcal {C}_2, |J^v(\vec {t})| \le 2N^{c_2}\).

The second property implies that \(\forall \vec {t}\in \mathcal {C}_2, \ J^v(\vec {t}) = \widetilde{J}^v(\vec {t})\) and in that case, the first property implies that v is marked. We now bound the probability of each event

Lemma 1

\(\Pr [E_1] \ge \varTheta \left( \min \left\{ N^{2c_1}\mathbf {\mathcal {V}}_d(\beta ),1\right\} \right) \).

Proof

For a fixed pair \(\vec {y}_i,\vec {y}_j \ne \vec {y}_i \in L^v_y\), we have \(\Pr [\theta (\vec {y}_i,\vec {y}_j) \le \theta ^*_\alpha ] = \mathbf {\mathcal {V}}_d(\theta ^*_\alpha )\). Since there are \(\varTheta (N^{2c_1})\) such pairs, if we define the event \(E_0\) as: \(\exists \vec {y}_i,\vec {y}_j \ne \vec {y}_i \in L^v_y, \text { st. } \theta (\vec {y}_i,\vec {y}_j) \le \theta ^*_\alpha \), we have

Now we assume \(E_0\) holds and we try to compute the probability that \(E_1\) is true conditioned on \(E_0\). So we assume \(E_0\) and let \(\vec {y}_i,\vec {y}_j \ne \vec {y}_i \in L^v_y, \text { st. } \theta (\vec {y}_i,\vec {y}_j) \le \theta ^*_\alpha \). For each code point \(\vec {t}\in \mathcal {C}_2\), we have

Therefore, we have

Since \(|\mathcal {C}_2 |= \frac{1}{\mathbf {\mathcal {W}}_d(\beta ,\theta ^*_\alpha )}\), we can conclude

which implies \(\Pr [E_1] \ge \Pr [E_1|E_0]{\cdot }\Pr [E_0] \ge \varTheta \left( \max \left\{ N^{2c_1}\mathbf {\mathcal {V}}_d(\beta ),1\right\} \right) \). \(\square \)

Lemma 2

\(\Pr [E_2] \ge 1 - |C_2|e^{-\frac{N^{c_2}}{3}}\).

Proof

For each \(\vec {t}\in \mathcal {C}_2\), we have using Proposition 7 that \(\Pr [|J^v(\vec {t})| \le 2N^{c_2}] \ge 1 - e^{-\frac{N^{c_2}}{3}}\). Using a union bound, we have

\(\square \)

We can now finish the proof of our Proposition. We have

The last inequality comes from the fact that \(|C_2|e^{-\frac{N^{c_2}}{3}}\) is vanishing doubly exponentially in d (N is exponential in d) so it is negligible compared to the first term and is absorbed by the \(\varTheta ({\cdot })\). \(\square \)

Computing the Spectral Gap Delta. We are in a \(J(N^c,N^{c_1})\) Johnson graph so we have

Running Time of the Quantum Walk. The running time \(T_1\) of the quantum walk is (omitting the o(1) terms and the \(O({\cdot })\) notations)

In this running time, we can find one marked vertex with high probability if it exists. We repeat this quantum random walk until we find \(\max \{\frac{N^{\zeta }}{2},1\}\) solutions.

For \(\zeta > 0\), there are \(N^{\zeta }\) different solutions that can be found in each \(\alpha \)-filter. Each time we find a solution, since the list of solutions found is \(< \frac{N^{\zeta }}{2}\). Therefore, the probability that each solutions found by the QRW is new is at least \(\frac{1}{2}\). We have therefore

If \(\zeta > 0\), our algorithm finds \(\varTheta (N^{\zeta })\) solutions in time \(N^{\zeta }T_1\) and if \(\zeta \le 0\), our algorithm finds 1 solution in time \(T_1\) with probability \(\varTheta (N^{-\zeta })\).

5.3 Memory Analysis

Classical Space. We have to store at the same time in classic memory the N list vectors of size d, and the buckets of the \(\alpha \)-filters. Each vector is in \(N^{o(1)}\) \(\alpha \)-filter, so our algorithm takes classical space \(N^{1 + o(1)}\).

Memory Requirements of the Quantum Random Walk. Each vertex v of the graph stores all the \(J^v(\vec {t}_i)\) which together take space \(N^{c_1 + {\rho _0}}\). We need to store a superposition of vertices so we need \(N^{c_1 + {\rho _0}}\) quantum registers and we need that same amount of QRAM because we perform insertions and deletions in the database in quantum superposition. All the operations require QRAM access to the whole list \(L_y\) which is classically stored and is of size \(N^c\). Therefore, we also require \(N^c\) QRAM.

5.4 Optimal Parameters for This Quantum Random Walk

Our algorithm takes in argument three parameters: \(c \in [0,1]\), \(c_1 \le c\) and \(c_2 \le c_1\) from which we can express all the other variables we use: \(\alpha \), \(\theta ^*_\alpha \), \(\beta \), \({\rho _0}\) and \(\zeta \). We recall these expressions as they are scattered throughout the previous sections:

-

\(\alpha \): angle in \([\pi /3,\pi /2]\) that satisfies \(\mathbf {\mathcal {V}}_d(\alpha ) = \frac{1}{N^{1-c}}\).

-

\(\theta ^*_\alpha = 2\arcsin (\frac{1}{2\sin (\alpha )})\).

-

\(\beta \): angle in \([\pi /3,\pi /2]\) that satisfies \(\mathbf {\mathcal {V}}_{d}(\beta ) = \frac{1}{N^{c_1 - c_2}}\).

-

\({\rho _0}\): non-negative real number such that \(N^{\rho _0} = \frac{\mathbf {\mathcal {V}}_d(\beta )}{\mathbf {\mathcal {W}}_d(\beta ,\theta ^*_\alpha )}\).

-

\(\zeta \): real number such that \(N^{\zeta } = N^{2c}\mathbf {\mathcal {V}}_{d}(\theta ^*_\alpha )\).

Plugging the value of \(\mathrm {FAS}_1\) from the end of Sect. 5.2 in Proposition 9, we find that the total running time of our quantum sieving algorithm with parameters \(c,c_1,c_2\) is

We ran a numerical optimization over \(c,c_1,c_2\) to get our optimal running time, summed up in the following theorem.

Proposition 11

Our algorithm with parameters

heuristically solves SVP on dimension d in time \(T = N^{1.2555 + o(1)} = 2^{0.2605d + o(d)}\), uses QRAMM of maximum size \(N^{0.3300 + o(1)} = 2^{0.0685d + o(d)}\), a quantum memory of size \(N^{0.2555 + o(1)} = 2^{0.0530d + o(d)}\) and uses a classical memory of size \(N^{1 + o(1)} = 2^{0.2075d + o(d)}\).

With these parameters, we obtain the values of the other parameters:

As well as the quantum walk parameters:

The equality \({\rho _0} = c_2\) allows to balance the time of the two operations during the update step. With these parameters we also obtain \( \mathbf {\mathcal {S}}= \mathcal {U}/{\sqrt{\epsilon ~ \delta }} = N^{c_1 + {\rho _0}} = N^{0.2555d}\), which balances the overall time complexity.

Notice that with these parameters, we can rewrite T as

Also, we have \(c_1 + {\rho _0} = c - \zeta \), which equalizes the random walk step with the initialization step. From our previous analysis, the amount of required QRAM is \(N^{c}\) and the amount of quantum memory needed is \(N^{c_1 + {\rho _0}}\).

6 Quantum Random Walk for the FindAllSolutions Subroutine: An Improved Quantum Random Walk

We now add a variable \(\rho \in (0,\rho _0]\) that will replace the choice of \(\rho _0\) above. \(\rho _0\) was chosen in order to make sure that if a pair \(\vec {y}_i,\vec {y}_j\) exists in a vertex v, then it will appear on one of the \(J^v(\vec {t})\) for \(\vec {t}\in \mathcal {C}_2\). However, we can relax this and only mark a small fraction of these vertices. This will reduce the fraction of marked vertices, which makes it harder to find a solution, but having a smaller \(\rho \) will reduce the running time of our quantum random walk.

The construction is exactly the same as in the previous section just that we replace \(\rho _0\) with \(\rho \). This implies that \(|C_2| = N^{\rho + c_1 - c_2}\). We can perform the same analysis as above

Time Analysis of this QRW in the Regime \(\zeta + \rho - \rho _0 > 0\). We consider the regime where \(\zeta + \rho - \rho _0 > 0\) and \(\rho \in (0,\rho _0]\) (in particular \(\zeta > 0\), since \(\rho _0 > 0\)). This regime ensures that even when if we have less marked vertices, then there on average more than one marked vertex, so our algorithm at least finds one solution with a constant probability.

The analysis walk is exactly the same than in Sect. 5.2, each repetition of the quantum random walk takes time \(T_1\) with

with

The only thing maybe to develop is the computation of \(\varepsilon \). We perform the same analysis as above but with \(|C_2| = N^{\rho + c_1 - c_2}\). This means that Eq. 5 of Lemma 1 becomes

which gives the extra term \(N^{\rho - \rho _0}\) in \(\varepsilon \). Another issue is that now, we can only extract \(N^{\zeta + \rho - \rho _0}\) solutions each time we construct the graph, we have therefore to repeat this procedure to find \(\frac{N^{\zeta + \rho - \rho _0}}{2}\) solutions with this graph and then repeat the procedure with a new code \(\mathcal {C}_2\). The algorithm becomes

With this procedure, we also find \(\varTheta (N^{\zeta })\) solutions in time \(N^{\zeta }T_1\) and \(\mathrm {FAS}_1 = N^{\zeta }T_1\) (Recall that we are in the case \(\zeta \ge \zeta + \rho - \rho _0 > 0\)). Actually, optimal parameters will be when \(c_2 = 0\) and \(\rho \rightarrow 0\).

6.1 Analysis of the Above Algorithm

This change implies that some reducing pairs are missed. For the quantum random walk complexity, this only change the probability, denoted \(\epsilon \), so that a vertex is marked. Indeed, it is equal to the one so that there happens a collision between two vectors through a filter, which is no longer equal to the existence of a reducing pair within the vertex. Indeed, to have a collision, there is the supplementary condition of both vectors of a reducing pair are inserted in the same filter, which is of probability \(N^{\rho _0-\rho }\). So we get a higher value of \(\epsilon = N^{2c_1} \mathbf {\mathcal {V}}_d(\theta _\alpha ^*) \cdot N^{\rho _0-\rho }\).

However, this increasing is compensated by the reducing of the costs of the setup (\(N^{c_1+\rho +o(1)}\)) and the update (\(2 N^{\max \{\rho , \frac{\rho +c_2}{2}\}+o(1)}\)).

A numerical optimisation over \(\rho , c, c_1\) and \(c_2\) leads to the following theorem.

Theorem 4

(Theorem 1 restated). Our algorithm with a free \(\rho \) with parameters

heuristically solves SVP on dimension d in time \(T = N^{1.2384 + o(1)} = 2^{0.2570 d + o(d)}\), uses QRAM of maximum size \(N^{0.3696} = 2^{0.0767d}\), a quantum memory of size \(N^{0.2384} = 2^{0.0495d}\) and uses a classical memory of size \(N^{1 + o(1)} = 2^{0.2075d + o(d)}\).

With these parameters, we obtain the values of the other parameters:

As well as the quantum walk parameters:

With these parameters, we also have \(\rho _0 = 0.107\) so we are in the regime where \(\zeta + \rho - \rho _0 > 0\). As in the previous time complexity stated in Theorem 11, we reach the equality \(S = U/\sqrt{\epsilon \delta }\), which allows to balance the time of the two steps of the quantum random walk: the setup and the search itself.

Notice that with these parameters, we can rewrite T as

With our optimal parameters, we have \(\rho = 0\) and \(c - \zeta = c_1\), which equalizes the random walk step with the initialization step. From our previous analysis, the amount of required QRAM is \(N^{c}\) and the amount of quantum memory needed is \(N^{c_1}\).

7 Space-Time Trade-Offs

By varying the values \(c, c_1, c_2\) and \(\rho \), we can obtain trade-offs between QRAM and time, and between quantum memory and time. All the following results come from numerical observations.

7.1 Trade-Off for Fixed Quantum Memory

We computed the minimized time if we add the constraint that the quantum memory must not exceed \(2^{Md}\). For a chosen fixed M, the quantum memory is denoted is \(2^{\mu _M d} = 2^{Md}\) and the corresponding minimal time by \(2^{\tau _M d}\). The variation of M also impacts the required QRAM to run the algorithm, that we denote by \(2^{\gamma _M d}\).

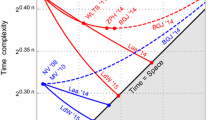

So we get a trade-off between time and quantum memory in Fig. 1, and the evolution of QRAM in function of M for a minimal time is in Fig. 2.

For more than \(2^{0.0495d}\) quantum memory, increasing it does not improve the time complexity anymore. An important fact is that for a fixed M the corresponding value \(\tau _M\) from Fig. 1 and \(\gamma _M\) from Fig. 2 can be achieved simultaneously with the same algorithm.

We observe that from \(M=0\) to 0.0495 these curves are very close to affine. Indeed, the function that passes through the two extremities points is of expression \(0.2653 - 0.1670M\). The difference between \(\tau _M\) and its affine approximation does not exceed \(4{\cdot }10^{-5}\). By the same way, the difference between \(\gamma _M\) and its affine average function of expression \(0.0578 + 0.3829M\) is inferior to \(2 {\cdot }10^{-4}\). All this is summarized in the following theorem.

Theorem 5

(Trade-off for fixed quantum memory). There exists a quantum algorithm using quantum random walks that solves SVP on dimension d which for a parameter \(M \in [0,0.0495]\) heuristically runs in time \(2^{\tau _M d + o(d)}\), uses QRAM of maximum size \(2^{\gamma _M d}\), a quantum memory of size \(2^{\mu _M d}\) and a classical memory of size \(2^{0.2075d}\) where

In the informal formulation of this theorem, we used the symbols \(\lessapprox \) and \(\gtrapprox \) that refers to these hidden small values.

7.2 Trade-Off for Fixed QRAM

We also get a trade-off between QRAM and time. For a chosen fixed \(M'\), the QRAM is denoted by \(2^{\gamma _{M'} d} = 2^{M'd}\), and the corresponding minimal time by \(2^{\tau _{M'} d}\). The required quantum memory is denoted \(2^{\mu _{M'} d}\). Note that \(2^{\mu _{M'} d}\) is the also the amount of the required quantum QRAM called “QRAQM”.

This gives a trade-off between time and QRAM in the Fig. 3, and the evolution of quantum memory in function of \(M'\) is in the Fig. 4.

For more than \(2^{0.0767d}\) QRAM, increasing it does not improve the time complexity.

The difference between the function \(\tau _{M'}\) and its average affine function of expression \(0.2926-0.4647 {\cdot }M'\) does not exceed \(6 {\cdot }10^{-4}\). This affine function is a upper bound of \(\tau _{M'}\).

From \(M'=0\) to 0.0579 the function \(\gamma _{M'}\) is at 0. Then, it is close to the affine function of expression \(2.6356(M'-0.0579)\). So \(\gamma _{M'}\) can be approximated by \(\max \{2.6356(M'-0.0579), 0\}\), and the difference between \(\gamma _{M'}\) and this approximation does not exceed \(9 {\cdot }10^{-4}\). All this is summarized in the following theorem.

Theorem 6

(Trade-off for fixed QRAM). There exists a quantum algorithm using quantum random walks that solves SVP on dimension d which for a parameter \(M' \in [0,0.0767]\) heuristically runs in time \(2^{\tau _{M'} d + o(d)}\), uses QRAM of maximum size \({\mathsf {poly}}(d) \cdot 2^{\gamma _{M'} d}\), a quantum memory of size \({\mathsf {poly}}(d) \cdot 2^{\mu _{M'} d}\) and uses a classical memory of size \({\mathsf {poly}}(d) \cdot 2^{0.2075d}\) where

Finally, we present a table with a few values that presents some of the above trade-offs (Fig. 5).

8 Discussion

Impact on Lattice-Based Cryptography. Going from a running time of \(2^{0.2653d + o(d)}\) to \( 2^{0.2570 d + o(d)}\) slightly reduces the security claims based on the analysis of the SVP (usually via the BKZ algorithm). For example, if one claims 128 bits of security using the above exponent then one must reduce this claim to 124 bits of quantum security. This of course can usually be fixed with a slight increase of the parameters but cannot be ignored if one wants to have the same security claims as before.

Parallelization. On thing we haven’t talked about in this article is whether our algorithm paralellizes well. Algorithm 1 seems to parallelize very well, and we argue that it is indeed the case.

For this algorithm, the best classical algorithm takes \(c \rightarrow 0\). In this case, placing each \(\vec {v}\in L\) in its corresponding \(\alpha \)-filters can be done in parallel and with N processors (or N width) it can be done in time \({\mathsf {poly}}(d)\). Then, there are N separate instances of \(\mathbf {FindAllSolutions}\) which can be also perfectly parallelized and each one also takes time \({\mathsf {poly}}(d)\) when \(c \rightarrow 0\). The while loop is repeated \(N^{-\zeta } = N^{0.409d}\) times so the total running time (here depth) is \(N^{0.409d + o(d)}\) with a classical circuit of width N. Such a result already surpasses the result from [BDGL16] that achieves depth \(N^{1/2}\) with a quantum circuit of width N using parallel Grover search.

In the quantum setting, our algorithm parallelizes also quite well. If we consider our optimal parameters (\(c = 0.3696\)) with a similar reasoning, our algorithm will parallelize perfectly with \(N^{1-c}\) processors (so that there is exactly one for each call to \(\mathbf {FindAllSolutions}\) i.e. for the quantum random walk). Unfortunately, after that, we do not know how to parallelize well within the quantum walk. When we consider circuits of width N, our optimizations didn’t achieve better than a depth of \(N^{0.409 + o(d)}\) which is the classical parallelization. This is also the case if we use Grover’s algorithm as in [Laa16] for the \(\mathbf {FindAllSolutions}\) and we use parallel Grover search as in [BDGL16] so best known (classical or quantum) algorithm with lowest depth that uses a circuit of width N is the classical parallel algorithm described above.

Notes

- 1.

The SVP challenge can be accessed here https://www.latticechallenge.org/svp-challenge.

- 2.

At this stage, there are 3 encryption schemes/key encapsulation mechanisms: KYBER, NTRU and SABER as well as two signature schemes: DILITHIUM and FALCON.

- 3.

- 4.

We remain a bit imprecise and informal here as we haven’t properly described sieving algorithms yet.

- 5.

We are only interested in asymptotic running time here so we are not interested in the choice of this universal gate set, as they are all essentially equivalent from the Solovay-Kitaev theorem (see [NC00], Appendix 3).

- 6.

For a regular graph, if \(\lambda _1> \dots > \lambda _{|V|}\) are the eigenvalues of the normalized adjacency matrix of G, then \(\delta = \lambda _1 - \max _{i = 2 \dots n} |\lambda _i|\).

- 7.

We consider an global ordering of elements of \(L_y\), for example with respect to their index, and \(J^v(\vec {t}_i)\) consists of the \(2N^{c_2}\) elements of \({J}^v(\vec {t}_i)\) which are the smallest with respect to this ordering.

- 8.

This problem arises in several quantum random walk algorithms, for example for quantum subset-sum algorithms. One solution is to use a heuristic that essentially claims that we can use the average running time of the update cost instead of the worst case. In our case, we don’t need this heuristic as we manage to bound the update cost in the worst case. We refer to [BBSS20] for an interesting discussion on the topic.

References

Arunachalam, S., Gheorghiu, V., Jochym-O’Connor, T., Mosca, M., Srinivasan, P.V.: On the robustness of bucket brigade quantum RAM. New J. Phys. 17(12), 123010 (2015)

Albrecht, M.R., Gheorghiu, V., Postlethwaite, E.W., Schanck, J.M.: Estimating quantum speedups for lattice sieves. In: Moriai, S., Wang, H. (eds.) ASIACRYPT 2020. LNCS, vol. 12492, pp. 583–613. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-64834-3_20

Andoni, A., Indyk, P., Nguyên, H.L., Razenshteyn, I.: Beyond locality-sensitive hashing. In: SODA, pp. 1018–1028 (2014)

Ajtai, M.: Generating hard instances of lattice problems (extended abstract). In: Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing, STOC’96, pp. 99–108. Association for Computing Machinery, New York, NY, USA (1996)

Ajtai, M.: The shortest vector problem in L2 is NP-hard for randomized reductions (extended abstract). In: 30th Annual ACM Symposium on Theory of Computing Proceedings, pp. 10–19 (1998)

Ambainis, A.: Quantum walk algorithm for element distinctness. SIAM J. Comput. 37(1), 210–239 (2007)

Andoni, A., Razenshteyn, I.: Optimal data-dependent hashing for approximate near neighbors. In: STOC, pp. 793–801 (2015)

Bonnetain, X., Bricout, R., Schrottenloher, A., Shen, Y.: Improved classical and quantum algorithms for subset-sum. In: Moriai, S., Wang, H. (eds.) ASIACRYPT 2020. LNCS, vol. 12492, pp. 633–666. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-64834-3_22

Becker, A., Ducas, L., Gama, N., Laarhoven, T.: New directions in nearest neighbor searching with applications to lattice sieving. In: Proceedings of the 2016 Annual ACM-SIAM Symposium on Discrete Algorithms (2016)

Bernstein, D.J., Jeffery, S., Lange, T., Meurer, A.: Quantum algorithms for the subset-sum problem. In: Gaborit, P. (ed.) PQCrypto 2013. LNCS, vol. 7932, pp. 16–33. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-38616-9_2

Becker, A., Laarhoven, T.: Efficient (ideal) lattice sieving using cross-polytope LSH. In: Pointcheval, D., Nitaj, A., Rachidi, T. (eds.) AFRICACRYPT 2016. LNCS, vol. 9646, pp. 3–23. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-31517-1_1

Chen, C., et al.: NTRU. Round-3 submission to the NIST PQC project (2019)

Charikar, M.S.: Similarity estimation techniques from rounding algorithms. In: STOC, pp. 380–388 (2002)

Ducas, L., et al.: Crystals-dilithium, algorithm specifications and supporting documentation. Round-3 submission to the NIST PQC project (2019)

de Wolf, R.: Quantum computing: Lecture notes (2019)

Fouque, P.-A., et al.: Falcon: fast-fourier lattice-based compact signatures over NTRU. Round-3 submission to the NIST PQC project (2019)

Fincke, U., Pohst, M.: Improved methods for calculating vectors of short length in a lattice. Math. Comput. 44(170), 463–471 (1985)

Gentry, C.: Fully homomorphic encryption using ideal lattices. In: Proceedings of the Forty-First Annual ACM Symposium on Theory of Computing, STOC ’09, pp. 169–178. Association for Computing Machinery, New York, NY, USA (2009)

Giovannetti, V., Lloyd, S., Maccone, L.: Quantum random access memory. Phys. Rev. Lett. 100, 160501 (2008)

Grover, L.: A fast quantum mechanical algorithm for database search. In: Proceedings 28th Annual ACM Symposium on the Theory of Computing STOC, pp. 212–219 (1996)

Helm, A., May, A.: Subset sum quantumly in 1.17\(^{\text{n}}\). In: Jeffery, S., (ed.), 13th Conference on the Theory of Quantum Computation, Communication and Cryptography, TQC 2018, 16–18 July 2018, Sydney, Australia, volume 111 of LIPIcs, pp. 5:1–5:15. Schloss Dagstuhl - Leibniz-Zentrum für Informatik (2018)

Indyk, P., Motwani, R.: Approximate nearest neighbors: towards removing the curse of dimensionality. In: STOC, pp. 604–613 (1998)

Kannan, R.: Improved algorithms for integer programming and related lattice problems. In: Proceedings of the 15th Symposium on the Theory of Computing (STOC), pp. 99–108. ACM Press (1983)

Klein, P.: Finding the closest lattice vector when it’s unusually close. In: SODA, pp. 937–941 (2000)

Kirshanova, E., Martensson, E., Postlethwaite, E.W., Moulik, S.R.: Quantum algorithms for the approximate k-list problem and their application to lattice sieving. In: Galbraith, S.D., Moriai, S. (eds.) ASIACRYPT 2019. LNCS, vol. 11921, pp. 521–551. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-34578-5_19

Kachigar, G., Tillich, J.-P.: Quantum information set decoding algorithms. In: Lange, T., Takagi, T. (eds.) PQCrypto 2017. LNCS, vol. 10346, pp. 69–89. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59879-6_5

Laarhoven, T.: Sieving for shortest vectors in lattices using angular locality-sensitive hashing. In: Gennaro, R., Robshaw, M. (eds.) CRYPTO 2015. LNCS, vol. 9215, pp. 3–22. Springer, Heidelberg (2015). https://doi.org/10.1007/978-3-662-47989-6_1

Laarhoven, T.: Search problems in cryptography, from fingerprinting to lattice sieving. Ph.D. thesis, Eindhoven University of Technology (2016)

Laarhoven, T., de Weger, B.: Faster sieving for shortest lattice vectors using spherical locality-sensitive hashing. In: Lauter, K., Rodríguez-Henríquez, F. (eds.) LATINCRYPT 2015. LNCS, vol. 9230, pp. 101–118. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-22174-8_6

Lenstra, A.K., Lenstra, H.W., Lovasz, L.: Factoring polynomials with rational coefficients. Math. Ann. 261, 513–534 (1982)

Laarhoven, T., Mosca, M., van de Pol, J.: Finding shortest lattice vectors faster using quantum search. Des. Codes Cryptogr. 77(2–3), 375–400 (2015)

Magniez, F., Nayak, A., Roland, J., Santha, M.: Search via quantum walk. SIAM J. Comput. 40(1), 142–164 (2011)

Micciancio, D., Voulgaris, P.: Faster exponential time algorithms for the shortest vector problem. In: SODA, pp. 1468–1480 (2010)

Nielsen, M.A., Chuang, I.L.: Quantum Computation and Quantum Information. Cambridge University Press, New York, NY, USA (2000)

Nguyen, P.Q., Vidick, T.: Sieve algorithms for the shortest vector problem are practical. J. Math. Crypt. 2, 181–207 (2008)

Pohst, M.E.: On the computation of lattice vectors of minimal length, successive minima and reduced bases with applications. ACM SIGSAM Bull. 15(1), 37–44 (1981)

Tulsiani, M., Kundu, S.K., Mitzenmacher, M., Upfal, E., Spencer, J.H.: Probability and Computing: Randomized Algorithms and Probabilistic Analysis. Cambridge University Press, Cambridge (2013)

Terasawa, K., Tanaka, Y.: Spherical LSH for approximate nearest neighbor search on unit hypersphere. In: Dehne, F., Sack, J.-R., Zeh, N. (eds.) WADS 2007. LNCS, vol. 4619, pp. 27–38. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-73951-7_4

Wang, X., Liu, M., Tian, C., Bi, J.: Improved Nguyen-Vidick heuristic sieve algorithm for shortest vector problem. In: Proceedings of the 6th ACM Symposium on Information, Computer and Communications Security, ASIACCS ’11, pp. 1–9. Association for Computing Machinery, New York, NY, USA (2011)

Zhang, F., Pan, Y., Hu, G.: A three-level sieve algorithm for the shortest vector problem. In: Lange, T., Lauter, K., Lisoněk, P. (eds.) SAC 2013. LNCS, vol. 8282, pp. 29–47. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-43414-7_2

Acknowledgments and Paths for Improvements

The authors want to thank Simon Apers for helpful discussions about quantum random walks, in particular about the fact that there are no better generic algorithms for finding k different marked than to run the whole random walk (including the setup) O(k) times. There could however be a smarter way to do this in our setting which would improve the overall complexity of our algorithm. Another possible improvement would be to embed the local sensitivity property in the graph on which we perform the random walk instead of working on the Johnson graph.

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 International Association for Cryptologic Research

About this paper

Cite this paper

Chailloux, A., Loyer, J. (2021). Lattice Sieving via Quantum Random Walks. In: Tibouchi, M., Wang, H. (eds) Advances in Cryptology – ASIACRYPT 2021. ASIACRYPT 2021. Lecture Notes in Computer Science(), vol 13093. Springer, Cham. https://doi.org/10.1007/978-3-030-92068-5_3

Download citation

DOI: https://doi.org/10.1007/978-3-030-92068-5_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92067-8

Online ISBN: 978-3-030-92068-5

eBook Packages: Computer ScienceComputer Science (R0)