Abstract

The core results of the Kreı̆n-Višik-Birman theory of self-adjoint extensions of semi-bounded symmetric operators are reproduced, both in their original and in a more modern formulation, with a comprehensive discussion that includes missing details, elucidative steps, and intermediate results of independent interest.



1 Introduction

This work has a two-fold purpose. On the one hand, it aims at reproducing in their original form the key results of the so-called Kreı̆n-Višik-Birman theory of self-adjoint extensions of semi-bounded symmetric operators, providing an expanded discussion, including missing details and supplementary formulas, of the original works, which to a large extent are written in a rather compact style and are available in Russian only. On the other hand, the goal is to show how the original results can be equivalently re-written into what is now the more modern formulation of the theory, as found in the (relatively limited) English-language literature on the subject, a formulation which is derived by alternative means, typically boundary triplet techniques.

The whole field is nowadays undoubtedly classical, and in fact to some extent even superseded by more modern mathematical techniques. Let us then give evidence in this Introduction of the context and of the degree of novelty of this work.

Self-adjointness and self-adjoint extension theory constitute a well-established branch of functional analysis and operator theory, with deep-rooted motivations and applications, among others, in the boundary value problems for partial differential equations and in the mathematical methods for quantum mechanics and quantum field theory. At the highest level of generality, it is von Neumann’s celebrated theory of self-adjoint extensions that provides, very elegantly, the complete solution to the problem of finding self-adjoint operators that extend a given densely defined symmetric operator S on a given Hilbert space \(\mathscr {H}\). As is well known, the whole family of such extensions is naturally indexed by all the unitary maps U between the subspaces \(\ker (S^*-z)\) and \(\ker (S^*-\overline {z})\) of \(\mathscr {H}\) for a fixed \(z\in \mathbb {C}\setminus \mathbb {R}\), the condition that such subspaces be isomorphic being necessary and sufficient for the problem to have a solution; each extension \(S_U\) is determined by an explicit constructive recipe, given U and the above subspaces.
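For orientation, the constructive recipe alluded to above can be sketched as follows. This is the standard textbook form of von Neumann's construction, recalled here rather than taken from the present text; the direction of U, namely \(U:\ker (S^*-z)\to \ker (S^*-\overline {z})\), and the sign in front of \(Uu\) are conventions assumed for the sketch (other sources use the opposite sign, which merely relabels U).

```latex
% Assumed convention: U : ker(S^* - z) -> ker(S^* - \bar z) unitary, z fixed in C \ R.
\[
\begin{aligned}
\mathscr{D}(S_U) &= \bigl\{\, f+u+Uu \;\big|\; f\in\mathscr{D}(\overline{S}),\ u\in\ker(S^*-z) \,\bigr\},\\
S_U\,(f+u+Uu) &= \overline{S}f + z\,u + \overline{z}\,Uu .
\end{aligned}
\]
```

The action in the second line is forced by \(S_U\subset S^*\), since \(S^*u=zu\) and \(S^*Uu=\overline {z}\,Uu\).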

A relevant special case is when S is semi-bounded (one customarily assumes it to be bounded below, and so shall we henceforth), which is in fact a typical situation in the quest for stable quantum mechanical Hamiltonians. In this case \(\ker (S^*-z)\) and \(\ker (S^*-\overline {z})\) are necessarily isomorphic, which guarantees the existence of self-adjoint extensions. Among them, a canonical form construction (independent of von Neumann’s theory) shows that there exists a distinguished one, the Friedrichs extension \(S_F\), whose bottom coincides with that of S, which is characterised by being the only self-adjoint extension whose domain is entirely contained in the form domain of S, and which has the property of being the largest among all semi-bounded self-adjoint extensions of S, in the sense of the operator ordering “\(\geqslant \)” for self-adjoint operators.

The first systematic extension theory for semi-bounded operators is due to Kreı̆n. Kreı̆n’s theory shows that all self-adjoint extensions of S, whose bottom is above a prescribed reference level \(m\in \mathbb {R}\), are naturally ordered, in the sense of “\(\geqslant \)”, between the Friedrichs extension from above (the “rigid” (жёсткое) extension, in Kreı̆n’s terminology), and a unique lowest extension \(S_N\) from below, whose bottom is not less than the chosen m, the so-called Kreı̆n-von Neumann extension (the “soft” (мягкое) extension). In short: \(S_F\geqslant \widetilde {S}\geqslant S_N\) for any such extension \(\widetilde {S}\). (We refer to Appendix A for a more detailed summary of von Neumann’s and Kreı̆n’s extension theory, or to modern overviews such as [56, Chapter X] and [60, Chapter 13].)

By the Kreı̆n-Višik-Birman (KVB) theory (where the order here reflects the chronological appearance of the seminal works of Kreı̆n [34], Višik [66], and Birman [8]), one means a development of Kreı̆n’s original theory, in the form of an explicit and extremely convenient classification of all self-adjoint extensions of a given semi-bounded and densely defined symmetric operator S, both in the operator sense and in the quadratic form sense.

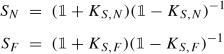

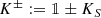

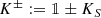

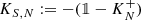

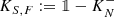

As the distinction “Kreı̆n vs KVB” may appear a somewhat artificial retrospective, let us emphasize the following. In Kreı̆n’s original work [34] each extension of S is proved to be bijectively associated with a self-adjoint extension, with unit norm, of the Kreı̆n transform of S. This way, a difficult self-adjoint extension problem (extension of S) is shown to be equivalent to an easier one (extension of the Kreı̆n transform of S), yet no general parametrisation of the extensions of S is given. The full KVB theory provides in addition a parametrisation of the extensions, labelling each of them in the form \(S_B\) where B runs over all self-adjoint operators acting on Hilbert subspaces of \(\ker (S^*+\lambda \mathbbm {1})\), for some large enough \(\lambda \geqslant 0\).

The KVB theory has a number of features that make it in many respects more informative as compared to von Neumann’s. First and most importantly, the KVB parametrisation \(B\leftrightarrow S_B\) identifies special subclasses of extensions of S, such as those whose bottom is above a prescribed level, in terms of a corresponding subclass of parameters B. In particular, both the Friedrichs extension \(S_F\) and the Kreı̆n-von Neumann extension \(S_N\) of S relative to a given reference lower bound can be selected a priori, meaning that the special parameter B that identifies \(S_F\) or \(S_N\) is explicitly known. In contrast, the parametrisation \(U\leftrightarrow S_U\) based on unitaries U provided by von Neumann’s theory does not identify a priori the particular U that gives \(S_F\) or \(S_N\). A good deal of further relevant information concerning each extension, including invertibility, semi-boundedness, and special features of its negative spectrum (finiteness, multiplicity, accumulation points), turns out to be controlled by the analogous properties of the extension parameter. Furthermore, the KVB extension theory has a natural and explicit re-formulation in terms of quadratic forms, an obviously missing feature in von Neumann’s theory. On this last point, it is worth emphasizing that whereas the KVB classification of the extensions as operators is completely general, the classification in terms of the corresponding quadratic forms only applies to the family of semi-bounded self-adjoint extensions of S, while unbounded below extensions (if any) escape this part of the theory (see Footnote 1).

For several historical and scientific reasons (a fact that itself would indeed deserve a separate study) the mathematical literature in English language on the KVB theory is considerably more limited as compared to von Neumann’s theory. Over the decades the tendency has been in general to re-derive and discuss the main results through routes and with notation and “mathematical flavour” that differ from the original formulation.

At the price of an unavoidable oversimplification, we can say that while in the applications to quantum mechanics von Neumann’s theory has achieved a dominant role, and is nowadays a textbook standard, on a more abstract mathematical level the original results of Kreı̆n, Višik, and Birman, and their applications to boundary value problems for elliptic PDE, have eventually found a natural evolution and generalisation within the modern theory of boundary triplets. Thus, in modern terms the deficiency space of S is referred to as a boundary value space, this space is then equipped with a boundary triplet structure, and the extensions of S are parametrised by linear relations on the boundary space, with a distinguished position for the Friedrichs and the Kreı̆n-von Neumann extensions that are intrinsically encoded in the choice of the boundary triplet.

However, this work is neither meant nor going to move from the point of view of the boundary triplet theory, which is surely a beautiful and prolific scheme within which one can indeed retrieve the old results of Kreı̆n, Višik, and Birman—in fact, the latter approach is already available in the literature: for example a recent, concise, and relatively complete survey of the re-derivation of Kreı̆n, Višik, and Birman from the boundary triplet theory may be found in [60, Chapters 13 and 14], and in the references therein.

Our perspective, instead, is primarily motivated by the use of the KVB theory in the rigorous construction of quantum Hamiltonians of particle systems with contact interactions and, more generally, in the extension theory of quantum Hamiltonians of few-body and many-body systems where the interaction is singular enough so as to make perturbative techniques inapplicable, and hence to give rise to a multiplicity of self-adjoint realisations to qualify and classify. This is a realm where customarily the largest part of the mathematics is worked out by means of the ‘dominant’ extension theory à la von Neumann, or by the theory of quadratic forms on Hilbert space, and to a lesser extent by means of tools from non-standard analysis or boundary triplets. However, results from Kreı̆n and Birman have long been known to bring complementary or even new information in this context: one paradigmatic instance is the study of particle Hamiltonians of contact interaction carried out by trailblazers such as Berezin, Faddeev, and above all Minlos and his school [45, 50, 51] (an ample historical survey of which is in [41, Section 2], further references being provided here at the end of Sect. 4). In the above-mentioned contexts, as we shall elaborate further later, comprehensive references in the literature are, and have been over the decades, quite limited in number and scope (as discussed in Sect. 4), due also to the circumstance that most of the original sources were available in Russian only.

The last considerations constitute our motivations for the present note, the object of which is therefore: first, to provide an exhaustive recapitulation of the KVB theory and of its ‘elementary’ derivation in the original operator-theoretic framework, thus with no reference to more modern superstructures such as boundary triplets; second, to reproduce, neatly and directly from the original formulation, the ‘modern’ version of the main results of the theory, that is, the version appearing essentially in all the (western) literature after Kreı̆n, Višik, and Birman, and derived therein through more involved and more general schemes.

To this aim, in Sect. 2 we devote a careful effort to replicate the original results of the KVB theory, in order to make it accessible in its full rigour (and in English language) with respect to its initial version, that is, by filling certain gaps of the original proofs, supplementing the material with non-ambiguous notation and elucidative steps, producing and highlighting intermediate results that have their own independent interest. In a way, and this is our first novelty, the outcome is a complete and self-consistent “reading guide” for the original results and for the route to demonstrate them, together with a clean presentation of all the main statements that are mostly referred to in the applications, significantly Theorem 1 (together with the special cases (5) and (7)), Theorems 2, 3, and 4, and Proposition 2.

Mirroring such analysis, in Sect. 3 we reproduce an alternative, equivalent version of the main results of the KVB theory. These statements are actually those by which (part of) the KVB theory has been presented, re-derived, discussed, and applied in the subsequent literature in English language. Typically this was done by means of modern boundary-triplet-based extension techniques, whereas the novelty here is to derive such results directly from the original ones, with the minimum amount of operator theory (the key is the inversion mechanism of our Proposition 3). Thus, Sect. 3 has the two-fold feature of proving the equivalence between “modern” and “original” formulations and of providing another reference scheme of all main results, significantly Theorems 5, 6, and 7, Proposition 4, Theorem 8, and Proposition 5.

In Sect. 4 we place the KVB theory into a concise historical perspective of motivations, further developments, and applications.

In Sect. 5 we complete the main core of the theory with results that characterise relevant properties of the extensions, such as invertibility, semi-boundedness, and other special features of the negative discrete spectrum, in terms of the corresponding properties of the extension parameter.

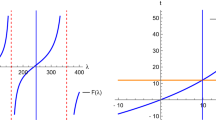

In Sect. 6 we discuss, within the KVB formalism, the structure of resolvents of self-adjoint extensions, in the form of Kreı̆n-like resolvent formulas. The results emerging from Sects. 5 and 6 corroborate the picture that the KVB extension parametrisation is in many fundamental aspects more informative than von Neumann’s parametrisation.

Last, in Sect. 7 we show how the general formulas of the KVB theory apply to simple examples in which the extension problem by means of von Neumann’s theory is already well known, so as to make the comparison between the two approaches evident.

For reference and comparison purposes, in the final Appendix we organised an exhaustive summary of von Neumann’s and of Kreı̆n’s self-adjoint extension theories, with special emphasis on the two “distinguished” extensions, the Friedrichs and the Kreı̆n-von Neumann one.

Notation

Essentially all the notation adopted here is standard; let us only emphasize the following. Concerning the various sums of spaces that will occur, we shall denote by \(\dotplus \) the direct sum of vector spaces, by ⊕ the direct orthogonal sum of closed Hilbert subspaces of the same underlying Hilbert space \(\mathscr {H}\) (the space where the initial symmetric and densely defined operator is taken), and by \(\boxplus \) the direct sum of subspaces of \(\mathscr {H}\) that are orthogonal to each other but are not a priori all closed. For any given symmetric operator S with domain \(\mathscr {D}(S)\), we shall denote by m(S) the “bottom” of S, i.e., its greatest lower bound

\[
m(S)\;:=\;\inf _{\substack {f\in \mathscr {D}(S)\\ f\neq 0}}\frac {\langle f,Sf\rangle }{\|f\|^2}\,. \tag{1}
\]

S being semi-bounded means therefore \(m(S)>-\infty \). Let us also adopt the customary convention to distinguish the operator domain and the form domain of any given densely defined and symmetric operator S by means of the notation \(\mathscr {D}(S)\) vs \(\mathscr {D}[S]\). To avoid ambiguities, \(V^\perp \) will always denote the orthogonal complement of a subspace V of \(\mathscr {H}\) with respect to \(\mathscr {H}\) itself: when interested in the orthogonal complement of V within a closed subspace K of \(\mathscr {H}\) we shall keep the extended notation \(V^\perp \cap K\). Analogously, the closure \(\overline {V}\) of the considered subspaces will be always meant with respect to the norm-topology of the underlying Hilbert space \(\mathscr {H}\). As no particular ambiguity arises in our formulas when referring to the identity operator, we shall use the symbol \(\mathbbm {1}\) for it irrespectively of the subspace of \(\mathscr {H}\) it acts on. As for the spectral measure of a self-adjoint operator A we shall use the standard notation \(\mathrm {d}E^{(A)}\) (see, e.g., [60, Chapters 4 and 5]). As customary, σ(T) and ρ(T) shall denote, respectively, the spectrum and the resolvent set of an operator T on Hilbert space.

2 Fundamental Results in the KVB Theory: Original Version

In this Section we reproduce, through an expanded and more detailed discussion, the pillars of the KVB theory for self-adjoint extensions of bounded below symmetric operators, in the form they were established in the original works of Kreı̆n [34], Višik [66], and Birman [8]. The main statements are Lemma 1, Theorem 1, Remark 1, Theorems 2, 3, and 4, and Proposition 2 below. The notation, when applicable, is kept on purpose the same as that of those works.

2.1 General Assumptions: Choice of a Reference Lower Bound

In the following we shall assume that S is a semi-bounded (below), not necessarily closed, densely defined symmetric operator acting on a Hilbert space \(\mathscr {H}\). Unlike the early developments of the theory (Kreı̆n’s theory), no restriction is imposed on the magnitude of the deficiency indices \(\dim \ker (S^*\pm \mathrm {i})\) of S: in particular, they can also be infinite.

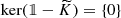

It is not restrictive to assume further

\[
m(S)\;>\;0\,, \tag{2}
\]

for in the general case one applies the discussion that follows to the strictly positive operator \(S+\lambda \mathbbm {1}\), \(\lambda >-m(S)\), and then re-expresses trivially the final results in terms of the original S.

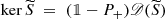

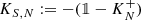

Associated to S are two canonical, distinguished self-adjoint extensions, the well-known Friedrichs extension \(S_F\) and Kreı̆n-von Neumann extension \(S_N\). Whereas a complete summary of the construction and of the properties of such extensions is presented in Appendix A.2, which we will be making reference to whenever in the following we shall need a particular attribute of \(S_F\) or \(S_N\) for the proofs, let us recall here their distinguishing features.

The extension \(S_F\) is semi-bounded and with the same bottom \(m(S_F)=m(S)\) of S. Its quadratic form is precisely the closure of the (closable) quadratic form associated with S. In fact, \(S_F\) is the restriction of \(S^*\) to the domain \(\mathscr {D}[S]\cap \mathscr {D}(S^*)\). Among all self-adjoint extensions of S, \(S_F\) is the only one whose operator domain is contained in \(\mathscr {D}[S]\), and moreover \(S_F\) is larger than any other semi-bounded extension \(\widetilde {S}\) of S, in the sense of the ordering \(S_F\geqslant \widetilde {S}\) (which, in particular, means \(\mathscr {D}[S_F] \subset \mathscr {D}[\widetilde {S}]\)).
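For reference, the ordering used above can be spelled out explicitly. The display below is the standard form-sense definition for semi-bounded self-adjoint operators, recalled here as an aid and not quoted from the text; A and B are generic placeholders, and \(A[f]\) denotes the quadratic form of A evaluated at f.

```latex
% A, B: semi-bounded self-adjoint operators (generic placeholders).
\[
A\geqslant B
\quad :\Longleftrightarrow \quad
\mathscr{D}[A]\subset \mathscr{D}[B]
\quad \text{and}\quad
A[f]\;\geqslant \;B[f]\quad \forall f\in \mathscr{D}[A].
\]
```

In particular, the larger operator has the smaller form domain, which is the inclusion recalled in the parenthesis above.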

Thus, the choice m(S) > 0 implies that the Friedrichs extension \(S_F\) of S is invertible with bounded inverse defined everywhere on \(\mathscr {H}\): this will allow \(S_F^{-1}\) to enter directly the discussion. In the general case in which \(S_F\) is not necessarily invertible, the role of \(S_F^{-1}\) can be naturally replaced in many respects (but not all) by the inverse \(\widetilde {S}^{-1}\) of any a priori known self-adjoint extension \(\widetilde {S}\) of S, which thus takes the role of given “datum” of the theory. As an example see the role played by the Friedrichs extension in the proofs of Lemma 5 and Theorems 3, 4.

With the choice m(S) > 0, the level 0 becomes naturally the reference value with respect to which to express the other distinguished (canonically given) extension of S, the Kreı̆n-von Neumann extension \(S_N\). It is qualified among all other positive self-adjoint extensions \(\widetilde {S}\) of S by being the unique smallest, in the sense \(\widetilde {S}\geqslant S_N\).
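As a concrete illustration (not taken from the text, and chosen only because it is the simplest standard case), consider \(S=-\mathrm {d}^2/\mathrm {d}x^2\) on \(\mathscr {D}(S)=C^\infty _c(0,1)\subset L^2(0,1)\), for which \(m(S)=\pi ^2>0\) and \(\ker S^*=\mathrm {span}\{1,x\}\). The sketch assumes the well-known facts \(\mathscr {D}(\overline {S})=H^2_0(0,1)\) and \(\mathscr {D}(S^*)=H^2(0,1)\), and that for a strictly positive S the Kreı̆n-von Neumann extension has domain \(\mathscr {D}(\overline {S})\dotplus \ker S^*\).

```latex
% Illustrative example: S = -d^2/dx^2 on C_c^infty(0,1), Hilbert space L^2(0,1).
\begin{align*}
\mathscr{D}(S_F) &= \{\, f\in H^2(0,1)\;|\; f(0)=f(1)=0 \,\}, & m(S_F) &= \pi^2,\\
\mathscr{D}(S_N) &= H^2_0(0,1)\dotplus \mathrm{span}\{1,x\} \\
                 &= \{\, f\in H^2(0,1)\;|\; f'(0)=f'(1)=f(1)-f(0) \,\}, & m(S_N) &= 0.
\end{align*}
```

In this example the Friedrichs extension is the Dirichlet realisation, with strictly positive bottom, whereas the Kreı̆n-von Neumann extension has the two-dimensional kernel \(\mathrm {span}\{1,x\}\) and hence bottom zero.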

We underline that unlike Kreı̆n’s original theory and many of the recent presentations of the KVB theory, the discussion here is not going to be restricted to the positive self-adjoint extensions of S. On the contrary, we shall present the full theory that includes also those extensions, if any, with finite negative bottom, or even unbounded below.

2.2 Adjoint of a Semi-Bounded Symmetric Operator: Regular and Singular Part

The first step of the theory is to describe the structure of the domain of the adjoint \(S^*\) of S. Recall that a characterisation of \(\mathscr {D}(S^*)\) is already given by von Neumann’s formula

\[
\mathscr {D}(S^*)\;=\;\mathscr {D}(\overline {S})\dotplus \ker (S^*-z)\dotplus \ker (S^*-\overline {z})\,,\qquad z\in \mathbb {C}\setminus \mathbb {R}\,, \tag{3}
\]

which is valid, more generally, for any densely defined S. The KVB theory works with a “real” version of (3), with z = 0 and with the space

\[
U\;:=\;\ker S^* \tag{4}
\]

instead of the two deficiency spaces \(\ker (S^*-z)\) and \(\ker (S^*-\overline {z})\). With a self-explanatory nomenclature, U shall henceforth be referred to as the deficiency space of S, with no restriction on \(\dim U\).

The result consists of a decomposition of \(\mathscr {D}(S^*)\) first proved by Kreı̆n (see also Remark 21) and a further refinement, initially due to Višik, to which Birman gave later an alternative proof.

Lemma 1 (Kreı̆n Decomposition Formula for \(\mathscr {D}(S^*)\))

For a densely defined symmetric operator S with positive bottom,

\[
\mathscr {D}(S^*)\;=\;\mathscr {D}(S_F)\dotplus \ker S^*\,. \tag{5}
\]

Proof

\(\mathscr {D}(S^*)\supset \mathscr {D}(S_F)+\ker S^*\) because each summand is a subspace of \(\mathscr {D}(S^*)\). As for the opposite inclusion, one can always decompose an arbitrary \(g\in \mathscr {D}(S^*)\) as \(g=S_F^{-1}S^*g+(g-S_F^{-1}S^*g)\), where \(S_F^{-1}S^*g\in \mathscr {D}(S_F)\), and where \(g-S_F^{-1}S^*g\in \ker S^*\), because \(S^*(g-S_F^{-1}S^*g)=S^*g-S^*g=0\). Last, the sum in the r.h.s. of (5) is direct because any \(g\in \mathscr {D}(S_F)\cap \ker S^*\) is necessarily in \(\ker S_F\) (\(S_Fg=S^*g=0\)), and from \(0<m(S)=m(S_F)\) one has \(\ker S_F=\{0\}\). □
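The decomposition used in the proof can be checked by hand on a simple case. The following sketch is an illustration added here, not part of the original argument: it assumes \(S=-\mathrm {d}^2/\mathrm {d}x^2\) on \(C^\infty _c(0,1)\subset L^2(0,1)\), so that \(S^*=-\mathrm {d}^2/\mathrm {d}x^2\) on \(H^2(0,1)\), \(S_F\) is the Dirichlet realisation, \(\ker S^*=\mathrm {span}\{1,x\}\), and it takes the sample element \(g(x)=x^4\).

```latex
% Illustrative check of g = S_F^{-1} S^* g + (g - S_F^{-1} S^* g) for g(x) = x^4.
\begin{align*}
S^*g &= -12x^2, \\
S_F^{-1}S^*g &= x^4 - x
   && \text{(solves } -h''=-12x^2,\ h(0)=h(1)=0\text{)},\\
g - S_F^{-1}S^*g &= x \;\in\; \ker S^* .
\end{align*}
```

Thus \(x^4=(x^4-x)+x\), with the first summand in \(\mathscr {D}(S_F)\) and the second in \(\ker S^*\).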

Theorem 1 (Višik-Birman Decomposition Formula for \(\mathscr {D}(S^*)\))

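For a densely defined symmetric operator S with positive bottom,

\[
\mathscr {D}(S^*)\;=\;\mathscr {D}(\overline {S})\dotplus S_F^{-1}\ker S^*\dotplus \ker S^*\,. \tag{6}
\]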

Proof

Let \(U=\ker S^*\). As in the proof of Lemma 1, \(\mathscr {D}(S^*)\supset \mathscr {D}(\overline {S})+S_F^{-1} U+U\) is obvious and conversely any \(g\in \mathscr {D}(S^*)\) can be written as \(g=S_F^{-1}S^*g+u\) for some \(u\in U=\ker S^*\). In turn, owing to \(\mathscr {H}=\overline {\mathrm {ran} S}\oplus \ker S^*\), one writes \(S^*g=h_0+\widetilde {u}\), and hence \(S_F^{-1}S^*g=S_F^{-1} h_0+S_F^{-1}\widetilde {u}\), for some \(\widetilde {u}\in U\) and \(h_0=\lim _{n\to \infty }S\varphi _n\) for some sequence \((\varphi _n)_n\) in \(\mathscr {D}(S)\). From \(\varphi _n= S_F^{-1}S\varphi _n\to S_F^{-1}h_0\) and \(S\varphi _n\to h_0\) as \(n\to \infty \), and from the closability of S, one deduces that \(f:=S_F^{-1}h_0\in \mathscr {D}(\overline {S})\). Therefore, \(g=f+S_F^{-1}\widetilde {u}+u\), which proves \(\mathscr {D}(S^*)\subset \mathscr {D}(\overline {S})+S_F^{-1} U+U\). Last, one concludes that the sum in (6) is direct as follows: if \(g=f+S_F^{-1}\widetilde {u}+u=0\), then \(0=S^*g=\overline {S}f+\widetilde {u}\), which forces \(\overline {S}f=\widetilde {u}=0\) because \(\overline {S}f\perp \widetilde {u}\); then also \(f=S_F^{-1}\overline {S}f=0\) and, from g = 0, also u = 0. □
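The three-term splitting of the proof can be followed step by step on an explicit case. The sketch below is an added illustration under stated assumptions: \(S=-\mathrm {d}^2/\mathrm {d}x^2\) on \(C^\infty _c(0,1)\subset L^2(0,1)\), with \(\mathscr {D}(\overline {S})=H^2_0(0,1)\), \(\mathscr {D}(S^*)=H^2(0,1)\), \(U=\ker S^*=\mathrm {span}\{1,x\}\), \(S_F\) the Dirichlet realisation, and the sample element \(g(x)=x^4\). The symbols \(h_0\), \(\widetilde {u}\), f, u are those of the proof.

```latex
% Illustrative check of g = f + S_F^{-1} \tilde u + u for g(x) = x^4.
\begin{align*}
S^*g &= -12x^2 = h_0 + \widetilde{u},
  & \widetilde{u} &= 2-12x \in U
  \quad\text{(}L^2\text{-projection of } -12x^2 \text{ onto } U\text{)},\\
h_0 &= -12x^2+12x-2 \;\perp\; U,
  & f &= S_F^{-1}h_0 = x^2(1-x)^2 \in H^2_0(0,1),\\
S_F^{-1}\widetilde{u} &= 2x^3-x^2-x,
  & u &= g - f - S_F^{-1}\widetilde{u} = x \in U .
\end{align*}
```

Summing up, \(x^4=x^2(1-x)^2+(2x^3-x^2-x)+x\), one term for each summand of the decomposition. Note that f and its first derivative vanish at both endpoints, as membership in \(H^2_0(0,1)\) requires.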

Remark 1

The argument of the proof above can be repeated to conclude

\[
\mathscr {D}(S_F)\;=\;\mathscr {D}(\overline {S})\dotplus S_F^{-1}\ker S^* \tag{7}
\]

and hence

Indeed, while it is obvious that the r.h.s. of (7) is contained in the l.h.s., conversely one takes a generic \(g\in \mathscr {D}(S_F)\) and decomposes \(S_Fg=h_0+\widetilde {u}\) with \(h_0\in \overline {\mathrm {ran} S}\) and \(\widetilde {u}\in U\) as above, whence, by the same argument, \(g=S_F^{-1}h_0+S_F^{-1}\widetilde {u}\) with \(S_F^{-1}h_0\in \mathscr {D}(\overline {S})\).

Remark 2

Precisely as in the remark above, one also concludes that

for any self-adjoint extension \(\widetilde {S}\) of S that is invertible everywhere on \(\mathscr {H}\).

Remark 3

Since S is closable and injective (m(S) > 0), as is well known,

\[
\overline {\mathrm {ran} S}\;=\;\mathrm {ran}\overline {S}\,.
\]

Thus, in the above proof one could claim immediately that \(h_0=\overline {S}f\) for some \(f\in \mathscr {D}(\overline {S})\), whence \(S_F^{-1}h_0=S_F^{-1}\overline {S}f=f\in \mathscr {D}(\overline {S})\).

Remark 4

In view of the applications in which S and \(S_F\) are differential operators on an \(L^2\)-space and hence \(\mathscr {D}(S_F)\) indicates an amount of regularity of its elements, it is convenient to regard \(\mathscr {D}(S_F)=\mathscr {D}(\overline {S})\dotplus S_F^{-1} U\) in (6) as the “regular component” and, in contrast, \(U=\ker S^*\) as the “singular component” of the domain of \(S^*\).

Remark 5

In all the previous formulas the assumption m(S) > 0 only played a role to guarantee the existence of the everywhere defined and bounded operator \(S_F^{-1}\). It is straightforward to adapt the arguments above to prove the following: if S is a symmetric and densely defined operator on \(\mathscr {H}\) and \(\widetilde {S}\) is a self-adjoint extension of S, then for any \(z\in \rho (\widetilde {S})\) (the resolvent set of \(\widetilde {S}\))
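The adaptation can be sketched as follows. This is a reconstruction under the assumption that the intended statement is the direct analogue of the decomposition of Theorem 1, with \(S_F^{-1}\) replaced by the resolvent \(R_z:=(\widetilde {S}-z\mathbbm {1})^{-1}\) (a shorthand introduced only for this sketch); the symbols \(h_0\), \(\widetilde {u}\), u, f play the same roles as in the proof of Theorem 1.

```latex
% Sketch: g in D(S^*), z in rho(\tilde S), R_z := (\tilde S - z)^{-1}.
\begin{align*}
g &= R_z(S^*-z)g + u,
  & u &:= g - R_z(S^*-z)g \in \ker(S^*-z),\\
(S^*-z)g &= h_0 + \widetilde{u},
  & h_0 &\in \overline{\mathrm{ran}(S-z)},\quad
    \widetilde{u}\in \ker(S^*-\overline{z}),\\
f &:= R_z h_0 \in \mathscr{D}(\overline{S})
  & &\text{(closability of } S\text{, as for Theorem 1)},
\end{align*}
\[
\mathscr{D}(S^*) \;=\; \mathscr{D}(\overline{S})
  \dotplus (\widetilde{S}-z)^{-1}\ker(S^*-\overline{z})
  \dotplus \ker(S^*-z).
\]
```

The second line uses \(\mathscr {H}=\overline {\mathrm {ran}(S-z)}\oplus \ker (S^*-\overline {z})\). As a consistency check, the choice z = 0 and \(\widetilde {S}=S_F\) gives back the decomposition of Theorem 1.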

2.3 Višik’s B Operator

To a generic self-adjoint extension of S one associates, canonically with respect to the decomposition (6), a self-adjoint operator B acting on a Hilbert subspace of \(\ker S^*\). This “Višik’s B operator” defined in (23) below (introduced first by Višik in [66]) turns out to be a convenient label to index the self-adjoint extensions of S: this is going to be done in formula (24) proved in the end of this Subsection.

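Let \(\widetilde {S}\) be a self-adjoint extension of S. Correspondingly, let \(U_0\) and \(U_1\) be the two closed subspaces of \(U=\ker S^*\) (and hence of \(\mathscr {H}\)), and let \(\mathscr {H}_+\) be the closed subspace of \(\mathscr {H}\), uniquely associated to \(\widetilde {S}\) by means of the definitions

\[
U_0\;:=\;\ker \widetilde {S}\,,\qquad U_1\;:=\;U_0^\perp \cap U\,,\qquad \mathscr {H}_+\;:=\;\overline {\mathrm {ran} S}\oplus U_1\,.
\]

Thus,

\[
\mathscr {H}\;=\;\overline {\mathrm {ran} S}\oplus \ker S^*\;=\;\overline {\mathrm {ran} S}\oplus U_1\oplus U_0\;=\;\mathscr {H}_+\oplus \ker \widetilde {S}\,. \tag{15}
\]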

Let \(P_+:\mathscr {H}\to \mathscr {H}\) be the orthogonal projection onto \(\mathscr {H}_+\).
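Two extreme cases may help fix ideas. The sketch below reads the construction as \(U_0=\ker \widetilde {S}\), \(U_1\) the orthogonal complement of \(U_0\) within U, and \(\mathscr {H}_+=\overline {\mathrm {ran} S}\oplus U_1\) (consistent with Lemma 2(i) and with the proof of Lemma 2), and it assumes the standard facts that, for m(S) > 0, \(\ker S_F=\{0\}\) and \(\ker S_N=\ker S^*\).

```latex
% Extreme cases of the subspaces attached to an extension \tilde S (illustrative).
\begin{align*}
\widetilde{S}=S_F:&\quad U_0=\{0\}, & U_1&=\ker S^*, & \mathscr{H}_+&=\mathscr{H},\\
\widetilde{S}=S_N:&\quad U_0=\ker S^*, & U_1&=\{0\}, & \mathscr{H}_+&=\overline{\mathrm{ran}\,S}.
\end{align*}
```

For a generic extension the subspace \(U_1\) lies between these two cases, and \(P_+\) accordingly ranges from the identity to the projection onto \(\overline {\mathrm {ran} S}\).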

The operator \(\widetilde {S}\) has the following properties.

Lemma 2

- (i) \(\overline {\mathrm {ran} S}\oplus U_1\;=\;\mathscr {H}_+\;=\;\overline {\mathrm {ran}\widetilde {S}}\), i.e., \(\mathrm {ran}\widetilde {S}\) is dense in \(\mathscr {H}_+\)

- (ii) \(\ker \widetilde {S}=\mathscr {D}(\widetilde {S})\cap \ker S^*\)

- (iii) \(\mathscr {D}(\widetilde {S})=(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+)\boxplus \ker \widetilde {S}=P_+\mathscr {D}(\widetilde {S})\boxplus \ker \widetilde {S}\) and also \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+=P_+\mathscr {D}(\widetilde {S})\)

- (iv) \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) is dense in \(\mathscr {H}_+\)

- (v) \(\widetilde {S}\) maps \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) into \(\mathscr {H}_+\)

- (vi) \(\mathrm {ran}\widetilde {S}=\mathrm {ran} \overline {S}\,\boxplus \,\widetilde {U}_1\) where \(\widetilde {U}_1\) is a dense subspace of \(U_1\) uniquely identified by \(\widetilde {S}\).

Proof

(i) follows by (15), because \(\overline {\mathrm {ran}\widetilde {S}}\) is the orthogonal complement to \(\ker \widetilde {S}\) in \(\mathscr {H}\) (owing to the self-adjointness of \(\widetilde {S}\)). In (ii) the “⊃” inclusion is obvious and conversely, if \(u_0\in \ker \widetilde {S}\subset \mathscr {D}(\widetilde {S})\), then \(S^*u_0=\widetilde {S}u_0=0\).

To establish (iii), decompose a generic \(g\in \mathscr {D}(\widetilde {S})\) as \(g=f_++u_0\) for some \(f_+\in \mathscr {H}_+\) and \(u_0\in U_0=\ker \widetilde {S}\) (using \(\mathscr {H}=\mathscr {H}_+\oplus \ker \widetilde {S}\)): since \(f_+=g-u_0\in \mathscr {D}(\widetilde {S})\), then \(f_+\in \mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) and therefore \(\mathscr {D}(\widetilde {S})\subset (\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+)\boxplus \ker \widetilde {S}\). The opposite inclusion is obvious, thus \(\mathscr {D}(\widetilde {S})=(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+)\boxplus \ker \widetilde {S}\). It remains to prove that \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+=P_+\mathscr {D}(\widetilde {S})\): the inclusion \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\subset P_+\mathscr {D}(\widetilde {S})\) is obvious; as for the converse, if \(h=P_+ g\in P_+\mathscr {D}(\widetilde {S})\) for some \(g\in \mathscr {D}(\widetilde {S})\), decompose \(g=f_++u_0\) in view of \(\mathscr {D}(\widetilde {S})=(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+)\boxplus \ker \widetilde {S}\), then \(h= P_+ g = f_+\in \mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\), which completes the proof.

To establish (iv), for fixed arbitrary \(h_+\in \mathscr {H}_+\) let \((f_n)_{n\in \mathbb {N}}\) be a sequence in \(\mathscr {D}(\widetilde {S})\) of approximants of \(h_+\) (indeed \(\mathscr {D}(\widetilde {S})\) is dense in \(\mathscr {H}\)): then, as \(n\to \infty \), \(f_n\to h_+\) implies \(P_+f_n\to h_+\), where \(P_+f_n\in \mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) by (iii). (v) is an immediate consequence of (i), because \(\widetilde {S}\) maps \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) into \(\mathrm {ran} \widetilde {S}\).

Last, let us prove (vi). Recall that \(\overline {\mathrm {ran} S}=\mathrm {ran}\overline {S}\), because S is closable and injective (m(S) > 0). Set \(\widetilde {U}_1:=\{u_g\in U_1\,|\,\widetilde {S}g=\overline {S}f_g+u_g\text{ for }g\in \mathscr {D}(\widetilde {S})\}\), where \(f_g\in \mathscr {D}(\overline {S})\) and \(u_g\in U_1\) are uniquely determined by the given \(g\in \mathscr {D}(\widetilde {S})\) through (i) and the decomposition \(\overline {\mathrm {ran}\widetilde {S}}=\mathscr {H}_+=\mathrm {ran} \overline {S}\oplus U_1\). The inclusions \(\mathrm {ran}\widetilde {S}\subset \mathrm {ran} \overline {S}\boxplus \widetilde {U}_1\) and \(\mathrm {ran}\widetilde {S}\supset \mathrm {ran} \overline {S}\) are obvious, furthermore \(\mathrm {ran}\widetilde {S}\supset \widetilde {U}_1\) because each \(\widetilde {u}_1\in \widetilde {U}_1\) is by definition the difference of two elements in \(\mathrm {ran}\widetilde {S}\). Thus, \(\mathrm {ran}\widetilde {S}=\mathrm {ran} \overline {S}\boxplus \widetilde {U}_1\). It remains to prove the density of \(\widetilde {U}_1\) in \(U_1\). Given an arbitrary \(u_1\in U_1\subset \mathscr {H}_+\) and a sequence \((\widetilde {S}g_n)_{n\in \mathbb {N}}\) in \(\mathrm {ran}\widetilde {S}\) of approximants of \(u_1\) (owing to the density of \(\mathrm {ran}\widetilde {S}\) in \(\mathscr {H}_+\)), decompose \(\widetilde {S}g_n=\overline {S}f_n+\widetilde {u}_n\), for some \(f_n\in \mathscr {D}(\overline {S})\) and \(\widetilde {u}_n\in \widetilde {U}_1\), in view of \(\mathrm {ran}\widetilde {S}=\mathrm {ran} \overline {S}\boxplus \widetilde {U}_1\): denoting by \(P_1:\mathscr {H}_+\to \mathscr {H}_+\) the orthogonal projection onto \(U_1\), one has \(u_1=P_1u_1=P_1\lim _n(\overline {S}f_n+\widetilde {u}_n)=\lim _n\widetilde {u}_n\), which shows that \((\widetilde {u}_n)_{n\in \mathbb {N}}\) is a sequence in \(\widetilde {U}_1\) of approximants of \(u_1\). □

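Since \(\widetilde {S}\) maps \(\mathscr {D}(\widetilde {S})\cap \mathscr {H}_+\) into \(\mathscr {H}_+\) and trivially \(U_0\) into itself, and since \(P_+\) maps \(\mathscr {D}(\widetilde {S})\) into itself (Lemma 2(iii)), \(\mathscr {H}_+\) and \(U_0\) are reducing subspaces for \(\widetilde {S}\) (see, e.g., [60, Prop. 1.15]). The non-trivial (i.e., non-zero) part of \(\widetilde {S}\) in this reduction is the operator

$$\displaystyle \begin{aligned} \widetilde{S}_+\;:=\;\widetilde{S}\upharpoonright \big(\mathscr{D}(\widetilde{S})\cap\mathscr{H}_+\big)\;=\;\widetilde{S}\upharpoonright P_+\mathscr{D}(\widetilde{S})\,, \end{aligned} $$

which is therefore a densely defined, injective, and self-adjoint operator on the Hilbert space \(\mathscr {H}_+\). Explicitly,

$$\displaystyle \begin{aligned} \mathscr{D}(\widetilde{S}_+)\;=\;P_+\mathscr{D}(\widetilde{S})\,,\qquad \widetilde{S}_+P_+g\;=\;\widetilde{S}P_+g\;=\;\widetilde{S}g\qquad \forall g\in\mathscr{D}(\widetilde{S})\,. \end{aligned} $$

The inverse of \(\widetilde {S}_+\) (on \(\mathscr {H}_+\)) is the self-adjoint operator \(\widetilde {S}_+^{-1}\) with

$$\displaystyle \begin{aligned} \mathscr{D}(\widetilde{S}_+^{-1})\;=\;\mathrm{ran}\widetilde{S}\,,\qquad \widetilde{S}_+^{-1}(\widetilde{S}P_+g)\;=\;P_+g\qquad \forall g\in\mathscr{D}(\widetilde{S})\,, \end{aligned} $$

and hence

$$\displaystyle \begin{aligned} \widetilde{S}_+^{-1}\,\mathrm{ran}\overline{S}\;=\;P_+\mathscr{D}(\overline{S}) \end{aligned} $$(20)

((20) follows from \(\widetilde {S}_+^{-1} (\widetilde {S} P_+f)=P_+f\) in (18), letting now f run on \(\mathscr {D}(\overline {S})\) only: the r.h.s. gives \(P_+\mathscr {D}(\overline {S})\), in the l.h.s. one uses that \(\widetilde {S}P_+f=\widetilde {S}f=\overline {S}f\ \forall f\in \mathscr {D}(\overline {S})\) and hence \(\{\widetilde {S}P_+f\,|\,f\in \mathscr {D}(\overline {S})\}=\mathrm {ran} \overline {S}\).)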

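Furthermore, by setting

$$\displaystyle \begin{aligned} \mathscr{D}(\mathscr{S}^{-1})\;&:=\;\mathrm{ran}\widetilde{S}\,\boxplus\,\ker\widetilde{S}\,,\\ \mathscr{S}^{-1}(x+u_0)\;&:=\;\widetilde{S}_+^{-1}x\qquad \forall x\in\mathrm{ran}\widetilde{S}\,,\ \forall u_0\in\ker\widetilde{S}\,, \end{aligned} $$(21)

one defines a self-adjoint operator \(\mathscr {S}^{-1}\) on the whole \(\mathscr {H}\), with reducing subspaces \(\mathscr {H}_+=\overline {\mathrm {ran}\widetilde {S}}\) and \(U_0=\ker \widetilde {S}\).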

Two further useful properties are the following.

Lemma 3

(i) \(\mathscr {D}(\overline {S})+U_0\;=\;P_+\mathscr {D}(\overline {S})\,\boxplus \,U_0\)

(ii) \(P_+\mathscr {D}(\widetilde {S})\;=\;P_+\mathscr {D}(\overline {S})+\mathscr {S}^{-1}\widetilde {U}_1\).

Proof

The inclusion \(\mathscr {D}(\overline {S})+U_0\subset P_+\mathscr {D}(\overline {S})\,\boxplus \,U_0\) in (i) follows from the fact that each summand in the l.h.s. belongs to the sum in the r.h.s., in particular, \(f=P_+f+(\mathbb {1}-P_+)f\) with \((\mathbb {1}-P_+)f\in U_0\) for every \(f\in \mathscr {D}(\overline {S})\). Conversely, given a generic \(h=P_+f\in P_+\mathscr {D}(\overline {S})\) for some \(f\in \mathscr {D}(\overline {S})\) and \(u_0\in U_0\), then \(h+u_0=f+u_0^{\prime }\) with \(u_0^{\prime }:=u_0-(\mathbb {1}-P_+)f\in U_0\), which proves the inclusion \(\mathscr {D}(\overline {S})+U_0\supset P_+\mathscr {D}(\overline {S})\,\boxplus \,U_0\). (ii) follows by applying \(\mathscr {S}^{-1}\) to the decomposition \(\mathrm {ran}\widetilde {S}=\mathrm {ran} \overline {S}\boxplus \widetilde {U}_1\) of Lemma 2(vi), for on the l.h.s. one gets \(\mathscr {S}^{-1}\mathrm {ran}\widetilde {S}=P_+\mathscr {D}(\widetilde {S})\), owing to (21), whereas on the r.h.s. one gets \(\mathscr {S}^{-1}\mathrm {ran} \overline {S}=P_+\mathscr {D}(\overline {S})\), owing to (20). □

Summarising so far, the given operator S and the given self-adjoint extension \(\widetilde {S}\) determine canonically (and, in fact, constructively) the closed subspace \(U_1\) of \(\ker S^*\), the dense subspace \(\widetilde {U}_1\) in \(U_1\), the closed subspace \(\mathscr {H}_+=\overline {\mathrm {ran}\widetilde {S}}=\mathrm {ran}\overline {S}\oplus U_1\) of \(\mathscr {H}\) (equivalently, the orthogonal projection \(P_+\) onto \(\mathscr {H}_+\)), and the self-adjoint operator \(\mathscr {S}^{-1}\) on \(\mathscr {H}\), with the properties discussed above. In terms of these data, one defines

$$\displaystyle \begin{aligned} \mathscr{B}\;:=\;\mathscr{S}^{-1}-P_+S_F^{-1}P_+\,,\qquad \mathscr{D}(\mathscr{B})\;:=\;\mathscr{D}(\mathscr{S}^{-1})\;=\;\mathrm{ran}\widetilde{S}\,\boxplus\,\ker\widetilde{S}\,, \end{aligned} $$(22)

a self-adjoint operator on \(\mathscr {H}\) with the following properties.

Lemma 4

(i) \(\mathscr {B}\) is self-adjoint on \(\mathscr {H}\) and it is bounded if and only if the inverse of \(\widetilde {S}\upharpoonright \big (\mathscr {D}(\widetilde {S})\cap \overline {\mathrm {ran}\widetilde {S}}\,\big )\) (i.e., \(\widetilde {S}_+^{-1}\)) is bounded as an operator on \(\mathscr {H}_+\).

(ii) With respect to the decomposition (15) \(\mathscr {H}=\mathrm {ran}\overline {S}\oplus U_1\oplus U_0\), one has \(\mathscr {D}(\mathscr {B})=\mathrm {ran} \overline {S}\,\boxplus \,\widetilde {U}_1\,\boxplus \,U_0\), \(\mathscr {B}\,\mathrm {ran} \overline {S}=\{0\}\), \(\mathscr {B}\,\widetilde {U}_1\subset U_1\), and \(\mathscr {B}\,U_0=\{0\}\).

Proof

(i) is obvious from the definition of \(\mathscr {B}\) and of \(\mathscr {S}^{-1}\): the former is bounded if and only if the latter is. As for (ii), \(\mathscr {D}(\mathscr {B})=\mathrm {ran}\widetilde {S}\,\boxplus \,\ker \widetilde {S}=\mathrm {ran} \overline {S}\,\boxplus \,\widetilde {U}_1\,\boxplus \,U_0\) follows from (22) and Lemma 2(vi). Moreover, \(\mathscr {B}\,U_0=\{0\}\) is obvious from (21) and (22). To see that \(\mathscr {B}\,\mathrm {ran} \overline {S}=\{0\}\) let \(f\in \mathscr {D}(\overline {S})\), then \(\mathscr {B} \overline {S}f=\mathscr {S}^{-1}\overline {S}f-P_+ S_F^{-1}\overline {S}f=\widetilde {S}_+^{-1}\overline {S}f-P_+f=P_+f-P_+f=0\), where we used (22) and \(\mathrm {ran} \overline {S}\subset \mathscr {H}_+\) in the first equality, (21) in the second equality, and (20) in the third equality. In view of the decomposition \(\mathscr {H}=\mathrm {ran}\overline {S}\oplus U_1\oplus U_0\), \(\mathscr {D}(\mathscr {B})=\mathrm {ran} \overline {S}\,\boxplus \,\widetilde {U}_1\,\boxplus \,U_0\), the self-adjointness of \(\mathscr {B}\) and the fact that \(\mathscr {B}\,\mathrm {ran} \overline {S}=\{0\}\) and \(\mathscr {B}\,U_0=\{0\}\) imply necessarily \(\mathscr {B}\,\widetilde {U}_1\subset U_1\). □

As a direct consequence of Lemma 4 above, the restriction of \(\mathscr {B}\) to \(\widetilde {U}_1\), i.e., the operator

$$\displaystyle \begin{aligned} B\;:=\;\mathscr{B}\upharpoonright\widetilde{U}_1\,, \end{aligned} $$(23)

is a self-adjoint operator on the Hilbert space \(U_1\) (with dense domain \(\widetilde {U}_1\)), which itself is canonically determined by \(\widetilde {S}\). The interest in this operator B is due to the following fundamental property.

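Proposition 1 (B-Decomposition Formula)

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0) and let \(\widetilde {S}\) be a self-adjoint extension of S. Then, in terms of the subspaces \(\widetilde {U}_1\) and \(U_0\) and of the operator B defined in (23),

$$\displaystyle \begin{aligned} \mathscr{D}(\widetilde{S})\;=\;\mathscr{D}(\overline{S})\,\dotplus\,(S_F^{-1}+B)\widetilde{U}_1\,\dotplus\,U_0\,. \end{aligned} $$(24)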

Proof

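One has

$$\displaystyle \begin{aligned} \mathscr{D}(\widetilde{S})\;&=\;P_+\mathscr{D}(\widetilde{S})\,\boxplus\,U_0\;=\;\big(P_+\mathscr{D}(\overline{S})+\mathscr{S}^{-1}\widetilde{U}_1\big)\,\boxplus\,U_0\\ &=\;P_+\mathscr{D}(\overline{S})+P_+(S_F^{-1}+B)\widetilde{U}_1+U_0\;=\;\mathscr{D}(\overline{S})+P_+(S_F^{-1}+B)\widetilde{U}_1+U_0 \end{aligned} $$

(using, in this order, Lemma 2(iii), Lemma 3(ii), the definitions (22)–(23), and Lemma 3(i)). This identity, together with

$$\displaystyle \begin{aligned} P_+(S_F^{-1}+B)\widetilde{U}_1+U_0\;=\;(S_F^{-1}+B)\widetilde{U}_1+U_0\,, \end{aligned} $$(*)

yields \(\mathscr {D}(\widetilde {S})=\mathscr {D}(\overline {S})+(S_F^{-1}+B)\widetilde {U}_1+U_0\), and this sum is direct because if \(\mathscr {D}(\widetilde S) \ni g = f + (S_F^{-1}+B)\tilde u_1 +u_0=f+S_F^{-1}\tilde u_1 + (B\tilde u_1 +u_0)\), then, according to (6) and (14), g = 0 implies f = 0, \(\tilde u_1=0\) and \(u_0=0\). Thus, in order to prove (24) it only remains to prove (*). For the inclusion \(P_+(S_F^{-1}+B)\widetilde {U}_1+U_0\subset (S_F^{-1}+B)\widetilde {U}_1+U_0\) pick \(\psi :=P_+(S_F^{-1}+B)\widetilde {u}_1+u_0\) for generic \(\widetilde {u}_1\in \widetilde {U}_1\) and \(u_0\in U_0\). From (23), from the fact that \(\widetilde {u}_1=P_+\widetilde {u}_1\), and from \(P_+\mathscr {S}^{-1}\widetilde {u}_1=\mathscr {S}^{-1}\widetilde {u}_1\) (which follows from (21)), one has

$$\displaystyle \begin{aligned} P_+B\widetilde{u}_1\;=\;P_+\big(\mathscr{S}^{-1}-P_+S_F^{-1}P_+\big)\widetilde{u}_1\;=\;\mathscr{S}^{-1}\widetilde{u}_1-P_+S_F^{-1}\widetilde{u}_1\;=\;B\widetilde{u}_1 \end{aligned} $$

as well as

$$\displaystyle \begin{aligned} P_+S_F^{-1}\widetilde{u}_1\;=\;S_F^{-1}\widetilde{u}_1-u_0^{\prime}\,, \end{aligned} $$

where \(u_0^{\prime }:=S_F^{-1}\widetilde {u}_1-P_+S_F^{-1}\widetilde {u}_1\in \,\mathscr {H}\ominus \mathscr {H}_+=U_0\); therefore,

$$\displaystyle \begin{aligned} \psi\;=\;P_+(S_F^{-1}+B)\widetilde{u}_1+u_0\;=\;(S_F^{-1}+B)\widetilde{u}_1+(u_0-u_0^{\prime})\,, \end{aligned} $$

which proves that \(\psi \in (S_F^{-1}+B)\widetilde {U}_1+U_0\). The opposite inclusion to establish (*), that is, \(P_+(S_F^{-1}+B)\widetilde {U}_1+U_0\supset (S_F^{-1}+B)\widetilde {U}_1+U_0\), is proved by repeating the same argument in reverse order. □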

2.4 Classification of All Self-Adjoint Extensions: Operator Formulation

After characterising the structure (6) of \(\mathscr {D}(S^*)\) and providing the decomposition (24) of \(\mathscr {D}(\widetilde {S})\) for a generic self-adjoint extension \(\widetilde {S}\) in terms of its B-operator, the next fundamental result in the KVB theory is the fact that the B-decomposition actually classifies all self-adjoint extensions of S.

Theorem 2 (Višik-Birman Representation Theorem)

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0). There is a one-to-one correspondence between the family of the self-adjoint extensions of S on \(\mathscr {H}\) and the family of the self-adjoint operators on Hilbert subspaces of \(\ker S^*\), that is, the collection of triples \((U_1,\widetilde {U}_1,B)\), where \(U_1\) is a closed subspace of \(\ker S^*\), \(\widetilde {U}_1\) is a dense subspace of \(U_1\), and B is a self-adjoint operator on the Hilbert space \(U_1\) with domain \(\mathscr {D}(B)=\widetilde {U}_1\). For each such triple, let \(U_0\) be the closed subspace of \(\ker S^*\) defined by \(\ker S^*=U_0\oplus U_1\). Then, in this correspondence \(S_B\leftrightarrow B\), each self-adjoint extension \(S_B\) of S is given by

$$\displaystyle \begin{aligned} \mathscr{D}(S_B)\;&=\;\big\{f+(S_F^{-1}+B)\widetilde{u}_1+u_0\,\big|\,f\in\mathscr{D}(\overline{S}),\ \widetilde{u}_1\in\widetilde{U}_1,\ u_0\in U_0\big\}\,,\\ S_B\big(f+(S_F^{-1}+B)\widetilde{u}_1+u_0\big)\;&=\;\overline{S}f+\widetilde{u}_1\,. \end{aligned} $$(25)

Proof

The fact that each self-adjoint extension of S is precisely of the form \(S_B\) is the content of Proposition 1, where B is Višik’s B operator associated to the considered self-adjoint extension. Conversely, one has to prove that each operator on \(\mathscr {H}\) of the form \(S_B\) above is a self-adjoint extension of S, and that the correspondence \(S_B\leftrightarrow B\) is one-to-one.

Having fixed \((U_1,\widetilde {U}_1,B)\) as in the statement, let us consider the corresponding \(S_B\). One sees from (25) that \(S_B\) is densely defined (\(\mathscr {D}(S_B)\supset \mathscr {D}(S)\)) and it is an operator extension of S (\(S_B f=\overline {S}f\) for all \(f\in \mathscr {D}(\overline {S})\)). In fact, \(S_B\) is a symmetric extension: for two generic elements of \(\mathscr {D}(S_B)\) one has

$$\displaystyle \begin{aligned} &\big\langle f^{\prime}+(S_F^{-1}+B)\widetilde{u}_1^{\prime}+u_0^{\prime}\,,\,S_B\big(f+(S_F^{-1}+B)\widetilde{u}_1+u_0\big)\big\rangle\\ &\qquad =\;\langle f^{\prime}+S_F^{-1}\widetilde{u}_1^{\prime},\overline{S}f\rangle+\langle f^{\prime}+(S_F^{-1}+B)\widetilde{u}_1^{\prime},\widetilde{u}_1\rangle\\ &\qquad =\;\langle \overline{S}f^{\prime}+\widetilde{u}_1^{\prime},f\rangle+\langle \overline{S}f^{\prime}+\widetilde{u}_1^{\prime},S_F^{-1}\widetilde{u}_1\rangle+\langle \widetilde{u}_1^{\prime},B\widetilde{u}_1\rangle\\ &\qquad =\;\big\langle S_B\big(f^{\prime}+(S_F^{-1}+B)\widetilde{u}_1^{\prime}+u_0^{\prime}\big)\,,\,f+(S_F^{-1}+B)\widetilde{u}_1+u_0\big\rangle \end{aligned} $$

(where in the first step we used that \(\langle B\widetilde {u}_1^{\prime },\overline {S}f\rangle =\langle S^* B \widetilde {u}_1^{\prime },f\rangle =0\), \(\langle u_0^{\prime },\overline {S}f\rangle =\langle S^*u_0^{\prime },f\rangle =0\), \(\langle u_0^{\prime },\widetilde {u}_1\rangle =0\), in the second step we used the symmetry of \(\overline {S}\), the self-adjointness of \(S_F^{-1}\) and B, and the properties of the adjoint \(S^*\), and in the last step we used that \(\langle \overline {S}f^{\prime },B\widetilde {u}_1\rangle =\langle f^{\prime },S^*B\widetilde {u}_1\rangle =0\), \(\langle \overline {S}f^{\prime },u_0\rangle =\langle f^{\prime },S^*u_0\rangle =0\), \(\langle \widetilde {u}_1^{\prime },u_0\rangle =0\)). Therefore, \(S\subset S_B\subset {S_B}^{*}\subset S^*\) and in order to show that \(S_B={S_B}^{*}\) it suffices to prove that \(\mathscr {D}(S_B)\supset \mathscr {D}({S_B}^{*})\).

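Let us then pick \(h\in \mathscr {D}({S_B}^{*})\), generic. Since \({S_B}^{*}\subset S^*\), one has \(h=\varphi +S_F^{-1}\overline {v}+v\) by (6) for some \(\varphi \in \mathscr {D}(\overline {S})\) and \(v,\overline {v}\in U=\ker S^*\), and \({S_B}^{*}h=\overline {S}\varphi +\overline {v}\). Actually \(\overline {v}\in U_1\), because \(U=U_0\oplus U_1\) and \(\langle \overline {v},u_0\rangle =\langle {S_B}^{*}h,u_0\rangle -\langle \overline {S}\varphi ,u_0\rangle =\langle h,S_Bu_0\rangle -\langle \varphi ,S^*u_0\rangle =\langle h-\varphi ,S^*u_0\rangle =0\ \forall u_0\in U_0\). Thus, representing \(v=v_0+v_1\), \(v_0\in U_0\), \(v_1\in U_1\), one writes

$$\displaystyle \begin{aligned} h\;=\;\varphi+S_F^{-1}\overline{v}+v_1+v_0\,. \end{aligned} $$

In order to recognise that this vector belongs to \(\mathscr {D}(S_B)\), let us exploit the identity \(\langle h,S_B k\rangle =\langle S_B^*h,k\rangle \), valid \(\forall k\in \mathscr {D}(S_B)\), for the k’s of the special form \(k=f+(S_F^{-1}+B)\widetilde {u}_1\), \(f\in \mathscr {D}(\overline {S})\), \(\widetilde {u}_1\in \mathscr {D}(B)\). In this case

$$\displaystyle \begin{aligned} \langle h,S_Bk\rangle\;=\;\langle \varphi+S_F^{-1}\overline{v}+v_1+v_0,\overline{S}f+\widetilde{u}_1\rangle\;=\;\langle \varphi+S_F^{-1}\overline{v},S_F(f+S_F^{-1}\widetilde{u}_1)\rangle+\langle v_1,\widetilde{u}_1\rangle \end{aligned} $$

(indeed, \(S_B B\widetilde {u}_1=S^*B\widetilde {u}_1=0\), \(\varphi +S_F^{-1}\overline {v}\in \mathscr {D}(S_F)\), \(f+S_F^{-1}\widetilde {u}_1\in \mathscr {D}(S_F)\), \(\langle v_1+v_0,\overline {S}f\rangle =\langle S^*(v_1+v_0),f\rangle =0\), and \(\langle v_0,\widetilde {u}_1\rangle =0\)) and

$$\displaystyle \begin{aligned} \langle {S_B}^{*}h,k\rangle\;=\;\langle \overline{S}\varphi+\overline{v},f+S_F^{-1}\widetilde{u}_1+B\widetilde{u}_1\rangle\;=\;\langle S_F(\varphi+S_F^{-1}\overline{v}),f+S_F^{-1}\widetilde{u}_1\rangle+\langle \overline{v},B\widetilde{u}_1\rangle \end{aligned} $$

(indeed, \(\langle \overline {S}\varphi ,B\widetilde {u}_1\rangle =\langle \varphi ,S^*B\widetilde {u}_1\rangle =0\)). Equating these two expressions and using the self-adjointness of \(S_F\) yields

$$\displaystyle \begin{aligned} \langle v_1,\widetilde{u}_1\rangle\;=\;\langle \overline{v},B\widetilde{u}_1\rangle\qquad \forall\,\widetilde{u}_1\in\mathscr{D}(B)\,, \end{aligned} $$

which implies, owing to the self-adjointness of B, \(\overline {v}\in \mathscr {D}(B)\) and \(B\overline {v}=v_1\). Thus, the above decomposition for h reads now

$$\displaystyle \begin{aligned} h\;=\;\varphi+(S_F^{-1}+B)\overline{v}+v_0 \end{aligned} $$

for some \(\overline {v}\in \mathscr {D}(B)=\widetilde {U}_1\) and \(v_0\in U_0\), thus proving that \(h\in \mathscr {D}(S_B)\).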

Last, one proves that the operator B in the decomposition (25) is unique and therefore the correspondence between self-adjoint extensions of S and operators of the form \(S_B\) is one-to-one. Indeed, if

$$\displaystyle \begin{aligned} \mathscr{D}(\overline{S})\,\dotplus\,(S_F^{-1}+B)\widetilde{U}_1\,\dotplus\,U_0\;=\;\mathscr{D}(S_B)\;=\;\mathscr{D}(\overline{S})\,\dotplus\,(S_F^{-1}+B^{\prime})\widetilde{U}_1^{\prime}\,\dotplus\,U_0^{\prime}\,, \end{aligned} $$

where \(\ker S^*=U_0\oplus U_1=U_0^{\prime }\oplus U_1^{\prime }\) and where B and \(B^{\prime }\) are self-adjoint operators, respectively on the Hilbert spaces \(U_1\) and \(U_1^{\prime }\), with domain, respectively, \(\widetilde {U}_1\) and \(\widetilde {U}_1^{\prime }\), then the action of \(S_B\) on an arbitrary element \(g\in \mathscr {D}(S_B)\) gives, in terms of the decomposition \(g=f+(S_F^{-1}+B)\widetilde {u}_1+u_0=f^{\prime }+(S_F^{-1}+B^{\prime })\widetilde {u}^{\prime }_1+u^{\prime }_0\), \(S_Bg=\overline {S}f+\widetilde {u}_1=\overline {S}f^{\prime }+\widetilde {u}_1^{\prime }\); each sum belongs to the orthogonal sum \(\mathscr {H}=\overline {\mathrm {ran} S}\oplus \ker S^*\), whence \(\widetilde {u}_1=\widetilde {u}_1^{\prime }\) and, by injectivity of \(\overline {S}\), \(f=f^{\prime }\) (whence also \(u_0=u^{\prime }_0\)); thus, \(\widetilde {U}_1=\widetilde {U}_1^{\prime }\) and, after taking the closure, \(U_1=U_1^{\prime }\) and \(U_0=U_0^{\prime }\); this also implies \(B\widetilde {u}_1=B^{\prime }\widetilde {u}_1\), whence \(B=B^{\prime }\). □
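As an illustration of Theorem 2, and only under the simplifying assumption (not made in the text) that S has deficiency index one, say \(\ker S^*=\mathrm {span}\{u\}\) with \(u\neq 0\), the classification can be written out by hand. The only closed subspaces of \(\ker S^*\) are then \(\ker S^*\) itself and \(\{0\}\), and a self-adjoint operator on a one-dimensional space is the multiplication by a real number, denoted here by β (a symbol introduced for this sketch, as are the labels \(S_\beta\) and \(S_\infty\)). Formula (25) would then read as follows.

$$\displaystyle \begin{aligned} U_1=\ker S^*,\ B=\beta\in\mathbb{R}:\qquad &\mathscr{D}(S_\beta)=\big\{f+c\,(S_F^{-1}u+\beta u)\,\big|\,f\in\mathscr{D}(\overline{S}),\ c\in\mathbb{C}\big\}\,,\\ &S_\beta\big(f+c\,(S_F^{-1}u+\beta u)\big)=\overline{S}f+c\,u\,;\\ U_1=\{0\},\ U_0=\ker S^*:\qquad &\mathscr{D}(S_\infty)=\big\{f+c\,u\,\big|\,f\in\mathscr{D}(\overline{S}),\ c\in\mathbb{C}\big\}\,,\\ &S_\infty(f+c\,u)=\overline{S}f\,. \end{aligned} $$

In this sketch the value β = 0 reproduces the domain \(\mathscr {D}(\overline {S})\dotplus S_F^{-1}\ker S^*\) of the Friedrichs extension, see (7), whereas \(S_\infty \) is the extension that annihilates \(\ker S^*\).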

2.5 Characterisation of Semi-Bounded Extensions: Operator Version

A further relevant feature of the KVB theory is that the general classification (25) of Theorem 2 allows one to identify special subclasses of self-adjoint extensions of S, most notably those that are bounded below, or in particular positive or strictly positive, in terms of suitable subclasses of the corresponding B-operators in the representation (25).

In this respect, the convenient characterisation is expressed in terms of the inverse of B, more precisely of the self-adjoint operator \(B_\star ^{-1}\) on the Hilbert space

$$\displaystyle \begin{aligned} \overline{\mathrm{ran}B}\,\oplus\,\ker S_B\,, \end{aligned} $$(26)

which is a Hilbert subspace of \(\ker S^*\) (recall the notation \(\ker S_B\equiv U_0\), \(\ker S^*\equiv U\), and observe that \(\overline {\mathrm {ran} B}\,\oplus \,\ker S_B\subset U_1\oplus U_0=U=\ker S^*\)), defined by

It is fair to refer to \(B_\star ^{-1}\) as “Birman’s operator”, for it is Birman who first determined and exploited its properties (Lemma 5 and Theorem 3 below).

For reference purposes, in (27) we intentionally kept Birman’s original notation [8]. A more precise definition of the operator \(B_\star ^{-1}\) is the following

$$\displaystyle \begin{aligned} \mathscr{D}(B_\star^{-1})\;&:=\;\mathrm{ran}B\,\boxplus\,\ker S_B\,,\\ B_\star^{-1}(Bz+u_0)\;&:=\;z\qquad \forall z\in\mathscr{D}(B)\cap\overline{\mathrm{ran}B}\,,\ \forall u_0\in\ker S_B\,, \end{aligned} $$(28)

where we used the decompositions \(U_1=\overline {\mathscr {D}(B)}=\overline {\mathrm {ran} B}\oplus \ker B\) and \(\widetilde {U}_1=\mathscr {D}(B)=(\mathscr {D}(B)\cap \overline {\mathrm {ran} B})\boxplus \ker B\). Thus, the action of \(B_\star ^{-1}\) on a generic element \(B\widetilde {u}_1\in \mathrm {ran} B\) is given, in view of the decomposition \(\widetilde {u}_1=z+z_0\) for some \(z\in \mathscr {D}(B)\cap \overline {\mathrm {ran} B}\) and \(z_0\in \ker B\), by \(B_\star ^{-1}B\widetilde {u}_1=z\).
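To see the definition at work in the simplest case, assume (for illustration only) that \(\ker S^*=\mathrm {span}\{u\}\) with \(u\neq 0\) and that B is the multiplication by a real number β on \(U_1=\ker S^*\), so that \(U_0=\{0\}\); the symbol β is introduced here and is not part of the original notation. Reading \(B_\star ^{-1}\) as the operator that inverts B on \(\mathrm {ran} B\) and vanishes on \(\ker S_B\equiv U_0\), as the surrounding text indicates, one would get:

$$\displaystyle \begin{aligned} \beta\neq 0:\qquad &\mathrm{ran}B=\mathrm{span}\{u\}\,,\quad \ker B=\{0\}\,,\quad \mathscr{D}(B_\star^{-1})=\mathrm{span}\{u\}\,,\quad B_\star^{-1}u=\beta^{-1}u\,;\\ \beta= 0:\qquad &\mathrm{ran}B=\{0\}\,,\quad \ker B=\ker S^*\,,\quad \mathscr{D}(B_\star^{-1})=\{0\}\,. \end{aligned} $$

If instead \(U_1=\{0\}\) and \(U_0=\ker S^*\), then \(\mathrm {ran} B=\{0\}\) and \(B_\star ^{-1}\) is the zero operator on \(\ker S^*\).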

Remark 6

One has

$$\displaystyle \begin{aligned} \mathscr{D}(S_B)\;\subset\;\mathscr{D}(S_F)\,\dotplus\,\mathscr{D}(B_\star^{-1})\,. \end{aligned} $$(29)

Indeed, according to (25), any \(g\in \mathscr {D}(S_B)\) decomposes for some \(f_0\in \mathscr {D}(\overline {S})\), \(\widetilde {u}_1\in \widetilde {U}_1=\mathscr {D}(B)\), \(u_0\in U_0=\ker S^*\cap \mathscr {D}(B)^\perp \) as \(g=f_0+(S_F^{-1}+B)\widetilde {u}_1+u_0=f+v\), where \(f:=f_0+S_F^{-1}\widetilde {u}_1\in \mathscr {D}(S_F)\) and (by (28)) \(v:=B\widetilde {u}_1+u_0\in \mathscr {D}(B_\star ^{-1})\). The sum in the r.h.s. of (29) is direct because if \(v\in \mathscr {D}(S_F)\cap \mathscr {D}(B_\star ^{-1})\), then \(v\in \ker S^*\) by definition (27) and hence \(\|v\|{ }^2=\langle S_F S_F^{-1}v,v\rangle =\langle S_F^{-1}v,S_Fv\rangle =\langle S_F^{-1}v,S^*v\rangle =0\). Observe, conversely, that the inclusion (29) is generically strict. Indeed, for a generic \(h\in \mathscr {D}(S_F)\dotplus \mathscr {D}(B_\star ^{-1})\) one writes, according to (7) and (28), \(h=(f_0+S_F^{-1}\widetilde {u}_1+B\widetilde {u}_1+u_0)+S_F^{-1}(u-\widetilde {u}_1)\) for some \(f_0\in \mathscr {D}(\overline {S})\), \(\widetilde {u}_1\in \mathscr {D}(B)\), \(u_0\in \ker S^*\cap \mathscr {D}(B)^\perp \), \(u\in \ker S^*\), and this is not enough to use (25) and deduce that \(h\in \mathscr {D}(S_B)\).

Lemma 5

(i) If, with respect to the notation of (25) and (28), \(S_B\) is a self-adjoint extension of a given densely defined symmetric operator S with positive bottom (m(S) > 0), then

$$\displaystyle \begin{aligned} \mathscr{D}(B_\star^{-1})\;\subset\;\mathscr{D}[S_B]\cap\ker S^*\,. \end{aligned} $$(30)

(ii) If in addition \(S_B\) is bounded below, then

$$\displaystyle \begin{aligned} S_B[v_1,v_2]\;=\;\langle v_1,B_\star^{-1} v_2\rangle\qquad \quad \forall v_1,v_2\in\mathscr{D}(B_\star^{-1})\,. \end{aligned} $$(31)

Proof

The fact that \(\mathscr {D}(B_\star ^{-1})\subset \ker S^*\) is stated in the definition (27). To prove that \(\mathscr {D}(B_\star ^{-1})\subset \mathscr {D}[S_B]\), decompose an arbitrary \(v\in \mathscr {D}(B_\star ^{-1})\), according to (28), as \(v=B\widetilde {u}_1+u_0\) for some \(\widetilde {u}_1\in \mathscr {D}(B)\!\setminus \!\ker B\subset \widetilde {U}_1\) and \(u_0\in U_0=\ker S_B\). From \(v=\big ((S_F^{-1}+B)\widetilde {u}_1+u_0\big )-S_F^{-1}\widetilde {u}_1\) one can then regard v as the difference between an element in \(\mathscr {D}(S_B)\), according to (25), and an element in \(\mathscr {D}(S_F)\). Since \(\mathscr {D}(S_B)\subset \mathscr {D}[S_B]\) and \(\mathscr {D}(S_F)\subset \mathscr {D}[S_F]\subset \mathscr {D}[S_B]\) (the Friedrichs extension has the smallest form domain among all semi-bounded extensions, Theorem 15(vii)), then \(v\in \mathscr {D}[S_B]\), which proves \(\mathscr {D}(B_\star ^{-1})\subset \mathscr {D}[S_B]\) and completes the proof of (30). To prove (31), consider again an arbitrary \(v=B\widetilde {u}_1+u_0\) in \(\mathscr {D}(B_\star ^{-1})\) as above: for \(f:=S_F^{-1}\widetilde {u}_1\in \mathscr {D}(S_F)\) and \(g:=f+v=S_F^{-1}\widetilde {u}_1+B\widetilde {u}_1+u_0\in \mathscr {D}(S_B)\), one has \(S_B g=\widetilde {u}_1=S_F f\), \(B_\star ^{-1}v=\widetilde {u}_1\), and

$$\displaystyle \begin{aligned} \langle g,S_Bg\rangle\;=\;\langle f+v,\widetilde{u}_1\rangle\;=\;\langle f,S_Ff\rangle+\langle v,B_\star^{-1}v\rangle\,. \end{aligned} $$

All this is still valid irrespective of the semi-boundedness of \(S_B\). On the other hand, if \(S_B\) is bounded below, then a central result in Kreı̆n’s theory of self-adjoint extensions (see (127) quoted in appendix A.3) states that \(S_B[f,v]=0\) for any \(f\in \mathscr {D}[S_F]\) and any \(v\in \mathscr {D}[S_B]\cap \ker S^*\) (which is what holds for f and v in the present case, owing to (i)), whence

$$\displaystyle \begin{aligned} \langle g,S_Bg\rangle\;=\;S_B[f+v]\;=\;S_F[f]+S_B[v]\;=\;\langle f,S_Ff\rangle+S_B[v]\,. \end{aligned} $$

Thus, by comparison, \(S_B[v,v]=\langle v,B_\star ^{-1}v\rangle \ \forall v\in \mathscr {D}(B_\star ^{-1})\). (31) then follows by polarisation. □

Theorem 3 (Characterisation of Semi-Bounded Extensions)

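Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0). If, with respect to the notation of (25) and (28), \(S_B\) is a self-adjoint extension of S, and if α < m(S), then

$$\displaystyle \begin{aligned} m(S_B)\;\geqslant\;\alpha\qquad \Leftrightarrow\qquad \langle v,B_\star^{-1}v\rangle\;\geqslant\;\alpha\|v\|^2+\alpha^2\langle v,(S_F-\alpha)^{-1}v\rangle\quad \forall v\in\mathscr{D}(B_\star^{-1})\,. \end{aligned} $$(32)

As an immediate consequence, \(m(B_\star ^{-1})\geqslant m(S_B)\) for any semi-bounded \(S_B\). In particular, positivity or strict positivity of the bottom of \(S_B\) is equivalent to the same property for \(B_\star ^{-1}\), that is,

$$\displaystyle \begin{aligned} m(S_B)\;\geqslant\;0\quad &\Leftrightarrow\quad m(B_\star^{-1})\;\geqslant\;0\,,\\ m(S_B)\;>\;0\quad &\Leftrightarrow\quad m(B_\star^{-1})\;>\;0\,. \end{aligned} $$(33)

Moreover, if \(m(B_\star ^{-1})>-m(S)\), then

$$\displaystyle \begin{aligned} m(B_\star^{-1})\;\geqslant\;m(S_B)\;\geqslant\;\frac{m(S)\,m(B_\star^{-1})}{m(S)+m(B_\star^{-1})}\,. \end{aligned} $$(34)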

Proof

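Let us start with the proof of (32). Observe that the fact that \(S_B\) is bounded below by α is equivalently expressed as \(S_B[g]\geqslant \alpha \|g\|{ }^2\ \forall g\in \mathscr {D}[S_B]\). For generic \(f\in \mathscr {D}(S_F)\) and \(v\in \mathscr {D}(B_\star ^{-1})\), one has that \(g:=f+v\in \mathscr {D}[S_B]\) and \(S_B[v]=\langle v,B_\star ^{-1}v\rangle \) (Lemma 5). On the other hand, since \(v\in \mathscr {D}[S_B]\cap \ker S^*\) (Lemma 5), then \(S_B[f,v]=0\) (owing to (127)). Therefore, \(S_B[g]=S_B[f+v]=S_F[f]+S_B[v]=\langle f,S_Ff\rangle +\langle v,B_\star ^{-1} v\rangle \). Thus, the assumption that \(S_B\) is bounded below by α reads, for all such g’s,

$$\displaystyle \begin{aligned} \big(\langle f,S_Ff\rangle-\alpha\|f\|^2\big)+\big(\langle v,B_\star^{-1}v\rangle-\alpha\|v\|^2\big)\;\geqslant\;2\alpha\,\mathrm{Re}\,\langle f,v\rangle\,, \end{aligned} $$(i)

whence also, replacing f↦λf, v↦μv,

$$\displaystyle \begin{aligned} |\lambda|^2\big(\langle f,S_Ff\rangle-\alpha\|f\|^2\big)+|\mu|^2\big(\langle v,B_\star^{-1}v\rangle-\alpha\|v\|^2\big)\;\geqslant\;2\alpha\,\mathrm{Re}\,\langle \lambda f,\mu v\rangle\qquad \forall \lambda,\mu\in\mathbb{C}\,. \end{aligned} $$(ii)

Since α < m(S), and hence \(\langle f,S_Ff\rangle -\alpha \|f\|{ }^2>0\) for \(f\neq 0\), inequality (ii) holds true if and only if

$$\displaystyle \begin{aligned} \alpha^2|\langle f,v\rangle|^2\;\leqslant\;\big(\langle f,S_Ff\rangle-\alpha\|f\|^2\big)\big(\langle v,B_\star^{-1}v\rangle-\alpha\|v\|^2\big) \end{aligned} $$(iii)

for arbitrary \(f\in \mathscr {D}(S_F)\) and \(v\in \mathscr {D}(B_\star ^{-1})\), which is therefore a necessary condition for \(S_B\) to be bounded below by α. Condition (iii) is in fact also sufficient. To see this, decompose an arbitrary \(g\in \mathscr {D}(S_B)\) as g = f + v for some \(f\in \mathscr {D}(S_F)\) and \(v\in \mathscr {D}(B_\star ^{-1})\) (which is always possible, as observed in Remark 6) and apply (iii) to this case: one then obtains (ii) owing to α < m(S), which in turn yields (i) when λ = μ = 1; from (i) one goes back to \(S_B[g]\geqslant \alpha \|g\|{ }^2\) following in reverse order the same steps discussed at the beginning. Thus, (iii) is equivalent to the fact that \(S_B\) is bounded below by α. By re-writing (iii) as

$$\displaystyle \begin{aligned} \langle v,B_\star^{-1}v\rangle-\alpha\|v\|^2\;\geqslant\;\alpha^2\,\frac{|\langle f,v\rangle|^2}{\langle f,(S_F-\alpha)f\rangle}\qquad (f\neq 0) \end{aligned} $$

and by the fact that the above inequality is valid for arbitrary \(f\in \mathscr {D}(S_F)\) and hence holds true also when the supremum over such f’s is taken, one finds

$$\displaystyle \begin{aligned} \langle v,B_\star^{-1}v\rangle-\alpha\|v\|^2\;\geqslant\;\alpha^2\sup_{\substack{f\in\mathscr{D}(S_F)\\ f\neq 0}}\frac{|\langle f,v\rangle|^2}{\langle f,(S_F-\alpha)f\rangle}\;=\;\alpha^2\langle v,(S_F-\alpha)^{-1}v\rangle \end{aligned} $$

by means of a standard operator-theoretic argument applied to the bottom-positive operator \(S_F-\alpha \) (Lemma 6). This completes the proof of (32).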
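The equivalence between (ii) and (iii) used in the proof above amounts to completing a square. As a sketch, with the shorthand a, b, c introduced only for this purpose, set \(a:=\langle f,S_Ff\rangle -\alpha \|f\|{ }^2>0\), \(b:=\langle v,B_\star ^{-1}v\rangle -\alpha \|v\|{ }^2\), \(c:=|\alpha |\,|\langle f,v\rangle |\), and assume that (ii) is the inequality \(S_B[\lambda f+\mu v]\geqslant \alpha \|\lambda f+\mu v\|{ }^2\) written out by means of \(S_B[f,v]=0\). Then

$$\displaystyle \begin{aligned} S_B[\lambda f+\mu v]-\alpha\|\lambda f+\mu v\|^2\;&=\;a|\lambda|^2+b|\mu|^2-2\alpha\,\mathrm{Re}\,\langle\lambda f,\mu v\rangle\\ &\geqslant\;a|\lambda|^2+b|\mu|^2-2c\,|\lambda||\mu|\\ &=\;a\Big(|\lambda|-\frac{c}{a}\,|\mu|\Big)^{2}+\Big(b-\frac{c^2}{a}\Big)|\mu|^2\,, \end{aligned} $$

where the inequality in the second line becomes an identity for a suitable choice of the phases of λ and μ. Hence the l.h.s. is non-negative for all \(\lambda ,\mu \in \mathbb {C}\) if and only if \(b-c^2/a\geqslant 0\), that is, \(c^2\leqslant ab\), which is the content of (iii); necessity is seen by choosing \(|\lambda |=c\,|\mu |/a\).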

From (32) one deduces immediately both the first equivalence of (33), by taking α = 0, and the implication “\(m(S_B)>0\Rightarrow m(B_\star ^{-1})>0\)” in the second equivalence of (33), because \(m(B_\star ^{-1})\geqslant m(S_B)\). Conversely, if \(m(B_\star ^{-1})>0\), then it follows from (27)–(28) that \(B_\star ^{-1}\) has a bounded inverse, that \(U_0=\ker S_B=\{0\}\), and that \(B:\mathscr {D}(B)\equiv \widetilde {U}_1\subset U_1\to U_1\) is bounded; this, in turn, implies by Lemma 4(ii) and by (23) that the operator \(\mathscr {B}\) defined in (22) is bounded, which by Lemma 4(i) means that \(S_B\) has a bounded inverse (densely defined) on the whole \(\mathscr {H}_+\) and therefore (\(\mathscr {H}=\mathscr {H}_+\oplus U_0\)) on the whole \(\mathscr {H}\). This fact excludes that \(m(S_B)=0\), and since \(m(B_\star ^{-1})>0\Rightarrow m(S_B)\geqslant 0\) by the first of (33), one finally concludes \(m(S_B)>0\), which completes the proof of (33).

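Last, it only remains to prove \(m(S_B)\geqslant m(S)m(B_\star ^{-1})(m(S)+m(B_\star ^{-1}))^{-1}\) in (34) (assuming \(m(B_\star ^{-1})>-m(S)\)). In this case, for

$$\displaystyle \begin{aligned} \alpha\;:=\;\frac{m(S)\,m(B_\star^{-1})}{m(S)+m(B_\star^{-1})} \end{aligned} $$

one has α < m(S) = m(S F) and \(m(B_\star ^{-1})=\alpha \,m(S)(m(S)-\alpha )^{-1}\), whence \((m(S)-\alpha )^{-1}\geqslant (S_F-\alpha )^{-1}\) and

$$\displaystyle \begin{aligned} \langle v,B_\star^{-1}v\rangle\;\geqslant\;m(B_\star^{-1})\|v\|^2\;=\;\alpha\|v\|^2+\alpha^2(m(S)-\alpha)^{-1}\|v\|^2\;\geqslant\;\alpha\|v\|^2+\alpha^2\langle v,(S_F-\alpha)^{-1}v\rangle\qquad \forall v\in\mathscr{D}(B_\star^{-1})\,. \end{aligned} $$

Owing to (32), the latter inequality is equivalent to \(m(S_B)\geqslant \alpha \), which completes the proof of (34). □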
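The algebraic identities behind the last step can be checked directly. Writing, only for brevity, \(m_S:=m(S)\) and \(m_\star :=m(B_\star ^{-1})\) (shorthand introduced here), and assuming that α in the proof is the value \(m_S m_\star (m_S+m_\star )^{-1}\) for which (34) is claimed, with \(m_S+m_\star >0\), one finds:

$$\displaystyle \begin{aligned} m_S-\alpha\;&=\;m_S-\frac{m_S\,m_\star}{m_S+m_\star}\;=\;\frac{m_S^2}{m_S+m_\star}\;>\;0\,,\\ \frac{\alpha\,m_S}{m_S-\alpha}\;&=\;\frac{m_S^2\,m_\star}{m_S+m_\star}\cdot\frac{m_S+m_\star}{m_S^2}\;=\;m_\star\,,\\ \alpha+\frac{\alpha^2}{m_S-\alpha}\;&=\;\frac{\alpha\,(m_S-\alpha)+\alpha^2}{m_S-\alpha}\;=\;\frac{\alpha\,m_S}{m_S-\alpha}\;=\;m_\star\,. \end{aligned} $$

The first line gives α < m(S), the second one the stated expression of \(m(B_\star ^{-1})\) in terms of α, and the third one shows that \(m(B_\star ^{-1})\|v\|{ }^2=\alpha \|v\|{ }^2+\alpha ^2(m(S)-\alpha )^{-1}\|v\|{ }^2\).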

Lemma 6

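If A is a self-adjoint operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(A) > 0), then

$$\displaystyle \begin{aligned} \sup_{\substack{f\in\mathscr{D}(A)\\ f\neq 0}}\frac{|\langle f,h\rangle|^2}{\langle f,Af\rangle}\;=\;\langle h,A^{-1}h\rangle\qquad \forall h\in\mathscr{H}\,. \end{aligned} $$

Proof

Setting \(g:=A^{1/2}f\) one has

$$\displaystyle \begin{aligned} \sup_{\substack{f\in\mathscr{D}(A)\\ f\neq 0}}\frac{|\langle f,h\rangle|^2}{\langle f,Af\rangle}\;=\;\sup_{g\neq 0}\frac{|\langle A^{-1/2}g,h\rangle|^2}{\|g\|^2}\;=\;\sup_{\|g\|=1}|\langle g,A^{-1/2}h\rangle|^2 \end{aligned} $$

and since \(|\langle g,A^{-1/2}h\rangle |\) attains its maximum for \(g=A^{-1/2}h/\|A^{-1/2}h\|\), the conclusion then follows. □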
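A finite-dimensional instance may help to visualise Lemma 6. Assume, purely for illustration, that \(\mathscr {H}=\mathbb {C}^2\) and that A is the diagonal matrix with entries \(a_1,a_2>0\) (a hypothetical example, not taken from the text), and write \(f=(f_1,f_2)\), \(h=(h_1,h_2)\). The Cauchy-Schwarz inequality then gives

$$\displaystyle \begin{aligned} |\langle f,h\rangle|\;\leqslant\;\sum_{j=1}^{2}\big(\sqrt{a_j}\,|f_j|\big)\Big(\frac{|h_j|}{\sqrt{a_j}}\Big)\;\leqslant\;\Big(\sum_{j=1}^{2}a_j|f_j|^2\Big)^{\!1/2}\Big(\sum_{j=1}^{2}\frac{|h_j|^2}{a_j}\Big)^{\!1/2}\;=\;\langle f,Af\rangle^{1/2}\,\langle h,A^{-1}h\rangle^{1/2}\,, \end{aligned} $$

so that \(|\langle f,h\rangle |^2/\langle f,Af\rangle \leqslant \langle h,A^{-1}h\rangle \). For \(h\neq 0\) the choice \(f=A^{-1}h\), i.e., \(f_j=h_j/a_j\), yields \(\langle f,h\rangle =\langle f,Af\rangle =\sum _j|h_j|^2/a_j\), hence the bound is attained, in agreement with the statement of the Lemma.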

2.6 Characterisation of Semi-Bounded Extensions: Form Version

The operator characterisation of the semi-bounded self-adjoint extensions of S provided by Theorem 3 has the virtue that it can be directly reformulated in terms of the quadratic form associated with an extension. The result is a very clean expression of \(\mathscr {D}[S_B]\) in terms of the intrinsic space \(\mathscr {D}[S_F]\) and the additional space \(\mathscr {D}[B_\star ^{-1}]\), where B (equivalently, \(B_\star ^{-1}\)) plays the role of the “parameter” of the extension also in the form sense. This is a plus as compared to von Neumann’s characterisation (Theorem 14), for the latter only classifies the self-adjoint extensions of S by indexing each operator extension \(S_U\) in terms of a unitary U acting between defect subspaces, whereas the quadratic form associated with each \(S_U\) has no explicit description in terms of U.

Theorem 4 (Characterisation of Semi-Bounded Extensions: Form Version)

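Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0) and, with respect to the notation of (25) and (28), let \(S_B\) be a semi-bounded (not necessarily positive) self-adjoint extension of S. Then

$$\displaystyle \begin{aligned} \mathscr{D}[B_\star^{-1}]\;=\;\mathscr{D}[S_B]\cap\ker S^* \end{aligned} $$(35)

and

$$\displaystyle \begin{aligned} \mathscr{D}[S_B]\;&=\;\mathscr{D}[S_F]\,\dotplus\,\mathscr{D}[B_\star^{-1}]\,,\\ S_B[f+v,f^{\prime}+v^{\prime}]\;&=\;S_F[f,f^{\prime}]+B_\star^{-1}[v,v^{\prime}]\qquad \forall f,f^{\prime}\in\mathscr{D}[S_F]\,,\ \forall v,v^{\prime}\in\mathscr{D}[B_\star^{-1}]\,. \end{aligned} $$(36)

As a consequence,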

and

Remark 7

Identity (35) is the form version of the inclusion (29) for operator domains, the latter holding for a generic (not necessarily semi-bounded) extension \(S_B\). Property (36) represents the actual improvement of the KVB theory, as compared to Kreı̆n’s original theory, as far as the quadratic forms of the extensions are concerned. Recall indeed that Kreı̆n’s original theory (see Sect. I and (126) in the Appendix) establishes, for a generic semi-bounded self-adjoint extension \(\widetilde {S}\) of a densely defined symmetric operator S with positive bottom, the property

$$\displaystyle \begin{aligned} \mathscr{D}[\widetilde{S}]\;&=\;\mathscr{D}[S_F]\,\dotplus\,\big(\mathscr{D}[\widetilde{S}]\cap\ker S^*\big)\,,\\ \widetilde{S}[f+u,f^{\prime}+u^{\prime}]\;&=\;S_F[f,f^{\prime}]+\widetilde{S}[u,u^{\prime}]\qquad \forall f,f^{\prime}\in\mathscr{D}[S_F]\,,\ \forall u,u^{\prime}\in\mathscr{D}[\widetilde{S}]\cap\ker S^*\,. \end{aligned} $$(39)

What the KVB theory does in addition to (39) is therefore to characterise the space \(\mathscr {D}[\widetilde {S}]\cap \ker S^*\) in terms of the parameter B (equivalently, \(B_\star ^{-1}\)) of each semi-bounded extension.

Proof (Proof of Theorem 4)

Let us fix \(\alpha \in \mathbb {R}\) such that \(\alpha <m(S_B)\) and \(|\alpha |>1\). To establish the inclusion \(\mathscr {D}[B_\star ^{-1}]\subset \mathscr {D}[S_B]\cap \ker S^*\) in (35) let us exploit the fact that \(\mathscr {D}[B_\star ^{-1}]\) is the completion of \(\mathscr {D}(B_\star ^{-1})\) in the norm associated with the scalar product

(indeed, owing to Theorem 3, \(m(B_\star ^{-1})\geqslant m(S_B)>\alpha \)), whereas \(\mathscr {D}[S_B]\) is complete in the norm associated with the scalar product

Owing to Lemma 5, \(\mathscr {D}(B_\star ^{-1})\subset \mathscr {D}[S_B]\) and

thus the \(\|\,\|{ }_{B_\star ^{-1}}\)-completion of \(\mathscr {D}(B_\star ^{-1})\) does not exceed \(\mathscr {D}[S_B]\). On the other hand,

and the r.h.s. above is obviously a norm with respect to which \(\ker S^*\) is closed: therefore the \(\|\,\|{ }_{B_\star ^{-1}}\)-completion of \(\mathscr {D}(B_\star ^{-1})\) does not exceed \(\ker S^*\) either. This proves \(\mathscr {D}[B_\star ^{-1}]\subset \mathscr {D}[S_B]\cap \ker S^*\). For the opposite inclusion, let us preliminarily observe that the assumption \(m(S_B)>\alpha \) implies

(where \(\|v\|{ }_{B_\star ^{-1}}=\|v\|{ }_{S_B}\ \forall v\in \mathscr {D}(B_\star ^{-1})\) was used), as seen already in the course of the proof of Theorem 3, when condition (iii) therein was established. Let us also remark that \(\mathscr {D}[S_F]\) is a \(\|\,\|{ }_{S_B}\)-closed subspace of \(\mathscr {D}[S_B]\) (which follows from \(\mathscr {D}[S_F]\subset \mathscr {D}[S_B]\), from \(S_B[f]=S_F[f]\ \forall f\in \mathscr {D}[S_F]\), and from \(m(S_F)\geqslant m(S_B)>\alpha \), see Theorem 15), and so is \(\mathscr {D}[B_\star ^{-1}]\) (as discussed previously in this proof). Let now \(u\in \mathscr {D}[S_B]\cap \ker S^*\), arbitrary, and let \((g_n)_{n\in \mathbb {N}}\) be a sequence in \(\mathscr {D}(S_B)\) of approximants of u in the \(\|\,\|{ }_{S_B}\)-norm. As remarked with (29), \(g_n=f_n+v_n\) for some \(f_n\in \mathscr {D}(S_F)\) and \(v_n\in \mathscr {D}(B_\star ^{-1})\), which are both vectors in \(\mathscr {D}[S_B]\). From this and from (*) above one has

Since \(1-\alpha ^{-2}>0\), one deduces that both \((f_n)_{n\in \mathbb {N}}\) and \((v_n)_{n\in \mathbb {N}}\) are Cauchy sequences, respectively, in \(\mathscr {D}[S_F]\) and \(\mathscr {D}[B_\star ^{-1}]\), with respect to the topology of the \(\|\,\|{ }_{S_B}\)-norm, with limits, say, \(f_n\to f\in \mathscr {D}[S_F]\) and \(v_n\to v\in \mathscr {D}[B_\star ^{-1}]\) as \(n\to \infty \). Taking \(n\to \infty \) in \(g_n=f_n+v_n\) thus yields \(u=f+v\). Having proved above that \(\mathscr {D}[B_\star ^{-1}]\subset \ker S^*\), one therefore concludes \(f=u-v\in \ker S^*\), which together with \(f\in \mathscr {D}[S_F]\) implies \(f=0\) (indeed \(m(S_F)=m(S)>0\) and hence \(\mathscr {D}[S_F]\cap \ker S^*=\{0\}\)). Then \(u=v\in \mathscr {D}[B_\star ^{-1}]\), which completes the proof of \(\mathscr {D}[B_\star ^{-1}]\supset \mathscr {D}[S_B]\cap \ker S^*\) and finally establishes (35). Coming now to the proof of (36), the identity \(\mathscr {D}[S_B]=\mathscr {D}[S_F]\dotplus \mathscr {D}[B_\star ^{-1}]\) is a direct consequence of (35) and of (39). Furthermore, owing to the fact that \(\mathscr {D}[B_\star ^{-1}]\) is closed in \(\mathscr {D}[S_B]\), identity (31) lifts to \(S_B[v_1,v_2]=B_\star ^{-1}[v_1,v_2]\ \forall v_1,v_2\in \mathscr {D}[B_\star ^{-1}]\), which allows one to deduce also the second part of (36) from (39). □
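For a concrete reading of the form decomposition just proved, consider the illustrative case (an assumption made here, not in the text) in which \(\ker S^*=\mathrm {span}\{u\}\) with \(u\neq 0\) and B is the multiplication by a number β > 0 on \(U_1=\ker S^*\); the symbol β and the label \(S_\beta \) are introduced for this sketch only. Then \(B_\star ^{-1}\) is the multiplication by \(\beta ^{-1}\) on \(\mathrm {span}\{u\}\), the corresponding extension is positive by (33), and the identities \(\mathscr {D}[S_B]=\mathscr {D}[S_F]\dotplus \mathscr {D}[B_\star ^{-1}]\), \(S_B[f,v]=0\) and \(S_B[v_1,v_2]=B_\star ^{-1}[v_1,v_2]\) used in the proof would give

$$\displaystyle \begin{aligned} \mathscr{D}[S_\beta]\;&=\;\mathscr{D}[S_F]\,\dotplus\,\mathrm{span}\{u\}\,,\\ S_\beta[f+c\,u]\;&=\;S_F[f]+\beta^{-1}|c|^2\|u\|^2\qquad \forall f\in\mathscr{D}[S_F]\,,\ \forall c\in\mathbb{C}\,. \end{aligned} $$

In this sketch the extension differs from the Friedrichs one, at the level of forms, only through the one-dimensional space \(\mathrm {span}\{u\}\), on which the form is the multiplication by \(\beta ^{-1}\).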

2.7 Parametrisation of Distinguished Extensions: \(S_F\) and \(S_N\)

One recognises in formulas (25) and (36) the special parameter B (equivalently, \(B_\star ^{-1}\)) that selects, among all positive self-adjoint extensions \(S_B\), the Friedrichs extension \(S_F\) or the Kreı̆n-von Neumann extension \(S_N\). (The characterisation and a survey of the main properties of \(S_F\) and \(S_N\) can be found, respectively, in Theorems 15–16 and in Theorems 17–18.) This is another plus with respect to von Neumann’s theory, where \(S_F\) or \(S_N\) are not identifiable a priori by a special choice of the unitary that labels each extension.

The result can be summarised as follows

the details of which are discussed in the following Proposition.

Proposition 2

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0) and let \(S_B\) be a positive self-adjoint extension of S, parametrised by B (equivalently, by \(B_\star ^{-1}\)) according to Theorems 2 and 4.

(i) \(S_B\) is the Friedrichs extension when \(\mathscr {D}[B_\star ^{-1}]=\{0\}\), equivalently, when \(\mathscr {D}(B)=\ker S^*\) and \(Bu=0\ \forall u\in \ker S^*\).

(ii) \(S_B\) is the Kreı̆n-von Neumann extension when \(\mathscr {D}(B_\star ^{-1})=\mathscr {D}[B_\star ^{-1}]=\ker S^*\) and \(B_\star ^{-1}u=0\ \forall u\in \ker S^*\); equivalently, when \(\mathscr {D}(B)=\{0\}\).

Proof

Concerning part (i), \(\mathscr {D}[B_\star ^{-1}]=\{0\}\) follows from (36) when \(S_B=S_F\). Hence also \(\mathscr {D}(B_\star ^{-1})=\{0\}\), which implies \(\mathrm {ran}\,B=\{0\}\) (owing to (27)), that is, B is the zero operator on its domain. Comparing (7) and (25) one therefore has \(U_0=\{0\}\), \(\widetilde {U}_1=U_1=\ker S^*\), and hence \(\mathscr {D}(B)=\ker S^*\). As for part (ii), \(\mathscr {D}[B_\star ^{-1}]=\ker S^*\) follows by comparing (36), when \(S_B=S_N\), with the property (119) of \(S_N\). This, together with \(S_N[u]=0\ \forall u\in \ker S^*\) (given by (118)) and with \(B_\star ^{-1}[u]=S_B[u]=S_N[u]\ \forall u\in \mathscr {D}[B_\star ^{-1}]\) (given by (36) when \(S_B=S_N\)), yields \(B_\star ^{-1}[u]=0\ \forall u\in \mathscr {D}[B_\star ^{-1}]\), that is, \(B_\star ^{-1}\) is the zero operator on its domain and hence \(\mathscr {D}(B_\star ^{-1})=\mathscr {D}[B_\star ^{-1}]=\ker S^*\). In turn, (119) now gives \(\mathscr {D}(B_\star ^{-1})=\ker S^*=\ker S_N\), and therefore (27) (when \(S_B=S_N\)) yields \(\mathrm {ran}\,B=\{0\}\). Comparing (119) and (25) one therefore has \(U_0=\ker S^*\), \(\widetilde {U}_1=U_1=\{0\}\), and hence \(\mathscr {D}(B)=\{0\}\). □

Remark 8

The customary convention, adopted in (40)–(41), of labelling the Friedrichs extension formally with “\(B_\star ^{-1}=\infty \)” and the Kreı̆n-von Neumann extension formally with “\(B=\infty \)” (where it is understood that the operator in question has trivial domain {0}) serves to make the labelling consistent with the ordering (37).

Remark 9

In the case in which \(S_F^{-1}\) is replaced by the inverse \(\widetilde S^{-1}\) of an invertible self-adjoint extension \(\widetilde S\) of S, there is no longer a canonical choice of the parameter B that selects the Friedrichs extension \(S_F\). However, it remains true that “\(B=\infty \)” parametrises the Kreı̆n-von Neumann extension \(S_N\), while \(B=\mathbb {O}\) parametrises \(\widetilde S\) instead of \(S_F\).

3 Equivalent Formulations of the KVB Theory

Theorems 2, 3, and 4, and Proposition 2 above are not in the form in which they have customarily appeared in the English-language mathematical literature that followed the original works [8, 34, 66], nor are their proofs. While we defer to the next Section a more detailed comparison with the more recent formulations, let us discuss in this Section a natural alternative parametrisation of the extensions, which is equivalent to the one provided by the original KVB theory.

In fact, this alternative arises naturally when the roles of the parameters B and \(B_\star ^{-1}\) are interchanged. Both B and \(B_\star ^{-1}\) are self-adjoint operators acting on Hilbert subspaces of \(\ker S^*\). Although these two parameters are not exactly the inverse of each other, the definition of \(B_\star ^{-1}\) closely resembles an operator inversion. Proposition 3 and Remarks 10 and 11 below highlight the general properties of such an “inversion” mechanism. This will provide the ground to establish Theorems 5, 6, and 7 as equivalent versions, respectively, of Theorems 2, 3, and 4.

Proposition 3

Let \(\mathscr {K}\) be a Hilbert space and let \(\mathscr {S}(\mathscr {K})\) be the collection of the self-adjoint operators acting on Hilbert subspaces of \(\mathscr {K}\). Given \(T\in \mathscr {S}(\mathscr {K})\), let V be the closed subspace of \(\mathscr {K}\) which T acts on, with domain \(\mathscr {D}(T)\equiv \widetilde {V}\) dense in V, and let \(W:=V^\perp \), i.e., \(\mathscr {K}=V\oplus W\). Let ϕ(T) be the densely defined operator acting on the Hilbert subspace \(\overline {\mathrm {ran} T}\oplus W\) of \(\mathscr {K}\) defined by

\[ \begin{aligned} \mathscr {D}(\phi (T))&:=\mathrm {ran}\,T\boxplus W,\\ \phi (T)(Tv+w)&:=v\qquad \forall v\in \widetilde {V}\cap \overline {\mathrm {ran}\,T},\ \forall w\in W. \end{aligned} \tag{42}\]

Then:

(i) \(\phi (T)\in \mathscr {S}(\mathscr {K})\),

(ii) the map \(\phi :\mathscr {S}(\mathscr {K})\to \mathscr {S}(\mathscr {K})\) is a bijection on \(\mathscr {S}(\mathscr {K})\),

(iii) \(\phi ^2\) is the identity map on \(\mathscr {S}(\mathscr {K})\), that is, \(\phi ^{-1}=\phi \).

Remark 10

In short, ϕ provides a transformation of T that is similar to the inversion. More precisely, although T is in general not invertible, ϕ(T) inverts T on \(\mathrm {ran}\,T\), while it is the zero operator on the orthogonal complement in \(\mathscr {K}\) of the Hilbert subspace on which T acts. In particular, if T is densely defined in \(\mathscr {K}\) itself and invertible, then \(\phi (T)=T^{-1}\), that is, ϕ is precisely the inversion transformation.

Remark 11

By comparing (28) and (42) in the special case \(\mathscr {K}=\ker S^*\), \(T=B\), \(V=U_1\), and \(W=U_0=\ker S_B\), one concludes that \(B_\star ^{-1}=\phi (B)\), that is, the Birman operator \(B_\star ^{-1}\) is precisely the ϕ-inversion of the Višik operator B.
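
For a concrete feel of the mechanism described in Remarks 10 and 11, the following small hand-computed sketch may help. It is an illustration only and not part of the original argument: the space \(\mathscr {K}=\mathbb {C}^3\) with orthonormal basis \(e_1,e_2,e_3\) and the specific operator T are assumptions made for the example, and the only property of ϕ used is that ϕ(T) inverts T on \(\mathrm {ran}\,T\) and vanishes on W.

\[ \begin{aligned} &V=\mathrm {span}\{e_1,e_2\},\quad Te_1=2e_1,\quad Te_2=0,\quad W=\mathrm {span}\{e_3\},\\ &\mathrm {ran}\,T=\mathrm {span}\{e_1\}\ \Longrightarrow \ \phi (T)\ \text{acts on}\ \mathrm {span}\{e_1\}\oplus \mathrm {span}\{e_3\},\quad \phi (T)e_1=\tfrac {1}{2}e_1,\quad \phi (T)e_3=0,\\ &\mathrm {ran}\,\phi (T)=\mathrm {span}\{e_1\},\quad W^{\prime }=\mathrm {span}\{e_1,e_3\}^\perp =\mathrm {span}\{e_2\}=\ker T,\\ &\phi (\phi (T))\ \text{acts on}\ \mathrm {span}\{e_1\}\oplus \mathrm {span}\{e_2\}=V,\quad \phi (\phi (T))e_1=2e_1,\quad \phi (\phi (T))e_2=0,\quad \text{i.e.}\ \phi (\phi (T))=T. \end{aligned} \]

The two extreme cases behave in the same way: if \(T=\mathbb {O}\) on \(V=\mathscr {K}\), then \(\mathrm {ran}\,T=\{0\}\) and \(W=\{0\}\), so ϕ(T) has trivial domain {0}; conversely, if T has trivial domain, then \(W=\mathscr {K}\) and \(\phi (T)=\mathbb {O}\) on \(\mathscr {K}\). With \(\mathscr {K}=\ker S^*\) and \(T=B\), this matches the two cases of Proposition 2.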

Proof of Proposition 3

The self-adjointness of ϕ(T) on the Hilbert space \(\overline {\mathrm {ran} T}\oplus W\) is a standard and straightforward consequence of its definition. For the rest of the proof, it suffices to show that ϕ(ϕ(T)) = T for any \(T\in \mathscr {S}(\mathscr {K})\). By definition the operator ϕ(T) acts on the Hilbert space \(V^{\prime }:=\overline {\mathrm {ran} T}\oplus W\) with domain \(\widetilde {V}^{\prime }:=\mathrm {ran} T\boxplus W\) dense in \(V^{\prime }\). Setting \(W^{\prime }:=V^{\prime \perp }\), in view of the decomposition \(\mathscr {K}=V^{\prime }\oplus W^{\prime }\) the operator ϕ(ϕ(T)) is therefore determined, by definition, as the operator that acts on the Hilbert subspace \(\overline {\mathrm {ran}\,\phi (T)}\oplus W^{\prime }\) of \(\mathscr {K}\) according to

\[ \begin{aligned} \mathscr {D}(\phi (\phi (T)))&=\mathrm {ran}\,\phi (T)\boxplus W^{\prime },\\ \phi (\phi (T))(\phi (T)v^{\prime }+w^{\prime })&=v^{\prime }\qquad \forall v^{\prime }\in \widetilde {V}^{\prime }\cap \overline {\mathrm {ran}\,\phi (T)},\ \forall w^{\prime }\in W^{\prime }. \end{aligned} \]

Since \(V=\overline {\mathrm {ran} T}\oplus \ker T\), and hence \(\mathscr {K}=\overline {\mathrm {ran} T}\oplus \ker T\oplus W\), one has \(W^{\prime }=\ker T\). Thus,

\[ \mathscr {D}(\phi (\phi (T)))=\mathrm {ran}\,\phi (T)\boxplus W^{\prime }=(\mathscr {D}(T)\cap \overline {\mathrm {ran}\,T})\boxplus \ker T=\mathscr {D}(T) \]

and \(\phi (\phi (T)) w^{\prime }=0=Tw^{\prime }\ \forall w^{\prime }\in W^{\prime }=\ker T\). It remains to show that ϕ(ϕ(T)) and T agree also on \(\mathscr {D}(T)\cap \overline {\mathrm {ran} T}\). In fact, if v is a vector in such a subspace, then v = ϕ(T)Tv and in view of ϕ(ϕ(T))ϕ(T)Tv = Tv one has ϕ(ϕ(T))v = Tv. This completes the proof that ϕ(ϕ(T))v = Tv for any \(v\in \mathscr {D}(T)\) and, since the two operators also have the same domain, the conclusion is ϕ(ϕ(T)) = T. □
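
The involution property can also be checked numerically in finite dimensions. The following is a minimal sketch under stated assumptions, not part of the proof: \(\mathscr {K}=\mathbb {R}^4\), an element of \(\mathscr {S}(\mathscr {K})\) is encoded as a pair consisting of a symmetric matrix T (extended by zero outside V) and the orthogonal projector onto V, and ϕ is modelled by the Moore-Penrose pseudo-inverse. The helper name `phi` and this encoding are hypothetical choices made for the illustration; in finite dimensions every subspace is closed, so all domain subtleties disappear.

```python
import numpy as np

def phi(T, P_V, tol=1e-10):
    """Finite-dimensional analogue of the map phi (illustrative encoding).

    T   : symmetric matrix with T = P_V T P_V, i.e. an operator on the subspace V
    P_V : orthogonal projector onto V
    Returns (phi_T, P_new): the matrix of phi(T), extended by zero, and the
    orthogonal projector onto the subspace ran T (+) W on which phi(T) acts.
    """
    n = T.shape[0]
    # Pseudo-inverse: inverts T on ran T and vanishes on the complement of ran T
    phi_T = np.linalg.pinv(T, rcond=tol, hermitian=True)
    P_ran = T @ phi_T            # orthogonal projector onto ran T (T symmetric)
    P_W = np.eye(n) - P_V        # orthogonal projector onto W = V^perp
    return phi_T, P_ran + P_W

rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))  # random orthonormal basis of K

# V is spanned by the first three columns of Q; T has a one-dimensional kernel in V
P_V = Q @ np.diag([1.0, 1.0, 1.0, 0.0]) @ Q.T
T = Q @ np.diag([2.0, -3.0, 0.0, 0.0]) @ Q.T
T = (T + T.T) / 2                                 # remove rounding asymmetry

phi_T, P_1 = phi(T, P_V)
phi_phi_T, P_2 = phi(phi_T, P_1)
print(np.allclose(phi_phi_T, T), np.allclose(P_2, P_V))

# Extreme case: the zero operator on all of K is sent to an operator
# whose underlying subspace (hence domain) is trivial
_, P_triv = phi(np.zeros((4, 4)), np.eye(4))
print(np.allclose(P_triv, 0.0))
```

Tracking the projector alongside the matrix is what distinguishes ϕ from a plain pseudo-inverse: the matrix alone cannot tell the kernel of T inside V apart from the complement W, whereas ϕ exchanges the roles of these two subspaces.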

We are now in a position to restate the main results of the KVB extension theory established in Sect. 2 in the following equivalent form.

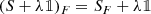

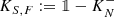

Theorem 5 (Classification of Self-Adjoint Extensions—Operator Version)

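Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0). There is a one-to-one correspondence between the family of all self-adjoint extensions of S on \(\mathscr {H}\) and the family of the self-adjoint operators on Hilbert subspaces of \(\ker S^*\). If T is any such operator, in the correspondence \(T\leftrightarrow S_T\) each self-adjoint extension \(S_T\) of S is given by

\[ \begin{aligned} S_T&=S^*\upharpoonright \mathscr {D}(S_T),\\ \mathscr {D}(S_T)&=\left \{ f+S_F^{-1}(Tv+w)+v\ \left |\ f\in \mathscr {D}(\overline {S}),\ v\in \mathscr {D}(T),\ w\in \ker S^*\cap \mathscr {D}(T)^\perp \right .\right \}. \end{aligned} \tag{43}\]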

Theorem 6 (Characterisation of Semi-Bounded Extensions)

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0). If, with respect to the notation of (43), \(S_T\) is a self-adjoint extension of S, and if α < m(S), then

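As an immediate consequence, \(m(T)\geqslant m(S_T)\) for any semi-bounded \(S_T\). In particular, positivity or strict positivity of the bottom of \(S_T\) is equivalent to the same property for T, that is,

\[ m(S_T)\geqslant 0\ \Leftrightarrow \ m(T)\geqslant 0,\qquad m(S_T)>0\ \Leftrightarrow \ m(T)>0. \tag{45}\]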

Moreover, if m(T) > −m(S), then

Theorem 7 (Characterisation of Semi-Bounded Extensions—form Version)

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0) and, with respect to the notation of (43), let \(S_T\) be a semi-bounded (not necessarily positive) self-adjoint extension of S. Then

and

As a consequence,

and

Proposition 4 (Parametrisation of \(S_F\) and \(S_N\))

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0) and let \(S_T\) be a positive self-adjoint extension of S, parametrised by T according to Theorems 5 and 7.

(i) \(S_T\) is the Friedrichs extension when \(\mathscr {D}[T]=\{0\}\) (“\(T=\infty \)”).

(ii) \(S_T\) is the Kreı̆n-von Neumann extension when \(\mathscr {D}(T)=\mathscr {D}[T]=\ker S^*\) and \(Tu=0\ \forall u\in \ker S^*\) (\(T=\mathbb {O}\)).

Proof of Theorem 5

Let \(S_B\) be a generic self-adjoint extension of S, parametrised by B according to Theorem 2, formula (25). Correspondingly, let \(B_\star ^{-1}\) be Birman’s operator introduced in (27)–(28). First of all, we claim that \(S_B\) is precisely of the form \(S_T\) in (43) above where \(T=B_\star ^{-1}\). To prove that, consider a generic element \(g=f+(S_F^{-1}+B)\widetilde {u}_1+u_0\) of \(\mathscr {D}(S_B)\), as given by the decomposition (25) for some \(f\in \mathscr {D}(\overline {S})\), \(\widetilde {u}_1\in \widetilde {U}_1=\mathscr {D}(B)\), and \(u_0\in U_0=\ker S^*\cap \mathscr {D}(B)^\perp =\ker S_B\). We write \(\widetilde {u}_1=z+w\) for some \(w\in \ker B\) and some \(z\in \mathscr {D}(B)\cap \overline {\mathrm {ran} B}\) that are uniquely identified by the decomposition \(U_1=\overline {\mathscr {D}(B)}=\overline {\mathrm {ran} B}\oplus \ker B\), \(\mathscr {D}(B)=(\mathscr {D}(B)\cap \overline {\mathrm {ran} B})\boxplus \ker B\). Owing to (28), \(v:=B\widetilde {u}_1+u_0=B z+u_0\in \mathscr {D}(B_\star ^{-1})\) and \(B_\star ^{-1} v=z\). Moreover, from

\[ \ker S^*=U_1\oplus U_0=\overline {\mathrm {ran}\,B}\oplus \ker B\oplus U_0=\overline {\mathscr {D}(B_\star ^{-1})}\oplus \ker B \]

one deduces that \(\ker B=\ker S^*\cap \mathscr {D}(B_\star ^{-1})^\perp \). Therefore,

\[ g=f+S_F^{-1}(z+w)+Bz+u_0=f+S_F^{-1}(B_\star ^{-1}v+w)+v, \]

that is, g is an element of \(\mathscr {D}(S_T)\) defined in (43) above with \(T=B_\star ^{-1}\). It is straightforward to go through the same arguments and decompositions in reverse order to conclude that any vector of the form \(S_F^{-1}(B_\star ^{-1} v+w)+v\), where \( v\in \mathscr {D}(B_\star ^{-1})\) and \(w\in \ker S^*\cap \mathscr {D}(B_\star ^{-1})^\perp \), can be re-written as \((S_F^{-1}+B)\widetilde {u}_1+u_0\) for \(\widetilde {u}_1\in \widetilde {U}_1=\mathscr {D}(B)\) and \(u_0\in U_0\) determined by

\[ \widetilde {u}_1:=B_\star ^{-1}v+w,\qquad u_0:=v-BB_\star ^{-1}v, \]

which proves that any \(g\in \mathscr {D}(S_T)\) is also an element of \(\mathscr {D}(S_B)\). Thus, (25) and (43) define the same domain: \(\mathscr {D}(S_B)=\mathscr {D}(S_T)\) for \(T=B_\star ^{-1}\). Since \(S_B\) and \(S_T\) are the restrictions to such a common domain of the same operator \(S^*\), then \(S_B=S_T\) for \(T=B_\star ^{-1}\), and the initial claim is proved. As a consequence of this and of the one-to-one correspondence \(S_B\leftrightarrow B\) of Theorem 2, the self-adjoint extensions of S are all of the form \(S_T\) of (43) for some self-adjoint operator T on a Hilbert subspace of \(\ker S^*\). What remains to be proved is that when T runs in the family \(\mathscr {S}(\ker S^*)\) of the self-adjoint operators on Hilbert subspaces of \(\ker S^*\), the corresponding \(S_T\)’s give the whole family of self-adjoint extensions of S. This follows at once by Proposition 3, since by (25) \(B_\star ^{-1}=\phi (B)\) and ϕ is a bijection in \(\mathscr {S}(\ker S^*)\). □

Proof of Theorems 6, 7, and Proposition 4

All statements follow at once from their original versions, respectively Theorems 3, 4, and Proposition 2, and from the fact that the extension parameter T is precisely the parameter \(B_\star ^{-1}\) in Theorems 3, 4, and Proposition 2. □
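
To see the parametrisation at work, here is a minimal worked sketch on a standard example that is not part of the discussion above: \(S=-\frac {d^2}{dx^2}+1\) on \(L^2(0,\infty )\) with domain \(C^\infty _0(0,\infty )\), for which \(m(S)=1\). The sketch assumes the well-known facts that \(\mathscr {D}(\overline {S})=H^2_0(0,\infty )\), that \(S_F\) is the Dirichlet realisation, and that \(\ker S^*=\mathrm {span}\{e^{-x}\}\). The parameter T is taken to be the multiplication by a real number t on \(\ker S^*\) (an assumption made for the illustration), and the elements of \(\mathscr {D}(S_T)\) are written as \(f+S_F^{-1}(Tv+w)+v\), as in the proof of Theorem 5.

\[ \begin{aligned} &v=c\,e^{-x},\qquad Tv=t\,c\,e^{-x},\qquad w=0\ \ (\text{since}\ \mathscr {D}(T)=\ker S^*),\\ &S_F^{-1}e^{-x}=\tfrac {x}{2}\,e^{-x}\qquad (\text{indeed}\ -u^{\prime \prime }+u=e^{-x},\ u(0)=0\ \text{for}\ u=\tfrac {x}{2}\,e^{-x}),\\ &g=f+t\,c\,\tfrac {x}{2}\,e^{-x}+c\,e^{-x},\qquad f\in H^2_0(0,\infty )\ \Rightarrow \ f(0)=f^{\prime }(0)=0,\\ &g(0)=c,\qquad g^{\prime }(0)=\big (\tfrac {t}{2}-1\big )c\qquad \Longrightarrow \qquad g^{\prime }(0)=\big (\tfrac {t}{2}-1\big )\,g(0). \end{aligned} \]

Under these assumptions each real t corresponds to a Robin boundary condition at the origin. The choice t = 0 gives \(g^{\prime }(0)=-g(0)\), with domain \(\mathscr {D}(\overline {S})\dotplus \ker S^*\), in agreement with Proposition 4(ii). The case \(\mathscr {D}(T)=\{0\}\) gives instead \(g=f+S_F^{-1}w\) with \(w\in \ker S^*\), hence the Dirichlet condition g(0) = 0, in agreement with Proposition 4(i).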

Remark 12 (Equivalence of Theorems 2 and 5)

Our arguments in the proof of Theorem 5 actually also show that the original Višik-Birman representation Theorem 2 can be deduced from Theorem 5 and that therefore the two Theorems are equivalent. Indeed, assuming the representation (43) for a generic self-adjoint extension \(S_T\) of S, our argument shows that the ϕ-inverse B := ϕ(T) of the parameter T allows one to rewrite \(\mathscr {D}(S_T)\) in the form \(\mathscr {D}(S_B)\) of (25) and therefore all self-adjoint extensions of S have the form \(S_B=S^*\upharpoonright \mathscr {D}(S_B)\) for some \(B\in \mathscr {S}(\ker S^*)\); moreover, since by Proposition 3 ϕ is a bijection on \(\mathscr {S}(\ker S^*)\), one concludes that when B runs in \(\mathscr {S}(\ker S^*)\) the corresponding \(S_B\) exhausts the whole family of self-adjoint extensions of S, thus obtaining Theorem 2.

Let us discuss in the last part of this Section yet another equivalent formulation of the general representation theorem for self-adjoint extensions.

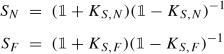

Theorem 8 (Classification of Self-Adjoint Extensions—Operator Version)

Let S be a densely defined symmetric operator on a Hilbert space \(\mathscr {H}\) with positive bottom (m(S) > 0). There is a one-to-one correspondence between the family of all self-adjoint extensions of S on \(\mathscr {H}\) and the family of the self-adjoint operators on Hilbert subspaces of \(\ker S^*\). If T is any such operator, \(P_T:\mathscr {H}\to \mathscr {H}\) is the orthogonal projection onto \(\overline {\mathscr {D}(T)}\), and \(P_*:\mathscr {D}(S^*)\to \mathscr {D}(S^*)\) is the (non-orthogonal, in general) projection onto \(\ker S^*\) with respect to Kreı̆n’s decomposition formula \(\mathscr {D}(S^*)=\mathscr {D}(S_F)\dotplus \ker S^*\) (Lemma 1), then in the correspondence \(T\leftrightarrow S_T\) each self-adjoint extension \(S_T\) of S is given by

Proposition 5

The parameter T in (51) is precisely the same as in (43), that is, the representation given in Theorem 8 is the same as the one given in Theorem 5. In other words, the two theorems are equivalent. In particular, given a self-adjoint extension \(\widetilde {S}\) of S, its extension parameter T (i.e., the operator T for which \(\widetilde {S}=S_T\)) is the operator acting on the Hilbert space \(\overline {P_*\mathscr {D}(\widetilde {S})}\) with domain \(\mathscr {D}(T)=P_*\mathscr {D}(\widetilde {S})\) and action \(TP_{*} g=P_T S_Tg\ \forall g\in \mathscr {D}(\widetilde {S})\).

Proof of Theorem 8 and Proposition 5