Abstract

Domain adaptation (DA) is the topical problem of adapting models from labelled source datasets so that they perform well on target datasets where only unlabelled or partially labelled data is available. Many methods have been proposed to address this problem through different ways to minimise the domain shift between source and target datasets. In this paper we take an orthogonal perspective and propose a framework to further enhance performance by meta-learning the initial conditions of existing DA algorithms. This is challenging compared to the more widely considered setting of few-shot meta-learning, due to the length of the computation graph involved. Therefore we propose an online shortest-path meta-learning framework that is both computationally tractable and practically effective for improving DA performance. We present variants for both multi-source unsupervised domain adaptation (MSDA), and semi-supervised domain adaptation (SSDA). Importantly, our approach is agnostic to the base adaptation algorithm, and can be applied to improve many techniques. Experimentally, we demonstrate improvements on classic (DANN) and recent (MCD and MME) techniques for MSDA and SSDA, and ultimately achieve state of the art results on several DA benchmarks including the largest scale DomainNet.

1 Introduction

Contemporary deep learning methods now provide excellent performance across a variety of computer vision tasks when ample annotated training data is available. However, this performance often degrades rapidly if models are applied to novel domains with very different data statistics from the training data, which is a problem known as domain shift. Meanwhile, data collection and annotation for every possible domain of application is expensive and sometimes impossible. This challenge has motivated extensive study in the area of domain adaptation (DA), which addresses training models that work well on a target domain using only unlabelled or partially labelled target data from that domain together with labelled data from a source domain.

Several variants of the domain adaptation problem have been studied. Single-source domain adaptation (SDA) considers adaptation from a single source domain [5, 6], while multi-source domain adaptation (MSDA) considers improving cross-domain generalization by aggregating information across multiple sources [35, 47]. Unsupervised domain adaptation (UDA) learns solely from unlabelled data in the target domain [16, 43], while semi-supervised domain adaptation (SSDA) learns from a mixture of labelled and unlabelled target domain data [9, 10]. The main means of progress has been developing improved methods for aligning representations between source(s) and the target in order to improve generalization. These methods span distribution alignment, for example by maximum mean discrepancy (MMD) [27, 48], domain adversarial training [16, 43], and cycle consistent image transformation [18, 26].

In this paper we adopt a novel research perspective that is complementary to all these existing methods. Rather than proposing a new domain adaptation strategy, we study a meta-learning framework for improving these existing adaptation algorithms. Meta-learning (a.k.a. learning to learn) has a long history [44, 45], and has resurged recently, especially due to its efficacy in improving few-shot deep learning [12, 40, 50]. Meta-learning pipelines aim to improve learning by training some aspect of a learning algorithm, such as a comparison metric [50], model optimizer [40] or model initialisation [12], so as to improve outcomes according to some meta-objective such as few-shot learning efficacy [12, 40, 50] or learning speed [1]. In this paper we provide a first attempt to define a meta-learning framework for improving domain adaptive learning.

We take the perspective of meta-optimizing the initial condition [12, 33] of domain adaptive learning (see Note 1). Several facets of an algorithm can be meta-learned, such as hyper-parameters [14] and learning rates [24], but these are somewhat tied to the base learning algorithm (the domain adaptation algorithm in our case). In contrast, our framework is algorithm agnostic in that it can be used to improve many existing gradient-based domain adaptation algorithms.

Furthermore, we develop variants for both unsupervised multi-source adaptation and semi-supervised single-source adaptation, thus providing broad potential benefit to existing frameworks and application settings. In particular we demonstrate application of our framework to the classic domain adversarial neural network (DANN) [16] algorithm, as well as the recent maximum classifier discrepancy (MCD) [43] and min-max entropy (MME) [41] algorithms.

Meta-learning can often be cleanly formalised as a bi-level optimization problem [14, 39], where an outer loop optimizes the meta-parameter of interest (such as the initial condition in our case) with respect to some meta-loss (such as performance on a validation set), and the inner loop runs the learning algorithm conditioned on the chosen meta-parameter. However, this is tricky to apply directly in a domain adaptation scenario because: (i) the computation graph of the inner loop is typically long (unlike the popular few-shot meta-learning setting [12]), making meta-optimization intractable, and (ii) especially in unsupervised domain adaptation, there is no labelled data in the target domain to define a supervised learning loss for the outer-loop meta-objective. We surmount these challenges by proposing a simple, fast and efficient meta-learning strategy based on online shortest path gradient descent [36], and defining meta-learning pipelines suited for meta-optimization of domain adaptation problems. Although motivated by initial condition learning, our online algorithm ultimately has the interpretation of intermittently performing meta-update(s) of the parameters in order to achieve the best outcome from the following DA updates (Fig. 1).

Overall, our contributions are: (i) we introduce a meta-learning framework suitable for both multi-source and semi-supervised domain adaptation settings; (ii) we demonstrate the algorithm-agnostic nature of our framework through its application to several base domain adaptation methods including MME [41], DANN [16] and MCD [43]; (iii) applying our meta-learner to these base adaptation methods, we achieve state of the art performance on several MSDA and SSDA benchmarks, including the largest-scale DA benchmark, DomainNet [38].

2 Related Work

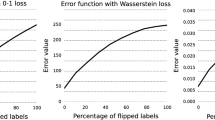

Single-Source Domain Adaptation. Single-source unsupervised domain adaptation (SDA) is a well established area [5, 6, 16, 19, 27, 29, 30, 31, 43, 48]. Theoretical results have bounded the cross-domain generalization error in terms of domain divergence [4], and numerous algorithms have been proposed to reduce the divergence between source and target features. Representative approaches include minimising MMD distribution shift [27, 48] or Wasserstein distance [2, 51], adversarial training [16, 20, 43] or alignment by cycle-consistent image translation [18, 26]. Given the difficulty of SDA, studies have considered exploiting semi-supervised or multi-source adaptation where possible.

Semi-supervised Domain Adaptation. This setting assumes that besides the labelled source and unlabelled target domain data, there are a few labelled samples available in the target domain. Exploiting the few target labels allows better domain alignment compared to purely unsupervised approaches. Representative approaches are based on regularization [9], subspace learning [54], label smoothing [10] and entropy minimisation in the target domain [17]. The state of the art method in this area, MME, extends the entropy minimisation idea to adversarial entropy minimisation in a deep network setting [41].

Multi-source Domain Adaptation. This setting assumes there are multiple labelled source domains for training. In deep learning, simply aggregating the data from all source domains often already improves performance, because a bigger dataset yields a stronger representation. Theoretical results based on \(\mathcal {H}\)-divergence [4] can still apply after aggregation, and existing SDA methods that attempt to reduce source-target divergence [5, 16, 43] can be used. Meanwhile, new generalization bounds for MSDA have been derived [38, 55], which motivate algorithms that align amongst source domains as well as between source and target. Nevertheless, practical deep network optimization is non-convex, and the degree of alignment achieved depends on the details of the optimization strategy. Therefore our paradigm of meta-learning the initial condition of optimization is compatible with, and complementary to, all this prior work.

Meta-learning for Neural Networks. Meta-Learning (learning to learn) [44, 46] has experienced a recent resurgence. This has largely been driven by its efficacy for few-shot deep learning via initial condition learning [12], optimizer learning [40] and embedding learning [50]. More generally it has been applied to improve optimization efficiency [1], reinforcement learning [37], gradient-based hyperparameter optimization [14] and neural architecture search [25]. We start from the perspective of MAML [12], in terms of focusing on learning initial conditions of neural network optimization. However besides the different application (domain adaptation vs few-shot learning), our resulting algorithm is very different as we end up performing meta-optimization online while solving the target task rather than in advance of solving it [12, 39]. A few recent studies also attempted online meta-learning [13, 53], but these are designed specifically to backprop through RL [53] or few-shot supervised [13] learning. Meta-learning with domain adaptation in the inner loop has not been studied before now.

In terms of learning with multiple domains a few studies [3, 11, 21] have considered meta-learning for multi-source domain generalization, which evaluates the ability of models to generalise directly without adaptation. In practice these methods use supervised learning in their inner optimization. No meta-learning method has been proposed for the domain adaptation problem addressed here.

3 Methodology

3.1 Background

Unsupervised Domain Adaptation. Domain adaptation techniques aim to reduce the domain shift between source domain(s) \(\mathcal {D}_S\) and target domain \(\mathcal {D}_T\), in order that a model trained on labels from \(\mathcal {D}_S\) performs well when deployed on \(\mathcal {D}_T\). Commonly such algorithms train a model \(\varTheta \) with a loss \(\mathcal {L}_{\text {uda}}\) that breaks down into a term for supervised learning on the source domain \(\mathcal {L}_{\text {sup}}\) and an adaptation loss \(\mathcal {L}_{\text {a}}\) that attempts to align the target and source data

We use notation \(\mathcal {D}_S\) and \(\overline{\mathcal {D}}_T\) to indicate that the source and target domains contain labelled and unlabelled data respectively. Many existing domain adaptation algorithms [16, 38, 43, 48] fit into this template, differing in their definition of the domain alignment loss \(\mathcal {L}_{\text {a}}\). In the case of multi-source adaptation [38], \(\mathcal {D}_{S}\) may contain several source domains \(\mathcal {D}_{S}=\{ {D}_1, \dots , {D}_N\}\) and the first supervised learning term \(\mathcal {L}_{\text {sup}}\) sums the performance on all of these.

Semi-supervised Domain Adaptation. In the SSDA setting [41], we assume a sparse set of labelled target data \(\mathcal {D}_T\) is provided along with a large set of unlabelled target data \(\overline{\mathcal {D}}_{T}\). The goal is to learn a model that fits both the source and few-shot target labels \(\mathcal {L}_\text {sup}\), while also aligning the unlabelled target data to the source with an adaptation loss \(\mathcal {L}_a\).

Several existing algorithms [16, 28, 41] fit this template and hence can potentially be optimized by our framework.
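The two templates described above can be sketched as follows. This is a reconstruction from the surrounding definitions rather than a quotation: the trade-off weight \(\lambda\), the name \(\mathcal {L}_{\text {ssda}}\) and the exact argument lists are assumptions made for illustration.

\[
\begin{aligned}
\mathcal {L}_{\text {uda}}(\varTheta ; \mathcal {D}_S, \overline{\mathcal {D}}_T)
  &= \mathcal {L}_{\text {sup}}(\varTheta , \mathcal {D}_S)
   + \lambda \, \mathcal {L}_{\text {a}}(\varTheta , \mathcal {D}_S, \overline{\mathcal {D}}_T), \\
\mathcal {L}_{\text {ssda}}(\varTheta ; \mathcal {D}_S, \mathcal {D}_T, \overline{\mathcal {D}}_T)
  &= \mathcal {L}_{\text {sup}}(\varTheta , \mathcal {D}_S)
   + \mathcal {L}_{\text {sup}}(\varTheta , \mathcal {D}_T)
   + \lambda \, \mathcal {L}_{\text {a}}(\varTheta , \mathcal {D}_S, \overline{\mathcal {D}}_T).
\end{aligned}
\]

Under this reading, base algorithms differ only in how they define \(\mathcal {L}_{\text {a}}\), which is what allows a single meta-learning wrapper to be applied to all of them.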

Meta Learning Model Initialisation. The problem of meta-learning the initial condition of an optimization can be seen as a bi-level optimization problem [14, 39]. In this view there is a standard task-specific (inner) algorithm of interest whose initial condition we wish to optimize, and an outer-level meta-algorithm that optimizes that initial condition. This setup can be described as

where \(\mathcal {L}_{\text {inner}}(\varTheta ,\mathcal {D}_{\text {tr}})\) denotes the standard loss of the base task-specific algorithm on its training set. \(\mathcal {L}_{\text {outer}}(\varTheta ^*,\mathcal {D}_\text {val})\) denotes the validation set loss after \(\mathcal {L}_{\text {inner}}\) has been optimized, (\(\varTheta ^*={\text {argmin}}\mathcal {L}_\text {inner}\)), when starting from the initial condition set by the outer optimization. The overall goal in Eq. 3 above is thus to set the initial condition of base algorithm \(\mathcal {L}_{\text {inner}}\) such that it achieves minimum loss on the validation set. When both losses are differentiable we can in principle solve Eq. 3 by taking gradient steps on \(\mathcal {L}_\text {outer}\) as shown in Algorithm 1. However, such exact meta-learning requires backpropagating through the path of the inner optimization, which is costly and inaccurate for a long computation graph.
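To make the cost of the exact approach concrete, the following is a minimal sketch on a one-dimensional toy problem. The quadratic losses, the function names and all constants are illustrative assumptions and are not taken from Algorithm 1. The point is that the derivative of the final parameter with respect to the initial condition has to be carried through every inner step, which is what becomes expensive when the inner loop is long.

```python
def exact_meta_gradient(theta0, c_train, c_val, curvature=1.0,
                        inner_lr=0.1, num_inner_steps=5):
    """Return (d outer_loss / d theta0, theta_final) for a toy 1-D problem.

    Inner loss (assumed): 0.5 * curvature * (theta - c_train) ** 2
    Outer loss (assumed): 0.5 * (theta_final - c_val) ** 2
    """
    theta = theta0
    dtheta_dtheta0 = 1.0  # running derivative d(theta_j) / d(theta0)
    for _ in range(num_inner_steps):
        inner_grad = curvature * (theta - c_train)
        theta = theta - inner_lr * inner_grad
        # Each step multiplies in (1 - lr * second derivative of the inner loss).
        dtheta_dtheta0 *= 1.0 - inner_lr * curvature
    outer_grad_at_final = theta - c_val
    return outer_grad_at_final * dtheta_dtheta0, theta


def outer_loss_after_adaptation(theta0, c_train, c_val, **kwargs):
    """Outer (validation) loss reached when the inner loop starts from theta0."""
    _, theta_final = exact_meta_gradient(theta0, c_train, c_val, **kwargs)
    return 0.5 * (theta_final - c_val) ** 2


meta_grad, theta_final = exact_meta_gradient(theta0=0.0, c_train=1.0, c_val=2.0)
```

In a deep network the scalar factor accumulated in the loop becomes a product of Hessian-dependent matrices, one per inner step, so both memory and compute grow with the length of the inner loop.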

3.2 Meta-learning for Domain Adaptation

Overview. For meta domain adaptation, we would like to instantiate the initial condition learning idea summarised earlier in Eq. 3 in order to initialize popular domain adaptation algorithms such as [16, 41, 43] that can be represented as problems in the form of Eqs. 1 and 2, so as to maximise the resulting performance in the target domain upon deployment. To this end we will introduce in the following section appropriate definitions of the inner and outer tasks, as well as a tractable optimization strategy.

Multi-source Domain Adaptation. Suppose we have an adequate algorithm to optimize for initial conditions as required in Eq. 3. How could we apply this idea to the multi-source unsupervised domain adaptation setting, given that there is no target domain training data to take the role of \(\mathcal {D}_{\text {val}}\) in providing the metric for outer loop optimization of the initial condition? Our idea is that in the multi-source domain adaptation setting, we can split available source domains into disjoint meta-training and meta-testing domains \(\mathcal {D}_S=\mathcal {D}_S^{\text {mtr}}\cup \mathcal {D}_S^{\text {mte}}\), where we actually have labels for both. Now we can let \(\mathcal {L}_\text {inner}\) be an unsupervised domain adaptation method [16, 43] \(\mathcal {L}_\text {inner}:=\mathcal {L}_\text {uda}\), and ask it to adapt from meta-train to the unlabelled meta-test domain. In the outer loop, we can then use the labels of the meta-test domain as a validation set to evaluate the adaptation performance via a supervised loss \(\mathcal {L}_\text {outer}:=\mathcal {L}_\text {sup}\), such as cross-entropy. Thus we aim to find an initial condition for our base domain adaptation method \(\mathcal {L}_\text {uda}\) that enables it to adapt effectively between source domains

where we use \(\mathcal {L}(\cdot ;\varTheta _0)\) to denote optimizing a loss from starting point \(\varTheta _0\). This initial condition could be optimized by taking gradient descent steps on the outer supervised loss using \({\text {UpdateIC}}\) from Algorithm 1. The resulting \(\varTheta _0\) is suited to adapting between all source domains hence should also be good for adapting to the target domain. Thus we would finally instantiate the same UDA algorithm using the learned initial condition, but this time between the full set of source domains, and the true unlabelled target domain \(\overline{\mathcal {D}}_T\).
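The meta-train/meta-test split over source domains can be illustrated with a short sketch. The text only requires the two sets to be disjoint; holding out exactly one source domain per split, as well as the function name `meta_splits` and the domain labels, are assumptions made for this example.

```python
from typing import Iterator, List, Tuple


def meta_splits(source_domains: List[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (meta-train domains, meta-test domain), holding out one source at a time."""
    if len(source_domains) < 2:
        raise ValueError("need at least two source domains to form a split")
    for held_out in source_domains:
        meta_train = [d for d in source_domains if d != held_out]
        yield meta_train, held_out


# Example: the three PACS source domains available when Sketch is the target.
sources = ["art_painting", "cartoon", "photo"]
splits = list(meta_splits(sources))
```

For each split, the base UDA method would adapt from the meta-train domains to the held-out domain with its labels hidden, and those labels would then be used only to score the adaptation in the outer loop.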

An Online Solution. While conceptually simple, the problem with the direct approach above is that it requires completing domain-adaptive training multiple times in the inner optimization. Instead we propose to perform online meta-learning [53, 56] by alternating between steps of meta-optimization of Eq. 4 and steps on the final unsupervised domain adaptation problem in Eq. 6. That is, we iterate

where \((\mathcal {D})\) denotes minibatch sampling from the corresponding dataset, and we call \({\text {UpdateIC}}(\cdot )\) with a small number of inner-loop optimizations such as \(J=1\).

Our method, summarised in Fig. 1 and Algorithm 3, performs meta-learning online, by simultaneously solving the meta-objective and the target task. It translates to tuning the initial condition between taking optimization steps on the target DA task. This avoids the intractability and instability of backpropagating through the long computational graph in the exact approach that meta-optimizes \(\varTheta _0\) to completion before doing DA. Online meta-learning is also potentially advantageous in practice because it improves optimization throughout training rather than only at the start (cf. the vanilla exact method, where the impact of the initial condition on the final outcome is very indirect).
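The alternation described above can be sketched as a simple loop. This is an illustrative skeleton rather than a transcription of Algorithm 3: the function name `online_meta_da` and the scalar toy updates are assumptions, and in practice each callable would operate on minibatches and network parameters.

```python
from typing import Callable, List


def online_meta_da(theta: float,
                   meta_update: Callable[[float], float],
                   da_update: Callable[[float], float],
                   num_iterations: int) -> List[float]:
    """Alternate one meta-update with one DA update; return the parameter trace."""
    trace = [theta]
    for _ in range(num_iterations):
        theta = meta_update(theta)  # tune the starting point for the next DA step
        theta = da_update(theta)    # ordinary step on the final DA objective
        trace.append(theta)
    return trace


# Toy stand-ins (assumptions): each update is a gradient step on a 1-D quadratic.
def toy_meta_update(theta: float) -> float:
    return theta - 0.1 * (theta - 2.0)


def toy_da_update(theta: float) -> float:
    return theta - 0.1 * (theta - 1.0)


trace = online_meta_da(0.0, toy_meta_update, toy_da_update, num_iterations=3)
```

Because every iteration performs exactly one update of each kind, the total number of meta-updates and DA updates is fixed by `num_iterations`, which makes it straightforward to compare against a schedule that spends the same budget differently.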

Semi-supervised Domain Adaptation. We next consider how to adapt the ideas introduced previously to the semi-supervised domain adaptation setting. In the MSDA setting above, we divided source domains into meta-train and meta-test, used unlabelled data from meta-test to drive adaptation, and then used meta-test labels to validate the adaptation performance. In SSDA we do not have multiple source domains with which to use such a meta-train/meta-test split strategy, but we do have a small amount of labelled data in the target domain that we can use to validate adaptation performance and drive initial condition optimization. By analogy to Eq. 4, we can aim to find the initial condition for the unsupervised component \(\mathcal {L}_\text {uda}\) of an SSDA method, but now using the few labelled examples \(\mathcal {D}_T\) to validate the adaptation in the outer loop.

The learned initial condition can then be used to instantiate the final semi-supervised domain adaptive training.

An Online Solution. The exact meta SSDA approach above suffers from the same limitations as exact MetaMSDA. So we again apply online meta-learning by iterating between meta-optimization of Eq. 7 and the final semi-supervised domain adaptation problem of Eq. 9.

The final procedure is summarized in Algorithm 4.

3.3 Shortest Path Optimization

Meta-learning Model Initialisation. As described so far, our meta-learning approach to domain adaptation relies on the ability to meta-optimize initial conditions using gradient descent steps as described in Algorithm 1. Such steps evaluate a meta-gradient that depends on the parameter \(\varTheta ^*\) output by the base domain adaptation algorithm

Evaluating the meta-gradient directly is impractical because: (i) The inner loop that runs the base domain adaptation algorithm may take multiple gradient descent iterations \(j=1\dots J\). This will trigger a large chain of higher-order gradients \(\nabla _{\varTheta _{0}}\mathcal {L}_{\text {inner}}(\cdot ), \dots ,\nabla _{\varTheta _{J-1}}\mathcal {L}_{\text {inner}}(\cdot )\). (ii) More fundamentally, several state of the art domain adaptation algorithms [41, 43] use multiple optimization steps when making updates on \(\mathcal {L}_\text {inner}\), for example to adversarially train the deep feature extractor and classifier modules of the model in \(\varTheta \). Taking gradient steps on \(\mathcal {L}_\text {outer}(\varTheta ^*)\) thus triggers higher-order gradients, even if one only takes a single step \(J=1\) of domain adaptation optimization.
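To see where the higher-order terms come from, assume for illustration only that the inner loop is plain gradient descent with step size \(\alpha \), that is \(\varTheta _{j+1}=\varTheta _j-\alpha \nabla _{\varTheta _j}\mathcal {L}_{\text {inner}}(\varTheta _j)\) with \(\varTheta ^*=\varTheta _J\). The chain rule then gives

\[
\nabla _{\varTheta _0}\mathcal {L}_{\text {outer}}(\varTheta _J)
= \Big [\prod _{j=0}^{J-1}\big (I-\alpha \,\nabla ^2_{\varTheta _j}\mathcal {L}_{\text {inner}}(\varTheta _j)\big )\Big ]\,
\nabla _{\varTheta _J}\mathcal {L}_{\text {outer}}(\varTheta _J),
\]

with the factors ordered by increasing \(j\) from left to right. Every inner step therefore contributes one Hessian of the inner loss, and base learners that take several sub-steps per update add further factors of the same kind.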

Shortest Path Optimization. To obtain the meta-gradient in Eq. 10 efficiently, we use the shortest-path gradient (SPG) [36]. Before optimising the inner loop, we copy parameters \(\varTheta _0\) as \(\tilde{\varTheta }_{0}\) and use \(\tilde{\varTheta }_{0}\) in the inner-level algorithm. Then, after finishing the inner loop we get the shortest-path gradient between \(\tilde{\varTheta }_{J}\) and \(\varTheta _0\).

Each meta-gradient step (Eq. 10) is then approximated as

Summary. We now have an efficient implementation of \({\text {UpdateIC}}\) for updating initial conditions as summarised in Algorithm 2. This shortest path approximation has the advantage of allowing efficient initial condition updates both for multiple iterations of inner loop optimization \(J>1\), as well as for inner loop domain adaptation algorithms that use multiple steps [41, 43]. We use this implementation for the MSDA and SSDA methods in Algorithms 3 and 4.
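The following sketch shows one possible reading of a shortest-path \({\text {UpdateIC}}\) step. It assumes that the displacement \(\tilde{\varTheta }_{0}-\tilde{\varTheta }_{J}\) is treated as a constant, so that the outer gradient is evaluated at the adapted parameters and applied directly to \(\varTheta _0\) without differentiating through the inner loop. The function name, learning rates and toy quadratic gradients are assumptions for illustration; this is not a transcription of Algorithm 2.

```python
from typing import Callable, List

Vector = List[float]
GradFn = Callable[[Vector], Vector]


def update_ic_shortest_path(theta0: Vector, inner_grad: GradFn, outer_grad: GradFn,
                            inner_lr: float = 0.1, meta_lr: float = 0.1,
                            num_inner_steps: int = 1) -> Vector:
    """One meta-update of the initial condition using a shortest-path approximation."""
    theta_tilde = list(theta0)  # work on a copy so theta0 itself is untouched
    for _ in range(num_inner_steps):
        grad = inner_grad(theta_tilde)
        theta_tilde = [t - inner_lr * g for t, g in zip(theta_tilde, grad)]
    # Shortest-path "gradient": straight-line displacement over the inner loop.
    short = [t0 - tj for t0, tj in zip(theta0, theta_tilde)]
    # Treated as a constant offset, so no second-order terms are needed.
    adapted = [t0 - s for t0, s in zip(theta0, short)]
    g_outer = outer_grad(adapted)
    return [t0 - meta_lr * g for t0, g in zip(theta0, g_outer)]


# Toy quadratic objectives (assumptions): minima at 1 (inner) and 2 (outer).
def toy_inner_grad(theta: Vector) -> Vector:
    return [t - 1.0 for t in theta]


def toy_outer_grad(theta: Vector) -> Vector:
    return [t - 2.0 for t in theta]


new_theta0 = update_ic_shortest_path([0.0, 0.0], toy_inner_grad, toy_outer_grad,
                                     inner_lr=0.5, meta_lr=0.1, num_inner_steps=1)
```

The cost of this update is that of the inner steps plus one extra gradient evaluation, regardless of how many sub-steps the base learner performs internally.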

4 Experiments

Datasets. We evaluate our method on several multi-source domain adaptation benchmarks including PACS [22], Office-Home [49] and DomainNet [38]; as well as on the semi-supervised setting of Office-Home and DomainNet.

Base Domain Adaptation Algorithms and Ablation. Our Meta-DA framework is designed to complement existing base domain adaptation algorithms. We evaluate it in conjunction with Domain Adversarial Neural Networks (DANN, [16]) – as a representative classic approach to deep domain adaptation; as well as Maximum Classifier Discrepancy (MCD, [43]) and MinMax Entropy (MME, [41]) – as examples of state of the art multi-source and semi-supervised domain adaptation algorithms respectively. Our goal is to evaluate whether our Meta-DA framework can improve these base learners. We note that the MCD algorithm has two variants: (1) A multi-step variant that alternates between updating the classifiers and several steps of updating the feature extractor and (2) A one-step variant that uses a gradient reversal [16] layer so that classifier and feature extractor can be updated in a single gradient step. We evaluate both of these. Sequential Meta-Learning: As an ablation, we also consider an alternative fast meta-learning approach that performs all meta-updates at the start of learning, before doing DA; rather than performing meta-updates online with DA as in our proposed Meta-DA algorithms.

4.1 Multi-source Domain Adaptation

PACS: Dataset. PACS [22] was initially proposed for domain generalization and has subsequently been re-purposed [7, 34] for multi-source domain adaptation. This dataset has four diverse domains: (A)rt painting, (C)artoon, (P)hoto and (S)ketch with seven object categories ‘dog’, ‘elephant’, ‘giraffe’, ‘guitar’, ‘house’, ‘horse’ and ‘person’ with 9991 images in total. Setting. We follow the setting in [7] and perform leave-one-domain-out evaluation, setting each domain as the adaptation target in turn. As per [7], we use the ImageNet pre-trained ResNet-18 as our feature extractor for fair comparison. We train with M-SGD (batch size = 32, learning rate = \(2\times 10^{-3}\), momentum = 0.9, weight decay = \(10^{-4}\)). All the models are trained for 5k iterations before testing. Results. From the results in Table 1, we can see that: (i) Several recent methods with published results on PACS achieve similar performance, with JiGen [7] performing best. We additionally evaluate DANN and MCD including one-step MCD (os) and multi-step MCD (n = 4) variants, and find that one-step MCD performs similarly to JiGen. (ii) Applying our Meta-DA framework to DANN and MCD boosts all three base domain adaptation methods by \(1.96\%\), \(2.5\%\) and \(1.2\%\) respectively. (iii) In particular, our Meta-MCD surpasses the previous state of the art performance set by JiGen. Together these results show the broad applicability and absolute efficacy of our method. Based on these results we focus on the better performing single-step MCD in the following evaluations.

Office-Home: Dataset and Settings. Office-Home was initially proposed for single-source domain adaptation, and contains \(\approx 15,500\) images from four domains ‘artistic’, ‘clip art’, ‘product’ and ‘real-world’ with 65 different categories. We follow the setting in [8] and use ImageNet pretrained ResNet-50 as our backbone. We train all models with M-SGD (batch size = 32, learning rate = \(10^{-3}\), momentum = 0.9 and weight decay = \(10^{-4}\)) for 3k iterations. Results. From Table 2, we see that MCD achieves the best performance among the baselines. Applying our meta-learning framework improves both baselines by a small amount, and Meta-MCD achieves state-of-the-art performance on this benchmark.

DomainNet: Dataset. DomainNet is a recent benchmark [38] for multi-source domain adaptation in object recognition. It is the largest-scale DA benchmark so far, with \(\approx 0.6\) million images across six domains and 345 categories.

Settings. We follow the official train/test split protocol [38] (see Note 2). Various feature extraction backbones were used in the original paper [38], making it hard to compare results. We use ImageNet pre-trained ResNet-18 and ResNet-34 for our own implementations to facilitate direct comparison. We use M-SGD to train all the competitors (batch size = 32, learning rate = 0.001, momentum = 0.9, weight decay = 0.0001) for 10k iterations (see Note 3). We re-train the model three times to generate standard deviations. Results. From the results in Table 3, we can see that: (i) The top group of results from [38] show that the dataset is a much more challenging domain adaptation benchmark than previous ones. Most existing domain adaptation methods (typically tuned on small-scale benchmarks) fail to improve over the source-only baseline according to the results in [38]. (ii) The middle group of ResNet-18 results show that our MCD experiment achieves comparable results to those in [38]. (iii) Our Meta-MCD and Meta-DANN methods provide a small but consistent improvement over the corresponding MCD and DANN baselines for both ResNet-18 and ResNet-34 backbones. While the improvement margins are relatively small, this is a significant outcome as the results show that the base DA methods already struggle to make a large improvement over the source-only baseline when using ResNet-18/34; and also the multi-run standard deviation is small compared to the margins. (iv) Overall our Meta-MCD achieves state-of-the-art performance on the benchmark by a small margin.

4.2 Semi-supervised Domain Adaptation

Office-Home: Setting. We follow the setting in [41]. We focus on 3-shot learning in the target domain (three annotated examples only per category), and focus on the five most difficult source-target domain pairs. We use the ImageNet pretrained AlexNet and ResNet-34 as backbone models. We train all the models with M-SGD, with batch size 24 for labelled source and target domains and 48 for the unlabelled target as in [41], and with learning rates of \(10^{-2}\) for the fully-connected layers and \(10^{-3}\) for the remaining trainable layers. We also use horizontal-flipping and random-cropping data augmentation for training images. Results. From the results in Table 4, we can see that our Meta-MME does not impact performance on AlexNet. However, for a modern ResNet-34 architecture, Meta-MME provides a visible \(\sim \)0.8% accuracy gain over the MME baseline, which results in the state-of-the-art performance of SSDA on this benchmark.

DomainNet: Settings. We evaluate DomainNet for 1-1 few-shot domain adaptation as in [41]. We evaluate both AlexNet and modern ResNet-34 backbones, and apply our meta-learning method on MME. As per [41], we train our models using M-SGD where the initial learning rate is 0.01 for the fully-connected layers and 0.001 for the remaining trainable layers. During training we use the annealing strategy in [16] to decay the learning rate, and use the same batch size as [41].
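As a rough illustration of the learning-rate decay mentioned above, the sketch below implements an inverse-power schedule. The functional form and the defaults `alpha = 10` and `beta = 0.75` are assumptions based on the schedule commonly attributed to [16]; they should be checked against that paper before being relied on. The function name and the five-point example are hypothetical.

```python
def annealed_learning_rate(initial_lr: float, progress: float,
                           alpha: float = 10.0, beta: float = 0.75) -> float:
    """Inverse-power decay: initial_lr / (1 + alpha * progress) ** beta.

    `progress` runs linearly from 0 (start of training) to 1 (end of training).
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must lie in [0, 1]")
    return initial_lr / (1.0 + alpha * progress) ** beta


# Schedule for the fully-connected layers (initial rate 0.01) at five checkpoints.
fc_schedule = [annealed_learning_rate(0.01, step / 4) for step in range(5)]
# The remaining layers would use the same decay with initial rate 0.001.
backbone_start = annealed_learning_rate(0.001, 0.0)
```

Applying the same multiplicative decay to both parameter groups keeps the 10:1 ratio between the fully-connected and backbone learning rates constant throughout training.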

Results. From the results in Table 5, we can see our Meta-MME improves on the accuracy of the base MME algorithm in all pairwise transfer choices, and also for both backbones. These results show the consistent effectiveness of our method, as well as improving state-of-the-art for DomainNet SSDA.

4.3 Further Analysis

Discussion. Our final online algorithm can be understood as performing DA with periodic meta-updates that adjust parameters to optimize the impact of the following DA steps. From the perspective of any given DA step, the role of the preceding meta-update is to tune its initial condition.

Non-meta vs Sequential vs Online Meta. This work is the first to propose meta-learning to improve domain adaptation, and in particular to contribute an efficient and effective online meta-learning algorithm for initial condition training. Exact meta-learning is intractable, so we cannot compare against it. However, in this section we compare our online meta-update with the alternative sequential approximation, and with non-meta alternatives, for both MSDA and SSDA using A, C, P \(\rightarrow \) S and R \(\rightarrow \) C as examples. For fair comparison, we give the sequential and online meta-learning methods the same number of meta-updates (\({\text {UpdateIC}}\)) and base DA updates. Figure 2 shows that: (1) The sequential meta-learning method already improves performance on the target domain compared to vanilla domain adaptation, which confirms the potential for improvement by refining model initialization. (2) The sequential strategy has a slight advantage early in DA training, which makes sense, as all meta-updates occur in advance. But overall our online method that interleaves meta-updates and DA updates leads to higher test accuracy.

Computational Cost. Our Meta-DA imposes only a modest computational overhead over the base DA algorithm. For example, comparing Meta-MCD and MCD on ResNet-34 DomainNet, the time per iteration is 2.96s vs 2.49s respectively (see Note 4), an overhead of roughly 19%.

Weight-Space Illustration. To investigate our method’s mechanism, we train MCD and Meta-MCD from a common initial condition on MSDA PACS when ‘Sketch’ is the target domain. We use the initial condition \(\varTheta _0\) and two different solutions (\(\varTheta _\text {Meta-MCD}\) and \(\varTheta _\text {MCD}\)) to define a plane in weight-space and colour it according to the performance at each point. We can see from Fig. 3(a) that Meta-MCD finds a solution with greater test accuracy. Figures 3(b) and (c) break down the training loss components. We can see that, in this slice, both methods minimize MCD’s adaptation (classifier discrepancy) loss \(\mathcal {L}_a\) adequately, but MCD fails to minimize the supervised loss as well as Meta-MCD does (\(\varTheta _\text {Meta-MCD}\) is closer to the minimum than \(\varTheta _\text {MCD}\)). Note that both methods were trained to convergence in generating these solutions. This suggests that Meta-MCD’s meta-optimization step using meta-train/meta-test splits materially benefits the optimization dynamics of the downstream MSDA task.
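A plane through three weight vectors can be parameterised as in the sketch below. The use of Gram-Schmidt orthonormalisation, the helper names and the three-dimensional toy vectors are assumptions for illustration; the exact procedure used to produce Fig. 3 is not specified in the text.

```python
import math
from typing import List, Tuple

Vector = List[float]


def _sub(a: Vector, b: Vector) -> Vector:
    return [x - y for x, y in zip(a, b)]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def plane_basis(theta0: Vector, theta_a: Vector, theta_b: Vector) -> Tuple[Vector, Vector]:
    """Orthonormal basis (u, v) of the plane through three weight vectors."""
    d1, d2 = _sub(theta_a, theta0), _sub(theta_b, theta0)
    norm1 = math.sqrt(_dot(d1, d1))
    if norm1 == 0.0:
        raise ValueError("theta_a coincides with theta0")
    u = [x / norm1 for x in d1]
    proj = _dot(d2, u)
    residual = [x - proj * y for x, y in zip(d2, u)]  # Gram-Schmidt step
    norm2 = math.sqrt(_dot(residual, residual))
    if norm2 == 0.0:
        raise ValueError("the three points are collinear and do not define a plane")
    return u, [x / norm2 for x in residual]


def plane_point(theta0: Vector, u: Vector, v: Vector, a: float, b: float) -> Vector:
    """Weight vector at plane coordinates (a, b)."""
    return [t + a * x + b * y for t, x, y in zip(theta0, u, v)]


def plane_coords(theta: Vector, theta0: Vector, u: Vector, v: Vector) -> Tuple[float, float]:
    """Plane coordinates of a weight vector lying in the plane."""
    d = _sub(theta, theta0)
    return _dot(d, u), _dot(d, v)


# Toy stand-ins for the initial condition and the two trained solutions.
theta_init, theta_mcd, theta_meta = [0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [1.0, 1.0, 0.0]
u, v = plane_basis(theta_init, theta_mcd, theta_meta)
# Each grid point would be loaded into the network and scored to colour the plot.
grid = [plane_point(theta_init, u, v, a, b) for a in (0.0, 1.0, 2.0) for b in (0.0, 1.0)]
```

An orthonormal basis keeps distances in the plot proportional to distances in weight space, so the relative positions of the two solutions and the initial condition are not distorted.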

Model Agnostic. We emphasize that, although we focused on DANN, MCD and MME, our Meta-DA framework can be applied to any gradient-based base DA algorithm that fits the templates above. Supplementary C shows some results for the JiGen and M\(^3\)SDA algorithms.

Comparison Between DA and DG Methods. Domain generalization (DG) is a topical problem closely related to domain adaptation, but it assumes no access to the target domain data during training. DA and DG methods are rarely directly compared. Here we compare our Meta-MCD and MCD with some state of the art DG methods on PACS, as shown in Table 6. From the results, we can see that DA methods generally outperform the DG methods by a noticeable margin, which is expected as DA methods ‘see’ the target domain data at training.

5 Conclusion

We proposed a meta-learning pipeline to improve domain adaptation by initial condition optimization. Our online shortest-path solution is efficient and effective, and provides a consistent boost to several domain adaptation algorithms, improving state of the art in both multi-source and semi-supervised settings. Our approach is agnostic to the base adaptation method, and can potentially be used to improve many DA algorithms that fit a very general template. In future we aim to meta-learn other DA hyper-parameters beyond initial conditions.

Notes

- 1.

One may not think of domain adaptation as being sensitive to initial condition, but given the lack of target domain supervision to guide learning, different initialization can lead to a significant 10–15% difference in accuracy (see Supplementary material).

- 2.

Other settings such as optimizer, iterations and data augmentation are not clearly stated in [38], making it hard to replicate their results.

- 3.

We tried training for up to 50k iterations and found that it did not lead to a clear improvement. We therefore train all models for 10k iterations to minimise cost.

- 4.

Using a GeForce RTX 2080 GPU and a Xeon Gold 6130 CPU @ 2.10 GHz.

- 5.

- 6.

References

Andrychowicz, M., et al.: Learning to learn by gradient descent by gradient descent. In: NeurIPS (2016)

Balaji, Y., Chellappa, R., Feizi, S.: Normalized wasserstein distance for mixture distributions with applications in adversarial learning and domain adaptation. In: ICCV (2019)

Balaji, Y., Sankaranarayanan, S., Chellappa, R.: Metareg: towards domain generalization using meta-regularization. In: NeurIPS (2018)

Ben-David, S., Blitzer, J., Crammer, K., Kulesza, A., Pereira, F., Vaughan, J.W.: A theory of learning from different domains. Mach. Learn. 79, 151–175 (2010)

Ben-David, S., Blitzer, J., Crammer, K., Pereira, F.: Analysis of representations for domain adaptation. In: NeurIPS (2006)

Bousmalis, K., Trigeorgis, G., Silberman, N., Krishnan, D., Erhan, D.: Domain separation networks. In: NeurIPS (2016)

Carlucci, F.M., D’Innocente, A., Bucci, S., Caputo, B., Tommasi, T.: Domain generalization by solving jigsaw puzzles. In: CVPR (2019)

Chang, W.G., You, T., Seo, S., Kwak, S., Han, B.: Domain-specific batch normalization for unsupervised domain adaptation. In: CVPR (2019)

Daumé, H.: Frustratingly easy domain adaptation. In: ACL (2007)

Donahue, J., Hoffman, J., Rodner, E., Saenko, K., Darrell, T.: Semi-supervised domain adaptation with instance constraints. In: CVPR (2013)

Dou, Q., Castro, D.C., Kamnitsas, K., Glocker, B.: Domain generalization via model-agnostic learning of semantic features. In: NeurIPS (2019)

Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. In: ICML (2017)

Finn, C., Rajeswaran, A., Kakade, S.M., Levine, S.: Online meta-learning. In: ICML (2019)

Franceschi, L., Frasconi, P., Salzo, S., Grazzi, R., Pontil, M.: Bilevel programming for hyperparameter optimization and meta-learning. In: ICML (2018)

French, G., Mackiewicz, M., Fisher, M.: Self-ensembling for visual domain adaptation. In: ICLR (2018)

Ganin, Y., et al.: Domain-adversarial training of neural networks. In: JMLR (2016)

Grandvalet, Y., Bengio, Y.: Semi-supervised learning by entropy minimization. In: NeurIPS (2005)

Hoffman, J., et al.: CyCADA: cycle-consistent adversarial domain adaptation. In: ICML (2018)

Kim, M., Sahu, P., Gholami, B., Pavlovic, V.: Unsupervised visual domain adaptation: a deep max-margin gaussian process approach. In: CVPR (2019)

Lee, C.Y., Batra, T., Baig, M.H., Ulbricht, D.: Sliced wasserstein discrepancy for unsupervised domain adaptation. In: CVPR (2019)

Li, D., Yang, Y., Song, Y.Z., Hospedales, T.: Learning to generalize: Meta-learning for domain generalization. In: AAAI (2018)

Li, D., Yang, Y., Song, Y.Z., Hospedales, T.M.: Deeper, broader and artier domain generalization. In: ICCV (2017)

Li, D., Zhang, J., Yang, Y., Liu, C., Song, Y.Z., Hospedales, T.M.: Episodic training for domain generalization. In: ICCV (2019)

Li, Z., Zhou, F., Chen, F., Li, H.: Meta-SGD: learning to learn quickly for few-shot learning. arXiv:1707.09835 (2017)

Liu, H., Simonyan, K., Yang, Y.: Darts: differentiable architecture search. In: ICLR (2019)

Liu, M.Y., Tuzel, O.: Coupled generative adversarial networks. In: NeurIPS (2016)

Long, M., Cao, Y., Wang, J., Jordan, M.I.: Learning transferable features with deep adaptation networks. In: ICML (2015)

Long, M., Cao, Z., Wang, J., Jordan, M.I.: Conditional adversarial domain adaptation. In: NeurIPS (2018)

Long, M., Zhu, H., Wang, J., Jordan, M.I.: Unsupervised domain adaptation with residual transfer networks. In: NeurIPS (2016)

Long, M., Zhu, H., Wang, J., Jordan, M.I.: Deep transfer learning with joint adaptation networks. In: ICML (2017)

Luo, Y., Zheng, L., Guan, T., Yu, J., Yang, Y.: Taking a closer look at domain shift: category-level adversaries for semantics consistent domain adaptation. In: CVPR (2019)

Maaten, L.V.D., Hinton, G.: Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008)

Maclaurin, D., Duvenaud, D., Adams, R.: Gradient-based hyperparameter optimization through reversible learning. In: ICML (2015)

Mancini, M., Porzi, L., Rota Bulò, S., Caputo, B., Ricci, E.: Boosting domain adaptation by discovering latent domains. In: CVPR (2018)

Mansour, Y., Mohri, M., Rostamizadeh, A.: Domain adaptation with multiple sources. In: NeurIPS (2009)

Nichol, A., Achiam, J., Schulman, J.: On first-order meta-learning algorithms. arXiv:1803.02999 (2018)

Parisotto, E., Ghosh, S., Yalamanchi, S.B., Chinnaobireddy, V., Wu, Y., Salakhutdinov, R.: Concurrent meta reinforcement learning. arXiv:1903.02710 (2019)

Peng, X., Bai, Q., Xia, X., Huang, Z., Saenko, K., Wang, B.: Moment matching for multi-source domain adaptation. In: CVPR (2019)

Rajeswaran, A., Finn, C., Kakade, S., Levine, S.: Meta-learning with implicit gradients. In: NeurIPS (2019)

Ravi, S., Larochelle, H.: Optimization as a model for few-shot learning. In: ICLR (2016)

Saito, K., Kim, D., Sclaroff, S., Darrell, T., Saenko, K.: Semi-supervised domain adaptation via minimax entropy. In: ICCV (2019)

Saito, K., Ushiku, Y., Harada, T., Saenko, K.: Adversarial dropout regularization. In: ICLR (2018)

Saito, K., Watanabe, K., Ushiku, Y., Harada, T.: Maximum classifier discrepancy for unsupervised domain adaptation. In: CVPR (2018)

Schmidhuber, J.: Learning to control fast-weight memories: an alternative to dynamic recurrent networks. Neural Comput. 4, 131–139 (1992)

Schmidhuber, J., Zhao, J., Wiering, M.: Shifting inductive bias with success-story algorithm, adaptive Levin search, and incremental self-improvement. Mach. Learn. 28, 105–130 (1997)

Thrun, S., Pratt, L. (eds.): Learning to Learn. Kluwer Academic Publishers, Boston (1998)

Tzeng, E., Hoffman, J., Saenko, K., Darrell, T.: Adversarial discriminative domain adaptation. In: CVPR (2017)

Tzeng, E., Hoffman, J., Zhang, N., Saenko, K., Darrell, T.: Deep domain confusion: Maximizing for domain invariance. arXiv:1412.3474 (2014)

Venkateswara, H., Eusebio, J., Chakraborty, S., Panchanathan, S.: Deep hashing network for unsupervised domain adaptation. In: CVPR (2017)

Vinyals, O., Blundell, C., Lillicrap, T., Wierstra, D., et al.: Matching networks for one shot learning. In: NeurIPS (2016)

Xu, P., Gurram, P., Whipps, G., Chellappa, R.: Wasserstein distance based domain adaptation for object detection. arXiv:1909.08675 (2019)

Xu, R., Chen, Z., Zuo, W., Yan, J., Lin, L.: Deep cocktail network: Multi-source unsupervised domain adaptation with category shift. In: CVPR (2018)

Xu, Z., van Hasselt, H.P., Silver, D.: Meta-gradient reinforcement learning. In: NeurIPS (2018)

Yao, T., Pan, Y., Ngo, C.W., Li, H., Mei, T.: Semi-supervised domain adaptation with subspace learning for visual recognition. In: CVPR (2015)

Zhao, H., Zhang, S., Wu, G., Moura, J.M., Costeira, J.P., Gordon, G.J.: Adversarial multiple source domain adaptation. In: NeurIPS (2018)

Zheng, Z., Oh, J., Singh, S.: On learning intrinsic rewards for policy gradient methods. In: NeurIPS (2018)

Appendices

A Short-Path Gradient Descent

Optimizing Eq. 3 naively by Algorithm 1 would be costly and ineffective. It is costly because, in the case of domain adaptation (unlike, for example, few-shot learning [12]), the inner loop requires many iterations. Back-propagating through the whole optimization path to update the initial \(\varTheta \) in the outer loop therefore produces many higher-order gradient terms. For example, if the inner loop applies j iterations, we will have

then the outer loop will update the initial condition as

where higher-order gradients are required for all terms \(\nabla _{\varTheta ^{(0)}}\mathcal {L}_{\text {uda}}(.), \dots ,\nabla _{\varTheta ^{(j-1)}}\mathcal {L}_{\text {uda}}(.)\) in the update of Eq. 14.

One intuitive way of eliminating the higher-order gradients when computing Eq. 14 is to treat \(\nabla _{\varTheta ^{(0)}}\mathcal {L}_{\text {uda}}(.),\dots ,\nabla _{\varTheta ^{(j-1)}}\mathcal {L}_{\text {uda}}(.)\) as constants during the optimization. Then, Eq. 14 is equivalent to

However, in order to compute Eq. 15, one still needs to store the optimization path of Eq. 13 in memory and back-propagate through it to optimize \(\varTheta \), which is computationally expensive. Therefore, we propose a practical solution: an iterative meta-learning algorithm that optimizes the model parameters during training.
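To make the first-order approximation concrete, the unrolled inner loop and its meta-gradient can be written out as follows. This is a sketch under assumed notation: plain gradient descent in the inner loop with step size \(\alpha\), an outer step size \(\beta\), \(H_i\) denoting the Hessian of \(\mathcal {L}_{\text {uda}}\) at \(\varTheta ^{(i)}\), and dataset arguments omitted. The exact form of the numbered equations may differ.

\[
\varTheta ^{(j)} = \varTheta ^{(0)} - \alpha \sum _{i=0}^{j-1} \nabla _{\varTheta ^{(i)}}\mathcal {L}_{\text {uda}}\big (\varTheta ^{(i)}\big ), \qquad \varTheta ^{(0)} = \varTheta .
\]

By the chain rule, the exact meta-gradient is

\[
\nabla _{\varTheta }\mathcal {L}_{\text {sup}}\big (\varTheta ^{(j)}\big ) = \Big (\frac{\partial \varTheta ^{(j)}}{\partial \varTheta }\Big )^{\top } \nabla _{\varTheta ^{(j)}}\mathcal {L}_{\text {sup}}\big (\varTheta ^{(j)}\big ), \qquad \frac{\partial \varTheta ^{(j)}}{\partial \varTheta } = (I - \alpha H_{j-1}) \cdots (I - \alpha H_{0}).
\]

Treating the inner-loop gradients as constants removes every \(H_i\) term, so the Jacobian reduces to the identity and the outer update becomes

\[
\varTheta \leftarrow \varTheta - \beta \, \nabla _{\varTheta ^{(j)}}\mathcal {L}_{\text {sup}}\big (\varTheta ^{(j)}\big ).
\]

The second-order terms \(H_i\) are exactly what makes the naive update costly; dropping them leaves only a single gradient evaluated at the end point of the inner loop.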

Shortest Path Optimization. To obtain the meta-gradient in Eq. 15 more efficiently, we propose a scalable meta-learning method using the shortest-path gradient (S-P.G.) [36]. Before the optimization of Eq. 13, we copy the parameters \(\varTheta \) as \(\tilde{\varTheta }^{(0)}\) and use \(\tilde{\varTheta }^{(0)}\) in the inner-level algorithm.

Then, after finishing the optimization in Eq. 16, we obtain the shortest-path gradient between the two points \(\tilde{\varTheta _i}^{(j)}\) and \(\varTheta _i\).

Unlike Eq. 15, we use this shortest-path gradient \(\nabla _{\varTheta }^{\text {short}}\) and the initial parameter \(\varTheta \) to compute \(\mathcal {L}_{\text {sup}}(.)\) as

Then, a one-step meta update of Eq. 18 is

Effectiveness: One update of Eq. 19 corresponds to one update of Eq. 15, which shows that shortest-path optimization is as effective as first-order meta-optimization. Scalability/Efficiency: The memory cost of first-order meta-learning grows linearly with the number of inner-loop update steps, and is therefore constrained by the total GPU memory. With shortest-path optimization, however, storing the optimization graph is no longer necessary, which makes it scalable and efficient. We also find experimentally that one step of shortest-path optimization is 7x faster than one step of first-order meta-optimization in our setting. The overall algorithm flow is shown in Algorithm 2.
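The procedure described above can be sketched on a toy problem. The code below is one reading of the text, not the authors' implementation: it assumes plain gradient descent, analytic gradients of two hypothetical quadratic losses standing in for \(\mathcal {L}_{\text {uda}}\) and \(\mathcal {L}_{\text {sup}}\), and illustrative function names (`shortest_path_meta_step`, `first_order_meta_step`).

```python
from typing import Callable, List

Vector = List[float]
GradFn = Callable[[Vector], Vector]


def sgd_step(theta: Vector, grad: Vector, lr: float) -> Vector:
    """One plain gradient-descent step."""
    return [t - lr * g for t, g in zip(theta, grad)]


def shortest_path_meta_step(theta: Vector, grad_uda: GradFn, grad_sup: GradFn,
                            inner_lr: float, meta_lr: float,
                            inner_steps: int) -> Vector:
    # Copy the parameters so the inner loop never modifies theta itself.
    theta_tilde = list(theta)
    for _ in range(inner_steps):
        theta_tilde = sgd_step(theta_tilde, grad_uda(theta_tilde), inner_lr)
    # Shortest-path gradient: displacement between the start point and the
    # end point of the inner loop. No optimization graph is stored.
    short = [t - tt for t, tt in zip(theta, theta_tilde)]
    # Supervised gradient evaluated at theta - short, with short held constant.
    meta_grad = grad_sup([t - s for t, s in zip(theta, short)])
    return sgd_step(theta, meta_grad, meta_lr)


def first_order_meta_step(theta: Vector, grad_uda: GradFn, grad_sup: GradFn,
                          inner_lr: float, meta_lr: float,
                          inner_steps: int) -> Vector:
    # Reference: first-order meta-gradient taken at the inner-loop end point.
    theta_j = list(theta)
    for _ in range(inner_steps):
        theta_j = sgd_step(theta_j, grad_uda(theta_j), inner_lr)
    return sgd_step(theta, grad_sup(theta_j), meta_lr)


def toy_grad_uda(theta: Vector) -> Vector:
    """Gradient of the toy loss 0.5 * (t - 1)^2 per coordinate (hypothetical)."""
    return [t - 1.0 for t in theta]


def toy_grad_sup(theta: Vector) -> Vector:
    """Gradient of the toy loss 0.5 * (t - 2)^2 per coordinate (hypothetical)."""
    return [t - 2.0 for t in theta]


theta_sp = shortest_path_meta_step([0.0], toy_grad_uda, toy_grad_sup,
                                   inner_lr=0.5, meta_lr=0.1, inner_steps=2)
theta_fo = first_order_meta_step([0.0], toy_grad_uda, toy_grad_sup,
                                 inner_lr=0.5, meta_lr=0.1, inner_steps=2)
```

On this toy problem the two updates coincide, which mirrors the effectiveness argument. In a deep-learning framework the practical difference is that the shortest-path variant only needs the two parameter snapshots, rather than a graph spanning all inner-loop steps.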

B Additional Illustrative Schematics

To better explain the contrast between our online meta-learning domain adaptation approach and the sequential meta-learning approach, we add a schematic illustration in Fig. 4. The main difference between the two is how the meta and DA updates are distributed. The sequential approach performs all meta updates first and then the DA updates, whereas the online approach alternates between meta and DA updates throughout the whole training procedure.

Fig. 4. Illustrative schematics of sequential and online meta domain adaptation. Left: Optimization paths of different approaches on domain adaptation loss (shading). (Solid line) Vanilla gradient descent on a DA objective from a fixed start point. (Multi-segment line) Online meta-learning iterates meta and gradient descent updates. (Two segment line) Sequential meta-learning provides an alternative approximation: update initial condition, then perform gradient descent. Right: (Top) Sequential meta-learning performs meta updates and DA updates sequentially. (Bottom) Online meta-learning alternates between meta-optimization and domain adaptation.
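The difference in update ordering can be made concrete with a small scheduling sketch. The function and parameter names below (`sequential_schedule`, `online_schedule`, `meta_per_round`, `da_per_round`) are hypothetical and only label the order of updates; they do not perform any optimization.

```python
from typing import List


def sequential_schedule(meta_updates: int, da_updates: int) -> List[str]:
    """All meta updates first, followed by all DA updates."""
    return ["meta"] * meta_updates + ["da"] * da_updates


def online_schedule(rounds: int, meta_per_round: int = 1,
                    da_per_round: int = 1) -> List[str]:
    """Interleave meta and DA updates throughout training."""
    schedule: List[str] = []
    for _ in range(rounds):
        schedule += ["meta"] * meta_per_round
        schedule += ["da"] * da_per_round
    return schedule


print(sequential_schedule(3, 3))  # ['meta', 'meta', 'meta', 'da', 'da', 'da']
print(online_schedule(3))         # ['meta', 'da', 'meta', 'da', 'meta', 'da']
```

Both schedules contain the same number of each update type; only their placement along the optimization path differs.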

C Additional Experiments

Visualization of the Learned Features. We visualize the learned features of MCD and Meta-MCD on PACS with sketch as the target domain, as shown in Fig. 5. Both MCD and Meta-MCD learn discriminative features, but the features learned by Meta-MCD are more separable than those of vanilla MCD. This helps explain why Meta-MCD performs better than the vanilla MCD method.

Effect of Varying S. Our online meta-learning method has iteration hyper-parameters S and J. We fix \(J=1\) throughout, and analyze the effect of varying S here using the DomainNet MSDA experiment with ResNet-18. The results in Table 7 show that Meta-DA is rather insensitive to this hyper-parameter.

Varying the Number of Source Domains in MSDA. For multi-source DA, the performance of both Meta-DA and the baselines is expected to drop with fewer sources (the same holds for SSDA with fewer labelled target-domain points). To disentangle the impact of the number of sources on the baseline vs. Meta-DA, we compare MSDA by Meta-MCD on PACS with 2 vs. 3 sources. The results for Meta-MCD vs. vanilla MCD are 82.30% vs. 80.07% (two sources, gap 2.23%) and 87.24% vs. 84.79% (three sources, gap 2.45%). The Meta-DA margin thus remains similar when the number of source domains is reduced; most of the difference is accounted for by the impact on the base DA algorithm.
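The arithmetic behind this comparison can be checked directly from the four accuracies quoted above. The snippet is a small worked example; the dictionary layout and variable names are illustrative only.

```python
# Accuracies (%) quoted in the text, keyed by number of source domains.
results = {
    2: {"meta_mcd": 82.30, "mcd": 80.07},
    3: {"meta_mcd": 87.24, "mcd": 84.79},
}

# Margin of Meta-MCD over vanilla MCD for each number of sources.
gaps = {n: round(acc["meta_mcd"] - acc["mcd"], 2) for n, acc in results.items()}

# Drop in accuracy when going from three sources to two, per method.
drop_mcd = round(results[3]["mcd"] - results[2]["mcd"], 2)
drop_meta = round(results[3]["meta_mcd"] - results[2]["meta_mcd"], 2)

print(gaps)                 # margins: 2.23 (two sources), 2.45 (three sources)
print(drop_mcd, drop_meta)  # drops: 4.72 (MCD), 4.94 (Meta-MCD)
```

The margin changes by about 0.2 points, while both methods lose nearly 5 points when a source domain is removed, which is why most of the difference is attributed to the base DA algorithm.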

Fig. 5. t-SNE [32] visualization of learned MCD (left) and Meta-MCD (right) features on PACS (sketch as target domain). Different colors indicate different categories.

Other Base DA Methods. Besides the base DA methods evaluated in the main paper (DANN, MCD and MME), our method is applicable to any base domain adaptation method. We use the published code of JiGen (footnote 5) and M\(^3\)SDA (footnote 6), and apply our Meta-DA on top of the existing code. The results are shown in Tables 8 and 9. Our Meta-JiGen and Meta-M\(^3\)SDA-\(\beta \) improve over the base methods by 3.42% and 1.2% accuracy respectively, which confirms Meta-DA’s generality. We excluded these from the main results because: (i) re-running JiGen’s published code in our compute environment failed to replicate the published numbers; and (ii) M\(^3\)SDA as a base algorithm is too slow for comprehensive experiments. Nevertheless, these results provide further evidence that Meta-DA can be a useful module to plug in and improve future base DA methods as well as those evaluated here.

Initialization Dependence of Domain Adaptation. One may not think of domain adaptation as being sensitive to initial condition, but given the lack of target domain supervision to guide learning, different initializations can lead to a significant difference in accuracy. To illustrate this, we re-ran MCD-based DA on PACS with sketch as the target, using different initializations. The results in Table 10 show that both different classic initialization heuristics and simple perturbation of a given initial condition with noise can lead to significant differences in final performance. This confirms that studying methods for tuning initialization provides a valid research direction for advancing DA performance.
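The same effect can be seen in a one-dimensional toy problem, which is only an analogy and not the experiment of Table 10. The sketch assumes a hypothetical non-convex loss \((x^2-1)^2 + 0.3x\) with two local minima of different quality, and runs gradient descent from two nearby starting points.

```python
def toy_loss(x: float) -> float:
    """Hypothetical non-convex loss with two local minima of different depth."""
    return (x * x - 1.0) ** 2 + 0.3 * x


def toy_grad(x: float) -> float:
    """Analytic derivative of toy_loss."""
    return 4.0 * x * (x * x - 1.0) + 0.3


def gradient_descent(x0: float, lr: float = 0.05, steps: int = 500) -> float:
    """Run plain gradient descent from x0 and return the final point."""
    x = x0
    for _ in range(steps):
        x -= lr * toy_grad(x)
    return x


# Two initial conditions that differ by a small perturbation.
x_left = gradient_descent(0.0)
x_right = gradient_descent(0.2)

print(x_left, toy_loss(x_left))    # converges to the deeper minimum (x < 0)
print(x_right, toy_loss(x_right))  # converges to the shallower minimum (x > 0)
```

Although the starting points differ only slightly, they fall on opposite sides of a local maximum and end in minima with clearly different loss values, which is the kind of sensitivity that motivates learning the initial condition.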

Copyright information

© 2020 Springer Nature Switzerland AG

Cite this paper

Li, D., Hospedales, T. (2020). Online Meta-learning for Multi-source and Semi-supervised Domain Adaptation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science, vol 12361. Springer, Cham. https://doi.org/10.1007/978-3-030-58517-4_23

DOI: https://doi.org/10.1007/978-3-030-58517-4_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58516-7

Online ISBN: 978-3-030-58517-4

eBook Packages: Computer Science (R0)