Abstract

Human fall detection systems are an important part of assistive technology, since many people in today’s aging population require assistance with daily living. Falls are the main obstacle to independent living for elderly people and a major health concern in an aging population. Numerous studies have used a variety of sensors to develop systems that accurately distinguish unintentional human falls from other activities of daily life. The three basic approaches used to develop human fall detection systems are wearable devices, ambient based devices, and non-invasive vision based devices using live cameras. This study reviews the techniques employed to devise systems that detect unintentional falls and classifies them according to the approach and the sensors used.



Keywords

1 Introduction

Assistive technology is an emerging research area, since many people in today’s aging populations, including disabled, overweight, obese and elderly people, require assistance with daily living. The main purpose of assistive technology is to provide better living and health care to those in need, especially elderly people who live alone. It mainly aims to allow them to live independently in their own homes for as long as possible without having to change their lifestyle. To provide better living for them, it is important to have continuous monitoring systems in their homes that inform health care representatives of any emergency. Among such monitoring systems, fall detection systems are attracting increasing interest, since statistics [1, 2] have shown that falls are the main cause of injury-related death for seniors aged 79 [3, 4] or above and the second most common source of injury-related (unintentional) death for all ages [5, 6]. Furthermore, falls are the biggest threat among all incidents to the elderly and to people who need support [3, 7,8,9,10,11,12,13,14,15,16]. Falls can have severe consequences for elderly people, especially if not attended to within a short period of time [17], and unintentional falls are the main source of morbidity and mortality among the elderly [18]. Accurate human fall detection systems are therefore very important to support independent living. The medical consequences of a fall have been shown to depend strongly on the response and rescue time of the medical staff [19, 20], and a fast response is only possible with accurate and reliable fall detection systems that provide fall alerts. Such systems are also vitally important because a person may lose consciousness or be unable to call for help after a fall. Additionally, the likelihood of recovering from a fall has been shown to decrease the longer the person remains unattended [21]. Highly accurate fall detection systems can therefore significantly improve the lives of elderly people and enhance general health care services.

Many studies have been conducted in this area to develop systems and algorithms that enhance the functional ability of the elderly and of patients [19]. This has led to improvements in the technologies used to develop such systems and has raised the detection rate enough to make the systems adaptable and acceptable. Recent research on human fall monitoring for elderly people has been categorized into wearable sensor based, wireless based, ambience sensor based, vision based, and floor sensor/electric field sensor based approaches to distinguish the different fall detection methods employed [22]. This categorization reflects the characteristics of the movement that leads to a fall. It is therefore important to recognize those characteristics of movement in order to understand the existing fall detection algorithms and to devise new algorithms that enhance the performance of such systems.

The various methods used to detect human falls, such as using a camera to identify a fall posture or using various sensors to detect a fall, share some common features. Based on this analysis, the fall detection methods that rely on various sensors have been divided into three main approaches [23], each of which is further divided into sub-categories depending on the sensor and algorithm used. The three basic approaches are wearable based devices, camera based systems and ambience based devices [19, 23, 24], as shown in Fig. 1.

As shown in Fig. 1, wearable based devices are further divided into two sub-categories according to the fall detection method used: inactivity (motion based) approaches and posture based approaches. Similarly, ambient/fusion based devices are divided into two types: those that use floor sensors or electric field sensors, and those that use posture based sensors to detect falls. The camera or vision based approach is divided into four sub-categories.

Wearable based devices use accelerometers and gyroscopes embedded in garments or in wearable gadgets such as belts, wrist watches, necklaces or jackets. They classify a human fall either by identifying posture or by detecting activity/inactivity. Ambient/fusion based devices are a non-invasive, non-vision based approach. They either identify posture through various sensors or use floor vibration or electric field sensors to detect the subject hitting the sensor. Vision based (non-invasive) approaches, on the other hand, use one or more live cameras to detect human falls through analytical and machine learning methods built on a computer vision model. They classify falls using changes in body shape between frames, activity/inactivity detection of the subject, three-dimensional (3D) analysis of the subject using more than one camera, or a depth map of the scene generated with the help of depth sensors. Except for the depth sensor based method, vision based approaches use RGB (Red Green Blue) cameras and are therefore subject to rejection by users because of privacy concerns. They are also rejected because of the high cost of the systems and because of installation and camera calibration issues. A depth image-based approach could solve the privacy issues arising from video based systems. Studies representing this approach can be divided into three categories. The first comprises works that use joint measurements or human joint movements derived from depth information to detect falls. The second comprises works that rely only on depth data, with supervised or unsupervised machine learning, to classify human falls from other activities of daily life. The third comprises studies that use wearable devices along with the depth sensor.

This study presents a review of these fall detection techniques. The following sections review the classification of daily life activities and the different approaches used to identify unintentional human falls.

2 Common Daily Life Activities and Their Associated Falls

Classification of human activities is an important research topic in computer vision and rehabilitation. It is increasingly used in applications including intelligent surveillance, quality of life devices (such as health monitoring) for elderly people, content-based video retrieval and human-computer interaction [25,26,27,28]. Although many studies have addressed automatic recognition of human activities, it remains a challenging problem. Many approaches are used to identify how a human moves in the scene, including tracking of movements, body posture estimation, space-time shape templates and overall pattern of appearance [29,30,31,32,33,34].

Identifying the characteristics of daily life activities is important for classifying them, especially for fall detection. These characteristics reveal the unique features of, and the dissimilarities between, activities, which in turn helps to distinguish them. They are derived from the height change pattern, the rate of change of velocity, the deviation of the height, and the position of the subject during and after the movement. The pattern of change refers to the variation of the subject’s height with respect to the floor during an activity, that is, the subject’s height in successive frames, and the rate of change is the change in the subject’s velocity over that period. The deviation of height is the statistical standard deviation of that height pattern. In some cases acceleration helps to identify the difference where the change in velocity does not clearly show the variation in speed during the movement.
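As a rough illustration of how these characteristics could be computed, the following Python sketch derives them from a sequence of per-frame subject heights. It is not taken from any of the reviewed systems: the function name `height_features`, the dictionary keys, and the assumption that heights are given in metres at a fixed frame rate are all hypothetical.

```python
import numpy as np

def height_features(heights, fps):
    """Summarise a per-frame height track (assumed metres, fixed frame rate)."""
    h = np.asarray(heights, dtype=float)
    dt = 1.0 / fps
    # First derivative of height: vertical velocity in m/s (negative = downward).
    velocity = np.gradient(h, dt)
    # Second derivative: rate of change of velocity in m/s^2.
    acceleration = np.gradient(velocity, dt)
    return {
        "height_change": h[-1] - h[0],               # net change over the activity
        "peak_downward_velocity": velocity.min(),    # most negative velocity
        "peak_abs_acceleration": np.abs(acceleration).max(),
        "height_std": h.std(),                       # deviation of the height pattern
    }
```

Under these assumptions, a fall would be expected to show a large negative height change together with a large peak downward velocity, whereas sitting or lying down intentionally would show a similar height change at a lower velocity.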

Generally, a human fall is an unintentional or involuntary event that causes a person to come to rest on the ground or on another lower-level object [35]. Falls have varying definitions and may be caused by intrinsic or extrinsic factors. Intrinsic factors are physiological, while extrinsic factors arise from the environment or other hazards. Tinetti et al. defined a fall in the non-hospitalized geriatric population as “an event which results in a person coming to rest unintentionally on the ground or lower level, not as a result of a major intrinsic event (such as stroke) or overwhelming hazard.” [36]. Adapting this definition, a fall in inpatient, acute and long-term care is described as “unintentionally coming to rest on the ground, floor, or other lower level, but not as a result of syncope or overwhelming external force.” [37]. This study therefore uses human fall and unintentional fall interchangeably to refer to any such unintended descent to the floor or onto any object, with or without injury, whatever the cause (intrinsic or extrinsic).

Some of the activities of daily life that are closely related to human falls are shown in Table 1, along with the characteristics considered in classifying the events. The characteristics of each activity are divided into static and dynamic components. The dynamic component describes the change in the subject’s height and the rate of change of velocity during the activity. The static component describes the position of the subject at the end of the activity in terms of joint angles and joint positions relative to other joints.

Falls can be divided into types according to the characteristics of the movements that cause them. Yu divided falls into four types [24]: falls from sleeping (bed), falls from sitting (on a chair, the floor or any object), falls from walking or standing on the floor, and falls from standing on a support such as a ladder. The four types share some common characteristics despite their significant differences [19]. This study is concerned with the first three types, because the last type is uncommon among elderly people and normally occurs among workers or people doing household jobs. The main challenge in developing systems that distinguish falls from other human activities is that normal daily activities, such as crouching and lying on the floor, have fall-like characteristics.

The different approaches and methods of fall detection share a similar general framework [24]. Some methods use a single sensor indicator with a threshold, while others use complicated algorithms and image processing to detect falls. The distinguishing factors among them are the sensors used, the number of sensors and the detection algorithms. For example, data acquisition can range from a single sensor, to multiple sensors sensing one indicator, to several cameras working together to collect data [24]. The frameworks followed by the three basic types of fall detection also differ in architectural design and in the communication between components.

3 Overview of Fall Detection Approaches

Most previous academic research on fall detection has been based on wearable devices with embedded sensors that identify the posture and motion of the body. The sensors are either placed on garments or take the form of wearable devices. Different types of sensors have been used to build such devices, and hence there is a variety of methods to detect falls.

Among them, the accelerometer is the most extensively used sensor for detecting human falls. Such devices measure the acceleration of the body to identify any potential fall activity. Other works determine falls from human posture and its movements: body orientation, as posture, has been used to detect falls using either posture sensors or multiple accelerometers [38, 39]. Combining accelerometer and gyroscope data has also been shown to determine falls more accurately than a single sensor. The accelerometer provides kinetic force, while the gyroscope helps to estimate the current posture [40]. Combining the two sensors can also help to identify a false measurement from either sensor.

Wearable based devices rely on the unique motion pattern of a fall to distinguish it from other activities of daily life. Such devices are therefore prone to false alarms triggered by irregular motions during daily activities [24, 41]. The sensor position and the data fusion technique used also greatly affect the performance of such systems [42, 43]. It is also very likely that users, especially elderly people, who are likely to have weaker memory, forget to wear the device during their daily activities [44, 45]. Moreover, such devices are often rejected by the elderly because the devices or garments are difficult to wear. Despite these issues, they are popular because they are cheap, readily available, and easy to set up and operate.

The ambient based methods shown in Fig. 1 use non-invasive sensors to build fall detection systems. This approach usually uses an array of sensors to identify falls through pressure sensing, vibration data, IR sensors or a single far-field microphone [46,47,48,49,50]. Like the wearable based approach, it has several disadvantages. It is mostly based on pressure sensors, which register the weight of every object and thus generate many false alarms. Unlike wearable devices, it causes little disturbance to users, and some devices, such as floor vibration based devices, are fully non-invasive. Like wearable devices, it is very cost effective and does not require high installation costs [46, 48].

Overall, most wearable sensor based devices, especially accelerometer based ones, cannot distinguish a fall from sitting. They are also very often rejected by the wearer because of the difficulty and discomfort of wearing such belts or garments. Ambient based devices are prone to generating many false alarms, mainly because pressure sensors measure the pressure of everything.

Vision based devices, on the other hand, are fully non-invasive and use computer vision to identify the human in the scene and detect falls. They use image processing to segment the human subject from the scene and employ mathematical models to classify human activities. As a result, they identify human falls more accurately than the other two approaches. However, such systems are rejected by users because of privacy concerns, and recording users’ daily life activities can have psychological effects on them. The following sub-sections briefly review previous studies from the three basic approaches used to develop human fall detection systems.

3.1 Wearable Based Techniques

The basic approaches used to develop fall detection systems with wearable sensors are discussed in the following sub-sections.

3.1.1 Accelerometer Based Devices

Among wearable devices, body posture and motion recognition using an accelerometer is the most extensively used method for detecting a fall. The acceleration of the body is measured at the position where the sensor is placed, and this accelerometric data is then used to detect any potential fall activity. Acceleration data from triaxial accelerometers worn on the waist, wrist and head have been used in many studies to monitor for fall events [51,52,53,54]. Some studies applied machine learning, such as the Hidden Markov Model (HMM) [55] and the Support Vector Machine (SVM) [56, 57], to the accelerometer data. One study used the discrete wavelet transform (DWT) to process data collected from an accelerometer placed at the shoulder of a jacket [58], while another used a personal server to control the data acquired from multiple biomedical sensors to detect fall events [59]. Smartphones have also been used to take advantage of their integrated sensors for fall detection [60, 61]. Kangas et al. presented a study to determine simple thresholds for accelerometry based parameters for fall detection [62].
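A simple threshold detector of the kind mentioned above can be sketched as follows. This is an illustrative sketch only: it assumes triaxial samples expressed in units of g, and the threshold of 2.5 g and the function names are hypothetical rather than values or identifiers from the cited studies.

```python
import math

def signal_vector_magnitude(ax, ay, az):
    """Magnitude of a triaxial acceleration sample (same unit as the inputs)."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def detect_impact(samples, threshold_g=2.5):
    """Return the index of the first sample exceeding the threshold, else None.

    samples: iterable of (ax, ay, az) tuples, assumed to be in g.
    threshold_g: hypothetical impact threshold, not taken from the literature.
    """
    for index, (ax, ay, az) in enumerate(samples):
        if signal_vector_magnitude(ax, ay, az) > threshold_g:
            return index
    return None
```

A detector this simple responds to any sufficiently strong impact, which is consistent with the false alarm problem noted for wearable devices; practical systems typically add further checks such as end posture or post-impact inactivity.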

Some studies used vertical velocity thresholding [63] or vertical acceleration from piezoelectric accelerometers [64, 65] to detect human fall events. Acceleration and angular velocities have also been used to differentiate real fall events from normal daily life activities [66, 67]. Another study presented a wireless sensor node with triaxial accelerometers that measures body acceleration to monitor daily activities [68]. Sensor network based fall detection has also been used to monitor the user with complete privacy and security [69]. Chen et al. created a wireless low-power sensor network of small, non-invasive motes (sensor nodes) for fall detection [70].

Changes in body motion and body position have also been used for fall detection [71, 72]. The rapid impact and body orientation based method proposed by Zheng et al. uses a two-stage fall detection algorithm that can locate the wearer and send an alarm [73]. Changes in body orientation have also been used to identify negative acceleration for fall detection [74]. Another study described the use of the IoT with an accelerometer sensor node whose data is transmitted to a fog layer [75]. The IoT is increasingly used with the development of new techniques and smart sensors [20].

3.1.2 Posture Sensor Based Devices

Some works determine falls from the posture of the subject or from posture movements. Body orientation, as posture, is used to detect falls using either posture sensors or multiple accelerometers. Combinations of tri-axial accelerometers and gyroscopes have also been used to identify human behavior and the posture of the subject; for example, Baek et al. used the two sensors in a necklace sensor node for fall detection [76]. Two studies employed sets of sensors to identify the movement and posture of the subject for fall detection [38, 77]. Kaluza et al. presented a posture reconstruction approach to fall detection that locates wireless tags placed on body parts (sewn on clothes) such as the hips, ankles, knees, wrists, elbows and shoulders. This fall detection algorithm uses acceleration thresholds along with velocity profiles, with acceleration derived from the movements of the tags [39].

Another body posture based fall detector, proposed by Sudarchan et al., used a triaxial accelerometer placed on the lumbar region to study the tilt angle. They used the changes in acceleration along the three axes to find the body posture [78]. Kangas et al. used a waist-worn tri-axial accelerometer, transceiver and microcontroller to develop a new fall detector prototype based on fall-associated impact and end posture [79]. Another study used a multi-level data fusion framework on multiple sensor nodes [80].
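To make the idea of a tilt angle concrete, the sketch below estimates it from a single triaxial accelerometer sample. It assumes that the sensor is nearly static, so that it measures mainly gravity, and that its z-axis is aligned with the trunk when the wearer stands upright. The 60 degree posture threshold and the function names are hypothetical and are not taken from [78] or [79].

```python
import math

def tilt_angle_deg(ax, ay, az):
    """Angle in degrees between the sensor z-axis and the measured acceleration."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("zero acceleration vector")
    # Clamp to the valid acos domain to guard against rounding errors.
    cos_tilt = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_tilt))

def posture_from_tilt(angle_deg, lying_threshold_deg=60.0):
    """Coarse end-posture label; the threshold is an assumed value."""
    return "lying" if angle_deg > lying_threshold_deg else "upright"
```

With these assumptions, a reading dominated by the z-axis gives a tilt near 0 degrees (upright), while a reading dominated by a horizontal axis gives a tilt near 90 degrees (lying).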

3.1.3 Accelerometer and Gyroscope Based Devices

Combining accelerometer and gyroscope data has also been shown to determine falls more accurately than either sensor alone. As discussed, the accelerometer provides kinetic force while the gyroscope helps to estimate the current posture, and combining the two sensors can help to identify a false measurement from either one. Hwang et al. used this concept to propose a real-time ambulatory monitoring system for human fall detection [81]. Nyan et al. also used a 3D accelerometer and a 2D gyroscope worn on the thigh, based on a Body Area Network (BAN), to prevent fall-related injuries by inflating an airbag for hip protection before the impact. The system is based on the concept that the thigh segments do not exceed a certain threshold angle to the side or forward in normal daily activities, except during a fall event [40]. Another study presented a fall detection system that uses the same two sensors to recognize four kinds of static posture: standing, bending, sitting and lying. Motions between these static postures are considered dynamic transitions, and if the transition before a lying posture is not intentional, a fall is detected [82].
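The posture transition logic described for [82] can be illustrated with the sketch below. The source does not state how an unintentional transition is recognized, so the rule used here (both the peak acceleration and the peak angular velocity during the transition exceed a limit) is an assumption, as are the `Transition` type, the limit values and the function name.

```python
from dataclasses import dataclass

@dataclass
class Transition:
    before: str            # static posture before the transition
    after: str             # static posture after the transition
    peak_accel_g: float    # peak acceleration magnitude during the transition
    peak_gyro_dps: float   # peak angular velocity magnitude, degrees per second

def is_fall(transition, accel_limit_g=3.0, gyro_limit_dps=200.0):
    """Flag a transition into lying as a fall if it looks unintentional.

    Both limits are hypothetical; the rule itself is an assumed stand-in
    for whatever intentionality test a real system would use.
    """
    if transition.after != "lying":
        return False
    return (transition.peak_accel_g > accel_limit_g
            and transition.peak_gyro_dps > gyro_limit_dps)
```

Requiring both sensors to agree reflects the point made above that combining the two sensors can help to reject a false measurement from either one.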

Using the same two sensors, Dinh et al. presented a physical activity monitoring system. The wearable device detects physical activities using a 3-axial accelerometer, a 2-axial gyroscope and a heartbeat detection circuit [83]. Bourke et al., on the other hand, used a bi-axial gyroscope sensor mounted on the trunk to differentiate falls from normal daily activities. A fall was determined from measurements of pitch and roll angular velocities with a threshold-based algorithm [84].

In the studies discussed above, a fall is distinguished from other normal daily life activities using its unique pattern of motion. These systems are therefore prone to false alarms triggered by similar movements, such as lying down on the floor from standing. Moreover, they are often rejected by the elderly because the devices or garments are difficult to wear. Nevertheless, they have the advantages of being cheap and easy to set up and operate.

3.2 Ambient Based Techniques

This approach usually uses an array of sensors to identify falls through pressure sensing, vibration data, IR sensors or a single far-field microphone. It makes use of ambient signals, including vision, audio and floor vibration, caused by any potential fall activity [85].

One study used an array of vibration sensors on the floor to identify falls by analyzing location data [46]. The method is based on the premise that a human fall always causes a vibration pattern on the floor, and that this pattern differs significantly both from the vibration of normal daily activities and from the vibration generated by objects falling on the floor. Litvak et al. based their method on floor vibration and acoustic sensing. Detection relies on vibration and sound signals from an accelerometer and a microphone, processed with advanced techniques. The proposed system detects falls with high accuracy at distances of up to 5 m, and it is adaptive in that it can be calibrated to any kind of floor and room acoustics. It is also unaffected by ambient noise, because the algorithm must detect a vibration event in the first stage. Results on the test database showed a sensitivity of 95% and a specificity of 95% [86].
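For reference, sensitivity and specificity follow the standard definitions computed from a confusion matrix, as in the sketch below. The counts used in the usage note are hypothetical and are not the data reported in [86].

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual falls that were detected."""
    return true_positives / (true_positives + false_negatives)

def specificity(true_negatives, false_positives):
    """Fraction of non-fall events that were correctly not flagged."""
    return true_negatives / (true_negatives + false_positives)
```

For example, a hypothetical test set with 100 falls of which 95 are detected, and 100 non-fall events of which 5 raise a false alarm, would give a sensitivity of 0.95 and a specificity of 0.95.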

A pressure sensor has also been used to design a bed exit detection apparatus that uses bladders or other fluid-carrying devices in fluid communication. It is essentially a patient presence detection system that alerts the caregiver to both the presence and the absence of the patient on the patient-carrying surface, and in particular to whether the patient is sitting upright, leaning, falling forward or falling sideways [87].

Another non-invasive sensor based approach uses IR sensors to locate and track a thermal target in the sensor’s field of view; for example, Sixsmith et al. proposed a system based on wall-mounted pyroelectric IR sensors for detecting falls [47, 48]. A wireless sensor network with an array of sensors, event detection modalities and distributed processing for smart home monitoring has also been used for fall detection [49].

The audio signal from a single far-field microphone has also been used to detect human falls in the home environment and to distinguish them from competing noise. One study modeled each fall or noise segment using a GMM super-vector to distinguish it from background noise, and classified the audio segments into falls and other types of noise using an SVM built on a GMM super-vector kernel [50]. Another study used three radar signal variables to detect falls along with an unsupervised multi-linear feature extraction method [88]. The use of WiFi channel state information is also an emerging non-invasive approach for fall detection. It has the advantage of being ubiquitous and low cost compared to the radar based approach [89].

Like wearable devices, the ambient approach has several disadvantages. It is mostly based on pressure sensors, which register the weight of every object and thus generate many false alarms. Unlike wearable based devices, it causes little disturbance to users. Like wearable based devices, it is very cost effective and does not require high installation costs.

3.3 Vision Based Techniques

Vision or camera based devices are increasingly used because of their multiple advantages over wearable and other non-invasive sensor based devices. These include the ability of cameras to detect multiple events simultaneously and relief from the difficulties of wearing devices such as garments for fall detection. Most importantly, the recorded video can be used for verification after a fall event. Although the vision based approach has the disadvantage of not preserving users’ privacy, it is very commonly employed in research. Selected previous works on normal color camera or vision based devices are briefly reviewed in the following sub-sections.

3.3.1 Inactivity Approach

In this approach, a fall is detected from a period of inactivity on the floor. A camera or motion detector tracks the person to obtain motion traces, from which a fall is determined. The orientation change of the body is used to detect inactivity, and if inactivity occurs in a certain context, a fall is detected [90].

One study demonstrated the usefulness of unusual inactivity detection as an indication of a fall. The proposed method identifies inactivity outside the usual zones of inactivity, such as chairs or beds. Combining this with body pose and motion information provides important cues for fall detection [91].
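The notion of unusual inactivity can be sketched as a check for a tracked person who stays almost still outside the usual inactivity zones for longer than some duration. The sketch below is not the method of [91]: the rectangular zone representation, the 10 second duration, the 0.05 unit movement tolerance and the function names are all assumptions made for illustration.

```python
import math

def in_any_zone(point, zones):
    """True if point (x, y) lies inside any axis-aligned zone (x0, y0, x1, y1)."""
    x, y = point
    return any(x0 <= x <= x1 and y0 <= y <= y1 for (x0, y0, x1, y1) in zones)

def unusual_inactivity(track, zones, fps, min_seconds=10.0, max_move=0.05):
    """Detect a long still period outside the usual inactivity zones.

    track: per-frame (x, y) floor positions of the tracked person.
    zones: usual inactivity zones such as beds or chairs.
    min_seconds, max_move: hypothetical duration and movement tolerance.
    """
    frames_needed = int(min_seconds * fps)
    still_frames = 0
    for previous, current in zip(track, track[1:]):
        is_still = math.dist(previous, current) <= max_move
        if is_still and not in_any_zone(current, zones):
            still_frames += 1
            if still_frames >= frames_needed:
                return True
        else:
            still_frames = 0  # movement or a usual zone resets the counter
    return False
```

A person resting on a bed inside a declared zone would not trigger the check, whereas the same stillness in the middle of the floor would.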

3.3.2 Shape Change Approach

The main premise of this approach is that the shape of a person changes from standing to lying if a fall occurs. One study used Hidden Markov Model (HMM) based fall detection in which one HMM used video features to differentiate falling from walking and a second HMM used audio features to differentiate a falling sound from talking [92]. Another HMM-based algorithm used multiple features extracted from the silhouette: the height of the bounding box, the magnitude of the motion vector, the determinant of the covariance matrix and the width-to-height ratio of the person’s bounding box [93]. Thome and Miguet proposed a robust Hierarchical Hidden Markov Model (HHMM) based algorithm to detect falls, in which the HHMM models the motion. Many improvements are possible, including automating the rectification process using the Hough transform to detect sets of parallel lines and compute the orthogonal vanishing points [94].

Another method, presented by Auvinet et al., detects falls on the floor using multiple cameras and evaluates the variation of the person’s height. The advantages of the multi-camera system are a larger common field of view and the ability to handle an occlusion in one camera using another camera [95]. An alternative method uses the 3D shape of the body extracted from multiple cameras to detect falls. This multi-camera vision system for detecting and tracking people warps people’s silhouettes to exchange visual information between overlapping cameras when camera handover occurs [96].

A single wide-angle camera based method used the angle between the projected gravity vector and the line from the feet to the head of the person, together with the normalized size of the upper body, for fall detection [97]. Another study used a rule based algorithm with an omni-camera and context information; a fall was determined from the width-to-height ratio of the bounding box of the body in the image [98, 99]. One study demonstrated a machine learning framework for fall detection and daily activity classification using acceleration and angular velocity data from two public datasets. It tested the performance of an artificial neural network (ANN), K-nearest neighbors (KNN), a quadratic support vector machine (QSVM) and an ensemble bagged tree (EBT) in recognizing activities such as falling, walking, walking upstairs, walking downstairs, sitting, standing and lying [100].
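The bounding box ratio rule mentioned above can be sketched on a binary silhouette mask as follows. This is a minimal illustration, not the algorithm of [98, 99]: it assumes that a foreground mask of the person is already available and uses a hypothetical ratio threshold of 1.0, and the function names are invented for the example.

```python
import numpy as np

def bounding_box(mask):
    """Return (x, y, width, height) of the foreground pixels, or None if empty."""
    mask = np.asarray(mask, dtype=bool)
    rows = np.flatnonzero(mask.any(axis=1))  # image rows containing foreground
    cols = np.flatnonzero(mask.any(axis=0))  # image columns containing foreground
    if rows.size == 0:
        return None
    x, y = int(cols[0]), int(rows[0])
    return x, y, int(cols[-1]) - x + 1, int(rows[-1]) - y + 1

def looks_fallen(mask, ratio_threshold=1.0):
    """True if the silhouette is wider than tall by more than the assumed ratio."""
    box = bounding_box(mask)
    if box is None:
        return False
    _, _, width, height = box
    return width / height > ratio_threshold
```

A standing silhouette is taller than it is wide and gives a ratio below 1, while a lying silhouette gives a ratio above 1. The rule depends on the camera viewpoint, since a person falling along the optical axis may not appear wider than tall.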

3.3.3 3D Head Motion Analysis

The principle behind this approach is that during a human fall, vertical motion is faster than horizontal motion. Rougier et al. developed a fall detector using monocular 3D head tracking. The method is based on the Motion History Image (MHI) and changes in human shape, and relies on the fact that the motion is large when a fall occurs. The system therefore first detects a large motion and, if one is detected, analyzes the shape of the person in the video sequence. This stage uses the observation that during a fall the human shape changes, and that at the end of the fall the person usually lies on the floor for a few seconds with little body movement [101].
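A common formulation of the Motion History Image sets each pixel where motion is detected to a maximum value τ and lets every other pixel decay by one per frame down to zero. The sketch below implements that formulation with simple frame differencing. It is not necessarily the variant used in [101], and the default values of `tau` and `diff_threshold`, as well as the `motion_amount` summary, are assumptions.

```python
import numpy as np

def update_mhi(mhi, prev_frame, frame, tau=30.0, diff_threshold=20.0):
    """Return the updated Motion History Image for one new grayscale frame."""
    # Pixels whose intensity changed by more than diff_threshold count as motion.
    diff = np.abs(frame.astype(float) - prev_frame.astype(float))
    moving = diff > diff_threshold
    # Moving pixels are reset to tau; all others decay by one step, floored at 0.
    return np.where(moving, tau, np.maximum(mhi - 1.0, 0.0))

def motion_amount(mhi, tau=30.0):
    """Mean MHI value normalised to [0, 1]; larger means more recent motion."""
    return float(mhi.mean() / tau)
```

In a pipeline of the kind described above, a large value of such a motion measure would trigger the second stage, in which the shape of the person is analyzed.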

The vision based approach is the most reliable technique for fall detection compared with the other approaches [96, 99, 101]. Comparing the individual sub-categories, inactivity detection is simple in terms of processing and hence less reliable. Shape detection algorithms are more reliable, because body shape gives more accurate information about a fall than head detection. 3D body shape detection uses more cameras and requires complex computing. The recent trend in fall detection is the use of depth sensors for human detection and movement recognition. The three techniques discussed under the vision based approach, namely inactivity detection, shape change and 3D head motion analysis, could be implemented with a single depth sensor.

3.4 Review of Depth Sensor Based Approach

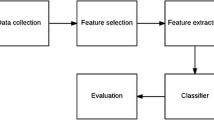

The use of depth sensors for fall detection can be further divided into three sub-categories depending on the approach employed to devise the systems, as illustrated in Fig. 2. The only differences between them are the fall detection algorithm employed and the method used to generate the potential fall alert that starts the fall confirmation process. The first category represents works that employed joint measurements or human joint movements from depth information to detect falls. The second category includes those that depended only on depth data with supervised or unsupervised machine learning to classify human falls from other activities of daily life. Works that use an inertial (wearable) device along with the depth sensor and employ either machine learning or joint data for the classification of human activities are discussed under the third category in Sect. 3.4.3.

The first and second approaches (shown in Fig. 2) use only depth maps generated from the sensor, while the third approach uses a wearable device along with the depth data to improve the accuracy and cover areas not visible to the depth sensor. From the study, it was found that the only option to fully utilize the benefits of being non-invasive and to gain the confidence of users is to use only depth images, since the use of wearable devices along with a depth sensor violates the concept of non-invasiveness. This also makes such systems subject to rejection by users due to the difficulty of wearing the devices and the other drawbacks of wearable devices.

The techniques employed in works that used only depth images (the first and second approaches in Fig. 2) to classify human activities are based on joint measurement and movement for fall detection. The first sub-category of the first approach (depth data) covers works that used fixed thresholding on the skeleton data or on extracted human joints to detect falls. The second and third sub-categories of this approach represent works that used an adaptive threshold and a combination of fixed and adaptive thresholds, respectively.

The third approach shown in Fig. 2, which used a wearable device along with the depth images, is also classified into two sub-categories. The first represents works that used joint measurement methods on the skeleton data or extracted human joints and a classifier on the accelerometer data. The second includes those that used machine learning on the accelerometer data and/or on the depth images for the classification of human falls.

3.4.1 Joint Measurement and Movements Based Approaches

This section discusses works that based their systems only on depth information, including those that used skeleton data from the depth sensor and those that used mathematical models and algorithms to detect falls by either extracting the human subject or tracking movements. Most of the studies used the distance of key joints and velocity for fall detection. Rougier et al. used the human centroid height relative to the ground and the body velocity. They also dealt with occlusions, which was a weakness of previous works, and reported good detection results with an overall success rate of 98.7% [102]. Another study used distance and velocity as two separate algorithms [103]. The distance and y-coordinate of joints were also used as two algorithms to detect falls, where a fall is detected by thresholding the distance between these joints and the floor, if the floor is visible or detected [104]. If the floor plane is not detected, a second algorithm is used which depends on the skeleton coordinate system; it detects a fall if the y-coordinates of the joints mentioned are less than a given threshold.

Another study presented a distance and velocity based algorithm with four main steps, where fall detection is based on the vertical speed and the distance from the ground to the head and the centroid [105]. A distance and angle based method was proposed by Yang et al. The shape of the moving object is characterized by an ellipse which is computed using a set of moment functions. The parameters calculated are the centroids of the ellipses and the angles between the ellipses and the floor plane. A fall is detected when the distance and angle between the ellipses and the floor plane are lower than some threshold [106].

A combination of the skeleton and RGB data from the Kinect sensor was also used: if skeleton data is available, the algorithm uses the vertical velocity and the height of the human center above the floor plane. Otherwise, the motion map from the gray scale images, represented by an improved kernel descriptor, is input to a linear Support Vector Machine (SVM). This fall detection flow switches between skeleton and RGB features depending on their availability [107]. On the other hand, Zhang S. et al. used three velocity features and a head to hip height difference with an adaptive threshold [108].

The velocity of the joint hitting the floor was also used to distinguish a fall accident from sitting or lying down on the floor. An abnormal action is detected if the distance from any of the joints to the floor is smaller than an adaptive threshold and, at the same time, any of the vertical velocities with respect to the floor is larger than a velocity threshold. A fall is detected if the distance between the head and the floor stays lower than a recover threshold, which is also adaptive to the height of the person, for a certain period of time [109].

Only one study used the distance parameter alone for fall detection. They used the distance from the head to the floor plane, which is calculated in every frame, and a fall is indicated if it reaches an adaptive threshold. The centroid height of the human subject is used as a second decision to confirm the fall event. The fall event is confirmed only when the distance from the head to the floor (head height) and the distance from the silhouette centroid to the floor (centroid height) are lower than the thresholds at the same time [110].

Similarly, one study based their fall detection mainly on velocity components. They proposed a two-stage fall detection method. The first stage characterizes the vertical state of the subject in each depth image frame using three features, and then segments on-ground events from the vertical state time series obtained by tracking the subject over time. The second stage confirms that a fall has occurred by using an ensemble of decision trees and a set of five features extracted from each on-ground event [111].

The orientation of the person’s major axis and the height of the spine from the floor were also used to detect fall events. For the computation of the orientation, the head, shoulder center, spine, hip and the mean position of the knees were considered. The 3D ground plane and the distance from the spine to the floor are also calculated. A fall is detected if the major orientation of the person is parallel to the floor and the spine is near the floor [112].

Four studies employed a bounding box based approach for fall detection. In the first study a fall is detected using velocity and inactivity calculations, where the velocity measurement is based on the contraction or expansion of the width, height and depth of the 3D bounding box [113]. The second study used the contraction and expansion speed of the width, height and depth of the 3D human bounding box together with its position in space [114]. The third study introduced an adaptive directional bounding box technique to detect falls. They used four main features to analyze fall events: the Directional Height (DH)/Directional Width (DW) ratio, the center of gravity ratio, the diagonal ratio and the Bounding Box (BB)-Height ratio. They also used a dynamic state machine to encompass both forward and backward tracking [115]. The fourth study used skeleton tracking along with bounding box analysis [116].

A privacy-preserving fall detection method proposed by Gasparrini et al. used raw data directly from the sensor. The data are analyzed, and the system extracts the elements needed to classify all the blobs in the scene. A fall is detected if the depth blob associated with a person is near the floor [117].

A motion based fall detection approach used skeleton data extracted with the Kinect sensor to detect and monitor the person, examining in particular the changes in motion. A fall is detected using the changes in either the Y or Z coordinate of the key frame. A fall from sitting or standing is confirmed if the body motion involves the Y or Z coordinate [118]. Another similar approach employed the torso angle, the centroid height and their motion characteristics for human fall representation. These parameters were used to create a human torso motion model (HTMM), which is a threshold based approach for fall detection. The 3D positions of the hip center and shoulder center joints in the depth images are used to build the proposed model. The subject’s torso angle and the centroid height are the key features in the HTMM. Four thresholds are used: the first is for the torso angle and starts the detection, the second is for the rate of change of the torso angle, the third is for the velocity of the centroid height and the fourth is for the tracking time after the torso angle exceeds the first threshold. When a person is detected, the positions of the joints are extracted and the torso angle is calculated. When this angle is greater than the given threshold, the rates of change of the torso angle and the centroid height are recorded frame by frame for a given period of time, and a fall is detected when these rates reach their thresholds [119].
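As a rough illustration of the torso-angle feature described above, the following sketch computes the angle between the hip-center to shoulder-center vector and the vertical axis, and a frame-to-frame rate of change. It assumes a coordinate system whose y-axis points up; the function names are hypothetical, and no threshold values are implied because the text does not state them.

```python
import math


def torso_angle_deg(hip_center, shoulder_center):
    """Angle in degrees between the torso vector and the vertical (y) axis.

    Assumption: joints are (x, y, z) tuples in metres with y pointing up.
    """
    dx = shoulder_center[0] - hip_center[0]
    dy = shoulder_center[1] - hip_center[1]
    dz = shoulder_center[2] - hip_center[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("hip and shoulder joints coincide")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dy / length))
    return math.degrees(math.acos(cos_angle))


def rate_of_change(previous_value, current_value, frame_period_s):
    """Finite-difference rate of change between two consecutive frames."""
    return (current_value - previous_value) / frame_period_s
```

The same `rate_of_change` helper can be applied to the torso angle and to the centroid height, which are the two quantities the model tracks once the first threshold is exceeded.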

3.4.2 Classifier Based Approaches

Studies in this category employed some sort of machine learning classification, either directly on the depth data or on the 3D skeleton joint positions from the Kinect sensor. Alazrai et al. presented a new approach for fall detection using 3D skeleton joint positions from the Kinect sensor. This data is used to build a view-invariant descriptor for human activity representation, the motion-pose geometric descriptor (MPGD). The MPGD comprises a motion profile and a pose profile, which allow the semantic context of human movements to be captured from video sequences. Fall detection is first expressed as a posterior-maximization problem, where the posterior probability is estimated using a multi-class SVM classifier [120].

A combination of fall characterization using a shape based approach and a machine learning classifier to distinguish human falls from other activities was proposed by Ma et al. First, the human silhouette is extracted from the depth images, using an adaptive Gaussian Mixture Model (GMM) to segment the person from the background. The second step involves finding the features of the detected subject [121]. Another study used a deep learning classifier for human fall detection based on infra-red depth sensor measurements with feature selection and Non-Linear Principal Component Analysis (NPCA) [122]. An acoustic based fall detection system using the Kinect sensor with Minimum Variance Distortionless Response (MVDR) adaptive beamforming reduced the false alarm ratio by 80% compared to the case where no depth sensor is used [123].

Another study accomplished fall detection using only depth images, with a classifier trained on features representing the extracted person both in the depth images and in the point cloud [124]. The features extracted are the same as those used in [125].

The only study that based fall detection on a statistical method used the way the person moved during the last few frames for the classification. They used statistical decision making as opposed to the hard-coded decision making used in related works. The duration of the fall in frames, the total head drop (change of head height), the maximum speed (largest drop in head height), the smallest head height and the fraction of frames in which the head height dropped are considered for fall detection [126].
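The five quantities listed above can be computed from a short window of head heights. The sketch below is only an illustration under stated assumptions: one head-height value per frame in metres, and hypothetical function and key names. It does not reproduce the statistical decision rule of [126], only the feature extraction step.

```python
def head_drop_features(head_heights_m):
    """Summarise a window of per-frame head heights (metres).

    Assumption: the window contains at least two frames.
    """
    if len(head_heights_m) < 2:
        raise ValueError("need at least two frames")
    # Positive values mean the head moved down between two frames.
    drops = [prev - curr
             for prev, curr in zip(head_heights_m, head_heights_m[1:])]
    return {
        "duration_frames": len(head_heights_m),
        "total_head_drop": head_heights_m[0] - head_heights_m[-1],
        "max_drop_per_frame": max(drops),
        "smallest_head_height": min(head_heights_m),
        "fraction_dropping": sum(1 for d in drops if d > 0) / len(drops),
    }
```

A feature vector of this kind could then be passed to whichever statistical model is chosen; the choice of model is outside the scope of this sketch.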

3.4.3 Wearable Device and Depth Map Based Approaches

This section discusses works that use a wearable device along with a depth sensor for fall detection. The wearable devices are sometimes used to generate a potential fall alert and/or to cover areas not visible to the depth sensor. Either machine learning or changes in human joints from depth information are used to confirm the fall event. Two studies used joint data for fall detection along with a wearable device. The first study used the distance from the centroid of the segmented person to the ground plane with a threshold for fall detection [127]. The second study that employed joint data for fall detection presented three algorithms. The first algorithm uses acceleration data from a wearable device on the wrist and skeleton data from the Kinect sensor, and a fall is detected if three conditions are fulfilled. The second algorithm uses the same parameters and concept, except that the accelerometer is placed on the waist. The third algorithm uses the variation of the skeleton joints, the distance of the spine_base joint from the floor and the magnitude of the waist accelerometer signal. A fall is detected if an acceleration peak greater than 3 g is observed within 2 s after the distance of the joints reaches a threshold value of 20 cm [128].

Four studies used classifiers for fall detection along with a wearable device. One study used a wearable accelerometer based device to indicate any potential fall activity and whether the wearer is in motion. After a potential fall indication from the accelerometer, the fall event is authenticated from the depth images using an SVM classifier on the extracted features. The features extracted to confirm a fall are the ratio of height to width of the person’s bounding box (h/w), the ratio of the height of the person’s bounding box in the current frame to the physical height of the person (h/hmax), the distance from the centroid to the floor (D) and the standard deviation from the centroid along the abscissa and the applicate [125]. Similarly, other studies used a k-NN classifier for lying pose detection after a potential fall alert from a wearable device [129, 130].
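A minimal sketch of how the features named above (h/w, h/hmax, D and the spread around the centroid) might be computed from the 3D points of a segmented person. It assumes points in metres with the y-axis pointing up and the floor at y = 0; the function and key names are hypothetical and the sketch is not the feature extractor of [125].

```python
from statistics import pstdev


def depth_features(points, person_height_m):
    """Compute simple shape features from (x, y, z) points of one person.

    Assumptions: metres, y pointing up, floor plane at y = 0.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    zs = [p[2] for p in points]
    height = max(ys) - min(ys)   # bounding-box height h
    width = max(xs) - min(xs)    # bounding-box width w
    if width == 0.0:
        raise ValueError("degenerate bounding box")
    return {
        "h_over_w": height / width,
        "h_over_hmax": height / person_height_m,
        "D": sum(ys) / len(ys),   # centroid height above the floor
        "sigma_x": pstdev(xs),    # spread along the abscissa
        "sigma_z": pstdev(zs),    # spread along the applicate
    }
```

For an upright person h/w is well above 1 and D is roughly half the body height, whereas for a person lying on the floor both values drop sharply, which is what makes these features useful as classifier inputs.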

Another study made the accelerometer optional for indicating a potential fall, and used a Support Vector Machine (SVM) based person finder to confirm the presence of the tracked person and the head location. A cascaded classifier consisting of a lying pose detector and a dynamic transition detector is also executed [131]. Another approach made the accelerometer and the video based approach switchable for different situations, since in cases such as changing clothes or washing it might not be comfortable to use the wearable sensor. In such situations the system relies on the Kinect camera only [132].

A previous work [128] was extended by Cippitelli et al., who presented a fall risk estimation and fall detection tool using a wearable and a vision based sensor. This work aimed to propose an integrated system providing both fall risk assessment and fall detection in an indoor home environment. They also provide a fall risk assessment tool using the Kinect sensor and an accelerometer placed at the chest in the same setup, which can be switched when required. The test is divided into five phases: sit-to-stand, walk, turn, walk and turn-to-sit. During the sit-to-stand phase, the person stands up from the chair to start walking. The parameters evaluated are the maximum inclination of the torso angle and the time required to stand up. During the walk phase, the steps of the person are extracted from both the accelerometer and the Kinect. The turn phase is when the subject turns to walk back to the chair. The parameter extracted in this phase is the time required to perform the action (turn 180°). The parameter evaluated in the walk back phase is the cadence, because the person is not facing the Kinect sensor and therefore the skeleton data is very noisy. In the last phase, turn-to-sit (the subject turns and sits on the chair), the parameter evaluated is the time required for the movement. The time required for the entire fall risk test is also computed [133].

4 Evaluation of Fall Detection Algorithms

The related works discussed in the last section used different fall detection algorithms on depth images, based on either a fixed threshold value or an adaptive threshold to detect falls. Others used a threshold based wearable device to generate a fall alert and then applied a predefined classifier, such as a Support Vector Machine (SVM), to the images to confirm the fall event. The following sub-sections evaluate the different fall detection algorithms employed, together with the thresholds used to classify human falls.

4.1 Fixed Thresholding Based Techniques

For fall detection systems that are based only on depth images, the thresholds are basically the height of the subject from the ground plane, or the centroid height, and the velocity or speed. Combinations of these parameters in different orders are used to detect falls. The height thresholds used in the related works vary from 0.1 to 0.6 m, as the average height (thickness) of a subject detected on the floor by the sensor is approximately 0.4 m. The velocity values considered to bias the decision vary from −1 to 2 m/s. These algorithms do not depend on the thresholds alone to predict a fall event; reliable detection of the joints and the patterns of different activities are also considered. This helps to improve the detection rate and reduce the false alarm ratio.

A study based on skeleton data extracted from the Kinect sensor used two algorithms for fall detection. The first algorithm uses only skeleton position data and determines a fall based on a single frame. The distance from each joint to the floor is computed, and a fall is detected if the maximum distance is lower than a threshold value. The second algorithm calculates the vertical velocity of each joint with respect to the floor plane over many frames. The velocities of all the joints are averaged, and if the average velocity (negative for downward motion) is lower than a threshold of −1 m/s, a fall is detected [103].
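The two rules just described can be sketched as follows. This is a simplified illustration and not the implementation of [103]: the joint-to-floor distances are assumed to be available in metres, the velocity is approximated from the first and last frame of a window, and the 0.4 m height threshold is a hypothetical placeholder because the text does not give the value used.

```python
def fall_by_position(joint_heights_m, height_threshold_m=0.4):
    """Single-frame rule: fall if even the highest joint is below the threshold.

    The default threshold is a placeholder, not a value from the study.
    """
    return max(joint_heights_m) < height_threshold_m


def fall_by_velocity(first_heights_m, last_heights_m, elapsed_s,
                     velocity_threshold_mps=-1.0):
    """Multi-frame rule: fall if the mean vertical joint velocity is below -1 m/s.

    Velocities are negative for downward motion.
    """
    velocities = [(last - first) / elapsed_s
                  for first, last in zip(first_heights_m, last_heights_m)]
    mean_velocity = sum(velocities) / len(velocities)
    return mean_velocity < velocity_threshold_mps
```

The position rule needs only one frame but cannot tell a fall from lying down deliberately, while the velocity rule captures the speed of the descent; this is presumably why the study evaluated both.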

Another study used the human centroid height from the floor plane to detect falls that are not fully occluded. If the activity is completely occluded, the body velocity prior to the occlusion is analyzed to detect a fall. The centroid height is the distance from the 3D centroid to the ground plane, and the body velocity is the centroid displacement over a one second period. The thresholds for the centroid height and velocity were determined from training data consisting of daily activities (with some occluded activities) such as walking, sitting and crouching down. The centroid height (Dtrain) and the body velocity (Vtrain) computed from the data recorded with the Kinect were used to determine the two thresholds from the mean value and standard deviation with a 97.5% confidence interval [102]. The minimum centroid height (\(T_{D_{\text{min}}}\)) and the maximum body velocity (\(T_{V_{\text{max}}}\)) thresholds computed from Eqs. (1) and (2) are 35.8 cm and 0.63 m/s, respectively.

The fall detection algorithm in another study used raw depth data extracted from the sensor, which is preprocessed and segmented to prepare the data for the next steps. The algorithm then identifies, splits and classifies the different clusters of pixels in the frames and identifies the human subject. Once the human subject is identified, it is tracked and its height information is evaluated; a fall is detected if the distance to the floor goes below 0.4 m [117].

The fall detection algorithm proposed in [105] first detects the head position and computes its vertical speed. A fall is detected if the head speed and the head height satisfy the falling condition, or otherwise if the centroid speed and the centroid height satisfy the falling condition. The fixed thresholds for the head to satisfy the falling condition are a vertical velocity higher than 2 m/s and a distance from the head to the ground (height) of less than 0.5 m. For the second condition, the threshold for the centroid speed is 1 m/s and that for the centroid height is 0.5 m.
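The decision rule above reduces to two threshold checks joined by a logical OR. A minimal sketch, assuming that speeds are given as downward magnitudes in m/s and heights in metres (the function and parameter names are hypothetical):

```python
def detect_fall(head_speed_mps, head_height_m,
                centroid_speed_mps, centroid_height_m):
    """Fixed-threshold rule using the values quoted in the text for [105].

    Assumption: speeds are magnitudes of the downward vertical velocity.
    """
    head_condition = head_speed_mps > 2.0 and head_height_m < 0.5
    centroid_condition = centroid_speed_mps > 1.0 and centroid_height_m < 0.5
    return head_condition or centroid_condition
```

Using the centroid as a fallback allows a fall to be detected when the head condition is not met, for example when the head is tracked unreliably.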

In another algorithm, the silhouette of the moving individual in each depth image is obtained by background subtraction, and the floor plane is estimated from the v-disparity map [106]. The shape of the moving object is described by an ellipse which is computed from a set of moment functions. The centroid of the ellipse and the angle between the ellipse and the floor are computed and then converted to real world coordinates. A fall is detected when the distance from the centroid of the human body to the floor and the angle between the ellipse and the floor are below their thresholds. The distance threshold is 0.5 m and the angle threshold is 45°.
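To make the moment-based ellipse fit concrete, the sketch below derives the centroid and major-axis orientation from second-order central moments and applies the 0.5 m and 45° thresholds quoted above. It is a simplification: the silhouette is assumed to be a set of 2D points already expressed in metres in a vertical plane, with the floor at y = 0, whereas the study works on depth images and converts to real world coordinates. All names are hypothetical.

```python
import math


def ellipse_centroid_and_angle(points):
    """Centroid and major-axis angle (degrees from the floor) of 2D points.

    Uses the standard orientation formula based on central moments:
    theta = 0.5 * atan2(2 * mu11, mu20 - mu02).
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    mu20 = sum((x - cx) ** 2 for x, _ in points) / n
    mu02 = sum((y - cy) ** 2 for _, y in points) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / n
    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return (cx, cy), abs(math.degrees(theta))


def is_fall(points, distance_threshold_m=0.5, angle_threshold_deg=45.0):
    """Fall if the centroid is low and the ellipse is close to horizontal."""
    (_, centroid_height), angle = ellipse_centroid_and_angle(points)
    return centroid_height < distance_threshold_m and angle < angle_threshold_deg
```

An upright silhouette yields an angle near 90° and a high centroid, so neither condition is met; a silhouette stretched along the floor yields an angle near 0° and a low centroid.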

A 3D bounding box based fall detection algorithm used the width, height and depth as the box parameters. These parameters are estimated as the differences between the maximum and minimum points along the x, y and z dimensions. The underlying idea is that during a fall the height of the box decreases, while the width and the depth increase for a lateral fall, or vice versa for a forward or backward fall. The parameters used for fall detection are the first derivative (speed) of the height (Vh) and of the width-depth composition (VWD). The other parameter is the real-world y-coordinate of the top left vertex of the bounding box, or simply the y-coordinate of the head centroid (Vy). At the beginning of the algorithm, the speed values (Vh and VWD) are checked to see whether they are greater than their thresholds (Th and TWD) over an interval of n frames, where n is the selected number of frames in the sampling window (SW). The parameter Vy is also checked to see whether it is less than a threshold (Ty). The algorithm then starts tracking Vy to identify any inactivity of the subject. If the speed of Vy is less than the Th threshold for 10 frames, the fall is confirmed. In the experiments, the following thresholds were used: Th is 1.1, TWD is between 1.4 and 1.7, Ty is 0.5 and the number of frames in SW is between 5 and 8.

4.2 Adaptive Thresholding Based Techniques

The adaptive threshold used in one study was calculated by multiplying the first computed head height by 0.25, which makes the method adaptive to persons of different heights [110]. If the head height is lower than the threshold value, the centroid height is used as a second judgement; otherwise, the centroid height and centroid position are not considered. A fall is detected if the head height and the centroid height are lower than the threshold value at the same time.
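A short sketch of this two-step check follows, assuming head and centroid heights in metres above the floor plane. The factory function and its names are hypothetical, and the same threshold is applied to both heights as the text describes.

```python
def make_fall_detector(first_head_height_m, ratio=0.25):
    """Build a detector whose threshold adapts to the person's height.

    The threshold is the first computed head height multiplied by 0.25.
    """
    threshold_m = ratio * first_head_height_m

    def is_fall(head_height_m, centroid_height_m):
        # The centroid is only consulted once the head is below the threshold.
        if head_height_m >= threshold_m:
            return False
        return centroid_height_m < threshold_m

    return threshold_m, is_fall
```

For a first head height of 1.6 m, for instance, the threshold becomes 0.4 m, whereas a shorter person would automatically get a lower threshold.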

Another study defines an abnormal action as one in which any of the distances from the hip center, head or neck to the floor plane is lower than a fall threshold (DTfall) and, at the same time, any of their vertical velocities with respect to the floor is larger than a velocity threshold (VT) [109]. The alert is triggered if the distance between the head and the floor stays lower than the threshold (DTrecover) for a period of time. Here the thresholds DTfall and DTrecover are adaptive to the height of the subject. The fall threshold DTfall is 1.5 times the distance between the head and the neck (height from head to neck), as shown in Eq. (3).

\[ DT_{\text{fall}} = 1.5 \times D_{\text{head-neck}} \qquad (3) \]

where \(D_{\text{head-neck}}\) denotes the head-to-neck distance.

The recover threshold (DTrecover) is three times DTfall, as shown in Eq. (4).

\[ DT_{\text{recover}} = 3 \times DT_{\text{fall}} \qquad (4) \]

The vertical velocity with respect to floor is defined in Eq. (5).

Here, \(n\) and \(n - 1\) denote the nth and (n − 1)th frames, and T is the frame period.
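The adaptive thresholds and checks described for [109] can be sketched as below. Several details are assumptions rather than facts from the study: the vertical speed is taken as the magnitude of the frame-to-frame change in distance divided by the frame period T, the "period of time" is expressed as a number of consecutive frames, and all names are hypothetical.

```python
def adaptive_thresholds(head_neck_distance_m):
    """DTfall is 1.5 times the head-to-neck distance; DTrecover is 3 times DTfall."""
    dt_fall = 1.5 * head_neck_distance_m
    dt_recover = 3.0 * dt_fall
    return dt_fall, dt_recover


def vertical_speed(prev_distance_m, curr_distance_m, frame_period_s):
    """Assumed form: magnitude of the change in floor distance per frame period."""
    return abs(curr_distance_m - prev_distance_m) / frame_period_s


def abnormal_action(joint_distances_m, joint_speeds_mps, dt_fall, vt_mps):
    """Any joint close to the floor while any joint moves faster than VT."""
    near_floor = any(d < dt_fall for d in joint_distances_m)
    fast = any(v > vt_mps for v in joint_speeds_mps)
    return near_floor and fast


def fall_alert(head_distances_m, dt_recover, min_frames):
    """Alert if the head stays below DTrecover for min_frames consecutive frames."""
    run = 0
    for distance in head_distances_m:
        run = run + 1 if distance < dt_recover else 0
        if run >= min_frames:
            return True
    return False
```

Because both thresholds scale with the head-to-neck distance, the same code adapts to subjects of different sizes without retuning.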

Another study used three velocity features and a head to hip height difference for fall detection, where the velocity features used adaptive thresholds. An adaptive head velocity threshold was used as a first step to detect abnormal head movement [108]. The threshold is adaptive to the person’s height (h) as follows.

The other two adaptive thresholds are hip horizontal velocity (V_hip_h) and hip vertical velocity (V_hip_v) thresholds which are also adaptive to the height of the subject. The two thresholds are:

4.3 Fixed and Adaptive Thresholding Based Techniques

A combination of fixed and adaptive thresholds is used in one study, where a fixed threshold of 0.3 m is used to detect a fall if the floor plane is detected [104]. If the floor plane is not visible, another algorithm checks whether the y-coordinates of the joints are below a given threshold to detect the fall event. This algorithm uses a kind of adaptive threshold, which is simply 0.7 times the difference between the y-coordinates of the head and the right ankle (and therefore depends on the height of the user). A fall is detected if the absolute difference between the previous head to right-ankle distance along the y-axis (measured at the time the adaptive threshold is calculated) and any new distance between the same two joints is greater than the adaptive threshold, and if the y-coordinates of the other joints are also below a given threshold. The joints considered in this study are the head, shoulder-center, hip-center, right-ankle and left-ankle.
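The two branches of this scheme can be illustrated with the following sketch. It assumes joint coordinates in metres and interprets the floor-visible branch as requiring all considered joints to be within 0.3 m of the floor, which is an assumption since the text does not say how the joints are combined. The names and the `joint_y_threshold` parameter are hypothetical.

```python
def fall_with_floor(joint_floor_distances_m, fixed_threshold_m=0.3):
    """Floor plane detected: compare joint-to-floor distances with 0.3 m.

    Assumption: all considered joints must be below the threshold.
    """
    return all(d < fixed_threshold_m for d in joint_floor_distances_m)


def fall_without_floor(ref_head_y, ref_ankle_y, head_y, ankle_y,
                       other_joint_ys, joint_y_threshold, factor=0.7):
    """Floor plane not detected: use skeleton y-coordinates only.

    The adaptive threshold is 0.7 times the head to right-ankle y-difference
    measured at calibration time (the reference frame).
    """
    reference = abs(ref_head_y - ref_ankle_y)
    current = abs(head_y - ankle_y)
    adaptive_threshold = factor * reference
    collapsed = abs(reference - current) > adaptive_threshold
    others_low = all(y < joint_y_threshold for y in other_joint_ys)
    return collapsed and others_low
```

With a reference head to ankle difference of 1.6 m the adaptive threshold is 1.12 m, so the vertical extent of the body must shrink to less than 0.48 m before the second branch reports a fall.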

4.4 Fusion of Thresholding and Classifier Based Techniques

This section discusses the fall detection algorithms employed in systems that used only depth images with classifiers and in those that used an inertial device to identify any potential fall. Fall detection systems based on some sort of classifier run the algorithm either on features representing the human in the depth image or directly on the depth image.

An unobtrusive fall detection method proposed in one study used only depth images, and the person was detected on the basis of a depth reference image [124]. A computationally inexpensive method is demonstrated for updating the depth reference image. The ground plane is extracted using v-disparity images, the Hough transform and the RANSAC algorithm. A fall is detected using a classifier trained on features of the human subject from the depth images and point clouds.

A deep learning classifier for fall detection based on infrared sensor measurements focused mainly on statistical properties for generalization. The proposed deep learning classifier consists of 5 hidden neurons, as opposed to the 15 hidden neurons of the neural network. The structure of the proposed deep learning classifier includes feature selection based on Gram-Schmidt orthogonalization and an NPCA block for transforming the raw data into a non-linear manifold [122].

The automated fall detection approach using only depth data presented in [121] is based on shape features and improved machine learning. The algorithm consists of shape-based fall characterization and a learning based classifier to identify human fall from other daily activities.

The rest of the depth map based studies basically depend on an alert from the wearable device to start the fall confirmation process from the depth images. The wearable devices contain an accelerometer and/or a gyroscope, and the threshold used varies from 2.5 to 3 g. Once the wearable device data reaches the threshold, either a classifier is used to confirm whether the subject is lying on the floor, or a combination of height and velocity, or height with a threshold, is used to detect the fall.
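The trigger-then-confirm pattern described above can be sketched as follows. This is only an outline under stated assumptions: accelerometer samples are given in units of g, the trigger uses the magnitude of the acceleration vector, and the depth based confirmation step is passed in as a callable because its form differs from study to study. All names are hypothetical.

```python
import math


def acceleration_magnitude_g(ax, ay, az):
    """Magnitude of a three-axis accelerometer sample, in g."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def potential_fall(sample_g, threshold_g=2.5):
    """Wearable-side trigger; the text quotes thresholds from 2.5 to 3 g."""
    return acceleration_magnitude_g(*sample_g) > threshold_g


def detect_fall(sample_g, confirm_from_depth, threshold_g=2.5):
    """Run the depth based confirmation only after the wearable trigger fires."""
    if not potential_fall(sample_g, threshold_g):
        return False
    return bool(confirm_from_depth())
```

Here `confirm_from_depth` stands in for either a lying-pose classifier or a height and velocity check on the depth data. Running it only after the trigger fires avoids processing depth frames when no potential fall has been flagged.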

5 Challenges and Issues in Existing Fall Detection Systems

This section discusses the main challenges that researchers are facing in developing reliable fall detection systems due to the limitation of the technologies. The major issues and pitfalls of the existing fall detection systems from all the approaches are addressed in this section.

5.1 Issue of Synchronization Between Devices

A recent trend in fall detection systems is to combine a wearable fall detector with a vision based system to improve the accuracy and to cover areas which are not visible to the vision based device. The wearable device is sometimes configured to send a potential fall alert to the vision based mechanism, which then confirms the fall event. The wearable devices are usually connected wirelessly to the main system over Bluetooth and deliver samples at a higher rate than the RGB or depth cameras. In any case, a lack of synchronization between the wearable and the vision based device can cause the system to miss the critical moment for identifying a potential fall activity.

5.2 Issues with Data Fusion from Multiple Sensors

Several issues arise in fall detection systems from the fusion of data from multiple sensors. Data fusion techniques combine data from more than one sensor, and related information from associated databases, to achieve higher accuracy than is possible with a single sensor [134, 135]. The issues range from reliable data measurement and data communication to reliable data analysis [136].

Generally, data fusion has several advantages, including improved data authenticity, but a number of issues make it challenging [137]. The challenges in data fusion that should be analyzed and considered before developing a fall detection framework include data correlation, conflicting data, the processing framework, computational power and the increase in the overall cost of the system [138]. Conflicting data refers to unrelated sensors interpreting similar activities differently during the monitoring process. The data fusion strategy can differ depending on the approaches and sensors involved. Most current systems analyze the data of each sensor component separately and apply the fusion as a final step [136]. In some cases, the raw sensor data is sent without any preprocessing to a common framework for processing. Data from different sensors is also processed in parallel and then fused with data from another, unrelated sensor. A careful decision is required, depending on the sensors used, to avoid unnecessary complication in the fusion algorithm and to reduce the computation time. The computational cost also increases due to the additional amount of data collected by the sensors.

5.3 Lack of Accuracy Due to Off-Line Training

Studies that employed machine learning to classify human falls with off-line training data are subject to misinterpretation caused by differences in background color and surrounding objects, since the background and lighting conditions of the off-line database may be completely different from the actual user’s environment. This can degrade the classification accuracy of most machine learning algorithms. A learning algorithm is likely to be biased for a given input if it was trained on a dataset that differs from it. Likewise, a learning algorithm has high variance for a particular input if it produces different output values when trained on different datasets. In general, the prediction error of a classifier is associated with the bias and variance of the learning algorithm, which often require a tradeoff [139]. The number of true data samples and their complexity, together with the dimensionality of the input space, can further complicate the learning process. In addition, differences between the two setups, including the placement of the camera, can have a significant impact on the performance.

5.4 Image Extraction Timing for Fall Confirmation

Some of the fall detection algorithms that rely on machine learning to authenticate the fall event use the depth image extracted at the time when a potential fall is detected. The moment at which the depth image is extracted and fed to the machine learning classifier has a significant impact on the accuracy. These classifiers simply check for a lying posture on the floor, which depends heavily on a clear appearance of the subject. Therefore, the potential fall alert that starts the fall authentication should target the exact time at which the subject comes to rest completely on the floor. In addition, any occlusions present in the depth image fed into the classifier can degrade the performance. As a result, the performance of such algorithms depends heavily on the accuracy of the potential fall alert mechanism and the robustness of the classifier.

5.5 Privacy Concerns

Privacy is in fact the main reason for the rejection of non-invasive vision based devices that use RGB cameras and live video recording. Generally, users do not like to be watched or to have their private lives recorded for any purpose. This could be the main challenge in developing a non-invasive human fall detection system using RGB cameras.

5.6 Hardware Limitations

5.6.1 Battery Life of Wireless Devices

Stand-alone, battery-powered devices with wireless communication are prone to battery life issues. The device itself can worsen the issue if it is not properly designed. Power-saving strategies are required at the software and circuit levels to minimize power consumption. Standby capabilities and limiting radio transmissions are the main approaches to saving power. However, power-saving techniques can give rise to other issues: wake-up delays from standby mode and the time required to start the defined process can lead to the loss of critical information. The data from wireless devices built with accelerometers for fall detection or prediction also need to be thoroughly analyzed in order to fine-tune the circuitry and the software behind the system so as to limit radio activity. The limitations of currently available battery technology are also one of the technological barriers for remote monitoring systems that rely on wearable sensors [140].

Investigations showed that accelerometer based wearable devices often use the orientation of the sensor for fall prediction, which requires a gyroscope for accurate detection of the sensor’s current orientation. Since acceleration data alone are not enough to reliably evaluate the actual orientation of the device [141], gyroscopes are often used along with accelerometers. The use of a gyroscope improves the performance of the system at the cost of an additional device that consumes extra power.

5.6.2 Limitation of Smartphones

Smartphone based fall detectors are prone to problems simply because smartphones are not intended for fall detection [142]. The functionality of the built-in accelerometer, the features of the operating system and the sensing architecture of smartphones were not originally intended for such applications, especially real-time fall detection [138]. Therefore, it is very likely that such fall detectors behave differently on different phones, depending on the smartphone architecture. Such fall detectors are also constrained by the processing capabilities and battery capacity of the smartphone [61]. Furthermore, the accuracy of fall detection may degrade dramatically depending on where the user places the phone. For example, if the system is designed for the phone to be worn on the waist and the user mistakenly places it in a pocket, the accuracy may be very low.

5.6.3 Limitation in Wireless Transmission

Wearable based fall detectors often use wireless communication, including Bluetooth and ZigBee, between the wearable gadget and the main system. These technologies are subject to many restrictions inherited from the limitations of wireless communication, including interference. The common issues with Bluetooth are limited capacity, limited coverage and power management. Many studies [54, 56, 143, 144, 145] chose ZigBee due to its advantages over Bluetooth. ZigBee is low cost and low power, but its limited coverage and the replacement cost of its components are the main disadvantages. In addition, any failure in communication between the device and the main system may require technical assistance, making the entire system unstable and unreliable. This issue can be common in systems with components from different vendors and in poorly designed systems.

5.7 Performance Degradation Due to Simulated Activities

Most real-time fall detection systems, and in fact almost all related works, are tested and validated with simulated activities. Simulated activities differ considerably from real-life activities, especially when the real-life activities of elderly people are simulated by healthy volunteers. As a result, the evaluation of such systems is limited, and the detection rates reported for simulated falls may not be achieved in the real world. This could have a greater impact on velocity based algorithms [103], especially if actual falls have a shorter duration than simulated falls.

5.8 Response Time

The response time of the sensor or camera and of the device can play an important role in degrading the performance of a system. Apart from this, the efficiency of the fall detection algorithm (including the thresholds employed, the control flow and any external triggers used) can also affect the response time of the system and thus degrade its performance.

5.9 Disturbance in the Environment

Motion from visitors [146] is also an issue for non-invasive sensor based systems, especially radar based approaches, in addition to the disturbance caused by obstacles in the scene. To filter out such noise, additional measurements are very often required, which increases the computational cost.

6 Recent Developments and Future Directions

The three basic approaches used to develop human fall detection systems are wearable based devices, ambient based devices and vision based devices. In this work, depth map based devices are categorized under the vision based approach, as shown in Fig. 1. The studies representing the three basic approaches are essentially structured to solve the drawbacks of one another. For example, ambient based devices were used to solve the issues of wearable based devices, and wearable devices also solve some of the problems that ambient sensors fail to handle. Before the advent of cheap depth sensors, vision based devices using RGB cameras addressed the main issues of wearable and ambient based devices with even higher accuracy, at the cost of expensive systems and setup. Even with RGB cameras, concerns about the acceptability and reliability of fall detection systems were not eliminated; rather, RGB cameras added their own drawbacks, such as the capturing and recording of color video, which leads to privacy concerns. Additionally, the cost of the systems, camera calibration, the need for adequate lighting and the setup are common issues.

The advent of cheap Red-Green-Blue-Depth (RGBD) cameras has paved the way for the development of novel systems that overcome the limitations of these previous works [125, 147]. Cheap depth sensors such as the Microsoft Kinect can extract depth information about the objects in the scene even in low lighting conditions. The auto calibration capability and other features of the sensor can reduce the issues associated with RGB cameras to a negligible level. One of the main advantages of the Kinect sensor is that it can be placed wherever user requirements dictate [132], unlike the complex installation procedure of some RGB fall detection systems. It is also worth noting that using only the depth images preserves the privacy of users [132].

The first sub-category of the depth sensor based approach uses joint positions or measurements and their movement, with thresholding, for fall detection. The second sub-category uses a fusion of wearable devices and depth maps, with machine learning or joint positions, for fall detection. The third sub-category employs machine learning or other classifiers on depth images only. Research based on the fusion of an inertial device and a depth sensor is of limited relevance, since the system design and performance are subject to the drawbacks of wearable devices, which are regarded as a main cause of rejection of fall detection systems. Wearable devices are mainly rejected due to the inconvenience of carrying them during activities of daily life [138]. In addition, these studies used the wearable device to flag any potential fall movement and to start the depth image based classifier that confirms the fall. In such cases the capabilities of the depth sensor are not fully exploited, and thus the accuracy that could actually be achieved is not realized. Generally, the overall performance of such systems depends solely on the effectiveness of the wearable device in identifying potential fall movements.

In some of the studies, detection does not always depend on the wearable device, because in certain situations, such as while changing clothes, it is not possible to wear the device [125]. In such cases the systems depend on the depth sensor. This requires proper time synchronization between the sampling rate of the wireless inertial device and the frame rate of the depth sensor. It is impossible to access and control the Kinect’s embedded clock [148]. Nevertheless, it is important to synchronize the Kinect sensor, the main system and the wireless inertial device in order to properly integrate fall detection systems whose components depend on one another and require the fall authentication process to switch between devices.
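
As an illustration of the synchronization problem, the following minimal Python sketch pairs each inertial sample with the nearest depth frame. It assumes that both streams are timestamped by the main system’s clock on arrival, which is one possible workaround when the sensor’s own clock is not accessible; it is not the method of any reviewed study. The function name nearest_frame_index and the max_skew tolerance are hypothetical.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def nearest_frame_index(frame_times: Sequence[float],
                        sample_time: float,
                        max_skew: float) -> Optional[int]:
    """Return the index of the depth frame closest in time to an inertial sample.

    frame_times must be sorted host-clock arrival times in seconds (assumption).
    Returns None when no frame lies within max_skew seconds of the sample.
    """
    if not frame_times:
        return None
    i = bisect_left(frame_times, sample_time)
    # Only the frames just before and just after the sample can be nearest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(frame_times)]
    best = min(candidates, key=lambda j: abs(frame_times[j] - sample_time))
    if abs(frame_times[best] - sample_time) <= max_skew:
        return best
    return None
```

A sample for which no frame lies within the tolerance is left unmatched rather than paired with a stale frame, so a downstream fall confirmation step is not given misaligned data.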

The other two approaches in the depth map based hierarchy depend only on the depth image generated by the depth sensor, as illustrated in Fig. 2. The review of the literature also found that exploiting the depth information alone can minimize the issues faced by previous work on fall detection. The only issue that cannot be dealt with in this way is the limited field of view of the Kinect sensor, which can be addressed by using more than one sensor, depending on the coverage requirement.

Previous works used different techniques on the depth image to distinguish human falls from other activities of daily life. Some studies used extracted human joint measurements and their movement over time to identify a fall, while others applied machine learning or other classifiers either to the depth image or to extracted human features. Machine learning classification can have many problems beyond its computational cost, and it is complex to implement compared with threshold based approaches [149]. As discussed, off-line training can degrade accuracy, and the time at which the image is extracted plays an important role in identifying the lying posture. Previous works also used different algorithms to segment the human subject from the depth image. Some works developed their own preprocessing directly on the raw data. However, manual preprocessing cannot match the established auto calibration of the Kinect sensor, even though that calibration was not primarily developed for fall detection. If a custom preprocessing stage cannot auto calibrate when a subject enters or exits the view of the sensor, the fall detection algorithm working on top of it will not receive adequate information to make an accurate decision.

The approaches that are based only on depth images and use joint measurements instead of classifiers essentially use the distance of human joints from the floor plane and their vertical velocities to distinguish and authenticate human falls from other activities of daily life. Measurements such as the height of the head joint above the floor, the centroid height and their respective velocities are fed into an algorithm with thresholding to identify any falling action. With this approach, some fall detection algorithms cannot work in the case of occlusion, because they cannot calculate the distance to the ground [105]. The use of joint height in fall detection performs well for falls ending on the floor, but it fails if the end of the fall is occluded behind furniture [102]. It was also found that this could be solved by using the velocity just before the occlusion.
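
The threshold based idea described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the algorithm of any reviewed study: the Frame type, the function detect_fall and all threshold values (velocity_thresh, height_thresh, max_gap) are hypothetical placeholders, and the head height is assumed to be already expressed in metres above the floor plane.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Frame:
    t: float            # timestamp in seconds
    head_height: float  # head joint height above the floor plane, in metres


def detect_fall(frames: Sequence[Frame],
                velocity_thresh: float = -1.0,  # m/s, illustrative value only
                height_thresh: float = 0.4,     # m, illustrative value only
                max_gap: float = 1.0) -> Optional[float]:
    """Return the timestamp at which a fall is flagged, or None.

    A fall is flagged when the head is at or below height_thresh and a fast
    downward movement (vertical velocity at or below velocity_thresh) was
    observed no more than max_gap seconds earlier.
    """
    last_descent_t = None
    for prev, cur in zip(frames, frames[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicated or out-of-order frames
        velocity = (cur.head_height - prev.head_height) / dt
        if velocity <= velocity_thresh:
            last_descent_t = cur.t
        recently_descended = (last_descent_t is not None
                              and cur.t - last_descent_t <= max_gap)
        if recently_descended and cur.head_height <= height_thresh:
            return cur.t
    return None
```

Requiring both conditions is what separates a fall from slowly lying down, where the head ends up low but never moves downward quickly. The sketch does not handle occlusion; if the joint height becomes unavailable, the velocity observed just before the occlusion would have to be used instead, as noted above.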

Most of the related works used selected skeleton joints or features of the subject with a predefined algorithm for fall detection. The algorithms use either a fixed or an adaptive threshold within a decision flow. One study [126] used a statistical approach with features in a Bayesian framework. It is clear from the literature that considerable effort is still required to devise algorithms that follow the changes in selected key features of the subject and fully utilize the capabilities of the depth sensor. It was found that a low-computation algorithm with statistical analysis of the key features of the subject can significantly reduce the problem of obstacles blocking the view of the subject. This could also make the system more stable, especially by avoiding computation-hungry machine learners and other classifiers.

It is also worth noting that none of the related works so far has utilized any fall risk level estimation during fall detection. Studies conducted on fall risk assessment were primarily aimed at identifying patients at risk of falling in nursing homes or hospitals. This was achieved either through questionnaires or by using sensors to detect physical weaknesses of the patients that may cause falls. The results were then used to categorize patients as having high or low fall risk in order to provide better healthcare and avoid fall injuries. Incorporating a robust fall risk level estimation protocol within the fall detection algorithm, so that appropriate parameters are adapted to the movement of the user, could greatly improve fall detection accuracy, since fall risk factors help to adapt the detection process to the risk level of the user. This is because the nature of falls and the characteristics of other activities of daily life differ with fall risk level. For users with a high risk of falls, the fall detection algorithm could switch to an intensive detection process, and for users with a low fall risk, the algorithm could track changes at longer intervals, thus reducing computational costs. Since the fall risk level of a user also changes over time, such an incorporation could allow a fall detection system to outperform other available systems.
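
A risk-adaptive detection schedule of this kind could be sketched as follows. This is only an assumption about how such switching might look; the DetectionProfile type, the PROFILES table, the two risk labels and every numeric value are hypothetical and are not drawn from the reviewed literature.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DetectionProfile:
    frame_stride: int       # evaluate every n-th depth frame
    velocity_thresh: float  # m/s, placeholder threshold for this risk level


# Placeholder profiles: intensive processing for high-risk users,
# sparser processing for low-risk users to save computation.
PROFILES: Dict[str, DetectionProfile] = {
    "high": DetectionProfile(frame_stride=1, velocity_thresh=-0.8),
    "low": DetectionProfile(frame_stride=5, velocity_thresh=-1.2),
}


def frames_to_process(n_frames: int, risk_level: str) -> List[int]:
    """Return the indices of the frames the detector would evaluate."""
    stride = PROFILES[risk_level].frame_stride
    return list(range(0, n_frames, stride))
```

Because the risk level of a user changes over time, the profile would be looked up again whenever the risk estimate is updated rather than fixed at installation.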

7 Performance of the Related Works on Fall Detection

This section presents the performance of the related works using confusion matrix performance measures. Apart from the three basic confusion matrix measures, the other parameters considered in this evaluation are precision, F-score, Matthews correlation coefficient (MCC), error rate and miss rate.
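
For reference, the measures named above can be computed from the four confusion matrix counts with the short Python sketch below, which uses the standard textbook definitions. It assumes that the three basic measures are sensitivity, specificity and accuracy; the function name confusion_metrics is hypothetical, and any counts passed to it here would be illustrative rather than taken from the reviewed studies.

```python
import math
from typing import Dict


def confusion_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute standard performance measures from confusion matrix counts."""

    def ratio(num: float, den: float) -> float:
        # An undefined ratio (zero denominator) is reported as NaN.
        return num / den if den else float("nan")

    total = tp + tn + fp + fn
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, total),
        "precision": ratio(tp, tp + fp),
        "f_score": ratio(2 * tp, 2 * tp + fp + fn),
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
        "error_rate": ratio(fp + fn, total),
        "miss_rate": ratio(fn, tp + fn),
    }
```

The miss rate is the complement of sensitivity and the error rate is the complement of accuracy, so the measures carry overlapping information; MCC is the only one here that uses all four counts in both numerator and denominator.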

The original performance measures and confusion matrix data collected for the related works are presented in Table 2. Of the fourteen studies, only six provided confusion matrix data. Even though some of the performance measures do not give fair values for comparison between imbalanced data sets, measures such as accuracy and MCC are affected only in extreme cases [150]. The following performance measures are used for the comparison.