Abstract

An incremental method of feature selection based on mutual information, called incremental Max-Relevance, and Min-Redundancy (I-mRMR), is presented. I-mRMR is an incremental version of Max-Relevance, and Min-Redundancy feature selection (mRMR), which is used to handle streaming data or large-scale data. First, Incremental Key Instance Set is proposed which composes of the non-distinguished instances by the historical selected features. Second, an incremental feature selection algorithm is designed in which the incremental key instance set, replacing of all the seen instances so far, is used in the process of adding representative features. Since the Incremental Key Instance Set is far less than the whole instances, the incremental feature selection by using this key set avoids redundant computation and save computation time and space. Finally, the experimental results show that I-mRMR could significantly or even dramatically reduce the time of feature selection with an acceptable classification accuracy.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Incremental learning is a promising approach to refreshing data mining results, which utilizes previously saved results or data structures to avoid the expense of re-computation [4, 13]. The main idea of incremental feature selection is that only part of the data are considered at one time and the results are subsequently combined. Thus incremental feature selection technique makes full use of the historical information, reduce the training scale greatly, and save training time [6, 12].

Feature selection based on mutual information has been deeply studied [2, 10, 11, 14], because mutual information (MI) [8] is a good tool to measure the correlation and redundancy among features. As a pioneer, Battiti [1] proposed a greedy selection method called MIFS based on mutual information between inputs and outputs. Considering MIFS does not work well on nonlinear problems, Kwak and Choi [5] proposed an improved feature selection method MIFS-U which is feasible and effective on nonlinear applications. However, both Battiti and Kwak’s methods omit the redundancy among features, only relevance among features and labels are considered. Peng et al. [7] then proposed a heuristic “Max-Relevance and Min-Redundancy” framework for feature selection. In [7] it is pointed that mRMR criterion is equal to max-dependency. Furthermore, Estévez and Tesmer [3] proposed an updated feature selection method, called normalized mutual information features selection. However, most of them could only be applied to static data. When new instances are arriving successively, these methods have to be re-computed on the updated datasets.

In this paper, we propose an incremental feature selection algorithm, called I-mRMR. First, Incremental Key Instance Set is proposed which is composed of the instances not distinguished by historical selected features. An incremental algorithm is then proposed based on this Incremental Key Instance Set. Finally, the numerical experiments of I-mRMR shows that I-mRMR makes full use of the historical selected features, reduce the training scale greatly, and save training time.

The remainders of this paper are organized as follows. Section 2 reviews mRMR based on the normalized mutual information. Section 3 introduces the concept of Incremental Key Instance Set and presents the incremental feature selection algorithm, I-mRMR. In Sect. 4, ten UCI datasets are employed to illustrate the effectiveness and efficiency of I-mRMR. Section 5 concludes this paper.

2 Preliminaries

In this section, MI and mRMR are reviewed. For more detailed information about them, please kindly refer to [9].

2.1 Notation Description

Given a set of original instances \(U=[x^{(1)},x^{(2)},\cdots ,x^{(n)}]^T\). Here \(U\in R^{(n\times p)}\) is a matrix with n is the number of original instances and p is the number of all features. \(x^{(i)}\in R^p\) is a row vector representing the i-th instance in U. S is the index set of selected feature subset. \(\overline{S}\) denotes the complementary set of S. \(x_t\) is a column vector representing the t-th feature. \(x_S^{(i)}\) represents a vector of \(x^{(i)}\) under feature subset S(\(i=1,\cdots ,n\)), \(Y=[y^{(1)},\cdots . y^{(n)}]^T\) is a column vector representing the label feature in U. Here \(y^{(i)}\) is the label for the i-th instance in U(\(i=1,\cdots ,n\)).

2.2 Max-Relevance and Min-Redundancy

Max-Relevance is to find the feature \(x_t\) that satisfies the following formula:

By the Max-Relevance criterion, only the relevance between the features and labels are considered, whereas the relevance among the features is not considered. Thus there may exist great redundancy among the selected features. As a result, it is necessary to make the redundancy among the selected features as small as possible.

The above two criteria are combined, called “Max-Relevance and Min-Redundancy”, and defined as follows.

Suppose that the feature subset candidate we have selected so far is \(S_{m-1}\), and \(m-1\) indicates that \(m-1\) features have been selected. And then the feature with the maximum value of \(\varPhi (D,R)\) is selected. The incremental feature selection algorithm optimizes the following formula:

2.3 Normalized Mutual Information Feature Selection

The normalized mutual information \(NI(x_k,x_t)\) between the feature \(x_k\) and the feature \(x_t\) is then defined as follows.

Therefore, “Max-Relevance and Min-Redundancy" criterion can be rewritten as follows:

3 The Proposed Incremental Algorithm

The key idea of our proposed method is to update and maintain the previously selected feature subset by finding the features more representative for discriminating the new instances from its current surrounding.

3.1 Problem Definition

When some new instances, represented by \({\bigtriangleup }U\in R^{m\times p}\)(where m represents the number of newly added instances), are added to U, \(y^{(n+j)}\) is the label for the j-th instance in \({\bigtriangleup }U\), \(j=1,\cdots ,m\). The selected feature subset S has to be updated from U to \(U\cup {\bigtriangleup }U\). The traditional method is directly to recompute the feature selection method on all seen instances \(U\cup {\bigtriangleup }U\) to obtain the updated feature subset \(S_{U\cup {\bigtriangleup }U}\). It is very time and space consuming and many redundant computations are conducted. Therefore, it is necessary to reduce the amount of computation by using some incremental mechanisms.

3.2 Incremental Key Instance Set

To incrementally update the selected feature subset S, it is necessary to find the features more representative for discriminating the new instances from its current surrounding.

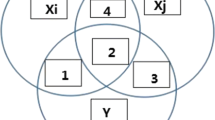

In the following we propose a concept called Incremental Key Instance Set which composes of part of the seen instances so far which are undistinguished by the original features subset S.

Definition 1

Given U, S, and \({\bigtriangleup }U\), then Incremental Key Instance Set of S, denoted by \({\bigtriangleup }I_S\), is defined as follows.

Incremental Key Instance Set \({\bigtriangleup }I_S\) composes of such instances which have the same feature values on S but the different labels, which means that the features in S could not distinguish the new instances from its current surrounding and then some new features should be added. \({\bigtriangleup }I_S\) plays the key role to find the new features.

A function that measures the significance of the feature according to the criterion of the “Max-Relevance and Min-Redundancy” is then proposed based on Increment Key Instance Set.

Definition 2

Given U, Y, F and S, for every \(k\in \overline{S}\) and \(t\in S\), the significance degree of \(x_k\) with respect to Y and S is defined as follows.

Computing the significance degrees of \(\overline{S}\) on \({\bigtriangleup }I_S\), all the features in \(\overline{S}\) are then sorted. Thus the feature with the maximum distinguishing power, i.e. maximum significance degree, is added to S.

3.3 Incremental Feature Selection Algorithm

In this subsection, we present the incremental feature selection algorithm when a set of new instances arriving. I-mRMR is designed in Algorithm 1.

4 Numerical Experiments

In this section, we conduct some numerical experiments to evaluate the proposed algorithm, I-mRMR, on ten datasets from UCI. The Max-Relevance and Min-Redundancy feature selection based on normalized mutual information, denoted by mRMR [3], as the classical non-incremental feature selection algorithm, is compared with I-mRMR.

4.1 Experimental Setup

All the experiments have been conducted on computer with CentOS release 6.5(Final), Westmere E56xx/L56xx/X56xx(Nehalem-C) and 8 GB memory. The programming language is Python. The detail experimental setting are presented as follows.

(1) Since our algorithm is only valid for discrete data, fuzzy-c-means is used to discretize those continuous data sets.

(2) Every dataset is divided into six parts equally, the first part is used as the original data set U, and remaining parts as the newly added dataset \({\bigtriangleup }U\), are added one by one.

(3) All the experimental comparison is demonstrated from three indices: running time, global speedup ratio, local speedup ratio.

Global speedup ratio:\(\frac{\sum _{streaming\;instances}RT_{mRMR}}{\sum _{streaming\;instances}RT_{I-mRMR}}\)

Where \(RT_{mRMR}\) denotes the running time of mRMR on the seen instances so far, \(RT_{I-mRMR}\) denotes the running time of I-mRMR on the seen instances so far. When the dataset is divided into six parts, \(\sum _{streaming\;instances}RT_{mRMR}\) represents the sum of six times running time of mRMR, where each time the dataset is updated when some new instances arriving.

Local speedup ratio:\(\frac{RT_{mRMR}}{RT_{I-mRMR}}\)

When the dataset is divided into six parts, the local speedup ratio is the ratio of the running time of mRMR on the whole dataset to the running time of I-mRMR when the last part arriving.

(4) To show the effectiveness of I-mRMR, SVM and KNN are used to evaluate the classification performance. And 5-fold cross validation is used in classification evaluation.

4.2 Experimental: Evaluation on UCI

To test the performance of I-mRMR, some experimental comparison and analyses are conducted on ten UCI datasets.

Compared with mRMR. In this part, I-mRMR and mRMR are compared. Both of them are feature selection methods based on the normalized mutual information of “Max-Relevance and Min-Redundancy” criterion. One main difference between them is that I-mRMR is an incremental feature selection algorithm, whereas mRMR is a non-incremental feature selection algorithm.

we demonstrate the running time of I-mRMR and mRMR when instances successively arriving and then graph them in Fig. 1.

Figure 1 clearly demonstrates that the running time of I-mRMR changes slightly, whereas the running time of mRMR increases significantly with the instances successively arriving. This shows that I-mRMR works efficiently on streaming instances, whereas mRMR works more and more less-efficiently.

To further illustrate the time superiority of I-mRMR, the global speedup ratio is then presented, seen in Table 1.

Table 1 shows that the total time of mRMR is obviously or even significantly higher than that of I-mRMR, especially on the datasets with high number of instances. This is because when some new instances arriving mRMR has to be recomputed on the whole seen instances so far, which is really time consuming. Furthermore, Table 2 demonstrates the time superiority of I-mRMR from the aspect of local speedup ratio. From Table 2 we observe that I-mRMR is significantly or even dramatically faster than mRMR. This is because I-mRMR only consider part of instances which are not distinguished by the previous selected features, whereas mRMR computes on the whole seen instances so far.

5 Conclusions

In this paper, we propose an incremental feature selection algorithm I-mRMR based on max-relevance and min-redundancy criterion. When a new set of instances is arriving, not all seen instances so far are necessary to update the feature selection results. Actually, just an Incremental Key Instance Set, which is composed of the instances undistinguished by historical selected features, is key to update the feature subset. As a result, I-mRMR is designed by using Incremental Key Instance Set, which dramatically improve the efficiency of feature selection on streaming instances. By numerical experiments, we demonstrate that the proposed incremental algorithm is significantly faster than the classical algorithm mRMR not only in the global speedup ratio but also in the local speedup ratio. Furthermore, on the extremely high-dimensional dataset, we experimentally demonstrate that our proposed feature selection algorithm I-mRMR is obviously more efficient than mRMR with an acceptable classification accuracy.

References

Battiti, R.: Using mutual information for selecting features in supervised neural net learning. IEEE Trans. Neural Netw. 5(4), 537–550 (1994)

Chandrashekar, G., Sahin, F.: A Survey on Feature Selection Methods. Pergamon Press, Inc., Oxford (2014)

Estévez, P.A., Tesmer, M., Perez, C.A., Zurada, J.M.: Normalized mutual information feature selection. IEEE Trans. Neural Netw. 20(2), 189–201 (2009)

Guyon, I., Elisseeff, A., Kaelbling, L.P.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3(6), 1157–1182 (2003)

Kwak, N., Choi, C.H.: Input feature selection for classification problems. IEEE Trans. Neural Netw. 13(1), 143 (2002)

Liu, H., Setiono, R.: Incremental feature selection. Appl. Intell. 9(3), 217–230 (1998). https://doi.org/10.1023/A:1008363719778

Peng, H., Long, F., Ding, C.: Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1226–1238 (2005)

Rossi, F., Lendasse, A., François, D., Wertz, V., Verleysen, M.: Mutual information for the selection of relevant variables in spectrometric nonlinear modelling. Chemom. Intell. Lab. Syst. 80(2), 215–226 (2006)

Schilling, D.L.: Elements of Information Theory. Wiley, Hoboken (2003)

Sluga, D., Lotrič, U.: Quadratic mutual information feature selection. Entropy 19(4), 157 (2017)

Vergara, J.R., Estévez, P.A.: A review of feature selection methods based on mutual information. Neural Comput. Appl. 24(1), 175–186 (2014)

Xu, J., Xu, C., Zou, B., Tang, Y.Y., Peng, J., You, X.: New incremental learning algorithm with support vector machines. IEEE Trans. Syst. Man Cybern. Syst. PP(99), 1–12 (2018)

Ye, J., Li, Q., Xiong, H., Park, H., Janardan, R., Kumar, V.: IDR/QR: an incremental dimension reduction algorithm via QR decomposition. IEEE Trans. Knowl. Data Eng. 17(9), 1208–1222 (2005)

Zhang, Z., Hancock, E.R.: Mutual information criteria for feature selection. In: Pelillo, M., Hancock, E.R. (eds.) SIMBAD 2011. LNCS, vol. 7005, pp. 235–249. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-24471-1_17

Acknowledgements

This work is supported by National Key Research & Develop Plan (No. 2016YFB1000702, 2018YFB1004401), National Key R&D Program of China(2017YFB1400700), NSFC under the grant No. 61732006, 61532021, 61772536, 61772537, 61702522 and NSSFC (No. 12&ZD220), and the Fundamental Research Funds for the Central Universities, and the Research Funds of Renmin University of China (15XNLQ06). It was partially done when the authors worked in SA Center for Big Data Research in RUC. This Center is funded by a Chinese National 111 Project Attracting. This work is also supported by the Macao Science and Technology Development Fund (081/2015/A3).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Xiu, Y., Zhao, S., Chen, H., Li, C. (2019). I-mRMR: Incremental Max-Relevance, and Min-Redundancy Feature Selection. In: Shao, J., Yiu, M., Toyoda, M., Zhang, D., Wang, W., Cui, B. (eds) Web and Big Data. APWeb-WAIM 2019. Lecture Notes in Computer Science(), vol 11642. Springer, Cham. https://doi.org/10.1007/978-3-030-26075-0_8

Download citation

DOI: https://doi.org/10.1007/978-3-030-26075-0_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-26074-3

Online ISBN: 978-3-030-26075-0

eBook Packages: Computer ScienceComputer Science (R0)