Abstract

These are the handouts of an undergraduate minicourse at the Università di Bari (see Fig. 1), in the context of the 2017 INdAM Intensive Period “Contemporary Research in elliptic PDEs and related topics”. Without any intention to serve as a thorough epitome of the subject, we hope that these notes can be of some help as a very first introduction to a fascinating field of classical and modern research.


1 The Laplace Operator

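The operator most studied in partial differential equations is likely the so-called Laplacian, given by

\[
\Delta u(x):=\sum_{i=1}^{n}\frac{\partial^2 u}{\partial x_i^2}(x)=\lim_{r\searrow 0}\frac{2(n+2)}{r^{2}}\left(\frac{1}{|B_r(x)|}\int_{B_r(x)}u(y)\,dy-u(x)\right). \tag{1.1}
\]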

Of course, one may wonder why mathematicians have a strong preference for such kind of operators—say, why not studying

Since historical traditions, scientific legacies or impositions from above by education systems would not be enough to justify such a strong interest in only one operator (plus all its modifications), it may be worth pointing out a simple geometric property enjoyed by the Laplacian (and not by many other operators). Namely, Eq. (1.1) somehow reveals that the fact that a function is harmonic (i.e., that its Laplacian vanishes in some region) is deeply related to the action of “comparing with the surrounding values and reverting to the averaged values in the neighborhood”.

To wit, the idea behind the integral representation of the Laplacian in formula (1.1) is that the Laplacian tries to model an “elastic” reaction: the vanishing of such operator should try to “revert the value of a function at some point to the values nearby”, or, in other words, from a “political” perspective, the Laplacian is a very “democratic” operator, which aims at levelling out differences in order to make things as uniform as possible. In mathematical terms, one looks at the difference between the values of a given function u and its average in a small ball of radius r, namely

\[
\frac{1}{|B_r(x)|}\int_{B_r(x)}u(y)\,dy-u(x).
\]

In the smooth setting, a second order Taylor expansion of u and a cancellation in the integral due to odd symmetry show that this quantity is quadratic in r. The first order term averages to zero, while the second order term contributes \(\frac{r^2}{2(n+2)}\,\Delta u(x)\), up to higher order corrections. Hence, in order to detect the “elastic”, or “democratic”, effect of the model at small scale, one has to divide by \(r^2\) and take the limit as \(r\searrow 0\). This is exactly the procedure that we followed in formula (1.1).
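The limit in (1.1) can be checked numerically in one dimension. The sketch below is only an illustration in Python with SciPy, which is our choice and not part of the notes. It assumes n = 1, so that \(B_r(x)=[x-r,x+r]\) and the normalizing factor is \(2(n+2)=6\). The helper name `ball_average_laplacian` is hypothetical.

```python
import math

from scipy.integrate import quad


def ball_average_laplacian(u, x, r):
    """Rescaled deviation of u from its average on B_r(x) = [x - r, x + r] (n = 1).

    Returns 2(n + 2) / r**2 * (average of u on B_r(x) - u(x)) with n = 1,
    which should approach u''(x) as r -> 0 for smooth u.
    """
    n = 1
    average = quad(u, x - r, x + r)[0] / (2.0 * r)
    return 2.0 * (n + 2) / r**2 * (average - u(x))


x0 = 0.7
for r in (0.5, 0.1, 0.01):
    # compare with the exact second derivative u''(x0) = -sin(x0) for u = sin
    print(r, ball_average_laplacian(math.sin, x0, r), -math.sin(x0))
```

For a cubic polynomial the odd-order terms cancel exactly, so the quotient equals \(u''\) for every r. For \(\sin\), the error is of order \(r^2\), as the Taylor argument predicts.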

Other classical approaches to integral representations of elliptic operators come in view of potential theory and inversion operators, see e.g. [96].

This tendency to revert to the surrounding mean suggests that harmonic equations, or in general equations driven by operators “similar to the Laplacian”, possess some kind of rigidity or regularity properties that prevent the solutions from oscillating too much. Of course, detecting and establishing these properties is a marvelous, and technically extremely demanding, success of modern mathematics. In this set of notes we do not dwell on this topic of great beauty and utmost importance, and we refer, e.g., to the classical books [62, 71,72,73].

Interestingly, the Laplacian operator, in the perspective of (1.1), is the infinitesimal limit of integral operators. In the forthcoming sections, we will discuss some other integral operators, which recover the Laplacian in an appropriate limit, and which share the same property of averaging the values of the function. Unlike in (1.1), such an averaging procedure will not necessarily be confined to a small neighborhood of a given point. It will rather tend to comprise all the possible values of a certain function, possibly giving more weight to nearby points and less weight to contributions coming from far away.

2 Some Fractional Operators

We describe here the basics of some different fractionalFootnote 1 operators. The fractional exponent will be denoted by s ∈ (0, 1). For more exhaustive discussions and comparisons see e.g. [24, 49, 81, 82, 84, 91, 104, 107, 108]. For simplicity, we do not treat here the case of fractional operators of order higher than 1 (see e.g. [3,4,5, 50]).

2.1 The Fractional Laplacian

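A very popular nonlocal operator is given by the fractional Laplacian

\[
(-\Delta)^s u(x):=\mathrm{P.V.}\int_{\mathbb{R}^n}\frac{u(x)-u(y)}{|x-y|^{n+2s}}\,dy. \tag{2.1}
\]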

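Here, the notation “P.V.” stands for “in the Principal Value sense”, that is

\[
\mathrm{P.V.}\int_{\mathbb{R}^n}\frac{u(x)-u(y)}{|x-y|^{n+2s}}\,dy:=\lim_{\varepsilon\searrow 0}\int_{\mathbb{R}^n\setminus B_\varepsilon(x)}\frac{u(x)-u(y)}{|x-y|^{n+2s}}\,dy.
\]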

The definition in (2.1) differs from others available in the literature, since a normalizing factor has been omitted for the sake of simplicity. This multiplicative constant is only important in the limits as \(s\nearrow 1\) and \(s\searrow 0\), but plays no essential role for a fixed fractional parameter s ∈ (0, 1).

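The operator in (2.1) can also be conveniently written in the form

\[
(-\Delta)^s u(x)=\frac12\int_{\mathbb{R}^n}\frac{2u(x)-u(x+y)-u(x-y)}{|y|^{n+2s}}\,dy. \tag{2.2}
\]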

The expression in (2.2) reveals that the fractional Laplacian is a sort of second order difference operator, weighted by a measure supported in the whole of \(\mathbb {R}^n\) and with a polynomial decay, namely

Of course, one can give a pointwise meaning of (2.1) and (2.2) if u is sufficiently smooth and with a controlled growth at infinity (and, in fact, it is possible to set up a suitable notion of fractional Laplacian also for functions that grow polynomially at infinity, see [59]). Besides, it is possible to provide a functional framework to define such operator in the weak sense (see e.g. [106]) and a viscosity solution approach is often extremely appropriate to construct general regularity theories (see e.g. [31]).

We refer to [49] for a gentle introduction to the fractional Laplacian.

From the point of view of the Fourier Transform, denoted, as usual, by \(\widehat {\cdot }\) or by \({\mathcal {F}}\) (depending on the typographical convenience), an instructive computation (see e.g. Proposition 3.3 in [49]) shows that

for some c > 0. An appropriate choice of the normalization constant in (2.1) (also depending on n and s) allows us to take c = 1. We will take this normalization for the sake of simplicity, with the slight abuse of notation of dropping constants here and there. With this choice, the fractional Laplacian in Fourier space is simply the multiplication by the symbol \(|\xi|^{2s}\), consistently with the fact that the operator \(-\Delta\) corresponds to the multiplication by \(|\xi|^2\). In particular, the fractional Laplacian recoversFootnote 2 the classical Laplacian as \(s\nearrow 1\). In addition, it satisfies the semigroup property: for any s, s′ ∈ (0, 1) with s + s′ ⩽ 1,

that is

As a special case of (2.4), when s = s′ = 1∕2, we have that the square root of the Laplacian applied twice produces the classical Laplacian, namely

This observation gives that if \(U:\mathbb {R}^n\times [0,+\infty )\to \mathbb {R}\) is the harmonic extensionFootnote 3 of \(u:\mathbb {R}^n\to \mathbb {R}\), i.e. if

then

See Appendix A for a confirmation of this. In a sense, formula (2.7) is a particular case of a general approach which reduces the fractional Laplacian to a local operator which is set in a halfspace with an additional dimension and may be of singular or degenerate type, see [30].
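For periodic functions, the Fourier description above can be implemented with the discrete Fourier transform. The following NumPy sketch is our own illustration and is not taken from the notes. It assumes 2π-periodic samples on a uniform grid and the normalization c = 1. It checks four things:

- the symbol \(|\xi|^{2s}\) on a single mode;
- the semigroup property;
- that the square root of the Laplacian applied twice gives \(-\Delta\);
- for one Fourier mode, the extension identity \((-\Delta)^{1/2}u(x)=-\partial_y U(x,0)\).

Reading (2.7) as that last identity is an assumption on our part. The function name `frac_lap_periodic` is hypothetical.

```python
import numpy as np


def frac_lap_periodic(u_vals, s, period=2 * np.pi):
    """(-Delta)^s of periodic samples on a uniform grid: multiply the discrete
    Fourier coefficients by |xi|^(2s) (normalization constant c = 1)."""
    n = u_vals.size
    xi = 2 * np.pi * np.fft.fftfreq(n, d=period / n)  # angular frequencies
    return np.real(np.fft.ifft(np.abs(xi) ** (2 * s) * np.fft.fft(u_vals)))


x = np.linspace(0, 2 * np.pi, 64, endpoint=False)

# 1) Symbol: (-Delta)^s cos(3x) = 3^(2s) cos(3x).
s = 0.4
err_symbol = np.max(np.abs(frac_lap_periodic(np.cos(3 * x), s) - 3 ** (2 * s) * np.cos(3 * x)))

# 2) Semigroup: (-Delta)^0.45 (-Delta)^0.3 u = (-Delta)^0.75 u for a smooth periodic u.
u = np.exp(np.sin(x))
err_semigroup = np.max(np.abs(
    frac_lap_periodic(frac_lap_periodic(u, 0.3), 0.45) - frac_lap_periodic(u, 0.75)))

# 3) Square root applied twice gives -u'' = (sin x - cos^2 x) exp(sin x).
minus_u_xx = (np.sin(x) - np.cos(x) ** 2) * np.exp(np.sin(x))
err_half_twice = np.max(np.abs(
    frac_lap_periodic(frac_lap_periodic(u, 0.5), 0.5) - minus_u_xx))

# 4) Extension: U(x, y) = exp(-k y) cos(k x) is harmonic and bounded in y > 0,
#    and -dU/dy(x, 0) should equal (-Delta)^(1/2) cos(k x).
k, h = 3.0, 1e-4


def U(xx, yy):
    return np.exp(-k * yy) * np.cos(k * xx)


# second-order one-sided difference for dU/dy at y = 0
minus_dU_dy = -(-3 * U(x, 0.0) + 4 * U(x, h) - U(x, 2 * h)) / (2 * h)
err_extension = np.max(np.abs(minus_dU_dy - frac_lap_periodic(np.cos(k * x), 0.5)))

print(err_symbol, err_semigroup, err_half_twice, err_extension)
```

All four errors should be at round-off level, except the last one, which is dominated by the \(O(h^2)\) error of the one-sided difference.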

As a rather approximate “general nonsense”, we may say that the fractional Laplacian shares some common features with the classical Laplacian. In particular, both the classical and the fractional Laplacian are invariant under translations and rotations. Moreover, a control on the size of the fractional Laplacian of a function translates, in view of (2.3), into a control of the oscillation of the function (though in a rather “global” fashion). This “democratic” tendency of the operator of “averaging out” any unevenness in the values of a function is indeed typical of “elliptic” operators. The classical Laplacian is the prototype example in this class of operators, while the fractional Laplacian is perhaps the most natural fractional counterpart.

To make this counterpart more clear, we will say that a function u is s-harmonic in a set Ω if (− Δ)s u = 0 at any point of Ω (for simplicity, we take this notion in the “strong” sense, but equivalently one could look at distributional definitions, see e.g. Theorem 3.12 in [18]).

For example, constant functions in \(\mathbb {R}^n\) are s-harmonic in the whole space for any s ∈ (0, 1), as both (2.1) and (2.2) imply.

Another similarity between classical and fractional Laplace equations is given by the fact that notions like those of fundamental solutions, Green functions and Poisson kernels are also well-posed in the fractional case and somehow similar formulas hold true, see e.g. Definitions 1.7 and 1.8, and Theorems 2.3, 2.10, 3.1 and 3.2 in [22] (and related formulas hold true also for higher-order fractional operators, see [3,4,5, 50]).

In addition, space inversions such as the Kelvin Transform also possess invariant properties in the fractional framework, see e.g. [19] (see also Lemma 2.2 and Corollary 2.3 in [63], and in addition Proposition A.1 on page 300 in [97] for a short proof). Moreover, fractional Liouville-type results hold under various assumptions, see e.g. [64] and [59].

Another interesting link between classical and fractional operators is given by subordination formulas, which allow one to reconstruct fractional operators from the heat flow of classical operators, such as

see [11].
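One standard form of such a subordination formula is \((-\Delta)^s u=\frac{1}{\Gamma(-s)}\int_0^{+\infty}\big(e^{t\Delta}u-u\big)\,\frac{dt}{t^{1+s}}\). We state it here as an assumption, since normalizations vary. The heat semigroup \(e^{t\Delta}\) multiplies the Fourier mode of frequency ξ by \(e^{-t|\xi|^2}\). On each mode, the formula therefore reduces to the scalar identity \(\lambda^s=\frac{1}{\Gamma(-s)}\int_0^{+\infty}(e^{-t\lambda}-1)\,t^{-1-s}\,dt\) with \(\lambda=|\xi|^2\). The Python/SciPy sketch below checks that identity numerically; the helper name is hypothetical.

```python
import math

import numpy as np
from scipy.integrate import quad
from scipy.special import gamma


def power_via_heat_semigroup(lam, s):
    """lam**s from the scalar subordination identity
    lam**s = (1 / Gamma(-s)) * int_0^inf (exp(-t lam) - 1) t^(-1-s) dt, 0 < s < 1."""
    # expm1 avoids cancellation in exp(-t lam) - 1 for small t
    f = lambda t: math.expm1(-t * lam) * t ** (-1.0 - s)
    integral = quad(f, 0.0, 1.0)[0] + quad(f, 1.0, np.inf)[0]
    # Gamma(-s) < 0 for 0 < s < 1 and the integral is negative, so the ratio is positive
    return integral / gamma(-s)


for lam, s in [(2.5, 0.3), (9.0, 0.5), (0.2, 0.8)]:
    print(lam, s, power_via_heat_semigroup(lam, s), lam ** s)
```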

In spite of all these similarities, many important structural differences between the classical and the fractional Laplacian arise. Let us list some of them.

Difference 2.1 (Locality Versus Nonlocality)

The classical Laplacian of u at a point x only depends on the values of u in B r(x), for any r > 0.

This is not true for the fractional Laplacian. For instance, if \(u\in C^\infty _0(B_2, \,[0,1])\) with u = 1 in B 1, we have that, for any \(x\in \mathbb {R}^n\setminus B_4\),

while of course Δu(x) = 0 in this setting.

It is worth remarking that the estimate in (2.8) is somewhat optimal. Indeed, if u belongs to the Schwartz space (or space of rapidly decreasing functions)

we have that, for large |x|,

See Appendix B for the proof of this fact.
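The far-field behavior discussed in (2.8) and (2.10) can be observed numerically in one dimension. In this Python sketch, which is our own illustration, we assume n = 1 and s = 1/2. We take as u a smooth nonnegative bump supported in (−2, 2). It is not equal to 1 on \(B_1\), so it is only a stand-in for the function used above.

Outside the support we have u(x) = 0, so (2.1) has no singularity and reduces to \(-\int u(y)\,|x-y|^{-1-2s}\,dy\). The rescaled quantity \(|x|^{1+2s}(-\Delta)^s u(x)\) should then approach \(-\int u\).

```python
import math

from scipy.integrate import quad

s = 0.5


def bump(y):
    # illustrative smooth, nonnegative bump supported in (-2, 2)
    return math.exp(-1.0 / (1.0 - y * y / 4.0)) if abs(y) < 2.0 else 0.0


mass = quad(bump, -2.0, 2.0)[0]


def frac_lap_outside_support(x):
    # For |x| >= 2 we have u(x) = 0, so (2.1) with n = 1 becomes
    # -int u(y) / |x - y|^(1 + 2s) dy, with no singularity.
    return -quad(lambda y: bump(y) / abs(x - y) ** (1 + 2 * s), -2.0, 2.0)[0]


for x in (5.0, 20.0, 100.0):
    # should tend to -1 as x grows
    print(x, frac_lap_outside_support(x) * x ** (1 + 2 * s) / mass)
```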

Difference 2.2 (Summability Assumptions)

The pointwise computation of the classical Laplacian on a function u does not require integrability properties on u. Conversely, formula (2.1) for u can make sense only when

which can be read as a local integrability condition complemented by a growth condition at infinity. This feature, which may look harmless at first glance, can become problematic when looking for singular solutions to nonlinear problems (as, for example, in [1, 66], where there is an unavoidable integrability obstruction on a bounded domain) or in “blow-up” type arguments (as mentioned in [59], where the authors propose a way to circumvent this restriction).

Difference 2.3 (Computation Along Coordinate Directions)

The classical Laplacian of u at the origin only depends on the values that u attains along the coordinate directions (or, up to a rotation, along a set of n orthogonal directions).

This is not true for the fractional Laplacian. As an example, let \(u\in C^\infty _0( B_2 (4e_1+4e_2), \,[0,1])\), with u = 1 in B 1(4e 1 + 4e 2). Let also R j be the straight line in the jth coordinate direction, that is

see Fig. 2. Then

for each j ∈{1, …, n}, and so u(te j) = 0 for all \(t\in \mathbb {R}\) and j ∈{1, …, n}. This gives that Δu(0) = 0.

On the other hand,

which says that (− Δ)s u(0)≠0.
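A quick numerical confirmation of the sign in Difference 2.3 follows, in Python with SciPy. It is an illustrative sketch, and n = 2 and s = 1/2 are our assumptions. Since u(0) = 0, u ⩾ 0 and u = 1 on \(B_1(4e_1+4e_2)\), formula (2.1) gives \((-\Delta)^s u(0)=-\int u(y)\,|y|^{-n-2s}\,dy\leqslant-\int_{B_1(4e_1+4e_2)}|y|^{-n-2s}\,dy\). The last integral is computed below in polar coordinates around the center of the ball.

```python
import math

from scipy.integrate import dblquad

s = 0.5
cx, cy = 4.0, 4.0  # center of B_1(4e_1 + 4e_2)


def integrand(rho, theta):
    # |y|^{-(n + 2s)} with n = 2, at y = center + rho (cos theta, sin theta);
    # the extra factor rho is the polar-coordinates Jacobian
    y1 = cx + rho * math.cos(theta)
    y2 = cy + rho * math.sin(theta)
    return rho / (y1 * y1 + y2 * y2) ** (1 + s)


# outer variable theta in [0, 2 pi], inner variable rho in [0, 1]
inner, _ = dblquad(integrand, 0.0, 2 * math.pi, lambda t: 0.0, lambda t: 1.0)
upper_bound = -inner  # (-Delta)^s u(0) <= upper_bound < 0
print(upper_bound)
```

The value is close to \(-\pi\,|4e_1+4e_2|^{-3}\), the area of the ball times the kernel at its center.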

Difference 2.4 (Harmonic Versus s-Harmonic Functions)

If Δu(0) = 1, \(\|u-v\|{ }_{C^2(B_1)}\leqslant \varepsilon \) and ε > 0 is sufficiently small (see Fig. 3) then Δv(0)⩾1 − const ε > 0, and in particular Δv(0)≠0.

Quite surprisingly, this is not true for the fractional Laplacian. More generally, in this case, as proved in [55], for any ε > 0 and any (bounded, smooth) function \(\bar u\), we can find v ε such that

A proof of this fact in dimension 1 for the sake of simplicity is given in [112] (the original paper [55] presents a complete proof in any dimension). See also [70, 99, 100] for different approaches to approximation methods in fractional settings which lead to new proofs, and very refined and quantitative statements.

We also mention that the phenomenon described in (2.11), which can be summarized in the evocative statement that all functions are locally s-harmonic up to a small error, is very general. It applies to other nonlocal operators, also independently of their possibly “elliptic” structure (for instance, all functions are locally s-caloric, or s-hyperbolic, etc.). In this spirit, for completeness, in Sect. 5 we will establish the density of fractional caloric functions in one space variable, namely the fact that for any ε > 0 and any (bounded, smooth) function \(\bar u=\bar u(x,t)\), we can find \(v_\varepsilon=v_\varepsilon(x,t)\) such that

We also refer to [58] for a general approach and a series of general results on this type of approximation problems with solutions of operators which are the superposition of classical differential operators with fractional Laplacians. Furthermore, similar results hold true for other nonlocal operators with memory, see [23]. See in addition [36, 37, 79] for related results on higher order fractional operators.

Difference 2.5 (Harnack Inequality)

The classical Harnack Inequality says that if u is harmonic in B 1 and u⩾0 in B 1 then

for a suitable universal constant, only depending on the dimension.

The same result is not true for s-harmonic functions. To construct an easy counterexample, let \(\bar u(x)=|x|{ }^2\) and, for a small ε > 0, let v ε be as in (2.11). Notice that, if x ∈ B 1 ∖ B 1∕4

if ε is small enough, while

These observations imply that v ε(0) < v ε(x) for all x ∈ B 1 ∖ B 1∕4 and therefore the infimum of v ε in B 1 is taken at some point \(\bar x\) in the closure of B 1∕4. Then, we define

Notice that u ε is s-harmonic in B 1, since so is v ε, and u ε⩾0 in B 1. Also, u ε is strictly positive in B 1 ∖ B 1∕4. On the other hand, since \(\bar x\in B_{1/2}\)

which implies that u ε cannot satisfy a Harnack Inequality as the one in (2.13).

In any case, it must be said that suitable Harnack Inequalities are valid also in the fractional case, under suitable “global” assumptions on the solution: for instance, the Harnack Inequality holds true for solutions that are positive in the whole of \(\mathbb {R}^n\) rather than in a given ball. We refer to [75, 76] for a comprehensive discussion on this topic and for recent developments.

Difference 2.6 (Growth from the Boundary)

Roughly speaking, solutions of Laplace equations have “linear (i.e. Lipschitz) growth from the boundary”, while solutions of fractional Laplace equations have only Hölder growth from the boundary. To understand this phenomenon, we point out that if u is continuous in the closure of B 1, with Δu = f in B 1 and u = 0 on ∂B 1, then

Notice that the term (1 −|x|) represents the distance of the point x ∈ B 1 from ∂B 1. See e.g. Appendix C for a proof of (2.14).

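The case of fractional equations is very different. A first example which may be useful to keep in mind is that the function \(\mathbb{R}\ni x\mapsto x_+^s:=\max\{x,0\}^s\) is s-harmonic in \((0,+\infty)\), that is

\[
(-\Delta)^s x_+^s=0\quad\text{for every } x\in(0,+\infty). \tag{2.15}
\]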

For an elementary proof of this fact, see e.g. Section 2.4 in [24]. Remarkably, the function in (2.15) is only Hölder continuous with Hölder exponent s near the origin.
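The s-harmonicity of \(x_+^s\) in \((0,+\infty)\) can also be probed numerically. The Python sketch below is an illustration, not the proof in [24]. It evaluates the one-dimensional form of (2.2), \(\int_0^{+\infty}\frac{2u(x)-u(x+z)-u(x-z)}{z^{1+2s}}\,dz\), without any normalization constant. The integral is split where the integrand is not smooth. The helper `frac_lap_1d` and its `breaks` argument are hypothetical names.

```python
import numpy as np
from scipy.integrate import quad


def frac_lap_1d(u, x, s, breaks=()):
    """Unnormalized (-Delta)^s u(x) in one dimension, from the symmetric form (2.2):
    int_0^inf (2u(x) - u(x + z) - u(x - z)) / z^(1 + 2s) dz.
    `breaks` lists the z > 0 where the integrand is not smooth."""
    pts = sorted({0.0, *[b for b in breaks if b > 0]})
    f = lambda z: (2 * u(x) - u(x + z) - u(x - z)) / z ** (1 + 2 * s)
    total = sum(quad(f, a, b, limit=200)[0] for a, b in zip(pts, pts[1:]))
    return total + quad(f, pts[-1], np.inf, limit=200)[0]


for s in (0.5, 0.3):
    u = lambda y, s=s: max(y, 0.0) ** s  # x_+^s
    # u(x - z) has a kink at z = x, so x is passed as a breakpoint
    print(s, [frac_lap_1d(u, x, s, breaks=(x,)) for x in (0.5, 1.0, 3.0)])
```

Each printed value should vanish up to quadrature error.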

Another interesting example is given by the function

which satisfies

A proof of (2.17) based on extension methods and complex analysis is given in Appendix D.

The identity in (2.17) is in fact a special case of a more general formula, according to which the function

satisfies

For this formula, and in fact even more general ones, see [61]. See also [69] for a probabilistic approach.
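In one dimension, the constancy of the fractional Laplacian of the profile in (2.18) on the unit interval can be checked with the same kind of quadrature. The Python sketch below assumes that \(u_s(x)=(1-|x|^2)_+^s\), which is our reading of the notation. With the unnormalized definition (2.1) and s = 1/2, the constant is π. One can verify this at x = 0 with the substitution z = sin θ. For other values of s, the sketch only compares values at different points. The helper names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad


def frac_lap_1d(u, x, s, breaks=()):
    """Unnormalized (-Delta)^s u(x) in 1D via int_0^inf (2u(x)-u(x+z)-u(x-z)) z^(-1-2s) dz."""
    pts = sorted({0.0, *[b for b in breaks if b > 0]})
    f = lambda z: (2 * u(x) - u(x + z) - u(x - z)) / z ** (1 + 2 * s)
    total = sum(quad(f, a, b, limit=200)[0] for a, b in zip(pts, pts[1:]))
    return total + quad(f, pts[-1], np.inf, limit=200)[0]


def ball_profile(s):
    # u_s(y) = (1 - y^2)_+^s
    return lambda y: max(1.0 - y * y, 0.0) ** s


for s in (0.5, 0.3):
    u = ball_profile(s)
    # the integrand is not smooth where x + z = 1 or x - z = -1
    print(s, [frac_lap_1d(u, x, s, breaks=(1 - x, 1 + x)) for x in (0.0, 0.4, 0.8)])
```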

Interestingly, (2.15) can be obtained from (2.19) by a blow-up at a point on the zero level set.

Notice also that

therefore, differently from the classical case, u s does not satisfy an estimate like that in (2.14).

It is also interesting to observe that the function u s is related to the function \(x_+^{s}\) via space inversion (namely, a Kelvin transform) and integration, and indeed one can also deduce (2.19) from (2.15): this fact was nicely remarked to us by Xavier Ros-Oton and Joaquim Serra, and the simple but instructive proof is sketched in Appendix E.

Difference 2.7 (Global (Up to the Boundary) Regularity)

Roughly speaking, solutions of Laplace equations are “smooth up to the boundary”, while solutions of fractional Laplace equations are not better than Hölder continuous at the boundary. To understand this phenomenon, we point out that if u is continuous in the closure of B 1,

then

See e.g. Appendix F for a proof of this fact.

The case of fractional equations is very different, since the function \(u_s\) in (2.18) is only Hölder continuous (with Hölder exponent s) in \(B_1\). Hence the global Lipschitz estimate in (2.21) does not hold in this case. This phenomenon can be seen as a counterpart of the one discussed in Difference 2.6. The boundary regularity for fractional Laplace problems is discussed in detail in [97].

Difference 2.8 (Explosive Solutions)

Solutions of classical Laplace equations cannot attain infinite values in the whole of the boundary. For instance, if u is harmonic in B 1, then

Indeed, by the Mean Value Property for harmonic functions, for any ρ ∈ (0, 1),

from which (2.22) plainly follows (another proof follows by using the Maximum Principle instead of the Mean Value Property). On the contrary, and quite remarkably, solutions of fractional Laplace equations may “explode” at the boundary and (2.22) can be violated by s-harmonic functions in B 1 which vanish outside B 1.

For example, for

one has

and, of course, (2.22) is violated by u −1∕2. The claim in (2.24) can be proven starting from (2.17) and by suitably differentiating both sides of the equation: the details of this computation can be found in Appendix G. For completeness, we also give in Appendix H another proof of (2.24) based on complex variable and extension methods.
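A numerical sanity check of (2.24) follows, in Python. It assumes that \(u_{-1/2}(x)=(1-x^2)_+^{-1/2}\) in one dimension and s = 1/2, which is consistent with the notation of (2.18) and with the discussion of explosive rates below. The function is unbounded at ±1. The singularities are integrable, however, so adaptive quadrature on subintervals that end at the singular points still applies. The helper names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad

s = 0.5


def u_explosive(y):
    # u_{-1/2}(y) = (1 - y^2)^(-1/2) in (-1, 1), and 0 outside
    return (1.0 - y * y) ** -0.5 if abs(y) < 1.0 else 0.0


def frac_lap_1d(u, x, s, breaks=()):
    """Unnormalized (-Delta)^s u(x) in 1D via int_0^inf (2u(x)-u(x+z)-u(x-z)) z^(-1-2s) dz."""
    pts = sorted({0.0, *[b for b in breaks if b > 0]})
    f = lambda z: (2 * u(x) - u(x + z) - u(x - z)) / z ** (1 + 2 * s)
    total = sum(quad(f, a, b, limit=200)[0] for a, b in zip(pts, pts[1:]))
    return total + quad(f, pts[-1], np.inf, limit=200)[0]


for x in (0.0, 0.5, -0.9):
    # u(x + z) and u(x - z) blow up at z = 1 - x and z = 1 + x; expected value close to 0
    print(x, frac_lap_1d(u_explosive, x, s, breaks=(1 - x, 1 + x)))
```

At x = 0 the two pieces can also be computed by hand. The integral over (0, 1) equals −2 and the tail over (1, +∞) equals 2, so they cancel exactly, in line with the “miracle” described below.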

A geometric interpretation of (2.24) is depicted in Fig. 4 where a point x ∈ (−1, 1) is selected and the graph of u −1∕2 above the value u −1∕2(x) is drawn with a “dashed curve” (while a “solid curve” represents the graph of u −1∕2 below the value u −1∕2(x)): then, when computing the fractional Laplacian at x, the values coming from the dashed curve, compared with u −1∕2(x), provide an opposite sign with respect to the values coming from the solid curve. The “miracle” occurring in (2.24) is that these two contributions with opposite sign perfectly compensate and cancel each other, for any x ∈ (−1, 1).

Fig. 4: The function \(u_{-1/2}\) and the cancellation occurring in (2.24)

More generally, in every smooth bounded domain \(\Omega \subset \mathbb {R}^n\) it is possible to build s-harmonic functions exploding at ∂Ω at the same rate as \(\mathrm{dist}(\cdot,\partial\Omega)^{s-1}\). A phenomenon of this sort was observed in [66]. See [1] for the explicit explosion rate, for a justification of the boundary behavior, and for the study of Dirichlet problems prescribing a singular boundary trace.

Concerning this feature of explosive solutions at the boundary, it is interesting to point out a simple analogy with the classical Laplacian. Indeed, in view of (2.15), if s ∈ (0, 1) and we take the function \(\mathbb {R}\ni x\mapsto x_+^s\), we know that it is s-harmonic in (0, +∞) and that it vanishes on the boundary (namely, the origin); these features have a clear classical analogue for s = 1. Moreover, for all s ∈ (0, 1] the derivative of \(x_+^s\) is \(x_+^{s-1}\), up to multiplicative constants, and \((-\Delta)^s\) commutes with differentiation. Hence the latter function is s-harmonic in (0, +∞), and it blows up at the origin when s ∈ (0, 1). Conversely, when s = 1 one can do the same computations, but the resulting function is simply the characteristic function of (0, +∞), so no explosive effect arises.

Similar computations can be done in the unit ball instead of (0, +∞), and one simply gets functions that are bounded up to the boundary when s = 1, or explosive when s ∈ (0, 1) (further details in Appendices G and H).

Difference 2.9 (Decay at Infinity)

The Gaussian \(e^{-|x|{ }^2}\) reproduces the classical heat kernel. That is, the solution of the heat equation with initial datum concentrated at the origin, when considered at time t = 1∕4, produces the Gaussian (of course, the choice t = 1∕4 is only for convenience, any time t can be reduced to unit time by scaling the equation).

The fast decay prescribed by the Gaussian is specific to the classical case, whereas the fractional case exhibits power-law decay at infinity. More precisely, let us consider the fractional heat equation with initial datum concentrated at the origin, that is

and set

By taking the Fourier Transform of (2.25) in the x variable (and possibly neglecting normalization constants) one finds that

hence

and consequently

where \({\mathcal {F}}^{-1}\) denotes the inverse of the Fourier Transform \({\mathcal {F}}\). When s = 1, and neglecting the normalizing constants, the expression in (2.28) reduces to the Gaussian, since the Gaussian is the Fourier Transform of itself. On the other hand, as far as we know, there is no simple explicit representation of the fractional heat kernel in (2.28), except in the “miraculous” case s = 1∕2, in which (2.28) provides the explicit representation

See Appendix I for a proof of (2.29) using Fourier methods and Appendix J for a proof based on extension methods.
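The representation (2.28) can be evaluated by numerical quadrature in one dimension. The Python sketch below makes three assumptions:

- the convention \(\mathcal{F}^{-1}f(x)=\frac{1}{2\pi}\int_{\mathbb{R}} f(\xi)\,e^{ix\xi}\,d\xi\) (other conventions change the constants);
- time t = 1;
- n = 1.

With these choices, s = 1/2 should give the Cauchy profile \(\frac{1}{\pi(1+x^2)}\), and s = 1 the Gaussian \(\frac{1}{\sqrt{4\pi}}e^{-x^2/4}\). The function name is hypothetical.

```python
import numpy as np
from scipy.integrate import quad


def fractional_heat_kernel_1d(x, s):
    """G_s(x) = (1/pi) * int_0^inf exp(-xi^(2s)) cos(x xi) d xi  (n = 1, t = 1).
    The integral is truncated where exp(-xi^(2s)) drops below exp(-60)."""
    cutoff = 60.0 ** (1.0 / (2.0 * s))
    val, _ = quad(lambda xi: np.exp(-xi ** (2 * s)) * np.cos(x * xi), 0.0, cutoff, limit=500)
    return val / np.pi


for x in (0.0, 1.0, 3.0, 10.0):
    cauchy = 1.0 / (np.pi * (1.0 + x * x))
    gauss = np.exp(-x * x / 4.0) / np.sqrt(4.0 * np.pi)
    print(x, fractional_heat_kernel_1d(x, 0.5), cauchy, fractional_heat_kernel_1d(x, 1.0), gauss)
```

For s = 1/2, the product \(x^2\,\mathcal G_{1/2}(x)\) approaches \(1/\pi\). This matches the \(|x|^{-(n+2s)}\) behavior with n = 1 and s = 1/2, whereas the Gaussian column collapses to zero much faster.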

We stress that, differently from the classical case, the heat kernel \({\mathcal {G}}_{1/2}\) decays only with a power law. This is in fact a general feature of the fractional case, since, for any s ∈ (0, 1), it holds that

and, for |x|⩾1 and s ∈ (0, 1), the heat kernel \({\mathcal {G}}_s (x)\) is bounded from below and from above by \(\frac {\,{\mathrm {const}}\,}{|x|{ }^{n+2s}}\).

We refer to [78] for a detailed discussion on the fractional heat kernel. See also [13] for more information on the fractional heat equation. For precise asymptotics on fractional heat kernels, see [15, 17, 47, 95].

The decay of the heat kernel is also related to the associated distribution in probability theory: as we will see in Sect. 4.2, the heat kernel represents the probability density of finding a particle at a given point after a unit of time; the motion of such particle is driven by a random walk in the classical case and by a random process with long jumps in the fractional case and, as a counterpart, the fractional probability distribution exhibits a “long tail”, in contrast with the rapidly decreasing classical one.

Another situation in which the classical case provides exponentially fast decaying solutions while the fractional case exhibits polynomial tails is given by the Allen-Cahn equation (see e.g. Section 1.1 in [65] for a simple description of this equation also in view of phase coexistence models). For concreteness, one can consider the one-dimensional equation

For s = 1, the system in (2.31) reduces to the pendulum-like system

The solution of (2.32) is explicit and it has the form

as one can easily check. Also, by inspection, we see that such solution satisfies

Conversely, to the best of our knowledge, the solution of (2.31) has no simple explicit expression. Also, remarkably, the solution of (2.31) decays to the equilibria ± 1 only polynomially fast. Namely, as proved in Theorem 2 of [92], we have that the solution of (2.31) satisfies

and the estimates in (2.35) are optimal, namely it also holds that

See Appendix K for a proof of (2.36). In particular, (2.36) says that solutions of fractional Allen-Cahn equations such as the one in (2.31) do not satisfy the exponential decay in (2.34) which is fulfilled in the classical case.
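For comparison, the classical profile can be checked directly. The sketch assumes that, for s = 1, problem (2.31) reads \(-\ddot u=u-u^3\) with \(u\to\pm1\) at ±∞. It also assumes that the explicit solution (2.33) is \(u(t)=\tanh(t/\sqrt2)\). Both forms are assumptions on our part, consistent with the exponential decay described above.

The Python code measures two things. The first is a finite-difference residual. The second is the exponential rate: since \(1-\tanh(t/\sqrt2)=\frac{2e^{-\sqrt2 t}}{1+e^{-\sqrt2 t}}\), the product \((1-u(t))\,e^{\sqrt2 t}\) tends to 2.

```python
import numpy as np

# Assumed classical (s = 1) problem: -u'' = u - u^3, candidate u(t) = tanh(t / sqrt(2)).
t = np.linspace(-8.0, 8.0, 1601)
h = t[1] - t[0]
u = np.tanh(t / np.sqrt(2.0))
u_tt = (u[2:] - 2 * u[1:-1] + u[:-2]) / h**2  # centered second difference
residual = -u_tt - (u[1:-1] - u[1:-1] ** 3)
print("max residual:", np.max(np.abs(residual)))

# Exponential approach to the equilibrium +1: (1 - u(t)) exp(sqrt(2) t) -> 2.
big_t = np.array([4.0, 6.0, 8.0])
ratio = (1.0 - np.tanh(big_t / np.sqrt(2.0))) * np.exp(np.sqrt(2.0) * big_t)
print("rescaled gap:", ratio)
```

Replacing the exponential weight by a power \(t^{2s}\) would instead be the natural test for the fractional profile, in view of (2.35) and (2.36).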

The estimate in (2.36) can be confirmed by looking at the solution of the very similar equation

Though a simple expression of the solution of (2.37) is not available in general, the “miraculous” case s = 1∕2 possesses an explicit solution, given by

That (2.38) is a solution of (2.37) when s = 1∕2 is proved in Appendix L. Another proof of this fact using (2.29) is given in Appendix M.

The reader should not be misled by the similar typographic forms of (2.33) and (2.38), which represent two very different behaviors at infinity: indeed

and the function in (2.38) satisfies the slow decay in (2.36) (with s = 1∕2) and not the exponentially fast one in (2.34).

Equations like the one in (2.31) naturally arise, for instance, in long-range phase coexistence models and in models arising in atom dislocation in crystals, see e.g. [52, 110].

A similar slow decay also occurs in the study of fractional Schrödinger operators, see e.g. [38] and Lemma C.1 in [68]. For instance, the solution of

satisfies, for any |x|⩾1,

A heuristic motivation for a bound of this type can be “guessed” from (2.39) by thinking that, for large |x|, the function Γ should decay more or less like (− Δ)s Γ, which has “typically” the power law decay described in (2.10).

If one wishes to keep arguing in this heuristic way, also the decays in (2.30) and (2.36) may be seen as coming from an interplay between the right and the left side of the equation, in the light of the decay of the fractional Laplace operator discussed in (2.10). For instance, to heuristically justify (2.30), one may think that the solution of the fractional heat equation which starts from a Dirac’s Delta, after a unit of time (or an “infinitesimal unit” of time, if one prefers) has produced some bump, whose fractional Laplacian, in view of (2.10), may decay at infinity like \(\frac 1{|x|{ }^{n+2s}}\). Since the time derivative of the solution has to be equal to that, the solution itself, in this unit of time, gets “pushed up” by an amount like \(\frac 1{|x|{ }^{n+2s}}\) with respect to the initial datum, thus justifying (2.30).

A similar justification for (2.36) may seem trickier, since the decay in (2.36) is only of the type \(\frac 1{|t|{ }^{2s}}\) instead of \(\frac 1{|t|{ }^{1+2s}}\), as the analysis in (2.10) would suggest. But to understand the problem, it is useful to consider the derivative of the solution \(v:=\dot u\) and deduce from (2.31) that

That is, for large |t|, the term 1 − 3u 2 gets close to 1 − 3 = −2 and so the profile at infinity may locally resemble the one driven by the equation (− Δ)s v = −2v. In this range, v has to balance its fractional Laplacian, which is expected to decay like \(\frac 1{|t|{ }^{1+2s}}\), in view of (2.10). Then, since u is the primitive of v, one may expect that its behavior at infinity is related to the primitive of \(\frac 1{|t|{ }^{1+2s}}\), and so to \(\frac 1{|t|{ }^{2s}}\), which is indeed the correct answer given by (2.36).

We are not attempting here to make these heuristic considerations rigorous, but perhaps these kinds of comments may be useful in understanding why the behavior of nonlocal equations is different from that of classical equations and to give at least a partial justification of the delicate quantitative aspects involved in a rigorous quantitative analysis (in any case, ideas like these are rigorously exploited for instance in Appendix K).

See also [21] for decay estimates of ground states of a nonlinear nonlocal problem.

We also mention that other very interesting differences in the decay of solutions arise in the study of different models for fractional porous medium equations, see e.g. [33, 34, 48].

Difference 2.10 (Finiteness Versus Infiniteness of the Mean Squared Displacement)

The mean squared displacement is a useful notion to measure the “speed of a diffusion process”, or more precisely the portion of the space that gets “invaded” at a given time by the spreading of the diffusive quantity which is concentrated at a point source at the initial time. In a formula, let u(x, t) be the fundamental solution of the diffusion equation related to the diffusion operator \(\mathcal{L}\), namely

\[
\begin{cases}
\partial_t u=\mathcal{L}u & \text{in } \mathbb{R}^n\times(0,+\infty),\\
u(\cdot,0)=\delta_0,
\end{cases} \tag{2.41}
\]

where \(\delta_0\) is the Dirac's Delta. One can then define the mean squared displacement relative to the diffusion process \(\mathcal{L}\) as the “second moment” of u in the space variables, that is

\[
\int_{\mathbb{R}^n}|x|^2\,u(x,t)\,dx. \tag{2.42}
\]

For the classical heat equation, by Fourier Transform one sees that, when \(\mathcal{L}=\Delta\), the fundamental solution of (2.41) is given by the classical heat kernel

\[
u(x,t)=\frac{1}{(4\pi t)^{n/2}}\,e^{-\frac{|x|^2}{4t}},
\]

and thereforeFootnote 4 in such case, the substitution \(y:=\frac {x}{2\sqrt {t}}\) gives that

for some C > 0. This says that the mean squared displacement of the classical heat equation is finite, and linear in the time variable.

On the other hand, in the fractional case in which \(\mathcal{L}=-(-\Delta)^s\), by (2.27) the fractional heat kernel is endowed with the scaling property

\[
u(x,t)=\frac{1}{t^{\frac{n}{2s}}}\,{\mathcal{G}}_s\Big(\frac{x}{t^{\frac1{2s}}}\Big),
\]

with \({\mathcal {G}}_s\) being as in (2.25) and (2.26). Consequently, in this case, the substitution \(y:= \frac {x}{t^{\frac 1{2s}} }\) gives that

Now, from (2.30), we know that

and therefore we infer from (2.44) that

This computation shows that, when s ∈ (0, 1), the diffusion process induced by − (− Δ)s does not possess a finite mean squared displacement, in contrast with the classical case in (2.43).
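The contrast between (2.43) and (2.45) can be seen numerically in one dimension (n = 1), in the following Python sketch. For s = 1/2 we combine the scaling property above with the one-dimensional Cauchy profile \(\mathcal{G}_{1/2}(x)=\frac{1}{\pi(1+x^2)}\). This explicit normalization is an assumption, tied to the usual \(\frac1{2\pi}\) Fourier inversion convention.

The classical second moment equals 2t. The truncated fractional one instead grows linearly in the truncation radius R, since \(\int_{-R}^{R}x^2\,\frac{t}{\pi(t^2+x^2)}\,dx=\frac{t}{\pi}\big(2R-2t\arctan\frac Rt\big)\).

```python
import numpy as np
from scipy.integrate import quad


def gaussian_kernel(x, t):
    # classical heat kernel in one dimension (fundamental solution of u_t = u_xx)
    return np.exp(-x * x / (4.0 * t)) / np.sqrt(4.0 * np.pi * t)


def cauchy_kernel(x, t):
    # s = 1/2 kernel in one dimension: t^(-1) G_{1/2}(x / t), G_{1/2}(x) = 1 / (pi (1 + x^2))
    return t / (np.pi * (t * t + x * x))


t = 0.7
msd_classical = quad(lambda x: x * x * gaussian_kernel(x, t), -np.inf, np.inf)[0]
print("classical:", msd_classical, "vs 2t =", 2 * t)

for R in (10.0, 100.0, 1000.0):
    truncated = quad(lambda x: x * x * cauchy_kernel(x, 1.0), -R, R, limit=200)[0]
    print("s = 1/2, R =", R, ":", truncated)  # grows like 2R/pi: no finite limit
```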

Other important differences between the classical and fractional cases arise in the study of nonlocal minimal surfaces and in related fields. To list just a few:

- unlike in the classical case, nonlocal minimal surfaces typically “stick” at the boundary, see [25, 53, 56];
- the gradient bounds of nonlocal minimal graphs differ from the classical ones, see [26];
- nonlocal catenoids grow linearly and nonlocal stable cones arise in lower dimension, see [45, 46];
- stable surfaces of vanishing nonlocal mean curvature possess uniform perimeter bounds, see Corollary 1.8 in [42];
- the nonlocal mean curvature flow develops singularities also in the plane, see [41], its fattening phenomena are different, see [40], and its self-shrinking solutions are also different, see [39];
- genuinely nonlocal phase transitions present stronger rigidity properties than in the classical case, see e.g. Theorem 1.2 in [60] and [67].

Furthermore, from the probabilistic viewpoint, recurrence and transience in long-jump stochastic processes are different from the case of classical random walks, see e.g. [6] and the references therein.

We would like to conclude this list of differences with one similarity, which does not seem to be very well known. There is indeed a “nonlocal representation” for the classical Laplacian in terms of a singular kernel. It reads as

This one is somehow very close to (2.2) with one important modification: the difference operator in the numerator of the integrand has been increased in order, in such a way that it is able to compensate the singularity of the kernel in 0. We include in Appendix N a computation proving (2.46) when u is C 2, α around x. For a complete proof, involving Fourier transform techniques and providing the explicit value of the constant, we refer to [3].

2.2 The Regional (or Censored) Fractional Laplacian

A variant of the fractional Laplacian in (2.1) consists in restricting the domain of integration to a subset of \(\mathbb {R}^n\). In this direction, an interesting operator is defined by the following singular integral:

We remark that when \(\Omega :=\mathbb {R}^n\) the regional fractional Laplacian in (2.47) boils down to the standard fractional Laplacian in (2.1).

In spite of the apparent similarity, the regional fractional Laplacian and the fractional Laplacian are structurally two different operators. For instance, concerning Difference 2.4, we mention that solutions of regional fractional Laplace equations do not possess the same rich structure as those of fractional Laplace equations, and indeed

A proof of this observation will be given in Appendix O.

Interestingly, the regional fractional Laplacian turns out to be useful also in a possible setting of Neumann-type conditions in the nonlocal case, as presentedFootnote 5 in [54]. Related to this, we mention that it is possible to obtain a regional-type operator starting from the classical Laplacian coupled with Neumann boundary conditions (details about it will be given in formula (2.52) below).

2.3 The Spectral Fractional Laplacian

Another natural fractional operator arises by taking fractional powers of the eigenvalues. For this, we write

where \(\phi_k\) is the eigenfunction corresponding to the k-th eigenvalue of the Dirichlet Laplacian, namely

with \(0<\lambda _0<\lambda _1\leqslant \lambda _2\leqslant \dots \). We normalize the sequence \(\phi_k\) to make it an orthonormal basis of \(L^2(\Omega)\) (see e.g. page 335 in [62]). In this setting, we define

We refer to [109] for extension methods for this type of operator. Furthermore, other types of fractional operators can be defined in terms of different boundary conditions: for instance, a spectral decomposition with respect to the eigenfunctions of the Laplacian with Neumann boundary data naturally leads to an operator \((-\Delta )^s_{N,\Omega }\) (and this operator also has applications in biology, see e.g. [90] and [57]).
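To make the spectral definition concrete, here is a minimal numerical sketch under simplifying assumptions: Ω = (0, π) in one dimension, where the Dirichlet eigenfunctions are proportional to sin(kx) with eigenvalues k² (indexed here from k = 1, whereas the text labels the first eigenvalue \(\lambda_0\)), and only finitely many modes are kept. The function name is hypothetical; the discrete sine expansion is a discretization choice, not part of the text.

```python
import numpy as np


def spectral_frac_laplacian_dirichlet(u_vals, s):
    """Spectral fractional Laplacian on (0, pi) with Dirichlet data (sketch).

    u_vals holds u at the interior nodes x_j = j*pi/M, j = 1, ..., M-1.
    The Dirichlet eigenfunctions of -u'' on (0, pi) are proportional to sin(k x),
    with eigenvalues k**2 (k = 1, 2, ...); only the first M-1 modes are kept.
    """
    u_vals = np.asarray(u_vals, dtype=float)
    M = u_vals.size + 1
    k = np.arange(1, M)
    # S[k-1, j-1] = sin(k x_j); this matrix is symmetric
    S = np.sin(np.outer(k, k) * np.pi / M)
    # discrete sine coefficients: u(x_j) = sum_k b_k sin(k x_j)
    b = (2.0 / M) * (S @ u_vals)
    # multiply the k-th coefficient by (lambda_k)**s = k**(2s) and resum
    return S @ (k ** (2.0 * s) * b)
```

For instance, applied to samples of sin(3x) with s = 1/2 it returns samples of 3 sin(3x), since each eigenfunction is simply rescaled by \(\lambda_k^s\).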

It is also interesting to observe that the spectral fractional Laplacian with Neumann boundary conditions can also be written in terms of a regional operator with a singular kernel. Namely, given an open and bounded set \(\Omega \subset \mathbb {R}^n\), denoting by \(\Delta_{N,\Omega}\) the Laplacian coupled with Neumann boundary conditions on \(\partial\Omega\), we denote by \({\{(\mu _j,\psi _j)\}}_{j\in \mathbb {N}}\) the pairs of eigenvalues and eigenfunctions of \(-\Delta_{N,\Omega}\), that is

with \(0=\mu _0<\mu _1\leqslant \mu _2\leqslant \mu _3\leqslant \dots \).

We define the following operator by making use of a spectral decomposition

Comparing with (2.50), we can regard \((-\Delta )^s_{N,\Omega }\) as a spectral fractional Laplacian with respect to classical Neumann data. In this setting, the operator \((-\Delta )^s_{N,\Omega }\) is also an integrodifferential operator of regional type, in the sense that one can write

for a kernel K(x, y) which is comparable to \(\frac 1{|x-y|{ }^{n+2s}}\). We refer to Appendix P for a proof of this.

Interestingly, the fractional Laplacian and the spectral fractional Laplacian coincide, up to a constant, for periodic functions, or functions defined on the flat torus, namely

See e.g. Appendix Q for a proof of this fact.
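A quick way to see this coincidence on the one-dimensional torus of period 2π: the Fourier mode \(e^{ikx}\) is an eigenfunction of −Δ with eigenvalue k², so taking spectral powers multiplies it by \(|k|^{2s}\), which is the Fourier symbol \(|\xi|^{2s}\) of the fractional Laplacian evaluated at integer frequencies. The FFT sketch below applies this multiplier; it assumes the normalization in which the symbol is exactly \(|\xi|^{2s}\) (other normalizations change the result by a constant), and the function name is hypothetical.

```python
import numpy as np


def periodic_frac_laplacian(u_vals, s, period=2 * np.pi):
    """(-Delta)^s on samples of a periodic function, via the multiplier |xi|**(2s).

    u_vals are samples on a uniform grid covering one period (endpoint excluded).
    """
    u_vals = np.asarray(u_vals, dtype=float)
    n = u_vals.size
    # angular frequencies of the discrete Fourier modes on this grid
    xi = 2 * np.pi * np.fft.fftfreq(n, d=period / n)
    return np.real(np.fft.ifft(np.abs(xi) ** (2 * s) * np.fft.fft(u_vals)))
```

For example, cos(5x) + sin(2x) is mapped to \(5^{2s}\cos(5x)+2^{2s}\sin(2x)\), and constants are mapped to zero.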

On the other hand, striking differences between the fractional Laplacian and the spectral fractional Laplacian hold true, see e.g. [91, 107].

Interestingly, it is not true that all functions are s-harmonic with respect to the spectral fractional Laplacian, up to a small error, that is

A proof of this will be given in Appendix R. The reader can easily compare (2.54) with the setting for the fractional Laplacian discussed in Difference 2.4.

Remarkably, in spite of these differences, the spectral fractional Laplacian can also be written as an integrodifferential operator of the form

for a suitable kernel K and potential β, see Lemma 38 in [2] or Lemma 10.1 in [20]. This can be proved with analogous computations to those performed in the case of the regional fractional Laplacian in the previous paragraph.

2.4 Fractional Time Derivatives

The operators described in Sections 2.1, 2.2, and 2.3 are often used in the mathematical description of anomalous types of diffusion (i.e. diffusive processes which differ significantly from the one described by the classical heat equation, as we will discuss in Sect. 4): the main role of such nonlocal operators is usually to produce a different behavior of the diffusion process with respect to the space variables.

Other types of anomalous diffusions arise from non-standard behaviors with respect to the time variable. These aspects are often the mathematical counterpart of memory effects. As a prototype example, we recall the notion of Caputo fractional derivative, which, for any t > 0 (and up to normalizing factors that we omit for simplicity) is given by

We point out that, for regular enough functions u,

Though in principle this expression takes into account only the values of u(t) for t⩾0, so that u does not need to be defined for negative times, it may also be convenient, as pointed out e.g. in Section 2 of [7], to extend u as a constant on (−∞, 0). Hence, we adopt the convention that u(t) = u(0) for any t⩽0. With this extension, one has that, for any t > 0,

Hence, one can write (2.57) as

This type of formulas also relates the Caputo derivative to the so-called Marchaud derivative, see e.g. [104].

In the literature, one can also consider higher order Caputo derivatives, see e.g. [85, 89] and the references therein.

Also, it is useful to consider the Caputo derivative in light of the (unilateral) Laplace Transform (see e.g. Chapter 2.8 in [94], and [86])

With this notation, up to dimensional constants, one can write (for a smooth function with exponential control at infinity) that

see Appendix S for a proof.
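For orientation, here is a sketch of where such a formula comes from. It assumes the common normalization \(\partial^s_{C,t}u(t)=\frac{1}{\Gamma(1-s)}\int_0^t u'(\tau)\,(t-\tau)^{-s}\,d\tau\) and \({\mathcal L}u(\omega)=\int_0^{+\infty}e^{-\omega t}u(t)\,dt\) for ω > 0 (the text omits normalizing factors, so the constants here need not match those in the text). The key ingredient is that the Laplace Transform turns a convolution on (0, t) into a product:

```latex
\begin{aligned}
\partial^s_{C,t}u &= \frac{1}{\Gamma(1-s)}\,\big(k_s * u'\big),
  \qquad k_s(t):=t^{-s},\qquad (f*g)(t):=\int_0^t f(t-\tau)\,g(\tau)\,d\tau,\\
{\mathcal L}k_s(\omega) &= \int_0^{+\infty} e^{-\omega t}\,t^{-s}\,dt=\Gamma(1-s)\,\omega^{s-1},\\
{\mathcal L}u'(\omega) &= \omega\,{\mathcal L}u(\omega)-u(0),\\
{\mathcal L}\big(\partial^s_{C,t}u\big)(\omega)
  &= \frac{1}{\Gamma(1-s)}\,{\mathcal L}k_s(\omega)\,{\mathcal L}u'(\omega)
   = \omega^{s}\,{\mathcal L}u(\omega)-\omega^{s-1}\,u(0).
\end{aligned}
```

In this normalization, the Caputo derivative acts in Laplace variables as multiplication by \(\omega^s\), corrected by a term carrying the initial datum.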

In this way, one can also link equations driven by the Caputo derivative to the so-called Volterra integral equations: namely one can invert the expression \( \partial ^s_{C,t} u=f\) by

for some normalization constant C > 0, see Appendix S for a proof.
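A numerical sketch of this Volterra form, under stated assumptions: we take the inversion to read \(u(t)=u(0)+C\int_0^t (t-\tau)^{s-1}f(\tau)\,d\tau\), and with the normalization \(\frac{1}{\Gamma(1-s)}\) for the Caputo derivative the constant is \(C=\frac{1}{\Gamma(s)}\). The product-midpoint quadrature and the function names are our own illustrative choices.

```python
import math

import numpy as np


def fractional_integral(f, t, s, n=2000):
    """Approximate (1/Gamma(s)) * int_0^t f(tau) * (t - tau)**(s - 1) dtau.

    Product-midpoint rule: f is frozen at each panel midpoint, while the weakly
    singular kernel (t - tau)**(s - 1) is integrated exactly on each panel.
    f must accept a NumPy array.
    """
    nodes = np.linspace(0.0, t, n + 1)
    mids = 0.5 * (nodes[:-1] + nodes[1:])
    # exact integral of (t - tau)**(s - 1) over [nodes[j], nodes[j + 1]]
    weights = ((t - nodes[:-1]) ** s - (t - nodes[1:]) ** s) / s
    return float(np.sum(weights * f(mids))) / math.gamma(s)


def solve_caputo(f, u0, t, s, n=2000):
    """Value at time t of u = u(0) + I^s f, i.e. the Volterra form of d^s_C u = f."""
    return u0 + fractional_integral(f, t, s, n)
```

As a consistency check, the Caputo derivative of u(t) = t is \(t^{1-s}/\Gamma(2-s)\) in this normalization, and feeding that right-hand side to `solve_caputo` with u(0) = 0 recovers u(1) ≈ 1.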

It is also worth mentioning that the Caputo derivative of order s of a power gives, up to normalizing constants, the “power minus s”: more precisely, by (2.56) and using the substitution 𝜗 := τ∕t, we see that, for any r > 0,

for some C > 0.
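With the normalization \(\partial^s_{C,t}u(t)=\frac{1}{\Gamma(1-s)}\int_0^t u'(\tau)(t-\tau)^{-s}\,d\tau\) (an assumption, since the text omits constants), the substitution above gives \(C=\frac{\Gamma(r+1)}{\Gamma(r+1-s)}\). The sketch below checks this numerically with the L1 scheme, a standard discretization of the Caputo derivative that we chose for illustration (it is not taken from the text); the function names are hypothetical.

```python
import math

import numpy as np


def caputo_l1(u, t, s, n=2000):
    """L1 approximation of (1/Gamma(1-s)) * int_0^t u'(tau) * (t - tau)**(-s) dtau.

    u is replaced by its piecewise-linear interpolant on a uniform grid, and the
    kernel (t - tau)**(-s) is then integrated exactly on each panel.
    u must accept a NumPy array.
    """
    nodes = np.linspace(0.0, t, n + 1)
    slopes = np.diff(u(nodes)) / (t / n)  # slope of the interpolant on each panel
    # exact integral of (t - tau)**(-s) over [nodes[j], nodes[j + 1]]
    w = ((t - nodes[:-1]) ** (1 - s) - (t - nodes[1:]) ** (1 - s)) / (1 - s)
    return float(np.sum(slopes * w)) / math.gamma(1 - s)


def caputo_of_power(r, s, t):
    """Closed form of the Caputo derivative of t**r: Gamma(r+1)/Gamma(r+1-s) * t**(r-s)."""
    return math.gamma(r + 1) / math.gamma(r + 1 - s) * t ** (r - s)
```

For r = 1 the piecewise-linear interpolant is exact, so the scheme reproduces the closed form up to rounding; for r = 2 the agreement is approximate, with an error that shrinks as the grid is refined.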

Moreover, in relation to the comments on page 17, we have that

See Appendix U for a proof of this.

The Caputo derivative describes a process “with memory”, in the sense that it “remembers the past”, though “old events count less than recent ones”. We sketch a memory effect of Caputo type in Appendix V.

Due to their memory effect, operators related to Caputo derivatives have found several applications in which the basic parameters of a physical system change in time as the system itself evolves. For instance, in flows through porous media, as time goes on, the fluid may either “obstruct” the holes of the medium, thus slowing down the diffusion, or “clean” them, thus making the diffusion faster, and the Caputo derivative may provide a convenient way to describe such time modifications of the diffusion coefficient, see [35].

Other applications of Caputo derivatives occur in biology and neuroscience, since networks of neurons exhibit time-fractional diffusion, also in view of their highly ramified structure, see e.g. [51] and the references therein.

We also refer to [24, 113, 115] and to the references therein for further discussions on different types of anomalous diffusions.

3 A More General Point of View: The “Master Equation”

The operators discussed in Sects. 2.1, 2.2, 2.3, and 2.4 can be framed into a more general setting, namely that of the “master equation”, see e.g. [32].

Master equations describe the evolution of a quantity in terms of averages in space and time of the quantity itself. For concreteness one can consider a quantity u = u(x, t) and describe its evolution by an equation of the kind

for some \(c\in \mathbb {R}\) and a forcing term f, and the operator L has the integral form

for a suitable measure μ (with the integral possibly taken in the principal value sense, which is omitted here for simplicity; also one can consider even more general operators by taking actions different than translations and more general ambient spaces).

Though the form of such operator is very general, one can also consider simplifying structural assumptions. For instance, one can take μ to be the space-time Lebesgue measure over \(\mathbb {R}^n\times (0,+\infty )\), namely

Another common simplifying assumption is that the kernel is induced by uncorrelated effects of the space and time variables, with the product structure

The fractional Laplacian of Sect. 2.1 is a particular case of this setting (for functions depending on the space variable), with the choice, up to normalizing constants,

More generally, for \(\Omega \subseteq \mathbb {R}^n\), the regional fractional Laplacian in Sect. 2.2 comes from the choice

Finally, in view of (2.58), for time-dependent functions, the choice

produces the Caputo derivative discussed in Sect. 2.4.

We recall that one of the fundamental structural differences in partial differential equations consists in the distinction between operators “in divergence form”, such as

and those “in non-divergence form”, such as

This structural difference can also be recovered from the master equation. Indeed, if we consider a (say, for the sake of concreteness, strictly positive, bounded and smooth) matrix function \(M:\mathbb {R}^n\to {\text{Mat }}(n\times n)\), we can take into account the master spatial operator induced by the kernel

that is, in the notation of (3.1),

Then, up to a normalizing constant, if

then

A proof of this will be given in Appendix W.

It is interesting to observe that condition (3.6) says that, if we set z := x − y, then

and so the kernel in (3.4) is invariant under the exchange of x and z. This invariance naturally leads to a (possibly formal) energy functional of the form

We point out that condition (3.8) translates, roughly speaking, into the fact that the energy density in (3.9) “charges the variable x as much as the variable z”.

The study of the energy functional in (3.9) also leads to a natural quasilinear generalization, in which the fractional energy takes the form

for a suitable Φ, see e.g. [80, 114] and the references therein for further details on quasilinear nonlocal operators. See also [113] and the references therein for other types of nonlinear fractional equations.

Another case of interest (see e.g. [14]) is the one in which one considers the master equation driven by the spatial kernel

that is, in the notation of (3.1),

Then, up to a normalizing constant, if

then

A proof of this will be given in Appendix X.

We recall that nonlocal linear operators in non-divergence form can also be useful in the definition of fully nonlinear nonlocal operators, by taking appropriate infima and suprema of combinations of linear operators, see e.g. [83] and the references therein for further discussions about this topic (which is also related to stochastic games).

We also remark that understanding the role of the affine transformations of the space on suitable nonlocal operators (as done for instance in (3.10) and (3.10)) often permits a deeper analysis of the problem in nonlinear settings too: see e.g. the very elegant way in which a fractional Monge-Ampère equation is introduced in [29] by considering the infimum of fractional linear operators corresponding to all affine transformations of determinant one of a given multiple of the fractional Laplacian.

As a general comment, we also think that an interesting consequence of the considerations given in this section is that classical, local equations can also be seen as a limit approximation of more general master equations.

We mention that there are also many other interesting kernels, both in space and time, which can be taken into account in integral equations. Though we focused here mostly on the case of singular kernels, there are several important problems that focus on “nice” (e.g. integrable) kernels, see e.g. [8, 43, 88] and the references therein.

As a technical comment, let us point out that, in a sense, nice kernels may have computational advantages, but may also entail loss of compactness and loss of regularity. Roughly speaking, convolutions with smooth kernels are always smooth, thus the smoothness of a convolved function gives little information on the smoothness of the original function. Vice versa, if the convolution of an “object” with a singular kernel is smooth, then the original object must have a “good order of vanishing at the origin”. When the original object is built by the difference of a function and its translation, such vanishing implies some control of the oscillation of the function, hence opening a door towards a regularity result.

4 Probabilistic Motivations

We provide here some elementary, and somewhat heuristic, motivations for the operators described in Sect. 2 in view of probability and statistics applications. The treatment of this section is mostly colloquial and not to be taken at a strictly rigorous level (in particular, all functions are taken to be smooth, some uniformity problems are neglected, convergence is taken for granted, etc.). See e.g. [74] for rigorous explanations linking pseudo-differential operators and Markov/Lévy processes. See also [9, 12, 16, 101, 111] for other perspectives and links between probability and fractional calculus and [77] for a complete survey on jump processes and their connection to nonlocal operators.

The probabilistic approach to the study of nonlocal effects and the analysis of distributions with polynomial tails are also some of the cornerstones of the application of mathematical theories to finance, see e.g. [87, 93], and models with jump processes for prices have been proposed in [44].

4.1 The Heat Equation and the Classical Laplacian

The prototype of parabolic equations is the heat equation

for some c > 0. The solution u may represent, for instance, a temperature, and the derivation of (4.1) rests on two basic assumptions:

- the variation of u in a given region \(U\subset \mathbb {R}^n\) is due to the flow of some quantity \(v:\mathbb {R}^n\to \mathbb {R}^n\) through the boundary of U,

- v is produced by the local variation of u.

The first ansatz can be written as

where ν denotes the exterior normal vector of U and \({\mathcal {H}}^{n-1}\) is the standard (n − 1)-dimensional surface Hausdorff measure.

The second ansatz can be written as v = c∇u, which combined with (4.2) and the Divergence Theorem gives that

Since U is arbitrary, this gives (4.1).

Let us recall a probabilistic interpretation of (4.1). The idea is that (4.1) follows by taking suitable limits of a discrete “random walk”. For this, we take a small space scale h > 0 and a time step

We consider the random motion of a particle in the lattice \(h\mathbb {Z}^n\), as follows. At each time step, the particle can move in any coordinate direction with equal probability. That is, a particle located at \(h\bar k\in h\mathbb {Z}^n\) at time t is moved to one of the 2n points \(h\bar k\pm he_1\), …, \(h\bar k\pm h e_n\) with equal probability (here, as usual, \(e_j\) denotes the j-th element of the standard Euclidean basis of \(\mathbb {R}^n\)).

We now look at the probability of finding the particle at a point \(x\in h\mathbb {Z}^n\) at time \(t\in \tau \mathbb {N}\). For this, we denote by u(x, t) the corresponding probability density. That is, the probability for the particle to lie in the spatial region \(B_r(x)\) at time t is, for small r, comparable with

Then, the probability of finding the particle at the point \(x\in h\mathbb {Z}^n\) at time t + τ is the sum, over the nearest neighbors of x, of the probability of finding the particle at that neighbor at time t, times the probability of jumping from there to x. That is,

Also,

Thus, subtracting u(x, t) from both sides of (4.4), dividing by τ, recalling (4.3), and taking the limit (and neglecting any possible regularity issue), we formally find that

which is (4.1).
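The limit can also be observed without taking it, by evolving the exact law of the walk. The sketch below works in one dimension and assumes the time step τ = h²/(2n), i.e. τ = h²/2 for n = 1 (formula (4.3) is not reproduced here; this choice corresponds to c = 1 in (4.1)). The function name is hypothetical. After N steps, i.e. at time t = Nτ, the variance equals N h² = 2t, which is exactly the variance of the heat kernel of \(\partial_t u=\Delta u\).

```python
import numpy as np


def random_walk_density(h, steps):
    """Exact law of the simple random walk on hZ (n = 1) started at the origin.

    At each step the mass at every site splits equally between its two nearest
    neighbors, which is the one-dimensional version of the update rule above.
    Returns the lattice points and their probabilities after `steps` steps.
    """
    size = 2 * steps + 1
    p = np.zeros(size)
    p[steps] = 1.0  # all the mass at the origin
    for _ in range(steps):
        # the array is wide enough that np.roll never wraps mass around
        p = 0.5 * (np.roll(p, 1) + np.roll(p, -1))
    x = h * np.arange(-steps, steps + 1)
    return x, p
```

After an even number of steps only even sites are occupied, so the value at the origin must be divided by 2h (the spacing of the occupied sites) before being compared with the Gaussian heat kernel \((4\pi t)^{-1/2}\).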

4.2 The Fractional Laplacian and the Regional Fractional Laplacian

Now we consider an open set \(\Omega \subseteq \mathbb {R}^n\) and a discrete random process in \(h\mathbb {Z}^n\) which can be roughly speaking described in this way. The space parameter h > 0 is linked to the time step

A particle starts its journey from a given point \(h\bar k\in \Omega \) of the lattice \(h\mathbb {Z}^n\) and, at each time step τ, it can reach any other point of the lattice hk, with \(k\ne \bar k\), with probability

then the process continues following the same law. Notice that the above probability density does not allow the process to leave the domain Ω, since \(P_h\) vanishes in the complement of Ω (in jargon, this process is called “censored”).

In (4.6), the constant C > 0 is needed to normalize the total probability and is defined by

We let

and

Notice that, for any \(\bar k\in \mathbb {Z}^n\), it holds that

hence, for a fixed h > 0 and \(\bar k\in \mathbb {Z}^n\), this aggregate probability is not equal to 1: this means that there is a remaining probability \(p_h(\bar k)\geqslant 0\) that the particle does not move (in principle, such probability is small when h is small but, for a bounded domain Ω, it is not negligible with respect to the time step, hence it must be taken into account in the analysis of the process in the general setting that we present here).

We define u(x, t) to be the probability density for the particle to lie at the point \(x\in \Omega \cap (h\mathbb {Z}^n)\) at time \(t\in \tau \mathbb {N}\). We show that, for small space and time scales, the function u is well described by the evolution of the nonlocal heat equation

for some normalization constant c > 0. To check this, up to a translation, we suppose that x = 0 ∈ Ω and we set \(c_h:=c_h(0)\) and \(p_h:=p_h(0)\). We observe that the probability of being at 0 at time t + τ is the sum of the probabilities of being somewhere else, say at \(hk\in h\mathbb {Z}^n\), at time t, times the probability of jumping from hk to the origin, plus the probability of staying put: that is

Thus, recalling (4.7),

So, we divide by τ and, in view of (4.5), we find that

We write this identity changing k to − k and we sum up: in this way, we obtain that

Now, for small y, if u is smooth enough,

and therefore, if we write

we (formally) have that

for small |y|.

Now, we fix δ > 0 and use the Riemann sum representation of an integral to write (for a bounded Riemann integrable function \(\varphi :\mathbb {R}^n\setminus B_\delta \to \mathbb {R}\)),

If, in addition, (4.11) is satisfied, one has that, for small δ,

From this and (4.12) we have that

Also, in view of (4.11),

Hence, (4.13) boils down to

and so, taking δ arbitrarily small,

Therefore, recalling (4.10),

This confirms (4.9).

As a final comment, in view of these calculations and those of Sect. 4.1, we may compare the classical random walk, which leads to the classical heat equation, and the long-jump random walk which leads to the nonlocal heat equation and relate such jumps to an “infinitely fast” diffusion, in the light of the computations of the associated mean squared displacements (recall (2.43) and (2.45)).
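A simple way to see the “infinitely fast” character of the long jumps: assume, for illustration, jumps on \(\mathbb{Z}\setminus\{0\}\) (n = 1) with law proportional to \(|k|^{-(1+2s)}\), as in the censored process above when Ω is the whole line. Then the second moment of a single jump is infinite, whereas a nearest-neighbor step has second moment 1. The hypothetical helper below computes the truncated second moment (normalization constant omitted), which grows like \(K^{2-2s}/(1-s)\) as the cutoff K increases.

```python
import numpy as np


def truncated_second_moment(s, K):
    """Sum of k**2 * |k|**-(1 + 2s) over 0 < |k| <= K (normalization constant omitted).

    For 0 < s < 1 this partial sum grows like K**(2 - 2s) / (1 - s), so the
    second moment of the long-jump law is infinite.
    """
    k = np.arange(1, K + 1, dtype=float)
    return 2.0 * np.sum(k ** (1.0 - 2.0 * s))  # factor 2 accounts for k and -k
```

Doubling the cutoff multiplies the partial sum by approximately \(2^{2-2s}\), so the sum never stabilizes.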

4.3 The Spectral Fractional Laplacian

Now, we briefly discuss a heuristic motivation for the fractional heat equation run by the spectral fractional Laplacian, that is

for some normalization constant c > 0. To this end, we consider a bounded and smooth set \(\Omega \subset \mathbb {R}^n\) and we define a random motion of a “distribution of particles” in Ω. For any x ∈ Ω and t⩾0, the function u(x, t) denotes the “number of particles” present at the point x at the time t. No particles lie outside Ω and we write u as a suitable superposition of eigenfunctions \(\{\phi_k\}_{k\geqslant 1}\) of the Laplacian with Dirichlet boundary data (this is a reasonable assumption, given that such eigenfunctions provide a basis of \(L^2(\Omega)\), see e.g. page 335 in [62]). In this way, we write

Namely, in the notation of (2.49), the evolution of the particle distribution u is defined on each spectral component \(u_k\), which is taken to follow a “classical” random walk, but with a space/time scale that depends on k as well: spectral components relative to high frequencies move slower than those relative to low frequencies (that is, the time step is taken to be longer when the frequency is higher).

More precisely, for any \(k\in \mathbb {N}\), we suppose that each of the \(u_k\) particles of the k-th spectral component undergoes a classical random walk in a lattice \(h_k\mathbb {Z}^n\), as described in Sect. 4.1, but with time step

We suppose that \(h_k\) and \(\tau_k\) are “small space and time increments”. Namely, after a time step \(\tau_k\), each of these \(u_k(t)\,\phi_k(x)\) particles will move, with equal probability \(\frac 1{2n}\), to one of the points \(x\pm h_k e_1\), …, \(x\pm h_k e_n\) (for simplicity, we are imagining here \(u_k\) to be positive; the case of negative \(u_k\) represents a “lack of particles”, which is supposed to diffuse with the same law). Hence, the number of particles at time \(t+\tau_k\) which correspond to the k-th frequency of the spectrum and which lie at the point x ∈ Ω is equal to the sum of the numbers of particles at time t which lie somewhere else, times the probability of jumping to x in this time step, that is, in formula,

Moreover,

Consequently, from this and (4.16),

Hence, with a formal computation, dividing by \(\tau_k\), using (4.15) and sending \(h_k\to 0\) (for a fixed k), we obtain

Hence, from (2.49) (and neglecting convergence issues in k), we have

that is (4.14).
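In the spectral picture, (4.14) decouples into independent ordinary differential equations, one per mode, so that \(u_k(t)=u_k(0)\,e^{-c\lambda_k^s t}\). The sketch below implements this under the same simplifying assumptions as a one-dimensional model: Ω = (0, π), Dirichlet eigenfunctions proportional to sin(kx) with \(\lambda_k=k^2\) (indexed from k = 1), and a finite number of modes. The function name is hypothetical.

```python
import numpy as np


def spectral_frac_heat(u0_vals, s, t, c=1.0):
    """Solve d_t u = -c (-Delta)^s u on (0, pi), Dirichlet data, by a sine expansion.

    u0_vals: initial datum at the interior nodes x_j = j*pi/M, j = 1, ..., M-1.
    Each mode sin(k x) is damped independently by exp(-c * k**(2s) * t).
    """
    u0_vals = np.asarray(u0_vals, dtype=float)
    M = u0_vals.size + 1
    k = np.arange(1, M)
    S = np.sin(np.outer(k, k) * np.pi / M)  # S[k-1, j-1] = sin(k x_j), symmetric
    b = (2.0 / M) * (S @ u0_vals)           # sine coefficients of the initial datum
    return S @ (np.exp(-c * k ** (2.0 * s) * t) * b)
```

Compared with the classical heat equation, where mode k decays like \(e^{-ck^2t}\), high frequencies are damped more slowly when s < 1, in agreement with the heuristic of longer time steps for higher frequencies.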

4.4 Fractional Time Derivatives

We consider a model in which a group of people is supposed to move along the real line (say, starting at the origin) with some given velocity f, which depends on time. We consider the case in which the environment surrounding the moving people is “tricky”, so that some of them risk getting stuck for some time, and they are able to “exit the trap” only by overcoming their past velocity. Concretely, we fix a function φ : [0, +∞) → [0, +∞) with

Then we define

and we notice that

Then, we denote by u(t) the position of the “generic person” at time t, with u(0) = 0. We suppose that some people, say a proportion \(p_1\) of the total population, move with the prescribed velocity for a unit of time, after which their velocity is the difference between the prescribed velocity at that time and the one at the preceding time with respect to the time unit. In formulas, this says that there is a proportion \(p_1\) of the total people who travel with velocity

After integrating, we thus obtain that there is a proportion \(p_1\) of the total people whose position is described by the function

For instance, if f is constant, then the position \(u_1\) grows linearly for a unit of time and then stays put (this corresponds to considering “stopping times” in the motion, see Fig. 5).

Fig. 5. The motions \(u_k\) described in Sect. 4.4 when the velocity field f is constant

Similarly, a proportion \(p_2\) of the total population evolves with prescribed velocity f for two units of time, after which its velocity becomes the difference between the prescribed velocity at that time and the one at the preceding time with respect to two time units, namely

In this case, an integration gives that there is a proportion \(p_2\) of the total people whose position is described by the function

Repeating this argument, we suppose that for each \(k\in \mathbb {N}\) we have a proportion \(p_k\) of the people that move initially with the prescribed velocity f, but, after k units of time, get their velocity changed into the difference of the actual velocity field and that of k units of time before (which is indeed a “memory effect”). In this way, we have that a proportion \(p_k\) of the total population moves with law of motion given by

The average position of the moving population is then given by

We now specialize the computation above for the case

with s ∈ (0, 1). Notice that the quantity in (4.17) is finite in this case, and we can denote it simply by C s. In addition, we will consider long time asymptotics in t and introduce a small time increment h which is inversely proportional to t, namely

In this way, recalling that the motion was supposed to start at the origin (i.e., u(0) = 0) and using the substitution η := 𝜗∕t, we can write (4.18) as

where we have recognized a Riemann sum in the last line.

We also point out that the conditions

are equivalent to

and, furthermore,

Therefore we use the substitution ξ := 1 − η and we exchange the order of integration, to deduce from (4.19) that

The substitution τ := tξ then gives

which, comparing with (2.61) and possibly redefining constants, gives that \( \partial ^s_{C,t} u=f\).

Of course, one can also take into account the case in which the velocity field f is induced by a classical diffusion in space, i.e. f = Δu, and in this case one obtains the time fractional diffusive equation \(\partial ^s_{C,t} u=\Delta u\).

4.5 Fractional Time Diffusion Arising from Heterogeneous Media

A very interesting phenomenon to observe is that the geometry of the diffusion medium can naturally transform classical diffusion into an anomalous one. This feature can be very well understood through an elegant model, introduced in [10] (see also [105] and the references therein for an exhaustive account of the research in this direction), consisting of random walks on a “comb”, which we briefly reproduce here for the convenience of the reader. Given ε > 0, the comb may be considered as a transmission medium that is the union of a “backbone” \({\mathcal {B}}:=\mathbb {R}\times \{0\}\) with the “fingers” \({\mathcal {P}}_k:=\{\varepsilon k\}\times \mathbb {R}\), namely

see Fig. 6.

We suppose that a particle experiences a random walk on the comb, starting at the origin, with some given horizontal and vertical speeds. In the limit, this random walk can be modeled by the diffusive equation along the comb \({\mathcal {C}}_\varepsilon \)

with \(d_1,d_2>0\). The case \(d_1=d_2\) corresponds to equal horizontal and vertical speeds of the random walk (and this case is already quite interesting). Also, in the limit as \(\varepsilon\to 0\), we can consider the Riemann sum approximation

and \({\mathcal {C}}_\varepsilon \) tends to cover the whole of \(\mathbb {R}^2\) when ε gets small. Accordingly, at least at a formal level, as the fingers of the comb become denser and denser, we can think that

and reduce (4.20) to the diffusive equation in \(\mathbb {R}^2\) given by

The very interesting feature of (4.21) is that it naturally induces a fractional time diffusion along the backbone. The quantity that experiences this fractional diffusion is the total diffusive mass at a point of the backbone. Namely, one sets

and we claim that

Equation (4.23) reveals the very relevant phenomenon that a diffusion governed by the Caputo derivative may naturally arise from classical diffusion, only in view of the particular geometry of the domain.

To check (4.23), we first point out that

Then, we observe that, if a, \(b\in \mathbb {C}\), and

To check this let \(\varphi \in C^\infty _0(\mathbb {R})\). Then, integrating twice by parts,

thus proving (4.25).

We also remark that, in the notation of (4.25), we have that \(\delta_0(y)\,g(y)=\delta_0(y)\,g(0)=b\,\delta_0(y)\), and so, for every \(c\in \mathbb {R}\),

Now, taking the Fourier Transform of (4.21) in the variable x, using the notation \(\hat u(\xi ,y,t)\) for the Fourier Transform of u(x, y, t), and possibly neglecting normalization constants, we get

Now, we take the Laplace Transform of (4.27) in the variable t, using the notation w(ξ, y, ω) for the Laplace Transform of \(\hat u(\xi ,y,t)\), namely \( w(\xi ,y,\omega ):={\mathcal {L}} \hat u(\xi ,y,\omega )\). In this way, recalling that

and therefore

we deduce from (4.27) that

That is, setting

we see that

and hence we can write (4.28) as

In light of (4.26), we know that this equation is solved by taking w = g, that is

As a consequence, by (4.22),

This and (4.24) give that

that is

Transforming back and recalling (2.60), we obtain (4.23), as desired.

5 All Functions Are Locally s-Caloric (Up to a Small Error): Proof of (2.12)

We let \((x,t)\in \mathbb {R}\times \mathbb {R}\) and consider the fractional heat operator \(\partial_t+(-\Delta)^s\). One defines

and for any \(J\in \mathbb {N}\), we define

Notice that \({\mathcal {V}}_J\) is a linear subspace of \(\mathbb {R}^{N+1}\), for some \(N\in \mathbb {N}\). The core of the proof is to establish the maximal span condition

To this end, we argue by contradiction and suppose that \({\mathcal {V}}_J\) is a linear subspace strictly smaller than \(\mathbb {R}^{N+1}\): hence, there exists

such that

One considers ϕ to be the first eigenfunction of \((-\Delta)^s\) in (−1, 1) with Dirichlet data, normalized to have unit norm in \(L^2(\mathbb {R})\). Accordingly,

for some λ > 0.

In view of the boundary properties discussed in Difference 2.6, one can prove that

with o(1) infinitesimal as x approaches the boundary of (−1, 1). So, for fixed ε, τ > 0, we define

This function is smooth for any x in a small neighborhood of the origin and any \(t\in \mathbb {R}\), and, in this domain,

This says that \(h_{\varepsilon ,{\tau }}\in {\mathcal {V}}\) and therefore

This, together with (5.3), implies that, for any fixed and positive τ and y,

Hence, for fixed τ > 0, this identity and (5.4) yield that

with o(1) infinitesimal as \(\varepsilon\to 0\).

We now let \(\bar \alpha _x\) be the largest integer \(\alpha_x\) for which there exists an integer \(\alpha_t\) such that \(\bar \alpha _x+\alpha _t\in [0,J]\) and \(\nu _{(\bar \alpha _x,\alpha _t)}\ne 0\). Notice that this definition is well posed, since not all the \(\nu _{(\alpha _x,\alpha _t)}\) can vanish, due to (5.2). Then, (5.5) becomes

since the other coefficients vanish by definition of \(\bar \alpha _x\).

Thus, we multiply (5.6) by \(\varepsilon ^{\bar \alpha _x-s} {\tau }^{-\frac {\bar \alpha _x}{2s}}\) and we take the limit as \(\varepsilon\to 0\): in this way, we obtain that

Since this is valid for any τ > 0, by the Identity Principle for Polynomials we obtain that

and thus \(\nu _{(\bar \alpha _x,\alpha _t)}=0\) for any integer \(\alpha_t\) for which \(\bar \alpha _x+\alpha _t\in [0,J]\). But this contradicts the definition of \(\bar \alpha _x\), and so we have completed the proof of (5.1).

From this maximal span property, the proof of (2.12) follows by scaling (arguing as done, for instance, in [112]).

〈〈The longest appendix measured 26cm (10.24in) when it was removed from 72-year-old Safranco August (Croatia) during an autopsy at the Ljudevit Jurak University Department of Pathology, Zagreb, Croatia, on 26 August 2006.〉〉

(Source: http://www.guinnessworldrecords.com/world-records/largest-appendix-removed)

Notes

- 1.

The notion (or, better said, several possible notions) of fractional derivative attracted the attention of many distinguished mathematicians, such as Leibniz, Bernoulli, Euler, Fourier, Abel, Liouville, Riemann, Hadamard and Riesz, among others. A very interesting historical outline is given on pages xxvii–xxxvi of [104].

- 2.

We think that it is quite remarkable that the operator obtained by the inverse Fourier Transform of \( \,|\xi |{ }^{2} \,\widehat u\), the classical Laplacian, reduces to a local operator. This is not true for the inverse Fourier Transform of \( \,|\xi |{ }^{2s} \,\widehat u\). In this spirit, it is interesting to remark that the fact that the classical Laplacian is a local operator is not immediate from its definition in Fourier space, since computing Fourier Transforms is always a nonlocal operation.

- 3.

Some care has to be used with extension methods, since the solution of (2.6) is not unique (if U solves (2.6), then so does U(x, y) + cy for any \(c\in \mathbb {R}\)). The “right” solution of (2.6) that one has to take into account is the one with “decay at infinity”, or belonging to an “energy space”, or obtained by convolution with a Poisson-type kernel. See e.g. [24] for details.

Also, the extension method in (2.6) and (2.7) can be related to an engineering application of the fractional Laplacian motivated by the displacement of elastic membranes on thin (i.e. codimension one) obstacles, see [28]. The intuition for such application can be grasped from Figs. 7, 10, and 12. These pictures can be also useful to develop some intuition about extension methods for fractional operators and boundary reaction-diffusion equations.

- 4.

See Appendix A in [103] for a very nice explanation of dimensional analysis and for a thorough discussion of its role in detecting fundamental solutions.

- 5.

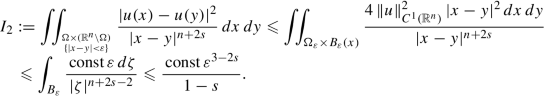

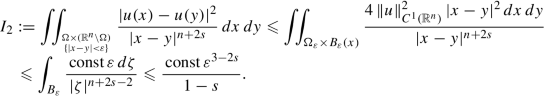

Some colleagues pointed out to us that the use of R and r in some steps of formula (5.5) of [54] is inadequate. We take this opportunity to amend this flaw by presenting a short proof of (5.5) of [54]. Given ε > 0, we notice that

where the constants are also allowed to depend on Ω and u. Furthermore, if we define \(\Omega_\varepsilon\) to be the set of all the points in Ω with distance less than ε from \(\partial\Omega\), the regularity of \(\partial\Omega\) implies that the measure of \(\Omega_\varepsilon\) is bounded by \({\mathrm{const}}\,\varepsilon\), and therefore

These observations imply that

Taking ε as small as we wish, we obtain formula (5.5) in [54].
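To get a feeling for the bound on the measure of \(\Omega_\varepsilon\), one may check it in the simplest example, taking (only as an illustration) \(\Omega=B_1\subset\mathbb{R}^n\), whose boundary layer is the annulus \(\{1-\varepsilon<|x|<1\}\) for \(\varepsilon\in(0,1]\). By Bernoulli's inequality \((1-\varepsilon)^n\ge 1-n\varepsilon\),

$$\displaystyle \begin{aligned}|\Omega_\varepsilon|=|B_1|\,\big(1-(1-\varepsilon)^n\big)\le n\,|B_1|\,\varepsilon=\mathcal{H}^{n-1}(\partial B_1)\,\varepsilon,\end{aligned}$$

so in this case the constant can be taken to be the surface measure of the boundary.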

- 6.

From the geometric point of view, one can also take radial coordinates, compute the derivatives of K along the unit sphere and use scaling.

- 7.

The difficulty in proving (G.1) is that the function \(u_{1/2}\) is not differentiable at \(\pm 1\), and the derivative taken inside the integral might produce a singularity (indeed, formula (G.1) states precisely that this derivative can be taken inside the integral with no harm). The reader who is already familiar with the basics of functional analysis can prove (G.1) by using the theory of absolutely continuous functions, see e.g. Theorem 8.21 in [98]. Here we provide a direct proof, accessible to everybody.

- 8.

As a historical remark, we recall that \(e^{-|\xi|}\) is sometimes called the “Abel Kernel” and its Fourier Transform the “Poisson Kernel”, which in dimension 1 reduces to the “Cauchy-Lorentz” (or “Breit-Wigner”) distribution. This function also has a classical geometric interpretation as the “Witch of Agnesi”: so many names attached to a single function clearly demonstrate its importance in numerous applications.
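A short numerical check of the one-dimensional statement, assuming the Fourier convention \(\widehat f(x)=\int_{\mathbb{R}} f(\xi)\,e^{-ix\xi}\,d\xi\) (other normalizations only change constants and scaling). By symmetry, the transform of \(e^{-|\xi|}\) equals \(2\int_0^\infty e^{-\xi}\cos(x\xi)\,d\xi=\frac{2}{1+x^2}\); dividing by \(2\pi\) gives exactly the density \(\frac{1}{\pi(1+x^2)}\) of the standard Cauchy-Lorentz distribution. The function names are hypothetical.

```python
import math

def abel_kernel_ft(x, cutoff=40.0, n=40000):
    """Approximate the integral of exp(-|xi|) * exp(-i x xi) over the real line.

    By symmetry this equals 2 * int_0^inf exp(-xi) cos(x xi) d xi; the tail
    beyond `cutoff` is below 2*exp(-cutoff) and is ignored. Composite Simpson
    rule with n (even) subintervals.
    """
    h = cutoff / n
    total = 0.0
    for j in range(n + 1):
        xi = j * h
        w = 1 if j in (0, n) else (4 if j % 2 else 2)  # Simpson weights
        total += w * math.exp(-xi) * math.cos(x * xi)
    return 2.0 * total * h / 3.0

def cauchy_lorentz_times_2pi(x):
    """Closed form 2 / (1 + x^2), i.e. 2*pi times the Cauchy-Lorentz density."""
    return 2.0 / (1.0 + x * x)

for x in (0.0, 0.5, 1.0, 3.0):
    print(x, abel_kernel_ft(x), cauchy_lorentz_times_2pi(x))  # columns agree closely
```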

- 9.

Let us mention another conceptual simplification offered by nonlocal problems: in this setting, the integral representation often allows one to formulate problems under minimal requirements on the functions involved (such as measurability and possibly mild pointwise or integral bounds). By contrast, in the classical setting, even just formulating a problem often requires assumptions and tools from functional analysis, such as Sobolev differentiability, distributions or functions of bounded variation.

- 10.

In complex variables, one can also interpret the function U in terms of the principal argument function

$$\displaystyle \begin{aligned}{\mathrm{Arg}}(r e^{i\varphi})=\varphi\in(-\pi,\pi],\end{aligned}$$with branch cut along the nonpositive real axis. Notice indeed that, if z = x + iy and y > 0,

$$\displaystyle \begin{aligned}{\mathrm{Arg}}(z+i)=\frac\pi2-\arctan\frac{x}{y+1}=\frac\pi2\left(1-U(x,y) \right).\end{aligned}$$This observation would also lead to (L.1).
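A quick numerical check of the identity above, using Python's `cmath.phase`, which returns the principal argument (for z + i in the open upper half-plane, as here, the convention at the branch cut plays no role). Reading U off the identity gives \(U(x,y)=\frac{2}{\pi}\arctan\frac{x}{y+1}\); the sample points are arbitrary.

```python
import cmath
import math

def U(x, y):
    # U as read off from the identity Arg(z + i) = (pi/2) * (1 - U(x, y)).
    return (2.0 / math.pi) * math.atan(x / (y + 1.0))

points = [(0.0, 0.5), (2.0, 1.0), (-3.0, 0.1), (10.0, 4.0), (-0.2, 7.5)]
for x, y in points:
    z = complex(x, y)
    lhs = cmath.phase(z + 1j)                        # principal argument of z + i
    middle = math.pi / 2.0 - math.atan(x / (y + 1.0))
    rhs = (math.pi / 2.0) * (1.0 - U(x, y))
    print(x, y, lhs, middle, rhs)                    # the three values agree up to rounding
```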

- 11.

- 12.

A slightly different approach from that in (3.7) is to consider the energy functional in (3.9) and prove, e.g. by Taylor expansion, that it converges to the energy functional

$$\displaystyle \begin{aligned}\,{\mathrm{const}}\, \int_{\mathbb{R}^n}a_{ij}(x) \,\partial_i u(x)\,\partial_j u(x)\,dx.\end{aligned}$$On the other hand, a different proof of (3.7), kindly pointed out to us by Jonas Hirsch after a lecture (he also acted as a skilled cartoonist for Fig. 13), can be obtained by considering the weak form of the operator in (3.5), i.e. by integrating this expression against a test function \(\varphi \in C^\infty _0(\mathbb {R}^n)\), thus finding

$$\displaystyle \begin{aligned} \begin{array}{rcl} &\displaystyle &\displaystyle (1-s)\,\iint_{\mathbb{R}^n\times\mathbb{R}^n} \frac{ \big(u(x)-u(x-y)\big)\,\varphi(x)}{ |M(x-y,y)\, y|{}^{n+2s}}\,dx\,dy \\ &\displaystyle =&\displaystyle (1-s)\,\iint_{\mathbb{R}^n\times\mathbb{R}^n} \frac{ \big(u(x)-u(z)\big)\,\varphi(x)}{ |M(z,x-z)\,(x-z)|{}^{n+2s}}\,dx\,dz \\ \noalign{} &\displaystyle =&\displaystyle (1-s)\,\iint_{\mathbb{R}^n\times\mathbb{R}^n} \frac{ \big(u(z)-u(x)\big)\,\varphi(z)}{ |M(x,z-x)\,(x-z)|{}^{n+2s}}\,dx\,dz \\ &\displaystyle =&\displaystyle -(1-s)\,\iint_{\mathbb{R}^n\times\mathbb{R}^n} \frac{ \big(u(x)-u(z)\big)\,\varphi(z)}{ |M(z,x-z)\,(x-z)|{}^{n+2s}}\,dx\,dz ,\end{array} \end{aligned} $$where we used the substitution \(z=x-y\) in the second line, exchanged the names of the variables x and z in the third line, and used the structural condition (3.6) in the last line. Averaging the second and the last expressions, we see that the weak formulation of the operator in (3.5) can be written as

$$\displaystyle \begin{aligned}\frac{1-s}{2}\,\iint_{\mathbb{R}^n\times\mathbb{R}^n} \frac{ \big(u(x)-u(z)\big)\,\big(\varphi(x)-\varphi(z)\big)}{ |M(z,x-z)\,(x-z)|{}^{n+2s}}\,dx\,dz.\end{aligned}$$So one can expand this expression and take the limit as \(s\nearrow 1\), to obtain

$$\displaystyle \begin{aligned}\,{\mathrm{const}}\, \int_{\mathbb{R}^n}a_{ij}(x) \,\partial_i u(x)\,\partial_j\varphi(x)\,dx,\end{aligned}$$which is indeed the weak formulation of the classical divergence form operator.
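To see why the factor \(1-s\) produces a finite, nontrivial limit, it may help to look at the simplest model case, chosen here only for illustration: n = 1 and M ≡ 1, so that the kernel is \(|x-z|^{-1-2s}\). Writing z = x + h, for smooth u and φ (with φ compactly supported) one has \(\big(u(x)-u(x+h)\big)\big(\varphi(x)-\varphi(x+h)\big)=u'(x)\,\varphi'(x)\,h^2+O(h^3)\), and, for any fixed δ > 0, the leading term is weighted by

$$\displaystyle \begin{aligned}\frac{1-s}{2}\int_{-\delta}^{\delta} h^2\,|h|^{-1-2s}\,dh=(1-s)\int_0^{\delta} h^{1-2s}\,dh=\frac{\delta^{2-2s}}{2}\longrightarrow\frac12\qquad\text{as } s\nearrow 1.\end{aligned}$$

The \(O(h^3)\) remainder and the region \(|h|\ge\delta\) only contribute terms bounded by a constant times \(1-s\), which vanish in the limit; hence, in this model case, the weak form converges to \(\frac12\int_{\mathbb{R}}u'(x)\,\varphi'(x)\,dx\).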

References

N. Abatangelo, Large s-harmonic functions and boundary blow-up solutions for the fractional Laplacian. Discrete Contin. Dyn. Syst. 35(12), 5555–5607 (2015). https://doi.org/10.3934/dcds.2015.35.5555. MR3393247

N. Abatangelo, L. Dupaigne, Nonhomogeneous boundary conditions for the spectral fractional Laplacian. Ann. Inst. H. Poincaré Anal. Non Linéaire 34(2), 439–467 (2017). https://doi.org/10.1016/j.anihpc.2016.02.001. MR3610940

N. Abatangelo, S. Jarohs, A. Saldaña, Positive powers of the Laplacian: from hypersingular integrals to boundary value problems. Commun. Pure Appl. Anal. 17(3), 899–922 (2018). https://doi.org/10.3934/cpaa.2018045. MR3809107

N. Abatangelo, S. Jarohs, A. Saldaña, Green function and Martin kernel for higher-order fractional Laplacians in balls. Nonlinear Anal. 175, 173–190 (2018). https://doi.org/10.1016/j.na.2018.05.019. MR3830727

N. Abatangelo, S. Jarohs, A. Saldaña, On the loss of maximum principles for higher-order fractional Laplacians. Proc. Am. Math. Soc. 146(11), 4823–4835 (2018). https://doi.org/10.1090/proc/14165. MR3856149

E. Affili, S. Dipierro, E. Valdinoci, Decay estimates in time for classical and anomalous diffusion. arXiv e-prints (2018), available at 1812.09451

M. Allen, L. Caffarelli, A. Vasseur, A parabolic problem with a fractional time derivative. Arch. Ration. Mech. Anal. 221(2), 603–630 (2016). https://doi.org/10.1007/s00205-016-0969-z. MR3488533

F. Andreu-Vaillo, J.M. Mazón, J.D. Rossi, J.J. Toledo-Melero, Nonlocal Diffusion Problems, Mathematical Surveys and Monographs, vol. 165 (American Mathematical Society, Providence, 2010); Real Sociedad Matemática Española, Madrid, 2010. MR2722295

D. Applebaum, Lévy Processes and Stochastic Calculus. Cambridge Studies in Advanced Mathematics, 2nd edn., vol. 116 (Cambridge University Press, Cambridge, 2009). MR2512800

V.E. Arkhincheev, É.M. Baskin, Anomalous diffusion and drift in a comb model of percolation clusters. J. Exp. Theor. Phys. 73, 161–165 (1991)

A.V. Balakrishnan, Fractional powers of closed operators and the semigroups generated by them. Pac. J. Math. 10, 419–437 (1960). MR0115096

R. Bañuelos, K. Bogdan, Lévy processes and Fourier multipliers. J. Funct. Anal. 250(1), 197–213 (2007). https://doi.org/10.1016/j.jfa.2007.05.013. MR2345912

B. Barrios, I. Peral, F. Soria, E. Valdinoci, A Widder’s type theorem for the heat equation with nonlocal diffusion. Arch. Ration. Mech. Anal. 213(2), 629–650 (2014). https://doi.org/10.1007/s00205-014-0733-1. MR3211862

R.F. Bass, D.A. Levin, Harnack inequalities for jump processes. Potential Anal. 17(4), 375–388 (2002). https://doi.org/10.1023/A:1016378210944. MR1918242

A. Bendikov, Asymptotic formulas for symmetric stable semigroups. Expo. Math. 12(4), 381–384 (1994). MR1297844

J. Bertoin, Lévy Processes. Cambridge Tracts in Mathematics, vol. 121 (Cambridge University Press, Cambridge, 1996). MR1406564

R.M. Blumenthal, R.K. Getoor, Some theorems on stable processes. Trans. Am. Math. Soc. 95, 263–273 (1960). https://doi.org/10.2307/1993291. MR0119247

K. Bogdan, T. Byczkowski, Potential theory for the α-stable Schrödinger operator on bounded Lipschitz domains. Stud. Math. 133(1), 53–92 (1999). MR1671973

K. Bogdan, T. Żak, On Kelvin transformation. J. Theor. Probab. 19(1), 89–120 (2006). MR2256481

M. Bonforte, A. Figalli, J.L. Vázquez, Sharp global estimates for local and nonlocal porous medium-type equations in bounded domains. Anal. PDE 11(4), 945–982 (2018). https://doi.org/10.2140/apde.2018.11.945. MR3749373

L. Brasco, S. Mosconi, M. Squassina, Optimal decay of extremals for the fractional Sobolev inequality. Calc. Var. Partial Differ. Equ. 55(2), 23, 32 (2016). https://doi.org/10.1007/s00526-016-0958-y. MR3461371

C. Bucur, Some observations on the Green function for the ball in the fractional Laplace framework. Commun. Pure Appl. Anal. 15(2), 657–699 (2016). https://doi.org/10.3934/cpaa.2016.15.657. MR3461641

C. Bucur, Local density of Caputo-stationary functions in the space of smooth functions. ESAIM Control Optim. Calc. Var. 23(4), 1361–1380 (2017). https://doi.org/10.1051/cocv/2016056. MR3716924

C. Bucur, E. Valdinoci, Nonlocal Diffusion and Applications. Lecture Notes of the Unione Matematica Italiana, vol. 20 (Springer, Cham, 2016); Unione Matematica Italiana, Bologna. MR3469920

C. Bucur, L. Lombardini, E. Valdinoci, Complete stickiness of nonlocal minimal surfaces for small values of the fractional parameter. Ann. Inst. H. Poincaré Anal. Non Linéaire 36(3), 655–703 (2019)

X. Cabré, M. Cozzi, A gradient estimate for nonlocal minimal graphs. Duke Math. J. 168(5), 775–848 (2019)

X. Cabré, Y. Sire, Nonlinear equations for fractional Laplacians II: existence, uniqueness, and qualitative properties of solutions. Trans. Am. Math. Soc. 367(2), 911–941 (2015). https://doi.org/10.1090/S0002-9947-2014-05906-0. MR3280032

L.A. Caffarelli, Further regularity for the Signorini problem. Commun. Partial Differ. Equ. 4(9), 1067–1075 (1979). https://doi.org/10.1080/03605307908820119. MR542512

L. Caffarelli, F. Charro, On a fractional Monge-Ampère operator. Ann. PDE 1(1), 4, 47 (2015). MR3479063

L. Caffarelli, L. Silvestre, An extension problem related to the fractional Laplacian. Commun. Partial Differ. Equ. 32(7–9), 1245–1260 (2007). https://doi.org/10.1080/03605300600987306. MR2354493

L. Caffarelli, L. Silvestre, Regularity theory for fully nonlinear integro-differential equations. Commun. Pure Appl. Math. 62(5), 597–638 (2009). MR2494809

L. Caffarelli, L. Silvestre, Hölder regularity for generalized master equations with rough kernels, in Advances in Analysis: The Legacy of Elias M. Stein. Princeton Mathematical Series, vol. 50 (Princeton University Press, Princeton, 2014), pp. 63–83. MR3329847

L.A. Caffarelli, J.L. Vázquez, Asymptotic behaviour of a porous medium equation with fractional diffusion. Discrete Contin. Dyn. Syst. 29(4), 1393–1404 (2011). MR2773189

L. Caffarelli, F. Soria, J.L. Vázquez, Regularity of solutions of the fractional porous medium flow. J. Eur. Math. Soc. (JEMS) 15(5), 1701–1746 (2013). https://doi.org/10.4171/JEMS/401. MR3082241

M. Caputo, Linear models of dissipation whose Q is almost frequency independent. II. Fract. Calc. Appl. Anal. 11(1), 4–14 (2008). Reprinted from Geophys. J. R. Astr. Soc. 13(1967), no. 5, 529–539. MR2379269

A. Carbotti, S. Dipierro, E. Valdinoci, Local Density of Solutions to Fractional Equations. Graduate Studies in Mathematics (De Gruyter, Berlin, 2019)

A. Carbotti, S. Dipierro, E. Valdinoci, Local density of Caputo-stationary functions of any order. Complex Var. Elliptic Equ. (to appear). https://doi.org/10.1080/17476933.2018.1544631

R. Carmona, W.C. Masters, B. Simon, Relativistic Schrödinger operators: asymptotic behavior of the eigenfunctions. J. Funct. Anal. 91(1), 117–142 (1990). https://doi.org/10.1016/0022-1236(90)90049-Q. MR1054115

A. Cesaroni, M. Novaga, Symmetric self-shrinkers for the fractional mean curvature flow. arXiv e-prints (2018), available at 1812.01847

A. Cesaroni, S. Dipierro, M. Novaga, E. Valdinoci, Fattening and nonfattening phenomena for planar nonlocal curvature flows. Math. Ann. (to appear). https://doi.org/10.1007/s00208-018-1793-6

E. Cinti, C. Sinestrari, E. Valdinoci, Neckpinch singularities in fractional mean curvature flows. Proc. Am. Math. Soc. 146(6), 2637–2646 (2018). https://doi.org/10.1090/proc/14002. MR3778164

E. Cinti, J. Serra, E. Valdinoci, Quantitative flatness results and BV-estimates for stable nonlocal minimal surfaces. J. Differ. Geom. (to appear)

J. Coville, Harnack type inequality for positive solution of some integral equation. Ann. Mat. Pura Appl. 191(3), 503–528 (2012). https://doi.org/10.1007/s10231-011-0193-2. MR2958346

J.C. Cox, The valuation of options for alternative stochastic processes. J. Finan. Econ. 3(1–2), 145–166 (1976). https://doi.org/10.1016/0304-405X(76)90023-4

M. Cozzi, E. Valdinoci, On the growth of nonlocal catenoids. Atti Accad. Naz. Lincei Rend. Lincei Mat. Appl. (to appear)

J. Dávila, M. del Pino, J. Wei, Nonlocal s-minimal surfaces and Lawson cones. J. Differ. Geom. 109(1), 111–175 (2018). https://doi.org/10.4310/jdg/1525399218. MR3798717

C.-S. Deng, R.L. Schilling, Exact asymptotic formulas for the heat kernels of space and time-fractional equations. arXiv e-prints (2018), available at 1803.11435

A. de Pablo, F. Quirós, A. Rodríguez, J.L. Vázquez, A fractional porous medium equation. Adv. Math. 226(2), 1378–1409 (2011). https://doi.org/10.1016/j.aim.2010.07.017. MR2737788

E. Di Nezza, G. Palatucci, E. Valdinoci, Hitchhiker’s guide to the fractional Sobolev spaces. Bull. Sci. Math. 136(5), 521–573 (2012). https://doi.org/10.1016/j.bulsci.2011.12.004. MR2944369