Abstract

Several plausible modeling strategies are available to develop finite element (FE) models of increasingly complex phenomena. Expert judgment is typically used to choose which strategy to employ, while the “best” modeling approach remains unknown. This paper proposes a systematic and rigorous decision analysis methodology for comparing plausible modeling strategies. The methodology departs from the conventional approach, which considers only test-analysis correlation and selects the model that provides the highest degree of fidelity-to-data. Its novelty lies in an exploration of the trade-offs between robustness to uncertainty and fidelity-to-data. Exploring robustness to model imprecision and inexactness, in addition to fidelity-to-data, lends credibility to the simulation by establishing that its predictions can be trusted even if some of the modeling assumptions and input parameters are incorrect. To demonstrate this approach, an experimental configuration is analyzed in which large masses are used to load the CX-100 wind turbine blade in bending during vibration testing. Two plausible simulations are developed with differing strategies to implement these large masses, using (i) a combination of point-mass and spring elements or (ii) solid elements. The authors study the ability of the two FE models to predict the experimentally obtained natural frequencies, and the robustness of these competing models to uncertainties in the input parameters. Considering robustness for model selection quantifies the extent to which prediction accuracy deteriorates as the lack-of-knowledge increases. The preferable modeling strategy is therefore the one that offers the best compromise between fidelity-to-data and robustness to uncertainty.
For predicting the bending vibration of the CX-100 wind turbine blade, the modeling strategy with solid elements is observed to be far superior to the point-mass strategy in its ability to provide a compromise between fidelity-to-data and robustness to the modeling assumptions.

11.1 Introduction

11.1.1 Motivation

The wind energy industry in the United States has seen a steady trend toward larger wind turbines; in 2011, the largest turbines were produced with blades 61.5 m in length. In anticipation of this continued trend, blades 100 m in length are already being pursued for future wind turbines [1]. Modeling and simulation techniques can be used to study economically and efficiently the behavior of wind turbines at this massive scale, which enables designers to consider both aerodynamic and structural concerns early in the design process [2] and to mitigate the increasing costs of full-scale testing [3]. Finite element (FE) models calibrated against experimental data have gained acceptance for routine use in studying the static and dynamic responses of wind turbine blades, as demonstrated by their inclusion in wind turbine design standards [4]. Further, FE models are advantageous for studying complex load cases that arise from in-service wind loading [5, 6], as compared to the idealized loads that are applied in full-scale experiments [7].

Assumptions and simplifications are routinely implemented in FE models to mitigate our lack-of-knowledge about the underlying physics and to reduce the computation time needed to simulate complex phenomena. For example, it has been proposed to simulate wind turbines using geometrically non-linear, one-dimensional beam elements when coupling FE models of the wind turbine structural response with computational fluid dynamics models of the surrounding airflow [8]. Here, expert judgment is used to identify the need for geometric non-linearity (as opposed to material non-linearity), which can account for the large-angle displacements that arise as wind turbine blades are produced at larger scales. One-dimensional beam elements are needed to reduce computation time: current computing resources and code capabilities prevent simulating plant performance with full-physics, fully coupled, three-dimensional representations of the structural response and air flow, so these simplifications are what make the simulation of wind turbines at the plant scale feasible. As a result, FE models can only provide an approximation of reality. The main concern that arises is the extent to which predictions of a numerical simulation can be trusted, given that the models rely on assumptions and simplifications.

Even though there may be a severe lack-of-knowledge about the best modeling strategy, recent wind turbine studies continue to consider a model to be of good quality when its predictions match physical experiments after calibration or validation exercises. Model complexity, as influenced by the model form and the number of parameters used to define the model, also affects the quality of model output [9, 10]. For example, when comparing the output of four different wind turbine drive train models, Martins et al. [11] found that the model that accounted for mechanical damping achieved the best agreement between measurements and simulations, while the less complex models provided poorer agreement with the experimental data. Another study found it necessary to include non-linearity in the structural response of a wind turbine blade to better match the deformation observed during experimental static testing [5]. However, when the quality of numerical models is determined solely by their fidelity to experimental data, model selection will typically favor FE models that are overly complex, at the risk of over-fitting the experimental data and at the cost of poor generalization to other, non-tested settings [9]. This tension in modeling and simulation has been formally recognized: it can be shown that fidelity-to-data, robustness to assumptions, and predictive capability are antagonistic attributes of any family of models [12].

To quantify how the lack-of-knowledge introduced by modeling assumptions and simplifications affects predictions, this manuscript proposes a novel, non-probabilistic decision analysis framework rooted in info-gap decision theory (IGDT). IGDT has emerged as a useful method to study the influence of our ignorance on numerical predictions and the decisions that they support [13]. The proposed methodology differs from other model selection methods (see Sect. 11.1.2) because it is non-probabilistic in nature and is performed by assessing the trade-offs between fidelity-to-data and robustness of predictions to our lack-of-knowledge. The basic premise is that a good-quality model should not only reproduce the available measurements, but also provide predictions that are as insensitive as possible to the modeling assumptions and simplifications. In the proposed methodology, model selection is achieved through a rigorous exploration of robustness versus accuracy of predictions. Understanding these trade-offs is important for the development of robust numerical models because it is the very mechanism through which the trustworthiness of predictions can be established.

The methodology proposed in this paper is demonstrated on the bending vibration of the CX-100 wind turbine blade developed at the Sandia National Laboratories (SNL). In two earlier studies, the FE model of the CX-100 blade underwent rigorous Verification and Validation (V&V) assessments to ensure the credibility of its predictions (see Mollineaux et al. [14] for the verification studies and Van Buren et al. [15] for the validation studies). These earlier studies are briefly summarized in Sect. 11.2 for completeness. More recently, the CX-100 blade was dynamically tested at the National Renewable Energy Laboratory (NREL) with large masses used to load the blade in bending. The added masses are modeled using two alternative strategies: (i) point masses and stiffening springs or (ii) high-fidelity solid elements. The ability of these two competing modeling strategies to replicate the experimentally obtained natural frequencies is discussed in Sect. 11.3. In Sect. 11.4, the fundamental principles behind IGDT are presented, and the extent to which the predictions of these two competing models are robust to uncertainties in the model input parameters is quantified. The strategy that implements high-fidelity solid elements is found to be both more accurate and more robust than the use of point masses. The practical implication of these findings is that the predictions of the solid-element FE model, and their accuracy, can be trusted even if some of the modeling assumptions on which it relies are incorrect.

11.1.2 Related Literature

Model selection has been widely studied because of the lack-of-knowledge involved in identifying an appropriate modeling strategy [16]. Model selection matters because different modeling strategies affect the quality of predictions. As early as the 1930s, it was observed that using the same data to train an algorithm and to evaluate its performance can lead to a false sense of confidence in the results [17]. One method to mitigate this shortcoming is cross-validation, in which the experimental data are divided into (i) a calibration set and (ii) one or more hold-out sets. The calibration set is used to update the model, and the model that best replicates the hold-out data is considered the best choice. The data-splitting technique used to divide the available experimental data into different sets is known to affect the model selection process [18].
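As a minimal illustration of the hold-out idea (not of any specific procedure from [17, 18]), the Python sketch below fits two hypothetical candidate models, a constant and a straight line, to a calibration subset and keeps the one with the smaller root-mean-square error on the hold-out subset. The data, the candidate models, and the split point `n_calib` are assumptions made for the example.

```python
import math
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
Model = Callable[[float], float]

def fit_constant(data: List[Point]) -> Model:
    """Least-squares constant model: predicts the mean response everywhere."""
    mean_y = sum(y for _, y in data) / len(data)
    return lambda x: mean_y

def fit_line(data: List[Point]) -> Model:
    """Ordinary least-squares straight line y = a + b * x."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x

def rmse(model: Model, data: List[Point]) -> float:
    """Root-mean-square difference between model predictions and data."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in data) / len(data))

def select_by_holdout(data: List[Point],
                      fitters: Dict[str, Callable[[List[Point]], Model]],
                      n_calib: int) -> Tuple[str, Dict[str, float]]:
    """Calibrate every candidate on data[:n_calib]; score it on the hold-out rest."""
    calib, holdout = data[:n_calib], data[n_calib:]
    scores = {name: rmse(fit(calib), holdout) for name, fit in fitters.items()}
    return min(scores, key=scores.get), scores

# Hypothetical measurements: a linear trend with a small alternating perturbation
data = [(float(x), 2.0 + 0.5 * x + (0.05 if x % 2 else -0.05)) for x in range(10)]
best, scores = select_by_holdout(data, {"constant": fit_constant, "line": fit_line}, n_calib=7)
print(best, {k: round(v, 3) for k, v in scores.items()})
```

Changing `n_calib`, or shuffling the data before splitting, changes which points are held out; experimenting with it is one way to see why the splitting technique can influence the selection.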

In the last decade, Bayesian methods have been widely pursued for model selection, with several approaches rooted in Bayesian theory, such as the intrinsic Bayes factor [19], fractional Bayes factor [22], deviance information criterion [23], and asymptotic approaches [24]. One advantage of Bayesian approaches is that they naturally account for structural uncertainty. Further, when sufficient experimental data are available, Bayesian model selection will choose the “true” model [25], provided that it is among the candidates considered. One drawback, however, is that defensible prior uncertainties are often difficult to develop, and they can affect the model selection outcome [26]. Despite these drawbacks, many studies have successfully demonstrated the use of Bayesian approaches in model selection [27, 28].

Several other methods have also been proposed to address the question of model selection, for example, the Akaike information criterion [29], minimum description length [30], likelihood ratio tests [31], and an information-theoretic measure of complexity [29, 32]. Myung [9] compares the performance of several model selection techniques and concludes that model complexity must be taken into account to avoid selecting an overly complex model. Recent studies have also acknowledged the importance of robustness in model selection [27, 33, 34].
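To make the complexity penalty concrete, the sketch below evaluates the Akaike information criterion for least-squares fits, assuming independent Gaussian residuals with unknown variance; in that case AIC reduces, up to a constant shared by all candidates, to \(n \ln(\mathrm{RSS}/n) + 2k\). The sample size, residual sums of squares, and parameter counts are hypothetical.

```python
import math

def aic_least_squares(n: int, rss: float, k: int) -> float:
    """AIC of a least-squares fit, up to a constant shared by all candidates.

    Assumes independent Gaussian residuals with unknown variance, for which
    -2 ln L_max = n ln(RSS / n) + const. k is the number of fitted parameters.
    """
    if n <= 0 or rss <= 0:
        raise ValueError("n and rss must be positive")
    return n * math.log(rss / n) + 2 * k

# Hypothetical candidates: the richer model fits slightly better (lower RSS)
candidates = {
    "simple (k=2)": aic_least_squares(n=20, rss=1.0, k=2),
    "complex (k=5)": aic_least_squares(n=20, rss=0.9, k=5),
}
preferred = min(candidates, key=candidates.get)
print(preferred)  # -> simple (k=2)
```

The richer model lowers the RSS, yet its extra \(2k\) penalty outweighs that gain, so the simpler model is preferred. Whether the noise variance is counted in \(k\) shifts every candidate by the same amount and does not change the ranking.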

The current investigation proposes to address the question of model selection using IGDT, which requires the definition of a family of models to explore the trade-off between fidelity-to-data and robustness to our lack-of-knowledge [13]. Such a family of models, referred to as an info-gap model, can originate from either a probabilistic or a non-probabilistic description of the uncertainty. Previous studies have demonstrated the usefulness of treating uncertainties in an info-gap context, for example to explore the robustness of model predictions to uncertainties in connection stiffnesses [35] and in industrial applications [36]. Herein, IGDT is applied to the model parameters to answer the question of model selection. The non-probabilistic treatment of uncertainty limits the assumptions introduced in the analysis while accounting for model complexity. In addition, explicitly addressing the robustness of model predictions to uncertainty establishes confidence despite the lack-of-knowledge about the modeling assumptions and parameter values used in the simulation.

11.2 Model Development and Experimental Campaign

11.2.1 Development of the CX-100 FE Model

This section reviews the development of the FE model of the CX-100 blade, discussed in depth by Mollineaux et al. [14] and Van Buren et al. [15]. The model is developed from an accurate description of the geometry, obtained from the design specifications of the wind turbine blade in NuMAD, a preprocessor developed at SNL, and imported into ANSYS version 12.1, where it is discretized with Shell-281 finite elements. The mesh discretization is based on an element size of Δx = 8 cm, which produces an overall solution uncertainty of 1.78% for the prediction of the low-frequency bending modes of the blade. This mesh size is chosen because the 1.78% numerical uncertainty is comparable to the 3-σ experimental variability of 1.62%, estimated from free-free modal testing performed at the Los Alamos National Laboratory (LANL) [37].
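The text does not state how the 1.78% solution uncertainty was computed. One common approach, assumed here purely for illustration, is Richardson extrapolation from three systematically refined meshes, summarized by Roache's Grid Convergence Index (GCI) with a safety factor of 1.25. The frequencies and mesh sizes below are hypothetical.

```python
import math

def richardson_gci(f_fine: float, f_medium: float, f_coarse: float,
                   r: float, fs: float = 1.25):
    """Observed order p, extrapolated value, and fine-grid GCI (Roache).

    Assumes a constant refinement ratio r > 1 and monotone convergence, i.e.
    (f_coarse - f_medium) and (f_medium - f_fine) have the same sign.
    """
    e21 = f_medium - f_fine
    e32 = f_coarse - f_medium
    if e21 == 0 or e32 / e21 <= 0:
        raise ValueError("non-monotone or already converged sequence")
    p = math.log(e32 / e21) / math.log(r)
    f_ext = f_fine + (f_fine - f_medium) / (r ** p - 1)
    gci_fine = fs * abs(e21 / f_fine) / (r ** p - 1)
    return p, f_ext, gci_fine

# Hypothetical first-bending frequencies (Hz) on meshes of 4, 8 and 16 cm
p, f_ext, gci = richardson_gci(10.0, 10.1, 10.5, r=2.0)
print(f"order p = {p:.2f}, extrapolated = {f_ext:.3f} Hz, GCI = {100 * gci:.2f}%")
# -> order p = 2.00, extrapolated = 9.967 Hz, GCI = 0.42%
```

A GCI obtained this way could then be compared with the 3-σ experimental variability, which is the kind of comparison used above to justify the 8-cm mesh.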

Six independent sections are defined in the development of the FE model: shear web, root, spar cap, trailing edge, leading edge with balsa, and leading edge without balsa. With the exception of the shear web, which is located inside the cross-section of the blade, these sections are illustrated in Fig. 11.1. Isotropic materials with smeared cross-sectional properties are used to define the material sections.

Modal testing performed at LANL in two configurations is used for calibration and validation: (i) free-free, where the blade is suspended with straps, and (ii) clamped-free, where a 250-kg steel bookend fixture is used to fix the base of the blade. The model parameters are calibrated to the free-free and clamped-free experimental natural frequencies in a two-step procedure [15]. To account for the uncertain fixity at the base of the blade, fictitious springs are introduced to implement a boundary condition that lies between the ideal “fixed” and “free” cases. The mode shape vectors are used to validate the FE model, with the modal assurance criterion (MAC) computed to quantify the agreement between simulation results and experimental data. An overall correlation of 84% is observed for the free-free modes and 94% for the clamped-free modes.
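For real mode shapes, the modal assurance criterion has the standard closed form \(\mathrm{MAC} = (\phi_a^T\phi_e)^2 / ((\phi_a^T\phi_a)(\phi_e^T\phi_e))\). The sketch below implements it for vectors sampled at matched measurement points; the example vectors are hypothetical, and how per-mode values are aggregated into the overall correlations quoted above is not specified here.

```python
from typing import List, Sequence

def mac(phi_a: Sequence[float], phi_e: Sequence[float]) -> float:
    """Modal assurance criterion between two real mode-shape vectors.

    Returns 1.0 when the shapes are identical up to a scale factor (including
    sign) and 0.0 when they are orthogonal.
    """
    if len(phi_a) != len(phi_e):
        raise ValueError("mode shapes must be sampled at the same points")
    dot = sum(a * e for a, e in zip(phi_a, phi_e))
    norm_a = sum(a * a for a in phi_a)
    norm_e = sum(e * e for e in phi_e)
    return dot * dot / (norm_a * norm_e)

def mac_matrix(model_modes: List[Sequence[float]],
               test_modes: List[Sequence[float]]) -> List[List[float]]:
    """MAC of every predicted mode (rows) against every measured mode (columns)."""
    return [[mac(pa, pe) for pe in test_modes] for pa in model_modes]

# Hypothetical flapwise shapes sampled at four stations along the blade
predicted = [[0.0, 0.2, 0.5, 1.0], [0.0, 0.6, 0.4, -1.0]]
measured = [[0.0, -0.22, -0.48, -1.0], [0.0, 0.55, 0.45, -0.9]]
for row in mac_matrix(predicted, measured):
    print(["%.3f" % v for v in row])
```

Because MAC is insensitive to scaling and sign, it compares shapes only; frequency agreement has to be checked separately.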

11.2.2 NREL Modal Testing of the CX-100 Wind Turbine Blade

The CX-100 wind turbine blade is attached to a 6,300-kg (7-ton) steel frame, which effectively provides a fixed-free boundary condition. Modal testing is performed using a roving impact hammer procedure in two setups: first in a fixed-free condition, and second with large masses clamped to the blade. Masses of 582 kg and 145 kg are added to the blade at the 1.60-m and 6.75-m locations, respectively. Four uni-axial accelerometers and one tri-axial accelerometer are used to collect data for hammer impacts at 65 locations: 47 in the flapwise direction and 18 in the edgewise direction. Three test replicates are performed with linear averaging and a 150 Hz sampling frequency. The acceleration response is collected with 4,096 sampling points and without a window function, because of the relatively long sampling period of 11 s [38].

The experimental setups and base fixture are shown in Fig. 11.2, and the first three flapwise frequencies are listed in Table 11.1. The boundary condition provided in the NREL testing differs from the boundary condition used in the previous modal testing performed at LANL [15, 37]. The mass-added configuration, with its significant mass loading and its change from a compliant boundary condition to a more rigid fixture, therefore defines a different configuration of the CX-100 wind turbine blade.

11.2.3 Fixed-Free Model of the CX-100 Wind Turbine Blade

Calibration of the fixed-free model is reconsidered because of the more rigid fixture used in the NREL experiments. Five statistically significant parameters of the fixed-free FE model of the wind turbine blade are identified using a forward propagation of uncertainty and sensitivity analysis. The influential parameters are: density of the trailing edge, density of the leading edge, density of the spar cap, modulus of elasticity of the spar cap, and the translational springs used to model the boundary condition perpendicular to the base fixity. The uncertainty bounds of the trailing edge density, leading edge density, and spar cap density are widened from the ± 25% bounds of previous parametric studies [15] to ± 50% bounds in the current study, after an exploratory design-of-experiments suggests that larger bounds are needed for the FE model to envelop the experimental data. The increase is limited to ± 50% because mode swapping occurs when the parameters are allowed to vary past these values. The upper and lower uncertainty bounds chosen for the spar cap modulus reflect the posterior uncertainty obtained from the previous free-free calibration. The uncertainty bounds of the boundary springs are determined from parametric studies of the fixed-free boundary condition. Measurements of the natural frequencies obtained during the NREL testing are used to calibrate the FE model. Instead of performing calibration as an optimization of model parameters to best-fit the experimental data, inference uncertainty quantification is performed to explore the posterior probability distribution of these parameters. To perform the inference efficiently, the FE model is executed using a three-level, full-factorial design-of-experiments to provide the simulation data required to train a fast-running Gaussian Process Model (GPM). A Markov Chain Monte Carlo (MCMC) algorithm is then used to explore the posterior distribution of the parameters, with the GPM emulator standing in for the FE model, where a statistical T-test retains only parameter values that provide frequency predictions sufficiently “close” to those measured experimentally. This methodology relies on the theory proposed by Kennedy and O’Hagan [39] and the computational framework developed by Higdon et al. [40].
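The sketch below shows the sampling step in its simplest form: a random-walk Metropolis algorithm exploring the posterior of a single, hypothetical stiffness scale factor θ under a uniform prior and a Gaussian likelihood. It is not the Kennedy and O’Hagan / Higdon et al. framework used in the study: there is no model-discrepancy term and no T-test acceptance step, and a closed-form stand-in (frequency proportional to \(\sqrt{\theta}\)) replaces the trained GPM. All numerical values are assumptions.

```python
import math
import random

F0 = 4.0            # hypothetical predicted frequency (Hz) at theta = 1
F_MEAS = 4.4        # hypothetical measured frequency (Hz)
SIGMA = 0.05        # hypothetical measurement standard deviation (Hz)
PRIOR = (0.5, 1.5)  # uniform prior bounds on the stiffness scale factor theta

def emulator(theta: float) -> float:
    """Stand-in for a trained GPM: frequency scales with sqrt(stiffness)."""
    return F0 * math.sqrt(theta)

def log_posterior(theta: float) -> float:
    """Unnormalized log posterior: flat prior on PRIOR, Gaussian likelihood."""
    lo, hi = PRIOR
    if not lo <= theta <= hi:
        return -math.inf
    resid = (emulator(theta) - F_MEAS) / SIGMA
    return -0.5 * resid * resid

def metropolis(n_samples: int, step: float, theta0: float, seed: int = 0) -> list:
    """Random-walk Metropolis sampler with a symmetric Gaussian proposal."""
    rng = random.Random(seed)
    theta, logp = theta0, log_posterior(theta0)
    chain = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        logp_new = log_posterior(proposal)
        # Accept with probability min(1, p_new / p_old); 1 - random() lies in (0, 1]
        if math.log(1.0 - rng.random()) < logp_new - logp:
            theta, logp = proposal, logp_new
        chain.append(theta)
    return chain

chain = metropolis(20000, step=0.05, theta0=1.0)[2000:]   # discard burn-in
mean = sum(chain) / len(chain)
std = math.sqrt(sum((t - mean) ** 2 for t in chain) / (len(chain) - 1))
prior_std = (PRIOR[1] - PRIOR[0]) / math.sqrt(12.0)
print(f"posterior mean {mean:.3f}, std {std:.3f} (prior std {prior_std:.3f})")
```

The posterior standard deviation comes out much smaller than that of the uniform prior, which is the kind of uncertainty reduction that a prior-versus-posterior comparison is meant to reveal.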

The results of the inference are summarized in Table 11.2. Columns 2–4 summarize the prior uncertainty, which represents the range of values used in the full-factorial design to train the GPMs. Columns 5–7 provide the posterior uncertainty, that is, the statistics inferred from the MCMC search algorithm. The inference is successful at “learning” the values of the parameters, as indicated by the reduction of the posterior uncertainty relative to the prior range.

A graphical representation of the posterior distribution is provided in Fig. 11.3, which plots the marginal distribution of each parameter on the diagonal and a probability contour of each pair of parameters in the off-diagonal boxes. The inference is successful in reducing the uncertainty of the spar cap modulus of elasticity and the boundary spring constants. The clustering of samples towards the lower bound in Fig. 11.3 suggests that the algorithm may be attracted to values outside the initial range provided to the GPM. The current analysis is nevertheless retained, because mode swapping occurs when the parameter values are allowed to vary outside the prior range.

Fig. 11.3 Marginal distributions and correlation functions corresponding to Table 11.2

Table 11.3 compares the experimentally obtained natural frequencies to those predicted by the FE model with the parameters set to the mean values of the posterior distribution. The simulation consistently under-predicts the experimental results by 0.5–3.4%. Due to the relatively small error in the predictions of resonant frequencies, and the fact that the predicted mode shape deflections correlate well with those measured experimentally, the accuracy of the calibrated fixed-free model is deemed acceptable. The same model is used next to explore the mass-added configuration.

11.3 Model Development for the Mass-Added Configuration of the CX-100 Wind Turbine Blade

This section discusses the development of two competing FE models used to simulate the NREL mass-added configuration. The two models pursued in this section are developed using different assumptions and simplifications, using either (i) a system of fictitious point masses and stiffening springs, or (ii) three-dimensional solid elements that represent the geometry of the added masses with high fidelity.

11.3.1 Development of the Point Mass Model

At each of the two locations, the added mass is approximated by a single, central point mass. The point masses are then connected to the nodes of the blade by fictitious springs that represent the interaction between the blade and the added masses. This configuration is shown in Fig. 11.4. Springs are used in this modeling strategy because exploratory FE simulations indicate that attaching the point masses directly to the shell model of the blade introduces low-order modes with local deformations at the cross-sections where the masses are added. The fictitious springs therefore maintain the rigidity of the blade cross-section at these locations. This modeling strategy offers a compromise between a short time-to-solution and a reasonable representation of the vibration mechanics.

Table 11.4 lists the parameters used to develop the point-mass representation added to the three-dimensional blade model discretized with shell elements. This simplified modeling strategy introduces only six new model parameters.

To preserve the calibrated, fixed-free model discussed earlier in Sect. 11.2.3, only the spring stiffness coefficients are calibrated in the mass-added configuration (parameters 1, 2, 4, and 5 listed in Table 11.4). A parametric study is performed to evaluate the effect of the spring stiffness on frequency predictions.

Figure 11.5 shows the frequency predictions as the spring stiffness values are varied from 10 to \(10^{10}\,\mathrm{N/m}\). As the spring stiffness increases, the natural frequencies also increase, reflecting the stiffer interaction between the blade and the added masses. Around a value of \(10^{6}\,\mathrm{N/m}\), the natural frequencies plateau at values that consistently under-predict the experimental natural frequencies. Because the parametric study in Fig. 11.5 does not produce predictions that envelop the experimentally obtained natural frequencies, a calibration of the spring stiffness would likely converge to the upper bound of its range. The value of \(10^{8}\,\mathrm{N/m}\), indicated by the vertical black line in Fig. 11.5, is therefore chosen for the spring stiffness.
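The plateau can be reproduced qualitatively with a toy two-degree-of-freedom system, sketched below under deliberately simplified assumptions: the blade is reduced to a single mass \(m_1\) on a spring \(k_1\), and the point mass \(m_2\) is attached through a connecting spring \(k_c\). All values are hypothetical and the model is not the shell FE model. As \(k_c\) grows, the first frequency rises and then levels off at the rigid-attachment limit \(\sqrt{k_1/(m_1+m_2)}/2\pi\); beyond that point, stiffer springs cannot raise it further.

```python
import math

def two_dof_frequencies(m1: float, k1: float, m2: float, kc: float):
    """Natural frequencies (Hz) of a toy two-degree-of-freedom system.

    DOF 1: blade proxy, mass m1 on a spring k1 to ground.
    DOF 2: point mass m2 attached to DOF 1 through a connecting spring kc.
    det(K - lam * M) = 0 gives a*lam**2 + b*lam + c = 0 with lam = omega**2.
    """
    a = m1 * m2
    b = -(m1 * kc + m2 * (k1 + kc))
    c = k1 * kc
    root = math.sqrt(b * b - 4.0 * a * c)   # always real for this system
    lam_high = (-b + root) / (2.0 * a)
    lam_low = 2.0 * c / (-b + root)         # stable form, avoids cancellation
    return tuple(math.sqrt(lam) / (2.0 * math.pi) for lam in (lam_low, lam_high))

M1, K1, M2 = 100.0, 4.0e5, 150.0   # hypothetical masses (kg) and stiffness (N/m)
rigid_limit = math.sqrt(K1 / (M1 + M2)) / (2.0 * math.pi)
for exponent in (1, 4, 6, 8, 10):
    f_low, _ = two_dof_frequencies(M1, K1, M2, 10.0 ** exponent)
    print(f"kc = 1e{exponent} N/m -> first frequency {f_low:.4f} Hz")
print(f"rigid-attachment limit: {rigid_limit:.4f} Hz")
```

In this toy system, any discrepancy that remains once the plateau is reached must come from the masses or the blade stiffness rather than from the connecting springs, which mirrors why adjusting only the spring stiffness cannot envelop the measured frequencies.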

Table 11.5 compares the natural frequencies measured experimentally to those predicted by the mass-added FE model. Again, the frequencies are consistently under-predicted by the model; however, the absolute differences for the first three modes, 0.37, 0.38, and 1.13 Hz, demonstrate an acceptable fidelity-to-data despite the minimal calibration activities performed after the model was modified to include the added masses.

11.3.2 Development of the Solid Mass Model

The second modeling strategy is to represent the added-mass configuration of the blade with the highest possible degree of geometrical fidelity. Three-dimensional solid elements (Solid-186 elements in ANSYS) are used to represent the geometry of the experimental setup.

Four sections, shown in Fig. 11.6, are used to model the added masses on the blade: the 1.60-m mass, which is the wooden form attached to the blade at the 1.60-m station; the two symmetric 1.60-m offset masses, which represent the hydraulic actuator system at the 1.60-m station; and the 6.75-m mass, which is the wooden form attached to the blade at the 6.75-m station. The wooden forms are modeled using the geometry of the masses obtained from design specifications. The geometry of the offset masses of the hydraulic actuator system is simplified into rectangular solids. Figure 11.7 compares the actual geometry of the experimental setup to the simplification implemented in the FE model. Because of the complicated geometry of the offset masses, expert opinion is used to identify their center of gravity, which introduces uncertainty in the analysis.

It is emphasized that, while this modeling strategy provides a better representation of the masses than the point-mass and spring system, the fundamental form of the model is still incorrect because it assumes that the added masses can be represented using four homogenized sections. Another important factor is the time-to-solution: the vibration analysis of this model is significantly more expensive than that of the point-mass model. One objective of the investigation is to understand the extent to which the point-mass model can deliver accuracy and robustness similar to those of the solid-mass model at a lower computational cost.

The parameters used to develop the solid-mass representation are listed in Table 11.6. The parameterization includes the geometry of the offset masses, represented by the coordinates of their centers of gravity, which influence the torsional response of the blade. While imperfect knowledge of the material parameters introduces parametric uncertainty in the prediction of the vibration response, imperfect knowledge of the center-of-gravity coordinates also introduces numerical uncertainty, because the mesh changes each time a different location of the center of gravity is implemented. This re-meshing step also significantly increases the computational cost of the analysis. A close-up of the offset masses at the 1.60-m station is provided in Fig. 11.7 to highlight the good qualitative agreement between the geometries of the experimental setup and the FE model representation.

With the masses represented using solid elements, the density is back-calculated such that the weights of the masses implemented in the FE model match the weights measured experimentally. The elastic modulus of the wooden forms is set to the documented value for balsa wood. The agreement between predictions of the FE model and experimental measurements is listed in Table 11.7.
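The back-calculation amounts to \(\rho = m_{\mathrm{exp}} / V_{\mathrm{FE}}\), with \(V_{\mathrm{FE}}\) the volume of the solid section in the model. The sketch below applies it to the 145-kg mass at the 6.75-m station with a hypothetical volume; in practice the volume would, by assumption, be read from the meshed solid, since re-meshing can change it slightly.

```python
def back_calculated_density(measured_mass_kg: float, fe_volume_m3: float) -> float:
    """Density (kg/m^3) that makes the FE solid weigh as much as the measured mass."""
    if fe_volume_m3 <= 0.0:
        raise ValueError("FE volume must be positive")
    return measured_mass_kg / fe_volume_m3

# 145-kg mass at the 6.75-m station; the 0.25 m^3 solid volume is hypothetical
rho = back_calculated_density(145.0, 0.25)
print(f"back-calculated density: {rho:.0f} kg/m^3")  # -> back-calculated density: 580 kg/m^3
```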

11.4 Analysis of Robustness to Uncertainty Applied to Models of the CX-100 Wind Turbine Blade

This section discusses IGDT and how it is employed to evaluate the robustness to uncertainty of model predictions. By establishing robustness, one demonstrates the extent to which the predictions remain sufficiently accurate, even if some of the modeling assumptions and parameter values used in the simulation are incorrect. Lack-of-robustness, on the other hand, indicates that the expected level of accuracy obtained, for example, through calibration, may not be reached if some of the assumptions and parameter values happen to be incorrect. Analyzing the robustness of the FE model does not necessarily translate into a reduction of prediction uncertainty. It helps, instead, to identify a potentially dangerous situation whereby the predictions, and their accuracy, are sensitive to aspects of the modeling that may be unknown and/or uncontrolled.

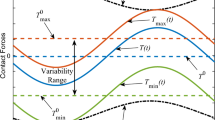

11.4.1 Conceptual Demonstration of Robustness Analysis

For the info-gap analysis presented here, the allowable range of variation of the model parameters is controlled by an uncertainty parameter, α. The specific definition of α is not essential; what matters is that increasing its value defines a nested family of models. For simplicity, α is kept unitless: because it has no physical unit, the same level of uncertainty, α, can be applied simultaneously to multiple parameters of the two modeling strategies. The second ingredient of the info-gap analysis is the performance metric of the model, here quantified by the root mean squared difference between simulation predictions and experimental observations. A conceptual illustration is provided next to describe how the robustness of the competing FE models is evaluated.

Consider a model defined using two uncertain parameters, u1 and u2. Figure 11.8a describes the nominal performance of the model, where u1 and u2 are set to initial, best-guess (nominal) values. As the uncertainty, α, is increased, the parameters are allowed to vary within a range of permissible values (see Sect. 11.4.2 for how the bounds are chosen for the parameters in our application). As a result, the parameters are varied from their nominal settings to become \(\tilde{u}_1\) and \(\tilde{u}_2\). Herein, the allowable range of variation of \(\tilde{u}_1\) and \(\tilde{u}_2\) is referred to as the uncertainty space. At the uncertainty level \(\alpha_1\), it is represented as a two-dimensional rectangle of size \((\alpha_1)^2\) in Fig. 11.8b, c. With such changes in the input parameters, the model performance either improves or degrades. We would therefore like to explore the best and worst achievable performances as \(\tilde{u}_1\) and \(\tilde{u}_2\) are allowed to venture away from their nominal values while remaining within the uncertainty space defined by \(\alpha_1\). The improvement of the performance is described as the opportuneness, and the degradation of performance as the robustness. At any level of uncertainty α, the opportuneness and robustness points are obtained by solving two global optimization problems that search for the best and worst performances, respectively, over the space of allowable values of \(\tilde{u}_1\) and \(\tilde{u}_2\). Figure 11.8b, c illustrate the development of the robustness and opportuneness functions.
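The two optimization problems can be illustrated with a toy model, sketched below under stated assumptions: two hypothetical parameters, a stiffness-like \(u_1\) and a mass-like \(u_2\), scale three predicted frequencies as \(\sqrt{u_1/u_2}\); α is treated as a fractional half-width of the rectangle around the nominal values; and an exhaustive grid search stands in for the global optimizer. The worst RMS error on the grid gives a robustness point and the best gives an opportuneness point.

```python
import math
from itertools import product

C = (4.0, 11.0, 22.0)          # hypothetical modal coefficients (Hz)
MEASURED = (4.1, 11.2, 22.5)   # hypothetical measured frequencies (Hz)
NOMINAL = (1.0, 1.0)           # nominal (best-guess) values of u1 and u2

def predict(u1: float, u2: float) -> list:
    """Toy model: frequencies scale with sqrt(stiffness-like u1 / mass-like u2)."""
    return [c * math.sqrt(u1 / u2) for c in C]

def rms_error(pred, meas=MEASURED) -> float:
    """Performance metric R: root mean squared prediction error."""
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(pred, meas)) / len(meas))

def uncertainty_box(alpha: float):
    """Rectangle of allowable (u1, u2): each within +/- alpha times its nominal value."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1) so that u2 stays positive")
    return [(u * (1.0 - alpha), u * (1.0 + alpha)) for u in NOMINAL]

def worst_and_best(alpha: float, n: int = 21):
    """Exhaustive grid search: (robustness point, opportuneness point) at level alpha."""
    axes = [[lo + (hi - lo) * i / (n - 1) for i in range(n)]
            for lo, hi in uncertainty_box(alpha)]
    errors = [rms_error(predict(u1, u2)) for u1, u2 in product(*axes)]
    return max(errors), min(errors)

for alpha in (0.0, 0.05, 0.1):
    worst, best = worst_and_best(alpha)
    print(f"alpha = {alpha:.2f}: worst R = {worst:.3f}, best R = {best:.3f}")
```

A grid search is practical only for a handful of parameters and a cheap model; it is used here because it makes the search over the rectangle explicit.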

Figure 11.8d illustrates that as the uncertainty parameter, α, increases, the uncertainty space also increases. If the uncertainty space is defined to have nested intervals as α increases, as suggested in Fig. 11.8d, then the opportuneness and robustness curves are monotonic functions, because the global optimizations that they represent are performed within ever-growing spaces. Figure 11.8e then shows the resulting opportuneness and robustness curves, developed from the evaluation of the best and worst performances at three levels of uncertainty. Particular attention is paid to the robustness curve and its shape, which are useful for evaluating the worst-case performance of the model under increasing uncertainty bounds. A “steep,” or nearly vertical, robustness curve indicates that the predictions are insensitive to increasing levels of uncertainty, α. Such an observation would be welcome, as it would reinforce our conviction that the model can be applied with confidence even if some of the assumptions used for its development are questionable. Conversely, a robustness curve with a small slope, \(\Delta\alpha/\Delta R\), which denotes a small gain in robustness, \(\Delta\alpha\), relative to a change in performance, \(\Delta R\), indicates that the model predictions are sensitive to the values of u1 and u2 used in the simulation. Such a lack of robustness would decrease the level of trust placed in the assumptions upon which the model relies.
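Because the uncertainty spaces are nested, the worst case at a larger α can never be better than at a smaller α. The sketch below, for a hypothetical one-parameter model, builds both curves and exploits this property by carrying the running maximum and minimum forward, which keeps the curves monotone even when a coarse search misses an extreme. It also computes the slope \(\Delta\alpha/\Delta R\) between successive robustness points; larger values correspond to a steeper, more robust curve. The model, the measured value, and the interval definition are assumptions.

```python
import math

def performance(u: float) -> float:
    """Hypothetical one-parameter model: absolute frequency error R (Hz)."""
    return abs(10.0 * math.sqrt(u) - 10.3)

def info_gap_curves(alphas, nominal=1.0, n=41):
    """Robustness (worst R) and opportuneness (best R) curves over sorted alphas.

    The intervals [nominal*(1-a), nominal*(1+a)] are nested, so the running
    max/min carried forward keeps both curves monotone even if the coarse
    search misses an extreme inside a smaller interval.
    """
    worst, best = [], []
    running_max, running_min = -math.inf, math.inf
    for a in alphas:
        lo, hi = nominal * (1.0 - a), nominal * (1.0 + a)
        values = [performance(lo + (hi - lo) * i / (n - 1)) for i in range(n)]
        running_max = max(running_max, max(values))
        running_min = min(running_min, min(values))
        worst.append(running_max)
        best.append(running_min)
    return worst, best

def robustness_slopes(alphas, worst):
    """Slope delta(alpha) / delta(R) between successive robustness points."""
    slopes = []
    for (a1, r1), (a2, r2) in zip(zip(alphas, worst), zip(alphas[1:], worst[1:])):
        slopes.append((a2 - a1) / (r2 - r1) if r2 != r1 else math.inf)
    return slopes

alphas = [0.0, 0.1, 0.2, 0.3]          # must be sorted in increasing order
worst, best = info_gap_curves(alphas)
print("worst R:", [round(r, 3) for r in worst])
print("best R: ", [round(r, 3) for r in best])
print("slopes: ", [round(s, 3) for s in robustness_slopes(alphas, worst)])
```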

11.4.2 Rationale for the Definition of Uncertainty

To promote a fair comparison of the two modeling strategies, the parameters are varied in such a way that the effect of the maximum parameter variation on the bending frequency predictions is consistent with the difference between the competing models at their nominal settings. Doing so ensures that the effect on predictions of the allowable range of parameter variation is commensurate with the effect of changing the model form between the competing strategies. Because model selection concerns only how the masses are added to the existing shell representation, the info-gap analysis is restricted to the model parameters that define the added masses. At their nominal configurations, the two models differ by 20%, on average, in their predictions of the first three flapwise frequencies. The models are held at their nominal configurations while the weights of the masses are varied, using the mass parameter for the point-mass model and the density parameter for the solid-mass model. Table 11.8 summarizes the results of this mass-only variation, where 0% variation indicates the models held at their nominal setting.

The results presented in Table 11.8 are plotted in Fig. 11.9 for clarity. It is emphasized that the two curves behave slightly differently, even though only the mass parameters are allowed to vary in both models. This difference is attributed to the combined effect of parameter variation and model form on the frequency predictions. Figure 11.9 demonstrates that an 18% variation in the masses is necessary to reproduce the 20% variation observed between the two models at their nominal configurations. Thus, the lower and upper bounds of the variation corresponding to α = 1 are defined by allowing the mass parameters to vary by up to ±18%. Having defined the parameter variations corresponding to any value of α, the info-gap analysis can be used to address the question of model selection.
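Reading the required mass variation off a curve such as Fig. 11.9 amounts to an inverse interpolation. The sketch below assumes piecewise-linear interpolation of a tabulated sweep; the sweep values and the nominal parameter values are invented and are not those of Table 11.8.

```python
def mass_variation_for_target(mass_pct, freq_pct, target):
    """Linearly interpolate the mass variation (%) that produces `target` (%)
    of average frequency variation, from a tabulated sweep."""
    pairs = sorted(zip(freq_pct, mass_pct))
    for (f0, m0), (f1, m1) in zip(pairs, pairs[1:]):
        if f0 <= target <= f1:
            if f1 == f0:
                return m0
            return m0 + (target - f0) * (m1 - m0) / (f1 - f0)
    raise ValueError("target lies outside the tabulated range")


def bounds_at_alpha_one(nominal, variation_pct):
    """Lower/upper bounds from varying each nominal value by +/- variation_pct."""
    frac = variation_pct / 100.0
    return [u * (1 - frac) for u in nominal], [u * (1 + frac) for u in nominal]


sweep_mass = [0.0, 10.0, 20.0]  # made-up mass variations (%)
sweep_freq = [0.0, 12.0, 22.0]  # made-up average frequency variations (%)
needed = mass_variation_for_target(sweep_mass, sweep_freq, 20.0)
print(needed)
print(bounds_at_alpha_one([100.0, 50.0], needed))  # hypothetical nominal values
```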

11.4.3 Selection of the Mass Added Models

The info-gap analysis is performed for the competing FE models to explore the robustness of predictions obtained by each one of them. Upper and lower bounds of model parameters are defined corresponding to the level of uncertainty being evaluated, as suggested in Fig. 11.8 in the case of only two parameters. The uncertainty space is a hyper-cube defined from the lower and upper bounds for the vector of parameters u. Its size, or volume, increases monotonically depending on the level of uncertainty considered, α:

The level of uncertainty, α, is multiplied by 0.2 such that when α = 1 the parameters are varied by ±20%, consistent with the mass-added variation pursued in Sect. 11.4.2. The robustness and opportuneness functions are evaluated in increments of 0.5 in α. For each level of uncertainty evaluated, the “fmincon” optimization solver of Matlab™ is used to search for the combination of parameters within the family of models that produces the worst-case (for robustness) or best-case (for opportuneness) performance. It is emphasized that a new input deck, which includes re-meshing in the case of the solid element model, is generated and submitted to ANSYS each time a combination of model parameters is evaluated during the optimization. The results of the ANSYS analysis must then be loaded back into Matlab™ memory. This strategy requires significant scripting to automate the procedure, but it avoids the development of statistical emulators that may introduce unwanted approximations.
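As a sketch in the usual info-gap notation (Ben-Haim 2006), and assuming that each of the N parameters is bounded relative to a positive nominal value $u_k^{(0)}$ with the factor 0.2 quoted above, one form of the hyper-cube consistent with this description is given below. The symbols $\hat{R}$ and $\check{R}$ for the worst- and best-case performances are introduced here for illustration only.

$$
\mathcal{U}\big(\alpha;\mathbf{u}^{(0)}\big) = \Big\{ \mathbf{u} \in \mathbb{R}^N : (1 - 0.2\,\alpha)\,u_k^{(0)} \le u_k \le (1 + 0.2\,\alpha)\,u_k^{(0)},\; k = 1,\dots,N \Big\}, \qquad \alpha \ge 0,
$$

$$
\hat{R}(\alpha) = \max_{\mathbf{u} \in \mathcal{U}(\alpha;\mathbf{u}^{(0)})} R(\mathbf{u}), \qquad
\check{R}(\alpha) = \min_{\mathbf{u} \in \mathcal{U}(\alpha;\mathbf{u}^{(0)})} R(\mathbf{u}).
$$

Because $\mathcal{U}(\alpha_1;\mathbf{u}^{(0)}) \subseteq \mathcal{U}(\alpha_2;\mathbf{u}^{(0)})$ whenever $\alpha_1 \le \alpha_2$, the worst-case error $\hat{R}$ is non-decreasing and the best-case error $\check{R}$ is non-increasing in α, which is the monotonic behavior of the robustness and opportuneness curves described for Fig. 11.8e.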

To ensure that the optimization is initiated with a high-quality guess, all combinations of the upper and lower bounds, or “corners” of the hyper-cube space, are evaluated using a two-level full-factorial design-of-experiments. The optimization is then initiated using the combination of model parameters that yields the maximum or minimum performance of the full-factorial design. Because the objective is to compare the prediction accuracies of the two modeling strategies, model performance is defined as the root mean squared error of natural frequencies for the first three flapwise bending modes:

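$$
R = \sqrt{\frac{1}{3}\sum_{k=1}^{3}\left(\omega_k^{\mathrm{sim}} - \omega_k^{\mathrm{exp}}\right)^2}
$$

where R denotes the model performance metric, $\omega_k^{\mathrm{sim}}$ is the numerical prediction of the k-th natural frequency, and $\omega_k^{\mathrm{exp}}$ is the experimental measurement of the same frequency.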
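The performance metric and the two-level full-factorial starting point can be sketched in Python as follows. The function `toy_model`, its 1/√m frequency law, and all numbers are hypothetical; in the study each corner corresponds to an ANSYS run, and the subsequent `fmincon` refinement is not reproduced here.

```python
import itertools
import math


def rmse(omega_sim, omega_exp):
    """Root mean squared error between predicted and measured frequencies."""
    if len(omega_sim) != len(omega_exp) or not omega_exp:
        raise ValueError("need equal-length, non-empty frequency lists")
    return math.sqrt(sum((s - e) ** 2 for s, e in zip(omega_sim, omega_exp)) / len(omega_exp))


def corner_start(model, lower, upper, omega_exp, worst_case=True):
    """Evaluate all 2**N corners of the box [lower, upper] (two-level full
    factorial) and return the corner with the largest RMSE (worst_case=True)
    or the smallest RMSE (worst_case=False), together with that RMSE."""
    best_corner, best_r = None, None
    for corner in itertools.product(*zip(lower, upper)):
        r = rmse(model(corner), omega_exp)
        if best_r is None or (r > best_r if worst_case else r < best_r):
            best_corner, best_r = corner, r
    return best_corner, best_r


def toy_model(u):
    """Hypothetical model: frequencies scale like 1/sqrt(mass multiplier)."""
    m1, m2 = u
    return [3.1 / math.sqrt(m1), 8.3 / math.sqrt(m2), 16.2 / math.sqrt(m1 * m2)]


omega_exp = [3.0, 8.0, 16.0]  # made-up "measured" frequencies (Hz)
lower, upper = (0.8, 0.8), (1.2, 1.2)  # +/-20% around nominal multipliers of 1
print(corner_start(toy_model, lower, upper, omega_exp, worst_case=True))
print(corner_start(toy_model, lower, upper, omega_exp, worst_case=False))
```

The returned corner would then serve as the initial guess for a local optimizer restricted to the same box.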

Tables 11.9 and 11.10 define the parameters associated with the competing models, along with the ranges of variation specified for the info-gap analysis at the level of uncertainty α = 1. Note that the center-of-gravity parameter in Table 11.10 affects the definition of the meshes used in the solid mass representation. This means that the uncertainty parameter, α, influences both the material properties (density, elastic modulus) and the numerical uncertainty of the FE predictions, through the changes that it brings to the mesh discretization.

Figure 11.10 presents the results of the info-gap analysis performed on the competing FE models. The nominal performance, associated with a level of uncertainty of α = 0, clearly demonstrates that the solid mass model reproduces the experimental data better than the point mass model. Further, as the uncertainty parameter increases, the solid mass model remains the preferable modeling strategy. In other words, the solid mass model provides a higher degree of accuracy at every level of modeling uncertainty, α. Moreover, the robustness slopes of the competing models are relatively similar despite their different representations of reality, so the advantage of the solid mass model at α = 0 is preserved as α grows. The result of this analysis demonstrates unambiguously that the solid mass model is the preferable modeling strategy, despite the lack-of-knowledge associated with the modeling assumptions and parameters used in the simulation.
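The two observations above, dominance at every level of uncertainty and similar robustness slopes, can be checked mechanically once the robustness curves are tabulated. The sketch below uses invented worst-case errors for two hypothetical models; the values are not read from Fig. 11.10.

```python
import math


def robustness_slopes(alphas, worst_r):
    """Slope delta-alpha / delta-R between consecutive points of a robustness curve.

    A zero change in worst-case error (a vertical curve) gives math.inf,
    i.e. predictions that are insensitive to the added uncertainty.
    """
    slopes = []
    for a0, a1, r0, r1 in zip(alphas, alphas[1:], worst_r, worst_r[1:]):
        dr = r1 - r0
        slopes.append(math.inf if dr == 0 else (a1 - a0) / dr)
    return slopes


def dominates(worst_a, worst_b):
    """True if model A's worst-case error is lower than model B's at every level."""
    return all(ra < rb for ra, rb in zip(worst_a, worst_b))


alphas = [0.0, 0.5, 1.0]
worst_model_1 = [0.60, 0.85, 1.10]  # invented worst-case errors, hypothetical model 1
worst_model_2 = [0.40, 0.65, 0.90]  # invented worst-case errors, hypothetical model 2
print(dominates(worst_model_2, worst_model_1))
print(robustness_slopes(alphas, worst_model_1), robustness_slopes(alphas, worst_model_2))
```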

11.5 Conclusion

This manuscript discusses a decision analysis framework for model selection that considers the trade-offs between the ability of a numerical simulation, first, to replicate the experimental data and, second, to provide predictions that are robust to the modeling assumptions identified during model development. Modeling assumptions are typically formulated when developing numerical simulations, for example the use of fictitious boundary springs, or the implementation of smeared properties for composite materials instead of an explicit definition of the individual layers. Although such assumptions have become commonplace, their effect on model predictions often remains unknown. Another common practice is to consider that a model achieves sufficient “predictability” as long as its predictions reproduce the experimental measurements. Our contention is that assessing models based only on their fidelity-to-data, while ignoring the effect that the modeling assumptions may exert on predictions, is not a sound strategy for model selection.

The framework discussed in this study is applied to competing models used to simulate an experimental configuration of the CX-100 wind turbine blade in which masses are added to the blade. Experimental data obtained from a fixed-free modal analysis performed at the National Renewable Energy Laboratory, with and without added masses, are utilized. The wind turbine blade is bolted to a 6,300-kg steel frame to define the fixed-free configuration. Masses are added at the 1.60-m and 6.75-m sections to define the mass-added configuration, which enhances the flapwise bending vibrations. The FE model of the blade, developed in a previous verification and validation study, is first calibrated to measurements of the fixed-free configuration. Calibration results show that the FE model replicates the experimental frequencies within an average error of 2%. Two modeling strategies are then considered for implementing the masses in the existing FE model, using (i) point masses and stiffening springs and (ii) high-fidelity solid elements. To examine the predictive capability of the mass-added FE models, only limited calibration exercises are performed beyond the initial calibration to the fixed-free configuration. At their nominal configurations, the point mass model reproduces the experimental data to within an average error of 11.1%, and the solid mass model to within 8.5%, for the first three flapwise bending natural frequencies.

An info-gap analysis is performed to address the question of model selection. An advantage of info-gap analysis is that the formulation of prior probability distributions can be avoided, because the analysis substitutes numerical optimization for statistical sampling. Further, robustness to our lack-of-knowledge about the modeling assumptions and parameter values is accounted for when evaluating model performance. The info-gap analysis is performed through parameter variation, where the maximum range of variation is chosen such that the change in model predictions is consistent with the change induced by the differing modeling strategies. It is observed that the solid mass model is not only more accurate, but also exhibits better robustness to modeling assumptions and unknown parameter values. Even though the solid mass model is a more complex representation of reality and comes with a higher computational cost, the analysis concludes unambiguously that it is the preferable modeling strategy for this application.

References

Griffith DT, Ashwill TD (2011) The Sandia 100-meter all-glass baseline wind turbine blade: SNL100-00, Sandia technical report SAND2011-3779, Sandia National Laboratories, Albuquerque

Quarton DC (1998) The evolution of wind turbine design analysis: a twenty year progress review. Wind Energy 1(S1):5–24

Veers PS, Laird DL, Carne TG, Sagartz MJ (1998) Estimation of uncertain material parameters using modal test data. In: 36th AIAA aerospace sciences meeting, Reno

Det Norske Veritas (2010) Design and manufacture of wind turbine blades, offshore and onshore wind turbines. Det Norske Veritas, Høvik

Jensen FM, Falzon BG, Ankersen J, Stang H (2006) Structural testing and numerical simulation of a 34-m composite wind turbine blade. Compos Struct 76(1–2):52–61

Leishman JG (2002) Challenges in modeling the unsteady aerodynamics of wind turbines. Wind Energy 5(2–3):85–132

Freebury G, Musial W (2000) Determining equivalent damage loading for full-scale wind turbine blade fatigue tests. In: 19th ASME wind energy symposium, Reno

Dalton S, Monahan L, Stevenson I, Luscher DJ, Park G, Farinholt K (2012) Towards the experimental assessment of NLBeam for modeling large deformation structural dynamics. In: Mayes R, Rixen D, Griffith DT, DeKlerk D, Chauhan S, Voormeeren SN, Allen MS (eds), Topics in experimental dynamics substructuring and wind turbine dynamics, vol 2. Springer, New York, pp 177–192

Myung J (2000) The importance of complexity in model selection. J Math Psychol 44(1):190–204

Atamturktur S, Hegenderfer J, Williams B, Egeberg M, Unal C (2012) A resource allocation framework for experiment-based validation of numerical models. J Mech Adv Mater Struct (conditionally accepted)

Martins M, Perdana A, Ledesma P, Agneholm E, Carlson O (2007) Validation of fixed speed wind turbine dynamic models with measured data. Renew Energy 32(8):1301–1316

Ben-Haim Y, Hemez FM (2012) Robustness, fidelity and prediction-looseness of models. Proc R Soc A 468(2137):227–244

Ben-Haim Y (2006) Info-gap decision theory: decisions under severe uncertainty, 2nd edn. Academic, Oxford

Mollineaux MG, Van Buren KL, Hemez FM, Atamturktur S (2012) Simulating the dynamics of wind turbine blades: part I, model development and verification. Wind Energy. doi:10.1002/we.1521

Van Buren KL, Mollineaux MG, Hemez FM, Atamturktur S (2012) Simulating the dynamics of wind turbine blades: part II, model validation and uncertainty quantification. Wind Energy. doi:10.1002/we.1522

Draper D (1995) Assessment and propagation of model uncertainty. J R Stat Soc Ser B 57(1):45–97

Larson SC (1931) The shrinkage of the coefficient of multiple correlation. J Educ Psychol 22(1):45–55

Kadane JB, Dickey JM (1980) Bayesian decision theory and the simplification of models. In: Kmenta J, Ramsey J (eds) Evaluation of econometric models. Academic, New York, pp 245–268

Arlot S, Celisse A (2010) A survey of cross-validation procedures for model selection. Stat Surv 4:40–79

Berger J, Pericchi L (1996) The intrinsic Bayes factor for model selection and prediction. J Am Stat Assoc 91(433):109–122

Graybill FA (1976) Theory and application of the linear model. Wadsworth, Belmont

O’Hagan A (1995) Fractional Bayes factor for model comparison. J R Stat Soc Ser B 57(1):99–138

Spiegelhalter DJ, Best NG, Carlin BP, van der Linde A (2002) Bayesian measure of model complexity and fit (with discussion). J R Stat Soc Ser B 64(4):583–649

Wasserman L (2000) Bayesian model selection and model averaging. J Math Psychol 44(1):92–107

Berger JO, Pericchi LR (2001) Objective Bayesian methods for model selection: introduction and comparison (with discussion). In: Lahiri P (ed) Model selection. IMS, Beachwood, pp 135–207

Kadane JB, Lazar NA (2004) Methods and criteria for model selection. J Am Stat Assoc 99(465):279–290

Terejanu G, Oliver T, Simmons C (2011) Application of predictive model selection to coupled models. In: Proceedings of the world congress on engineering and computer science, San Francisco

Beck JL, Yuen KV (2004) Model selection using response measurements: Bayesian probabilistic approach. J Eng Mech 130(2):192–203

Bozdogan H (2000) Akaike information criterion and recent developments in information complexity. J Math Psychol 44(1):62–91

Grunwald P (2000) Model selection based on minimum description length. J Math Psychol 44(1):133–152

Posada D, Buckley TR (2004) Model selection and model averaging in phylogenetics: advantages of Akaike information criterion and Bayesian approaches over likelihood ratio tests. Syst Biol 53(5):793–808

Bozdogan H, Bearse PM (1997) Model selection using informational complexity with applications to vector autoregressive (VAR) models. In: Dowe D (ed) Information, statistics, and induction in sciences (ISIS) anthology. Springer, Berlin/New York

Müller S, Welsh AH (2005) Outlier robust model selection in linear regression. J Am Stat Assoc 100(472):1297–1310

Johnson JB, Omland KS (2004) Model selection in ecology and evolution. Trends Ecol Evol 19(2):101–108

Vinot P, Cogan S, Ben-Haim Y (2002) Reliability of structural dynamic models based on info-gap models. In: 20th international modal analysis conference, Los Angeles

Hot A, Cogan S, Foltête A, Kerschen G, Buffe F, Buffe J, Behar S (2012) Design of uncertain pre-stressed space structures: an info-gap approach. In: Simmermacher T, Cogan S, Horta LG, Barthorpe R (eds) Topics in model validation and uncertainty quantification, vol 4. Springer, New York, pp 13–20

Deines K, Marinone T, Schultz R, Farinholt K, Park G (2011) Modal analysis and structural health monitoring investigation of CX-100 wind turbine blade. In: Proulx T (ed) Rotating machinery, structural health monitoring, shock and vibration, vol 5. Springer, New York, pp 413–438

Farinholt K, Taylor SG, Park G, Ammerman CM (2012) Full-scale fatigue tests of CX-100 wind turbine blades: part I – testing. SPIE Proc

Kennedy M, O’Hagan A (2000) Predicting the output from a complex computer code when fast approximations are available. Biometrika 87(1):1–13

Higdon D, Gattiker J, Williams B, Rightley M (2008) Computer model calibration using high-dimensional output. J Am Stat Assoc 103(482):570–583

Acknowledgements

This work is performed under the auspices of the Laboratory Directed Research and Development project “Intelligent Wind Turbines” at the Los Alamos National Laboratory (LANL). The authors are grateful to Dr. Curtt Ammerman, project leader, for his continued support and technical leadership. LANL is operated by the Los Alamos National Security, LLC for the National Nuclear Security Administration of the U.S. Department of Energy under contract DE-AC52-06NA25396.


© 2013 The Society for Experimental Mechanics, Inc.

About this paper


Van Buren, K.L., Atamturktur, S., Hemez, F.M. (2013). Simulating the Dynamics of the CX-100 Wind Turbine Blade: Model Selection Using a Robustness Criterion. In: Simmermacher, T., Cogan, S., Moaveni, B., Papadimitriou, C. (eds) Topics in Model Validation and Uncertainty Quantification, Volume 5. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6564-5_11


DOI: https://doi.org/10.1007/978-1-4614-6564-5_11


Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-6563-8

Online ISBN: 978-1-4614-6564-5

eBook Packages: Engineering (R0)