Abstract

This chapter presents an overview of situationally-induced impairments and disabilities, or SIIDs, which are caused by situations, contexts, or environments that negatively affect the abilities of people interacting with technology, especially when they are on-the-go. Although the lived experience of SIIDs is, of course, unlike that of health-induced impairments and disabilities, both can be approached from an accessibility point-of-view, as both benefit from improving access and use in view of constraints on ability. This chapter motivates the need for the conception of SIIDs, relates the history of this conception, and places SIIDs within a larger framework of Wobbrock et al.’s ability-based design (ACM Trans Access Comput 3(3), 2011, Commun ACM 61(6):62–71, 2018). Various SIIDs are named, categorized, and linked to prior research that investigates them. They are also illustrated with examples in a space defined by two dimensions, location and duration, which describe the source of the impairing forces and the length of those forces’ persistence, respectively. Results from empirical studies are offered, which show how situational factors affect technology use and to what extent. Finally, specific projects undertaken by this chapter’s author and his collaborators show how some situational factors can be addressed in interactive computing through advanced sensing, modeling, and adapting to users and situations. As interactive computing continues to move beyond the desktop and into the larger dynamic world, SIIDs will continue to affect all users, with implications for human attention, action, autonomy, and safety.



1 Introduction

The computer user of today would be quite unrecognizable to the computer user of 30 years ago. Most likely, that user sat comfortably at a desk, typed with two hands, and was not distracted or bothered by outside people, noises, forces, or situations. He would have enjoyed ample lighting, a dry environment, moderate ambient temperatures, and a physically safe environment. Of course, these conditions describe most of today’s office computing environments as well. But the computer user of today can also be described quite differently (Kristoffersen and Ljungberg 1999). Today, such a user might be walking through an outdoor space, her attention repeatedly diverted to her surroundings as she navigates among people, along sidewalks, through doors, up and down stairs, and amidst moving vehicles. She might be in the rain, her screen getting wet. Her hands might be cold so that her fingers feel stiff and clumsy. She might only be able to hold her computer in one hand, as her other arm carries groceries, luggage, or an infant. She might be doing all of this at night, when lighting is dim and uneven, or in the blazing heat of a sunny day, with sweat and glare making it difficult to use her screen.

The computer in the above scenario is not much like the desktop computer of 30 years ago. Today’s mobile and wearable computers, especially smartphones, tablets, and smartwatches, enable us to interact with computers in a variety of situations, contexts, and environments. But the flexibility of computing in these settings does not come for free—it comes at a cost to our cognitive, perceptual, motor, and social abilities. These abilities are taxed all the more in mobile, dynamic settings, where we must attend to more than just a computer on our desk.

The notion that situations, contexts, and environments can negatively affect our abilities, particularly when it comes to our use of computers, has been framed in terms of disability (Gajos et al. 2012; Newell 1995; Sears et al. 2003; Sears and Young 2003; Vanderheiden 1997; Wobbrock 2006). When disability is conceptualized as limits on ability, then a notion of “situational disabilities” is meaningful, because situations of many kinds clearly limit the expression of our abilities. In recent years, an increasing number of studies show how various situations, contexts, and environments negatively affect people’s abilities to interact with computing systems (e.g., Dobbelstein et al. 2017; Lin et al. 2007; Ng et al. 2014a; Sarsenbayeva et al. 2016, 2018). Also, researchers in human–computer interaction have developed working prototypes to demonstrate the feasibility of sensing and overcoming situational disabilities (e.g., Goel et al. 2012a; Mariakakis et al. 2015; Qian et al. 2013b; Sarsenbayeva et al. 2017a), often employing smartphone sensors and machine learning to adapt interfaces to better suit their users in given contexts.

Despite a trend in framing certain challenges as arising from situational disabilities, the concept of situational disabilities is not without controversy. One might argue that calling a “disability” that which can be alleviated by a change in circumstances diminishes the lived experiences of those with lifelong disabilities. A person experiencing a situational disability suffers neither the sting of stigma nor the exile of exclusion. Modern social scientists acknowledge that disability is as much a cultural identifier as it is a personal ascription (Mankoff et al. 2010; Reid-Cunningham 2009; Sinclair 2010), and nondisabled people experiencing a situational disability take no part in, and make no contribution to, a “disability culture.” In fact, neither a person experiencing a situational disability, nor anyone observing him or her, regards that person as having a disability at all. No accommodations are required; no laws must be enacted; no rights must be protected or enshrined. Perhaps, therefore, the notion of SIIDs is not only wrong, but also misguided and offensive.

Indeed, the aforementioned arguments have merit. There should be no confusing situational disabilities with sensory, cognitive, or health-related disabilities. And yet, many researchers today find that the notion of situational disabilities offers a useful perspective, providing at least three benefits:

First, the notion of situational disabilities highlights that everyone experiences limits to their abilities, sometimes drastic ones (Saulynas et al. 2017; Saulynas and Kuber 2018), when interacting with technology in dynamic situations, contexts, or environments. The notion is a reminder that disability is not just “about others” but about people generally, and accessibility is for everyone to varying degrees. Perhaps, this perspective simply redefines the term “disability” to be synonymous with “inability,” in which case, one could promote the phrase “situational inabilities”; but thus far, the field has adopted a disability lens and viewed situational challenges to ability in terms of accessibility.

Second, the notion of situational disabilities is about finding design solutions that benefit multiple people. Of course, the experience of a person holding a bag of groceries is nothing like the experience of a person with one arm; but a smartphone capable of being operated easily by one hand might be usable by and beneficial to both people. In this sense, when one allows for situational disabilities in one’s design thinking, one approaches designing for all users and their abilities—for what they can do in a given situation, and not what they cannot do (Bowe 1987; Chickowski 2004; Newell 1995; Wobbrock et al. 2011, 2018).

Third, situational disabilities have real, even life-or-death, consequences because people’s abilities are significantly diminished by them. For example, the popular press has reported regularly on walking “smartphone zombies” who have hit and been hit by other people, objects, and vehicles (Brody 2015; Haberman 2018; Richtel 2010). In 2009–2010, The New York Times ran an entire series on the negative impacts on human attention due to texting while driving (Richtel et al. 2009). Frighteningly, the Federal Communications Commission estimates that at any given daylight moment in the United States, 481,000 drivers are texting while driving (Footnote 1).

Cities around the world are attempting to remedy these dangers. In Stockholm, Sweden, traffic signs alert drivers to oblivious walking texters (Footnote 2). In Chongqing, China, city officials have divided their sidewalks into two lanes, one for people fixated on their smartphones and one for people promising to refrain (Footnote 3). London, England experimented with padded lampposts along some of its lanes after injurious collisions by texting walkers (Footnote 4). In Bodegraven, near Amsterdam, red lights at busy intersections illuminate the sidewalks at people’s feet so that smartphone users looking down halt before entering crosswalks prematurely (Footnote 5). The Utah Transit Authority fines people $50 USD for distracted walking near light rail tracks, which includes texting while walking (Footnote 6). In Honolulu, Hawaii and Montclair, California, recent distracted walking laws make it illegal to text while crossing the street (Haberman 2018; Footnote 7).

When faced with the challenges and consequences of situational disabilities, creators of interactive technologies must ask what they can do to better understand these negative effects and how to design solutions to address them. This chapter is devoted to furthering these aims.

2 Background

Andrew Sears and Mark Young first joined the words “situational” and “disabilities” together in 2003. The full phrase for their concept was “situationally-induced impairments and disabilities (SIIDs)” (Sears and Young 2003, p. 488). Their key observation was:

Both the environment in which an individual is working and the current context (e.g., the activities in which the person is engaged) can contribute to the existence of impairments, disabilities, and handicaps (p. 488).

In a paper that same year, Sears et al. (2003) focused on the relevance of SIIDs to ubiquitous computing:

As computers are embedded into everyday things, the situations users encounter become more variable. As a result, situationally-induced impairment and disabilities (SIID) [sic] will become more common and user interfaces will play an even more important role. … Both the work environment and the activities the individual is engaged in can lead to SIID (pp. 1298, 1300).

In both papers, the authors borrowed from the World Health Organization (WHO) (World Health Organization 2000) when distinguishing among impairments, disabilities, and handicaps. This chapter will generally use the acronym SIIDs, and it is worth noting the distinctions between impairments, disabilities, and handicaps. According to Sears and Young (2003), who paraphrase the WHO classification:

- Impairments are “a loss or abnormality of body structure or function.” Impairments generally manifest as limitations to perception, action, or cognition; they occur at a functional level. Impairments can be caused by health conditions. For example, arthritis (a health condition) might cause stiffness in the fingers (an impairment). Impairments can also be caused by a user’s environment. For example, stiffness in the fingers might also be caused by cold temperatures from prolonged outdoor exposure.

- Disabilities are “difficulties an individual may have in executing a task or action.” Disabilities are generally activity limitations; they occur at a task level. They might be caused by impairments. For example, stiffness in the fingers (an impairment) might lead to the inability to use a smartphone keyboard (a disability). Disabilities might also be caused by the user’s context. For example, walking might cause enough extraneous body motion that using a smartphone keyboard is too difficult without stopping.

- Handicaps are “problems an individual may experience in involvement in life situations.” Handicaps are generally restrictions on participation in society; they occur at a social level. They are often caused by disabilities. For example, difficulty using a computer keyboard (a disability) might result in the inability to search for and apply to jobs online (a handicap). Handicaps might also be caused by a user’s situation. For example, the distraction caused by incoming text messages might make it difficult for a user to participate in face-to-face conversations at a meeting or cocktail party.

Although this chapter will refer to SIIDs as encompassing both “situational impairments” and “situational disabilities,” it is useful to consider their difference. For the purpose of technology design, “situational disabilities” is a helpful notion because it is at the level of tasks and activities that design opportunities present themselves—i.e., how to make a certain task or activity more achievable for users. In contrast, “situational impairments” says nothing about the specific tasks or activities being attempted. For example, addressing stiff fingers due to cold temperatures (a situational impairment) says nothing about the task being attempted or the technology being used; a remedy might simply be to wear gloves. But if the intended activity is “texting on a small smartphone keyboard,” then stiff fingers and gloves are both likely to be a problem. In considering how to design technologies to be more usable and accessible in the presence of SIIDs, we consider how we can better enable accomplishing specific tasks and activities.

Sears and Young (2003) were not the first to observe that situations, contexts, and environments can give rise to disabling conditions. In 1995, eight years prior, Alan F. Newell began his edited volume on Extra-Ordinary Human-Computer Interaction with a chapter containing a subsection entitled “People are handicapped by their environments” (Newell 1995, pp. 8–9). In it, he described a soldier on a battlefield:

He or she can be blinded by smoke, be deafened by gunfire, be mobility impaired by being up to the waist in mud, have poor tactile sensitivity and dexterity because of wearing a chemical warfare suit, and be cognitively impaired because of being scared stiff of being killed—and this is before the soldier is wounded! If one were to measure the effective abilities of a person in such an environment, they would be poor enough over a number of dimensions for him or her to be classified as severely disabled in a more normal environment (p. 9).

Newell went on to argue that everyone has a certain set of abilities and degrees of those abilities, and situations, contexts, and environments play a major role in affecting the expression of abilities in all people.

Two years after Newell, in 1997, Gregg C. Vanderheiden (1997) articulated the benefits of designing for people in disabling situations, arguing that when done successfully, it creates more accessible interfaces for people with disabilities also:

If we design systems which are truly ubiquitous and nomadic; that we can use whether we are walking down the hall, driving the car, sitting at our workstation, or sitting in a meeting; that we can use when we’re under stress or distracted; and that make it easy for us to locate and use new services—we will have created systems which are accessible to almost anyone with a physical or sensory disability (p. 1439).

Vanderheiden (1997) further emphasized:

[D]ifferent environments will put constraints on the type of physical and sensory input and output techniques that will work (e.g., it is difficult to use a keyboard when walking; it is difficult and dangerous to use visual displays when driving a car; and speech input and output, which work great in a car, may not be usable in a shared environment, in a noisy mall, in the midst of a meeting, or while in the library). … [M]ost all of the issues around providing access to people with disabilities will be addressed if we simply address the issues raised by [this] “range of environments” (p. 1440).

Two years later, in 1999, Steinar Kristoffersen and Fredrik Ljungberg (1999) published a seminal study of mobile group work, observing in the process that the impediments to successful interaction are not only due to deficiencies in mobile platform design, but due to the situations in which such platforms are used. In studying telecommunications engineers and maritime consultants, they observed:

The context in which these people use computers is very different from the office … Four important features of the work contexts studied are: (1) Tasks external to operating the mobile computer are the most important, as opposed to tasks taking place “in the computer” (e.g., a spreadsheet for an office worker); (2) Users’ hands are often used to manipulate physical objects, as opposed to users in the traditional office setting, whose hands are safely and ergonomically placed on the keyboard; (3) Users may be involved in tasks (“outside the computer”) that demand a high level of visual attention (to avoid danger as well as monitor progress), as opposed to the traditional office setting where a large degree of visual attention is usually directed at the computer; (4) Users may be highly mobile during the task, as opposed to in the office, where doing and typing are often separated (p. 276) (emphasis theirs).

In 2006, three years after Sears and Young coined their “SIIDs” acronym, and still prior to the advent of the Apple iPhone in 2007, Wobbrock (2006) identified four trends in mobile computing, one of which was the need to make mobile devices more usable in the presence of situational impairments. Wobbrock wrote:

As mobile devices permeate our lives, greater opportunities exist for interacting with computers away from the desktop. But the contexts of mobile device use are far more varied, and potentially compromised, than the contexts in which we interact with desktop computers. For example, a person using a mobile device on the beach in San Diego may struggle to read the device’s screen due to glare caused by bright sunlight, while a user on an icy sidewalk in Pittsburgh may have gloves on and be unable to accurately press keys or extract a stylus (p. 132).

Wobbrock (2006) went on to suggest design opportunities that could help reduce the negative impacts of SIIDs:

Ultimately, it should be feasible to construct devices and interfaces that automatically adjust themselves to better accommodate situational impairments. … A device could sense environmental factors like glare, light levels, temperature, walking speed, gloves, ambient noise—perhaps even user attention and distraction—and adjust its displays and input mechanisms accordingly. For example, imagine a device that is aware of cold temperatures, low light levels, and a user who is walking and wearing gloves. The device could automatically adjust its contrast, turn on its backlight, and enlarge its font and soft buttons so as to make the use of a stylus unnecessary. If it detects street noise it could raise the volume of its speakers or go into vibration mode. In short, understanding situational impairments presents us with opportunities for better user models, improved accessibility, and adaptive user interfaces (p. 132).

Although more than a dozen years have passed since these ideas were proposed, and in that time we have seen an explosion of “smart” and wearable devices, these devices still remain largely oblivious to their users’ situations, contexts, and environments. Even in the research literature, only a handful of projects demonstrate the sensing, modeling, and adaptive capabilities necessary to approach the kind of accommodations proposed above (Footnote 8). Clearly, more progress in developing “situationally aware” and “situationally accessible” technologies is needed.
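To make the kind of accommodation Wobbrock (2006) imagined more concrete, the following Python sketch maps a set of sensed conditions to interface settings using simple rules. It is illustrative only: the `SensedContext` and `InterfaceSettings` fields, the `adapt` function, and every threshold (50 lux, 5 °C, 70 dB) are hypothetical choices made for this example, not values taken from Wobbrock (2006) or from any existing system.

```python
from dataclasses import dataclass


@dataclass
class SensedContext:
    """Hypothetical sensor readings; all field names are assumptions."""
    temperature_c: float   # ambient temperature in degrees Celsius
    light_lux: float       # ambient light level
    walking: bool          # whether the user appears to be walking
    gloves: bool           # whether the user appears to be wearing gloves
    noise_db: float        # ambient noise level


@dataclass
class InterfaceSettings:
    """Hypothetical interface parameters a device might adjust."""
    backlight_on: bool = False
    high_contrast: bool = False
    font_scale: float = 1.0
    button_scale: float = 1.0
    alert_mode: str = "sound"   # "sound" or "vibrate"


def adapt(ctx: SensedContext) -> InterfaceSettings:
    """Apply illustrative rules; thresholds are assumed, not empirical."""
    settings = InterfaceSettings()
    if ctx.light_lux < 50:                    # dim surroundings
        settings.backlight_on = True
        settings.high_contrast = True
    if ctx.walking:                           # reading on the go
        settings.font_scale = 1.5
    if ctx.gloves or ctx.temperature_c < 5:   # gloved or cold, stiff fingers
        settings.button_scale = 2.0
    if ctx.noise_db > 70:                     # street noise
        settings.alert_mode = "vibrate"
    return settings


# A user walking on a cold, dark, noisy street while wearing gloves.
cold_night_walk = SensedContext(
    temperature_c=-2.0, light_lux=10.0, walking=True, gloves=True, noise_db=75.0
)
print(adapt(cold_night_walk))
```

Fixed rules of this kind are the simplest possible design. The research prototypes cited in this chapter more often rely on smartphone sensors and machine learning, which a real system would likely need in order to infer conditions such as gloves or distraction reliably.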

The early writings by Sears et al. (2003), Sears and Young (2003), Newell (1995), Vanderheiden (1997), Kristoffersen and Ljungberg (1999), Wobbrock (2006), and others clearly established the link between situation, accessibility, and disability that underlies the notion of SIIDs today. Most recently, Wobbrock et al. (2011, 2018) developed ability-based design as a holistic design approach that takes both ability and situation into account, unifying “designing for people with disabilities” and “designing for people in disabling situations.” Although a full treatment of ability-based design is beyond the current scope, it represents the most unified conception of SIIDs and their relation to accessible design to date. What seems necessary going forward are more technological breakthroughs and infrastructure to enable designers and engineers to sense the presence of (or potential for) SIIDs and overcome them.

3 Situations, Contexts, and Environments

This chapter has, thus far, used the words “situation,” “context,” and “environment” rather loosely and interchangeably. Here is neither the first place to do so nor the first place to attempt a more formal separation of these terms. In the abstract of their highly cited article on context-aware computing, Dey et al. (2001) utilize all three of these words within their one-sentence definition:

By context, we refer to any information that characterizes a situation related to the interaction between humans, applications, and the surrounding environment (p. 97) (emphasis ours).

The precise meanings of these terms in computing have not reached consensus despite being discussed for decades (see, e.g., Bristow et al. 2004; Dey et al. 2001; Dourish 2004; Pascoe 1998; Schmidt et al. 1999; Sears et al. 2003). Nonetheless, the terms present relevant differences that are useful when discussing SIIDs. For our purposes, we employ the following distinctions, which we admit are not always in keeping with definitions from prior work since those, too, are mixed:

- Situation refers to the specific circumstance in which the user finds him- or herself. The situation encompasses the “immediate now” of the user.

- Context refers to the current activities in which the user is generally engaged, including the user’s purpose, goals, and motivations for those activities, and the user’s physical, mental, and emotional state while doing those activities.

- Environment refers to the larger setting the user is in, including both the physical and social setting.

The three terms above, progressing from situation to context to environment, increase scope in time and space. A situation is highly specific, immediate, and local. A context is broader, as activities have a narrative arc to them, including what came before and what comes next; moreover, users undergo a process of doing, feeling, and experiencing along this arc. An environment is broader still, encompassing physical and social dimensions beyond the user’s immediate locale but that influence the user nonetheless.

An example helps make the above distinctions clear. Consider a worker in a factory (the environment) welding metal parts while wearing a dark welder’s mask (the context). A red light on a nearby wall suddenly illuminates (the situation), but is not visible through the welder’s dark mask (an impairment), causing the welder to remain unaware of a potential safety hazard (a disability), thereby violating company protocol by failing to evacuate the building (a handicap).

The above distinctions make clear, then, that the term “situational impairment” refers to a functional limitation experienced by a user in a specific circumstance; similarly, the term “situational disability” refers to the task or activity limitation experienced by a user in a specific circumstance. These two notions are therefore combined in “situationally-induced impairments and disabilities,” or SIIDs (Sears et al. 2003; Sears and Young 2003).

4 A Categorized List of Factors That Can Cause SIIDs

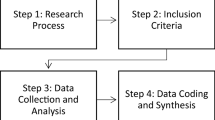

The expanse of potential impairing or disabling factors that can arise for users of interactive computing technologies is vast indeed. Table 5.1 offers a list of such factors, assembled in part from prior sources (Abdolrahmani et al. 2016; Kane et al. 2008; Newell 1995; Sarsenbayeva et al. 2017b; Saulynas et al. 2017; Sears et al. 2003; Sears and Young 2003; Vanderheiden 1997; Wobbrock et al. 2018) and categorized here in an original scheme (behavioral, environmental, attentional, affective, social, and technological). References to empirical studies that have explored each factor are listed, along with technological inventions that have attempted to sense or accommodate that factor. The references assembled are not comprehensive, but they give the interested reader plenty to peruse.

5 A Two-Dimensional Space of Impairing and Disabling Factors

Within the framework of ability-based design, Wobbrock et al. (2018) defined a two-dimensional space in which examples of impairing and disabling factors can be arranged. Portraying this space allows one to consider a broad range of factors, both health-induced and situationally-induced. Specifically, one axis for this space is Location, which refers to whether the potentially disabling factor comes from within the user (“intrinsic”), arises external to the user (“extrinsic”), or is a mix of both. Another axis is Duration, a spectrum for indicating whether the potentially disabling factor ranges from very short-lived to very long-lived. SIIDs tend to arise from short-lived extrinsic factors, but they are not limited to this zone. Table 5.2, adapted from prior work (Wobbrock et al. 2018, p. 67), shows an example in each zone of the two-dimensional space.

6 Some Empirical Results of SIIDs in Mobile Human–Computer Interaction

This section highlights some empirical results from studies of situational factors found in Table 5.1. Three factors that can affect people’s interactions with mobile devices and services are discussed: walking, cold temperatures, and divided attention. Each is addressed in turn.

6.1 The Effects of Walking

Perhaps unsurprisingly, walking has received the most attention by researchers wishing to understand the effects of SIIDs on mobile computing, especially on the use of smartphones. It is not only users’ abilities that are affected by walking—it is walking itself that is also affected by interacting when mobile. For example, walking speed slows by about 30–40% when interacting with a handheld touch screen device (Barnard et al. 2005; Bergstrom-Lehtovirta et al. 2011; Brewster et al. 2003; Lin et al. 2007; Marentakis and Brewster 2006; Mizobuchi et al. 2005; Oulasvirta et al. 2005; Schedlbauer et al. 2006; Schildbach and Rukzio 2010; Vadas et al. 2006). Here, we report specifically on target acquisition (i.e., pointing), text entry, and text readability while walking.

Target Acquisition. Prior studies have shown that walking reduces human motor performance. In a stylus-based target-tapping task modeled with Fitts’ law (Fitts 1954; MacKenzie 1992; Soukoreff and MacKenzie 2004), Lin et al. (2005, 2007) demonstrated the appropriateness of that law and showed that Fitts’ throughput, a combined speed–accuracy measure of pointing efficiency, was 18.2% higher when seated than when walking. Schedlbauer and Heines (2007) also confirmed the suitability of Fitts’ law for modeling pointing performance while walking, and measured standing to have 8.9% higher throughput than walking. They also observed a 2.4× increase in stylus-tapping errors while walking. Chamberlain and Kalawsky (2004) conducted a stylus-tapping test, finding a 19.3% increase in target acquisition time when walking compared to standing.
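For readers unfamiliar with Fitts’ throughput, the following Python sketch shows one common way to compute it, using the Shannon formulation of the index of difficulty and an effective target width of 4.133 times the standard deviation of selection endpoints. It is a simplified illustration: it uses the nominal rather than the effective movement amplitude, the function name `effective_throughput` is hypothetical, and the endpoint and timing values are invented for the example rather than drawn from the studies above, which may have computed throughput differently.

```python
import math
import statistics


def effective_throughput(amplitude, endpoints, movement_times):
    """Throughput in bits per second for one amplitude-width condition.

    amplitude      -- nominal distance to the target (same unit as endpoints)
    endpoints      -- signed selection offsets from the target center,
                      measured along the axis of movement
    movement_times -- movement time of each trial, in seconds
    """
    # Effective width reflects the spread of where users actually tapped.
    effective_width = 4.133 * statistics.stdev(endpoints)
    # Effective index of difficulty in bits (Shannon formulation).
    effective_id = math.log2(amplitude / effective_width + 1)
    return effective_id / statistics.mean(movement_times)


# Invented data: walking produces more scattered endpoints and slower trials.
seated = effective_throughput(
    amplitude=40.0,
    endpoints=[-1.2, 0.4, 0.9, -0.3, 0.1],
    movement_times=[0.61, 0.58, 0.65, 0.60, 0.62],
)
walking = effective_throughput(
    amplitude=40.0,
    endpoints=[-2.5, 1.8, 2.2, -1.1, 0.6],
    movement_times=[0.70, 0.66, 0.74, 0.69, 0.71],
)
percent_higher = (seated - walking) / walking * 100
print(f"seated: {seated:.2f} bits/s, walking: {walking:.2f} bits/s")
print(f"seated throughput is {percent_higher:.1f}% higher")
```

Because throughput folds both endpoint spread and movement time into a single number, a drop while walking can reflect slower movement, less precise tapping, or both.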

Today’s handheld mobile devices operate more often with fingers than with styli. Schildbach and Rukzio (2010) evaluated finger-based target acquisition while walking, finding a 31.4% increase in time and 30.4% increase in errors when walking compared to standing for small targets (6.74 sq. mm; Footnote 9). Bergstrom-Lehtovirta et al. (2011) also examined finger touch, but across a range of walking speeds, finding selection accuracy to be 100% while standing, 85% while walking at 50% of one’s preferred walking speed (PWS), 80% at full PWS, and degrading quickly thereafter to only 60% at 140% of PWS.

Human performance with wearable computers is also subject to the adverse effects of walking. Zucco et al. (2006) evaluated four handheld input devices in pointing tasks with a heads-up display. While standing, the gyroscope had the fastest selection time at 32.2 s, but while walking, it was the slowest at 120.1 s. The trackball, which had been second while standing at 36.6 s, was the fastest while walking at 37.6 s. Error rates were lowest for the gyroscope when standing and the touchpad when walking. More recently, Dobbelstein et al. (2017) evaluated targeting on a smartwatch while standing and walking, observing that the error rate more than tripled, from 2.9% while standing to 9.7% while walking.

Text Entry. Prior studies of walking with a mobile device have also focused a great deal on text entry, a fundamental task in mobile computing. Mizobuchi et al. (2005) tested stylus keyboards with users who were standing or walking, finding that text entry speed was slower for walking for all but the largest key size. Text entry error rates were also generally higher when walking (Footnote 10). For thumb-based, rather than stylus-based, touch screen text entry, Nicolau and Jorge (2012) found that when text entry and walking speeds were maintained from standing to walking, insertion errors increased from 4.3 to 7.0%, substitution errors increased from 3.8 to 5.5%, and omission errors increased from 1.7 to 3.0%. Clawson et al. (2014) studied the effects of walking on text entry with hardware keys, such as the mini-Qwerty keyboards found on BlackBerry devices. After training each of 36 participants for 300 minutes to become expert mini-Qwerty typists, their study showed that seated and standing text entry rates were about 56.7 words per minute (WPM), while walking entry rates were about 52.5 WPM, a statistically significant reduction. Error rates, however, did not exhibit a difference for the experts tested.
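The speed and error measures reported in studies like these can be illustrated with a short Python sketch. It uses a formulation common in the text entry literature: words per minute treats five characters as one word, and the error rate is the minimum string distance (the fewest insertions, omissions, and substitutions separating the presented and transcribed strings) divided by the longer string’s length. The function names and sample strings are hypothetical, and the studies above may have used different measures; in particular, this sketch reports a single combined error rate rather than separate insertion, substitution, and omission rates.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry rate, where `seconds` spans the first to the last character."""
    # The first character starts the clock, so it is not counted.
    return (len(transcribed) - 1) / seconds * 60 / 5


def minimum_string_distance(presented: str, transcribed: str) -> int:
    """Fewest single-character edits turning `presented` into `transcribed`."""
    previous = list(range(len(transcribed) + 1))
    for i, p_char in enumerate(presented, start=1):
        current = [i]
        for j, t_char in enumerate(transcribed, start=1):
            current.append(min(
                previous[j] + 1,                       # omission
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (p_char != t_char),  # substitution or match
            ))
        previous = current
    return previous[-1]


def error_rate(presented: str, transcribed: str) -> float:
    """Minimum string distance error rate, as a percentage."""
    longest = max(len(presented), len(transcribed))
    return minimum_string_distance(presented, transcribed) / longest * 100


# Invented trial: one substitution and one omission, entered in 9 seconds.
presented = "the quick brown fox"
transcribed = "the quidk brwn fox"
print(f"{words_per_minute(transcribed, 9.0):.1f} WPM")
print(f"{error_rate(presented, transcribed):.1f}% errors")
```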

Using keys, whether “soft” or “hard,” to enter text while walking is a difficult task, and other input modalities might be better suited. Price et al. (2004) investigated speech-based text entry while walking, finding that walking increases speech recognition error rates by about 18.3% with an IBM Via Voice Pro system; however, first training the recognizer while walking improves recognition for both walking and seated scenarios. Along similar lines, Vertanen and Kristensson (2009) evaluated Parakeet, a novel mobile user interface atop the PocketSphinx speech recognizer (Huggins-Daines et al. 2006). Entry rates for Parakeet were 18.4 WPM indoors and 12.8 WPM outdoors. Speech recognition error rates were 16.2% indoors and 25.6% outdoors. The authors noted the influence of other situational impairments besides walking, including wind and sunlight glare, adding further difficulty to the outdoor tasks.

Text Readability. Input while walking is only half the challenge; output is affected by walking, too. For example, studies have examined users’ ability to read and comprehend text while on-the-go. Early work on this topic by Mustonen et al. (2004) found that reading speed, visual search speed, and visual search accuracy significantly decreased with increasing walking speed. Similarly, Barnard et al. (2007) conducted a reading comprehension task on a personal digital assistant (PDA) with sitting and walking participants, finding that walking increased reading time by about 14.0% over sitting and reduced accuracy by about 10.0%. Vadas et al. (2006) obtained a similar result: reading comprehension was 17.1% more accurate for seated participants than walking participants. Similarly, Schildbach and Rukzio (2010) saw an 18.6% decrease in reading speed due to walking in a mobile reading task.

Although much more could be said about the effects of walking on mobile human–computer interaction, this brief review makes it clear that walking imposes a significant hindrance on users’ motor performance, text comprehension, and visual search. Of course, walking imposes additional constraints on people’s abilities, too, such as generating body movement, dividing attention, causing fatigue, and so on. Such effects could be isolated and studied further.

6.2 The Effects of Cold Temperatures

In many parts of the world and for many activities, mobile devices are used out of doors. Capacitive touch screens usually function best when bare fingers are used to operate them, raising the possibility that ambient temperature could be an issue. Two recent investigations have examined the effects of cold temperatures on mobile human–computer interaction. Sarsenbayeva et al. (2016) investigated the effects of cold temperatures on both fine motor performance and visual search time, finding the former was reduced significantly by cold but not the latter. Specifically, after about 10 min of standing in a –10 °C room, touch screen target acquisition in cold temperatures was 2.5% slower, and 4.7% less accurate, than in warm temperatures (a 20 °C room). The authors report that 16 of 24 participants “felt they were less precise in cold rather than in warm [temperatures] … [because of a] sense of cold and numb fingers” (p. 92).

A follow-up study by Goncalves et al. (2017) produced findings from a formal Fitts’ law-style target acquisition task using index fingers and thumbs on a smartphone. They found that Fitts’ throughput was higher in warm temperatures (a 24 °C room) than in cold temperatures (a –10 °C room) for index fingers and thumbs. Interestingly, speed was slower in cold temperatures, but accuracy was lower only for the thumb, not for the index finger. As the authors observed:

One potential reason why this effect was stronger in one-handed operation (i.e. using the thumb) is that […] [the task] required thumb movement and dexterity, whereas when completing the task with the index finger, no finger dexterity was required since the task required more of the wrist movement, than finger movement (p. 362).
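For readers less familiar with the measure, the notion of Fitts’ throughput used in such studies can be sketched in a few lines. This is a minimal illustration that assumes the common Shannon formulation of the index of difficulty; the function names and the distance, width, and movement-time values are hypothetical and are not data from Goncalves et al. (2017).

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def throughput(distance, width, movement_time_s):
    """Throughput in bits per second: index of difficulty over movement time."""
    return index_of_difficulty(distance, width) / movement_time_s


# Hypothetical values only: a 7 mm target 49 mm away gives log2(8) = 3 bits.
warm_tp = throughput(49.0, 7.0, 0.50)  # assumed faster movement when warm
cold_tp = throughput(49.0, 7.0, 0.60)  # assumed slower movement when cold
```

Under these assumed values, the slower movement time in the cold condition yields a lower throughput for the same target, which is the direction of the effect described above.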

Commendably, the authors of these studies did not stop with their empirical findings, but proceeded to take initial steps to sense ambient cold using temperature effects on a smartphone’s battery (Sarsenbayeva et al. 2017a). Perhaps future mobile devices and interfaces used for prolonged periods in cold weather will automatically adapt to such environments.

6.3 The Effects of Divided Attention and Distraction

In 1971, Herb Simon famously wrote (Simon 1971):

In an information-rich world, the wealth of information means a dearth of something else: a scarcity of whatever it is that information consumes. What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention and a need to allocate that attention efficiently among the overabundance of information sources that might consume it (pp. 40–41).

Today, nigh on 50 years after Simon’s quote, it is even more relevant in the context of mobile human–computer interaction, where situational, contextual, and environmental factors can contribute to regular and repeated distractions, resulting in highly fragmented and divided attention.

Oulasvirta (2005) and Oulasvirta et al. (2005) were pioneers in quantifying just how fragmented our attention is when computing on-the-go. Specifically, they studied attention fragmentation arising from participants moving through urban settings: walking down quiet and busy streets, riding escalators, riding buses, and eating at cafés. Findings indicate that on a mobile Web browsing task, depending on the situation, participants’ attention focused on the device for only about 6–16 s before switching away for about 4–8 s and then returning. Clearly, the fragmentation of our attention during mobile interactions is very different from that during focused desktop work (Kristoffersen and Ljungberg 1999).

Bragdon et al. (2011) studied three different levels of distraction, with a particular interest in how touch screen gestures compare to soft buttons. Distractions were operationalized using situation awareness tasks, with three levels: sitting with no distractions, treadmill walking with a moderate situation awareness task, and sitting with an attention-saturating task. They found that bezel marks (Roth and Turner 2009), which are swipe gestures that begin off-screen on a device’s bezel and come onto the screen to form a specific shape (e.g., an “L”), were 13.4–18.7% faster with slightly better accuracy than conventional soft buttons for the distraction tasks. The time taken to use soft buttons degraded with increasing levels of distraction, but not so with bezel marks. Also, glances at the screen were more than 10× as frequent with soft buttons as with bezel marks, occurring on 98.8% of trials compared to just 3.5% for bezel marks! These results show that conventional touch screen soft buttons demand much more time and attention than do touch screen gestures.

People with health-induced impairments and disabilities also experience SIIDs (Kane et al. 2009). Abdolrahmani et al. (2016) conducted interviews with eight blind participants about their experiences of SIIDs. Problems that emerged included the challenges of one-handed device use while using a cane; the inability to hear auditory feedback in noisy or crowded settings; an unwillingness to use a device on buses or trains due to privacy and security concerns; difficulties entering text when riding public transportation due to vibration and ambient noise; cold and windy weather affecting device use; the inability to use a device while encumbered (e.g., while carrying shopping bags); the demands of attending to the environment (e.g., curbs, steps, cars, etc.) while also interacting with a device; and the challenge of covertly and quickly interacting with a device without violating social norms (e.g., when in a meeting). Thus, the SIIDs experienced by blind users are much the same as, but more intrusive than, the SIIDs experienced by sighted users. Although challenging to design, interfaces that enable blind users to overcome SIIDs undoubtedly would be more usable interfaces for sighted people, too.

7 Some Example Projects Addressing SIIDs in Mobile Human–Computer Interaction

General context-aware computing infrastructures have been pursued for many years (e.g., Dey et al. 2001; Mäntyjärvi and Seppänen 2003; Pascoe 1998; Schmidt et al. 1999a, b), but as shown in Table 5.1, specific technological innovations have also been pursued to sense or accommodate certain SIIDs. In this section, six specific projects by the author and his collaborators are reviewed. These projects attempt to sense, model, and in some cases, ameliorate, the impairing or disabling effects of walking, one-handed grips, diverted gaze, and even intoxication. In every project, only commodity devices are used without any custom or add-on sensors. Here, only brief descriptions of each project are given; for more in-depth treatments, the reader is directed to the original sources.

Walking User Interfaces. Kane et al. (2008) explored walking user interfaces (WUIs), which adapt their screen elements to whether the user is walking or standing. Specifically, in their prototype, buttons, list items, and fonts all increased 2–3× in size when moving from standing to walking. Study results showed that walking with a nonadaptive interface increased task time by 18%, but with an adaptive WUI, task time was not increased.
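The adaptation idea behind a WUI can be sketched as follows. This is only an illustration under stated assumptions: the `Layout` type, the baseline sizes, and the 2.5× scale factor are hypothetical (the source reports only a 2–3× increase), and the walking/standing signal is assumed to be supplied by some separate detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    button_px: int
    list_item_px: int
    font_pt: int


# Hypothetical baseline sizes for the standing condition.
STANDING = Layout(button_px=24, list_item_px=20, font_pt=10)

# Assumed scale factor, chosen from within the reported 2-3x range.
WALKING_SCALE = 2.5


def layout_for(is_walking, base=STANDING, scale=WALKING_SCALE):
    """Return the base layout when standing, or an enlarged copy when walking."""
    if not is_walking:
        return base
    return Layout(
        button_px=round(base.button_px * scale),
        list_item_px=round(base.list_item_px * scale),
        font_pt=round(base.font_pt * scale),
    )
```

A caller would re-query `layout_for` whenever the walking state changes and redraw the affected screen elements.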

WalkType. Goel et al. (2012a) addressed the challenge of two-thumb touch screen typing while walking. Their prototype utilized machine learning to detect systematic inward rotations of the thumbs during walking. Specifically, features including finger location, touch duration, and travel distance were combined with accelerometer readings to train decision trees for classifying keypresses. In a study, WalkType was about 50% more accurate and 12% faster than an equivalent conventional keyboard, making mobile text entry much more accurate while walking.

GripSense. WalkType assumed a hand posture of two-thumb typing using two hands, common for mobile text entry. To detect hand posture in the first place, Goel et al. (2012b) created GripSense, which detected one- or two-handed interaction, thumb or index finger use, use on a table, and even screen pressure (without using a pressure-sensitive screen). GripSense worked by using interaction signals (e.g., touch down/up, thumb/finger swipe arc, etc.) as well as tilt inference from the accelerometers. For pressure sensing, it measured the dampening of the gyroscope when the vibration motor was “pulsed” in a short burst during a long-press on the screen. GripSense could also detect when a device was squeezed (e.g., to silence an incoming call without removing the device from a pocket). GripSense’s classification accuracy was about 99.7% for device in-hand versus on-the-table, 84.3% for distinguishing three hand postures within five taps or swipes, and 95.1% for distinguishing three levels of pressure.

ContextType. In something of a blend of WalkType and GripSense, Goel et al. (2013) created ContextType, a system that improved touch screen typing by inferring hand posture to employ different underlying keypress classification models. Specifically, ContextType differentiated between typing with two thumbs, the left or right thumb only, and the index finger. ContextType combined a user’s personalized touch model with a language model to classify touch events as keypresses, improving text entry accuracy by 20.6%.
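One way to picture how a posture-specific touch model and a language model might be combined is the following sketch. It is not ContextType’s actual implementation: it assumes a simple isotropic Gaussian touch model per posture and a per-key prior from a language model, and every key position, offset, spread, and probability below is hypothetical.

```python
import math

# Hypothetical key centres (x, y) in pixels for three adjacent keys.
KEY_CENTERS = {"q": (20.0, 20.0), "w": (60.0, 20.0), "e": (100.0, 20.0)}

# Hypothetical touch models: systematic touch offset and spread per hand posture.
TOUCH_MODELS = {
    "two_thumbs": {"offset": (0.0, 0.0), "sigma": 15.0},
    "right_thumb": {"offset": (-12.0, 0.0), "sigma": 20.0},
}


def touch_log_likelihood(touch, key, posture):
    """Log-likelihood (up to a constant) of a touch point given the intended key."""
    model = TOUCH_MODELS[posture]
    cx, cy = KEY_CENTERS[key]
    ox, oy = model["offset"]
    dx = touch[0] - (cx + ox)
    dy = touch[1] - (cy + oy)
    return -(dx * dx + dy * dy) / (2 * model["sigma"] ** 2)


def classify_keypress(touch, posture, language_prior):
    """Pick the key maximizing touch likelihood times language-model prior."""
    scores = {
        key: touch_log_likelihood(touch, key, posture) + math.log(language_prior[key])
        for key in KEY_CENTERS
    }
    return max(scores, key=scores.get)


uniform = {"q": 1 / 3, "w": 1 / 3, "e": 1 / 3}
```

With these assumed numbers, the same touch at (38, 20) is read as “q” under the two-thumb model but as “w” under the right-thumb model, which illustrates why inferring posture first can change, and potentially improve, keypress classification.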

SwitchBack. In light of Oulasvirta’s (2005) and Oulasvirta et al.’s (2005) findings about fragmented and divided attention, Mariakakis et al. (2015) created SwitchBack, which aided users returning their gaze to a screen after looking away. Specifically, SwitchBack tracked a user’s gaze position using the front-facing smartphone camera. When the user looked away from the screen, SwitchBack noted the last viewed screen position; when the user returned her gaze, SwitchBack highlighted the last viewed screen area. The SwitchBack prototype was implemented primarily for screens full of text, such as newspaper articles, where “finding one’s place” in a sea of words and letters can be a significant challenge when reading on-the-go. In their study, Mariakakis et al. found that SwitchBack had an error rate of only 3.9% and improved mobile reading speeds by 7.7%.
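The bookkeeping described above can be sketched as a small state machine. This is an illustrative sketch only: the `GazeBookmark` class is hypothetical, and gaze estimation from the front-facing camera is assumed to be done elsewhere, delivering either an on-screen position or `None` when the user is looking away.

```python
class GazeBookmark:
    """Remembers the last on-screen gaze position before the user looked away."""

    def __init__(self):
        self.on_screen = True
        self.last_position = None

    def update(self, gaze):
        """Process one gaze sample.

        `gaze` is an (x, y) screen position, or None when the user looks away.
        Returns the position to highlight at the moment gaze returns to the
        screen, and None for every other sample.
        """
        if gaze is None:
            self.on_screen = False
            return None
        # Gaze is on screen: if it has just returned, report the stored position.
        highlight = None if self.on_screen else self.last_position
        self.on_screen = True
        self.last_position = gaze
        return highlight
```

Each camera frame would be fed to `update`; a non-`None` return value marks the screen area to highlight so the reader can find their place again.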

Drunk User Interfaces. Mariakakis et al. (2018) showed how to detect blood alcohol level (BAL) using nothing more than a commodity smartphone. They termed a set of user interfaces for administering a quick battery of human performance tasks “drunk user interfaces,” and these included interfaces for (1) touch screen typing, (2) swiping, (3) holding a smartphone flat and still while also obtaining heart rate measurements through the phone’s camera covered by the index finger (Han et al. 2015), (4) simple reaction time, and (5) choice reaction time. The DUI app, which combined these tasks, used random forest machine learning to create personalized models of task performance for each user. In their longitudinal study, which progressively intoxicated participants over subsequent days, Mariakakis et al. showed that DUI estimated a person’s BAL as measured by a breathalyzer with an absolute mean error of 0.004% ± 0.005%, and a Pearson correlation of r = 0.96. This high level of accuracy was achievable in the DUI app in just over four minutes of use!
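To make the two reported summary statistics concrete, the following sketch computes an average absolute error and a Pearson correlation between estimated and reference values. The helper names and the five data points are hypothetical and chosen purely for illustration; they are not data from Mariakakis et al. (2018), and the study’s exact error computation may differ.

```python
import math


def mean_absolute_error(estimated, measured):
    """Average absolute difference between paired estimates and measurements."""
    return sum(abs(e - m) for e, m in zip(estimated, measured)) / len(measured)


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical readings in % BAL: reference device versus app estimate.
breathalyzer = [0.00, 0.02, 0.04, 0.06, 0.08]
estimates = [0.01, 0.02, 0.05, 0.06, 0.07]

mae = mean_absolute_error(estimates, breathalyzer)
r = pearson_r(estimates, breathalyzer)
```

A low error together with a correlation near 1 indicates that the estimates track the reference measurements both in absolute level and in trend.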

8 Future Directions

This chapter has provided an overview of SIIDs. Collectively, the topic of SIIDs covers a large space concerning both science and invention, ranging from studying the effects of certain activities (like walking or driving) on mobile interaction, to devising ways of sensing, modeling, and adapting to environmental factors and their effects on users, like cold temperatures. Even though we are approaching 20 years since Sears and Young coined the term “situationally-induced impairments and disabilities” (Sears and Young 2003), we have only begun to understand and overcome SIIDs.

Future work should continue to pursue a deeper understanding of SIIDs, both quantitative and qualitative, and of their effects on users, especially during mobile human–computer interaction. This improved understanding can then guide the development of better methods of sensing, modeling, and adapting to SIIDs. The example projects by the author and his collaborators, described above, show how much can be done with commodity smartphone sensors, including detecting gait, grip, gaze point, and even blood alcohol level. Custom sensors included on future devices ought to be able to do much more, and motivation for them to do so might come from compelling studies showing how SIIDs affect users and usage. Clever adaptive strategies can then improve devices’ interfaces for mobile users in just the right ways, at just the right times.

For researchers and developers, software and sensor toolkits to support the rapid development and deployment of platform-independent context-aware applications and services would be a welcome priority for future work. Ideally, such toolkits should take full advantage of each mobile platform on which they are deployed, while allowing developers to remain above the gritty details of specific hardware and software configurations. Simplifying the development and deployment of context-aware applications and services will enable the greater proliferation of context-awareness, with benefits to all.

9 Author’s Opinion of the Field

Including a chapter on situationally-induced impairments and disabilities (SIIDs) in a book on Web and computer accessibility is admittedly controversial, and perhaps to some readers, objectionable. At the outset of this chapter, I presented my arguments for why SIIDs are real, relevant, and even potentially dangerous—in my view, they are worthy of our research and development attention. But SIIDs do sit apart from sensory, cognitive, motor, and mobility impairments and disabilities, and they should indeed be regarded differently. To date, researchers within the fields of assistive technology and accessible computing have not widely embraced SIIDs as a research topic. For example, the ACM’s flagship accessible computing conference, ASSETS, has published very few papers devoted to the topic thus far. This lack of embrace is, I think, less due to a rejection of SIIDs as worthy of study and more due to a lack of awareness of SIIDs as accessibility challenges. Furthermore, as a research topic, SIIDs sit at the intersection of accessible computing and ubiquitous computing, subfields that share few researchers between them. Where research into SIIDs does appear, it tends to be published at mainstream conferences in human–computer interaction, mobile computing, or ubiquitous computing. Publications tend to present scientific studies about the effects of SIIDs more often than technological solutions for ameliorating those effects. The predominance of studies over inventions betrays a relatively immature topic of inquiry, one whose scientific foundations are still being established. Ultimately, the range of issues raised by SIIDs is vast, and solutions to SIIDs will come both from within and beyond the field of accessible computing. That is a good thing, as SIIDs have the potential to broaden the conversation about accessibility and its relevance not just to people with disabilities, but to all users of technology.

10 Conclusion

This chapter has presented situationally-induced impairments and disabilities, or SIIDs—what they are; their origins; how they relate to health-induced impairments, disabilities, and handicaps; the distinctions between situations, contexts, and environments; a categorization of many situational factors and prior research on them; a two-dimensional space of SIIDs with examples; some empirical findings about SIIDs as they relate to mobile human–computer interaction; and a series of technological innovations by the author and his collaborators for sensing and overcoming them.

It is the author’s hope that the reader will be convinced that SIIDs, however momentary, are real. They matter, because successful interactions with technologies are key to enjoying the advantages, privileges, and responsibilities that those technologies bring. SIIDs deserve our attention, because we can do more to make technologies respect human situations, contexts, and environments and better serve their users. In the end, more than just usability is at stake: safety, health, engagement with others, participation in society, and a sense that we control our devices, rather than our devices controlling us, all depend on getting this right.

Notes

- 8. Some of the author’s projects are offered as examples near the end of this chapter.

- 9. This target size was based on the Apple iPhone Human Interface Guidelines of 2009.

- 10. Unfortunately, specific numeric results are not reported directly in the paper. They are graphed but only support visual estimation.

References

Abdolrahmani A, Kuber R, Hurst A (2016) An empirical investigation of the situationally-induced impairments experienced by blind mobile device users. In: Proceedings of the ACM web for all conference (W4A’16). ACM Press, New York. Article No. 21. https://doi.org/10.1145/2899475.2899482

Adamczyk PD, Bailey BP (2004) If not now, when?: the effects of interruption at different moments within task execution. In: Proceedings of the ACM conference on human factors in computing systems (CHI’04). ACM Press, New York, pp 271–278. https://doi.org/10.1145/985692.985727

Alm H, Nilsson L (1994) Changes in driver behaviour as a function of handsfree mobile phones—a simulator study. Accid Anal Prev 26(4):441–451. https://doi.org/10.1016/0001-4575(94)90035-3

Alm H, Nilsson L (1995) The effects of a mobile telephone task on driver behaviour in a car following situation. Accid Anal Prev 27(5):707–715. https://doi.org/10.1016/0001-4575(95)00026-V

Azenkot S, Zhai S (2012) Touch behavior with different postures on soft smartphone keyboards. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’12). ACM Press, New York, pp 251–260. https://doi.org/10.1145/2371574.2371612

Azenkot S, Bennett CL, Ladner RE (2013) DigiTaps: eyes-free number entry on touchscreens with minimal audio feedback. In: Proceedings of the ACM symposium on user interface software and technology (UIST’13). ACM Press, New York, pp 85–90. https://doi.org/10.1145/2501988.2502056

Barnard L, Yi JS, Jacko JA, Sears A (2005) An empirical comparison of use-in-motion evaluation scenarios for mobile computing devices. Int J Hum-Comput Stud 62(4):487–520. https://doi.org/10.1016/j.ijhcs.2004.12.002

Barnard L, Yi JS, Jacko JA, Sears A (2007) Capturing the effects of context on human performance in mobile computing systems. Pers Ubiquitous Comput 11(2):81–96. https://doi.org/10.1007/s00779-006-0063-x

Baudisch P, Chu G (2009) Back-of-device interaction allows creating very small touch devices. In: Proceedings of the ACM conference on human factors in computing systems (CHI’09). ACM Press, New York, pp 1923–1932. https://doi.org/10.1145/1518701.1518995

Baudisch P, Xie X, Wang C, Ma W-Y (2004) Collapse-to-zoom: viewing web pages on small screen devices by interactively removing irrelevant content. In: Proceedings of the ACM symposium on user interface software and technology (UIST’04). ACM Press, New York, pp 91–94. https://doi.org/10.1145/1029632.1029647

Bergstrom-Lehtovirta J, Oulasvirta A, Brewster S (2011) The effects of walking speed on target acquisition on a touchscreen interface. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’11). ACM Press, New York, pp 143–146. https://doi.org/10.1145/2037373.2037396

Blomkvist A-C, Gard G (2000) Computer usage with cold hands; an experiment with pointing devices. Int J Occup Saf Ergon 6(4):429–450. https://doi.org/10.1080/10803548.2000.11076466

Boring S, Ledo D, Chen XA, Marquardt N, Tang A, Greenberg S (2012) The fat thumb: using the thumb’s contact size for single-handed mobile interaction. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’12). ACM Press, New York, pp 39–48. https://doi.org/10.1145/2371574.2371582

Bowe F (1987) Making computers accessible to disabled people. M.I.T. Technol Rev 90:52–59, 72

Bragdon A, Nelson E, Li Y, Hinckley K (2011) Experimental analysis of touch-screen gesture designs in mobile environments. In: Proceedings of the ACM conference on human factors in computing systems (CHI’11). ACM Press, New York, pp 403–412. https://doi.org/10.1145/1978942.1979000

Brewster S (2002) Overcoming the lack of screen space on mobile computers. Pers Ubiquitous Comput 6(3):188–205. https://doi.org/10.1007/s007790200019

Brewster S, Lumsden J, Bell M, Hall M, Tasker S (2003) Multimodal “eyes-free” interaction techniques for wearable devices. In: Proceedings of the ACM conference on human factors in computing systems (CHI’03). ACM Press, New York, pp 473–480. https://doi.org/10.1145/642611.642694

Brewster S, Chohan F, Brown L (2007) Tactile feedback for mobile interactions. In: Proceedings of the ACM conference on human factors in computing systems (CHI’07). ACM Press, New York, pp 159–162. https://doi.org/10.1145/1240624.1240649

Bristow HW, Baber C, Cross J, Knight JF, Woolley SI (2004) Defining and evaluating context for wearable computing. Int J Hum-Comput Stud 60(5–6):798–819. https://doi.org/10.1016/j.ijhcs.2003.11.009

Brody JE (2015) Not just drivers driven to distraction. The New York Times, p D5. https://well.blogs.nytimes.com/2015/12/07/its-not-just-drivers-being-driven-to-distraction/

Brookhuis KA, de Vries G, de Waard D (1991) The effects of mobile telephoning on driving performance. Accid Anal Prev 23(4):309–316. https://doi.org/10.1016/0001-4575(91)90008-S

Brown ID, Tickner AH, Simmonds DCV (1969) Interference between concurrent tasks of driving and telephoning. J Appl Psychol 53(5):419–424. https://doi.org/10.1037/h0028103

Brumby DP, Salvucci DD, Howes A (2009) Focus on driving: how cognitive constraints shape the adaptation of strategy when dialing while driving. In: Proceedings of the ACM conference on human factors in computing systems (CHI’09). ACM Press, New York, pp 1629–1638. https://doi.org/10.1145/1518701.1518950

Chamberlain A, Kalawsky R (2004) A comparative investigation into two pointing systems for use with wearable computers while mobile. In: Proceedings of the IEEE international symposium on wearable computers (ISWC’04). IEEE Computer Society, Los Alamitos, pp 110–117. https://doi.org/10.1109/ISWC.2004.1

Chen XA, Grossman T, Fitzmaurice G (2014) Swipeboard: a text entry technique for ultra-small interfaces that supports novice to expert transitions. In: Proceedings of the ACM symposium on user interface software and technology (UIST’14). ACM Press, New York, pp 615–620. https://doi.org/10.1145/2642918.2647354

Cheng L-P, Hsiao F-I, Liu Y-T, Chen MY (2012a) iRotate: automatic screen rotation based on face orientation. In: Proceedings of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 2203–2210. https://doi.org/10.1145/2207676.2208374

Cheng L-P, Hsiao F-I, Liu Y-T, Chen MY (2012b) iRotate grasp: automatic screen rotation based on grasp of mobile devices. In: Adjunct proceedings of the ACM symposium on user interface software and technology (UIST’12). ACM Press, New York, pp 15–16. https://doi.org/10.1145/2380296.2380305

Chickowski E (2004) It’s all about access. Alsk Airl Mag 28(12):26–31, 80–82

Christie J, Klein RM, Watters C (2004) A comparison of simple hierarchy and grid metaphors for option layouts on small-size screens. Int J Hum-Comput Stud 60(5–6):564–584. https://doi.org/10.1016/j.ijhcs.2003.10.003

Ciman M, Wac K (2018) Individuals’ stress assessment using human-smartphone interaction analysis. IEEE Trans Affect Comput 9(1):51–65. https://doi.org/10.1109/TAFFC.2016.2592504

Ciman M, Wac K, Gaggi O (2015) iSenseStress: assessing stress through human-smartphone interaction analysis. In: Proceedings of the 9th international conference on pervasive computing technologies for healthcare (PervasiveHealth’15). ICST, Brussels, pp 84–91. https://dl.acm.org/citation.cfm?id=2826178

Clarkson E, Clawson J, Lyons K, Starner T (2005) An empirical study of typing rates on mini-QWERTY keyboards. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’05). ACM Press, New York, pp 1288–1291. https://doi.org/10.1145/1056808.1056898

Clawson J, Starner T, Kohlsdorf D, Quigley DP, Gilliland S (2014) Texting while walking: an evaluation of mini-qwerty text input while on-the-go. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’14). ACM Press, New York, pp 339–348. https://doi.org/10.1145/2628363.2628408

Costa J, Adams AT, Jung MF, Guimbretière F, Choudhury T (2016) EmotionCheck: leveraging bodily signals and false feedback to regulate our emotions. In: Proceedings of the ACM conference on pervasive and ubiquitous computing (UbiComp’16). ACM Press, New York, pp 758–769. https://doi.org/10.1145/2971648.2971752

Czerwinski M, Horvitz E, Wilhite S (2004) A diary study of task switching and interruptions. In: Proceedings of the ACM conference on human factors in computing systems (CHI’04). ACM Press, New York, pp 175–182. https://doi.org/10.1145/985692.985715

Dey AK, Abowd GD, Salber D (2001) A conceptual framework and a toolkit for supporting the rapid prototyping of context-aware applications. Hum-Comput Interact 16(2):97–166. https://doi.org/10.1207/S15327051HCI16234_02

Dinges DF, Powell JW (1985) Microcomputer analyses of performance on a portable, simple visual RT task during sustained operations. Behav Res Methods Instrum Comput 17(6):652–655. https://doi.org/10.3758/BF03200977

Dobbelstein D, Haas G, Rukzio E (2017) The effects of mobility, encumbrance, and (non-)dominant hand on interaction with smartwatches. In: Proceedings of the ACM international symposium on wearable computers (ISWC’17). ACM Press, New York, pp 90–93. https://doi.org/10.1145/3123021.3123033

Dourish P (2004) What we talk about when we talk about context. Pers Ubiquitous Comput 8(1):19–30. https://doi.org/10.1007/s00779-003-0253-8

Fisher AJ, Christie AW (1965) A note on disability glare. Vis Res 5(10–11):565–571. https://doi.org/10.1016/0042-6989(65)90089-1

Fischer JE, Greenhalgh C, Benford S (2011) Investigating episodes of mobile phone activity as indicators of opportune moments to deliver notifications. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’11). ACM Press, New York, pp 181–190. https://doi.org/10.1145/2037373.2037402

Fitts PM (1954) The information capacity of the human motor system in controlling the amplitude of movement. J Exp Psychol 47(6):381–391

Flatla DR, Gutwin C (2010) Individual models of color differentiation to improve interpretability of information visualization. In: Proceedings of the ACM conference on human factors in computing systems (CHI’10). ACM Press, New York, pp 2563–2572. https://doi.org/10.1145/1753326.1753715

Flatla DR, Gutwin C (2012a) SSMRecolor: improving recoloring tools with situation-specific models of color differentiation. In: Proceedings of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 2297–2306. https://doi.org/10.1145/2207676.2208388

Flatla DR, Gutwin C (2012b) Situation-specific models of color differentiation. ACM Trans Access Comput 4(3). Article No. 13. https://doi.org/10.1145/2399193.2399197

Fogarty J, Hudson SE, Atkeson CG, Avrahami D, Forlizzi J, Kiesler S, Lee JC, Yang J (2005) Predicting human interruptibility with sensors. ACM Trans Comput-Hum Interact 12(1):119–146. https://doi.org/10.1145/1057237.1057243

Fridman L, Reimer B, Mehler B, Freeman WT (2018) Cognitive load estimation in the wild. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 652. https://doi.org/10.1145/3173574.3174226

Fry GA, Alpern M (1953) The effect of a peripheral glare source upon the apparent brightness of an object. J Opt Soc Am 43(3):189–195. https://doi.org/10.1364/JOSA.43.000189

Fussell SR, Grenville D, Kiesler S, Forlizzi J, Wichansky AM (2002) Accessing multi-modal information on cell phones while sitting and driving. In: Proceedings of the human factors and ergonomics society 46th annual meeting (HFES’02). Human Factors and Ergonomics Society, Santa Monica, pp 1809–1813. https://doi.org/10.1177/154193120204602207

Gajos KZ, Hurst A, Findlater L (2012) Personalized dynamic accessibility. Interactions 19(2):69–73. https://doi.org/10.1145/2090150.2090167

Ghandeharioun A, Picard R (2017) BrightBeat: effortlessly influencing breathing for cultivating calmness and focus. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’17). ACM Press, New York, pp 1624–1631. https://doi.org/10.1145/3027063.3053164

Ghosh D, Foong PS, Zhao S, Chen D, Fjeld M (2018) EDITalk: towards designing eyes-free interactions for mobile word processing. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 403. https://doi.org/10.1145/3173574.3173977

Goel M, Findlater L, Wobbrock JO (2012a) WalkType: using accelerometer data to accommodate situational impairments in mobile touch screen text entry. In: Proceedings of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 2687–2696. https://doi.org/10.1145/2207676.2208662

Goel M, Wobbrock JO, Patel SN (2012b) GripSense: using built-in sensors to detect hand posture and pressure on commodity mobile phones. In: Proceedings of the ACM symposium on user interface software and technology (UIST’12). ACM Press, New York, pp 545–554. https://doi.org/10.1145/2380116.2380184

Goel M, Jansen A, Mandel T, Patel SN, Wobbrock JO (2013) ContextType: using hand posture information to improve mobile touch screen text entry. In: Proceedings of the ACM conference on human factors in computing systems (CHI’13). ACM Press, New York, pp 2795–2798. https://doi.org/10.1145/2470654.2481386

Goncalves J, Sarsenbayeva Z, van Berkel N, Luo C, Hosio S, Risanen S, Rintamäki H, Kostakos V (2017) Tapping task performance on smartphones in cold temperature. Interact Comput 29(3):355–367. https://doi.org/10.1093/iwc/iww029

Gonzalez VM, Mark G (2004) Constant, constant, multi-tasking craziness: managing multiple working spheres. In: Proceedings of the ACM conference on human factors in computing systems (CHI’04). ACM Press, New York, pp 113–120. https://doi.org/10.1145/985692.985707

Goodman MJ, Tijerina L, Bents FD, Wierwille WW (1999) Using cellular telephones in vehicles: safe or unsafe? Transp Hum Factors 1(1):3–42. https://doi.org/10.1207/sthf0101_2

Haberman C (2018) The dangers of walking while texting. The New York Times, p SR10. https://www.nytimes.com/2018/03/17/opinion/do-not-read-this-editorial-while-walking.html

Haigney DE, Taylor RG, Westerman SJ (2000) Concurrent mobile (cellular) phone use and driving performance: task demand characteristics and compensatory processes. Transp Res Part F 3(3):113–121. https://doi.org/10.1016/S1369-8478(00)00020-6

Halvey M, Wilson G, Brewster S, Hughes S (2012) Baby it’s cold outside: the influence of ambient temperature and humidity on thermal feedback. In: Proceedings of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 715–724. https://doi.org/10.1145/2207676.2207779

Han T, Xiao X, Shi L, Canny J, Wang J (2015) Balancing accuracy and fun: designing camera based mobile games for implicit heart rate monitoring. In: Proceedings of the ACM conference on human factors in computing systems (CHI’15). ACM Press, New York, pp 847–856. https://doi.org/10.1145/2702123.2702502

Hernandez J, Paredes P, Roseway A, Czerwinski M (2014) Under pressure: sensing stress of computer users. In: Proceedings of the ACM conference on human factors in computing systems (CHI’14). ACM Press, New York, pp 51–60. https://doi.org/10.1145/2556288.2557165

Hincapié-Ramos JD, Irani P (2013) CrashAlert: enhancing peripheral alertness for eyes-busy mobile interaction while walking. In: Proceedings of the ACM conference on human factors in computing systems (CHI’13). ACM Press, New York, pp 3385–3388. https://doi.org/10.1145/2470654.2466463

Horvitz E, Jacobs A, Hovel D (1999) Attention-sensitive alerting. In: Proceedings of the conference on uncertainty in artificial intelligence (UAI’99). Morgan Kaufmann, San Francisco, pp 305–313. https://dl.acm.org/citation.cfm?id=2073831

Horvitz E, Kadie C, Paek T, Hovel D (2003) Models of attention in computing and communication: from principles to applications. Commun ACM 46(3):52–59. https://doi.org/10.1145/636772.636798

Huggins-Daines D, Kumar M, Chan A, Black AW, Ravishankar M, Rudnicky AI (2006) Pocketsphinx: a free, real-time continuous speech recognition system for hand-held devices. In: Proceedings of the IEEE international conference on acoustics, speech, and signal processing (ICASSP’06). IEEE Signal Processing Society, Piscataway, pp 185–188. https://doi.org/10.1109/ICASSP.2006.1659988

Huot S, Lecolinet E (2006) SpiraList: a compact visualization technique for one-handed interaction with large lists on mobile devices. In: Proceedings of the Nordic conference on human-computer interaction (NordiCHI’06). ACM Press, New York, pp 445–448. https://doi.org/10.1145/1182475.1182533

Iqbal ST, Horvitz E (2007) Disruption and recovery of computing tasks: field study, analysis, and directions. In: Proceedings of the ACM conference on human factors in computing systems (CHI’07). ACM Press, New York, pp 677–686. https://doi.org/10.1145/1240624.1240730

Kane SK, Wobbrock JO, Smith IE (2008) Getting off the treadmill: evaluating walking user interfaces for mobile devices in public spaces. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’08). ACM Press, New York, pp 109–118. https://doi.org/10.1145/1409240.1409253

Kane SK, Jayant C, Wobbrock JO, Ladner RE (2009) Freedom to roam: a study of mobile device adoption and accessibility for people with visual and motor disabilities. In: Proceedings of the ACM SIGACCESS conference on computers and accessibility (ASSETS’09). ACM Press, New York, pp 115–122. https://doi.org/10.1145/1639642.1639663

Karlson AK, Bederson BB (2007) ThumbSpace: generalized one-handed input for touchscreen-based mobile devices. In: Proceedings of the IFIP TC13 11th international conference on human-computer interaction (INTERACT’07). Lecture notes in computer science, vol 4662. Springer, Berlin, pp 324–338. https://doi.org/10.1007/978-3-540-74796-3_30

Karlson AK, Bederson BB, SanGiovanni J (2005) AppLens and LaunchTile: two designs for one-handed thumb use on small devices. In: Proceedings of the ACM conference on human factors in computing systems (CHI’05). ACM Press, New York, pp 201–210. https://doi.org/10.1145/1054972.1055001

Karlson AK, Bederson BB, Contreras-Vidal JL (2008) Understanding one-handed use of mobile devices. In: Lumsden J (ed) Handbook of research on user interface design and evaluation for mobile technology. IGI Global, Hershey, pp 86–101. http://www.irma-international.org/chapter/understanding-one-handed-use-mobile/21825/

Karlson AK, Iqbal ST, Meyers B, Ramos G, Lee K, Tang JC (2010) Mobile taskflow in context: a screenshot study of smartphone usage. In: Proceedings of the ACM conference on human factors in computing systems (CHI’10). ACM Press, New York, pp 2009–2018. https://doi.org/10.1145/1753326.1753631

Kern D, Marshall P, Schmidt A (2010) Gazemarks: gaze-based visual placeholders to ease attention switching. In: Proceedings of the ACM conference on human factors in computing systems (CHI’10). ACM Press, New York, pp 2093–2102. https://doi.org/10.1145/1753326.1753646

Kim L, Albers MJ (2001) Web design issues when searching for information in a small screen display. In: Proceedings of the ACM conference on computer documentation (SIGDOC’01). ACM Press, New York, pp 193–200. https://doi.org/10.1145/501516.501555

Kosch T, Hassib M, Woźniak PW, Buschek D, Alt F (2018) Your eyes tell: leveraging smooth pursuit for assessing cognitive workload. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 436. https://doi.org/10.1145/3173574.3174010

Kristoffersen S, Ljungberg F (1999) Making place to make IT work: empirical explorations of HCI for mobile CSCW. In: Proceedings of the ACM conference on supporting group work (GROUP’99). ACM Press, New York, pp 276–285. https://doi.org/10.1145/320297.320330

Le HV, Mayer S, Bader P, Henze N (2018) Fingers’ range and comfortable area for one-handed smartphone interaction beyond the touchscreen. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 31. https://doi.org/10.1145/3173574.3173605

Levy DM, Wobbrock JO, Kaszniak AW, Ostergren M (2011) Initial results from a study of the effects of meditation on multitasking performance. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’11). ACM Press, New York, pp 2011–2016. https://doi.org/10.1145/1979742.1979862

Levy DM, Wobbrock JO, Kaszniak AW, Ostergren M (2012) The effects of mindfulness meditation training on multitasking in a high-stress information environment. In: Proceedings of graphics interface (GI’12). Canadian Information Processing Society, Toronto, pp 45–52. https://dl.acm.org/citation.cfm?id=2305285

Li KA, Baudisch P, Hinckley K (2008) Blindsight: eyes-free access to mobile phones. In: Proceedings of the ACM conference on human factors in computing systems (CHI’08). ACM Press, New York, pp 1389–1398. https://doi.org/10.1145/1357054.1357273

LiKamWa R, Zhong L (2011) SUAVE: sensor-based user-aware viewing enhancement for mobile device displays. In: Adjunct proceedings of the ACM symposium on user interface software and technology (UIST’11). ACM Press, New York, pp 5–6. https://doi.org/10.1145/2046396.2046400

Lim H, An G, Cho Y, Lee K, Suh B (2016) WhichHand: automatic recognition of a smartphone’s position in the hand using a smartwatch. In: Adjunct proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’16). ACM Press, New York, pp 675–681. https://doi.org/10.1145/2957265.2961857

Lin M, Price KJ, Goldman R, Sears A, Jacko JA (2005) Tapping on the move - Fitts’ law under mobile conditions. In: Proceedings of the 16th annual information resources management association international conference (IRMA’05). Idea Group, Hershey, pp 132–135. http://www.irma-international.org/proceeding-paper/tapping-move-fitts-law-under/32557/

Lin M, Goldman R, Price KJ, Sears A, Jacko J (2007) How do people tap when walking? An empirical investigation of nomadic data entry. Int J Hum-Comput Stud 65(9):759–769. https://doi.org/10.1016/j.ijhcs.2007.04.001

Lu J-M, Lo Y-C (2018) Can interventions based on user interface design help reduce the risks associated with smartphone use while walking? In: Proceedings of the 20th congress of the international ergonomics association (IEA’18). Advances in intelligent systems and computing, vol 819. Springer Nature, Switzerland, pp 268–273. https://doi.org/10.1007/978-3-319-96089-0_29

Lumsden J, Brewster S (2003) A paradigm shift: alternative interaction techniques for use with mobile and wearable devices. In: Proceedings of the conference of the IBM centre for advanced studies on collaborative research (CASCON’03). IBM Press, Indianapolis, pp 197–210. https://dl.acm.org/citation.cfm?id=961322.961355

MacKay B, Dearman D, Inkpen K, Watters C (2005) Walk ’n scroll: a comparison of software-based navigation techniques for different levels of mobility. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’05). ACM Press, New York, pp 183–190. https://doi.org/10.1145/1085777.1085808

MacKenzie IS (1992) Fitts’ law as a research and design tool in human-computer interaction. Hum-Comput Interact 7(1):91–139. https://doi.org/10.1207/s15327051hci0701_3

MacKenzie IS, Castellucci SJ (2012) Reducing visual demand for gestural text input on touchscreen devices. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 2585–2590. https://doi.org/10.1145/2212776.2223840

Macpherson K, Tigwell GW, Menzies R, Flatla DR (2018) BrightLights: gamifying data capture for situational visual impairments. In: Proceedings of the ACM SIGACCESS conference on computers and accessibility (ASSETS’18). ACM Press, New York, pp 355–357. https://doi.org/10.1145/3234695.3241030

Maehr W (2008) eMotion: estimation of user’s emotional state by mouse motions. VDM Verlag, Saarbrücken. https://dl.acm.org/citation.cfm?id=1522361

Manalavan P, Samar A, Schneider M, Kiesler S, Siewiorek D (2002) In-car cell phone use: mitigating risk by signaling remote callers. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’02). ACM Press, New York, pp 790–791. https://doi.org/10.1145/506443.506599

Mankoff J, Hayes GR, Kasnitz D (2010) Disability studies as a source of critical inquiry for the field of assistive technology. In: Proceedings of the ACM SIGACCESS conference on computers and accessibility (ASSETS’10). ACM Press, New York, pp 3–10. https://doi.org/10.1145/1878803.1878807

Mäntyjärvi J, Seppänen T (2003) Adapting applications in handheld devices using fuzzy context information. Interact Comput 15(4):521–538. https://doi.org/10.1016/S0953-5438(03)00038-9

Marentakis GN, Brewster SA (2006) Effects of feedback, mobility and index of difficulty on deictic spatial audio target acquisition in the horizontal plane. In: Proceedings of the ACM conference on human factors in computing systems (CHI’06). ACM Press, New York, pp 359–368. https://doi.org/10.1145/1124772.1124826

Mariakakis A, Goel M, Aumi MTI, Patel SN, Wobbrock JO (2015) SwitchBack: using focus and saccade tracking to guide users’ attention for mobile task resumption. In: Proceedings of the ACM conference on human factors in computing systems (CHI’15). ACM Press, New York, pp 2953–2962. https://doi.org/10.1145/2702123.2702539

Mariakakis A, Parsi S, Patel SN, Wobbrock JO (2018) Drunk user interfaces: determining blood alcohol level through everyday smartphone tasks. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 234. https://doi.org/10.1145/3173574.3173808

Mark G, Gonzalez VM, Harris J (2005) No task left behind? Examining the nature of fragmented work. In: Proceedings of the ACM conference on human factors in computing systems (CHI’05). ACM Press, New York, pp 321–330. https://doi.org/10.1145/1054972.1055017

Mark G, Gudith D, Klocke U (2008) The cost of interrupted work: more speed and stress. In: Proceedings of the ACM conference on human factors in computing systems (CHI’08). ACM Press, New York, pp 107–110. https://doi.org/10.1145/1357054.1357072

Mayer S, Lischke L, Woźniak PW, Henze N (2018) Evaluating the disruptiveness of mobile interactions: a mixed-method approach. In: Proceedings of the ACM conference on human factors in computing systems (CHI’18). ACM Press, New York. Paper No. 406. https://doi.org/10.1145/3173574.3173980

McFarlane DC (2002) Comparison of four primary methods for coordinating the interruption of people in human-computer interaction. Hum-Comput Interact 17(1):63–139. https://doi.org/10.1207/S15327051HCI1701_2

McKnight AJ, McKnight AS (1993) The effect of cellular phone use upon driver attention. Accid Anal Prev 25(3):259–265. https://doi.org/10.1016/0001-4575(93)90020-W

Miniotas D, Spakov O, Evreinov G (2003) Symbol creator: an alternative eye-based text entry technique with low demand for screen space. In: Proceedings of the IFIP TC13 9th international conference on human-computer interaction (INTERACT’03). IOS Press, Amsterdam, pp 137–143. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.97.1753

Miyaki T, Rekimoto J (2009) GraspZoom: zooming and scrolling control model for single-handed mobile interaction. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’09). ACM Press, New York. Article No. 11. https://doi.org/10.1145/1613858.1613872

Mizobuchi S, Chignell M, Newton D (2005) Mobile text entry: relationship between walking speed and text input task difficulty. In: Proceedings of the ACM conference on human-computer interaction with mobile devices and services (MobileHCI’05). ACM Press, New York, pp 122–128. https://doi.org/10.1145/1085777.1085798

Moraveji N, Olson B, Nguyen T, Saadat M, Khalighi Y, Pea R, Heer J (2011) Peripheral paced respiration: influencing user physiology during information work. In: Proceedings of the ACM symposium on user interface software and technology (UIST’11). ACM Press, New York, pp 423–428. https://doi.org/10.1145/2047196.2047250

Moraveji N, Adiseshan A, Hagiwara T (2012) BreathTray: augmenting respiration self-regulation without cognitive deficit. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’12). ACM Press, New York, pp 2405–2410. https://doi.org/10.1145/2212776.2223810

Mott ME, Wobbrock JO (2019) Cluster touch: improving smartphone touch accuracy for people with motor and situational impairments. In: Proceedings of the ACM conference on human factors in computing systems (CHI’19). ACM Press, New York. To appear

Mustonen T, Olkkonen M, Häkkinen J (2004) Examining mobile phone text legibility while walking. In: Extended abstracts of the ACM conference on human factors in computing systems (CHI’04). ACM Press, New York, pp 1243–1246. https://doi.org/10.1145/985921.986034

Naftali M, Findlater L (2014) Accessibility in context: understanding the truly mobile experience of smartphone users with motor impairments. In: Proceedings of the ACM SIGACCESS conference on computers and accessibility (ASSETS’14). ACM Press, New York, pp 209–216. https://doi.org/10.1145/2661334.2661372

Newell AF (1995) Extra-ordinary human-computer interaction. In: Edwards ADN (ed) Extra-ordinary human-computer interaction: interfaces for users with disabilities. Cambridge University Press, Cambridge, pp 3–18