Abstract

Motor imagery (MI) electroencephalogram (EEG) recognition is currently widely used in brain-computer interface (BCI) devices for people with motor disabilities to achieve various motor interaction functions with the outside world. Over 70% of recent researches use convolutional neural networks (CNN) for recognition of MI. However, using CNN is often difficult to fully utilize the temporal features of long time series in EEG. It may lead to part of the difference information among subjects not being learned by the CNN. In this paper, we introduce a multi-branch CNN that integrates a gate recurrent unit (GRU) module. In this model, the serial module extracts rough features in the temporal and spatial domains, and the parallel module uses different scale convolution blocks and GRU modules to extract time series information in different ranges to improve the precision of learning. This training strategy not only retains the advantages of CNN in extracting temporal and spatial features, but also makes full use of the long time series information extracted by GRU so as to improve the classification accuracy. Experimental results show that our proposed framework has higher performance compared to other typical models, such as DeepNet, EEGNet. The within-subject average classification accuracy reaches 74.4% on BCI competition IV-2a dataset, and the minimum accuracy among subjects increases by 5.6%. This indicates that the proposed model has good generalization ability among different subjects, thus promoting the implementation of personalized real-time BCI devices in the future.

This work is supported by the National Natural Science Foundation of China under Grant No. 62072468.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Electroencephalogram (EEG)

- Motor Imagery (MI)

- Convolutional neural network (CNN)

- Gate Recurrent Unit (GRU)

1 Introduction

Electroencephalogram (EEG) signals contain important information about human conditions, and brain-computer interface (BCI) systems with EEG are currently widely used for normal interaction between people with related disabilities through external devices [3], as well as brainprint identification [7]. For these purposes, using computers to decode EEG into understandable language becomes one of the research focuses of BCI technology.

Motor imagery (MI) refers to imagining an action in the brain but not actually executing it [14], which is different from the EEG signals generated during actual actions [16], and has a wide range of application scenarios. In the field of healthcare, it can be used for the rehabilitation process of patients with paralysis and stroke [11], and can also help patients with motor disorders communicate with the outside world through BCI devices [10], such as mobile assisted robots [5].

EEG signals have relatively low signal to noise ratio and large individual differences [25], and the current research results are not sufficient to achieve a satisfactory level of application. The ultimate goal of EEG signal recognition should be robust, automatic, and with high-precision [1]. In traditional methods, the processing of MI-EEG signals is usually composed of three steps: signal preprocessing, feature extraction, and classification [2]. Traditional feature extraction methods such as common spatial pattern can be used to construct optimal spatial filters. And Principal Component Analysis can be used for data preprocessing which can extract more effective features [24]. However, they are often difficult to meet the actual needs, and often need manual debugging.

In recent years, the widely used deep learning model not only overcomes the limitations of specific tasks, but also further improves the classification accuracy based on data preprocessing. With the future progress in the fields of computer vision and natural language processing, the recognition of MI will be more accurate and rapid. The convolutional neural network (CNN) framework, which is widely used in EEG processing, is successfully applied in MI recognition since its inception. The deep CNN model can use parallel multiscale filter banks to process data, and the resulting inter subject individual network has a good effect on cross subject classification [18]. Using batch normalization (BN) [8], exponential linear units (ELU), and reasonably designing training strategies, the decoding performance of deep convolutional networks [17] are effectively improved.

Recent research on recognizing MI mainly concentrates on various CNN models, as presented in Fig. 1 [1]. This figure also affirms that CNN has an excellent capability to classify EEG-based MI. Nonetheless, the employment of CNN models in MI classification encounters some limitations as the research progressed. For example, although CNN has strong ability to extract local features, its performance in extracting long time series features is poor. However, most studies in improving CNN do not consider the long term series features of the data itself.

In this study, we propose an improved CNN model for EEG-based MI classification tasks. Long-term features in MI signals are extracted using the gate recurrent unit (GRU), while temporal and spatial features are extracted by the convolutional blocks. The biggest difference between our method and other hybrid CNN methods is that GRU extracts features in parallel. This process does not forget the features obtained after convolution operations, and can fully leverage the advantages of CNN in extracting local features and GRU in extracting long time series features. This approach proves to be highly effective in compensating for the large individual difference present in EEG-based MI signals. The proposed model is evaluated on the BCI competition IV-2a, a highly challenging dataset, and achieves competitive accuracy level in within-subject classification. The manuscript is structured as follows: Sect. 2 introduces the related works. Section 3 describes the proposed model’s architecture in detail. Section 4 covers the experimental setup including the dataset, performance metrics, and analysis of the experimental results. The limitations and potential of the proposed model are further discussed. Section 5 summarizes the paper.

2 Related Works

For the problem of less EEG-based MI data, literature [23] uses data augmentation to construct artificial EEG frames to expand the dataset and maintain the original multi-channel EEG input. In order to better address the problem of large individual differences in EEG, literature [5] conducts more in-depth research on feature extraction methods using mixed scale convolution blocks, and finds that the optimal convolution scale varies depending on the subject. And then they use different convolution kernels to extract temporal features. Further, a series parallel structure is used in the deep framework to extract deep features at different scales in the time domain, space domain, and frequency domain [25]. Distinguishing temporal convolution from spatial convolution to extract the feature representation of EEG signals in different spaces simultaneously is a design idea for general parallel structures [9].

A small number of researchers focus on developing and utilizing other networks to improve classification accuracy, such as using wavelet instead of convolutional layer [23]. Among them, recurrent neural networks (RNN) [19] have a good performance. And long short-term memory (LSTM) networks are feasible in analyzing and learning longer range data sequences [15], such as stacked LSTM using FFT preprocessing and downsampling [4] or hybrid LSTM models with CNN decoders [6]. Literature [22] designs a shared neural network independent of the subjects. It uses all data from different subjects to form a model.

3 Methods

3.1 The Framework of the Model Proposed

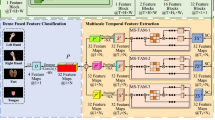

The main framework is shown in Fig. 2. We first extract the shallow features using a shallow feature extraction module (SFE) that can retain time series features [10]. In this process, a portion of the disordered noise can also be removed. Next, we use a deep feature extraction module (DFE) to extract temporal features from three different views, namely, features in a short time period, features in a medium time period, and features in a long time period. Then, we make corresponding feature concatenate according to the data format to get the classification results. The framework of the SFE and DFE will be described in the next section.

3.2 The Framework of the Two Modules

The SFE is shown in Fig. 3. Firstly, the EEG signals from multiple channels are converted into 1D multi-channel data through channel projection. We used a total of 4.5 s of MI and rest process data, which is an input length of 1125. The advantage is that the collected 1D signals are directly utilized, without the need for additional transformations, such as converting to a designed fixed form of 2D feature map. Next we apply temporal and spatial convolutions after converting the channel dimensions to extract shallow features. The input is 1D time series information from 22 channels. Channel projection using \(1 \times 1\) kernel convolution layer and converts the features into 35 channels. In order to better process the feature, the number of channels is converted into the second dimension of the data, which is achieved through BN. Then \(1 \times 11\) kernel is applied to extract spatial features and transforming the output into 25 channels, i.e. (25, 35, 1115). \(35 \times 1\) time kernel is used to convert the second dimension to the temporal dimension, the common input for the DFE module is obtained. We record it as Z.

The DFE module consists of three branches. On the one hand, we continue to exploit the advantages of CNN in extracting EEG-based MI signal features, using different convolution kernels to extract high-level features on medium and short time scales. The medium time scale information is extracted by dilated convolution with a dilation rate of 2, which means skipping a data point and select a point as the object of the convolution operation. The convolution kernel size is shown in (1).

where d is the size of the convolution kernel, ker is the original size of the kernel, and p is the dilation rate. This can expand the receptive field of the convolution, resulting in the desired medium length of time feature. The receptive field can be calculated by (2).

where f is the receptive field, ker is the original size of the kernel, and p is the dilation rate. On the other hand, there are large individual differences in EEG-based MI signals. Using GRU to extract the long time series features of EEG data can better solve the problem without destroying the feature extracted by CNN. The DFE architecture is shown in Fig. 4. The first branch performs a max pooling and a convolution operation with a size of (100, \(1 \times 1\)). And then performing \(11 \times 1\) filtered convolution to obtain a medium length time feature (100, 1, 371). The second branch performs a max pooling and convolution operation with 100 convolution kernels of size \(1 \times 1\). And then performing a convolution operation with size \(11 \times 1\) to obtain a short time feature, and then fuses the first two branches and the Z after max pooling to obtain a feature of (225, 1, 371). In order to highlight the role of long-term features, we perform continuous pooling, convolution, and pooling of the first two branch fused feature to reduce the size of it. Finally, we obtain a 1D feature by flatten, and the temporal dimension of the feature obtained after each pooling is one-third of the original size with convolution kernel size \(1 \times 11\). In the third branch, Z is put into GRU, and the hidden size of the GRU is set to 128, that is, 128 hidden state neurons. We select the last hidden state of the hidden layer as the output of the third branch, and concatenate the flattened results obtained from the first two branches to obtain a 1D feature with a length of 8453. The hidden layer output is as follows:

where \(y_t\) is the hidden state variable at time t, \(z_t\) is the state variable of the update gate at time t, \(y_{t-1}\) is the hidden state variable at time \(t-1\), \(d_t\) is the candidate hidden state variable at time t, and \(y_t\) is affected by both the input at time t and the candidate hidden state variable at time \(t-1\). Equation (3) means that each hidden state variable \(y_t\) is affected by the previous hidden state variable \(y_{t-1}\). When long-term sequence information is used as input, GRU continuously forgets and remembers to enable the hidden state variable \(y_t\) to obtain important information in the sequence.

At last, we put the feature into fully connected layer to obtain an output with a length of 4, and use it as the input of softmax to finally obtain the classification result. ELU is used as the activation function for convolution and fully connected layers. Dropout and BN are used before and after convolution and fully connected layer to reduce the risk of overfitting.

The loss function uses cross entropy loss.

where l is the loss, \(td_i (x)\) is the probability distribution of the i-th tag in the target domain, and \(pd_i (x)\) is the probability distribution of the i-th tag in the prediction domain.

4 Experiment

4.1 Dataset

The BCI competition IV-2a dataset is a widely used MI dataset [1], which is a 4-class dataset that includes four types of MI: left hand, right hand, tongue, and foot. EEG data were obtained from 9 subjects, each of whom completed a session on two different dates. One session was used as the training and validation set for the classifier, and the other session was used as the test set. Each session is further divided into 6 runs, and each run contains 48 trials. These 48 trials have 12 of each type, including left hand, right hand, tongue, and foot, but the order is random. The process of a trial is as follows. When t = 0 s, the screen displays the cross and emits a prompt tone. When t = 2 s, an MI category prompt appears on the screen, indicating the MI category that the subject needs to perform next. The prompt lasts for 1.25 s. When t = 3 s, the subject begins to perform the corresponding MI, which lasts for 3 s. When t = 6 s, this trial ends, enters a short rest, and then proceeds to the next trial. The experimental content and corresponding time are shown in Fig. 5.

Including the short break, each trial takes approximately 8 s. The collected data includes a total of 22 EEG channels and 3 electrooculogram channels, with a sampling signal frequency of 250 Hz. Bandpass filtering is performed between 0.5 Hz and 100 Hz, and a 50 Hz notch filter is used to remove line noise.

4.2 Train Approach

The experiment uses the Adam optimizer, which has been proven to be the most widely used and effective EEG-based MI classification optimization algorithm [2], with advantages such as simple implementation and low memory requirements. The initial learning rate is set to 0.0001, and the adaptive attenuation learning rate can ensure the generalization ability for multiple subjects to a certain extent. Because during the experiment, it can be observed that the convergence speed is slightly different when using data from different subjects for training. If a fixed learning rate is used, it may lead to significant differences in training speed among subjects. The batch size for training is 260, and the batch size for validation is 20.

The BCI competition IV-2a dataset is stored in gdf format during collection, which is a commonly used format for EEG signals. It needs to be decoded into mat format for training. The converted data size should be (2, 288, 22, 1125), where 2 is the data and its labels, 288 is the number of trials per session, and 1125 is the number of sampling points per trial.

This paper randomly divides the training data of each subject into 10 equal parts, with one part serving as the validation part and the other parts serving as the training parts. We repeat the experiment 10 times and calculate the average of the results. The above method is called the 10-fold cross method. After the experiment, 9 separate models were established for 9 subjects, and for each model, the training and testing data came from the same subject. This kind of within-subject classification does not require data from others for training, and has high application value in real-time BCI devices.

4.3 Experiment Results

We compared the proposed framework with deep learning methods such as DeepNet, EEGNet, ShallowNet and SPCNN. The results of these methods are introduced from literature [25], and we used the same experimental setup and training methodologies as these methods. The average test accuracy is shown in Fig. 6. In our method, the average testing accuracy of within-subject classification reaches 74.4%.

Due to the individual differences among subjects, the average test accuracy often fluctuates greatly in the within-subject classification [21]. The comparison for each subject is shown in Table 1. We focus on the maximum deviation from the average, as this directly affects the experience among BCI users. In our method, the lowest testing accuracy can reach 63.3%, which is 5.6% higher than the SPCNN [25] method. The maximum deviation from the average is 17.8% which means that the user experience of BCI will not be too bad.

The smaller the value of cross entropy loss, the better the prediction effect of the model. One reason why the recognition accuracy is not very high is that the data distribution of the test data and the training data in the BCI competition IV-2a dataset is different, and they are collected on different days. One usage scenario for BCI devices is to collect data from the same day for real-time training and use. In this case, the magnitude of cross entropy loss under the same training time for the same equipment has become an important indicator of practicality. Due to the different convergence rates of various models, we uniformly select the minimum loss comparison at 1000 epochs, as shown in Table 2. Our method is superior to the classic DeepNet [25] and EEGNet [25] methods.

4.4 Ablation Study

The effectiveness of CNN in extracting features at different scales has been proven by a large number of studies [24]. For the effectiveness of the GRU branch, the accuracy comparison before and after removing the GRU branch is shown in Table 3. It can be seen that the extraction of deep features by GRU plays a certain role in improving the accuracy of the model.

The training set and the test set are data from different dates with a certain number of days interval, and their distribution may vary. Therefore, there are certain requirements for the generalization ability of the network. By using GRU to learn more deep features from EEG, the problem of low recognition accuracy caused by such differences can be alleviated. Compared with LSTM, GRU has a lower complexity and a corresponding reduction in the amount of parameters and computation, which makes it more advantageous when faced with multi-layer neural networks with less data.

4.5 Limitation and Prospect

The network framework proposed in this paper is a little complex for the dataset used. Although methods such as dropout and BN are used, overfitting is still prone to occur on some subjects. In addition, It is obvious that significantly increasing the GRU output will amplify the noise in EEG data, which will cause some invalid features learned, thus discounting the classification accuracy [20]. In addition, an ideal real-time BCI device should use fewer channels rather than a large number of electrodes in the head [13]. However, in existing studies, using fewer channels is difficult to achieve satisfactory performance. Appropriate processing of \(\delta \) (\(\delta \), 0.5-3 Hz), \(\theta \) (\(\theta \), 4-7 Hz), \(\alpha \) ( \(\alpha \), 8-13 Hz), \(\beta \) (\(\beta \), 14-30 Hz) and \(\gamma \) (\(\gamma \), 30-80 Hz) frequency bands can also improve the recognition performance [12], which will further accelerate the development of the BCI field.

5 Conclusion

In this paper, we propose a parallel model combining CNN and GRU, which is capable of learning features from three views. The model uses convolution layer and dilated convolution layer to extract local features, and GRU to extract global time features. Our model can extract time and spatial features of different scales, overcoming individual differences in MI recognition to a certain extent, and has better recognition performance than mainstream networks in within-subject classification. The experimental results also show that the multi-branch parallel structure have good generalization ability among different subjects and the parallel GRU is proven to be effective.

References

Al-Saegh, A., Dawwd, S.A., Abdul-Jabbar, J.M.: Deep learning for motor imagery EEG-based classification: a review. Biomed. Signal Process. Control 63, 102172 (2021)

Altaheri, H., et al.: Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: a review. Neural Comput. Appl. 35, 1–42 (2021)

Ang, K.K., Guan, C.: EEG-based strategies to detect motor imagery for control and rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 25(4), 392–401 (2016)

Bai, Z., Yang, R., Liang, Y.: Mental task classification using electroencephalogram signal. arXiv preprint arXiv:1910.03023 (2019)

Dai, G., Zhou, J., Huang, J., Wang, N.: HS-CNN: a CNN with hybrid convolution scale for EEG motor imagery classification. J. Neural Eng. 17(1), 016025 (2020)

Garcia-Moreno, F.M., Bermudez-Edo, M., Rodríguez-Fórtiz, M.J., Garrido, J.L.: A CNN-LSTM deep learning classifier for motor imagery EEG detection using a low-invasive and low-cost BCI headband. In: 2020 16th International Conference on Intelligent Environments (IE), pp. 84–91. IEEE (2020)

Jin, X., et al.: CTNN: a convolutional tensor-train neural network for multi-task brainprint recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 103–112 (2020)

Li, G., et al.: An EEG data processing approach for emotion recognition. IEEE Sens. J. 22(11), 10751–10763 (2022)

Li, X., et al.: EEG motor imagery classification based on multi-spatial convolutional neural network. In: 2022 5th International Conference on Artificial Intelligence and Big Data (ICAIBD), pp. 433–437. IEEE (2022)

Li, Y., Zhang, X.R., Zhang, B., Lei, M.Y., Cui, W.G., Guo, Y.Z.: A channel-projection mixed-scale convolutional neural network for motor imagery EEG decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 27(6), 1170–1180 (2019)

Lin, P.J., et al.: CNN-based prognosis of BCI rehabilitation using EEG from first session BCI training. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 1936–1943 (2021)

Mai, N.D., Long, N.M.H., Chung, W.Y.: 1D-CNN-based BCI system for detecting emotional states using a wireless and wearable 8-channel custom-designed EEG headset. In: 2021 IEEE International Conference on Flexible and Printable Sensors and Systems (FLEPS), pp. 1–4. IEEE (2021)

Mattioli, F., Porcaro, C., Baldassarre, G.: A 1D CNN for high accuracy classification and transfer learning in motor imagery EEG-based brain-computer interface. J. Neural Eng. 18(6), 066053 (2022)

Musallam, Y.K., et al.: Electroencephalography-based motor imagery classification using temporal convolutional network fusion. Biomed. Signal Process. Control 69, 102826 (2021)

Pathan, S.M.K., Rana, M.M.: Investigation on classification of motor imagery signal using bidirectional LSTM with effect of dropout layers. In: 2022 International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), pp. 1–5. IEEE (2022)

Petoku, E., Capi, G.: Object movement motor imagery for EEG based BCI system using convolutional neural networks. In: 2021 9th International Winter Conference on Brain-Computer Interface (BCI), pp. 1–5. IEEE (2021)

Schirrmeister, R.T., et al.: Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38(11), 5391–5420 (2017)

Wu, H., et al.: A parallel multiscale filter bank convolutional neural networks for motor imagery EEG classification. Front. Neurosci. 13, 1275 (2019)

Yang, Y., Wu, Q., Qiu, M., Wang, Y., Chen, X.: Emotion recognition from multi-channel EEG through parallel convolutional recurrent neural network. In: 2018 International Joint Conference on Neural Networks (IJCNN), pp. 1–7. IEEE (2018)

Zhang, D., Yao, L., Chen, K., Wang, S., Chang, X., Liu, Y.: Making sense of spatio-temporal preserving representations for EEG-based human intention recognition. IEEE Trans. Cybern. 50(7), 3033–3044 (2019)

Zhang, H.Y., Stevenson, C.E., Jung, T.P., Ko, L.W.: Stress-induced effects in resting EEG spectra predict the performance of SSVEP-based BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 28(8), 1771–1780 (2020)

Zhang, R., Zong, Q., Dou, L., Zhao, X.: A novel hybrid deep learning scheme for four-class motor imagery classification. J. Neural Eng. 16(6), 066004 (2019)

Zhang, Z., et al.: A novel deep learning approach with data augmentation to classify motor imagery signals. IEEE Access 7, 15945–15954 (2019)

Zhao, X., Zhang, H., Zhu, G., You, F., Kuang, S., Sun, L.: A multi-branch 3D convolutional neural network for EEG-based motor imagery classification. IEEE Trans. Neural Syst. Rehabil. Eng. 27(10), 2164–2177 (2019)

Zhao, X., et al.: Deep CNN model based on serial-parallel structure optimization for four-class motor imagery EEG classification. Biomed. Signal Process. Control 72, 103338 (2022)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhang, J., Guo, W., Yu, H., Wang, Y. (2024). Motor Imagery EEG Recognition Based on an Improved Convolutional Neural Network with Parallel Gate Recurrent Unit. In: Liu, Q., et al. Pattern Recognition and Computer Vision. PRCV 2023. Lecture Notes in Computer Science, vol 14432. Springer, Singapore. https://doi.org/10.1007/978-981-99-8543-2_26

Download citation

DOI: https://doi.org/10.1007/978-981-99-8543-2_26

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8542-5

Online ISBN: 978-981-99-8543-2

eBook Packages: Computer ScienceComputer Science (R0)