Abstract

A recommendation system is important in today’s world because it suggests many options to the user that may be useful or appealing. These kinds of recommending systems are used in many applications and devices to focus on delivering the advertisements to a specific set of people. Even the commercial and social network websites depend on the recommendation system as it helps to recommend on basis of user profiles. In this paper, we analyzed the open-source software GitHub, which is a mandatory tool for the developers as it helps them to connect and exchange their works with fellow developers. We have predicted and analyzed the spread of followers to a particular user based on their repository details and nature of relation. This system would be beneficial for the developer to approach or work with other users in the same domain. Nowadays, GitHub is one of the fast-growing platforms with millions of users sharing their projects and by implementing the recommender system helps the user to reduce their time in searching for the similar users. This recommendation system is tested on the real-time data of an existing GitHub user by implementing different concepts of social network analysis such as centrality measure, similarity measure, link prediction, triads, and natural language processing concept stemming.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

By offering code hosting services and team-based software development frameworks, GitHub has gained the attention of many software engineers globally as a prominent software development platform, a social coding, and a web-based Git repository hosting service that enables the developers to engage in open-source project documentation, design, coding, and testing in a socio-cultural context. The important feature of this platform is that it allows following other users or organizations, so that they may get alerts about their public activity. This feature has enhanced user collaboration by encouraging them to explore more repositories and promoting the conversation on GitHub.

Moreover, GitHub is considered as a social media platform designed specifically for developers where they can duplicate the relevant projects and then customize the features of those projects to match their requirements. Also, users can ask for assistance from or have a conversation with people who are on their following lists when they run into technical difficulties while developing a repository, bug patches, code restructuring, and feature enhancements.

Even though the developers have the option to search for the projects or do the above things, it takes a lot of time and might disappoint them. So, to overcome this we analyzed a method to identify and recommend the followers working on similar domains to the user by using different centrality measures of network analysis, link prediction between the users, and recommending followers based on the content-based filtering technique.

The paper has five sections where Sect. 2 describes the related works and emphasizes the key characteristics used by us to implement the different measures of social network analysis and some techniques to identify the prominent followers. Section 3 has a detailed explanation of methodology analysis and the experimental findings from the implementation used to evaluate the effectiveness of the analysis are documented in Sect. 4. Finally the conclusion is given in Sect. 5.

2 Related Works

Initially we need to understand how GitHub works and what features it offers. As a result, we referred to a number of research studies conducted on GitHub with various objectives, where (Joy et al. 2018; Sarwar 2021; Koskela et al. 2018) focused on understanding and analyzing the various parameters of the open-source software GitHub. They were able to identify the key parameters as the number of contributions, programming languages, project size, and project age, and they investigated their significance by developing a conceptual model and experimenting with GitHub data. They were able to confirm that the above parameters have a greater impact on project performance as a result of this.

In paper Radha et al. (2017) proposed the implemented of different centrality measures where the USA road network to attain congestion-free shipment. They analyzed the road network based on the performance indicators and identified ways to reduce traffic issues that could aid in traffic management.

Thangavelu and Jyotishi (2018), Sharma and Mahajan (2017), and Gajanayake et al. (2020) proposed a detailed analysis of GitHub work and its projects. They primarily focused on identifying the characteristics of the firm behind the influence of the performance of the open-source project at different stages. In order to experiment they developed a model with four stages such as issues, forks, pull requests, and releases. Through this, they were able to examine the factors of success of the open-source project at different levels. We were able to gain an understanding of how GitHub works and the role of parameters as a result of these works.

Identification of a key person in the network using the well-known centrality measures such as degree, betweenness, closeness, katz, and Eigenvector centrality was proposed by Landher et al. (2010) and Yadav et al. (2019). They were able to conclude that Katz centrality is better for the network they chose based on the distance between nodes, the number of shortest paths, and ranking by implementing the above measure.

Farooq et al. (2018) implemented the above measures as well as and also the coefficient clustering and page rank to a covert network and were able to visualize the metrics that assisted them in identifying the most influential node. These two works served as a foundation for our research because they provided insight into how to implement those measures in our network.

Liu et al. (2019) proposed a method for predicting links between paper citations based on keywords, times, and author information. A series of experiments were also performed on the real-time dataset. They were able to correlate two papers and create a link in the citation network by assigning a value to a pair that was as accurate as possible. As a result, the dataset validated the feasibility of their proposal. We got an idea for implementing the link prediction to our dataset from this work.

Furthermore, to gain a better understanding of other methods for determining the social network’s most significant node, we referred to the work of Ramya and Bagavathi Sivakumar (2021), who demonstrated that DC-FNN classification outperforms fixed clustering and NLP-based sentiment analysis in identifying the influential node in the Twitter dataset based on categories such as pricing, service, and timeliness. They tested these approaches on the likelihood function using iterative logistic regression analysis and the temporal influential model (TIM), and the results show that they are more accurate than other methods in terms of precision, recall, and f-measure. We gained an understanding of the topic's role in a node's influence level as a result of this research.

3 Methodologies of Analysis

3.1 Data Description

We considered the essential GitHub values such as user, followers, following, gists, and numrepos, because we used real-time data from GitHub users. The values were adequate for our analysis, and a more detailed explanation is provided in the following sections.

3.2 Overview of the Work Flow Model

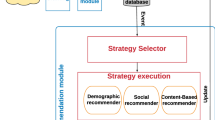

In this diagram, we depicted the flow of methods that assisted us in obtaining the required analysis. There are six divisions in total, with the first two illustrating GitHub access and follower analysis, and the outputs obtained being passed to the other four divisions for detailed analysis. The first division represents the calculation of centrality measures, and the second division represents prediction and follower analysis, which leads to the recommendation of the top 10 followers to the user. Although these two divisions are related, we separated them for clarity. Finally, the fourth division represents the prediction of future links between followers. As needed for our research, the output from each division is considered for analysis in other divisions (Fig. 1).

3.3 Centrality Measures

Our methods consist of different stages where the results obtained from each stage are used in other stages to obtain an efficient output. Initially, we explored and understood the spread of followers for a particular user or developer by establishing a connection to the GitHub API (PyGitHub) using Personal Access Token, and then the username of a particular user (here the user is ‘Anshul Mehta’) was given to get the ego-centric graph of the first level of followers in Fig. 2. For better understanding we explored the followers of the followers and plotted the directed graph.

As our first objective, we implemented centrality measures to identify the prominent follower in the network. Basically, centrality is used in a big network to estimate an individual's properties. The degree of interpersonal interaction and communication within a social network is also measured by centrality. More connections between network members result in greater centrality and always the community development in any online social network is mainly driven by individual nodes having high centrality.

3.3.1 Degree Centrality Measure

Degree centrality (DC) is a metric of exposure that evaluates a node's network connectedness using the number of direct contacts to the node.

In Eq. (1), the adjacency matrix aiv was used to determine the degree centrality of node v.

3.3.2 Betweenness Centrality Measure

Betweenness centrality (BC) measures the length of time a user or node acts as a bridge between two nodes in a sizable community network while taking into account the quickest path.

In Eq. (2), the terms \(\sigma_{st}\) and \(\sigma_{st} \left( v \right)\) stand for the total number of shortest routes from node s to node t and the number of paths that passes through v, respectively.

3.3.3 Closeness Centrality Measure

Closeness centrality (CC) is a measure of how long it takes for information to go from one node to every other node in a network and it is also the length of the shortest path between any two nodes in a complicated network graph.

In Eq. (3), \(d\left( {x,y} \right)\) is the measured distance between the x and y.

3.3.4 Eigenvector Centrality Measure

Eigenvector centrality (EC) is used to assess a node's effect over other nodes in the network. A node’s influence will be stronger if it is connected to significant nodes, but its impact on other nodes will be low if it is connected to less important nodes. So, the significance of the node’s neighbours determines its relevance

In Eq. (4), \(v = \left( {v1,2, \ldots } \right)\) is the eigenvector for the highest eigenvalue \(\tau_{\max } \left( A \right)\) of the adjacent matrix A.

3.4 Similarity Measures

In addition to that coefficient clustering was calculated to identify how closely the followers were present in a network. The obtained values of each follower were stored as a data frame with basic values of a GitHub such as the number of repositories, gists, number of followers, and number of following to get an idea of data spread and analyze the influential parameters.

As a part of the next research, we implemented the link prediction using the cosine distance for the obtained network. Link prediction is used to find a collection of missing or future linkages between the users by forecasting and estimating the likelihood of each non-existing link in the network.

In Eq. (5), the terms \(x.y\) and \(\left| {\left| x \right|} \right|\) and \(\left| {\left| y \right|} \right|\) are the dot product and the length of the vectors x and y, whereas the cross product of the vectors x and y is \(\left| {\left| x \right|} \right|*\left| {\left| y \right|} \right|\).

Whereas, the cosine of the angle created by the two vectors is used to calculate how similar two users or objects are when they are treated as two vectors in m-dimensional space. During filtering, it is often used to compare two documents, and subsequently more and more regularly to compare two individuals or other aspects instead of simply documents. Since it is often utilized in positive space, it determines how close 0 and 1 are in value.

Then to get a clear idea of similarities among the followers, we employed the follower similarity which identifies the similarities between the followers based on ratings given by them and also found the feature similarities in which the similar features among the followers were evaluated. The data frame consisting of these values was formed in Fig. 3. In addition, the pairwise cosine was found to get a clear idea of similarities between the followers. By using these data, similar followers were found based on the aggregate similarity score (based on the number of gists, repositories, followers, and following) with a developer.

As we were able to identify similar followers now, we focused on finding the potential followers to recommend the user based on the content filtration technique. Here the keywords of the repositories are considered as content. The reason for the content-based recommendation is that it recommends based on the user preferences and this would help us in recommending the followers to the developer.

To implement the recommendation, we initially found the keywords of repositories by stage-wise like name, description, and language. Here comes the natural language processing (NLP) where the strings were converted to lowercase and by stemming, we found the keywords. This was done in two approaches such as bag of words and TF-IDF.

In Eq. (6), TF(t, d) is the term frequency of term ‘t’ that appears in a document ‘d’ and IDF(t) is the inverse document frequency.

Bag of words is an NLP model used to count the frequency of each word in a corpus for that document, whereas TF-IDF is used to quantify the importance of the relevance of string representation in documents among the collection of documents. As we obtained the words, now again the cosine distance was checked in a bag of words and TF-IDF to find the similarity. These words were then stored and sorted with the corresponding indexes. Now we developed a recommender where the index fetched from the similarity array and sorted the distance in descending order to get the top 10 followers for both bag of words and TF-IDF.

As we found the followers for the user now, we identified the list of followers who can be recommended to the user. In addition, this was done to all the first-level followers of the developer to check whether our method worked properly or not. From this, we obtained the end result of recommending the followers to the user working on similar domains.

Finally, the future prediction between the user’s followers was calculated using the Jaccard coefficient and Adamic Adar coefficient, and the results were visualized to get an idea of links. We discovered the network’s common triads to gain a better understanding of the links and influence of followers.

4 Experimental Results

This section carries out a thorough experimental and quantitative study of the analysis undertaken and the results were shown with an explanation. Initially, the graph was obtained and then the centrality measures were implemented on the follower’s network in Figs. 4 and 5 depicts the centrality of common neighbours.

Further, the link prediction between the followers was identified using cosine distance and values for the corresponding followers were stored in the data frame as in Fig. 6.

The cosine similarity was calculated between the followers and the features of the data frame which helped us to get an idea of the relations between the followers as in Fig. 7.

The above values were then added to the data frame as in Figs. 8 and 9.

Based on these values the similar feature of the user was calculated by considering the ratings given and it was mapped to the gists as a similar score. So, when we give the gists number the corresponding features get displayed as in Fig. 10.

Based on the score of similar features, the aggregate score was calculated which helped us to find similar followers to the user as in Fig. 11.

As a next step for recommending the followers, we used the content-based filtering approach where the keywords of repositories, such as name, description, and language were extracted and added to the data frame by converting strings to lowercase and stemming as in Fig. 12.

By the method of TF-IDF and bag of words, the frequency of words was found and then cosine similarity was used to identify the similarity between the TF-IDF vectors which was then stored in the sorted list as in Fig. 13.

From the above diagram, we can infer some of the known keywords such as JavaScript, stake, dart, etc. We may thus conclude that extraction was successful since we have now identified the followers who, in light of the list of followers, can be suggested to the user as in Figs. 14 and 15.

The follower’s list closer to the user is printed from the data frame where we can see some followers are the same in both the methods. Figure 16 shows the list of top 10 followers from both the methods.

At last, the objective of our research is to recommend the top 10 followers to the user based on the working of followers in similar technologies or domains. So, the recommendation is done as in Fig. 17.

Additionally, in order to identify the future link prediction between the followers we find Jaccard coefficient and Adamic Adar coefficient measures and also visualized the prediction for the top 100 and top 10 followers. Figure 18 depicts scores of Adamic Adar and Jaccard coefficient.

As per the scores, the graph was plotted where the lines between followers shows the future link predictions for the top 100 and top 10 followers. Figures 19 and 20 depict visualization for Adamic Adar and Jaccard coefficient for top 10 followers.

From these graphs, we could be able to infer that there are high chances of future links between the followers of users. As the outliers are the same in these graphs, all graphs are nearly giving the same prediction of links. Figure 21 depicts the scores between the followers.

The outer clusters remain the same and all the inner clusters are concentrated for a few users forming the bigger circle. On basis of scores obtained from the Jaccard coefficient and Adamic Adar coefficient, the top 10 most predicted links between the user and his followers were evaluated.

The common triads in the network were found to get an idea of links and influence followers. Triads are a set of three actors and the smallest community possible in a network. Through this, we were able to identify that user and closest follower present in all triads as in Fig. 22.

5 Conclusion

Every day, more people join GitHub, but identifying users working in a similar domain is critical for developers so that they can collaborate and work together. We discussed the recommendation system designed specifically for the user and their followers in this paper. We were able to achieve our goals by employing approaches such as centrality measures, link prediction with standard algorithms, and content-based recommendations based on the developer’s real-time data. The results of the tests and a thorough quantitative analysis indicate that the metrics used are effective in suggesting notable followers to the network user.

References

Farooq A, Joyia GJ, Uzair M, Akram U (2018, Mar) Detection of influential nodes using social networks analysis based on network metrics. In: 2018 International conference on computing, mathematics and engineering technologies (iCoMET). IEEE, pp 1–6

Gajanayake RGUS, Hiras MHM, Gunathunga PIN, Supun EJ, Karunasenna A, Bandara P (2020, Dec) Candidate selection for the interview using GitHub profile and user analysis for the position of software engineer. In: 2020 2nd International conference on advancements in computing (ICAC), vol 1. IEEE, pp 168–173

Joy A, Thangavelu S, Jyotishi A (2018, Feb) Performance of GitHub open-source software project: an empirical analysis. In: 2018 Second international conference on advances in electronics, computers and communications (ICAECC). IEEE, pp 1–6

Koskela M, Simola I, Stefanidis K (2018, Sept) Open-source software recommendations using GitHub. In: International conference on theory and practice of digital libraries. Springer, Cham, pp 279–285

Landherr A, Friedl B, Heidemann J (2010) A critical review of centrality measures in social networks. Bus Inf Syst Eng 2(6):371–385

Liu H, Kou H, Yan C, Qi L (2019) Link prediction in paper citation network to construct paper correlation graph. EURASIP J Wirel Commun Netw 2019(1):1–12

Radha D, Kavikuil K, Keerthi R (2017, Dec) Centrality measures to analyze transport network for congestion free shipment. In: 2017 2nd International conference on computational systems and information technology for sustainable solution (CSITSS). IEEE, pp 1–5

Ramya GR, Bagavathi Sivakumar P (2021) An incremental learning temporal influence model for identifying topical influencers on Twitter dataset. Soc Netw Anal Min 11(1):1–16

Sarwar MU (2021) Recommending whom to follow on GitHub. Doctoral dissertation, North Dakota State University

Sharma S, Mahajan A (2017) Suggestive approaches to create a recommender system for GitHub. Int J Inf Technol Comput Sci 9(8):48–55

Thangavelu S, Jyotishi A (2018, June) Determinants of open source software project performance: a stage-wise analysis of GitHub projects. In: Proceedings of the 2018 ACM SIGMIS conference on computers and people research, pp 41–42

Yadav AK, Johari R, Dahiya R (2019, Oct) Identification of centrality measures in social network using network science. In: 2019 International conference on computing, communication, and intelligent systems (ICCCIS). IEEE, pp 229–234

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Nagaraj, R., Ramya, G.R., Yougesh Raj, S. (2024). GitHub Users Recommendations Based on Repositories and User Profile. In: Shetty, N.R., Prasad, N.H., Nagaraj, H.C. (eds) Advances in Communication and Applications . ERCICA 2023. Lecture Notes in Electrical Engineering, vol 1105. Springer, Singapore. https://doi.org/10.1007/978-981-99-7633-1_10

Download citation

DOI: https://doi.org/10.1007/978-981-99-7633-1_10

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7632-4

Online ISBN: 978-981-99-7633-1

eBook Packages: EngineeringEngineering (R0)