Abstract

Multi-source remote sensing (RS) image fusion is a data processing technology that organizes and correlates image data of the same scene acquired under different imaging modes through specific calculation rules, in order to obtain a more accurate, complete and information-rich composite image. The information contained in RS images from different sensors is imprecise, uncertain and fuzzy to varying degrees, so any method used to fuse this information must address these problems. Image registration, in turn, reduces to the problem of relative correction between images. On the basis of analyzing and discussing the principle, hierarchy, structure and characteristics of multi-source RS image data fusion, this article proposes a technique for extracting and fusing geographic information from multi-source RS images combined with an artificial intelligence (AI) algorithm. Compared with the K-means classification method, the proposed classification fusion method effectively reduces uncertain information in the classification process and improves classification accuracy. The results verify the feasibility of the proposed extraction and fusion method in practical application.

Keywords

- Artificial intelligence

- Multi-source remote sensing images

- Geographic information

- K-means classification method

1 Introduction

With the growth of modern RS technology, various Earth observation satellites continuously provide RS images with different spatial resolution, temporal resolution and spectral resolution. This multi-platform, multi-temporal, multi-spectral and multi-resolution RS image data is coming in at an alarming rate, forming a multi-source image pyramid in the same area [1]. As far as image information is concerned, there are multi-spectral images, visible light images, infrared images and synthetic aperture radar images, and there are also some materials about other attribute information [2]. Making full use of information from different sources to classify RS images will inevitably improve the classification accuracy. In RS images, the larger the values of spectral resolution and spatial resolution, the stronger the ability to distinguish different spectral features and the clearer the edges of the scene [3]. With the continuous growth of modern RS technology, the quality requirements of RS images in environmental detection, precision agriculture, urban planning and other fields are increasing [4]. The traditional RS image registration is completed by selecting control points. Generally, this method can achieve good results in the application of RS images with the same sensor, but if it is used in images with different imaging characteristics, there is a big error in registration [5]. This is due to the terrain differences caused by different imaging mechanisms of different images, which makes it difficult to select control points with the same name. On the other hand, the method of selecting control points requires a lot of manual intervention, so it is time-consuming and laborious [6]. Multi-source RS image fusion technology is mainly a data processing technology to organize and correlate the image data of the same scene under different imaging modes through specific calculation rules, and then obtain a more accurate, perfect and rich comprehensive image [7]. 
The biggest advantage of RS technology is that it can acquire observation data over a wide area in a short time and present these data to the public as images and by other means. Multi-modal data fusion, represented by the fusion of multi-spectral and panchromatic images, is the most widely used form of multi-source RS image fusion [8]. Multi-spectral images have low spatial resolution but high spectral resolution, whereas panchromatic images have high spatial resolution but low spectral resolution. The main purpose of this multi-modal fusion is therefore to use a fusion algorithm to maximize both the spectral and the spatial resolution of the composite image, so that spatial geometric features and spectral features reach an optimal balance [9, 10]. Spatio-temporal fusion of optical RS data takes a sequence of paired low and high spatial resolution images, together with a low spatial resolution image at the prediction time, and generates a result with both high temporal and high spatial resolution for that time [11]. On the basis of analyzing and discussing the principle, hierarchy, structure and characteristics of multi-source RS image data fusion, this article proposes a technique for extracting and fusing geographic information from multi-source RS images combined with an AI algorithm.
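The fusion of multi-spectral and panchromatic images described above can be illustrated with a very small sketch. It uses the Brovey transform, a classical pan-sharpening rule chosen here only for illustration; it is not the method proposed in this article. The function names are hypothetical, and the multi-spectral image is assumed to be already registered and resampled to the panchromatic grid.

```python
def brovey_pixel(ms_bands, pan, eps=1e-12):
    """Sharpen one pixel: scale every multi-spectral band by pan / mean(bands).

    Scaling all bands by the same gain keeps the ratios between bands,
    while the band mean is replaced by the panchromatic value.
    """
    intensity = sum(ms_bands) / len(ms_bands)
    gain = pan / (intensity + eps)  # eps avoids division by zero on dark pixels
    return [band * gain for band in ms_bands]


def brovey_fuse(ms_image, pan_image):
    """Apply brovey_pixel to every pixel.

    ms_image:  rows x cols x bands nested lists (assumed co-registered with pan_image)
    pan_image: rows x cols nested lists
    """
    return [
        [brovey_pixel(ms_px, pan_px) for ms_px, pan_px in zip(ms_row, pan_row)]
        for ms_row, pan_row in zip(ms_image, pan_image)
    ]


if __name__ == "__main__":
    # One illustrative pixel with three bands and a brighter panchromatic value.
    print(brovey_pixel([0.2, 0.4, 0.6], 0.8))
```

Because every band is multiplied by the same gain, the spatial detail of the panchromatic image is injected while the relative spectral shape of each pixel is kept, which is one simple way of trading off the two resolutions.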

2 Methodology

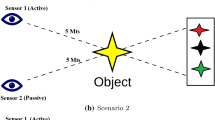

2.1 Basic Theory of Multi-source Data Fusion

RS image map is a kind of map which uses RS images and certain map symbols to represent the geographical spatial distribution and environmental conditions of cartographic objects. The drawing content of RS image map is mainly composed of images, and then some map symbols are used to mark it on the images, which is more conducive to expressing or explaining the drawing objects. Image map is richer in ground information than ordinary map, which has distinct contents and clear and easy-to-read drawings, which can not only show the specific content of the map, but also show all the information on the ground in a three-dimensional way [12]. Multi-source images include a lot of redundant information, and the fusion results make the system more fault-tolerant, reliable and robust, which reduces uncertainty and provides higher detection accuracy. At the same time, it can also eliminate the influence of irrelevant noise and improve detection ability. Geographical information is regional, multi-dimensional and dynamic, which plays an important role in various fields and brings convenience to people's life and work.

The production of geographic information data has the characteristics of long cycle, high cost, large engineering quantity and complex technology, which requires researchers to improve the technology of extracting information to obtain geographic information efficiently and intelligently, and further serve the growth of mankind. More complementary information can be obtained from images obtained from different types of sensors. The result of fusion makes various data optimized and integrated, providing more and richer information. Through feature-level image fusion, relevant feature information can be mined in the original image, the credibility of feature information can be increased, false features can be eliminated and new composite features can be established. Maps and RS images can show all kinds of geographical information in detail, and they can show all kinds of geographical changes in real time, making the geographical information obtained by people more accurate and three dimensional. The dynamic monitoring process of RS geographic information is shown in Fig. 2.1.

For feature extraction from different images, the images must be fused according to features of the same type on each of them, so that useful image features can be extracted with high confidence. Feature-level fusion suits situations in which multi-source data cannot be combined at pixel level, and it is simple and practical in many cases. Decision-level fusion is a high-level fusion. According to the requirements of the application, it first classifies the images, determines the characteristic images in each category and then carries out fusion processing. It distinguishes the important targets contained in the various images from other targets, supports accurate and rapid inspection, improves recognition and effectively extracts the required geographic information. In general, methods such as microscopic feature extraction and dynamic change detection can be used. Processing ground objects layer by layer makes full use of the characteristics of the various ground objects in different bands and gives good results.

2.2 Geographic Information Extraction and Fusion Algorithm of RS Image

Multi-source remote sensing image fusion is a composite model structure, which integrates the information provided by remote sensing image data sources of different sensors to obtain high-quality image information, and at the same time eliminates the redundancy and contradiction between multi-sensor information, complements them, reduces their uncertainty and ambiguity, so as to enhance the clarity of information in the image, improve the accuracy, reliability and utilization rate of interpretation and form a relatively complete and consistent information description of the target. The purpose of remote sensing data fusion is to synthesize the multi-band information of a single sensor or the information provided by different types of sensors, improve the timeliness and reliability of remote sensing information extraction and improve the efficiency of data use [13]. For multi-source RS images, registration refers to the optimal superposition of two or more image data from the same target area, at the same time or at different times, from different perspectives and obtained by the same or different sensors in the same coordinate system. Because the image matching accuracy of feature points is relatively high, feature extraction must be carried out before image matching.

Features are divided into point features and line features. Although point features are good, they often encounter noise points, so line features must be supplemented for identification. Feature-level fusion refers to the feature extraction of registered data, then the correlation processing, so that each sensor can get the feature vectors of the same target, and finally these feature vectors are fused for image classification or target recognition. Its advantage is that considerable information compression is realized, and the features provided are directly related to decision analysis. The data in \(X_{j}\) can be described by linear regression model:

The linear regression model corresponding to data segment \(X_{j}\) is:

The model parameter vector is:

The linear regression model parameter \(a_{j}\) is called the trend characteristic value of \(X_{j}\). The first data element \(x\left( {t_{j + 1,1} } \right)\) of \(X_{j + 1}\) is called the dividing point of \(X_{j}\).
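The display equations for this model are not reproduced in the text, so the following is only a sketch under an assumed form: each data segment \(X_{j}\) is fitted by a first-order model \(x(t) = a_{j} t + b_{j} + e(t)\), and the slope \(a_{j}\) is taken as the trend characteristic value. The function names and the use of start indices to mark dividing points are illustrative assumptions.

```python
def fit_trend(times, values):
    """Ordinary least-squares fit of values ~ a * t + b; returns (a, b)."""
    n = len(times)
    t_mean = sum(times) / n
    x_mean = sum(values) / n
    s_tt = sum((t - t_mean) ** 2 for t in times)
    s_tx = sum((t - t_mean) * (x - x_mean) for t, x in zip(times, values))
    a = s_tx / s_tt            # slope: the assumed trend characteristic value
    b = x_mean - a * t_mean    # intercept
    return a, b


def segment_trends(times, values, starts):
    """Fit one line per segment.

    starts: index of the first element of each segment (the first entry is 0);
            the first element of segment j + 1 acts as the dividing point of segment j.
    """
    edges = list(starts) + [len(times)]
    return [fit_trend(times[s:e], values[s:e]) for s, e in zip(edges, edges[1:])]


if __name__ == "__main__":
    t = [0, 1, 2, 3, 4, 5]
    x = [0, 1, 2, 10, 8, 6]
    for j, (a, b) in enumerate(segment_trends(t, x, [0, 3])):
        print(f"segment {j}: slope={a:.2f}, intercept={b:.2f}")
```

Each segment needs at least two distinct time stamps, otherwise the slope is undefined.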

Multi-source RS image data fusion covers a wide range, which obviously emphasizes the structure and foundation of RS image data fusion, rather than the processing technology and method itself as usually emphasized. Signal-level fusion refers to mixing signals from different sensors in some form to produce fused information with better quality and reliability. The function of pixel level fusion is to increase useful information components in the image in order to improve the effects of processing such as segmentation and feature extraction. Feature-level fusion enables the extraction of useful image features with high confidence. Symbol-level fusion allows information from multiple sources to be effectively utilized at the highest abstract level. Feature-level image fusion can not only increase the possibility of extracting feature information from images, but also obtain some useful composite features.

Features are abstracted and extracted from image pixel information. Typical feature information used for fusion includes edges, corners, textures, similar brightness regions and so on. The advantage of feature-level fusion is that it realizes considerable information compression and is convenient for real-time processing. Because the extracted features are directly related to decision analysis, the fusion results can give the feature information needed for decision analysis to the maximum extent.

Overlapping several image blocks whose image layout distance is less than a given threshold to form an image layout matrix \(Z\), obtaining a set of processed image layout blocks \(Y\) through 3D transformation of the image layout matrix \(Z\), and finally performing weighted average on all image layout blocks in each group \(Y\) to obtain a preliminary estimated image layout \(Y\):

where \(X\) represents the characteristic function of an image layout block in the interval [0,1], and the image layout block set \(Y\) and the weight function \(\omega_{h}\) are expressed as:

where \(h\left[ {T_{3d} \left( Z \right)} \right]\) stands for 3D transformation of image layout, \(T_{3d}^{ - 1}\) stands for inverse 3D transformation form of image layout, \(d\) stands for spatial dimension and \(h\) stands for hard threshold shrinkage coefficient.

Where \(\sigma^{2}\) stands for zero mean Gaussian noise variance and \(N_{h}\) stands for nonzero coefficient retained after hard threshold filtering. The image layout \(Y_{b}\) is further grouped and matched to obtain the 3D matrix \(Y_{w}\) of RS image layout, and the original noise image layout block is matched to obtain the 3D matrix \(Z_{w}\) according to the corresponding coordinate information of \(Y_{w}\). Performing inverse 3D transformation on the processed image layout matrix to obtain the processed image layout block set \(Y_{wi}\), and finally performing weighted average on the spatial RS image layout blocks in \(Y_{wi}\) to obtain the final estimated RS image layout:

The weight function \(\omega_{wi}\) of the RS image layout block set \(Y_{wi}\) is given by the following formula:

where \(W\) stands for empirical Wiener filtering shrinkage coefficient.
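The two-stage procedure described above (grouping, 3D transform, hard-threshold shrinkage, then a Wiener-filtered second pass with weighted averaging) resembles the widely used BM3D block-matching scheme. Since the equations themselves are not reproduced in the text, the sketch below uses the weights from the standard BM3D formulation as an assumption: \(1/(\sigma^{2} N_{h})\) for the hard-threshold stage and \(1/(\sigma^{2} \lVert W \rVert_{2}^{2})\) for the Wiener stage. It operates on plain lists of transform coefficients, and all function names are hypothetical.

```python
def hard_threshold(coeffs, threshold):
    """Zero out small transform coefficients; return (kept, number of nonzeros N_h)."""
    kept = [c if abs(c) > threshold else 0.0 for c in coeffs]
    n_nonzero = sum(1 for c in kept if c != 0.0)
    return kept, n_nonzero


def hard_threshold_weight(n_nonzero, sigma2):
    """Assumed stage-one weight: 1 / (sigma^2 * N_h), or 1 if nothing was kept."""
    return 1.0 / (sigma2 * n_nonzero) if n_nonzero >= 1 else 1.0


def wiener_shrinkage(pilot_coeffs, sigma2):
    """Empirical Wiener coefficients W computed from the stage-one (pilot) estimate."""
    return [c * c / (c * c + sigma2) for c in pilot_coeffs]


def wiener_weight(shrinkage, sigma2):
    """Assumed stage-two weight: 1 / (sigma^2 * ||W||_2^2)."""
    energy = sum(w * w for w in shrinkage)
    return 1.0 / (sigma2 * energy) if energy > 0 else 1.0


def aggregate(estimates, weights):
    """Weighted average of several overlapping estimates of the same pixel."""
    return sum(w * y for w, y in zip(weights, estimates)) / sum(weights)


if __name__ == "__main__":
    kept, n_h = hard_threshold([5.0, 0.1, -3.0, 0.2], threshold=1.0)
    print(kept, n_h, hard_threshold_weight(n_h, sigma2=0.25))
```

Groups that keep fewer nonzero coefficients are treated as less noisy and therefore receive a larger weight in the final average.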

Remote sensing image fusion is one of the effective ways to handle the growing mass of multi-source data. It helps to enhance multi-data analysis and dynamic environmental monitoring, improves the timeliness and reliability of remote sensing information extraction, raises the utilization rate of data and provides a good foundation for large-scale remote sensing application research. It also makes full use of remote sensing data that were expensive to acquire. In short, fused data are characterized by redundancy, complementarity, timeliness and low cost.

3 Result Analysis and Discussion

Extracting geographic information from map images or RS images is a problem of computer image understanding. The simulation platform runs Windows 11 with a Core i7-13700K processor, an RTX 3070 graphics card, 16 GB of memory and a 1 TB hard disk. In our experiment and evaluation, we selected four polarimetric SAR image datasets, drawn from a spaceborne system (RADARSAT-2 of the Canadian Space Agency) and an airborne system (NASA/JPL-Caltech AIRSAR). Image understanding uses a computer system to help explain the meaning of images, so that the objective world can be interpreted from image information. To complete a visual task, it is necessary to determine what information must be obtained from the objective world through image acquisition, what information must be extracted from the image through image processing and analysis, and what information must be further processed to obtain the required explanation. In the training stage, regions of different types are manually selected according to chromaticity information as training samples for the singleton subsets in the frame of discernment of each information source, and the gray-level mean and variance of the pixels in the selected regions are used as features for obtaining the basic probability assignment function of each singleton subset. Figure 2.2 shows the influence of RS platform geographic information extraction and fusion on evenly distributed datasets. Figure 2.3 shows the influence of geographic information extraction and fusion on real datasets.
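The training step above, in which the gray mean and variance of each class are turned into a basic probability function per information source, reads like a Dempster-Shafer evidence setup. Assuming that is the intended framework, the sketch below builds masses from Gaussian class likelihoods and fuses two sources with Dempster's rule. The Gaussian model, the fixed `ignorance` mass assigned to the whole frame and the class statistics are illustrative assumptions, not values from the paper.

```python
import math


def gaussian_mass(gray, class_stats, ignorance=0.1):
    """Turn one gray value into masses on singleton classes plus the whole frame.

    class_stats: {class_name: (mean, variance)} estimated from training regions.
    ignorance:   hypothetical mass reserved for 'THETA' (the full frame of discernment).
    """
    like = {
        c: math.exp(-((gray - m) ** 2) / (2 * v)) / math.sqrt(2 * math.pi * v)
        for c, (m, v) in class_stats.items()
    }
    total = sum(like.values())
    masses = {c: (1 - ignorance) * l / total for c, l in like.items()}
    masses["THETA"] = ignorance
    return masses


def dempster_combine(m1, m2):
    """Dempster's rule when the focal elements are singletons and 'THETA'.

    Both mass functions must be defined over the same class names.
    """
    fused = {}
    for c in (k for k in m1 if k != "THETA"):
        # {c} results from {c}&{c}, {c}&THETA and THETA&{c}
        fused[c] = m1[c] * m2[c] + m1[c] * m2["THETA"] + m1["THETA"] * m2[c]
    fused["THETA"] = m1["THETA"] * m2["THETA"]
    norm = sum(fused.values())  # equals 1 minus the conflicting mass
    return {k: v / norm for k, v in fused.items()}


if __name__ == "__main__":
    stats_a = {"water": (40.0, 25.0), "land": (120.0, 100.0)}   # source A, made-up values
    stats_b = {"water": (60.0, 400.0), "land": (90.0, 400.0)}   # source B, made-up values
    fused = dempster_combine(gaussian_mass(45.0, stats_a), gaussian_mass(70.0, stats_b))
    print(max((k for k in fused if k != "THETA"), key=fused.get), fused)
```

A pixel can then be assigned to the class with the largest fused mass, with the mass left on `THETA` indicating how much uncertainty remains after fusion.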

Because the node algorithm is simplified and compressed sensing is used to process the transmitted data, the data transmission amount of this scheme is greatly reduced, so the energy consumption is also low. The most important step in the integration process is to transform all or part of the organizational standards and organizational meanings of multi-source heterogeneous data based on a unified data model, so that the integrated dataset has a global unified data model externally and the heterogeneous data are fully compatible with each other internally.

When high spatial resolution images are fused with low spatial resolution multispectral images, the purpose is to obtain multispectral images with enhanced spatial resolution. In practice, enhancing the resolution of multispectral images by fusion inevitably changes their spectral characteristics to some degree. Training the designed information fusion model with discrete geographic data yields better network weights. Substituting the obtained network weights into the model then gives the basic model for geographic information extraction and fusion. The output data of the information fusion model are compared with the real geographic data in Fig. 2.4.

The learning results of the multi-source RS image geographic information fusion model clearly converge and approximate the original data well. The rules are then filtered according to the time-sequence constraints satisfied by the attributes before and after each rule, which yields the time-sequence rules. Because the transmission and echo process of a SAR sensor signal is quite complicated, many error sources are unavoidable. The gains and errors introduced by the radar antenna, transmitter, receiver, imaging processor and other components distort the signal, so that radar images cannot accurately reflect the echo characteristics of ground objects. Radiometric calibration of SAR data corrects the gain and error of the whole SAR system, from signal transmission to image generation, by establishing the relationship between the radar image and the target cross-sectional area and backscattering coefficient, and converts the original data received by the sensor into the backscattering coefficient.
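As a rough illustration of the last step, the conversion from raw image values to a backscattering coefficient can be sketched as follows. The form \(\sigma^{0} = (DN^{2} + B)/A\) with a gain \(A\) and offset \(B\) is an assumption made for this example; real products supply their own calibration constants or lookup tables, and the numbers used here are invented.

```python
import math


def sigma0_linear(dn, gain, offset=0.0):
    """Convert a digital number to a linear backscattering coefficient.

    gain and offset are hypothetical calibration constants; in practice they
    come from the product's calibration metadata.
    """
    return (dn * dn + offset) / gain


def to_db(value, floor=1e-10):
    """Express a linear quantity in decibels, clamping to avoid log10(0)."""
    return 10.0 * math.log10(max(value, floor))


if __name__ == "__main__":
    dn_row = [50, 100, 200]  # made-up pixel values
    print([round(to_db(sigma0_linear(dn, gain=1.0e5)), 2) for dn in dn_row])
```

Working in decibels compresses the large dynamic range of SAR backscatter, which is why calibrated images are usually inspected on that scale.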

In a general data fusion process, the higher the fusion level, the more abstract the data, the lower the homogeneity of each sensor, the more unified the data representation, the greater the amount of data conversion and the higher the fault tolerance of the system. Conversely, the lower the fusion level, the more detailed information the fusion preserves. However, the volume of data to be processed increases, high registration accuracy is required between the data being fused, and the fusion method depends more heavily on the data source and its characteristics, which makes it difficult to give a general data fusion method and results in poor fault tolerance. Two main factors affect the output of the information fusion model: whether the model learns efficiently and whether it generalizes well. The choice of input and output variables also affects how well the model performs. The comparison of this model with the traditional K-means algorithm is shown in Fig. 2.5.
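For readers unfamiliar with the baseline, the K-means algorithm used for comparison can be sketched on one-dimensional gray values as follows. This is a minimal, deterministic version with user-supplied initial centers; the data are made up and the function name is hypothetical.

```python
def kmeans_1d(values, centers, iterations=20):
    """Plain K-means on scalar values with fixed initial centers.

    Returns (final centers, label of each value).
    """
    centers = list(centers)

    def nearest(v):
        return min(range(len(centers)), key=lambda k: abs(v - centers[k]))

    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            clusters[nearest(v)].append(v)
        # Keep the old center when a cluster ends up empty.
        new_centers = [
            sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)
        ]
        if new_centers == centers:  # assignments are stable, stop early
            break
        centers = new_centers
    return centers, [nearest(v) for v in values]


if __name__ == "__main__":
    grays = [1, 2, 3, 10, 11, 12]
    print(kmeans_1d(grays, centers=[0, 5]))
```

K-means assigns every pixel to exactly one cluster, so it has no way of representing the uncertainty between classes that a fusion-based classifier can carry through to the decision stage.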

As can be seen from Fig. 2.5, after many iterations the mean absolute error (MAE) of this method is clearly lower than that of the traditional K-means algorithm, with the error reduced by 31.66%. The results show that the improved information fusion strategy enhances the robustness of the model and the rationality of the initial weight threshold setting, and effectively improves the classification performance of the model. The relative registration and fusion of multiple different images can not only provide high-resolution multi-spectral digital orthophoto images over a large area, but also provide a good basic data layer for geographic information systems. Through image fusion, data with low spatial resolution and high spectral resolution can be combined with data with high spatial resolution and low spectral resolution to obtain an image with the advantages of both.
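The MAE comparison reported above can be reproduced on any pair of outputs with a few lines of code. The sketch below shows how the MAE and the relative error reduction are computed; the sample numbers are purely illustrative and are not the experimental data of this article.

```python
def mean_absolute_error(predicted, reference):
    """Average of |predicted - reference| over all samples."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(reference)


def relative_reduction(baseline_error, new_error):
    """Percentage by which new_error is lower than baseline_error."""
    return 100.0 * (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    truth = [1.0, 3.0, 5.0]            # illustrative reference values
    baseline_out = [2.0, 4.0, 7.0]     # illustrative baseline output
    proposed_out = [1.5, 3.5, 6.0]     # illustrative output of another method
    mae_base = mean_absolute_error(baseline_out, truth)
    mae_new = mean_absolute_error(proposed_out, truth)
    print(mae_base, mae_new, relative_reduction(mae_base, mae_new))
```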

4 Conclusions

Multi-source RS image fusion is a data processing technology that organizes and correlates image data of the same scene acquired under different imaging modes through specific calculation rules, in order to obtain a more accurate, complete and information-rich composite image. When different sensors image the same terrain area, they produce many RS images, each with its own advantages and disadvantages. Fusion rules designed around complementary combination merge these multi-source RS images into a fused image with better visual quality, clearer targets, good redundancy, high complementarity and strong structure. Multi-source images include a lot of redundant information, and the fusion results make the system more fault-tolerant, reliable and robust, which reduces uncertainty and provides higher detection accuracy. On the basis of analyzing and discussing the principle, hierarchy, structure and characteristics of multi-source RS image data fusion, this article proposes a technique for extracting and fusing geographic information from multi-source RS images combined with an AI algorithm. After many iterations, the MAE of this method is clearly lower than that of the traditional K-means algorithm, with the error reduced by 31.66%. The results show that the improved information fusion strategy enhances the robustness of the model and the rationality of the initial weight threshold setting, and effectively improves the classification performance of the model.

There are still many methods to measure the statistical characteristics of images, and the calculation indexes to judge the quality of fusion results have not been unified. Therefore, when constructing fractional differential, the measurement index selected in this paper has some limitations, and the selection of statistical features can be further studied in combination with the information difference between multi-spectral images and panchromatic images. The application of multi-spectral remote sensing data GIS in resource sharing and interoperability also needs further and deeper research.

References

Guan, X., Shen, H., Gan, W., et al.: A 33-year NPP monitoring study in Southwest China by the fusion of multi-source remote sensing and station data. Remote Sens. 9(10), 1082 (2017)

Li, X., Zhang, H., Yu, J., et al.: Spatial–temporal analysis of urban ecological comfort index derived from remote sensing data: a case study of Hefei, China. J. Appl. Remote Sens. 15(4), 042403 (2021)

Hiroki, M., Chikako, N., Iwan, R., et al.: Monitoring of an Indonesian tropical wetland by machine learning-based data fusion of passive and active microwave sensors. Remote Sens. 10(8), 1235 (2018)

Ansari, A., Danyali, H., Helfroush, M.S.: HS remote sensing image restoration using fusion with MS images by EM algorithm. IET Signal Proc. 11(1), 95–103 (2017)

Li, X., Long, J., Zhang, M., et al.: Coniferous plantations growing stock volume estimation using advanced remote sensing algorithms and various fused data. Remote Sens. 13(17), 3468 (2021)

Han, Y., Liu, Y., Hong, Z., et al.: Sea ice image classification based on heterogeneous data fusion and deep learning. Remote Sens. 13(4), 592 (2021)

Ren, J., Yang, W., Yang, X., et al.: Optimization of fusion method for GF-2 satellite remote sensing images based on the classification effect. Earth Sci. Res. J. 23(2), 163–169 (2019)

Elmannai, H., Salhi, A., Hamdi, M., et al.: Rule-based classification framework for remote sensing data. J. Appl. Remote Sens. 13(1), 1 (2019)

Douaoui, A., Nicolas, H., Walter, C.: Detecting salinity hazards within a semiarid context by means of combining soil and remote-sensing data. Geoderma 134(1–2), 217–230 (2017)

Kussul, N., Lavreniuk, M., Skakun, S., et al.: Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017(99), 1–5 (2017)

Khodadadzadeh, M., Li, J., Prasad, S., et al.: Fusion of hyperspectral and LiDAR remote sensing data using multiple feature learning. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 8(6), 2971–2983 (2017)

Sukawattanavijit, C., Jie, C., Zhang, H.: GA-SVM algorithm for improving land-cover classification using SAR and optical remote sensing data. IEEE Geosci. Remote Sens. Lett. 14(3), 284–288 (2017)

Mondal, A., Khare, D., Kundu, S., et al.: Spatial soil organic carbon (SOC) prediction by regression kriging using remote sensing data. Egypt. J. Remote Sens. Space Sci. 20(1), 61–70 (2017)

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, Z. (2024). Extraction and Fusion of Geographic Information from Multi-source Remote Sensing Images Based on Artificial Intelligence. In: Kountchev, R., Patnaik, S., Nakamatsu, K., Kountcheva, R. (eds) Proceedings of International Conference on Artificial Intelligence and Communication Technologies (ICAICT 2023). ICAICT 2023. Smart Innovation, Systems and Technologies, vol 368. Springer, Singapore. https://doi.org/10.1007/978-981-99-6641-7_2

DOI: https://doi.org/10.1007/978-981-99-6641-7_2

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-6640-0

Online ISBN: 978-981-99-6641-7

eBook Packages: Engineering, Engineering (R0)